
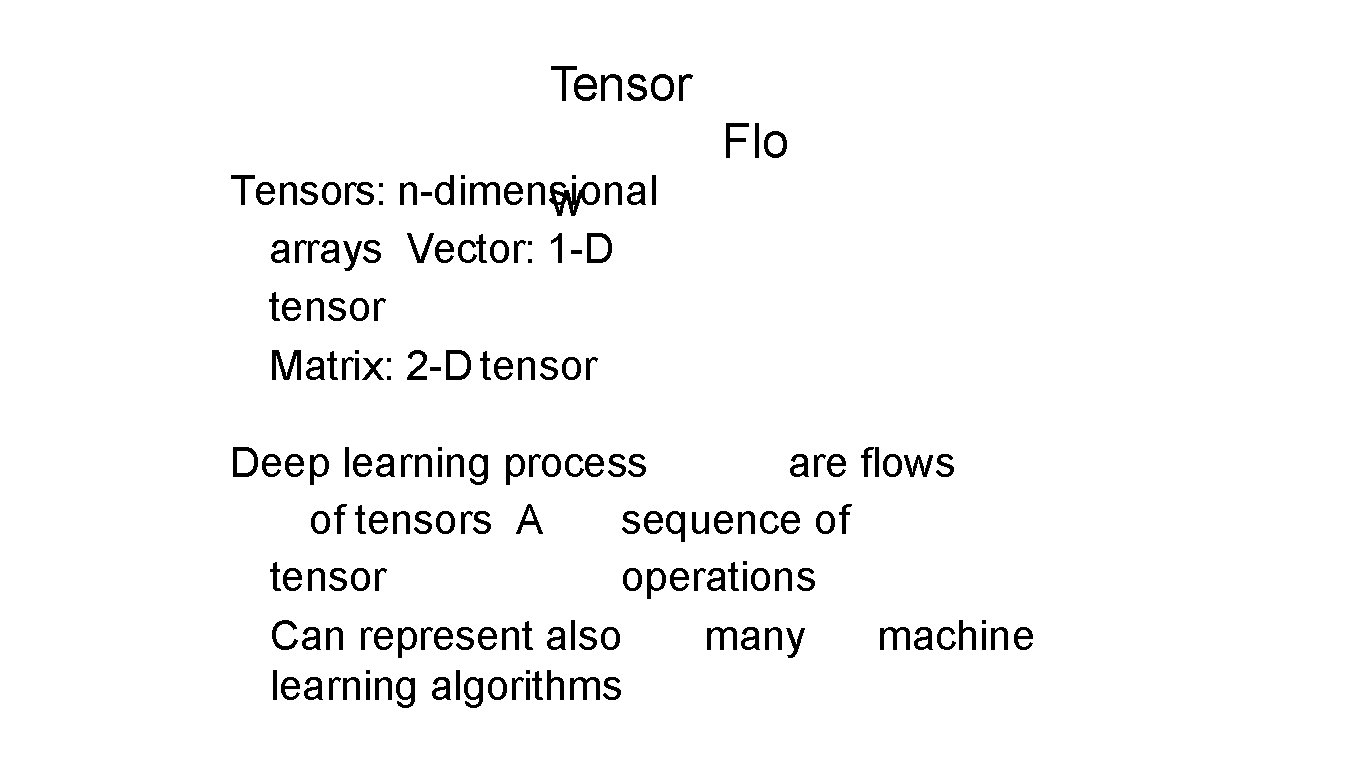

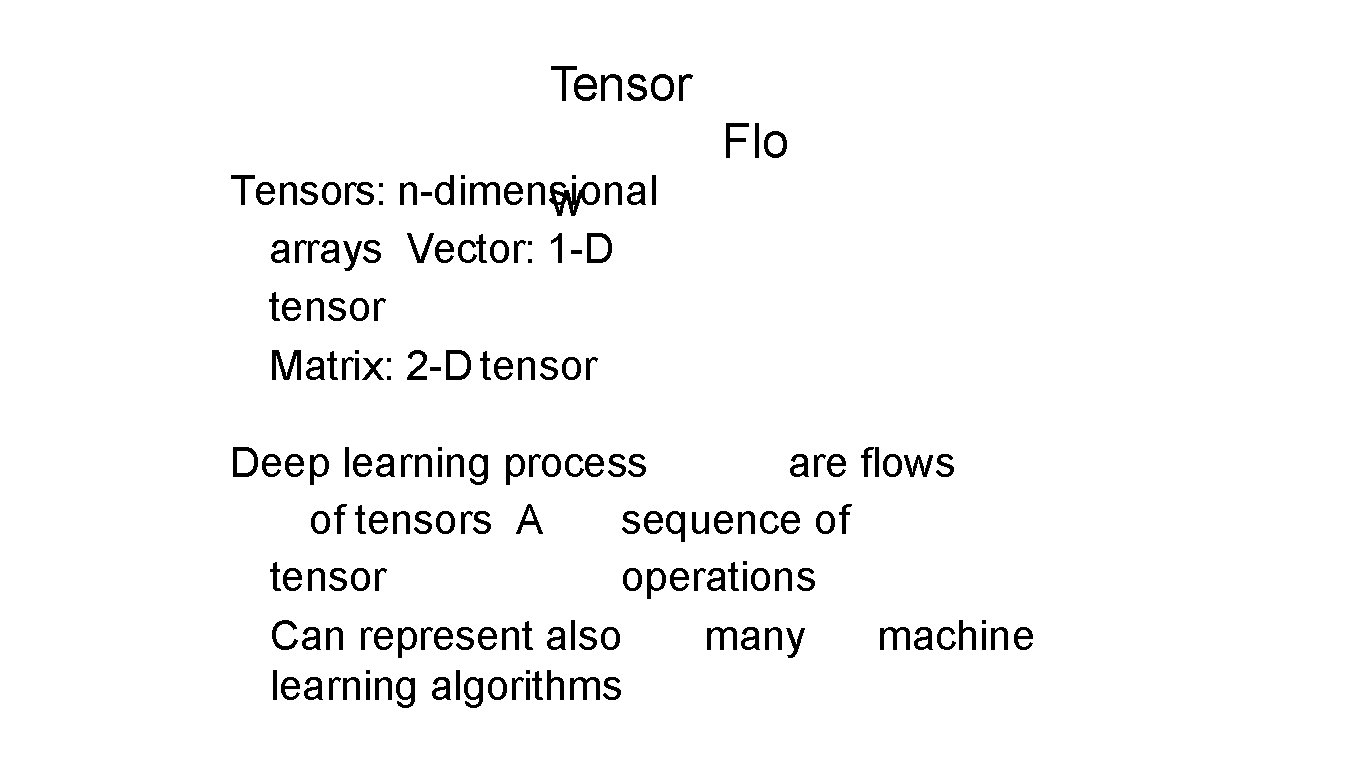

Tensors: n-dimensional arrays. A vector is a 1-D tensor; a matrix is a 2-D tensor. Flow: deep learning processes are flows of tensors, i.e. a sequence of tensor operations. The same representation can also express many other machine learning algorithms.

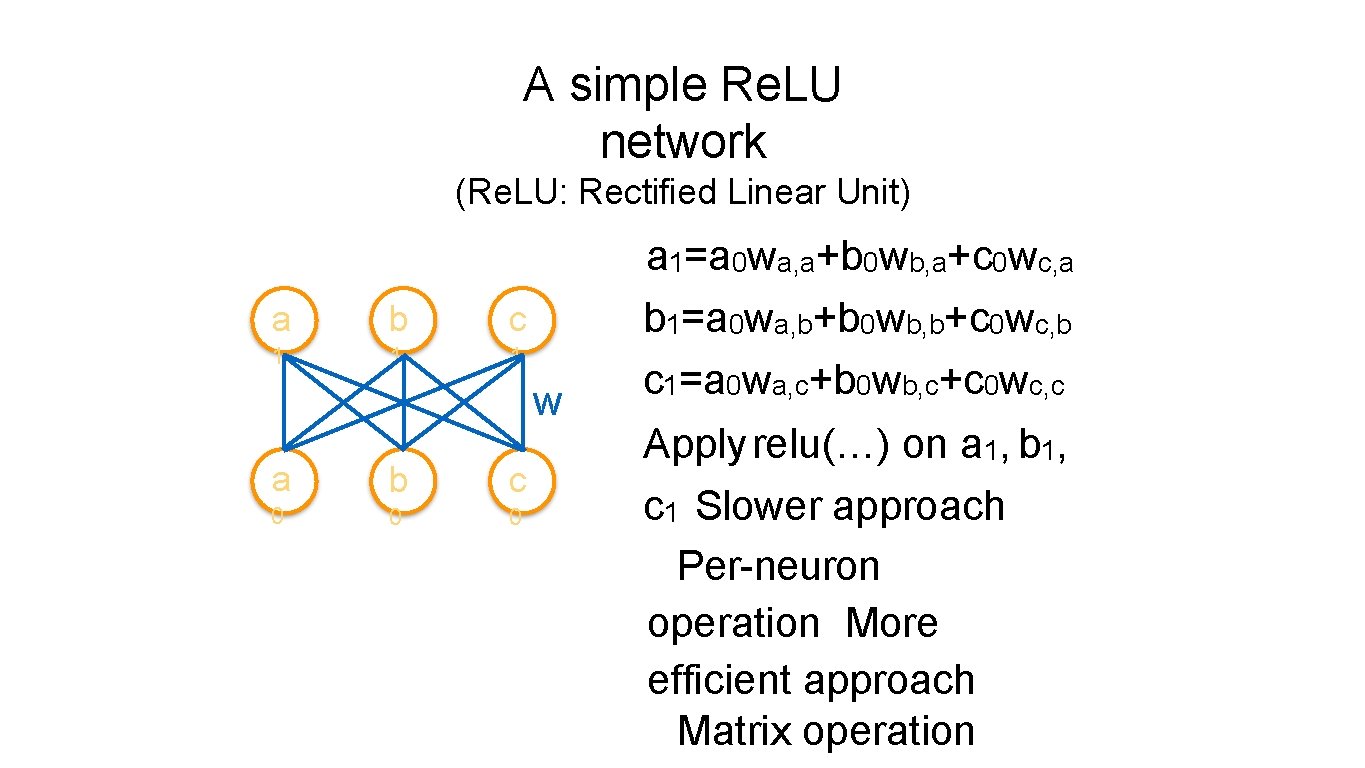

A simple ReLU network (ReLU: Rectified Linear Unit). The inputs $a_0, b_0, c_0$ are connected to the outputs $a_1, b_1, c_1$ through the weights $w$:

$$a_1 = a_0 w_{a,a} + b_0 w_{b,a} + c_0 w_{c,a}$$
$$b_1 = a_0 w_{a,b} + b_0 w_{b,b} + c_0 w_{c,b}$$
$$c_1 = a_0 w_{a,c} + b_0 w_{b,c} + c_0 w_{c,c}$$

Then apply relu(…) to $a_1, b_1, c_1$. The slower approach is a per-neuron operation; the more efficient approach is a matrix operation.
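Both approaches compute the same numbers. The minimal plain-Python sketch below (hypothetical weights and inputs, chosen only for illustration) checks that a per-neuron loop and a single matrix product agree. In pure Python both are equally slow; the speed advantage of the matrix form only shows up when an optimized matrix kernel, such as the one behind TensorFlow's `matmul`, does the work.

```python
def relu(v):
    """Rectified linear unit for a single number: max(0, v)."""
    return max(0.0, v)

def layer_per_neuron(inputs, w):
    """Slower approach: compute each output neuron separately.

    w[i][j] is the weight from input neuron i to output neuron j,
    so a_1 = a_0 * w[0][0] + b_0 * w[1][0] + c_0 * w[2][0].
    """
    outputs = []
    for j in range(len(w[0])):
        total = 0.0
        for i in range(len(inputs)):
            total += inputs[i] * w[i][j]
        outputs.append(relu(total))
    return outputs

def matmul(a, b):
    """Matrix product of a (m x k) and b (k x n), as nested lists."""
    return [[sum(a[r][k] * b[k][c] for k in range(len(b)))
             for c in range(len(b[0]))]
            for r in range(len(a))]

def layer_matrix(inputs, w):
    """Matrix approach: one (1 x 3) @ (3 x 3) product, then ReLU on each entry."""
    row = matmul([inputs], w)[0]
    return [relu(v) for v in row]

x0 = [1.0, 2.0, 3.0]            # a_0, b_0, c_0 (hypothetical values)
w = [[0.5, -2.0, 0.0],          # w_{a,a}, w_{a,b}, w_{a,c}
     [0.25, 0.5, -1.0],         # w_{b,a}, w_{b,b}, w_{b,c}
     [0.0, 0.0, 1.0]]           # w_{c,a}, w_{c,b}, w_{c,c}
print(layer_per_neuron(x0, w))
print(layer_matrix(x0, w))
```

Here $b_1 = -1$ before the activation, so ReLU clamps it to zero in both versions.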

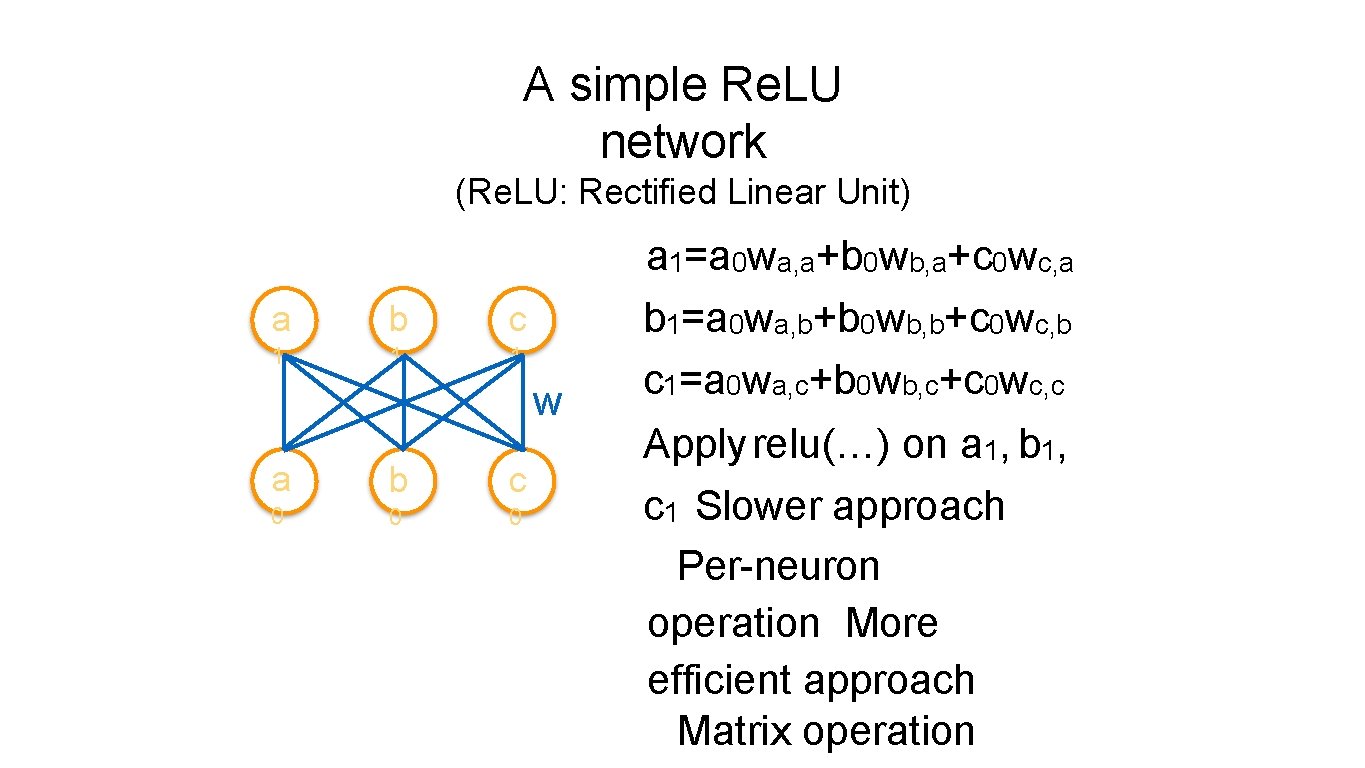

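As matrix operations:

$$\begin{bmatrix} a_0 & b_0 & c_0 \end{bmatrix} \begin{bmatrix} w_{a,a} & w_{a,b} & w_{a,c} \\ w_{b,a} & w_{b,b} & w_{b,c} \\ w_{c,a} & w_{c,b} & w_{c,c} \end{bmatrix} = \begin{bmatrix} a_1 & b_1 & c_1 \end{bmatrix}$$

followed by $a_1 \leftarrow \mathrm{relu}(a_1)$, $b_1 \leftarrow \mathrm{relu}(b_1)$, $c_1 \leftarrow \mathrm{relu}(c_1)$.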

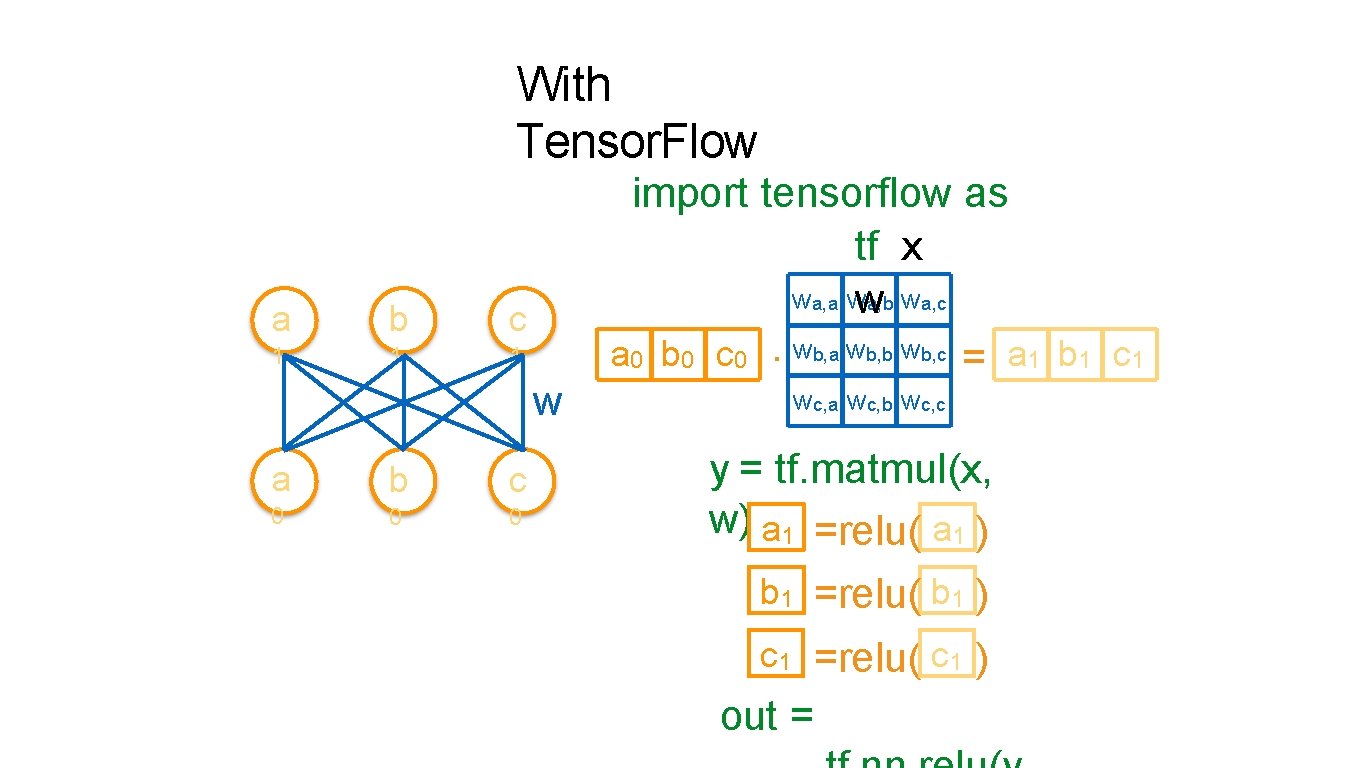

With TensorFlow: the input row $x = [a_0\ b_0\ c_0]$ times the weight matrix $w$ gives $[a_1\ b_1\ c_1]$, and relu is then applied to each entry.

```python
import tensorflow as tf

y = tf.matmul(x, w)
out = ...
```

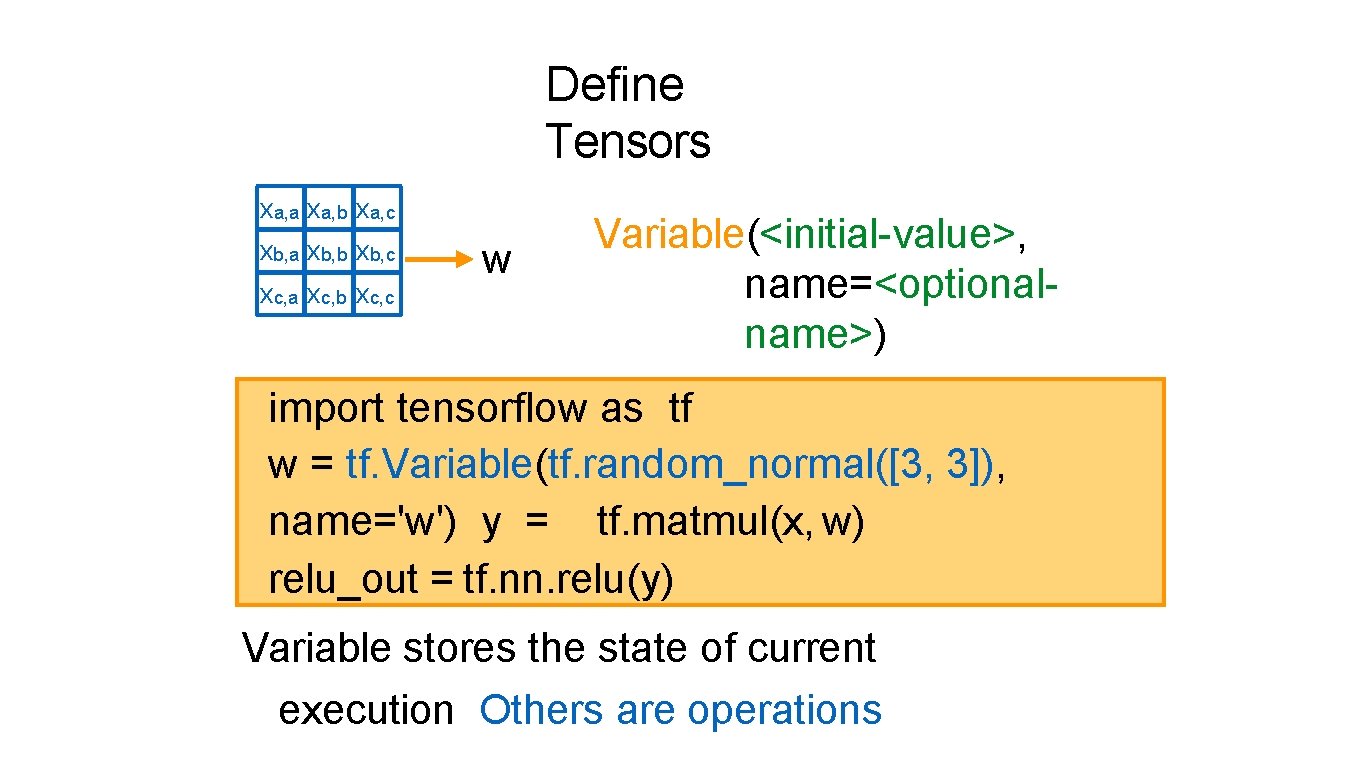

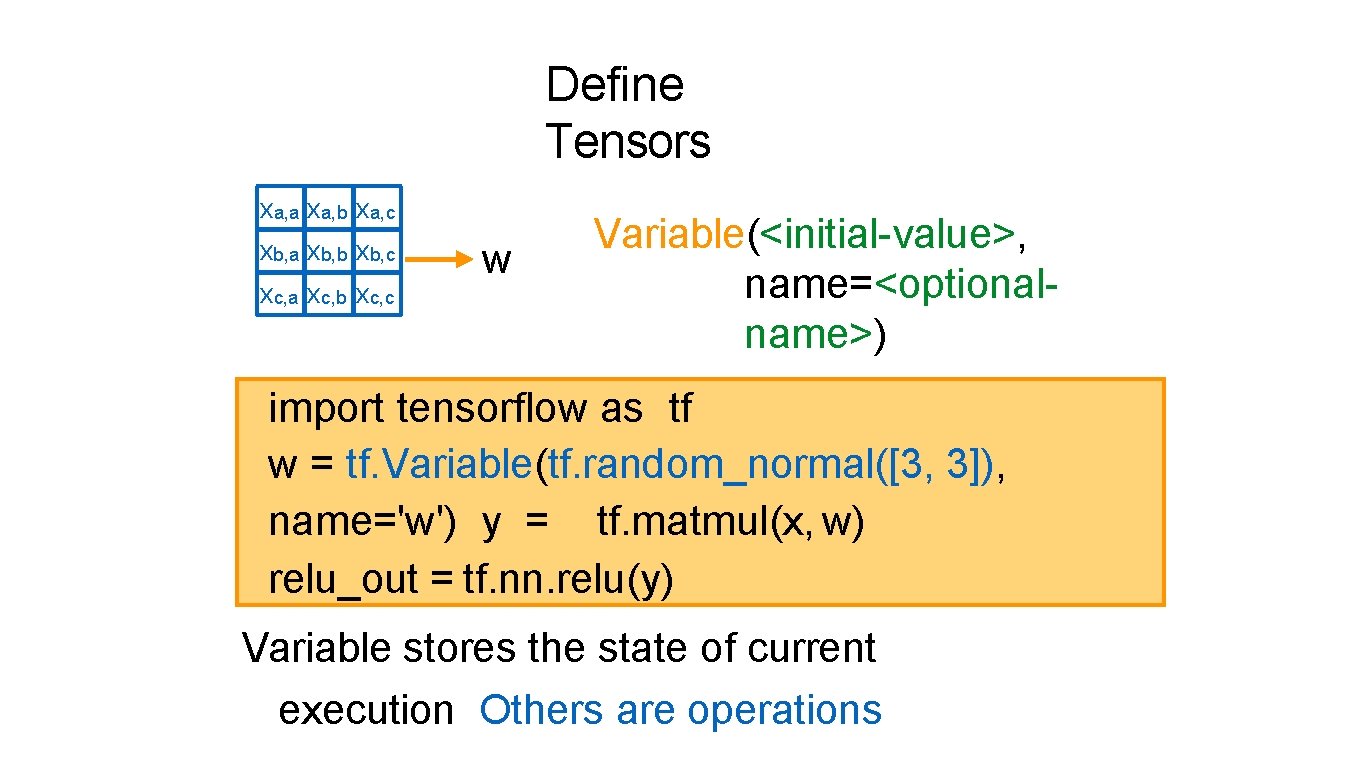

Define tensors. `Variable(<initial-value>, name=<optional-name>)` creates a variable. A Variable stores the state of the current execution; the other nodes are operations.

```python
import tensorflow as tf

w = tf.Variable(tf.random_normal([3, 3]), name='w')
y = tf.matmul(x, w)
relu_out = tf.nn.relu(y)
```

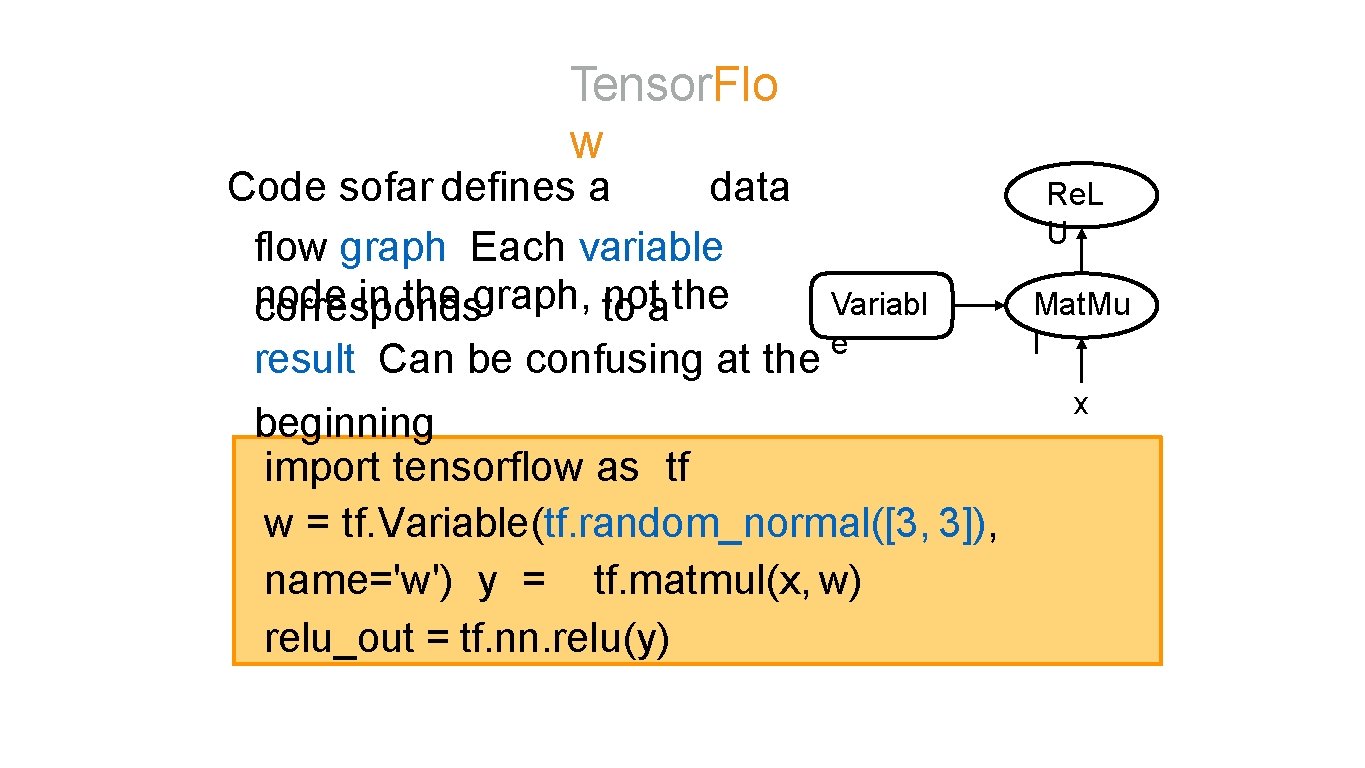

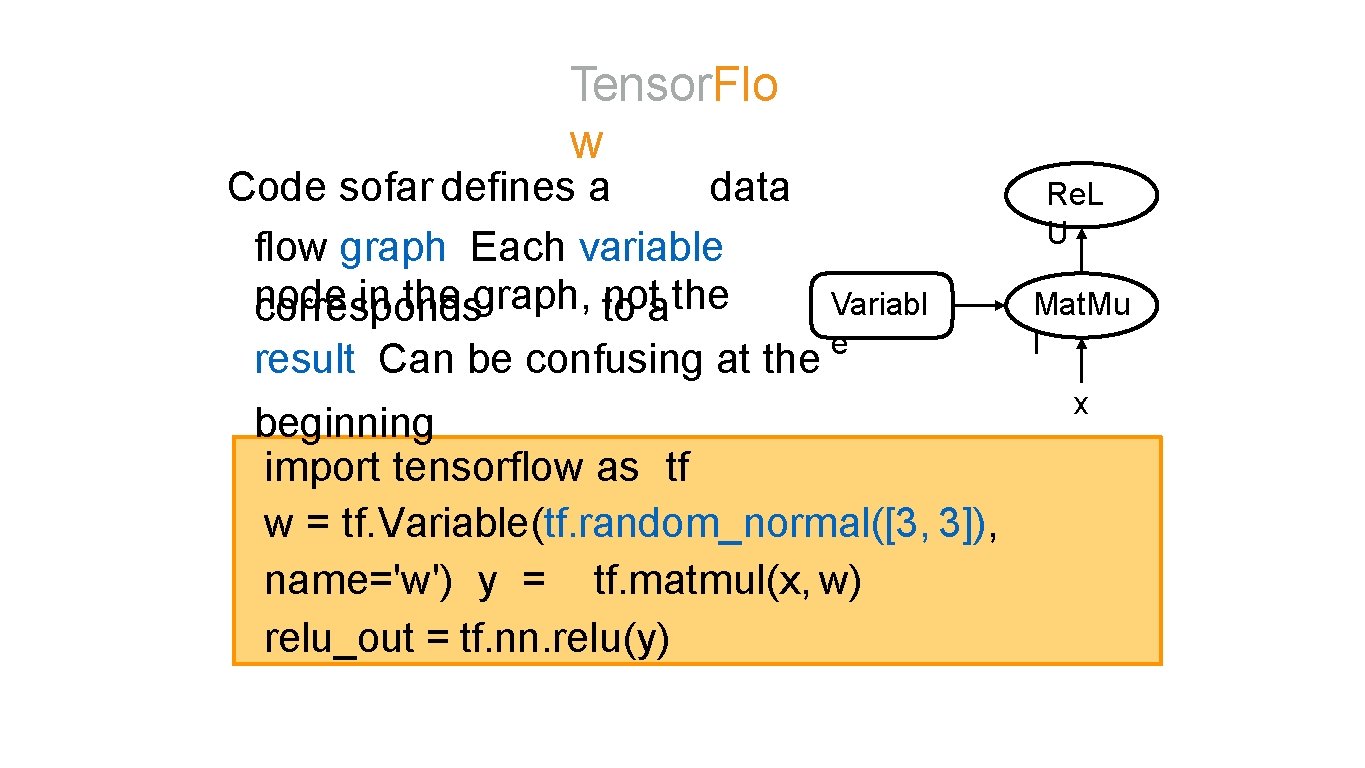

TensorFlow: the code so far defines a data flow graph. Each variable corresponds to a node in the graph, not to the result; this can be confusing at the beginning.

```python
import tensorflow as tf

w = tf.Variable(tf.random_normal([3, 3]), name='w')
y = tf.matmul(x, w)
relu_out = tf.nn.relu(y)
```

(Figure: graph with nodes x, Variable, MatMul, ReLU.)

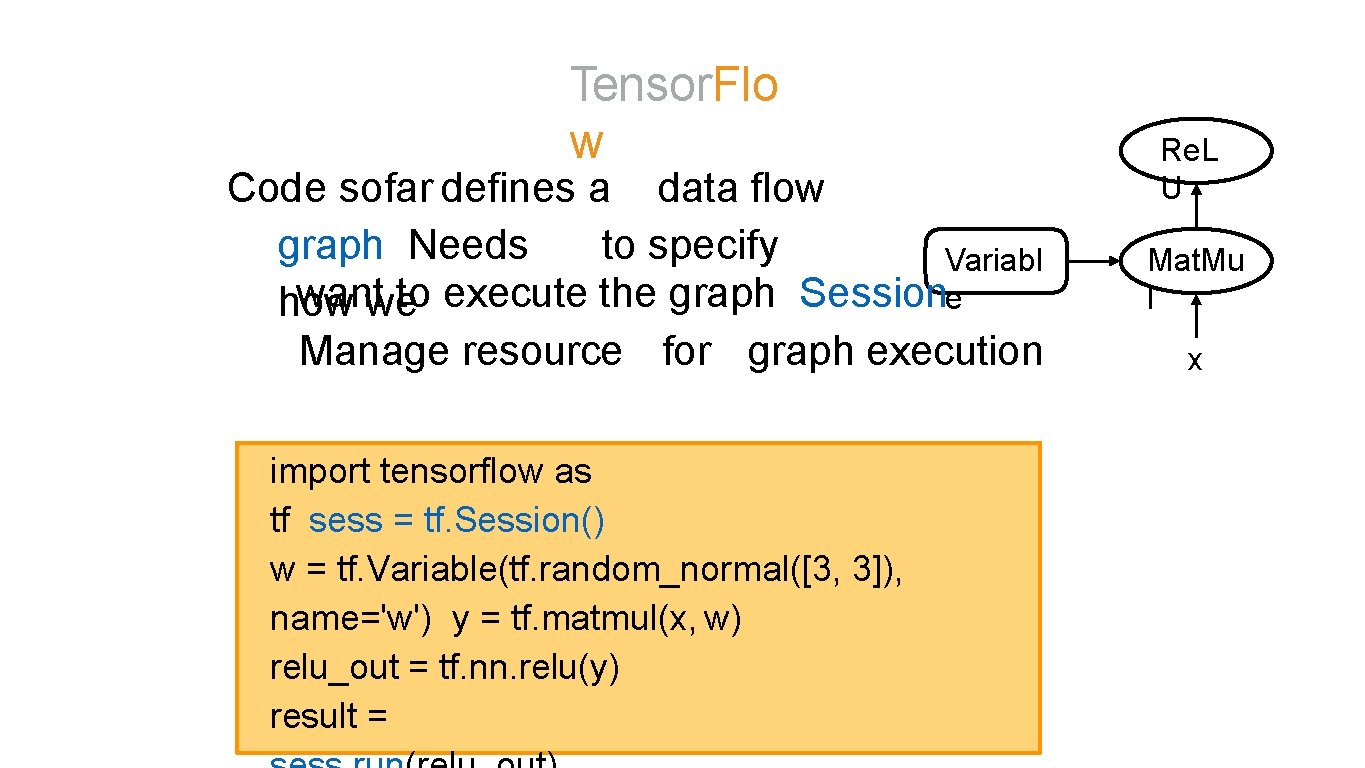

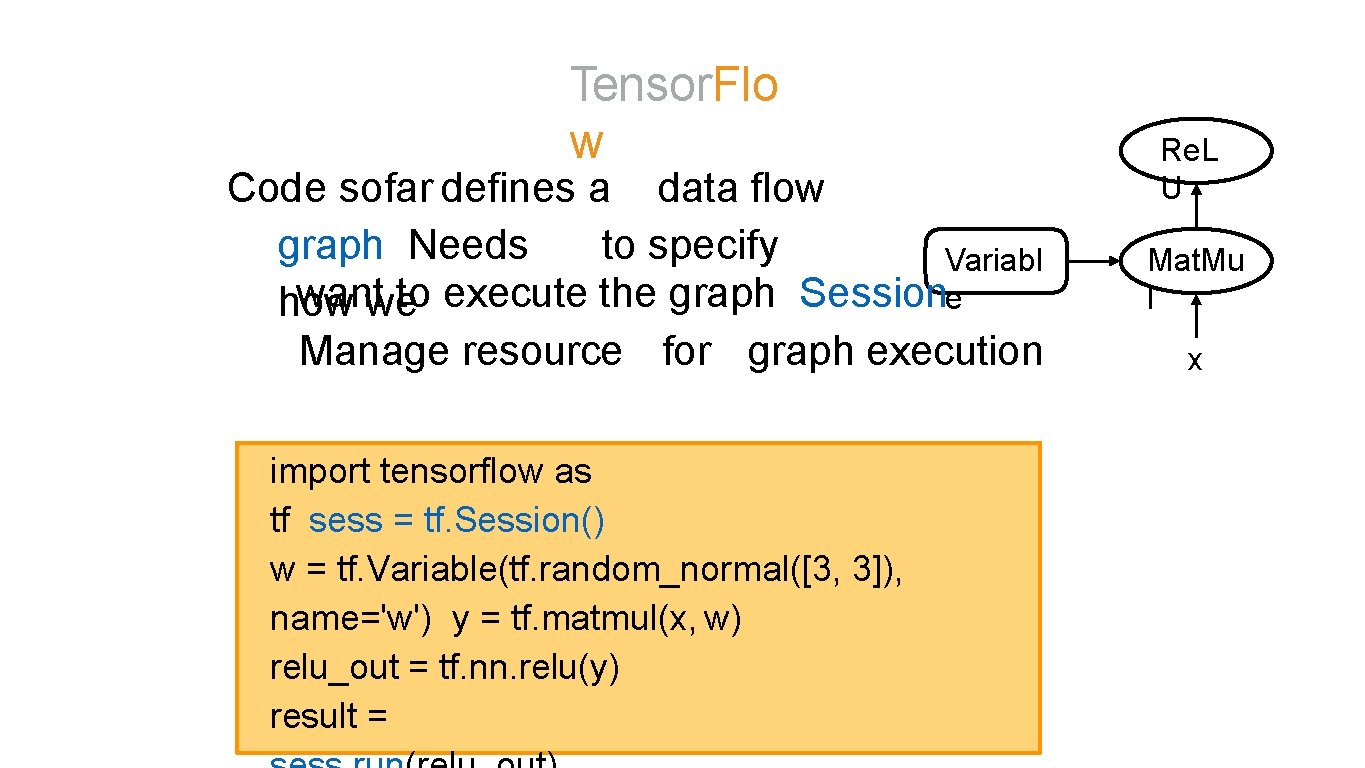

TensorFlow: the code so far only defines a data flow graph, so we need to specify how we want to execute it. A Session manages the resources for graph execution.

```python
import tensorflow as tf

sess = tf.Session()
w = tf.Variable(tf.random_normal([3, 3]), name='w')
y = tf.matmul(x, w)
relu_out = tf.nn.relu(y)
result = ...
```

(Figure: graph with nodes x, Variable, MatMul, ReLU.)

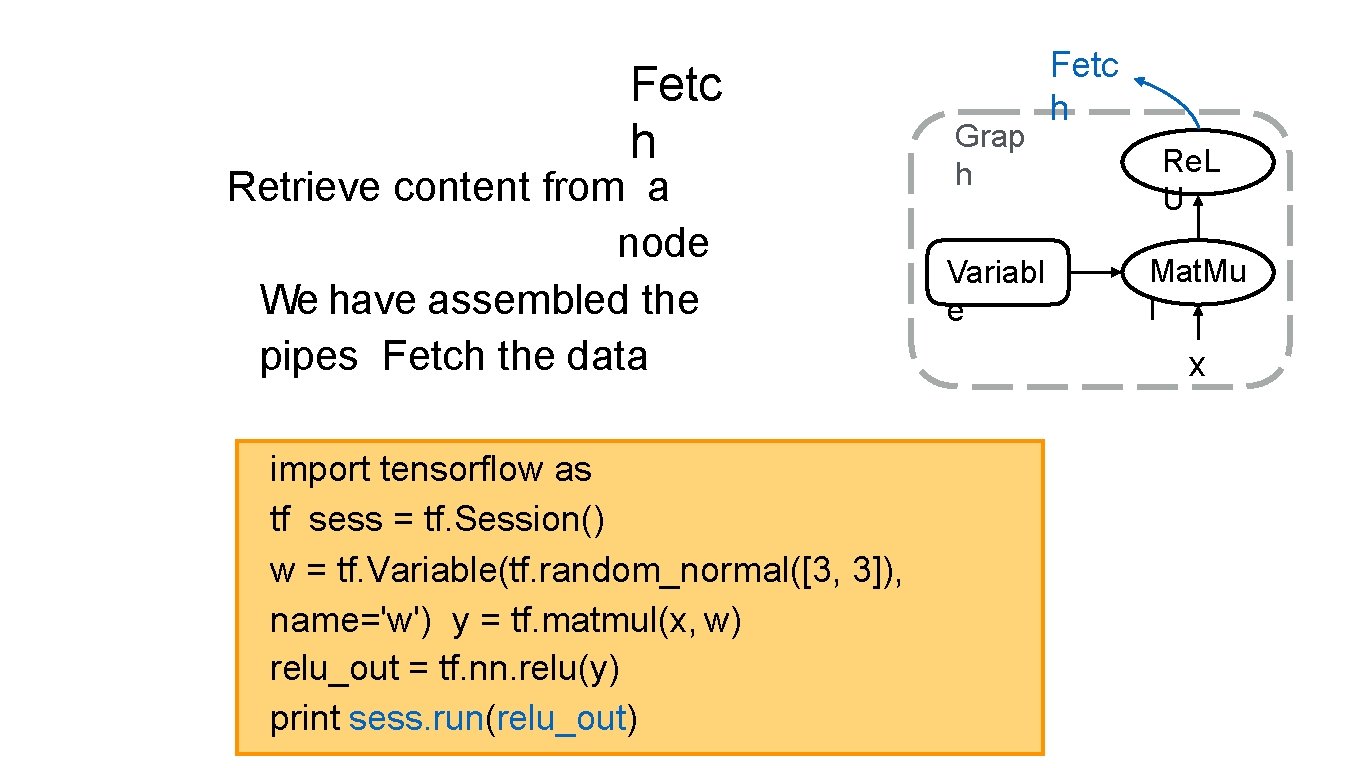

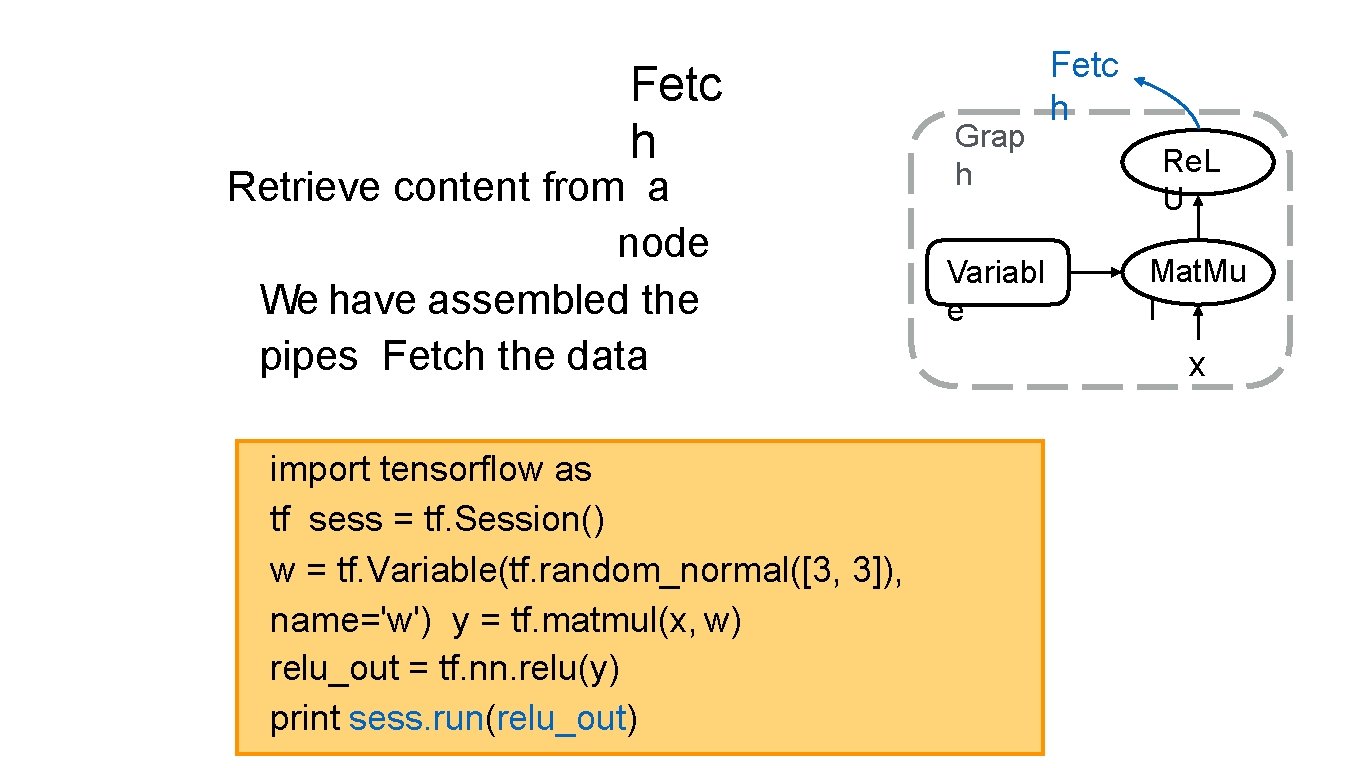

Fetch: retrieve the content of a node. We have assembled the pipes; now fetch the data.

```python
import tensorflow as tf

sess = tf.Session()
w = tf.Variable(tf.random_normal([3, 3]), name='w')
y = tf.matmul(x, w)
relu_out = tf.nn.relu(y)
print(sess.run(relu_out))
```

(Figure: graph with x, Variable, MatMul, ReLU; the fetch reads from ReLU.)

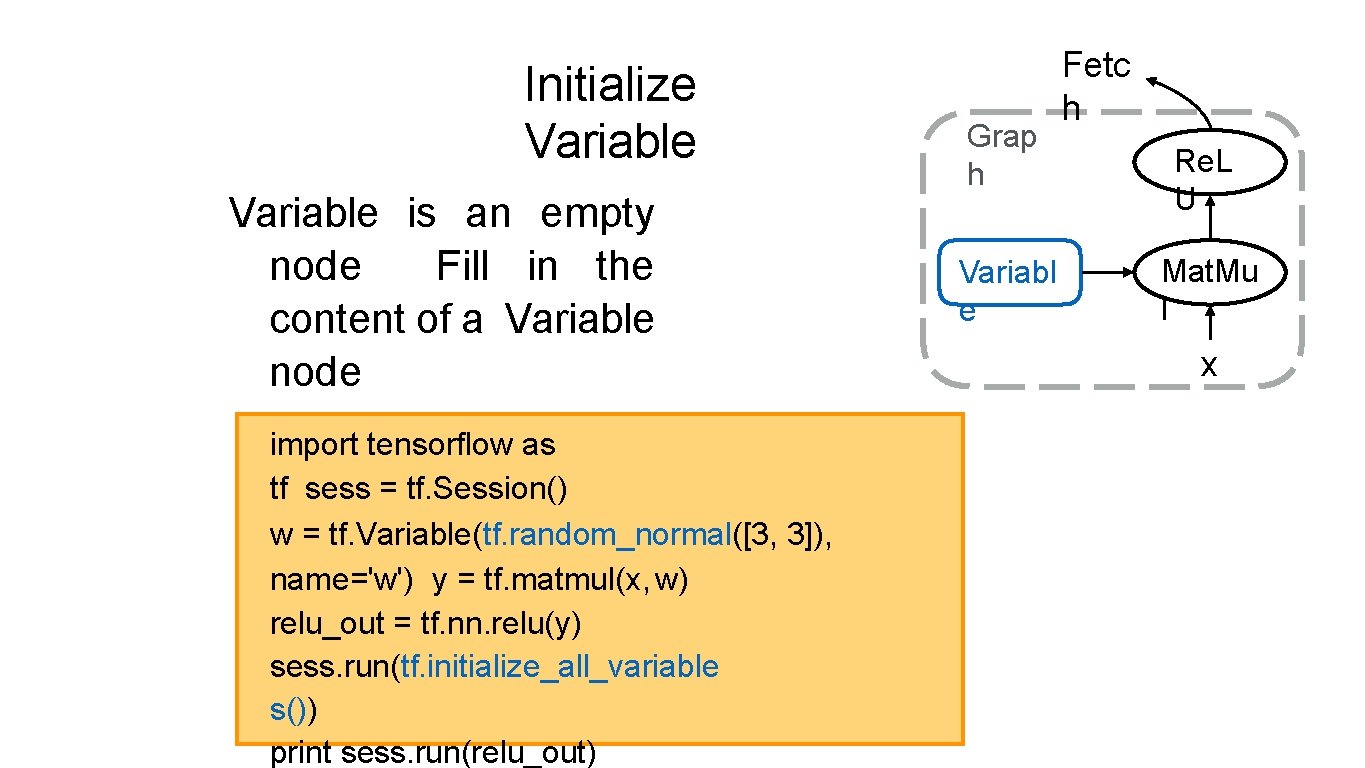

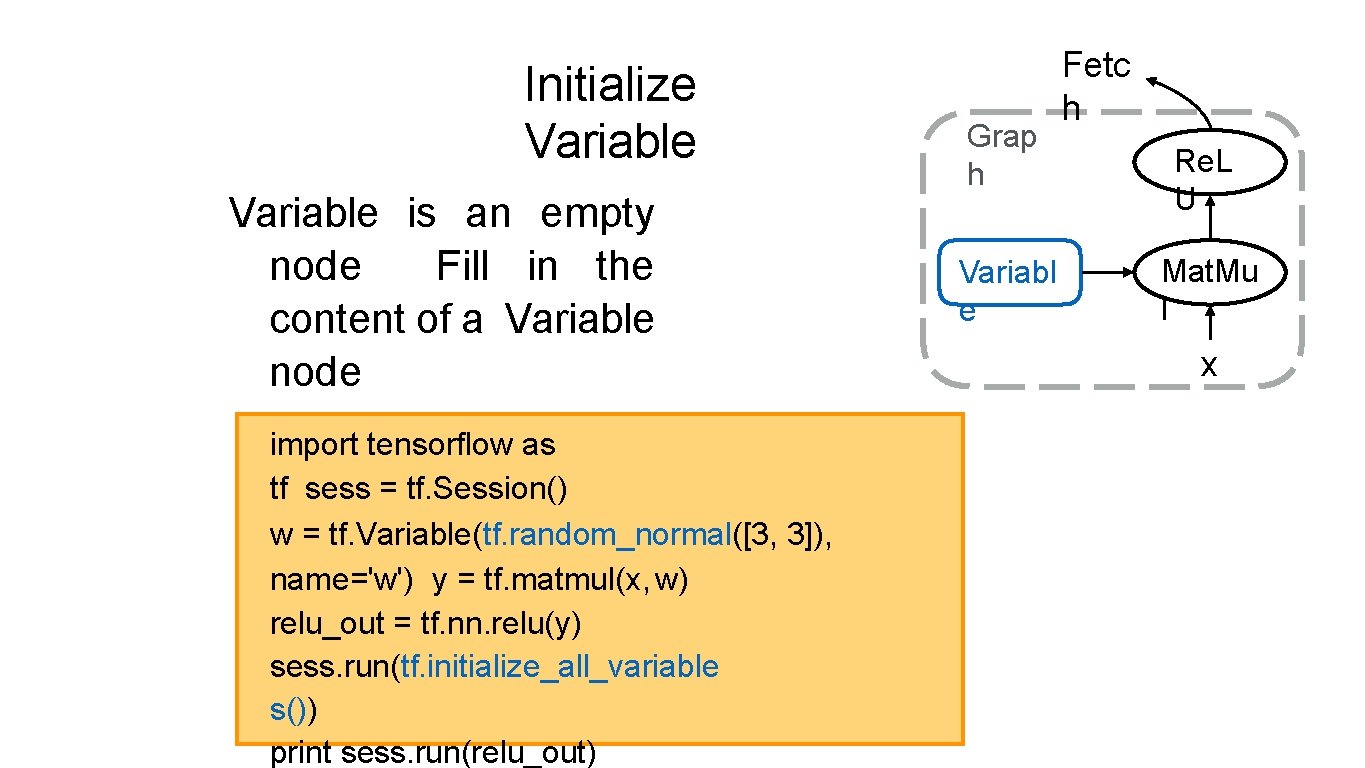

Initialize: a Variable is an empty node until it is initialized, so fill in the content of each Variable node before fetching.

```python
import tensorflow as tf

sess = tf.Session()
w = tf.Variable(tf.random_normal([3, 3]), name='w')
y = tf.matmul(x, w)
relu_out = tf.nn.relu(y)
sess.run(tf.initialize_all_variables())
print(sess.run(relu_out))
```

(Figure: graph with x, Variable, MatMul, ReLU; the fetch reads from ReLU.)

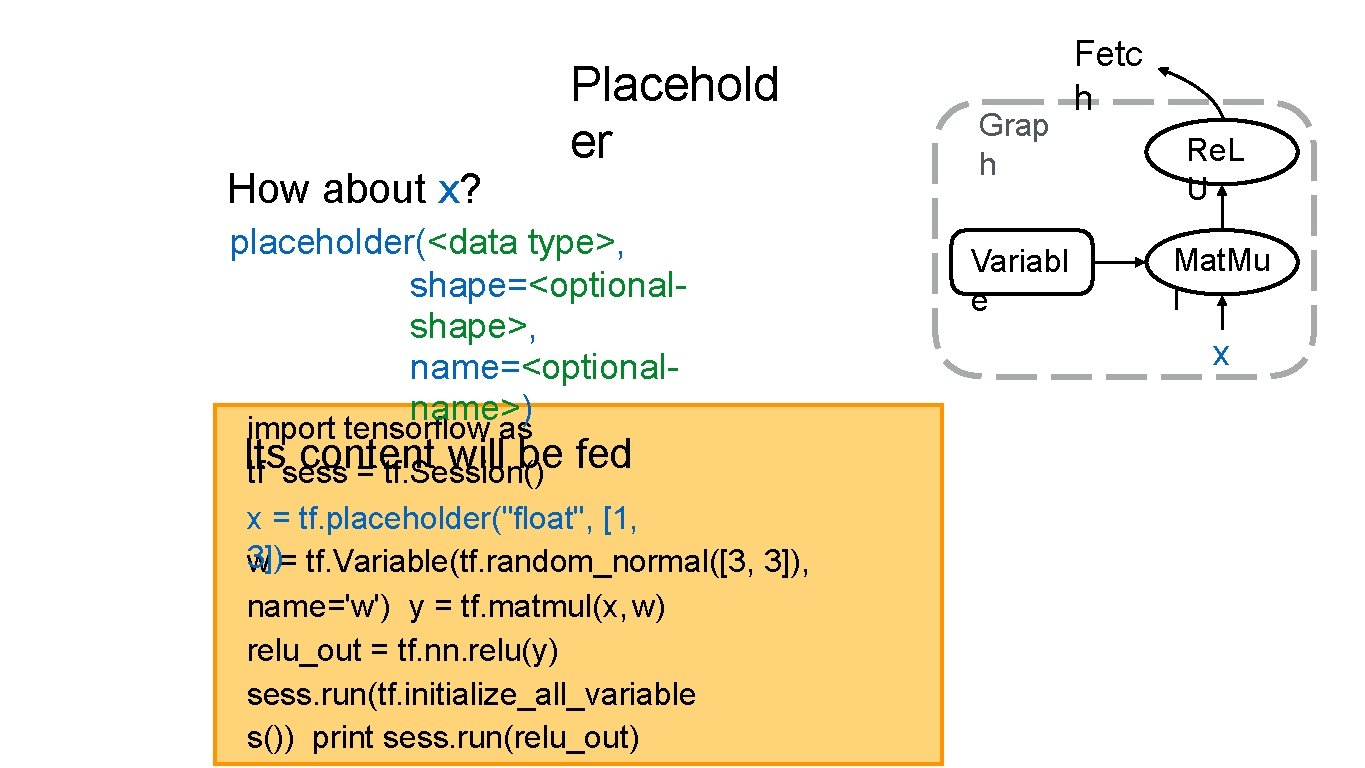

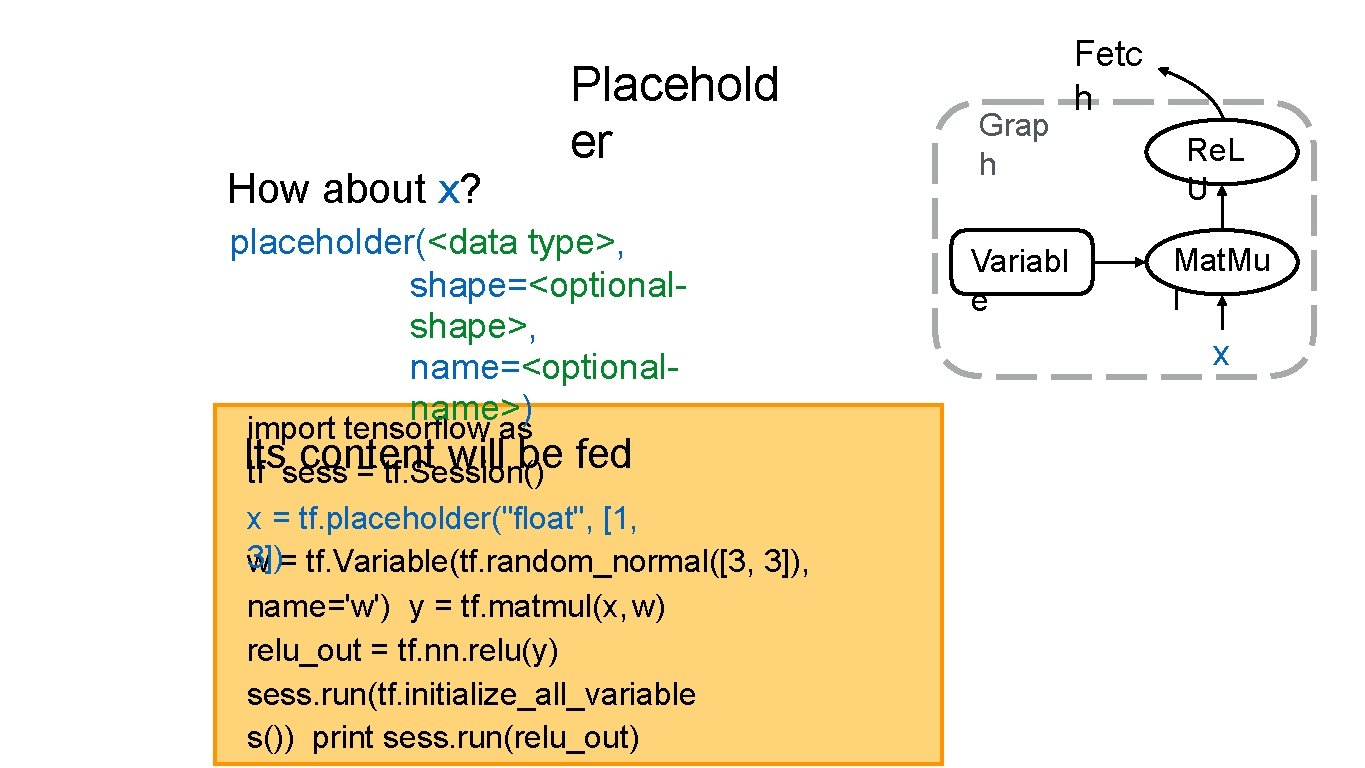

How about x? Use a placeholder, `placeholder(<data type>, shape=<optional-shape>, name=<optional-name>)`; its content will be fed in when the graph runs.

```python
import tensorflow as tf

sess = tf.Session()
x = tf.placeholder("float", [1, 3])
w = tf.Variable(tf.random_normal([3, 3]), name='w')
y = tf.matmul(x, w)
relu_out = tf.nn.relu(y)
sess.run(tf.initialize_all_variables())
print(sess.run(relu_out))
```

(Figure: graph with x, Variable, MatMul, ReLU; the fetch reads from ReLU.)

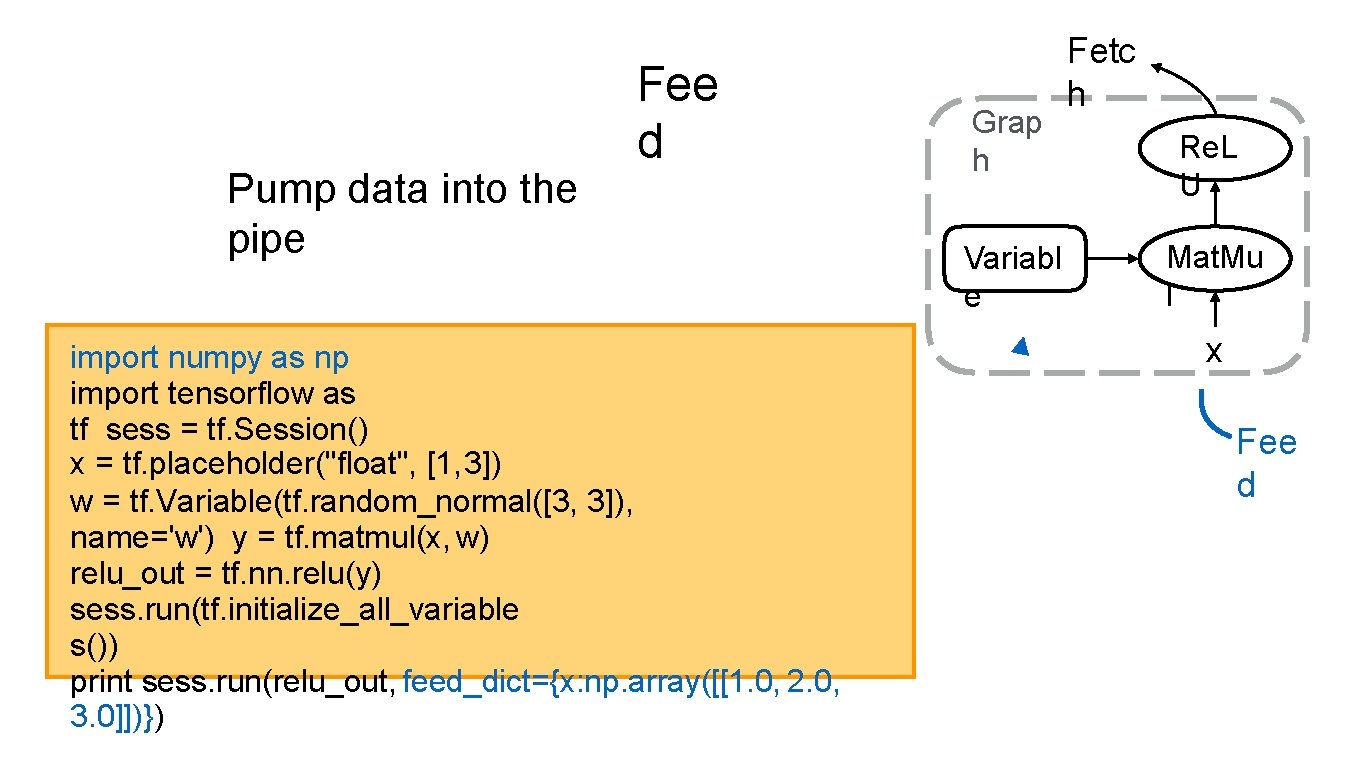

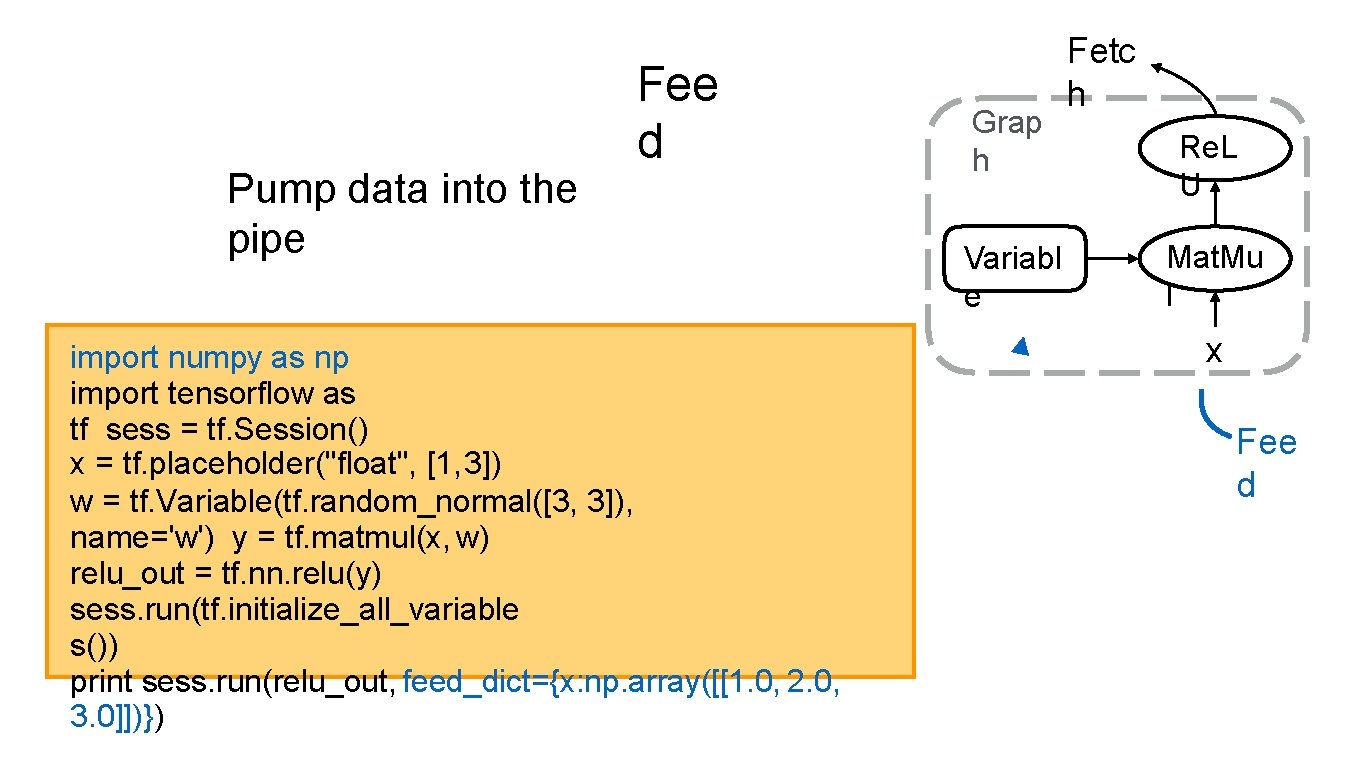

Feed: pump data into the pipe.

```python
import numpy as np
import tensorflow as tf

sess = tf.Session()
x = tf.placeholder("float", [1, 3])
w = tf.Variable(tf.random_normal([3, 3]), name='w')
y = tf.matmul(x, w)
relu_out = tf.nn.relu(y)
sess.run(tf.initialize_all_variables())
print(sess.run(relu_out, feed_dict={x: np.array([[1.0, 2.0, 3.0]])}))
```

(Figure: graph with x, Variable, MatMul, ReLU; the feed enters at x and the fetch reads from ReLU.)
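To make the build-then-run model of these slides concrete, here is a toy dataflow graph in plain Python. It is a hypothetical sketch, not TensorFlow's API or implementation: `Node`, `Placeholder`, `Variable`, `Session`, and `initialize` are illustrative names. Building nodes only records structure; `Session.run` evaluates the fetched node on demand, reading Variables from session state (so they must be initialized first) and placeholders from `feed_dict`.

```python
class Node:
    """An operation node: applies `op` to the values of its input nodes."""
    def __init__(self, op, inputs=()):
        self.op = op
        self.inputs = inputs

class Placeholder(Node):
    """A node whose value must be supplied through feed_dict."""
    def __init__(self):
        super().__init__(op=None)

class Variable(Node):
    """A node whose value lives in session state after initialization."""
    def __init__(self, initial_value):
        super().__init__(op=None)
        self.initial_value = initial_value

class Session:
    """Holds variable state and evaluates fetched nodes on demand."""
    def __init__(self):
        self.state = {}

    def initialize(self, variables):
        for v in variables:
            self.state[v] = v.initial_value

    def run(self, fetch, feed_dict=None):
        feed_dict = feed_dict or {}

        def evaluate(node):
            if isinstance(node, Placeholder):
                return feed_dict[node]        # KeyError if it was not fed
            if isinstance(node, Variable):
                return self.state[node]       # KeyError if not initialized
            return node.op(*[evaluate(n) for n in node.inputs])

        return evaluate(fetch)

def matmul(row, m):
    """Row vector times matrix."""
    return [sum(row[k] * m[k][c] for k in range(len(m))) for c in range(len(m[0]))]

# Building the graph: nothing is computed yet.
x = Placeholder()
w = Variable([[1.0, 0.0, 0.0], [0.0, -1.0, 0.0], [0.0, 0.0, 1.0]])
y = Node(matmul, (x, w))
relu_out = Node(lambda v: [max(0.0, e) for e in v], (y,))

# Running it: initialize, then feed x and fetch relu_out.
sess = Session()
sess.initialize([w])
print(sess.run(relu_out, feed_dict={x: [1.0, 2.0, 3.0]}))
```

Fetching `relu_out` without feeding `x`, or from a session that never initialized `w`, raises `KeyError` in this toy, which mirrors why the slides initialize variables and feed `x` before fetching.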

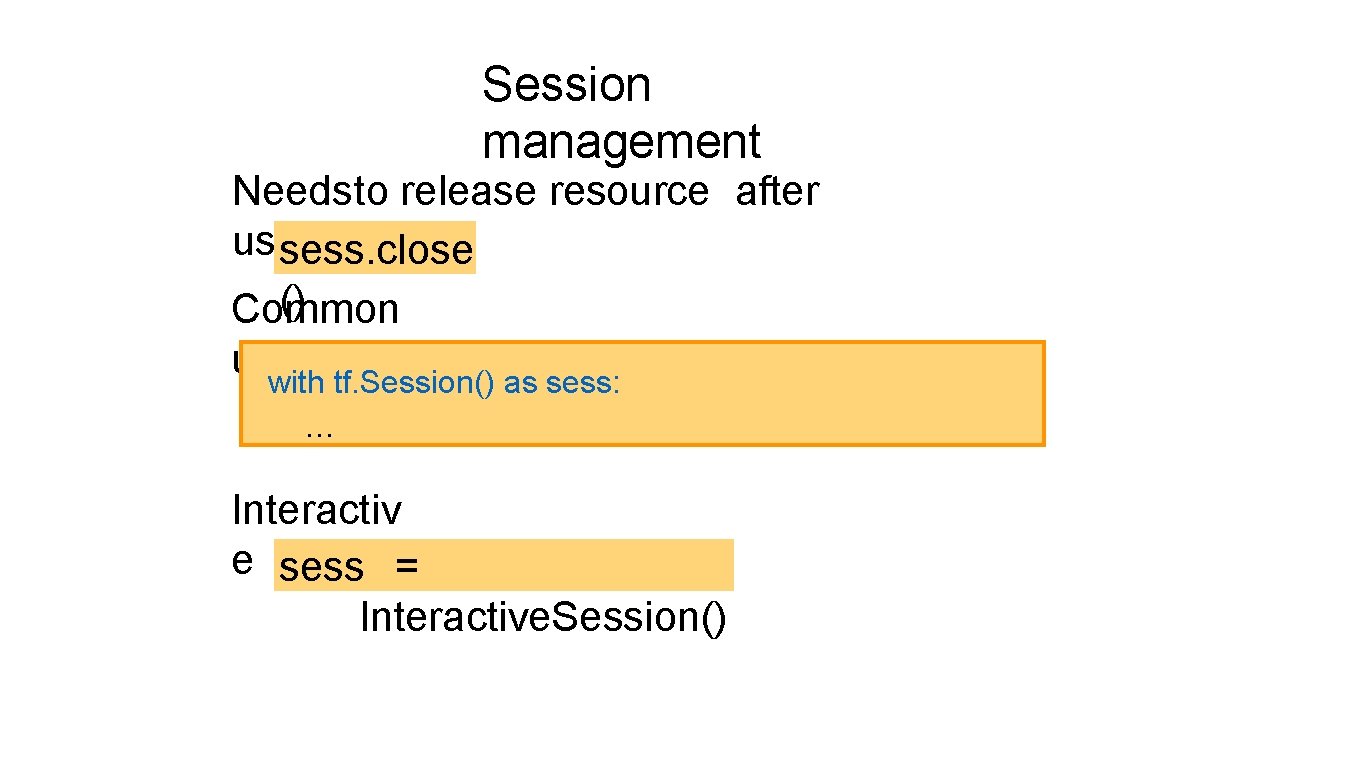

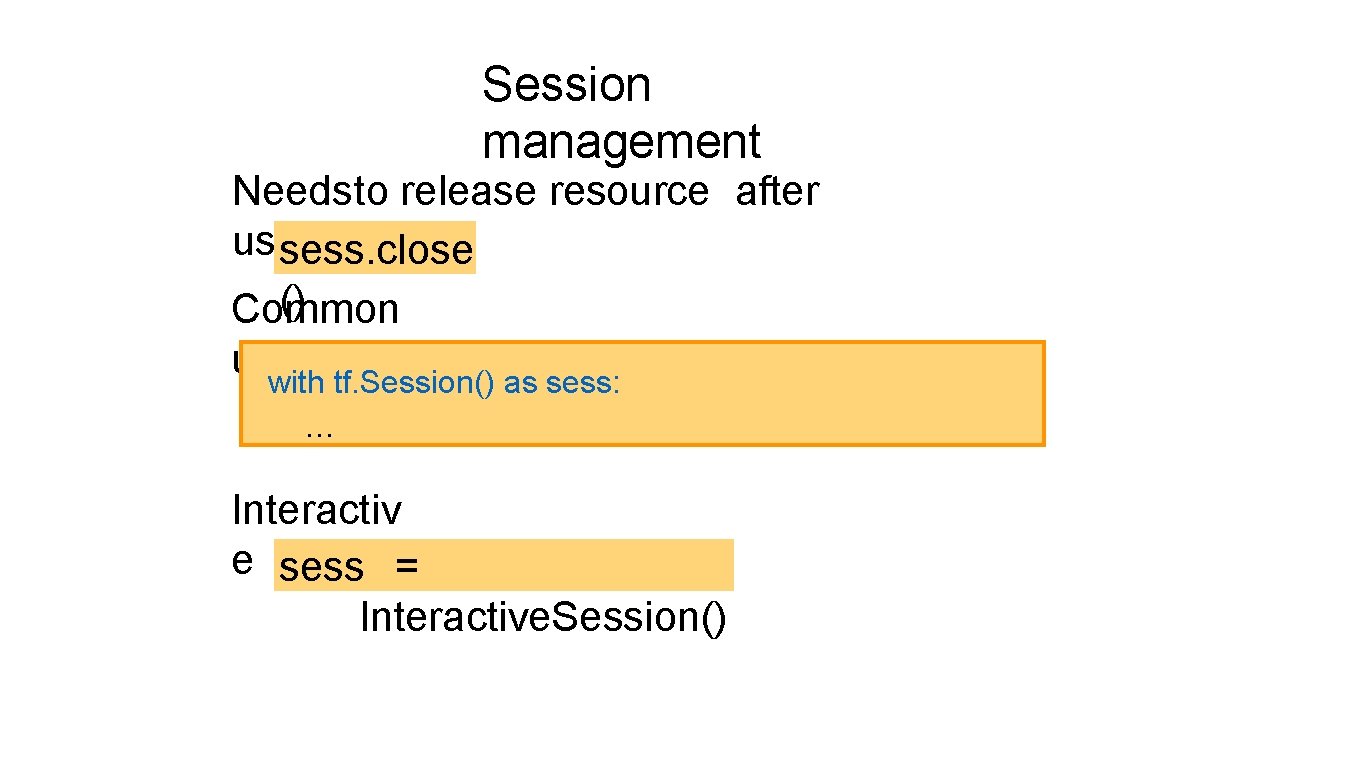

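Session management. A session needs to release its resources after use: `sess.close()`. The common usage is a `with` block, which closes the session on exit:

```python
with tf.Session() as sess:
    ...
```

For interactive use: `sess = tf.InteractiveSession()`.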

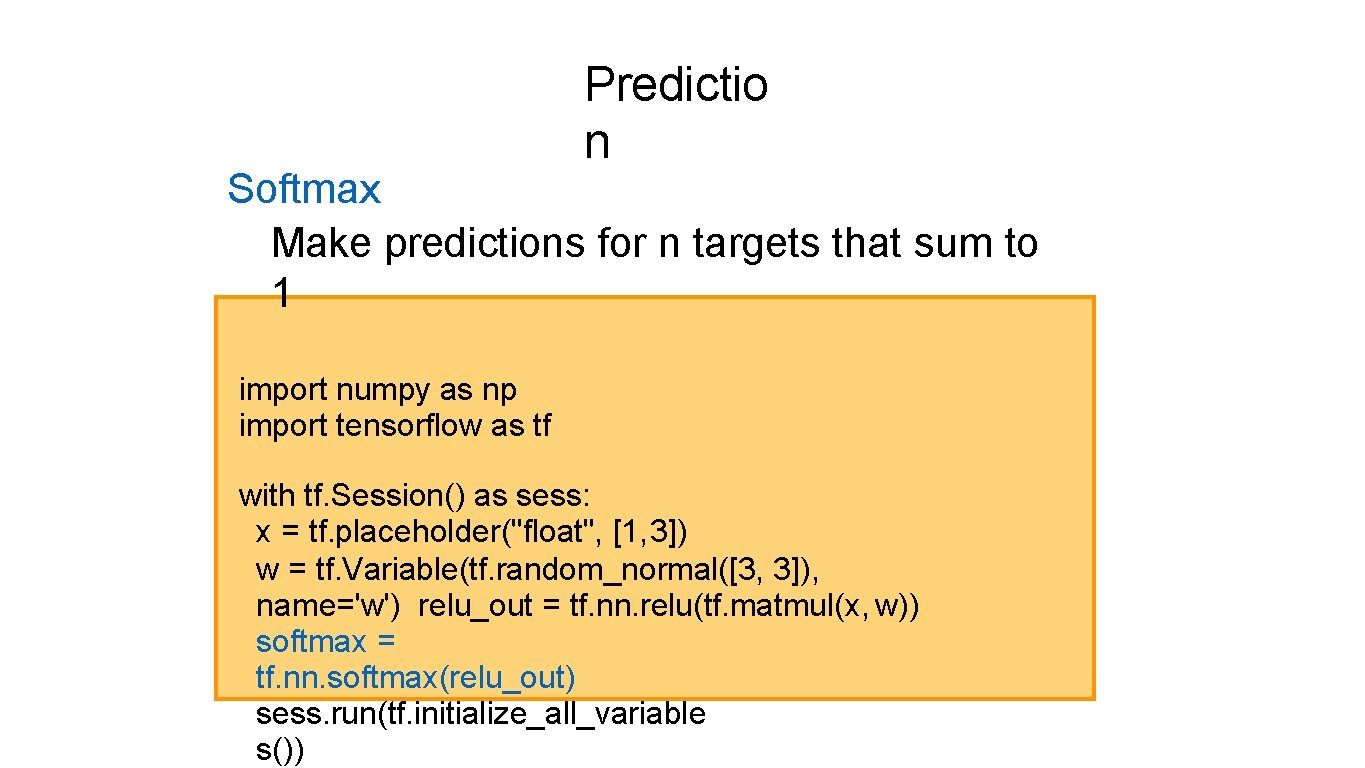

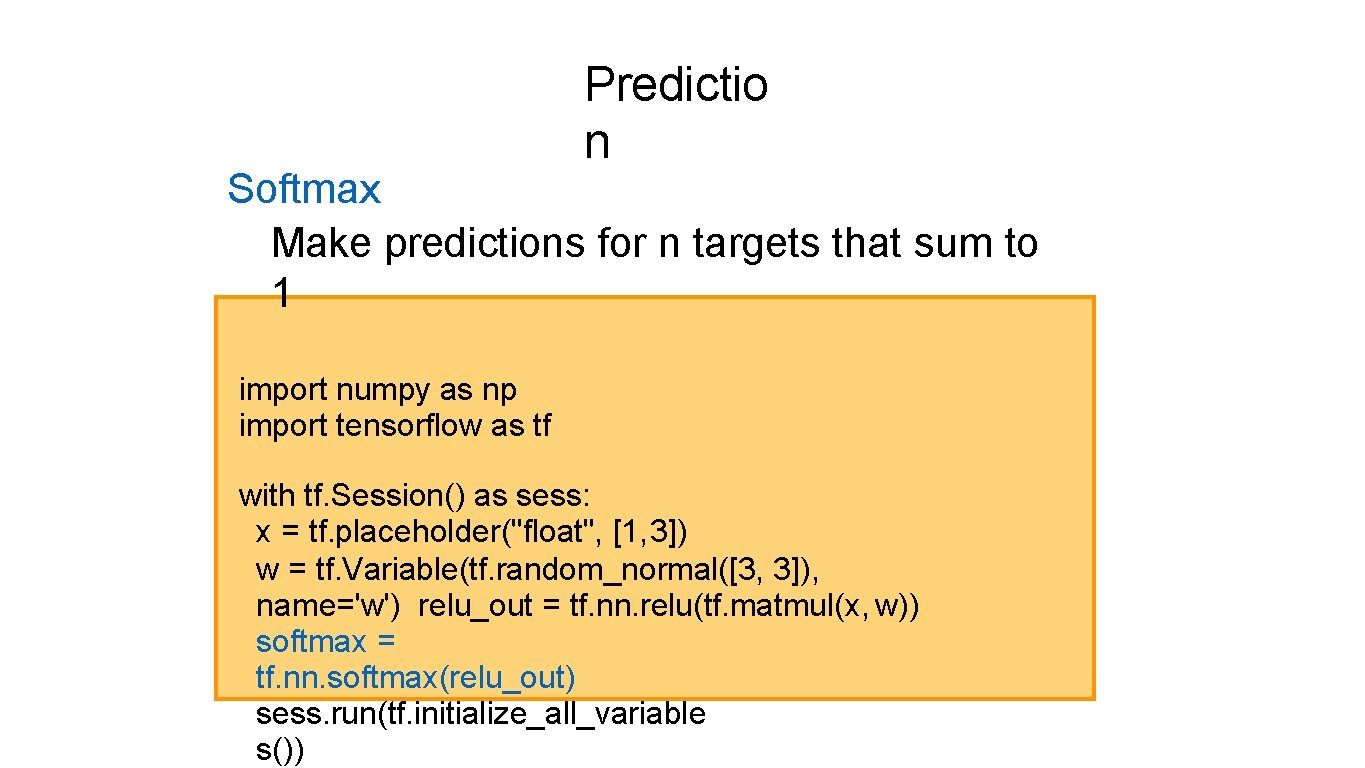

Prediction. Softmax makes predictions for n targets that sum to 1.

```python
import numpy as np
import tensorflow as tf

with tf.Session() as sess:
    x = tf.placeholder("float", [1, 3])
    w = tf.Variable(tf.random_normal([3, 3]), name='w')
    relu_out = tf.nn.relu(tf.matmul(x, w))
    softmax = tf.nn.softmax(relu_out)
    sess.run(tf.initialize_all_variables())
```
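What softmax computes can be sketched in plain Python (an illustration of the formula, not TensorFlow's implementation): exponentiate each logit and divide by the sum, so the outputs are positive and sum to 1. Subtracting the maximum first is a common stability trick; it does not change the result, because softmax is unchanged when the same constant is added to every logit.

```python
import math

def softmax(logits):
    """Map a list of logits to probabilities that are positive and sum to 1."""
    m = max(logits)                        # shift for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([1.0, 2.0, 3.0])
print(probs, sum(probs))
```

The largest logit gets the largest probability, but unlike taking the argmax, every target keeps a non-zero share.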

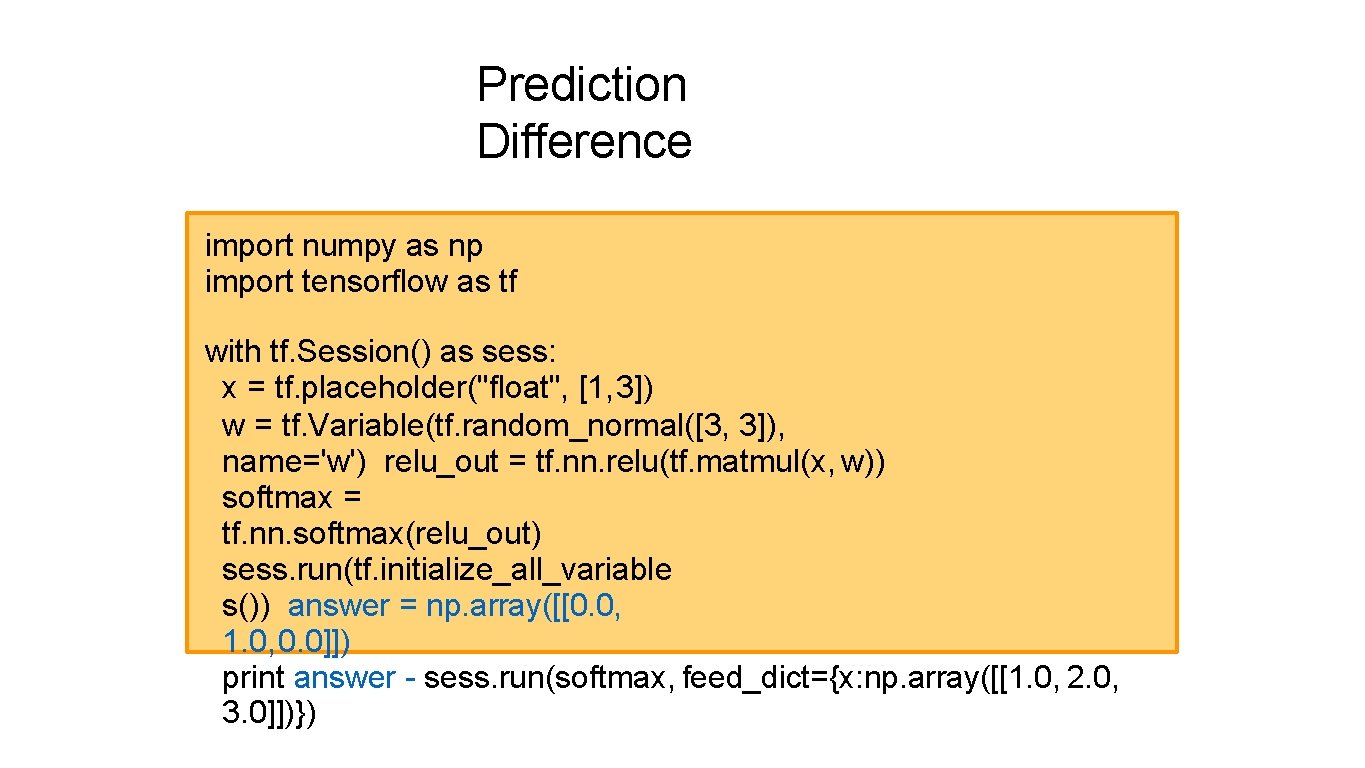

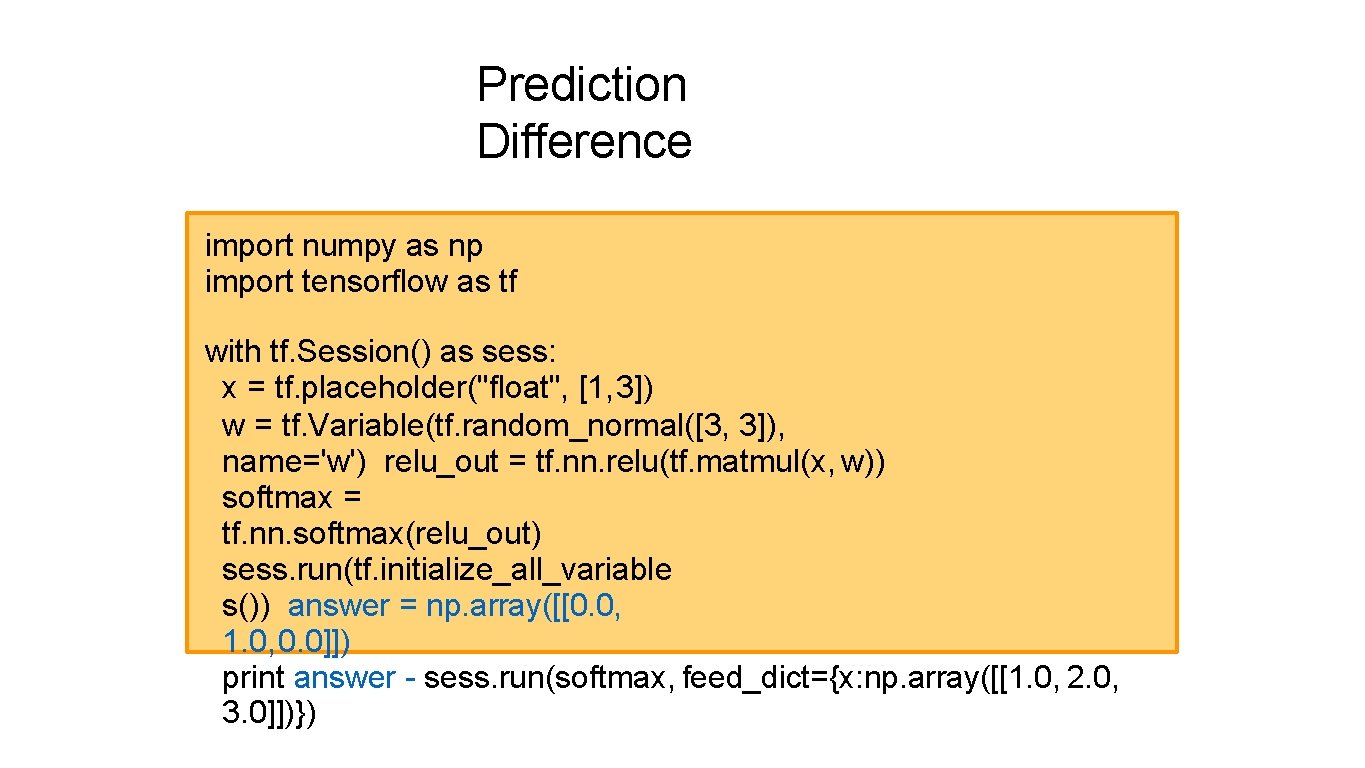

Prediction difference: compare the softmax output with the expected answer.

```python
import numpy as np
import tensorflow as tf

with tf.Session() as sess:
    x = tf.placeholder("float", [1, 3])
    w = tf.Variable(tf.random_normal([3, 3]), name='w')
    relu_out = tf.nn.relu(tf.matmul(x, w))
    softmax = tf.nn.softmax(relu_out)
    sess.run(tf.initialize_all_variables())
    answer = np.array([[0.0, 1.0, 0.0]])
    print(answer - sess.run(softmax, feed_dict={x: np.array([[1.0, 2.0, 3.0]])}))
```


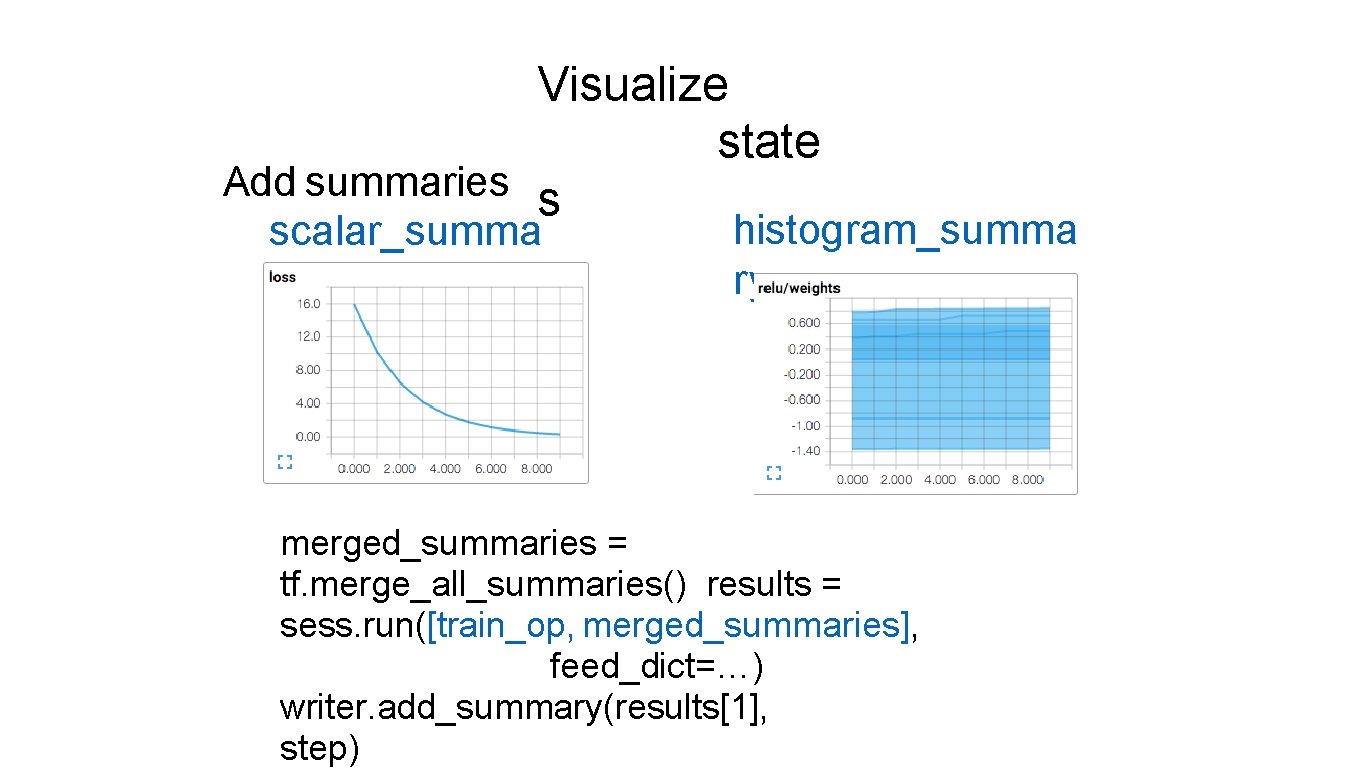

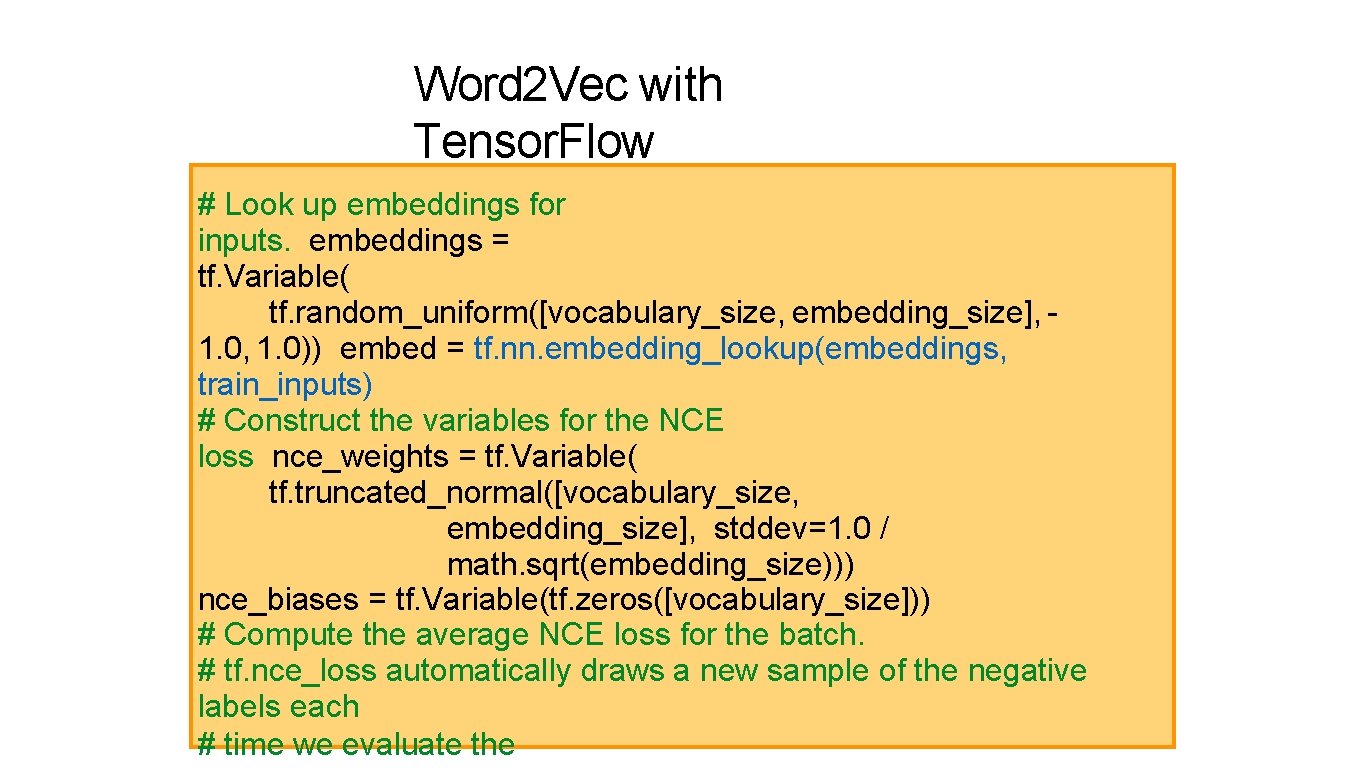

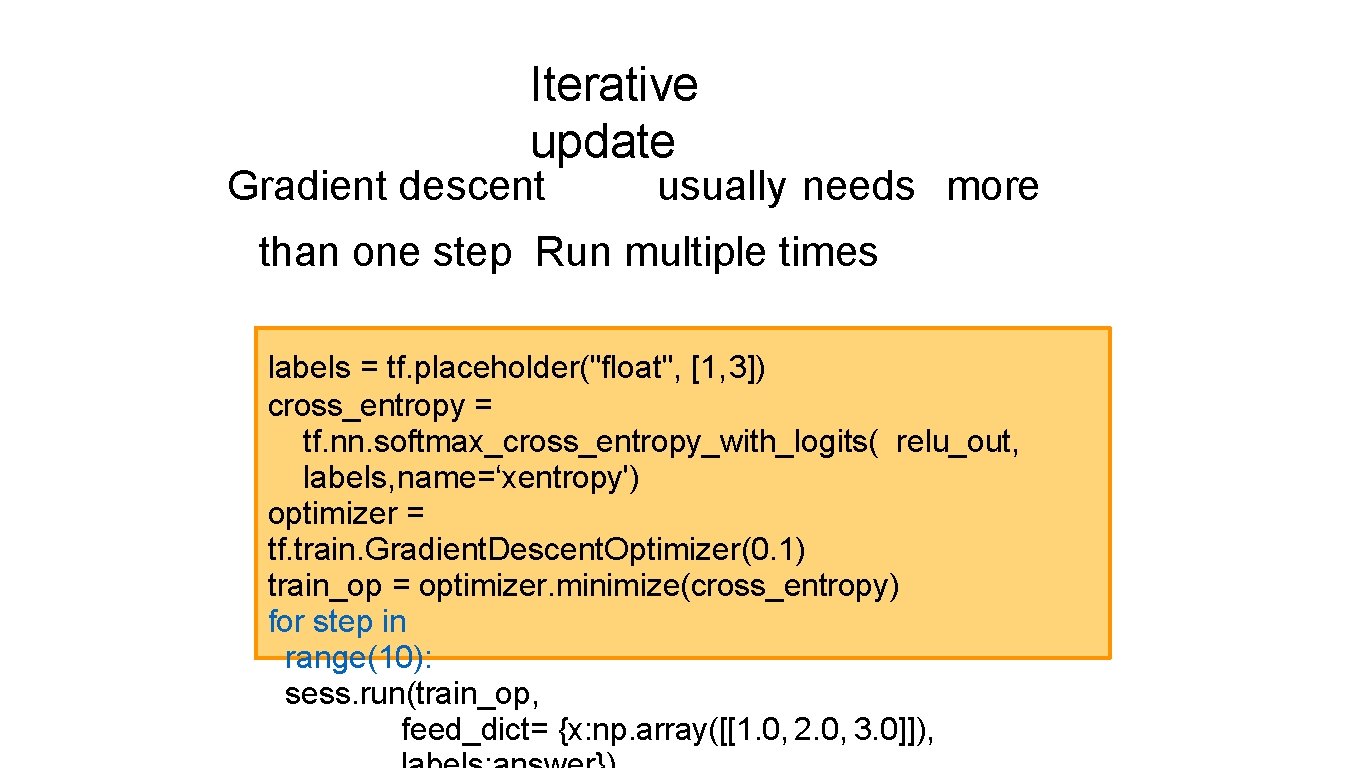

Learn parameters: loss. Define a loss function. The loss function for softmax is `softmax_cross_entropy_with_logits(logits, labels, name=<optional-name>)`.

```python
labels = tf.placeholder("float", [1, 3])
cross_entropy = tf.nn.softmax_cross_entropy_with_logits(
    logits=relu_out, labels=labels, name='xentropy')
```
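The loss can also be written out directly. For one example with logits $z$ and label distribution $t$, softmax cross-entropy is $-\sum_i t_i \log \mathrm{softmax}(z)_i = -\sum_i t_i \bigl(z_i - \log\sum_k e^{z_k}\bigr)$. Below is a plain-Python sketch of that formula; the name `xentropy_from_logits` is hypothetical. Working from logits with log-sum-exp avoids overflow and `log(0)`, which is one reason to prefer a fused softmax-plus-cross-entropy op over taking the log of an already computed softmax.

```python
import math

def xentropy_from_logits(logits, labels):
    """Softmax cross-entropy for one example, computed from raw logits.

    Uses log softmax(z)_i = z_i - logsumexp(z), with the max subtracted
    inside logsumexp so large logits do not overflow math.exp.
    """
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in logits))
    return -sum(t * (z - log_sum_exp) for t, z in zip(labels, logits))

loss = xentropy_from_logits([1.0, 2.0, 3.0], [0.0, 1.0, 0.0])
print(loss)
```

With a one-hot label the loss reduces to minus the log-probability of the correct class, so it approaches 0 as that probability approaches 1.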

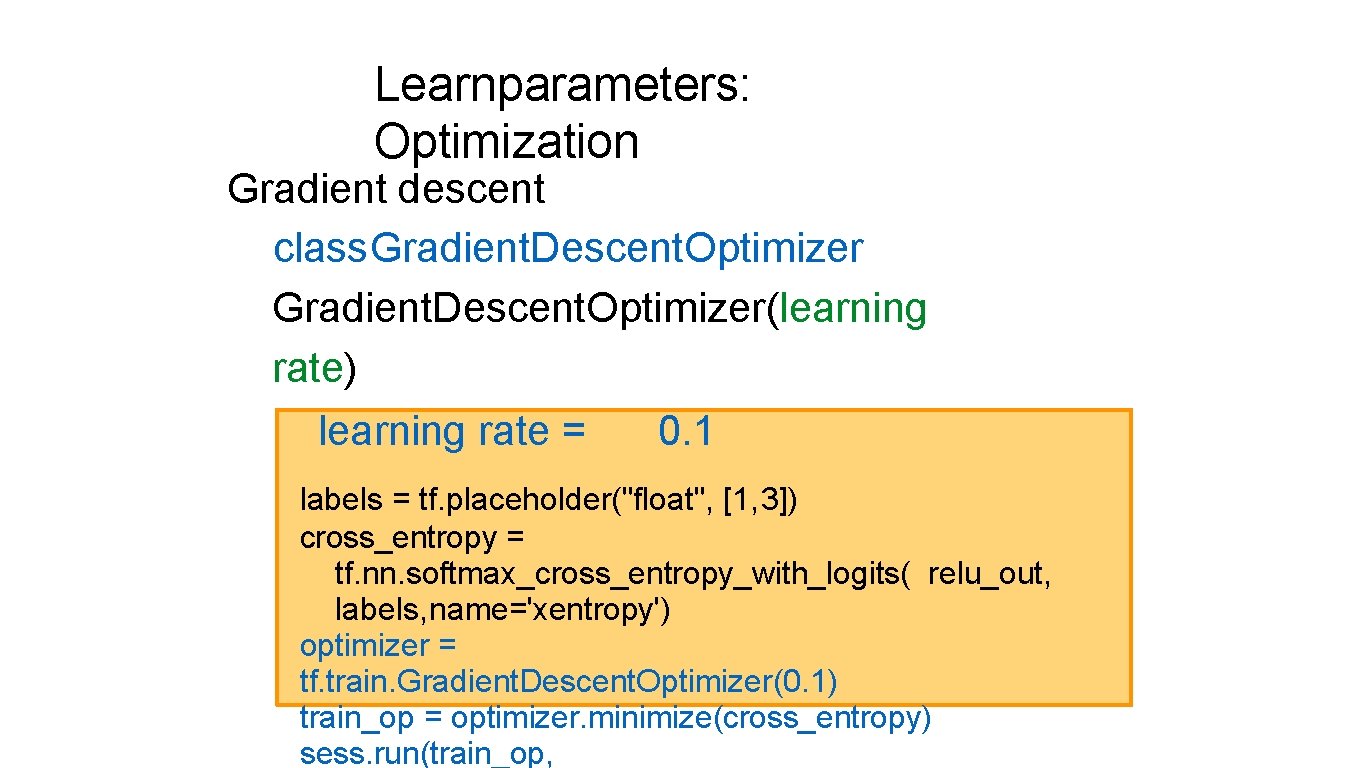

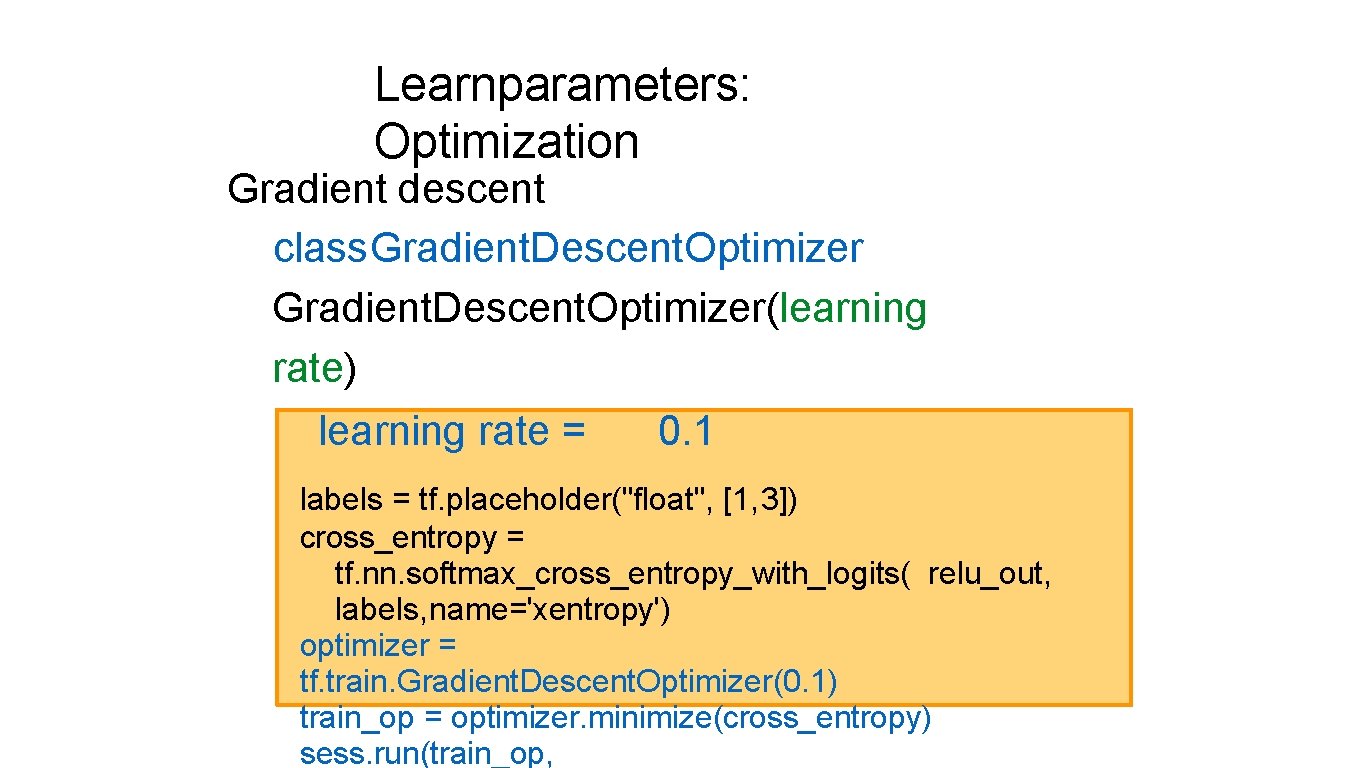

Learn parameters: optimization. Gradient descent: `class GradientDescentOptimizer(learning_rate)`, here with learning rate = 0.1.

```python
labels = tf.placeholder("float", [1, 3])
cross_entropy = tf.nn.softmax_cross_entropy_with_logits(
    logits=relu_out, labels=labels, name='xentropy')
optimizer = tf.train.GradientDescentOptimizer(0.1)
train_op = optimizer.minimize(cross_entropy)
sess.run(train_op, ...)
```

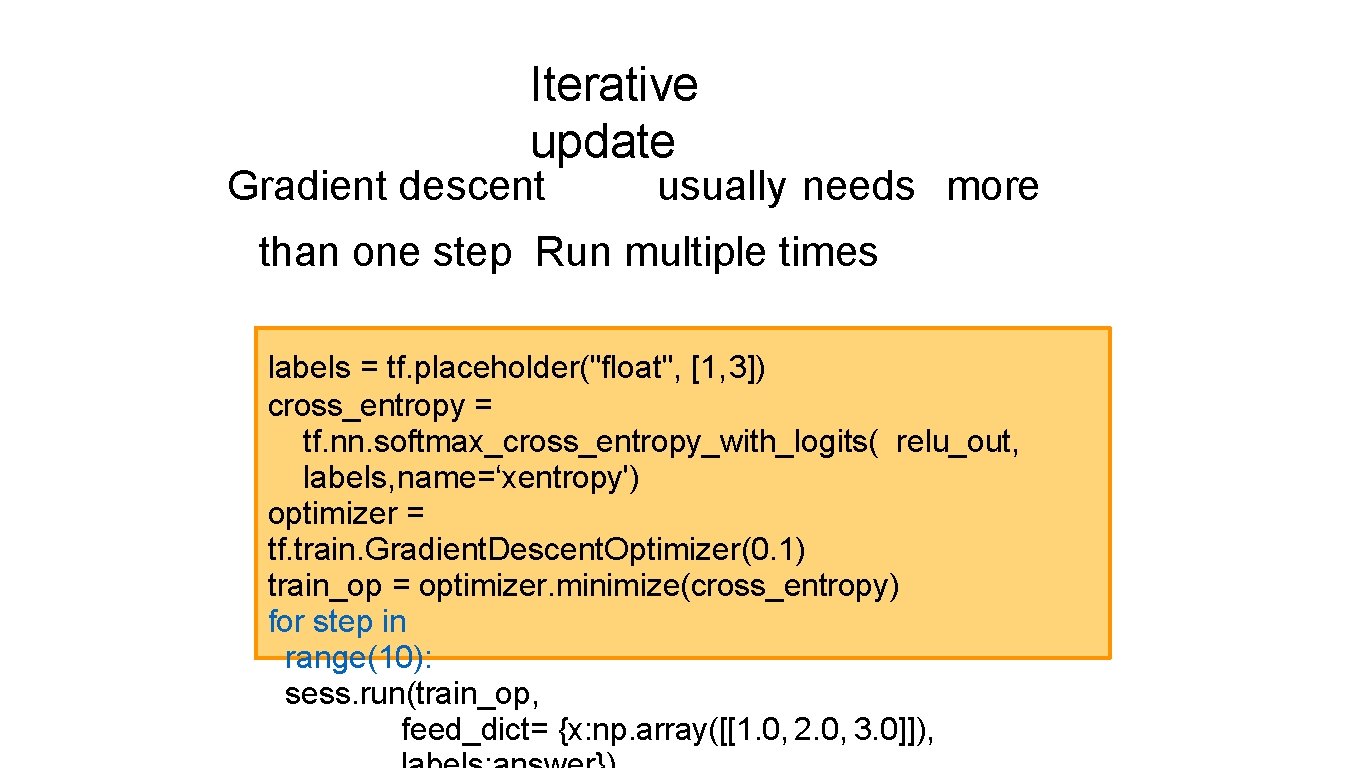

Iterative update. Gradient descent usually needs more than one step, so run it multiple times.

```python
labels = tf.placeholder("float", [1, 3])
cross_entropy = tf.nn.softmax_cross_entropy_with_logits(
    logits=relu_out, labels=labels, name='xentropy')
optimizer = tf.train.GradientDescentOptimizer(0.1)
train_op = optimizer.minimize(cross_entropy)
for step in range(10):
    sess.run(train_op,
             feed_dict={x: np.array([[1.0, 2.0, 3.0]]),
                        …
```
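Why repeated steps help can be seen in a plain-Python sketch of the same loop. To keep it short, it assumes a single linear layer (logits = x @ w, no ReLU) and all-zero starting weights; both are simplifications, not taken from the slides. For softmax cross-entropy the gradient with respect to the logits is `softmax - labels`, the negated prediction difference from the earlier slide, so the weight gradient is the outer product of `x` with that difference.

```python
import math

def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def loss_and_grad(x, w, labels):
    """Cross-entropy of softmax(x @ w) and its gradient with respect to w.

    For softmax + cross-entropy, d loss / d logit_j = p_j - labels_j,
    so d loss / d w[i][j] = x[i] * (p_j - labels_j).
    """
    logits = [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]
    p = softmax(logits)
    loss = -sum(t * math.log(q) for t, q in zip(labels, p) if t > 0)
    grad = [[x[i] * (p[j] - labels[j]) for j in range(len(p))] for i in range(len(x))]
    return loss, grad

x = [1.0, 2.0, 3.0]
labels = [0.0, 1.0, 0.0]
w = [[0.0] * 3 for _ in range(3)]       # hypothetical start: all-zero weights
learning_rate = 0.1

losses = []
for step in range(10):
    loss, grad = loss_and_grad(x, w, labels)
    losses.append(loss)
    # Plain gradient descent update: w <- w - learning_rate * grad
    w = [[w[i][j] - learning_rate * grad[i][j] for j in range(3)] for i in range(3)]
print(losses)
```

The first loss is $\log 3$ (uniform prediction), and each step lowers it; a single step would leave it far from the minimum.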

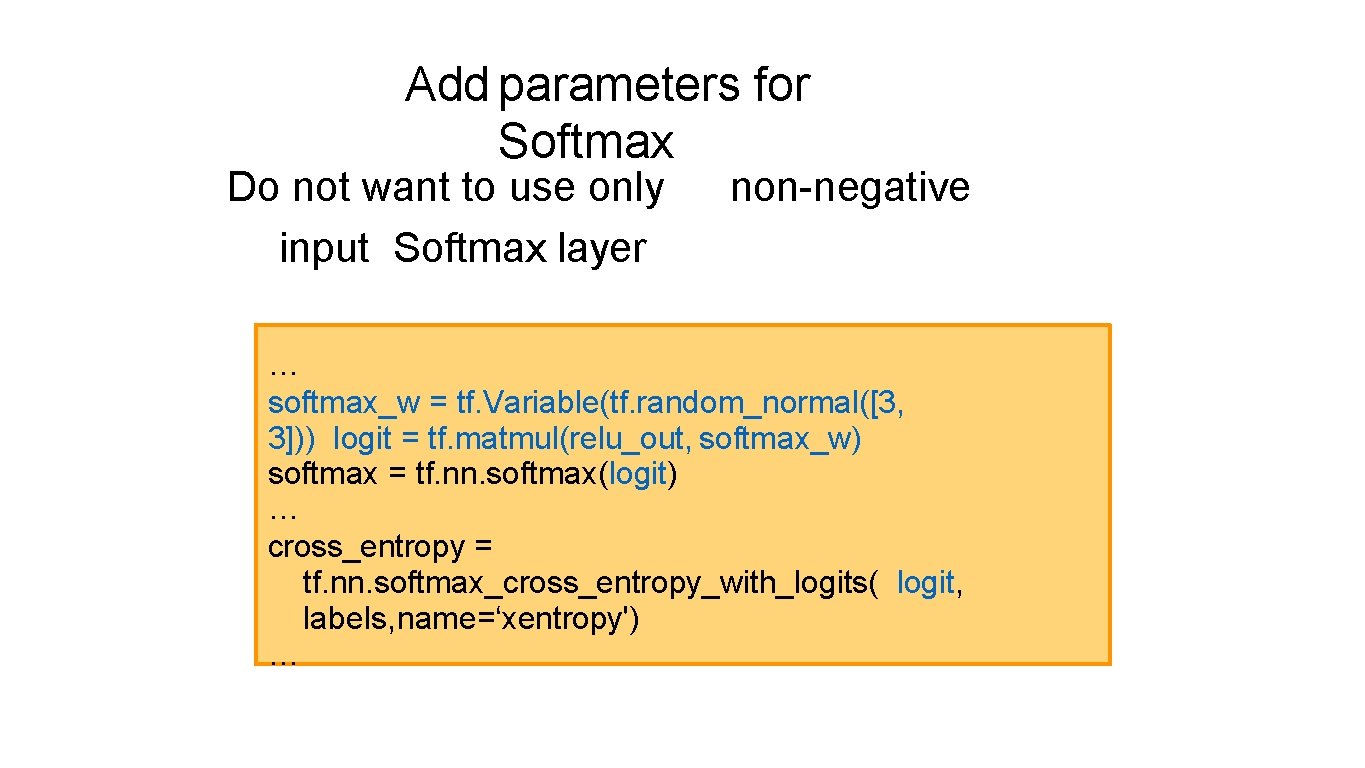

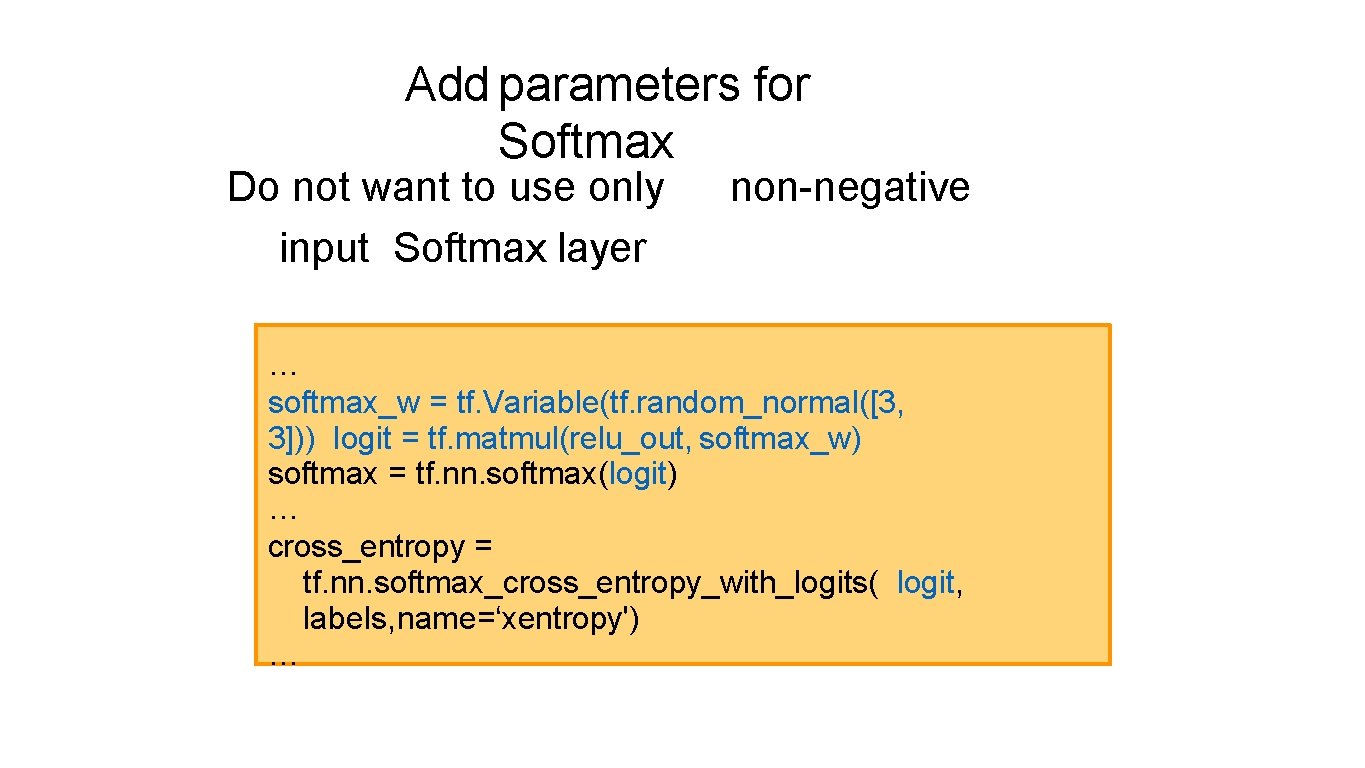

Add parameters for softmax. We do not want to use only non-negative input to the softmax layer: ReLU outputs are never negative, so an extra weight matrix lets the logits take any sign.

```python
...
softmax_w = tf.Variable(tf.random_normal([3, 3]))
logit = tf.matmul(relu_out, softmax_w)
softmax = tf.nn.softmax(logit)
...
cross_entropy = tf.nn.softmax_cross_entropy_with_logits(
    logits=logit, labels=labels, name='xentropy')
...
```


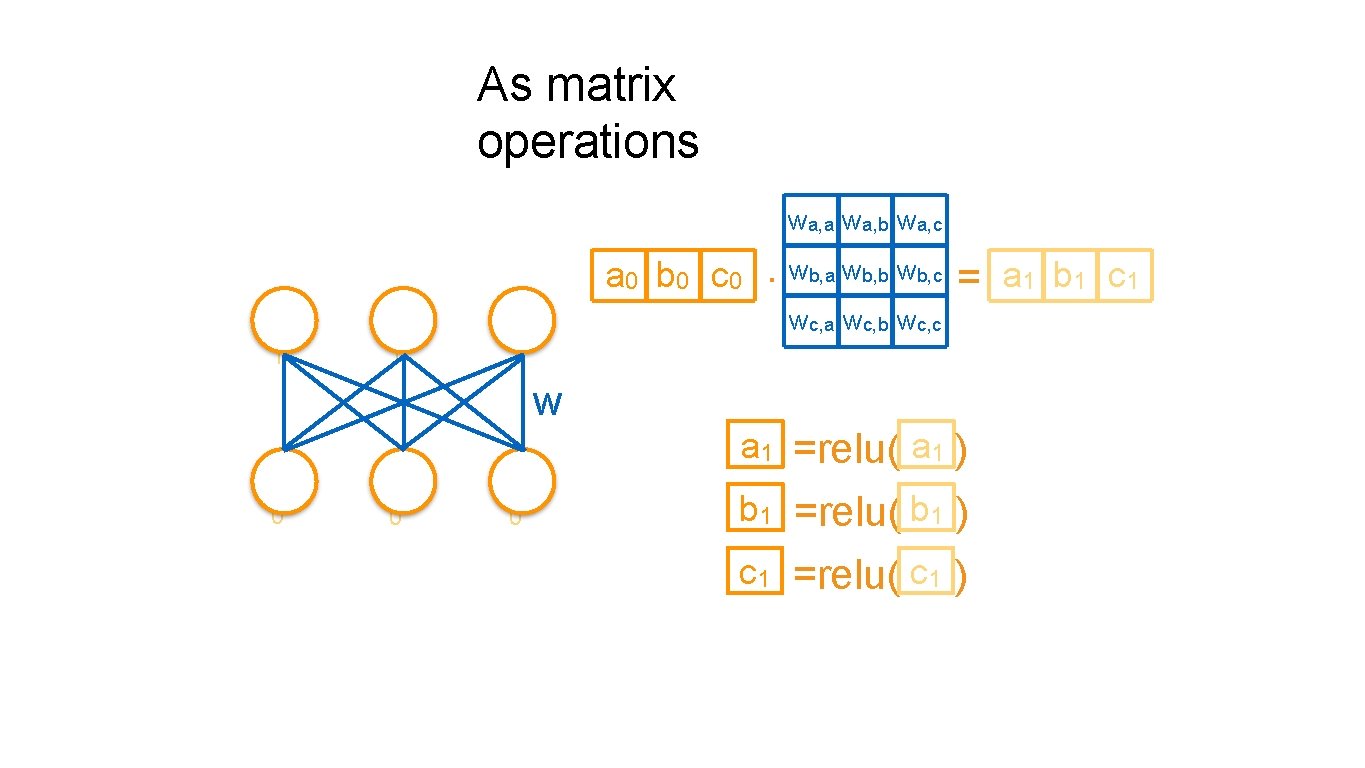

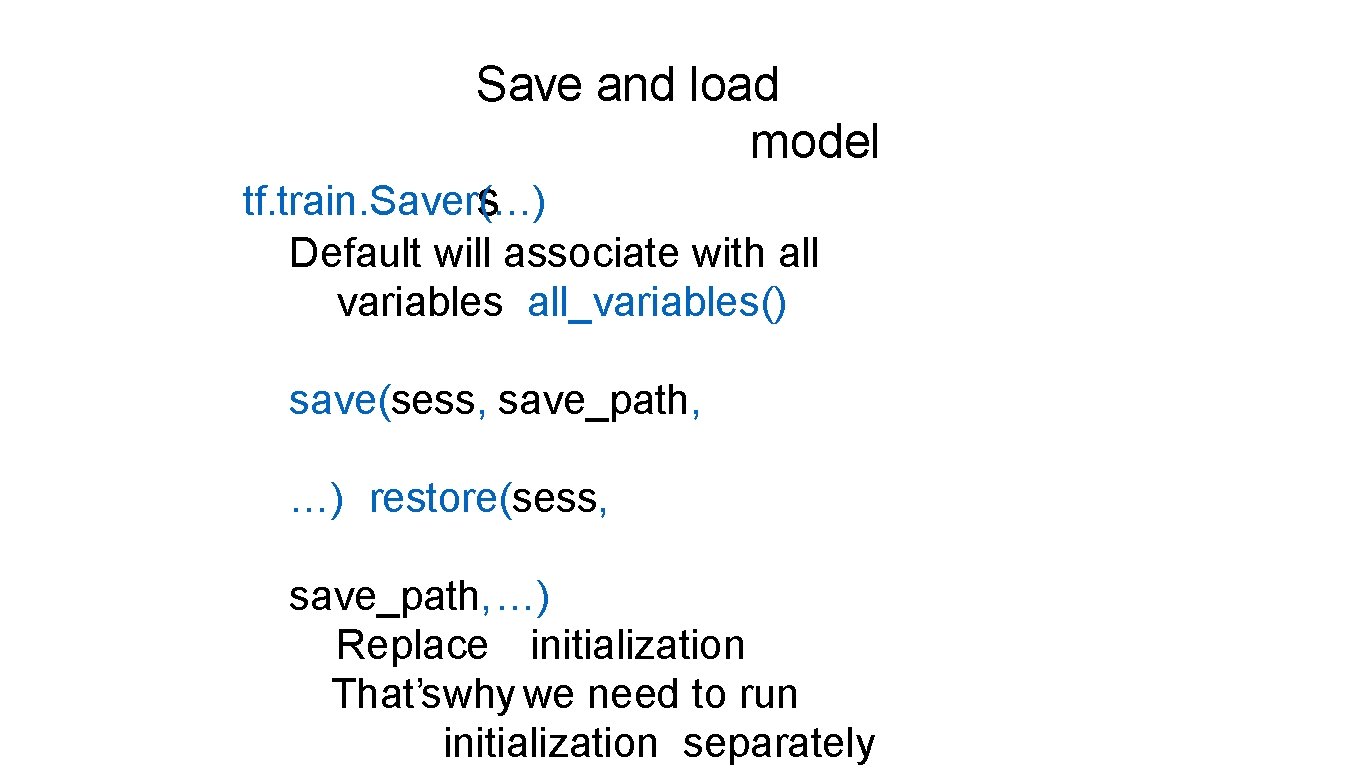

Add biases. Biases are initialized to zero.

```python
...
w = tf.Variable(tf.random_normal([3, 3]))
b = tf.Variable(tf.zeros([1, 3]))
relu_out = tf.nn.relu(tf.matmul(x, w) + b)
softmax_w = tf.Variable(tf.random_normal([3, 3]))
softmax_b = tf.Variable(tf.zeros([1, 3]))
logit = tf.matmul(relu_out, softmax_w) + softmax_b
softmax = tf.nn.softmax(logit)
...
```


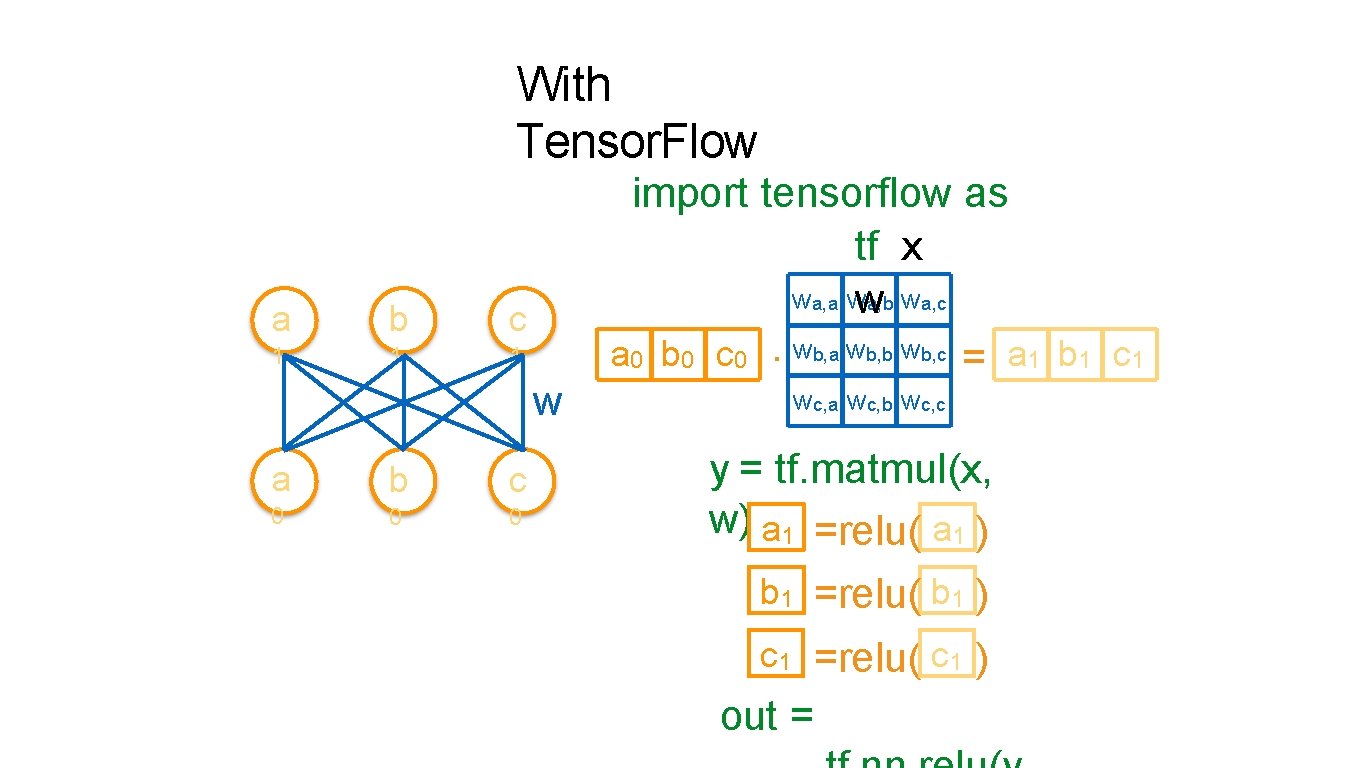

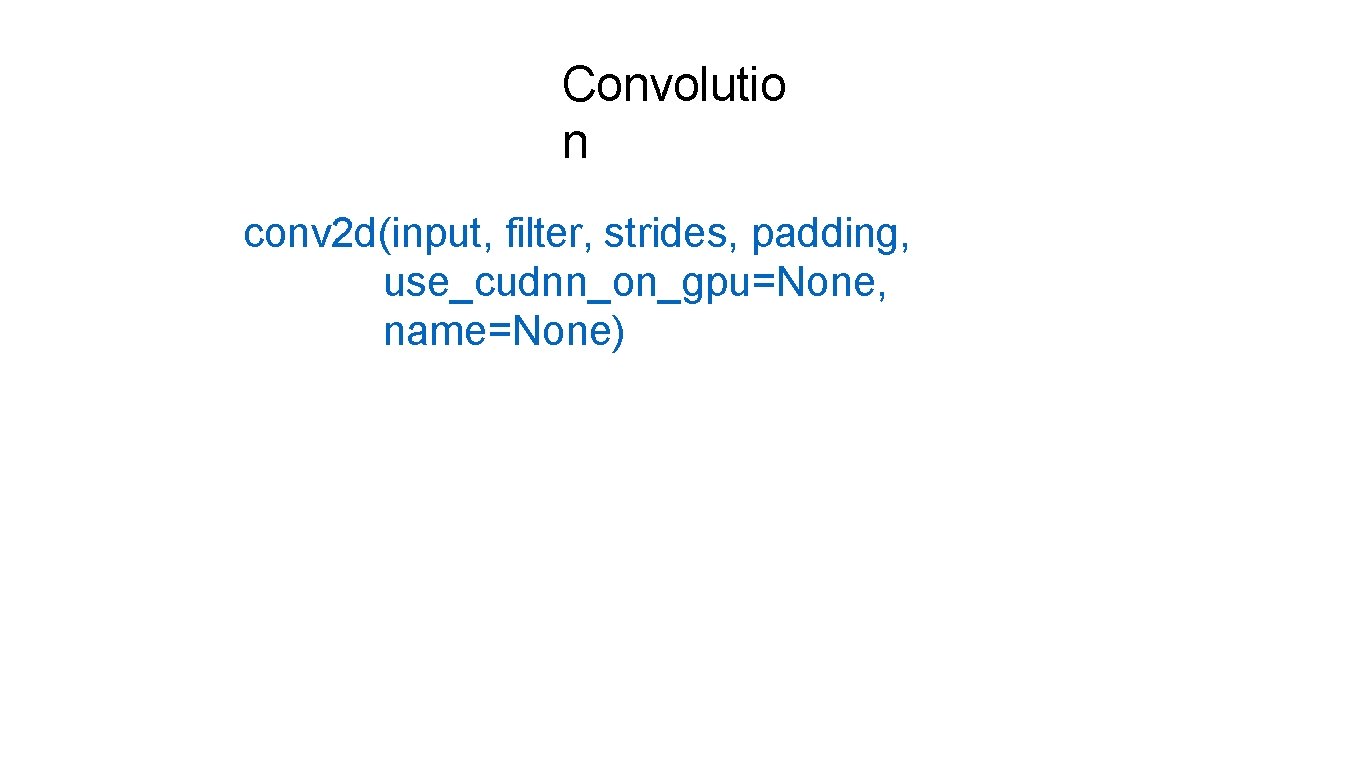

Make it deep: add layers.

```python
...
x = tf.placeholder("float", [1, 3])
relu_out = x
num_layers = 2
for layer in range(num_layers):
    w = tf.Variable(tf.random_normal([3, 3]))
    b = tf.Variable(tf.zeros([1, 3]))
    relu_out = tf.nn.relu(tf.matmul(relu_out, w) + b)
...
```
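The loop can be mirrored in plain Python to show that going deeper just feeds each layer's output into the next layer. This sketch assumes Gaussian weights from `random.gauss` as a stand-in for `random_normal`, zero biases, and a fixed seed; `dense_relu` is a hypothetical helper, and the code illustrates the structure rather than TensorFlow's behavior.

```python
import random

def dense_relu(row, w, b):
    """One layer for a single input row: relu(row @ w + b)."""
    out = []
    for j in range(len(w[0])):
        z = sum(row[i] * w[i][j] for i in range(len(row))) + b[j]
        out.append(max(0.0, z))
    return out

random.seed(0)                          # hypothetical seed for reproducibility
num_layers = 2
relu_out = [1.0, 2.0, 3.0]              # plays the role of x
for layer in range(num_layers):
    w = [[random.gauss(0.0, 1.0) for _ in range(3)] for _ in range(3)]  # stand-in for random_normal([3, 3])
    b = [0.0, 0.0, 0.0]                                                  # stand-in for zeros([1, 3])
    relu_out = dense_relu(relu_out, w, b)
print(relu_out)
```

Because every layer maps 3 values to 3 values, `num_layers` can be changed freely without touching shapes.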

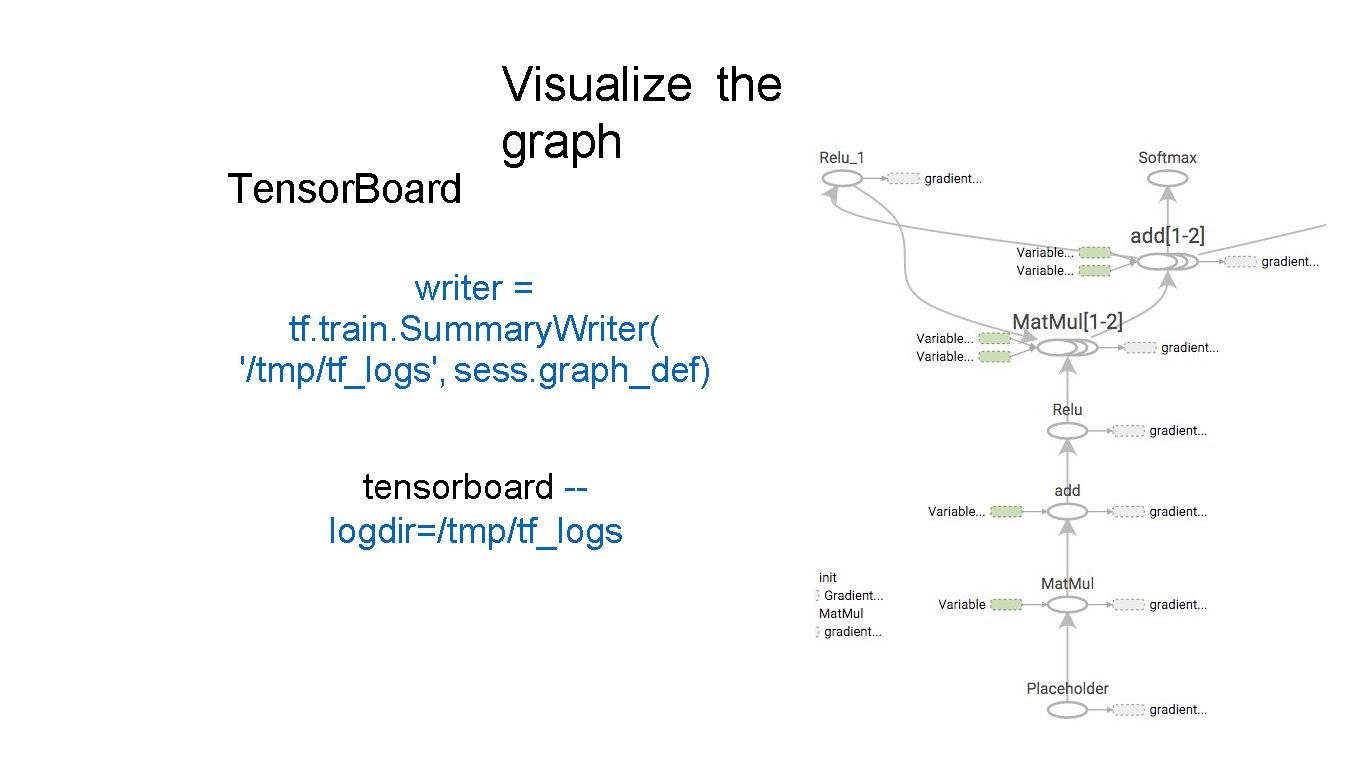

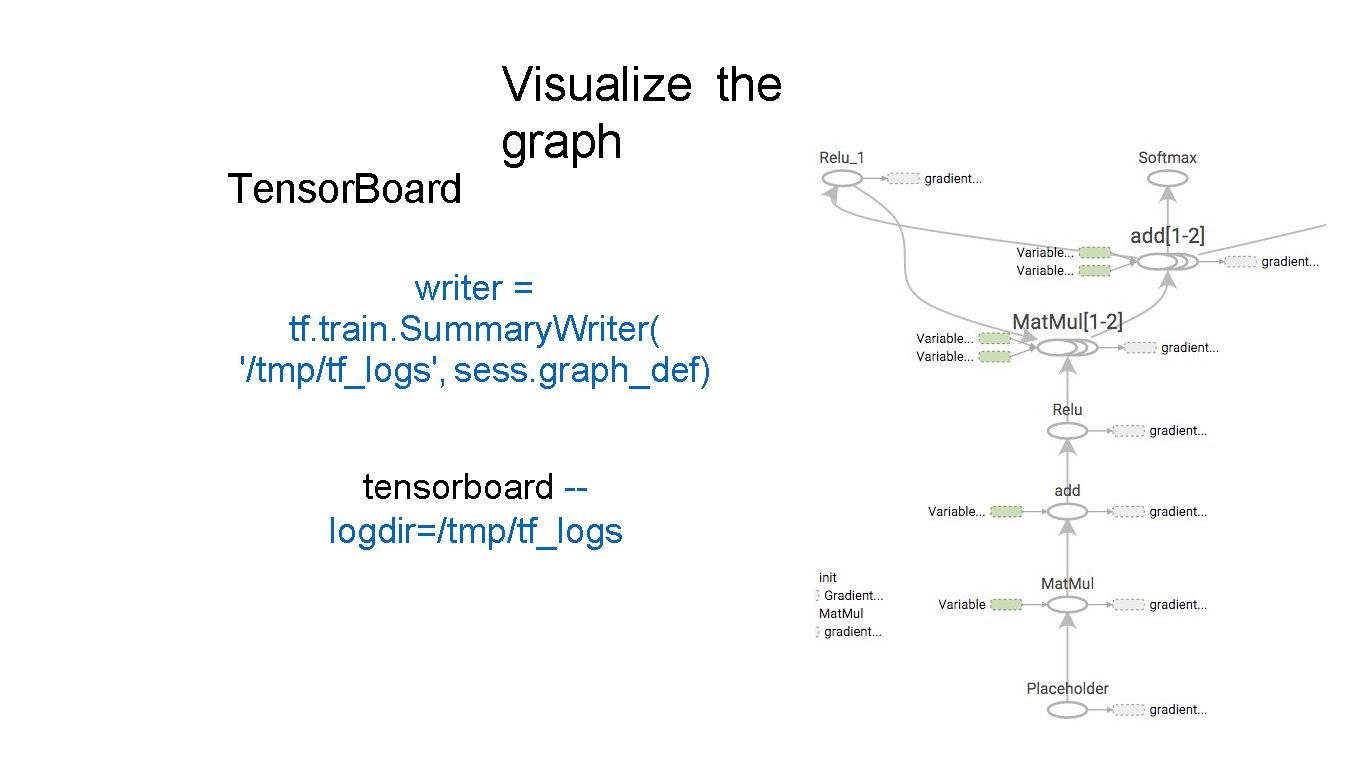

TensorBoard: visualize the graph.

```python
writer = tf.train.SummaryWriter('/tmp/tf_logs', sess.graph_def)
```

```shell
tensorboard --logdir=/tmp/tf_logs
```

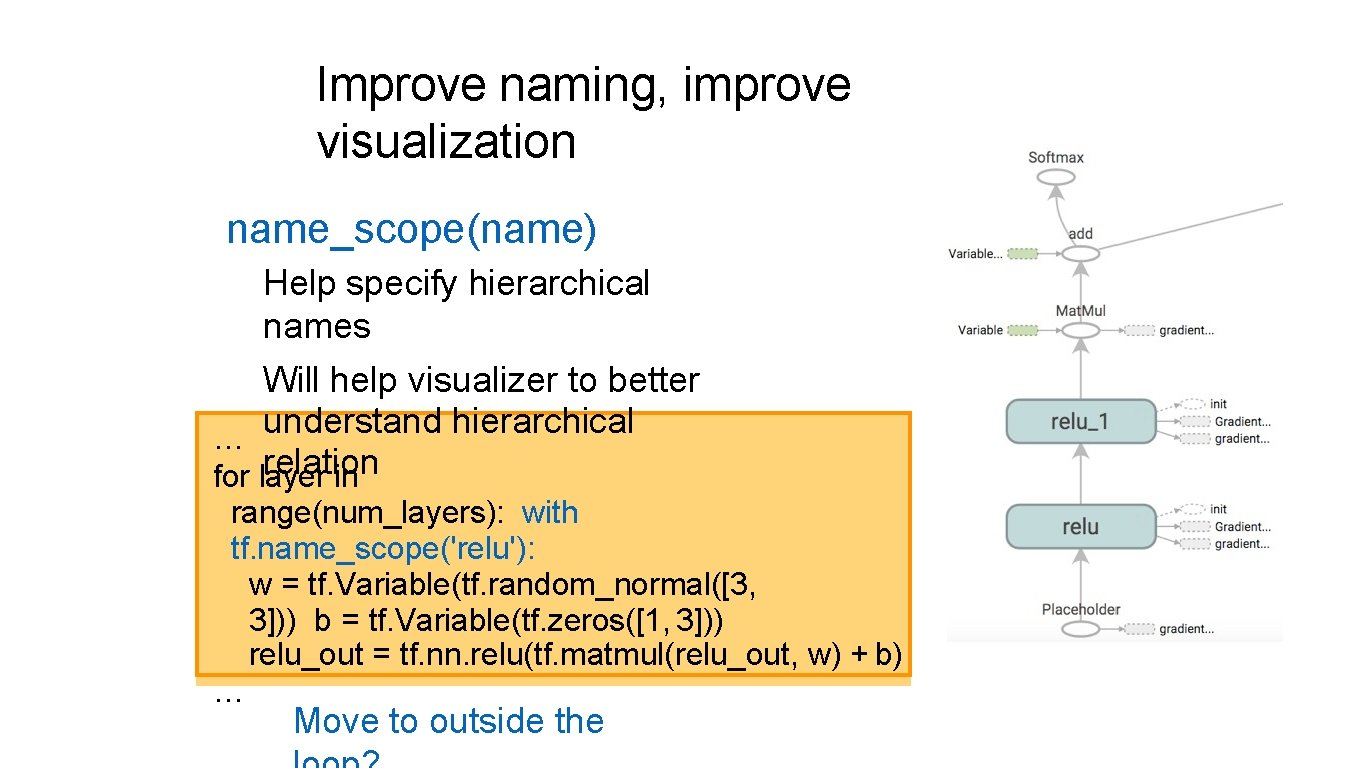

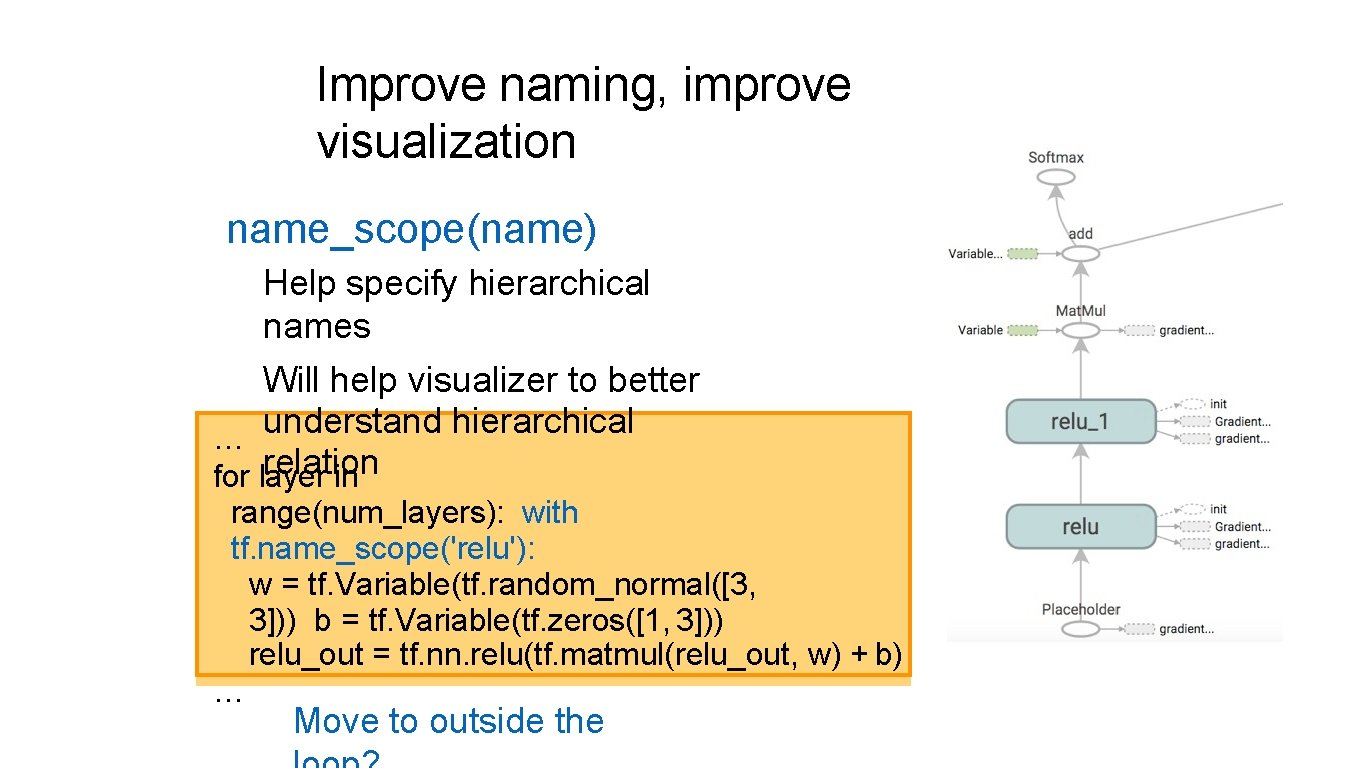

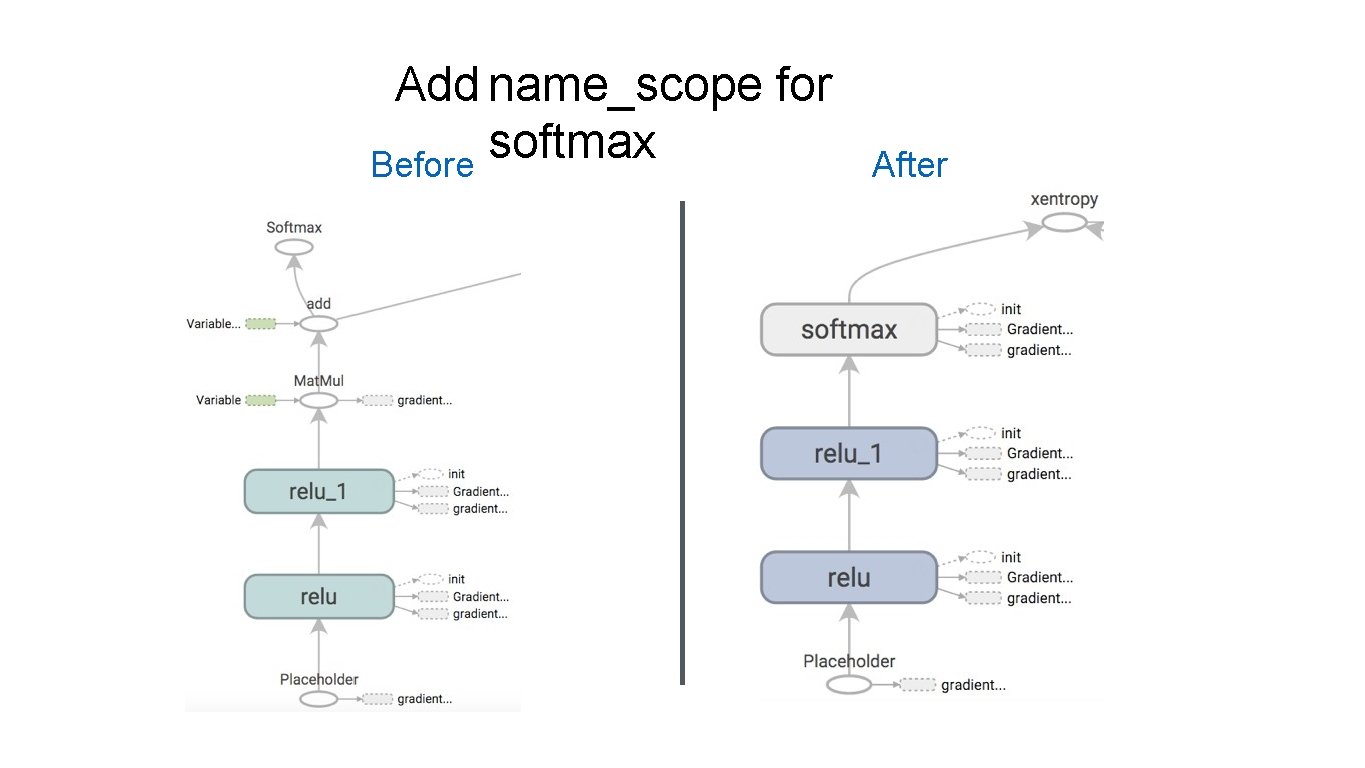

Improve naming to improve visualization. `name_scope(name)` helps specify hierarchical names, which helps the visualizer understand the hierarchical relations between nodes.

```python
...
for layer in range(num_layers):
    with tf.name_scope('relu'):
        w = tf.Variable(tf.random_normal([3, 3]))
        b = tf.Variable(tf.zeros([1, 3]))
        relu_out = tf.nn.relu(tf.matmul(relu_out, w) + b)
...
```

Move to outside the …

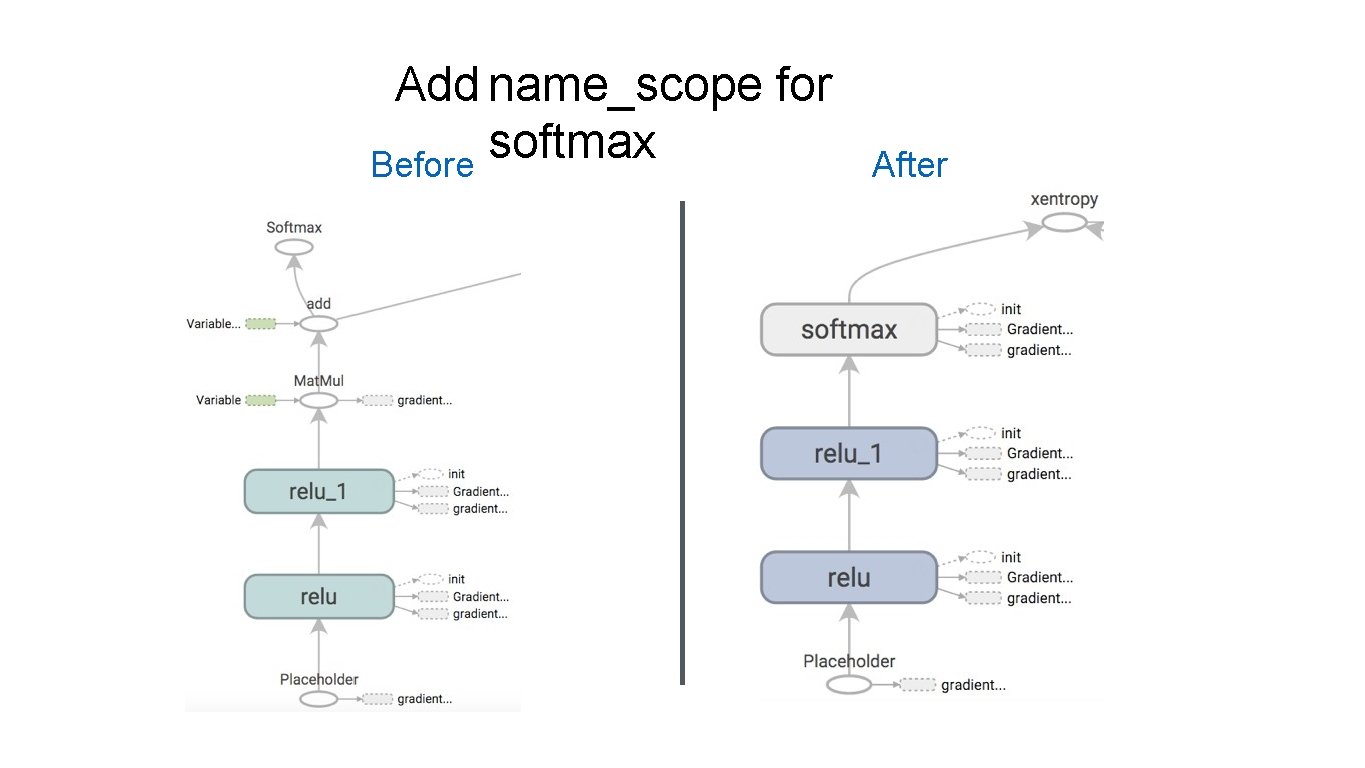

Add name_scope for softmax (figure: before and after).

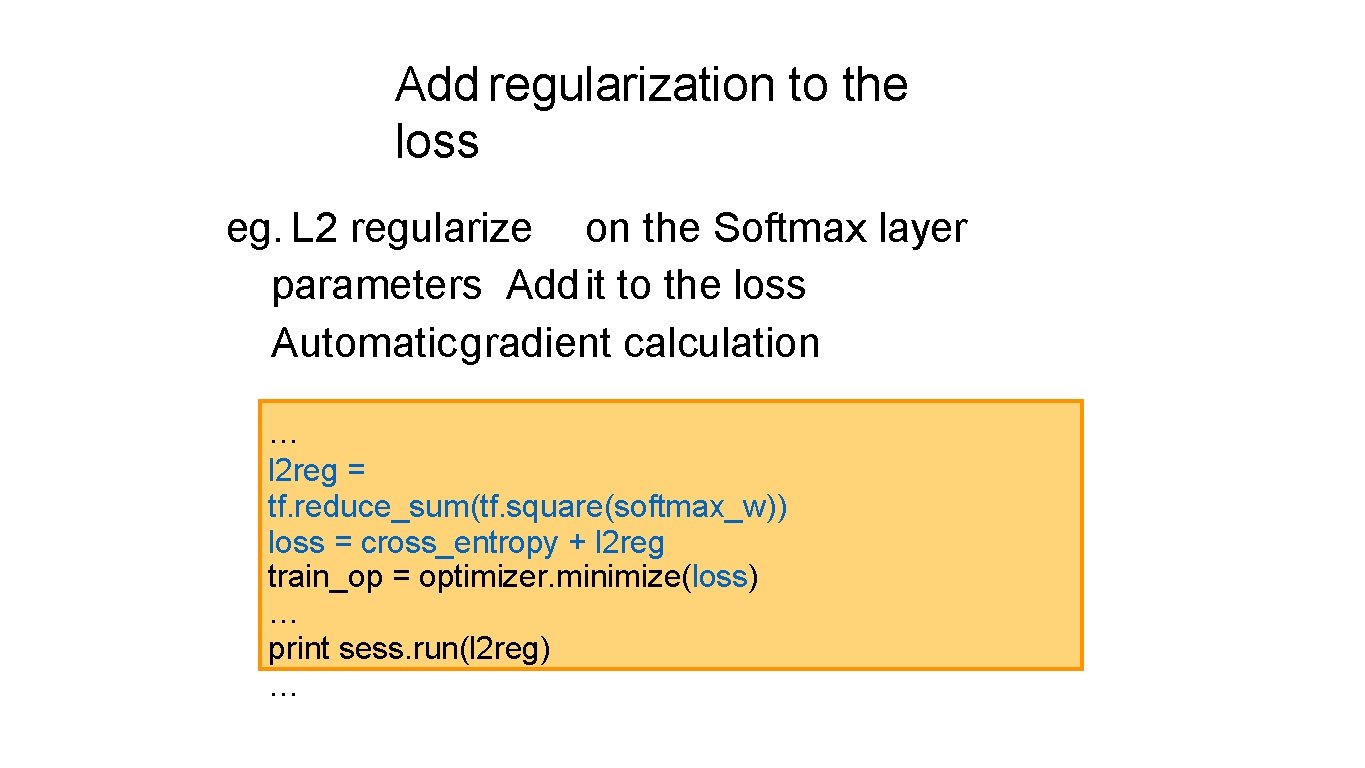

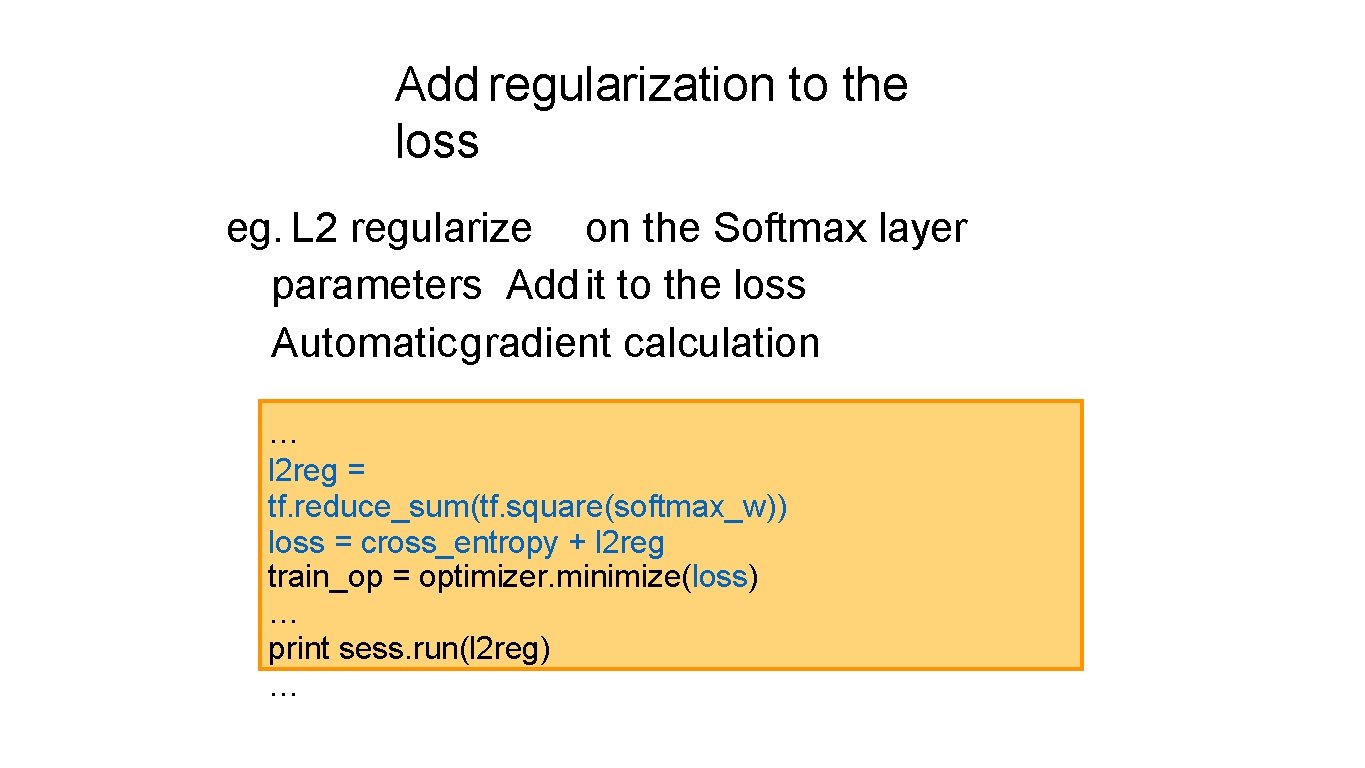

Add regularization to the loss, e.g. L2 regularization on the softmax layer parameters. Add it to the loss; the gradient is calculated automatically.

```python
...
l2reg = tf.reduce_sum(tf.square(softmax_w))
loss = cross_entropy + l2reg
train_op = optimizer.minimize(loss)
...
print(sess.run(l2reg))
...
```
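The penalty and its gradient are simple enough to write out. In the plain-Python sketch below, the weights and the cross-entropy value are hypothetical. Since $\frac{\partial}{\partial w}\sum w^2 = 2w$, adding `l2reg` to the loss adds `2 * softmax_w` to the gradient the optimizer would otherwise use; the central finite difference at the end checks the analytic gradient for one entry, the kind of derivative TensorFlow computes for you.

```python
def l2_penalty(w):
    """Sum of squared entries, the same quantity as reduce_sum(square(w))."""
    return sum(v * v for row in w for v in row)

def l2_penalty_grad(w):
    """Entrywise gradient of the penalty: d(v^2)/dv = 2v."""
    return [[2.0 * v for v in row] for row in w]

softmax_w = [[0.5, -1.0, 0.0],
             [0.25, 0.5, -1.0],
             [0.0, 0.0, 1.0]]          # hypothetical weights
cross_entropy = 0.7                    # hypothetical data loss
loss = cross_entropy + l2_penalty(softmax_w)

# Central finite difference on entry (0, 1) to check the analytic gradient.
h = 1e-6
plus = [row[:] for row in softmax_w]
minus = [row[:] for row in softmax_w]
plus[0][1] += h
minus[0][1] -= h
numeric = (l2_penalty(plus) - l2_penalty(minus)) / (2 * h)
print(l2_penalty(softmax_w), loss, numeric, l2_penalty_grad(softmax_w)[0][1])
```

Larger weights are penalized quadratically, so the regularizer pushes the softmax layer toward smaller parameters.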

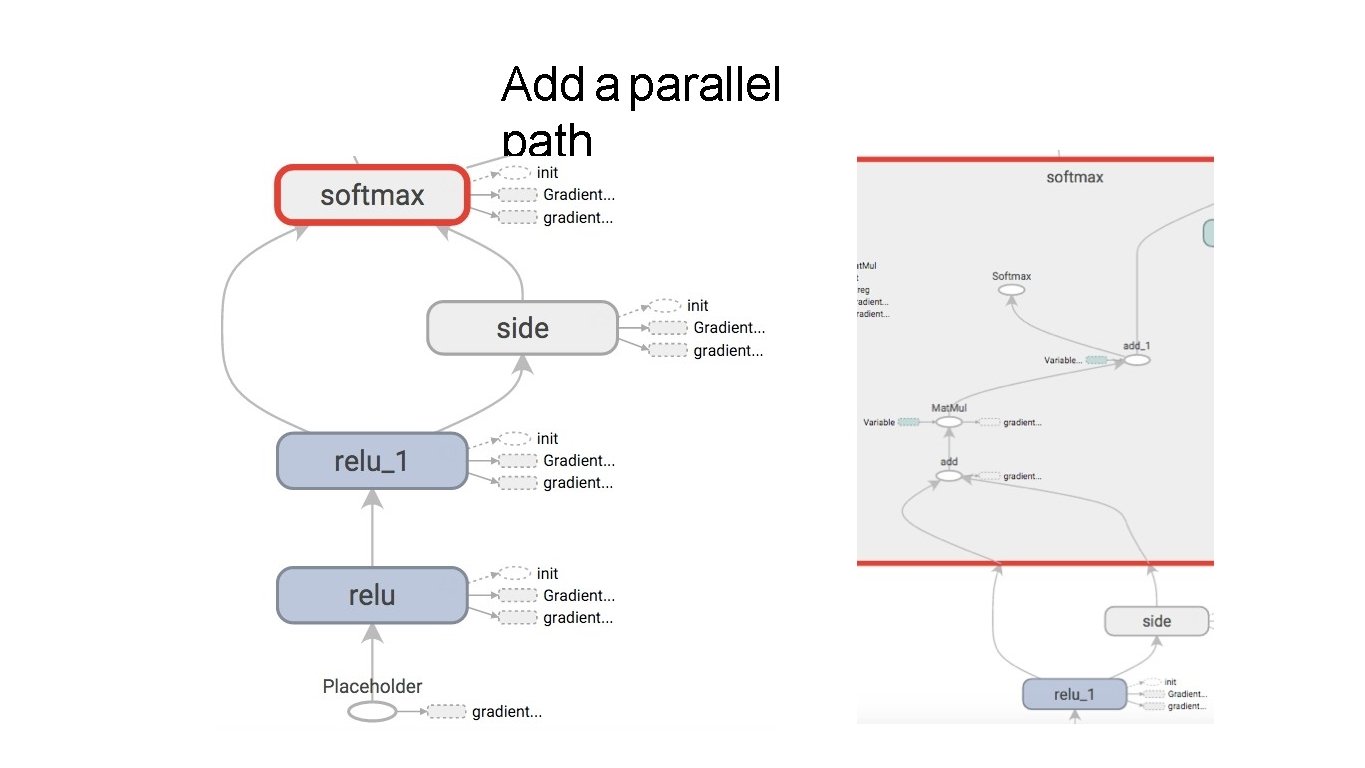

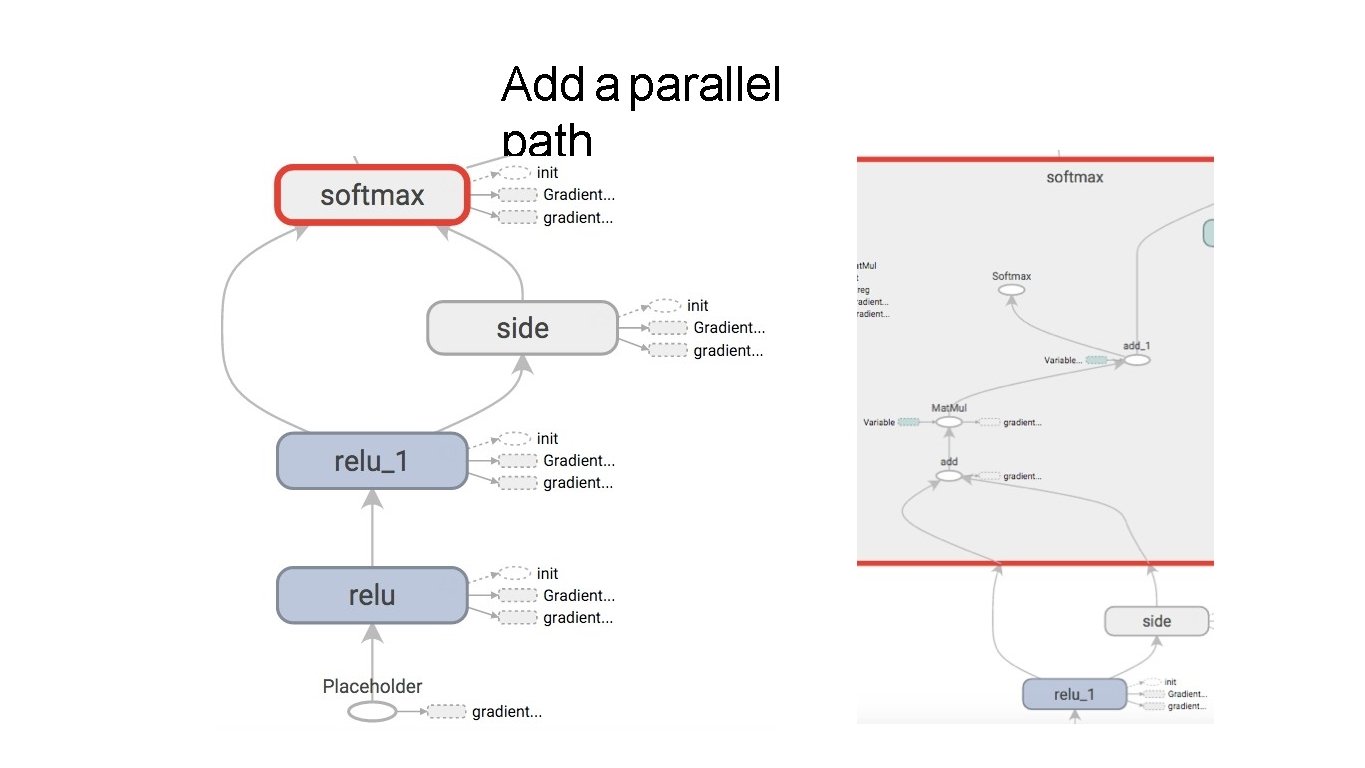

Add a parallel path

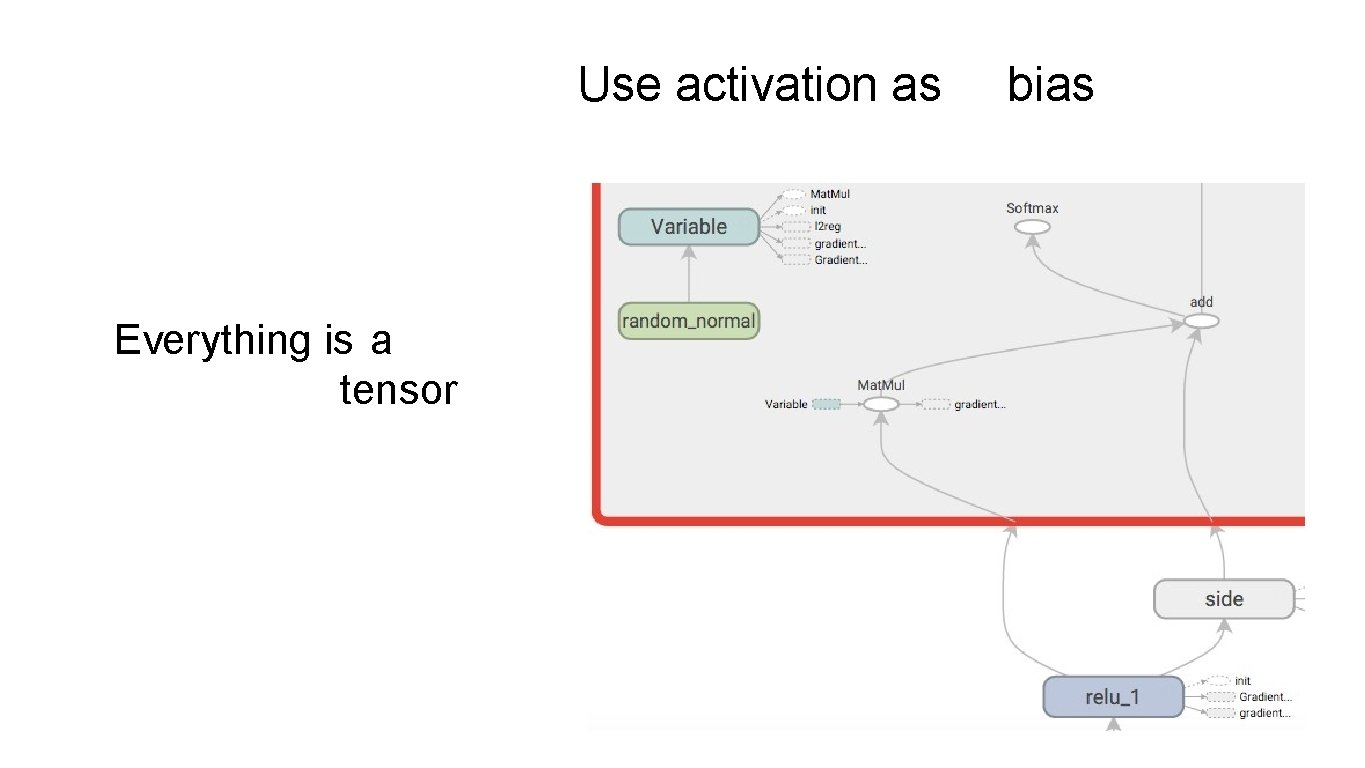

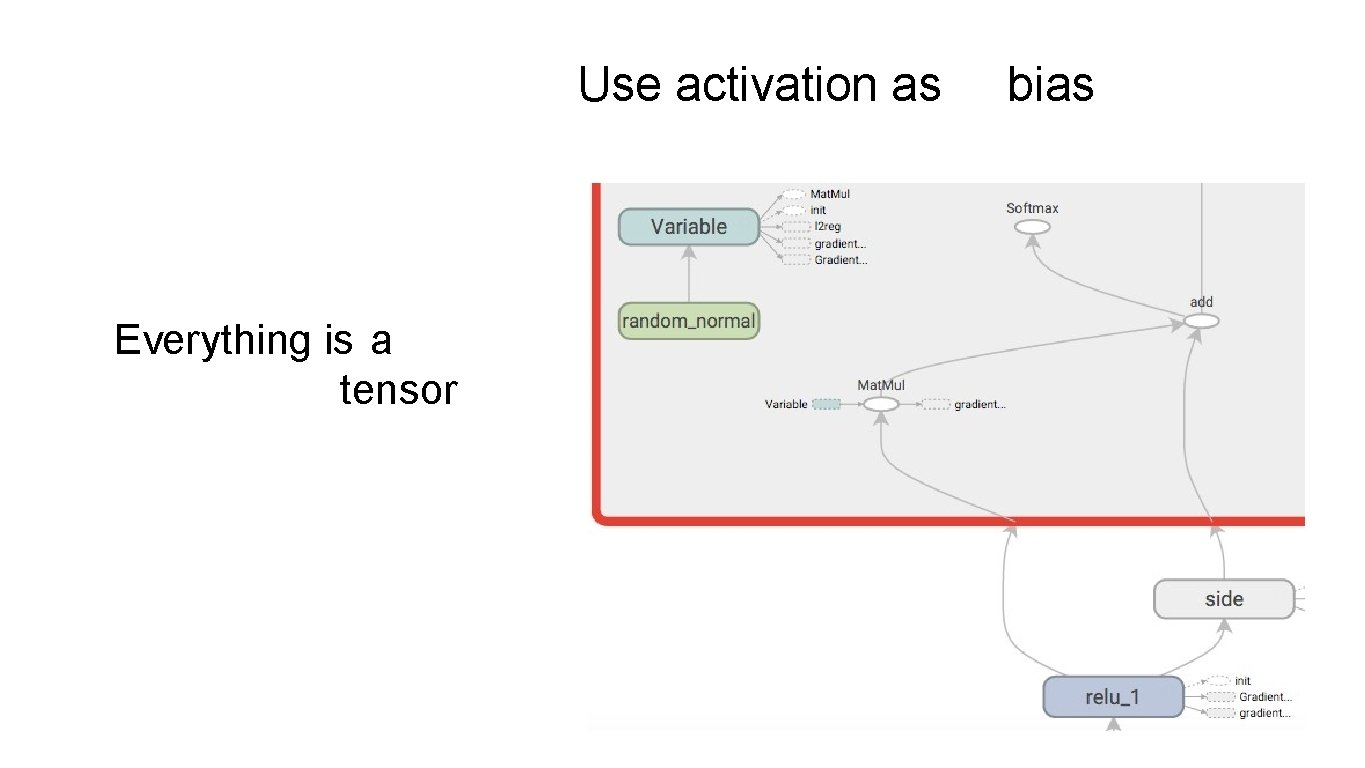

Use activation as bias: everything is a tensor.

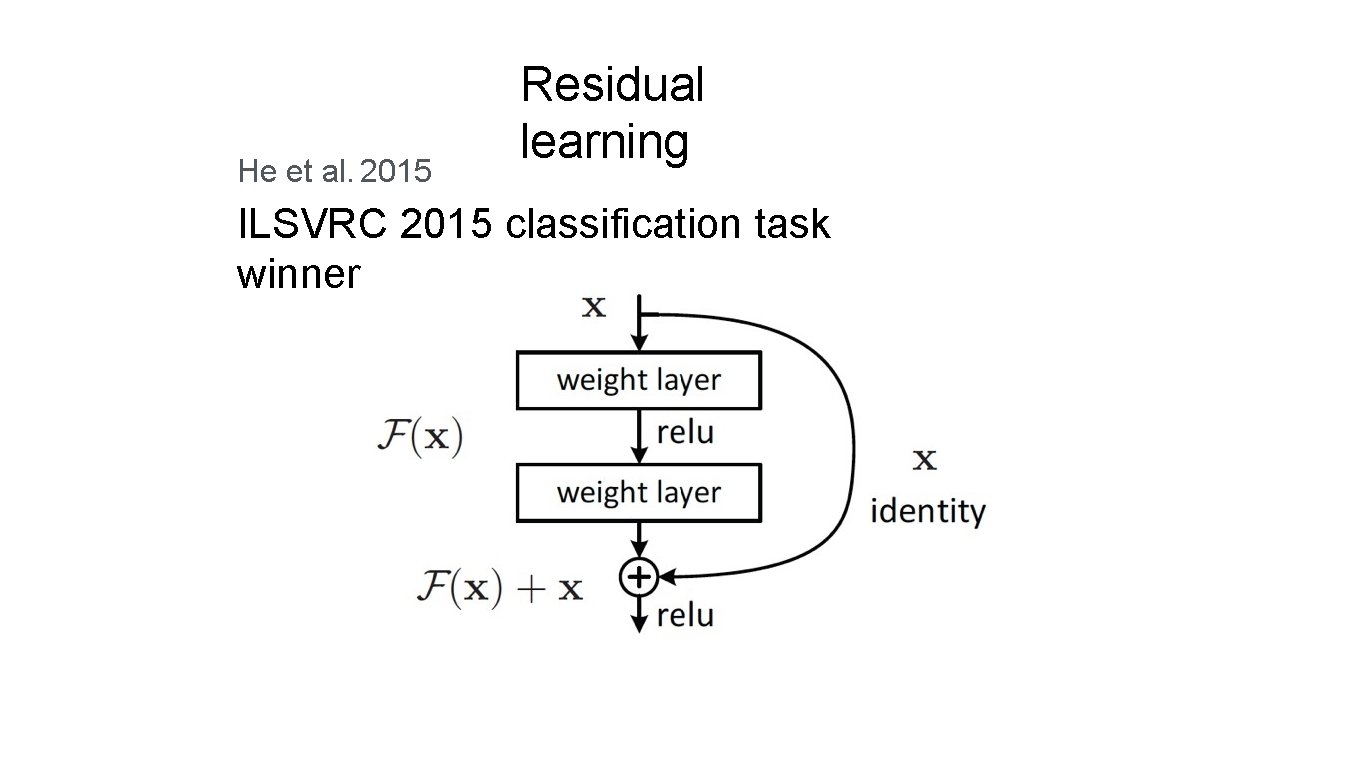

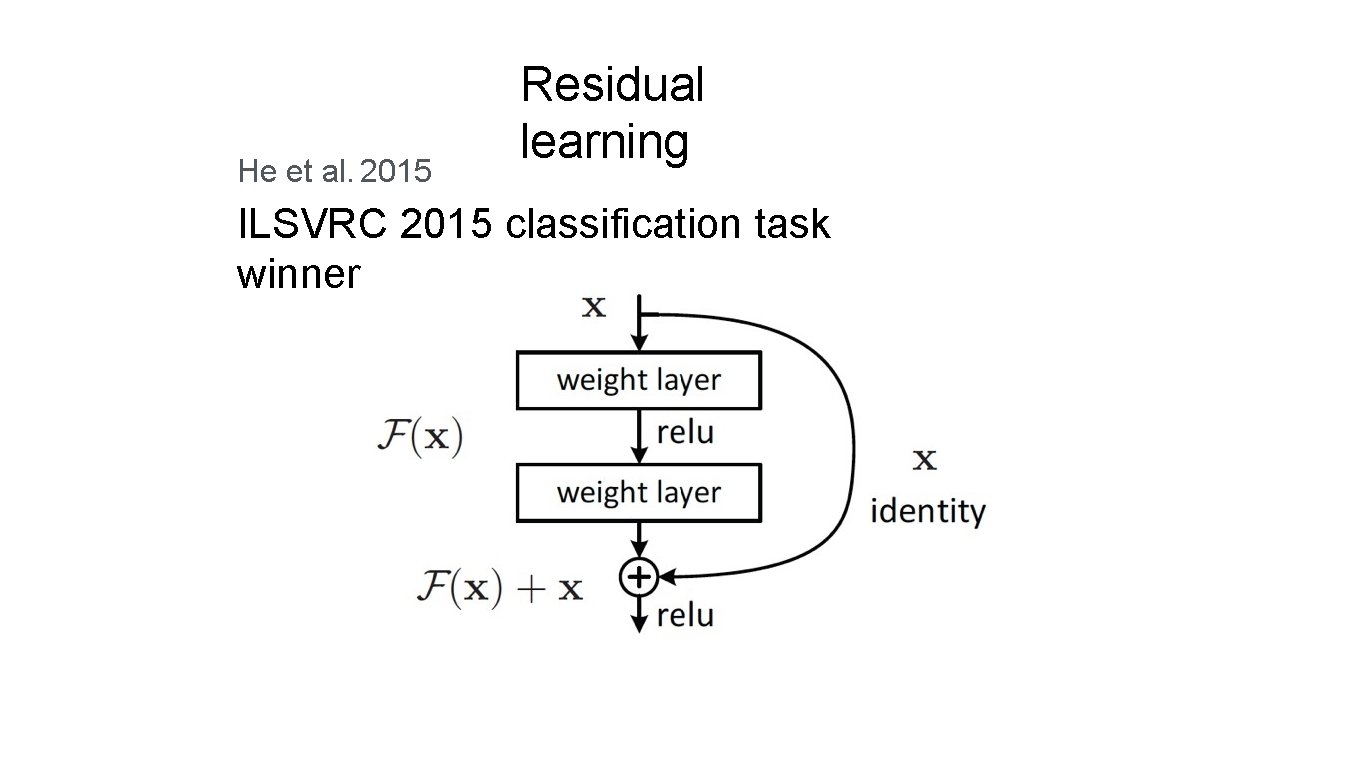

Residual learning (He et al., 2015), the winner of the ILSVRC 2015 classification task.
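The residual idea fits in a few lines of plain Python. Following the formulation in He et al. (2015), a block adds its input back to the output of a small stack of layers, $y = \mathrm{relu}(F(x) + x)$, where $F$ here is two weight layers with a ReLU between them. Biases are omitted for brevity, and the helper names are hypothetical. Because of the shortcut, a block can represent the identity (for non-negative inputs) simply by driving $F$ to zero, instead of having to learn it with stacked nonlinear layers.

```python
def dense(row, w):
    """Row vector times weight matrix (biases omitted for brevity)."""
    return [sum(row[i] * w[i][j] for i in range(len(row))) for j in range(len(w[0]))]

def relu(v):
    return [max(0.0, e) for e in v]

def residual_block(x, w1, w2):
    """y = relu(F(x) + x) with F(x) = relu(x @ w1) @ w2."""
    f = dense(relu(dense(x, w1)), w2)
    return relu([fi + xi for fi, xi in zip(f, x)])

zeros = [[0.0] * 3 for _ in range(3)]
identity = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
x = [1.0, 2.0, 3.0]
print(residual_block(x, zeros, zeros))        # F(x) = 0, so the block passes x through
print(residual_block(x, identity, identity))  # F(x) = x, so y = 2x here
```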

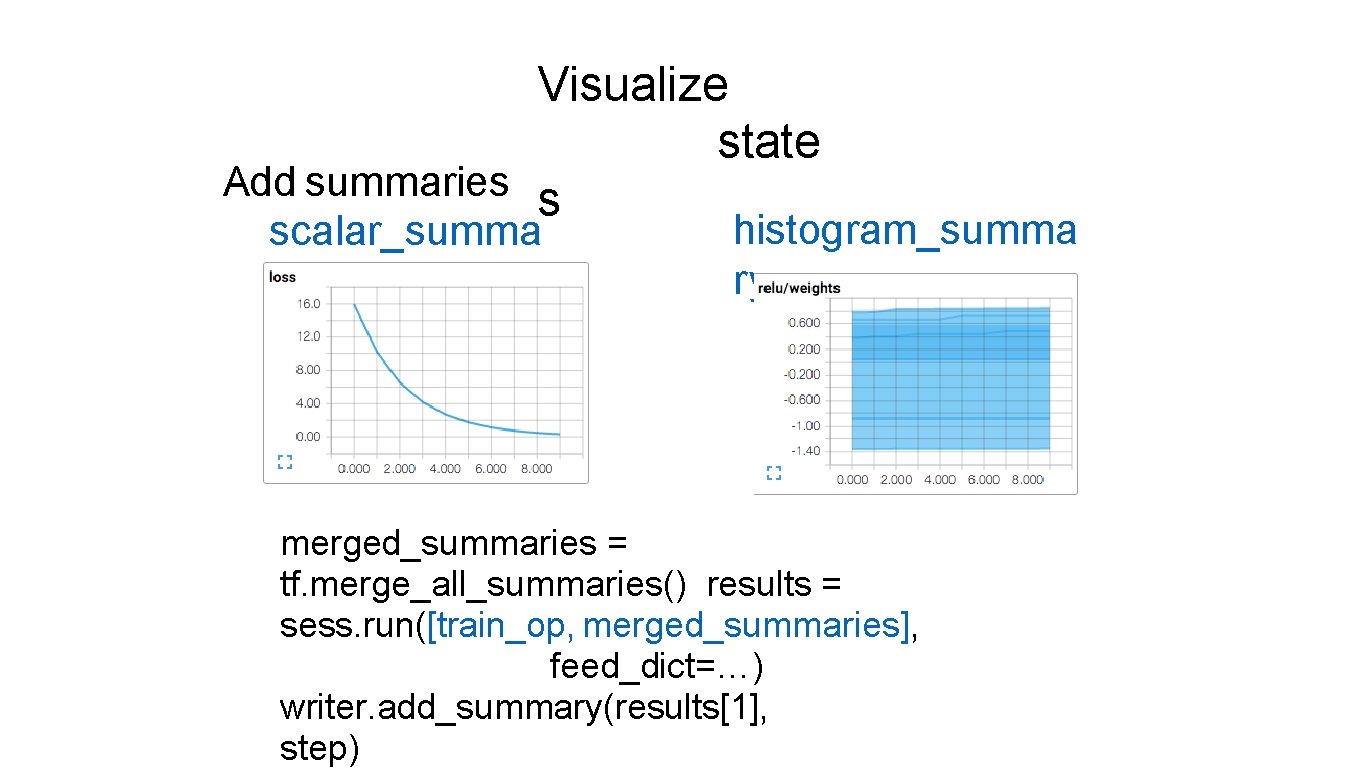

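Visualize state: add summaries (`scalar_summary`, `histogram_summary`), merge them, run them together with the training op, and write the result for each step.

```python
merged_summaries = tf.merge_all_summaries()
results = sess.run([train_op, merged_summaries], feed_dict=...)
writer.add_summary(results[1], step)
```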

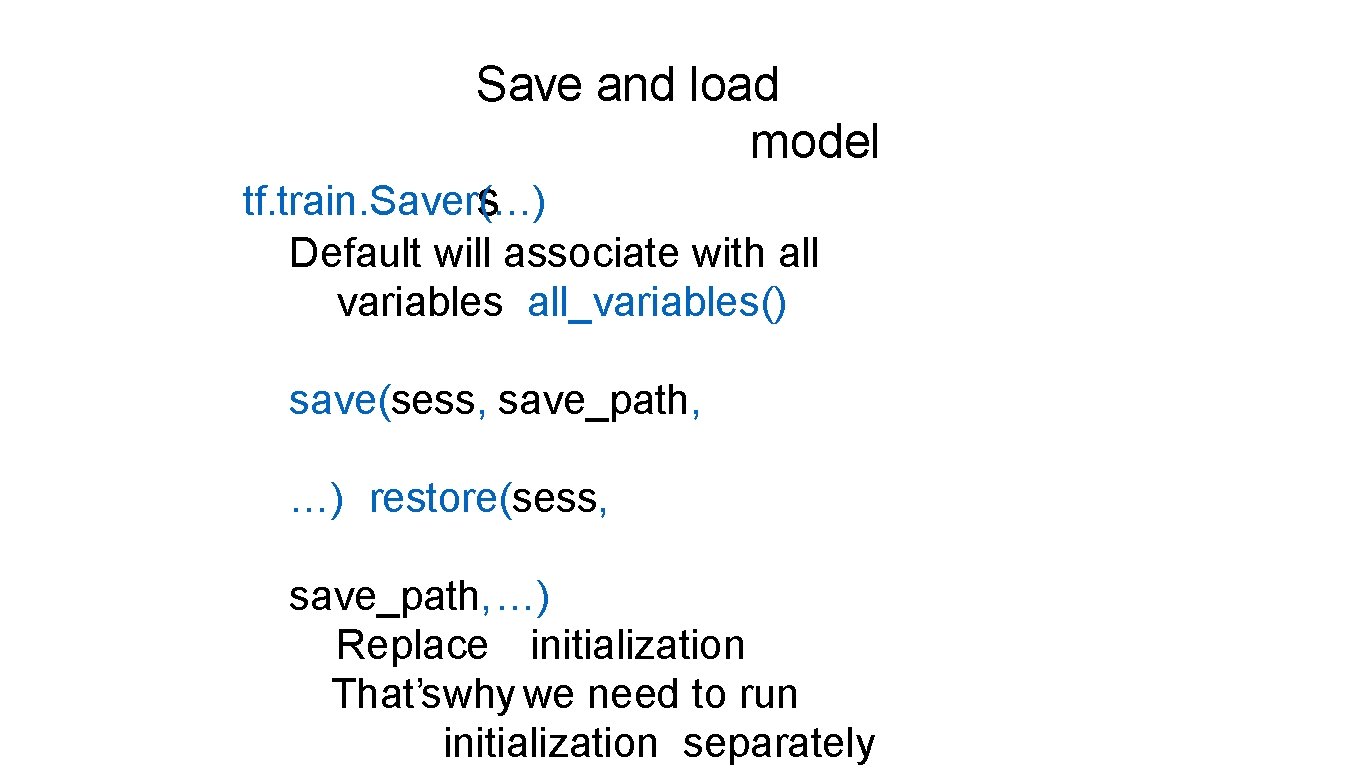

Save and load a model with `tf.train.Saver(…)`. By default it is associated with all variables, `all_variables()`. `save(sess, save_path, …)` writes them and `restore(sess, save_path, …)` reads them back. Restoring replaces initialization; that is why initialization is run separately.

Convolution: `conv2d(input, filter, strides, padding, use_cudnn_on_gpu=None, name=None)`
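What `conv2d` computes can be sketched for the simplest case: one image, one input channel, one output channel, `'VALID'` padding (no padding), and the same stride in both directions. These restrictions are simplifications made for this sketch; the real op works on 4-D batched, multi-channel tensors. `conv2d_valid` is a hypothetical name. Like the deep learning op, the sketch does not flip the kernel, so strictly speaking it computes cross-correlation.

```python
def conv2d_valid(image, kernel, stride=1):
    """Single-channel 2-D convolution with 'VALID' padding (no kernel flip)."""
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    out_h = (ih - kh) // stride + 1
    out_w = (iw - kw) // stride + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Dot product of the kernel with the image patch at (r*stride, c*stride).
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r * stride + i][c * stride + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out

image = [[1.0, 2.0, 3.0, 0.0],
         [4.0, 5.0, 6.0, 0.0],
         [7.0, 8.0, 9.0, 0.0],
         [0.0, 0.0, 0.0, 0.0]]
kernel = [[1.0, 0.0],
          [0.0, -1.0]]
print(conv2d_valid(image, kernel))
print(conv2d_valid(image, kernel, stride=2))
```

With a 4x4 input and a 2x2 kernel, stride 1 gives a 3x3 output and stride 2 gives 2x2, following $\lfloor (n - k)/s \rfloor + 1$.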

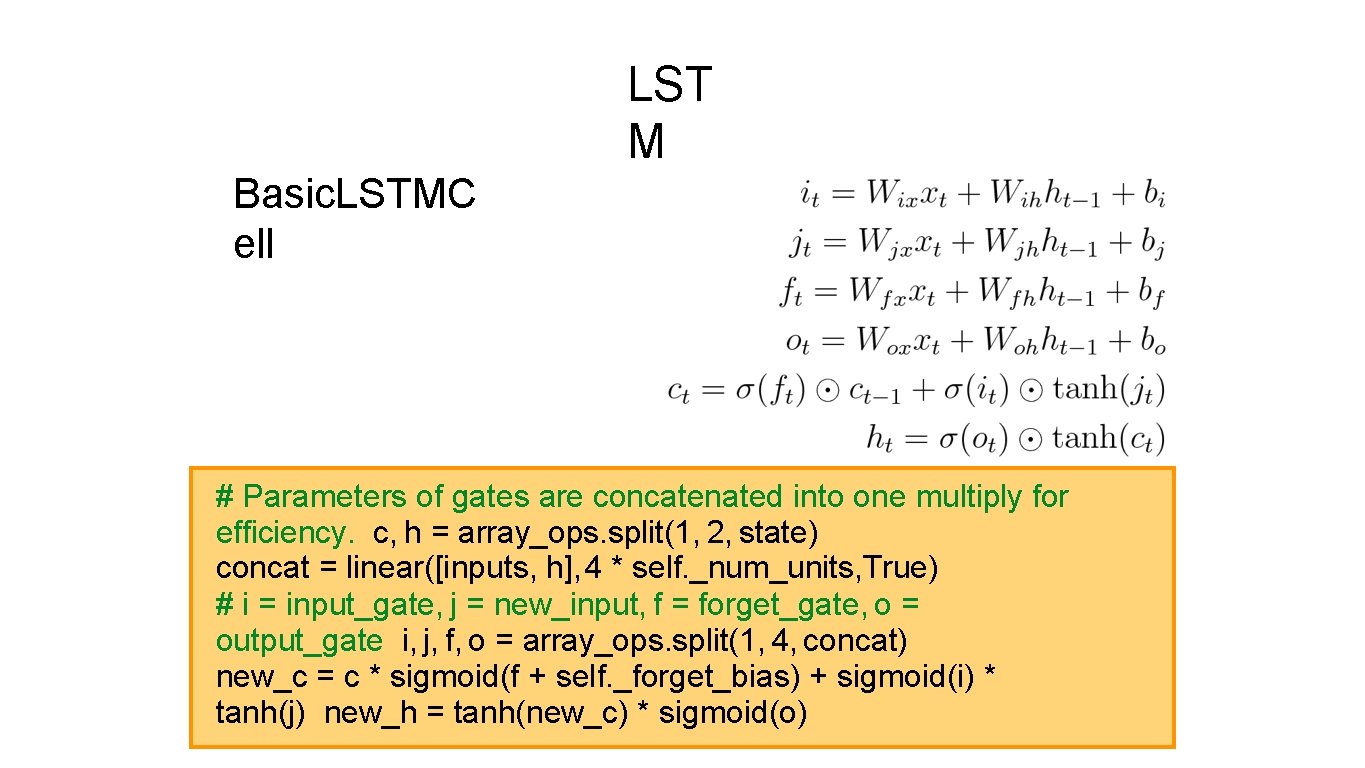

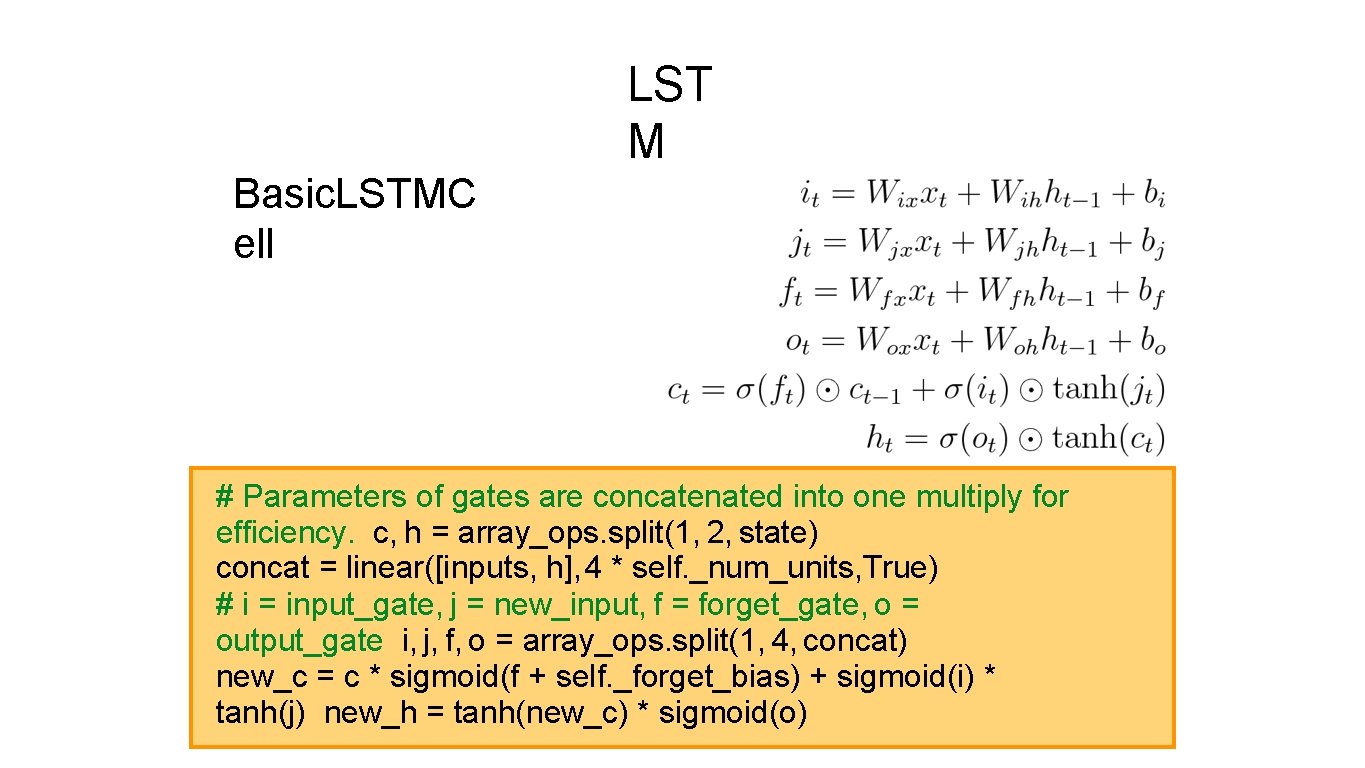

LSTM: `BasicLSTMCell`.

```python
# Parameters of gates are concatenated into one multiply for efficiency.
c, h = array_ops.split(1, 2, state)
concat = linear([inputs, h], 4 * self._num_units, True)

# i = input_gate, j = new_input, f = forget_gate, o = output_gate
i, j, f, o = array_ops.split(1, 4, concat)

new_c = c * sigmoid(f + self._forget_bias) + sigmoid(i) * tanh(j)
new_h = tanh(new_c) * sigmoid(o)
```
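The cell update can be replayed in plain Python to see what each gate does. This sketch assumes one weight matrix with `len(inputs) + num_units` rows and `4 * num_units` columns plus a bias vector, standing in for `linear([inputs, h], 4 * self._num_units, True)`; `lstm_step` and its arguments are hypothetical names, and `forget_bias` defaults to 1.0 here. The gate order and the two update equations follow the excerpt.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def lstm_step(inputs, state, weights, bias, num_units, forget_bias=1.0):
    """One step of a basic LSTM cell.

    state is (c, h); weights has len(inputs) + num_units rows and
    4 * num_units columns, so all four gates come from one multiply.
    """
    c, h = state
    xh = list(inputs) + list(h)                  # concatenate [inputs, h]
    concat = [sum(xh[r] * weights[r][k] for r in range(len(xh))) + bias[k]
              for k in range(4 * num_units)]
    # i = input_gate, j = new_input, f = forget_gate, o = output_gate
    n = num_units
    i, j, f, o = concat[:n], concat[n:2 * n], concat[2 * n:3 * n], concat[3 * n:]
    new_c = [c[u] * sigmoid(f[u] + forget_bias) + sigmoid(i[u]) * math.tanh(j[u])
             for u in range(n)]
    new_h = [math.tanh(new_c[u]) * sigmoid(o[u]) for u in range(n)]
    return new_c, new_h

num_units = 1
weights = [[0.0] * (4 * num_units) for _ in range(1 + num_units)]  # hypothetical all-zero weights
bias = [0.0] * (4 * num_units)
new_c, new_h = lstm_step([1.0], ([2.0], [0.0]), weights, bias, num_units)
print(new_c, new_h)
```

With all weights at zero every gate pre-activation is 0, so the new cell state is `c * sigmoid(forget_bias)`: the forget gate alone decides how much of the old memory survives.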

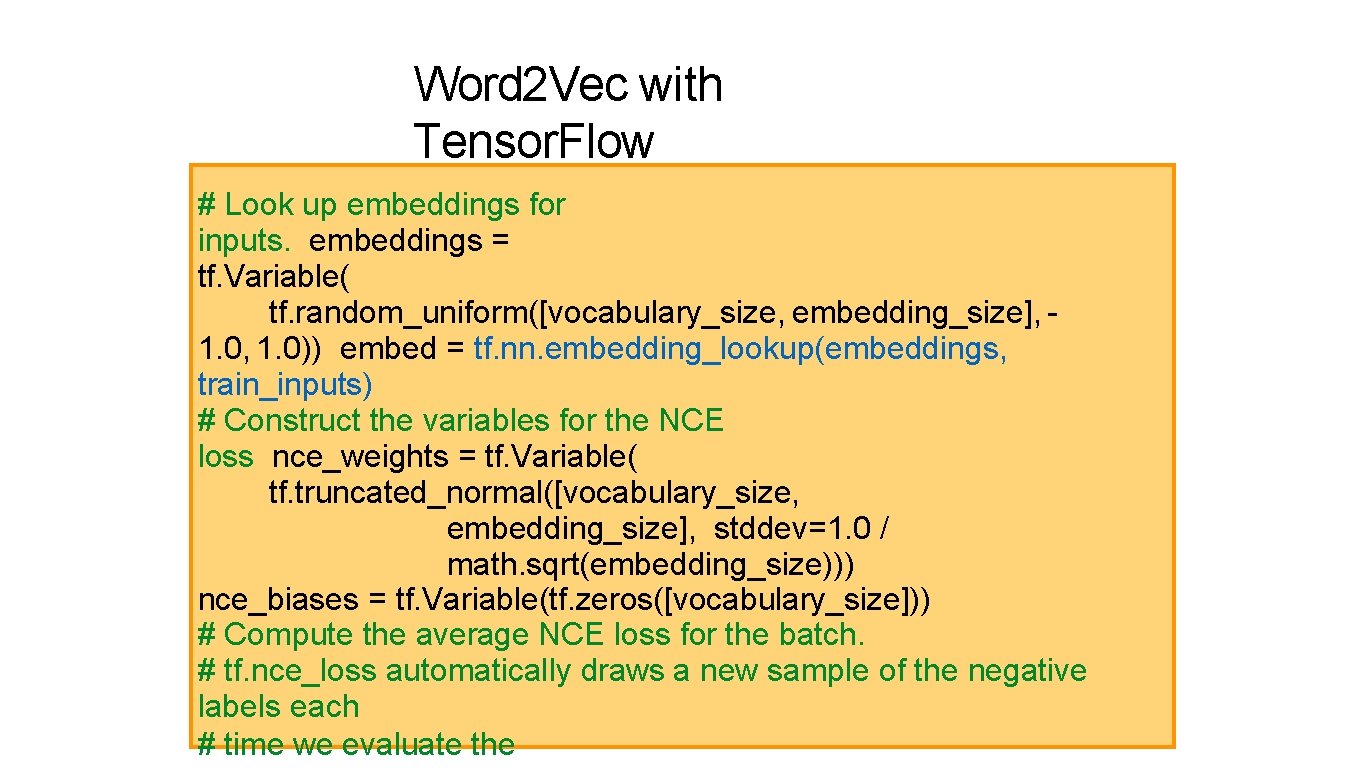

Word2Vec with TensorFlow:

```python
import math
import tensorflow as tf

# Look up embeddings for inputs.
embeddings = tf.Variable(
    tf.random_uniform([vocabulary_size, embedding_size], -1.0, 1.0))
embed = tf.nn.embedding_lookup(embeddings, train_inputs)

# Construct the variables for the NCE loss
nce_weights = tf.Variable(
    tf.truncated_normal([vocabulary_size, embedding_size],
                        stddev=1.0 / math.sqrt(embedding_size)))
nce_biases = tf.Variable(tf.zeros([vocabulary_size]))

# Compute the average NCE loss for the batch.
# tf.nce_loss automatically draws a new sample of the negative labels each
# time we evaluate the …
```
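`embedding_lookup` behaves like a row gather: each id selects one row of the embedding matrix, which matches multiplying a one-hot vector by that matrix without doing the multiply. The plain-Python sketch below uses hypothetical sizes, ids, seed, and a uniform init range of [-1.0, 1.0]; the helper names are also hypothetical.

```python
import random

vocabulary_size, embedding_size = 5, 3     # hypothetical sizes
random.seed(0)
# Hypothetical init for this sketch: each entry uniform in [-1.0, 1.0].
embeddings = [[random.uniform(-1.0, 1.0) for _ in range(embedding_size)]
              for _ in range(vocabulary_size)]

def embedding_lookup(params, ids):
    """Gather one row of `params` per id."""
    return [params[i] for i in ids]

def one_hot_matmul(params, i):
    """Same row computed the slow way: one_hot(i) @ params."""
    one_hot = [1.0 if k == i else 0.0 for k in range(len(params))]
    return [sum(one_hot[k] * params[k][d] for k in range(len(params)))
            for d in range(len(params[0]))]

train_inputs = [4, 0, 4]                   # hypothetical word ids
embed = embedding_lookup(embeddings, train_inputs)
print(embed)
```

Repeated ids return the same row, which is why gradients for a frequent word accumulate on a single row of the embedding matrix.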

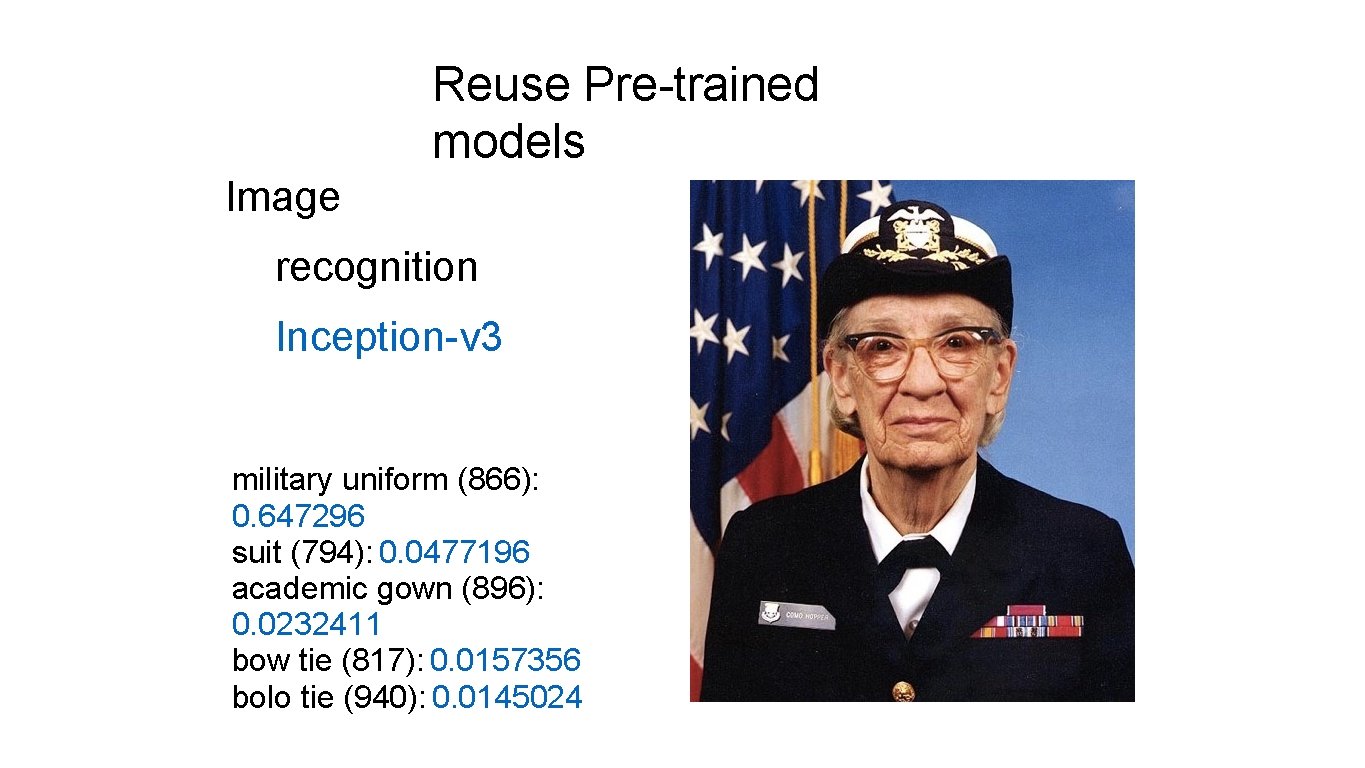

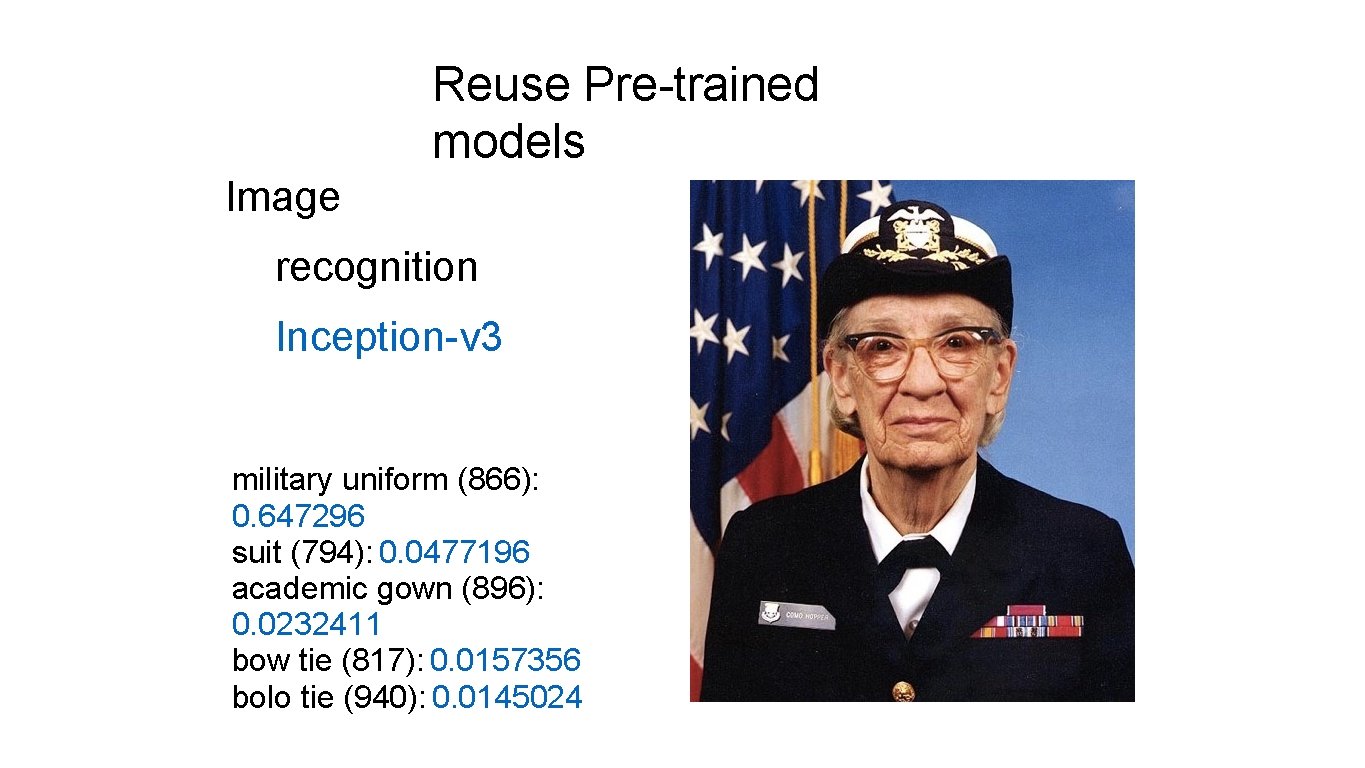

Reuse pre-trained models: image recognition with Inception-v3. Example output:

- military uniform (866): 0.647296
- suit (794): 0.0477196
- academic gown (896): 0.0232411
- bow tie (817): 0.0157356
- bolo tie (940): 0.0145024

Try it on your Android: the TensorFlow Android Camera Demo uses a Google Inception model to classify camera frames in real time, displaying the top results in an overlay on the camera image. github.com/tensorflow/tree/master/tensorflow/examples/android

Reinforcement learning using TensorFlow: github.com/nivwusquorum/tensorflow-

Using Deep Q Networks to Learn Video Game Strategies: github.com/asrivat1/DeepLearningVideoG