Taxonomy Strategies LLC Testing Your Taxonomy Ron Daniel

- Slides: 29

Taxonomy Strategies LLC Testing Your Taxonomy Ron Daniel, Jr. November 2, 2006 Copyright 2006 Taxonomy Strategies LLC. All rights reserved.

Testing Your Taxonomy

- Your taxonomy will not be perfect or complete, and it will need to be modified as content, user needs, and other practical considerations change.
- Developing a taxonomy incrementally requires measuring how well it is working in order to plan how to modify it.
- In this session, you will learn qualitative and quantitative taxonomy testing methods, including:
  - Tagging representative content to see if it works, and determining how much content is enough for validation.
  - Card sorting, use-based scenario testing, and focus groups to determine whether the taxonomy makes sense to your target audiences and to provide clues about how to fix it.
  - Benchmarks and metrics to evaluate usability test results, identify coverage gaps, and guide changes.

Taxonomy Strategies LLC The business of organized information

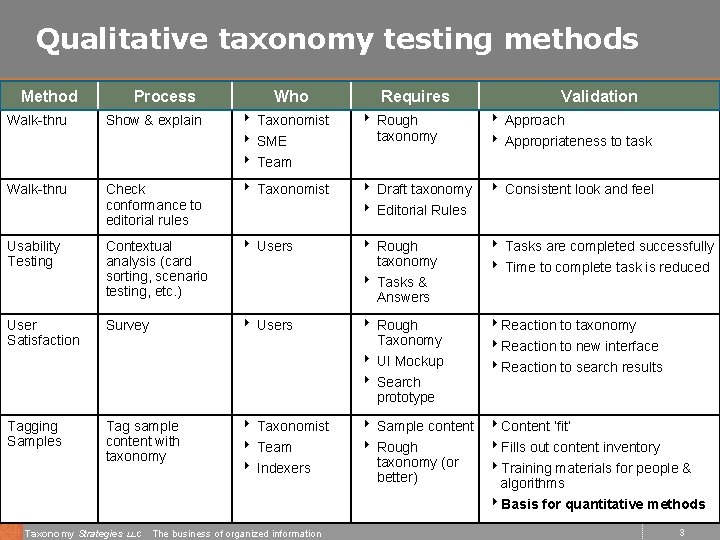

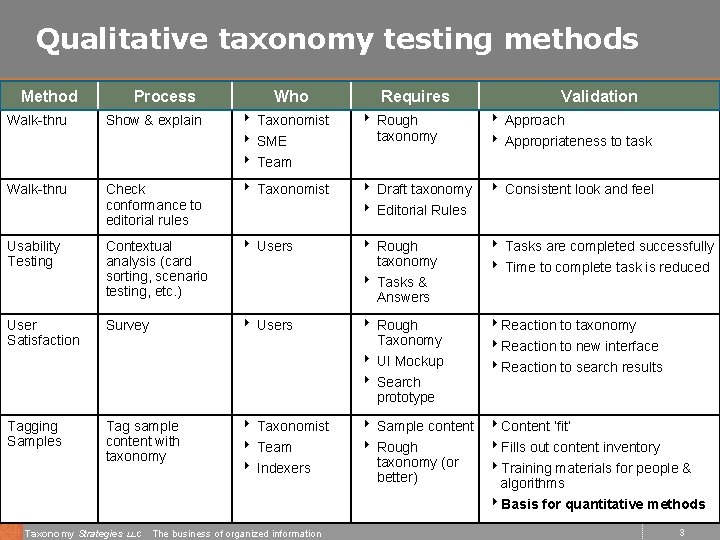

Qualitative taxonomy testing methods

| Method | Process | Who | Requires | Validation |
|---|---|---|---|---|
| Walk-thru | Show & explain | Taxonomist; SME; Team | Rough taxonomy | Approach; Appropriateness to task |
| Walk-thru | Check conformance to editorial rules | Taxonomist | Draft taxonomy; Editorial rules | Consistent look and feel |
| Usability Testing | Contextual analysis (card sorting, scenario testing, etc.) | Users | Rough taxonomy; Tasks & answers | Tasks are completed successfully; Time to complete task is reduced |
| User Satisfaction | Survey | Users | Rough taxonomy; UI mockup; Search prototype | Reaction to taxonomy; Reaction to new interface; Reaction to search results |
| Tagging Samples | Tag sample content with taxonomy | Taxonomist; Team; Indexers | Sample content; Rough taxonomy (or better) | Content ‘fit’; Fills out content inventory; Training materials for people & algorithms; Basis for quantitative methods |

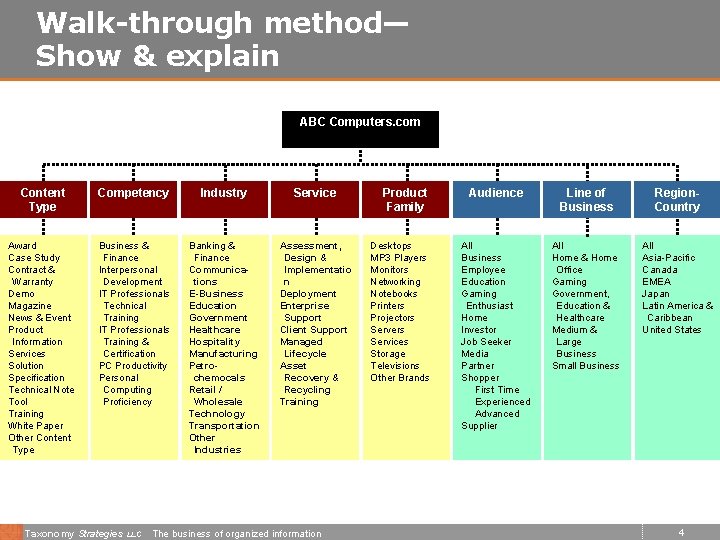

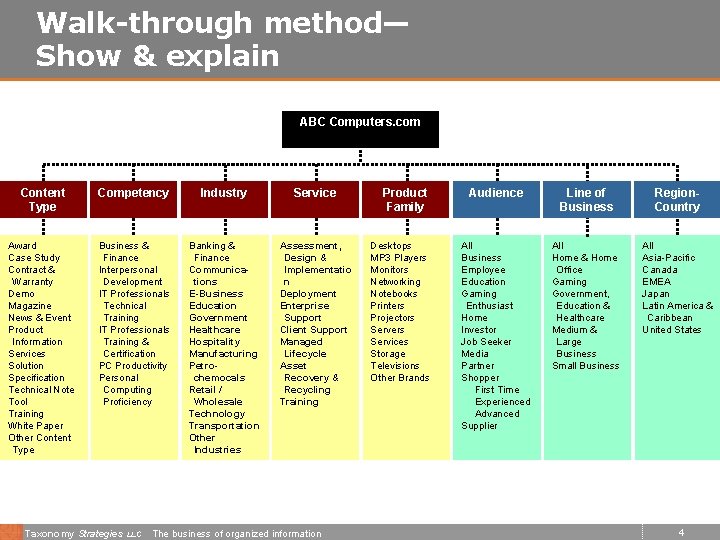

Walk-through method: Show & explain

ABC Computers.com

- Content Type: Award; Case Study; Contract & Warranty; Demo; Magazine; News & Event; Product Information; Services; Solution; Specification; Technical Note; Tool; Training; White Paper; Other Content Type
- Competency: Business & Finance; Interpersonal Development; IT Professionals Technical Training; IT Professionals Training & Certification; PC Productivity; Personal Computing Proficiency
- Industry: Banking & Finance; Communications; E-Business; Education; Government; Healthcare; Hospitality; Manufacturing; Petrochemicals; Retail / Wholesale; Technology; Transportation; Other Industries
- Service: Assessment, Design & Implementation; Deployment; Enterprise Support; Client Support; Managed Lifecycle; Asset Recovery & Recycling; Training
- Product Family: Desktops; MP3 Players; Monitors; Networking; Notebooks; Printers; Projectors; Servers; Services; Storage; Televisions; Other Brands
- Audience: All; Business; Employee; Education; Gaming Enthusiast; Home; Investor; Job Seeker; Media; Partner; Shopper (First Time, Experienced, Advanced); Supplier
- Line of Business: All; Home & Home Office; Gaming; Government, Education & Healthcare; Medium & Large Business; Small Business
- Region/Country: All; Asia-Pacific; Canada; EMEA; Japan; Latin America & Caribbean; United States

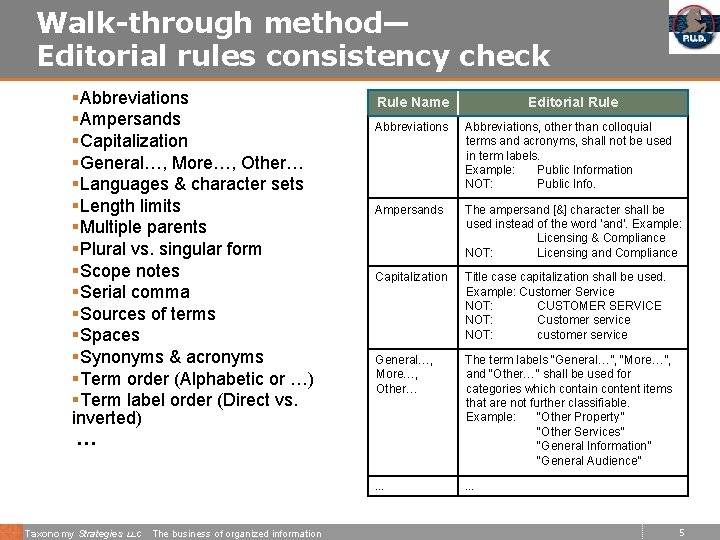

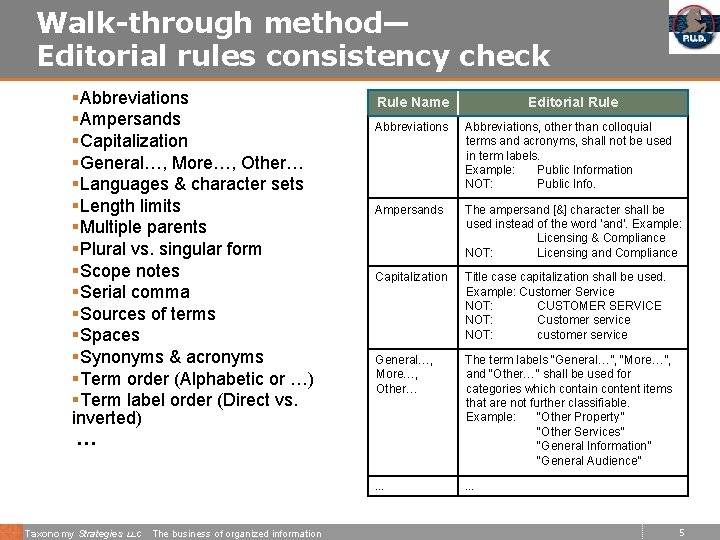

Walk-through method: Editorial rules consistency check

Rules to check:

- Abbreviations
- Ampersands
- Capitalization
- General…, More…, Other…
- Languages & character sets
- Length limits
- Multiple parents
- Plural vs. singular form
- Scope notes
- Serial comma
- Sources of terms
- Spaces
- Synonyms & acronyms
- Term order (Alphabetic or …)
- Term label order (Direct vs. inverted)
- …

| Rule Name | Editorial Rule |
|---|---|
| Abbreviations | Abbreviations, other than colloquial terms and acronyms, shall not be used in term labels. Example: Public Information. NOT: Public Info. |
| Ampersands | The ampersand [&] character shall be used instead of the word ‘and’. Example: Licensing & Compliance. NOT: Licensing and Compliance |
| Capitalization | Title case capitalization shall be used. Example: Customer Service. NOT: CUSTOMER SERVICE. NOT: Customer service. NOT: customer service |
| General…, More…, Other… | The term labels “General…”, “More…”, and “Other…” shall be used for categories which contain content items that are not further classifiable. Example: “Other Property”, “Other Services”, “General Information”, “General Audience” |
| … | … |
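Parts of an editorial-rules walk-through can be automated. The Python sketch below is a hypothetical checker for three of the rules in the table (abbreviations, ampersands, capitalization). The list of banned abbreviations and the set of lowercase "minor words" are assumptions for illustration; they are not part of the rules as stated.

```python
import re

# Hypothetical abbreviations to reject; a real list would come from your own editorial rules.
BANNED_ABBREVIATIONS = {"Info", "Mgmt", "Dept"}

# Words that title case usually leaves lowercase (an assumption, not one of the rules above).
MINOR_WORDS = {"a", "an", "and", "as", "at", "by", "for", "in", "of", "on", "or", "the", "to", "vs."}


def check_label(label: str) -> list:
    """Return a list of editorial-rule problems found in one term label."""
    problems = []
    words = label.split()

    # Abbreviations: compare each word, ignoring a trailing period.
    for word in words:
        if word.rstrip(".") in BANNED_ABBREVIATIONS:
            problems.append(f"abbreviation: {word}")

    # Ampersands: the standalone word 'and' should be written as '&'.
    if re.search(r"\band\b", label, flags=re.IGNORECASE):
        problems.append("use '&' instead of 'and'")

    # Capitalization: reject multi-word all-caps labels, then require an initial
    # capital on every word except minor words after the first position.
    if label.isupper() and len(words) > 1:
        problems.append("not title case: all caps")
    else:
        for i, word in enumerate(words):
            if i > 0 and word.lower() in MINOR_WORDS:
                continue
            first_letter = next((c for c in word if c.isalpha()), None)
            if first_letter is not None and first_letter.islower():
                problems.append(f"not title case: {word}")
                break
    return problems
```

Running it over the rule examples, `check_label("Customer Service")` returns an empty list, while `check_label("Licensing and Compliance")` reports the ampersand rule. Single-word acronyms such as "EMEA" pass because only multi-word all-caps labels are rejected.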

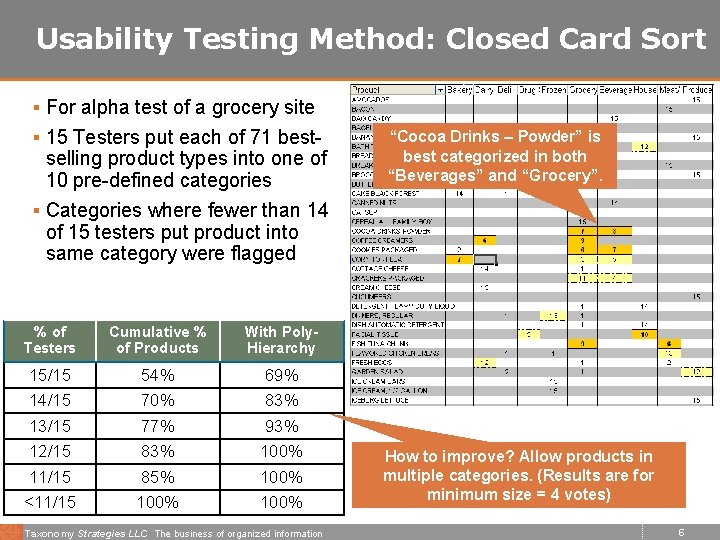

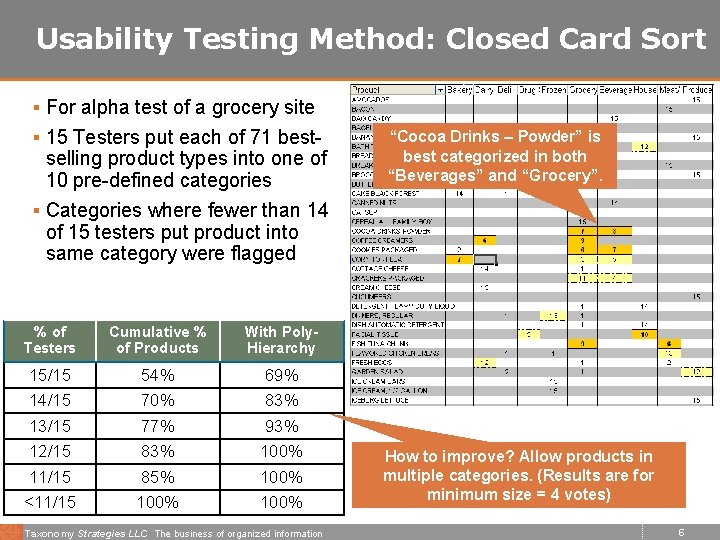

Usability Testing Method: Closed Card Sort

- For alpha test of a grocery site
- 15 testers put each of 71 best-selling product types into one of 10 predefined categories
- Products that fewer than 14 of 15 testers put into the same category were flagged

| Testers in agreement | Cumulative % of products | Cumulative % of products, with polyhierarchy |
|---|---|---|
| 15/15 | 54% | 69% |
| 14/15 | 70% | 83% |
| 13/15 | 77% | 93% |
| 12/15 | 83% | 100% |
| 11/15 | 85% | 100% |
| <11/15 | 100% | |

“Cocoa Drinks – Powder” is best categorized in both “Beverages” and “Grocery”. How to improve? Allow products in multiple categories. (Results are for minimum size = 4 votes.)
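The agreement table can be tallied from raw card-sort votes. This Python sketch assumes votes are stored per product as a `Counter` of category counts; the product data is made up. Treating "minimum size = 4 votes" as "sum the votes of every category that received at least 4" is our reading of the polyhierarchy column, not something the slide spells out.

```python
from collections import Counter
from typing import Dict, List, Optional

# Hypothetical votes: product -> Counter of {category: number of testers}.
SAMPLE_VOTES = {
    "Cocoa Drinks - Powder": Counter({"Beverages": 8, "Grocery": 7}),
    "Bananas": Counter({"Produce": 15}),
    "Paper Towels": Counter({"Household": 13, "Grocery": 2}),
}


def agreement(votes: Counter, min_votes: Optional[int] = None) -> int:
    """Testers in agreement on one product.

    Without polyhierarchy: votes for the single most popular category.
    With polyhierarchy (assumed reading): votes summed over every category
    that received at least `min_votes` votes.
    """
    top = max(votes.values())
    if min_votes is None:
        return top
    accepted = [n for n in votes.values() if n >= min_votes]
    return sum(accepted) if accepted else top


def cumulative_table(all_votes: Dict[str, Counter], thresholds: List[int],
                     min_votes: Optional[int] = None) -> Dict[int, float]:
    """Cumulative % of products whose agreement is at least each threshold."""
    scores = [agreement(v, min_votes) for v in all_votes.values()]
    return {t: 100 * sum(s >= t for s in scores) / len(scores) for t in thresholds}


def flagged(all_votes: Dict[str, Counter], cutoff: int = 14) -> List[str]:
    """Products where fewer than `cutoff` testers chose the same category."""
    return [p for p, v in all_votes.items() if max(v.values()) < cutoff]
```

`cumulative_table(SAMPLE_VOTES, [15, 14, 13, 12, 11])` gives the plain column, and passing `min_votes=4` gives the polyhierarchy column. In the toy data the cocoa product moves from 8 votes to 15 once it may sit in both categories.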

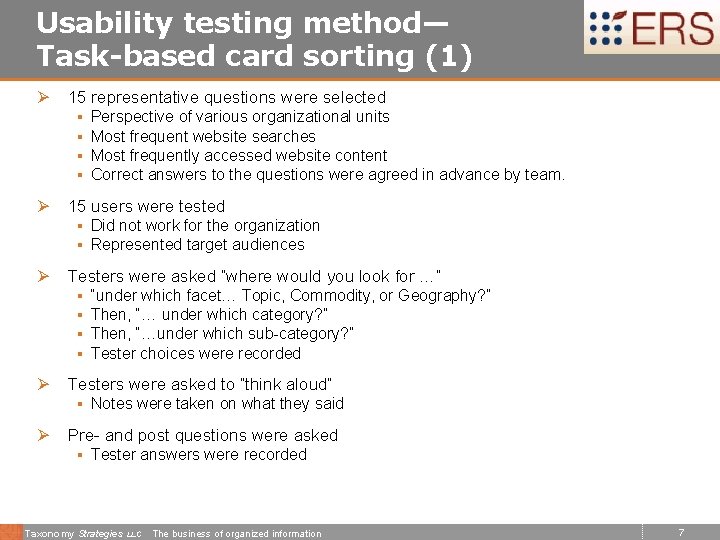

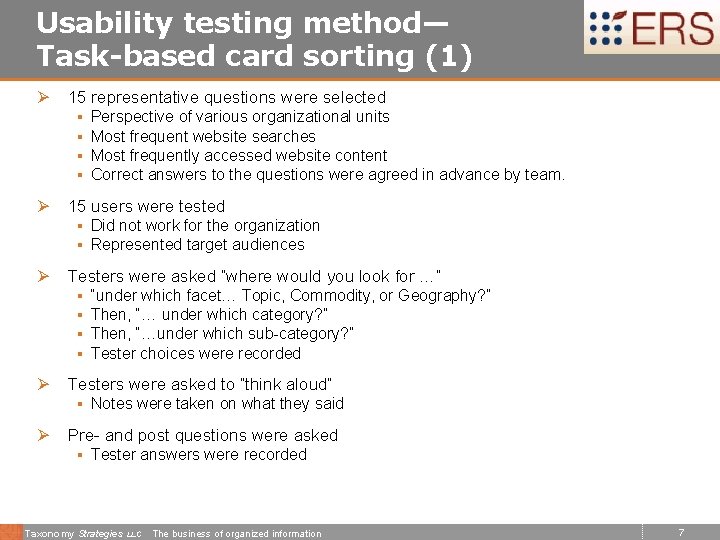

Usability testing method: Task-based card sorting (1)

- 15 representative questions were selected, drawn from:
  - The perspective of various organizational units
  - The most frequent website searches
  - The most frequently accessed website content
- Correct answers to the questions were agreed on in advance by the team.
- 15 users were tested:
  - They did not work for the organization
  - They represented the target audiences
- Testers were asked “where would you look for …”:
  - “… under which facet: Topic, Commodity, or Geography?”
  - Then, “… under which category?”
  - Then, “… under which sub-category?”
- Tester choices were recorded.
- Testers were asked to “think aloud”.
  - Notes were taken on what they said.
- Pre- and post-test questions were asked.
  - Tester answers were recorded.

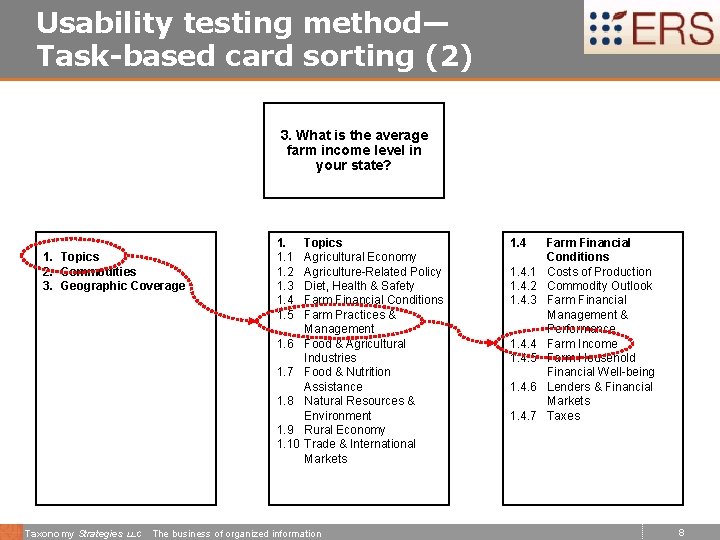

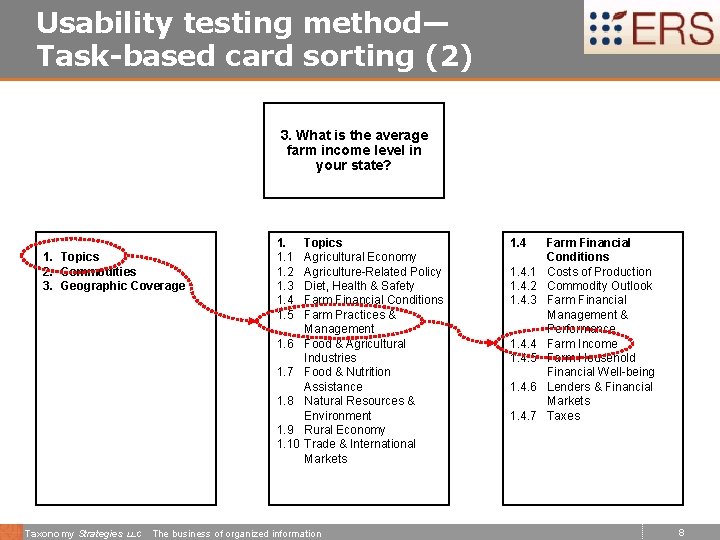

Usability testing method: Task-based card sorting (2)

Question 3: What is the average farm income level in your state?

Facets:

1. Topics
2. Commodities
3. Geographic Coverage

Topics:

- 1.1 Agricultural Economy
- 1.2 Agriculture-Related Policy
- 1.3 Diet, Health & Safety
- 1.4 Farm Financial Conditions
- 1.5 Farm Practices & Management
- 1.6 Food & Agricultural Industries
- 1.7 Food & Nutrition Assistance
- 1.8 Natural Resources & Environment
- 1.9 Rural Economy
- 1.10 Trade & International Markets

Farm Financial Conditions:

- 1.4.1 Costs of Production
- 1.4.2 Commodity Outlook
- 1.4.3 Farm Financial Management & Performance
- 1.4.4 Farm Income
- 1.4.5 Farm Household Financial Well-being
- 1.4.6 Lenders & Financial Markets
- 1.4.7 Taxes

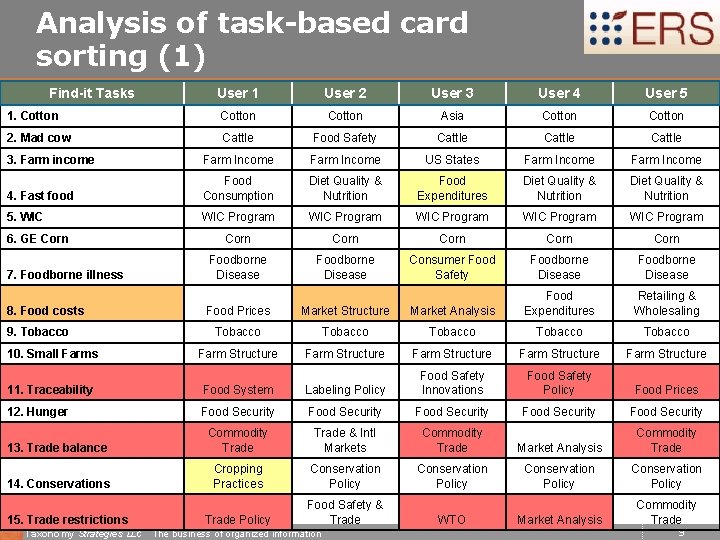

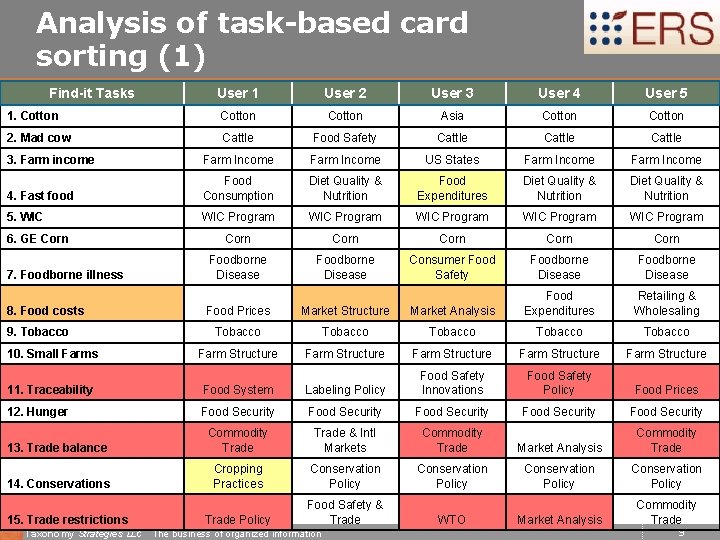

Analysis of task-based card sorting (1)

Find-it tasks and the categories chosen by Users 1-5:

1. Cotton: Cotton; Asia; Cotton
2. Mad cow: Cattle; Food Safety; Cattle
3. Farm income: Farm Income; US States; Farm Income
4. Fast food: Food Consumption; Diet Quality & Nutrition; Food Expenditures; Diet Quality & Nutrition
5. WIC: WIC Program; WIC Program
6. GE Corn: Corn; Corn
7. Foodborne illness: Foodborne Disease; Consumer Food Safety; Foodborne Disease
8. Food costs: Food Prices; Market Structure; Market Analysis; Food Expenditures; Retailing & Wholesaling
9. Tobacco: Tobacco; Tobacco
10. Small Farms: Farm Structure; Farm Structure
11. Traceability: Food Safety Policy; Food Prices; Food System; Labeling Policy; Food Safety Innovations
12. Hunger: Food Security; Food Security
13. Trade balance: Commodity Trade & Intl Markets; Commodity Trade; Market Analysis; Commodity Trade
14. Conservation: Cropping Practices; Conservation Policy
15. Trade restrictions: Trade Policy; Food Safety & Trade; WTO; Market Analysis; Commodity Trade

Analysis of task-based card sorting (2)

- In 80% of the trials, users looked for information under the categories where we expected them to look for it.
- Breaking topics up into facets makes it easier to find information, especially information related to commodities.

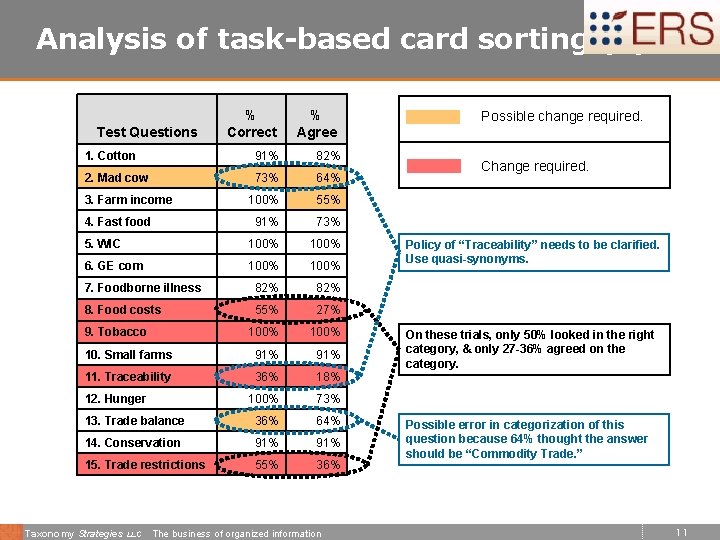

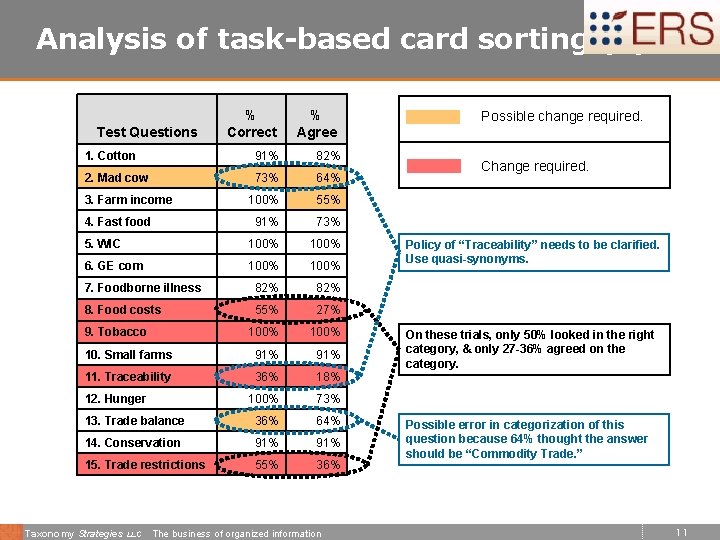

Analysis of task-based card sorting (3)

| Test question | % Correct | % Agree |
|---|---|---|
| 1. Cotton | 91% | 82% |
| 2. Mad cow | 73% | 64% |
| 3. Farm income | 100% | 55% |
| 4. Fast food | 91% | 73% |
| 5. WIC | 100% | |
| 6. GE corn | 100% | |
| 7. Foodborne illness | 82% | |
| 8. Food costs | 55% | 27% |
| 9. Tobacco | 100% | |
| 10. Small farms | 91% | |
| 11. Traceability | 36% | 18% |
| 12. Hunger | 100% | 73% |
| 13. Trade balance | 36% | 64% |
| 14. Conservation | 91% | |
| 15. Trade restrictions | 55% | 36% |

Notes on the results:

- Possible change required.
- Change required.
- Policy of “Traceability” needs to be clarified.
- Use quasi-synonyms.
- On these trials, only 50% looked in the right category, & only 27-36% agreed on the category.
- Trade balance: possible error in categorization of this question, because 64% thought the answer should be “Commodity Trade.”
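% Correct is the share of testers who chose the pre-agreed answer. The slide does not define % Agree, so this hypothetical Python sketch computes only % correct, the overall share of correct trials (the kind of figure behind "80% of the trials"), and the category testers chose most often, which is the evidence behind a note like the one for Trade balance. The task data and the 60% review cut-off are illustrative assumptions.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical results: question -> (pre-agreed answer, category each tester chose).
TASKS: Dict[str, Tuple[str, List[str]]] = {
    "Trade balance": ("Trade & International Markets",
                      ["Commodity Trade", "Commodity Trade",
                       "Trade & International Markets", "Commodity Trade"]),
    "Hunger": ("Food Security", ["Food Security"] * 4),
}


def score_task(expected: str, answers: List[str]) -> dict:
    """% correct, plus the category testers chose most often."""
    counts = Counter(answers)
    modal, modal_n = counts.most_common(1)[0]
    return {
        "pct_correct": 100 * counts[expected] / len(answers),
        "most_common": modal,
        "pct_most_common": 100 * modal_n / len(answers),
    }


def overall_pct_correct(tasks: Dict[str, Tuple[str, List[str]]]) -> float:
    """Share of all trials (tester x question) that landed on the expected category."""
    hits = sum(a == expected for expected, answers in tasks.values() for a in answers)
    total = sum(len(answers) for _, answers in tasks.values())
    return 100 * hits / total


def needs_review(tasks: Dict[str, Tuple[str, List[str]]],
                 min_pct_correct: float = 60.0) -> List[str]:
    """Questions with low % correct, or where testers converged on another category.
    The 60% cut-off is a hypothetical choice."""
    flagged = []
    for question, (expected, answers) in tasks.items():
        result = score_task(expected, answers)
        if result["pct_correct"] < min_pct_correct or result["most_common"] != expected:
            flagged.append(question)
    return flagged
```

When `most_common` differs from the expected answer, as it does for the toy Trade balance data, the question itself may be miscategorized rather than the taxonomy heading.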

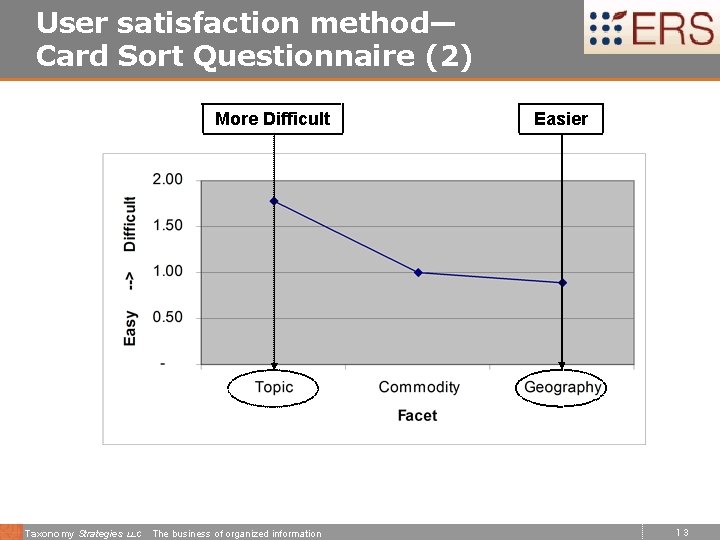

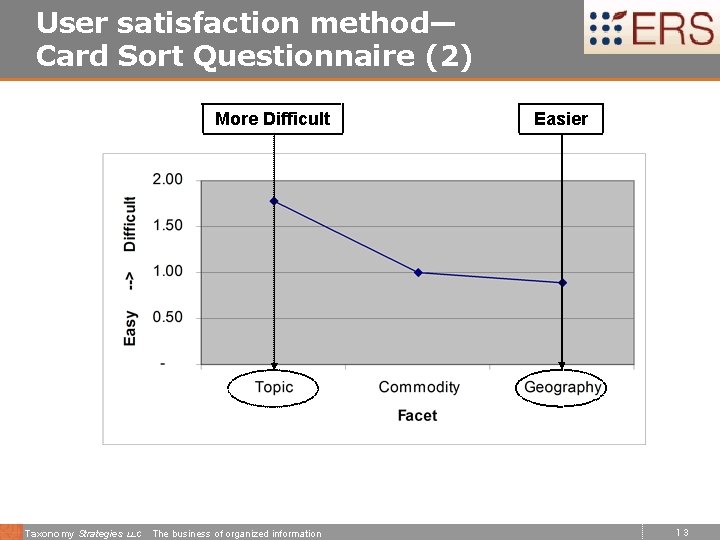

User satisfaction method: Card Sort Questionnaire (1)

- Was it easy, medium or difficult to choose the appropriate Topic?
  - Easy
  - Medium
  - Difficult
- Was it easy, medium or difficult to choose the appropriate Commodity?
  - Easy
  - Medium
  - Difficult
- Was it easy, medium or difficult to choose the appropriate Geographic Coverage?
  - Easy
  - Medium
  - Difficult

User satisfaction method: Card Sort Questionnaire (2)

[Chart: questionnaire responses on a scale from “More Difficult” to “Easier”]

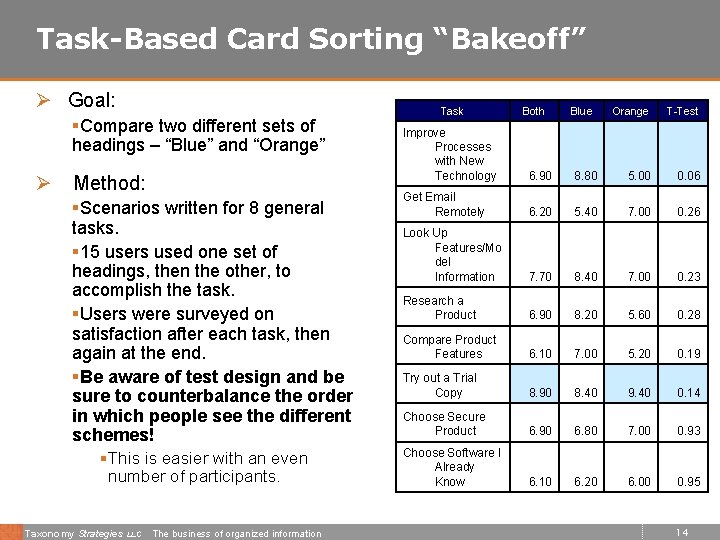

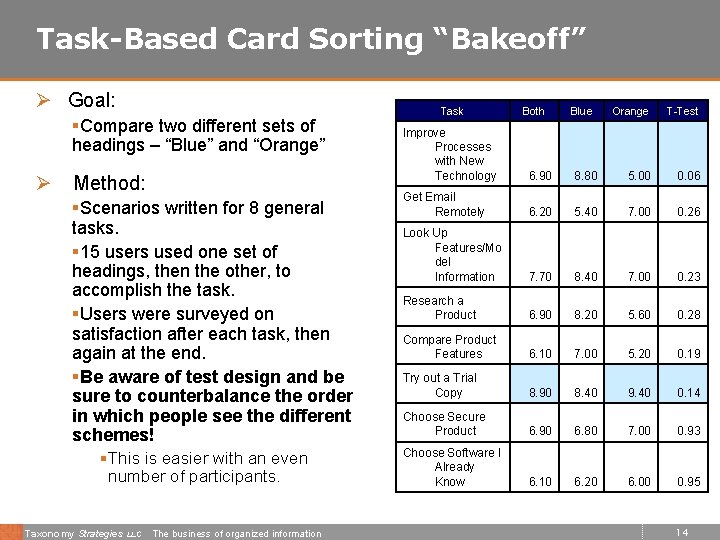

Task-Based Card Sorting “Bakeoff”

- Goal:
  - Compare two different sets of headings, “Blue” and “Orange”
- Method:
  - Scenarios were written for 8 general tasks.
  - 15 users used one set of headings, then the other, to accomplish each task.
  - Users were surveyed on satisfaction after each task, then again at the end.
  - Be aware of test design, and be sure to counterbalance the order in which people see the different schemes!
    - This is easier with an even number of participants.

| Task | Both | Blue | Orange | T-Test |
|---|---|---|---|---|
| Improve Processes with New Technology | 6.90 | 8.80 | 5.00 | 0.06 |
| Get Email Remotely | 6.20 | 5.40 | 7.00 | 0.26 |
| Look Up Features/Model Information | 7.70 | 8.40 | 7.00 | 0.23 |
| Research a Product | 6.90 | 8.20 | 5.60 | 0.28 |
| Compare Product Features | 6.10 | 7.00 | 5.20 | 0.19 |
| Try out a Trial Copy | 8.90 | 8.40 | 9.40 | 0.14 |
| Choose Secure Product | 6.90 | 6.80 | 7.00 | 0.93 |
| Choose Software I Already Know | 6.10 | 6.20 | 6.00 | 0.95 |
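Counterbalancing and the per-task comparison can both be sketched in a few lines of Python. `assign_orders` alternates which scheme each participant sees first; with 15 participants one order is necessarily used once more than the other, which is why an even count is easier. `paired_t` computes a paired t statistic from each participant's two scores. We are assuming the T-Test column holds p-values from such a paired test; the slide does not say so. Turning t and its degrees of freedom into a p-value needs the t distribution, for example via `scipy.stats.ttest_rel`.

```python
import math
import statistics
from typing import Dict, List, Sequence, Tuple


def assign_orders(participants: Sequence[str]) -> Dict[str, Tuple[str, str]]:
    """Alternate which heading scheme each participant sees first."""
    orders = [("Blue", "Orange"), ("Orange", "Blue")]
    return {p: orders[i % 2] for i, p in enumerate(participants)}


def paired_t(blue: List[float], orange: List[float]) -> Tuple[float, int]:
    """Paired t statistic and degrees of freedom for per-participant score pairs."""
    if len(blue) != len(orange):
        raise ValueError("each participant needs a score for both schemes")
    diffs = [b - o for b, o in zip(blue, orange)]
    n = len(diffs)
    standard_error = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.mean(diffs) / standard_error, n - 1
```

For example, `paired_t([9, 8, 9, 7, 8], [5, 6, 4, 6, 5])` uses made-up satisfaction scores for five participants and returns a t of about 4.24 with 4 degrees of freedom.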

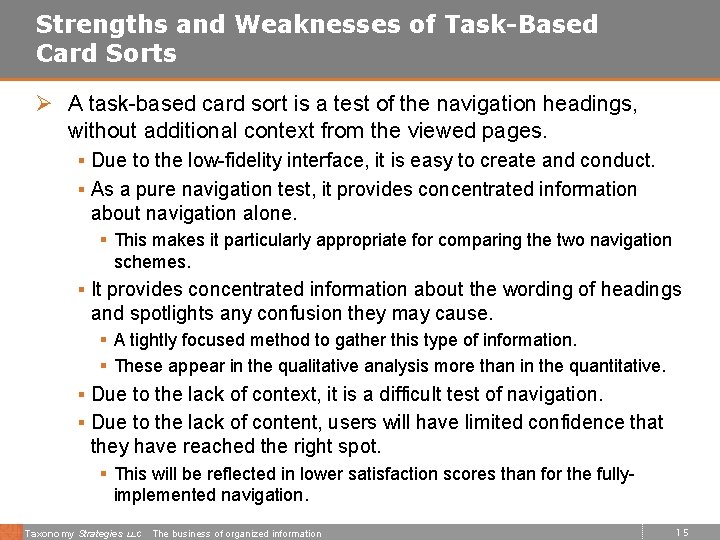

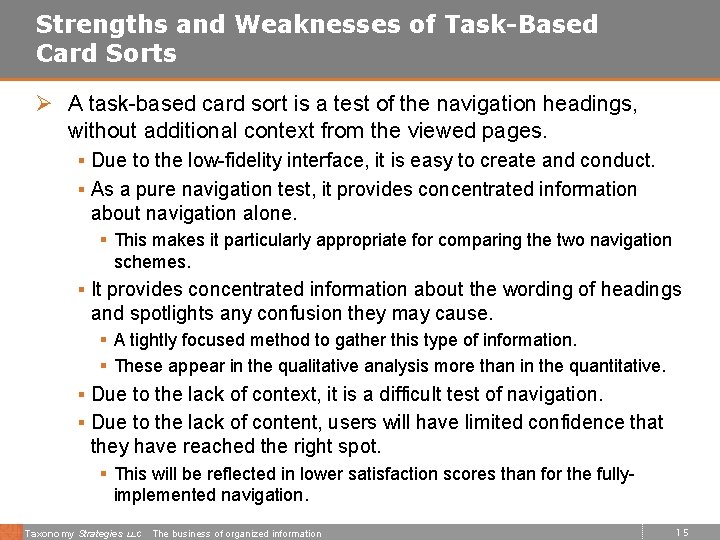

Strengths and Weaknesses of Task-Based Card Sorts

- A task-based card sort is a test of the navigation headings, without additional context from the viewed pages.
  - Due to the low-fidelity interface, it is easy to create and conduct.
  - As a pure navigation test, it provides concentrated information about navigation alone.
  - This makes it particularly appropriate for comparing the two navigation schemes.
  - It provides concentrated information about the wording of headings and spotlights any confusion they may cause.
  - It is a tightly focused method for gathering this type of information.
  - These findings appear in the qualitative analysis more than in the quantitative.
  - Due to the lack of context, it is a difficult test of navigation.
  - Due to the lack of content, users will have limited confidence that they have reached the right spot.
  - This will be reflected in lower satisfaction scores than for the fully implemented navigation.

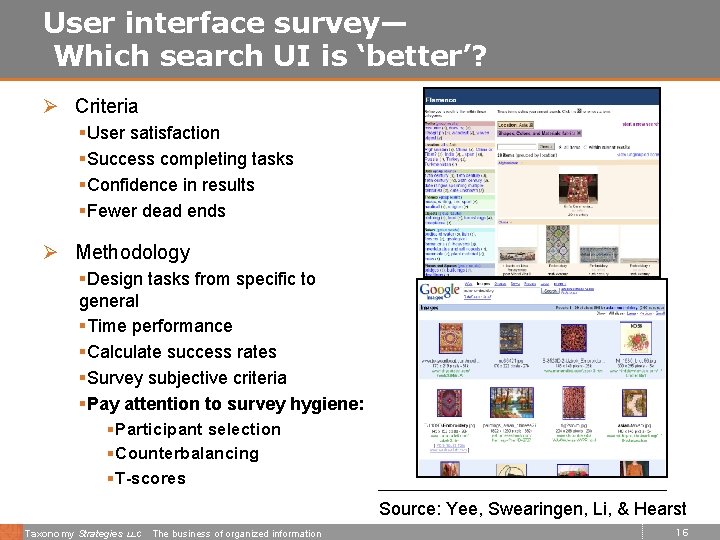

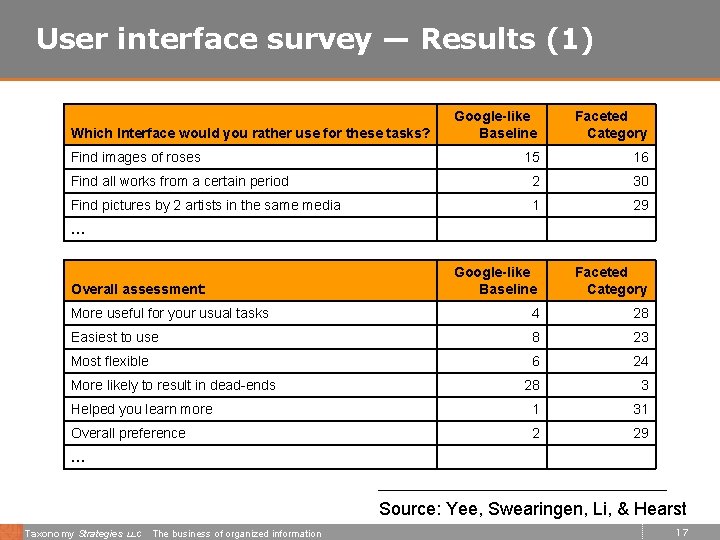

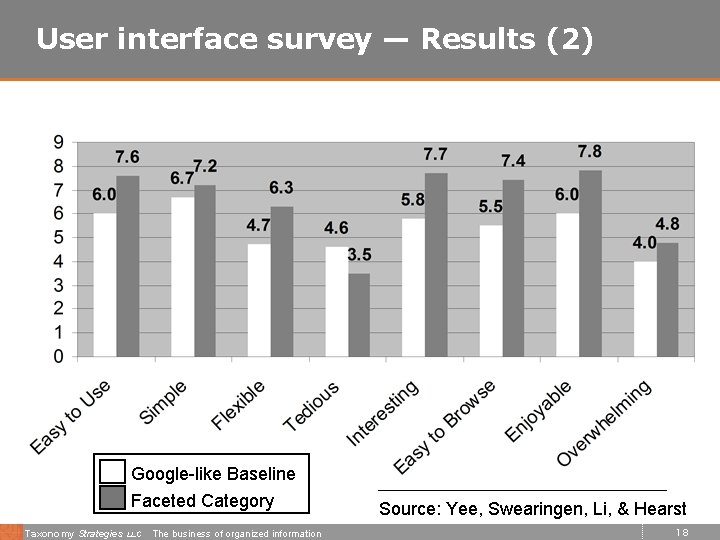

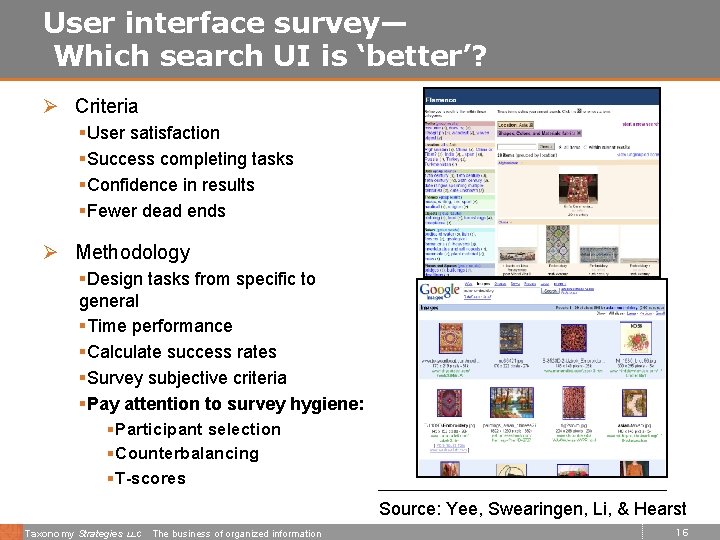

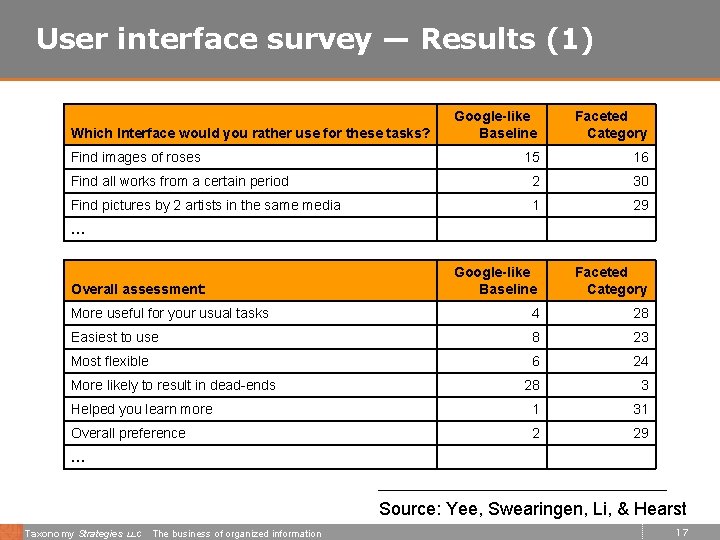

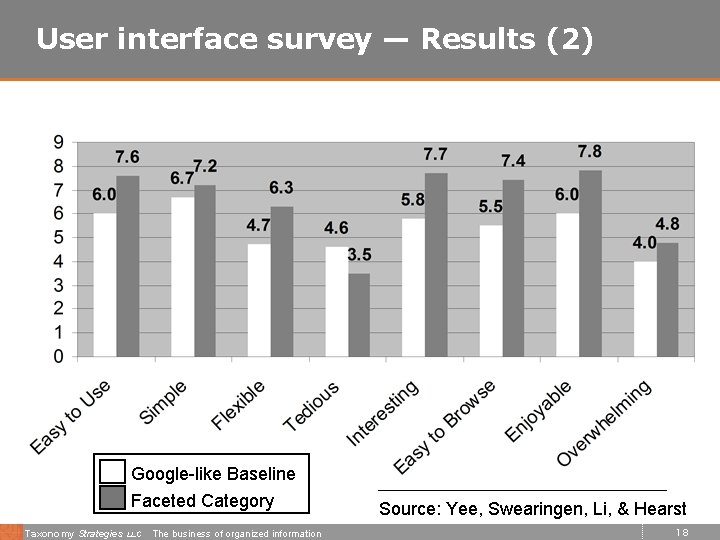

User interface survey: Which search UI is ‘better’?

- Criteria
  - User satisfaction
  - Success completing tasks
  - Confidence in results
  - Fewer dead ends
- Methodology
  - Design tasks from specific to general
  - Time performance
  - Calculate success rates
  - Survey subjective criteria
  - Pay attention to survey hygiene:
    - Participant selection
    - Counterbalancing
    - T-scores

Source: Yee, Swearingen, Li, & Hearst

User interface survey: Results (1)

Which interface would you rather use for these tasks?

| Task | Google-like Baseline | Faceted Category |
|---|---|---|
| Find images of roses … | 15 | 16 |
| Find all works from a certain period | 2 | 30 |
| Find pictures by 2 artists in the same media | 1 | 29 |

Overall assessment:

| Criterion | Google-like Baseline | Faceted Category |
|---|---|---|
| More useful for your usual tasks | 4 | 28 |
| Easiest to use | 8 | 23 |
| Most flexible | 6 | 24 |
| More likely to result in dead-ends … | 28 | 3 |
| Helped you learn more | 1 | 31 |
| Overall preference | 2 | 29 |

Source: Yee, Swearingen, Li, & Hearst
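Preference counts like these can be checked with an exact two-sided sign (binomial) test, sketched below in Python. This is an illustrative choice; the source does not say which test, if any, was applied to these counts. Only participants who picked one interface or the other are counted, and the counts are copied from the Overall assessment rows on the slide.

```python
from math import comb


def sign_test_p(a: int, b: int) -> float:
    """Two-sided exact binomial (sign) test.

    Null hypothesis: each of the two interfaces is equally likely to be chosen.
    """
    n = a + b
    k = min(a, b)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# (Google-like Baseline, Faceted Category) counts from the slide.
OVERALL = {
    "More useful for your usual tasks": (4, 28),
    "Easiest to use": (8, 23),
    "Most flexible": (6, 24),
    "Helped you learn more": (1, 31),
    "Overall preference": (2, 29),
}

for question, (baseline, faceted) in OVERALL.items():
    print(f"{question}: p = {sign_test_p(baseline, faceted):.2g}")
```

A near-even split such as the roses task (15 vs. 16) gives p = 1.0, so that task shows no preference, while the overall preference (2 vs. 29) is very unlikely under a 50/50 null.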

User interface survey: Results (2)

[Chart comparing the Google-like Baseline and Faceted Category interfaces]

Source: Yee, Swearingen, Li, & Hearst

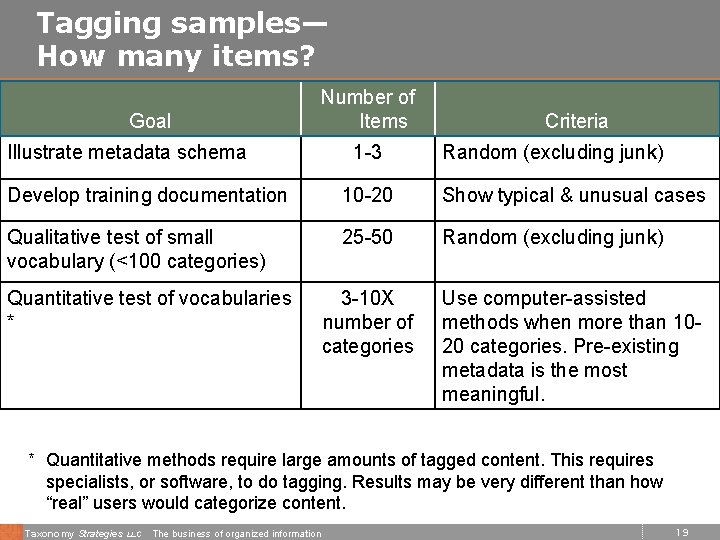

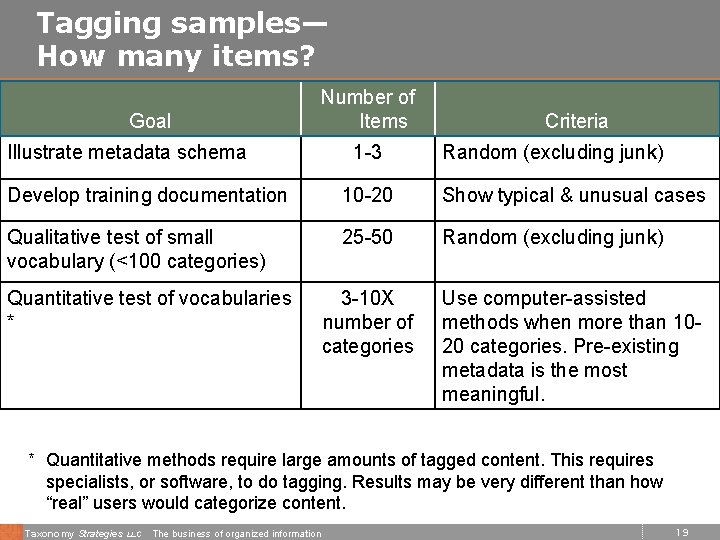

Tagging samples: How many items?

| Goal | Number of items | Criteria |
|---|---|---|
| Illustrate metadata schema | 1-3 | Random (excluding junk) |
| Develop training documentation | 10-20 | Show typical & unusual cases |
| Qualitative test of small vocabulary (<100 categories) | 25-50 | Random (excluding junk) |
| Quantitative test of vocabularies* | 3-10 × number of categories | Use computer-assisted methods when more than 1020 categories. Pre-existing metadata is the most meaningful. |

\* Quantitative methods require large amounts of tagged content. This requires specialists, or software, to do the tagging. Results may be very different from how “real” users would categorize content.
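The table's guidance reduces to simple arithmetic plus a junk filter. In this Python sketch the 3-10× multiplier comes from the table; `is_junk` is a hypothetical predicate you would supply (for example, one that rejects inaccessible files), and the seeded random generator is only there to make a sample reproducible.

```python
import random
from typing import Callable, List, Optional, Sequence, Tuple


def quantitative_sample_range(n_categories: int) -> Tuple[int, int]:
    """Items to tag for a quantitative test: 3 to 10 times the number of categories."""
    return 3 * n_categories, 10 * n_categories


def draw_sample(items: Sequence[str], k: int, is_junk: Callable[[str], bool],
                seed: Optional[int] = None) -> List[str]:
    """Random sample of up to k items, excluding anything the junk filter rejects."""
    candidates = [item for item in items if not is_junk(item)]
    rng = random.Random(seed)
    return rng.sample(candidates, min(k, len(candidates)))
```

A 179-term taxonomy, for instance, would call for roughly 537 to 1,790 tagged items under this rule of thumb.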

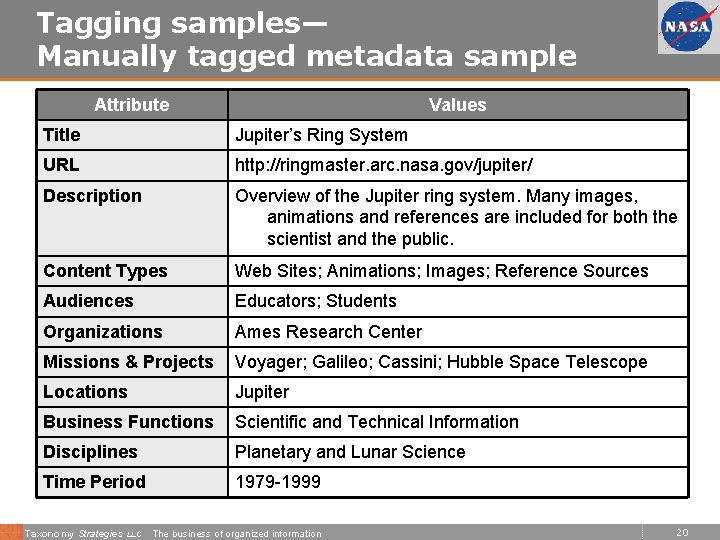

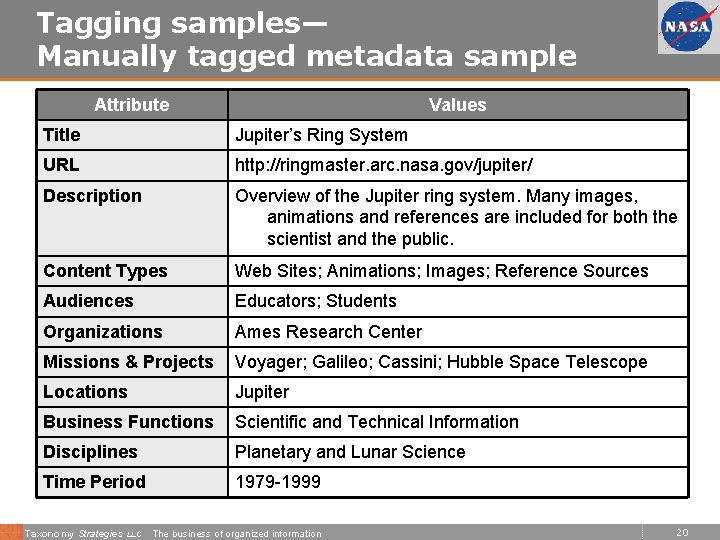

Tagging samples: Manually tagged metadata sample

| Attribute | Values |
|---|---|
| Title | Jupiter’s Ring System |
| URL | http://ringmaster.arc.nasa.gov/jupiter/ |
| Description | Overview of the Jupiter ring system. Many images, animations and references are included for both the scientist and the public. |
| Content Types | Web Sites; Animations; Images; Reference Sources |
| Audiences | Educators; Students |
| Organizations | Ames Research Center |
| Missions & Projects | Voyager; Galileo; Cassini; Hubble Space Telescope |
| Locations | Jupiter |
| Business Functions | Scientific and Technical Information |
| Disciplines | Planetary and Lunar Science |
| Time Period | 1979-1999 |

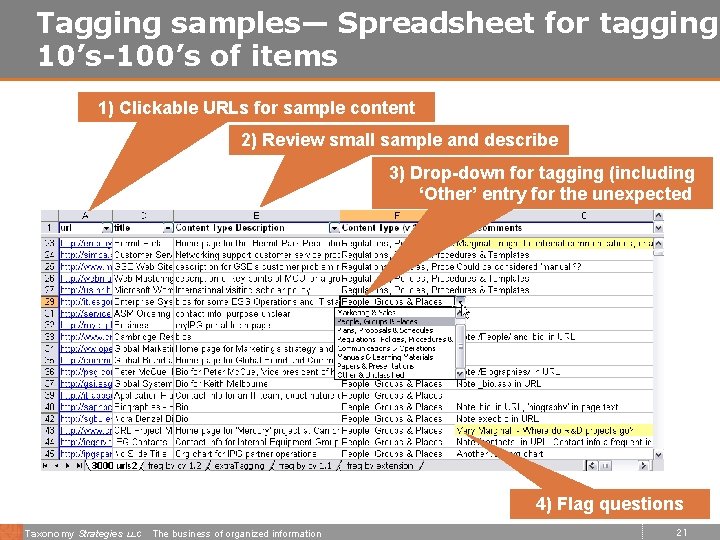

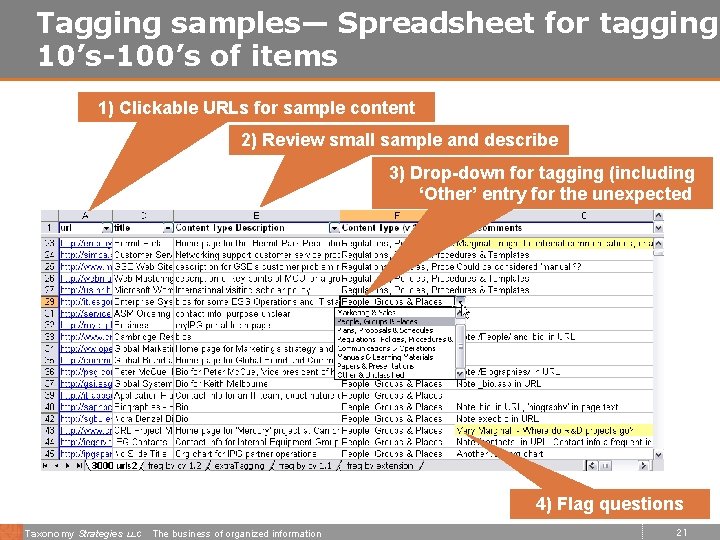

Tagging samples: Spreadsheet for tagging 10’s-100’s of items

1) Clickable URLs for sample content
2) Review small sample and describe
3) Drop-down for tagging (including an ‘Other’ entry for the unexpected)
4) Flag questions
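A worksheet like this can be generated rather than built by hand. The Python sketch writes a CSV skeleton with URL, description, one column per facet, and flag/question columns, and builds each facet's drop-down choices with an 'Other' entry. CSV cannot store drop-downs, so applying `allowed_values` as list validation in your spreadsheet tool remains a manual step. The column names are assumptions; the example vocabulary is taken from the Jupiter record.

```python
import csv
import io
from typing import Dict, List, Sequence

# Example vocabulary drawn from the manually tagged record (illustrative subset).
VOCAB: Dict[str, List[str]] = {
    "Audiences": ["Educators", "Students"],
    "Content Types": ["Web Sites", "Animations", "Images", "Reference Sources"],
}


def tagging_sheet(urls: Sequence[str], facets: Sequence[str]) -> str:
    """CSV text for a tagging worksheet: one row per item, one column per facet."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["URL", "Description", *facets, "Flag", "Question"])
    for url in urls:
        # Blank cells for the tagger to fill in.
        writer.writerow([url, "", *([""] * len(facets)), "", ""])
    return buf.getvalue()


def allowed_values(vocab: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Choices for each facet's drop-down, always ending with an 'Other' entry."""
    return {facet: [*terms, "Other"] for facet, terms in vocab.items()}
```

`tagging_sheet(["http://ringmaster.arc.nasa.gov/jupiter/"], list(VOCAB))` yields a header row plus one blank row ready for tagging.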

Rough Bulk Tagging: Facet Demo (1)

- Collections: 4 content sources
  - NTRS, SIRTF, Webb, Lessons Learned
- Taxonomy
  - Converted MultiTes format into RDF for Seamark
- Metadata
  - Converted from existing metadata on web pages, or
  - Created using simple automatic classifier (string matching with terms & synonyms)
- 250k items, ~12 metadata fields, 1.5 weeks effort
- OOTB Seamark user interface, plus logo
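A "simple automatic classifier" of this kind can be approximated with whole-word, case-insensitive string matching against each term and its synonyms. This Python sketch is a hypothetical illustration, not the tooling used for the demo; the example vocabulary is assumed. Plain string matching is rough by design: an ambiguous label such as "Galileo" would match the person as well as the mission.

```python
import re
from typing import Dict, List

# Hypothetical vocabulary: preferred term -> synonyms.
VOCAB: Dict[str, List[str]] = {
    "Jupiter": ["Jovian"],
    "Hubble Space Telescope": ["HST", "Hubble"],
    "Galileo": [],
}


def build_patterns(vocabulary: Dict[str, List[str]]) -> Dict[str, re.Pattern]:
    """One case-insensitive, whole-word regex per preferred term, covering its synonyms."""
    patterns = {}
    for term, synonyms in vocabulary.items():
        # Deduplicate and sort so the compiled pattern is deterministic.
        labels = sorted({term, *synonyms})
        alternation = "|".join(re.escape(label) for label in labels)
        patterns[term] = re.compile(rf"\b(?:{alternation})\b", re.IGNORECASE)
    return patterns


def classify(text: str, patterns: Dict[str, re.Pattern]) -> List[str]:
    """Preferred terms whose label or synonym appears anywhere in the text."""
    return sorted(term for term, pattern in patterns.items() if pattern.search(text))
```

Tagging then means running `classify` over each item's title and description and writing the returned preferred terms into the facet field.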

Rough Bulk Tagging: OOTB Facet Demo (2)

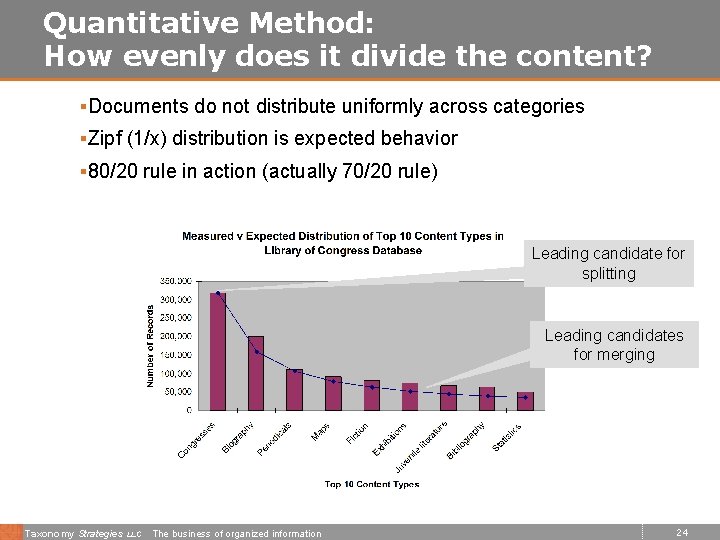

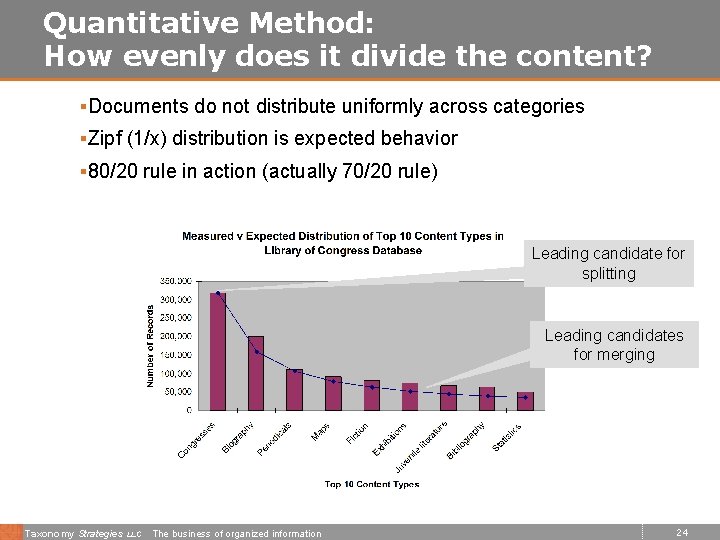

Quantitative Method: How evenly does it divide the content?

- Documents do not distribute uniformly across categories
- Zipf (1/x) distribution is expected behavior
- 80/20 rule in action (actually 70/20 rule)

[Chart: documents per category, with callouts marking the leading candidate for splitting and the leading candidates for merging]
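One way to surface splitting and merging candidates is to rank categories by document count and compare each with its Zipf (1/r) expectation, where rank r receives a share of (1/r) divided by the harmonic number of the category count. In this Python sketch the 2.0 and 0.5 ratio cut-offs are hypothetical, and the category counts are made up.

```python
from typing import Dict, List, Tuple


def zipf_expected(n_docs: int, n_categories: int) -> List[float]:
    """Expected document count for each rank r under a 1/r (Zipf) distribution."""
    harmonic = sum(1 / r for r in range(1, n_categories + 1))
    return [n_docs / (r * harmonic) for r in range(1, n_categories + 1)]


def compare_to_zipf(counts: Dict[str, int], high: float = 2.0,
                    low: float = 0.5) -> List[Tuple[str, int, float, str]]:
    """Rank categories by size and compare each with its Zipf expectation.

    The high/low ratios are hypothetical cut-offs: far above expectation
    suggests splitting, far below suggests merging.
    """
    ranked = sorted(counts.items(), key=lambda kv: kv[1], reverse=True)
    expected = zipf_expected(sum(counts.values()), len(counts))
    report = []
    for (category, observed), exp in zip(ranked, expected):
        ratio = observed / exp
        action = "split?" if ratio >= high else "merge?" if ratio <= low else ""
        report.append((category, observed, round(exp, 1), action))
    return report
```

For toy counts of 60, 20, 12, 6, and 2 documents across five categories, the Zipf expectation for the largest is about 43.8, and only the 2-document category falls below half of its expected size.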

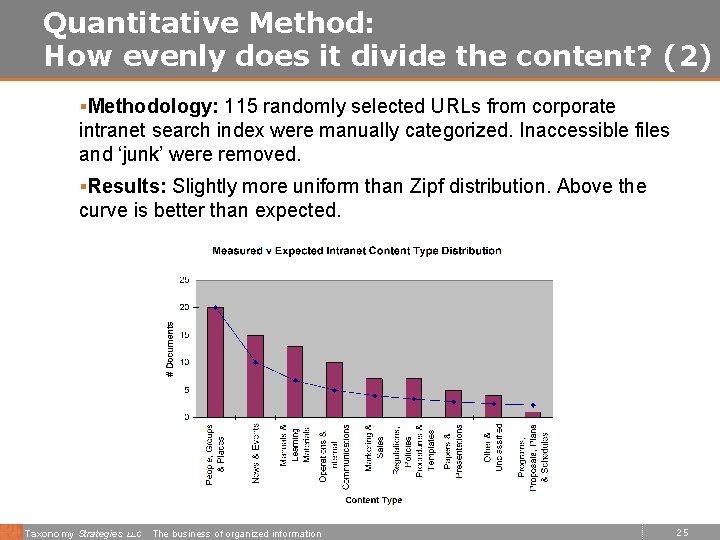

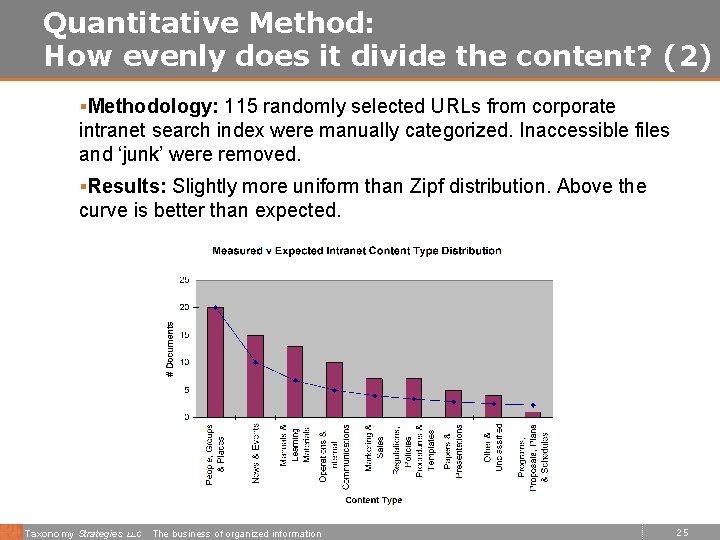

Quantitative Method: How evenly does it divide the content? (2)

- Methodology: 115 randomly selected URLs from the corporate intranet search index were manually categorized. Inaccessible files and ‘junk’ were removed.
- Results: Slightly more uniform than a Zipf distribution. Above the curve is better than expected.

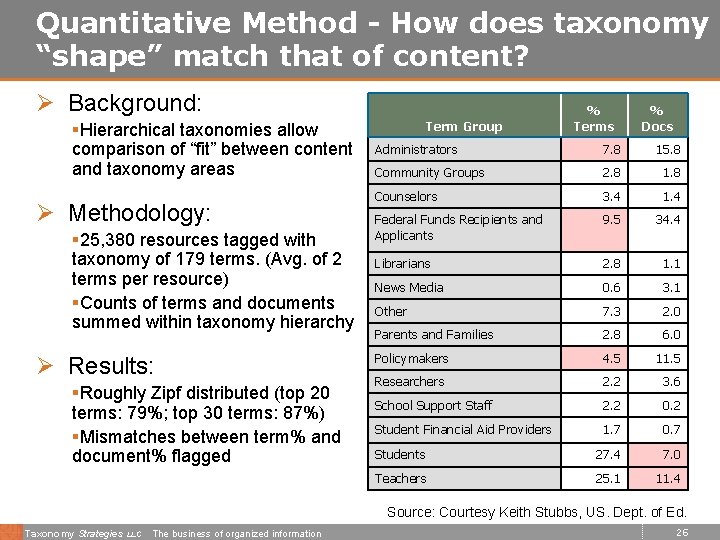

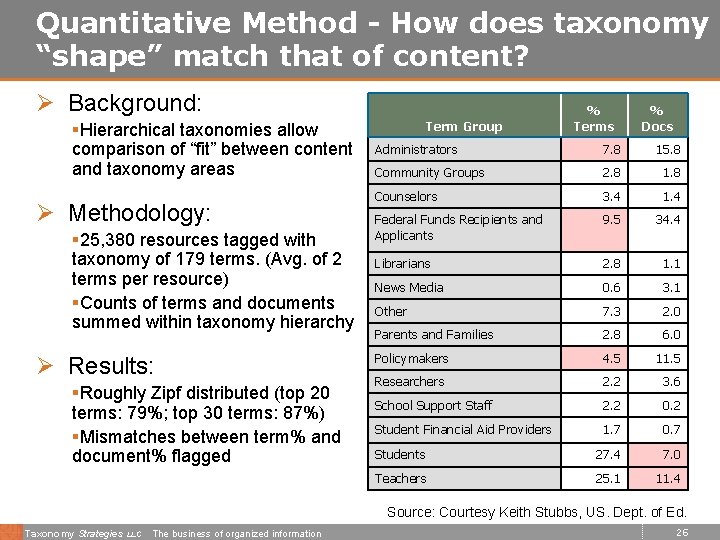

Quantitative Method: How does taxonomy “shape” match that of content?

- Background:
  - Hierarchical taxonomies allow comparison of “fit” between content and taxonomy areas
- Methodology:
  - 25,380 resources tagged with a taxonomy of 179 terms (avg. of 2 terms per resource)
  - Counts of terms and documents summed within the taxonomy hierarchy
- Results:
  - Roughly Zipf distributed (top 20 terms: 79%; top 30 terms: 87%)
  - Mismatches between term % and document % flagged

| Term Group | % Terms | % Docs |
|---|---|---|
| Administrators | 7.8 | 15.8 |
| Community Groups | 2.8 | 1.8 |
| Counselors | 3.4 | 1.4 |
| Federal Funds Recipients and Applicants | 9.5 | 34.4 |
| Librarians | 2.8 | 1.1 |
| News Media | 0.6 | 3.1 |
| Other | 7.3 | 2.0 |
| Parents and Families | 2.8 | 6.0 |
| Policymakers | 4.5 | 11.5 |
| Researchers | 2.2 | 3.6 |
| School Support Staff | 2.2 | 0.2 |
| Student Financial Aid Providers | 1.7 | 0.7 |
| Students | 27.4 | 7.0 |
| Teachers | 25.1 | 11.4 |

Source: Courtesy Keith Stubbs, U.S. Dept. of Ed.
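The term-versus-document mismatch can be flagged by comparing each group's share of documents with its share of terms. This Python sketch uses the percentages from the slide; the 3× cut-off is a hypothetical choice, and the flagged list changes with it. Reading "more documents than terms" as a sign the area needs more terms, and the reverse as a sign it may be over-built, is our interpretation.

```python
from typing import Dict, List, Tuple

# (% of terms, % of documents) per term group, copied from the slide.
TERM_GROUPS: Dict[str, Tuple[float, float]] = {
    "Administrators": (7.8, 15.8),
    "Community Groups": (2.8, 1.8),
    "Counselors": (3.4, 1.4),
    "Federal Funds Recipients and Applicants": (9.5, 34.4),
    "Librarians": (2.8, 1.1),
    "News Media": (0.6, 3.1),
    "Other": (7.3, 2.0),
    "Parents and Families": (2.8, 6.0),
    "Policymakers": (4.5, 11.5),
    "Researchers": (2.2, 3.6),
    "School Support Staff": (2.2, 0.2),
    "Student Financial Aid Providers": (1.7, 0.7),
    "Students": (27.4, 7.0),
    "Teachers": (25.1, 11.4),
}


def flag_mismatches(groups: Dict[str, Tuple[float, float]],
                    factor: float = 3.0) -> Tuple[List[str], List[str]]:
    """Return (groups with far more documents than terms, groups with far more terms than documents).

    A group is flagged when its document share is at least `factor` times its
    term share, or at most 1/factor of it. The factor of 3 is hypothetical.
    """
    over, under = [], []
    for name, (pct_terms, pct_docs) in groups.items():
        ratio = pct_docs / pct_terms
        if ratio >= factor:
            over.append(name)
        elif ratio <= 1 / factor:
            under.append(name)
    return over, under
```

With a factor of 3, "Federal Funds Recipients and Applicants" (9.5% of terms, 34.4% of documents) is flagged as under-described, while "Students" (27.4% of terms, 7.0% of documents) is flagged as carrying more vocabulary than content.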

Conclusion

- Simple walkthroughs are only the start of how to test a taxonomy.
- Tagging modest amounts of content, and usability tests such as task-based card sorts, provide strong information about problems within the taxonomy.
  - Caveat: They may tell you which headings need to be changed, but they won’t tell you what to change them to.
- Taxonomy changes do not stand alone:
  - Search system improvements
  - Navigation improvements
  - Content improvements
  - Process improvements

Questions? Ron Daniel, Jr., rdaniel@taxonomystrategies.com, http://www.taxonomystrategies.com

Bibliography

- K. Yee, K. Swearingen, K. Li, M. Hearst. "Searching and organizing: Faceted metadata for image search and browsing." Proceedings of the Conference on Human Factors in Computing Systems (April 2003). http://bailando.sims.berkeley.edu/papers/flamenco-chi03.pdf
- R. Daniel and J. Busch. "Benchmarking Your Search Function: A Maturity Model." http://www.taxonomystrategies.com/presentations/maturity-2005-0517%28as-presented%29.ppt
- D. Maurer. "Card-Based Classification Evaluation." Boxes and Arrows, April 7, 2003. http://www.boxesandarrows.com/view/card_based_classification_evaluation