Software Project Management 4th Edition Chapter 5

Software Project Management 4th Edition, Chapter 5: Software effort estimation. ©The McGraw-Hill Companies, 2005

What makes a successful project?
Delivering:
• agreed functionality
• on time
• at the agreed cost
• with the required quality
Stages:
1. Set targets
2. Attempt to achieve targets
BUT what if the targets are not achievable?

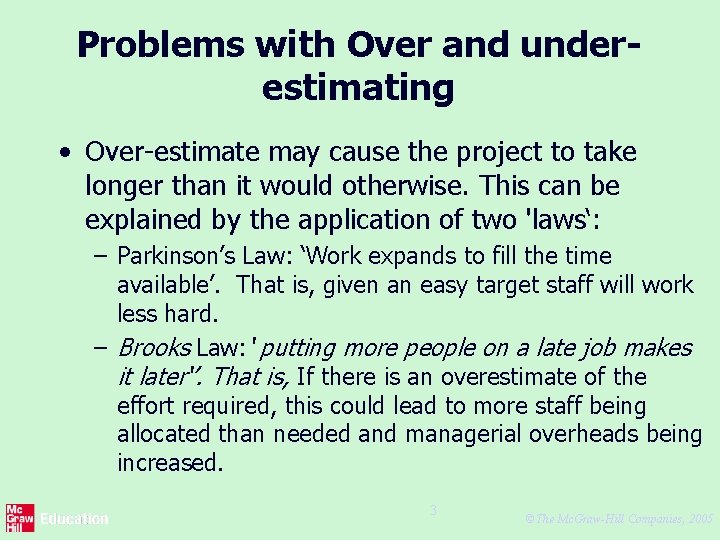

Problems with over- and under-estimating
• An over-estimate may cause the project to take longer than it would otherwise. This can be explained by the application of two 'laws':
– Parkinson's Law: 'Work expands to fill the time available'. That is, given an easy target, staff will work less hard.
– Brooks' Law: 'Putting more people on a late job makes it later'. That is, if there is an over-estimate of the effort required, this could lead to more staff being allocated than needed and managerial overheads being increased.

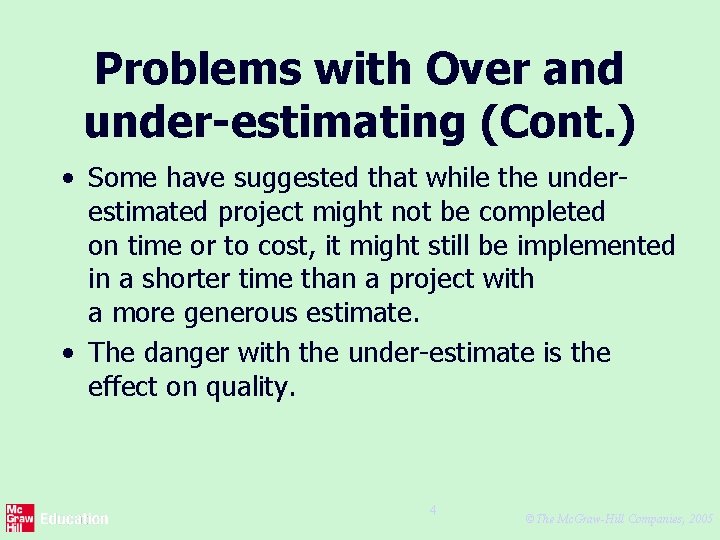

Problems with over- and under-estimating (cont.)
• Some have suggested that while the under-estimated project might not be completed on time or to cost, it might still be implemented in a shorter time than a project with a more generous estimate.
• The danger with the under-estimate is the effect on quality.

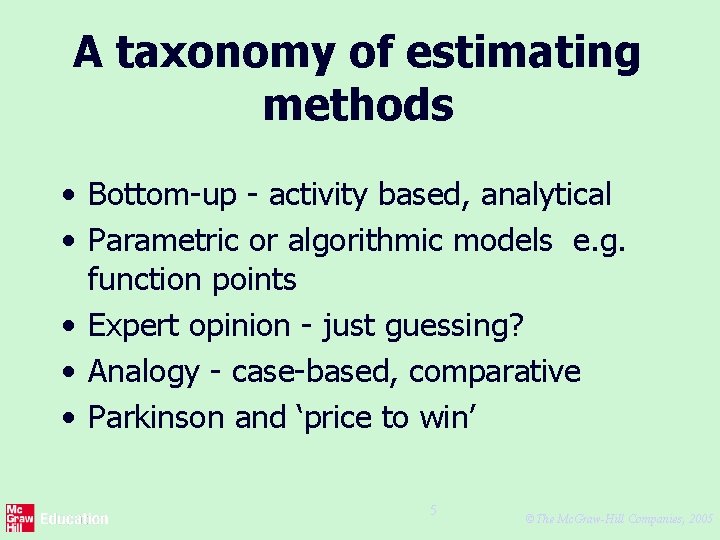

A taxonomy of estimating methods
• Bottom-up: activity-based, analytical
• Parametric or algorithmic models, e.g. function points
• Expert opinion: just guessing?
• Analogy: case-based, comparative
• Parkinson and 'price to win'

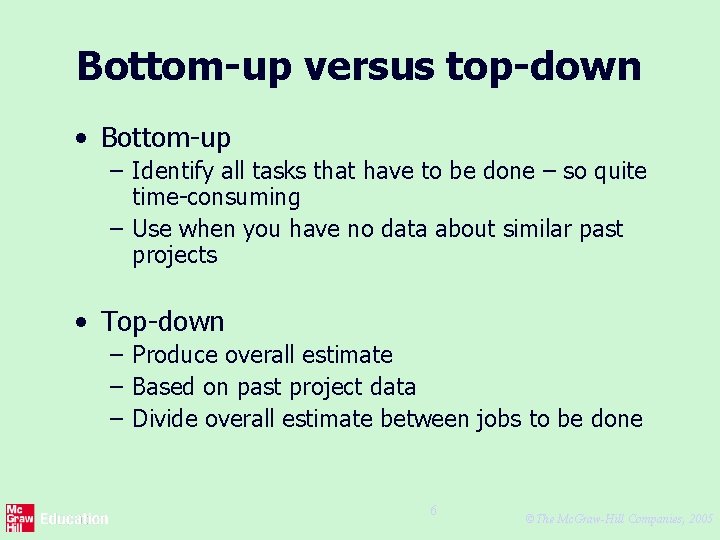

Bottom-up versus top-down
• Bottom-up
– Identify all tasks that have to be done, so quite time-consuming
– Use when you have no data about similar past projects
• Top-down
– Produce overall estimate
– Based on past project data
– Divide overall estimate between jobs to be done

Bottom-up estimating
1. Break project into smaller and smaller components
2. Stop when you get to what one person can do in one/two weeks
3. Estimate costs for the lowest level activities
4. At each higher level calculate estimate by adding estimates for lower levels
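
The four steps above can be sketched as a recursive sum over a work breakdown structure. This is a minimal illustration only: the `Activity` class, the activity names and every effort figure are hypothetical and do not come from the slides.

```python
from dataclasses import dataclass, field


@dataclass
class Activity:
    """A node in a work breakdown structure (hypothetical structure for illustration)."""
    name: str
    effort_days: float = 0.0  # only meaningful for lowest-level activities
    children: list["Activity"] = field(default_factory=list)


def bottom_up_estimate(activity: Activity) -> float:
    """Lowest level: return its own estimate. Higher levels: add the lower-level estimates."""
    if not activity.children:
        return activity.effort_days
    return sum(bottom_up_estimate(child) for child in activity.children)


# Illustrative figures only; each leaf is small enough for one person in one/two weeks.
project = Activity("project", children=[
    Activity("design", children=[
        Activity("data design", 5),
        Activity("screen design", 8),
    ]),
    Activity("code", children=[
        Activity("module A", 10),
        Activity("module B", 7),
    ]),
    Activity("test", 6),
])

total_days = bottom_up_estimate(project)
print(f"Bottom-up estimate: {total_days} days")
```

Only the leaves carry estimates; every higher-level figure is derived, which mirrors step 4.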

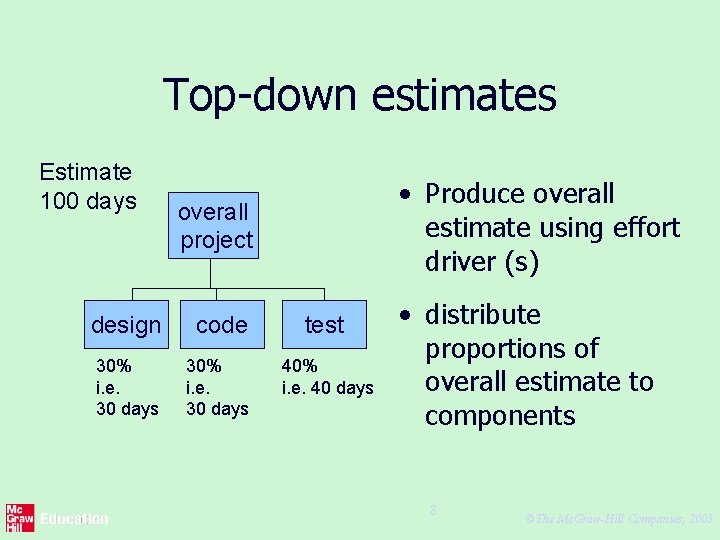

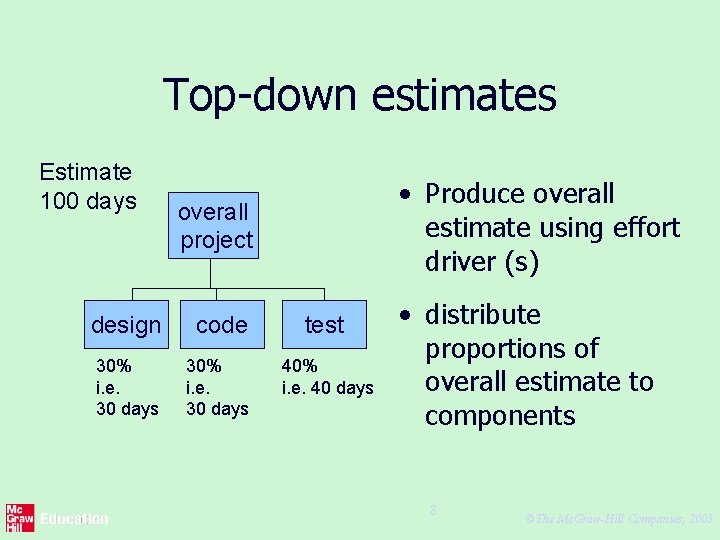

Top-down estimates
• Produce overall estimate using effort driver(s)
• Distribute proportions of overall estimate to components
• Example: overall project estimate of 100 days
– design: 30%, i.e. 30 days
– code: 30%, i.e. 30 days
– test: 40%, i.e. 40 days
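
A minimal sketch of the distribution step follows. The function name `top_down_split` is hypothetical, and the split used here (design 30%, code 30%, test 40% of a 100-day overall estimate) is an assumption for illustration.

```python
def top_down_split(overall_days: float, percentages: dict[str, int]) -> dict[str, float]:
    """Distribute an overall estimate to components according to percentage shares."""
    if sum(percentages.values()) != 100:
        raise ValueError("component percentages must add up to 100")
    return {name: overall_days * pct / 100 for name, pct in percentages.items()}


# Assumed shares for illustration.
shares = {"design": 30, "code": 30, "test": 40}
allocation = top_down_split(100, shares)

for component, days in allocation.items():
    print(f"{component}: {days} days")
```

Checking that the shares add up to 100 guards against an allocation that silently loses or invents effort.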

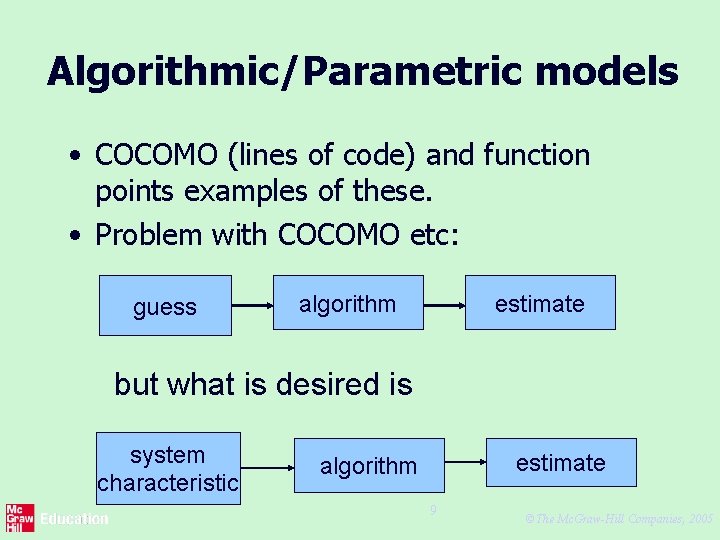

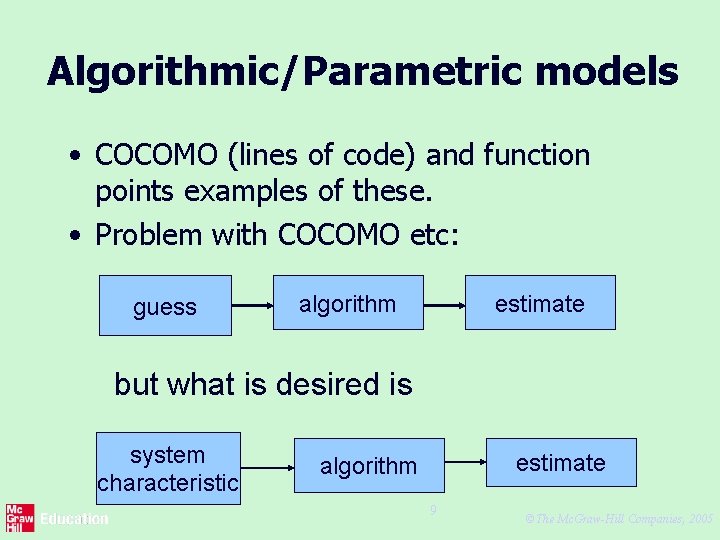

Algorithmic/Parametric models
• COCOMO (lines of code) and function points are examples of these
• Problem with COCOMO etc.: guess → algorithm → estimate
• What is desired: system characteristic → algorithm → estimate

Parametric models (continued)
• Examples of system characteristics
– number of screens × 4 hours
– number of reports × 2 days
– number of entity types × 2 days
• The quantitative relationship between the input and output products of a process can be used as the basis of a parametric model
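
The three example rates can be combined into a small parametric model, as sketched below. The rates are the ones on the slide. The 8-hour working day used to convert hours into days and the counts of screens, reports and entity types are assumptions made only for this illustration.

```python
HOURS_PER_DAY = 8  # assumption: the slides do not define the length of a working day

# Effort per unit of each system characteristic, converted to hours.
RATES_HOURS = {
    "screens": 4,                       # 4 hours per screen
    "reports": 2 * HOURS_PER_DAY,       # 2 days per report
    "entity_types": 2 * HOURS_PER_DAY,  # 2 days per entity type
}


def parametric_effort_days(counts: dict[str, int]) -> float:
    """Multiply each characteristic count by its rate and total the result in days."""
    total_hours = sum(RATES_HOURS[name] * n for name, n in counts.items())
    return total_hours / HOURS_PER_DAY


# Hypothetical system: 10 screens, 5 reports, 4 entity types.
counts = {"screens": 10, "reports": 5, "entity_types": 4}
effort_days = parametric_effort_days(counts)
print(f"Estimated effort: {effort_days} days")
```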

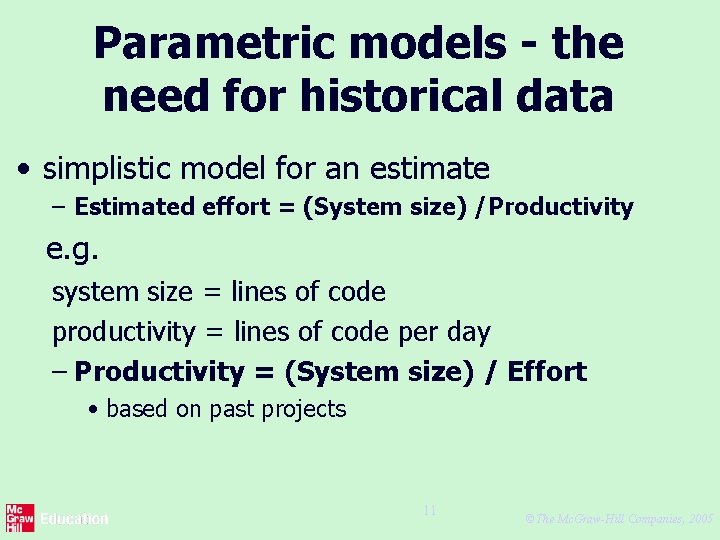

Parametric models: the need for historical data
• Simplistic model for an estimate
– Estimated effort = (system size) / productivity
– e.g. system size = lines of code; productivity = lines of code per day
• Productivity = (system size) / effort
– based on past projects
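
A minimal sketch of how the two formulas fit together: productivity is derived from past projects and then applied to the size of the new one. All project sizes and efforts below are hypothetical, and pooling the past projects (total size over total effort) is one possible choice rather than something the slides prescribe.

```python
def productivity(size_loc: float, effort_days: float) -> float:
    """Productivity = system size / effort, in lines of code per day."""
    return size_loc / effort_days


def estimated_effort(size_loc: float, loc_per_day: float) -> float:
    """Estimated effort = system size / productivity, in days."""
    return size_loc / loc_per_day


# Hypothetical historical data: (lines of code, effort in days) per past project.
past_projects = [(10_000, 250), (6_000, 120)]

total_size = sum(size for size, _ in past_projects)
total_effort = sum(effort for _, effort in past_projects)
rate = productivity(total_size, total_effort)

new_effort = estimated_effort(8_000, rate)  # hypothetical new system of 8,000 lines
print(f"Productivity: {rate:.1f} LOC/day, estimate: {new_effort:.0f} days")
```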

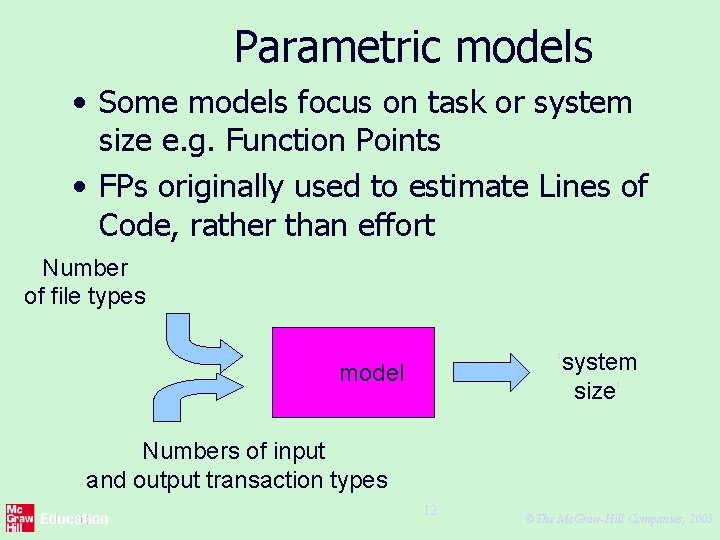

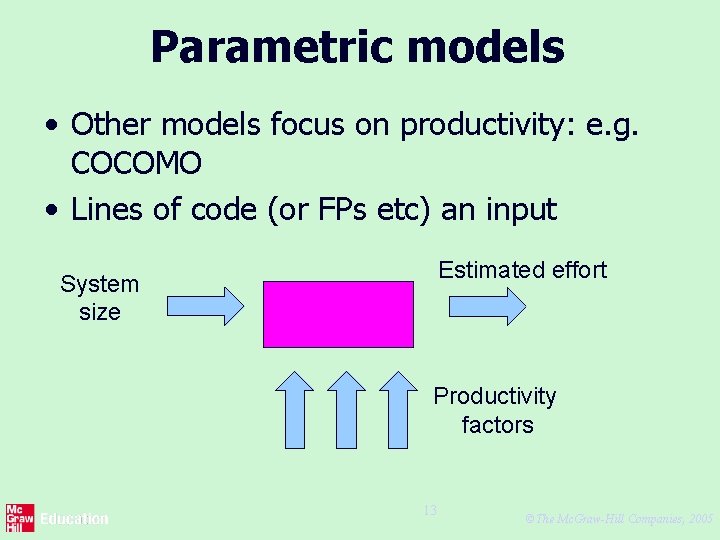

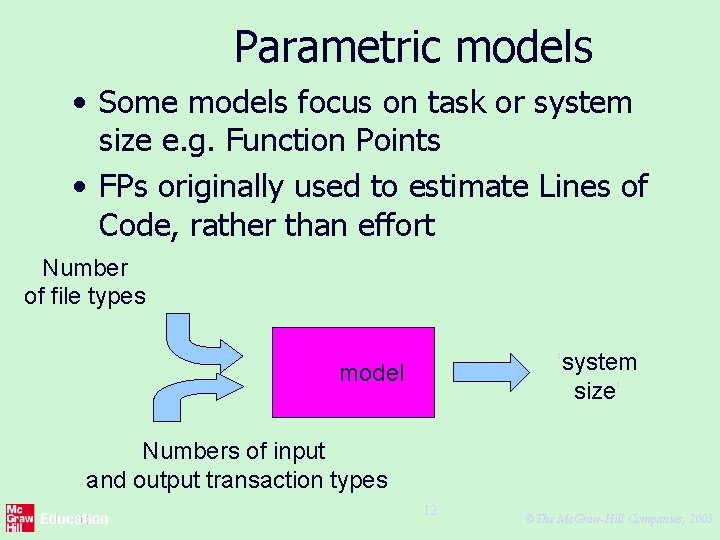

Parametric models
• Some models focus on task or system size, e.g. Function Points
• FPs were originally used to estimate lines of code, rather than effort
• Inputs to the 'system size' model: number of file types; numbers of input and output transaction types

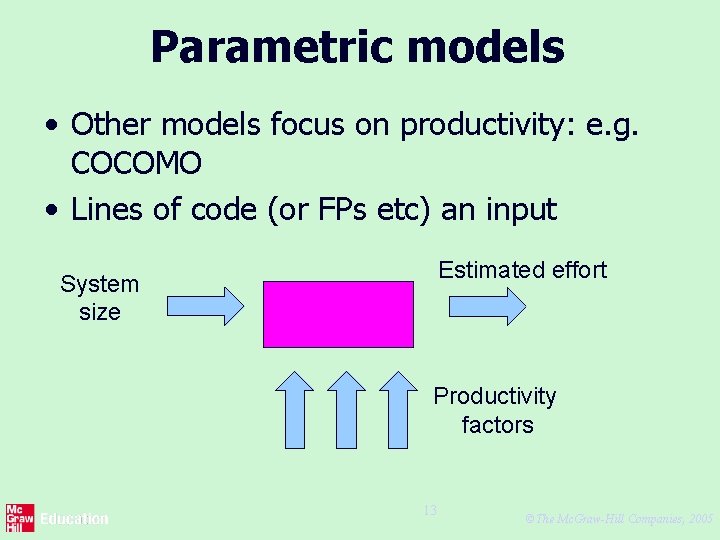

Parametric models
• Other models focus on productivity, e.g. COCOMO
• Lines of code (or FPs etc.) are an input
• System size + productivity factors → estimated effort

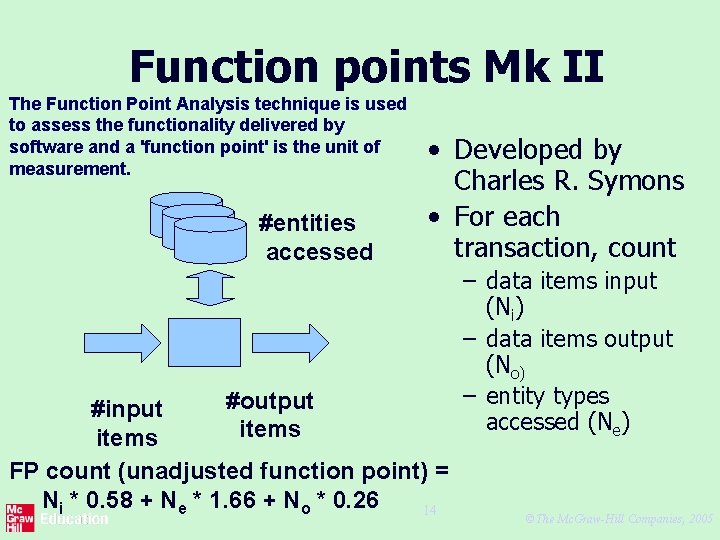

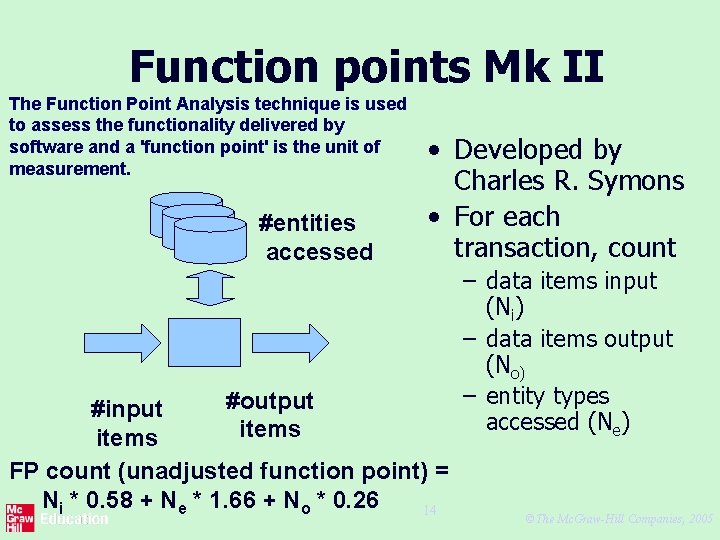

Function points Mk II
The Function Point Analysis technique is used to assess the functionality delivered by software, and a 'function point' is the unit of measurement.
• Developed by Charles R. Symons
• For each transaction, count
– data items input (Ni)
– data items output (No)
– entity types accessed (Ne)
• FP count (unadjusted function points) = Ni × 0.58 + Ne × 1.66 + No × 0.26
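
The formula can be sketched as a small function that is applied per transaction and summed over a system. The weights are those on the slide. The function name and the transaction counts are hypothetical.

```python
# Weights from the slide for the unadjusted Mark II function point count.
W_INPUT, W_ENTITY, W_OUTPUT = 0.58, 1.66, 0.26


def mk2_unadjusted_fp(n_inputs: int, n_entities: int, n_outputs: int) -> float:
    """Unadjusted FP count for one transaction: Ni*0.58 + Ne*1.66 + No*0.26."""
    return n_inputs * W_INPUT + n_entities * W_ENTITY + n_outputs * W_OUTPUT


# Hypothetical transactions as (Ni, Ne, No) triples.
transactions = [(5, 3, 10), (2, 1, 4)]
per_transaction = [mk2_unadjusted_fp(ni, ne, no) for ni, ne, no in transactions]
system_ufp = sum(per_transaction)

print([round(fp, 2) for fp in per_transaction], round(system_ufp, 2))
```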

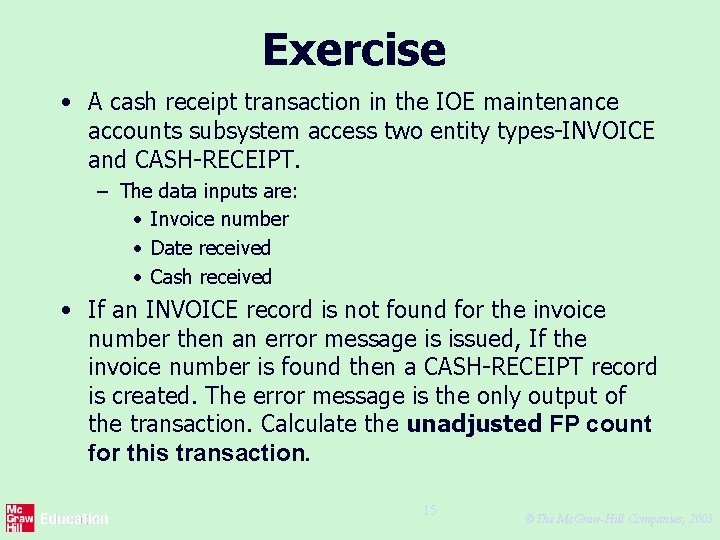

Exercise
• A cash receipt transaction in the IOE maintenance accounts subsystem accesses two entity types: INVOICE and CASH-RECEIPT.
• The data inputs are:
– Invoice number
– Date received
– Cash received
• If an INVOICE record is not found for the invoice number, then an error message is issued. If the invoice number is found, then a CASH-RECEIPT record is created. The error message is the only output of the transaction.
• Calculate the unadjusted FP count for this transaction.
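
One way to work the exercise with the Mark II formula is shown below. It takes the three listed data inputs as $N_i = 3$ and the two entity types as $N_e = 2$. Counting the error message as a single output data item ($N_o = 1$) is an assumption.

```latex
\begin{align*}
\text{UFP} &= N_i \times 0.58 + N_e \times 1.66 + N_o \times 0.26 \\
           &= 3 \times 0.58 + 2 \times 1.66 + 1 \times 0.26 \\
           &= 1.74 + 3.32 + 0.26 = 5.32
\end{align*}
```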

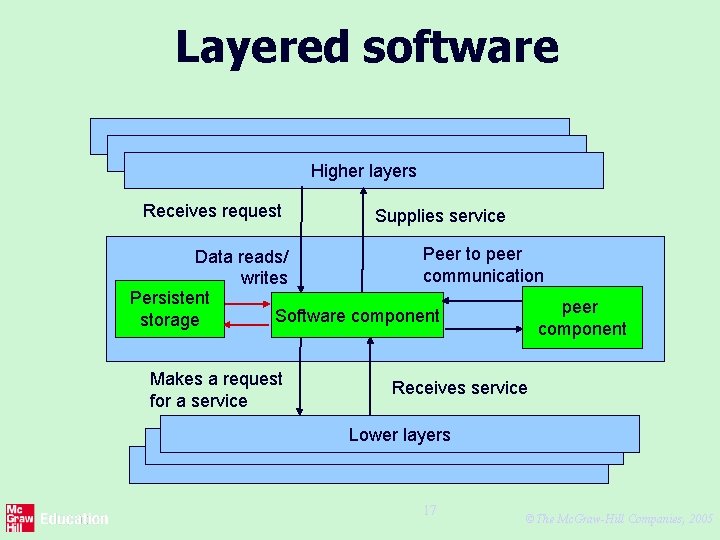

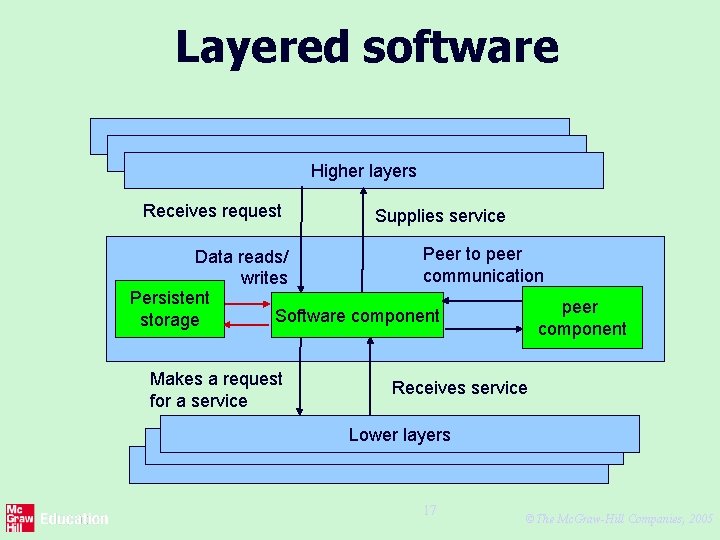

Function points for embedded systems
• Mark II function points were designed for information systems environments
• COSMIC FPs attempt to extend the concept to embedded systems
• Embedded software is seen as being in a particular 'layer' in the system
• It communicates with other layers and also with other components at the same level

Layered software
• Higher layers: the software component receives a request from them and supplies a service
• Peer component: peer-to-peer communication with the software component
• Persistent storage: data reads/writes by the software component
• Lower layers: the software component makes a request for a service and receives the service

COSMIC FPs
The following are counted:
• Entries: movement of data into the software component from a higher layer or a peer component
• Exits: movements of data out
• Reads: data movement from persistent storage
• Writes: data movement to persistent storage
Each counts as 1 'COSMIC functional size unit' (Cfsu)
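
Because every data movement counts as 1 Cfsu, sizing reduces to counting movements. The sketch below assumes the movements of a component have already been identified and labelled. The list of movements is hypothetical.

```python
from collections import Counter

# The four kinds of data movement that are counted.
MOVEMENT_TYPES = {"entry", "exit", "read", "write"}


def cosmic_size(movements: list[str]) -> int:
    """Each entry, exit, read or write counts as 1 Cfsu, so the size is the number of movements."""
    unknown = set(movements) - MOVEMENT_TYPES
    if unknown:
        raise ValueError(f"not a COSMIC data movement type: {sorted(unknown)}")
    return len(movements)


# Hypothetical component: one entry, two reads, one write, one exit.
movements = ["entry", "read", "read", "write", "exit"]
size_cfsu = cosmic_size(movements)
by_type = Counter(movements)

print(f"{size_cfsu} Cfsu", dict(by_type))
```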

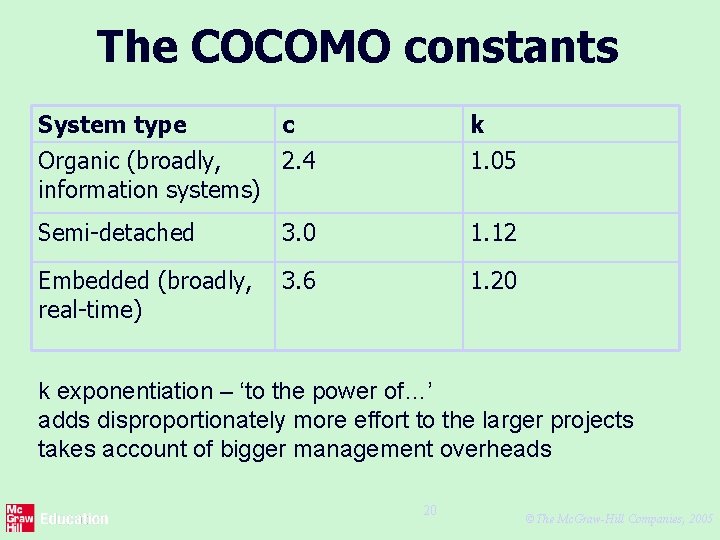

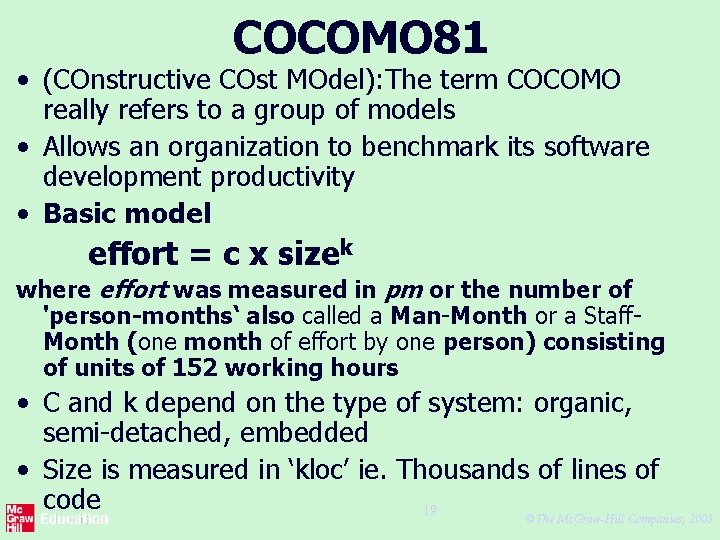

COCOMO 81
• COCOMO (COnstructive COst MOdel): the term really refers to a group of models
• Allows an organization to benchmark its software development productivity
• Basic model: $\text{effort} = c \times \text{size}^{k}$, where effort is measured in pm, the number of 'person-months' (also called man-months or staff-months). One person-month is one month of effort by one person, consisting of 152 working hours
• c and k depend on the type of system: organic, semi-detached, embedded
• Size is measured in 'kloc', i.e. thousands of lines of code

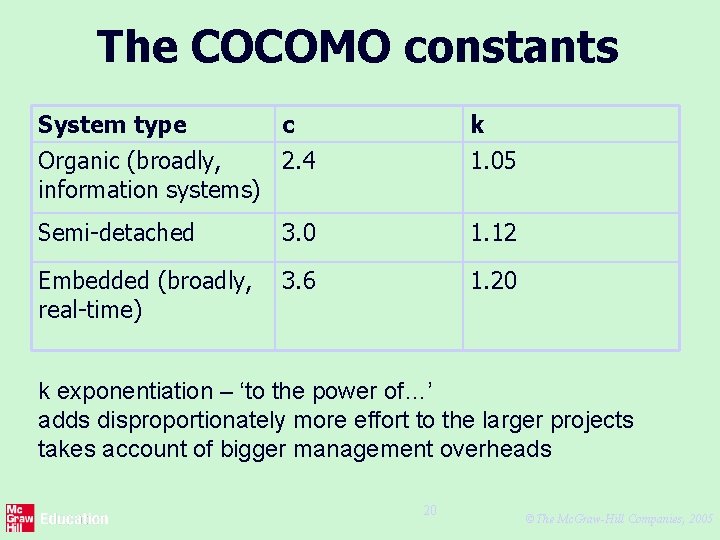

The COCOMO constants

| System type | c | k |
|---|---|---|
| Organic (broadly, information systems) | 2.4 | 1.05 |
| Semi-detached | 3.0 | 1.12 |
| Embedded (broadly, real-time) | 3.6 | 1.20 |

The exponent k ('to the power of…') adds disproportionately more effort to the larger projects, which takes account of bigger management overheads.
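
The basic model and its constants can be sketched as a lookup plus one formula. The function name and the 10 kloc and 100 kloc sizes are hypothetical. They are chosen only to show how the exponent makes effort grow faster than size.

```python
# (c, k) per system type, as given in the constants table.
COCOMO81_CONSTANTS = {
    "organic": (2.4, 1.05),
    "semi-detached": (3.0, 1.12),
    "embedded": (3.6, 1.20),
}


def basic_cocomo_effort(kloc: float, system_type: str) -> float:
    """Basic COCOMO 81: effort (person-months) = c * size^k, with size in kloc."""
    c, k = COCOMO81_CONSTANTS[system_type]
    return c * kloc ** k


small = basic_cocomo_effort(10, "organic")
large = basic_cocomo_effort(100, "organic")

# Ten times the size needs more than ten times the effort because k > 1.
print(f"10 kloc: {small:.1f} pm, 100 kloc: {large:.1f} pm, ratio: {large / small:.2f}")
```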

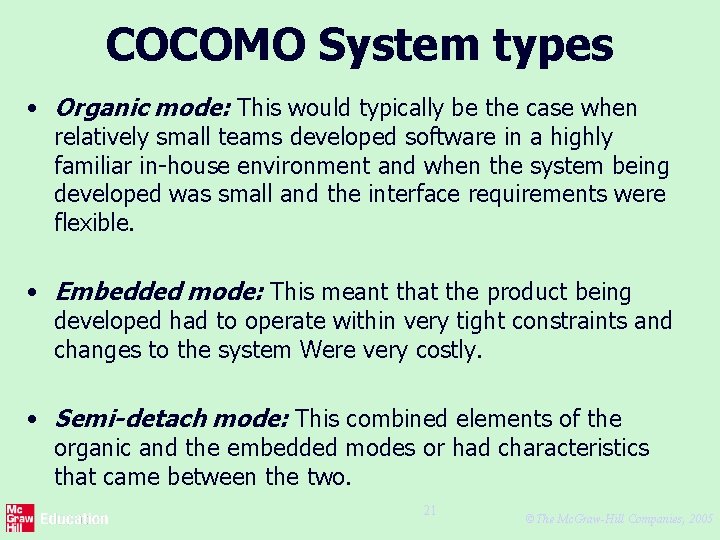

COCOMO system types
• Organic mode: this would typically be the case when relatively small teams developed software in a highly familiar in-house environment, and when the system being developed was small and the interface requirements were flexible.
• Embedded mode: this meant that the product being developed had to operate within very tight constraints and changes to the system were very costly.
• Semi-detached mode: this combined elements of the organic and the embedded modes, or had characteristics that came between the two.

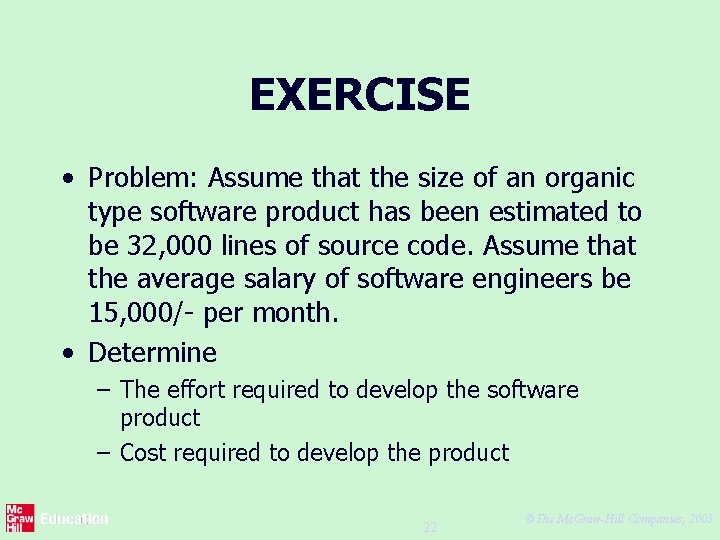

EXERCISE
• Problem: assume that the size of an organic type software product has been estimated to be 32,000 lines of source code. Assume that the average salary of software engineers is 15,000/- per month.
• Determine
– The effort required to develop the software product
– The cost required to develop the product
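
A sketch of a solution using the basic model with the organic constants ($c = 2.4$, $k = 1.05$) and size = 32 kloc. The cost line assumes that cost is simply effort in person-months multiplied by the monthly salary. The slide does not state how cost should be derived, so treat that step as an assumption.

```latex
\begin{align*}
\text{effort} &= 2.4 \times 32^{1.05} \approx 2.4 \times 38.05 \approx 91.3 \text{ pm} \\
\text{cost}   &\approx 91.3 \times 15{,}000 \approx 1{,}370{,}000
\end{align*}
```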

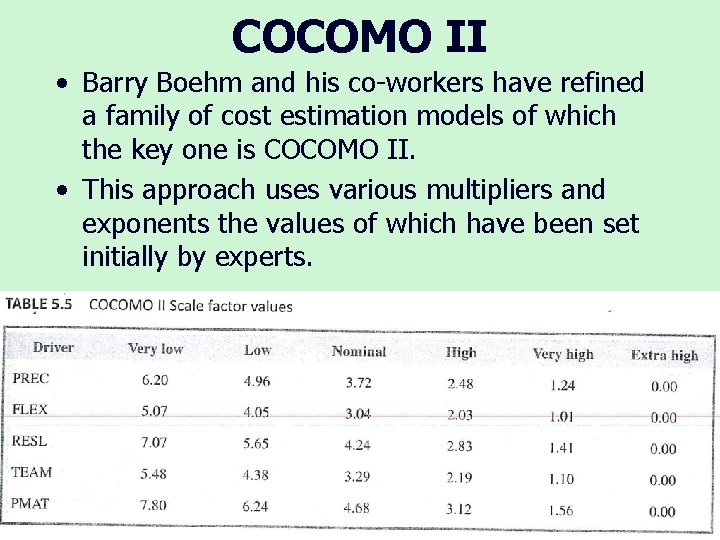

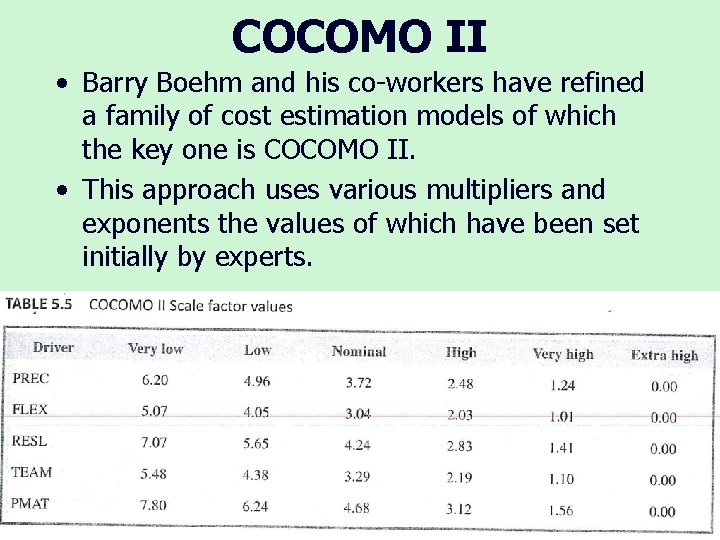

COCOMO II
• Barry Boehm and his co-workers have refined a family of cost estimation models, of which the key one is COCOMO II.
• This approach uses various multipliers and exponents, the values of which have been set initially by experts.

COCOMO II
• Precedentedness (PREC): the degree to which there are precedents or similar past cases for the current project.
• Development flexibility (FLEX): this reflects the number of different ways there are of meeting the requirements.
• Architecture/risk resolution (RESL): this reflects the degree of uncertainty about the requirements.
• Team cohesion (TEAM): this reflects the degree to which there is a large dispersed team (perhaps in several countries) as opposed to there being a small tightly knit team.
• Process maturity (PMAT): the more structured and organized the way the software is produced, the lower the …

COCOMO II
• Each of the scale factors for a project is rated according to a range of judgments: very low, low, nominal, high, very high, extra high.
• There is a number related to each rating of the individual scale factors (see Table 5.5).
• These are summed, then multiplied by 0.01 and added to the constant (B = 0.91) to get the overall exponent scale factor.

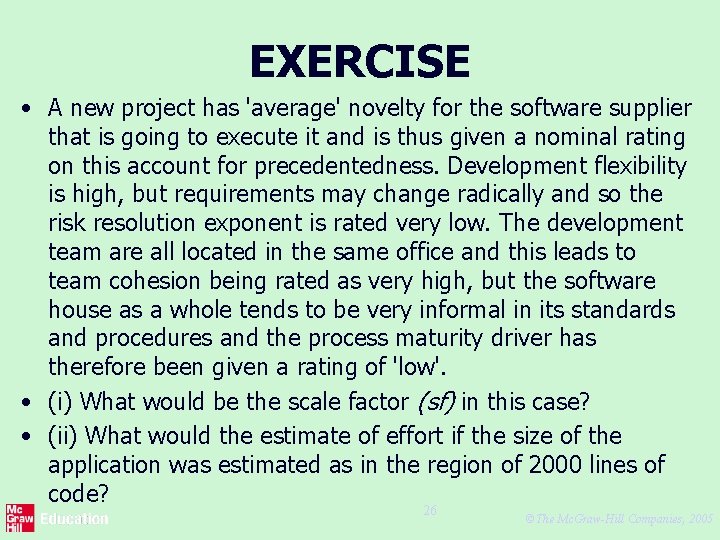

EXERCISE
• A new project has 'average' novelty for the software supplier that is going to execute it and is thus given a nominal rating on this account for precedentedness. Development flexibility is high, but requirements may change radically and so the risk resolution exponent is rated very low. The development team are all located in the same office and this leads to team cohesion being rated as very high, but the software house as a whole tends to be very informal in its standards and procedures and the process maturity driver has therefore been given a rating of 'low'.
• (i) What would be the scale factor (sf) in this case?
• (ii) What would the estimate of effort be if the size of the application was estimated as in the region of 2000 lines of code?

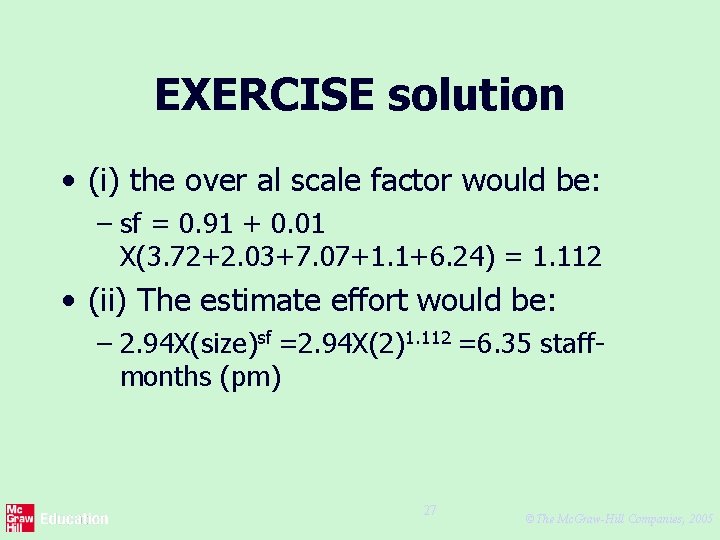

EXERCISE solution
• (i) The overall scale factor would be:
– $sf = 0.91 + 0.01 \times (3.72 + 2.03 + 7.07 + 1.10 + 6.24) = 1.112$
• (ii) The estimated effort would be:
– $2.94 \times (\text{size})^{sf} = 2.94 \times 2^{1.112} = 6.35$ staff-months (pm), with size in kloc
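
The same calculation can be checked with a few lines of code. The constants 2.94 and 0.91 and the five rating values are the ones used in the solution. Pairing each value with a driver follows the order in which the exercise describes them, which is an assumption about how the ratings map to the numbers.

```python
A = 2.94  # multiplier used in the solution
B = 0.91  # constant added to the scaled sum of ratings

# Rating values from the solution, paired with drivers in the order the exercise lists them.
ratings = {
    "PREC": 3.72,  # nominal
    "FLEX": 2.03,  # high
    "RESL": 7.07,  # very low
    "TEAM": 1.10,  # very high
    "PMAT": 6.24,  # low
}

size_kloc = 2.0  # 2000 lines of code

sf = B + 0.01 * sum(ratings.values())
effort_pm = A * size_kloc ** sf

print(f"sf = {sf:.4f}, effort = {effort_pm:.2f} staff-months")
```

The unrounded scale factor is 1.1116. The solution rounds it to 1.112 before exponentiating, and both give an effort of about 6.35 staff-months.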

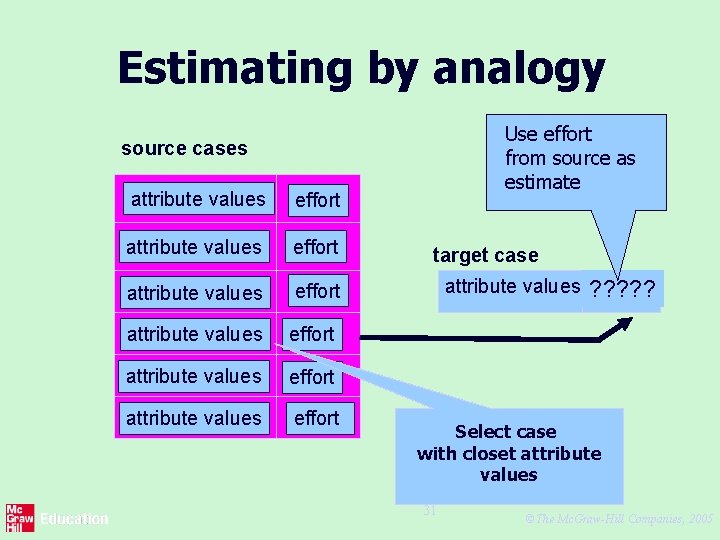

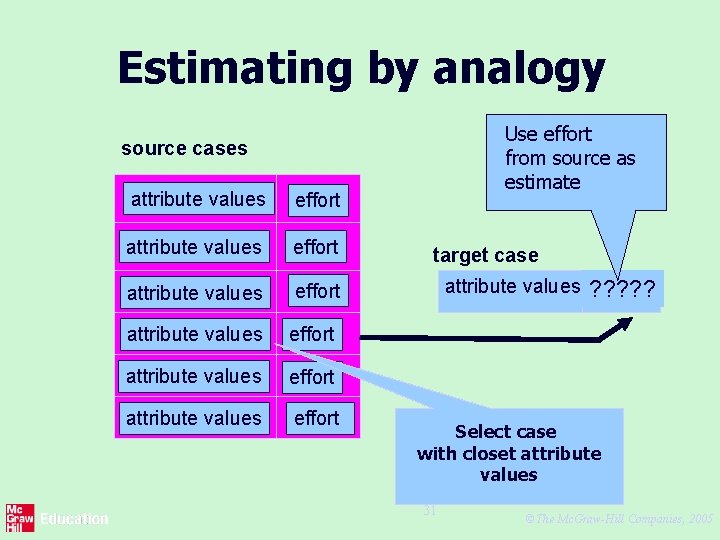

Estimating by analogy
• This is also called case-based reasoning.
• The estimator identifies completed projects (source cases) with similar characteristics to the new project (the target case).
• The effort recorded for the matching source case is then used as a base estimate for the target.
• The estimator then identifies differences between the target and the source and adjusts the base estimate to produce an estimate for the new project.

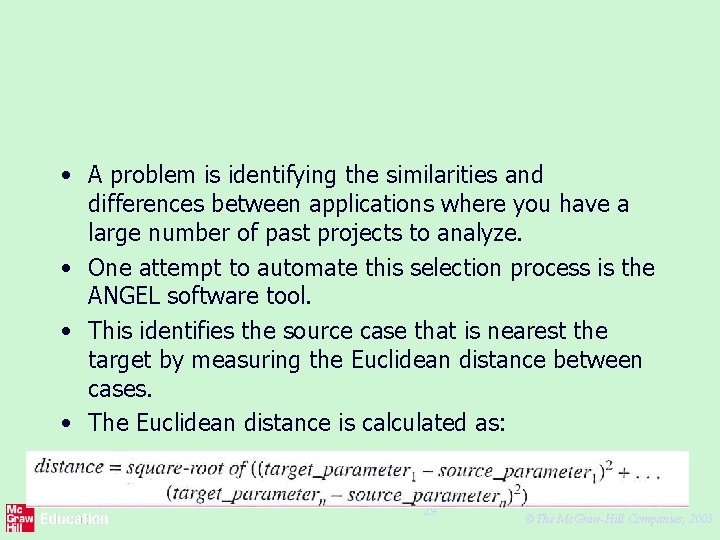

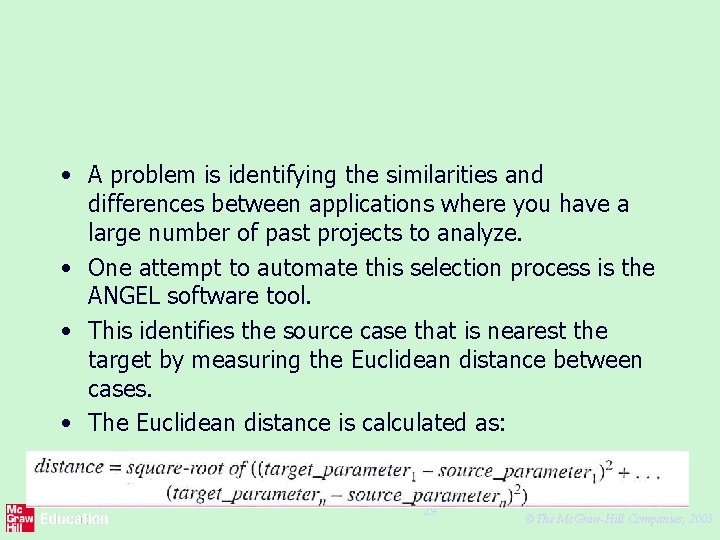

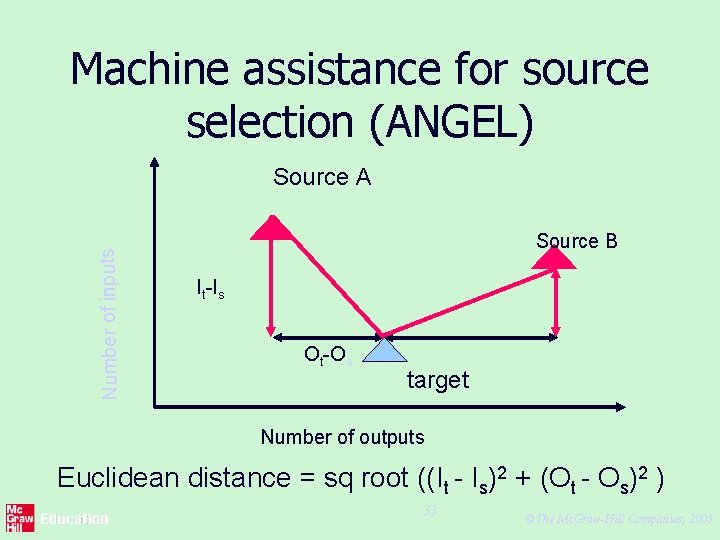

• A problem is identifying the similarities and differences between applications where you have a large number of past projects to analyze.
• One attempt to automate this selection process is the ANGEL software tool.
• This identifies the source case that is nearest the target by measuring the Euclidean distance between cases.
• The Euclidean distance is calculated as:

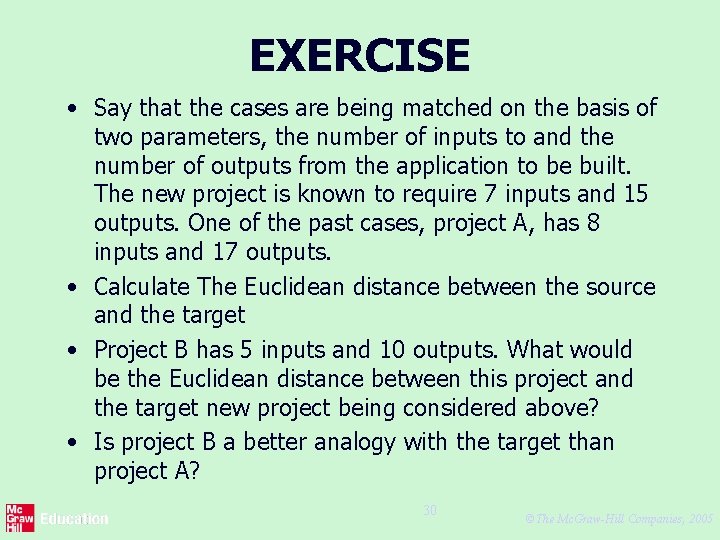

EXERCISE
• Say that the cases are being matched on the basis of two parameters: the number of inputs to and the number of outputs from the application to be built. The new project is known to require 7 inputs and 15 outputs. One of the past cases, project A, has 8 inputs and 17 outputs.
• Calculate the Euclidean distance between the source and the target.
• Project B has 5 inputs and 10 outputs. What would be the Euclidean distance between this project and the target new project being considered above?
• Is project B a better analogy with the target than project A?
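
The exercise can be worked with a short script. The helper name `euclidean_distance` is hypothetical. The input and output counts are the ones given in the exercise.

```python
import math


def euclidean_distance(target: tuple[int, int], source: tuple[int, int]) -> float:
    """Square root of the sum of squared differences in (inputs, outputs)."""
    return math.sqrt(sum((t - s) ** 2 for t, s in zip(target, source)))


target = (7, 15)     # new project: 7 inputs, 15 outputs
project_a = (8, 17)
project_b = (5, 10)

dist_a = euclidean_distance(target, project_a)  # sqrt(1 + 4)
dist_b = euclidean_distance(target, project_b)  # sqrt(4 + 25)

print(f"A: {dist_a:.2f}, B: {dist_b:.2f}")
```

Project A is about 2.24 from the target and project B about 5.39, so on these two parameters A is the closer analogy and B is not a better match.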

Estimating by analogy
• Source cases: attribute values and effort are known
• Target case: attribute values are known, effort is unknown (???)
• Select the case with the closest attribute values
• Use effort from source as estimate
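
The selection step can be sketched as a nearest-neighbour lookup. This is an illustration of the idea and not a description of how any particular tool implements it. The attribute values reuse the inputs and outputs from the exercise, while the effort figures for the source cases are hypothetical.

```python
import math


def distance(target: dict[str, float], source: dict[str, float]) -> float:
    """Euclidean distance over the attributes of the target case."""
    return math.sqrt(sum((target[k] - source[k]) ** 2 for k in target))


# Source cases: attribute values plus recorded effort (effort figures are made up).
source_cases = [
    {"name": "A", "attrs": {"inputs": 8, "outputs": 17}, "effort_days": 120},
    {"name": "B", "attrs": {"inputs": 5, "outputs": 10}, "effort_days": 75},
]

# Target case: attribute values known, effort unknown.
target = {"inputs": 7, "outputs": 15}

nearest = min(source_cases, key=lambda case: distance(target, case["attrs"]))
base_estimate = nearest["effort_days"]

print(f"Closest source case: {nearest['name']}, base estimate: {base_estimate} days")
```

The result is only a base estimate. The estimator would still adjust it for the differences between the target and the chosen source.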

Stages: identify
• significant features of the current project
• previous project(s) with similar features
• differences between the current and previous projects
• possible reasons for error (risk)
• measures to reduce uncertainty

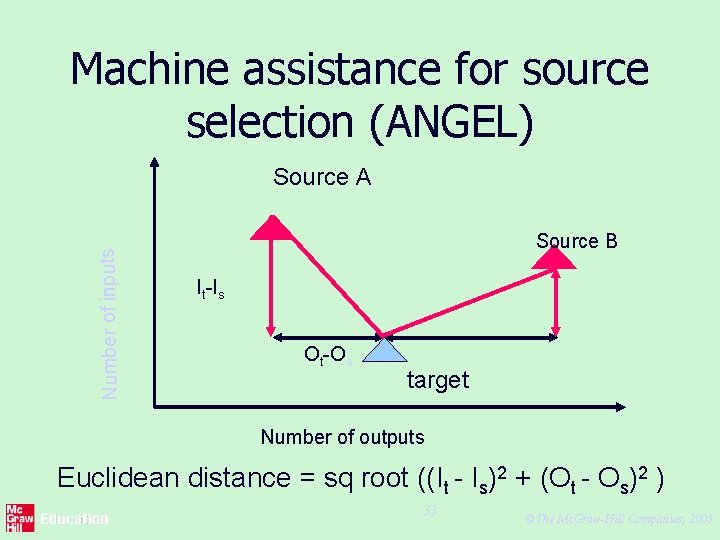

Machine assistance for source selection (ANGEL)
• Figure: Source A, Source B and the target plotted by number of inputs and number of outputs, with the differences $I_t - I_s$ and $O_t - O_s$ marked between target (t) and source (s)
• Euclidean distance = $\sqrt{(I_t - I_s)^2 + (O_t - O_s)^2}$

Some conclusions: how to review estimates
Ask the following questions about an estimate:
• What are the task size drivers?
• What productivity rates have been used?
• Is there an example of a previous project of about the same size?
• Are there examples of where the productivity rates used have actually been found?