Small-footprint Keyword Spotting: C. Y. Eddie Lin, MIR Lab

- Slides: 21

Small-footprint Keyword Spotting
C. Y. Eddie Lin (林傳祐)
MIR Lab, CSIE Dept., National Taiwan University
eddie.lin@mirlab.org
2020/01/13

2022/1/10

Outline
- Introduction
- Dataset
- End-to-end architectures
  - TDNN+SWSA
  - TDNN only
  - Domain Adversarial Training
- Experimental setup and results
- Demo
- Conclusions and future work

Introduction

End-to-end models for small-footprint keyword spotting

Advantages of end-to-end approaches:
1. Directly outputs keyword detection
2. No complicated searching involved
3. No alignments needed beforehand

Small-footprint requirements:
1. Highly accurate
2. Low latency
3. Runs in computationally constrained environments

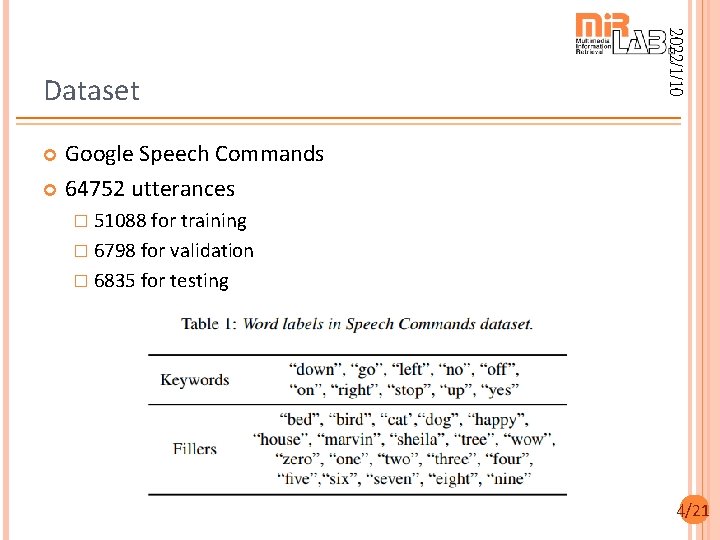

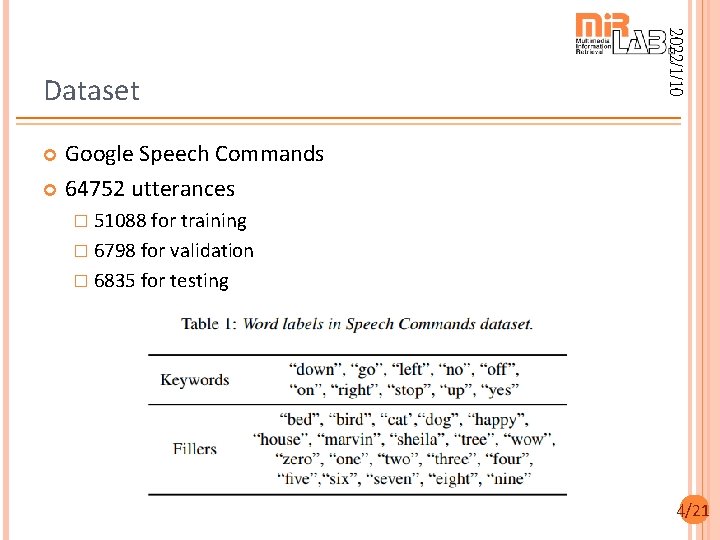

Dataset

Google Speech Commands: 64752 utterances
- 51088 for training
- 6798 for validation
- 6835 for testing

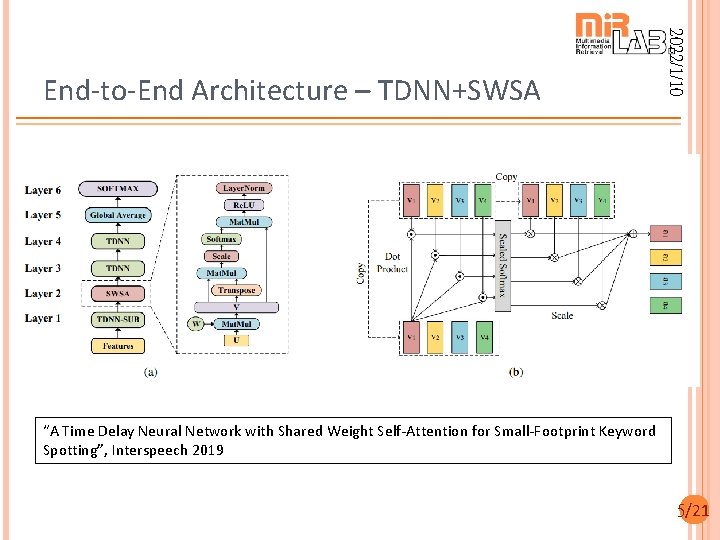

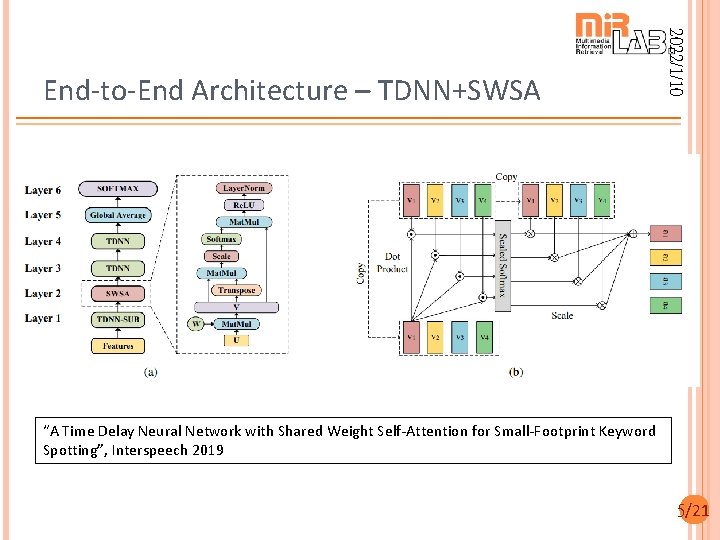
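As a quick check of the split listed on the Dataset slide, the sketch below computes each subset's share of the data. It uses only the three counts from the slide. Note that these counts sum to 64721 rather than the 64752 stated as the total; the slide does not explain the difference.

```python
# Split sizes copied from the Dataset slide.
SPLITS = {"training": 51088, "validation": 6798, "testing": 6835}


def split_fractions(splits):
    """Return each split's share of the summed utterance count."""
    total = sum(splits.values())
    return {name: count / total for name, count in splits.items()}


total = sum(SPLITS.values())
fractions = split_fractions(SPLITS)

for name, frac in fractions.items():
    print(f"{name}: {SPLITS[name]} utterances ({frac:.1%})")
print("sum of splits:", total)
```

The result is roughly an 80/10/10 split between training, validation, and testing.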

End-to-End Architecture – TDNN+SWSA

“A Time Delay Neural Network with Shared Weight Self-Attention for Small-Footprint Keyword Spotting”, Interspeech 2019

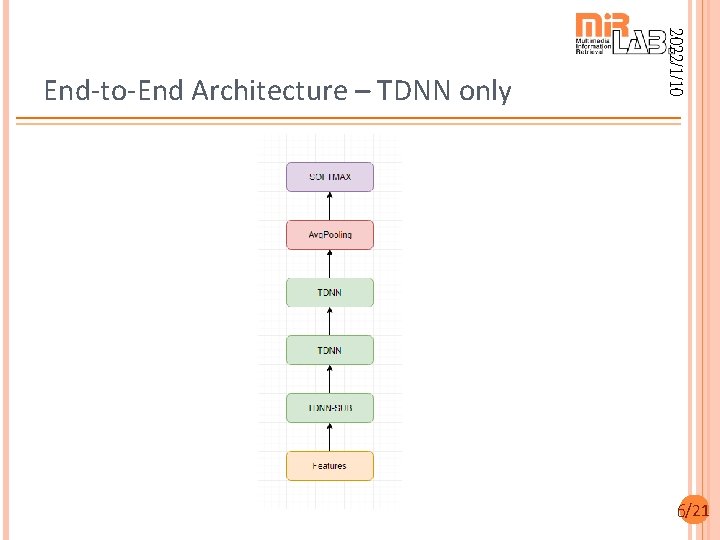

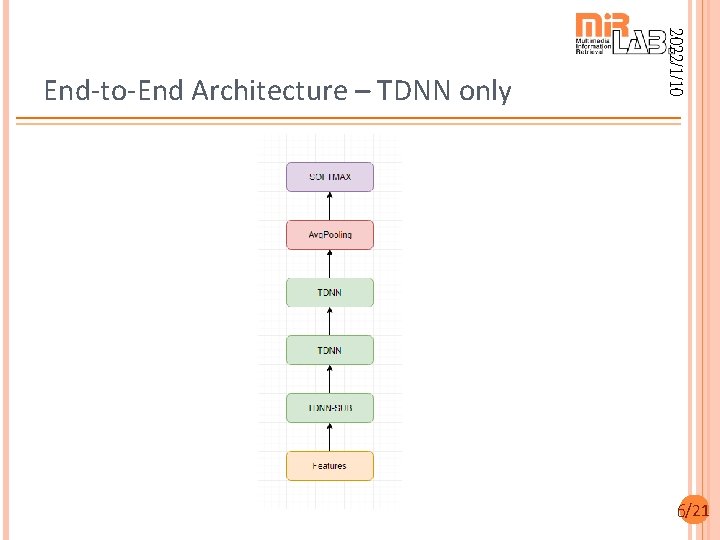

End-to-End Architecture – TDNN only

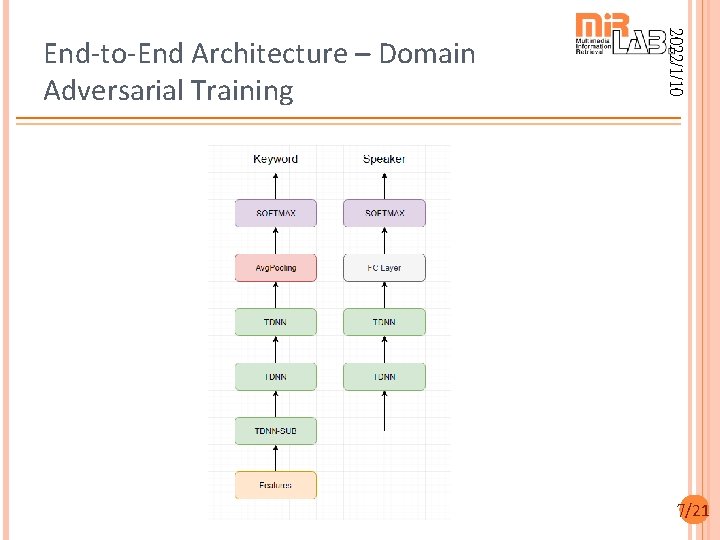

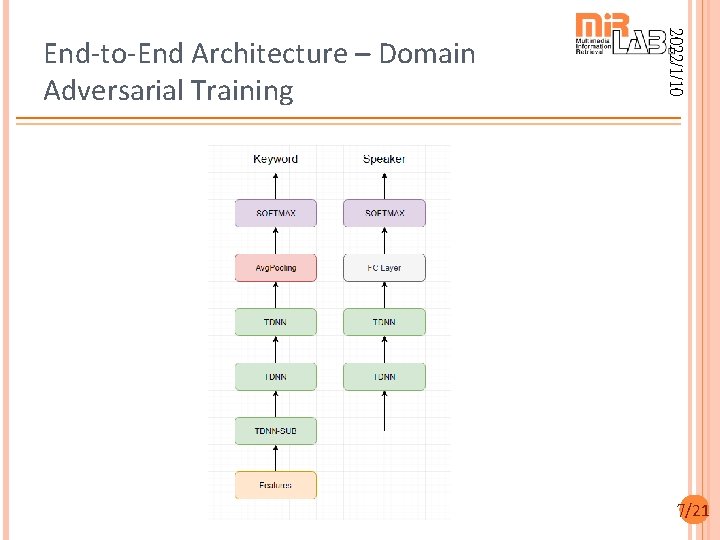

End-to-End Architecture – Domain Adversarial Training

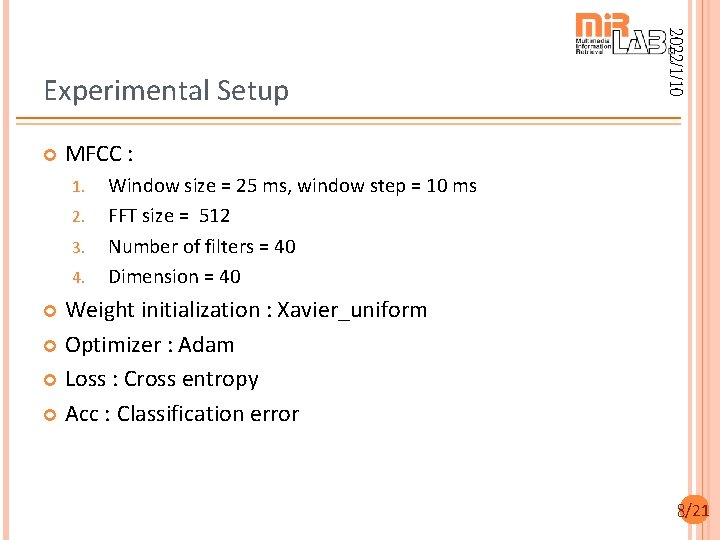

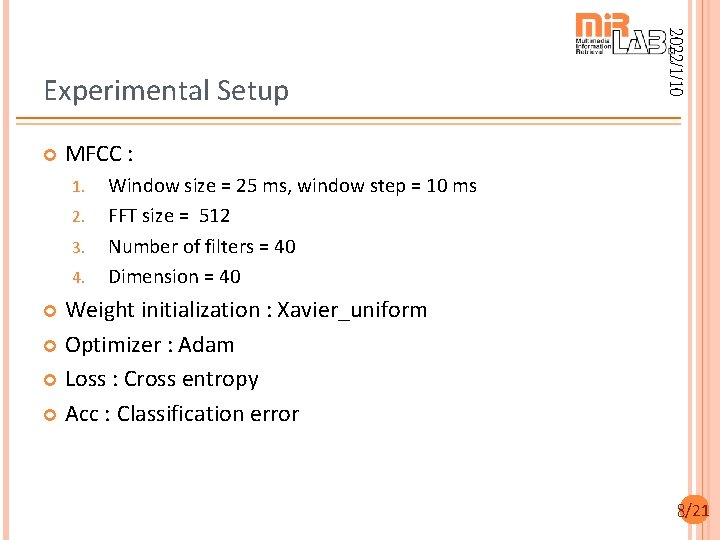

Experimental Setup

MFCC:
1. Window size = 25 ms, window step = 10 ms
2. FFT size = 512
3. Number of filters = 40
4. Dimension = 40

- Weight initialization: Xavier_uniform
- Optimizer: Adam
- Loss: Cross entropy
- Acc: Classification error

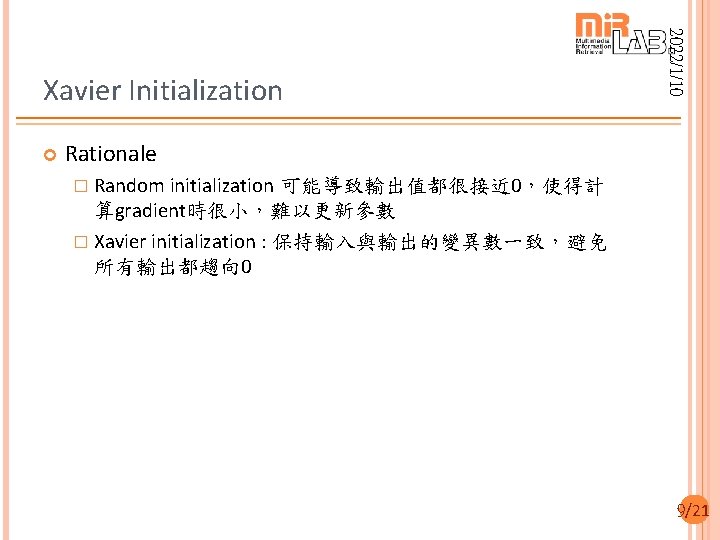
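The framing parameters above determine how many MFCC frames each utterance produces. The sketch below counts frames for a 25 ms window and 10 ms step. Two things are assumptions not stated on the slide: the audio is one second long at 16 kHz, and the last partial frame is zero-padded rather than dropped. Under those assumptions the count is 99, which agrees with the input length l = 99 on the "Number of Parameters" slide.

```python
import math


def num_frames(num_samples, sample_rate, win_ms=25.0, step_ms=10.0):
    """Count analysis frames when the last partial frame is zero-padded."""
    win = int(round(sample_rate * win_ms / 1000))    # samples per window
    step = int(round(sample_rate * step_ms / 1000))  # samples per hop
    if num_samples <= win:
        return 1
    return 1 + math.ceil((num_samples - win) / step)


SAMPLE_RATE = 16000  # assumption: 16 kHz audio, one-second clips
frames = num_frames(num_samples=SAMPLE_RATE, sample_rate=SAMPLE_RATE)
print(frames, "frames x 40 MFCC dimensions")
```

A framing convention that drops the partial frame would give 98 frames instead, so the padding choice matters when reproducing the layer lengths.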

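Xavier Initialization

Rationale
- Random initialization can leave the output values all very close to 0, so the computed gradients are very small and the parameters are hard to update.
- Xavier initialization keeps the variance of the inputs and outputs consistent, which prevents all outputs from tending toward 0.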

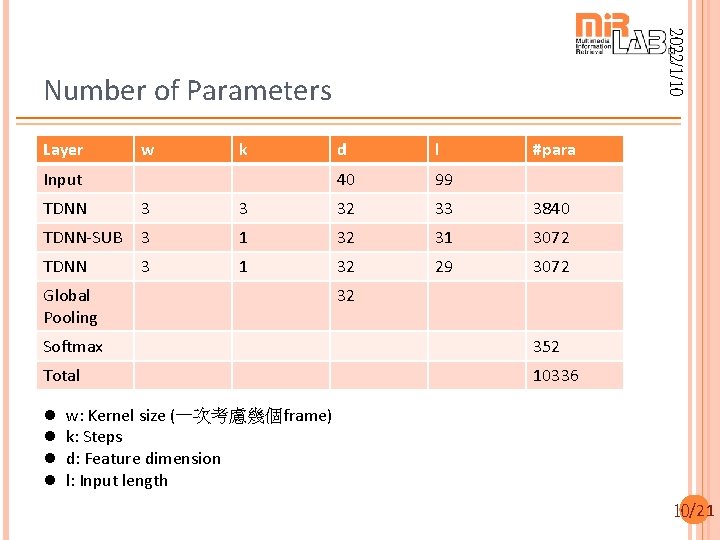

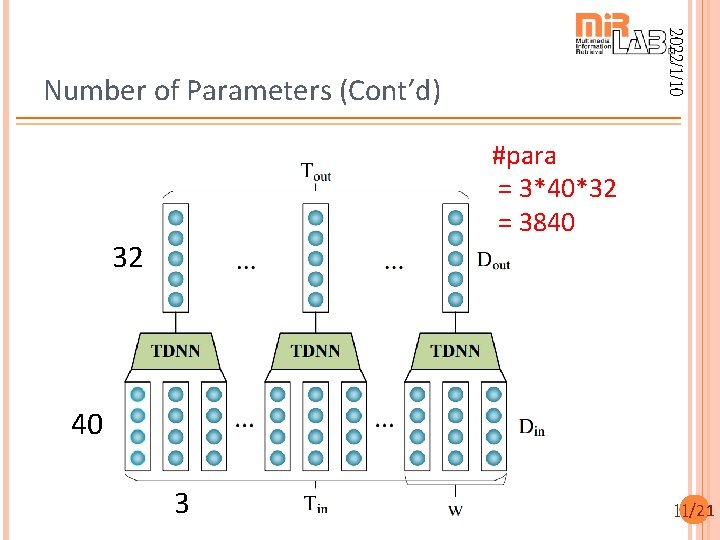

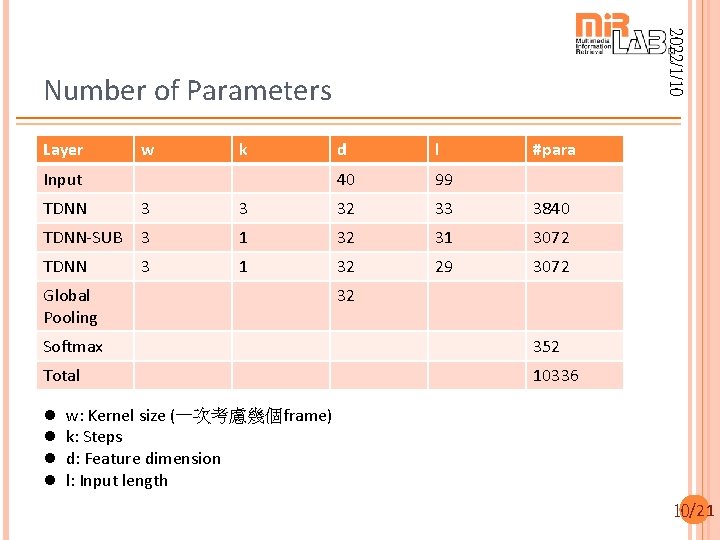
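To make the variance argument concrete, here is a minimal sketch of Xavier uniform initialization using only the Python standard library. Weights are drawn from U(-a, a) with a = sqrt(6 / (fan_in + fan_out)), which gives a variance of 2 / (fan_in + fan_out). The layer sizes and the helper names `xavier_uniform` and `variance` are illustrative assumptions, not taken from the slides.

```python
import math
import random


def xavier_uniform(fan_in, fan_out, rng):
    """Sample a fan_out x fan_in matrix from U(-a, a), a = sqrt(6 / (fan_in + fan_out))."""
    bound = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-bound, bound) for _ in range(fan_in)]
            for _ in range(fan_out)]


def variance(values):
    """Population variance of a flat list of numbers."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


rng = random.Random(0)
fan_in, fan_out = 120, 32  # hypothetical sizes: 3 frames x 40 features in, 32 units out
weights = xavier_uniform(fan_in, fan_out, rng)
flat = [w for row in weights for w in row]

bound = math.sqrt(6.0 / (fan_in + fan_out))
target_var = 2.0 / (fan_in + fan_out)  # variance of U(-a, a) is a^2 / 3
print("empirical variance:", variance(flat))
print("target variance:   ", target_var)
```

Scaling the bound by both fan-in and fan-out is what keeps activations and gradients at a similar scale from layer to layer.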

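Number of Parameters

| Layer | w | k | d | l | #para |
|---|---|---|---|---|---|
| Input | | | 40 | 99 | |
| TDNN | 3 | 3 | 32 | 33 | 3840 |
| TDNN-SUB | 3 | 1 | 32 | 31 | 3072 |
| TDNN | 3 | 1 | 32 | 29 | 3072 |
| Global Pooling | | | 32 | | |
| Softmax | | | | | 352 |
| Total | | | | | 10336 |

- w: Kernel size (number of frames considered at once)
- k: Steps
- d: Feature dimension
- l: Input length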

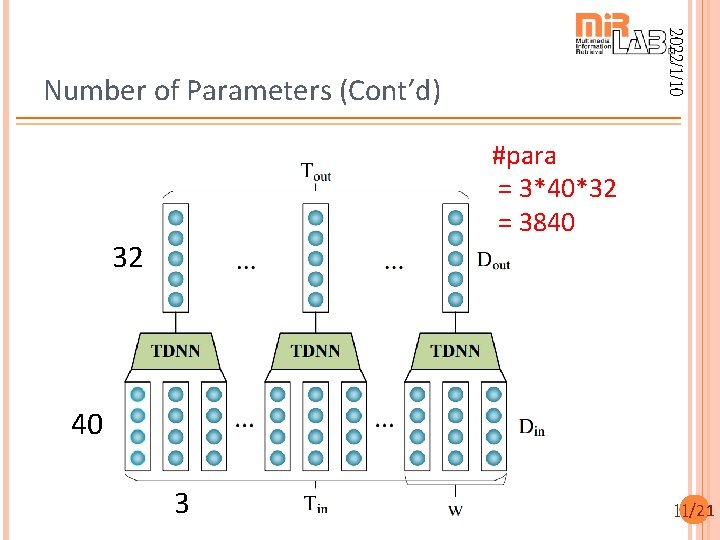

Number of Parameters (Cont’d)

#para = 3 * 40 * 32 = 3840 (kernel size 3, input dimension 40, 32 filters)

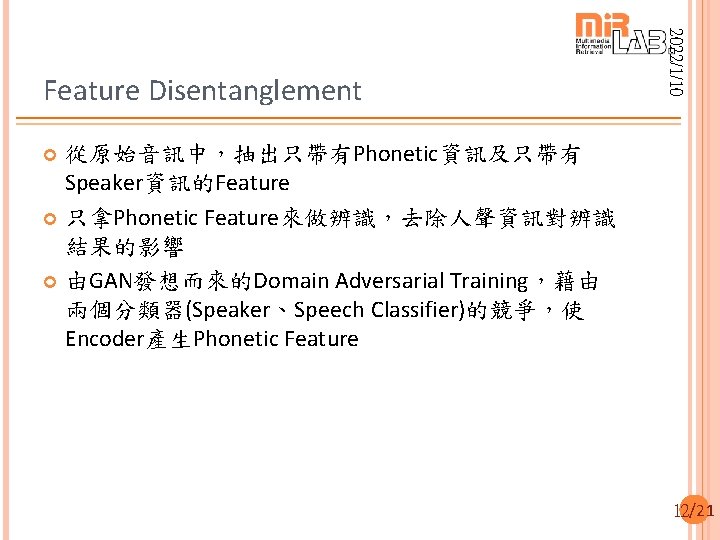

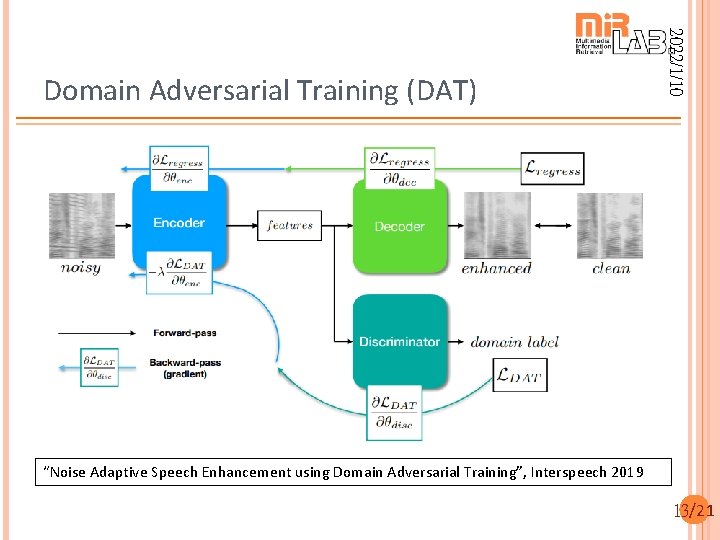

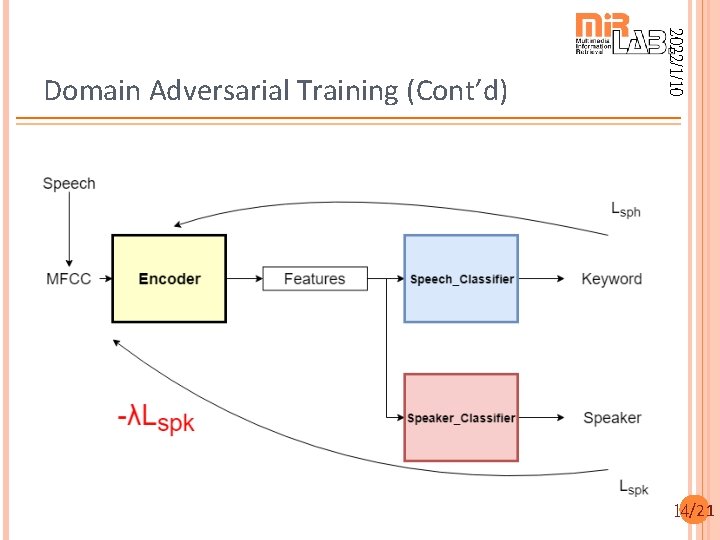

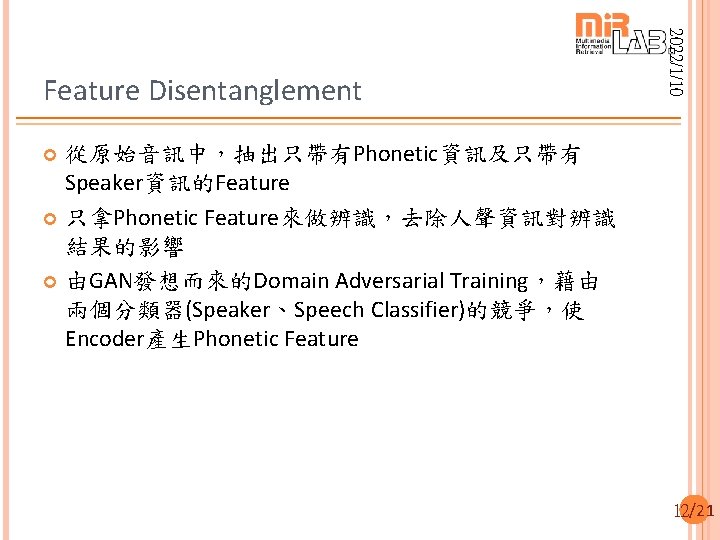

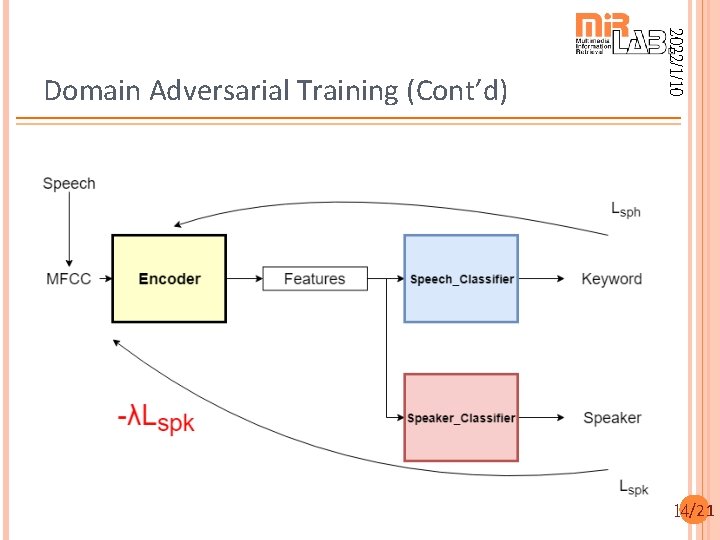
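The parameter table can be reproduced with a few lines of arithmetic. The sketch below treats each TDNN layer as a 1-D convolution without bias, so its parameter count is w * d_in * d_out and its output length is (l - w) // k + 1. The number of output classes (11) is an assumption inferred from 352 / 32 and is not stated on the slides; the function names are illustrative.

```python
def conv_output_length(length, kernel, stride):
    """Output length of an unpadded 1-D convolution."""
    return (length - kernel) // stride + 1


def conv_params(kernel, in_dim, out_dim):
    """Weight count of a 1-D convolution without bias terms."""
    return kernel * in_dim * out_dim


INPUT_DIM, INPUT_LEN = 40, 99
# (name, w, k, d) rows copied from the table
LAYERS = [("TDNN", 3, 3, 32), ("TDNN-SUB", 3, 1, 32), ("TDNN", 3, 1, 32)]
NUM_CLASSES = 11  # assumption: inferred from 352 softmax parameters / 32 features


def summarize():
    """Return per-layer (name, output length, #para) rows and the total count."""
    rows, dim, length, total = [], INPUT_DIM, INPUT_LEN, 0
    for name, w, k, d in LAYERS:
        length = conv_output_length(length, w, k)
        params = conv_params(w, dim, d)
        rows.append((name, length, params))
        total += params
        dim = d
    softmax_params = dim * NUM_CLASSES  # global pooling adds no parameters
    rows.append(("Softmax", 1, softmax_params))
    return rows, total + softmax_params


rows, total = summarize()
for name, length, params in rows:
    print(f"{name:10s} l={length:3d} #para={params}")
print("Total:", total)
```

Under these assumptions the lengths (33, 31, 29) and the total of 10336 match the table.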

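Feature Disentanglement

- From the raw audio, extract features that carry only phonetic information and features that carry only speaker information.
- Use only the phonetic features for recognition, removing the influence of speaker characteristics on the recognition result.
- Domain Adversarial Training, which was inspired by GANs, uses the competition between two classifiers (the Speaker Classifier and the Speech Classifier) to make the encoder produce phonetic features.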

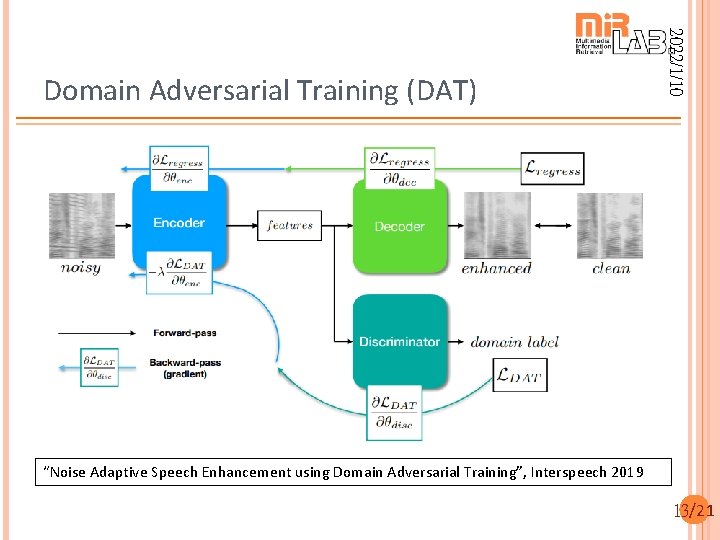
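One common way to realize the competition between the two classifiers is a gradient reversal layer placed between the encoder and the speaker classifier. The slides do not say whether this mechanism was used here, so the sketch below is an assumption, with hypothetical names and values. The layer is the identity in the forward pass and multiplies gradients by -lam in the backward pass, so the encoder is pushed to help the speech classifier while hindering the speaker classifier.

```python
class GradientReversal:
    """Identity in the forward pass; scales gradients by -lam in the backward pass."""

    def __init__(self, lam):
        self.lam = lam

    def forward(self, features):
        return list(features)

    def backward(self, grad_from_speaker_classifier):
        return [-self.lam * g for g in grad_from_speaker_classifier]


def encoder_feature_gradient(grad_keyword, grad_speaker, grl):
    """Total gradient reaching the encoder output from both classifier heads."""
    reversed_grad = grl.backward(grad_speaker)
    return [gk + gr for gk, gr in zip(grad_keyword, reversed_grad)]


grl = GradientReversal(lam=0.5)      # hypothetical trade-off weight
features = [0.2, -1.0, 0.7]          # hypothetical encoder output
grad_keyword = [0.1, -0.3, 0.05]     # hypothetical gradient of the keyword loss
grad_speaker = [0.4, 0.2, -0.6]      # hypothetical gradient of the speaker loss

total_grad = encoder_feature_gradient(grad_keyword, grad_speaker, grl)
print(total_grad)
```

The speaker classifier itself still receives the unreversed gradient, so it keeps trying to identify the speaker while the encoder learns to remove that information.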

Domain Adversarial Training (DAT)

“Noise Adaptive Speech Enhancement using Domain Adversarial Training”, Interspeech 2019

Domain Adversarial Training (Cont’d)

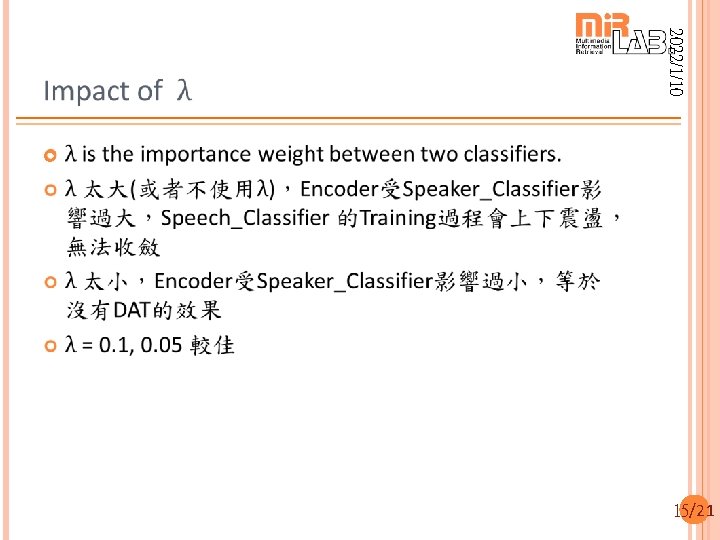

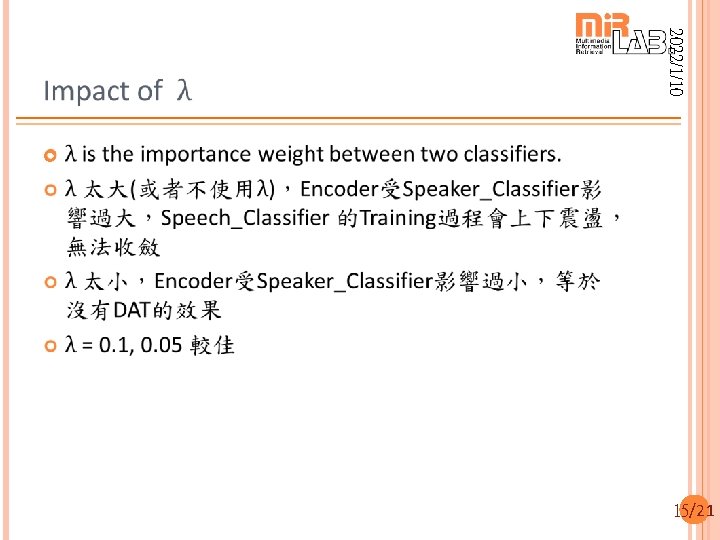


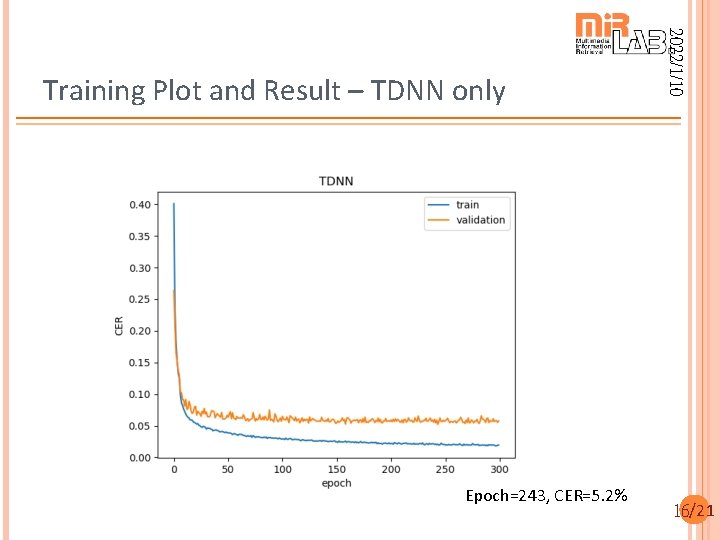

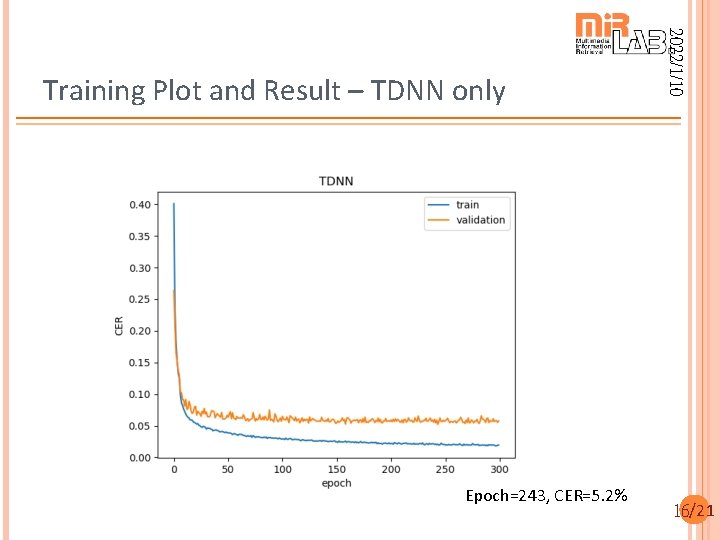

Training Plot and Result – TDNN only

Epoch = 243, CER = 5.2%

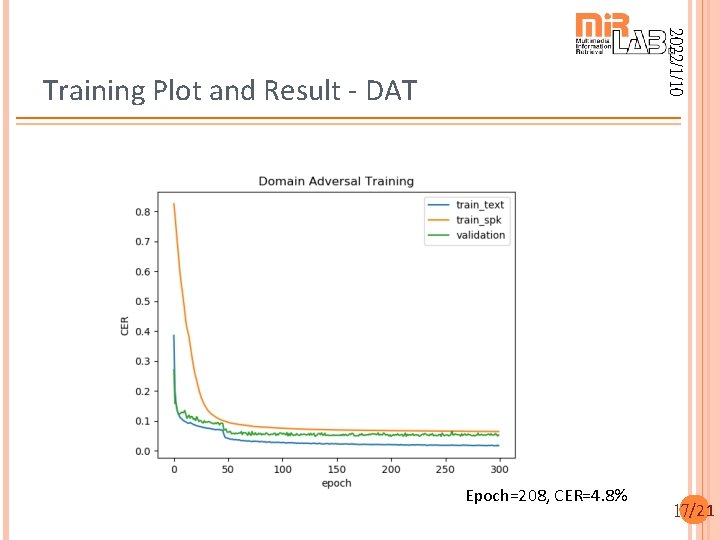

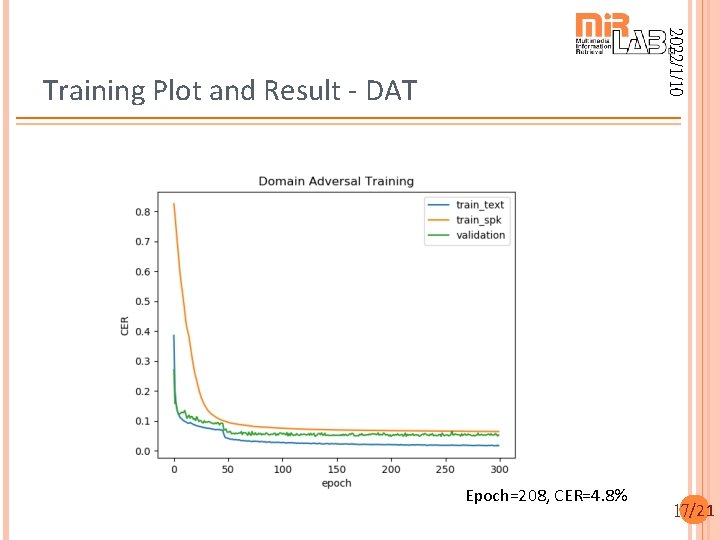

Training Plot and Result - DAT

Epoch = 208, CER = 4.8%

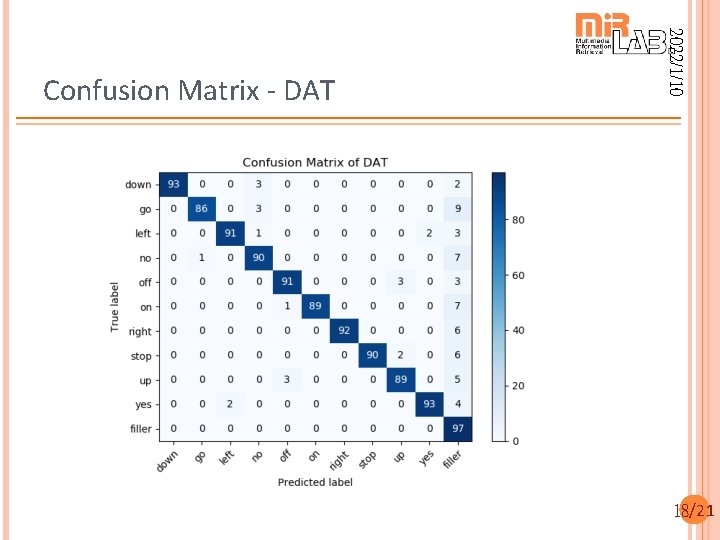

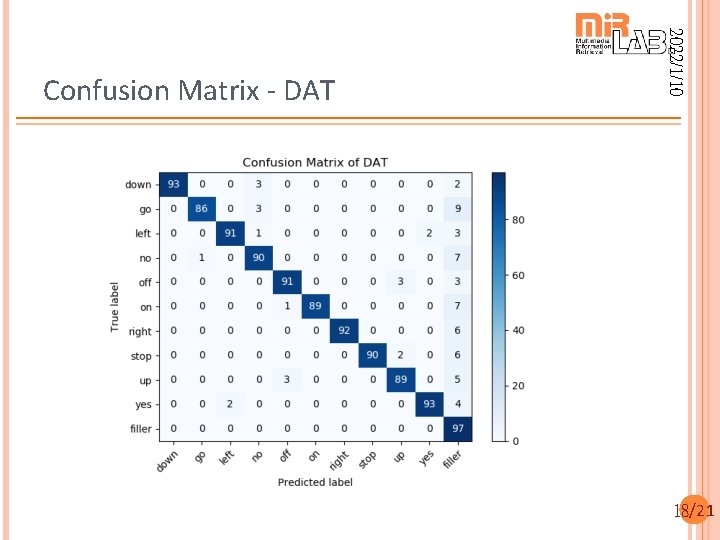

Confusion Matrix - DAT

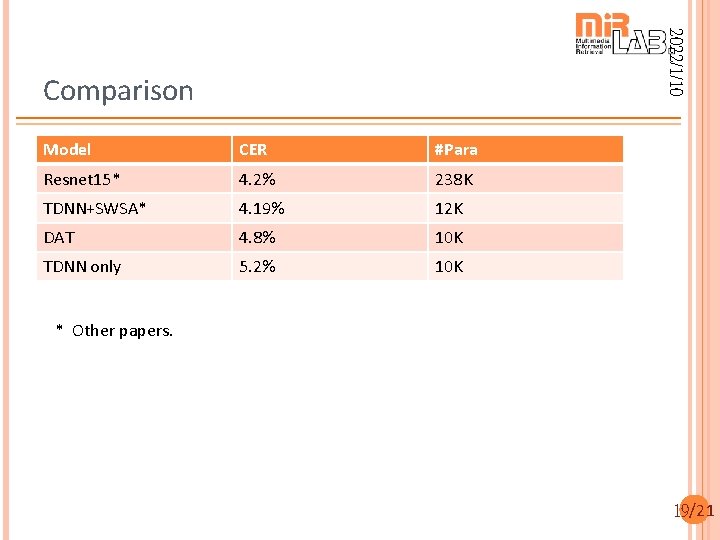

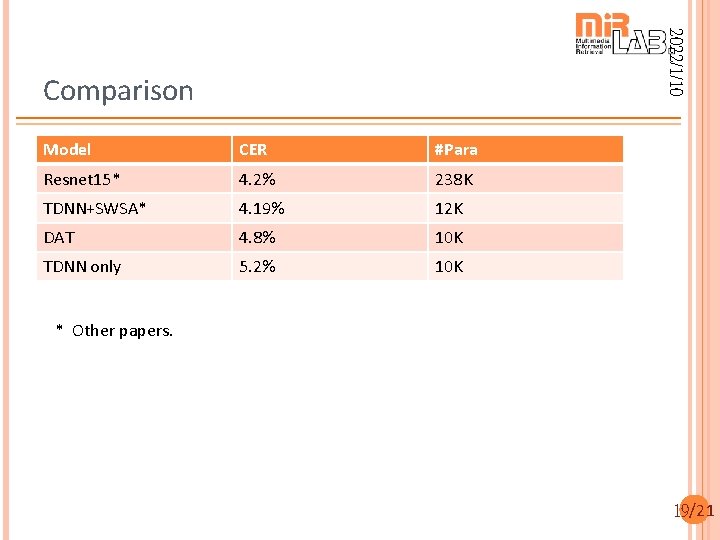

Comparison

| Model | CER | #Para |
|---|---|---|
| ResNet15* | 4.2% | 238 K |
| TDNN+SWSA* | 4.19% | 12 K |
| DAT | 4.8% | 10 K |
| TDNN only | 5.2% | 10 K |

\* Other papers.

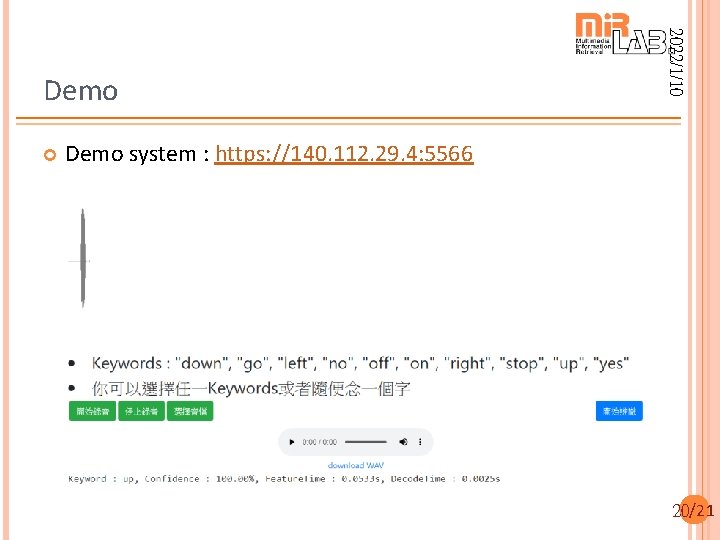

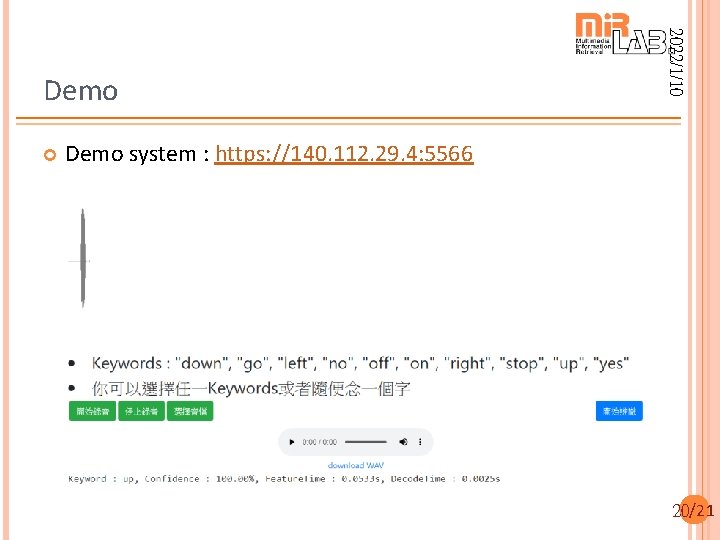

Demo system: https://140.112.29.4:5566
