Simulations and Software Volker Friese Simulations Software Computing

- Slides: 15

Simulations and Software
Volker Friese
• Simulations
• Software
• Computing
CBM Collaboration Meeting, GSI, 17 October 2008

Simulations
• Key observables well in hand; many studies ongoing, to be continued with ever more realistic detector descriptions
• Sufficient information delivered for the start of detector engineering
• Trigger considerations (charmonium, open charm) under way
• Running at SIS100 looked into: promising
• First steps toward studying topics not covered so far:
– centrality determination
– event plane resolution
– flow
– TOF with start detector

Software status
• Software development is still rapid
• Focus has shifted from detector description to reconstruction / analysis
• Needed / ongoing:
– consolidation / cleanup
– control
– quality assessment
– documentation

Tools
• Event generators:
– The strategy of adding a signal to a background event (UrQMD) is not valid for low-multiplicity events (e.g. p+C); realistic generators for such events are needed
– For PSD studies, a proper fragmentation model is needed. SHIELD is OK but does not run with TGeant3
• MC engines:
– TGeant3: our baseline; Geant3 is no longer developed
– TGeant4: works (with some problems), but the physics list is still to be determined
– TFluka: not operational
– Native Fluka is used for radiation level studies

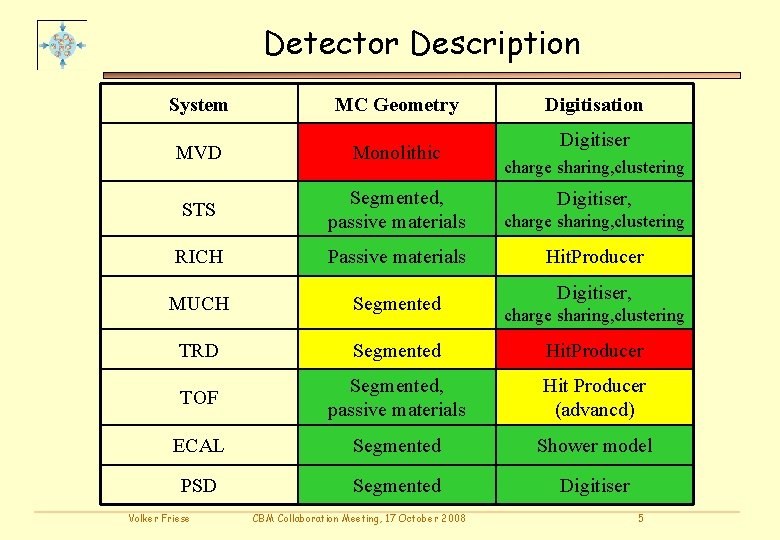

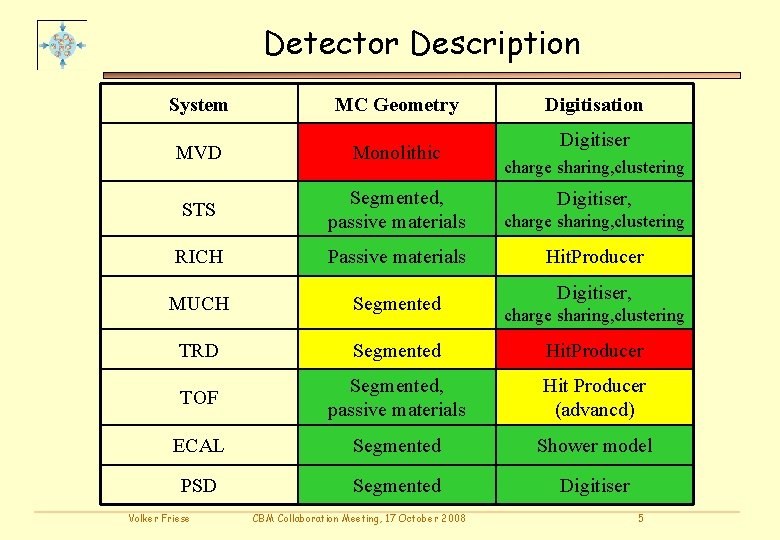

Detector Description
System: MC geometry / digitisation
• MVD: monolithic
• STS: segmented, passive materials / charge sharing, clustering
• RICH: passive materials / HitProducer
• MUCH: segmented
• TRD: segmented / HitProducer
• TOF: segmented, passive materials / HitProducer (advanced)
• ECAL: segmented / shower model
• PSD: segmented / digitiser
Digitisation (further entries): Digitiser; charge sharing, clustering; Digitiser, charge sharing, clustering

Software status
• Geometrical description fairly well advanced; supports etc. mostly missing
• Detector response models ("digitizer") implemented for almost all subsystems
• Parameters taken from literature or educated guesses
• Have to be tuned to detector tests / prototype measurements

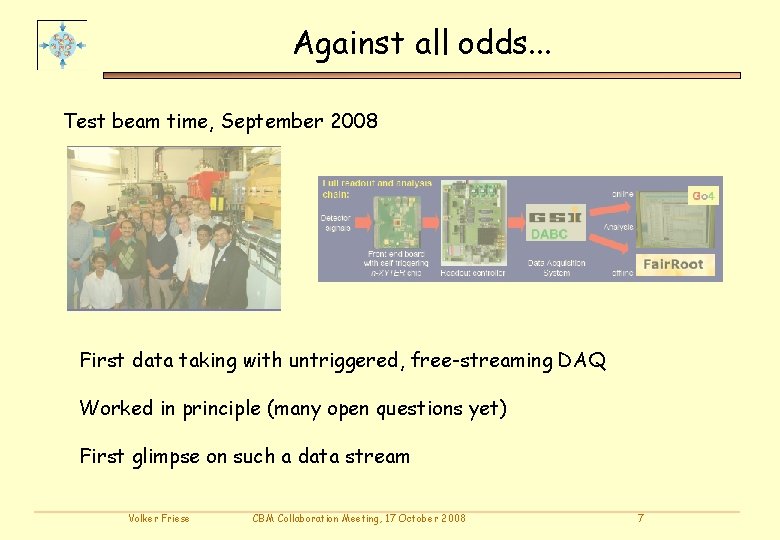

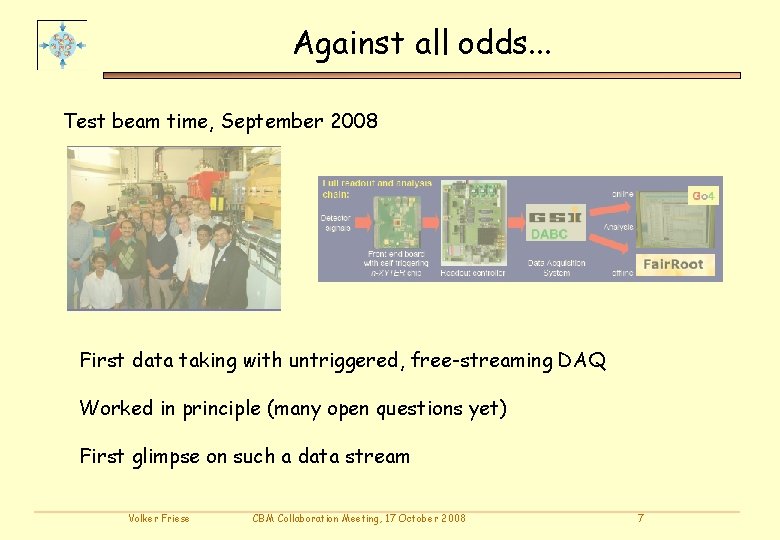

Against all odds...
Test beam time, September 2008
First data taking with an untriggered, free-streaming DAQ
Worked in principle (many open questions remain)
First glimpse of such a data stream

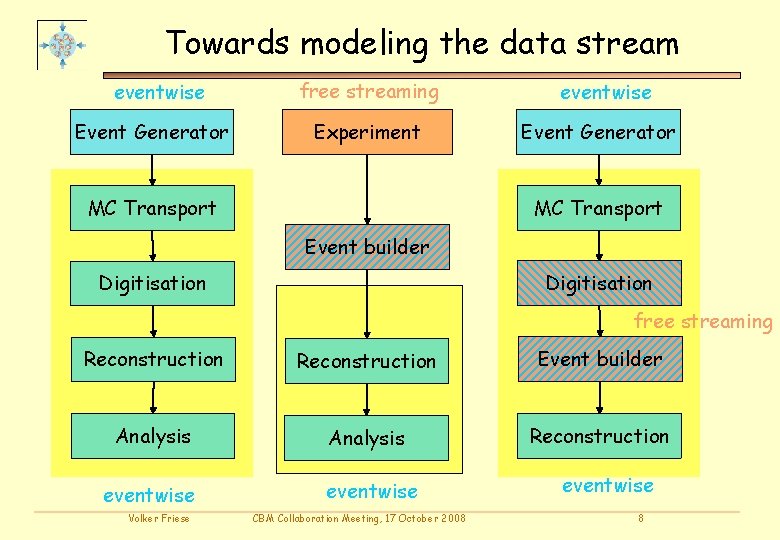

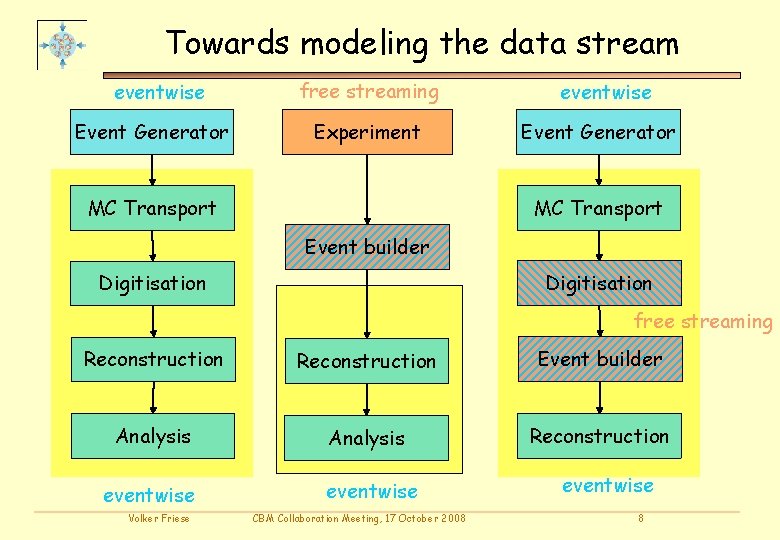

Towards modeling the data stream
[Diagram: processing chains built from the stages Event Generator, MC Transport, Digitisation, Event builder, Reconstruction, Analysis, and Experiment, with stages labeled either "eventwise" or "free streaming".]
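To make the distinction between eventwise and free-streaming data concrete, here is a minimal toy sketch of an event builder. It is not the CBM event builder: the hit format `(timestamp, detector label)`, the function name `build_events`, and the gap-based grouping rule with its `max_gap_ns` parameter are all assumptions chosen for illustration only.

```python
from typing import List, Tuple

# Hypothetical hit format: (timestamp in ns, detector label).
Hit = Tuple[float, str]


def build_events(hits: List[Hit], max_gap_ns: float) -> List[List[Hit]]:
    """Group free-streaming, time-stamped hits into event candidates.

    Assumed rule (illustrative only): hits are sorted by time, and a new
    event candidate starts whenever the gap to the previous hit exceeds
    max_gap_ns.
    """
    events: List[List[Hit]] = []
    for hit in sorted(hits, key=lambda h: h[0]):
        if events and hit[0] - events[-1][-1][0] <= max_gap_ns:
            events[-1].append(hit)   # close in time: same event candidate
        else:
            events.append([hit])     # large gap: start a new event candidate
    return events


# A short, unordered stream with no event boundaries in it.
stream = [
    (105.0, "STS"), (100.0, "MVD"), (112.0, "TOF"),
    (1000.0, "MVD"), (1004.0, "STS"),
]
events = build_events(stream, max_gap_ns=50.0)
for number, event in enumerate(events):
    print(number, event)
```

In an eventwise simulation the association of hits to events is known from the start; in a free-streaming chain it has to be recovered from time stamps alone, which is the step this toy function stands in for.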

Online and Offline
• Online reconstruction (L1 / Hough) will not be implemented on "normal" architectures
• Implementation on FPGA / multi-core requires dedicated programming languages
• Up to now, models for the algorithms have been implemented in CBMROOT; this will not continue to be so (?)
• Integration / connection of framework and online software to be rethought

Computing
• CBM computing model to be worked out
• Some facts:
– 1 TB/s from detector
– archival rate 1 GB/s → 5 PB per CBM year
• Online processing will (most probably) be on site
• Reconstruction can in principle be distributed
• Analysis should be distributed
• Can full reconstruction be done online?
• If yes, will raw data be stored?
• Connections to FAIR computing concept?
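The rates quoted above can be cross-checked with a short calculation. The sketch below assumes decimal (SI) units, which the slide does not specify; the function names are illustrative. Under that assumption, going from 1 TB/s to 1 GB/s means a reduction by a factor of 1000, and 5 PB at 1 GB/s corresponds to about 5×10^6 s of data taking per CBM year, roughly two months. The running time is inferred from the two quoted numbers, not stated in the talk.

```python
# Back-of-the-envelope check of the data rates quoted on the slide.
# Assumption: decimal (SI) units, i.e. 1 GB = 1e9 B, 1 TB = 1e12 B, 1 PB = 1e15 B.
DETECTOR_RATE_B_PER_S = 1e12   # 1 TB/s from detector
ARCHIVAL_RATE_B_PER_S = 1e9    # archival rate 1 GB/s
ARCHIVE_PER_YEAR_B = 5e15      # 5 PB per CBM year


def online_reduction_factor(raw_rate: float, archival_rate: float) -> float:
    """Factor by which online processing must reduce the raw data rate."""
    return raw_rate / archival_rate


def implied_running_seconds(volume_per_year: float, archival_rate: float) -> float:
    """Seconds of data taking per year implied by yearly volume and archival rate."""
    return volume_per_year / archival_rate


factor = online_reduction_factor(DETECTOR_RATE_B_PER_S, ARCHIVAL_RATE_B_PER_S)
seconds = implied_running_seconds(ARCHIVE_PER_YEAR_B, ARCHIVAL_RATE_B_PER_S)

print(f"online reduction factor: {factor:.0f}")
print(f"implied data taking per CBM year: {seconds:.1e} s "
      f"(about {seconds / 86400:.0f} days)")
```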

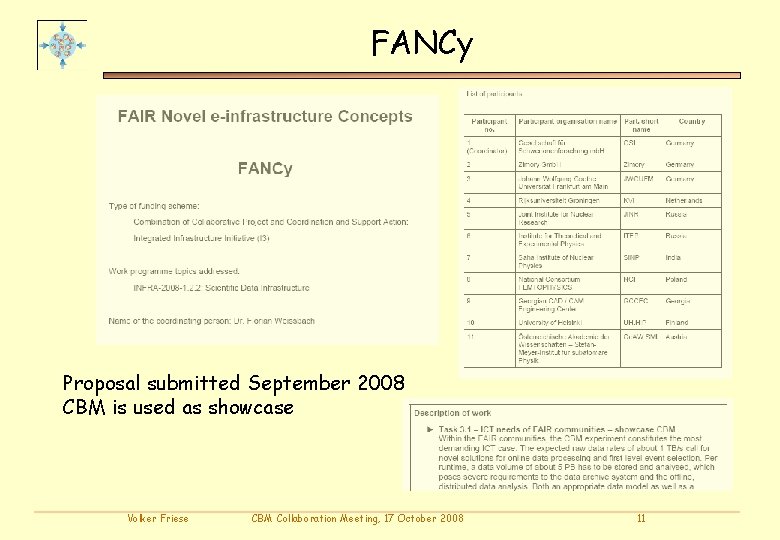

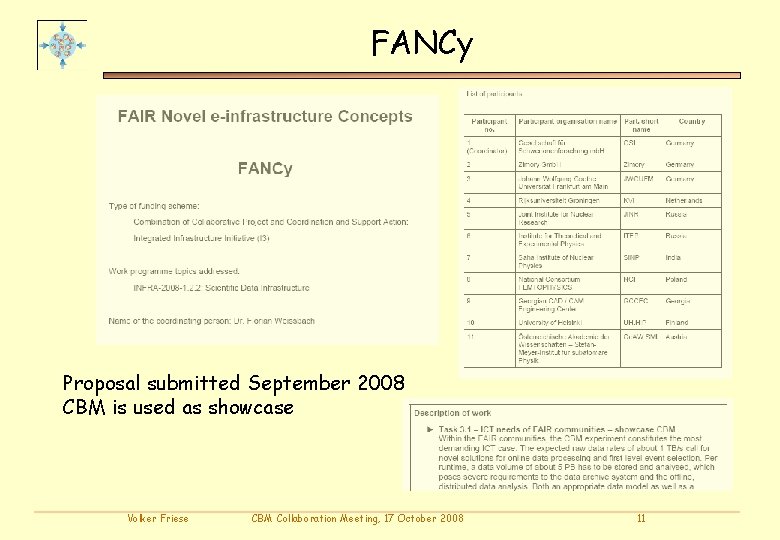

FANCy
Proposal submitted September 2008
CBM is used as a showcase

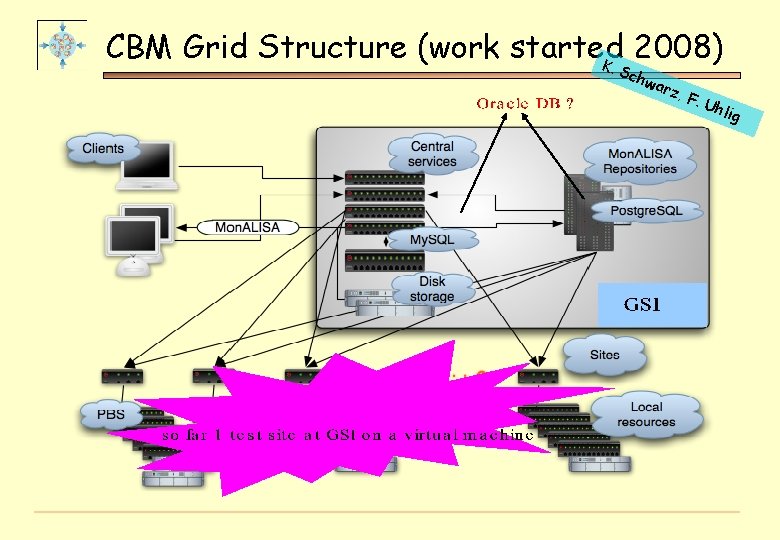

CBM GRID
• Aims:
– facilitate simulations by using resources other than GSI
– enable larger statistics: 10^5 events → 10^7 events
– gain experience in using distributed computing for real data processing
• Status:
– Central services installed at GSI, tests ongoing
• Perspectives:
– 2008: small test grid (3-4 sites), test data challenge
– 2009: production mode

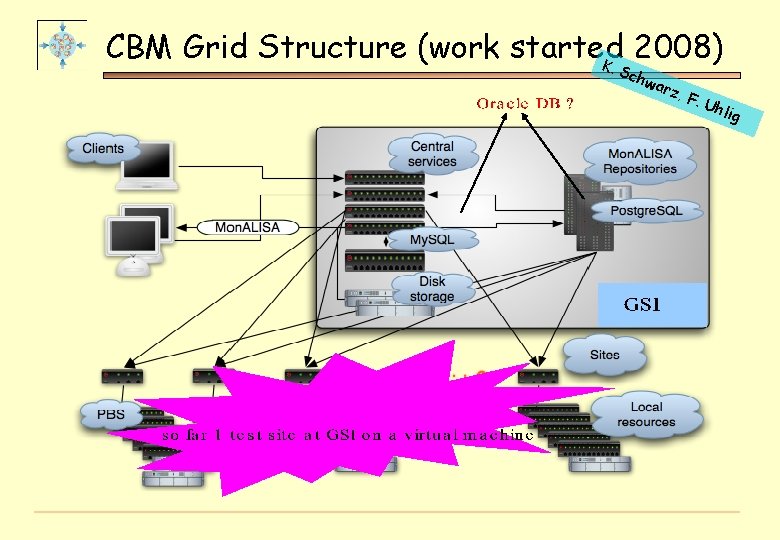

CBM Grid Structure (work started 2008)
K. Schwarz, F. Uhlig

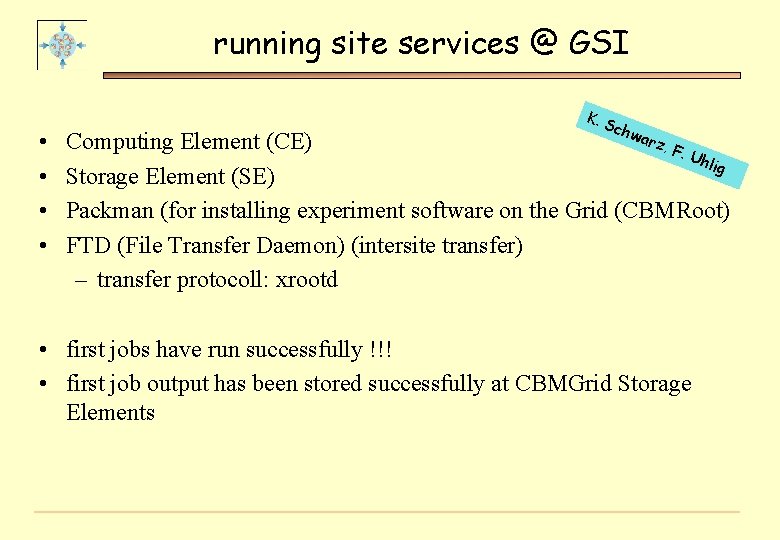

Running site services @ GSI (K. Schwarz, F. Uhlig)
• Computing Element (CE)
• Storage Element (SE)
• Packman (for installing experiment software (CBMRoot) on the Grid)
• FTD (File Transfer Daemon) (intersite transfer)
– transfer protocol: xrootd
• First jobs have run successfully!
• First job output has been stored successfully at CBMGrid Storage Elements

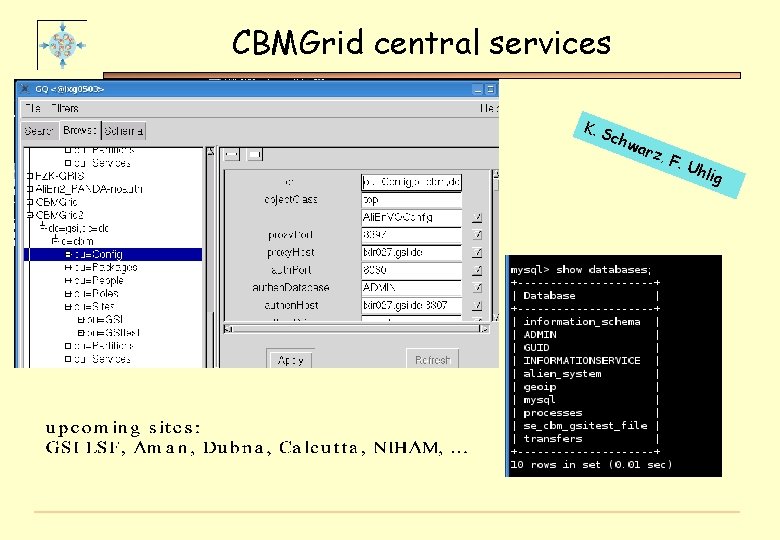

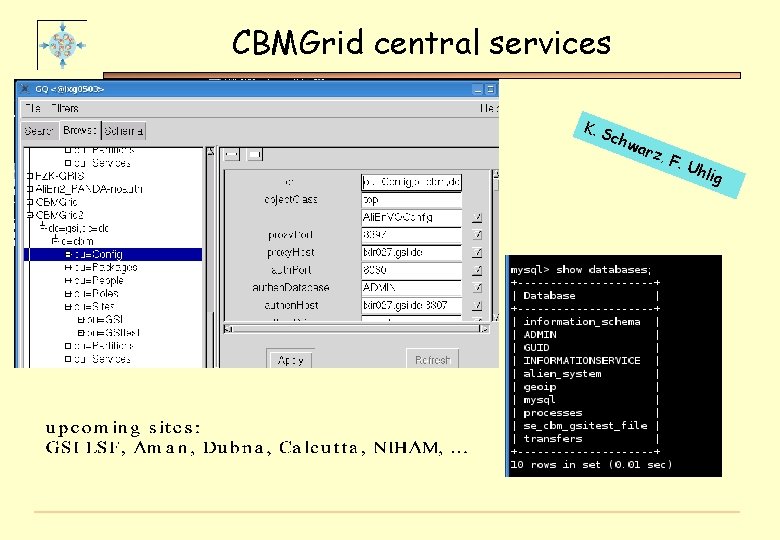

CBMGrid central services
K. Schwarz, F. Uhlig