
Relying on Autonomous Multipath Routing to Achieve Inter-Domain Load Balancing in the Internet

Robert Löfman, Ph.D. Student

The Goal of the Research

- Provide better QoS (not guaranteed), end-to-end, by traffic engineering
  - Means: multipath routing
  - Focus: throughput
  - Can improve the performance of:
    - applications insensitive to packet reordering, but also
    - video-on-demand (if there is time to wait for buffering when packets are reordered)
    - A/V conferencing applications (if the packet reordering rate is acceptable)

Multipath routing = some or all routers can forward to more than one next hop.

Some Other Traffic Engineering Techniques

- Queuing policies (needed if strict QoS is to be guaranteed)
  - DiffServ: classes of packets receive preferential treatment
  - IntServ: resources are reserved on demand
  - Permanent packet scheduling (Priority Queuing, Fair Queuing, Weighted Fair Queuing, …)
  - Drop schemes (Random Early Detection)
- Source routing
  - MPLS
    - Fast layer-2 forwarding, based on virtual circuits
    - Enables better addressability and discrimination of routes
- Over-provisioning
  - Have so much hardware that capacity cannot run out

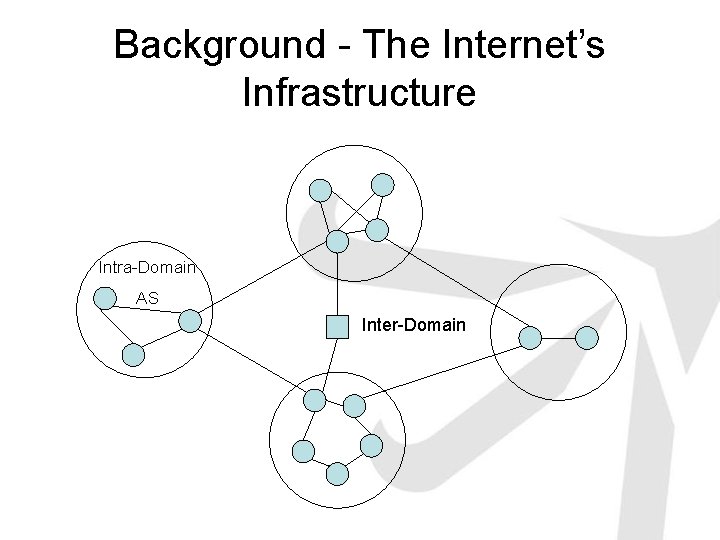

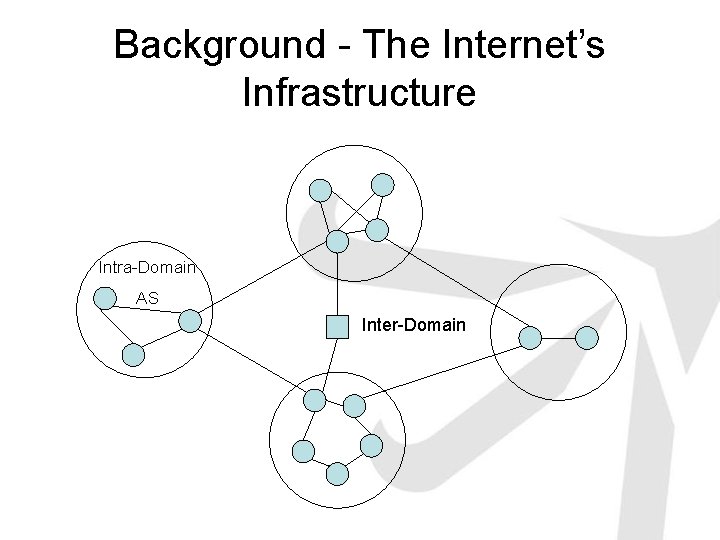
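Random Early Detection, listed above as a drop scheme, can be sketched in its basic textbook form: the router keeps an exponentially weighted moving average of the queue length and drops arriving packets with a probability that rises linearly from 0 at `min_th` to `max_p` at `max_th`. The threshold and weight values are illustrative assumptions, the fixed queue length in the usage loop is hypothetical, and the count-based spacing of drops in the full algorithm is omitted.

```python
import random

def update_avg(avg: float, queue_len: int, weight: float = 0.002) -> float:
    """Exponentially weighted moving average of the instantaneous queue length."""
    return (1.0 - weight) * avg + weight * queue_len

def drop_probability(avg: float, min_th: float = 5.0, max_th: float = 15.0,
                     max_p: float = 0.1) -> float:
    """Basic RED drop probability for a given average queue length (packets)."""
    if avg < min_th:
        return 0.0  # short queue: never drop early
    if avg >= max_th:
        return 1.0  # long queue: drop every arriving packet
    # between the thresholds the probability rises linearly from 0 to max_p
    return max_p * (avg - min_th) / (max_th - min_th)

def on_arrival(avg: float, queue_len: int, rng: random.Random) -> tuple:
    """Update the average on a packet arrival and decide whether to drop the packet."""
    avg = update_avg(avg, queue_len)
    return avg, rng.random() < drop_probability(avg)

rng = random.Random(42)
avg = 0.0
drops = 0
for _ in range(10_000):
    # hypothetical steady instantaneous queue of 12 packets
    avg, dropped = on_arrival(avg, queue_len=12, rng=rng)
    drops += dropped
print(round(avg, 2), drops)
```

Because the decision uses the average rather than the instantaneous queue, short bursts pass through while persistent congestion leads to early, randomized drops.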

Background - The Internet’s Infrastructure

- Intra-domain (Autonomous System, AS)
  - A network of a single organization
  - Private routing (RIP, OSPF, EIGRP, …)
  - Spans a local region
- Inter-domain
  - The routes between ASes
  - The routing domain is global
  - Border Gateway Protocol (BGP)

Background - The Internet’s Infrastructure

Figure: intra-domain (AS) and inter-domain routing.

Intra-domain Multipath Schemes

- EIGRP, proportional routing: traffic is dispersed proportionally to metrics.
- OSPF Equal-Cost MPR: shares load over equal-cost optimal routes.
- OSPF Optimized MPR: disseminates load levels and can reroute around congestion.
- Multipath-extended link-state and distance-vector routing: calculate and address the k best paths.
- "A survey of multipath routing for traffic engineering", Gyu Myoung Lee, Jin Seek Choi:
  - Multipath Algorithm (MPA)
  - Discount Shortest Path Algorithm (DSPA)
  - Capacity Removal Algorithm (CRA)
  - Multipath Distance Vector Algorithm (MDVA)
  - Multipath Partial Dissemination Algorithm (MPDA)
  - Quality Multiple Partial Dissemination Algorithm (QMPDA)
  - Diffusing Algorithm for Shortest Multipath (DASM)
  - MPATH
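The two dispersal styles named first in the list above can be contrasted in a small sketch. For equal-cost multipath, hashing a flow identifier onto one of the equal-cost next hops is a common implementation choice (the SHA-256 hash here is arbitrary). For proportional routing, the sketch assumes each route's share is inversely proportional to its metric, so the better (lower-metric) route carries more traffic; real EIGRP derives this from its composite metric. Router names and the flow tuple are hypothetical.

```python
import hashlib

def ecmp_next_hop(flow: tuple, equal_cost_hops: list) -> str:
    """ECMP style: hash the flow identifier onto one of the equal-cost next hops.
    Every packet of a flow takes the same hop, so that flow is not reordered."""
    digest = hashlib.sha256(repr(flow).encode()).digest()
    return equal_cost_hops[int.from_bytes(digest[:4], "big") % len(equal_cost_hops)]

def proportional_shares(metrics: dict) -> dict:
    """Proportional split, assuming a share inversely proportional to the route metric."""
    inverse = {hop: 1.0 / metric for hop, metric in metrics.items()}
    total = sum(inverse.values())
    return {hop: value / total for hop, value in inverse.items()}

flow = ("10.0.0.1", "192.0.2.7", 6, 40000, 80)  # hypothetical (src, dst, proto, sport, dport)
print(ecmp_next_hop(flow, ["R1", "R2"]))
print(proportional_shares({"R1": 1.0, "R3": 3.0}))  # R1 (metric 1) gets 3x the share of R3
```

Per-flow hashing avoids reordering but cannot split a single flow; per-packet proportional dispersal can, at the cost of reordering, which is the trade-off the simulations below examine.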

Inter-domain Multipath Routing

- In use:
  - There are none; only BGP is used.
    - BGP installs only a single route.
    - Multihomed ASes can achieve some basic TE by policy.
- Some proposed methods for enabling MPR in BGP:
  - Dynamic egress-router selection for a multihomed AS
  - Advertisement of multiple AS-paths, and inter-domain source routing by means of hashed IDs based on the AS-paths
  - Advertisement of the minimum SLA-guaranteed BW that the path supports, so that paths can be discriminated
  - Overlay networks
    - Probe for “better” routes to destinations via other overlay nodes

The Problem

- Initial research question:
  - “Can throughput be expected to improve by letting intermediate routers disperse traffic arbitrarily on possibly joint routes, without cross-domain routing?”
- If so,
  - no changes to routing protocols would be needed in order to obtain better performance, and
  - the hierarchical structure of the Internet may be preserved.
- Biggest obstacle: the intra-domain routes are not visible to the inter-domain routing.
  - Proportional routing is impossible ⇒ might disperse too much traffic on a low-BW route.
- An answer was sought by simulation.
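The obstacle can be made concrete with a fluid-model calculation, an illustrative assumption rather than part of the simulation: if a router splits traffic evenly over k paths without knowing their bandwidths, each path must carry 1/k of the rate, so the slowest path caps the total at k times its bandwidth. The path bandwidths are hypothetical.

```python
def max_rate_even_split(path_bw_mbps: list) -> float:
    """Highest total rate when traffic is split evenly over all paths:
    each path carries 1/k of the rate, so the slowest path saturates first."""
    return len(path_bw_mbps) * min(path_bw_mbps)

def max_rate_proportional(path_bw_mbps: list) -> float:
    """Highest total rate when each path's share matches its bandwidth."""
    return sum(path_bw_mbps)

paths = [100, 10]  # hypothetical: best single path 100 Mb/s, extra path 10 Mb/s
print("SPR:         ", paths[0])
print("even split:  ", max_rate_even_split(paths))     # 2 * 10 = 20
print("proportional:", max_rate_proportional(paths))   # 100 + 10 = 110
```

Without proportional forwarding, adding a low-BW path can lower the achievable rate far below that of the single path alone.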

Simulation – Set-up

- OPNET simulator
- Partial Internet routes
  - Inter-/intra-domains and LANs (BGP and EIGRP)
  - Fictional (generated) and real WANs
- Full end-to-end path diversity:
  - Intra-domains may have multiple routes to multiple egress points.
  - Inter-domain routers also use MPR (tweaked BGP).
- Paths may have joint links.

Simulation – Set-up

- Studied:
  - Multipath Routing 2 and 3 (MPR-2, MPR-3)
    - MPR-2: all routers forward packets to 2 next hops, if possible
    - Realized by allowing 2 or 3 sub-optimal routes in EIGRP in each AS.
  - Compared to: Single-Path Routing (SPR)
    - Every router forwards to one next hop
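One way to picture MPR-X is a per-destination next-hop selection in the spirit of EIGRP unequal-cost load balancing: a route is usable if its metric is within `variance` times the best metric and it passes the feasibility condition (the neighbour's reported distance is below the best metric, which keeps the route loop-free). This is a simplified sketch: the `Route` type, the metrics, and the use of the current best metric as the feasible distance are assumptions, and `variance` only loosely corresponds to the “allowed variance of sub-optimal routes” in the set-up.

```python
from dataclasses import dataclass

@dataclass
class Route:
    next_hop: str
    metric: float             # total metric to the destination via this neighbour
    reported_distance: float  # the neighbour's own metric to the destination

def select_next_hops(routes: list, max_paths: int, variance: float) -> list:
    """Up to max_paths next hops: MPR-X uses max_paths = X, SPR uses max_paths = 1."""
    best = min(r.metric for r in routes)  # treated here as the feasible distance
    usable = [
        r for r in routes
        if r.metric <= variance * best     # within the allowed variance of the best route
        and r.reported_distance < best     # feasibility condition: neighbour is closer
    ]
    usable.sort(key=lambda r: r.metric)    # prefer the better routes
    return [r.next_hop for r in usable[:max_paths]]

routes = [Route("A", 100, 50), Route("B", 150, 80),
          Route("C", 300, 120), Route("D", 140, 120)]
print(select_next_hops(routes, max_paths=1, variance=1.0))  # SPR
print(select_next_hops(routes, max_paths=3, variance=2.0))  # MPR-3
```

Raising the variance admits more sub-optimal routes, but routes via neighbours that are not closer to the destination (C and D here) are still excluded.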

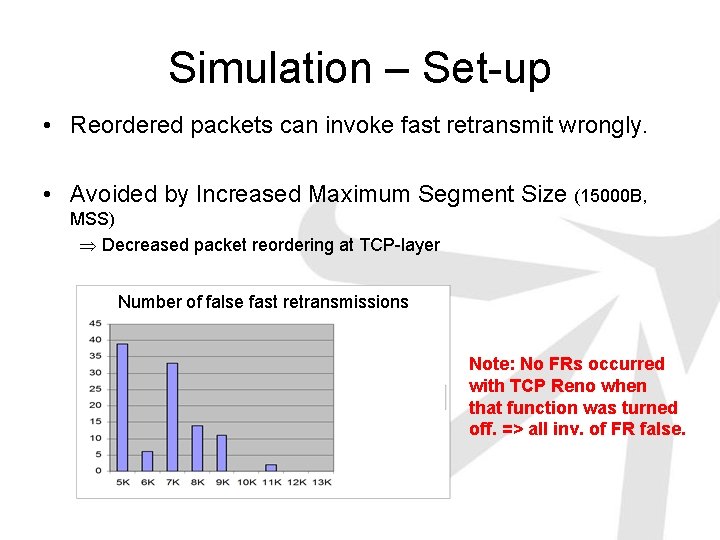

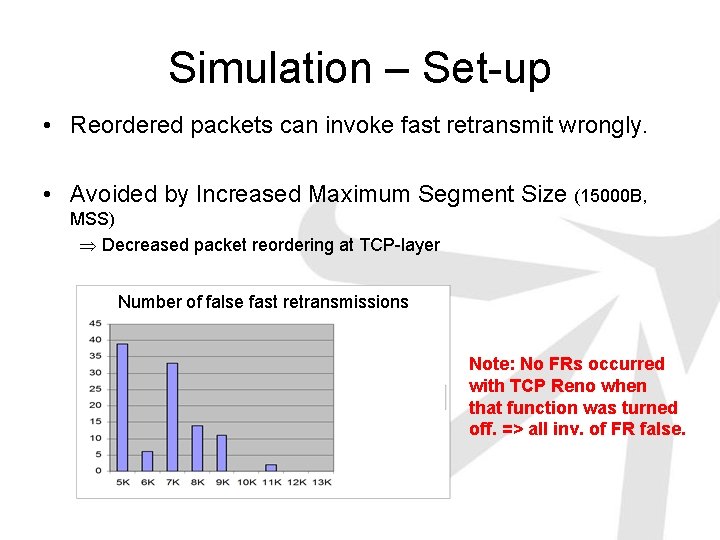

Simulation – Set-up

- Reordered packets can wrongly invoke fast retransmit (FR).
- Avoided by an increased Maximum Segment Size (MSS = 15000 B) ⇒ decreased packet reordering at the TCP layer.

Figure: number of false fast retransmissions.

Note: no FRs occurred with TCP Reno when that function was turned off ⇒ all invocations of FR were false.
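How reordering alone can trigger a false fast retransmit can be shown with a small sketch of standard TCP behaviour: the receiver returns a cumulative ACK (the next expected segment) for every arrival, and the sender treats three duplicate ACKs as a loss. Segment numbers, the arrival order, and the assumption that the receiver has already acknowledged everything before segment 0 (i.e. has sent ACK 0) are illustrative.

```python
def cumulative_acks(arrival_order: list) -> list:
    """Receiver side: the cumulative ACK (next expected segment) sent after each arrival."""
    received = set()
    next_expected = 0
    acks = []
    for seg in arrival_order:
        received.add(seg)
        while next_expected in received:
            next_expected += 1
        acks.append(next_expected)
    return acks

def fast_retransmits(acks: list, initial_ack: int = 0, dupthresh: int = 3) -> int:
    """Sender side: how often dupthresh duplicate ACKs would trigger fast retransmit."""
    triggers, dups, last = 0, 0, initial_ack
    for ack in acks:
        if ack == last:
            dups += 1
            if dups == dupthresh:
                triggers += 1
        else:
            dups, last = 0, ack
    return triggers

reordered = [1, 2, 3, 0, 4]  # segment 0 is only delayed behind 1, 2 and 3, not lost
acks = cumulative_acks(reordered)
print(acks, fast_retransmits(acks))
```

No segment was lost, yet three duplicate ACKs for segment 0 arrive and a fast retransmit fires; every such invocation is false.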

Simulation – Set-up

- Large MSS (15000 bytes):
  - Forces fragmentation ⇒ increased chance that every segment has fragments which traverse the “slowest” path
  - Increases buffering at the IP layer as segments “wait” for delayed fragments ⇒ smooths the segment delay deviation (the 15 K MSS had an order of magnitude less deviation)
  - Now it is possible to examine TCP over MPR beyond the FR problem.
- Infinite receive buffers assumed
- Last-mile link capacity: 1 Gb/s
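The smoothing effect can be illustrated with a Monte Carlo sketch: a segment is complete only when its last fragment arrives, so its delay is the maximum of its fragment delays. The sketch assumes each fragment independently picks one of two paths with equal probability; the path delays are hypothetical, and 11 fragments is roughly what a 15000 B segment needs over a 1500 B MTU (1480 B of IPv4 payload per fragment, no TCP options).

```python
import random
import statistics

def segment_delays(n_fragments: int, path_delays: list,
                   n_segments: int = 10_000, seed: int = 1) -> list:
    """Delay of each segment = delay of its slowest fragment."""
    rng = random.Random(seed)
    delays = []
    for _ in range(n_segments):
        # each fragment independently picks a path; the segment waits for the last one
        delays.append(max(rng.choice(path_delays) for _ in range(n_fragments)))
    return delays

paths = [10.0, 30.0]  # hypothetical one-way delays (ms) of a fast and a slow path
for n in (1, 11):
    d = segment_delays(n, paths)
    print(n, round(statistics.mean(d), 2), round(statistics.stdev(d), 2))
```

With many fragments almost every segment hits the slow path, so the mean segment delay rises while its deviation collapses, mirroring the trade-off described above.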

Simulation – Set-up – Background traffic

- Packet size of 40 B, 570 B or 1500 B, chosen randomly
- Measurements taken at 0, 25, 50, 75, 90, 95 and 96 percent background load, and averaged
  - Delay metrics set equal
  - BW metric set to the true BW of the link
  - The allowed variance of sub-optimal routes was kept low to avoid loops (loops were detected when TTL values dropped to 0)
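A background-traffic generator matching this set-up can be sketched by drawing each packet size from the three listed sizes and deriving the packet rate needed for a target load. The slide says only that sizes are chosen "randomly"; equal probabilities are an assumption here, and the 1 Gb/s link capacity in the usage loop is hypothetical.

```python
import random

SIZES_B = (40, 570, 1500)                          # background packet sizes from the set-up
LOADS = (0.0, 0.25, 0.50, 0.75, 0.90, 0.95, 0.96)  # measured background loads

def mean_size(sizes=SIZES_B) -> float:
    """Mean packet size, assuming each size is equally likely."""
    return sum(sizes) / len(sizes)

def packets_per_second(load: float, link_bps: float, sizes=SIZES_B) -> float:
    """Background packet rate needed to occupy `load` (a fraction 0..1) of a link."""
    return load * link_bps / (8 * mean_size(sizes))

def next_background_packet(rng: random.Random) -> int:
    """Draw the size (bytes) of the next background packet."""
    return rng.choice(SIZES_B)

link_bps = 1e9  # hypothetical link capacity
for load in LOADS:
    print(f"{load:.0%} load -> {packets_per_second(load, link_bps):,.0f} pkt/s")
```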

Simulation – Results, UDP over MPR

- Starting point: UDP, because it does not constrain its transmission.

Figures: throughput as a function of background load, at transmission rates of only 200 and of 300 million pkt/h (packets of 1500 B each); at the higher rate MPR performs better.

- Indication:
  - MPR performs best when the transmission rate is high or the residual BW is low.
    - Sending must be at a rate which the SP can’t handle (Trans_Rate / Res_BW_SP > 1).
    - Dispersing packets while the SP has enough BW increases the delay.

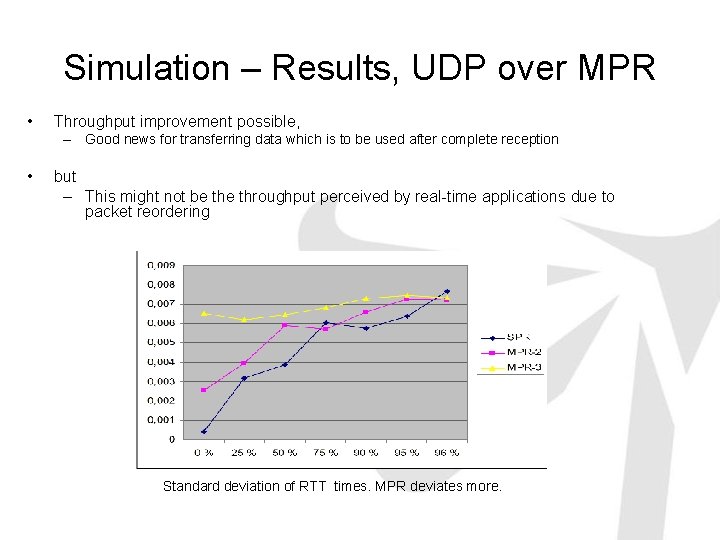

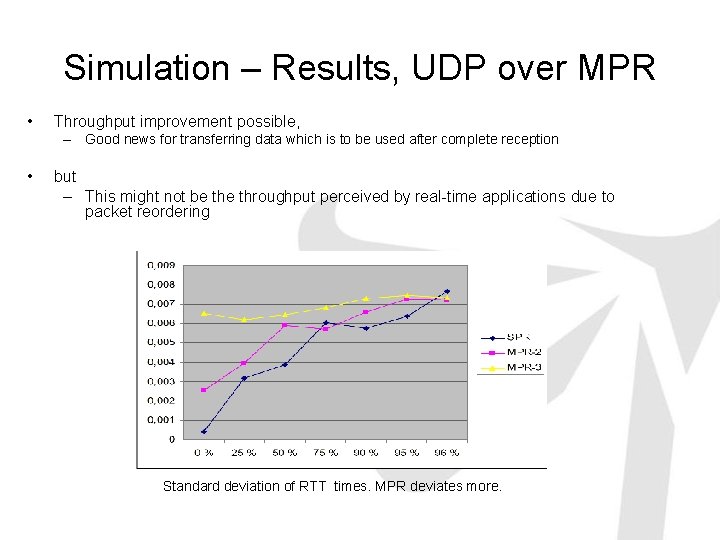
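The 300 million pkt/h rate with 1500 B packets can be converted to bits per second, and the condition Trans_Rate / Res_BW_SP > 1 written out, in a small worked sketch. The residual-bandwidth values are hypothetical.

```python
def rate_bps(packets_per_hour: float, packet_bytes: int) -> float:
    """Convert a packet rate in packets/hour into bits per second."""
    return packets_per_hour * packet_bytes * 8 / 3600

def mpr_may_help(trans_rate_bps: float, residual_bw_sp_bps: float) -> bool:
    """The slide's condition: send faster than the single path can carry."""
    return trans_rate_bps / residual_bw_sp_bps > 1

rate = rate_bps(300e6, 1500)      # 300e6 * 1500 * 8 / 3600 = 1e9 b/s, i.e. 1 Gb/s
print(rate)
print(mpr_may_help(rate, 0.5e9))  # hypothetical: only 0.5 Gb/s left on the SP
print(mpr_may_help(rate, 2.0e9))  # hypothetical: 2 Gb/s left on the SP
```

The 300 million pkt/h rate works out to 1 Gb/s, the same as the last-mile capacity in the set-up; whether MPR can help then depends on how much of the single path the background load leaves free.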

Simulation – Results, UDP over MPR

- Throughput improvement is possible,
  - which is good news for transferring data that is to be used after complete reception,
- but
  - this might not be the throughput perceived by real-time applications, due to packet reordering.

Figure: standard deviation of RTT times. MPR deviates more.

Simulation – Results, UDP over MPR

- In-sequence BW = the rate (packets/s) of packets that arrive in order.
- Out-of-sequence BW = the rate (packets/s) of all packets that arrive, in order or not.
- The in-sequence BW is especially important for real-time applications.
- If all paths in MPR have a BW greater than 1/n of the BW of the SP:
  - ⇒ it is possible to transmit more than n packets in parallel before n packets have been transmitted on the SP,
  - ⇒ and the in-sequence BW of MPR would then also be greater than that of SPR.

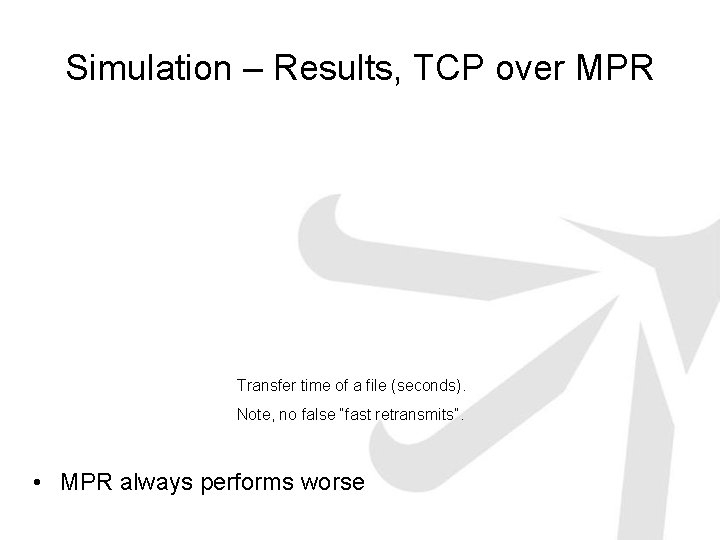

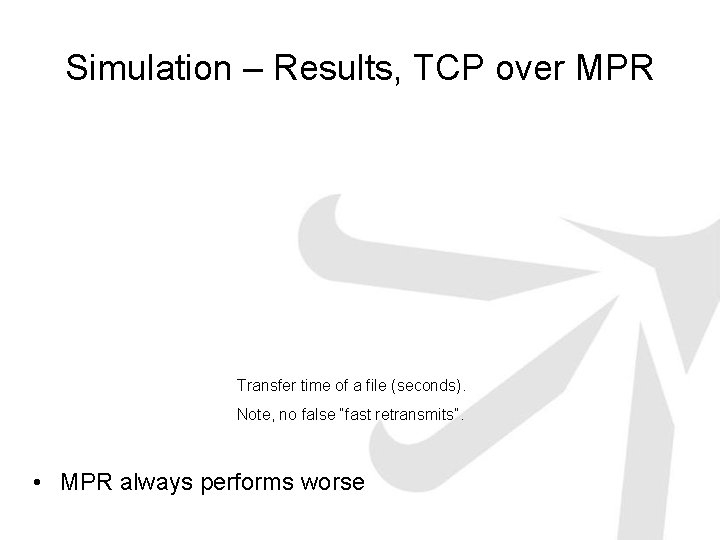
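The two bandwidth measures can be computed from an arrival trace with a short sketch. It assumes one reading of "arrives in order": a packet counts as in-sequence if every lower-numbered packet has already arrived when it does. Sequence numbers and the measurement duration are illustrative.

```python
def bandwidths(arrival_order: list, duration_s: float) -> tuple:
    """Return (in_sequence_bw, out_of_sequence_bw) in packets/s for a trace of
    sequence numbers listed in arrival order."""
    seen = set()
    next_missing = 0  # lowest sequence number not yet received
    in_order = 0
    for seq in arrival_order:
        if seq == next_missing:  # all lower-numbered packets are already here
            in_order += 1
        seen.add(seq)
        while next_missing in seen:
            next_missing += 1
    return in_order / duration_s, len(arrival_order) / duration_s

print(bandwidths([0, 1, 2, 3], 2.0))  # no reordering: both rates are equal
print(bandwidths([1, 0, 3, 2], 2.0))  # reordering halves the in-sequence rate
```

Under reordering the out-of-sequence BW is unchanged while the in-sequence BW drops, which is why raw throughput can overstate what a real-time application experiences.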

Simulation – Results, TCP over MPR

Figure: transfer time of a file (seconds). Note: no false “fast retransmits”.

- MPR always performs worse.

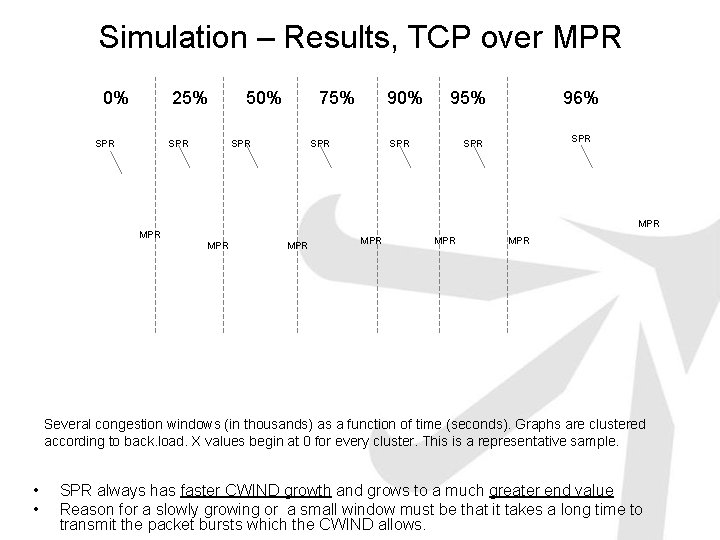

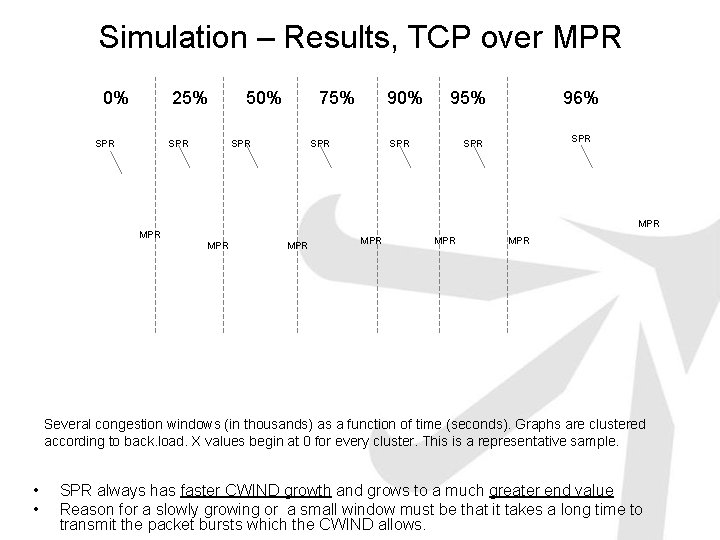

Simulation – Results, TCP over MPR

Figure: several congestion windows (in thousands) as a function of time (seconds), for SPR and MPR. Graphs are clustered according to background load (0%, 25%, 50%, 75%, 90%, 95% and 96%); x values begin at 0 for every cluster. This is a representative sample.

- SPR always has faster CWIND growth and grows to a much greater end value.
- The reason for a slowly growing or small window must be that it takes a long time to transmit the packet bursts which the CWIND allows.

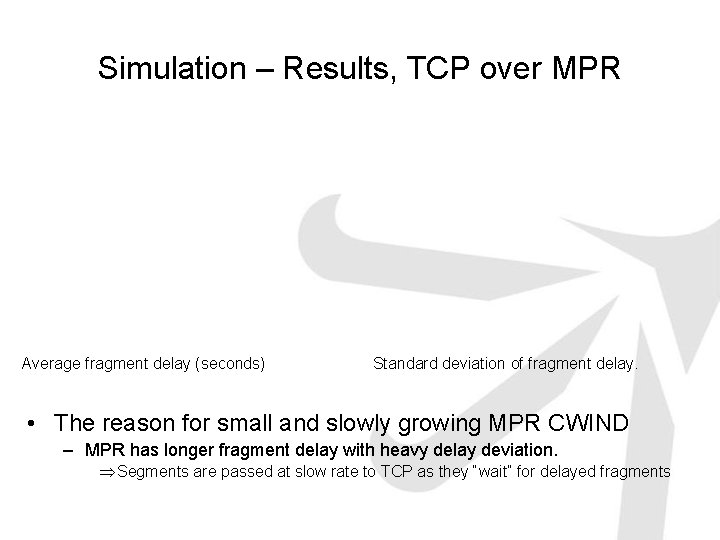

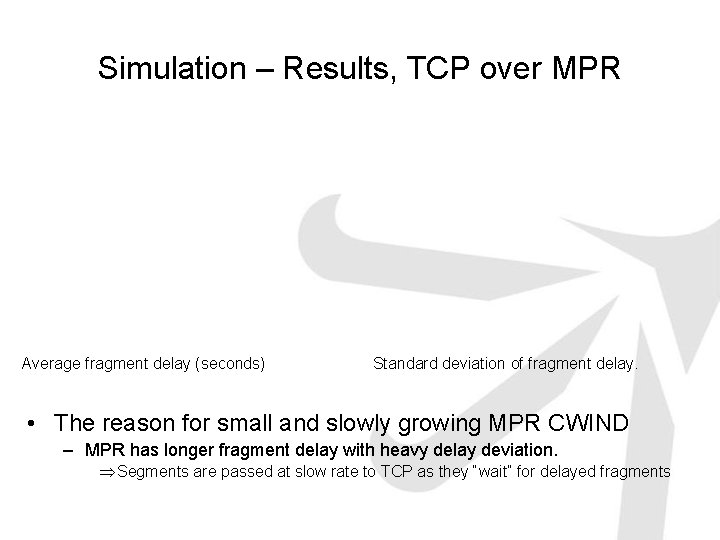

Simulation – Results, TCP over MPR

Figures: average fragment delay (seconds); standard deviation of fragment delay.

- The reason for the small and slowly growing MPR CWIND:
  - MPR has longer fragment delay with heavy delay deviation.
  - ⇒ Segments are passed to TCP at a slow rate, as they “wait” for delayed fragments.

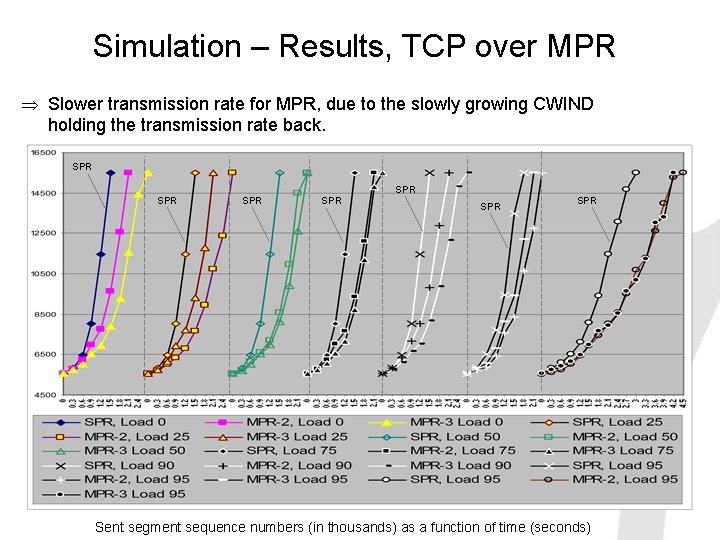

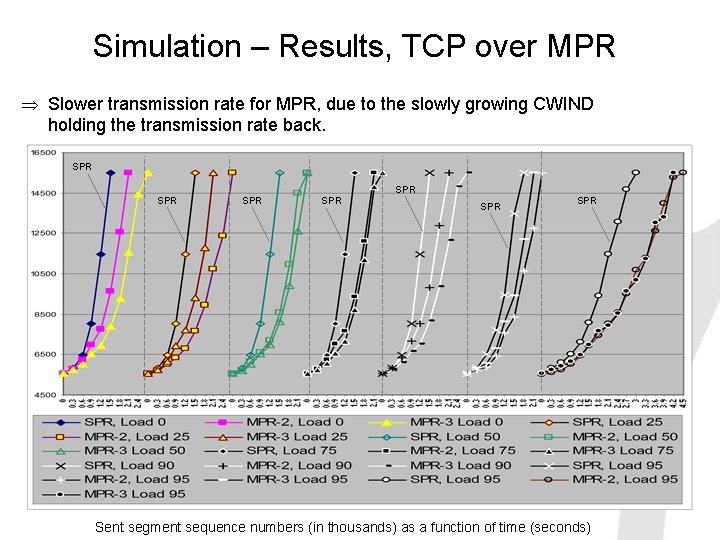

Simulation – Results, TCP over MPR

⇒ Slower transmission rate for MPR, due to the slowly growing CWIND holding the transmission rate back.

Figure: sent segment sequence numbers (in thousands) as a function of time (seconds), SPR vs. MPR.

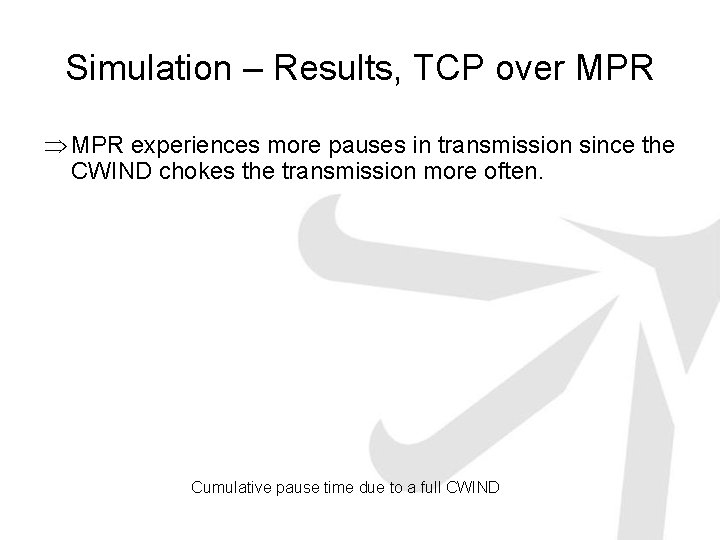

Simulation – Results, TCP over MPR

⇒ MPR experiences more pauses in transmission, since the CWIND chokes the transmission more often.

Figure: cumulative pause time due to a full CWIND.

Simulation – Interpretation of Results, TCP over MPR

1. In the beginning, the transmission depends heavily on a small delay (the CWIND portions the transmission into small bursts) in order to get the CWIND opened quickly.
2. MPR: too many fragments are put on high-delay paths, as there is no proportional forwarding.
   - ⇒ Segments “wait” for delayed fragments (MPR has a large BW, but it isn't needed at this point).
   - ⇒ Burst transmission takes longer ⇒ slower CWIND growth.
   - ⇒ Vicious circle: future allowed bursts are also smaller (compared to SPR).
   - SPR: gets the CWIND opened quickly and can therefore transmit large bursts, which allow even larger future bursts.

The in-sequence BW must be raised for MPR to be successful.
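The compounding effect of a longer round can be sketched with an idealized slow start in which the window doubles once per completed round. This is a deliberate simplification: it ignores ssthresh, congestion avoidance and losses, and the round times are hypothetical, with the MPR round assumed longer because each burst completes only when its slowest fragment arrives. Integer milliseconds avoid floating-point rounding in the round count.

```python
def cwnd_after(elapsed_ms: int, round_ms: int, initial_cwnd: int = 1) -> int:
    """Idealized slow-start window (segments): doubles once per completed round."""
    return initial_cwnd * 2 ** (elapsed_ms // round_ms)

spr_round_ms = 20  # hypothetical round time over the single path
mpr_round_ms = 30  # hypothetical: bursts wait for fragments on slower paths
for t in (60, 100, 120):
    print(t, cwnd_after(t, spr_round_ms), cwnd_after(t, mpr_round_ms))
```

A 50 % longer round leaves MPR with a window two to four times smaller at the same instant, and the absolute gap keeps widening, which is one way to read the vicious circle described above.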

Future Work

- Goal: provide inter-domain (I-D) proportional forwarding without cross-domain routing
- Possible solution algorithm:
  - At the creation of a new flow, use SPR.
  - Probe for the highest possible throughput.
  - Raise the MPR-X value, in order to let packets also take sub-optimal routes.
  - Then probe for a new throughput high, and so on…
  - Mark the packets of every probe with an ID, and require routers to always disperse packets with the same ID to the same next hops
    - ⇒ the transmission-rate increment from every probe is always put on the same paths (might not scale due to the need for per-flow record-keeping)
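The proposed algorithm can be sketched under several assumptions that are not in the slides: `StickyRouter` and `probe_mpr_x` are hypothetical names, each router maps a probe ID to a single next hop chosen round-robin among its X best next hops, and `measure(x, probe_id)` is a stand-in callback for whatever throughput measurement the sender would perform.

```python
class StickyRouter:
    """Hypothetical router keeping per-ID state so that every packet carrying
    the same probe ID is always sent to the same next hop."""

    def __init__(self, next_hops: list):
        self.next_hops = next_hops  # ordered best-first (SPR would use only the first)
        self.assigned = {}          # probe ID -> next hop; this table is the scaling concern

    def forward(self, probe_id: int, mpr_x: int) -> str:
        if probe_id not in self.assigned:
            candidates = self.next_hops[:mpr_x]
            # spread new IDs round-robin over the mpr_x best next hops
            self.assigned[probe_id] = candidates[len(self.assigned) % len(candidates)]
        return self.assigned[probe_id]

def probe_mpr_x(measure, max_x: int) -> tuple:
    """Start with SPR (X = 1), then raise X while the measured throughput improves."""
    x, best = 1, measure(1, 0)
    for probe_id in range(1, max_x):
        candidate = measure(x + 1, probe_id)
        if candidate <= best:
            break  # the extra sub-optimal routes did not help; keep the previous X
        x, best = x + 1, candidate
    return x, best

fake_throughput = {1: 10.0, 2: 15.0, 3: 14.0}  # hypothetical measurements
print(probe_mpr_x(lambda x, probe_id: fake_throughput[x], max_x=3))
```

The `assigned` table grows with every probe ID a router sees, which is the per-flow record-keeping cost the slide warns may not scale.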

Static routing and dynamic routing

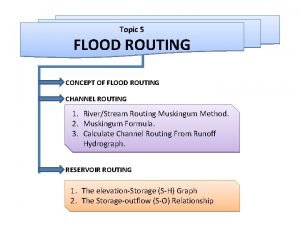

Static routing and dynamic routing Hydrologic continuity equation

Hydrologic continuity equation Clock and power routing in vlsi

Clock and power routing in vlsi Goodrich method

Goodrich method Multipath model of abnormality

Multipath model of abnormality Matlab

Matlab Multipath

Multipath Multipath

Multipath Multipath model of anxiety disorders

Multipath model of anxiety disorders Federal state autonomous educational institution

Federal state autonomous educational institution Autonomous people mover

Autonomous people mover Ftc autonomous code blocks

Ftc autonomous code blocks Autonomous investment

Autonomous investment Non autonomous differential equation

Non autonomous differential equation Am nn

Am nn Autonomous ship

Autonomous ship Autonomous expenditure

Autonomous expenditure Self-driving car ppt

Self-driving car ppt Autonomous investment

Autonomous investment Autonomous data harvesting

Autonomous data harvesting Equilibrium output

Equilibrium output Astratum

Astratum Autonomous buildings sustainability

Autonomous buildings sustainability Spains largest city

Spains largest city