RegLess: Just-in-Time Operand Staging for GPUs

- Slides: 26

RegLess: Just-in-Time Operand Staging for GPUs

John Kloosterman, Jonathan Beaumont, D. Anoushe Jamshidi, Jonathan Bailey, Trevor Mudge, Scott Mahlke

University of Michigan, Electrical Engineering and Computer Science

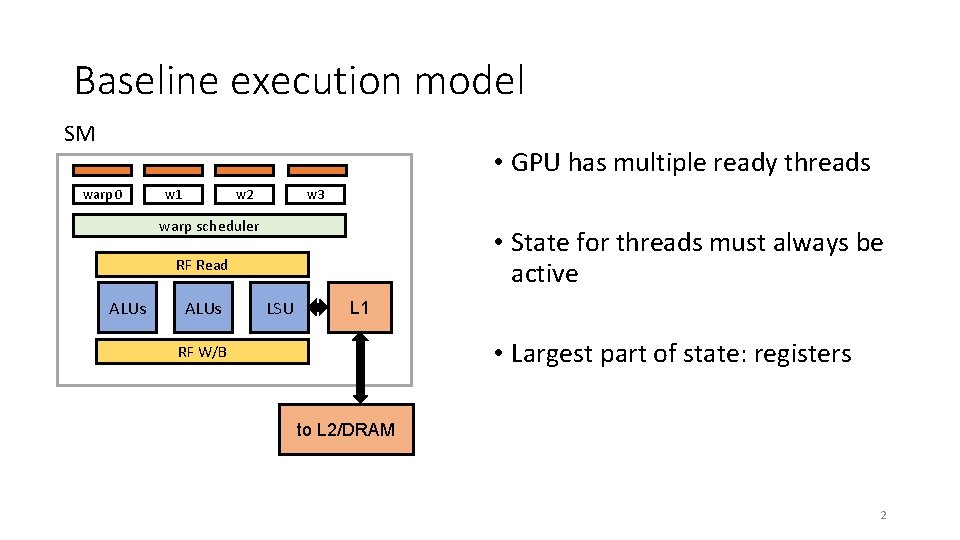

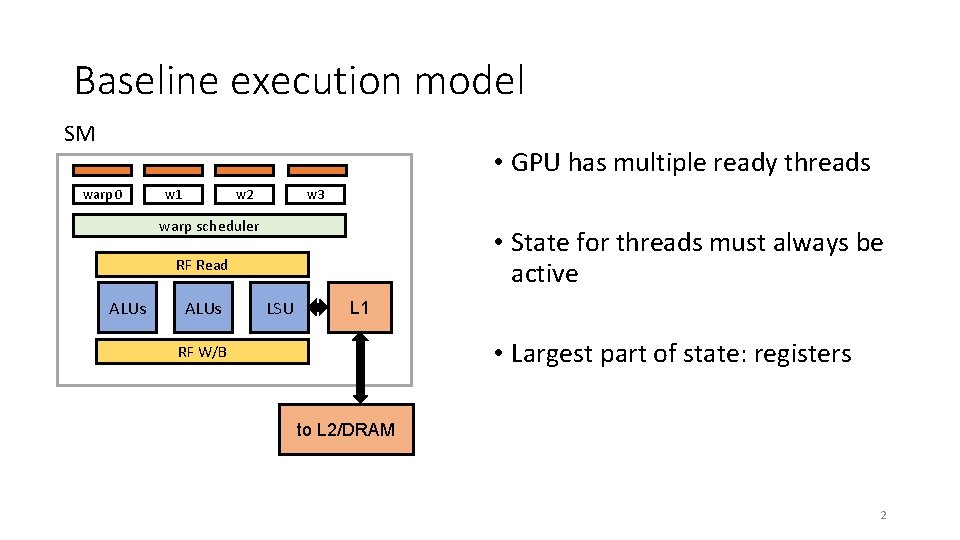

Baseline execution model

- GPU has multiple ready threads
- State for threads must always be active
- Largest part of state: registers

[Diagram: SM with warps (warp 0, w1, w2, w3), warp scheduler, RF read, ALUs, LSU with L1 (connected to L2/DRAM), and RF write-back]

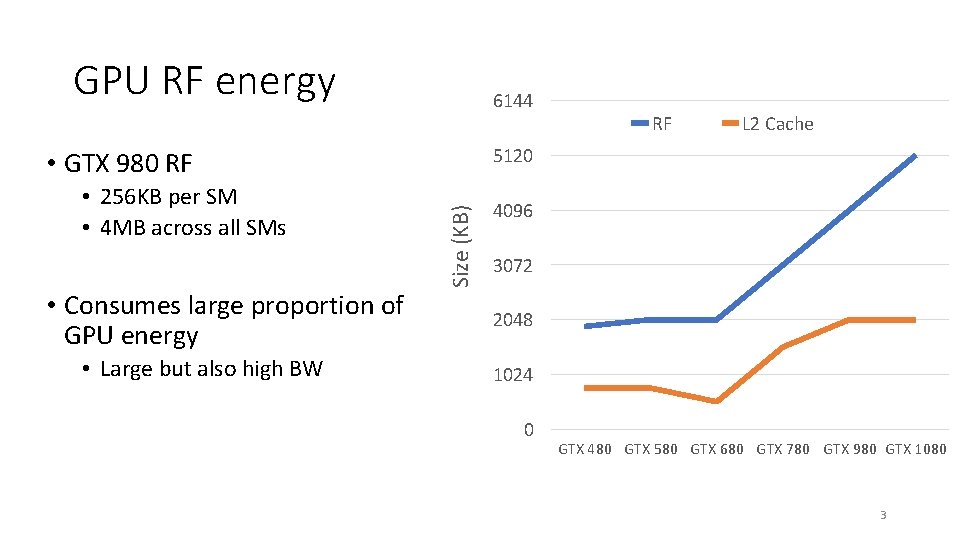

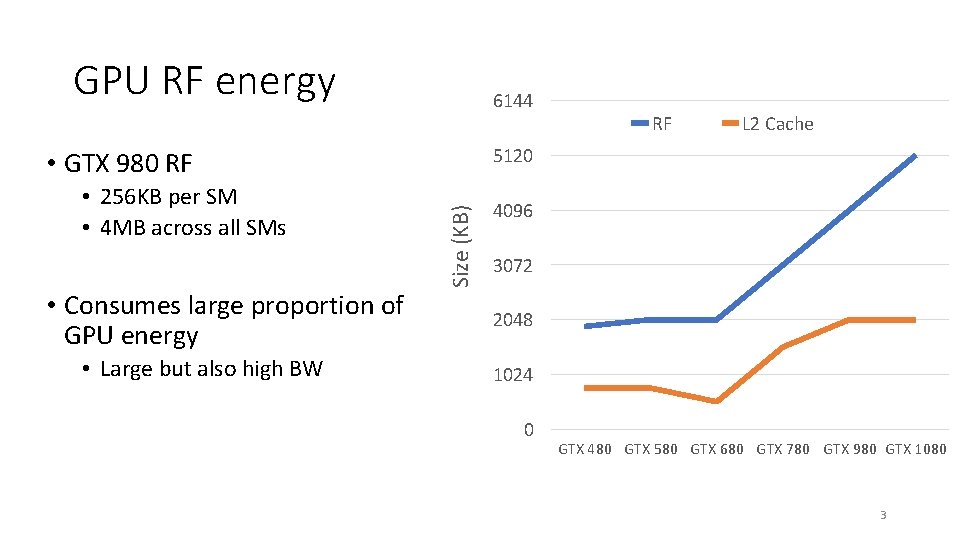

GPU RF energy

- Large but also high BW
- Consumes large proportion of GPU energy
- GTX 980 RF
  - 256 KB per SM
  - 4 MB across all SMs

[Chart: size (KB) of RF and L2 cache, y-axis 0 to 6144, for GTX 480, GTX 580, GTX 680, GTX 780, GTX 980, GTX 1080]

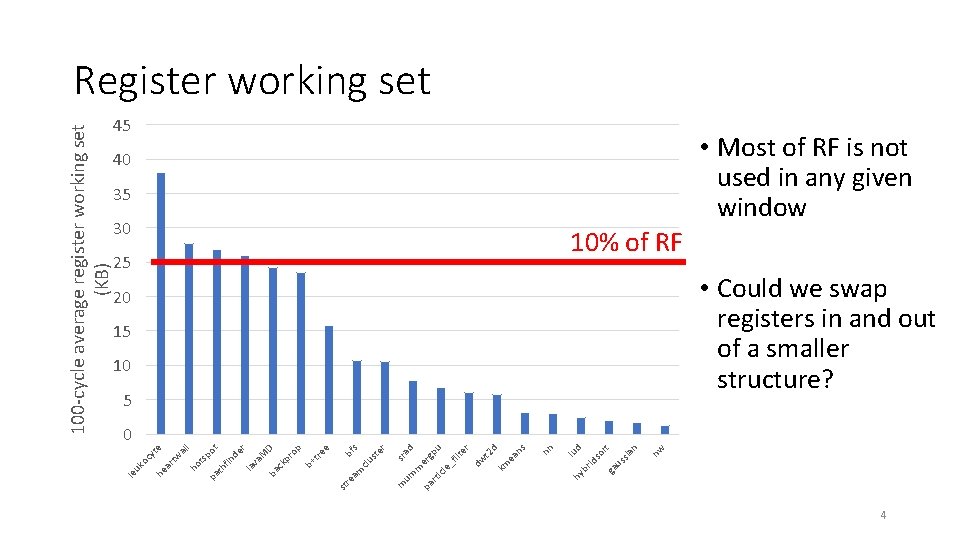

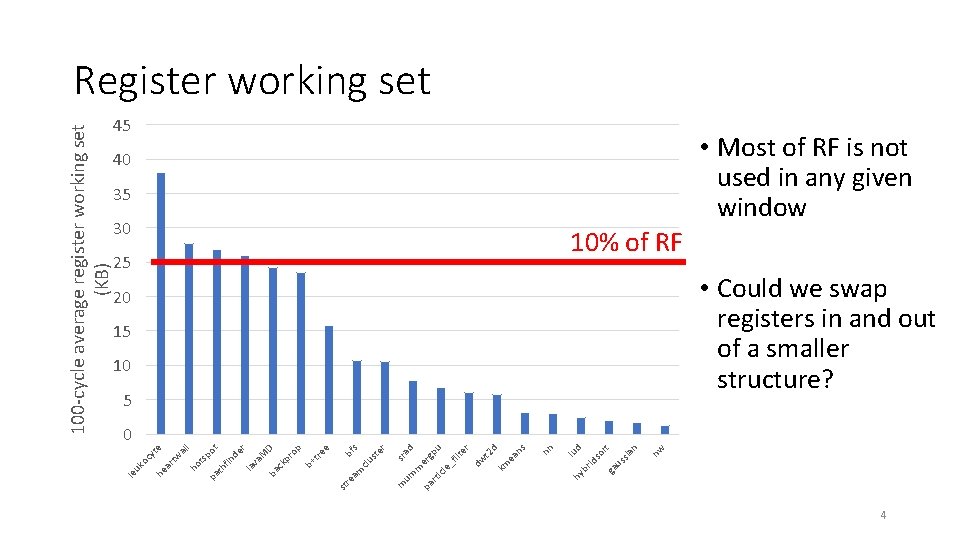

Register working set

- Most of RF is not used in any given window
- Could we swap registers in and out of a smaller structure?

[Chart: 100-cycle average register working set (KB) per benchmark, y-axis 0 to 45, with a reference line at 10% of RF]

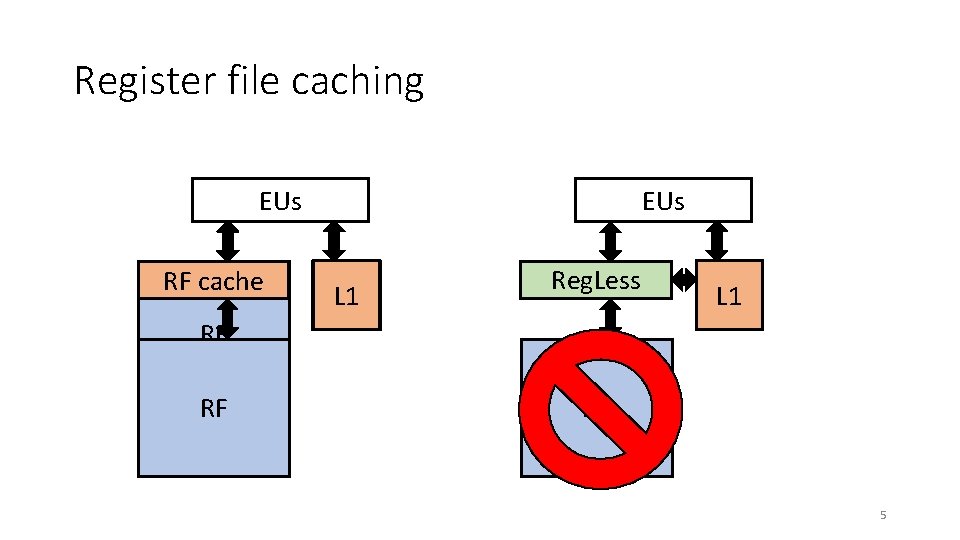

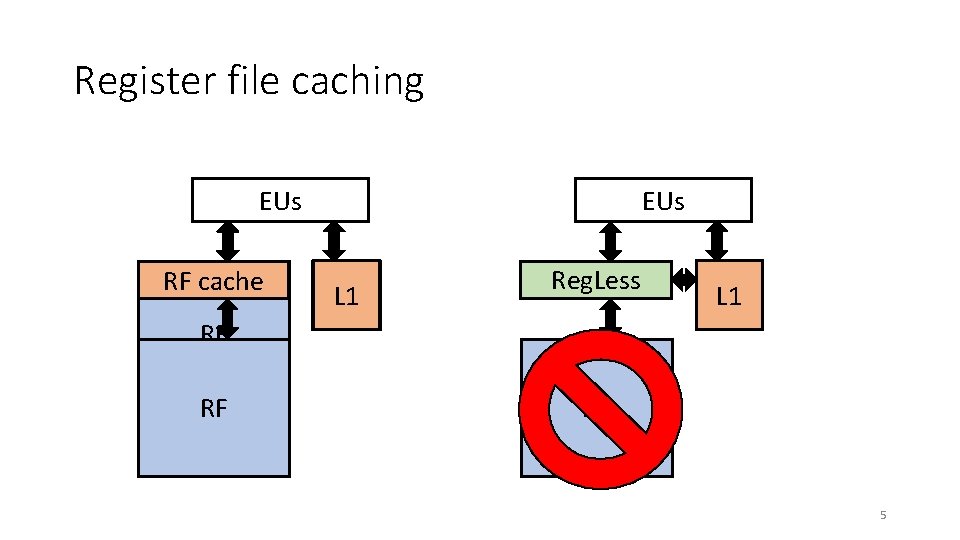

Register file caching

[Diagram: register storage organizations compared; labels: EUs, RF cache, RF, L1, RegLess]

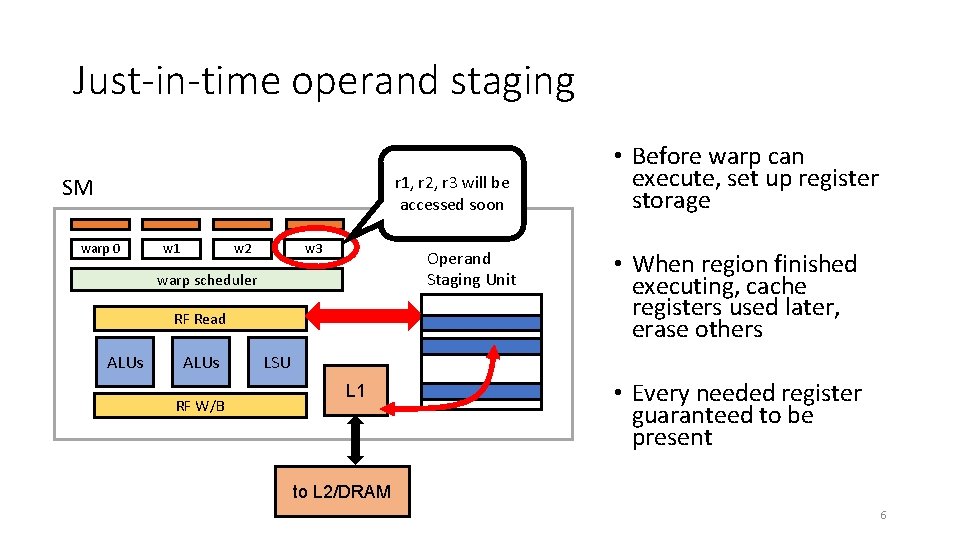

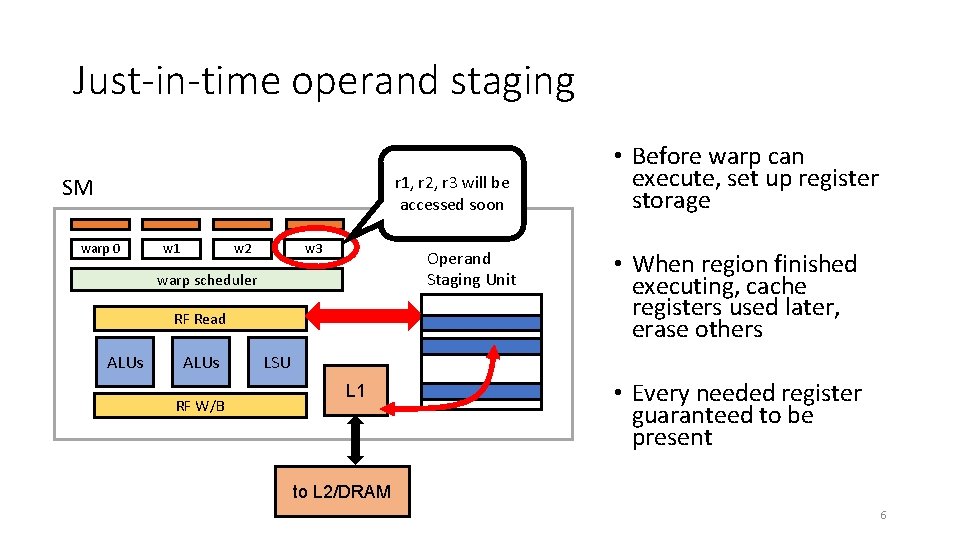

Just-in-time operand staging

- Before warp can execute, set up register storage
- When region finished executing, cache registers used later, erase others
- Every needed register guaranteed to be present

[Diagram: SM with warps, Operand Staging Unit, warp scheduler, RF read, ALUs, LSU, RF write-back, L1, and L2/DRAM; callout: "r1, r2, r3 will be accessed soon"]

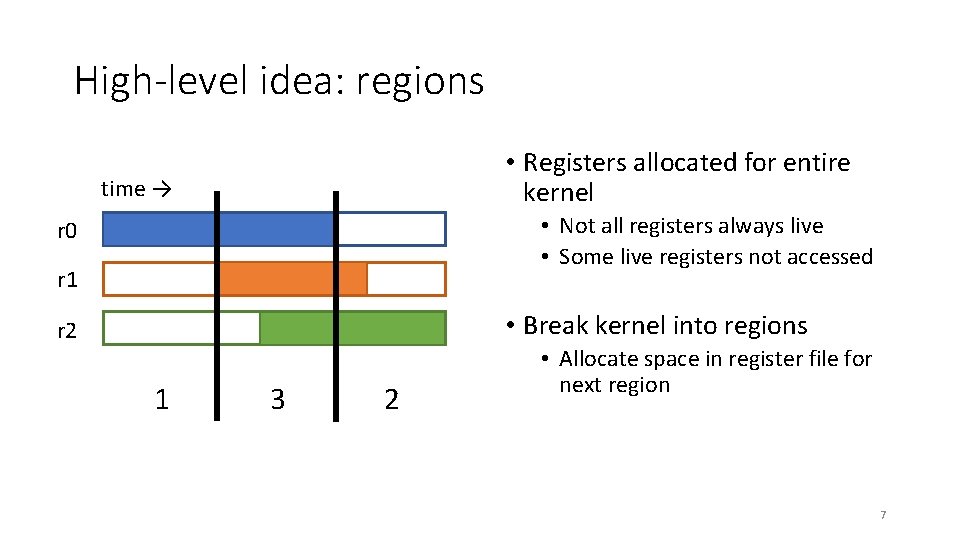

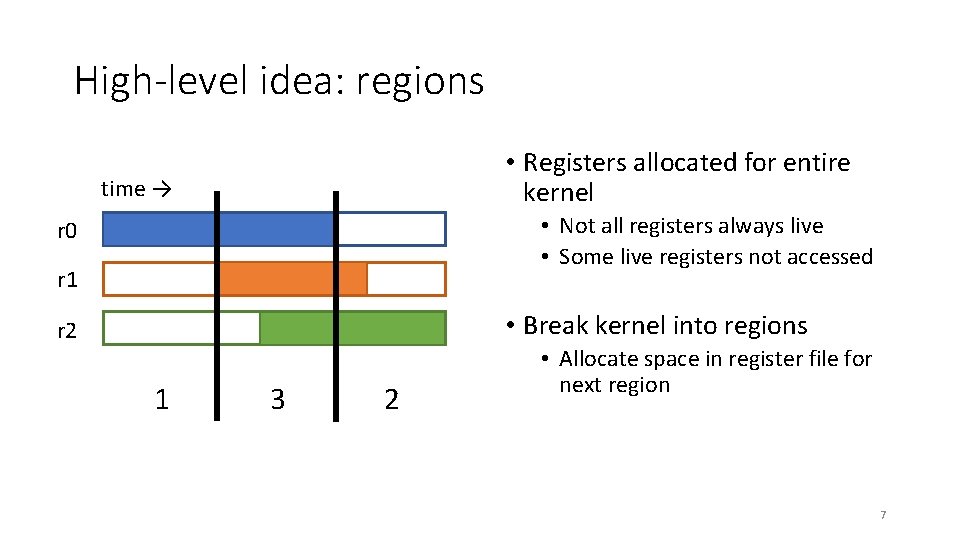

High-level idea: regions

- Registers allocated for entire kernel
  - Not all registers always live
  - Some live registers not accessed
- Break kernel into regions
- Allocate space in register file for next region

[Diagram: lifetimes of registers r0, r1, r2 over time, split into regions 1, 2, 3]

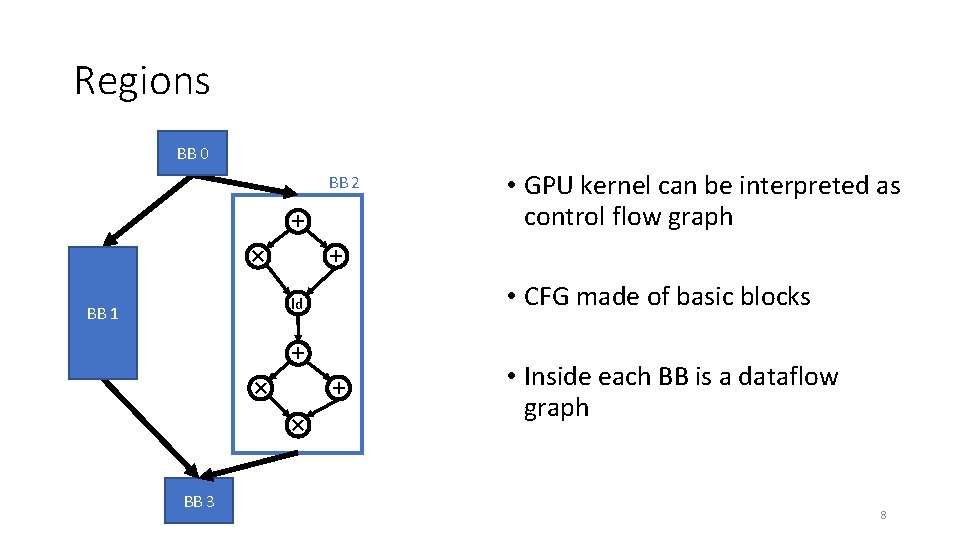

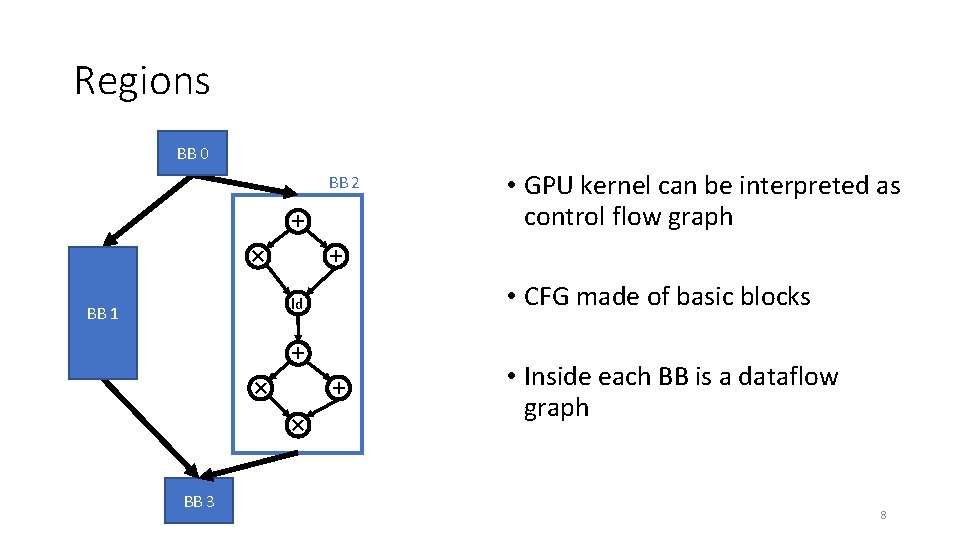

Regions

- GPU kernel can be interpreted as control flow graph (CFG)
- CFG made of basic blocks (BBs)
- Inside each BB is a dataflow graph

[Diagram: control flow graph of BB0 to BB3, each block containing ld, +, and × operations]

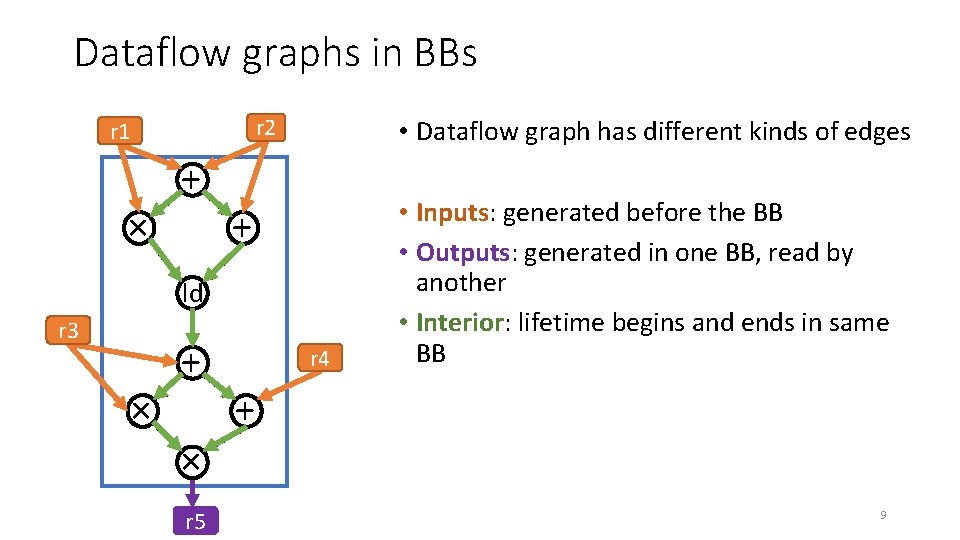

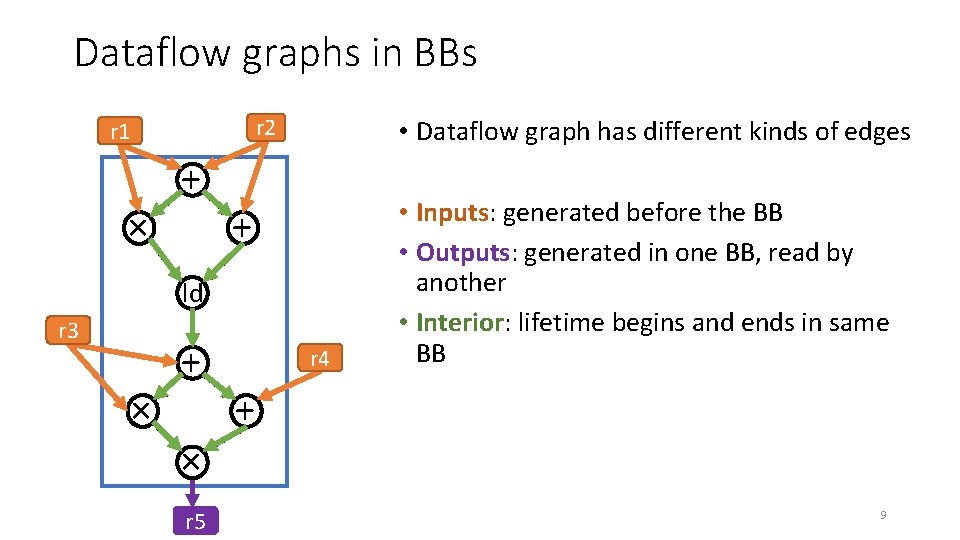

Dataflow graphs in BBs

- Dataflow graph has different kinds of edges
  - Inputs: generated before the BB
  - Outputs: generated in one BB, read by another
  - Interior: lifetime begins and ends in same BB

[Diagram: dataflow graph over registers r1 to r5 with +, ×, and ld nodes]
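The three kinds of edges can be made concrete with a small sketch. It is not taken from the paper: the `Instr` type, the `classify_registers` helper, and the example block are hypothetical, and the set of registers read by later blocks (`live_out`) is assumed to come from a separate liveness analysis.

```python
from typing import NamedTuple, Optional, Tuple

class Instr(NamedTuple):
    dst: Optional[str]        # destination register, or None for stores/branches
    srcs: Tuple[str, ...]     # source registers

def classify_registers(block, live_out):
    """Split the registers of one basic block into inputs, outputs, interiors.

    block:    list of Instr in program order (hypothetical representation)
    live_out: registers read by later blocks (assumed to be given)
    """
    defined = set()
    inputs = set()
    for ins in block:
        for reg in ins.srcs:
            if reg not in defined:
                inputs.add(reg)          # read before any write in this block
        if ins.dst is not None:
            defined.add(ins.dst)
    outputs = defined & live_out         # written here, read by another block
    interiors = defined - live_out       # lifetime begins and ends in this block
    return inputs, outputs, interiors

# Hypothetical block: r3 = r1 * r2; r4 = r3 + r1; r5 = r4 * r4
block = [
    Instr("r3", ("r1", "r2")),
    Instr("r4", ("r3", "r1")),
    Instr("r5", ("r4", "r4")),
]
inputs, outputs, interiors = classify_registers(block, live_out={"r5"})
```

In this sketch a register that is read first and overwritten later can appear both as an input and as an output; whether the real compiler treats that case the same way is not stated on the slide.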

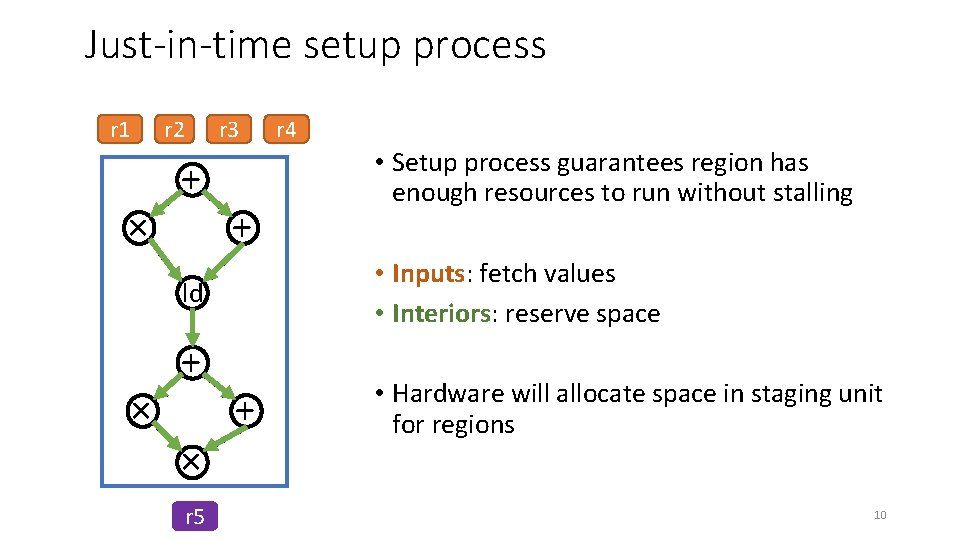

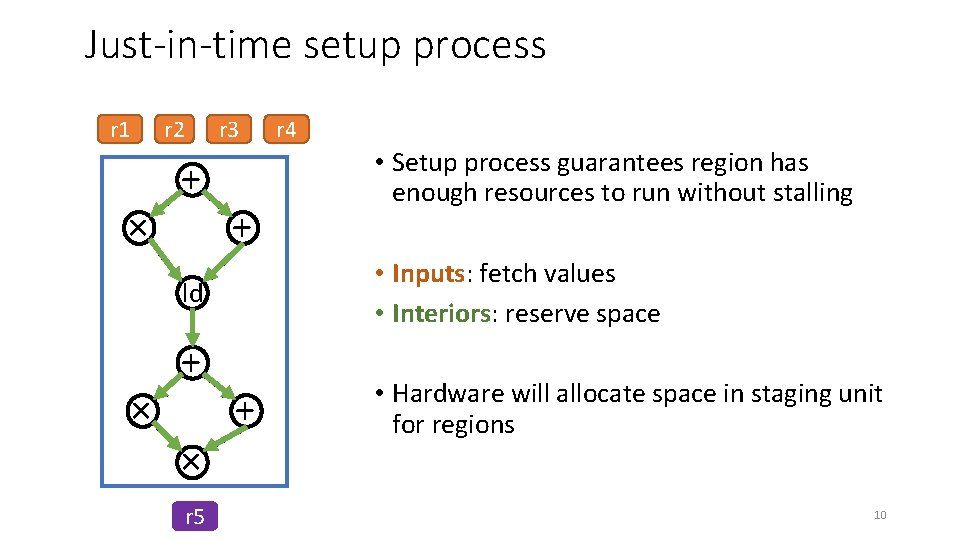

Just-in-time setup process

- Hardware will allocate space in staging unit for regions
- Setup process guarantees region has enough resources to run without stalling
  - Inputs: fetch values
  - Interiors: reserve space

[Diagram: dataflow graph over registers r1 to r5 with +, ×, and ld nodes]

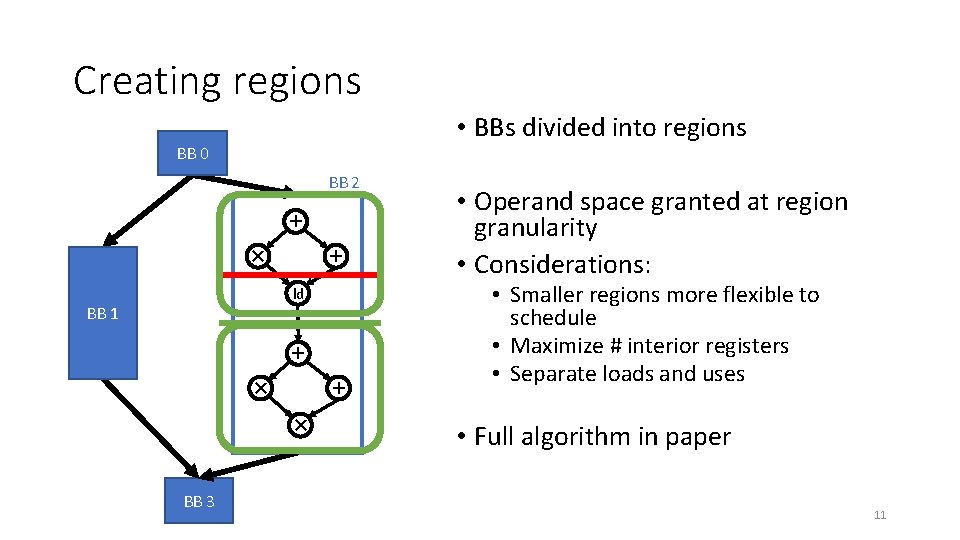

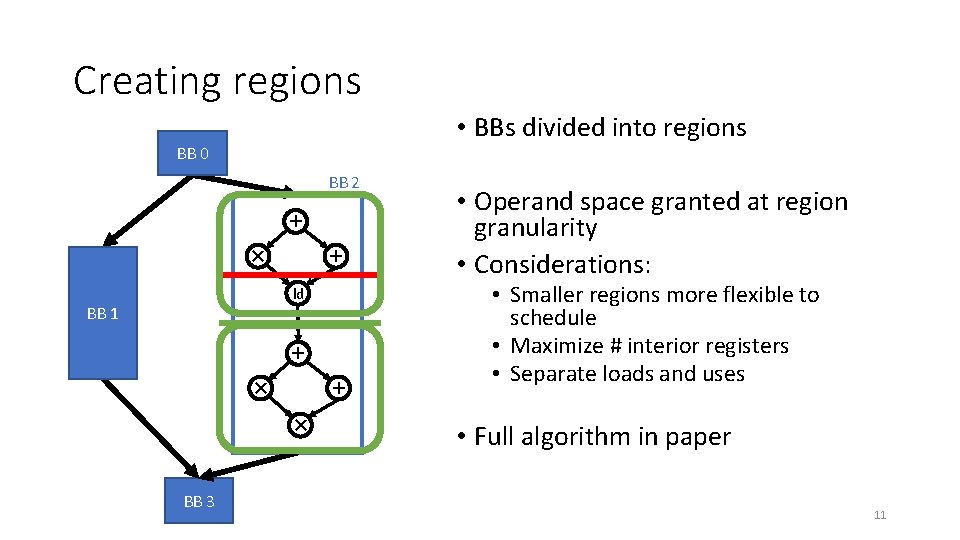

Creating regions

- BBs divided into regions
- Operand space granted at region granularity
- Considerations:
  - Smaller regions more flexible to schedule
  - Maximize # interior registers
  - Separate loads and uses
- Full algorithm in paper

[Diagram: control flow graph of BB0 to BB3 divided into regions]

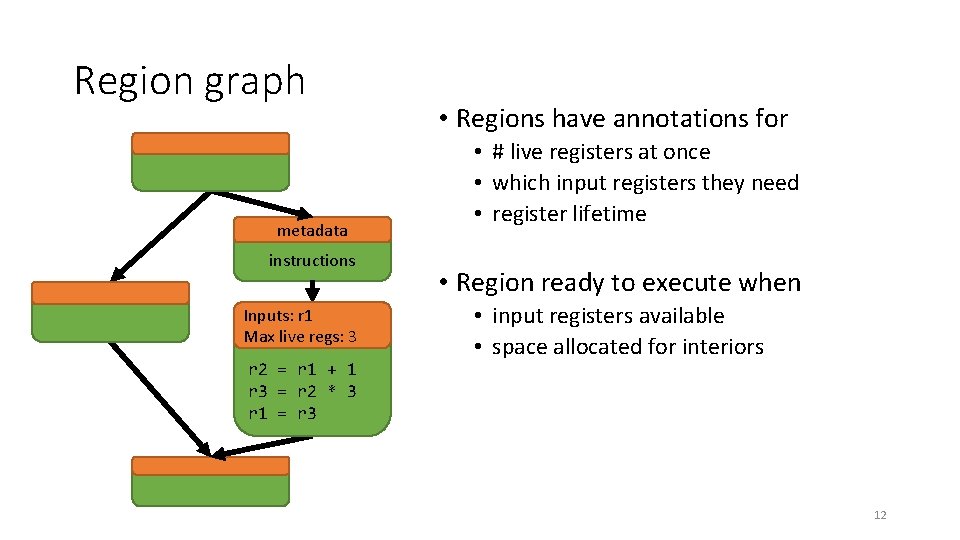

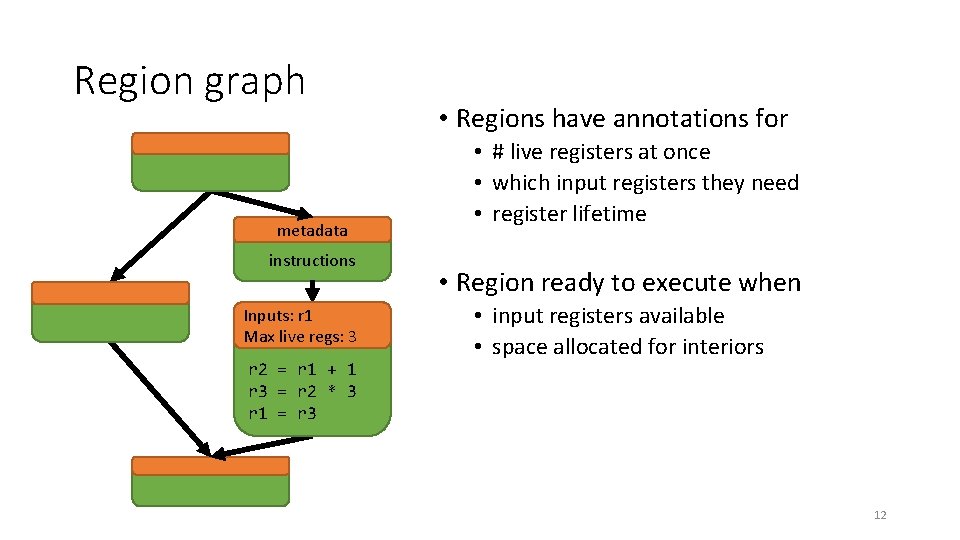

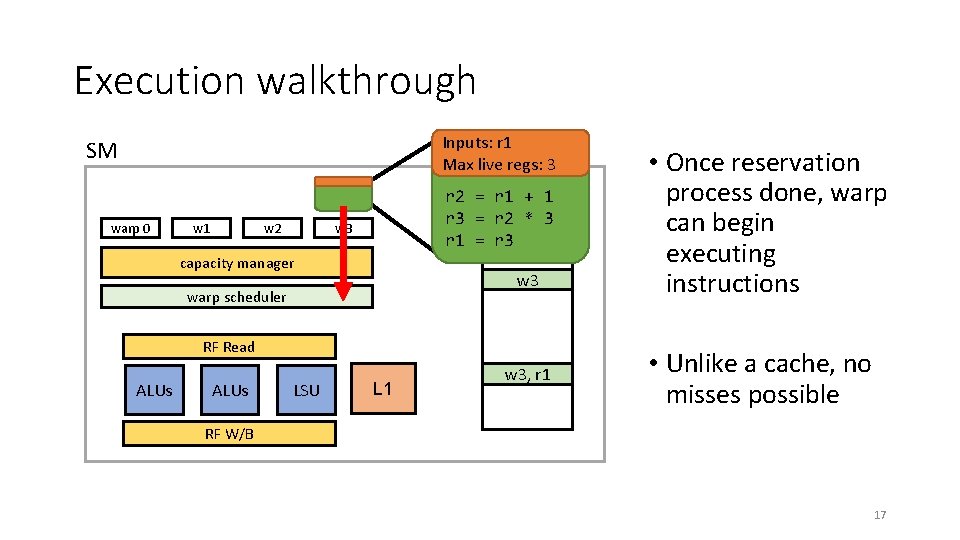

Region graph

- Regions have annotations for
  - # live registers at once
  - which input registers they need
  - register lifetime
- Region ready to execute when
  - input registers available
  - space allocated for interiors

Example region:

- metadata: Inputs: r1; Max live regs: 3
- instructions: r2 = r1 + 1; r3 = r2 * 3; r1 = r3
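As a rough illustration of how such annotations could be derived, the sketch below computes the input list and a live-register bound for the example region on this slide. It assumes a simplified model in which a register occupies a slot from its first to its last access within the region; that model happens to reproduce the slide's "Max live regs: 3", but the paper's actual algorithm may differ. The `region_metadata` helper and the tuple encoding of instructions are hypothetical.

```python
def region_metadata(instrs):
    """Compute input registers and a max-live bound for one region.

    instrs: list of (dst, srcs) tuples in program order; immediates are omitted.
    Assumption: a register holds a slot from its first to its last access.
    """
    written = set()
    inputs = []
    first, last = {}, {}
    for i, (dst, srcs) in enumerate(instrs):
        for reg in srcs:
            if reg not in written and reg not in inputs:
                inputs.append(reg)       # value must exist before the region runs
        written.add(dst)
        for reg in (*srcs, dst):
            first.setdefault(reg, i)     # first access in the region
            last[reg] = i                # most recent access so far
    max_live = max(
        (sum(1 for reg in first if first[reg] <= i <= last[reg])
         for i in range(len(instrs))),
        default=0,
    )
    return {"inputs": inputs, "max_live_regs": max_live}

# Region from the slide: r2 = r1 + 1; r3 = r2 * 3; r1 = r3
region = [("r2", ("r1",)), ("r3", ("r2",)), ("r1", ("r3",))]
meta = region_metadata(region)
# meta == {"inputs": ["r1"], "max_live_regs": 3}
```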

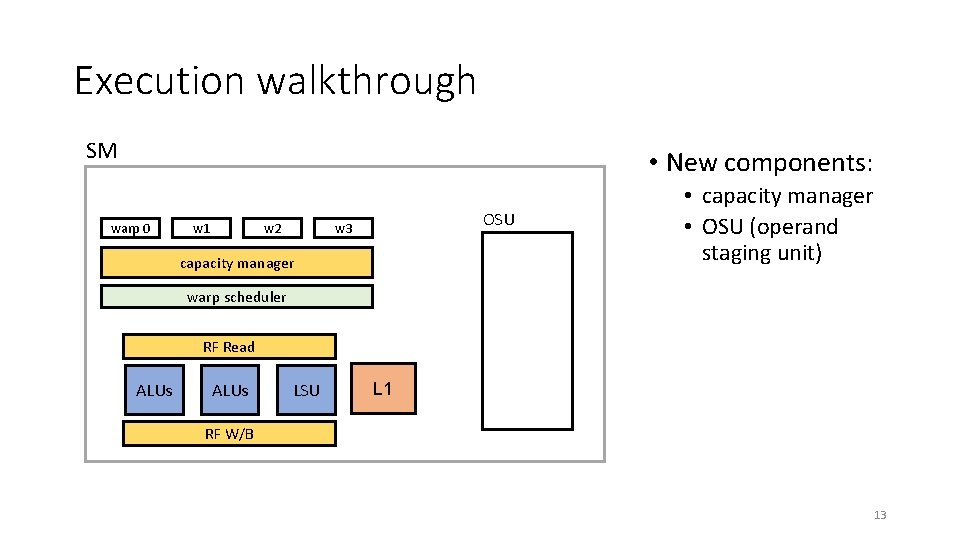

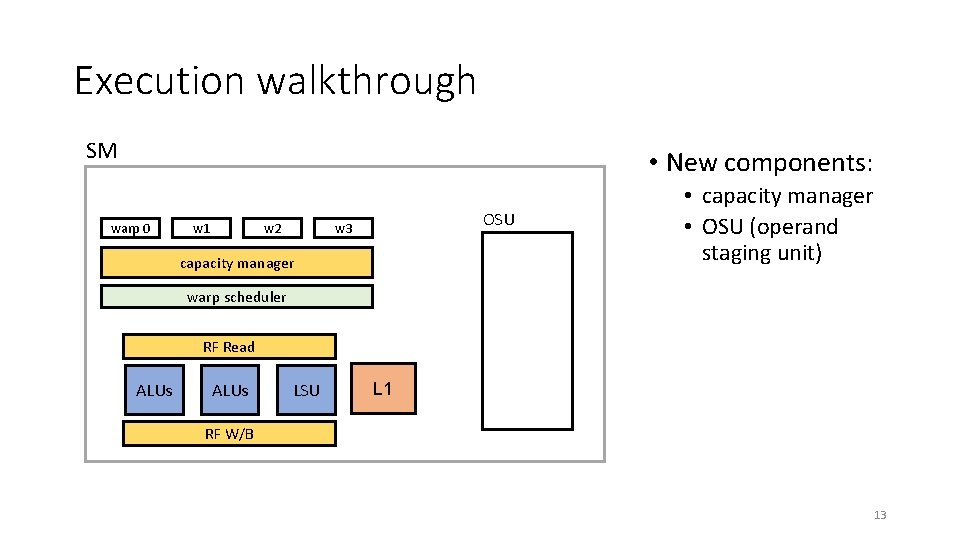

Execution walkthrough

- New components:
  - capacity manager
  - OSU (operand staging unit)

[Diagram: SM with warps (warp 0, w1, w2, w3), OSU, capacity manager, warp scheduler, RF read, ALUs, LSU, L1, and RF write-back]

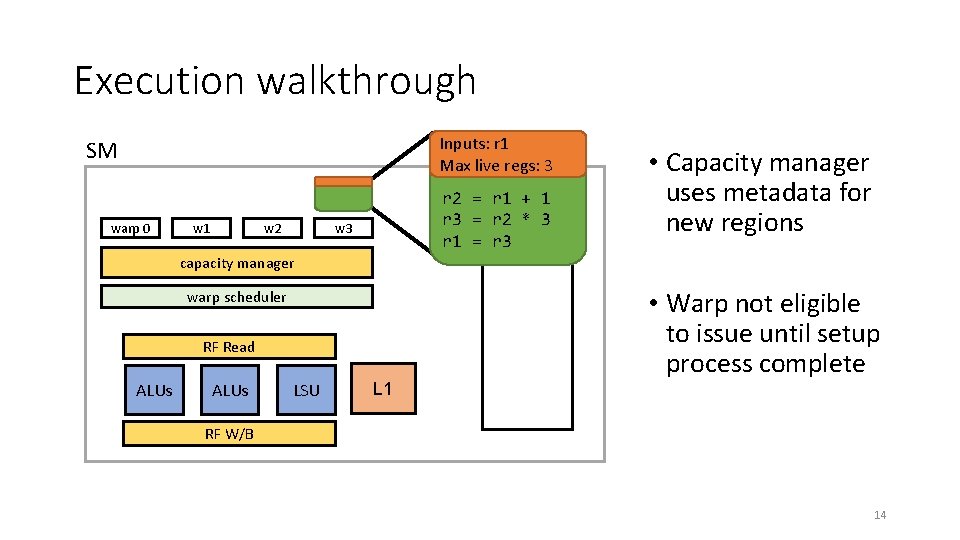

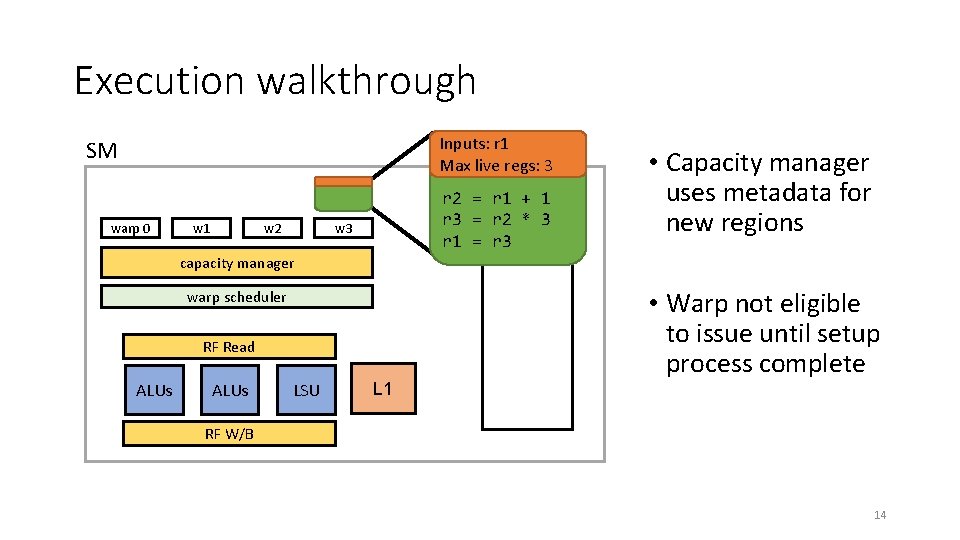

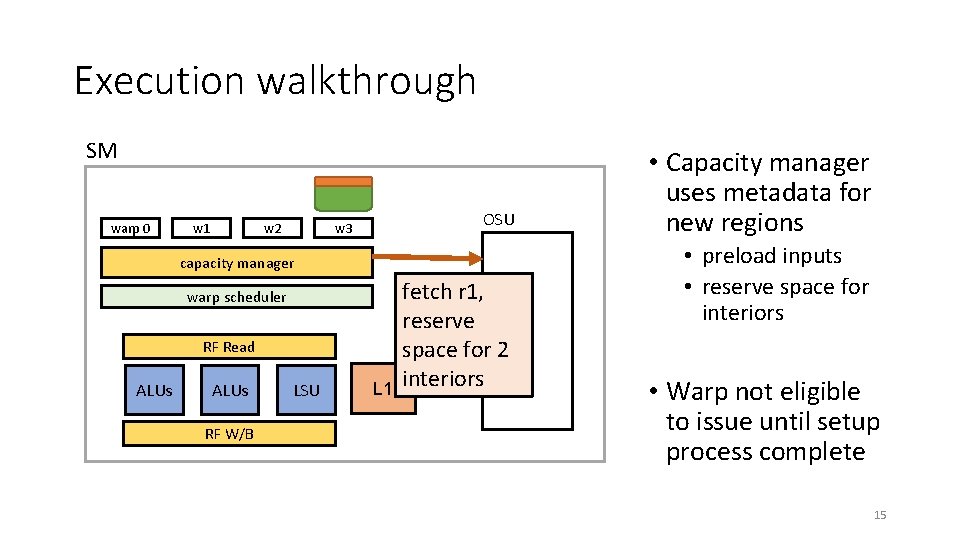

Execution walkthrough

- Capacity manager uses metadata for new regions
- Warp not eligible to issue until setup process complete

[Diagram: SM with the example region (Inputs: r1; Max live regs: 3; r2 = r1 + 1; r3 = r2 * 3; r1 = r3) presented to the capacity manager]

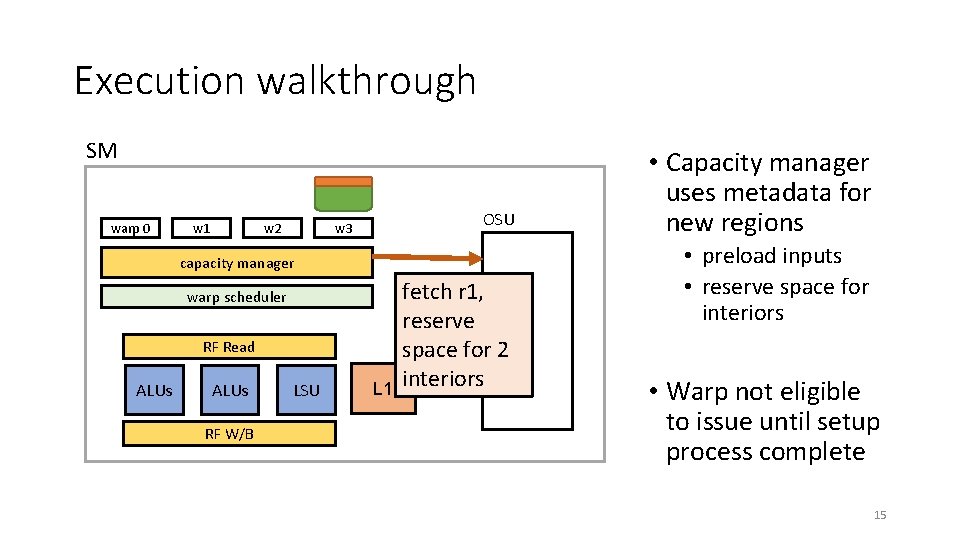

Execution walkthrough

- Capacity manager uses metadata for new regions
  - preload inputs
  - reserve space for interiors
- Warp not eligible to issue until setup process complete

[Diagram: capacity manager action: fetch r1, reserve space for 2 interiors]

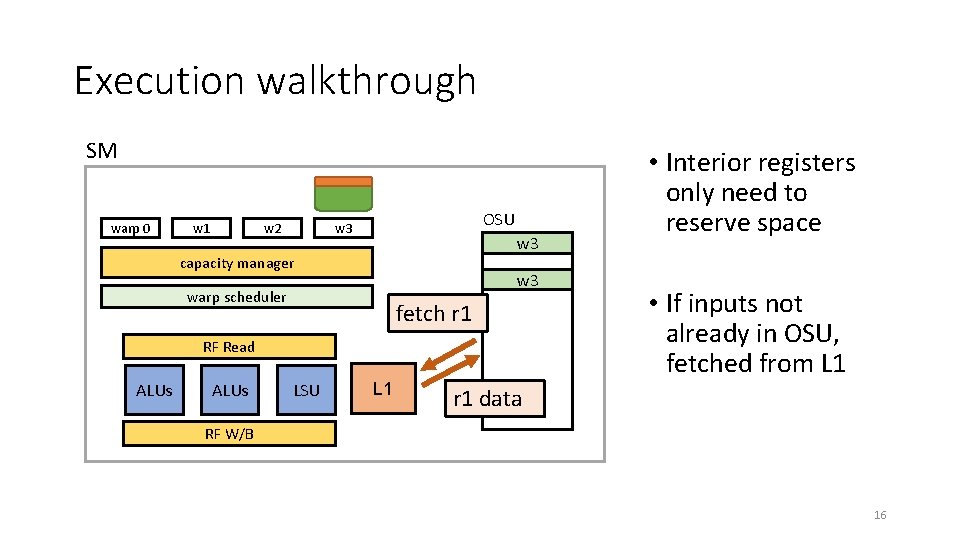

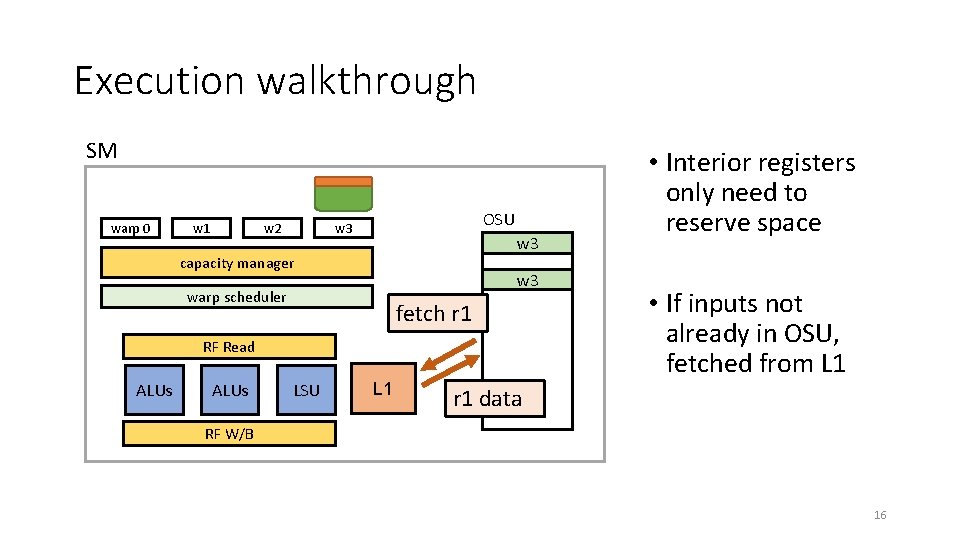

Execution walkthrough

- Interior registers only need to reserve space
- If inputs not already in OSU, fetched from L1

[Diagram: SM with w3 tracked by the OSU and capacity manager; labels: "fetch r1" and "r1 data"]

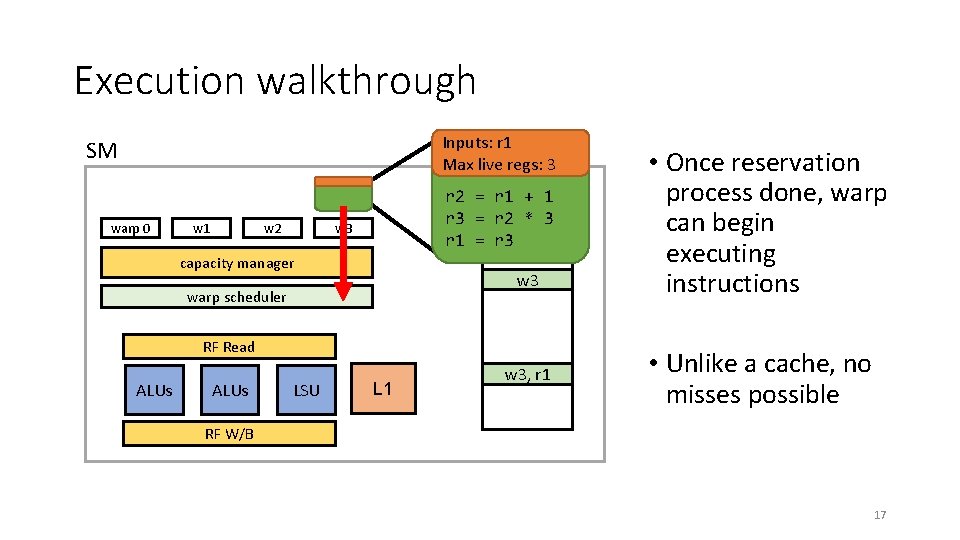

Execution walkthrough

- Once reservation process done, warp can begin executing instructions
- Unlike a cache, no misses possible

[Diagram: SM with the example region (Inputs: r1; Max live regs: 3; r2 = r1 + 1; r3 = r2 * 3; r1 = r3); the OSU now holds "w3, r1"]

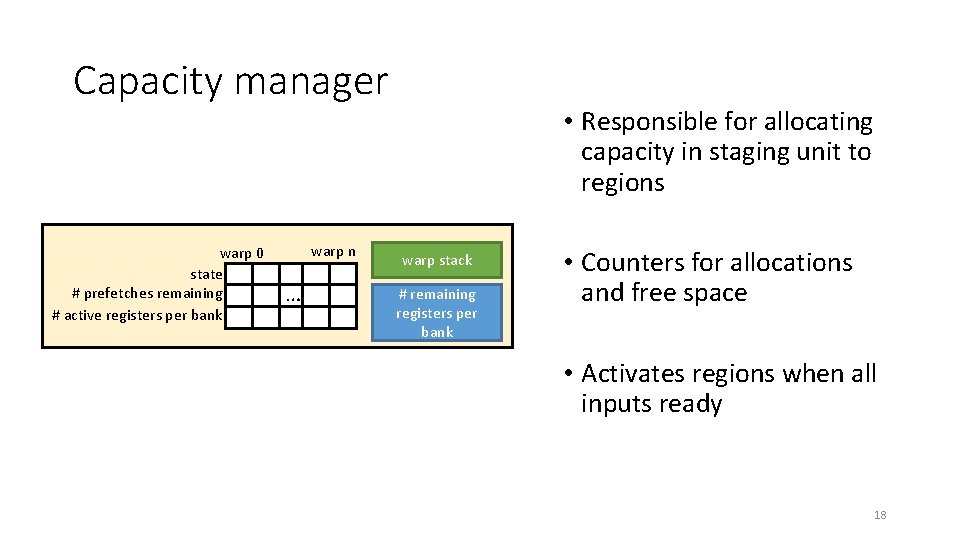

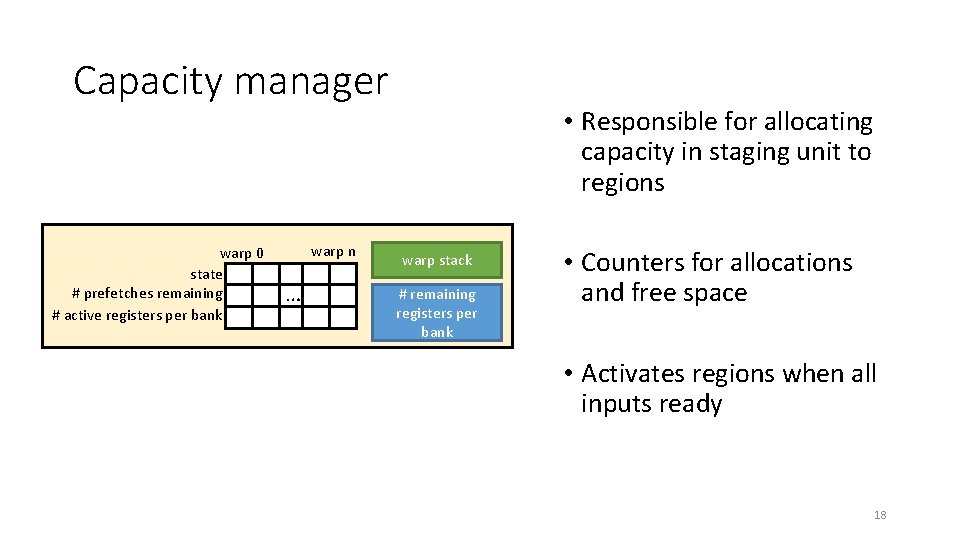

Capacity manager

- Responsible for allocating capacity in staging unit to regions
- Counters for allocations and free space
- Activates regions when all inputs ready

[Diagram: per-warp state (warp 0 … warp n): # prefetches remaining, # active registers per bank; warp stack; # remaining registers per bank]
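The counter-based bookkeeping described here can be sketched in a few lines. This is a simplified model rather than the hardware design: it assumes a single bank, frees a region's whole reservation when the region ends (ignoring registers kept cached for later regions), and all class and method names are hypothetical.

```python
class CapacityManager:
    """Toy model: counters for free space and outstanding input preloads."""

    def __init__(self, total_lines):
        self.free_lines = total_lines
        self.reserved = {}               # warp id -> lines held by its region
        self.prefetches_remaining = {}   # warp id -> input preloads still in flight

    def try_start_region(self, warp, n_inputs, max_live_regs):
        """Reserve space for a region; return False if it does not fit yet."""
        if warp in self.reserved or max_live_regs > self.free_lines:
            return False
        self.free_lines -= max_live_regs
        self.reserved[warp] = max_live_regs
        self.prefetches_remaining[warp] = n_inputs
        return True

    def input_arrived(self, warp):
        """Called when one preloaded input register has reached the staging unit."""
        self.prefetches_remaining[warp] -= 1

    def can_issue(self, warp):
        """A warp is eligible only once space is reserved and all inputs are present."""
        return warp in self.reserved and self.prefetches_remaining[warp] == 0

    def finish_region(self, warp):
        """Release the region's lines (simplification: nothing stays cached)."""
        self.free_lines += self.reserved.pop(warp)
        del self.prefetches_remaining[warp]
```

With the region from the walkthrough (one input, at most three live registers), a warp becomes eligible to issue only after `try_start_region` succeeds and `input_arrived` has been called once; a second region that does not fit waits until `finish_region` frees space.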

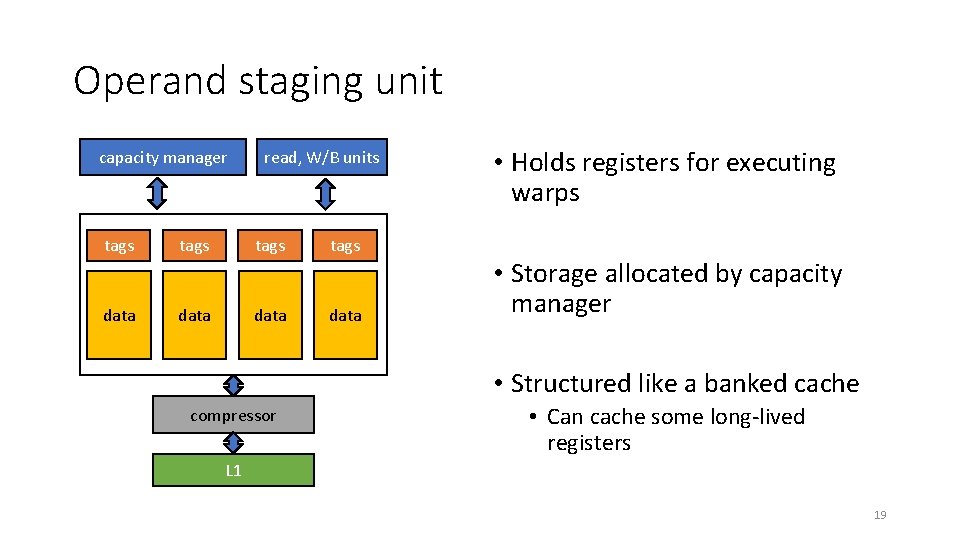

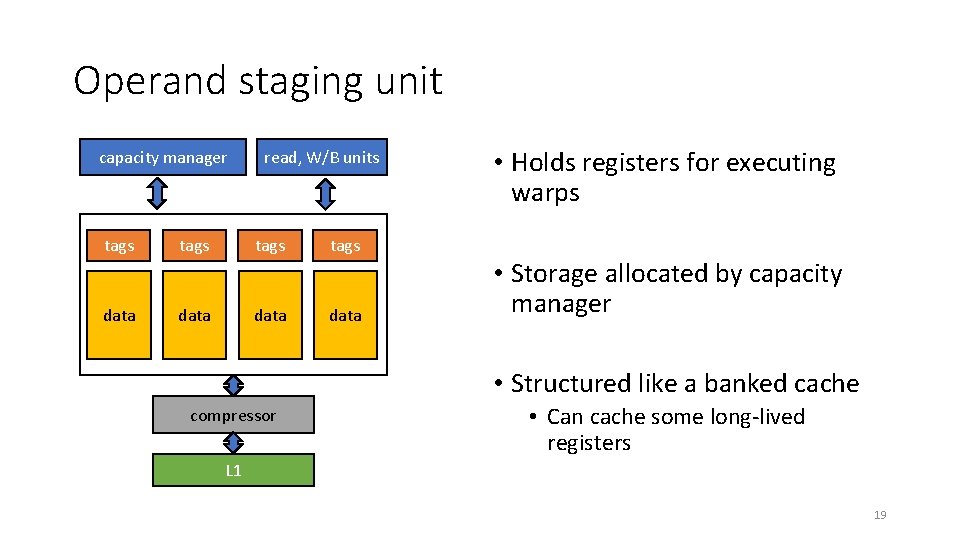

Operand staging unit

- Holds registers for executing warps
- Storage allocated by capacity manager
- Structured like a banked cache
- Can cache some long-lived registers

[Diagram: OSU with tags and data arrays, connected to the capacity manager, read and W/B units, compressor, and L1]

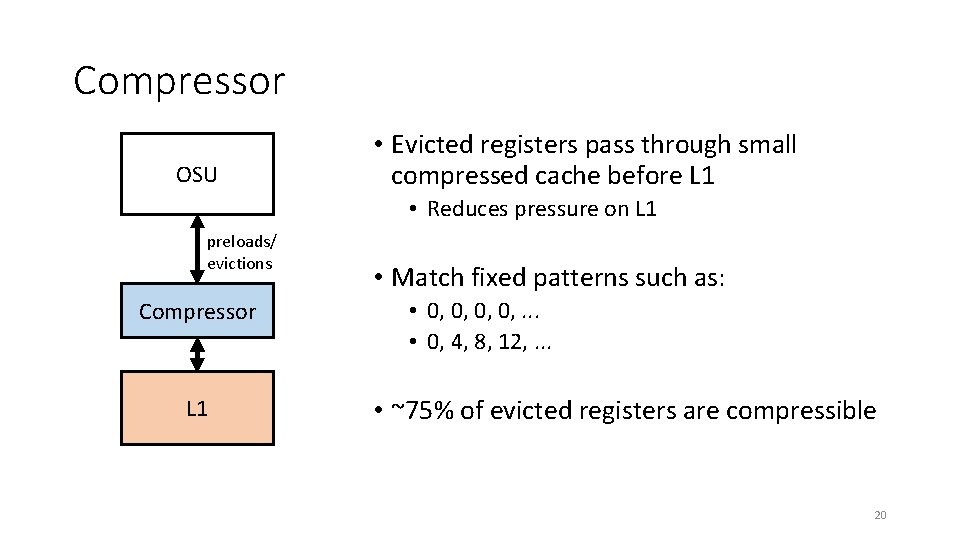

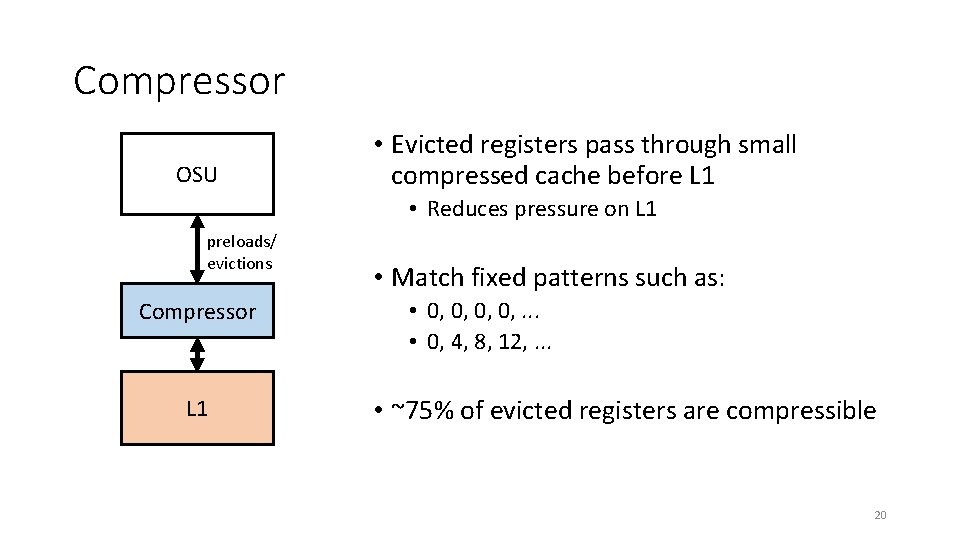

Compressor

- Evicted registers pass through small compressed cache before L1
- Reduces pressure on L1
- Match fixed patterns such as:
  - 0, 0, ...
  - 0, 4, 8, 12, ...
- ~75% of evicted registers are compressible

[Diagram: OSU, Compressor, and L1, with preloads/evictions flowing between them]
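The two example patterns are both instances of a base-plus-stride sequence across the lanes of a warp, so one way to picture the matching step is the sketch below. Treating the fixed patterns as a general base/stride check is an assumption made for illustration; the slide only lists the two examples, and the function names and the 32-lane default are hypothetical.

```python
def compress_register(values):
    """Return a compact encoding if the per-lane values match base + lane * stride.

    Returns None when the register does not match (it would stay uncompressed).
    """
    if not values:
        return None
    base = values[0]
    stride = values[1] - values[0] if len(values) > 1 else 0
    if all(v == base + lane * stride for lane, v in enumerate(values)):
        return ("base_stride", base, stride)
    return None

def decompress_register(encoded, lanes=32):
    """Rebuild the per-lane values from a base/stride encoding."""
    _, base, stride = encoded
    return [base + lane * stride for lane in range(lanes)]

zeros = compress_register([0] * 32)                    # pattern 0, 0, ...
offsets = compress_register(list(range(0, 128, 4)))    # pattern 0, 4, 8, 12, ...
irregular = compress_register([0, 4, 9, 12])           # no match
```

Under this assumption a matching 32-lane register shrinks to two integers, which suggests why a small compressed buffer could absorb many evictions before they reach L1.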

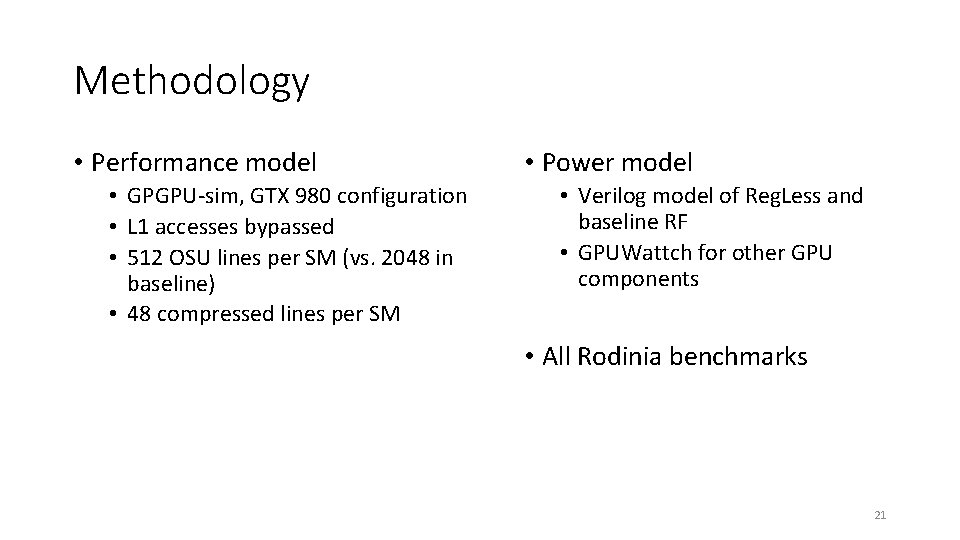

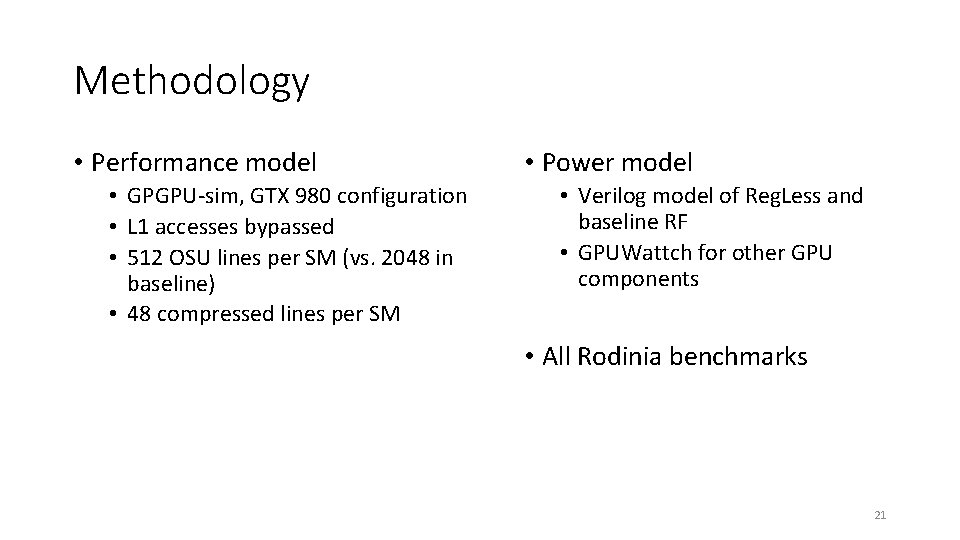

Methodology

- Performance model
  - GPGPU-Sim, GTX 980 configuration
  - L1 accesses bypassed
  - 512 OSU lines per SM (vs. 2048 in baseline)
  - 48 compressed lines per SM
- Power model
  - Verilog model of RegLess and baseline RF
  - GPUWattch for other GPU components
- All Rodinia benchmarks
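To put the line counts in perspective, the short calculation below converts them to bytes. It assumes that the 2048 baseline lines cover the whole 256 KB per-SM register file quoted earlier for the GTX 980, so that one line holds one warp-wide register; the size of a compressed line is not given, so it is left out.

```python
RF_BYTES_PER_SM = 256 * 1024   # GTX 980 register file per SM (from the RF energy slide)
BASELINE_LINES = 2048          # baseline RF lines per SM
OSU_LINES = 512                # staging unit lines per SM

# Assumption: baseline lines tile the full RF, so each line is one warp-wide register.
line_bytes = RF_BYTES_PER_SM // BASELINE_LINES   # 128 bytes = 32 lanes x 4 bytes
osu_bytes = OSU_LINES * line_bytes               # 65536 bytes = 64 KB
osu_fraction = OSU_LINES / BASELINE_LINES        # 0.25 of baseline capacity
```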

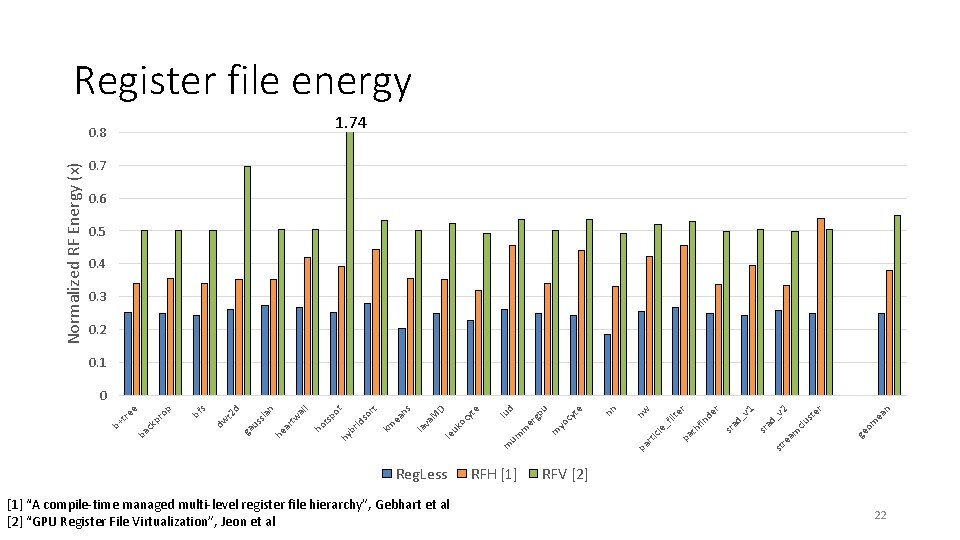

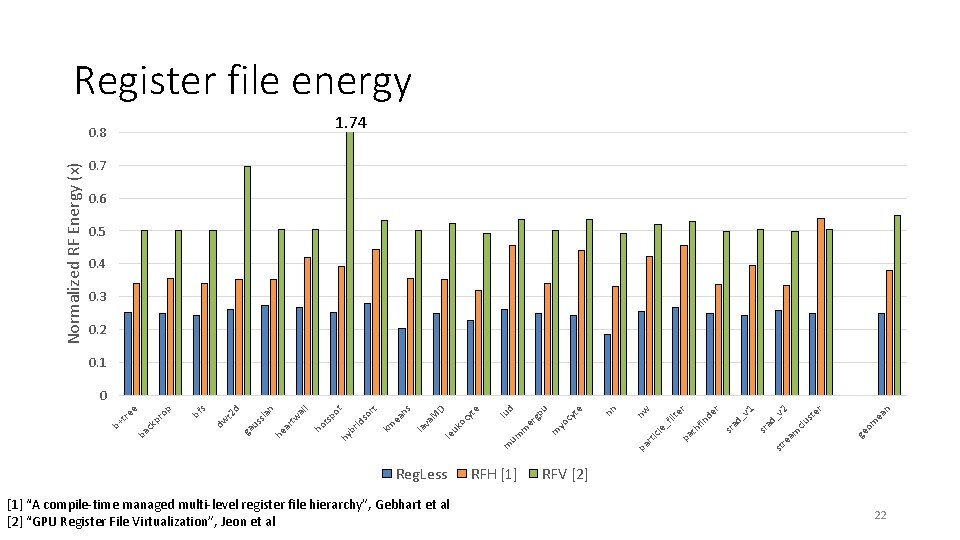

Register file energy

[Chart: normalized RF energy (x) per benchmark plus geomean, y-axis 0 to 0.8 with one off-scale bar labeled 1.74; series: RegLess, RFH [1], RFV [2]]

[1] "A compile-time managed multi-level register file hierarchy", Gebhart et al.

[2] "GPU Register File Virtualization", Jeon et al.

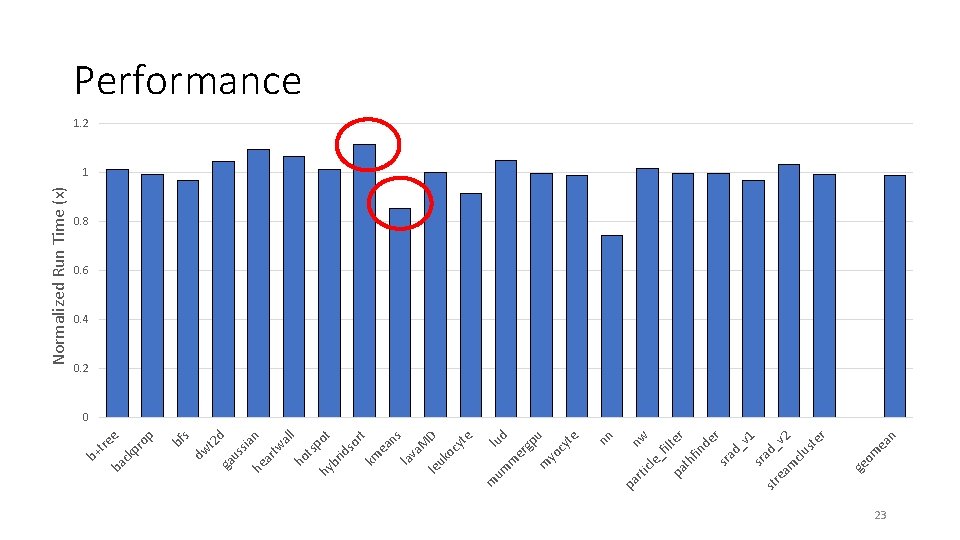

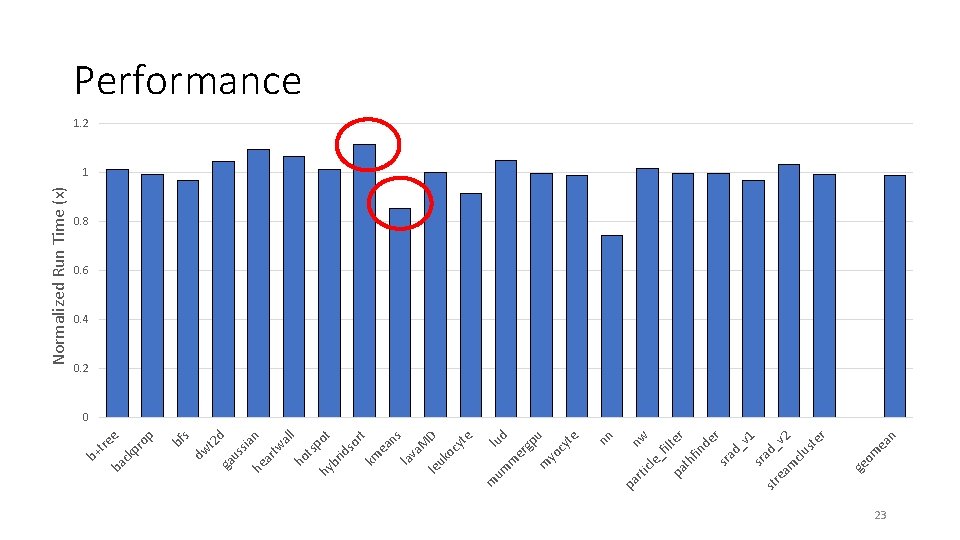

Performance

[Chart: normalized run time (x) per benchmark plus geomean, y-axis 0 to 1.2]

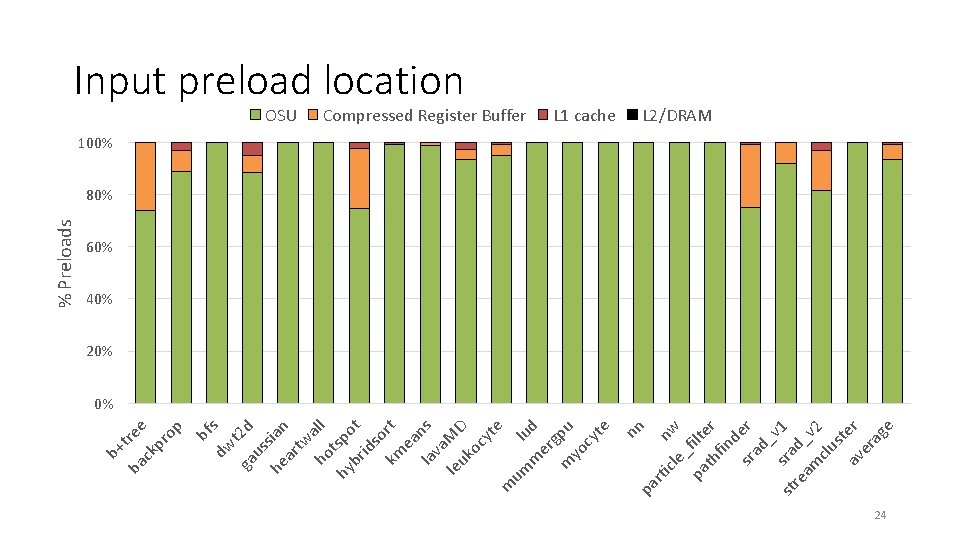

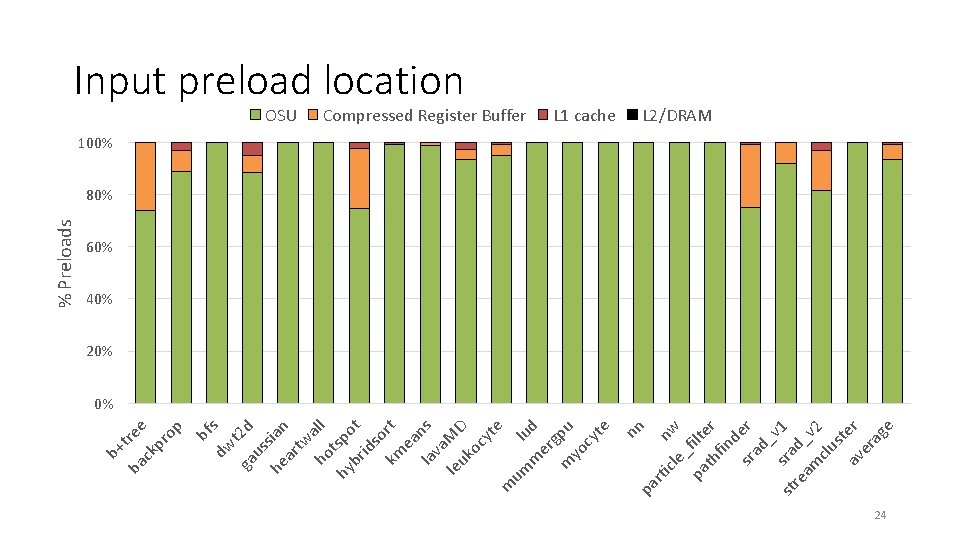

Input preload location

[Chart: % of preloads (0% to 100%) per benchmark plus average, broken down by source: OSU, Compressed Register Buffer, L1 cache, L2/DRAM]

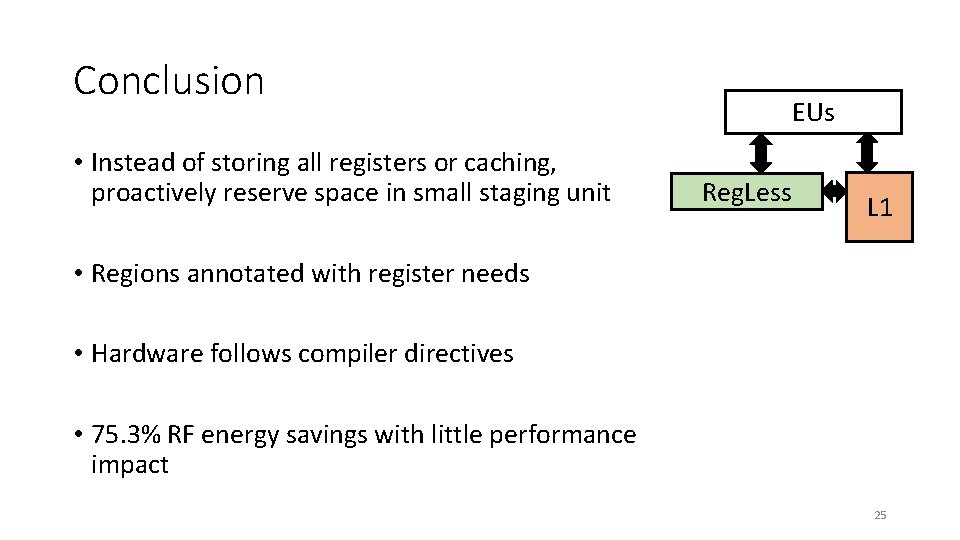

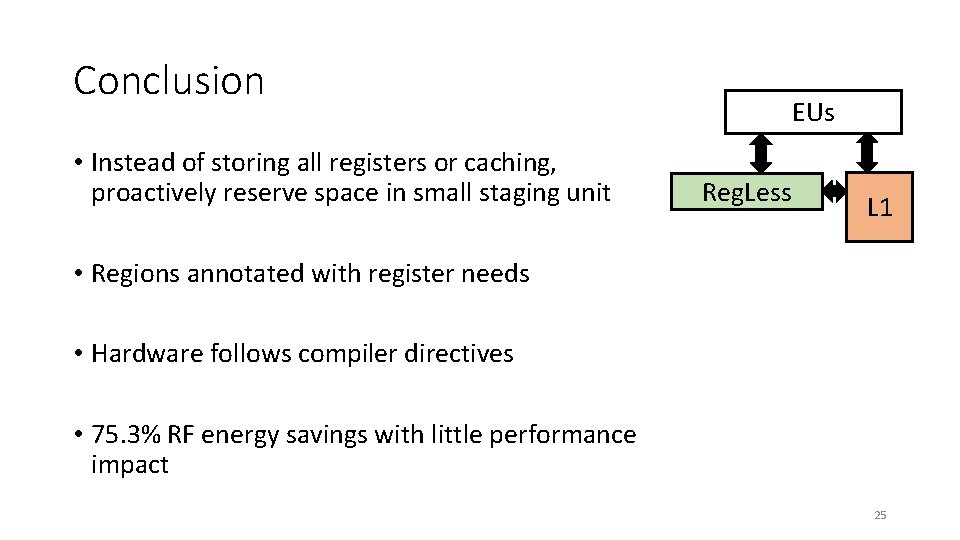

Conclusion

- Instead of storing all registers or caching, proactively reserve space in small staging unit
- Regions annotated with register needs
- Hardware follows compiler directives
- 75.3% RF energy savings with little performance impact

[Diagram: EUs, RegLess, L1]
