RainForest: A Framework for Fast Decision Tree Construction of Large Datasets


RainForest: A Framework for Fast Decision Tree Construction of Large Datasets
J. Gehrke, R. Ramakrishnan, V. Ganti
Dept. of Computer Sciences, University of Wisconsin-Madison
Presented by: Hui Yang, April 18, 2001

Introduction to Classification
- An important data mining problem
- Input: a database of training records
  - Class label attribute
  - Predictor attributes
- Goal: build a concise model of the distribution of the class label in terms of the predictor attributes
- Applications: scientific experiments, medical diagnosis, fraud detection, etc.

Decision Tree: A Classification Model
- One of the most attractive classification models
- There are a large number of algorithms for constructing decision trees
  - E.g., SLIQ, CART, C4.5, SPRINT
  - Most are main-memory algorithms
  - There is a tradeoff between supporting large databases, performance, and constructing more accurate decision trees

Motivation of RainForest
- Develop a unifying framework that can be applied to most decision tree algorithms and yields a scalable version of each algorithm without modifying its results
- Separate the scalability aspects of these algorithms from the central features that determine the quality of the decision trees

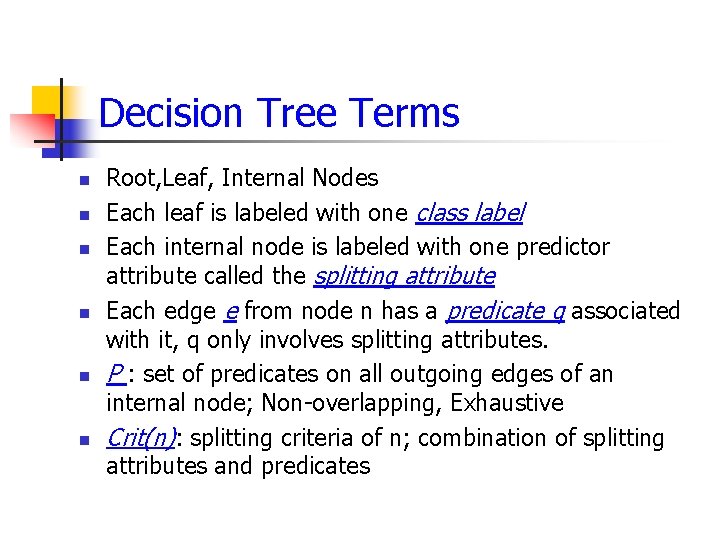

Decision Tree Terms
- Root, leaf, and internal nodes
- Each leaf is labeled with one class label
- Each internal node is labeled with one predictor attribute, called the splitting attribute
- Each edge e out of a node n has a predicate q associated with it; q involves only the splitting attribute of n
- P: the set of predicates on all outgoing edges of an internal node; these predicates are non-overlapping and exhaustive
- crit(n): the splitting criterion of n, i.e., the combination of its splitting attribute and predicates
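The terms above map onto a small data structure. The sketch below is illustrative only: `Node`, `classify`, the attribute `age`, the threshold 30, and the labels `high`/`low` are all hypothetical names chosen for this example, not part of the RainForest paper. Leaves carry a class label, internal nodes carry a splitting attribute, and each outgoing edge pairs a predicate with a child; because the predicate set P is non-overlapping and exhaustive, exactly one edge matches any record.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional, Tuple

Record = Dict[str, Any]
Predicate = Callable[[Any], bool]  # q: tests one value of the splitting attribute


@dataclass
class Node:
    # Leaf: class_label is set and edges is empty.
    class_label: Optional[str] = None
    # Internal node: splitting attribute plus one (predicate, child) pair per outgoing edge.
    splitting_attribute: Optional[str] = None
    edges: List[Tuple[Predicate, "Node"]] = field(default_factory=list)

    def is_leaf(self) -> bool:
        return not self.edges


def classify(node: Node, record: Record) -> Optional[str]:
    """Follow the unique edge whose predicate holds until a leaf is reached."""
    while not node.is_leaf():
        value = record[node.splitting_attribute]
        matches = [child for q, child in node.edges if q(value)]
        # P is non-overlapping and exhaustive, so exactly one edge must match.
        if len(matches) != 1:
            raise ValueError("edge predicates must be non-overlapping and exhaustive")
        node = matches[0]
    return node.class_label


# Hypothetical tree: split on "age" at 30 (attribute, threshold and labels are illustrative).
tree = Node(
    splitting_attribute="age",
    edges=[
        (lambda v: v < 30, Node(class_label="high")),
        (lambda v: v >= 30, Node(class_label="low")),
    ],
)
print(classify(tree, {"age": 25}), classify(tree, {"age": 40}))  # high low
```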

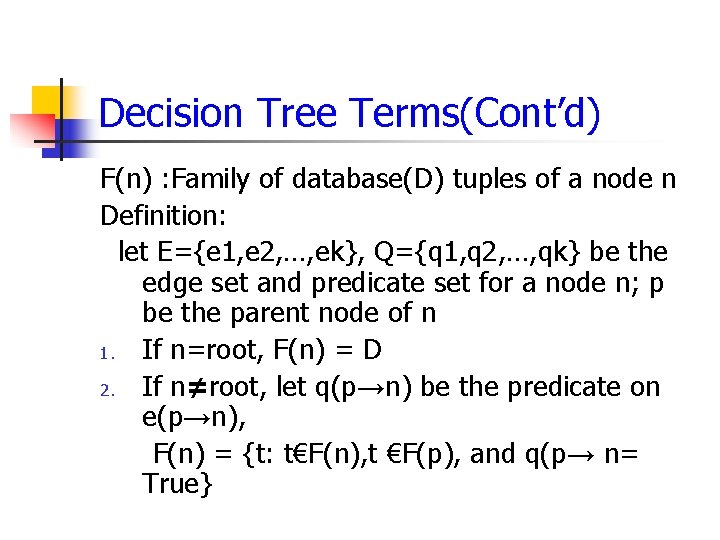

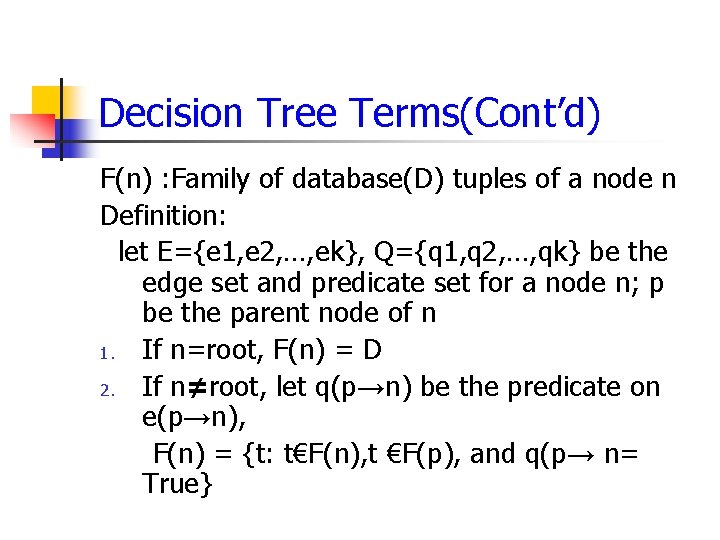

Decision Tree Terms (Cont'd)
- F(n): the family of node n, i.e., the set of tuples of the database D that reach n
- Definition: let $E = \{e_1, e_2, \dots, e_k\}$ and $Q = \{q_1, q_2, \dots, q_k\}$ be the edge set and predicate set of a node n, and let p be the parent node of n.
  1. If n = root, then $F(n) = D$.
  2. If n ≠ root, let $q_{p \to n}$ be the predicate on the edge $e_{p \to n}$; then $F(n) = \{\, t \mid t \in F(p) \text{ and } q_{p \to n}(t) = \text{True} \,\}$.
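The recursive definition of F(n) can be read as repeated filtering: start from D at the root and, along the path to n, keep only the tuples that satisfy each edge predicate. In this minimal sketch the function `family`, the sample database, and its attributes are hypothetical; a path is assumed to be given as a list of predicates over whole records.

```python
from typing import Any, Callable, Dict, List

Record = Dict[str, Any]
RecordPredicate = Callable[[Record], bool]


def family(database: List[Record], path: List[RecordPredicate]) -> List[Record]:
    """Return F(n) for the node reached by following `path` from the root.

    F(root) = D; for every further edge p -> n, keep only the tuples of F(p)
    for which the edge predicate q(p -> n) evaluates to True.
    """
    current = list(database)  # F(root) = D
    for q in path:
        current = [t for t in current if q(t)]  # F(n) = {t in F(p) : q(t) is True}
    return current


# Hypothetical training database and root-to-node path.
D = [
    {"age": 25, "car": "sports", "risk": "high"},
    {"age": 45, "car": "family", "risk": "low"},
    {"age": 35, "car": "sports", "risk": "high"},
    {"age": 60, "car": "truck", "risk": "low"},
]
path = [lambda t: t["age"] >= 30, lambda t: t["car"] == "sports"]
print(family(D, path))  # [{'age': 35, 'car': 'sports', 'risk': 'high'}]
```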

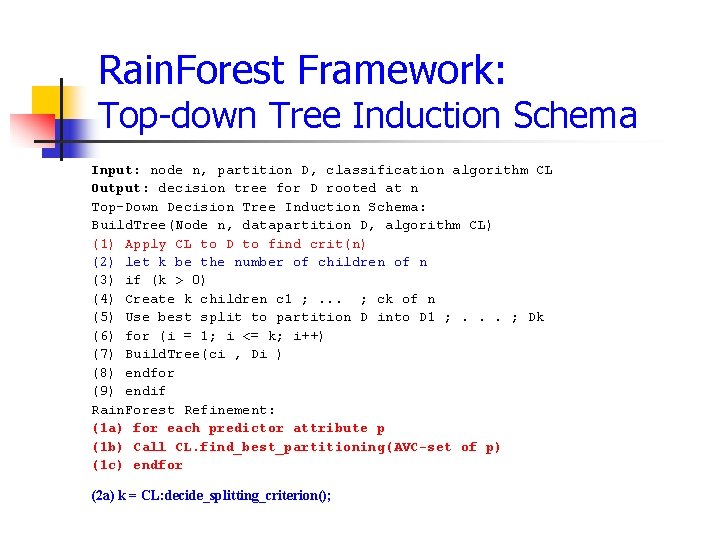

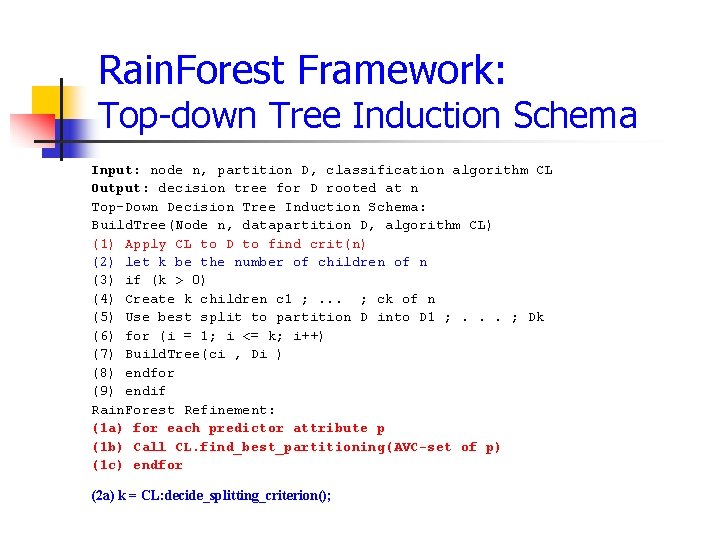

RainForest Framework: Top-Down Tree Induction Schema
- Input: node n, partition D, classification algorithm CL
- Output: decision tree for D rooted at n

Top-down decision tree induction schema:

BuildTree(Node n, data partition D, algorithm CL)
(1) Apply CL to D to find crit(n)
(2) Let k be the number of children of n
(3) if (k > 0)
(4)   Create k children c_1, ..., c_k of n
(5)   Use the best split to partition D into D_1, ..., D_k
(6)   for (i = 1; i <= k; i++)
(7)     BuildTree(c_i, D_i, CL)
(8)   endfor
(9) endif

RainForest refinement of steps (1) and (2):
(1a) for each predictor attribute p
(1b)   Call CL.find_best_partitioning(AVC-set of p)
(1c) endfor
(2a) k = CL.decide_splitting_criterion();
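The schema with the RainForest refinement can be sketched in Python. Everything concrete here is an assumption for illustration: `GiniCL` is a hypothetical stand-in for CL that makes one multiway split on a categorical attribute and scores it by weighted Gini impurity, and the tree is returned as nested dicts. The point the sketch tries to show is that CL only ever sees AVC-sets, never the raw records, when choosing crit(n).

```python
from collections import Counter, defaultdict
from typing import Any, Dict, List, Optional

Record = Dict[str, Any]


def gini(counts: Counter) -> float:
    n = sum(counts.values())
    return 1.0 - sum((c / n) ** 2 for c in counts.values())


class GiniCL:
    """Hypothetical CL: multiway split on one categorical attribute, chosen by weighted Gini."""

    def __init__(self) -> None:
        self.best: Optional[tuple] = None  # (weighted gini, attribute, sorted values)

    def find_best_partitioning(self, attr: str, avc: Dict[Any, Counter]) -> None:
        total: Counter = Counter()
        for counts in avc.values():
            total.update(counts)
        n = sum(total.values())
        score = sum(sum(c.values()) / n * gini(c) for c in avc.values())
        # Accept a split only if it has >1 child and strictly reduces impurity.
        if len(avc) > 1 and score < gini(total) and (self.best is None or score < self.best[0]):
            self.best = (score, attr, sorted(avc))

    def decide_splitting_criterion(self) -> int:
        return 0 if self.best is None else len(self.best[2])  # k = number of children


def build_tree(D: List[Record], attrs: List[str], label: str, CL: type = GiniCL) -> dict:
    cl = CL()
    # (1a)-(1c): build the AVC-set of each predictor attribute and pass it to CL.
    for a in attrs:
        avc: Dict[Any, Counter] = defaultdict(Counter)
        for t in D:
            avc[t[a]][t[label]] += 1
        cl.find_best_partitioning(a, avc)
    # (2a): CL decides the splitting criterion.
    k = cl.decide_splitting_criterion()
    if k == 0:  # leaf: label it with the majority class of F(n)
        return {"leaf": Counter(t[label] for t in D).most_common(1)[0][0]}
    _, attr, values = cl.best
    # (4)-(8): one child per attribute value; partition D and recurse.
    return {
        "split": attr,
        "children": {v: build_tree([t for t in D if t[attr] == v], attrs, label, CL) for v in values},
    }


D = [
    {"car": "sports", "age": "young", "risk": "high"},
    {"car": "sports", "age": "old", "risk": "high"},
    {"car": "family", "age": "young", "risk": "low"},
    {"car": "family", "age": "old", "risk": "low"},
    {"car": "truck", "age": "young", "risk": "low"},
]
tree = build_tree(D, ["car", "age"], "risk")
print(tree)
```

Swapping in a different `CL` class with the same two methods changes the split selection without touching `build_tree`, which mirrors the framework's separation of scalability from split quality.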

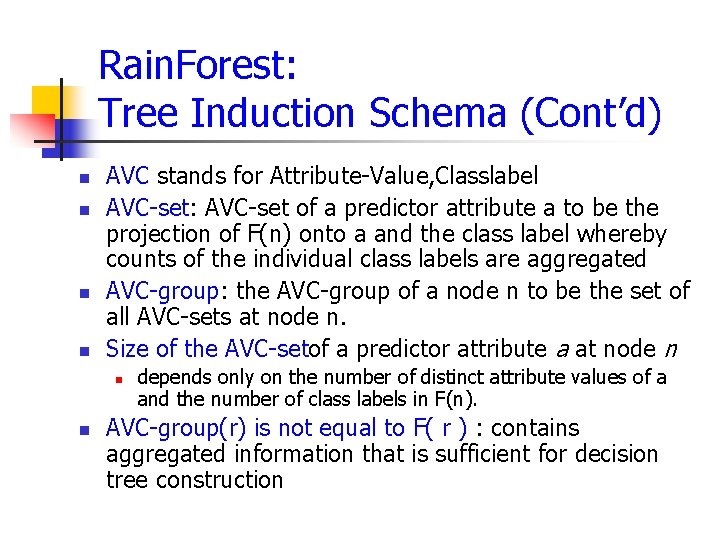

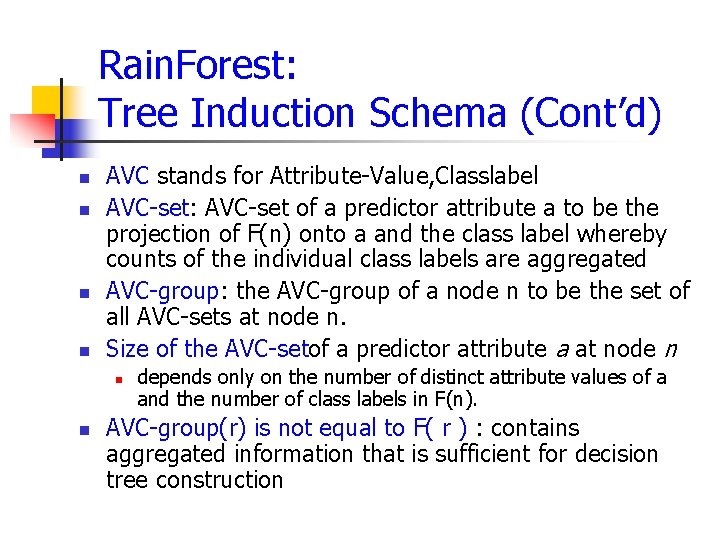

RainForest: Tree Induction Schema (Cont'd)
- AVC stands for Attribute-Value, Classlabel
- AVC-set: the AVC-set of a predictor attribute a at node n is the projection of F(n) onto a and the class label, where the counts of the individual class labels are aggregated
- AVC-group: the AVC-group of a node n is the set of all AVC-sets at node n
- The size of the AVC-set of a predictor attribute a at node n depends only on the number of distinct values of a and the number of class labels in F(n)
- AVC-group(r) is not equal to F(r): it contains only aggregated information, but that information is sufficient for decision tree construction
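A minimal sketch of AVC-set and AVC-group construction, assuming records are Python dicts and representing an AVC-set as a `Counter` keyed by (attribute value, class label) pairs. The function names and sample data are hypothetical. Repeating F(n) a thousand times changes the counts but not the number of entries, which is the size property stated above; `class_counts` shows that the class distribution of F(n) can be recovered from an AVC-set alone.

```python
from collections import Counter
from typing import Any, Dict, List

Record = Dict[str, Any]


def avc_set(family: List[Record], attr: str, label: str) -> Counter:
    """AVC-set of `attr`: counts of (attribute value, class label) pairs in F(n)."""
    return Counter((t[attr], t[label]) for t in family)


def avc_group(family: List[Record], predictors: List[str], label: str) -> Dict[str, Counter]:
    """AVC-group of a node: the AVC-sets of all predictor attributes."""
    return {a: avc_set(family, a, label) for a in predictors}


def class_counts(avc: Counter) -> Counter:
    """Class-label distribution of F(n), recovered from any single AVC-set."""
    totals: Counter = Counter()
    for (_value, label), count in avc.items():
        totals[label] += count
    return totals


F = [
    {"age": "young", "car": "sports", "risk": "high"},
    {"age": "young", "car": "family", "risk": "high"},
    {"age": "old", "car": "sports", "risk": "high"},
    {"age": "old", "car": "family", "risk": "low"},
    {"age": "old", "car": "family", "risk": "low"},
]
group = avc_group(F, ["age", "car"], "risk")
big = F * 1000  # 5000 tuples, same distinct values and class labels
print(len(avc_set(big, "age", "risk")))  # 3 entries, same as for F
```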

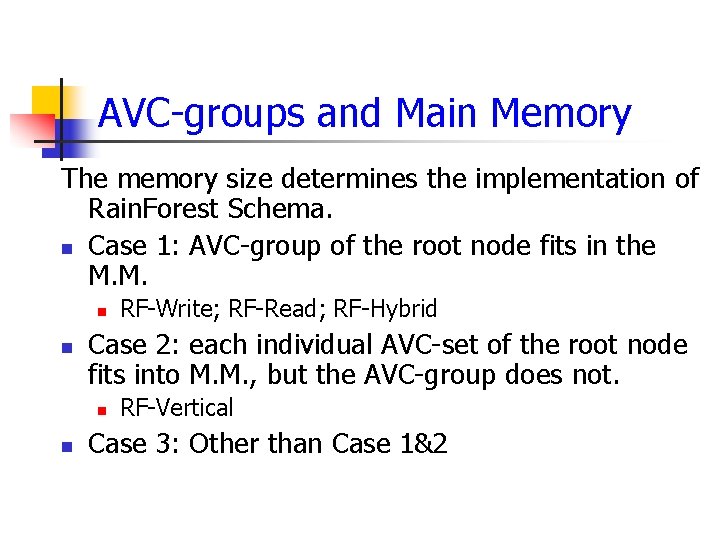

AVC-Groups and Main Memory
The amount of main memory determines which implementation of the RainForest schema applies.
- Case 1: the AVC-group of the root node fits in main memory
  - RF-Write, RF-Read, RF-Hybrid
- Case 2: each individual AVC-set of the root node fits in main memory, but the AVC-group does not
  - RF-Vertical
- Case 3: neither Case 1 nor Case 2 holds
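The case analysis can be written as a small decision function. This is a sketch under stated assumptions: `memory_case`, `avc_entries_upper_bound`, and the attribute sizes are hypothetical, memory is measured in AVC entries rather than bytes, and the AVC-set size is bounded by one entry per (value, class label) pair, following the size property from the previous slide.

```python
from typing import Dict, List

ALGORITHMS: Dict[int, List[str]] = {1: ["RF-Write", "RF-Read", "RF-Hybrid"], 2: ["RF-Vertical"], 3: []}


def avc_entries_upper_bound(distinct_values: int, num_classes: int) -> int:
    # An AVC-set has at most one entry per (attribute value, class label) pair.
    return distinct_values * num_classes


def memory_case(avc_set_entries: Dict[str, int], memory_entries: int) -> int:
    """Return 1, 2 or 3 for the root node, as in the case analysis above."""
    group_size = sum(avc_set_entries.values())  # AVC-group = all AVC-sets together
    if group_size <= memory_entries:
        return 1
    if max(avc_set_entries.values()) <= memory_entries:
        return 2
    return 3


# Hypothetical root-node AVC-set sizes for a two-class problem.
sizes = {
    "age": avc_entries_upper_bound(100, 2),          # 200
    "salary": avc_entries_upper_bound(500_000, 2),   # 1,000,000
    "zipcode": avc_entries_upper_bound(450_000, 2),  # 900,000
}
for memory in (2_100_000, 1_000_000, 500_000):
    case = memory_case(sizes, memory)
    print(memory, case, ALGORITHMS[case])
```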

Steps for Algorithms in the RainForest Family
1. AVC-group construction
2. Choose the splitting attribute and predicate
   - This step uses the decision tree algorithm CL that is being scaled using the RainForest framework
3. Partition D across the child nodes
   - We must read the entire dataset and write out all records, partitioning them into child "buckets" according to the splitting criterion chosen in the previous step.
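Step 3 amounts to one sequential scan of D that appends every record to the bucket of the child whose predicate it satisfies. The sketch assumes the buckets are CSV files in a directory; `partition`, the file names, and the sample records are hypothetical, and a real system would use its own storage format.

```python
import csv
import os
import tempfile
from typing import Any, Callable, Dict, Iterable, List

Record = Dict[str, Any]


def partition(D: Iterable[Record], predicates: List[Callable[[Record], bool]],
              fieldnames: List[str], out_dir: str) -> List[str]:
    """One scan of D, writing each record to the bucket file of its child node."""
    paths = [os.path.join(out_dir, f"child_{i}.csv") for i in range(len(predicates))]
    files = [open(p, "w", newline="") for p in paths]
    try:
        writers = [csv.DictWriter(f, fieldnames=fieldnames) for f in files]
        for w in writers:
            w.writeheader()
        for t in D:
            # Predicates are non-overlapping and exhaustive, so exactly one index matches;
            # the tuple unpacking raises ValueError otherwise.
            (i,) = [i for i, q in enumerate(predicates) if q(t)]
            writers[i].writerow(t)
    finally:
        for f in files:
            f.close()
    return paths


D = [{"age": a, "risk": r} for a, r in [(25, "high"), (45, "low"), (35, "high"), (60, "low")]]
with tempfile.TemporaryDirectory() as d:
    paths = partition(D, [lambda t: t["age"] < 30, lambda t: t["age"] >= 30], ["age", "risk"], d)
    bucket_ages = []
    for p in paths:
        with open(p, newline="") as f:
            bucket_ages.append([row["age"] for row in csv.DictReader(f)])
print(bucket_ages)  # [['25'], ['45', '35', '60']]
```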

Algorithms: RF-Write / RF-Read
Prerequisite: the AVC-group of the root node fits in main memory.
- RF-Write: for each level of the tree, it reads the entire database twice and writes the entire database once
- RF-Read: never writes partitions; instead it makes an increasing number of scans of the entire database as the tree grows
  - Marks one end of the design spectrum in the RainForest framework
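A toy I/O model makes the contrast concrete. It is a simplified sketch, not the paper's cost analysis: it assumes RF-Read packs the AVC-groups of a level's nodes into memory-sized batches in order (first fit), paying one full scan of D per batch, while RF-Write pays two reads and one write of D per level as stated on the slide. `rf_read_scans`, `rf_write_io`, and the per-node sizes are hypothetical.

```python
from typing import Dict, List


def rf_read_scans(node_avc_sizes: List[int], memory: int) -> int:
    """Full scans of D that RF-Read needs for one level under sequential batching."""
    scans, used = 0, 0
    for size in node_avc_sizes:
        if size > memory:
            raise ValueError("each node's AVC-group must fit in memory")
        if scans == 0 or used + size > memory:
            scans += 1  # start a new batch, i.e. another scan of D
            used = 0
        used += size
    return scans


def rf_write_io(num_levels: int) -> Dict[str, int]:
    # Per level: read D to build AVC-groups, read again to partition, write partitions once.
    return {"reads": 2 * num_levels, "writes": num_levels}


# Hypothetical AVC-group sizes of the nodes at levels 0, 1, 2 (memory = 100 units).
levels = [[90], [60, 50], [55, 55, 55]]
print([rf_read_scans(s, 100) for s in levels])  # [1, 2, 3]
print(rf_write_io(len(levels)))                  # {'reads': 6, 'writes': 3}
```

In this model RF-Read's per-level scan count grows with the number of nodes, which is the "increasing number of scans" on the slide.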

Algorithm: RF-Hybrid
- Combination of RF-Write and RF-Read
- Performance can be improved by concurrent construction of AVC-sets
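One way to picture the combination is a per-level choice between the two strategies. The sketch below assumes a deliberately simplified policy, not the paper's exact rule: use an RF-Read step (one scan, no writes) while the AVC-groups of all nodes at a level fit in memory together, and fall back to an RF-Write step (write partitions) otherwise. `hybrid_plan` and the sizes are hypothetical.

```python
from typing import List


def hybrid_plan(levels: List[List[int]], memory: int) -> List[str]:
    """Per-level choice under the simplified hybrid policy assumed here."""
    plan = []
    for node_avc_sizes in levels:
        if sum(node_avc_sizes) <= memory:
            plan.append("read")   # RF-Read step: one scan builds every AVC-group of the level
        else:
            plan.append("write")  # RF-Write step: partition D and write the partitions out
    return plan


# Hypothetical AVC-group sizes per node at levels 0, 1, 2 (memory = 100 units).
print(hybrid_plan([[90], [60, 30], [55, 55, 55]], 100))  # ['read', 'read', 'write']
```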

Algorithm: RF-Vertical
Prerequisite: each individual AVC-set fits in main memory.
- For very large AVC-sets, a temporary file is generated for each node, and the large sets are constructed from this temporary file
- Small AVC-sets are constructed directly in main memory
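A minimal sketch of RF-Vertical's split between small and large AVC-sets at one node. Assumptions, all hypothetical: AVC-set sizes are known in advance as entry estimates (`est_entries`), the smallest sets are greedily kept in memory until the budget runs out, and the node's temporary file holds the large attributes' projected columns as JSON lines. Each large AVC-set is then built from that file one at a time, which works because each one individually fits in memory.

```python
import json
import tempfile
from collections import Counter
from typing import Any, Dict, List, Tuple

Record = Dict[str, Any]


def rf_vertical_avc_group(
    family: List[Record], predictors: List[str], label: str,
    est_entries: Dict[str, int], memory_entries: int,
) -> Tuple[Dict[str, Counter], List[str], List[str]]:
    # Assumption: greedily keep the smallest AVC-sets in memory until the budget runs out.
    small: List[str] = []
    large: List[str] = []
    budget = memory_entries
    for attr in sorted(predictors, key=lambda x: est_entries[x]):
        if est_entries[attr] <= budget:
            small.append(attr)
            budget -= est_entries[attr]
        else:
            large.append(attr)

    group: Dict[str, Counter] = {a: Counter() for a in small}
    with tempfile.TemporaryFile(mode="w+") as tmp:
        # One scan of F(n): build small AVC-sets in memory, project large attributes to the file.
        for t in family:
            for a in small:
                group[a][(t[a], t[label])] += 1
            if large:
                tmp.write(json.dumps([t[a] for a in large] + [t[label]]) + "\n")
        # Build each large AVC-set from the temporary file, one at a time.
        for j, a in enumerate(large):
            tmp.seek(0)
            avc: Counter = Counter()
            for line in tmp:
                row = json.loads(line)
                avc[(row[j], row[-1])] += 1
            group[a] = avc
    return group, small, large


F = [
    {"age": "young", "zip": "53706", "risk": "high"},
    {"age": "old", "zip": "53703", "risk": "low"},
    {"age": "old", "zip": "53706", "risk": "low"},
]
group, small, large = rf_vertical_avc_group(F, ["zip", "age"], "risk", {"age": 4, "zip": 50}, 10)
print(small, large)  # ['age'] ['zip']
```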

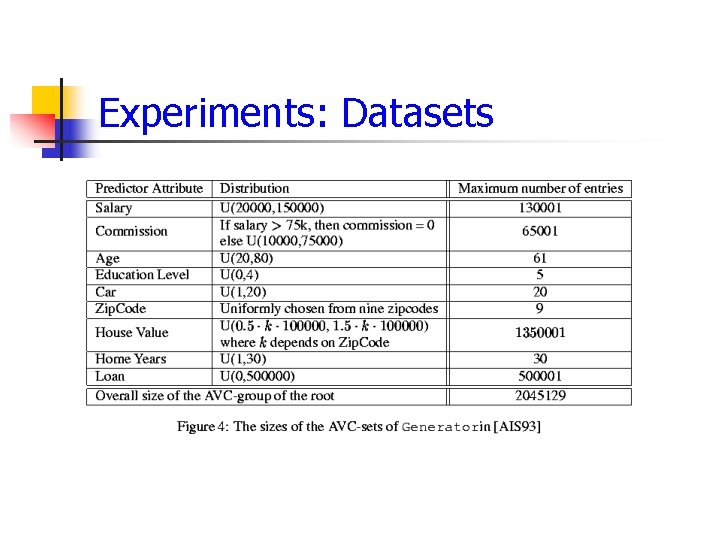

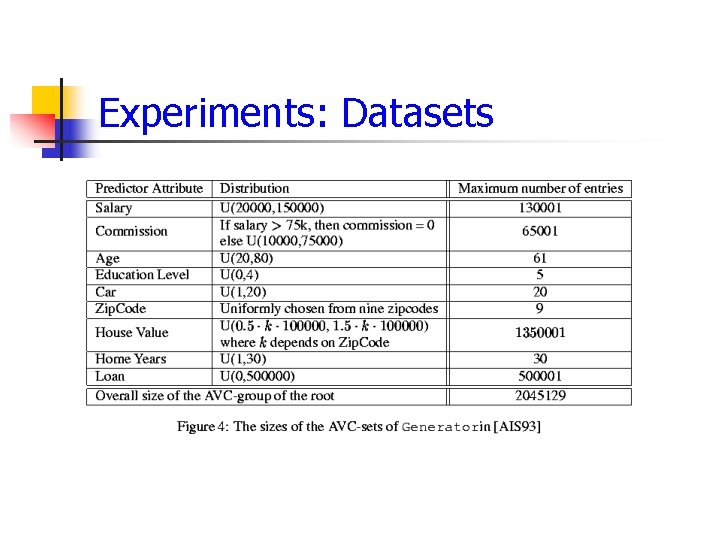

Experiments: Datasets

Experiment Results (1)
- Setting: the AVC-group of the root node has at most about 2.1 million entries overall, requiring at most 17 MB of memory.
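Dividing the two figures gives the implied average memory footprint per AVC entry. This is a derived estimate, not a number stated on the slide, and it assumes 1 MB = 10^6 bytes:

$$
\frac{17 \times 10^{6}\ \text{bytes}}{2.1 \times 10^{6}\ \text{entries}} \approx 8.1\ \text{bytes per entry}
$$

With 1 MB = 2^20 bytes the estimate is about 8.5 bytes per entry.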

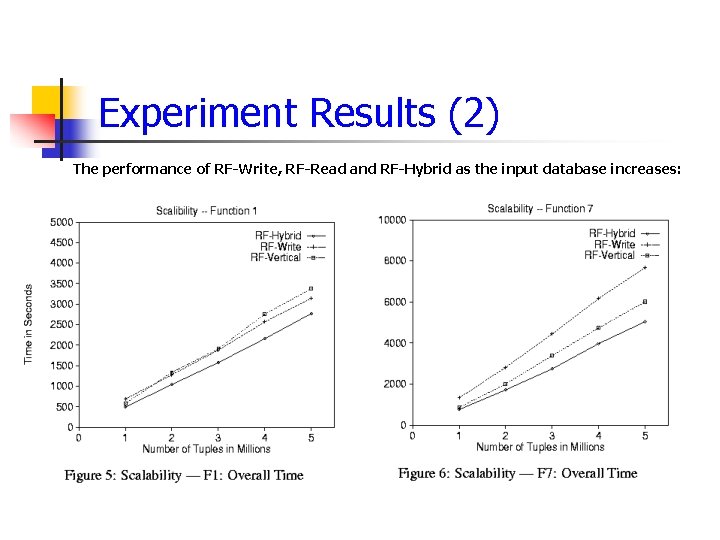

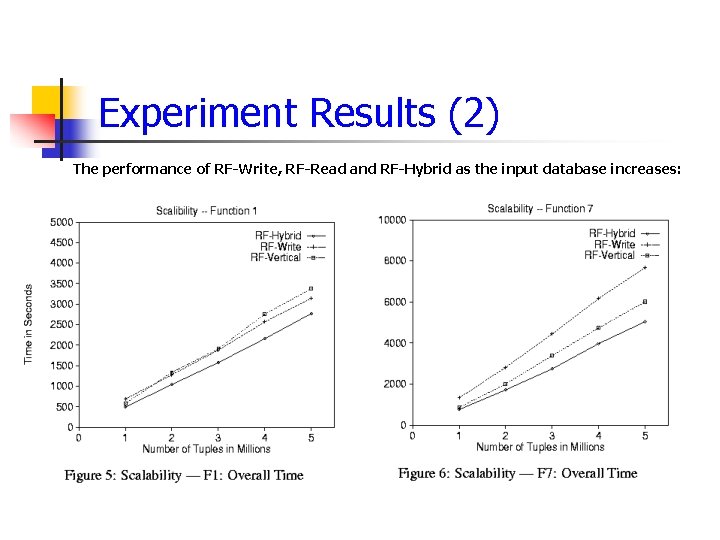

Experiment Results (2)
The performance of RF-Write, RF-Read, and RF-Hybrid as the size of the input database increases:

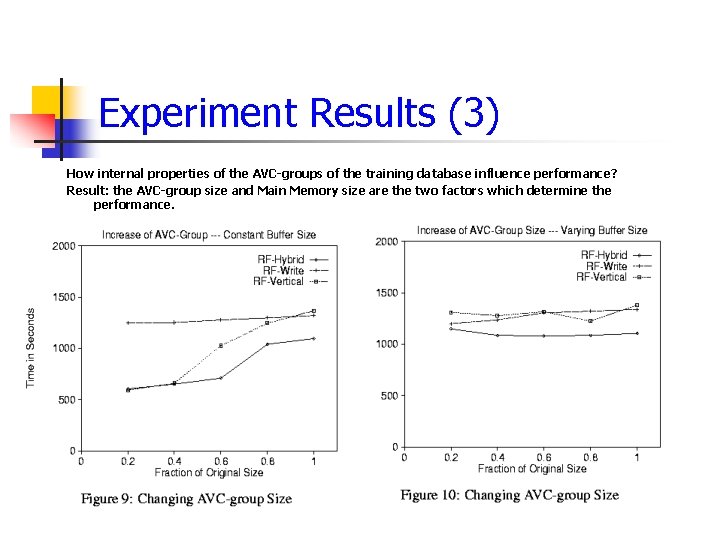

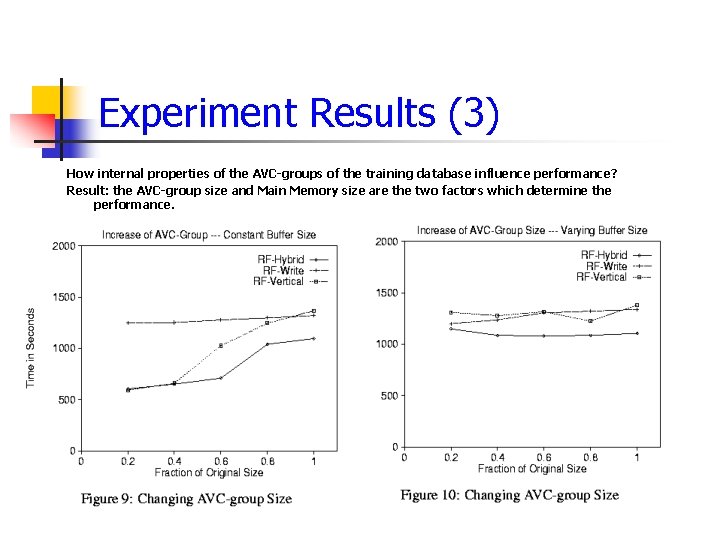

Experiment Results (3)
Question: how do internal properties of the AVC-groups of the training database influence performance?
Result: the AVC-group size and the main memory size are the two factors that determine performance.

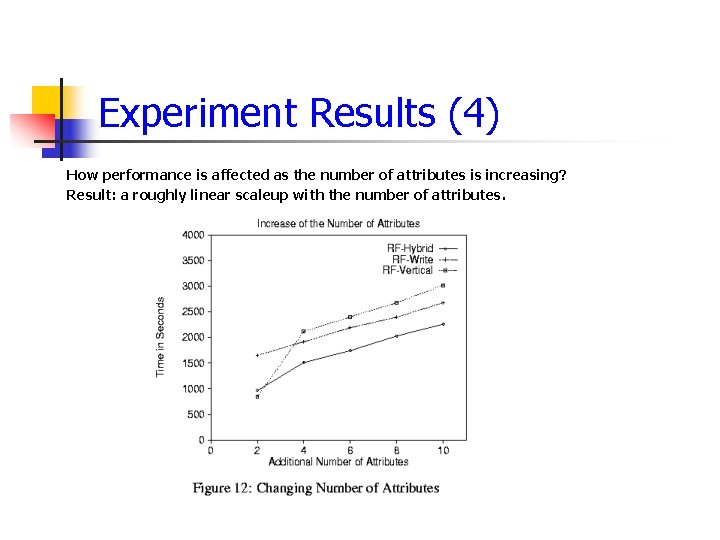

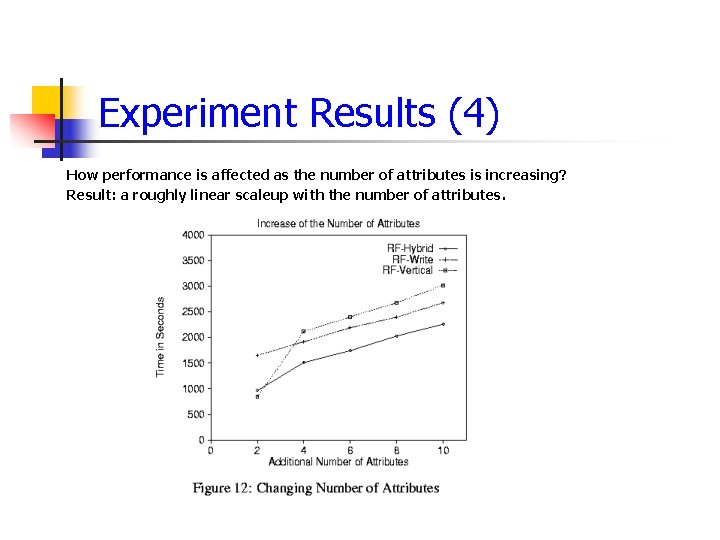

Experiment Results (4)
Question: how is performance affected as the number of attributes increases?
Result: roughly linear scale-up with the number of attributes.

Conclusion
- A framework for scaling decision tree algorithms that is applicable to all decision tree algorithms known at that time
- The AVC-group is the key idea
- Requires a database scan at each level of the decision tree
- Depends too heavily on the amount of available main memory