Adaptive Neural Algorithms: The What, Why, and How

- Slides: 33

Adaptive Neural Algorithms: The What, Why, and How

Brad Aimone, Ph.D.

Hardware Acceleration of Adaptive Neural Algorithms Project

Sandia National Laboratories is a multi-program laboratory managed and operated by Sandia Corporation, a wholly owned subsidiary of Lockheed Martin Corporation, for the U.S. Department of Energy’s National Nuclear Security Administration under contract DE-AC04-94AL85000. SAND NO. 2011-XXXXP

Brad Aimone SNL 2015 NICE

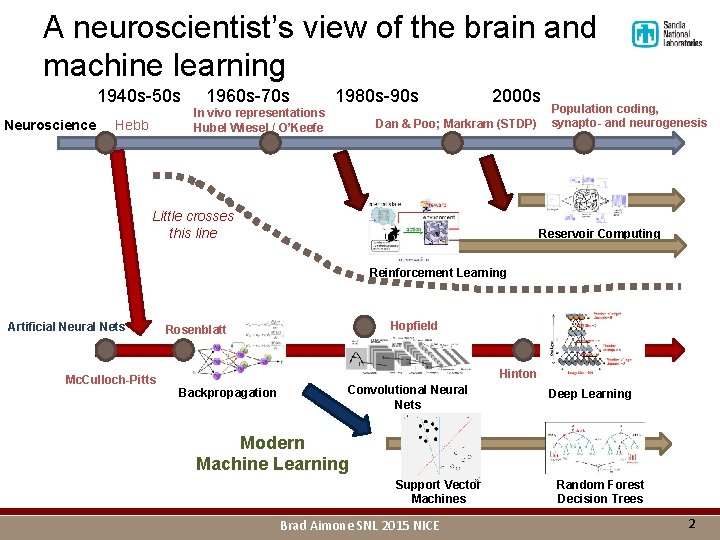

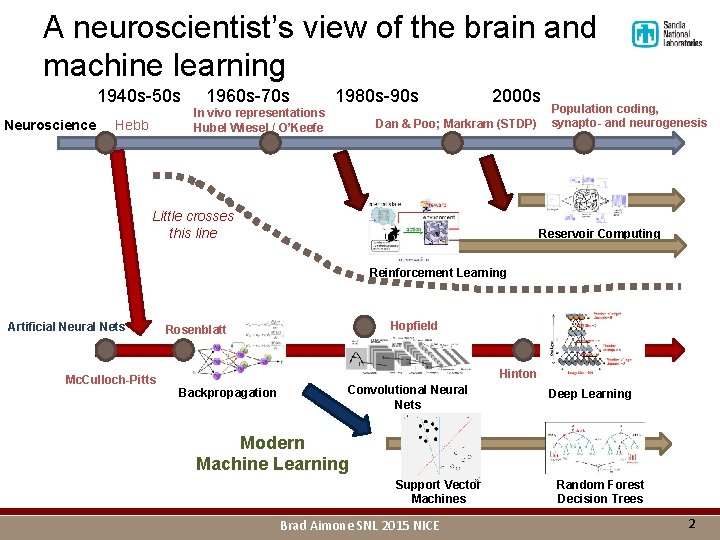

A neuroscientist’s view of the brain and machine learning

[Figure: timeline (1940s-50s, 1960s-70s, 1980s-90s, 2000s) with neuroscience on one side (Hebb; Hubel & Wiesel; O’Keefe; in vivo representations; Dan & Poo and Markram on STDP; population coding, synapto- and neurogenesis) and artificial neural nets and modern machine learning on the other (McCulloch-Pitts, Rosenblatt, Hopfield, Hinton, backpropagation, convolutional neural nets, deep learning, reinforcement learning, reservoir computing, support vector machines, decision trees, random forest). A line separates the two fields, annotated “Little crosses this line.”]

What is special about the brain?

- Many things…
  - Spiking
  - High-dimensional representations and dynamics
  - Hierarchical architectures
  - Sparse distributed representations
  - …
- Continuous, online adaptation

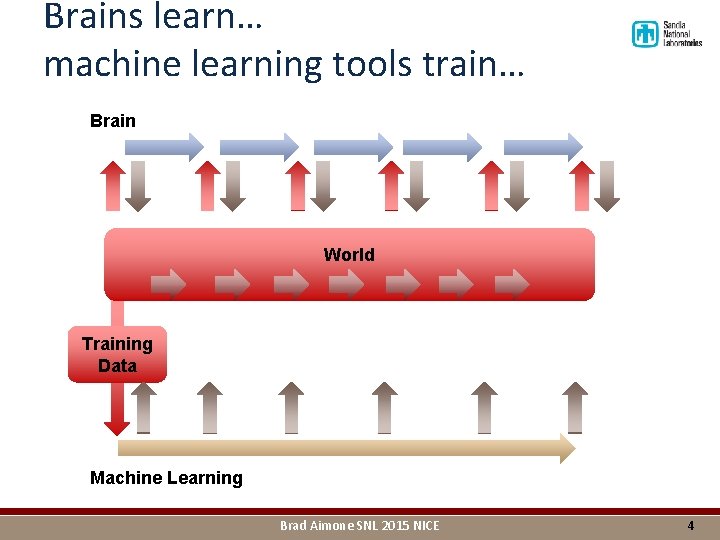

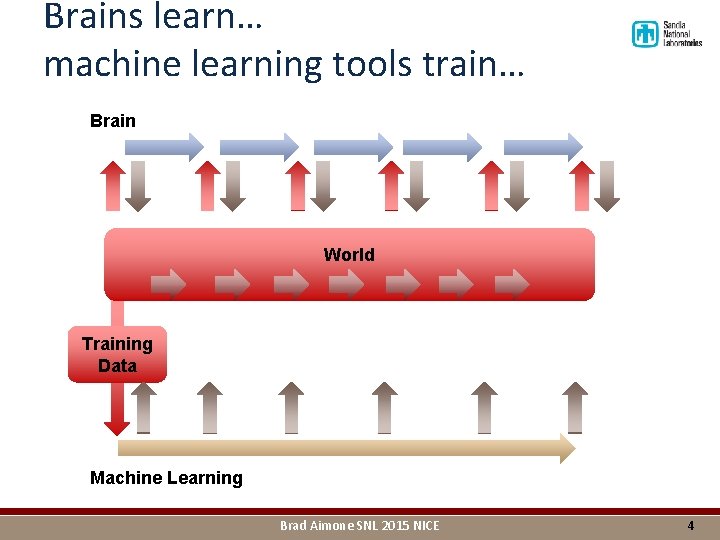

Brains learn… machine learning tools train…

[Figure: the brain learns from the world; machine learning is trained on training data.]

Potential reasons why there is little hardware adaptation

- Is learning really needed?

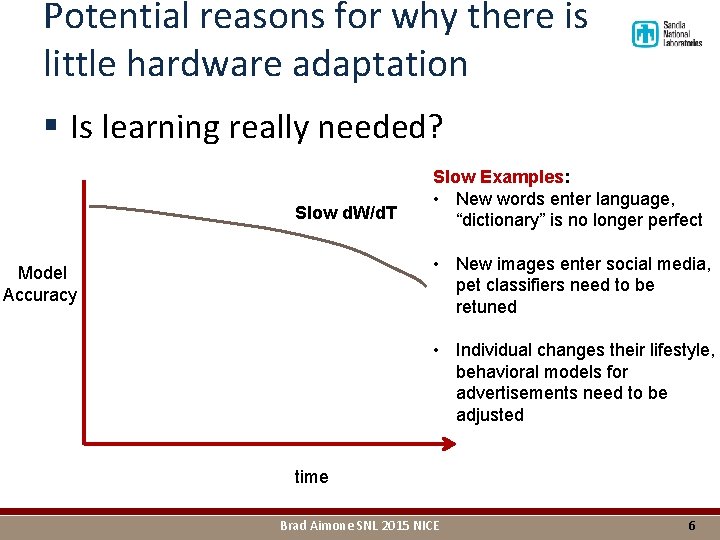

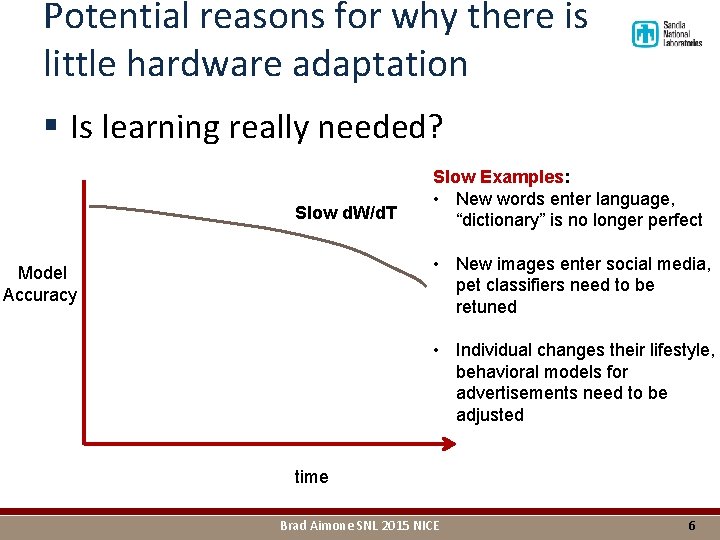

Potential reasons why there is little hardware adaptation

- Is learning really needed?
  - Slow dW/dT examples:
    - New words enter the language, so the “dictionary” is no longer perfect
    - New images enter social media, so pet classifiers need to be retuned
    - An individual changes their lifestyle, so behavioral models for advertisements need to be adjusted

[Figure: model accuracy versus time under slow dW/dT.]

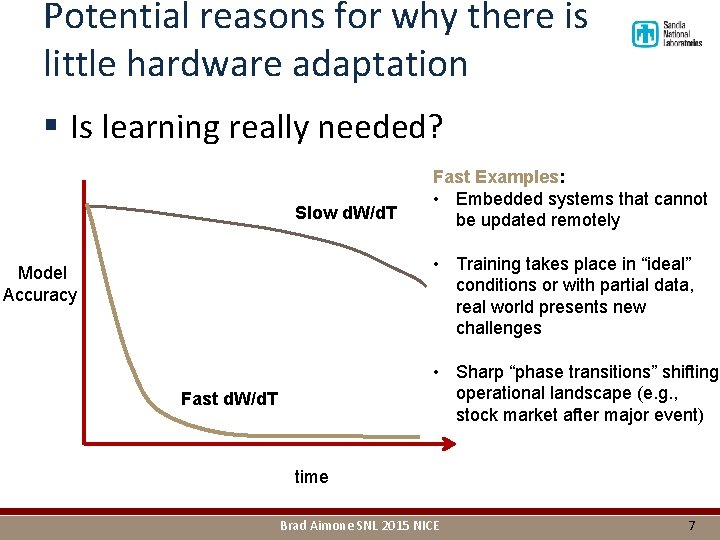

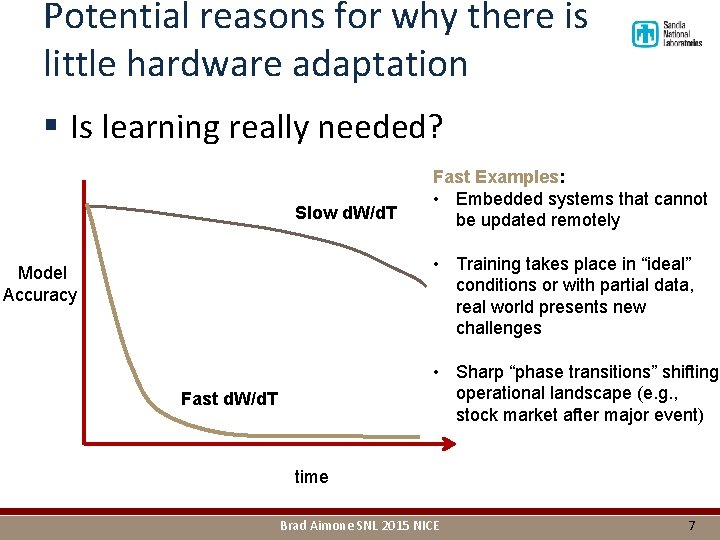

Potential reasons why there is little hardware adaptation

- Is learning really needed?
  - Fast dW/dT examples:
    - Embedded systems that cannot be updated remotely
    - Training takes place in “ideal” conditions or with partial data, while the real world presents new challenges
    - Sharp “phase transitions” shift the operational landscape (e.g., the stock market after a major event)

[Figure: model accuracy versus time, comparing slow and fast dW/dT.]

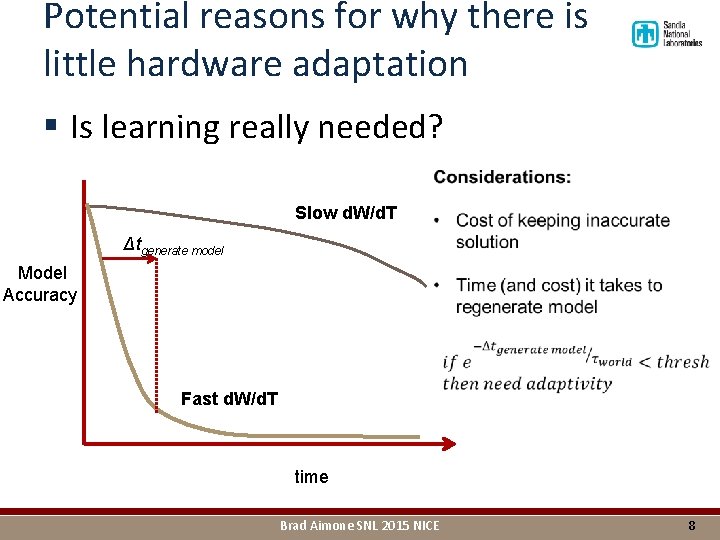

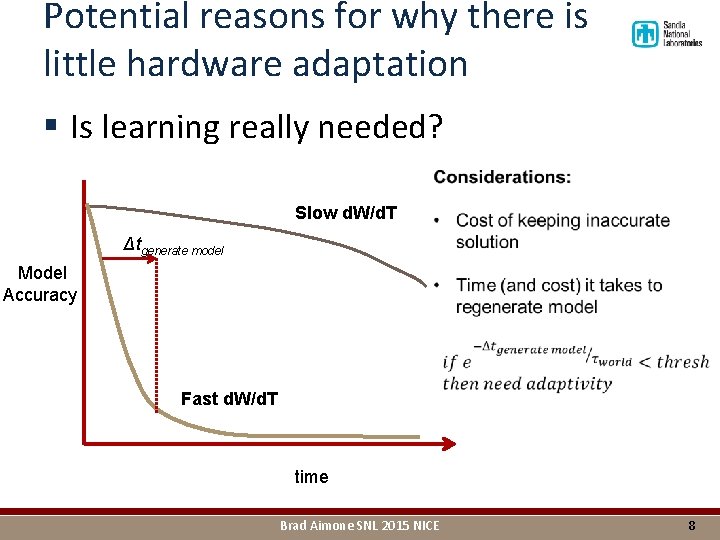

Potential reasons why there is little hardware adaptation

- Is learning really needed?

[Figure: model accuracy versus time for slow and fast dW/dT, with Δt_generate model marking the time needed to generate a new model.]
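The trade-off on this slide can be made concrete with a toy model. Assume (our assumption, not the slide's) that accuracy decays exponentially with model age at a rate `drift_rate` proportional to dW/dT, and that generating a new model every `retrain_interval` (the slide's Δt for generating a model) restores accuracy to `a0`. Averaged over one cycle, accuracy is a0 * (1 - exp(-k*Δt)) / (k*Δt): close to a0 when k*Δt is small, and falling toward a0 / (k*Δt) when the world drifts faster than models can be regenerated.

```python
import math


def mean_accuracy(a0: float, drift_rate: float, retrain_interval: float) -> float:
    """Time-averaged accuracy over one retraining cycle in a toy drift model.

    Assumes accuracy(age) = a0 * exp(-drift_rate * age), reset to a0 whenever
    a new model is generated (every `retrain_interval` time units).
    """
    x = drift_rate * retrain_interval
    if x == 0:
        return a0
    # (1 - exp(-x)) / x, written with expm1 for numerical stability at small x
    return a0 * (-math.expm1(-x)) / x


# Slow drift relative to the retraining interval: accuracy stays close to a0.
slow = mean_accuracy(a0=0.95, drift_rate=0.01, retrain_interval=10.0)
# Fast drift with the same retraining interval: the model is stale most of the time.
fast = mean_accuracy(a0=0.95, drift_rate=1.0, retrain_interval=10.0)
print(f"slow dW/dT: {slow:.3f}, fast dW/dT: {fast:.3f}")
```

In this sketch the only ways to recover accuracy under fast drift are to shorten the retraining interval or to let the model adapt continuously, which is the motivation for on-line learning.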

Potential reasons why there is little hardware adaptation

- Is learning really needed?
- Implementing learning is hard and costly

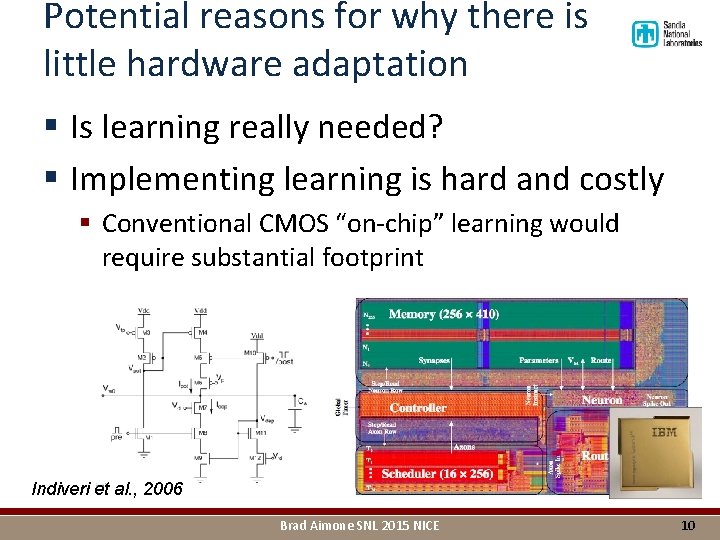

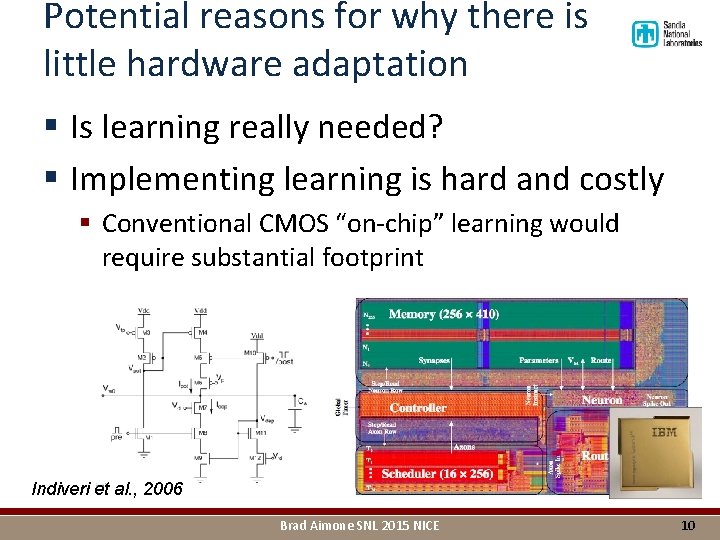

Potential reasons why there is little hardware adaptation

- Is learning really needed?
- Implementing learning is hard and costly
  - Conventional CMOS “on-chip” learning would require a substantial footprint (Indiveri et al., 2006)

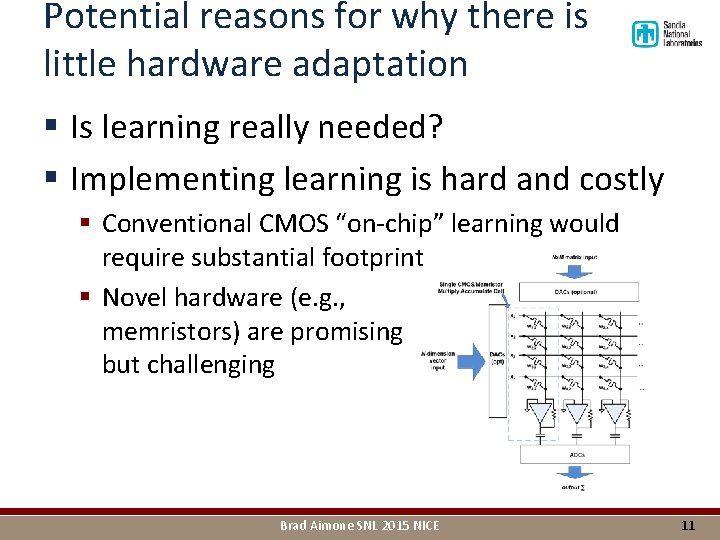

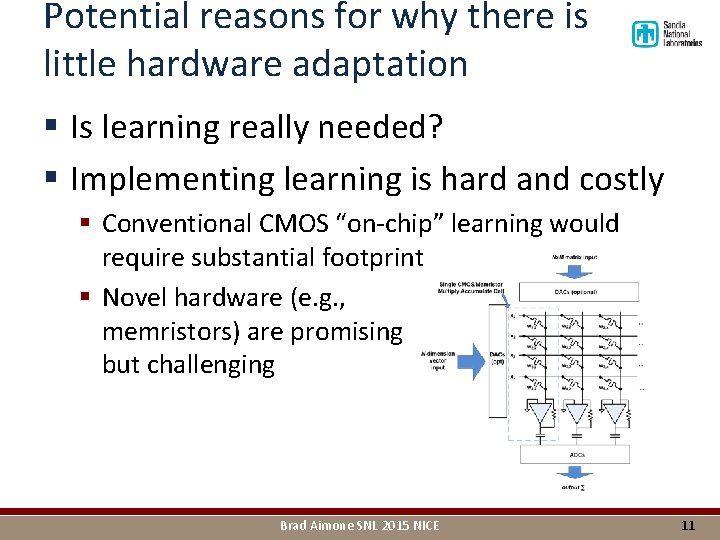

Potential reasons why there is little hardware adaptation

- Is learning really needed?
- Implementing learning is hard and costly
  - Conventional CMOS “on-chip” learning would require a substantial footprint
  - Novel hardware (e.g., memristors) is promising but challenging

Potential reasons why there is little hardware adaptation

- Is learning really needed?
- Implementing learning is hard and costly
- We don’t really know how to implement it algorithmically…

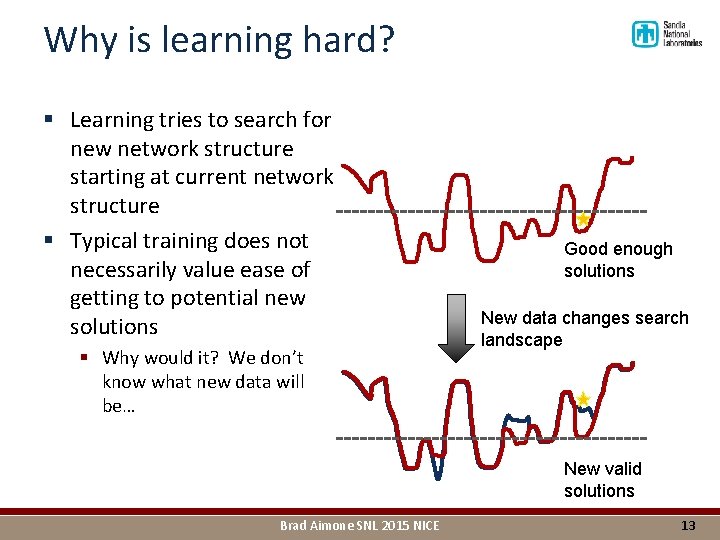

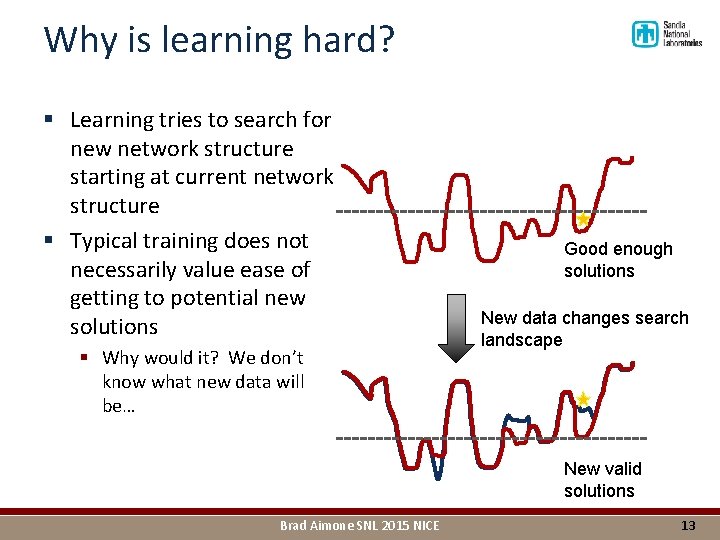

Why is learning hard?

- Learning searches for a new network structure, starting from the current network structure
- Typical training does not necessarily value how easily its solution can reach potential new solutions
  - Why would it? We don’t know what the new data will be…

[Figure: “good enough” solutions; new data changes the search landscape, creating new valid solutions.]
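The point about “good enough” solutions can be illustrated with a deliberately tiny, hypothetical example: two weight vectors that fit the old data equally well can sit at very different distances from a solution that also fits new data. Plain gradient descent on squared error, started from the current weights, stands in for “learning” here; the data, learning rate, and tolerance are made up for illustration.

```python
import numpy as np


def steps_to_fit(w0, X, y, lr=0.1, tol=1e-3, max_steps=10_000):
    """Count gradient-descent steps (squared error) until every residual is below tol."""
    w = np.array(w0, dtype=float)
    for step in range(max_steps):
        residual = X @ w - y
        if np.max(np.abs(residual)) < tol:
            return step
        w -= lr * X.T @ residual  # gradient of 0.5 * ||Xw - y||^2
    return max_steps


# Old data only constrains w1 + w2 = 1, so many weight vectors are "good enough".
X_old, y_old = np.array([[1.0, 1.0]]), np.array([1.0])
a, b = [0.5, 0.5], [1.0, 0.0]

# New data adds the constraint w1 - w2 = 0; only (0.5, 0.5) satisfies both.
X_new = np.array([[1.0, 1.0], [1.0, -1.0]])
y_new = np.array([1.0, 0.0])

steps_a = steps_to_fit(a, X_new, y_new)
steps_b = steps_to_fit(b, X_new, y_new)
print(steps_a, steps_b)
```

Both starting points score perfectly on the old data, yet one needs no further learning while the other must move through weight space; ordinary training on the old data has no reason to prefer the first.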

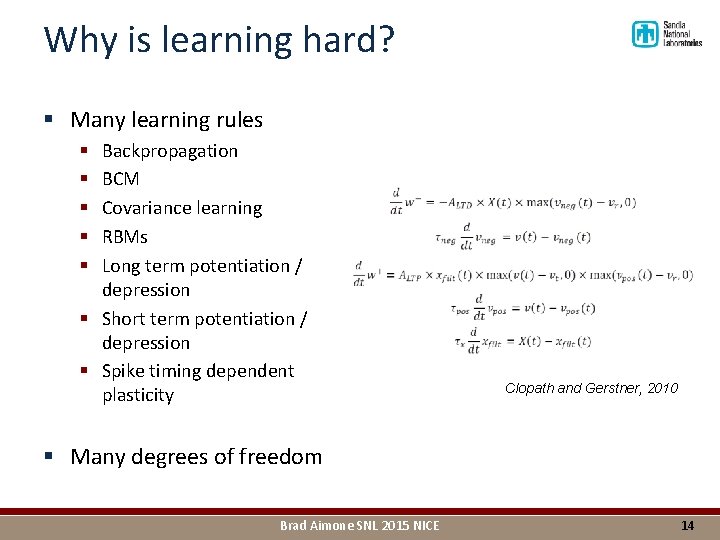

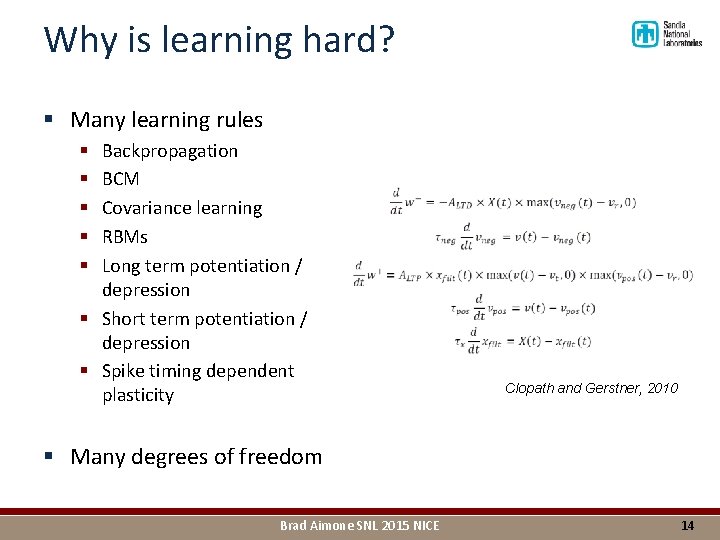

Why is learning hard?

- Many learning rules
  - Backpropagation
  - BCM
  - Covariance learning
  - RBMs
  - Long-term potentiation / depression
  - Short-term potentiation / depression
  - Spike-timing-dependent plasticity (Clopath and Gerstner, 2010)
- Many degrees of freedom
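To make the “many rules, many degrees of freedom” point concrete, here are textbook rate-based forms of two of the listed rules, covariance learning and BCM. The learning rate `eta`, the threshold time constant `tau`, and the function names are illustrative choices; every additional rule brings its own such parameters.

```python
import numpy as np


def covariance_update(w, x, y, x_mean, y_mean, eta=0.01):
    """Covariance rule: strengthen weights whose input co-varies with the output."""
    return w + eta * (x - x_mean) * (y - y_mean)


def bcm_update(w, x, y, theta, eta=0.01):
    """BCM rule: potentiate when output y exceeds threshold theta, depress below it."""
    return w + eta * x * y * (y - theta)


def bcm_threshold(theta, y, tau=100.0):
    """Sliding BCM threshold that tracks a running average of y**2."""
    return theta + (y ** 2 - theta) / tau


w = np.zeros(3)
x = np.array([1.0, 0.0, 1.0])  # inputs 0 and 2 active, input 1 silent
w_up = bcm_update(w, x, y=2.0, theta=1.0)    # output above threshold: potentiation
w_down = bcm_update(w, x, y=0.5, theta=1.0)  # output below threshold: depression
```

Only active inputs change in either direction, and the sign of the change depends on where the output sits relative to a threshold that itself adapts; the covariance rule instead depends on deviations from mean activity.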

Lessons from how the brain does it

1. Learning is where it needs to be.

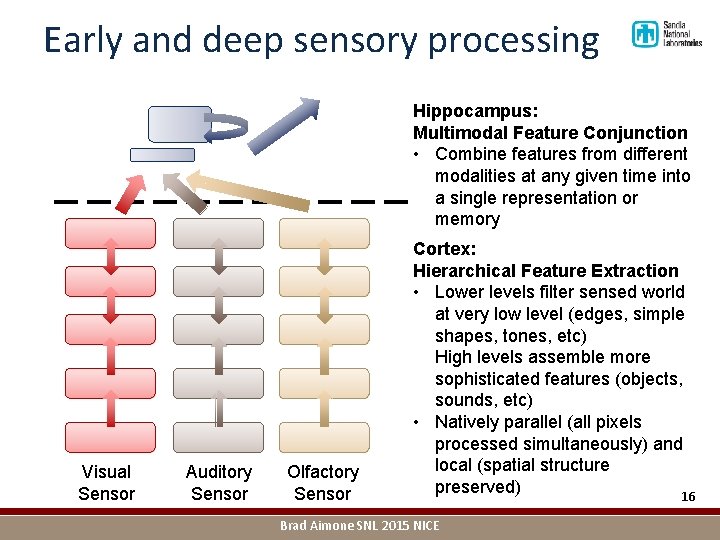

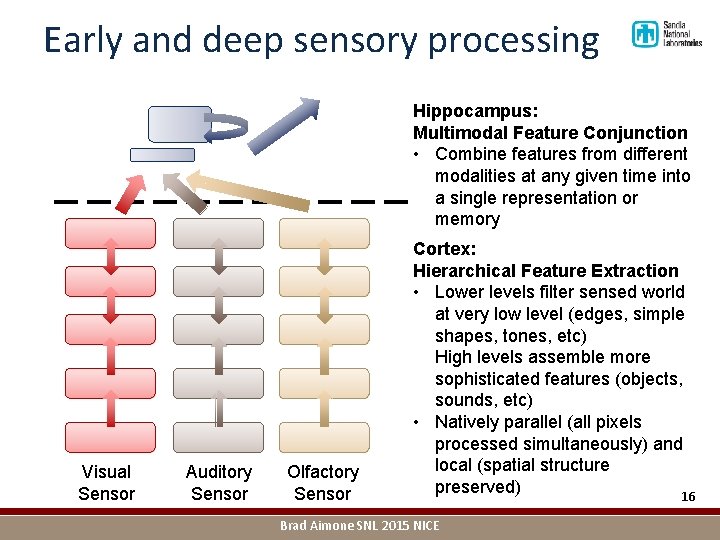

Early and deep sensory processing

[Figure: visual, auditory, and olfactory sensors feeding cortical and hippocampal processing.]

- Hippocampus: multimodal feature conjunction
  - Combines features from different modalities at any given time into a single representation or memory
- Cortex: hierarchical feature extraction
  - Lower levels filter the sensed world at a very low level (edges, simple shapes, tones, etc.)
  - Higher levels assemble more sophisticated features (objects, sounds, etc.)
  - Natively parallel (all pixels processed simultaneously) and local (spatial structure preserved)
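A minimal NumPy sketch of the cortical properties listed here, under obvious simplifications: hand-set edge kernels instead of learned ones, ReLU and max pooling as the nonlinearities, and a hypothetical “corner” unit as the higher-level feature. Each filter is applied at every position in one vectorized operation (parallel), and each output depends only on a 3x3 neighborhood (local). `sliding_window_view` requires NumPy 1.20 or later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def filter_layer(image, kernel):
    """Apply one local filter at every position at once, then rectify (ReLU)."""
    windows = sliding_window_view(image, kernel.shape)  # (H-kh+1, W-kw+1, kh, kw)
    return np.maximum(0.0, np.einsum("ijkl,kl->ij", windows, kernel))


def max_pool(feature_map, size=2):
    """Pool non-overlapping size x size blocks, trimming any ragged edge."""
    h, w = (s - s % size for s in feature_map.shape)
    blocks = feature_map[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))


# Level 1: oriented edge filters (a crude stand-in for low-level features).
vertical = np.array([[-1.0, 0.0, 1.0]] * 3)
horizontal = vertical.T

# Toy input: a single bright vertical bar.
image = np.zeros((6, 6))
image[:, 3] = 1.0

v_map = max_pool(filter_layer(image, vertical))
h_map = max_pool(filter_layer(image, horizontal))

# Level 2: a conjunction feature that needs both orientations nearby (e.g., a corner).
corner = np.minimum(v_map, h_map)
```

The bar drives the vertical-edge map but not the horizontal one, so the higher-level corner unit stays silent: a higher level only responds when the lower-level features it assembles are present together.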

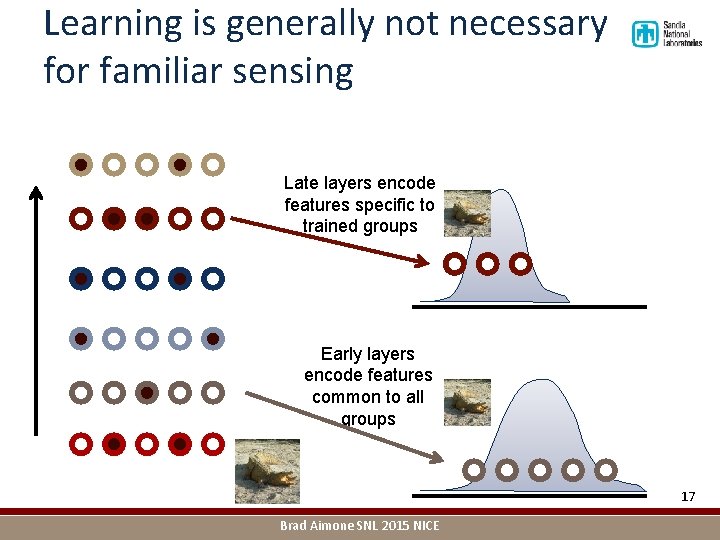

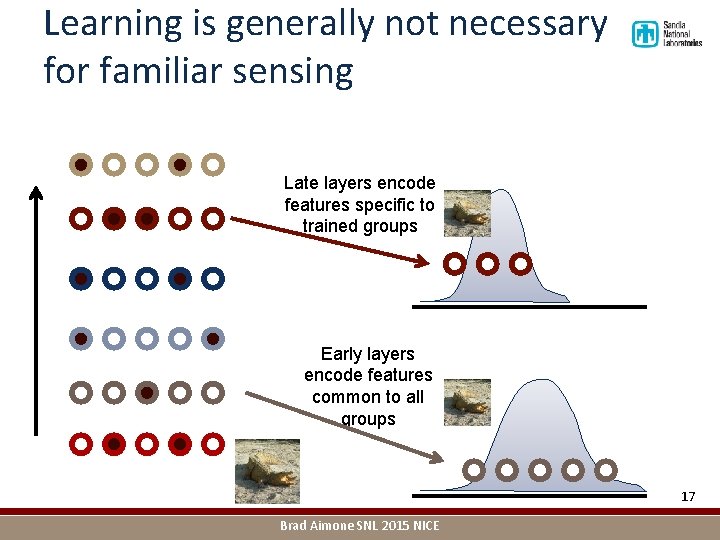

Learning is generally not necessary for familiar sensing

- Late layers encode features specific to trained groups
- Early layers encode features common to all groups
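One machine-learning reading of this slide (our interpretation, not the author's code): keep the early, generic layer fixed and adapt only the late readout when a new group appears. The random early layer, its sizes, the made-up labels, and the least-squares readout below are all hypothetical stand-ins.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical fixed "early layer": 16 raw inputs -> 64 generic features.
W_early = rng.normal(size=(64, 16))


def early_features(X):
    """Generic features shared by all groups; never updated below."""
    return np.maximum(0.0, X @ W_early.T)


def with_bias(F):
    return np.hstack([F, np.ones((F.shape[0], 1))])


def fit_readout(X, y):
    """Fit only the late, group-specific layer (linear readout plus bias)."""
    w, *_ = np.linalg.lstsq(with_bias(early_features(X)), y, rcond=None)
    return w


def predict(X, w):
    return np.sign(with_bias(early_features(X)) @ w)


# A new "group" of stimuli with made-up +/-1 labels.
X_new = rng.normal(size=(20, 16))
y_new = np.where(rng.random(20) > 0.5, 1.0, -1.0)

W_before = W_early.copy()
w_late = fit_readout(X_new, y_new)
```

All adaptation happens in the 65 readout weights; the early layer is reused untouched, which is cheap when the new group is still described well by the existing generic features.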

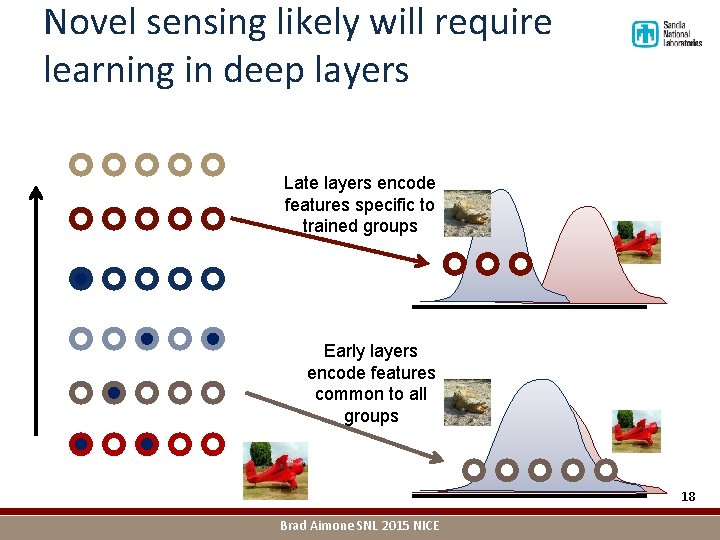

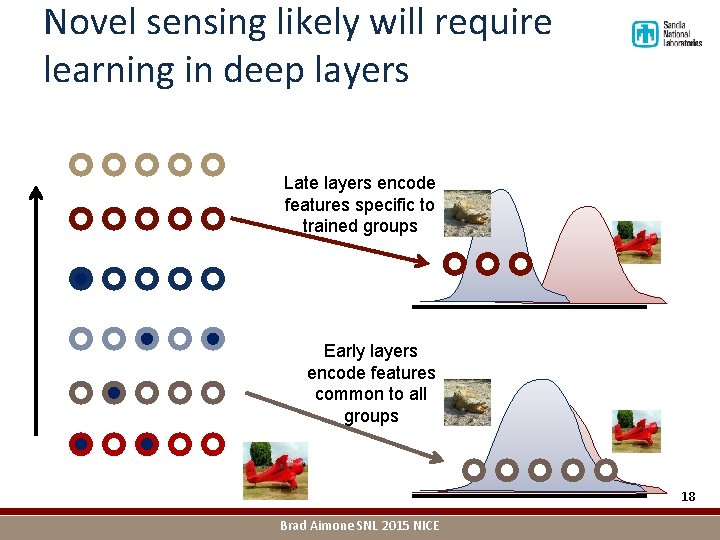

Novel sensing will likely require learning in deep layers

- Late layers encode features specific to trained groups
- Early layers encode features common to all groups

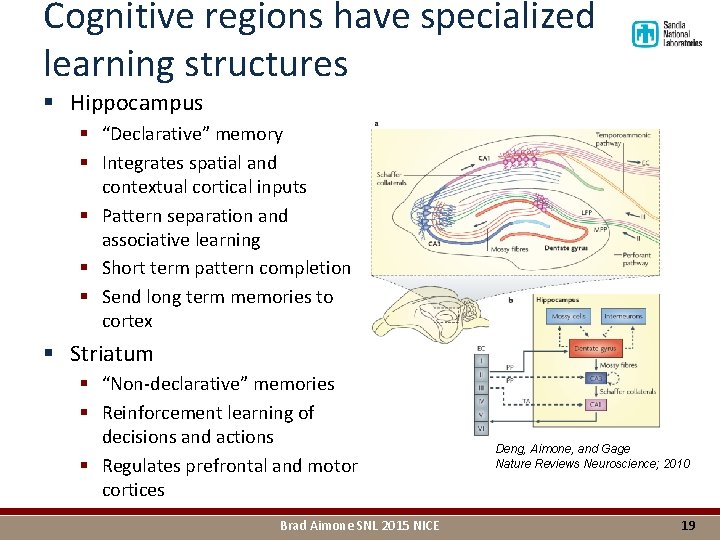

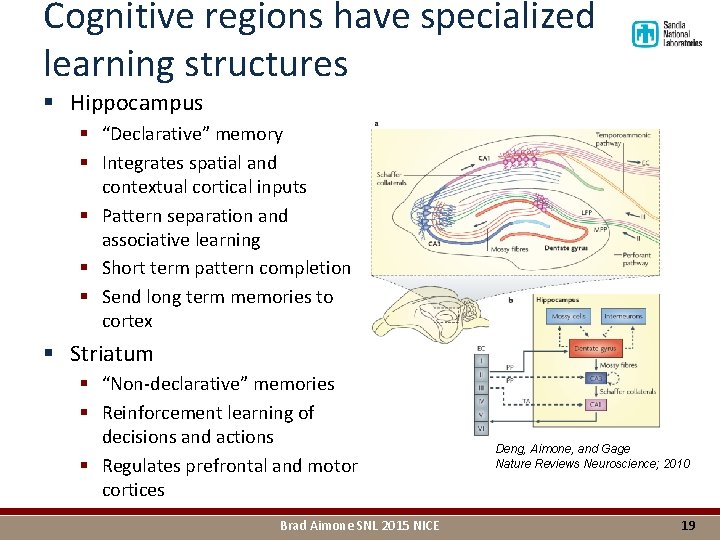

Cognitive regions have specialized learning structures

- Hippocampus
  - “Declarative” memory
  - Integrates spatial and contextual cortical inputs
  - Pattern separation and associative learning
  - Short-term pattern completion
  - Sends long-term memories to cortex
- Striatum
  - “Non-declarative” memories
  - Reinforcement learning of decisions and actions
  - Regulates prefrontal and motor cortices

Deng, Aimone, and Gage, Nature Reviews Neuroscience, 2010
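On the striatal side, reinforcement learning of actions is often modeled with a reward-prediction-error update of action values. The sketch below uses the simplest such rule, a delta rule on per-action values with fixed alternating choices instead of a policy; the learning rate `alpha` and the reward schedule are illustrative assumptions, not a model from the cited review.

```python
def update_action_value(q, action, reward, alpha=0.1):
    """Move the chosen action's value toward the received reward."""
    prediction_error = reward - q[action]
    q[action] += alpha * prediction_error
    return prediction_error


q = [0.0, 0.0]  # action 0 is never rewarded, action 1 always is
for trial in range(50):
    action = trial % 2  # alternate actions for a deterministic illustration
    update_action_value(q, action, reward=1.0 if action == 1 else 0.0)

best_action = max(range(len(q)), key=lambda a: q[a])
```

After 25 rewarded trials the value of action 1 approaches 1 while action 0 stays at 0, so a greedy choice would now favor action 1.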

Lessons from how the brain does it

1. Learning is where it needs to be.
2. Function matters.

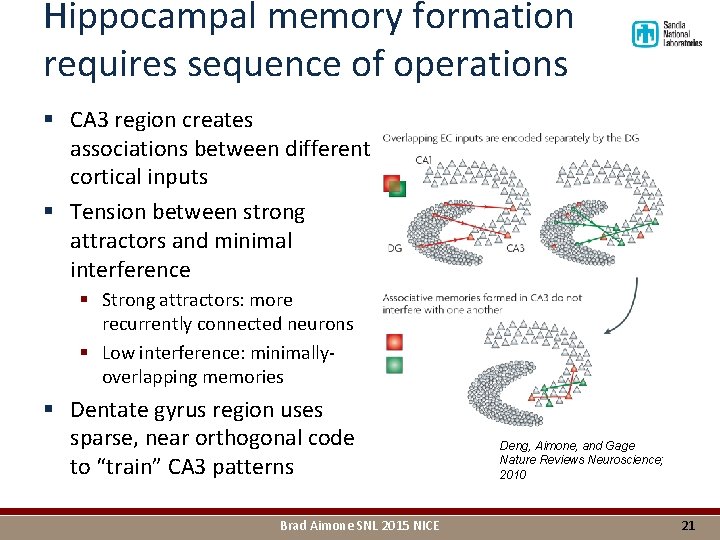

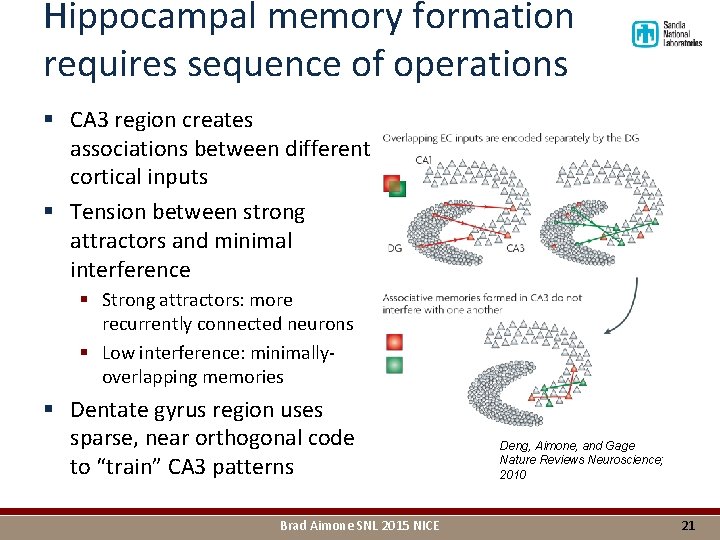

Hippocampal memory formation requires a sequence of operations

- The CA3 region creates associations between different cortical inputs
  - Tension between strong attractors and minimal interference
    - Strong attractors: more recurrently connected neurons
    - Low interference: minimally overlapping memories
- The dentate gyrus region uses a sparse, near-orthogonal code to “train” CA3 patterns

Deng, Aimone, and Gage, Nature Reviews Neuroscience, 2010
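A classic Hopfield network is a common textbook stand-in for a recurrently connected, CA3-like attractor. In this sketch the stored memories are rows of a Hadamard matrix, i.e. perfectly orthogonal codes, which idealizes the near-orthogonal input attributed to the dentate gyrus; the network size, the number of memories, and which units are corrupted are arbitrary choices.

```python
import numpy as np


def sylvester_hadamard(n):
    """n x n matrix of +/-1 with mutually orthogonal rows (n must be a power of 2)."""
    H = np.array([[1]])
    while H.shape[0] < n:
        H = np.block([[H, H], [H, -H]])
    return H


def store(patterns):
    """Hebbian outer-product weights for a Hopfield-style recurrent network."""
    n = patterns.shape[1]
    W = patterns.T @ patterns / n
    np.fill_diagonal(W, 0.0)  # no self-connections
    return W


def recall(W, cue, max_iters=10):
    """Synchronous updates until the state stops changing (an attractor)."""
    state = cue.copy()
    for _ in range(max_iters):
        new_state = np.where(W @ state >= 0, 1, -1)
        if np.array_equal(new_state, state):
            break
        state = new_state
    return state


N = 16
patterns = sylvester_hadamard(N)[1:4]  # three mutually orthogonal memories
W = store(patterns)

cue = patterns[0].copy()
cue[[0, 5]] *= -1  # corrupt two of sixteen units
completed = recall(W, cue)
```

Because the stored memories do not overlap, a cue with two units flipped falls back into the correct attractor (pattern completion); storing correlated patterns instead adds crosstalk between memories and makes completion less reliable.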

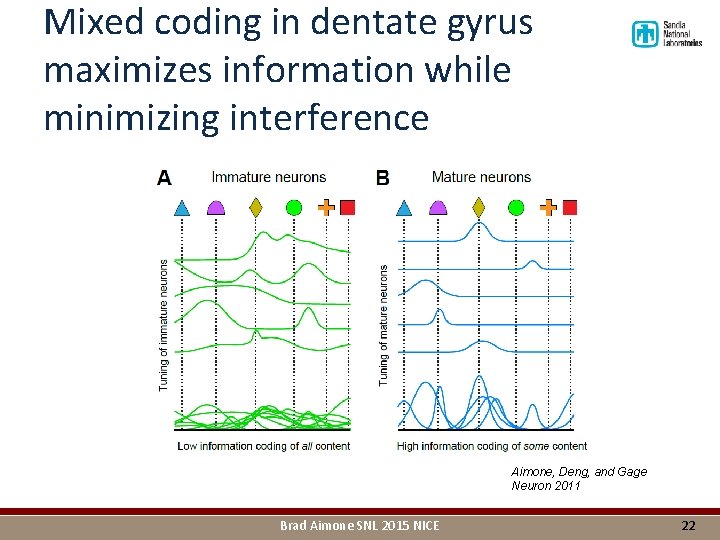

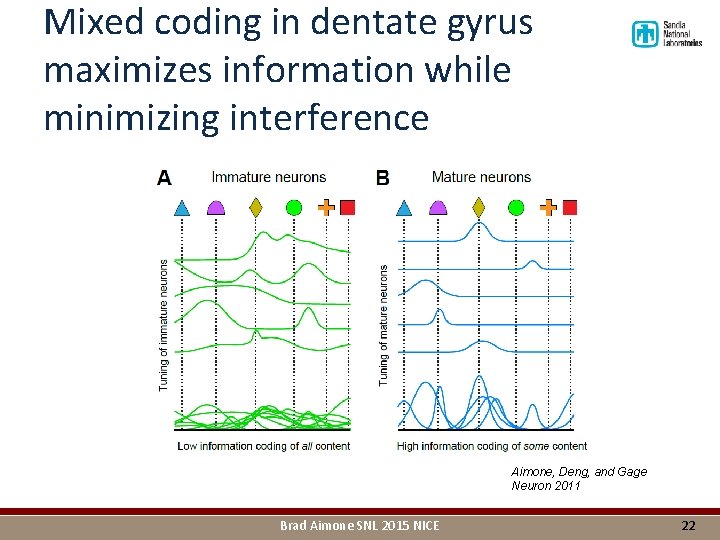

Mixed coding in the dentate gyrus maximizes information while minimizing interference

Aimone, Deng, and Gage, Neuron, 2011
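The sparse dentate gyrus code discussed in this section is often modeled as a random expansion followed by strong sparsification (k-winners-take-all). This sketch shows only that component; it does not model the immature-neuron contribution behind the mixed coding result cited above. All sizes, the sparsity level, and the Gaussian weights are hypothetical.

```python
import numpy as np


def dg_encode(x, W, k):
    """Expand the input with random weights, then keep only the k most driven units."""
    drive = W @ x
    code = np.zeros(W.shape[0], dtype=int)
    code[np.argsort(drive)[-k:]] = 1
    return code


def overlap(a, b):
    """Fraction of units active in a that are also active in b."""
    return np.sum(a & b) / max(np.sum(a), 1)


rng = np.random.default_rng(1)
n_in, n_out, k = 100, 1000, 50  # hypothetical sizes: 10x expansion, 5% active

W = rng.normal(size=(n_out, n_in))
x1 = (rng.random(n_in) < 0.5).astype(int)
x2 = x1.copy()
x2[:10] = 1 - x2[:10]  # a similar input differing in 10 of 100 units

c1, c2 = dg_encode(x1, W, k), dg_encode(x2, W, k)
print(f"input overlap {overlap(x1, x2):.2f}, DG overlap {overlap(c1, c2):.2f}")
```

The printed values compare the overlap of two similar inputs with the overlap of their sparse codes; sparse expansion of this kind typically reduces overlap (pattern separation), though the exact numbers depend on the random weights.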

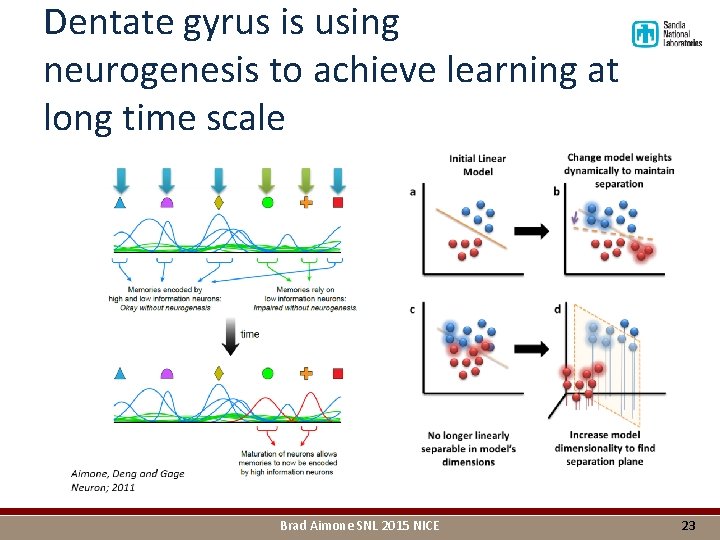

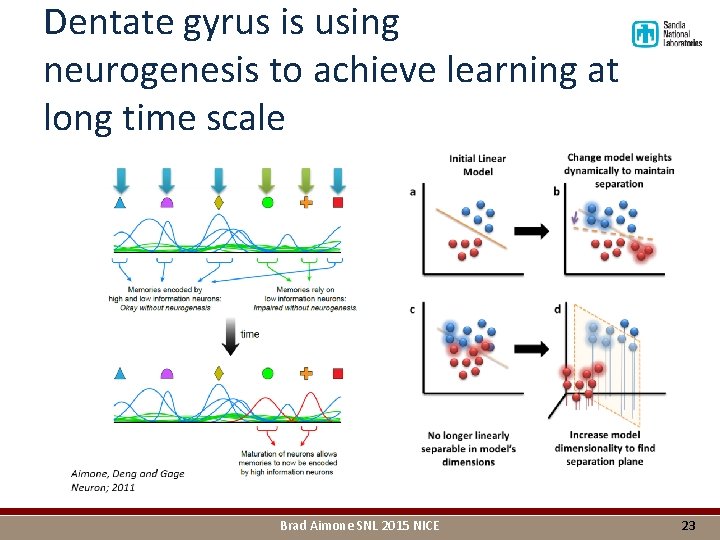

The dentate gyrus uses neurogenesis to achieve learning at long timescales

Lessons from how the brain does it

1. Learning is where it needs to be.
2. Function matters.
3. No such thing as a small brain.

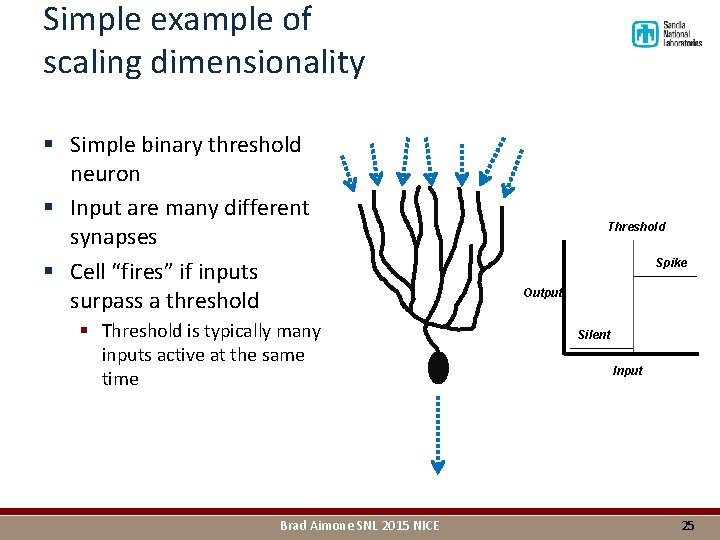

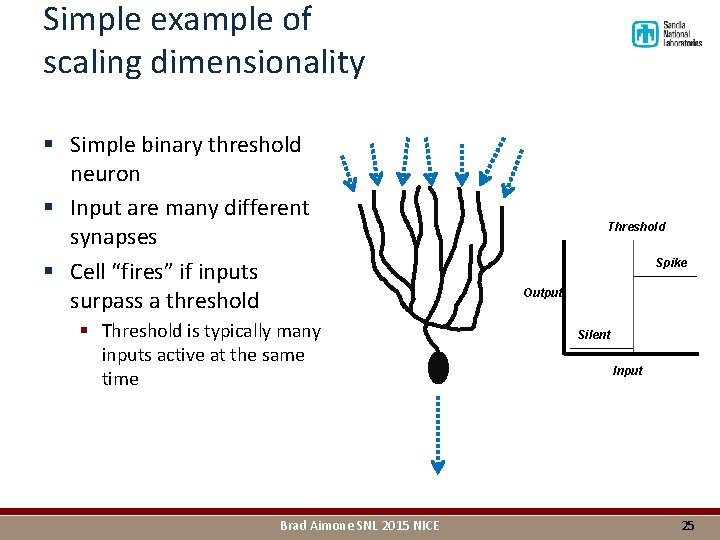

Simple example of scaling dimensionality

- Simple binary threshold neuron
  - Inputs arrive through many different synapses
  - The cell “fires” if its inputs surpass a threshold
  - The threshold typically requires many inputs to be active at the same time

[Figure: inputs summed against a threshold, producing either a spike output or silence.]
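A minimal version of the neuron described on this slide, assuming binary inputs and equal synaptic weights so that the threshold is simply a count of co-active inputs. The function name and the ten-synapse example are illustrative.

```python
def threshold_neuron(inputs, weights, threshold):
    """Return 1 (spike) if the weighted input reaches the threshold, else 0 (silent)."""
    drive = sum(w * x for w, x in zip(weights, inputs))
    return 1 if drive >= threshold else 0


weights = [1.0] * 10  # ten equally weighted synapses
print(threshold_neuron([1, 1, 1, 0, 0, 0, 0, 0, 0, 0], weights, threshold=3))  # 1
print(threshold_neuron([1, 1, 0, 0, 0, 0, 0, 0, 0, 0], weights, threshold=3))  # 0
```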

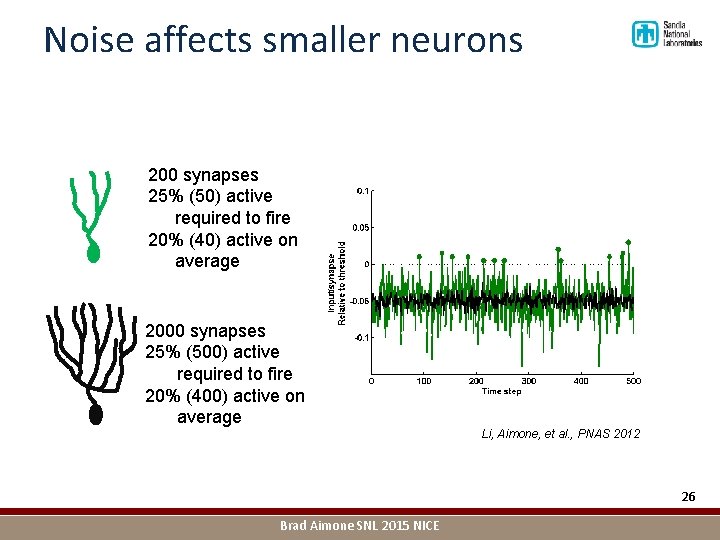

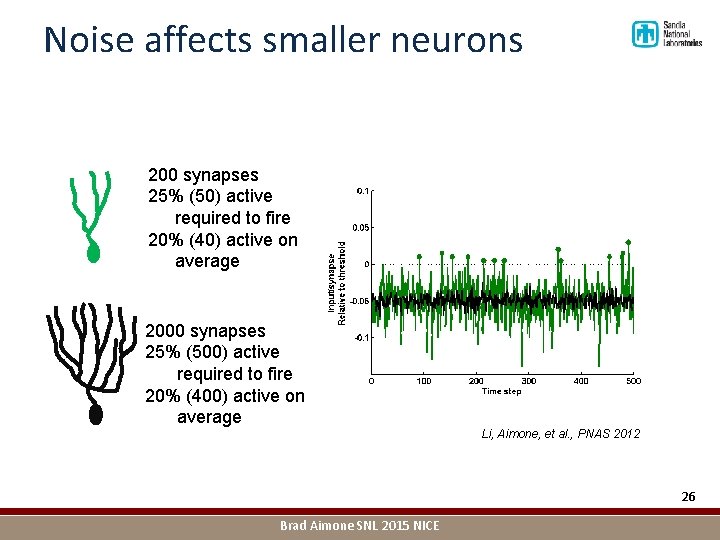

Noise affects smaller neurons

- 200 synapses: 25% (50) active required to fire; 20% (40) active on average
- 2,000 synapses: 25% (500) active required to fire; 20% (400) active on average

Li, Aimone, et al., PNAS 2012
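The numbers on this slide can be checked with a short calculation, assuming (as a simplification) that each synapse is active independently with probability 0.2. The chance that noise alone pushes a neuron past the 25% threshold is then a binomial tail, which shrinks sharply as the synapse count grows because the relative spread of the active count scales as 1/√n. The function name is illustrative.

```python
import math


def p_fire_by_chance(n_synapses, p_active, threshold):
    """P(at least `threshold` of n independent synapses are active), each with prob p_active."""
    log_p, log_q = math.log(p_active), math.log1p(-p_active)
    total = 0.0
    for k in range(threshold, n_synapses + 1):
        # Binomial pmf in log space, so the huge binomial coefficient never overflows.
        log_pmf = (math.lgamma(n_synapses + 1) - math.lgamma(k + 1)
                   - math.lgamma(n_synapses - k + 1)
                   + k * log_p + (n_synapses - k) * log_q)
        total += math.exp(log_pmf)
    return total


small = p_fire_by_chance(200, 0.20, 50)    # 200 synapses, 50 needed, 40 active on average
large = p_fire_by_chance(2000, 0.20, 500)  # 2,000 synapses, 500 needed, 400 on average
print(f"200 synapses: {small:.3g}, 2000 synapses: {large:.3g}")
```

Under these assumptions the small neuron fires spuriously a few percent of the time, while the large neuron essentially never does, even though both have the same percentage threshold and the same average activity.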

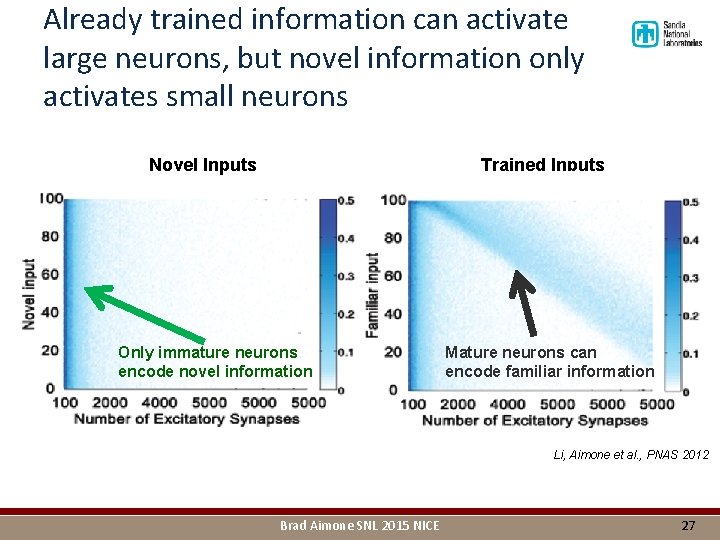

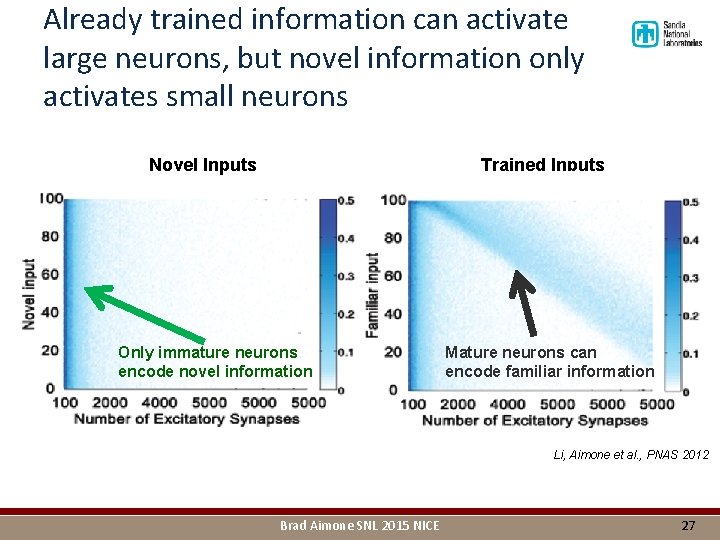

Already-trained information can activate large neurons, but novel information activates only small neurons

- Novel inputs: only immature neurons encode novel information
- Trained inputs: mature neurons can encode familiar information

Li, Aimone, et al., PNAS 2012

Lessons from how the brain does it

1. Learning is where it needs to be.
2. Function matters.
3. No such thing as a small brain.
4. Learning is local, regulation is global.

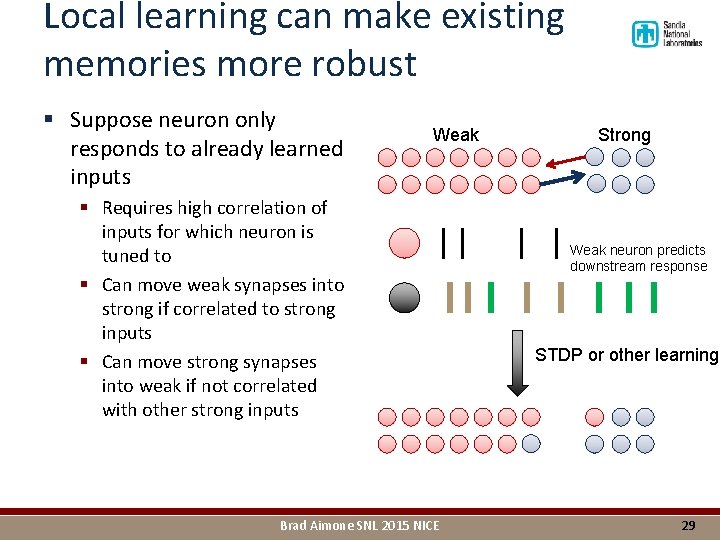

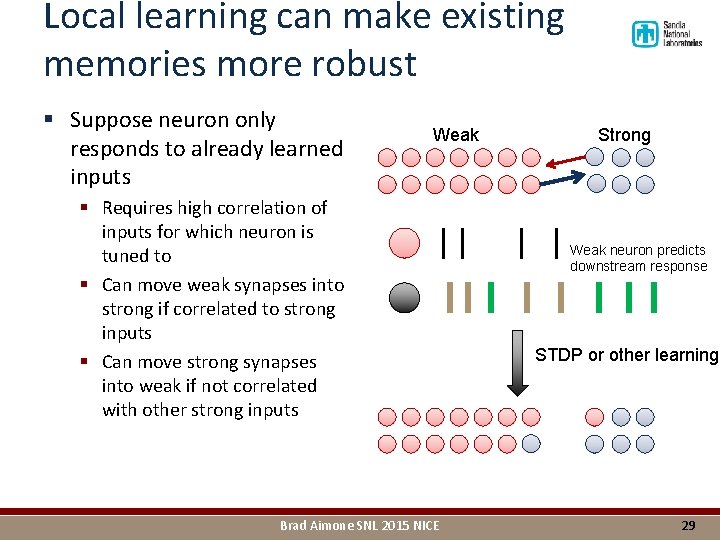

Local learning can make existing memories more robust

- Suppose a neuron responds only to already-learned inputs
  - This requires highly correlated activity among the inputs to which the neuron is tuned
  - Learning can move weak synapses to strong if they are correlated with strong inputs
  - Learning can move strong synapses to weak if they are not correlated with other strong inputs

[Figure: strong and weak synapses onto a neuron; the neuron predicts the downstream response; weights are adjusted by STDP or other learning.]
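The slide leaves the rule open (“STDP or other learning”). As one concrete option, the standard pair-based STDP window is sketched below. The amplitudes and time constants are illustrative assumptions; making depression slightly stronger than potentiation is a common modeling choice so that inputs with unrelated timing weaken on balance.

```python
import math


def stdp_dw(delta_t_ms, a_plus=0.01, a_minus=0.012, tau_plus_ms=20.0, tau_minus_ms=20.0):
    """Pair-based STDP weight change for delta_t = t_post - t_pre (in ms)."""
    if delta_t_ms > 0:   # pre fires before post: potentiation
        return a_plus * math.exp(-delta_t_ms / tau_plus_ms)
    if delta_t_ms < 0:   # post fires before pre: depression
        return -a_minus * math.exp(delta_t_ms / tau_minus_ms)
    return 0.0


# A weak synapse whose input reliably precedes the spikes driven by strong inputs.
w_correlated = 0.2 + sum(stdp_dw(dt) for dt in [5.0] * 20)
# A strong synapse whose spike timing is unrelated to the neuron's firing.
w_uncorrelated = 0.8 + sum(stdp_dw(dt) for dt in [-15.0, -5.0, 5.0, 15.0] * 5)
```

The correlated weak synapse strengthens and the uncorrelated strong synapse weakens, using only locally available spike times, which matches the two bullet points on this slide.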

Lessons from how the brain does it

1. Learning is where it needs to be.
2. Function matters.
3. No such thing as a small brain.
4. Learning is local, regulation is global.
5. There is more than one type of learning.

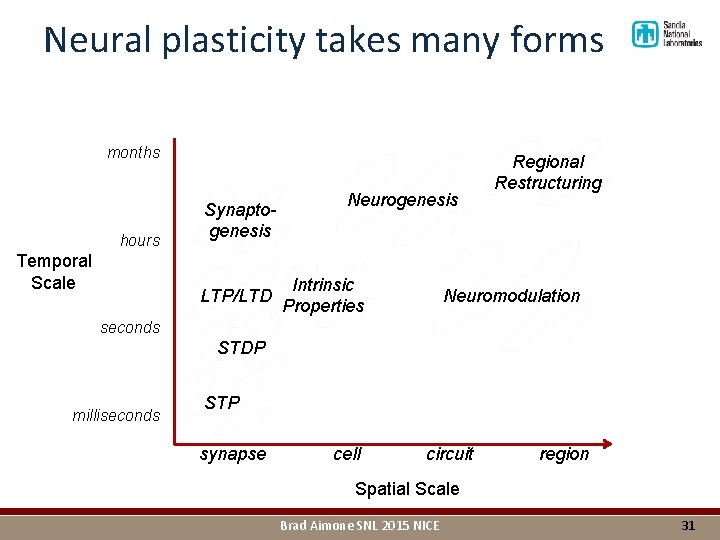

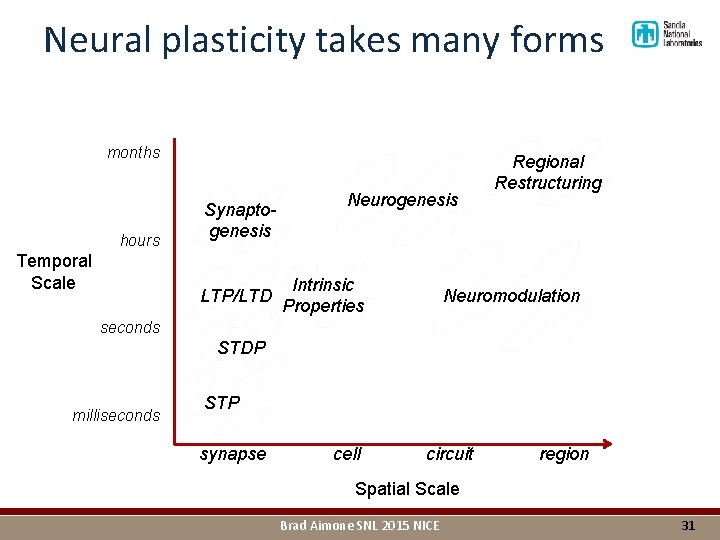

Neural plasticity takes many forms

[Figure: forms of plasticity arranged by temporal scale (milliseconds, seconds, hours, months) and spatial scale (synapse, cell, circuit, region): STP, STDP, LTP/LTD, synaptogenesis, neurogenesis, intrinsic properties, neuromodulation, and regional restructuring.]

Path forward

- Desired function should be a guide for learning strategy
  - Dentate gyrus example
    - Goal: maximize information throughput while minimizing correlations
    - Synaptic learning modifies existing representations at short timescales
    - Neurogenesis captures entirely novel information and develops specialty over long timescales
  - Sensory cortex
- Neuroscience provides a large toolkit, but different methods are not universal
- For applications, we need a function-guided design process
  - Fit the algorithm and learning to the data, not the other way around…

Thanks!