Personalizing Search

Jaime Teevan, MIT; Susan T. Dumais, MSR; and Eric Horvitz, MSR

- Slides: 27

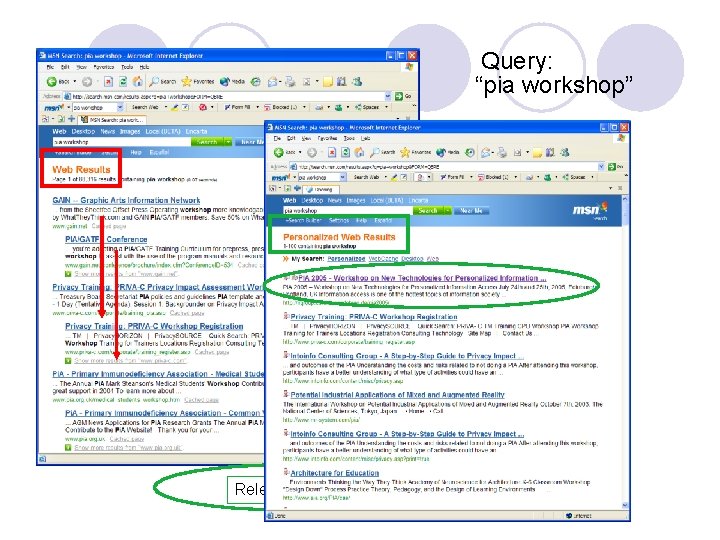

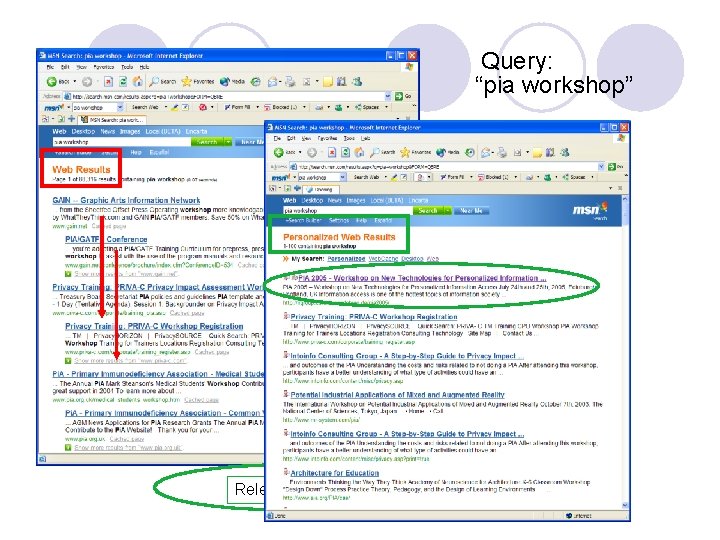

Query: “pia workshop” (figure: search results with the relevant result marked)

Outline
- Approaches to personalization
- The PS algorithm
- Evaluation
- Results
- Future work

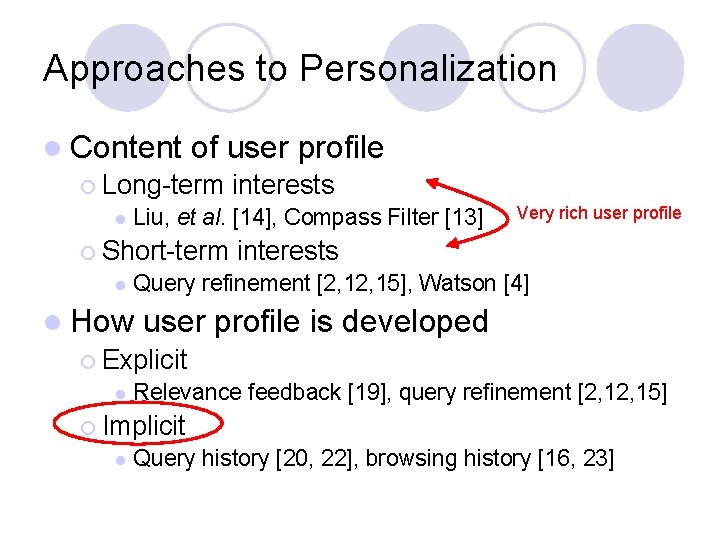

Approaches to Personalization
- Content of user profile
  - Long-term interests
    - Liu et al. [14], Compass Filter [13]
  - Short-term interests
    - Query refinement [2, 15], Watson [4]
- How user profile is developed
  - Explicit
    - Relevance feedback [19], query refinement [2, 15]
  - Implicit
    - Query history [20, 22], browsing history [16, 23]
- Very rich user profile

PS Search Engine (figure: a query sent to the search engine)

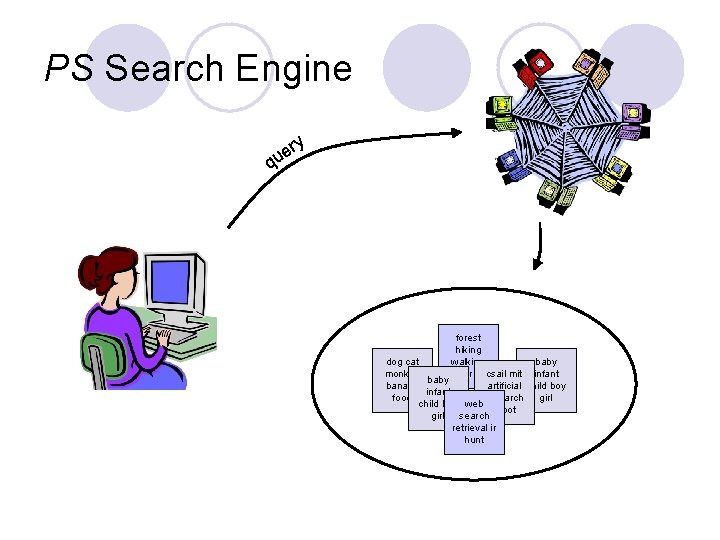

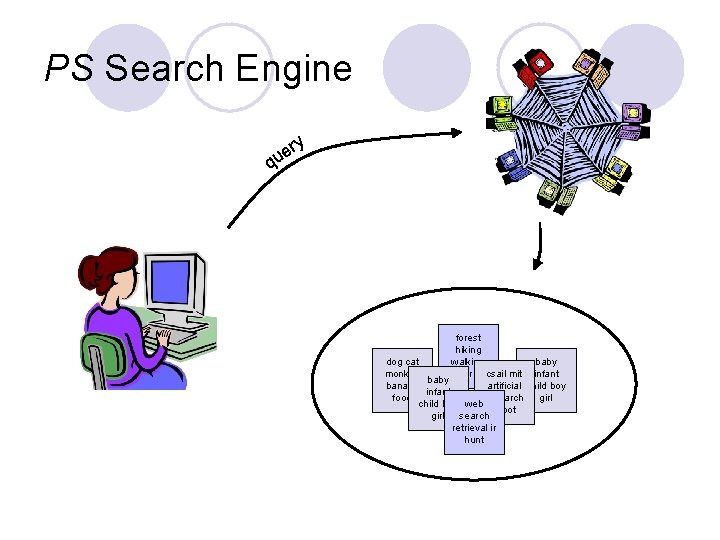

PS Search Engine (figure: query plus term clouds including forest, hiking, dog, cat, baby, walking, monkey, gorp, csail, mit, infant, artificial, child, boy, banana, food, research, girl, web, robot, search, retrieval, ir, hunt)

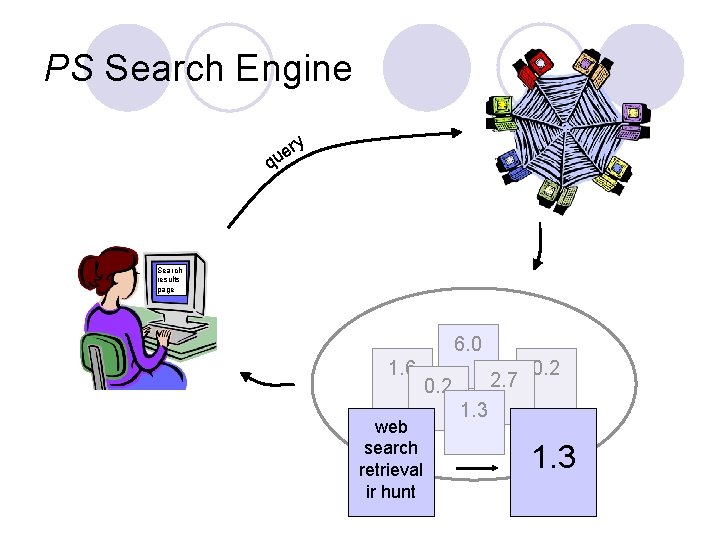

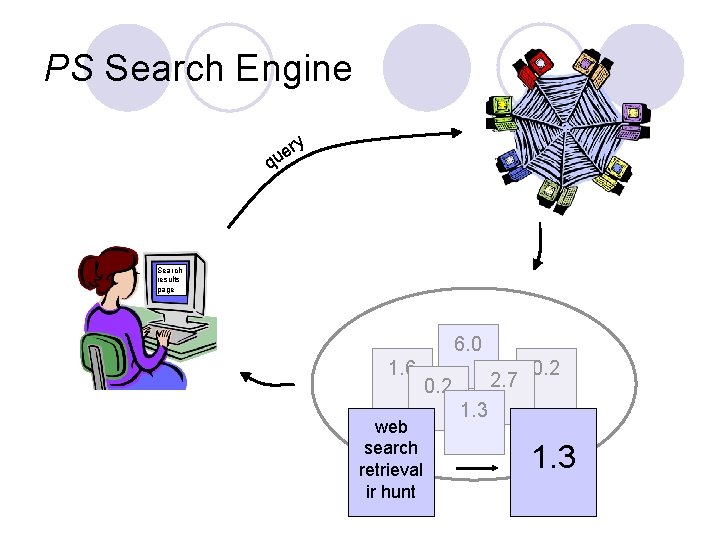

PS Search Engine (figure: query and search results page; result scores 6.0, 1.6, 2.7, 0.2, 1.3; example result terms: web, search, retrieval, ir, hunt)
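In the figure, each result on the returned page carries its own personalized score and the page is reordered on those scores. Below is a minimal Python sketch of that re-ranking step, assuming the scores have already been computed. `Result` and `rerank` are hypothetical names, and the URLs are made up; only the scores are the example values from the slide.

```python
from dataclasses import dataclass


@dataclass
class Result:
    """One search result returned by the server (hypothetical structure)."""
    url: str
    web_rank: int                # 1-based position in the original Web ranking
    personal_score: float = 0.0  # personalized score computed on the client


def rerank(results: list[Result]) -> list[Result]:
    """Order results by personalized score, highest first.

    sorted() stays stable with reverse=True, so ties keep their Web order.
    """
    return sorted(results, key=lambda r: r.personal_score, reverse=True)


if __name__ == "__main__":
    # Scores are the example values from the slide; URLs are made up.
    page = [
        Result("https://a.example", 1, 6.0),
        Result("https://b.example", 2, 1.6),
        Result("https://c.example", 3, 2.7),
        Result("https://d.example", 4, 0.2),
        Result("https://e.example", 5, 1.3),
    ]
    for r in rerank(page):
        print(r.web_rank, r.personal_score, r.url)
```

Re-ranking only reorders the results the server already returned. It cannot surface a document that was not in that set.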

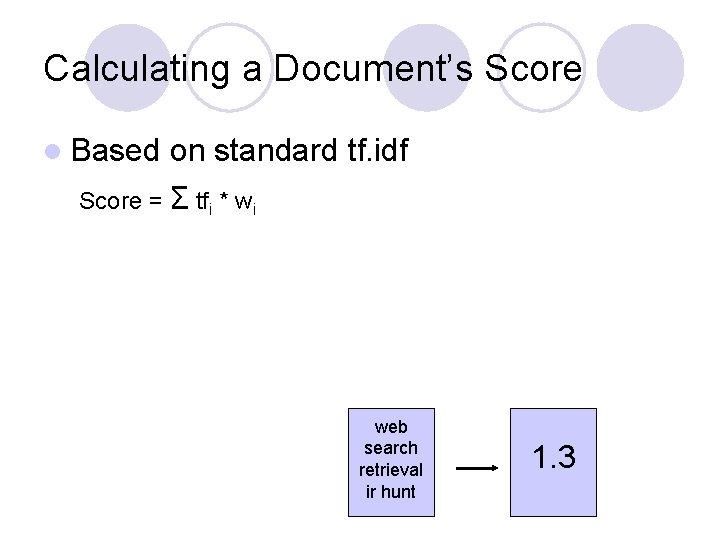

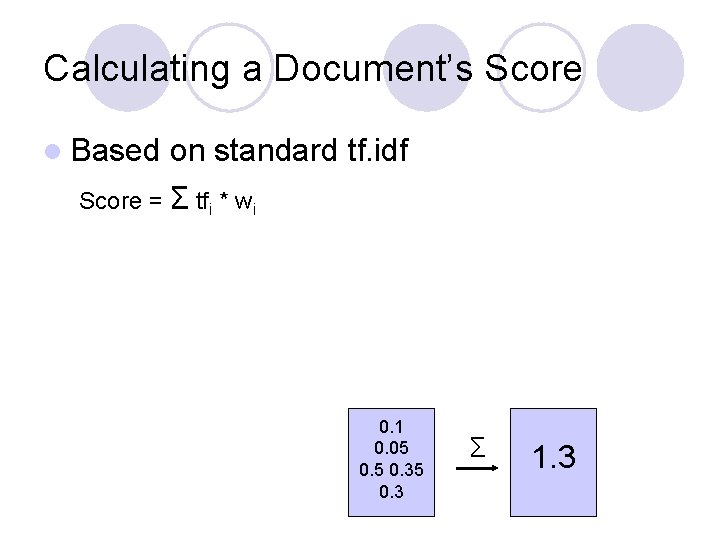

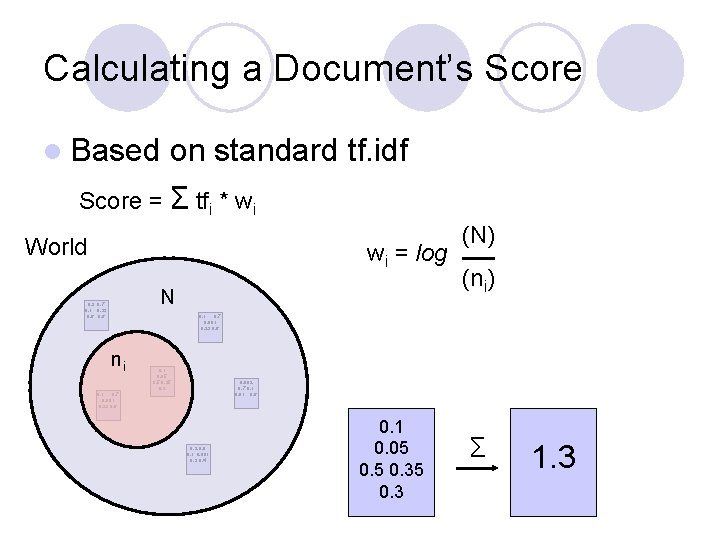

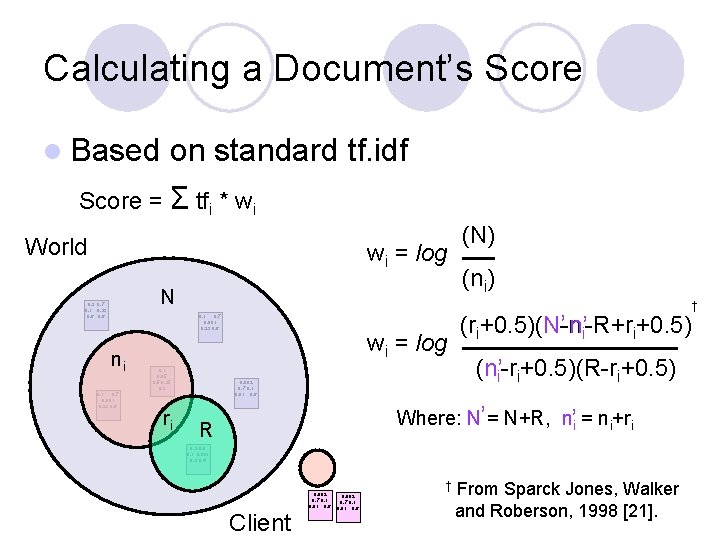

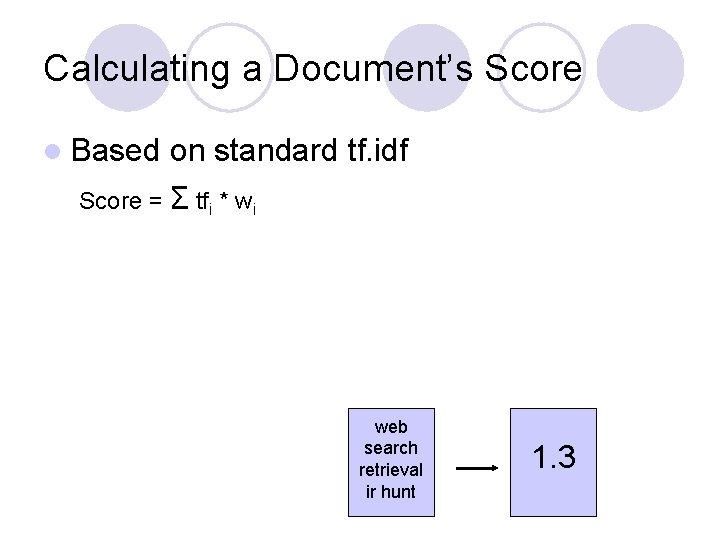

Calculating a Document’s Score
- Based on standard tf.idf: $\text{Score} = \sum_i tf_i \cdot w_i$

(figure: example document with terms web, search, retrieval, ir, hunt; Score = 1.3)

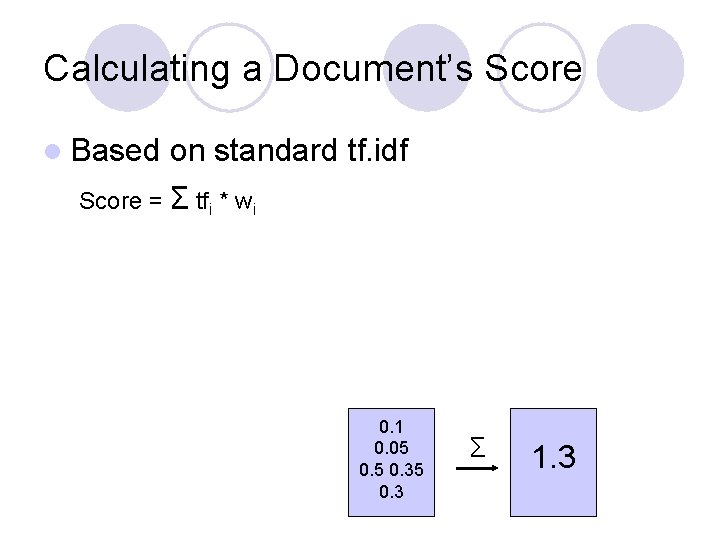

Calculating a Document’s Score
- Based on standard tf.idf: $\text{Score} = \sum_i tf_i \cdot w_i$

(figure: per-term values such as 0.1, 0.05, 0.35 and 0.3; Σ = 1.3)
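Here is a small sketch of the scoring formula. It assumes the document is already tokenized and the term weights w_i are given. `score` is a hypothetical helper, and the weights in the usage line are made up, not values from the study.

```python
from collections import Counter


def score(doc_terms: list[str], weights: dict[str, float]) -> float:
    """Score = sum_i tf_i * w_i over the terms that occur in the document.

    Terms with no entry in `weights` contribute nothing.
    """
    tf = Counter(doc_terms)  # tf_i: how often term i occurs in this document
    return sum(count * weights.get(term, 0.0) for term, count in tf.items())


if __name__ == "__main__":
    weights = {"web": 0.5, "search": 0.25}  # made-up weights for illustration
    print(score(["web", "search", "web", "hunt"], weights))  # 2*0.5 + 0.25 = 1.25
```

Which tokens go into `doc_terms` (full text or snippet) is exactly the document-representation choice discussed under parameter values.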

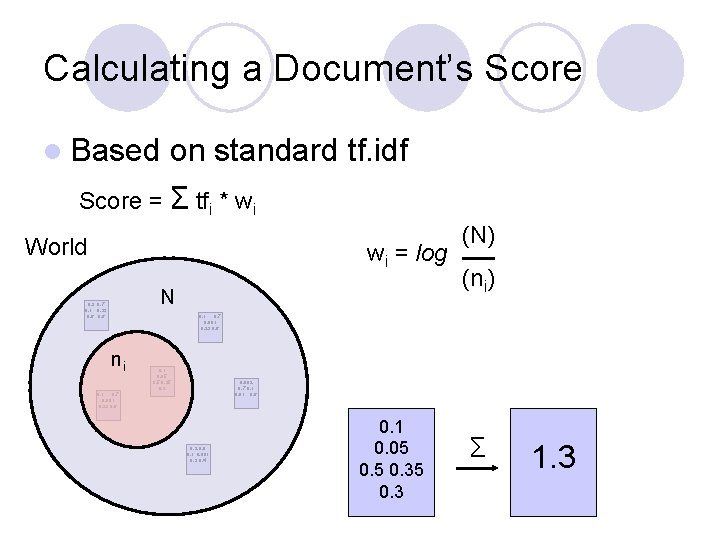

Calculating a Document’s Score
- Based on standard tf.idf: $\text{Score} = \sum_i tf_i \cdot w_i$
- World weight: $w_i = \log \frac{N}{n_i}$, where $N$ is the number of documents in the corpus and $n_i$ is the number of those containing term $i$

(figure: per-term values for the example document; Σ = 1.3)
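The world weight is a plain inverse document frequency. The sketch below uses the natural log, which is an assumption because the slide gives no base. Changing the base multiplies every w_i by the same positive constant, so the ranking does not change. `idf_weight` is a hypothetical name.

```python
import math


def idf_weight(N: int, n_i: int) -> float:
    """World weight w_i = log(N / n_i), natural log assumed.

    N:   number of documents in the corpus
    n_i: number of those documents that contain term i (must be > 0)
    """
    if n_i <= 0:
        raise ValueError("n_i must be positive; log(N / 0) is undefined")
    return math.log(N / n_i)


if __name__ == "__main__":
    print(idf_weight(1000, 10))   # rare term: larger weight
    print(idf_weight(1000, 900))  # common term: weight close to 0
```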

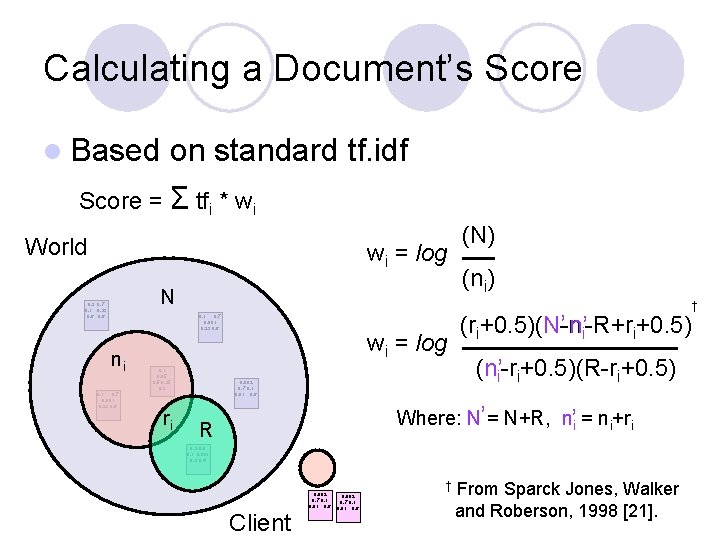

Calculating a Document’s Score
- Based on standard tf.idf: $\text{Score} = \sum_i tf_i \cdot w_i$
- World weight: $w_i = \log \frac{N}{n_i}$
- Client: with the user's documents as relevance feedback ($R$ documents, $r_i$ of which contain term $i$)†

$$w_i = \log \frac{(r_i + 0.5)(N' - n_i' - R + r_i + 0.5)}{(n_i' - r_i + 0.5)(R - r_i + 0.5)}$$

where $N' = N + R$ and $n_i' = n_i + r_i$.

† From Sparck Jones, Walker and Robertson, 1998 [21].
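The sketch below writes the relevance-feedback weight term by term from the formula on the slide. It assumes N, n_i, R and r_i are plain counts with n_i ≤ N and r_i ≤ R, and it uses the natural log as an assumption. `feedback_weight` is a hypothetical name.

```python
import math


def feedback_weight(N: int, n_i: int, R: int, r_i: int) -> float:
    """Relevance-feedback term weight in the form shown on the slide.

    N, n_i: corpus size and number of corpus documents containing term i
    R, r_i: number of user documents (treated as relevant) and how many contain term i
    The user's documents are folded into the corpus: N' = N + R, n_i' = n_i + r_i.
    """
    N_p = N + R    # N'
    n_p = n_i + r_i  # n_i'
    numerator = (r_i + 0.5) * (N_p - n_p - R + r_i + 0.5)
    denominator = (n_p - r_i + 0.5) * (R - r_i + 0.5)
    return math.log(numerator / denominator)


if __name__ == "__main__":
    # Term in 10 of 1000 corpus documents and in 15 of the user's 20 documents.
    print(feedback_weight(1000, 10, 20, 15))
    # Same term, but in only 1 of the user's 20 documents: lower weight.
    print(feedback_weight(1000, 10, 20, 1))
```

Substituting N' and n_i' simplifies the numerator's second factor to N - n_i + 0.5. With no user model (R = r_i = 0), the weight reduces to log((N - n_i + 0.5) / (n_i + 0.5)), a smoothed relative of log(N / n_i).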

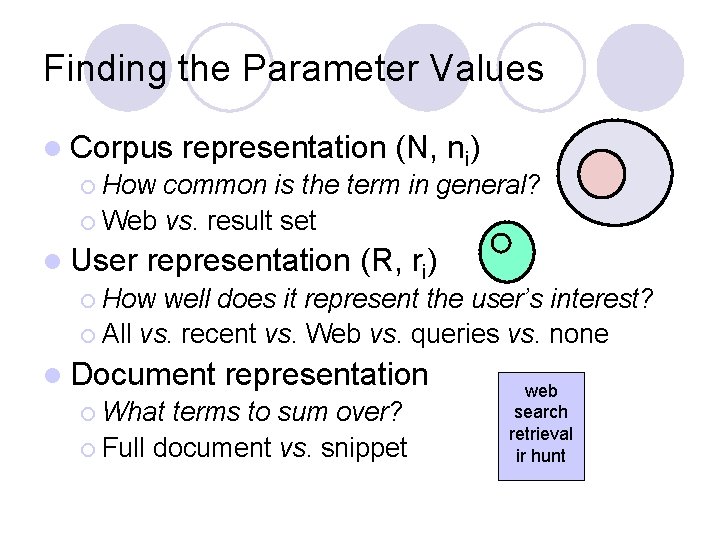

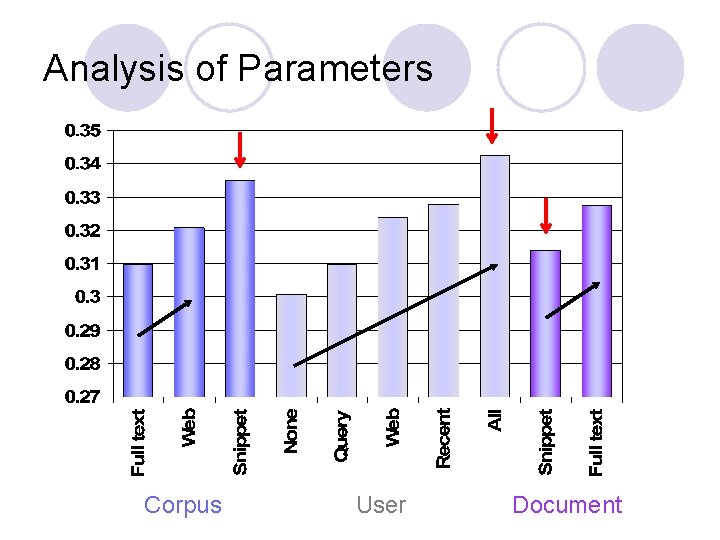

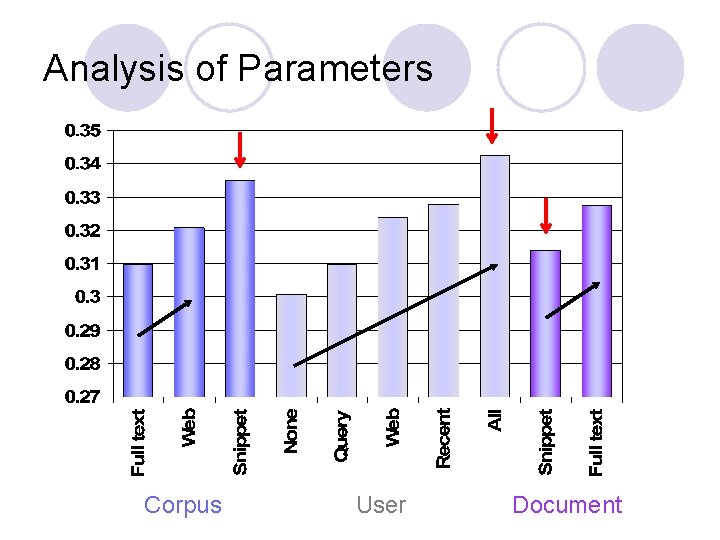

Finding the Parameter Values
- Corpus representation (N, n_i)
  - How common is the term in general?
  - Web vs. result set
- User representation (R, r_i)
  - How well does it represent the user’s interest?
  - All vs. recent vs. Web vs. queries vs. none
- Document representation
  - What terms to sum over?
  - Full document vs. snippet
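The corpus statistics (N, n_i) and the user statistics (R, r_i) are both document counts. One helper can therefore produce either, depending on which documents are passed in. For a result-set corpus, pass the results' full text or snippets. For the user model, pass the user's indexed documents. The sketch below is minimal, and `doc_frequencies` is a hypothetical name.

```python
from collections import Counter


def doc_frequencies(docs: list[list[str]]) -> tuple[int, Counter]:
    """Return (number of documents, per-term document frequency).

    Each term is counted at most once per document, so the counts are
    n_i (or r_i) rather than raw term frequencies.
    """
    df: Counter = Counter()
    for doc in docs:
        df.update(set(doc))
    return len(docs), df


if __name__ == "__main__":
    snippets = [["web", "search", "web"], ["search", "ir"], ["hunt"]]
    N, n = doc_frequencies(snippets)
    print(N, n["web"], n["search"], n["retrieval"])
```

For example, `N, n = doc_frequencies(result_snippets)` and `R, r = doc_frequencies(user_docs)` would give the two sets of counts. Both inputs are hypothetical lists of token lists. Counter returns 0 for unseen terms, so an n_i of 0 must still be guarded against before computing log(N / n_i).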

Building a Test Bed
- 15 evaluators × ~10 queries
  - 131 queries total
- Personally meaningful queries
  - Selected from a list
  - Queries issued earlier (kept diary)
- Evaluate 50 results for each query
  - Highly relevant / relevant / irrelevant
- Index of personal information

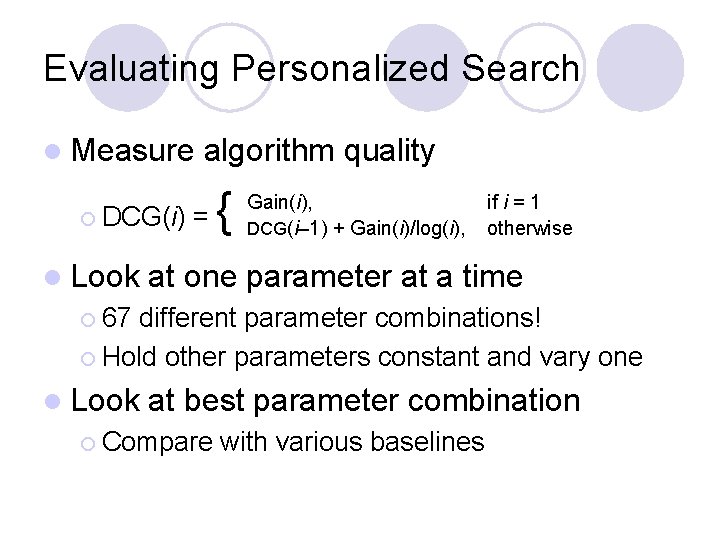

Evaluating Personalized Search
- Measure algorithm quality with DCG:

$$DCG(i) = \begin{cases} Gain(i), & \text{if } i = 1 \\ DCG(i-1) + \dfrac{Gain(i)}{\log(i)}, & \text{otherwise} \end{cases}$$

- Look at one parameter at a time
  - 67 different parameter combinations!
  - Hold other parameters constant and vary one
- Look at best parameter combination
  - Compare with various baselines
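The sketch below implements the DCG recurrence. Several choices are assumptions for illustration: gain values of 2 for highly relevant, 1 for relevant, and 0 for irrelevant; a base-2 logarithm; and normalization by the ideal ordering. The slide gives only the recurrence. `dcg` and `ndcg` are hypothetical names.

```python
import math


def dcg(gains: list[float]) -> float:
    """DCG following the recurrence: DCG(1) = Gain(1),
    DCG(i) = DCG(i-1) + Gain(i) / log(i) for i > 1.

    Base-2 log is an assumption; the slide writes log(i) with no base.
    """
    total = 0.0
    for i, gain in enumerate(gains, start=1):
        total += gain if i == 1 else gain / math.log2(i)
    return total


def ndcg(gains: list[float]) -> float:
    """DCG divided by the DCG of the same gains sorted best-first (assumed)."""
    ideal = dcg(sorted(gains, reverse=True))
    return dcg(gains) / ideal if ideal > 0 else 0.0


if __name__ == "__main__":
    # Hypothetical ratings for a ranked list: 2 = highly relevant, 1 = relevant, 0 = irrelevant.
    ranking = [0, 2, 1, 0, 2]
    print(dcg(ranking), ndcg(ranking))
```

Normalizing by the ideal ordering makes scores comparable across queries that have different numbers of relevant results.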

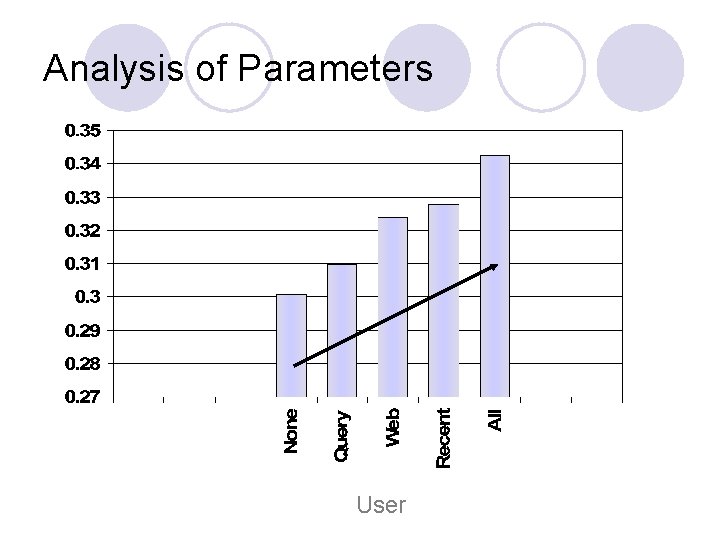

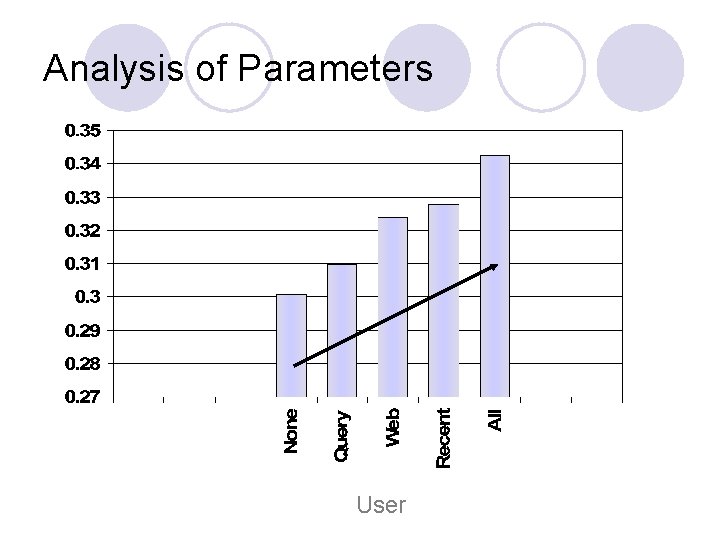

Analysis of Parameters (figure panels: Corpus, User, Document)

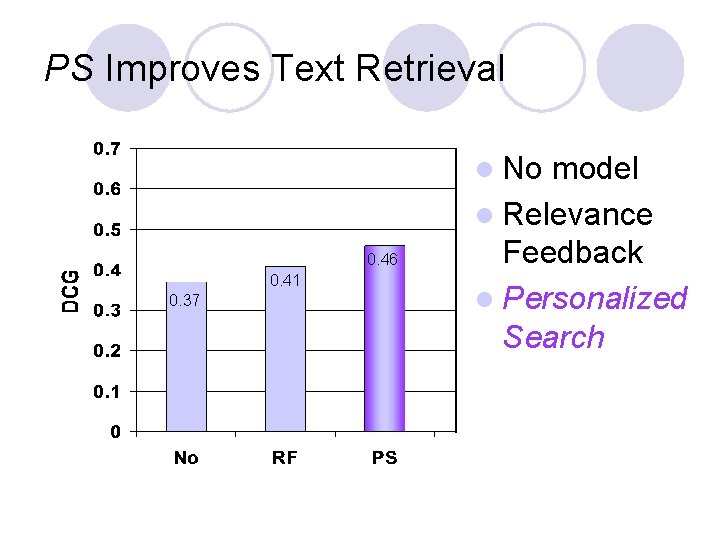

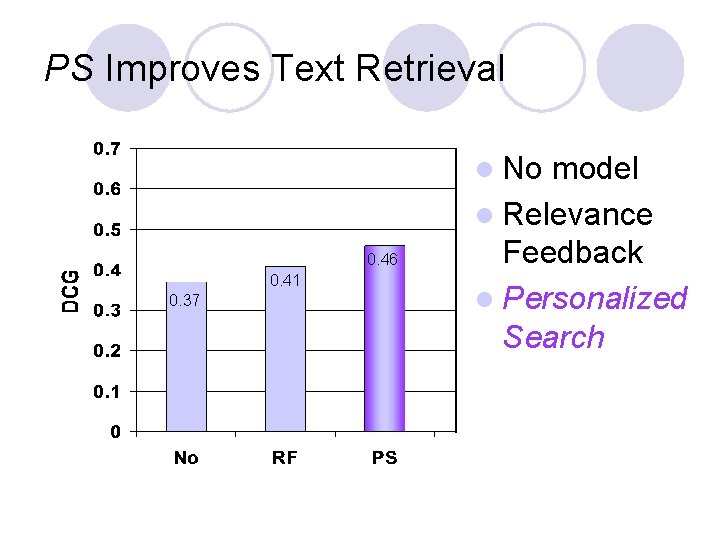

PS Improves Text Retrieval
- No model: 0.37
- Relevance Feedback: 0.41
- Personalized Search: 0.46

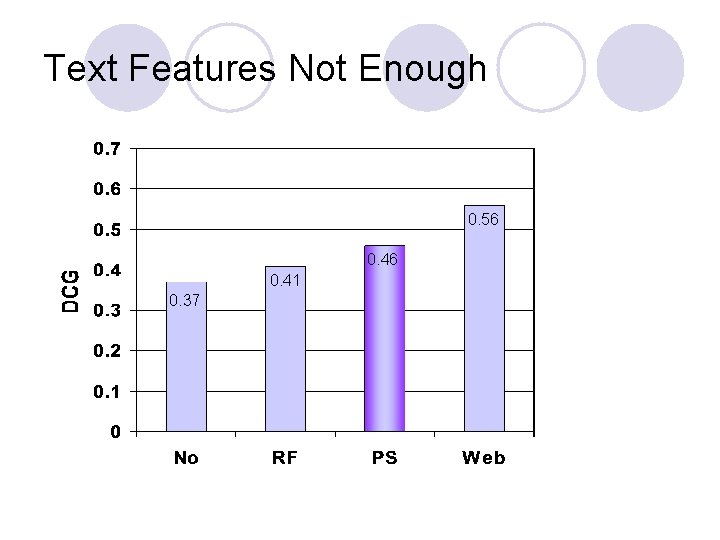

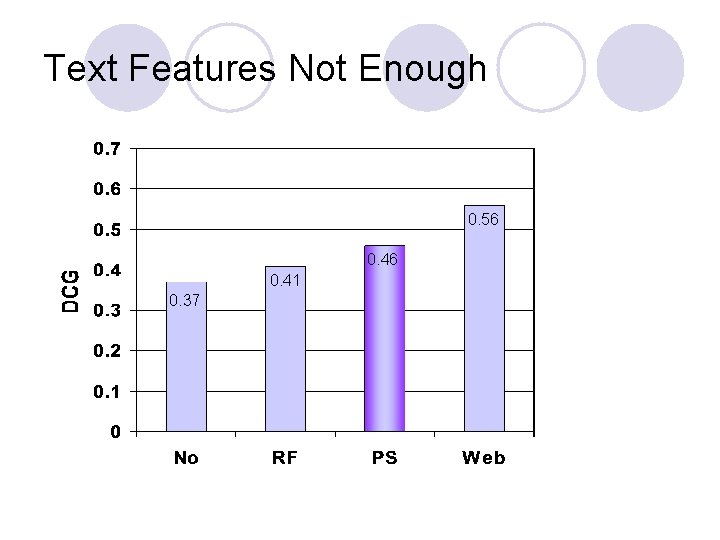

Text Features Not Enough (chart values: 0.56, 0.41, 0.37)

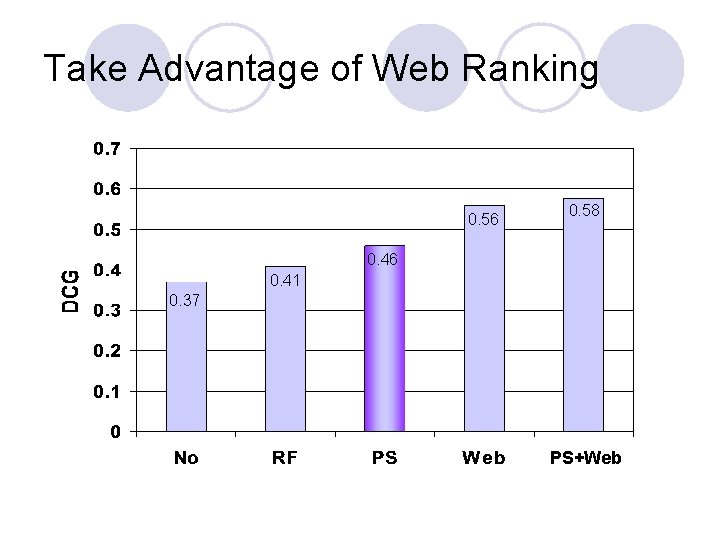

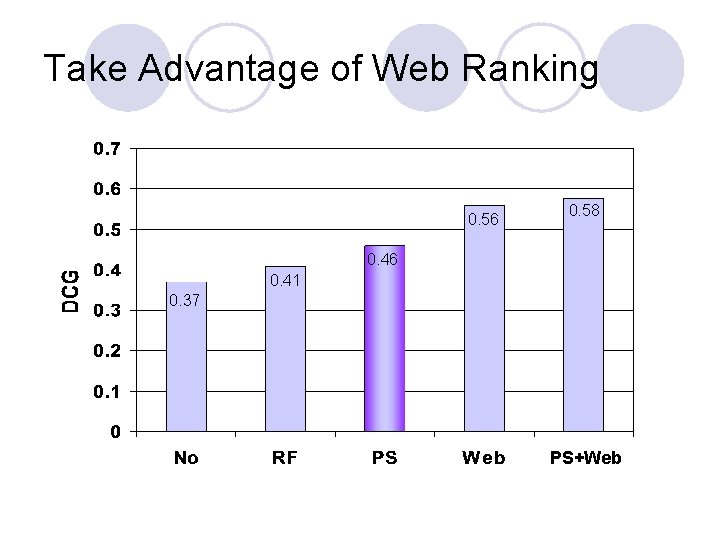

Take Advantage of Web Ranking (chart values: 0.56, 0.58, 0.46, 0.41, 0.37; new bar: PS+Web)
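The slides do not say how the Web ranking and the personalized score are combined for PS+Web. One simple possibility is shown below purely as an illustration, not as the authors' method. It maps each signal to [0, 1] and interpolates between them with a weight `alpha`. The same kind of knob could back a slider between Web and personalized results. `blend` and its normalization choices are hypothetical.

```python
def blend(web_ranks: list[int], personal_scores: list[float], alpha: float) -> list[int]:
    """Return result indices ordered by a blended score (hypothetical scheme).

    web_ranks:       1-based Web rank of each result
    personal_scores: personalized score of each result, in the same order
    alpha:           0.0 = pure Web ranking, 1.0 = pure personalized ranking
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    n = len(web_ranks)
    if n == 0:
        return []
    # Web rank mapped to (0, 1]: rank 1 -> 1.0, rank n -> 1/n.
    web = [(n - r + 1) / n for r in web_ranks]
    # Personalized scores min-max scaled to [0, 1]; all equal -> all 0.
    lo, hi = min(personal_scores), max(personal_scores)
    span = hi - lo
    personal = [(s - lo) / span if span > 0 else 0.0 for s in personal_scores]
    combined = [(1 - alpha) * w + alpha * p for w, p in zip(web, personal)]
    return sorted(range(n), key=lambda i: combined[i], reverse=True)


if __name__ == "__main__":
    ranks = [1, 2, 3, 4, 5]
    scores = [6.0, 1.6, 2.7, 0.2, 1.3]  # example scores from the earlier slide
    for a in (0.0, 0.5, 1.0):
        print(a, blend(ranks, scores, a))
```

Min-max scaling is only one choice. Because it is sensitive to a single outlier score, a rank-based mapping for both signals is a reasonable alternative.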

Summary
- Personalization of Web search
  - Result re-ranking
  - User’s documents as relevance feedback
- Rich representations important
  - Rich user profile particularly important
  - Efficiency hacks possible
  - Need to incorporate features beyond text

Further Exploration
- Improved non-text components
  - Usage data
  - Personalized PageRank
- Learn parameters
  - Based on individual
  - Based on query
  - Based on results
- UIs for user control

User Interface Issues
- Make personalization transparent
- Give user control over personalization
  - Slider between Web and personalized results
  - Allows for background computation
- Exacerbates problem with re-finding
  - Results change as user model changes
  - Thesis research: Re:Search Engine

Thank you!
teevan@csail.mit.edu
sdumais@microsoft.com
horvitz@microsoft.com

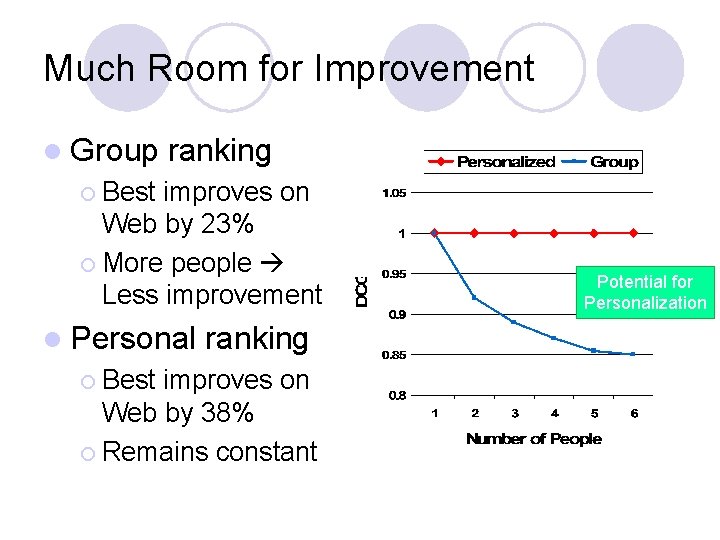

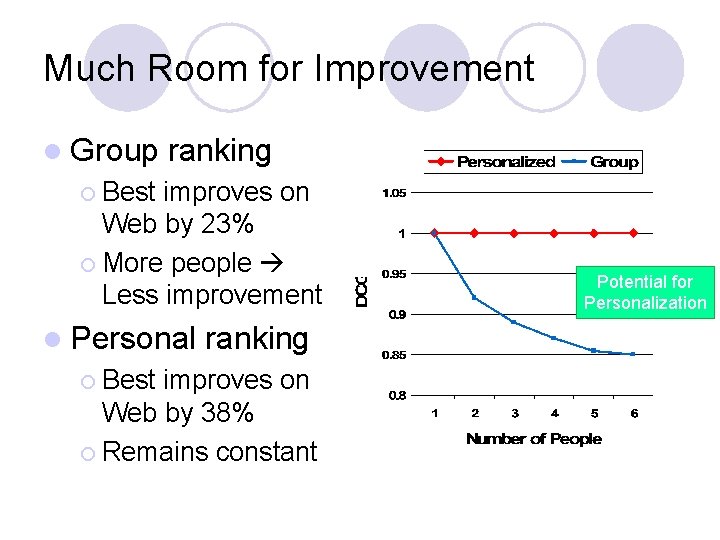

Much Room for Improvement
- Group ranking
  - Best improves on Web by 23%
  - More people, less improvement
- Personal
  - Best ranking improves on Web by 38%
  - Remains constant

(figure: Potential for Personalization)

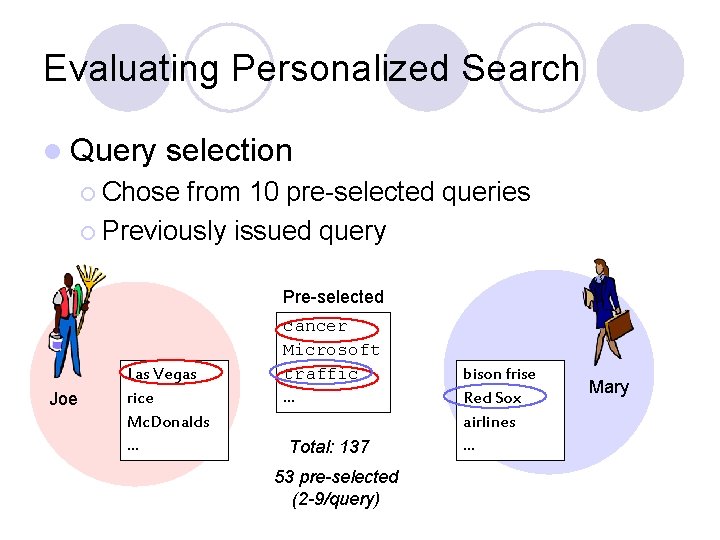

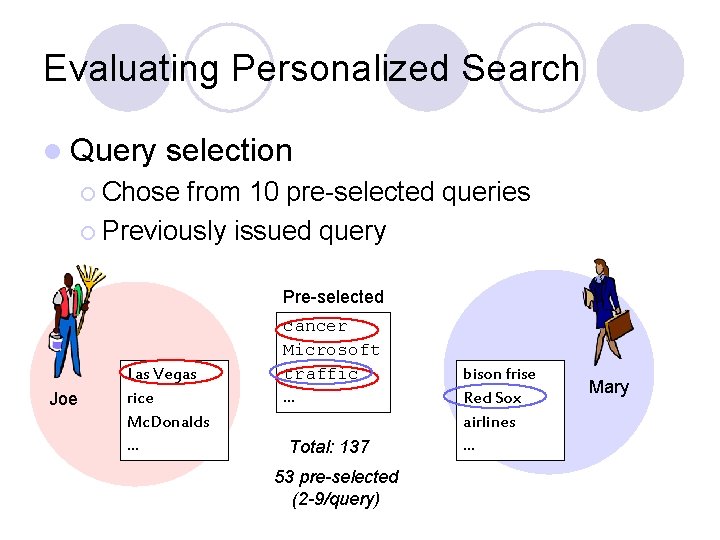

Evaluating Personalized Search
- Query selection
  - Chose from 10 pre-selected queries
  - Previously issued query

(figure: example queries, including Joe, Las Vegas, rice, McDonalds, cancer, Microsoft, traffic, bison frise, Red Sox, airlines, Mary, …; Total: 137; 53 pre-selected (2 to 9 per query))

Making PS Practical
- Learn most about personalization by deploying a system
- Best algorithm reasonably efficient
- Merging server and client
  - Query expansion
    - Get more relevant results in the set to be re-ranked
  - Design snippets for personalization