PATENT SEARCH USING IPC CLASSIFICATION VECTORS MANISHA VERMA

- Slides: 17

PATENT SEARCH USING IPC CLASSIFICATION VECTORS MANISHA VERMA, VASUDEVA VARMA

OUTLINE • Motivation • Proposed Approach • Vector Generation • Vector Propagation • Evaluation • • • Dataset Cosine similarity (CS) Graded Cosine Similarity (GSC) Text Score + Similarity Score Results • Future Work

SEARCH PATENTS, BUT HOW ? • Input : A patent application • Process : • • • Study the patent Find key words related to the invention Formulate query with help of several operators Query the patent database Refine the query depending on the results • Problem : Search results depend on the query, query depends on keywords selection. Quality of search results depends heavily on the choice of words and their weight in the query

MOTIVATION • Selection of keywords requires domain expertise, restricts the number and areas of examiner’s patent searches. • Manual and tedious process of query creation. So many words and so many operators, optimal combination needs expertise. • What claims to focus on, which ones to leave. Some patent applications have over 100 claims.

WHAT MAY HELP • Automatic query creation and refinement. • Exploiting the meta-data present in the application to improve results.

WHAT'S IN PATENTS • Inventor information • IPC (International Patent Classification) class code information. • Date of filling • Citations • Images • And of course the patent text – Title, Abstract, Description and Claims

OUR APPROACH 1. Use Category and citation information in patents to filter results. 2. Use text search to improve precision.

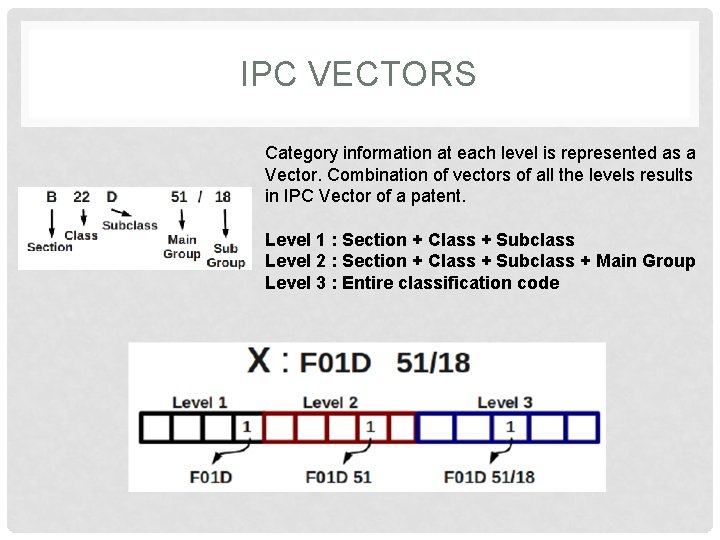

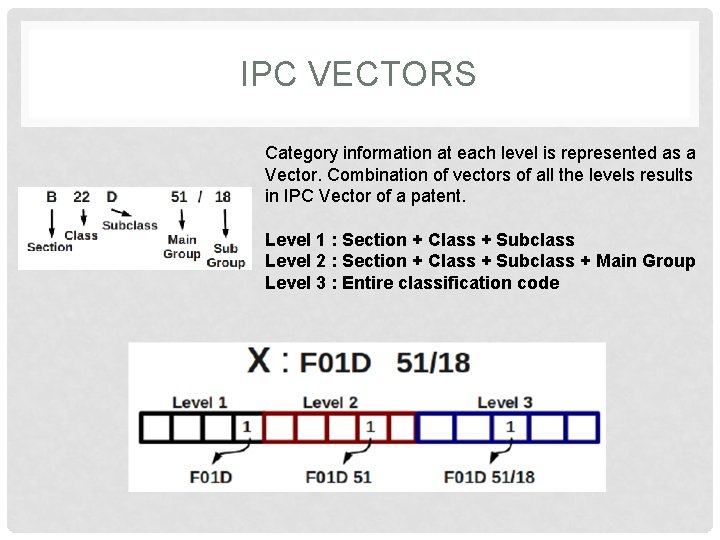

IPC VECTORS Category information at each level is represented as a Vector. Combination of vectors of all the levels results in IPC Vector of a patent. Level 1 : Section + Class + Subclass Level 2 : Section + Class + Subclass + Main Group Level 3 : Entire classification code

VECTOR PROPAGATION • Citation graph of patents is used to enrich the vector. It is a directed graph which has a link from Node A to Node B if patent A cites patent B. • Inlinks (incoming edges) of a node are used to add information to its vector. • Propagation ensures that if Node A is retrieved then its neighbors are also present in the solution set, this improves the recall of the system

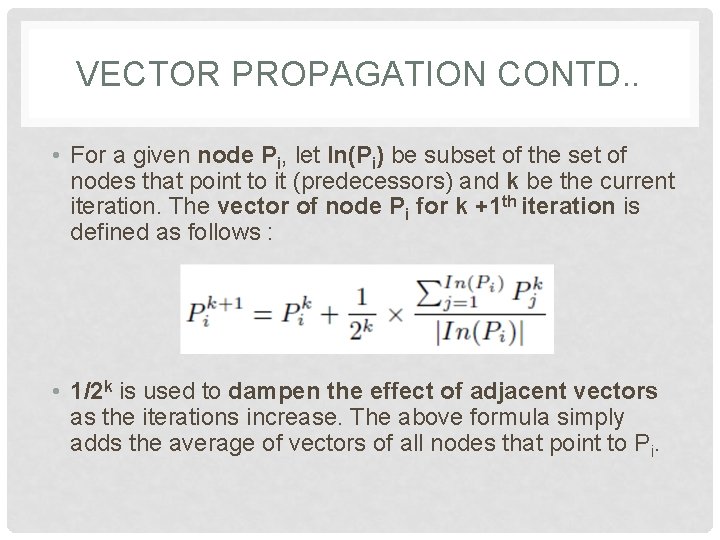

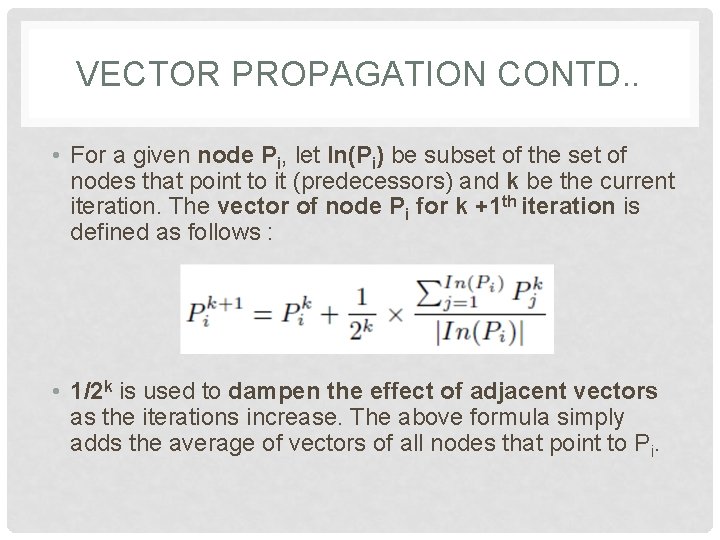

VECTOR PROPAGATION CONTD. . • For a given node Pi, let In(Pi) be subset of the set of nodes that point to it (predecessors) and k be the current iteration. The vector of node Pi for k +1 th iteration is defined as follows : • 1/2 k is used to dampen the effect of adjacent vectors as the iterations increase. The above formula simply adds the average of vectors of all nodes that point to Pi.

EVALUATION • We use the CLEF-IP 2011 collection of Prior Art Search (PAC) task that has 2. 6 million patents pertaining to 1. 3 million patents European Patent Office (EPO) with content in English, German and French, and extended by documents from WIPO. • There are 300 sample patent applications as queries with the dataset. • Both English and original patents are used for making queries.

EVALUATION • Base: Simple Text Retrieval, 20 words, from the query patent, with high tf-idf values are used to form a weighted query. The weight of each word is its tf-idf score. • COS: Cosine Similarity, IPC information present in the patent is used to make the vector. Entire vector is used to calculate cosine similarity between a patent and query. • GCS: Graded Cosine Similarity, calculating similarity at each level and linearly combining them to get final score.

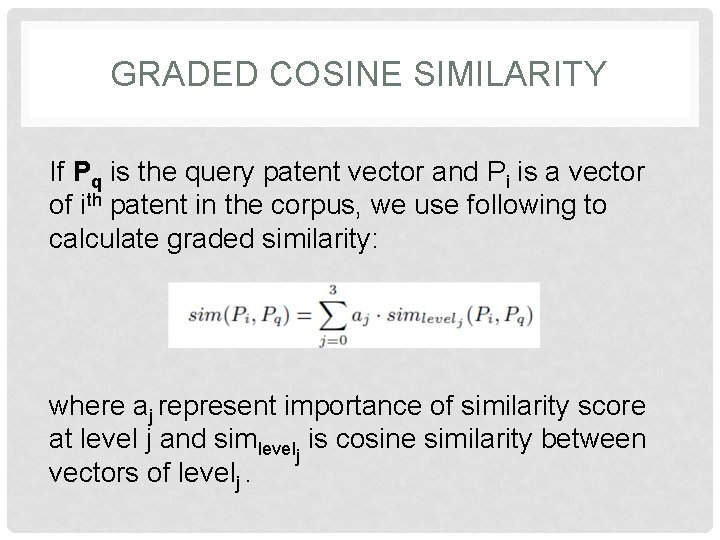

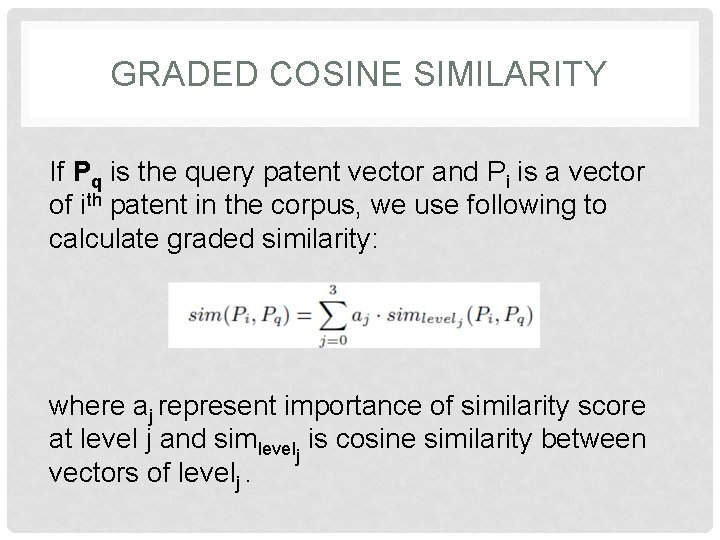

GRADED COSINE SIMILARITY If Pq is the query patent vector and Pi is a vector of ith patent in the corpus, we use following to calculate graded similarity: where aj represent importance of similarity score at level j and simlevelj is cosine similarity between vectors of levelj.

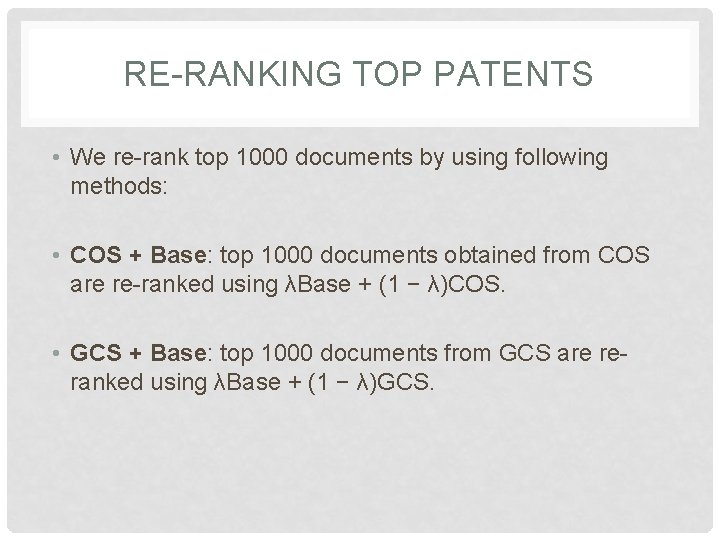

RE-RANKING TOP PATENTS • We re-rank top 1000 documents by using following methods: • COS + Base: top 1000 documents obtained from COS are re-ranked using λBase + (1 − λ)COS. • GCS + Base: top 1000 documents from GCS are reranked using λBase + (1 − λ)GCS.

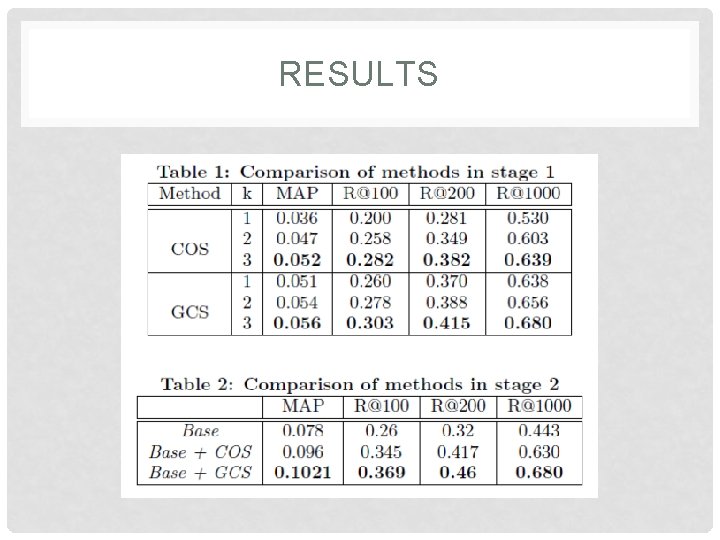

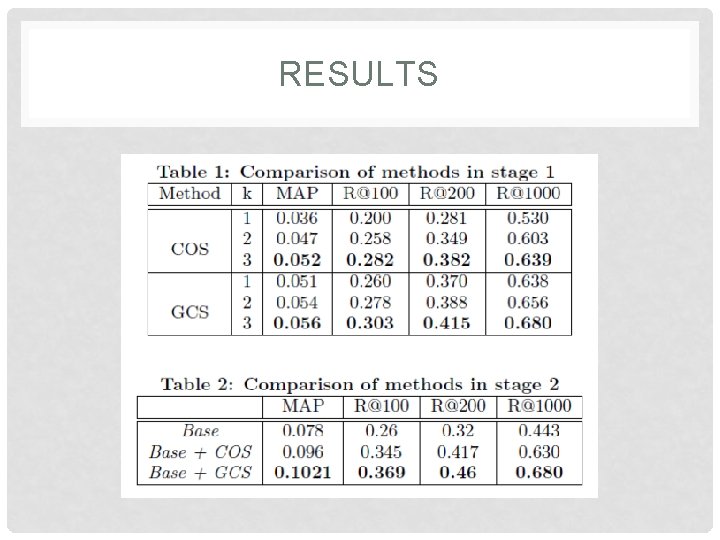

RESULTS

CONCLUSION AND FUTURE WORK • Both IPC based representation and re-ranking on sample queries of CLEF-IP 2011 dataset perform better than the baseline i. e. text based retrieval in terms of precision and recall. • An extension to this work would be to use a learning-torank approach to re-rank top documents. It would be interesting to observe effects of combining both vector representation with patent text to avoid re-ranking.

QUESTIONS ? ? ? • THANK YOU