PARCC Data Forensics: A Multifaceted Approach


PARCC Data Forensics: A Multifaceted Approach
Jeffrey T. Steedle, Pearson
CCSSO National Conference on Student Assessment
June 21, 2016
PRE-DECISIONAL DRAFT – NOT FOR DISTRIBUTION

Outline
• Overview of PARCC Approach
• Non-Statistical Methods
• Lessons Learned

Overview of PARCC Approach

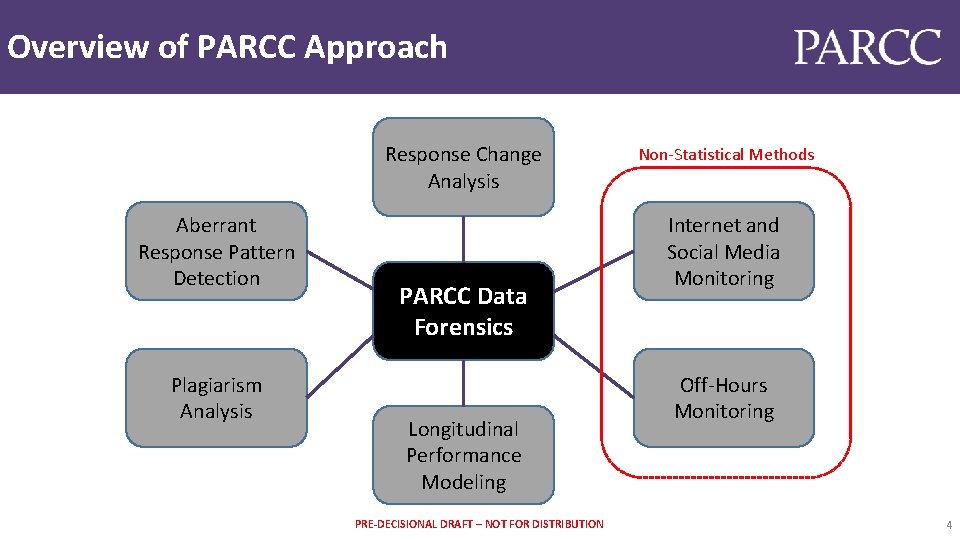

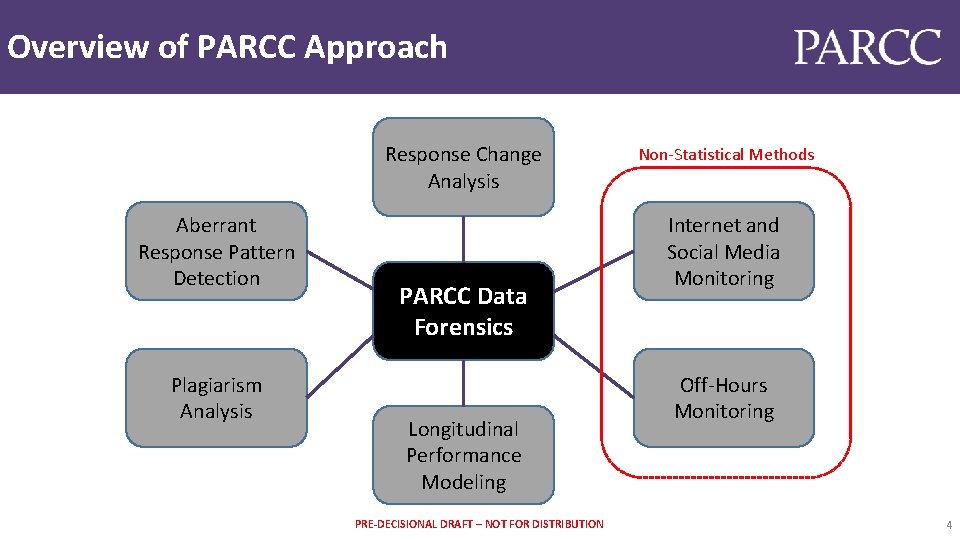

Overview of PARCC Approach
PARCC Data Forensics:
• Response Change Analysis
• Aberrant Response Pattern Detection
• Plagiarism Analysis
• Longitudinal Performance Modeling
• Non-Statistical Methods: Internet and Social Media Monitoring, Off-Hours Monitoring

Non-Statistical Methods

Internet and Social Media Monitoring
• Caveon, LLC monitors Internet sites and forums for potential security breaches. This service generates regular updates and categorizes risk levels.
• Pearson reviews alerts and takes action with the impacted state (if known) and PARCC, Inc.
• PARCC states follow their internal security breach procedures when working with districts and schools.
• Monitoring continues until the content has been removed.

Off-Hours Monitoring
• Each state sets the permissible testing hours, and the test administration system does not permit off-hours testing.
• If a school must conduct off-hours testing, it works with the state to override the system.
• A report can be pulled at any time to see which schools were granted permission for off-hours testing.

Statistical Methods

Response Change Analysis
• Light-marks (or “erasure”) analysis is not possible for students who test online.
• Instead, the focus is on instances of points gained (when a student’s item score increased from the initial response to the final response).
• Instances of points gained (per student) were aggregated to identify atypically high average instances of points gained at the test administrator, school, and district levels.
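The aggregation described above can be sketched as follows. This is a minimal illustration, not the operational PARCC procedure: the record layout, the function names, and the z-score flagging rule with its cutoff are all assumptions introduced here, since the slides do not state how "atypically high" was defined.

```python
from collections import defaultdict
from statistics import mean, pstdev


def points_gained_instances(initial_scores, final_scores):
    """Count items where the final item score exceeds the initial item score."""
    return sum(1 for first, last in zip(initial_scores, final_scores) if last > first)


def unit_averages(student_records):
    """Average instances of points gained per student within each unit.

    student_records: iterable of (unit_id, initial_scores, final_scores),
    where unit_id could be a test administrator, school, or district.
    """
    counts = defaultdict(list)
    for unit_id, initial_scores, final_scores in student_records:
        counts[unit_id].append(points_gained_instances(initial_scores, final_scores))
    return {unit_id: mean(values) for unit_id, values in counts.items()}


def flag_units(averages, z_cut=3.0):
    """Flag units whose average is far above the average across all units.

    The z-score rule and the cutoff are illustrative assumptions only.
    """
    values = list(averages.values())
    center = mean(values)
    spread = pstdev(values)
    if spread == 0:
        return []  # every unit has the same average, so nothing stands out
    return sorted(u for u, avg in averages.items() if (avg - center) / spread > z_cut)


# Hypothetical item scores for three students in two schools.
records = [
    ("School A", [0, 1, 0], [0, 1, 1]),
    ("School A", [1, 1, 0], [1, 1, 0]),
    ("School B", [0, 0, 0], [1, 1, 1]),
]
print(unit_averages(records))
```

Running the same functions with a test administrator or district identifier as `unit_id` gives the other aggregation levels mentioned on the slide.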

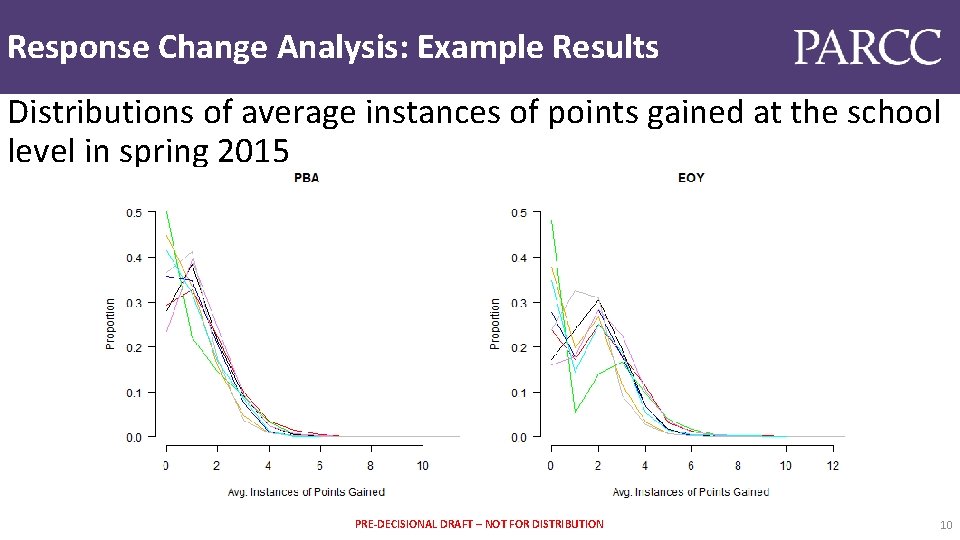

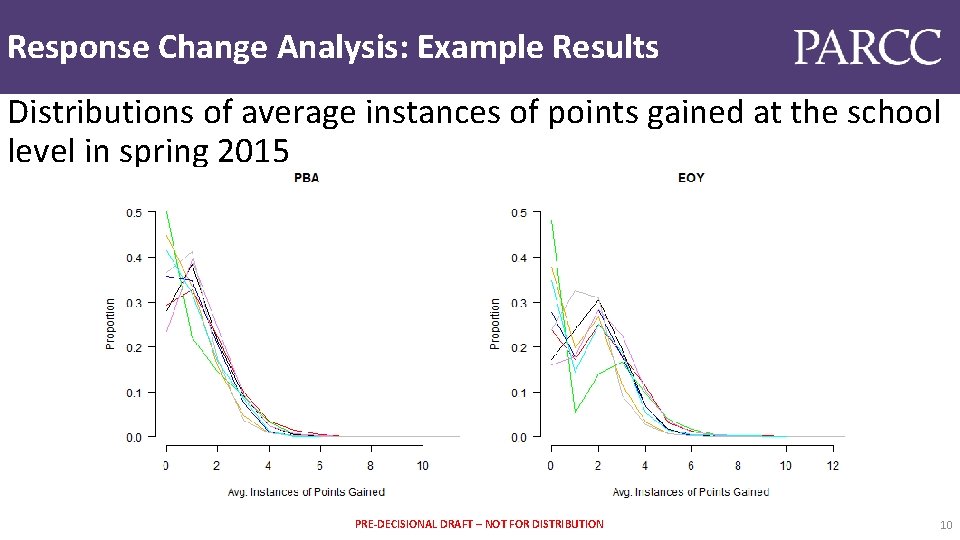

Response Change Analysis: Example Results
Distributions of average instances of points gained at the school level in spring 2015

Plagiarism Detection
• This analysis used Latent Semantic Analysis (LSA) and was conducted for Prose Constructed Response (PCR) tasks administered online.
• LSA involves pairwise comparisons of responses and identifies similarity even when responses use synonymous words or phrases.
• An “exploratory” method was used to select responses for the analysis (i.e., responses from schools with atypically high writing performance given their reading performance).

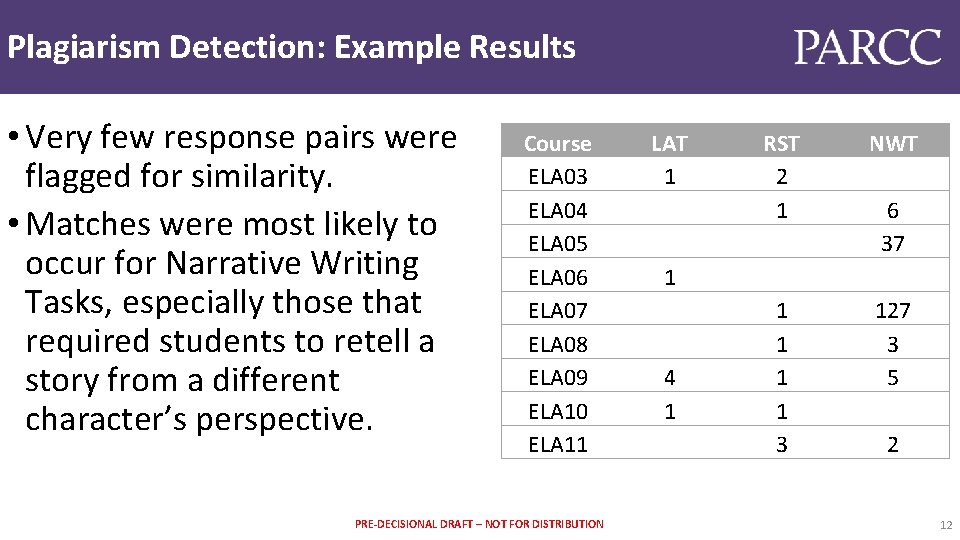

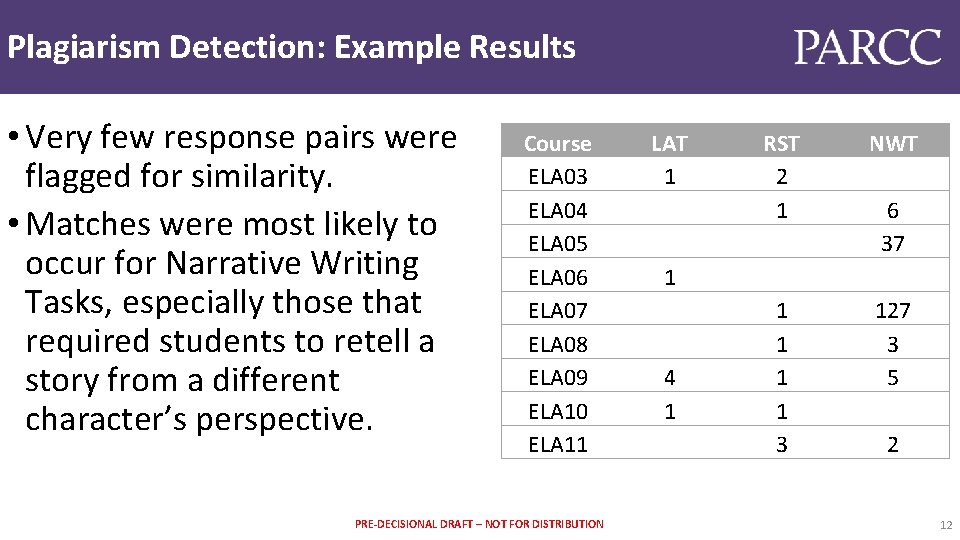

Plagiarism Detection: Example Results
• Very few response pairs were flagged for similarity.
• Matches were most likely to occur for Narrative Writing Tasks, especially those that required students to retell a story from a different character’s perspective.
Course: ELA 03, ELA 04, ELA 05, ELA 06, ELA 07, ELA 08, ELA 09, ELA 10, ELA 11
LAT 1 RST 2 1 NWT 1 1 3 127 3 5 6 37 1 4 1 2

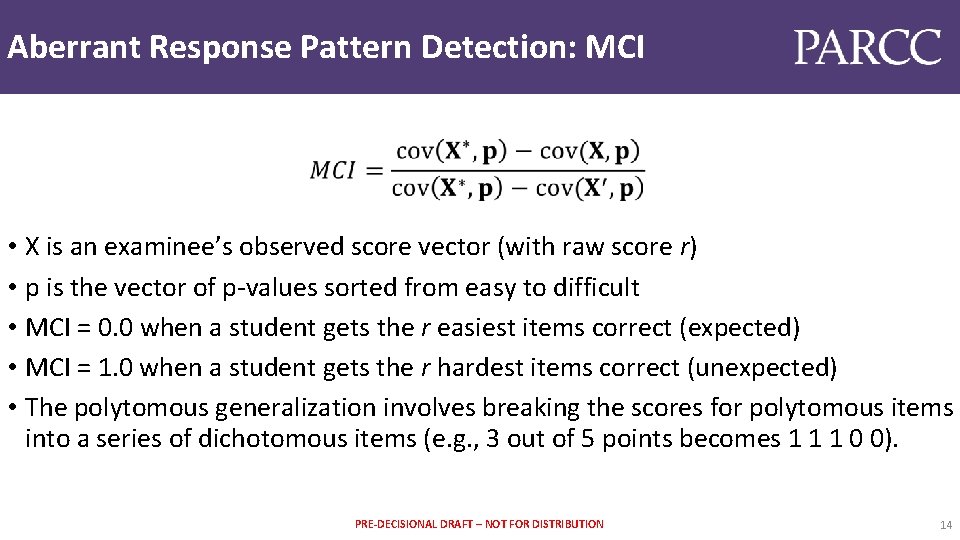

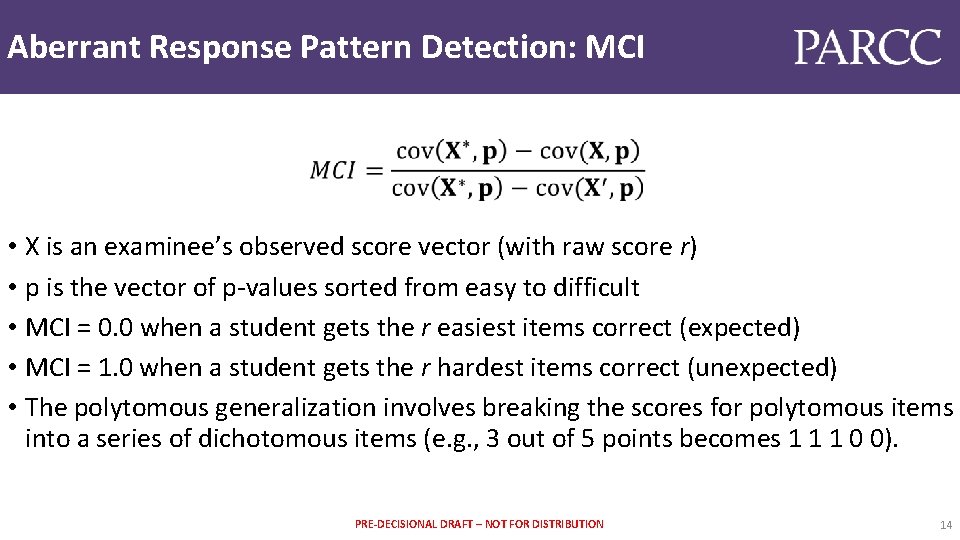

Aberrant Response Pattern Detection
• Aberrant response pattern detection examines how unusual a student’s task scores are compared with what would be expected.
• The proposal was to use the Modified Caution Index (MCI; Harnisch & Linn, 1981; Tendeiro & Meijer, 2013), which quantifies the extent to which higher-scoring students perform poorly on easy tasks and lower-scoring students perform well on difficult tasks.
• MCI was previously researched and implemented with multiple-choice items.

Aberrant Response Pattern Detection: MCI
• X is an examinee’s observed score vector (with raw score r)
• p is the vector of p-values sorted from easy to difficult
• MCI = 0.0 when a student gets the r easiest items correct (expected)
• MCI = 1.0 when a student gets the r hardest items correct (unexpected)
• The polytomous generalization involves breaking the scores for polytomous items into a series of dichotomous items (e.g., 3 out of 5 points becomes 1 1 1 0 0).
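The definitions above can be made concrete with a short sketch. It uses the commonly cited form of the index from Harnisch & Linn (1981), in which the gap between the best-case weighted score (the r easiest items correct) and the observed weighted score is divided by the gap between the best case and the worst case (the r hardest items correct). The function names are introduced here for illustration, and the p-values for the dichotomous pseudo-items created from a polytomous item are assumed to be supplied by the caller, because the slides do not say how they were obtained.

```python
def expand_polytomous(score, max_points):
    """Split a polytomous score into dichotomous pseudo-items.

    Example from the slide: 3 out of 5 points becomes [1, 1, 1, 0, 0].
    """
    return [1] * score + [0] * (max_points - score)


def modified_caution_index(scores, p_values):
    """MCI for one examinee.

    scores:   0/1 item scores (the vector X).
    p_values: proportion correct for each item, in the same order as scores.
    Returns None when the index is undefined (raw score of 0 or n, or all
    relevant p-values equal).
    """
    # Sort items from easy (high p-value) to difficult (low p-value).
    pairs = sorted(zip(p_values, scores), key=lambda pair: pair[0], reverse=True)
    sorted_p = [p for p, _ in pairs]
    n = len(pairs)
    r = sum(x for _, x in pairs)  # raw score
    if r == 0 or r == n:
        return None
    easiest = sum(sorted_p[:r])        # weighted score if the r easiest were correct
    hardest = sum(sorted_p[n - r:])    # weighted score if the r hardest were correct
    observed = sum(p * x for p, x in pairs)
    if easiest == hardest:
        return None
    return (easiest - observed) / (easiest - hardest)


# Hypothetical p-values for four items.
p = [0.9, 0.7, 0.5, 0.3]
print(modified_caution_index([1, 1, 0, 0], p))  # two easiest items correct
print(modified_caution_index([0, 0, 1, 1], p))  # two hardest items correct
```

The two calls at the end correspond to the boundary cases listed on the slide: the r easiest items correct and the r hardest items correct.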

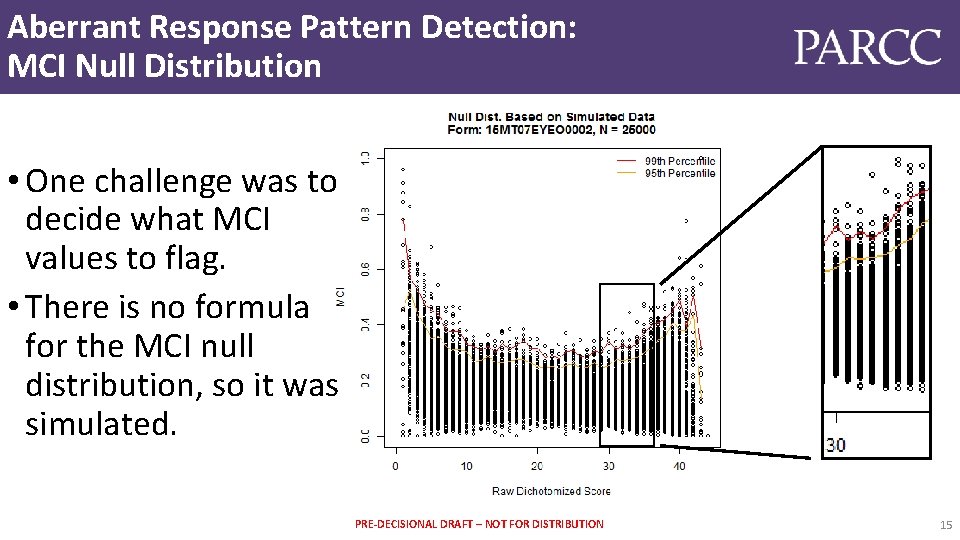

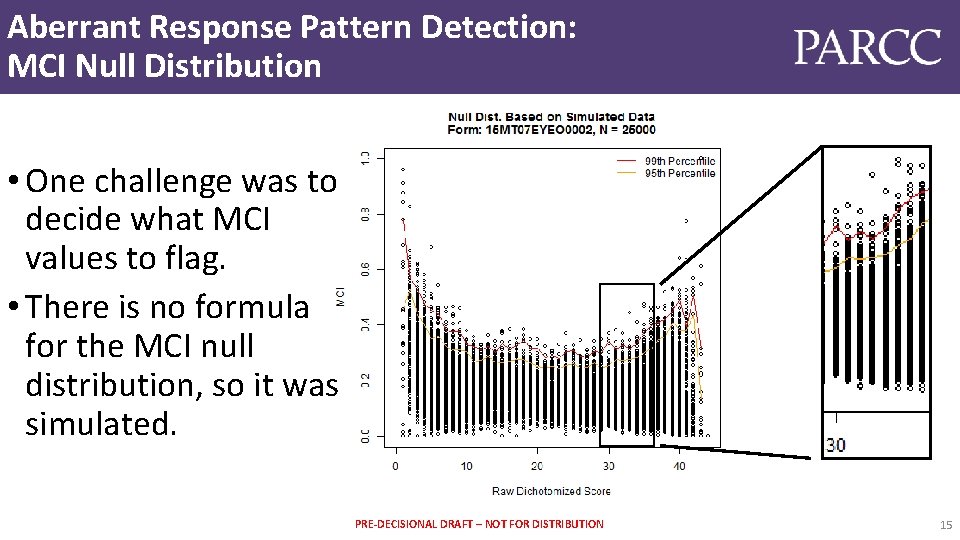

Aberrant Response Pattern Detection: MCI Null Distribution
• One challenge was to decide what MCI values to flag.
• There is no formula for the MCI null distribution, so it was simulated.
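One way such a simulation might look is sketched below. The slides do not describe the simulation design, so everything here is an assumption: responses are generated from a Rasch model with standard normal abilities, the item difficulties are hypothetical, and the flagging cutoff is taken as a high percentile of the simulated MCI values.

```python
import math
import random


def mci(scores, p_values):
    """Modified Caution Index; None when undefined (see Harnisch & Linn, 1981)."""
    order = sorted(range(len(scores)), key=lambda i: p_values[i], reverse=True)
    p = [p_values[i] for i in order]
    x = [scores[i] for i in order]
    r, n = sum(x), len(x)
    easiest, hardest = sum(p[:r]), sum(p[n - r:])
    if r == 0 or r == n or easiest == hardest:
        return None
    observed = sum(pi * xi for pi, xi in zip(p, x))
    return (easiest - observed) / (easiest - hardest)


def simulate_null_cutoff(difficulties, n_students=2000, percentile=0.99, seed=0):
    """Simulate model-consistent responses and return an MCI flagging cutoff.

    Assumed design: Rasch model, abilities drawn from N(0, 1), item p-values
    computed from the simulated sample itself.
    """
    rng = random.Random(seed)
    responses = []
    for _ in range(n_students):
        theta = rng.gauss(0.0, 1.0)
        responses.append([
            1 if rng.random() < 1.0 / (1.0 + math.exp(-(theta - b))) else 0
            for b in difficulties
        ])
    p_values = [sum(column) / n_students for column in zip(*responses)]
    values = sorted(
        v for v in (mci(row, p_values) for row in responses) if v is not None
    )
    if not values:
        return None
    index = min(len(values) - 1, int(percentile * len(values)))
    return values[index]


# Hypothetical difficulties for a 10-item test.
item_difficulties = [-2.0, -1.5, -1.0, -0.5, 0.0, 0.0, 0.5, 1.0, 1.5, 2.0]
print(simulate_null_cutoff(item_difficulties, n_students=500))
```

Students whose observed MCI exceeds the returned value would be flagged under this sketch. A cutoff computed separately for each raw score level would be a natural refinement, but the slides do not say whether that was done.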

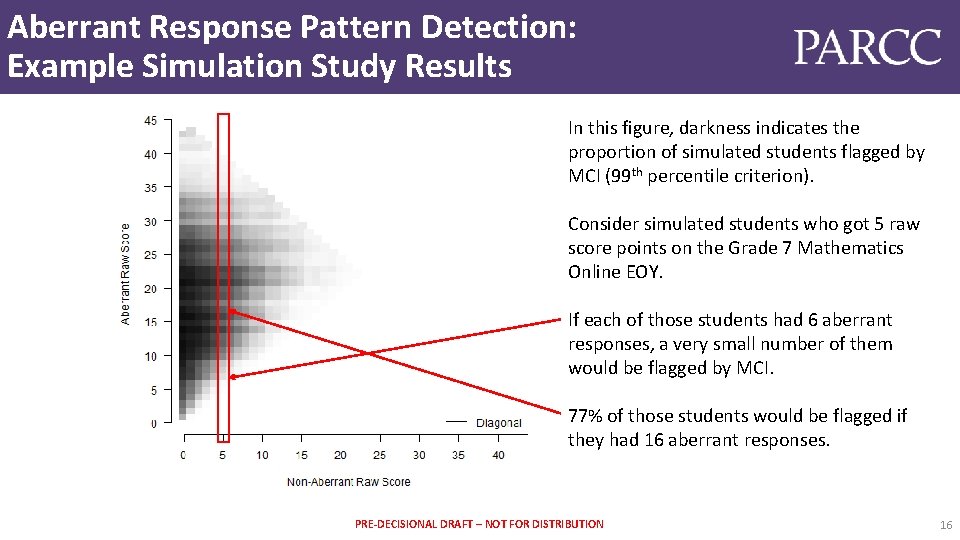

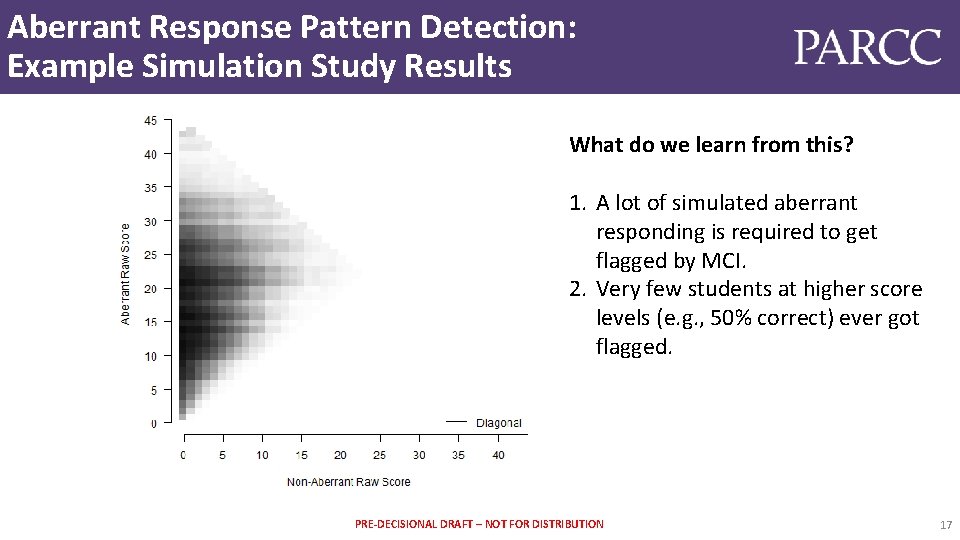

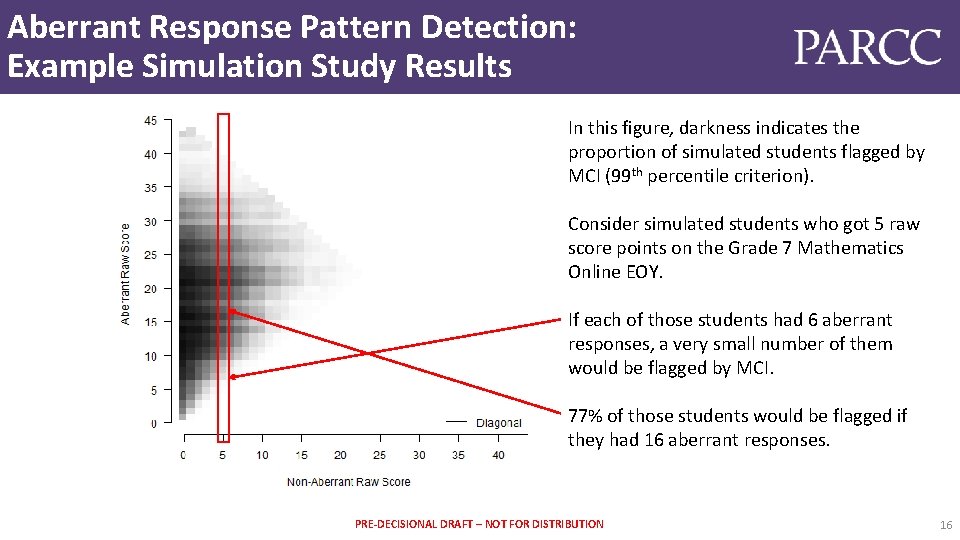

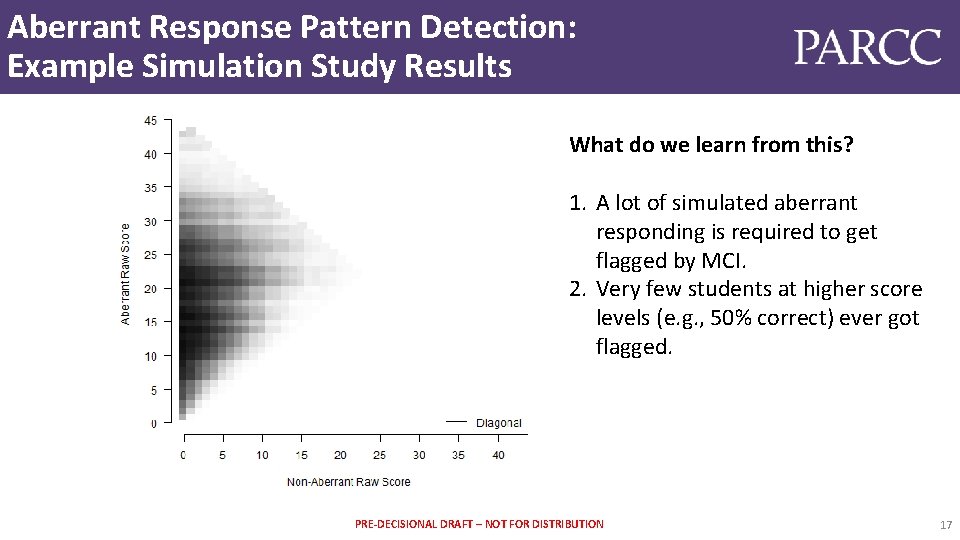

Aberrant Response Pattern Detection: Example Simulation Study Results
In this figure, darkness indicates the proportion of simulated students flagged by MCI (99th percentile criterion). Consider simulated students who got 5 raw score points on the Grade 7 Mathematics Online EOY. If each of those students had 6 aberrant responses, a very small number of them would be flagged by MCI. By contrast, 77% of those students would be flagged if they had 16 aberrant responses.

Aberrant Response Pattern Detection: Example Simulation Study Results
What do we learn from this?
1. A lot of simulated aberrant responding is required to get flagged by MCI.
2. Very few students at higher score levels (e.g., 50% correct) ever got flagged.

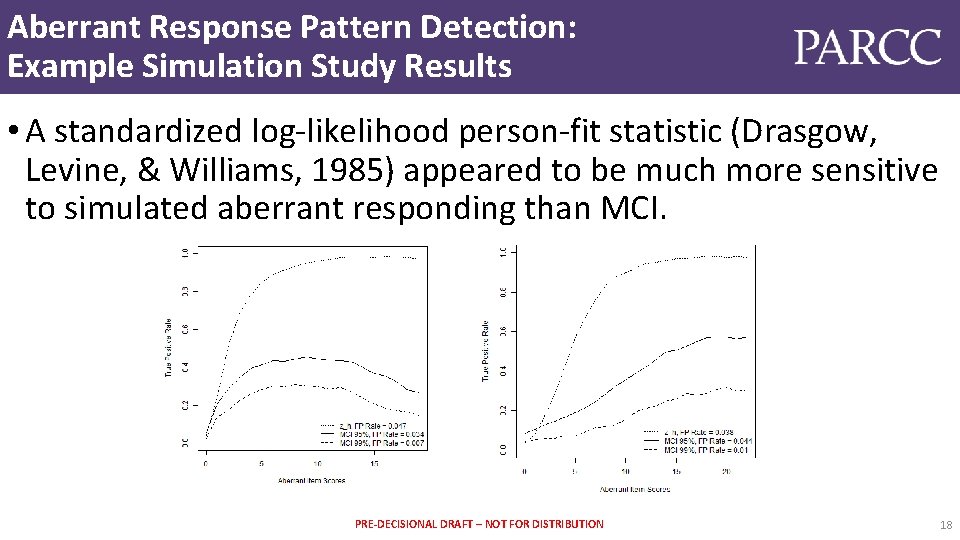

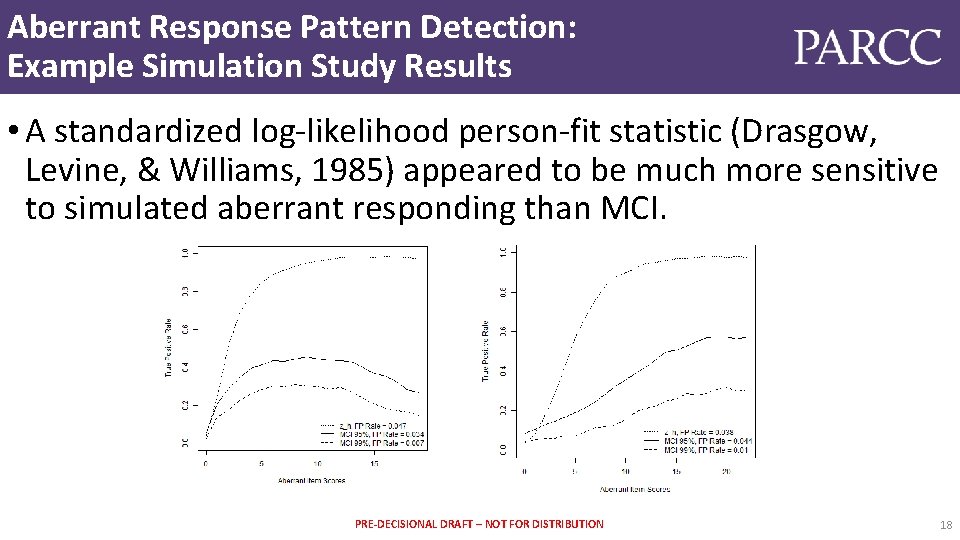

Aberrant Response Pattern Detection: Example Simulation Study Results
• A standardized log-likelihood person-fit statistic (Drasgow, Levine, & Williams, 1985) appeared to be much more sensitive to simulated aberrant responding than MCI.
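For readers unfamiliar with the statistic, the sketch below shows the dichotomous form of the standardized log-likelihood: the observed log-likelihood of a response pattern, minus its expected value, divided by its standard deviation. The model-implied probabilities of a correct response are taken as given inputs (in practice they would come from a fitted IRT model at the examinee's estimated ability), and the function name is introduced here. The polytomous version that PARCC tasks would require is not shown.

```python
import math


def lz_statistic(responses, probabilities):
    """Standardized log-likelihood person-fit statistic for 0/1 items.

    responses:     observed 0/1 item scores.
    probabilities: model-implied probability of a correct response per item,
                   each strictly between 0 and 1.
    Large negative values indicate response patterns that are unlikely under
    the model. Returns None if the variance term is zero.
    """
    log_likelihood = 0.0
    expected = 0.0
    variance = 0.0
    for u, p in zip(responses, probabilities):
        q = 1.0 - p
        log_likelihood += u * math.log(p) + (1 - u) * math.log(q)
        expected += p * math.log(p) + q * math.log(q)
        variance += p * q * math.log(p / q) ** 2
    if variance == 0.0:
        return None  # happens when every probability equals 0.5
    return (log_likelihood - expected) / math.sqrt(variance)


# Hypothetical probabilities for an easy, a moderate, and a hard item.
probs = [0.9, 0.8, 0.3]
print(lz_statistic([1, 1, 0], probs))  # pattern consistent with the model
print(lz_statistic([0, 0, 1], probs))  # aberrant pattern
```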

Longitudinal Performance Modeling
• Longitudinal performance modeling evaluates performance on PARCC assessments across test administrations and identifies unusual performance gains in the unit of interest (e.g., school or district).
• The current plan is to use the cumulative logit regression model approach (Clark, Skorupski, Jirka, McBride, Wang & Murphy, 2014) to identify unusual changes in test performance across two consecutive administrations of the PARCC assessment.

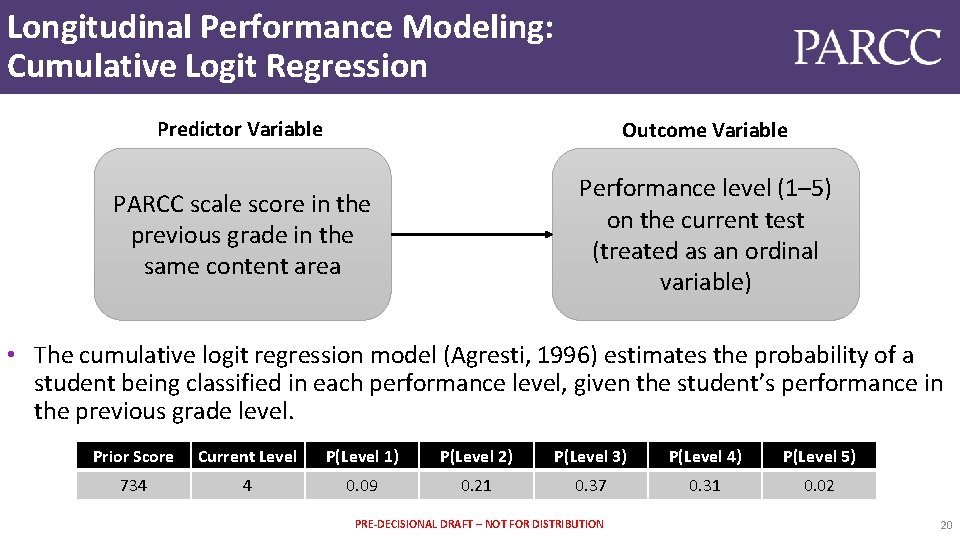

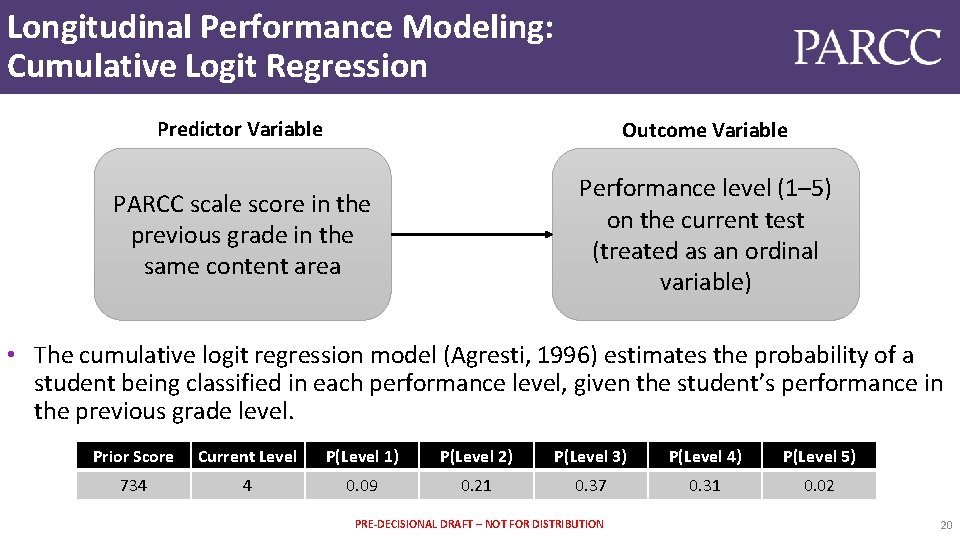

Longitudinal Performance Modeling: Cumulative Logit Regression

| Predictor Variable | Outcome Variable |
| --- | --- |
| PARCC scale score in the previous grade in the same content area | Performance level (1–5) on the current test (treated as an ordinal variable) |

• The cumulative logit regression model (Agresti, 1996) estimates the probability of a student being classified in each performance level, given the student’s performance in the previous grade level.

| Prior Score | Current Level | P(Level 1) | P(Level 2) | P(Level 3) | P(Level 4) | P(Level 5) |
| --- | --- | --- | --- | --- | --- | --- |
| 734 | 4 | 0.09 | 0.21 | 0.37 | 0.31 | 0.02 |

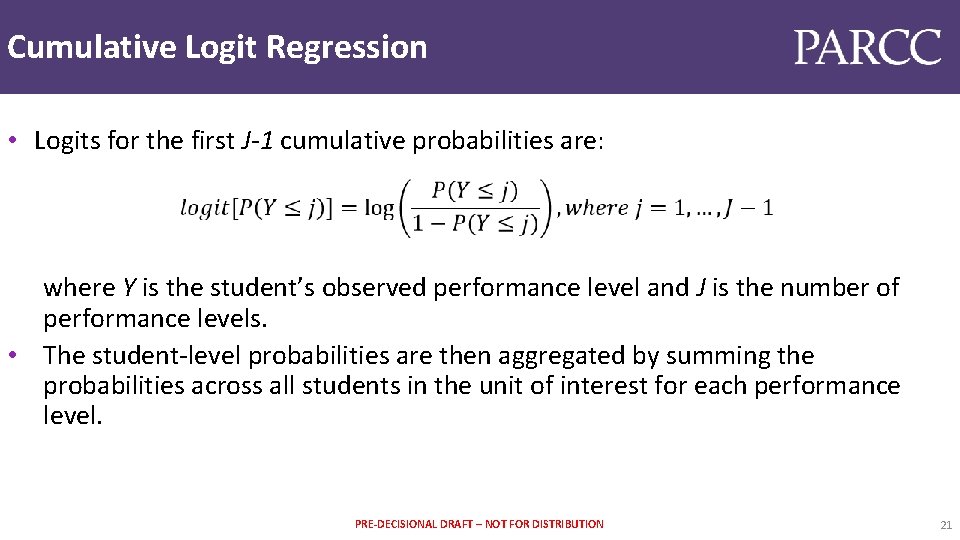

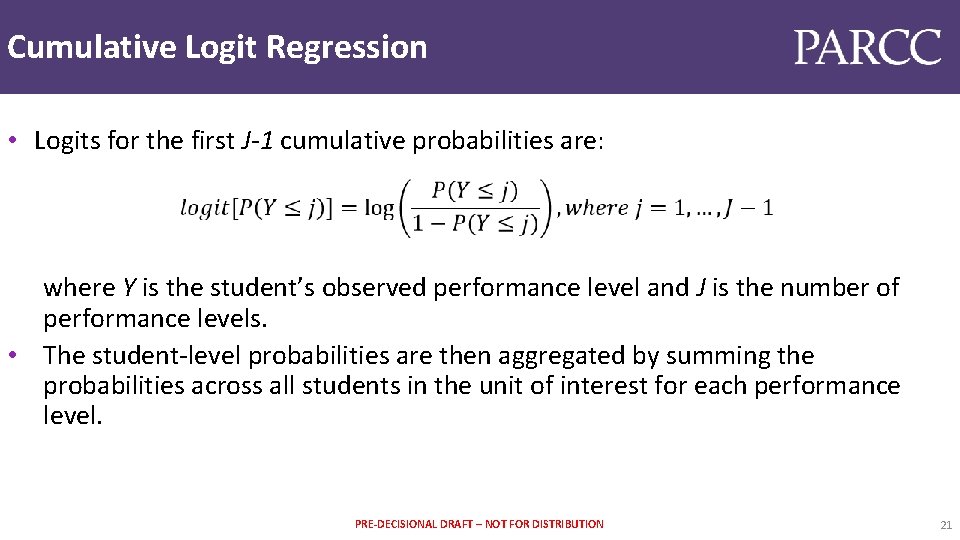

Cumulative Logit Regression
• Logits for the first J-1 cumulative probabilities are modeled as a function of the prior-year score, where Y is the student’s observed performance level and J is the number of performance levels.
• The student-level probabilities are then aggregated by summing the probabilities across all students in the unit of interest for each performance level.
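The cumulative logits and the aggregation step described above can be written out in the standard form of the cumulative logit model (Agresti, 1996). The symbols x (prior-year scale score), α_j (level-specific intercepts), β (common slope), s (student index), and E_j (expected count in level j) are notation introduced here, and the sign convention used in the original analysis may differ.

```latex
\operatorname{logit}\bigl[P(Y \le j \mid x)\bigr]
  = \ln\frac{P(Y \le j \mid x)}{1 - P(Y \le j \mid x)}
  = \alpha_j + \beta x, \qquad j = 1, \dots, J-1

P(Y = j \mid x) = P(Y \le j \mid x) - P(Y \le j-1 \mid x),
  \qquad P(Y \le 0 \mid x) = 0, \quad P(Y \le J \mid x) = 1

E_j = \sum_{s \in \text{unit}} P(Y_s = j \mid x_s), \qquad j = 1, \dots, J
```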

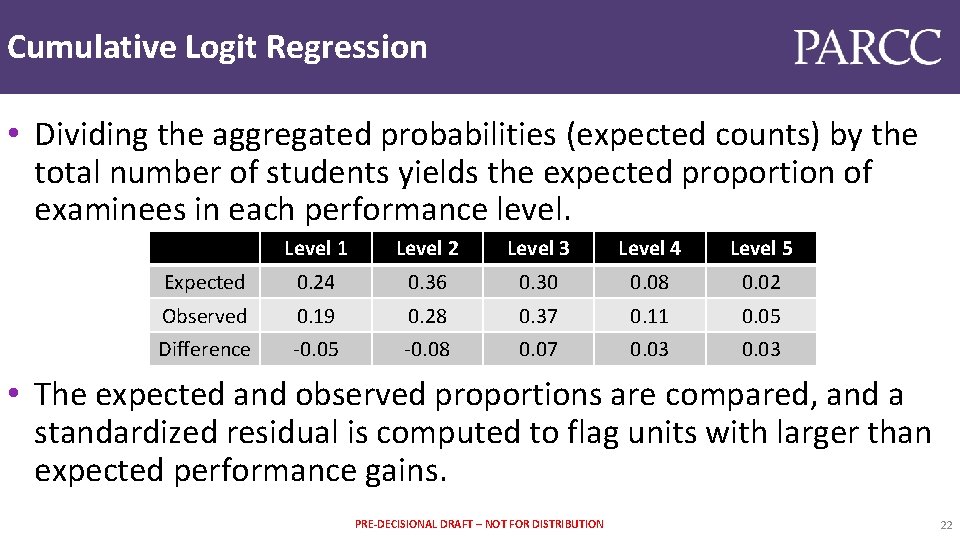

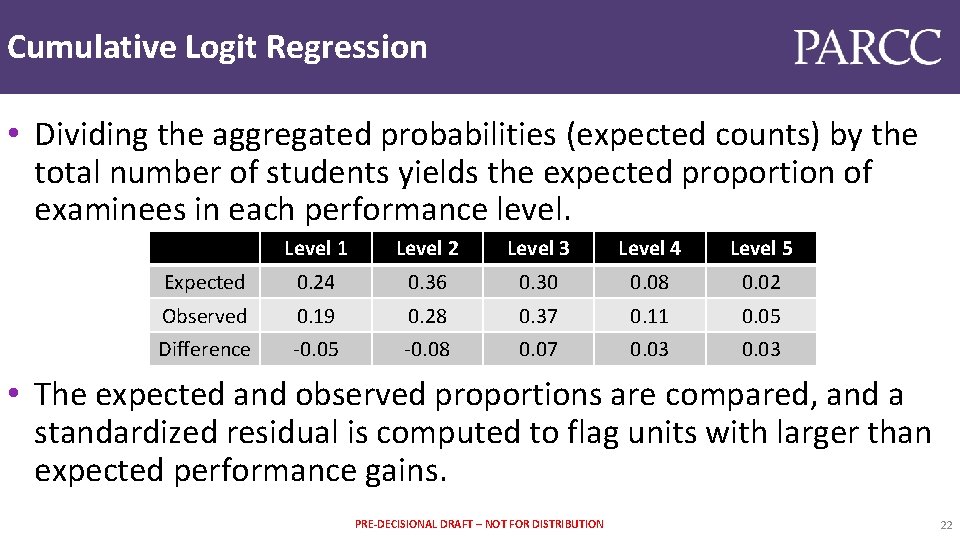

Cumulative Logit Regression
• Dividing the aggregated probabilities (expected counts) by the total number of students yields the expected proportion of examinees in each performance level.

| | Level 1 | Level 2 | Level 3 | Level 4 | Level 5 |
| --- | --- | --- | --- | --- | --- |
| Expected | 0.24 | 0.36 | 0.30 | 0.08 | 0.02 |
| Observed | 0.19 | 0.28 | 0.37 | 0.11 | 0.05 |
| Difference | -0.05 | -0.08 | 0.07 | 0.03 | 0.03 |

• The expected and observed proportions are compared, and a standardized residual is computed to flag units with larger than expected performance gains.
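The expected-versus-observed comparison can be sketched in a few lines. The model parameters below (`ALPHAS`, `BETA`) and the prior scores are hypothetical values chosen only so the example runs; they are not estimates from PARCC data. The form logit P(Y ≤ j | x) = α_j + βx is the standard cumulative logit model, assumed here. The standardized residual used for flagging is not reproduced because the slides do not give its formula.

```python
import math


def level_probabilities(prior_score, alphas, beta):
    """P(Y = j | x) for j = 1..J, from logit P(Y <= j | x) = alpha_j + beta * x.

    alphas must be increasing so that the cumulative probabilities increase.
    """
    cumulative = [
        1.0 / (1.0 + math.exp(-(alpha + beta * prior_score))) for alpha in alphas
    ]
    cumulative.append(1.0)  # P(Y <= J) is always 1
    probabilities = [cumulative[0]]
    for j in range(1, len(cumulative)):
        probabilities.append(cumulative[j] - cumulative[j - 1])
    return probabilities


def expected_proportions(prior_scores, alphas, beta):
    """Sum student-level probabilities per level, then divide by the student count."""
    totals = [0.0] * (len(alphas) + 1)
    for score in prior_scores:
        for j, p in enumerate(level_probabilities(score, alphas, beta)):
            totals[j] += p
    return [total / len(prior_scores) for total in totals]


def proportion_differences(observed, expected):
    """Observed minus expected proportion per level, rounded to two decimals."""
    return [round(o - e, 2) for o, e in zip(observed, expected)]


# Hypothetical parameters for a five-level outcome (four intercepts, one slope).
ALPHAS = [35.0, 36.25, 37.5, 39.25]
BETA = -0.05

# Hypothetical prior-year scale scores for the students in one school.
print(expected_proportions([700, 734, 760], ALPHAS, BETA))

# Proportions from the table on the slide.
print(proportion_differences(
    [0.19, 0.28, 0.37, 0.11, 0.05],
    [0.24, 0.36, 0.30, 0.08, 0.02],
))
```

A negative slope is used so that a higher prior score lowers the probability of landing in the lower performance levels, which is the direction one would expect for this predictor.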

Cumulative Logit Regression
• Clark et al. (2014) found that using this approach to detect cases of test misconduct yielded good detection power with conservative Type I error (false positive) rates in a number of simulated conditions.
• However, the model has never been implemented as a data forensics method in an operational setting. Thus, an exploratory study using data from operational PARCC administrations is currently planned.
• An investigation will be conducted using PARCC results from spring 2015 and spring 2016.

Lessons Learned

Response Change Analysis
• The response change analysis will continue.
• There has been some consideration of different rules for identifying “score increases” (e.g., examining final score vs. scores from first response, first non-blank response, response preceding the final response).
• Some PARCC states have expressed the desire to obtain response documents (for paper testing) and to know the items on which students had score increases.

Plagiarism Analysis
• Plagiarism on PCRs may be uncommon and/or difficult to perpetrate.
• If there were any plagiarizers, the method for selecting papers for comparison may have missed them.
• Since that analysis did not detect any suspicious behavior, future analyses will permit PARCC states to request that certain districts/schools be included in the analysis because other sources of information suggest potential test security violations.
• Some PARCC states have expressed a desire to receive responses flagged for similarity.

Aberrant Response Pattern Detection
• The “simplest” method is not necessarily the best choice.
• MCI generalized to polytomous items was not sensitive to simulated aberrant responding.
• In January 2016, the PARCC TAC did not endorse MCI as an aberrant response pattern detection method.
• PARCC will calculate standardized log-likelihood person-fit statistics to identify aberrant responding starting in 2016.

Contact
Jeffrey T. Steedle
jeffrey.steedle@pearson.com