Parallel Programming in Split-C
David E. Culler et al. (UC-Berkeley)
Presented by Dan Sorin, 1/20/06

Introduction
• Extension of C that lets programmers specify parallelism to the compiler
• Shared memory programming model
  – Global objects and pointers
• New types of data accesses
  – Split-phase accesses
  – Stores that signal the receiving node
• New types of data layout specifiers
• Initially developed for the Thinking Machines CM-5
  – Message-passing (super)computer

Shared Memory Model
• Any node can access:
  – Its own local memory
  – Any shared global memory
• Programmer specifies whether an object/pointer is global
  – Global address = <processor number, address on that processor>
• Relative cost of accessing remote vs. local memory
  – Remote access costs more than local access
  – Encourages the programmer to minimize use of global variables
• Must be careful when doing global pointer arithmetic
  – Subtle distinction between global pointers and spread pointers
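
The slide does not spell out how the two pointer kinds differ, so the following is only a sketch of the usual description of Split-C: arithmetic on a global pointer moves the local address and stays on one processor, while arithmetic on a spread pointer steps across processors and advances the local address on each wrap-around. `GlobalAddr`, `global_ptr_add`, and `spread_ptr_add` are hypothetical names for illustration, not Split-C API, and local addresses are modeled as element indices.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical model of a global address: <processor number, local address>.
   The local address is an element index here, not a real pointer. */
typedef struct {
    int proc;     /* owning processor number */
    size_t addr;  /* element index in that processor's memory */
} GlobalAddr;

/* Global-pointer style arithmetic (assumed rule): only the local address
   moves, so the result stays on the same processor. */
GlobalAddr global_ptr_add(GlobalAddr p, size_t k) {
    p.addr += k;
    return p;
}

/* Spread-pointer style arithmetic (assumed rule): the processor number
   advances first; each wrap past the last processor advances the local
   address by one element. */
GlobalAddr spread_ptr_add(GlobalAddr p, size_t k, int nprocs) {
    assert(nprocs > 0 && p.proc >= 0 && p.proc < nprocs);
    size_t linear = (size_t)p.proc + k;
    p.proc = (int)(linear % (size_t)nprocs);
    p.addr += linear / (size_t)nprocs;
    return p;
}
```

Starting from `<2, 10>` on 4 processors, adding 3 gives `<2, 13>` under the global rule but `<1, 11>` under the spread rule, which is why mixing the two up silently touches the wrong node.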

Performance Issues
• How can we minimize remote accesses?
  – Use ghost copies (cached copies) of remote data (nodes in EM3D)
  – Bulk transfers
  – Overlap latency of communication with computation
  – Push data instead of pulling it
  – Better data layout
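
To make the ghost-copy and bulk-transfer ideas concrete, here is a minimal single-process simulation in plain C. It is not Split-C and not the EM3D code: `RemoteNode`, `remote_read`, `bulk_read`, and the message counter are hypothetical stand-ins, and the assumption is that each remote value is read several times per step.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical stand-in for data owned by another node. */
typedef struct {
    const double *data;  /* values owned by the "remote" node */
    size_t len;
    long messages;       /* simulated network transfers so far */
} RemoteNode;

/* One simulated remote read: one message per element. */
double remote_read(RemoteNode *r, size_t i) {
    assert(i < r->len);
    r->messages++;
    return r->data[i];
}

/* One simulated bulk transfer: n elements for a single message. */
void bulk_read(RemoteNode *r, double *ghost, size_t n) {
    assert(n <= r->len);
    r->messages++;
    memcpy(ghost, r->data, n * sizeof *ghost);
}

/* Every use of a remote value goes over the network. */
double sum_without_ghosts(RemoteNode *r, int steps, int uses) {
    double total = 0.0;
    for (int s = 0; s < steps; s++)
        for (int u = 0; u < uses; u++)
            for (size_t i = 0; i < r->len; i++)
                total += remote_read(r, i);
    return total;
}

/* Refresh a local ghost copy once per step, then reuse it locally. */
double sum_with_ghosts(RemoteNode *r, int steps, int uses, double *ghost) {
    double total = 0.0;
    for (int s = 0; s < steps; s++) {
        bulk_read(r, ghost, r->len);
        for (int u = 0; u < uses; u++)
            for (size_t i = 0; i < r->len; i++)
                total += ghost[i];
    }
    return total;
}
```

With 3 remote values, 2 steps, and 4 uses per step, the first version counts 24 simulated messages and the second counts 2, for the same result. The ghost buffer must hold at least `r->len` elements.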

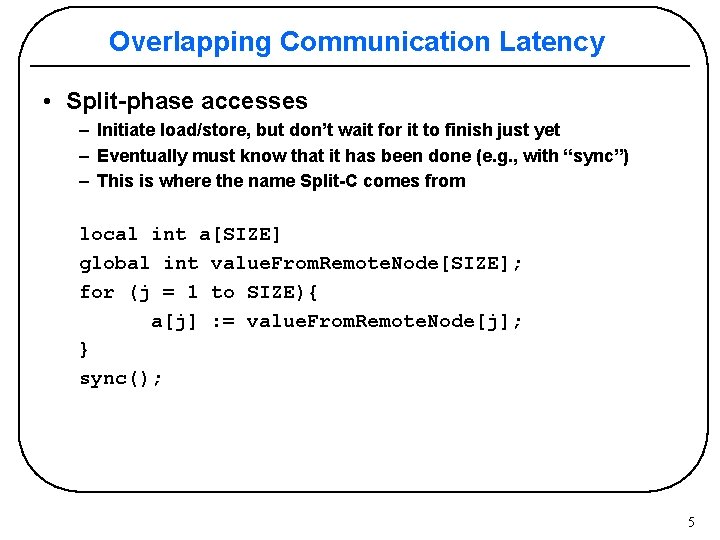

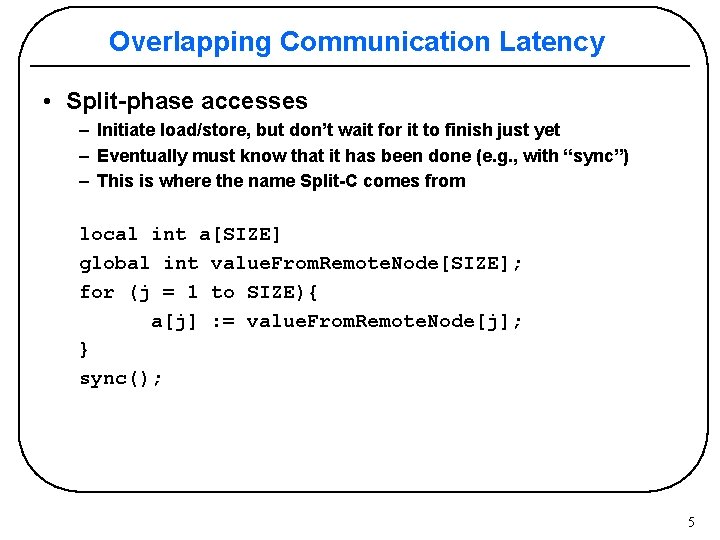

Overlapping Communication Latency
• Split-phase accesses
  – Initiate a load/store, but don’t wait for it to finish just yet
  – Eventually must know that it has completed (e.g., with “sync”)
  – This is where the name Split-C comes from

    local int a[SIZE];
    global int valueFromRemoteNode[SIZE];
    for (j = 0; j < SIZE; j++) {
        a[j] := valueFromRemoteNode[j];   /* split-phase get: initiate, don't wait */
    }
    sync();                               /* wait for all outstanding gets */
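
The initiate-then-sync pattern can be mimicked in plain C to show the two phases explicitly. This is a single-threaded sketch, not the Split-C runtime: `SplitPhase`, `get_start`, and `get_sync` are hypothetical names, and "remote" memory is just another array in the same process.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_PENDING 64

/* One outstanding request: copy *src into *dst when it completes. */
typedef struct {
    int *dst;
    const int *src;
} PendingGet;

/* Hypothetical queue of gets that were started but not yet completed. */
typedef struct {
    PendingGet queue[MAX_PENDING];
    size_t count;
} SplitPhase;

/* Phase 1, in the spirit of `dst := src`: record the request and return
   immediately so the caller can keep computing. */
void get_start(SplitPhase *sp, int *dst, const int *src) {
    assert(sp->count < MAX_PENDING);
    sp->queue[sp->count].dst = dst;
    sp->queue[sp->count].src = src;
    sp->count++;
}

/* Phase 2, in the spirit of sync(): complete every outstanding request.
   Only after this call may the destinations be read safely. */
void get_sync(SplitPhase *sp) {
    for (size_t i = 0; i < sp->count; i++)
        *sp->queue[i].dst = *sp->queue[i].src;
    sp->count = 0;
}
```

A caller would issue `get_start` for each element in a loop, do unrelated work, and call `get_sync` once before touching the destinations. In this simulation the destinations simply keep their old values until `get_sync`; on real hardware they should be treated as undefined in that window.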

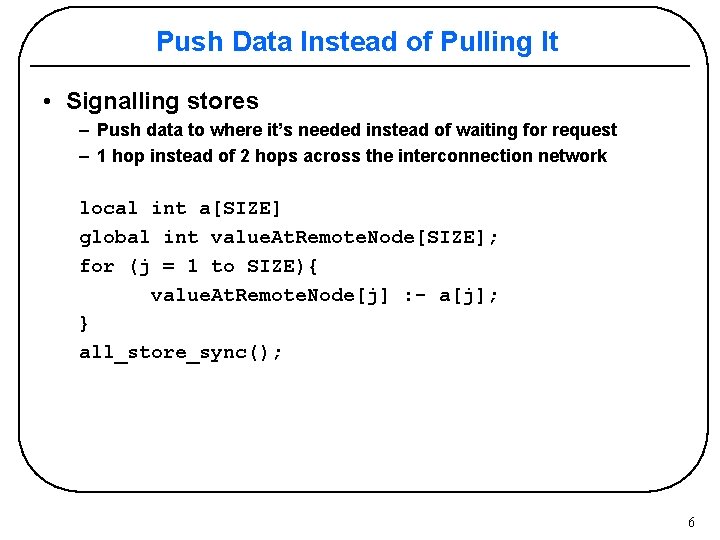

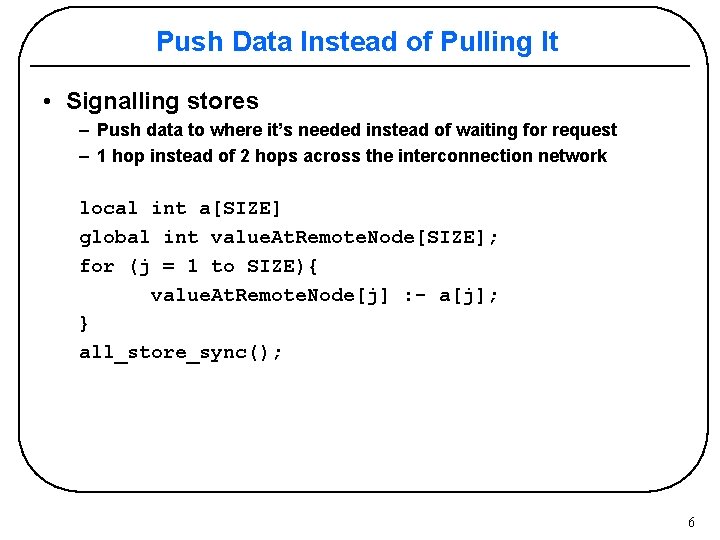

Push Data Instead of Pulling It
• Signalling stores
  – Push data to where it’s needed instead of waiting for a request
  – 1 hop instead of 2 hops across the interconnection network

    local int a[SIZE];
    global int valueAtRemoteNode[SIZE];
    for (j = 0; j < SIZE; j++) {
        valueAtRemoteNode[j] :- a[j];     /* signalling store: push to the remote node */
    }
    all_store_sync();                     /* wait until all stores have arrived */
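
A pull costs a request plus a reply (2 hops), while a push is a single one-way message (1 hop) whose arrival the receiver can detect. The sketch below simulates the receiver side in plain C. `Receiver`, `signaling_store`, and `store_arrived` are hypothetical names, and counting arrived bytes is an assumption about how a receiver might decide its data is complete, not a statement of the Split-C runtime's internals.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical receiving node: a buffer plus a count of bytes pushed into it. */
typedef struct {
    int *mem;
    size_t len;
    size_t bytes_arrived;
} Receiver;

/* In the spirit of `remote[i] :- value`: write into the receiver's memory
   and signal the arrival by bumping its byte counter. No reply is sent. */
void signaling_store(Receiver *r, size_t i, int value) {
    assert(i < r->len);
    r->mem[i] = value;
    r->bytes_arrived += sizeof value;
}

/* Receiver-side check: true once at least expected_bytes have arrived,
   consuming that many bytes from the counter. A real runtime would block
   here instead of returning false. */
bool store_arrived(Receiver *r, size_t expected_bytes) {
    if (r->bytes_arrived < expected_bytes)
        return false;
    r->bytes_arrived -= expected_bytes;
    return true;
}
```

The sender never waits for an acknowledgement; the receiver alone decides when enough data has arrived to proceed.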

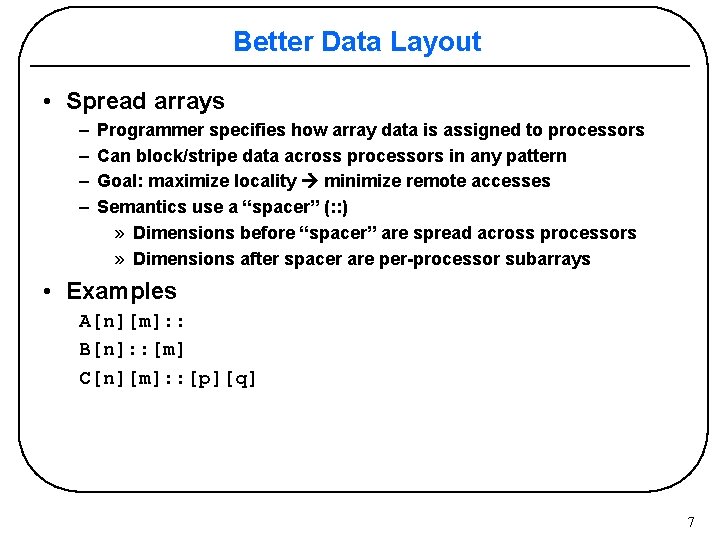

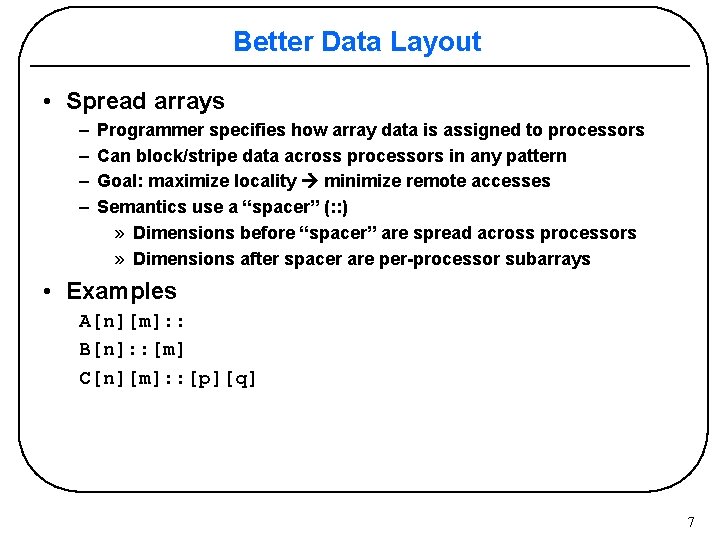

Better Data Layout
• Spread arrays
  – Programmer specifies how array data is assigned to processors
  – Can block/stripe data across processors in any pattern
  – Goal: maximize locality, minimize remote accesses
  – Declarations use a “spacer” (::)
    » Dimensions before the “spacer” are spread across processors
    » Dimensions after the spacer are per-processor subarrays
• Examples

    A[n][m]::
    B[n]::[m]
    C[n][m]::[p][q]
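
One way to read the spacer rule is as an index-to-processor mapping. The sketch below assumes the dimensions before `::` are linearized in row-major order and dealt out cyclically starting at processor 0, with each processor storing its blocks back to back; the slide does not state this, so treat it as an assumption. `Placement` and `spread_place` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>

/* Where one array element ends up. */
typedef struct {
    size_t proc;    /* owning processor */
    size_t offset;  /* element offset in that processor's local storage */
} Placement;

/* spread_index: row-major index over the dimensions before "::"
   block_elems:  elements in the per-processor subarray after "::" (1 if none)
   block_index:  row-major index inside that subarray
   Assumes a cyclic deal of blocks starting at processor 0. */
Placement spread_place(size_t spread_index, size_t block_elems,
                       size_t block_index, size_t nprocs) {
    assert(nprocs > 0 && block_elems > 0 && block_index < block_elems);
    Placement p;
    p.proc = spread_index % nprocs;
    p.offset = (spread_index / nprocs) * block_elems + block_index;
    return p;
}
```

For `B[n]::[m]` with m = 3 on 4 processors, element `B[5][2]` is `spread_place(5, 3, 2, 4)`: whole rows stay on one processor. For `A[n][m]::` the call is `spread_place(i * m + j, 1, 0, 4)`, so neighbouring elements of a row land on different processors.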

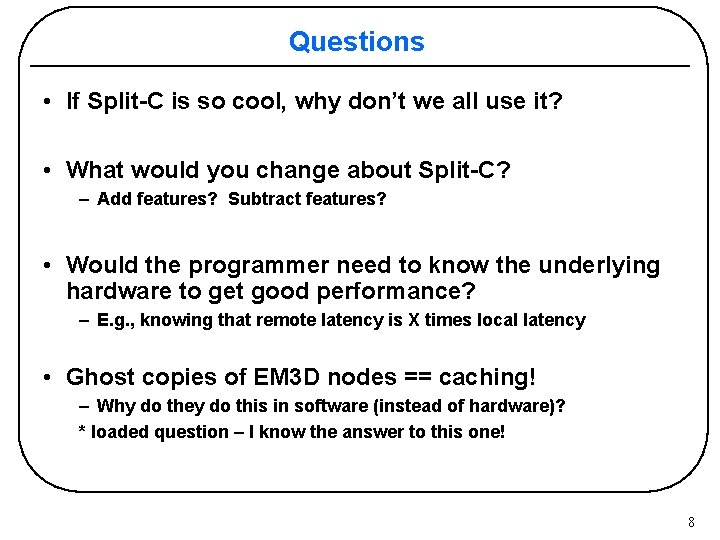

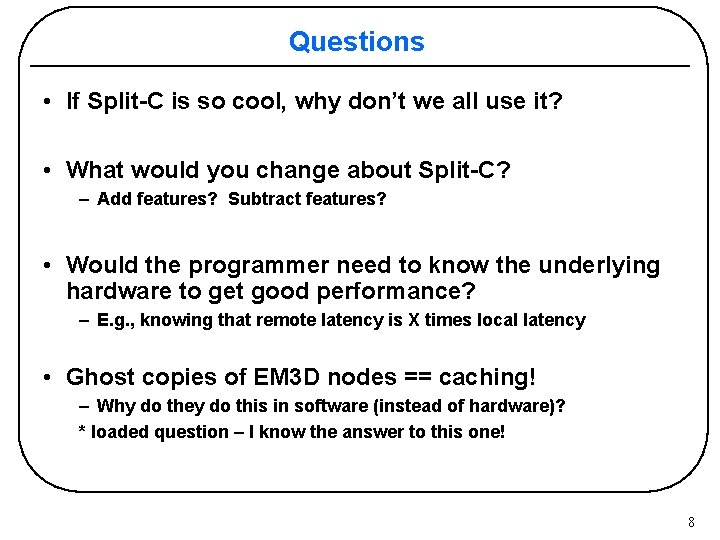

Questions
• If Split-C is so cool, why don’t we all use it?
• What would you change about Split-C?
  – Add features? Subtract features?
• Would the programmer need to know the underlying hardware to get good performance?
  – E.g., knowing that remote latency is X times local latency
• Ghost copies of EM3D nodes == caching!
  – Why do they do this in software (instead of hardware)?
    * loaded question – I know the answer to this one!