My SQL Data Warehousing Survival Guide Marius Moscovici

- Slides: 31

My. SQL Data Warehousing Survival Guide Marius Moscovici (marius@metricinsights. com) Steffan Mejia (steffan@lindenlab. com)

Topics • The size of the beast • Evolution of a Warehouse • Lessons Learned • Survival Tips • Q&A

Size of the beast • 43 Servers o 36 active o 7 standby spares • 16 TB of data in My. SQL • 12 TB archived (pre S 3 staging) • 4 TB archived (S 3) • 3. 5 B rows in main warehouse • Largest table ~ 500 M rows (My. SQL)

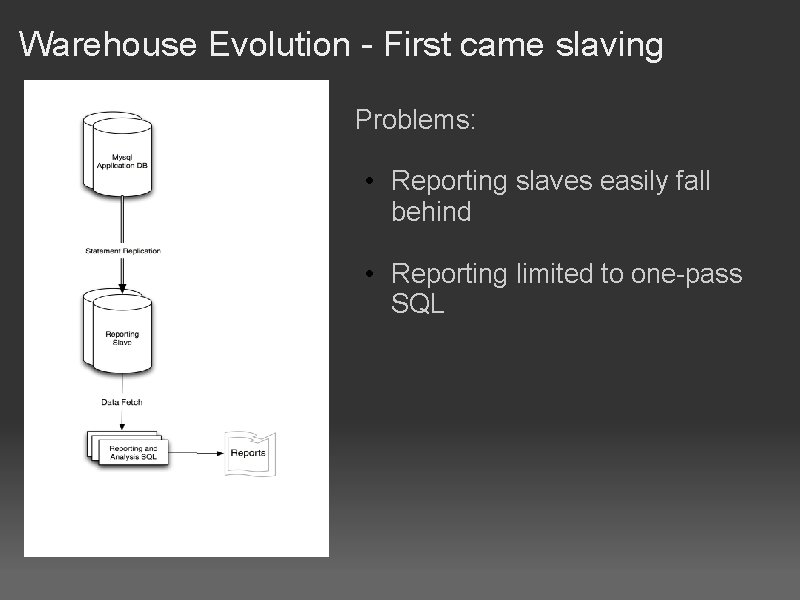

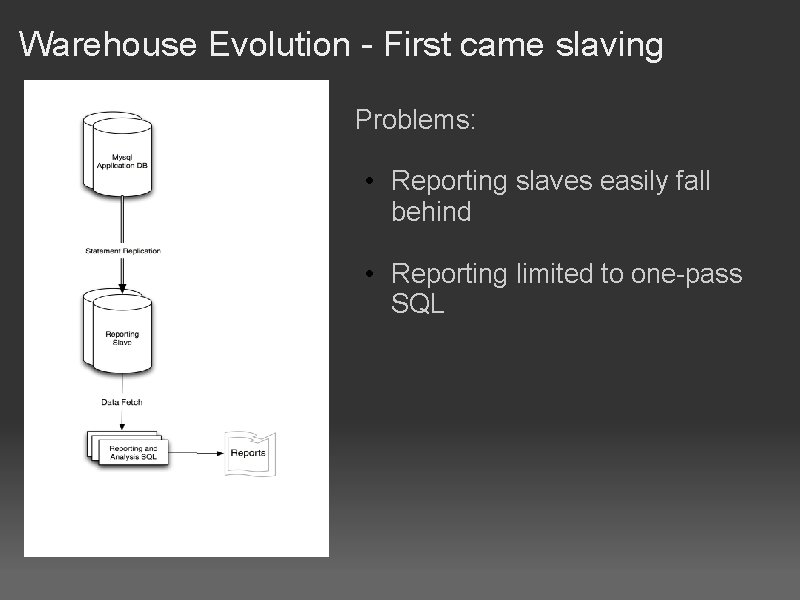

Warehouse Evolution - First came slaving Problems: • Reporting slaves easily fall behind • Reporting limited to one-pass SQL

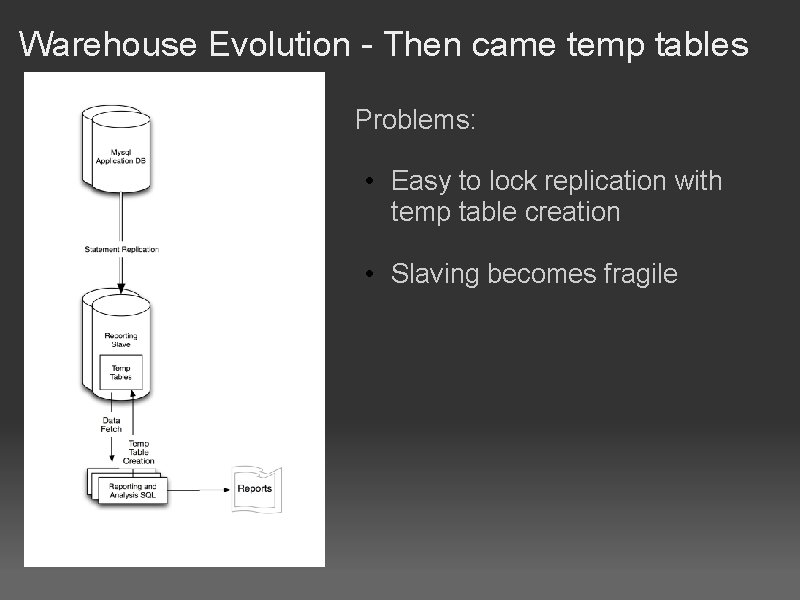

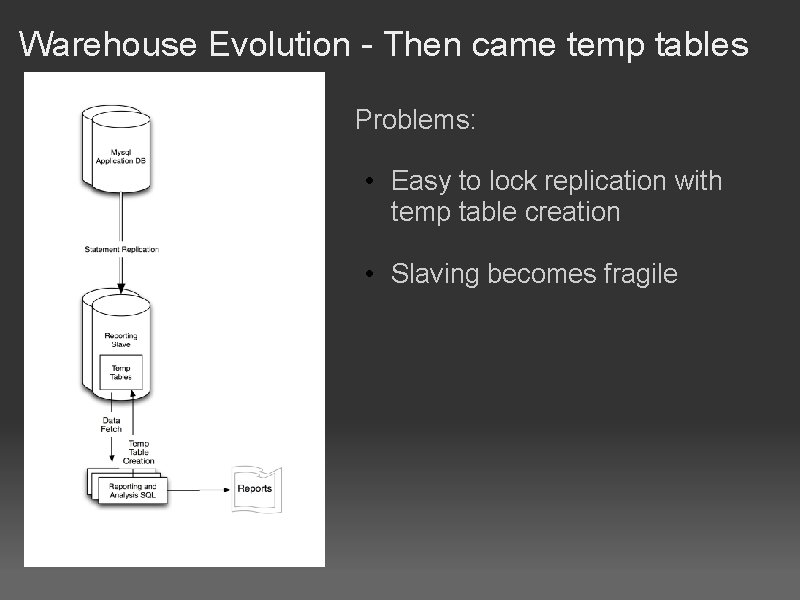

Warehouse Evolution - Then came temp tables Problems: • Easy to lock replication with temp table creation • Slaving becomes fragile

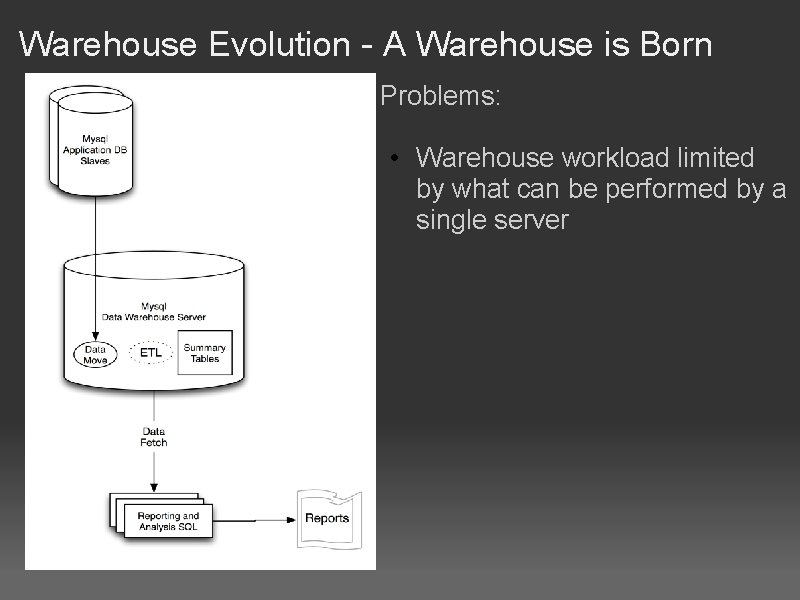

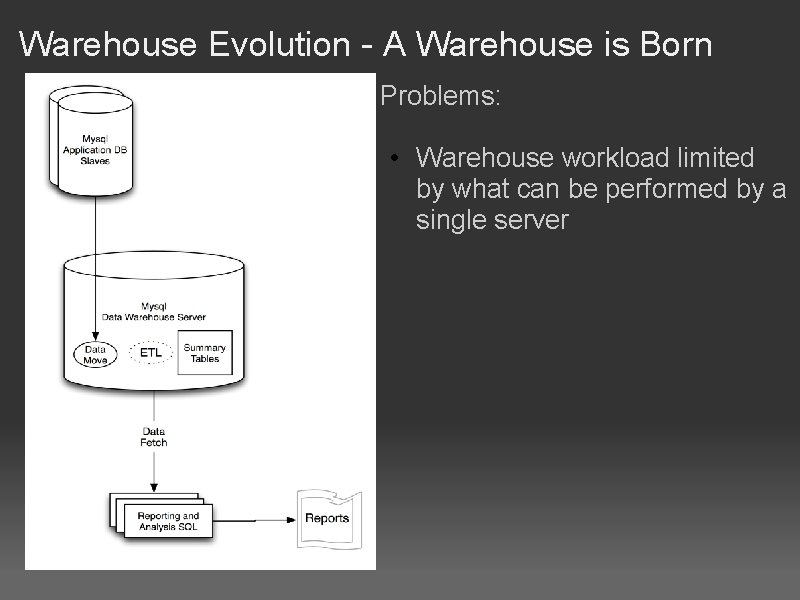

Warehouse Evolution - A Warehouse is Born Problems: • Warehouse workload limited by what can be performed by a single server

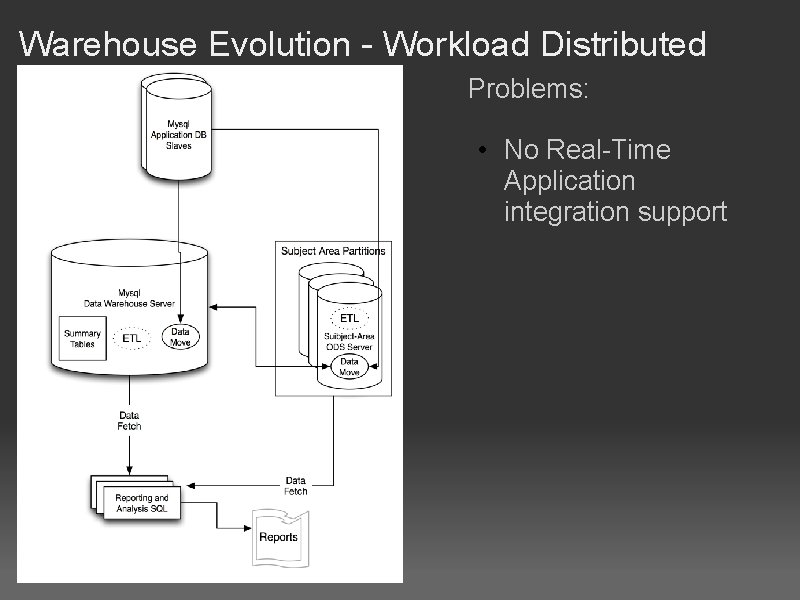

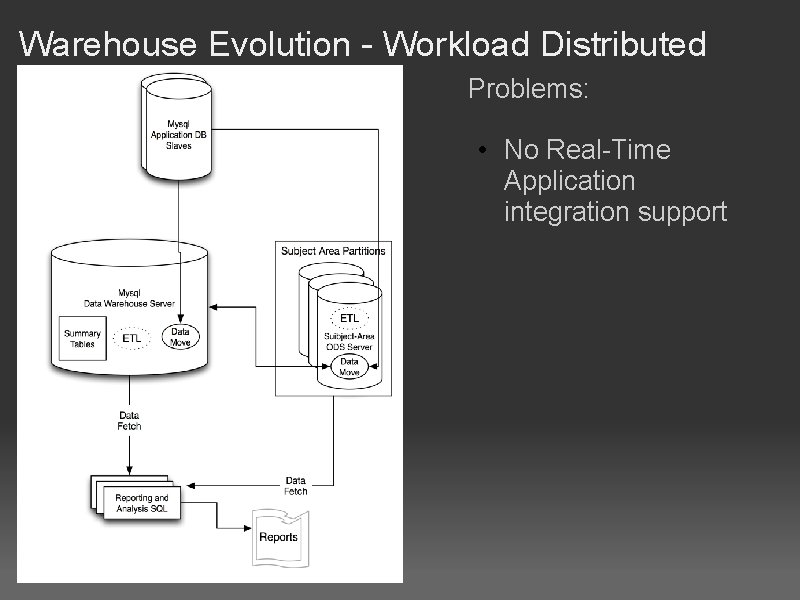

Warehouse Evolution - Workload Distributed Problems: • No Real-Time Application integration support

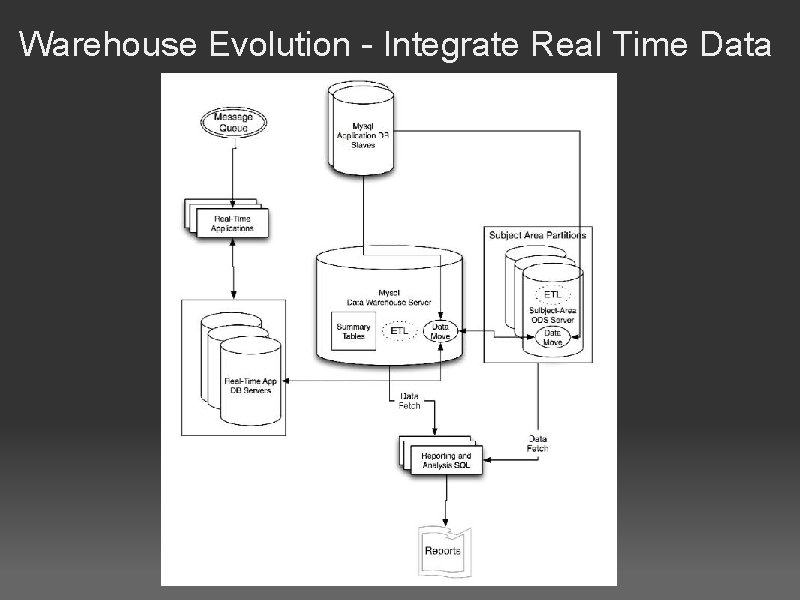

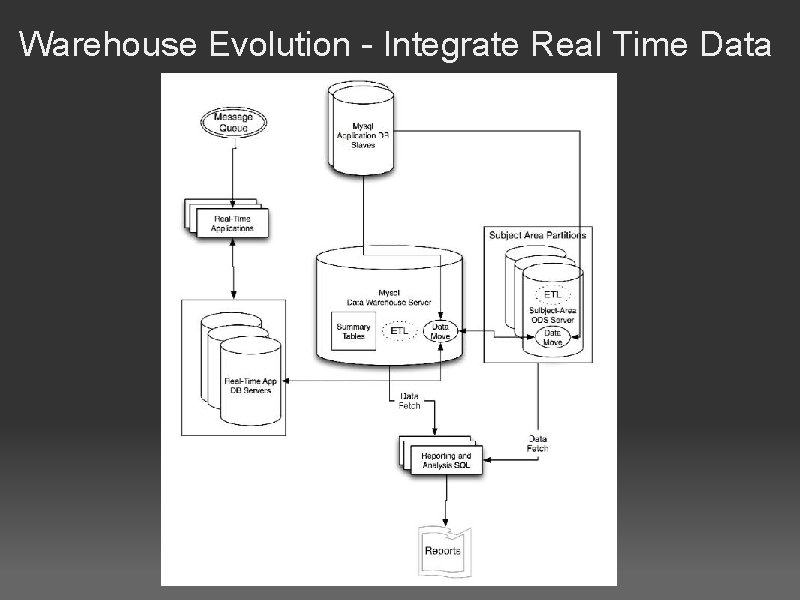

Warehouse Evolution - Integrate Real Time Data

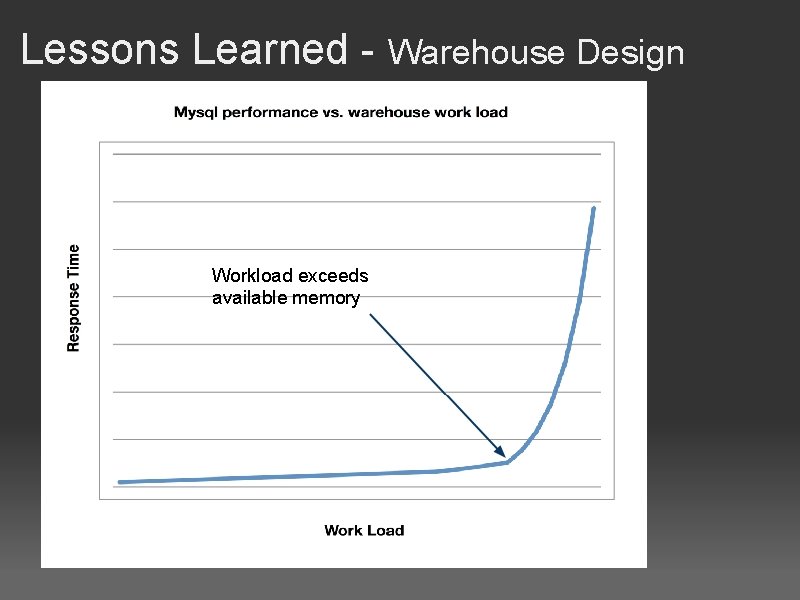

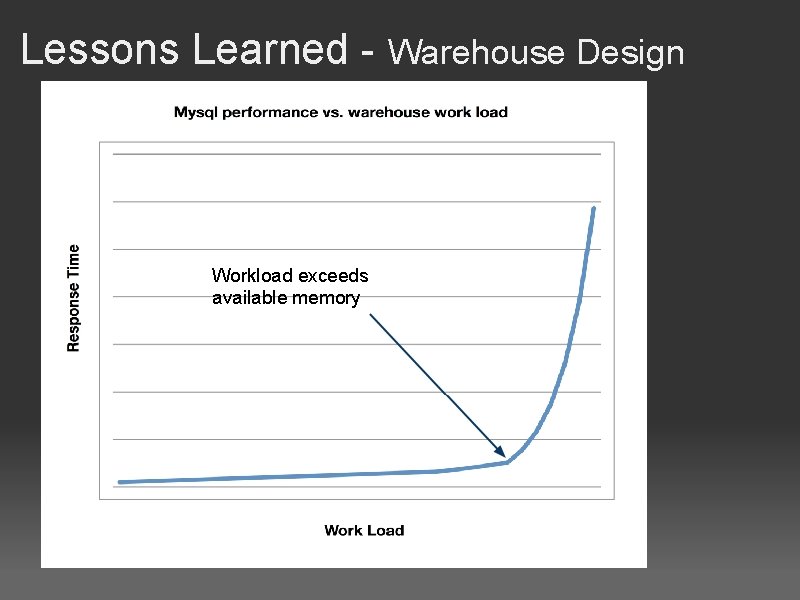

Lessons Learned - Warehouse Design Workload exceeds available memory

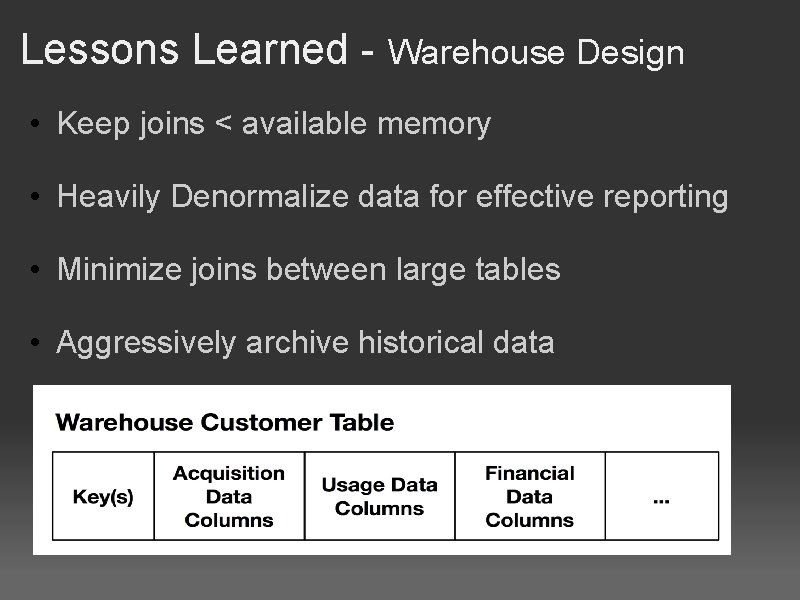

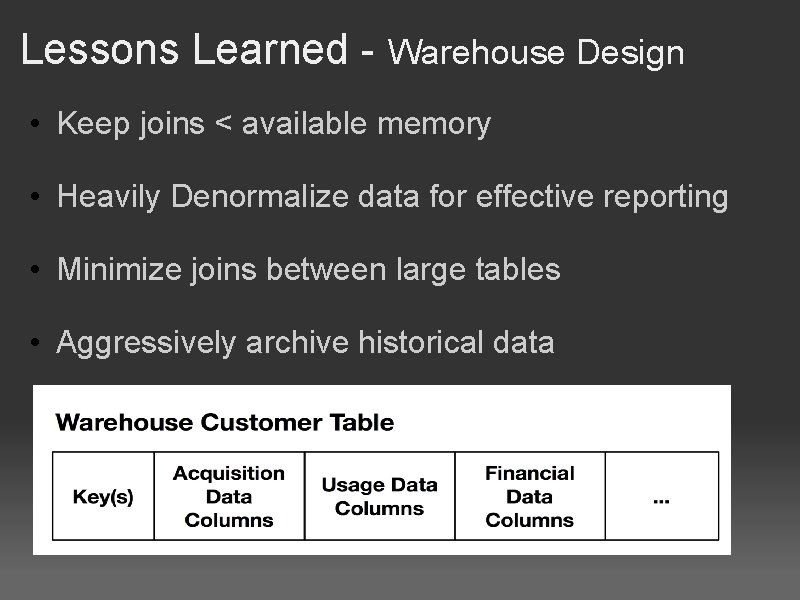

Lessons Learned - Warehouse Design • Keep joins < available memory • Heavily Denormalize data for effective reporting • Minimize joins between large tables • Aggressively archive historical data

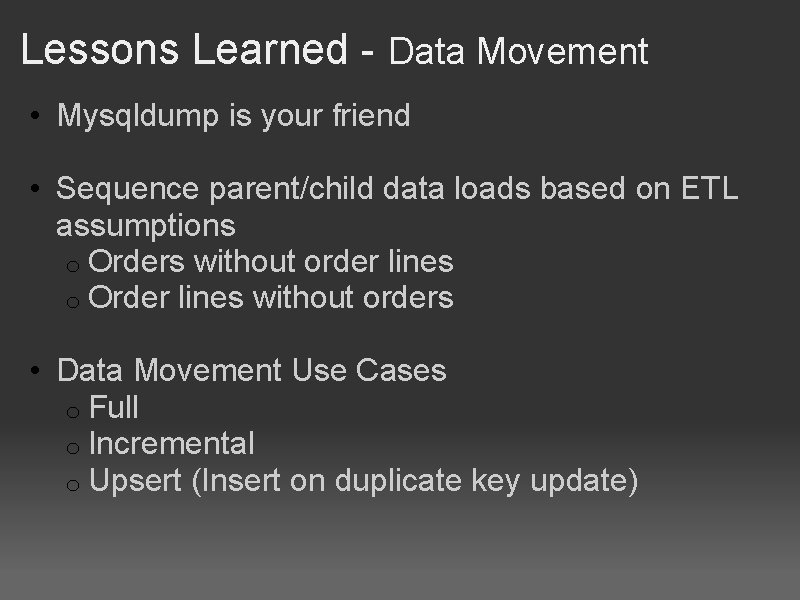

Lessons Learned - Data Movement • Mysqldump is your friend • Sequence parent/child data loads based on ETL assumptions o Orders without order lines o Order lines without orders • Data Movement Use Cases o Full o Incremental o Upsert (Insert on duplicate key update)

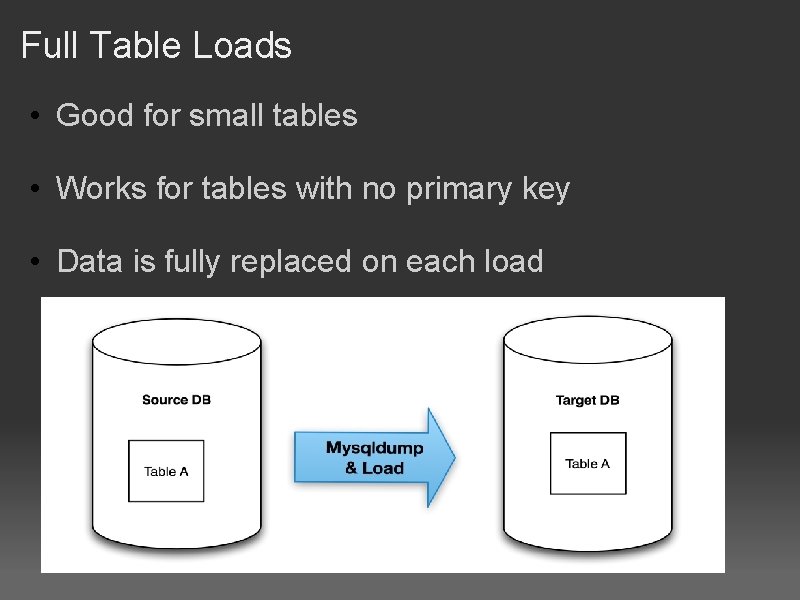

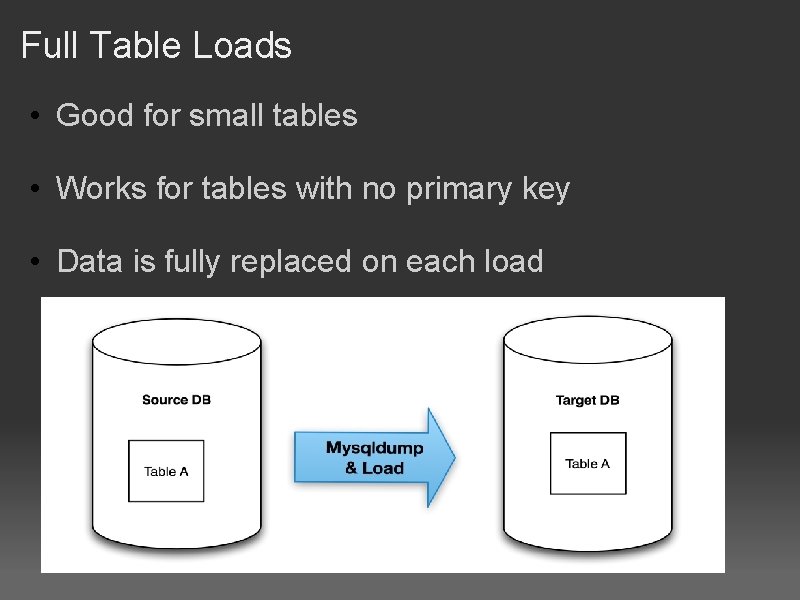

Full Table Loads • Good for small tables • Works for tables with no primary key • Data is fully replaced on each load

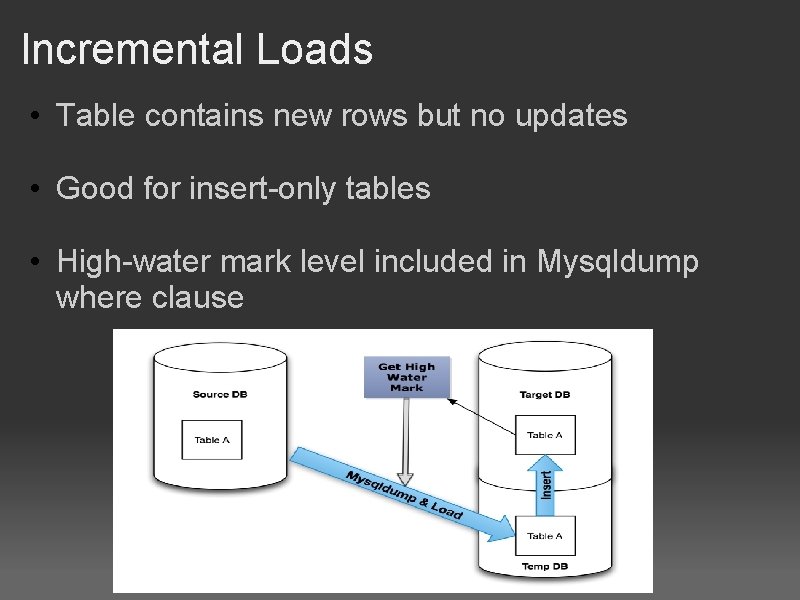

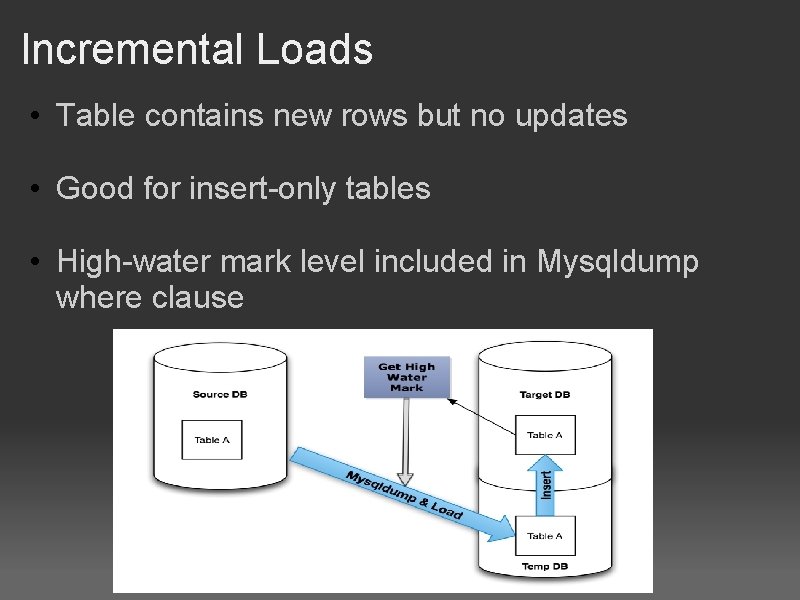

Incremental Loads • Table contains new rows but no updates • Good for insert-only tables • High-water mark level included in Mysqldump where clause

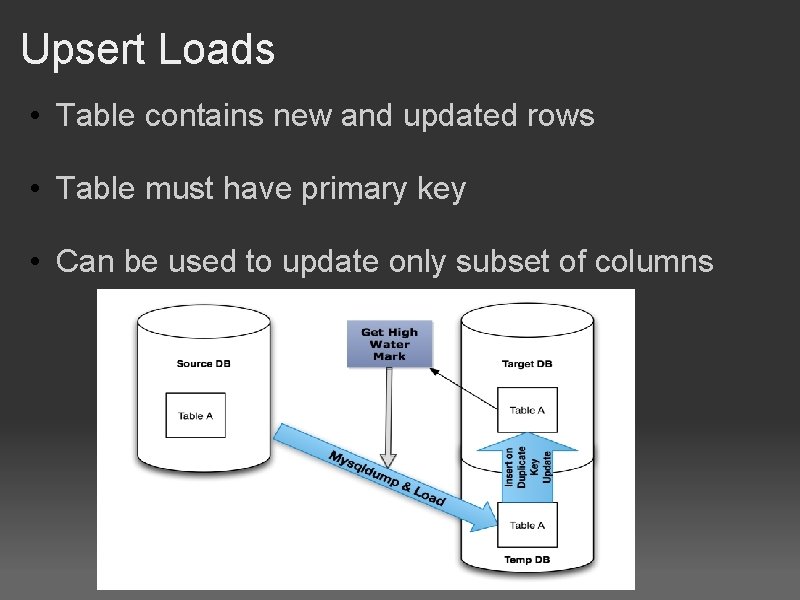

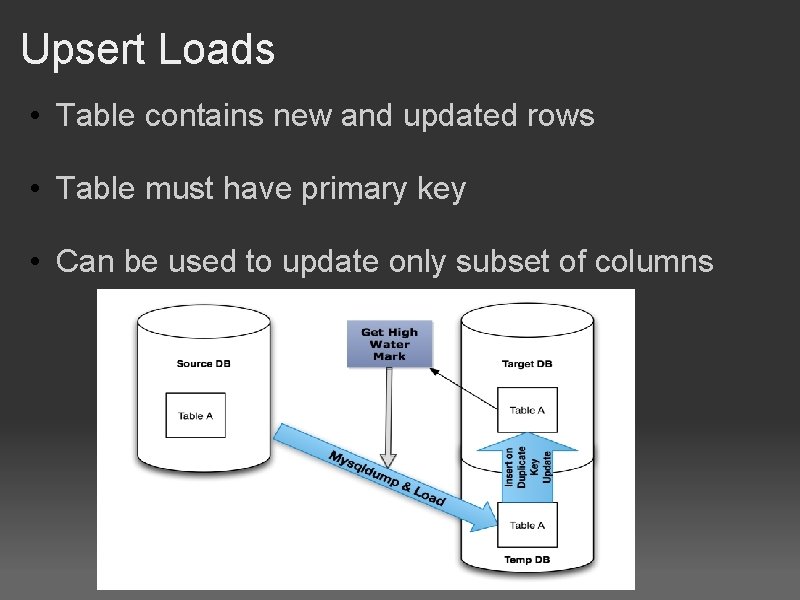

Upsert Loads • Table contains new and updated rows • Table must have primary key • Can be used to update only subset of columns

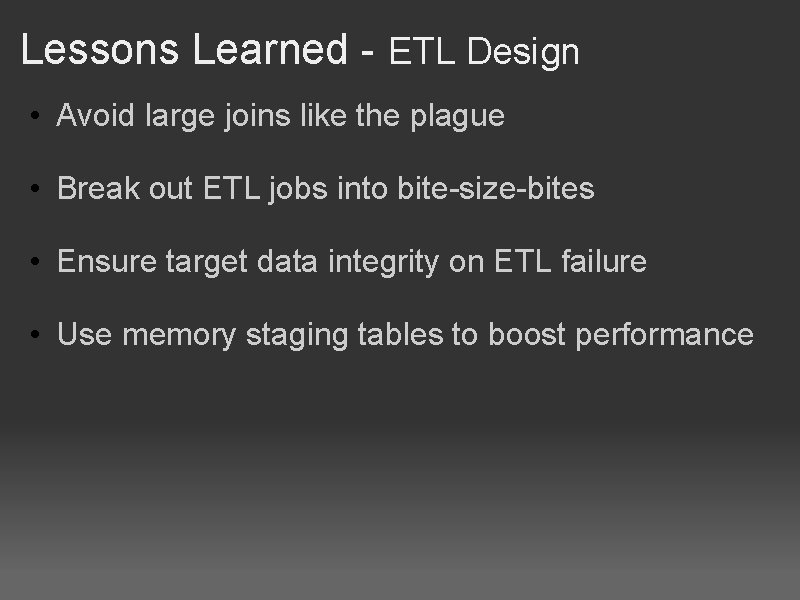

Lessons Learned - ETL Design • Avoid large joins like the plague • Break out ETL jobs into bite-size-bites • Ensure target data integrity on ETL failure • Use memory staging tables to boost performance

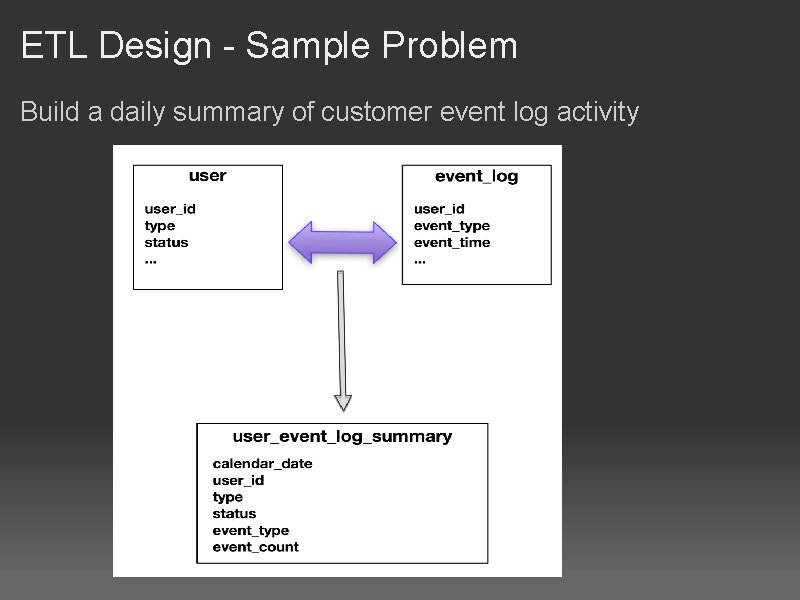

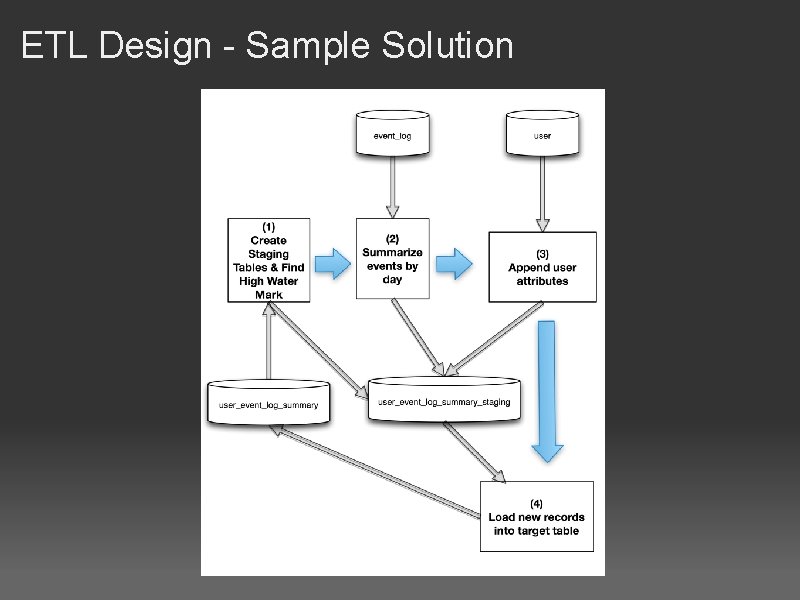

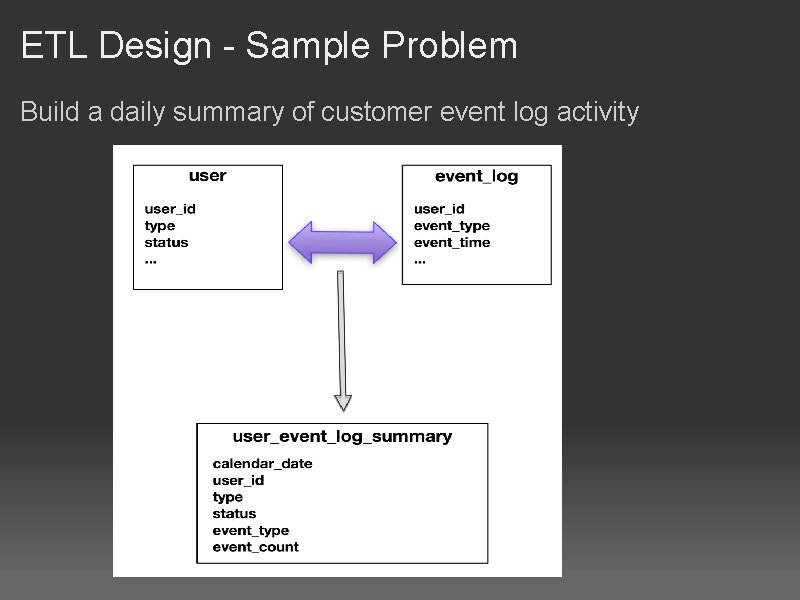

ETL Design - Sample Problem Build a daily summary of customer event log activity

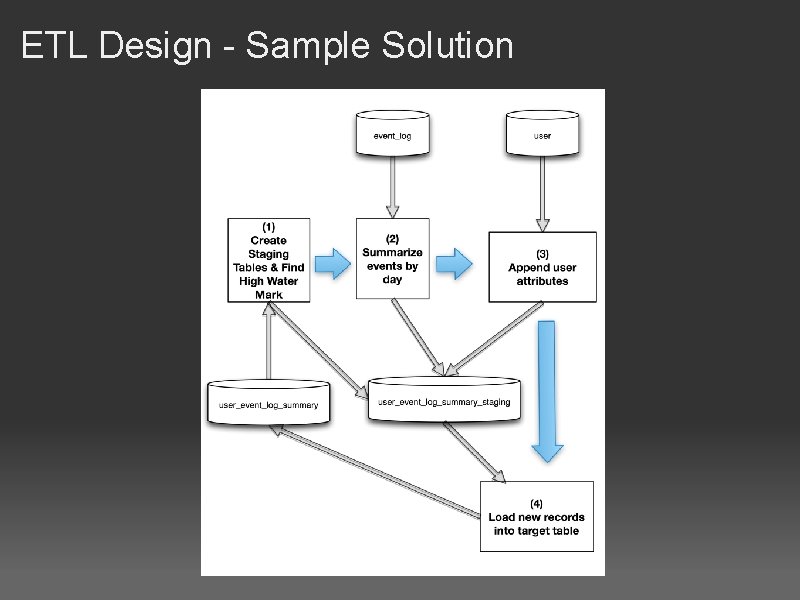

ETL Design - Sample Solution

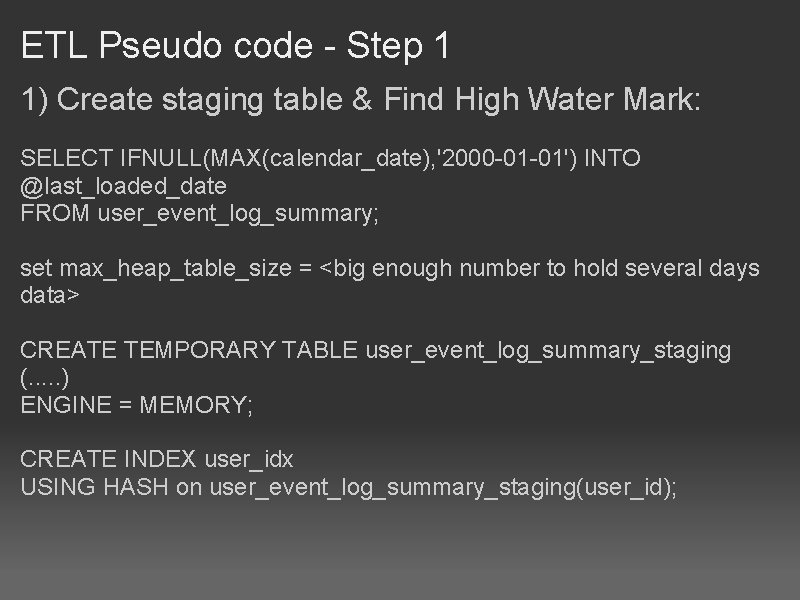

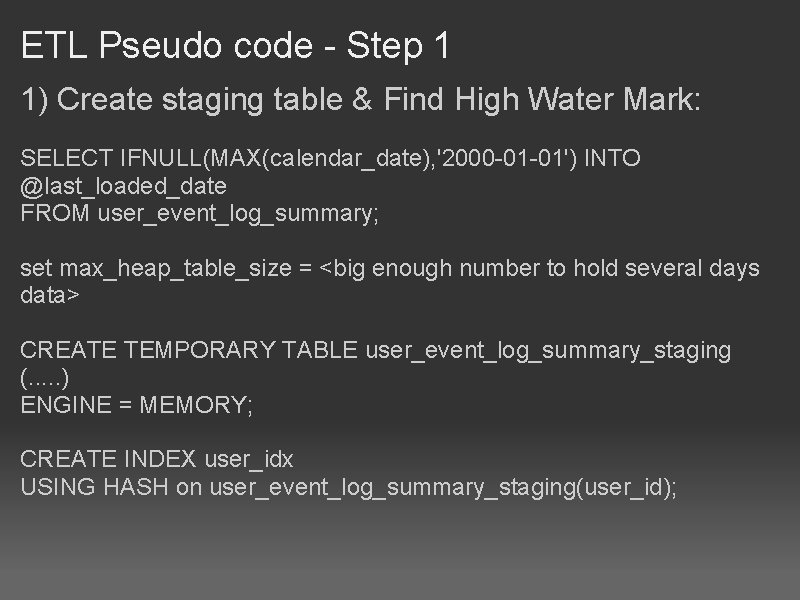

ETL Pseudo code - Step 1 1) Create staging table & Find High Water Mark: SELECT IFNULL(MAX(calendar_date), '2000 -01 -01') INTO @last_loaded_date FROM user_event_log_summary; set max_heap_table_size = <big enough number to hold several days data> CREATE TEMPORARY TABLE user_event_log_summary_staging (. . . ) ENGINE = MEMORY; CREATE INDEX user_idx USING HASH on user_event_log_summary_staging(user_id);

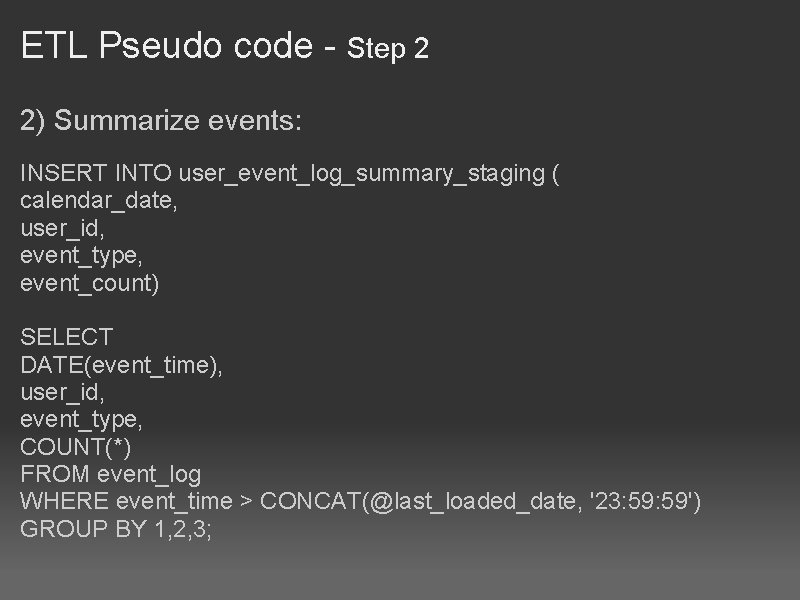

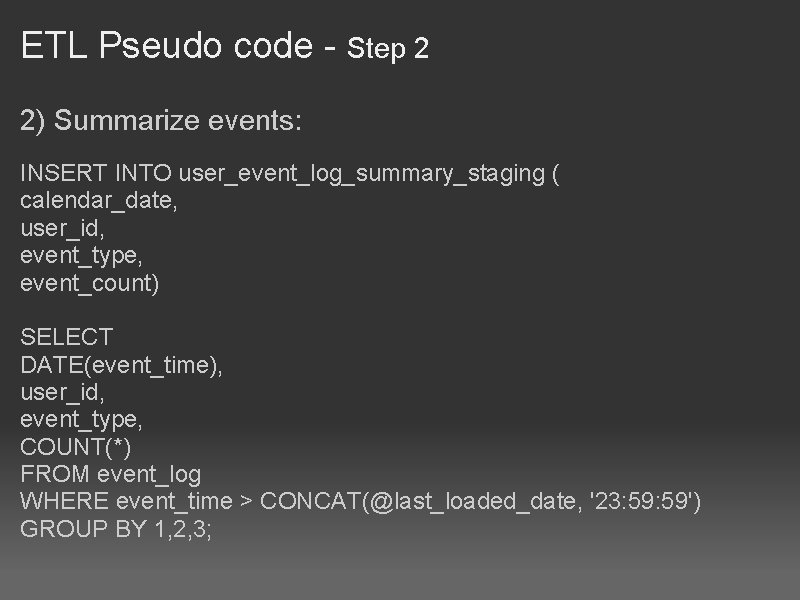

ETL Pseudo code - Step 2 2) Summarize events: INSERT INTO user_event_log_summary_staging ( calendar_date, user_id, event_type, event_count) SELECT DATE(event_time), user_id, event_type, COUNT(*) FROM event_log WHERE event_time > CONCAT(@last_loaded_date, '23: 59') GROUP BY 1, 2, 3;

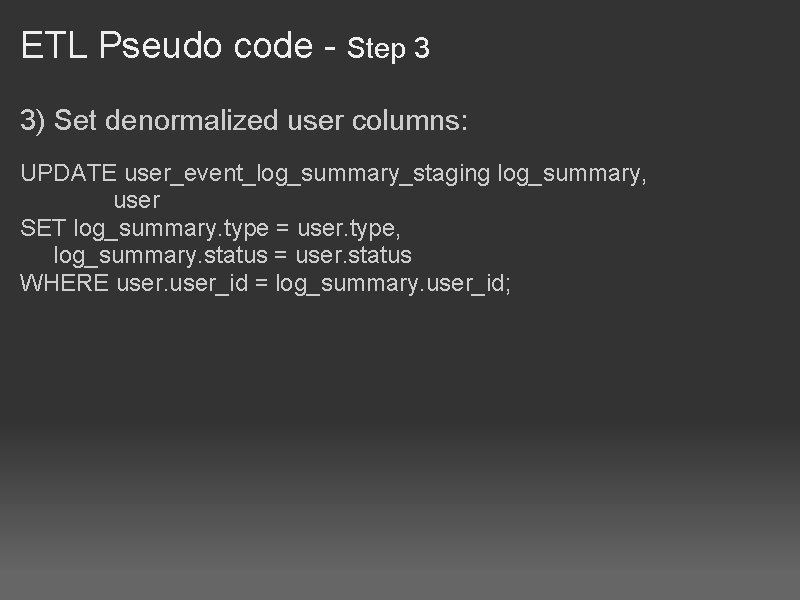

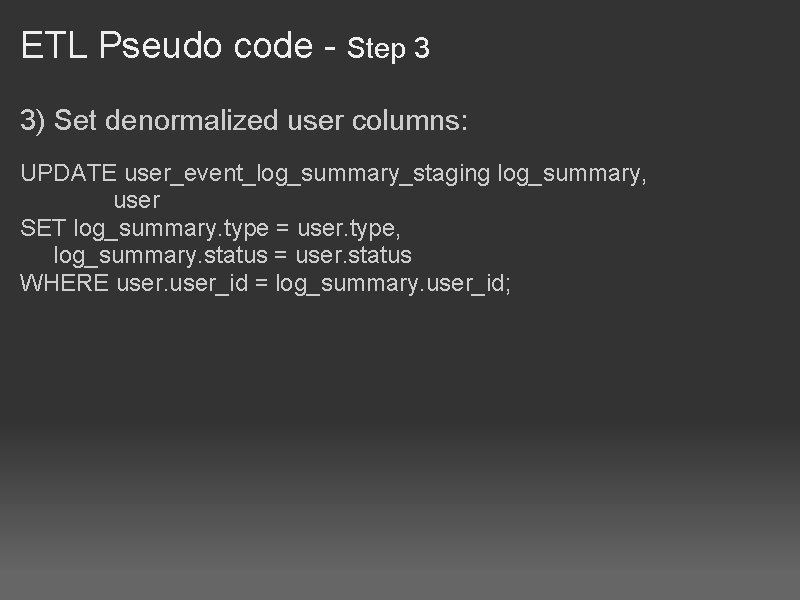

ETL Pseudo code - Step 3 3) Set denormalized user columns: UPDATE user_event_log_summary_staging log_summary, user SET log_summary. type = user. type, log_summary. status = user. status WHERE user_id = log_summary. user_id;

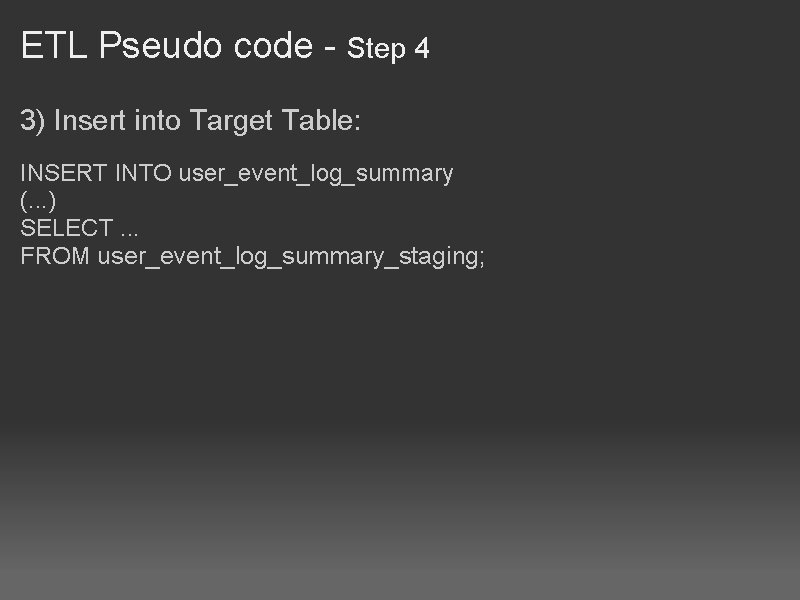

ETL Pseudo code - Step 4 3) Insert into Target Table: INSERT INTO user_event_log_summary (. . . ) SELECT. . . FROM user_event_log_summary_staging;

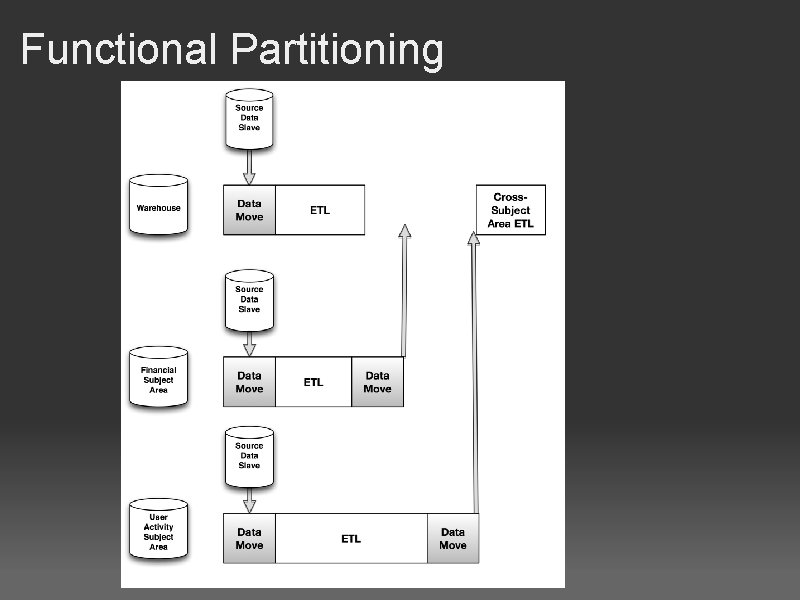

Functional Partitioning • Benefits depend on o Partition Execution Times o Data Move Times o Dependencies between functional partitions

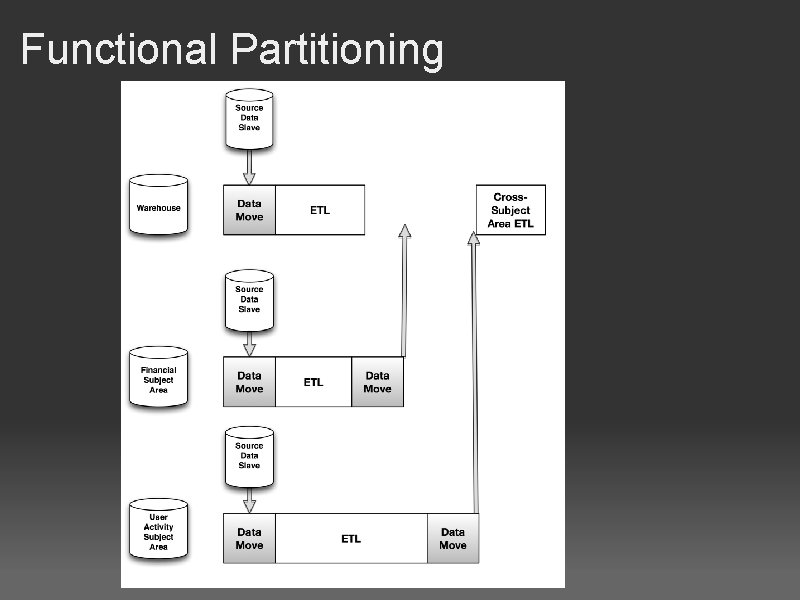

Functional Partitioning

Job Management • Run everything single-threaded on a server • Handle dependencies between jobs across servers • Smart re-start key to survival • Implemented 3 -level hierarchy of processing o Process (collection of build steps and data moves) o Build Steps (ETL 'units of work') o Data Moves

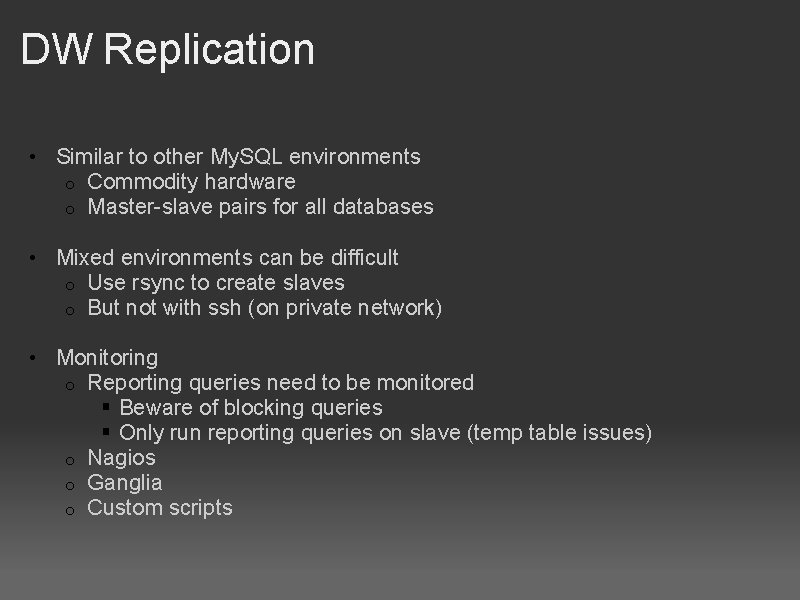

DW Replication • Similar to other My. SQL environments o Commodity hardware o Master-slave pairs for all databases • Mixed environments can be difficult o Use rsync to create slaves o But not with ssh (on private network) • Monitoring o Reporting queries need to be monitored § Beware of blocking queries § Only run reporting queries on slave (temp table issues) o Nagios o Ganglia o Custom scripts

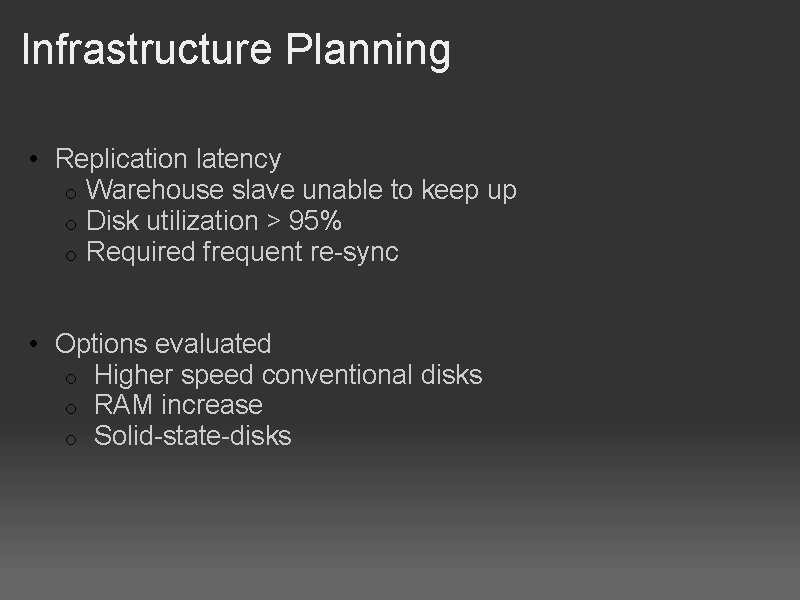

Infrastructure Planning • Replication latency o Warehouse slave unable to keep up o Disk utilization > 95% o Required frequent re-sync • Options evaluated o Higher speed conventional disks o RAM increase o Solid-state-disks

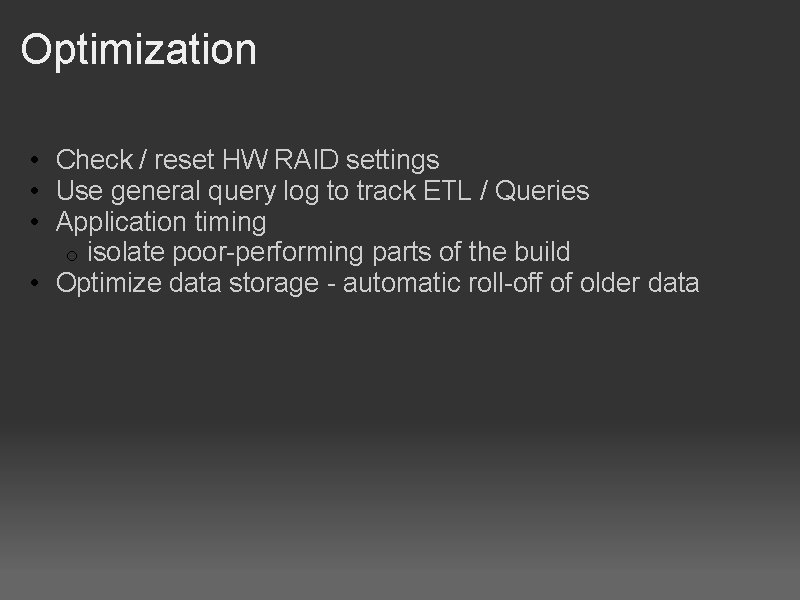

Optimization • Check / reset HW RAID settings • Use general query log to track ETL / Queries • Application timing o isolate poor-performing parts of the build • Optimize data storage - automatic roll-off of older data

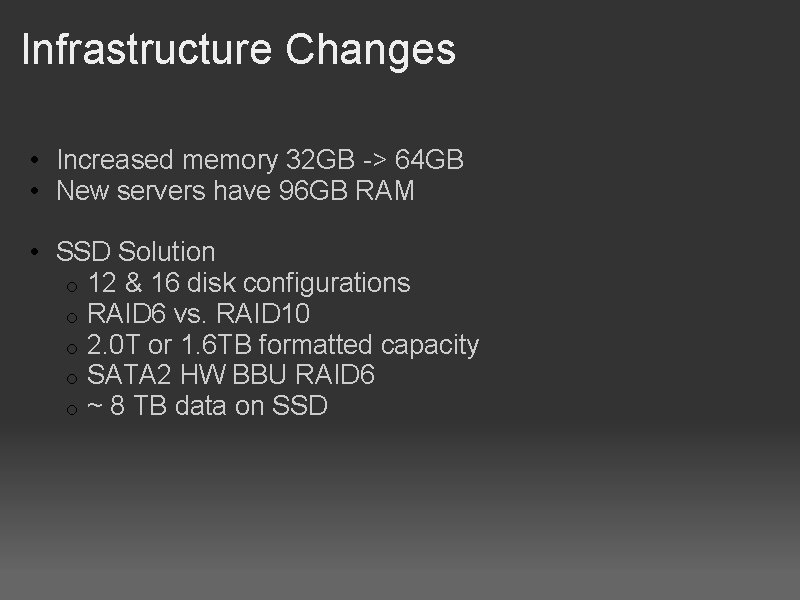

Infrastructure Changes • Increased memory 32 GB -> 64 GB • New servers have 96 GB RAM • SSD Solution o 12 & 16 disk configurations o RAID 6 vs. RAID 10 o 2. 0 T or 1. 6 TB formatted capacity o SATA 2 HW BBU RAID 6 o ~ 8 TB data on SSD

Results • Sometimes it pays to throw hardware at a problem o 15 -hour warehouse builds on old system o 6 hours on optimized system o No application changes

Finally. . . Archive Two-tiered solution • Move data into archive tables in separate DB • Use select to dump data - efficient and fast • Archive server handles migration o Dump data o GPG o Push to S 3

Survival Tips • Efforts to scale are non-linear o As you scale, it becomes increasingly difficult to manage o Be prepared to supplement your warehouse strategy § Dedicated appliance § Distributed processing (Hadoop, etc) • You can gain a great deal of headroom by optimizing I/O o Optimize current disk I/O path o Examine SSD / Flash solutions o Be pragmatic about table designs • It's important to stay ahead of the performance curve o Be proactive - monitor growth, scale early • Monitor everything, including your users o Bad queries can bring replication down