Measurement: The hardest part of doing research?

- Slides: 32

Measurement

- The hardest part of doing research?
  - You’ll see when we begin operationalizing concepts
  - May seem easy, trivial, or even boring, but it is crucial
- The most important part of research?
  - Fancy statistics on poor measurements are a problem.

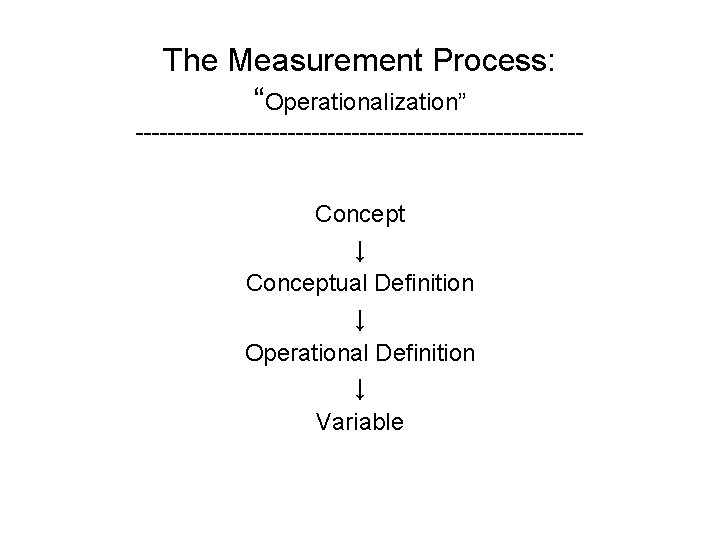

The Measurement Process: “Operationalization”

Concept → Conceptual Definition → Operational Definition → Variable

Concepts are vague

Empirical political research analyzes concepts and the relationships between them, but what is:

- Education?
- Feminism?
- Globalization?
- Liberalism?
- Democracy?

- Even “easier” concepts may be hard to define:
  - Partisanship of voters
  - Number of political parties in a country
  - Political tolerance

Conceptual Definition: properties and subjects

Must communicate three things:

1. The variation within a characteristic
2. The subject or groups to which the concept applies
3. How the characteristic is to be measured

- E.g.: The concept of ______ is defined as the extent to which ______ exhibits the characteristic of ______.
- Try: tolerance, democracy, capitalism, liberalism, etc.

Operational Definition: How does one measure the concept?

- Critical, necessary step for analysis to be possible
- Toughest part
  - One needs to be very specific
- Easiest to criticize
  - There are almost always problems or exceptions
  - Need to defend measures thoroughly

Operationalization: A simple example

- Education (how well individuals are educated)
  - How might we measure it?
- Problems with possible definitions?
- What operationalization is actually used?

- Advantages?
  - Simple to use
  - Seems right in most instances
  - Almost impossible to think of a better measure
- Disadvantages?
  - Some examples are problematic

Operationalization: A more difficult example

- People’s political partisanship
  - Conceptual definition: how people feel about the Democratic v. the Republican party (or loyalty to the parties, or party attachments)
  - How might we measure it?
- Problems with possible definitions?
- What operationalization is actually used?

- Advantages?
  - Applies to voters and nonvoters alike
  - Avoids problems of which elections to use, etc.
  - Notion of deviating from ID is useful
  - As often asked, provides strength of ID as well as direction

- Disadvantages?
  - The leaner problem (text, p. 17)
  - It doesn’t travel well.
- A point about its use
  - You see it a lot in the media
  - E.g., did Bush win over Democrats? How do men and women differ on ID? Has the percentage of independents increased?

Operationalization: A deceptively hard example

- Number of political parties in a country
  - Appears easy: any problems with it?
- What operationalization is actually used?

- Advantages?
  - The way it deals with small parties
- Disadvantages?
  - Some examples are problematic
- A point about its use
  - How good it is may depend on what it is used for
  - A conceptual question again
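The slides do not name the operationalization they have in mind. One widely used candidate, offered here only as an assumption, is the Laakso-Taagepera "effective number of parties": 1 divided by the sum of squared party shares. The sketch below uses hypothetical vote shares, not real election results, to show how the index discounts small parties.

```python
def effective_number_of_parties(shares):
    """Laakso-Taagepera index: 1 / sum of squared proportional shares.

    `shares` may be vote or seat shares in any unit (percent, counts);
    they are normalized to proportions before squaring.
    """
    total = sum(shares)
    if total <= 0:
        raise ValueError("shares must sum to a positive number")
    proportions = [s / total for s in shares]
    return 1.0 / sum(p * p for p in proportions)


# Hypothetical vote shares in percent (illustrative only).
two_equal_parties = [50, 50]
two_large_two_small = [45, 45, 5, 5]

print(round(effective_number_of_parties(two_equal_parties), 2))
print(round(effective_number_of_parties(two_large_two_small), 2))
```

A raw count would report four parties in the second case, while the index stays much closer to two. Whether that is the right answer depends on what the measure is used for, which is the conceptual question the slide raises.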

Reliability and validity

- How well does an operationalization work?
- Begin (see text, p. 14) by defining:
  - Measurement = Intended characteristic + Systematic error + Random error
- Usually judged by assessing:
  - Validity
  - Reliability
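The decomposition Measurement = Intended characteristic + Systematic error + Random error can be illustrated with a small simulation. This is a minimal sketch: the function name, the "true" value, and the error sizes are all made up for illustration. It contrasts a scale that is consistently off (systematic error, a validity problem) with one that is right on average but noisy (random error, a reliability problem).

```python
import random
import statistics


def simulate_measurements(true_value, systematic_error, random_sd, n, seed=0):
    """Return n readings of: true value + systematic error + random error."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    return [
        true_value + systematic_error + rng.gauss(0, random_sd)
        for _ in range(n)
    ]


TRUE_WEIGHT = 150.0  # hypothetical intended characteristic

# A scale that always reads about 5 units heavy but barely varies.
biased_but_steady = simulate_measurements(TRUE_WEIGHT, 5.0, 0.1, n=1000)
# A scale that is right on average but bounces around a lot.
unbiased_but_noisy = simulate_measurements(TRUE_WEIGHT, 0.0, 5.0, n=1000)

for label, readings in [
    ("biased but steady", biased_but_steady),
    ("unbiased but noisy", unbiased_but_noisy),
]:
    mean = statistics.mean(readings)
    spread = statistics.stdev(readings)
    print(f"{label}: mean={mean:.1f}, sd={spread:.1f}")
```

The first set of readings is highly consistent yet centered on the wrong value; the second is centered on the right value but inconsistent from one reading to the next.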

Validity

- Definition is easy:
  - Does a measure gauge (or measure) the intended characteristic and only that characteristic?
- But it is difficult to apply:
  - How do we know what is being measured?
- Refers to problems of systematic error
  - But saying that doesn’t help a whole lot

Validity tests

- Face validity: does the measure look like it measures what it’s supposed to?
  - Occasionally useful, at least when a measure does not pass this test.
  - Usually no explicit tests are made to determine face validity, but the term is used loosely (Shull & Vanderleeuw)

- Construct validity: concerned with the relationship of a given measure with other measures, e.g., is the SAT a good predictor of success in college?
  - Useful to a degree
  - But how strong a relationship is required?
- Other, related tests (content validity, criterion-related validity) are similar

An aside on Hawthorne effects

- Effects that result from individuals’ awareness that they are being tested
  - Origin in an industrial study
- Very important in experiments
  - Disguising the purpose of an experiment helps
- The analogous impact in political science is in surveys
  - E.g., a survey on elections makes people more attentive to them and more likely to vote

Reliability

- A measure is reliable to the extent that it is consistent, i.e., there is no random error
  - Scales, or guns, are good examples
  - Note: Reliability ≠ Validity
- Random error (noise) is never entirely absent
  - Unlike with validity, there are tests of reliability

Evaluating Reliability

- Four methods (two mentioned in text)
  - Test-retest method. Problem: learning effect
  - Alternative forms. Problem: are the forms equivalent?
  - Split-half method. Problem: multiple possible halves
  - Internal consistency. Generalization of split-half. Best; most often used

- Reliability methods
  - All rely on correlations (later in course)
  - The best internal consistency method averages all split-half correlations
  - This method is called alpha
  - Simple formula you can learn if you need to (in practice, alpha varies between 0 and 1)
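For readers who want the alpha formula, here is a minimal sketch using the standard variance-based form of Cronbach's alpha: alpha = k/(k-1) × (1 − sum of item variances / variance of total scores), where k is the number of items. The function name and the tiny data sets are hypothetical and chosen only to show the two extremes.

```python
import statistics


def cronbach_alpha(items):
    """Cronbach's alpha for a list of k items.

    Each item is a list of scores, one per respondent, in the same
    respondent order across items.
    """
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("every item needs one score per respondent")

    item_variances = [statistics.variance(item) for item in items]
    # Each respondent's total score across all items.
    totals = [sum(scores) for scores in zip(*items)]
    total_variance = statistics.variance(totals)
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical data: three items that agree perfectly across respondents.
consistent_items = [[1, 2, 3, 4, 5], [1, 2, 3, 4, 5], [1, 2, 3, 4, 5]]
# Hypothetical data: two items that are unrelated to each other.
unrelated_items = [[0, 0, 1, 1], [0, 1, 0, 1]]

print(cronbach_alpha(consistent_items))
print(cronbach_alpha(unrelated_items))
```

Items that move together push alpha toward 1; items that share nothing push it toward 0. With badly behaved items the formula can even dip below 0, which is usually read as a sign that the items do not belong on one scale.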

- Validity and reliability concepts apply not just to tests or survey items. Think about:
  - Profit as a measure of CEO ability
  - Gun registrations as a measure of gun ownership
  - Reported crimes as a measure of the crime rate
- Even “hard” data can be invalid or unreliable

A real-world example

- Interesting, important concept: support for democracy
- Conceptual definition: how much people in various countries say they support (or prefer, or would like) a democratic government.
- Operationalization (survey): Agree or disagree: “Democracy has its problems, but it’s better than any other form of government.”

- Surveys have often found high levels of support for democracy using this kind of measure
- Question: is this a valid measure of support for democracy?

Variables

- Actual measurement of the concept
  - Variable name v. variable’s values
  - As long as you remember this distinction, you shouldn’t have a problem
- Examples:
  - Religion (Protestant, Catholic, Jewish, etc.)
  - Height (values in feet and inches)

Variables (cont.)

- Residual categories: a small, but often nagging point
  - Cases (respondents, counties, countries, etc.) for which the data are missing
- We’ll deal with these later; just note the problem here

Levels of Measurement

- Nominal (least precise): categorical
  - E.g., Protestant, Catholic, Jewish, Atheist
- Ordinal: relative difference (higher/lower; for/against)
  - E.g., support, neutral, oppose
- Interval (most precise): exact difference in units
  - Common in aggregate data: turnout, budget, GDP, numbers of members, deaths in war
  - Less common in individual-level data, where many characteristics are:
    - non-quantifiable (religion, region, etc.)
    - measured on no agreed-upon scale (happiness, tolerance)
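One practical consequence of the level of measurement is which summary statistic makes sense. The sketch below is an illustration with made-up values, not data from the course: it takes the mode for a nominal variable, the median category for an ordinal one, and the mean for an interval one. The helper `ordinal_median` and the category ordering are assumptions introduced here.

```python
import statistics

# Nominal: categories with no order, so only the mode is meaningful.
religion = ["Protestant", "Catholic", "Protestant", "Jewish", "Atheist"]

# Ordinal: categories with an order but no fixed distance between them.
OPINION_ORDER = ["oppose", "neutral", "support"]  # lowest to highest
opinion = ["support", "neutral", "oppose", "support", "support"]

# Interval: exact differences in units (hypothetical turnout percentages).
turnout = [52.1, 61.4, 48.9, 70.2]


def ordinal_median(values, order):
    """Median category of an ordinal variable, using only rank order."""
    ranks = [order.index(v) for v in values]
    # median_low always returns an actual observed rank, never an average.
    return order[statistics.median_low(ranks)]


print("modal religion:", statistics.mode(religion))
print("median opinion:", ordinal_median(opinion, OPINION_ORDER))
print("mean turnout:", round(statistics.mean(turnout), 2))
```

Averaging the religion codes would be meaningless, and averaging the opinion ranks assumes equal spacing between categories, which an ordinal scale does not guarantee.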

Levels (cont.)

- In practice, the distinction is not always observed.
  - We’ll see that later on.
- Note that level of measurement and reliability are not the same thing.
  - Interval-level data can be unreliable and invalid (crime rates?)

Unit of analysis

- The entity we are describing
  - Individual: we mean individual people
  - Aggregate: any grouping of individuals
- Often, a single concept can be studied at multiple levels
  - Example: professionalization of state legislators

Unit of analysis (cont.)

- May want to measure and explain why some individual legislators show more signs of professionalization
- May want to measure and explain why legislatures in some states are more professionalized

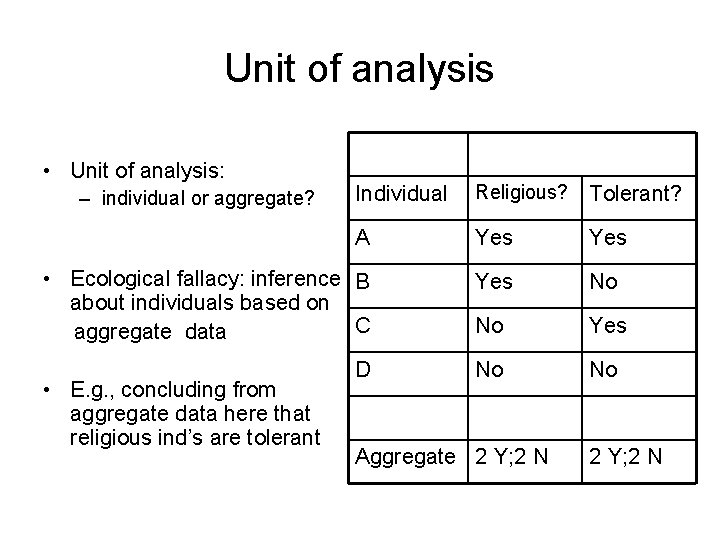

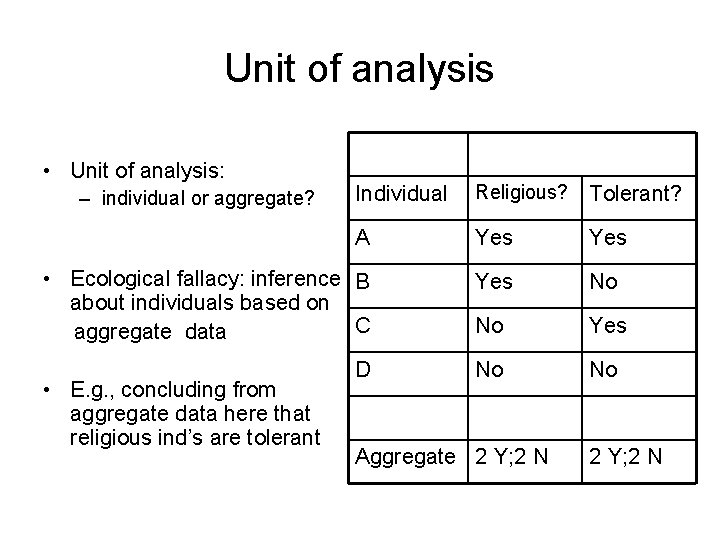

Unit of analysis

- Unit of analysis: individual or aggregate?
- Ecological fallacy: an inference about individuals based on aggregate data
- E.g., concluding from the aggregate data here that religious individuals are tolerant

[Table: individuals A through D, with columns “Religious?” and “Tolerant?”; aggregate row: 2 Yes, 2 No]
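The ecological fallacy can be made concrete with a small sketch. The four individuals below are hypothetical and are not the values from the slide's table. They are chosen so that the aggregate shows two religious and two tolerant people even though no religious individual is tolerant.

```python
# Hypothetical individual-level data (not the slide's actual table).
individuals = {
    "A": {"religious": True, "tolerant": False},
    "B": {"religious": True, "tolerant": False},
    "C": {"religious": False, "tolerant": True},
    "D": {"religious": False, "tolerant": True},
}


def aggregate_counts(people):
    """What an aggregate-level data set would report for the group."""
    n_religious = sum(p["religious"] for p in people.values())
    n_tolerant = sum(p["tolerant"] for p in people.values())
    return n_religious, n_tolerant


def tolerant_share_among_religious(people):
    """Individual-level relationship: share of religious people who are tolerant."""
    religious = [p for p in people.values() if p["religious"]]
    return sum(p["tolerant"] for p in religious) / len(religious)


print("aggregate (religious, tolerant):", aggregate_counts(individuals))
print("tolerant share among religious:", tolerant_share_among_religious(individuals))
```

The aggregate counts are equally compatible with every religious person being tolerant and with none being tolerant, so they cannot settle the individual-level question.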

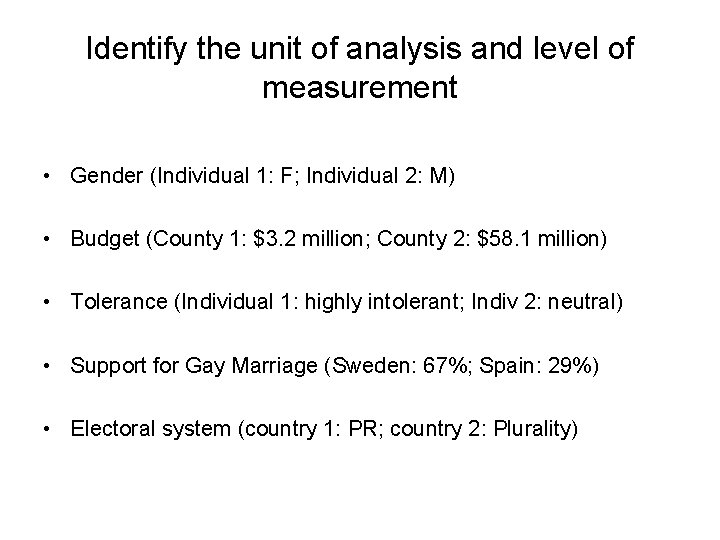

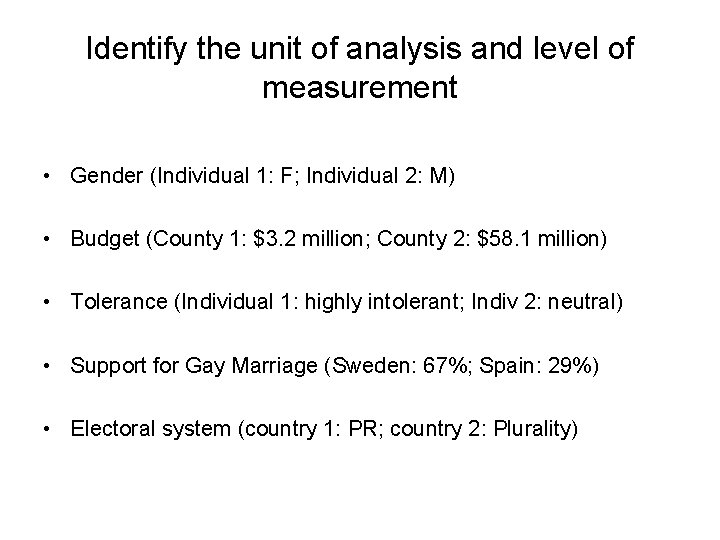

Identify the unit of analysis and level of measurement

- Gender (Individual 1: F; Individual 2: M)
- Budget (County 1: $3.2 million; County 2: $58.1 million)
- Tolerance (Individual 1: highly intolerant; Individual 2: neutral)
- Support for Gay Marriage (Sweden: 67%; Spain: 29%)
- Electoral system (Country 1: PR; Country 2: Plurality)

Anything worth doing is not necessarily worth doing well

Anything worth doing is not necessarily worth doing well Doing nothing is doing ill

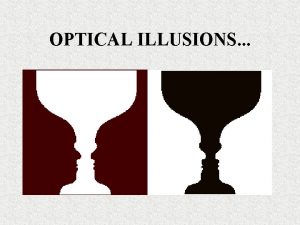

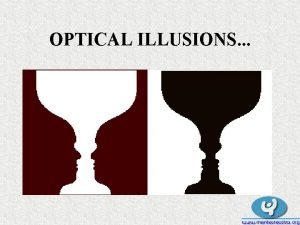

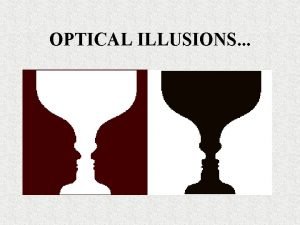

Doing nothing is doing ill Hardest optical illusions

Hardest optical illusions Learning objectives

Learning objectives Man playing saxophone illusion

Man playing saxophone illusion What is the figurative language in nothing gold can stay

What is the figurative language in nothing gold can stay Slyvia path

Slyvia path Hardest thing to say

Hardest thing to say Think win-win scenarios

Think win-win scenarios Hardest quiz ever game

Hardest quiz ever game Personification in nothing gold can stay

Personification in nothing gold can stay Sorry seems to be the hardest word meaning

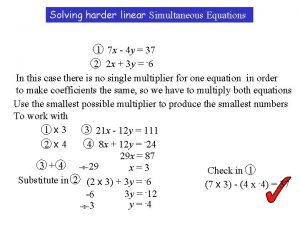

Sorry seems to be the hardest word meaning Simultaneous equations step by step

Simultaneous equations step by step Phonological awareness skills from easiest to hardest

Phonological awareness skills from easiest to hardest The hardest quiz game

The hardest quiz game Which is the hardest

Which is the hardest Reading optical illusions

Reading optical illusions Biographical research design

Biographical research design Product disassembly example

Product disassembly example Criteria for good scale

Criteria for good scale Measurement scales in research

Measurement scales in research Ratio data

Ratio data Measurement scales in research

Measurement scales in research Primary scales of measurement in marketing research

Primary scales of measurement in marketing research Attitude measurement in marketing research

Attitude measurement in marketing research Hát kết hợp bộ gõ cơ thể

Hát kết hợp bộ gõ cơ thể Bổ thể

Bổ thể Tỉ lệ cơ thể trẻ em

Tỉ lệ cơ thể trẻ em Voi kéo gỗ như thế nào

Voi kéo gỗ như thế nào Glasgow thang điểm

Glasgow thang điểm Alleluia hat len nguoi oi

Alleluia hat len nguoi oi Kể tên các môn thể thao

Kể tên các môn thể thao