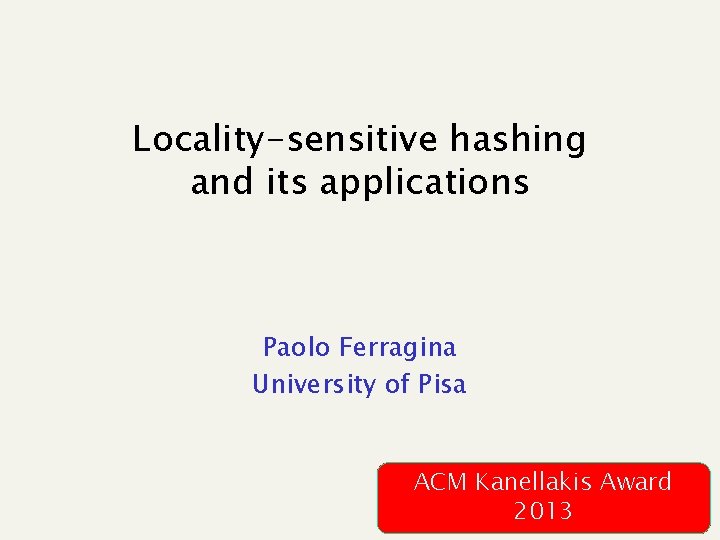

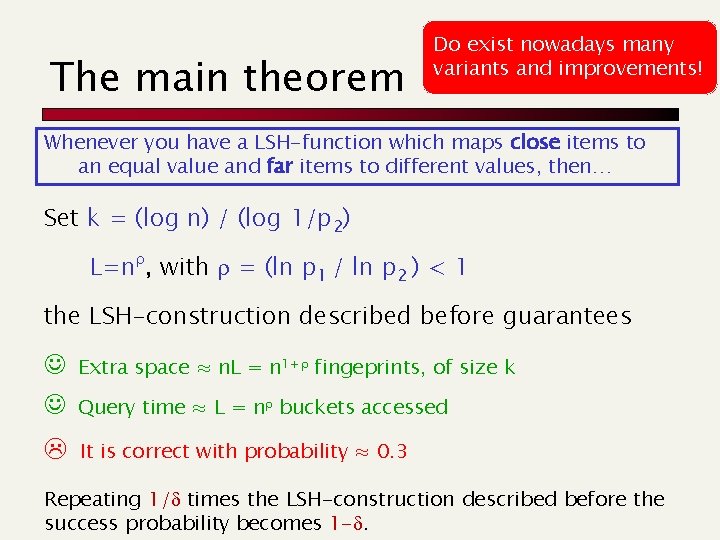

Locality-sensitive hashing and its applications

Paolo Ferragina, University of Pisa

ACM Kanellakis Award 2013

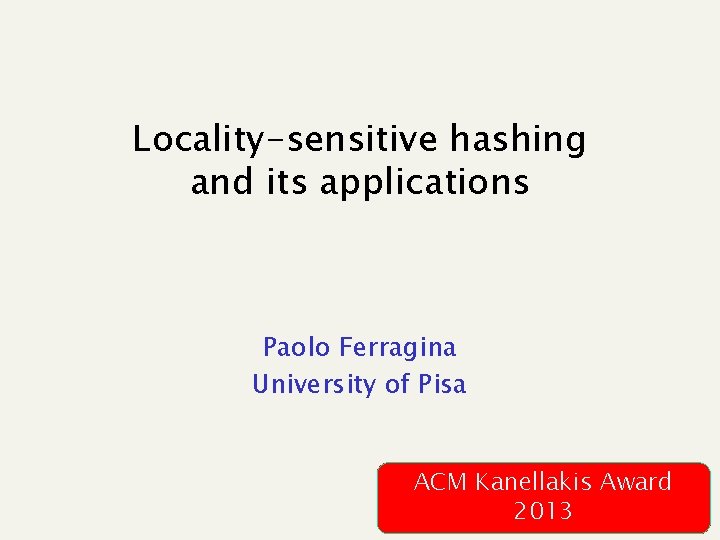

A frequent issue

Given $U$ users, each described by a set of $d$ features, the goal is to find (the largest) group of similar users.

- Features = personal data, preferences, purchases, navigational behavior, search behavior, followers/following, …
- A feature is typically a numerical value: binary or real.
- Similarity($u_1$, $u_2$) is a function that takes the sets of features of users $u_1$ and $u_2$ and returns a value in [0, 1].
- Users could also be Web pages (dedup), products (recommendation), or tweets/news/search results (visualization).

[Figure: items shown as binary feature vectors (000110010, 010001110, 10010) with similarity values 0.1, 0.3, 0.7.]

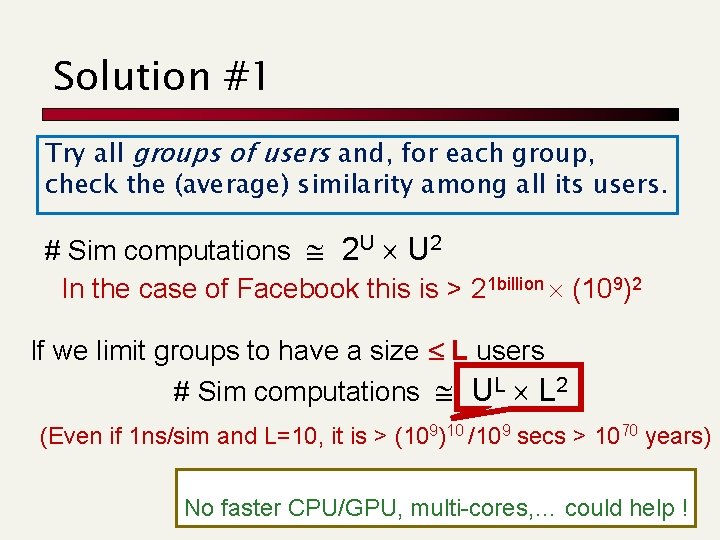

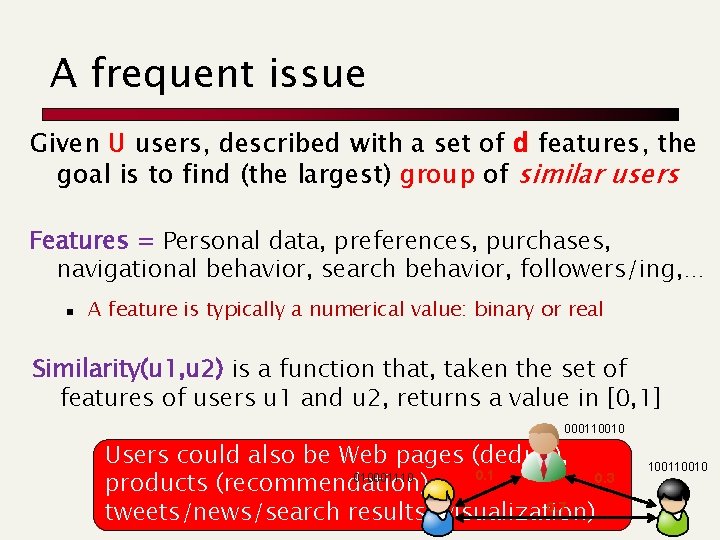

Solution #1

Try all groups of users and, for each group, check the (average) similarity among all its users.

- # Sim computations ≈ $2^U \cdot U^2$. In the case of Facebook this is > $2^{1\text{ billion}} \cdot (10^9)^2$.
- If we limit groups to a size of $L$ users: # Sim computations ≈ $U^L \cdot L^2$. (Even at 1 ns/sim and $L = 10$, it is > $(10^9)^{10} / 10^9$ secs > $10^{70}$ years.)

No faster CPU/GPU, multi-cores, … could help!
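The estimate for groups of limited size can be checked with a few lines of integer arithmetic. This is only a sketch of the slide's lower bound ($U^L$ similarity computations at 1 ns each); the constant names and the 365-day year are assumptions made for illustration.

```python
# Rough cost of Solution #1 when groups are limited to L users.
U = 10**9                  # number of users (the slide's Facebook-scale figure)
L = 10                     # group size
SIMS_PER_SECOND = 10**9    # 1 ns per similarity computation

# Lower bound used on the slide: at least U^L similarity computations.
sim_computations = U**L
seconds = sim_computations // SIMS_PER_SECOND

SECONDS_PER_YEAR = 365 * 24 * 3600   # assumption: a 365-day year
years = seconds // SECONDS_PER_YEAR

# Python integers are arbitrary precision, so the comparison is exact.
print(years > 10**70)
```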

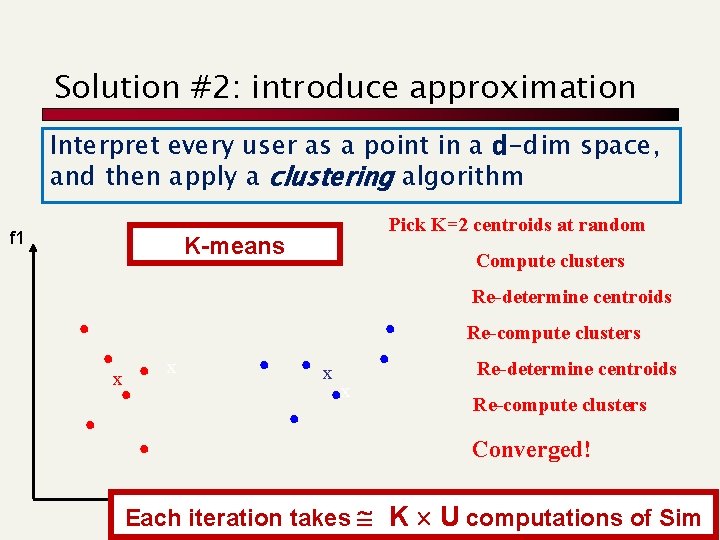

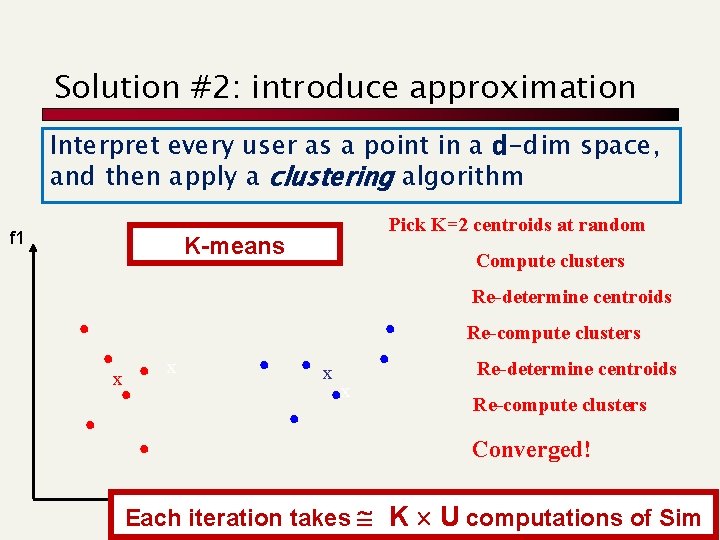

Solution #2: introduce approximation

Interpret every user as a point in a $d$-dim space, and then apply a clustering algorithm.

K-means:

- Pick $K = 2$ centroids at random.
- Compute clusters.
- Re-determine centroids.
- Re-compute clusters.
- Repeat the last two steps until converged.

Each iteration takes $K \times U$ computations of Sim.

[Figure: points in the plane with axes $f_1$ and $f_2$; the centroids (x) move at each iteration until convergence.]
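The loop on this slide can be sketched as follows. This is a minimal, plain-Python version of the standard K-means iteration, assuming squared Euclidean distance as the (dis)similarity; the function names `sq_dist` and `kmeans` and the `seed` parameter are illustrative choices, not part of the slides.

```python
import random

def sq_dist(a, b):
    """Squared Euclidean distance between two points given as tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(points, k, max_iters=100, seed=0):
    rng = random.Random(seed)
    centroids = rng.sample(points, k)        # pick K centroids at random
    assignment = None
    for _ in range(max_iters):
        # Compute clusters: K * U distance computations per iteration.
        new_assignment = [
            min(range(k), key=lambda c: sq_dist(p, centroids[c]))
            for p in points
        ]
        if new_assignment == assignment:     # converged
            break
        assignment = new_assignment
        # Re-determine centroids as the mean of each cluster.
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:                      # keep old centroid if cluster is empty
                centroids[c] = tuple(sum(col) / len(members) for col in zip(*members))
    return centroids, assignment

points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centroids, assignment = kmeans(points, k=2)
print(assignment)
```

On two well-separated groups like these, the first three points end up in one cluster and the last three in the other; which cluster gets which label depends on the random start.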

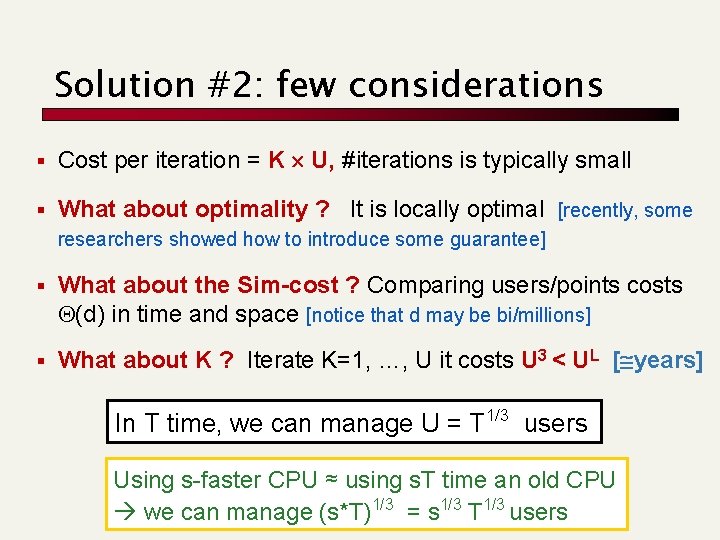

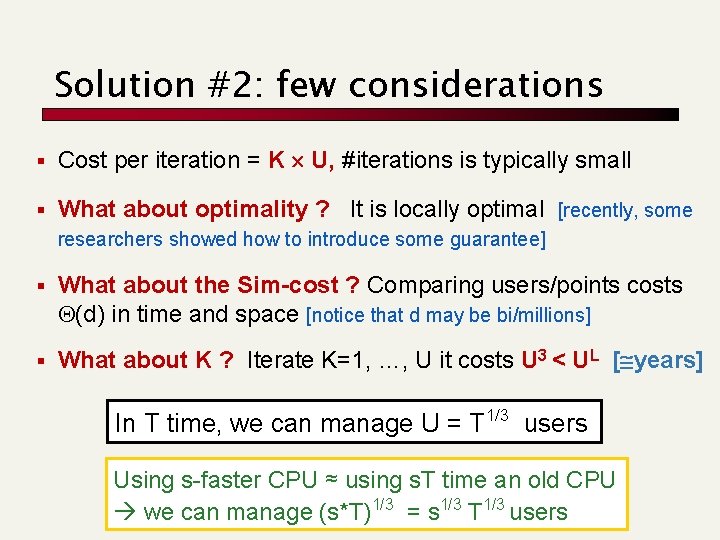

Solution #2: few considerations

- Cost per iteration = $K \times U$; the number of iterations is typically small.
- What about optimality? It is locally optimal [recently, some researchers showed how to introduce some guarantee].
- What about the Sim-cost? Comparing users/points costs $\Theta(d)$ in time and space [notice that $d$ may be in the millions or billions].
- What about $K$? Iterating $K = 1, …, U$ costs $U^3 < U^L$ [years]. In $T$ time, we can manage $U = T^{1/3}$ users. Using an $s$-times faster CPU ≈ using $s \cdot T$ time on an old CPU, so we can manage $(s \cdot T)^{1/3} = s^{1/3} \cdot T^{1/3}$ users.
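The last point is easy to see numerically. The sketch below only restates the slide's arithmetic under its $U^3$ cost model; both function names are hypothetical.

```python
def manageable_users(T):
    """Users that fit in a budget of T Sim computations when the cost is U^3."""
    return T ** (1 / 3)

def gain_in_users(s):
    """Factor by which the number of manageable users grows on an s-times faster CPU."""
    return s ** (1 / 3)

# A CPU that is 1000 times faster handles only about 10 times more users.
print(round(gain_in_users(1000), 3))
```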

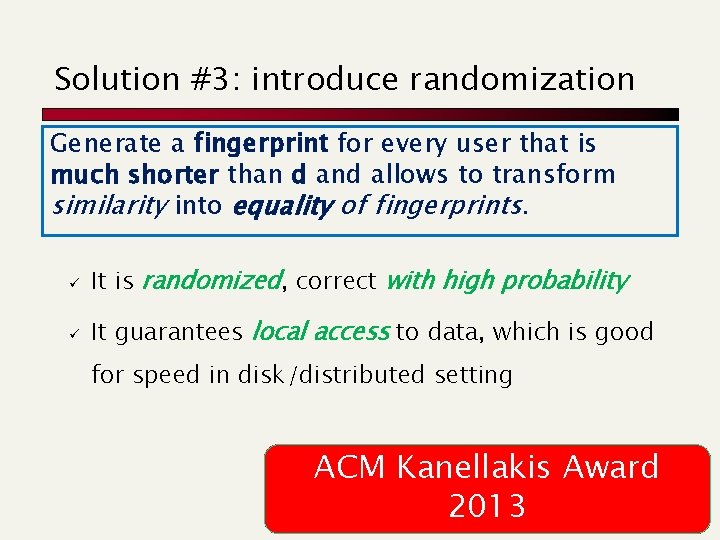

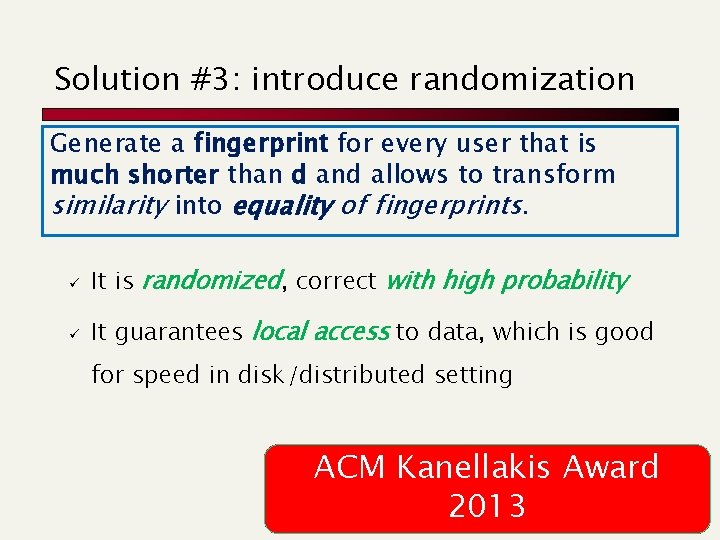

Solution #3: introduce randomization

Generate a fingerprint for every user that is much shorter than $d$ and allows us to transform similarity into equality of fingerprints.

- It is randomized, correct with high probability.
- It guarantees local access to data, which is good for speed in disk/distributed settings.

ACM Kanellakis Award 2013

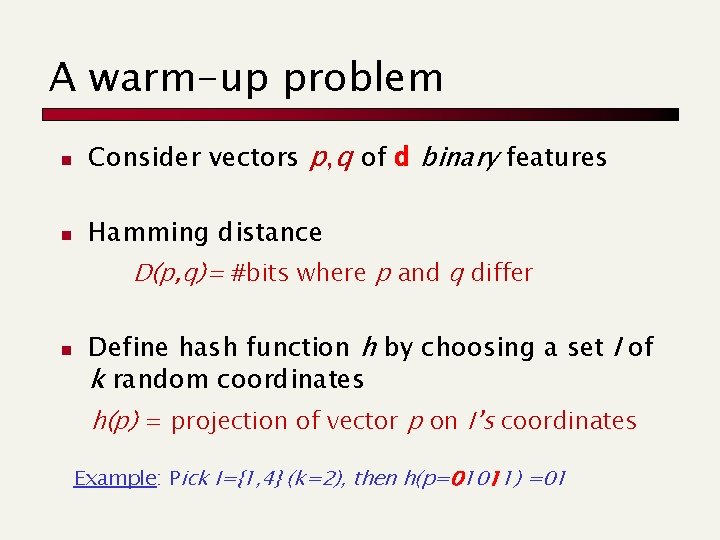

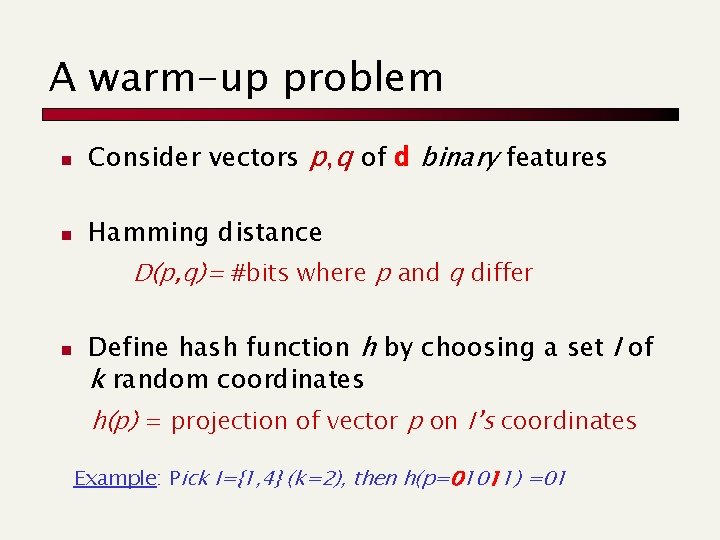

A warm-up problem

- Consider vectors $p$, $q$ of $d$ binary features.
- Hamming distance $D(p, q)$ = number of bits where $p$ and $q$ differ.
- Define the hash function $h$ by choosing a set $I$ of $k$ random coordinates: $h(p)$ = projection of vector $p$ on $I$'s coordinates.

Example: pick $I = \{1, 4\}$ ($k = 2$), then $h(p = 01011) = 01$.
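The projection hash can be sketched in a few lines. Vectors are represented here as bit strings and coordinates are 1-based, as in the slide's example; the helper names `make_projection` and `h` and the choice of sampling distinct coordinates are assumptions of this sketch.

```python
import random

def make_projection(d, k, rng):
    """Choose a set I of k distinct random coordinates in 1..d."""
    return sorted(rng.sample(range(1, d + 1), k))

def h(p, I):
    """Project the bit string p on the (1-based) coordinates in I."""
    return "".join(p[i - 1] for i in I)

# The slide's example: I = {1, 4}, p = 01011.
print(h("01011", [1, 4]))   # prints 01

# A random projection for vectors of d = 5 bits.
I = make_projection(5, 2, random.Random(0))
print(I, h("01011", I))
```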

![A key property: p versus q](https://slidetodoc.com/presentation_image_h2/6dca49195569474fa28a61e6344171b6/image-8.jpg)

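A key property

$p$ versus $q$: # differing positions = $D(p, q)$, # equal positions = $d - D(p, q)$.

$\Pr[\text{picking } x \text{ s.t. } p[x] = q[x]] = (d - D(p, q)) / d$

We can vary the probability by changing $k$:

$\Pr[h(p) = h(q)] = \left( (d - D(p, q)) / d \right)^k = s^k$

where $s$ is the similarity between $p$ and $q$.

Larger $k$ ⇒ smaller false positives. What about false negatives?

[Figure: plots of Pr versus distance for $k = 2$ and $k = 4$.]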
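A small numeric check of this property, as a sketch. The formula $s^k$ treats the $k$ coordinate picks as independent (sampling with replacement); if $I$ is a set of $k$ distinct coordinates, the exact value is a ratio of binomial coefficients, which is close to $s^k$ when $k$ is much smaller than $d$. The function name and the bit-string representation are assumptions.

```python
from math import comb

def collision_probabilities(p, q, k):
    """Return (s, s^k, exact probability for k distinct coordinates)."""
    d = len(p)
    equal = sum(a == b for a, b in zip(p, q))        # d - D(p, q)
    s = equal / d
    with_replacement = s ** k                         # the slide's s^k
    without_replacement = comb(equal, k) / comb(d, k) # all k picks land on equal positions
    return s, with_replacement, without_replacement

s, pr_indep, pr_distinct = collision_probabilities("01001", "01101", k=2)
print(s, pr_indep, pr_distinct)
```

For these two vectors ($d = 5$, $D(p, q) = 1$) the similarity is 0.8, and the two collision probabilities are approximately 0.64 and 0.6.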

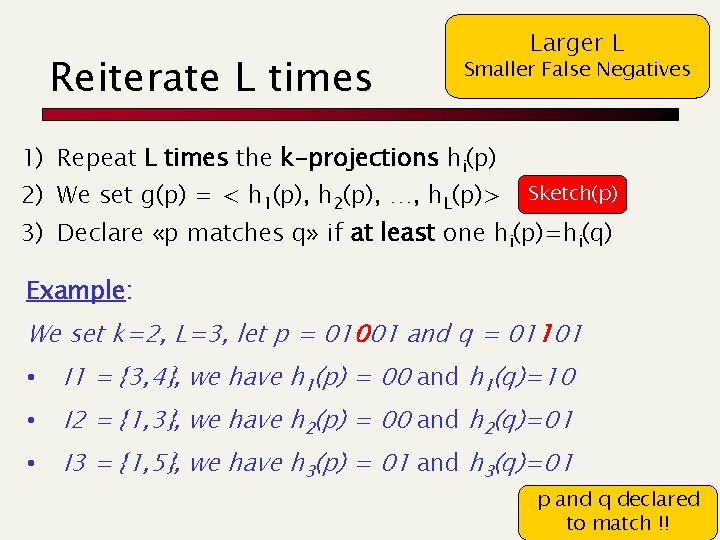

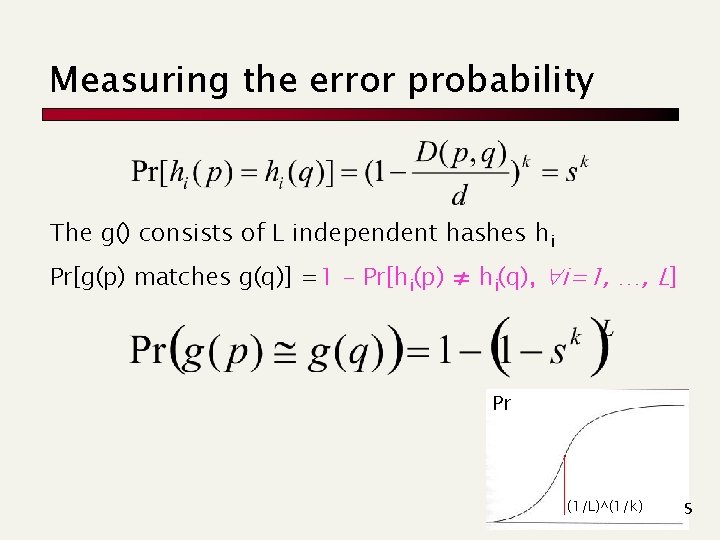

Reiterate L times

Larger $L$ ⇒ smaller false negatives.

1) Repeat $L$ times the $k$-projections $h_i(p)$
2) Set $g(p) = \langle h_1(p), h_2(p), …, h_L(p) \rangle$, the Sketch($p$)
3) Declare «$p$ matches $q$» if at least one $h_i(p) = h_i(q)$

Example: we set $k = 2$, $L = 3$, and let $p = 01001$ and $q = 01101$.

- $I_1 = \{3, 4\}$: we have $h_1(p) = 00$ and $h_1(q) = 10$
- $I_2 = \{1, 3\}$: we have $h_2(p) = 00$ and $h_2(q) = 01$
- $I_3 = \{1, 5\}$: we have $h_3(p) = 01$ and $h_3(q) = 01$

$p$ and $q$ are declared to match!
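The example on this slide can be replayed directly. The sketch below hard-codes the three index sets from the slide instead of drawing them at random; the function names `h`, `g`, and `matches` mirror the slide's notation but are otherwise illustrative.

```python
def h(p, I):
    """Project the bit string p on the (1-based) coordinates in I."""
    return "".join(p[i - 1] for i in I)

def g(p, index_sets):
    """Sketch of p: the tuple <h_1(p), ..., h_L(p)>."""
    return tuple(h(p, I) for I in index_sets)

def matches(p, q, index_sets):
    """p matches q if at least one projection agrees."""
    return any(a == b for a, b in zip(g(p, index_sets), g(q, index_sets)))

index_sets = [[3, 4], [1, 3], [1, 5]]     # I_1, I_2, I_3 from the slide
p, q = "01001", "01101"
print(g(p, index_sets))                   # ('00', '00', '01')
print(g(q, index_sets))                   # ('10', '01', '01')
print(matches(p, q, index_sets))          # True, thanks to h_3
```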

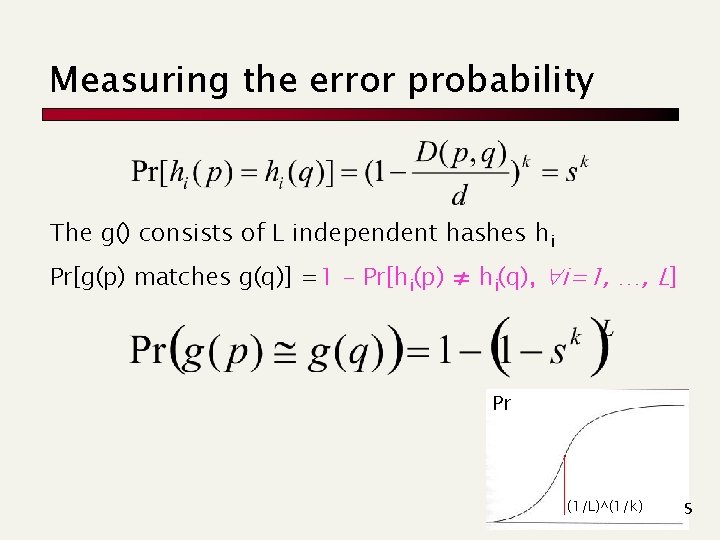

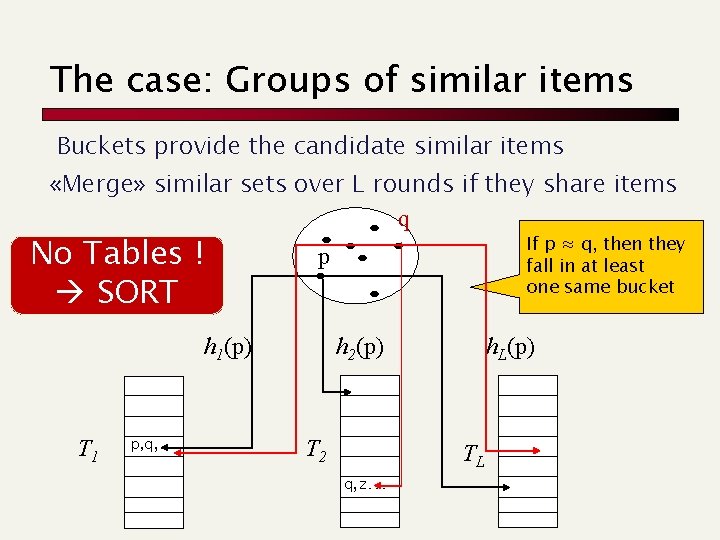

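Measuring the error probability

The $g()$ consists of $L$ independent hashes $h_i$:

$\Pr[g(p) \text{ matches } g(q)] = 1 - \Pr[h_i(p) \ne h_i(q),\ i = 1, …, L] = 1 - (1 - s^k)^L$

[Figure: Pr plotted against $s$, with the value $(1/L)^{1/k}$ marked on the $s$ axis.]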
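To get a feeling for the shape of this probability, one can tabulate it for a few similarity values. The sketch assumes each $h_i$ matches with probability $s^k$ independently, so that the match probability is $1 - (1 - s^k)^L$; the values $k = 5$ and $L = 20$ are arbitrary illustration choices.

```python
def match_probability(s, k, L):
    """Probability that at least one of L independent k-projections agrees."""
    return 1.0 - (1.0 - s ** k) ** L

def threshold(k, L):
    """Similarity value (1/L)^(1/k) around which the curve rises steeply."""
    return (1.0 / L) ** (1.0 / k)

k, L = 5, 20
for s in (0.2, 0.4, 0.6, 0.8, 0.9):
    print(f"s={s:.1f}  Pr[match]={match_probability(s, k, L):.3f}")
print(f"threshold ~ {threshold(k, L):.3f}")
```

Pairs with similarity well below the threshold rarely match, while pairs well above it match almost surely; increasing $k$ moves the threshold up and increasing $L$ moves it down.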

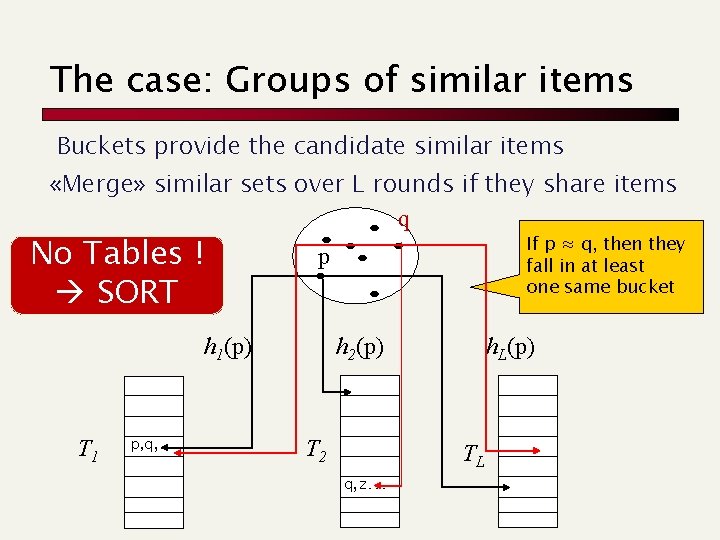

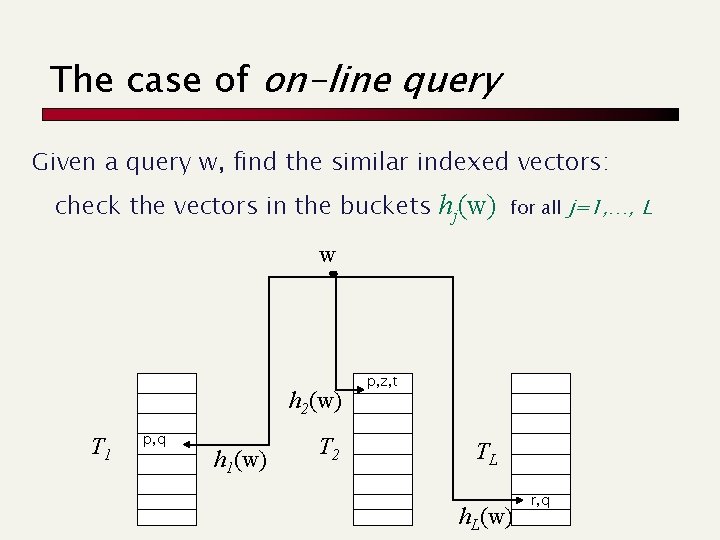

The case: Groups of similar items

- Buckets provide the candidate similar items.
- «Merge» similar sets over $L$ rounds if they share items.
- If $p \approx q$, then they fall in at least one same bucket.
- No tables! SORT.

[Figure: $h_1(p)$ → $T_1$ with bucket $p, q, …$; $h_2(p)$ → $T_2$ with bucket $q, z, …$; $h_L(p)$ → $T_L$.]
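One way to realize this grouping without hash tables is sketched below: in each of the $L$ rounds the items are sorted by their projection, equal keys form a bucket, and buckets that share items are merged with a union-find structure. The union-find, the 0-based coordinates, and all names are assumptions of this sketch; it also merges candidates without re-checking their true similarity, which a real pipeline would likely do.

```python
import random
from itertools import groupby

def find(parent, x):
    """Union-find lookup with path halving."""
    while parent[x] != x:
        parent[x] = parent[parent[x]]
        x = parent[x]
    return x

def lsh_groups(vectors, k, L, seed=0):
    rng = random.Random(seed)
    d = len(vectors[0])
    parent = list(range(len(vectors)))
    for _ in range(L):
        I = rng.sample(range(d), k)              # 0-based coordinates here
        # Sort (fingerprint, item id) pairs: equal fingerprints become adjacent.
        keyed = sorted(("".join(v[i] for i in I), idx) for idx, v in enumerate(vectors))
        for _, bucket in groupby(keyed, key=lambda t: t[0]):
            ids = [idx for _, idx in bucket]
            for other in ids[1:]:                # merge everything in the bucket
                parent[find(parent, other)] = find(parent, ids[0])
    groups = {}
    for idx in range(len(vectors)):
        groups.setdefault(find(parent, idx), []).append(idx)
    return list(groups.values())

vectors = ["0000000000", "0000000000", "1111111111"]
groups = lsh_groups(vectors, k=3, L=4)
print(sorted(sorted(grp) for grp in groups))     # [[0, 1], [2]]
```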

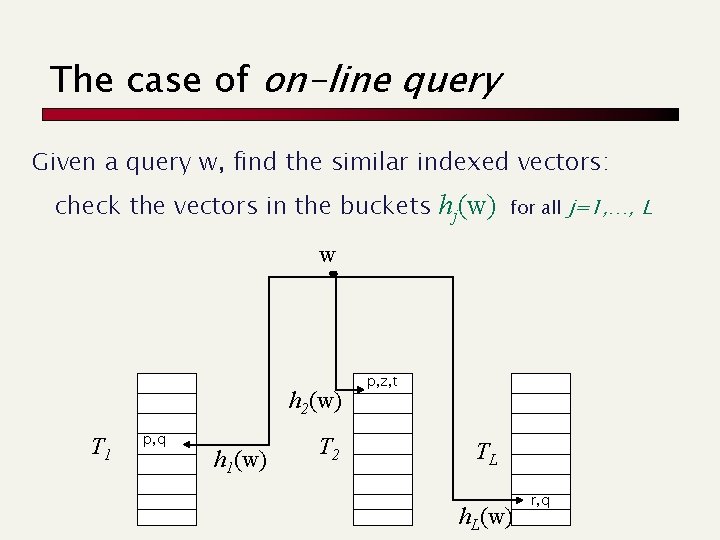

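The case of on-line query

Given a query $w$, find the similar indexed vectors: check the vectors in the buckets $h_j(w)$ for all $j = 1, …, L$.

[Figure: the query $w$ is hashed to $h_1(w), h_2(w), …, h_L(w)$, selecting one bucket in each of the tables $T_1$ ($p, q$), $T_2$ ($p, z, t$), …, $T_L$ ($r, q$).]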
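For the on-line case, the $L$ tables can be sketched with ordinary dictionaries. The class name `LSHIndex`, its methods, and the 0-based coordinates are hypothetical; the returned candidates would normally be checked against the query with the real similarity function.

```python
import random
from collections import defaultdict

class LSHIndex:
    """L hash tables, each keyed by a k-coordinate projection of the vector."""

    def __init__(self, d, k, L, seed=0):
        rng = random.Random(seed)
        self.index_sets = [rng.sample(range(d), k) for _ in range(L)]
        self.tables = [defaultdict(list) for _ in range(L)]

    @staticmethod
    def _key(v, I):
        return "".join(v[i] for i in I)

    def add(self, v):
        for I, table in zip(self.index_sets, self.tables):
            table[self._key(v, I)].append(v)

    def query(self, w):
        """Collect the vectors stored in bucket h_j(w) of table T_j, for every j."""
        candidates = set()
        for I, table in zip(self.index_sets, self.tables):
            candidates.update(table.get(self._key(w, I), []))
        return candidates

index = LSHIndex(d=10, k=3, L=4)
index.add("0000000000")
index.add("1111111111")
print(index.query("0000000000"))   # {'0000000000'}
```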

LSH versus K-means

- What about optimality? K-means is locally optimal [LSH finds correct clusters with high probability].
- What about the Sim-cost? K-means compares vectors of $d$ components [LSH compares very short (sketch) vectors].
- What about the cost per iteration? Typically K-means requires few iterations, each costing $K \times U \times d$ [LSH sorts $U$ short items, with few scans].
- What about $K$? In principle we have to iterate $K = 1, …, U$ [LSH does not need to know the number of clusters].

You could apply K-means over LSH-sketch vectors!

More applications

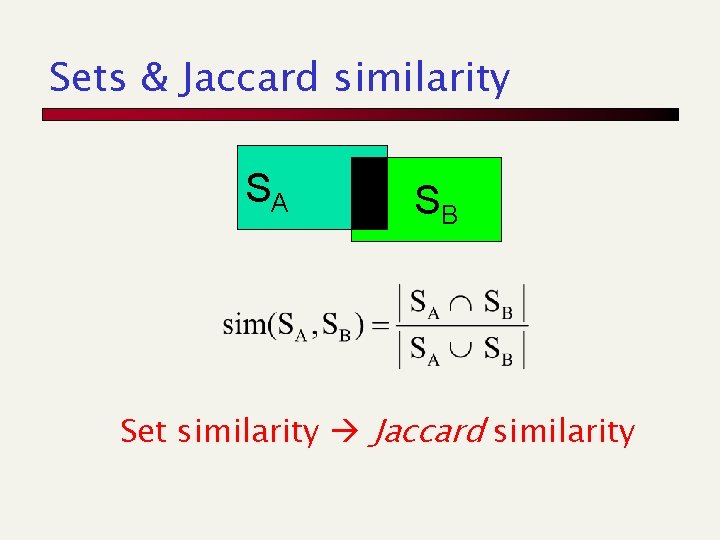

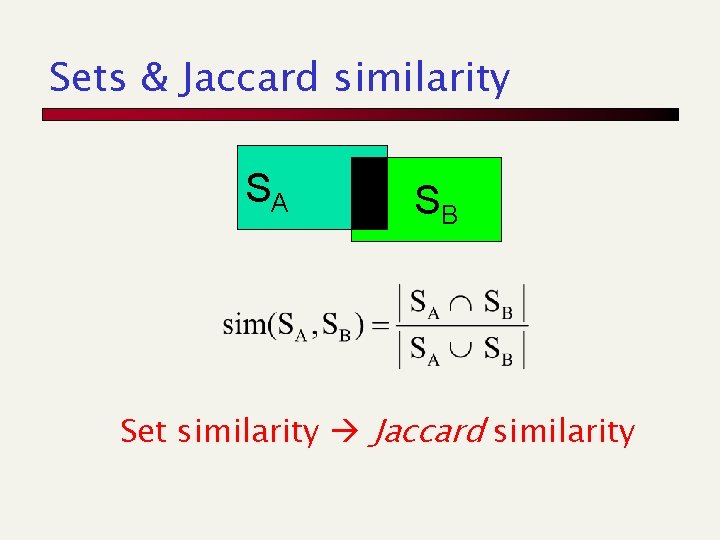

Sets & Jaccard similarity

Set similarity between $S_A$ and $S_B$ ⇒ Jaccard similarity: $\text{Jaccard-sim}(S_A, S_B) = |S_A \cap S_B| / |S_A \cup S_B|$
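As a reference point, the Jaccard similarity (size of the intersection divided by size of the union) can be computed exactly on small sets. Returning 1.0 for two empty sets is a convention chosen for this sketch.

```python
def jaccard(sa, sb):
    """Exact Jaccard similarity |A ∩ B| / |A ∪ B| of two Python sets."""
    union = sa | sb
    if not union:          # convention assumed here: two empty sets are identical
        return 1.0
    return len(sa & sb) / len(union)

print(jaccard({1, 2, 3}, {2, 3, 4}))   # 0.5
```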

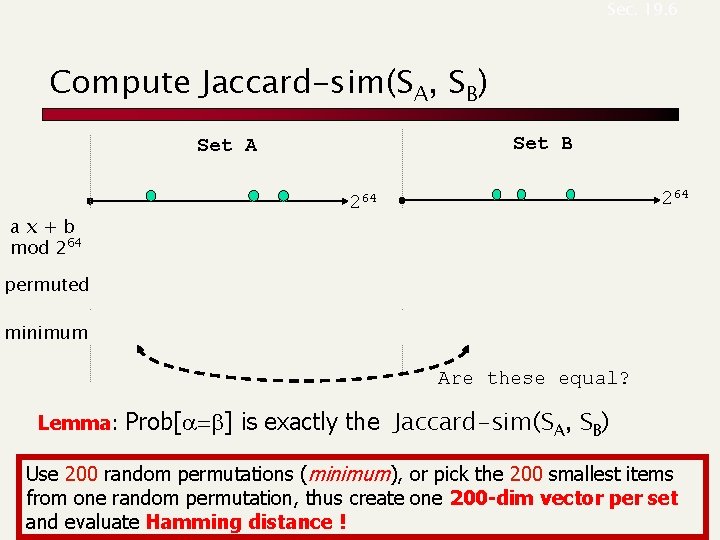

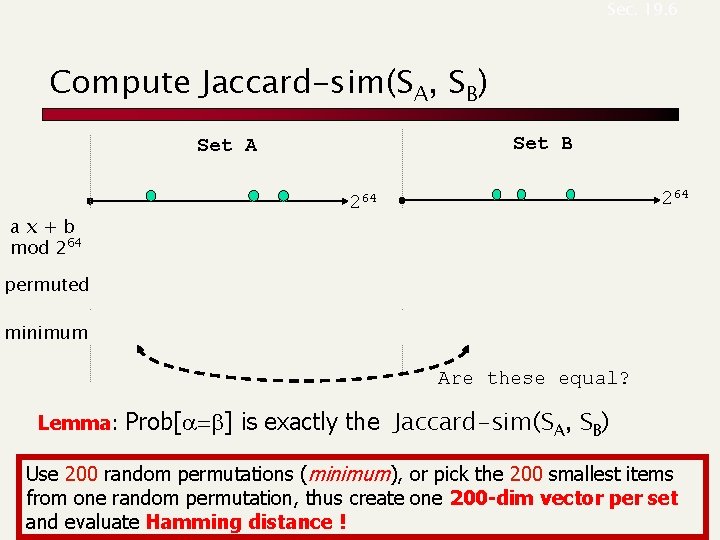

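Compute Jaccard-sim($S_A$, $S_B$) (Sec. 19.6)

- Permute the items of Set A and Set B with $ax + b \bmod 2^{64}$, which maps them into $[0, 2^{64})$.
- Take the minimum of each permuted set: $\alpha$ for $S_A$ and $\beta$ for $S_B$. Are these equal?

Lemma: $\text{Prob}[\alpha = \beta]$ is exactly Jaccard-sim($S_A$, $S_B$).

Use 200 random permutations (taking the minimum of each), or pick the 200 smallest items from one random permutation; this creates one 200-dim vector per set, on which to evaluate the Hamming distance!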
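A min-hash sketch along these lines might look as follows. It assumes the items are integers in $[0, 2^{64})$ and uses maps $x \mapsto ax + b \bmod 2^{64}$ with odd $a$, so that each map is a bijection; such linear maps only approximate truly random permutations, so the estimate is itself approximate. All function names are illustrative.

```python
import random

MOD = 2**64

def make_permutations(n, seed=0):
    """n pairs (a, b); a is odd so that x -> a*x + b mod 2^64 is a bijection."""
    rng = random.Random(seed)
    return [(rng.randrange(1, MOD, 2), rng.randrange(MOD)) for _ in range(n)]

def minhash_sketch(items, perms):
    """One minimum per permutation: an n-dimensional vector for a non-empty set."""
    return [min((a * x + b) % MOD for x in items) for a, b in perms]

def estimate_jaccard(sketch_a, sketch_b):
    """Fraction of positions where the two minima coincide."""
    equal = sum(x == y for x, y in zip(sketch_a, sketch_b))
    return equal / len(sketch_a)

perms = make_permutations(200)
A = set(range(0, 100))
B = set(range(50, 150))
estimate = estimate_jaccard(minhash_sketch(A, perms), minhash_sketch(B, perms))
print(estimate)   # an estimate of the true value 50/150
```

The number of positions where two sketches differ is their Hamming distance, so `1 - estimate` is the normalized Hamming distance mentioned on the slide.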

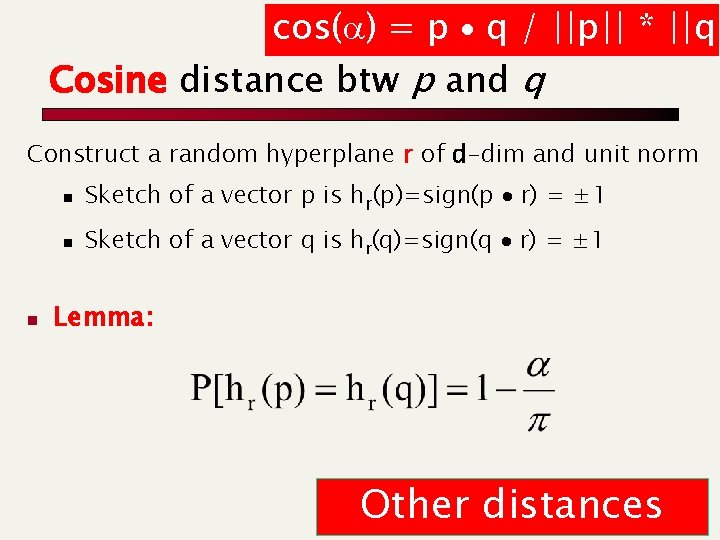

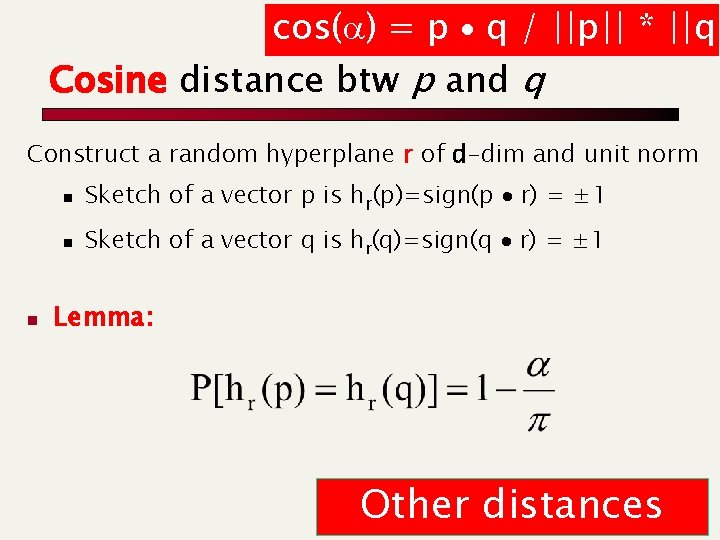

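Other distances

Cosine distance between $p$ and $q$: $\cos(\alpha) = p \cdot q / (\|p\| \cdot \|q\|)$

- Construct a random hyperplane $r$ of $d$-dim and unit norm.
- Sketch of a vector $p$ is $h_r(p) = \text{sign}(p \cdot r) = \pm 1$.
- Sketch of a vector $q$ is $h_r(q) = \text{sign}(q \cdot r) = \pm 1$.

Lemma: $\Pr[h_r(p) = h_r(q)] = 1 - \alpha / \pi$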
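The random-hyperplane sketch can be tried out in plain Python. The code relies on the standard fact that two vectors at angle $\alpha$ get the same sign with probability $1 - \alpha/\pi$, so the fraction of disagreeing signs, times $\pi$, estimates the angle. Drawing $r$ from Gaussian coordinates and normalizing it, as well as all names and the number of hyperplanes, are assumptions of this sketch.

```python
import math
import random

def random_hyperplane(d, rng):
    """Random unit-norm vector of d Gaussian coordinates."""
    r = [rng.gauss(0.0, 1.0) for _ in range(d)]
    norm = math.sqrt(sum(x * x for x in r))
    return [x / norm for x in r]

def h(p, r):
    """Sketch bit: the sign of the dot product p . r, as +1 or -1."""
    return 1 if sum(a * b for a, b in zip(p, r)) >= 0 else -1

def estimate_angle(p, q, n_planes=1000, seed=0):
    """Estimate the angle between p and q from n_planes one-bit sketches."""
    rng = random.Random(seed)
    planes = [random_hyperplane(len(p), rng) for _ in range(n_planes)]
    disagree = sum(h(p, r) != h(q, r) for r in planes)
    return math.pi * disagree / n_planes

p = [1.0, 2.0, 3.0]
q = [3.0, 2.0, 1.0]
true_angle = math.acos(sum(a * b for a, b in zip(p, q)) /
                       (math.hypot(*p) * math.hypot(*q)))
print(true_angle, estimate_angle(p, q))
```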

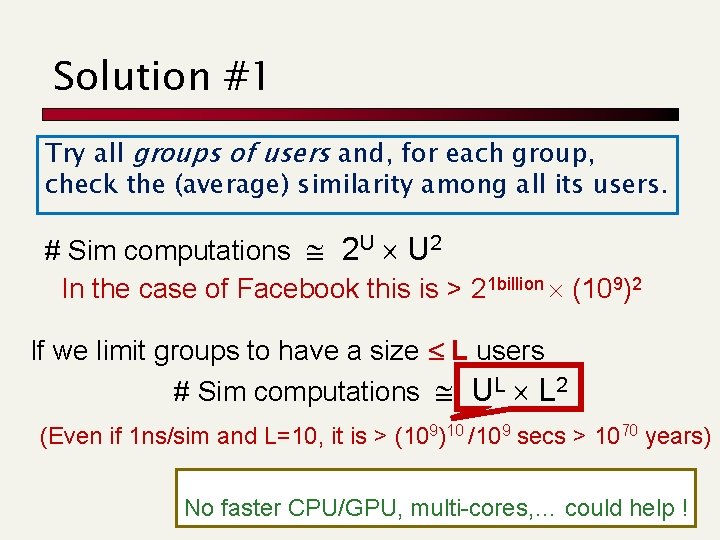

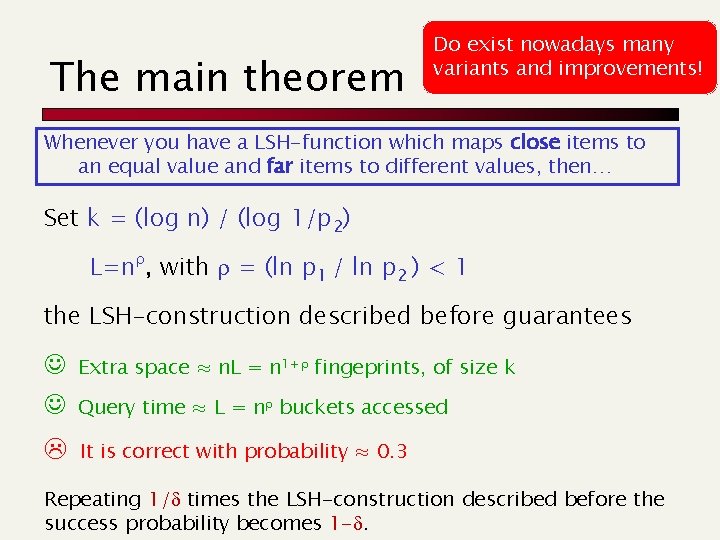

The main theorem

Many variants and improvements exist nowadays!

Whenever you have an LSH-function which maps close items to an equal value (with probability $p_1$) and far items to different values (colliding only with probability $p_2 < p_1$), then…

Set $k = (\log n) / (\log 1/p_2)$ and $L = n^{\rho}$, with $\rho = (\ln p_1 / \ln p_2) < 1$. The LSH-construction described before guarantees:

- Extra space ≈ $n \cdot L = n^{1+\rho}$ fingerprints, each of size $k$
- Query time ≈ $L = n^{\rho}$ buckets accessed

It is correct with probability ≈ 0.3. Repeating the LSH-construction described before $1/\delta$ times, the success probability becomes $1 - \delta$.
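Plugging numbers into these formulas gives a sense of scale. The sketch below rounds $k$ and $L$ up to integers, which is an assumption, as are the function name and the example values $n = 10^6$, $p_1 = 0.9$, $p_2 = 0.5$.

```python
import math

def lsh_parameters(n, p1, p2):
    """Return (k, rho, L) for n items and collision probabilities p1 > p2."""
    k = math.ceil(math.log(n) / math.log(1 / p2))
    rho = math.log(p1) / math.log(p2)      # < 1 whenever p1 > p2
    L = math.ceil(n ** rho)
    return k, rho, L

n = 10**6
k, rho, L = lsh_parameters(n, p1=0.9, p2=0.5)
print(f"k = {k}, rho = {rho:.3f}, L = {L}")
print(f"extra space ~ {n * L} fingerprints, query time ~ {L} buckets")
```

With these values, one million items need about 20 coordinates per fingerprint and fewer than ten tables, so a query touches a handful of buckets instead of scanning all items.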

Paolo ferragina

Paolo ferragina Paolo ferragina

Paolo ferragina Pfordelta

Pfordelta Paolo ferragina

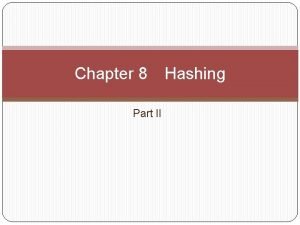

Paolo ferragina What is open hashing and closed hashing

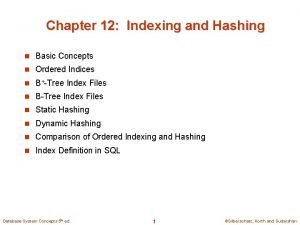

What is open hashing and closed hashing Static hashing and dynamic hashing

Static hashing and dynamic hashing Static and dynamic hashing in dbms

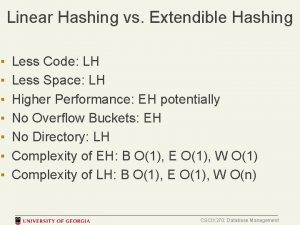

Static and dynamic hashing in dbms Linear hashing

Linear hashing Cro applications

Cro applications The fourier transform and its applications

The fourier transform and its applications Spectral graph theory and its applications

Spectral graph theory and its applications A brief introduction to spectral graph theory

A brief introduction to spectral graph theory Linear algebra and its applications

Linear algebra and its applications The resistance r experienced by a partially submerged body

The resistance r experienced by a partially submerged body Fast random walk with restart and its applications

Fast random walk with restart and its applications Screening constant of chlorine

Screening constant of chlorine Cstnet

Cstnet Client server basics

Client server basics Hittorf method for determination of transport number

Hittorf method for determination of transport number Zener diodes applications

Zener diodes applications