LHC, Networking and Grids

- Slides: 17

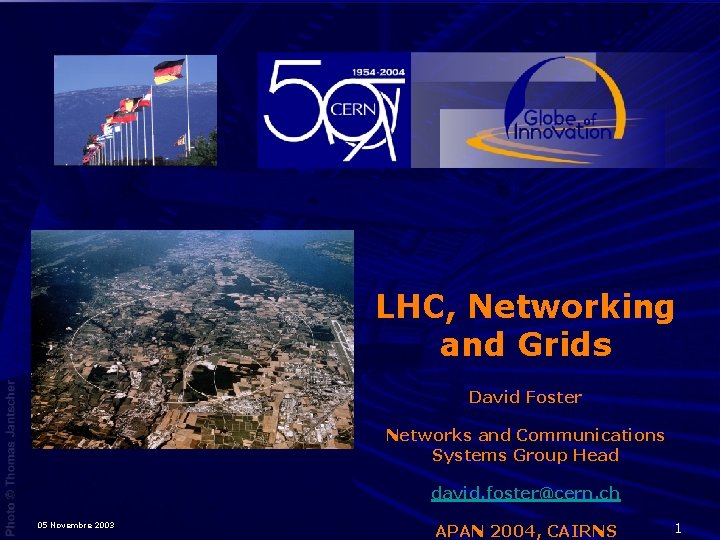

LHC, Networking and Grids

David Foster, Networks and Communications Systems Group Head (david.foster@cern.ch)

5 November 2003. APAN 2004, Cairns.

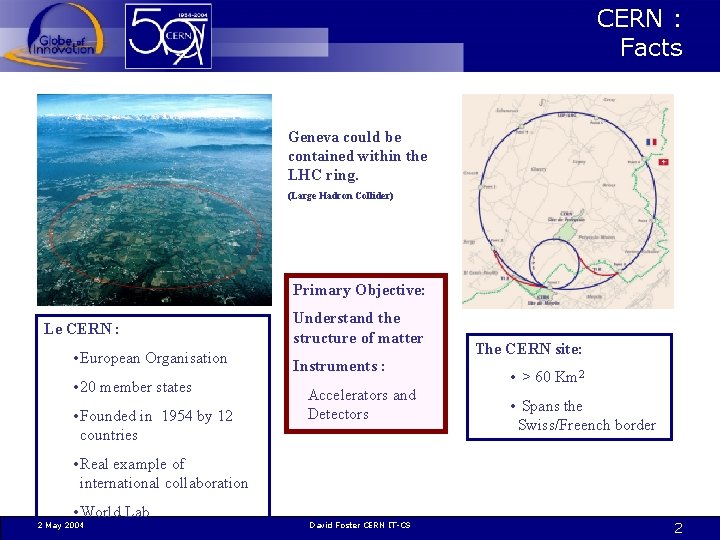

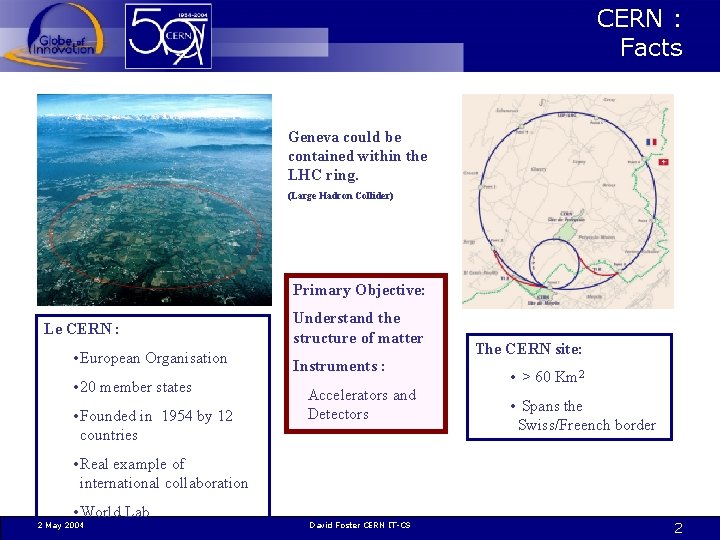

CERN: Facts

- European Organisation with 20 member states, founded in 1954 by 12 countries
- Primary objective: understand the structure of matter
- Instruments: accelerators and detectors
- The CERN site: > 60 km², spanning the Swiss/French border
- A real example of international collaboration; a world lab
- Geneva could be contained within the LHC (Large Hadron Collider) ring

2 May 2004, David Foster, CERN IT-CS

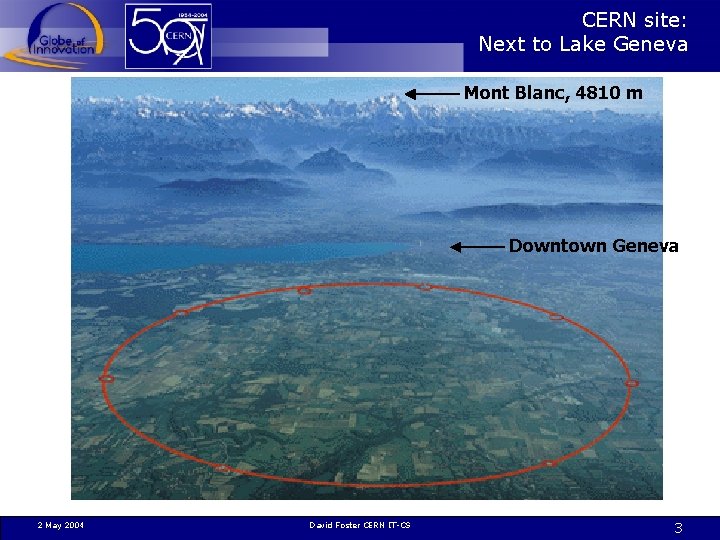

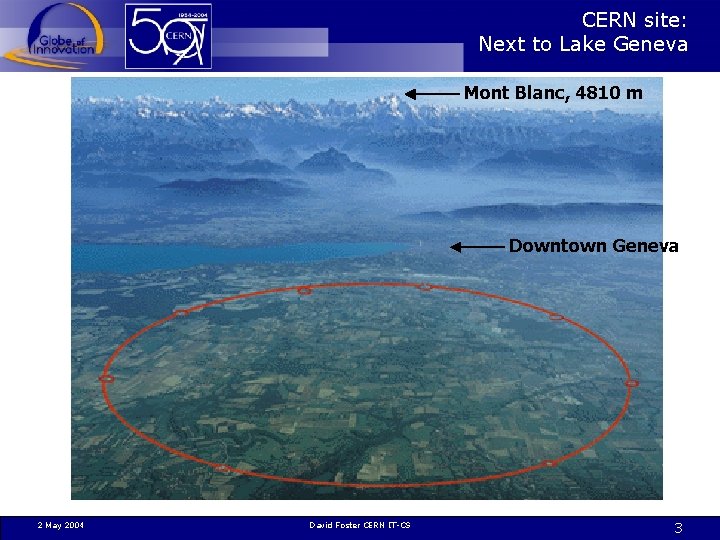

CERN site: next to Lake Geneva. Labelled in the photo: Mont Blanc (4810 m) and downtown Geneva.

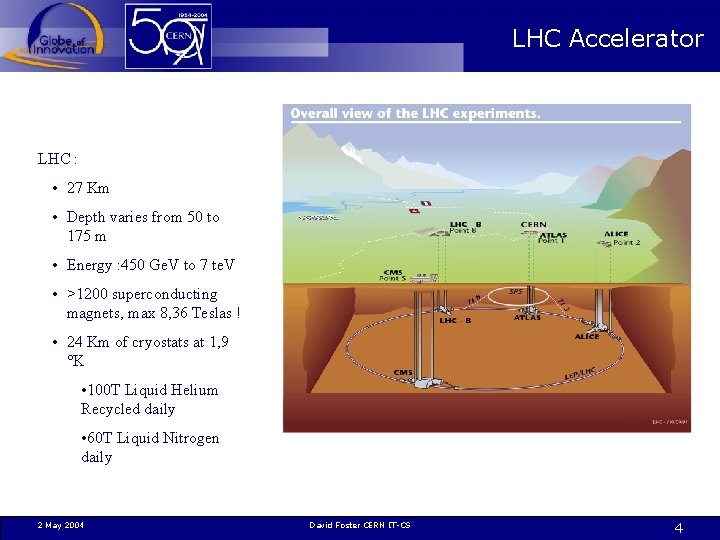

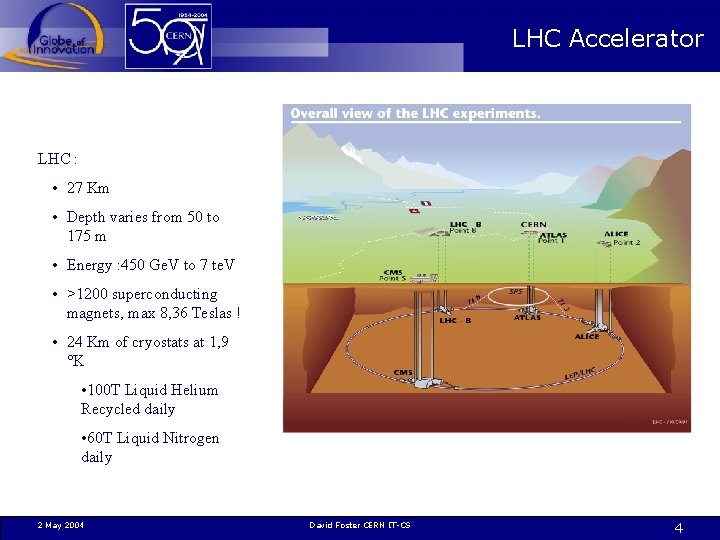

LHC Accelerator

- Circumference: 27 km
- Depth varies from 50 to 175 m
- Energy: 450 GeV to 7 TeV
- More than 1200 superconducting magnets, maximum field 8.36 T
- 24 km of cryostats at 1.9 K
- 100 t of liquid helium, recycled daily
- 60 t of liquid nitrogen daily

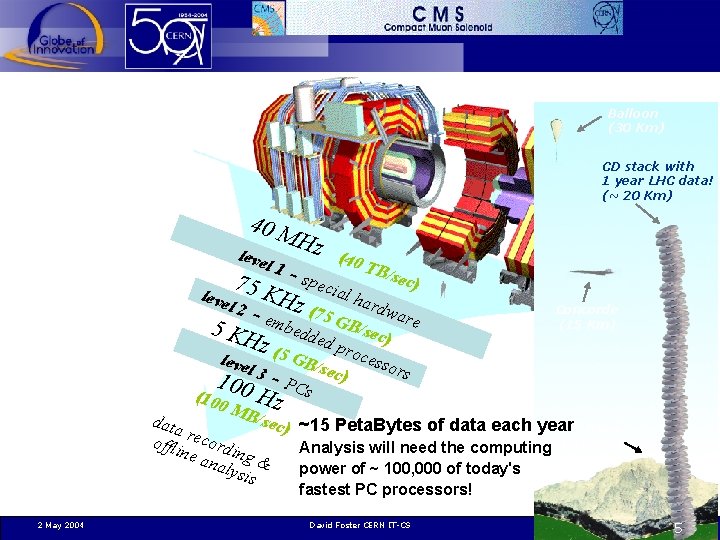

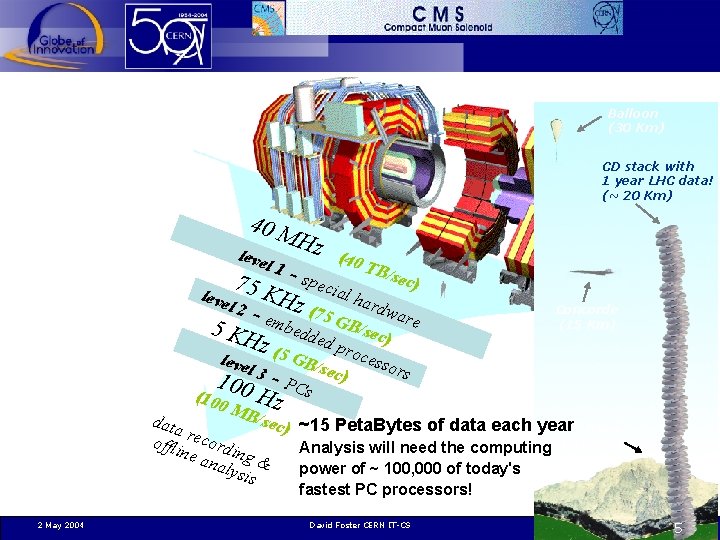

Data flow through the trigger chain (figure): 40 MHz (40 TB/sec) → level 1, special hardware → 75 kHz (75 GB/sec) → level 2, embedded processors → 5 kHz (5 GB/sec) → level 3, PCs → 100 Hz (100 MB/sec) → data recording and offline analysis.

~15 petabytes of data each year. Analysis will need the computing power of ~100,000 of today's fastest PC processors!

Height comparison (figure): balloon (30 km); CD stack with 1 year of LHC data (~20 km); Concorde (15 km); Mont Blanc (4.8 km).
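The rates quoted for the trigger chain can be cross-checked with a little arithmetic. The sketch below divides each data rate by its event rate to get the implied event size, and computes the data reduction between successive points in the chain. It assumes decimal units (1 TB = 10^12 bytes); the stage labels and function names are illustrative, not from the slides.

```python
# Event rate (Hz) and data rate (bytes/s) at each point in the trigger chain,
# as quoted on the slide. Decimal prefixes (1 TB = 1e12 bytes) are an assumption.
STAGES = [
    ("input to level 1", 40e6, 40e12),
    ("input to level 2", 75e3, 75e9),
    ("input to level 3", 5e3, 5e9),
    ("to data recording", 100.0, 100e6),
]


def event_sizes(stages):
    """Implied bytes per event at each point: data rate / event rate."""
    return [(name, data_rate / event_rate) for name, event_rate, data_rate in stages]


def reduction_factors(stages):
    """Ratio of data rates between each pair of consecutive points."""
    return [prev[2] / cur[2] for prev, cur in zip(stages, stages[1:])]


if __name__ == "__main__":
    for name, size in event_sizes(STAGES):
        print(f"{name}: {size / 1e6:.1f} MB per event")
    for factor in reduction_factors(STAGES):
        print(f"reduction: x{factor:.1f}")
    print(f"overall reduction: x{STAGES[0][2] / STAGES[-1][2]:.0f}")
```

Under these assumptions every stage implies the same event size, so the reduction comes entirely from discarding events rather than shrinking them.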

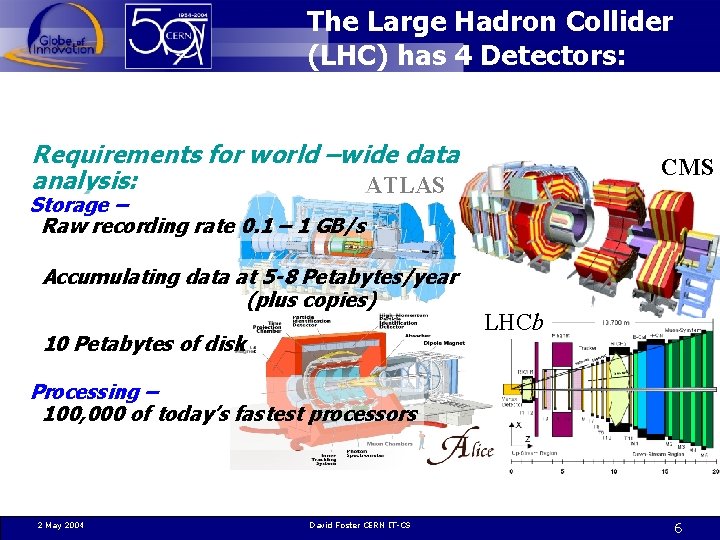

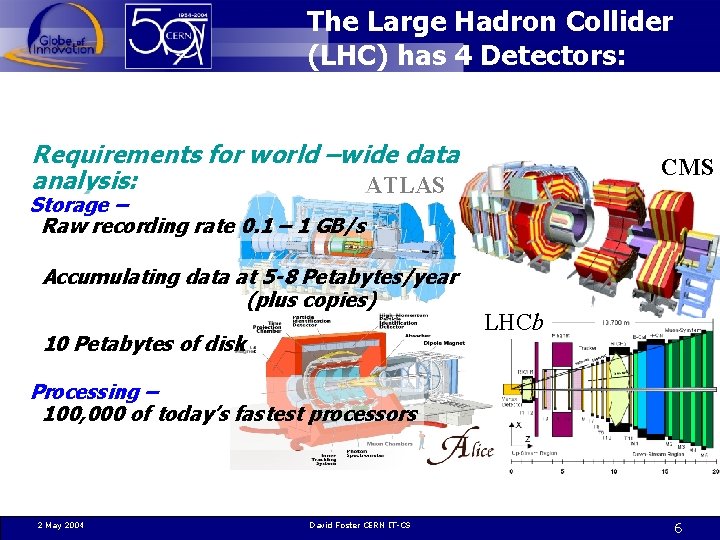

The Large Hadron Collider (LHC) has 4 detectors (ATLAS, CMS and LHCb are labelled in the figure).

Requirements for world-wide data analysis:

- Storage: raw recording rate 0.1 – 1 GB/s; accumulating data at 5-8 petabytes/year (plus copies); 10 petabytes of disk
- Processing: 100,000 of today's fastest processors

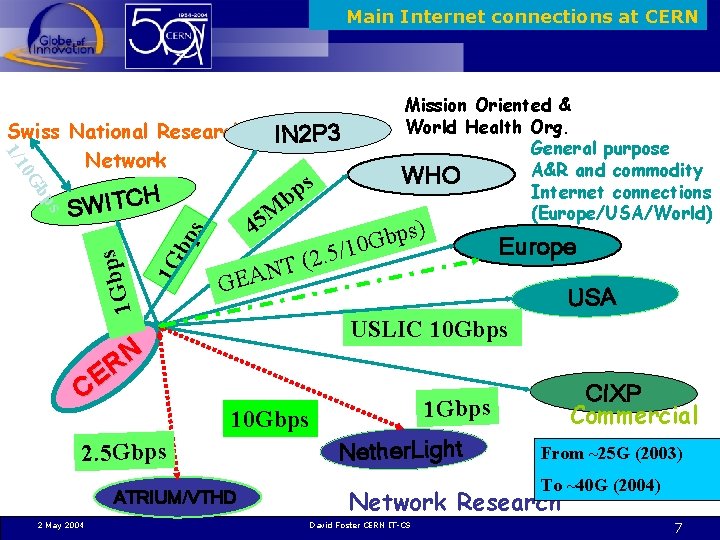

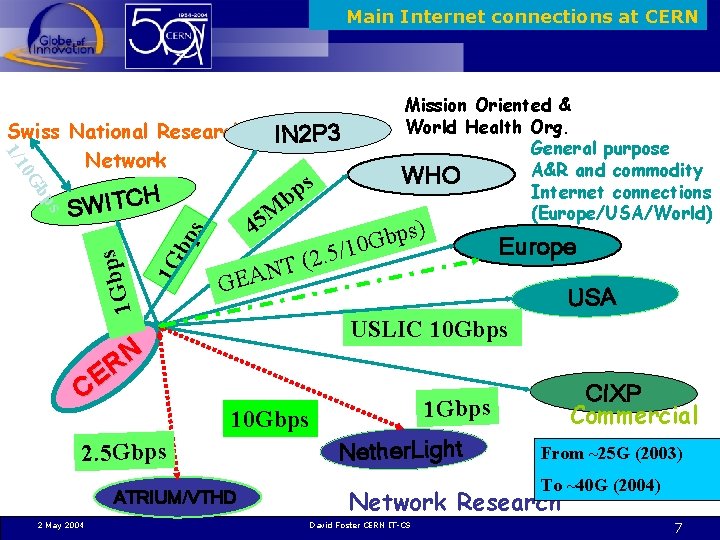

Main Internet connections at CERN (network diagram)

- Connection categories: mission oriented; general purpose A&R and commodity Internet connections (Europe/USA/World); network research
- Labelled networks: IN2P3 (1 Gbps), SWITCH (Swiss National Research Network), GEANT (Europe, 2.5/10 Gbps), USLIC (USA, 10 Gbps), ATRIUM/VTHD, CIXP (commercial), World Health Org. (WHO), NetherLight
- Other link speeds shown: 1 Gbps, 2.5 Gbps, 10 Gbps
- Total capacity: from ~25 G (2003) to ~40 G (2004)

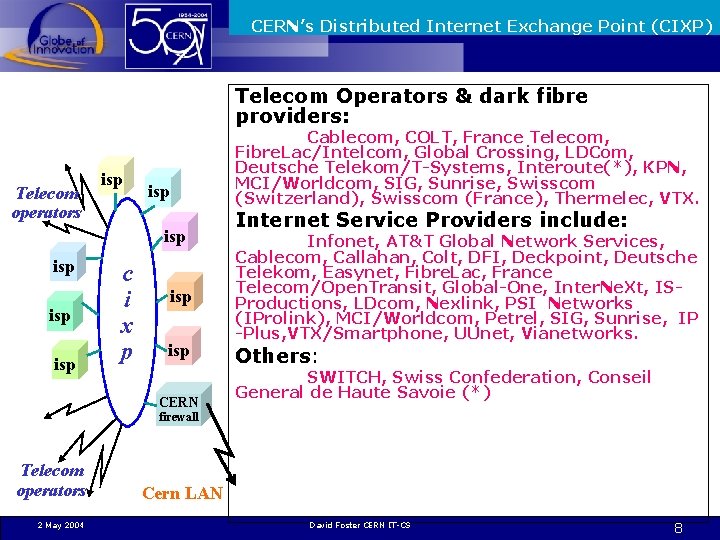

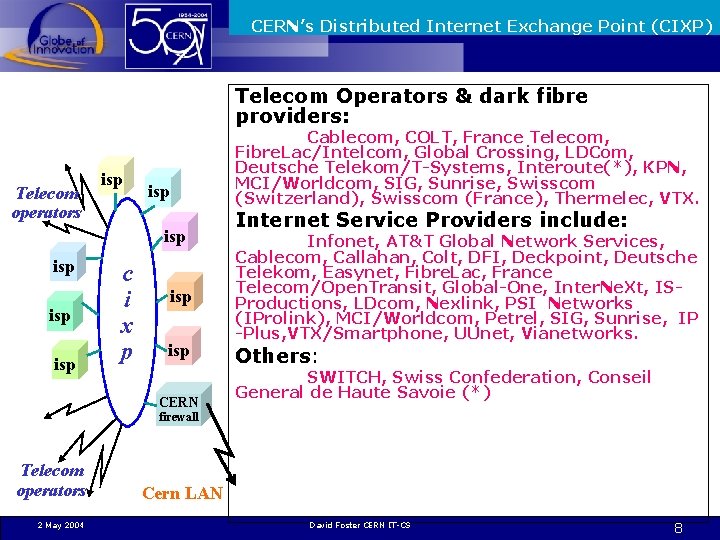

CERN's Distributed Internet Exchange Point (CIXP)

Telecom operators and dark fibre providers: Cablecom, COLT, France Telecom, FibreLac/Intelcom, Global Crossing, LDCom, Deutsche Telekom/T-Systems, Interoute (*), KPN, MCI/Worldcom, SIG, Sunrise, Swisscom (Switzerland), Swisscom (France), Thermelec, VTX.

Internet Service Providers include: Infonet, AT&T Global Network Services, Cablecom, Callahan, Colt, DFI, Deckpoint, Deutsche Telekom, Easynet, FibreLac, France Telecom/OpenTransit, Global-One, InterNeXt, ISProductions, LDcom, Nexlink, PSI Networks (IProlink), MCI/Worldcom, Petrel, SIG, Sunrise, IP-Plus, VTX/Smartphone, UUnet, Vianetworks.

Others: SWITCH, Swiss Confederation, Conseil General de Haute Savoie (*)

Diagram labels: telecom operators, ISPs, CIXP, CERN firewall, CERN LAN.

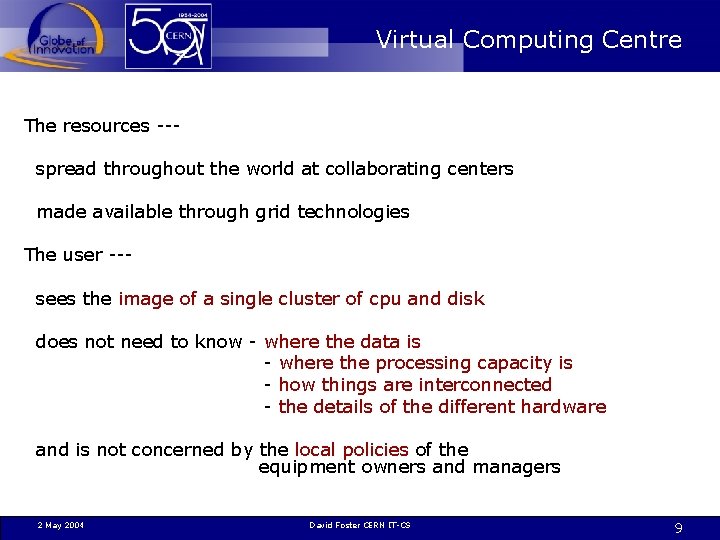

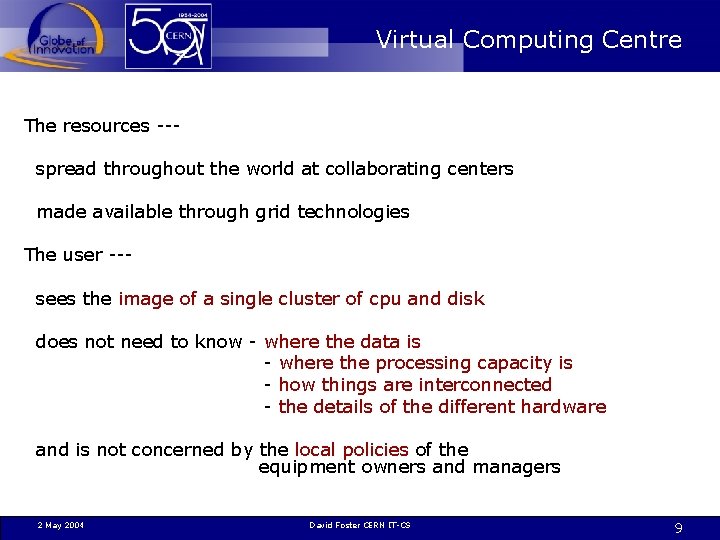

Virtual Computing Centre

- The resources: spread throughout the world at collaborating centers, made available through grid technologies
- The user sees the image of a single cluster of CPU and disk, and does not need to know:
  - where the data is
  - where the processing capacity is
  - how things are interconnected
  - the details of the different hardware
- The user is not concerned by the local policies of the equipment owners and managers

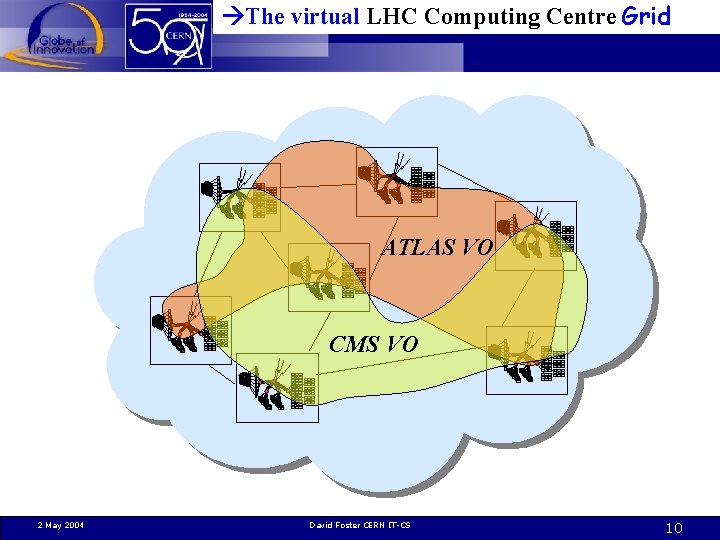

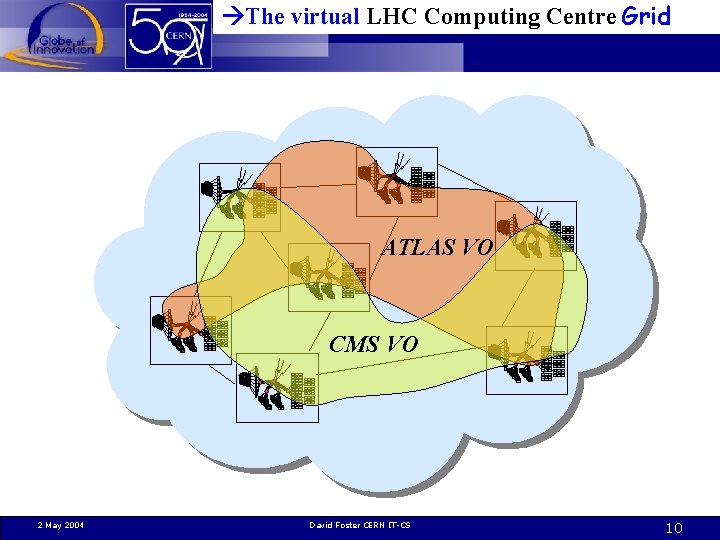

The virtual LHC Computing Centre (figure). Labels: Grid, Collaborating Computer Centres, ATLAS VO, CMS VO.

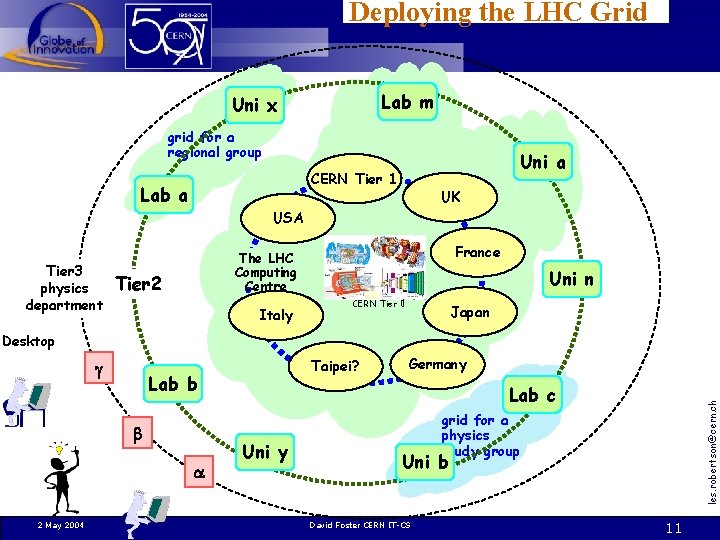

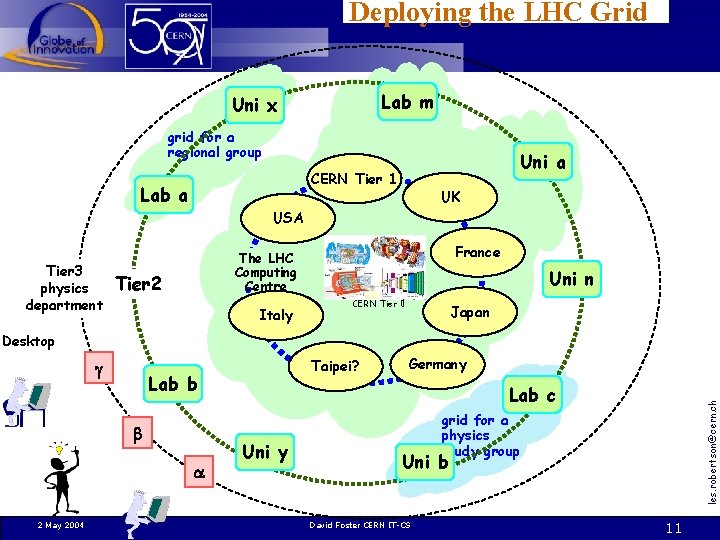

Deploying the LHC Grid (figure, credited to les.robertson@cern.ch)

- The LHC Computing Centre: CERN Tier 0 and CERN Tier 1
- Tier 1: UK, USA, France, Italy, Japan, Germany, Taipei?
- Tier 2: labs and universities (Lab a, Lab b, Lab c, Lab m, Uni a, Uni b, Uni n, Uni x, Uni y)
- Tier 3: physics department
- Desktop
- Overlaid groupings: grid for a regional group; grid for a physics study group

The Goal of the LHC Computing Grid Project (LCG)

To help the experiments' computing projects prepare, build and operate the computing environment needed to manage and analyse the data coming from the detectors.

- Phase 1 (2002-05): prepare and deploy a prototype of the environment for LHC computing
- Phase 2 (2006-08): acquire, build and operate the LHC computing service

Slide credit: matthias.kasemann@fnal.gov

Modes of Use

- Connectivity requirements are subdivided by usage pattern:
  - "Buffered real-time" for the T0 to T1 raw data transfer.
  - "Peer Services" between T1-T1 and T1-T2 for the background distribution of data products.
  - "Chaotic": submission of analysis jobs to T1 and T2 centers, and "on-demand" data transfer.

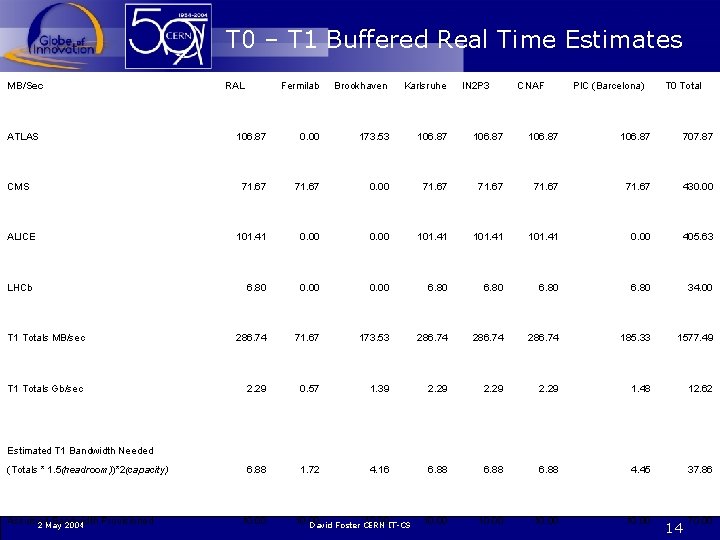

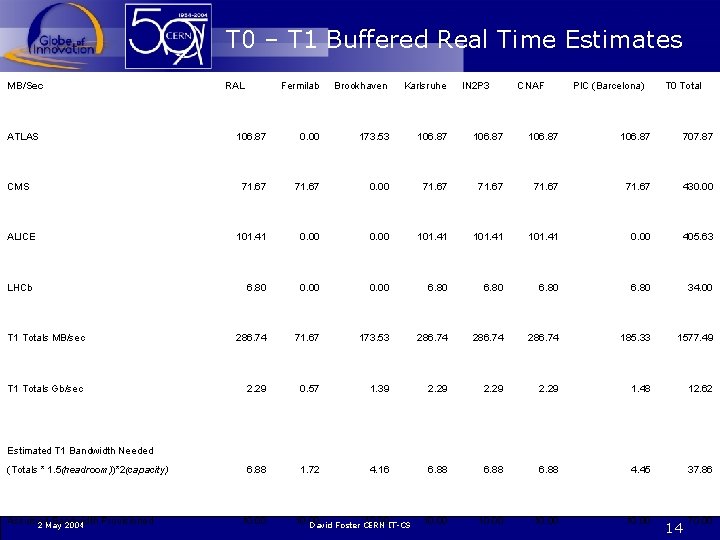

T0 – T1 Buffered Real Time Estimates

T1 sites: Fermilab, Brookhaven, Karlsruhe, RAL, IN2P3, CNAF, PIC (Barcelona). The last value in each row is the T0 total.

- ATLAS (MB/sec): 106.87, 0.00, 173.53, 106.87; T0 total 707.87
- CMS (MB/sec): 71.67, 0.00, 71.67; T0 total 430.00
- ALICE (MB/sec): 101.41, 101.41, 0.00; T0 total 405.63
- LHCb (MB/sec): 6.80, 0.00, 6.80; T0 total 34.00
- T1 Totals (MB/sec): 286.74, 71.67, 173.53, 286.74, 185.33; T0 total 1577.49
- T1 Totals (Gb/sec): 2.29, 0.57, 1.39, 2.29, 1.48; T0 total 12.62
- Estimated T1 Bandwidth Needed, (Totals × 1.5 (headroom)) × 2 (capacity), in Gb/sec: 6.88, 1.72, 4.16, 6.88, 4.45; T0 total 37.86
- Assumed Bandwidth Provisioned (Gb/sec): 10.00, 10.00; T0 total 70.00
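The bandwidth rows of this table follow from the MB/sec totals by a unit conversion and the stated formula, (Totals × 1.5 headroom) × 2 capacity. The sketch below reproduces that arithmetic. It assumes 8 bits per byte and 1 Gb = 1000 Mb, which matches the slide's figures; the function and constant names are illustrative.

```python
BITS_PER_BYTE = 8
HEADROOM = 1.5   # headroom factor from the slide's formula
CAPACITY = 2.0   # capacity factor from the slide's formula

# T1 totals in MB/sec as listed on the slide, plus the T0 total.
T1_TOTALS_MB_PER_S = [286.74, 71.67, 173.53, 286.74, 185.33]
T0_TOTAL_MB_PER_S = 1577.49


def mb_per_s_to_gb_per_s(mb_per_s):
    """Convert megabytes/sec to gigabits/sec (decimal prefixes assumed)."""
    return mb_per_s * BITS_PER_BYTE / 1000.0


def bandwidth_needed_gb_per_s(total_mb_per_s):
    """Apply the slide's formula: (total * headroom) * capacity, in Gb/sec."""
    return mb_per_s_to_gb_per_s(total_mb_per_s) * HEADROOM * CAPACITY


if __name__ == "__main__":
    for total in T1_TOTALS_MB_PER_S + [T0_TOTAL_MB_PER_S]:
        raw = mb_per_s_to_gb_per_s(total)
        needed = bandwidth_needed_gb_per_s(total)
        print(f"{total:8.2f} MB/s = {raw:5.2f} Gb/s, needed {needed:5.2f} Gb/s")
```

On these numbers the largest per-site estimate (6.88 Gb/sec) fits within the assumed 10 Gb/sec, and the T0 estimate (37.86 Gb/sec) fits within the assumed 70 Gb/sec.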

Peer Services

- Will be largely bulk data transfers.
  - Scheduled data "redistribution"
- Need a very good, reliable, efficient file transfer service.
  - Much work going on with GridFTP
  - Maybe a candidate for non-IP service (Fibre Channel over SONET)
- Could be provided by a switched infrastructure.
  - Circuit-based optical switching, on demand or static.
  - "Well known" and "trusted" peer end points (hardware and software), and an opportunity to bypass firewall issues.

Some Challenges

- Real bandwidth estimates, given the chaotic nature of the requirements.
- End-to-end performance, given the whole chain involved (disk-bus-memory-bus-network-bus-memory-bus-disk).
- Provisioning over complex network infrastructures (GEANT, NRENs etc.).
- Cost model for options (packet + SLAs, circuit switched etc.).
- Consistent performance (dealing with firewalls).
- Merging leading-edge research with production networking.
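The end-to-end point can be illustrated with a small sketch: sustained throughput over a chain of stages is bounded by its slowest stage, so a fast network link does not help if a disk or bus further along cannot keep up. The per-stage limits below are hypothetical round numbers chosen for illustration; none come from the slides.

```python
# Stages of the disk-to-disk chain named on the slide, each paired with a
# HYPOTHETICAL throughput limit in MB/s (illustrative values only).
CHAIN = [
    ("source disk", 200.0),
    ("source bus", 1000.0),
    ("source memory", 3000.0),
    ("source bus to NIC", 1000.0),
    ("network", 1250.0),
    ("destination bus from NIC", 1000.0),
    ("destination memory", 3000.0),
    ("destination bus", 1000.0),
    ("destination disk", 150.0),
]


def bottleneck(chain):
    """Return the (name, limit) of the slowest stage in the chain."""
    return min(chain, key=lambda stage: stage[1])


if __name__ == "__main__":
    name, limit = bottleneck(CHAIN)
    print(f"end-to-end throughput is bounded by {name} at {limit} MB/s")
```

With these made-up limits the destination disk, not the network, sets the ceiling, which is why the whole chain has to be measured rather than the link alone.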

Thank You!

Traditional network vs sdn

Traditional network vs sdn Layered architecture for web services and grids

Layered architecture for web services and grids Upside down grid error

Upside down grid error Glow discharge system

Glow discharge system Decimal using grids

Decimal using grids Grids 49152

Grids 49152 Demand response in smart grids

Demand response in smart grids Acted salary grid

Acted salary grid Bootstrap 4 osztály

Bootstrap 4 osztály Differentiation grids

Differentiation grids Differentiate between virtual circuit and datagram network

Differentiate between virtual circuit and datagram network Basestore iptv

Basestore iptv Tevatron vs lhc

Tevatron vs lhc Www.lhc.la.gov

Www.lhc.la.gov Hl-lhc schedule

Hl-lhc schedule Forum lhc

Forum lhc Lhc logbook

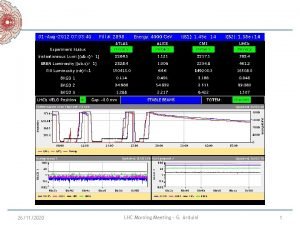

Lhc logbook Lhc morning meeting

Lhc morning meeting