KUMC Quality Assurance Program for Human Research

- Slides: 26

Karen Blackwell, MS, CIP
Director, Human Research Protection Program

Overview
- Rationale for proposing a QA program
- Activities of the QA Task Force
- Task Force recommendations
- Next steps

Quality Assurance…
- Support and education for investigators
- Routine on-site reviews of study records
- Preparation for external audits
- For-cause audits, when required
- Feedback to the overall HRPP

Rationale for a QA Program
- Reflect our commitment to excellence
- Coordinate efforts within KUMC
- Prevent compliance violations
- Meet contractual and fiduciary duties
- Address known challenges

EVC’s Charge to the Task Force
- Examine model programs
- Identify key individuals and groups
- Optimize existing resources
- Develop standard operating procedures
- Establish reporting paths
- Develop a communication plan
- Report back by September 1st

QA Task Force Members, April–August 2009
- Ed Phillips
- Jeff Reene
- Paul Terranova
- Marge Bott
- Gary Doolittle
- Patty Kluding
- Greg Kopf
- Karen Blackwell
- Jo Denton
- Diana Naser
- Becky Hubbell
- Monica Lubeck

Model Programs
- University of Pittsburgh
- Partners HealthCare System
- University of Michigan
- Emory University
- Indiana University
- University of California – San Francisco
- Baylor College of Medicine
- Children’s Hospital of Boston

Task Force Recommendations
- Overall philosophy for our program
- Key components of a QA program
- Resources, milestones, timelines
- Leadership and oversight

Key Components
- Support from institutional leadership
- Clear delineation of roles
- Transparent criteria for study selection
- Standard operating procedures
- Lines of authority to report audit findings
- Methods to translate findings into education and support for investigators

Philosophy of the QA Program
- Partnership
- Focus on education and assistance
- Collegial approach
- Soliciting investigator feedback

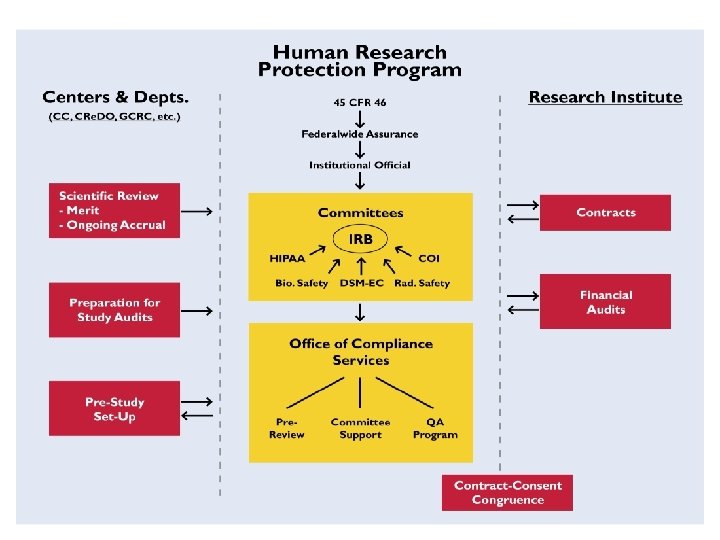

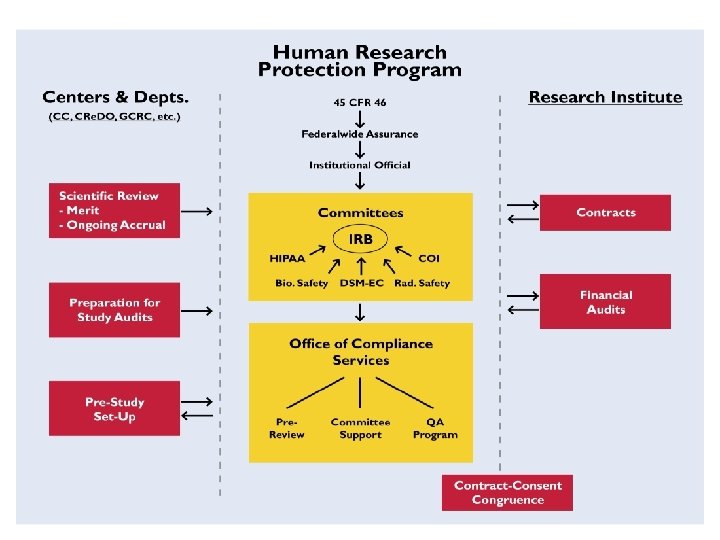

Appendix C

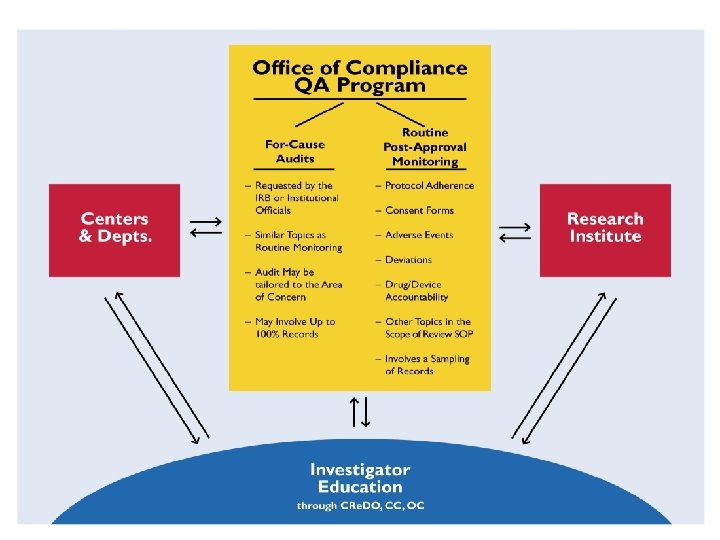

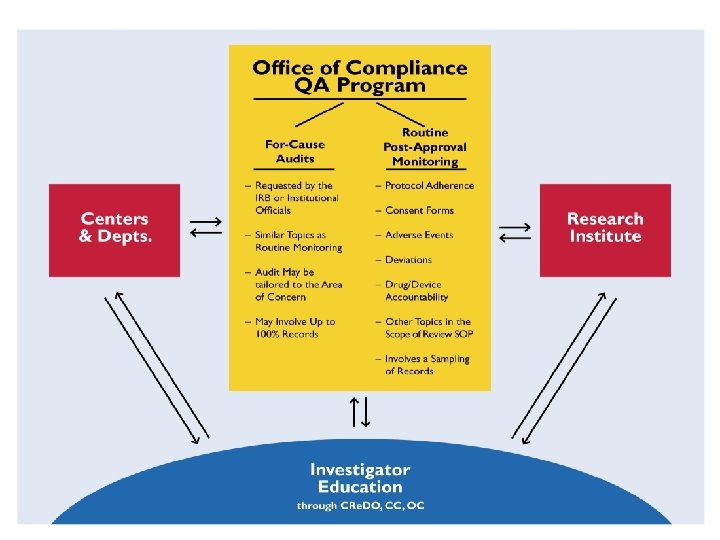

Appendix D

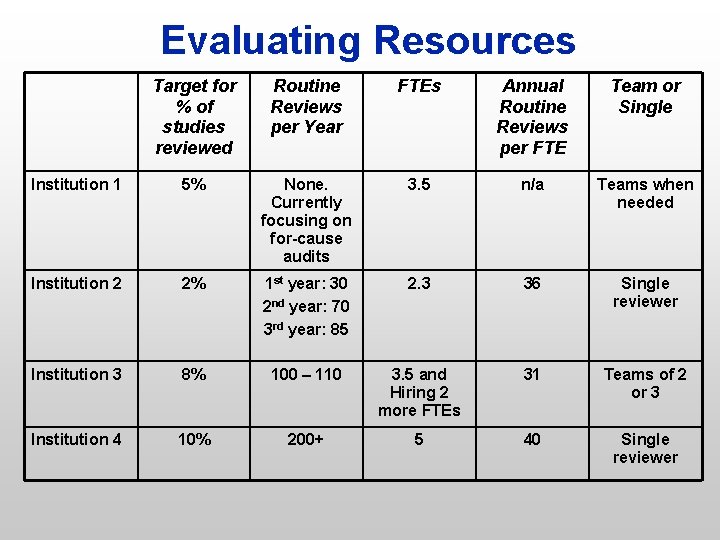

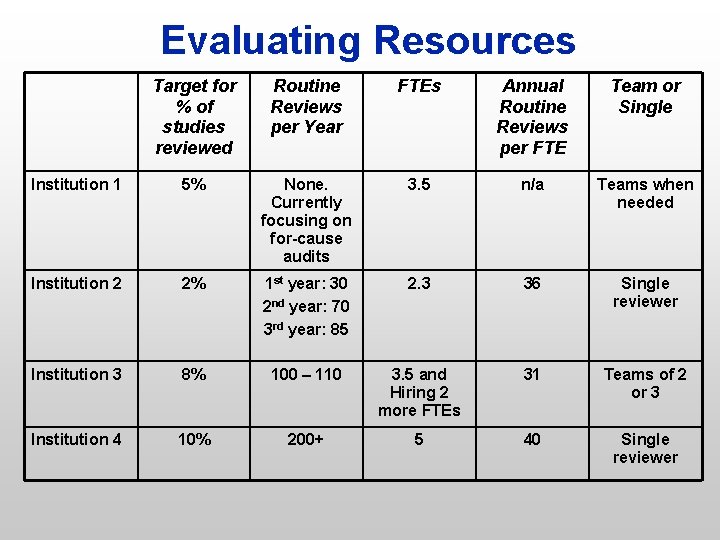

Evaluating Resources

| Institution | Target % of studies reviewed | Routine reviews per year | FTEs | Annual routine reviews per FTE | Team or single reviewer |
|---|---|---|---|---|---|
| Institution 1 | 5% | None; currently focusing on for-cause audits | 3.5 | n/a | Teams when needed |
| Institution 2 | 2% | 1st year: 30; 2nd year: 70; 3rd year: 85 | 2.3 | 36 | Single reviewer |
| Institution 3 | 8% | 100–110 | 3.5 (hiring 2 more) | 31 | Teams of 2 or 3 |
| Institution 4 | 10% | 200+ | 5 | 40 | Single reviewer |

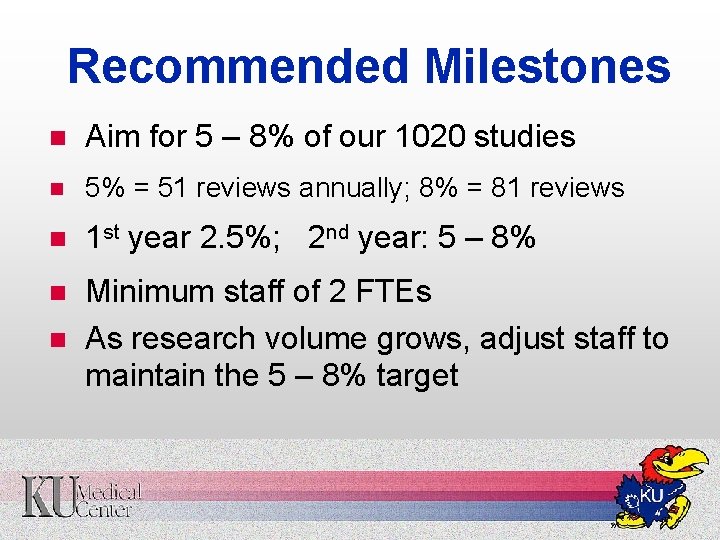

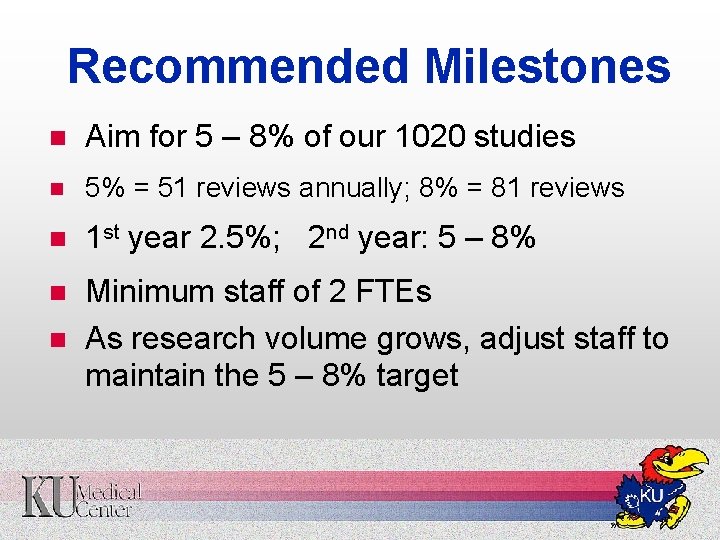

Recommended Milestones
- Aim for 5–8% of our 1020 studies
- 5% = 51 reviews annually; 8% = 81 reviews
- 1st year: 2.5%; 2nd year: 5–8%
- Minimum staff of 2 FTEs
  - As research volume grows, adjust staff to maintain the 5–8% target
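The milestone figures follow from simple percentage arithmetic on the 1020-study portfolio. The Python sketch below reproduces them; the function names are hypothetical, and rounding down is an assumption chosen because it matches the slide's 81 reviews at 8% (81.6 before rounding).

```python
# Sketch of the milestone arithmetic (hypothetical helper names).
# Targets are given in basis points (1% = 100 bp) so the math stays in
# integers; results are rounded down, which matches the slide's figures.

def annual_review_target(total_studies: int, target_bp: int) -> int:
    """Routine reviews per year needed to cover target_bp of total_studies."""
    return total_studies * target_bp // 10_000

def reviews_per_fte(reviews: int, fte: float) -> float:
    """Average annual routine reviews each FTE would perform."""
    return reviews / fte

TOTAL_STUDIES = 1020  # study count from the slide
MIN_FTE = 2           # recommended minimum staffing

for label, bp in [("1st year, 2.5%", 250), ("5%", 500), ("8%", 800)]:
    n = annual_review_target(TOTAL_STUDIES, bp)
    print(f"{label}: {n} reviews, {reviews_per_fte(n, MIN_FTE):.1f} per FTE")
```

At the 2-FTE minimum, the 8% target works out to about 40 reviews per FTE, in line with the 31–40 reviews per FTE reported by the model programs that track this figure.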

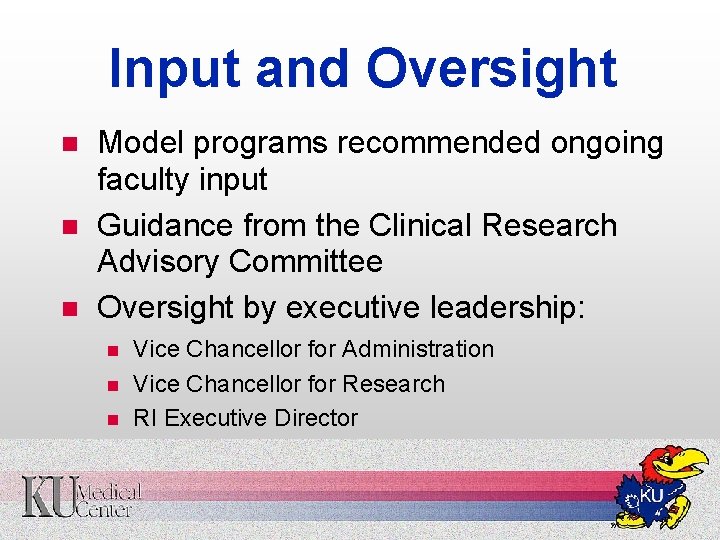

Input and Oversight
- Model programs recommended ongoing faculty input
- Guidance from the Clinical Research Advisory Committee
- Oversight by executive leadership:
  - Vice Chancellor for Administration
  - Vice Chancellor for Research
  - RI Executive Director

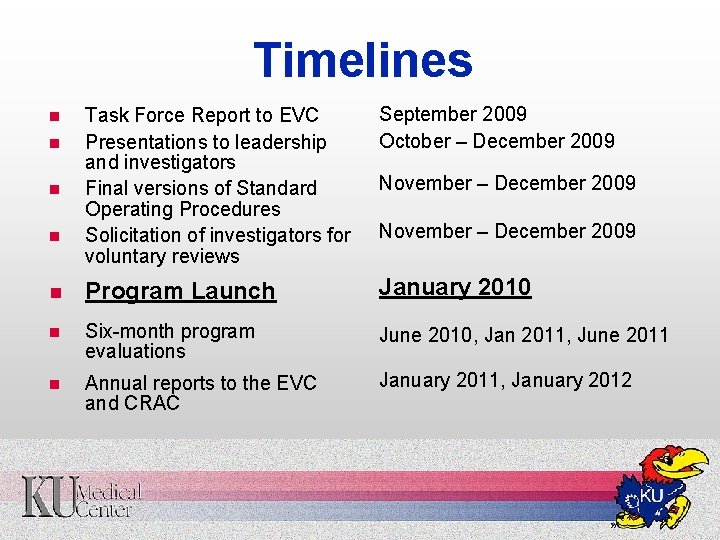

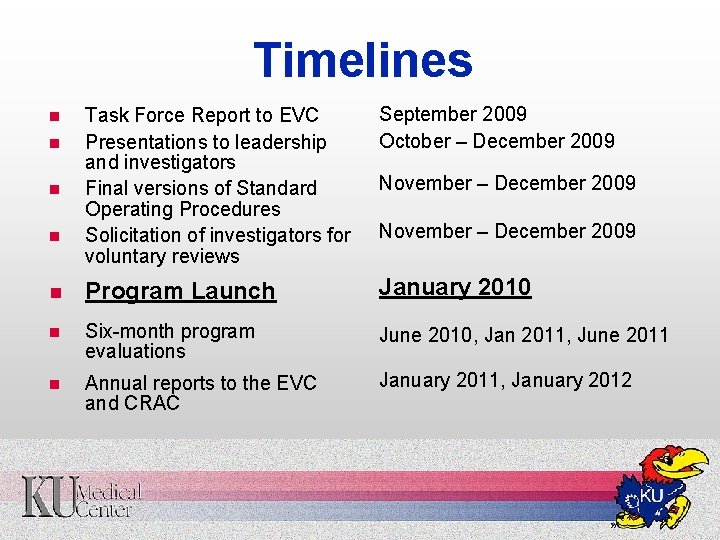

Timelines
- Task Force report to EVC: September 2009
- Presentations to leadership and investigators: October – December 2009
- Final versions of Standard Operating Procedures: November – December 2009
- Solicitation of investigators for voluntary reviews: November – December 2009
- Program launch: January 2010
- Six-month program evaluations: June 2010, January 2011, June 2011
- Annual reports to the EVC and CRAC: January 2011, January 2012

Implementation
- Study selection
- On-site review
- Feedback
- Corrections as needed
- Trend analysis

Study Selection
- Tier 1
  - Federally or internally funded
  - Moderate to high risk
  - IND/IDE holders
  - KUMC role as coordinating center
  - Vulnerable populations
  - Conflict of interest (COI)
- Tier 2 (other studies)
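One way to make the tiering rule explicit is a small classifier. This Python sketch assumes that meeting any single Tier 1 criterion is enough to place a study in Tier 1; the slide does not say how criteria combine, and the `Study` fields and risk labels are hypothetical stand-ins for whatever the study records actually capture.

```python
from dataclasses import dataclass

@dataclass
class Study:
    # Hypothetical fields mirroring the Tier 1 criteria on the slide.
    federally_or_internally_funded: bool = False
    risk_level: str = "minimal"  # assumed labels: "minimal", "moderate", "high"
    investigator_holds_ind_or_ide: bool = False
    kumc_is_coordinating_center: bool = False
    involves_vulnerable_populations: bool = False
    has_conflict_of_interest: bool = False

def selection_tier(study: Study) -> int:
    """Return 1 if any Tier 1 criterion applies, otherwise 2 (assumed rule)."""
    tier1_criteria = (
        study.federally_or_internally_funded,
        study.risk_level in ("moderate", "high"),
        study.investigator_holds_ind_or_ide,
        study.kumc_is_coordinating_center,
        study.involves_vulnerable_populations,
        study.has_conflict_of_interest,
    )
    return 1 if any(tier1_criteria) else 2

print(selection_tier(Study(risk_level="high")))  # 1
print(selection_tier(Study()))                   # 2
```

Keeping the criteria in one tuple makes it easy to see, and later adjust, which factors drive Tier 1 selection.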

Review Process
- PI is notified
- Review is scheduled (~2 weeks)
- On-site review
  - Routine reviews: 20–30% of records
  - For-cause reviews: up to 100% of records
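The record-sampling step could look like the following Python sketch. It assumes records are drawn at random and that the sample size is the stated fraction rounded up; the slide gives only the 20–30% range for routine reviews and up to 100% for for-cause reviews. `sample_records` and the record IDs are hypothetical.

```python
import math
import random

def sample_records(record_ids, fraction, seed=None):
    """Pick ceil(fraction * N) records at random (at least one) for review.

    Hypothetical helper: routine reviews would use a fraction of about
    0.20 to 0.30; for-cause reviews can use up to 1.0 (every record).
    """
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    records = list(record_ids)
    if not records:
        return []
    k = max(1, math.ceil(len(records) * fraction))
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible
    return sorted(rng.sample(records, k))

records = [f"subject-{i:03d}" for i in range(1, 41)]  # 40 hypothetical records
routine = sample_records(records, 0.25, seed=2010)    # 10 records
for_cause = sample_records(records, 1.0)              # all 40 records
print(len(routine), len(for_cause))
```

Recording the seed alongside the review notes would let a reviewer regenerate exactly which records were sampled.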

Scope of the Review
- IRB-approved documents
- Signed consent forms
- Study data, e.g.,
  - Inclusion/exclusion decisions
  - Outcomes of assessments and procedures
  - Source documents
- Adverse events or problems
- Drug/device accountability

Common Findings at Other Sites
- Missing correspondence or approvals
- Informed consent issues
  - Expired or invalid consents
  - Not dated or signed correctly
  - Consent by unauthorized persons
- Incomplete study records

Serious Findings
- Protocol non-compliance
- Inadequate study records
- Unreported adverse events or deviations
- Lack of drug/device accountability
- Unapproved research

Observations and Corrections
- Exit interview
- Draft report to the PI within 7 days
- PI responds within 14 days with corrections of errors, clarifications, and a corrective action plan (if needed)
- Final report to the PI and to the HSC
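The two response windows translate into concrete due dates. This Python sketch assumes calendar days, that the 7-day clock starts at the exit interview, and that the PI's 14 days start when the draft is sent; none of these details is stated on the slide, and the names are hypothetical.

```python
from datetime import date, timedelta

DRAFT_REPORT_DAYS = 7  # draft report to the PI (assumed calendar days)
PI_RESPONSE_DAYS = 14  # PI corrections, clarifications, corrective action plan

def report_deadlines(exit_interview, draft_sent=None):
    """Return due dates for the draft report and the PI's response."""
    draft_due = exit_interview + timedelta(days=DRAFT_REPORT_DAYS)
    # Assumption: the PI's window opens when the draft is sent; if it has
    # not been sent yet, project from the latest allowed draft date.
    response_due = (draft_sent or draft_due) + timedelta(days=PI_RESPONSE_DAYS)
    return {"draft_due": draft_due, "pi_response_due": response_due}

print(report_deadlines(date(2010, 2, 1)))
print(report_deadlines(date(2010, 2, 1), draft_sent=date(2010, 2, 4)))
```

For an exit interview on February 1, 2010, the draft would be due February 8 and the PI's response February 22; sending the draft early on February 4 moves the response date to February 18.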

Reporting Findings
- All final reports go to the HSC office
- Minor non-compliance is reviewed by the chair
- Potentially serious non-compliance goes to the convened HSC
- Evaluate corrective action plans
- Follow up as appropriate

Getting Feedback
- Exit interviews
- Survey to investigators
- Input from the CRAC
- Cumulative results impact overall program

Feedback? Questions?