
Intelligent Agents Chapter 2

Outline • Agents and environments • Rationality • PEAS (Performance measure, Environment, Actuators, Sensors) • Environment types • Agent types

Agents • An agent is anything that can be viewed as perceiving its environment through sensors and acting upon that environment through actuators. • Human agent: eyes, ears, and other organs for sensors; hands, legs, mouth, and other body parts for actuators. • Robotic agent: cameras and infrared range finders for sensors; various motors for actuators. • Software agent: receives keystrokes, file contents, and network packets as sensory inputs and acts on the environment by displaying on the screen, writing files, and sending network packets.

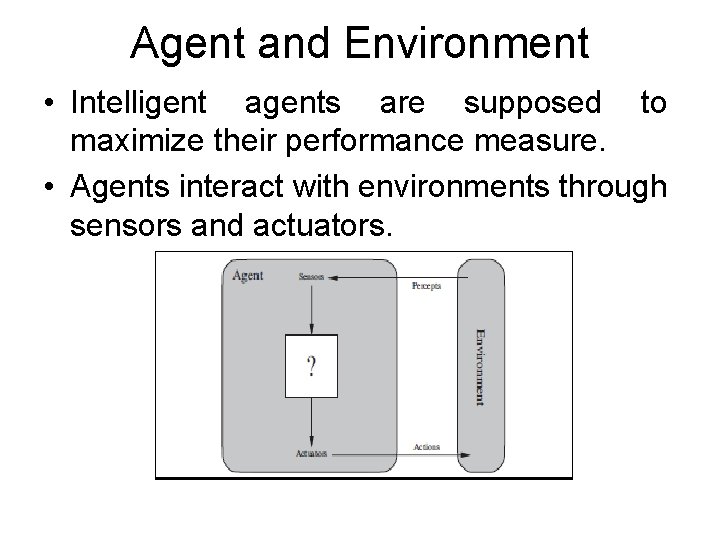

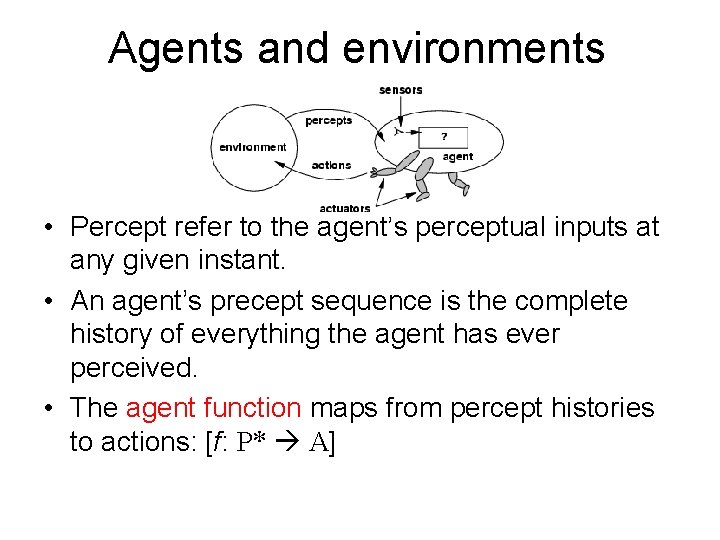

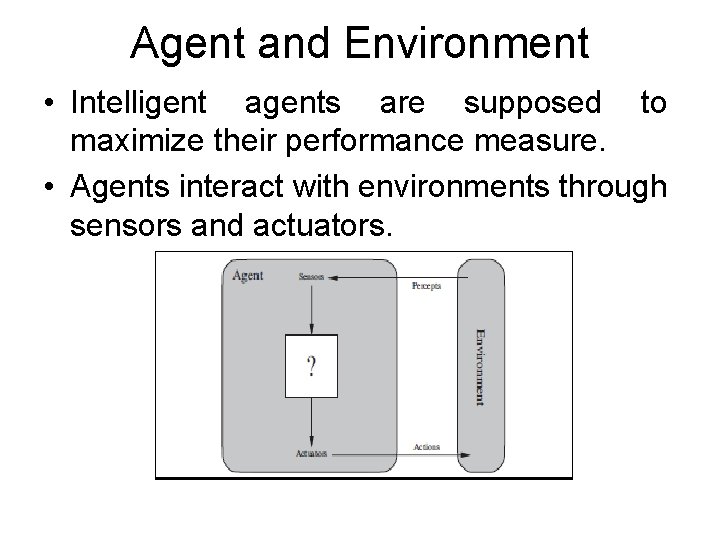

Agent and Environment • Intelligent agents are supposed to maximize their performance measure. • Agents interact with environments through sensors and actuators.

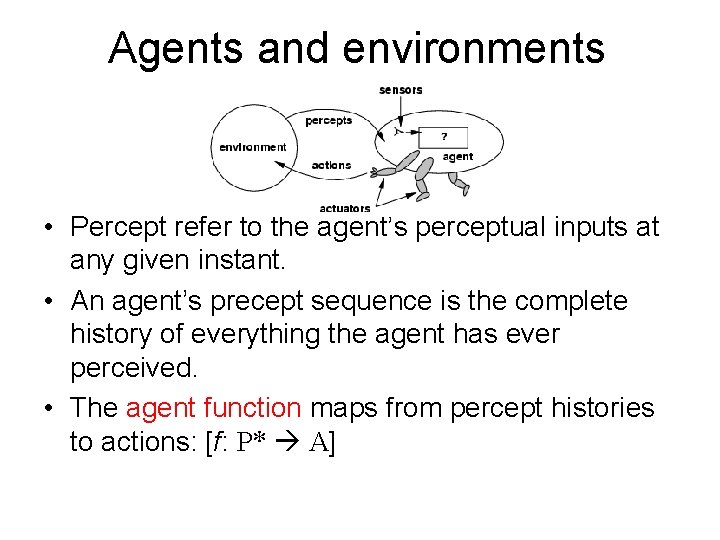

Agents and environments • A percept refers to the agent’s perceptual inputs at any given instant. • An agent’s percept sequence is the complete history of everything the agent has ever perceived. • The agent function maps from percept histories to actions: $f: P^* \to A$
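A minimal sketch of an agent function implemented as a lookup table, assuming percepts are `(location, status)` pairs as in the vacuum world. The helper `make_table_driven_agent`, the sample table, and the `"NoOp"` fallback for unlisted histories are illustrative assumptions. The program appends each new percept to its history and looks up the whole sequence, so the action depends on everything perceived so far.

```python
from typing import Callable, Dict, List, Tuple

Percept = Tuple[str, str]            # e.g. ("A", "Dirty")
PerceptSeq = Tuple[Percept, ...]     # a complete percept history

def make_table_driven_agent(table: Dict[PerceptSeq, str]) -> Callable[[Percept], str]:
    """Return an agent program that implements the agent function by table lookup."""
    percepts: List[Percept] = []     # every percept received so far

    def program(percept: Percept) -> str:
        percepts.append(percept)
        # The key is the WHOLE history, not just the latest percept.
        # Unlisted histories fall back to "NoOp" (an assumption of this sketch).
        return table.get(tuple(percepts), "NoOp")

    return program

# Hypothetical fragment of a table (a real one would list every history).
table: Dict[PerceptSeq, str] = {
    (("A", "Dirty"),): "Suck",
    (("A", "Clean"),): "Right",
    (("A", "Dirty"), ("A", "Clean")): "Right",
}

agent = make_table_driven_agent(table)
print(agent(("A", "Dirty")))
print(agent(("A", "Clean")))
```

The closure keeps the history private to each agent instance, so two agents built from the same table do not share percepts.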

Rational agents • An agent should strive to "do the right thing", based on what it can perceive and the actions it can perform. The right action is the one that will cause the agent to be most successful • For each possible percept sequence, a rational agent should select an action that is expected to maximize its performance measure.

Performance Measure • Performance measure: an objective criterion for the success of an agent's behavior • E.g., the performance measure of a vacuum-cleaner agent could combine the amount of dirt cleaned up, the time taken, the electricity consumed, the noise generated, etc.
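The criteria listed above can be folded into a single score. A sketch, assuming a per-step record of the action taken and whether dirt was removed; the `Step` record, the `performance` function, and all weights are hypothetical choices for illustration, not values from the slides.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Step:
    action: str     # "Left", "Right", "Suck" or "NoOp"
    cleaned: bool   # True if this step removed dirt

def performance(history: List[Step],
                dirt_reward: float = 10.0,
                time_cost: float = 1.0,
                energy_cost: float = 0.5,
                noise_cost: float = 0.25) -> float:
    """Score a whole run of the vacuum agent (higher is better)."""
    score = 0.0
    for step in history:
        if step.cleaned:
            score += dirt_reward     # amount of dirt cleaned up
        score -= time_cost           # every step takes time
        if step.action != "NoOp":
            score -= energy_cost     # moving and sucking consume electricity
        if step.action == "Suck":
            score -= noise_cost      # the suction motor makes noise
    return score

run = [Step("Suck", True), Step("Right", False), Step("Suck", True)]
print(performance(run))                          # 15.0
print(performance([Step("NoOp", False)] * 3))    # -3.0
```

Changing the weights changes which behavior scores best, so the same agent can look rational under one measure and poor under another.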

Rational agents • Rationality is distinct from omniscience (being all-knowing, with infinite knowledge) • Agents can perform actions in order to modify future percepts so as to obtain useful information (information gathering, exploration) • An agent is autonomous if its behavior is determined by its own experience (with the ability to learn and adapt)

![Vacuum-cleaner world](https://slidetodoc.com/presentation_image_h2/0e3c00817b56ee7e17b3f341d4fdde44/image-9.jpg)

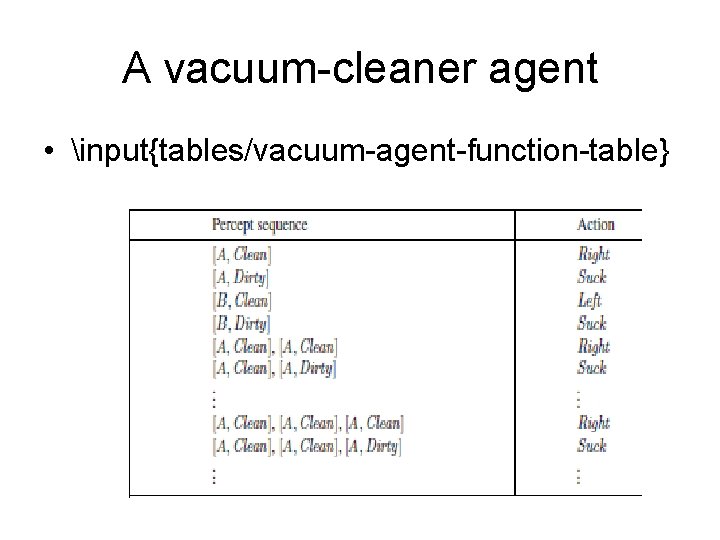

Vacuum-cleaner world • Percepts: location and contents, e.g., [A, Dirty] • Actions: Left, Right, Suck, NoOp
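A minimal, deterministic simulation of the two-square world, assuming squares named `A` (left) and `B` (right), both dirty at the start, and actions that always succeed. The class name `VacuumWorld` and its methods are hypothetical; only the percept format [location, contents] and the four actions come from the slide.

```python
from typing import Dict, Optional, Tuple

Percept = Tuple[str, str]   # (location, contents)

class VacuumWorld:
    """Two squares, A (left) and B (right), each either "Clean" or "Dirty"."""
    ACTIONS = ("Left", "Right", "Suck", "NoOp")

    def __init__(self, location: str = "A",
                 status: Optional[Dict[str, str]] = None) -> None:
        self.location = location
        # Assumed default: both squares start dirty.
        self.status = dict(status) if status else {"A": "Dirty", "B": "Dirty"}

    def percept(self) -> Percept:
        # The agent senses only its own square, e.g. ("A", "Dirty").
        return (self.location, self.status[self.location])

    def execute(self, action: str) -> None:
        if action not in self.ACTIONS:
            raise ValueError(f"unknown action: {action}")
        if action == "Suck":
            self.status[self.location] = "Clean"
        elif action == "Left":
            self.location = "A"     # Left while in A has no effect
        elif action == "Right":
            self.location = "B"     # Right while in B has no effect
        # "NoOp" leaves the world unchanged.

world = VacuumWorld()
print(world.percept())   # ('A', 'Dirty')
world.execute("Suck")
world.execute("Right")
print(world.percept())   # ('B', 'Dirty')
```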

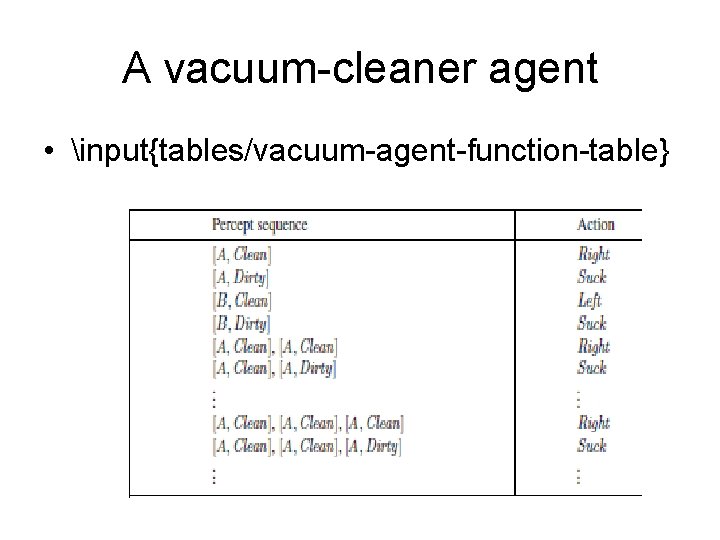

A vacuum-cleaner agent • input{tables/vacuum-agent-function-table}
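The agent function for this world can be tabulated mechanically. The sketch below assumes the rule "suck if the current square is dirty, otherwise move to the other square", applied to the latest percept only, and enumerates every percept sequence up to length 2; `action_for` and `tabulate` are hypothetical names. The first rows it prints are [A, Clean] → Right, [A, Dirty] → Suck, [B, Clean] → Left, [B, Dirty] → Suck, followed by the length-2 sequences.

```python
from itertools import product
from typing import Dict, Tuple

Percept = Tuple[str, str]                 # (location, contents)
PerceptSeq = Tuple[Percept, ...]

# All four possible percepts in the two-square world.
PERCEPTS = [(loc, dirt) for loc in ("A", "B") for dirt in ("Clean", "Dirty")]

def action_for(seq: PerceptSeq) -> str:
    """Assumed rule: only the most recent percept matters."""
    location, status = seq[-1]
    if status == "Dirty":
        return "Suck"
    return "Right" if location == "A" else "Left"

def tabulate(max_len: int) -> Dict[PerceptSeq, str]:
    """Map every percept sequence of length 1..max_len to its action."""
    table: Dict[PerceptSeq, str] = {}
    for n in range(1, max_len + 1):
        for seq in product(PERCEPTS, repeat=n):
            table[seq] = action_for(seq)
    return table

table = tabulate(2)
for seq, action in list(table.items())[:6]:
    print(" ".join(f"[{loc}, {dirt}]" for loc, dirt in seq), "->", action)
```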

PEAS • PEAS: Performance measure, Environment, Actuators, Sensors • Must first specify the setting for intelligent agent design • Consider, e.g., the task of designing an automated taxi driver: – Performance measure: Safe, fast, legal, comfortable trip, maximize profits – Environment: Roads, other traffic, pedestrians, customers – Actuators: Steering wheel, accelerator, brake, signal, horn – Sensors: Cameras, sonar, speedometer, GPS, odometer, engine sensors, keyboard

PEAS • Agent: Medical diagnosis system • Performance measure: Healthy patient, minimize costs, lawsuits • Environment: Patient, hospital, staff • Actuators: Screen display (questions, tests, diagnoses, treatments, referrals) • Sensors: Keyboard (entry of symptoms, findings, patient's answers)

PEAS • Agent: Part-picking robot • Performance measure: Percentage of parts in correct bins • Environment: Conveyor belt with parts, bins • Actuators: Jointed arm and hand • Sensors: Camera, joint angle sensors

PEAS • Agent: Interactive English tutor • Performance measure: Maximize student's score on test • Environment: Set of students • Actuators: Screen display (exercises, suggestions, corrections) • Sensors: Keyboard
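Because every PEAS slide has the same four fields, a PEAS description maps naturally onto a small record type. A sketch, assuming Python dataclasses; the `PEAS` class and its `describe` method are hypothetical, while the field values are copied from the taxi and part-picking robot slides.

```python
from dataclasses import dataclass
from typing import Tuple

@dataclass(frozen=True)
class PEAS:
    """A task-environment description: Performance, Environment, Actuators, Sensors."""
    agent: str
    performance: Tuple[str, ...]
    environment: Tuple[str, ...]
    actuators: Tuple[str, ...]
    sensors: Tuple[str, ...]

    def describe(self) -> str:
        return "\n".join([
            f"Agent: {self.agent}",
            f"  Performance measure: {', '.join(self.performance)}",
            f"  Environment: {', '.join(self.environment)}",
            f"  Actuators: {', '.join(self.actuators)}",
            f"  Sensors: {', '.join(self.sensors)}",
        ])

taxi = PEAS(
    agent="Automated taxi driver",
    performance=("safe", "fast", "legal", "comfortable trip", "maximize profits"),
    environment=("roads", "other traffic", "pedestrians", "customers"),
    actuators=("steering wheel", "accelerator", "brake", "signal", "horn"),
    sensors=("cameras", "sonar", "speedometer", "GPS", "odometer",
             "engine sensors", "keyboard"),
)

robot = PEAS(
    agent="Part-picking robot",
    performance=("percentage of parts in correct bins",),
    environment=("conveyor belt with parts", "bins"),
    actuators=("jointed arm and hand",),
    sensors=("camera", "joint angle sensors"),
)

print(taxi.describe())
```

Using tuples with `frozen=True` keeps each description immutable once written down, which matches the idea that the task environment is fixed before the agent is designed.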

Agent functions and programs • An agent is completely specified by the agent function mapping percept sequences to actions • One agent function (or a small equivalence class) is rational • Aim: find a way to implement the rational agent function concisely
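Why a concise implementation matters: a table indexed by every percept sequence of length 1 to $T$ needs $\sum_{t=1}^{T} |P|^t$ rows, where $|P|$ is the number of distinct percepts. A quick check for the two-square vacuum world, assuming $|P| = 4$ (location A or B, times Clean or Dirty); `table_entries` is a hypothetical helper.

```python
def table_entries(num_percepts: int, lifetime: int) -> int:
    """Rows needed to list every percept sequence of length 1..lifetime."""
    return sum(num_percepts ** t for t in range(1, lifetime + 1))

for T in (1, 2, 5, 10):
    print(T, table_entries(4, T))
# 1 4
# 2 20
# 5 1364
# 10 1398100
```

Even this tiny world needs over a million rows for a ten-step lifetime, whereas the rule "suck if dirty, otherwise move to the other square" fits in a few lines of code.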

Agent types • Four basic types in order of increasing generality: – Simple reflex agents – Model-based reflex agents – Goal-based agents – Utility-based agents

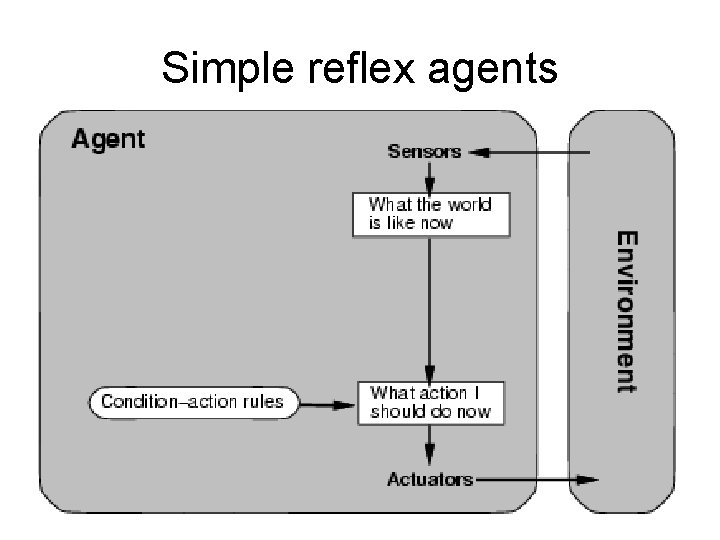

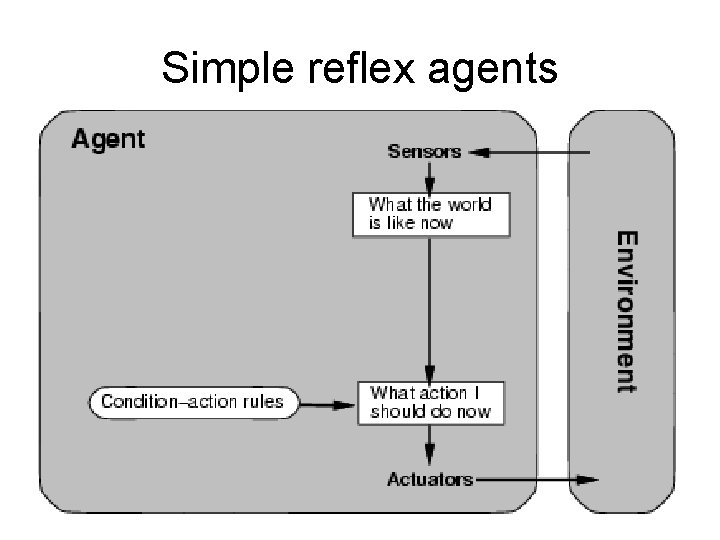

Simple reflex agents

Simple reflex agents • The simplest type of agent. • The concept is very simple, and the agent has limited intelligence. • For each condition the agent observes through its sensors (as the environment in which it operates changes), the agent program defines a specific action. • For each observation it receives from its sensors, the agent checks its condition-action rules, finds the rule whose condition matches, and then performs the action defined in that rule using its actuators.

Simple reflex agent • The agent program contains a look-up table of condition-action rules that maps each possible current percept to an action; the rest of the percept history is ignored. • Based on the percept the agent receives via its sensors, it looks up the matching entry in this table, retrieves the corresponding action, and instructs the actuators to perform it. • This approach works poorly when the environment is constantly changing, because the agent keeps no memory of earlier percepts.
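A minimal simple reflex agent for the vacuum world, assuming the condition-action rules are checked in order and the first match wins; the `RULES` list and the `simple_reflex_agent` function are hypothetical. The function receives only the current percept, so it has no memory of earlier ones.

```python
from typing import Callable, List, Tuple

Percept = Tuple[str, str]                     # (location, contents)
Rule = Tuple[Callable[[Percept], bool], str]  # (condition, action)

# Hypothetical condition-action rules for the two-square vacuum world.
RULES: List[Rule] = [
    (lambda p: p[1] == "Dirty", "Suck"),
    (lambda p: p[0] == "A", "Right"),
    (lambda p: p[0] == "B", "Left"),
]

def simple_reflex_agent(percept: Percept) -> str:
    """Return the action of the first rule whose condition matches the current percept."""
    for condition, action in RULES:
        if condition(percept):
            return action
    return "NoOp"   # no rule matched (cannot happen with the rules above)

print(simple_reflex_agent(("A", "Dirty")))   # Suck
print(simple_reflex_agent(("B", "Clean")))   # Left
```

Because it remembers nothing, this agent keeps moving back and forth between A and B even after both squares are clean.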

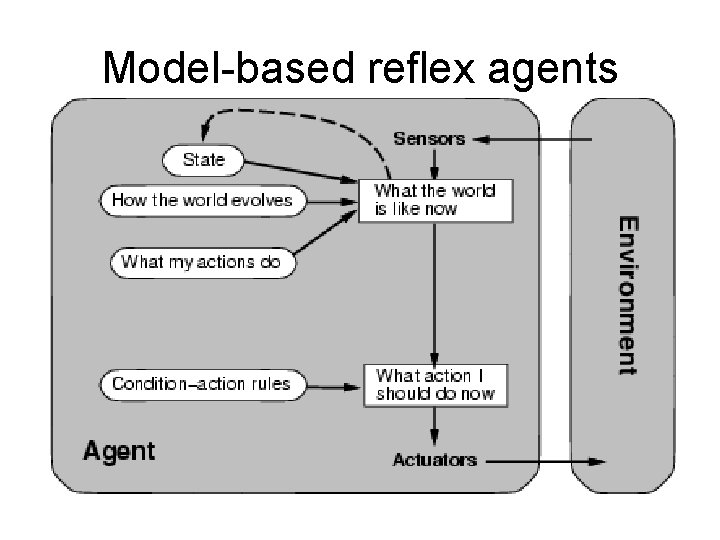

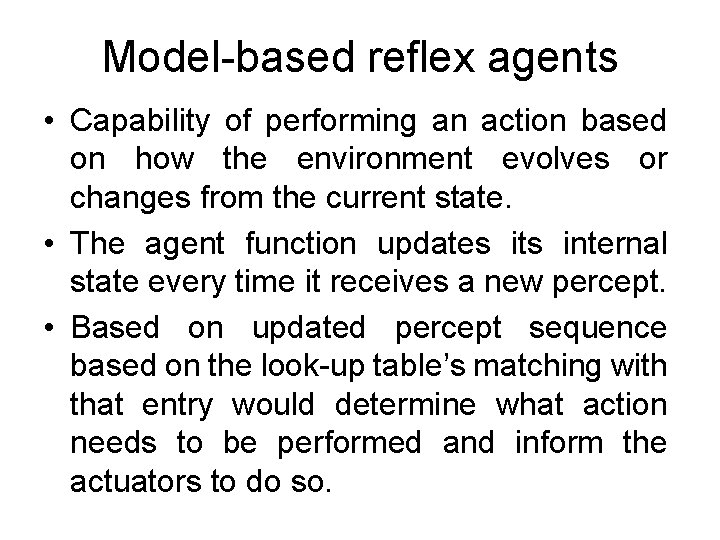

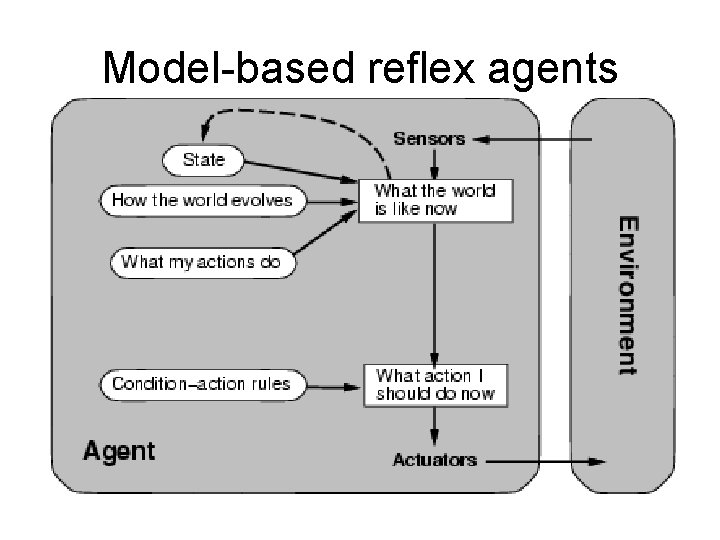

Model-based reflex agents

Model-based reflex agents • Able to choose actions based on a model of how the environment evolves or changes from the current state. • The agent program updates its internal state every time it receives a new percept. • Based on the updated internal state, the agent finds the matching look-up table entry (rule) to determine which action to perform and instructs the actuators to do so.
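A sketch of a model-based reflex agent for the vacuum world, assuming dirt never reappears once cleaned; `ModelBasedVacuumAgent` and `update_state` are hypothetical names. The internal state records the last known contents of each square, updated from each new percept and from the predicted effect of the agent's own Suck action, so unlike a simple reflex agent it can stop once it believes both squares are clean.

```python
from typing import Dict, Optional, Tuple

Percept = Tuple[str, str]   # (location, contents)

class ModelBasedVacuumAgent:
    """Reflex agent that keeps an internal model of both squares."""

    def __init__(self) -> None:
        # Internal state: last known contents of each square (None = unknown).
        self.model: Dict[str, Optional[str]] = {"A": None, "B": None}

    def update_state(self, percept: Percept) -> None:
        location, status = percept
        self.model[location] = status          # record what was just sensed

    def __call__(self, percept: Percept) -> str:
        self.update_state(percept)
        location, status = percept
        if status == "Dirty":
            self.model[location] = "Clean"     # predicted effect of Suck
            return "Suck"
        if all(s == "Clean" for s in self.model.values()):
            return "NoOp"                      # model says no dirt is left
        return "Right" if location == "A" else "Left"

agent = ModelBasedVacuumAgent()
print(agent(("A", "Dirty")))   # Suck
print(agent(("A", "Clean")))   # Right
print(agent(("B", "Clean")))   # NoOp
```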

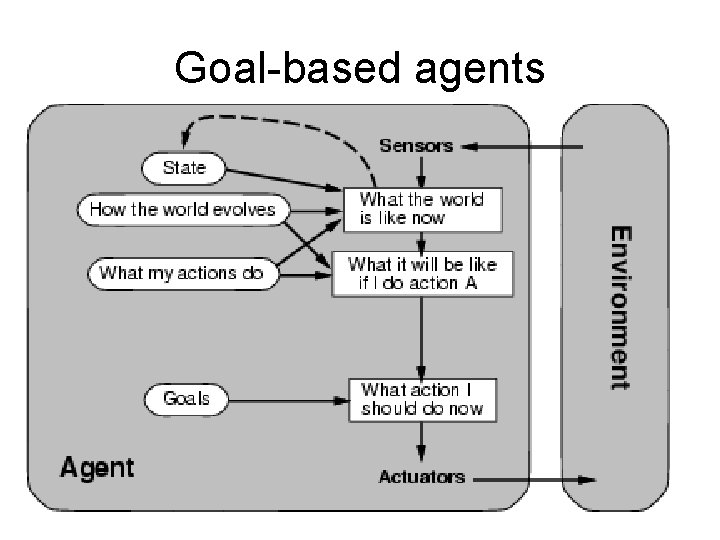

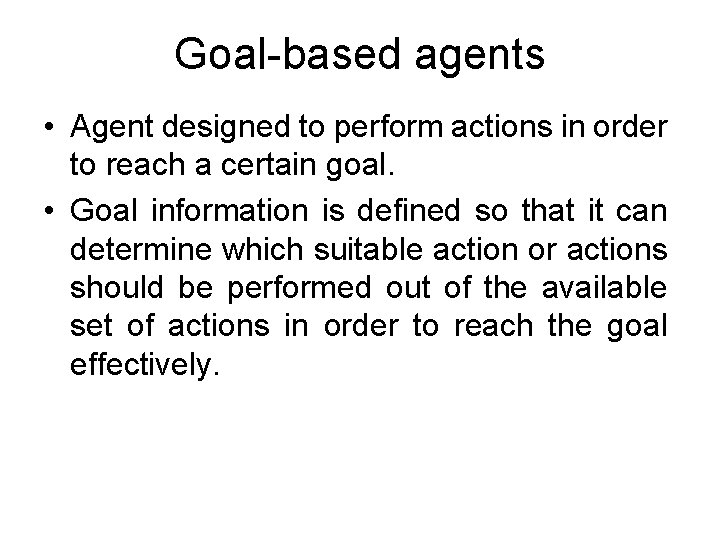

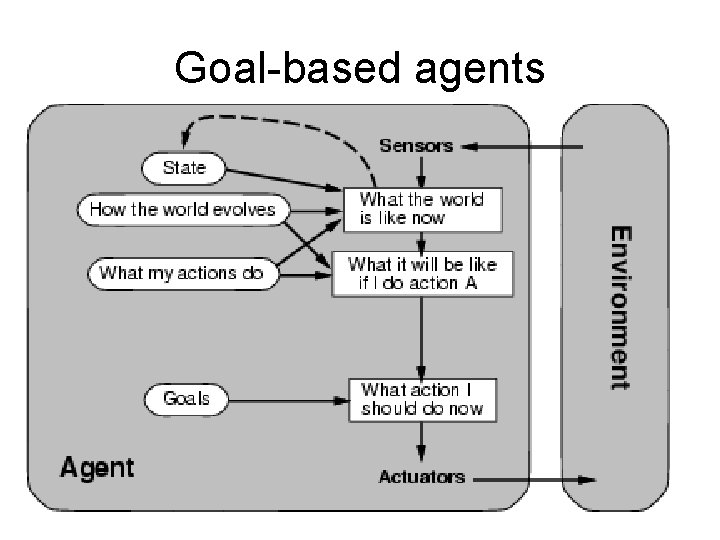

Goal-based agents

Goal-based agents • An agent designed to perform actions in order to reach a certain goal. • Goal information describes the desired situation, so the agent can determine which action, or sequence of actions, from the available set will reach the goal effectively.
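A goal-based vacuum agent can search for a sequence of actions that reaches its goal. A sketch, assuming the goal is "no dirty squares", a deterministic transition model, and breadth-first search as the chosen search strategy; `result`, `is_goal`, and `plan` are hypothetical names.

```python
from collections import deque
from typing import FrozenSet, List, Optional, Tuple

State = Tuple[str, FrozenSet[str]]   # (agent location, set of dirty squares)
ACTIONS = ("Left", "Right", "Suck")

def result(state: State, action: str) -> State:
    """Assumed deterministic transition model for the two-square world."""
    location, dirty = state
    if action == "Suck":
        return (location, dirty - {location})
    if action == "Left":
        return ("A", dirty)
    return ("B", dirty)              # "Right"

def is_goal(state: State) -> bool:
    return not state[1]              # goal: no dirty squares remain

def plan(start: State) -> Optional[List[str]]:
    """Breadth-first search for the shortest action sequence reaching the goal."""
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, actions = frontier.popleft()
        if is_goal(state):
            return actions
        for action in ACTIONS:
            nxt = result(state, action)
            if nxt not in visited:
                visited.add(nxt)
                frontier.append((nxt, actions + [action]))
    return None                      # goal unreachable

print(plan(("A", frozenset({"A", "B"}))))   # ['Suck', 'Right', 'Suck']
```

Unlike the reflex agents, this agent reasons about future states before acting, so changing the goal changes its behavior without rewriting any rules.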

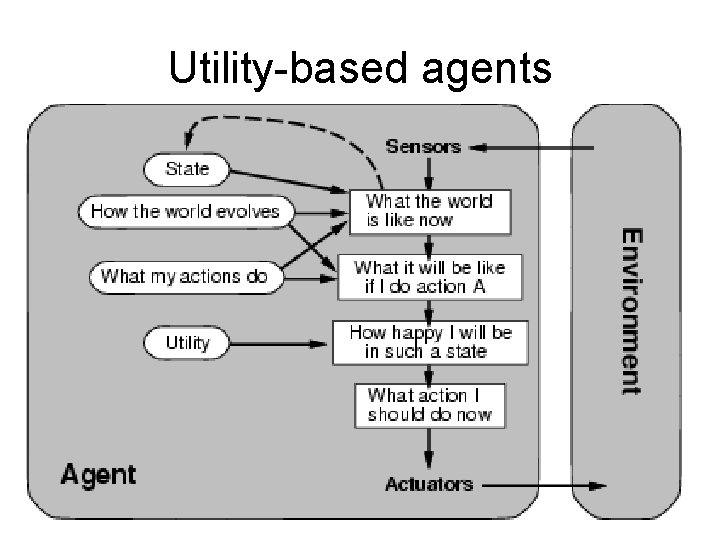

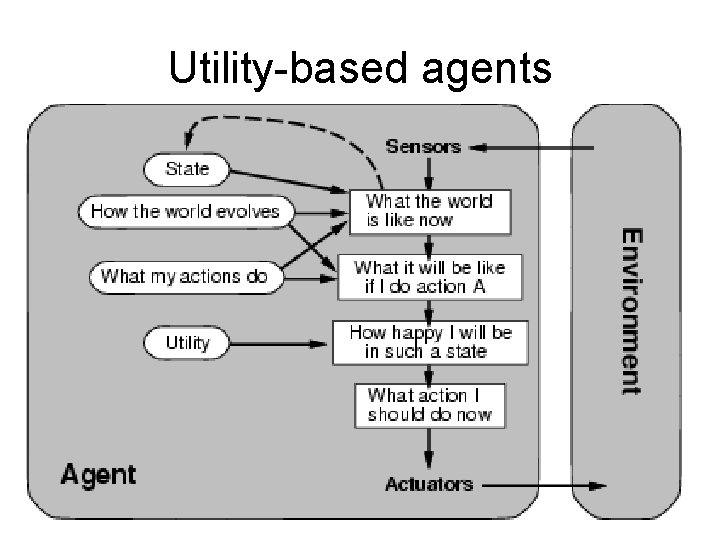

Utility-based agents

Utility-based agents • Goals alone are not adequate to produce high-quality behavior in many environments, because they only distinguish goal states from non-goal states. • Utility-based agents combine goals with other desired features (e.g., how fast, safe, or cheap a solution is). • They use a utility function. • To compare different states of the world, a utility value is assigned to each state. • A utility function maps a state (or a sequence of states) onto a real number. • The agent chooses actions that maximize the utility value derived from the resulting state of the world.
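A sketch of utility-based choice for the taxi example, assuming each action has a few possible outcomes with known probabilities. Both routes reach the destination, so the goal alone cannot separate them; the hypothetical `utility` function weighs safety, speed, and comfort, and the agent picks the action with the highest expected utility. All weights, probabilities, and feature values are illustrative assumptions.

```python
from typing import Dict, List, Tuple

State = Dict[str, float]             # features of a resulting world state
Outcome = Tuple[float, State]        # (probability, resulting state)

def utility(state: State) -> float:
    """Hypothetical utility: maps a state onto a real number."""
    return 5.0 * state["safety"] + 2.0 * state["speed"] + 1.0 * state["comfort"]

def expected_utility(outcomes: List[Outcome]) -> float:
    return sum(p * utility(s) for p, s in outcomes)

def choose(options: Dict[str, List[Outcome]]) -> str:
    """Return the action whose outcomes have the highest expected utility."""
    return max(options, key=lambda action: expected_utility(options[action]))

routes: Dict[str, List[Outcome]] = {
    "highway": [
        (0.9, {"safety": 0.9, "speed": 1.0, "comfort": 0.8}),   # usual case
        (0.1, {"safety": 0.2, "speed": 0.3, "comfort": 0.2}),   # heavy traffic
    ],
    "side_streets": [
        (1.0, {"safety": 0.95, "speed": 0.5, "comfort": 0.6}),
    ],
}

print(choose(routes))   # highway
```

With these numbers the highway scores about 6.75 against 6.35 for the side streets; raising the weight on safety far enough would reverse the choice.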