Integrated Cloud Native NFV & App stack Proposals

- Slides: 15


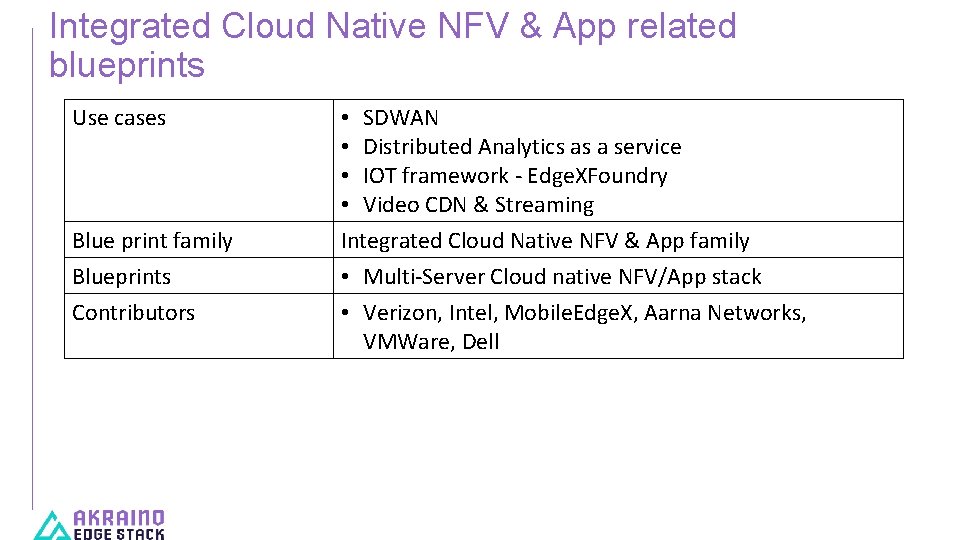

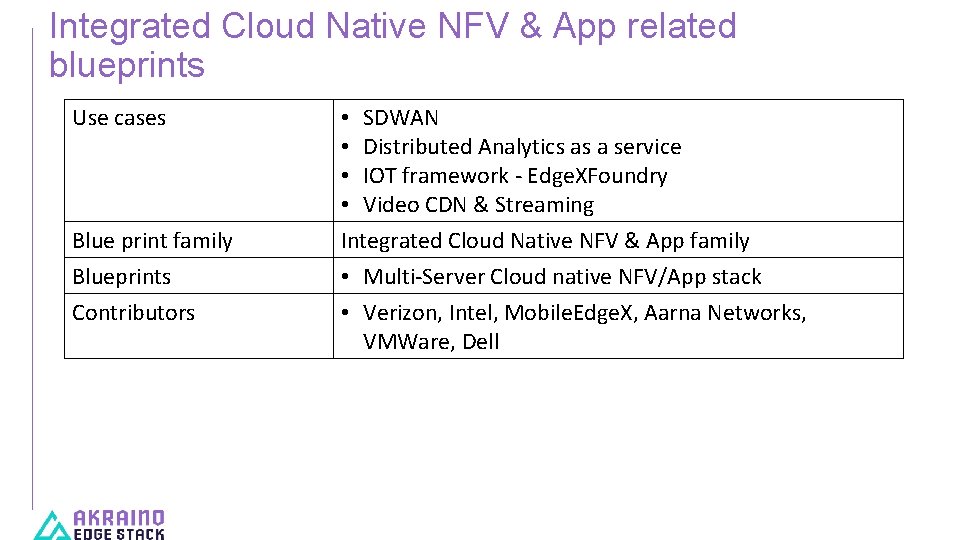

Integrated Cloud Native NFV & App related blueprints

| Use cases | Blueprint family | Blueprints | Contributors |
|---|---|---|---|
| SDWAN; Distributed Analytics as a service; IoT framework (EdgeX Foundry); Video CDN & Streaming | Integrated Cloud Native NFV & App family | Multi-Server Cloud native NFV/App stack | Verizon, Intel, MobiledgeX, Aarna Networks, VMware, Dell |

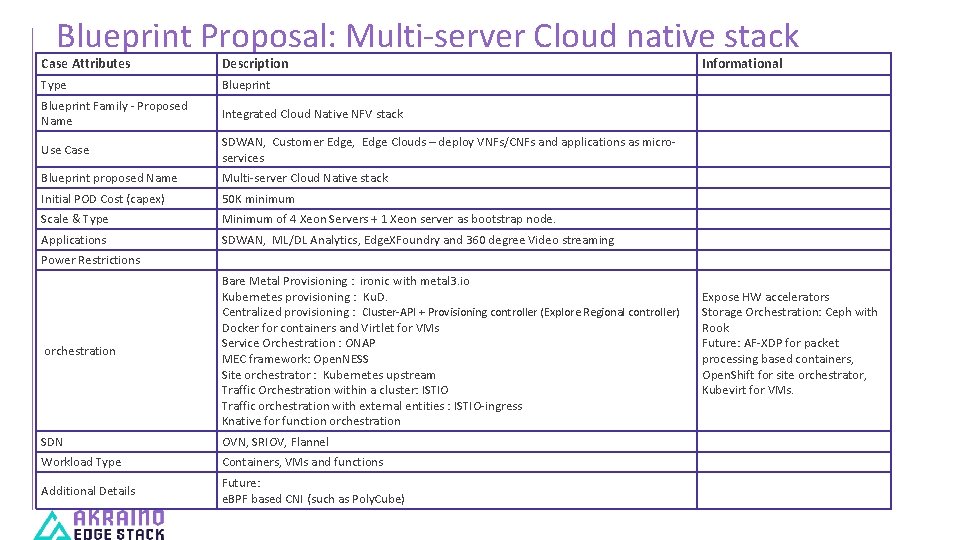

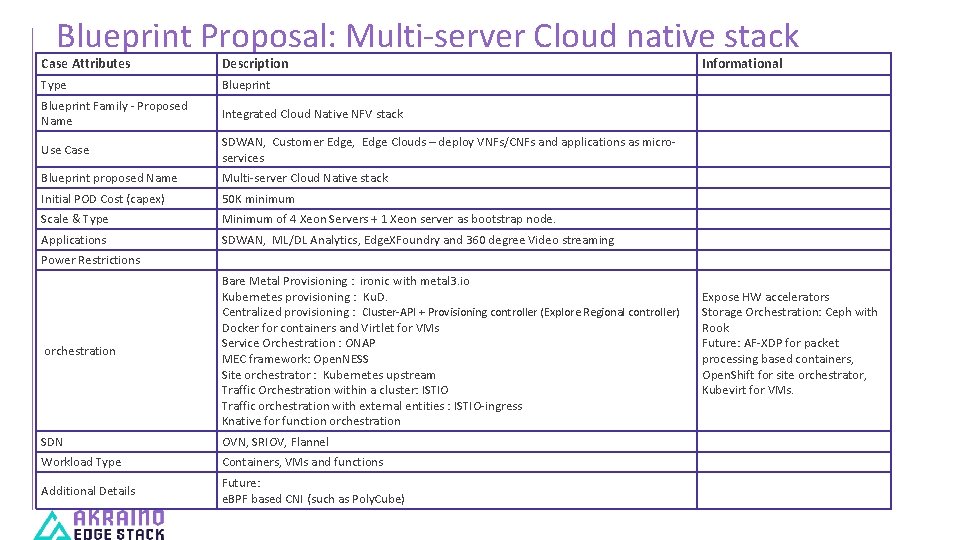

Blueprint Proposal: Multi-server Cloud native stack

| Case Attributes | Description | Informational |
|---|---|---|
| Type | | |
| Blueprint Family - Proposed Name | Integrated Cloud Native NFV stack | |
| Use Case | SDWAN, Customer Edge, Edge Clouds: deploy VNFs/CNFs and applications as microservices | |
| Blueprint proposed Name | Multi-server Cloud Native stack | |
| Initial POD Cost (capex) | 50K minimum | |
| Scale & Type | Minimum of 4 Xeon servers + 1 Xeon server as bootstrap node | |
| Applications | SDWAN, ML/DL analytics, EdgeX Foundry and 360-degree video streaming | |
| Power Restrictions | | |
| Infrastructure orchestration | Bare-metal provisioning: Ironic with metal3.io; Kubernetes provisioning: KuD; centralized provisioning: Cluster-API + provisioning controller (explore Regional Controller); Docker for containers and Virtlet for VMs; service orchestration: ONAP; MEC framework: OpenNESS; site orchestrator: upstream Kubernetes; traffic orchestration within a cluster: Istio; traffic orchestration with external entities: Istio ingress; Knative for function orchestration | |
| SDN | OVN, SR-IOV, Flannel | |
| Workload Type | Containers, VMs and functions | |
| Additional Details | Future: eBPF-based CNI (such as Polycube); expose HW accelerators; storage orchestration: Ceph with Rook. Future: AF_XDP for packet-processing containers, OpenShift for site orchestrator, KubeVirt for VMs | |
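The proposed minimum POD (at least four Xeon servers plus one Xeon server as bootstrap node) can be expressed as a simple inventory check, for example before handing machines to bare-metal provisioning. This is only a sketch: the `Server` record, its fields and `meets_minimum_pod` are hypothetical names, not part of Akraino, Ironic or Metal3 tooling.

```python
from dataclasses import dataclass

# Minimum POD from the blueprint proposal: 4 Xeon servers + 1 Xeon bootstrap node.
MIN_CLUSTER_SERVERS = 4
MIN_BOOTSTRAP_NODES = 1


@dataclass(frozen=True)
class Server:
    """Hypothetical inventory record for one physical server."""
    hostname: str
    cpu_family: str  # e.g. "xeon"
    role: str        # "bootstrap" or "cluster"


def meets_minimum_pod(inventory: list[Server]) -> bool:
    """Return True if the inventory satisfies the proposed minimum POD."""
    xeon = [s for s in inventory if s.cpu_family.lower() == "xeon"]
    bootstrap = sum(1 for s in xeon if s.role == "bootstrap")
    cluster = sum(1 for s in xeon if s.role == "cluster")
    return bootstrap >= MIN_BOOTSTRAP_NODES and cluster >= MIN_CLUSTER_SERVERS


if __name__ == "__main__":
    pod = [Server("boot-0", "xeon", "bootstrap")] + [
        Server(f"node-{i}", "xeon", "cluster") for i in range(4)
    ]
    print(meets_minimum_pod(pod))       # True
    print(meets_minimum_pod(pod[:-1]))  # False: only 3 cluster servers
```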

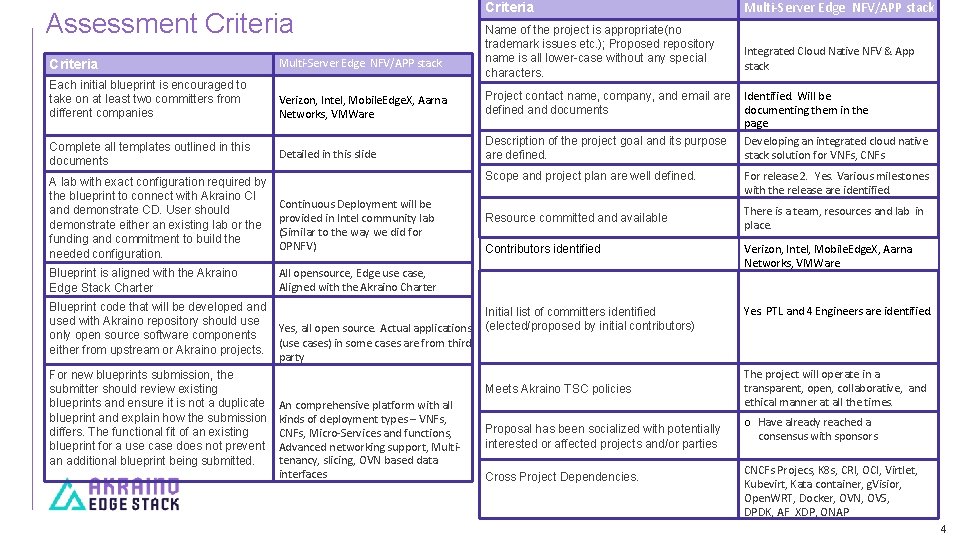

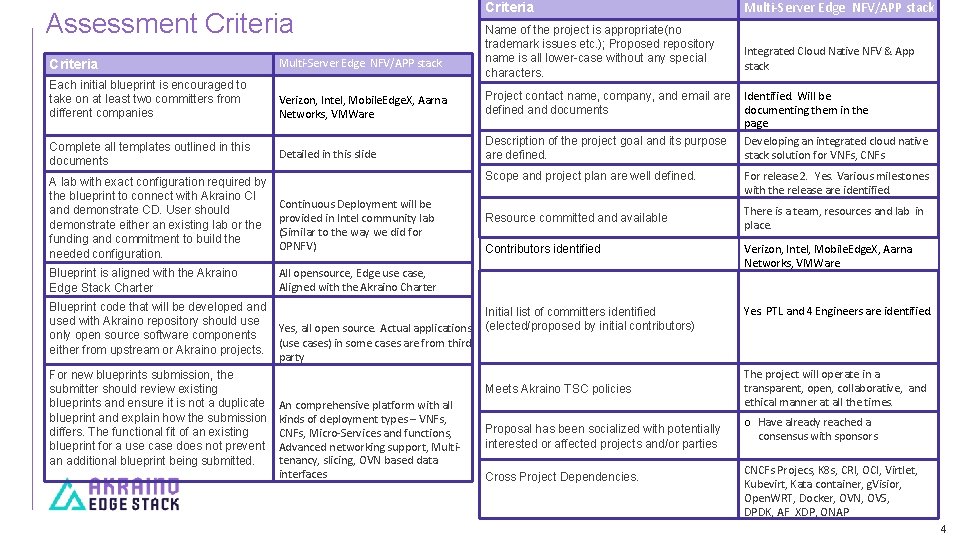

Assessment Criteria

| Criteria | Multi-Server Edge NFV/APP stack |
|---|---|
| Name of the project is appropriate (no trademark issues, etc.); proposed repository name is all lower-case without any special characters. | Integrated Cloud Native NFV & App stack |
| Project contact name, company, and email are defined and documented. | Identified. Will be documenting them in the page. |
| Description of the project goal and its purpose are defined. | Developing an integrated cloud native stack solution for VNFs and CNFs. |
| Scope and project plan are well defined. | For release 2. Yes. Various milestones within the release are identified. |
| Resource committed and available | There is a team, resources and a lab in place. |
| Contributors identified | Verizon, Intel, MobiledgeX, Aarna Networks, VMware |
| Initial list of committers identified (elected/proposed by initial contributors) | Yes. PTL and 4 engineers are identified. |
| Meets Akraino TSC policies | The project will operate in a transparent, open, collaborative, and ethical manner at all times. |
| Proposal has been socialized with potentially interested or affected projects and/or parties | Have already reached a consensus with sponsors. |
| Cross Project Dependencies | CNCF projects, K8s, CRI, OCI, Virtlet, KubeVirt, Kata Containers, gVisor, OpenWRT, Docker, OVN, OVS, DPDK, AF_XDP, ONAP |

| Criteria | Multi-Server Edge NFV/APP stack |
|---|---|
| Each initial blueprint is encouraged to take on at least two committers from different companies. | Verizon, Intel, MobiledgeX, Aarna Networks, VMware |
| Complete all templates outlined in this document. | Detailed in this slide |
| A lab with exact configuration required by the blueprint to connect with Akraino CI and demonstrate CD. User should demonstrate either an existing lab or the funding and commitment to build the needed configuration. | Continuous Deployment will be provided in the Intel community lab (similar to the way we did for OPNFV). |
| Blueprint is aligned with the Akraino Edge Stack Charter. | All open source, edge use case, aligned with the Akraino Charter. |
| Blueprint code that will be developed and used with Akraino repository should use only open source software components either from upstream or Akraino projects. | Yes, all open source. Actual applications (use cases) in some cases are from third parties. |
| For new blueprints submission, the submitter should review existing blueprints and ensure it is not a duplicate blueprint and explain how the submission differs. The functional fit of an existing blueprint for a use case does not prevent an additional blueprint being submitted. | A comprehensive platform with all kinds of deployment types (VNFs, CNFs, micro-services and functions), advanced networking support, multitenancy, slicing, and OVN-based data interfaces. |

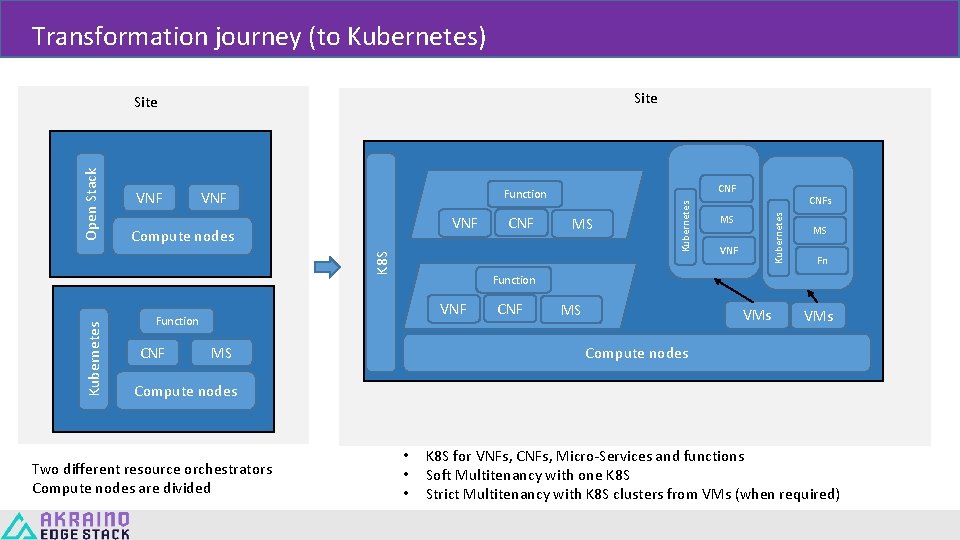

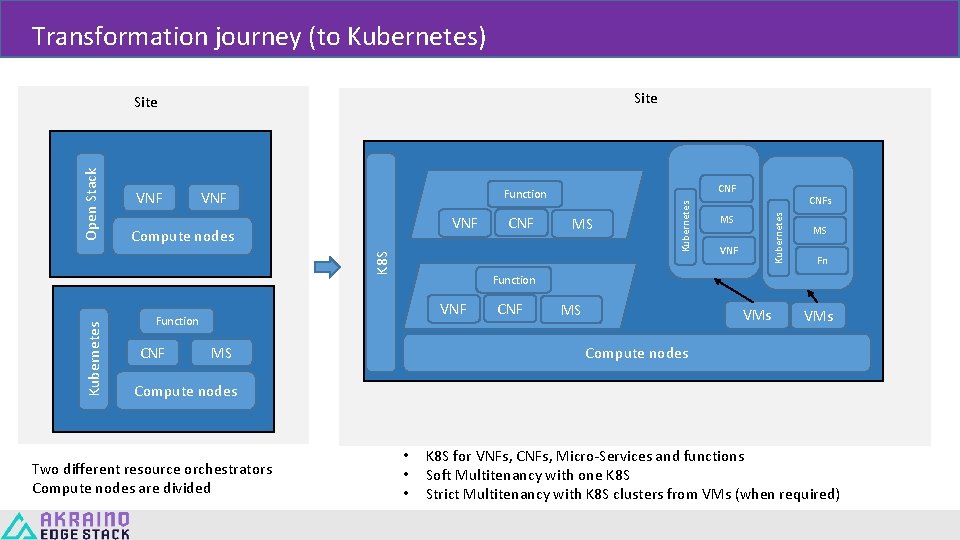

Transformation journey (to Kubernetes)

Current site: two different resource orchestrators (OpenStack for VNFs/VMs and Kubernetes for micro-services, CNFs and functions), with compute nodes divided between them.

Target site:
- K8s for VNFs, CNFs, micro-services and functions
- Soft multitenancy with one K8s cluster
- Strict multitenancy with K8s clusters built from VMs (when required)
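The soft vs. strict multitenancy choice above reduces to a placement decision: a namespace in the one shared site cluster, or a dedicated Kubernetes cluster built from VMs. The `Tenant` type, the `requires_isolation` flag and the cluster naming scheme below are hypothetical; this sketches the idea only and is not an ONAP or KuD API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tenant:
    name: str
    requires_isolation: bool  # hypothetical flag: True when strict multitenancy is needed


@dataclass(frozen=True)
class Placement:
    mode: str       # "soft" or "strict"
    cluster: str    # target Kubernetes cluster
    namespace: str  # namespace inside that cluster


def place_tenant(tenant: Tenant, shared_cluster: str = "site-k8s") -> Placement:
    """Soft multitenancy: one namespace per tenant in the shared site cluster.
    Strict multitenancy: a dedicated cluster (built from VMs) for the tenant."""
    if tenant.requires_isolation:
        return Placement("strict", f"{tenant.name}-vm-cluster", "default")
    return Placement("soft", shared_cluster, tenant.name)


if __name__ == "__main__":
    print(place_tenant(Tenant("acme", requires_isolation=False)))
    print(place_tenant(Tenant("bank", requires_isolation=True)))
```

Keeping the decision in one function makes the "when required" condition explicit; in practice the isolation criterion would come from tenant policy.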

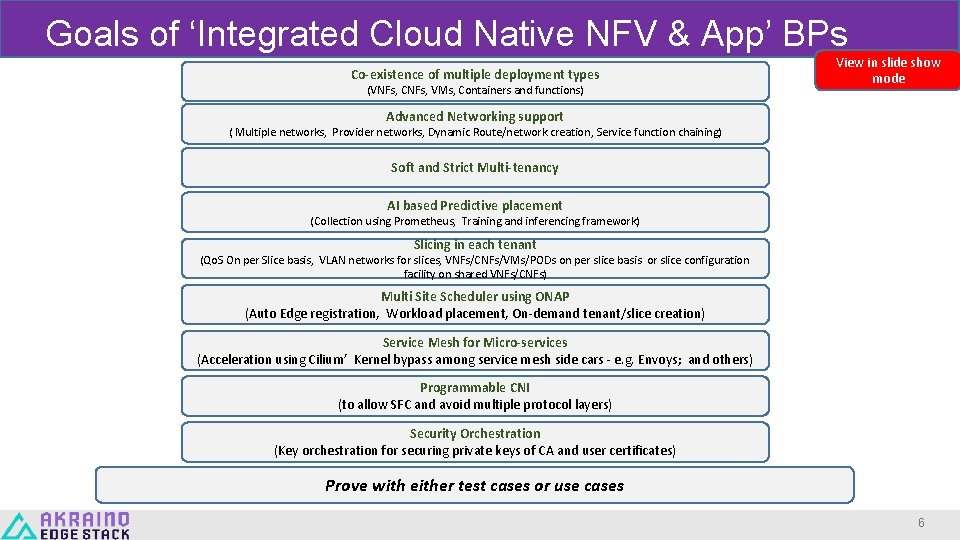

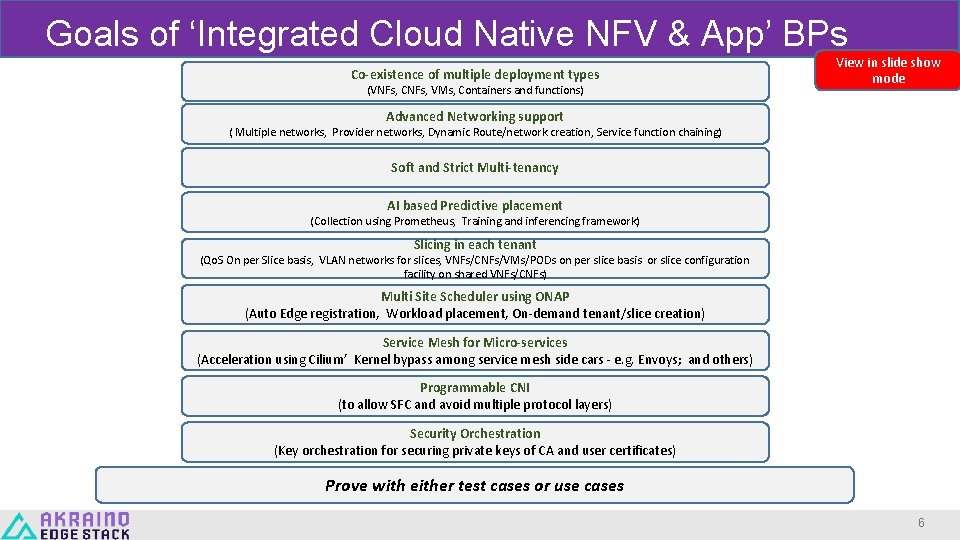

Goals of the 'Integrated Cloud Native NFV & App' blueprints
- Co-existence of multiple deployment types (VNFs, CNFs, VMs, containers and functions)
- Advanced networking support (multiple networks, provider networks, dynamic route/network creation, service function chaining)
- Soft and strict multitenancy
- AI-based predictive placement (collection using Prometheus, training and inferencing framework)
- Slicing in each tenant (QoS on a per-slice basis, VLAN networks for slices, VNFs/CNFs/VMs/pods on a per-slice basis or a slice configuration facility on shared VNFs/CNFs)
- Multi-site scheduler using ONAP (auto edge registration, workload placement, on-demand tenant/slice creation)
- Service mesh for micro-services (acceleration using Cilium's kernel bypass among service mesh sidecars, e.g. Envoy, and others)
- Programmable CNI (to allow SFC and avoid multiple protocol layers)
- Security orchestration (key orchestration for securing private keys of CA and user certificates)

Prove each goal with either test cases or use cases.
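One way to picture the "slicing in each tenant" goal (a VLAN network and a QoS setting per slice) is as a small data model with a consistency check. This is a hypothetical sketch: the `Slice` fields, the bandwidth cap as the QoS parameter, and the rule that VLAN IDs must be unique across one shared provider network are assumptions, not the blueprint's design.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slice:
    """Hypothetical per-tenant slice: its own VLAN network and a QoS limit."""
    tenant: str
    name: str
    vlan_id: int
    max_mbps: int  # illustrative QoS parameter (bandwidth cap)


def validate_slices(slices: list[Slice]) -> list[str]:
    """Return a list of problems; an empty list means the slice plan is consistent.

    Assumption: all slices share one L2 provider network, so VLAN IDs must be
    valid 802.1Q IDs (1-4094) and must not be reused by two slices.
    """
    problems = []
    owner_of_vlan: dict[int, str] = {}
    for s in slices:
        label = f"{s.tenant}/{s.name}"
        if not 1 <= s.vlan_id <= 4094:
            problems.append(f"{label}: VLAN {s.vlan_id} out of range")
        elif s.vlan_id in owner_of_vlan:
            problems.append(f"{label}: VLAN {s.vlan_id} already used by {owner_of_vlan[s.vlan_id]}")
        else:
            owner_of_vlan[s.vlan_id] = label
        if s.max_mbps <= 0:
            problems.append(f"{label}: QoS limit must be positive")
    return problems


if __name__ == "__main__":
    plan = [
        Slice("t1", "video", 100, 500),
        Slice("t1", "iot", 101, 50),
        Slice("t2", "video", 100, 500),
    ]
    print(validate_slices(plan))  # ['t2/video: VLAN 100 already used by t1/video']
```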

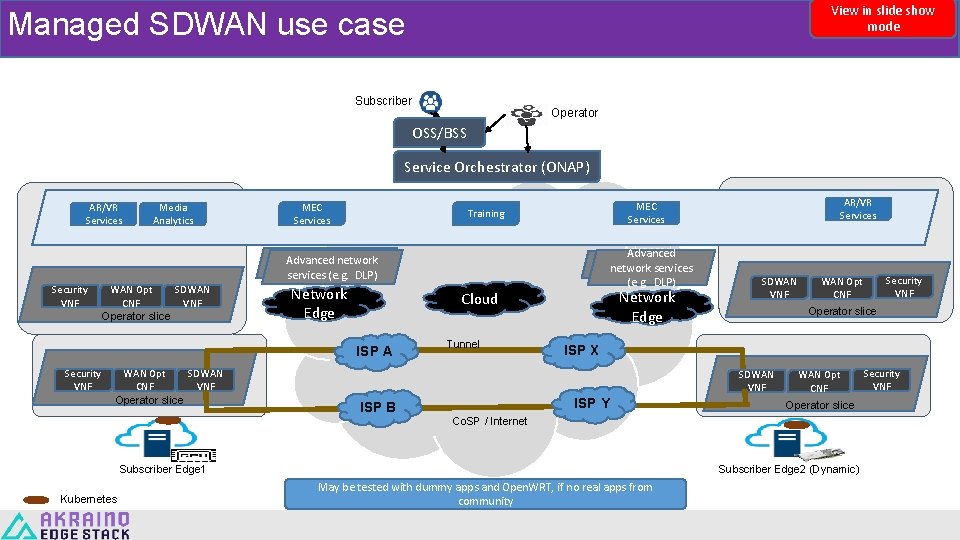

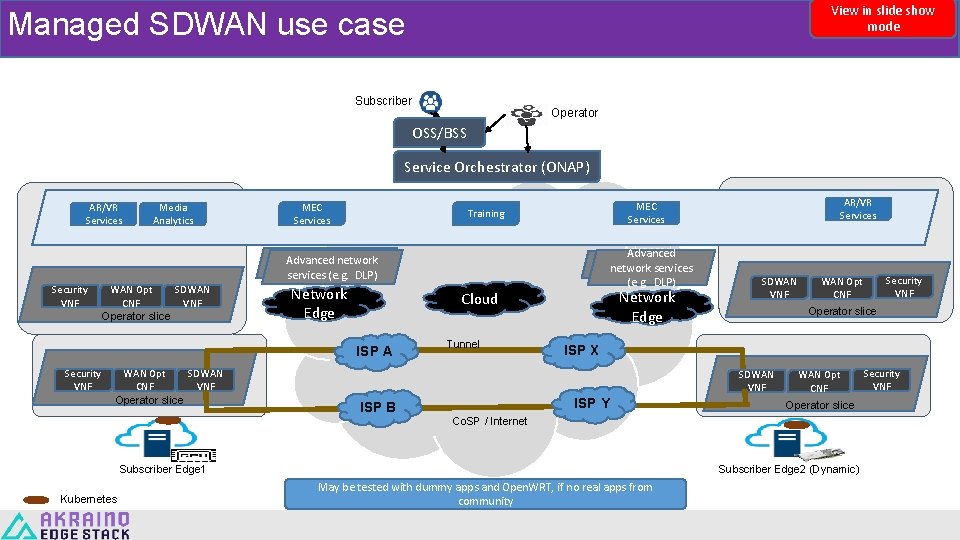

Managed SDWAN use case

*Figure: Managed SDWAN use case. Components shown: subscriber; operator OSS/BSS; service orchestrator (ONAP); a network edge cloud with operator slices (SDWAN VNF, security VNF, WAN Opt CNF) and a subscriber slice (AR/VR services, MEC services, media analytics, training, advanced network services such as DLP); ISPs A, B, X and Y with tunnels; CoSP/Internet; Subscriber Edge 1 and Subscriber Edge 2 (dynamic), running Kubernetes with a security VNF and a WAN Opt CNF.*

May be tested with dummy apps and OpenWRT if no real apps are available from the community.

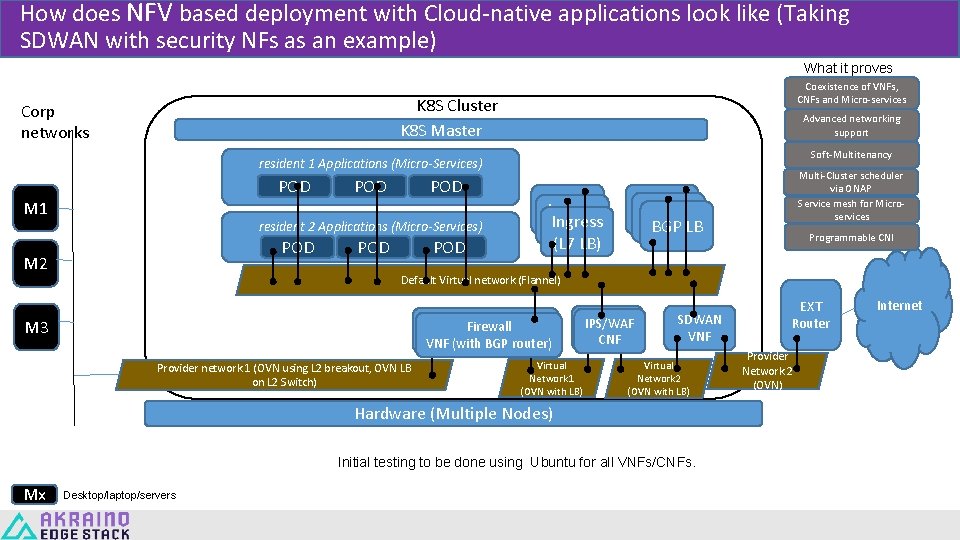

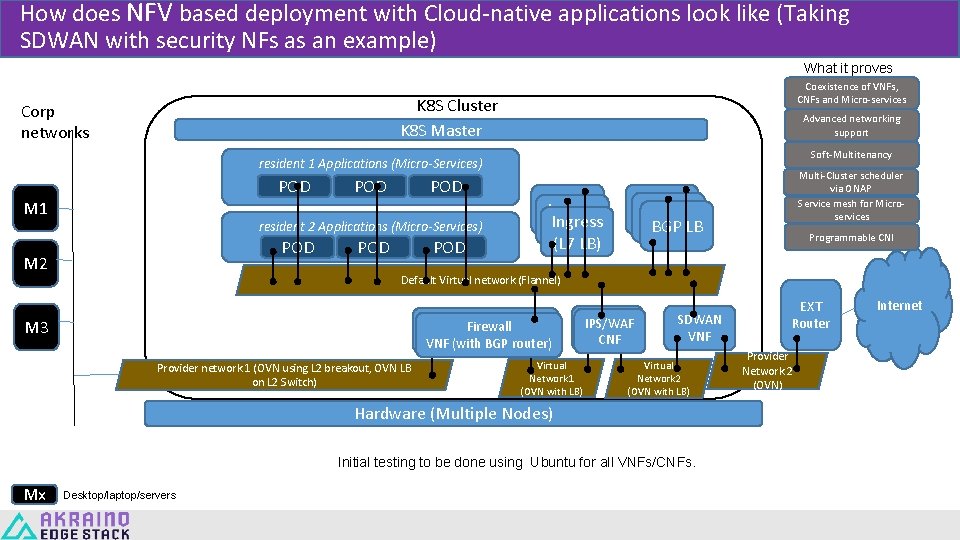

What does an NFV-based deployment with cloud-native applications look like? (Taking SDWAN with security NFs as an example)

What it proves:
- Coexistence of VNFs, CNFs and micro-services
- Advanced networking support
- Soft multitenancy

*Figure: Components shown: a K8s cluster (K8s master, hardware with multiple nodes); resident 1 and resident 2 applications (micro-service pods); Ingress (L7 LB) and BGP LB; programmable CNI; default virtual network (Flannel); firewall VNF (with BGP router); IPS/WAF CNF; SDWAN VNF; Virtual Network 1 and Virtual Network 2 (OVN with LB); Provider Network 1 (OVN using L2 breakout, OVN LB on L2 switch); Provider Network 2 (OVN); external router, Internet, corp networks and desktops/laptops/servers; multi-cluster scheduler via ONAP; service mesh for micro-services.*

Initial testing to be done using Ubuntu for all VNFs/CNFs.
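In this kind of deployment a CNF such as the IPS/WAF is attached to the default Flannel network plus additional OVN networks. With Multus (one of the NFV-specific components in this stack), extra interfaces are requested through the `k8s.v1.cni.cncf.io/networks` pod annotation. The sketch only builds the pod manifest as a Python dict; the network names (`virtual-net-1`, `provider-net-1`), interface names and image are hypothetical, and matching NetworkAttachmentDefinition objects are assumed to exist.

```python
import json


def cnf_pod_manifest(name: str, image: str, networks: list[tuple[str, str]]) -> dict:
    """Build a Pod manifest that requests extra interfaces via Multus.

    `networks` is a list of (network_attachment_name, interface_name) pairs.
    The pod's default interface stays on the cluster default network
    (Flannel in this blueprint); each pair adds one more interface.
    """
    selections = [{"name": net, "interface": ifname} for net, ifname in networks]
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
            "name": name,
            "annotations": {
                # Network-selection annotation read by Multus (JSON list form).
                "k8s.v1.cni.cncf.io/networks": json.dumps(selections),
            },
        },
        "spec": {"containers": [{"name": name, "image": image}]},
    }


if __name__ == "__main__":
    pod = cnf_pod_manifest(
        "ips-waf-cnf",
        "example.org/ips-waf:latest",  # hypothetical image
        [("virtual-net-1", "net1"), ("provider-net-1", "net2")],  # hypothetical networks
    )
    print(json.dumps(pod, indent=2))
```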

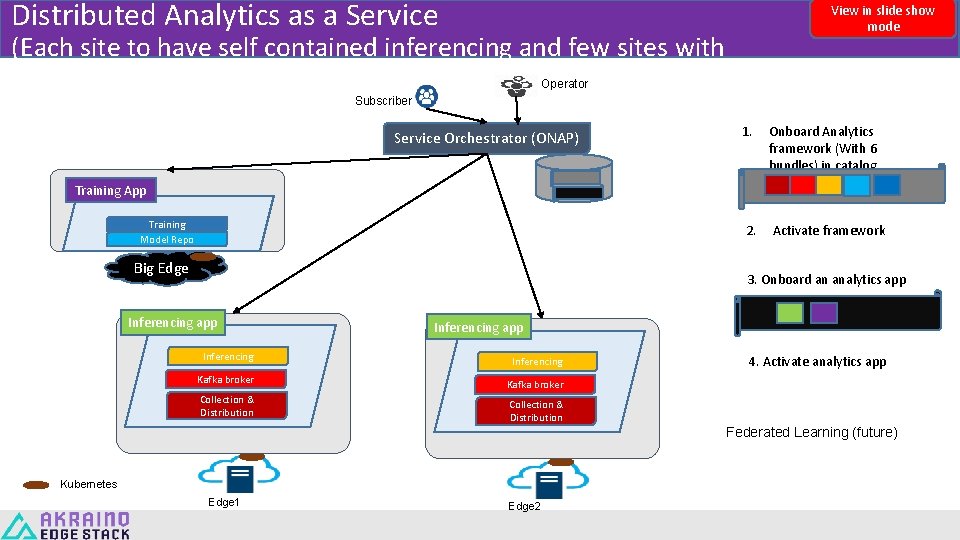

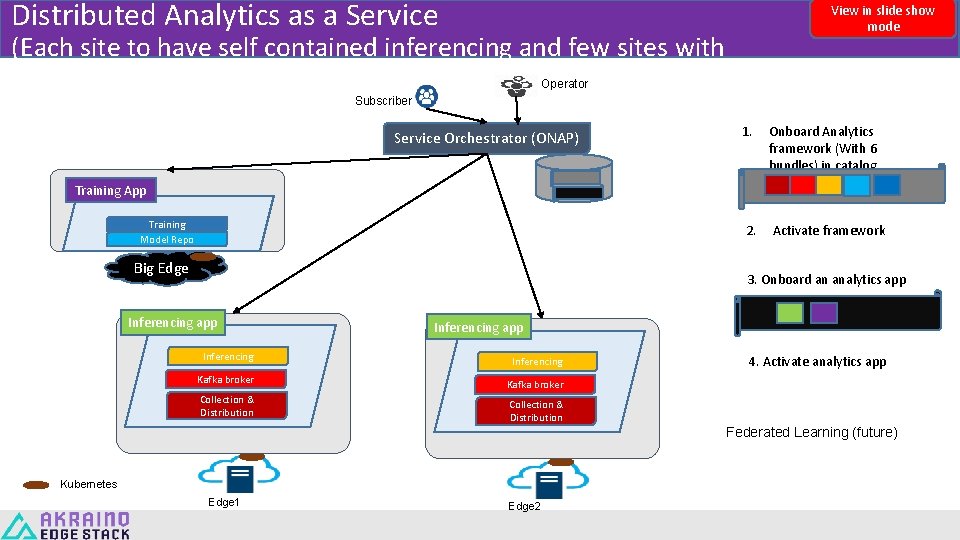

Distributed Analytics as a Service (each site has self-contained inferencing; a few sites also host training)

1. Onboard the analytics framework (with 6 bundles) in the catalog.
2. Activate the framework.
3. Onboard an analytics app.
4. Activate the analytics app.

*Figure: operator and subscriber use the service orchestrator (ONAP). Components shown: a "Big Edge" with the training app, training and model repo; Edge 1 and Edge 2 running Kubernetes with inferencing, a Kafka broker and collection & distribution. Federated learning is marked as future.*
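The placement rule for this use case (self-contained inferencing at every site, training only at a few sites) can be sketched as a planning function. Component names follow the slide labels; the function, its input format, and the requirement that at least one site hosts training are assumptions for illustration.

```python
# Components per site, named after the slide labels (grouping is an interpretation).
EDGE_COMPONENTS = ["inferencing", "kafka-broker", "collection-distribution"]
TRAINING_COMPONENTS = ["training-app", "model-repo"]


def analytics_plan(sites: dict[str, bool]) -> dict[str, list[str]]:
    """Map each site name to the components to deploy there.

    `sites` maps a site to True if it is a training ("big edge") site.
    Every site gets self-contained inferencing; training sites additionally
    get the training app and the model repository.
    """
    if not any(sites.values()):
        raise ValueError("at least one site must host training")
    return {
        site: EDGE_COMPONENTS + (TRAINING_COMPONENTS if is_training else [])
        for site, is_training in sites.items()
    }


if __name__ == "__main__":
    plan = analytics_plan({"big-edge": True, "edge-1": False, "edge-2": False})
    for site, components in plan.items():
        print(site, components)
```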

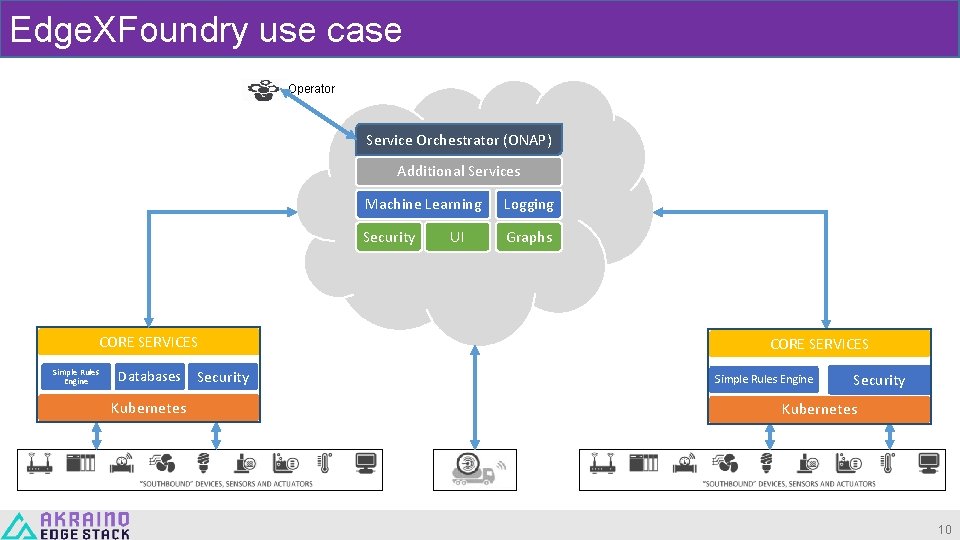

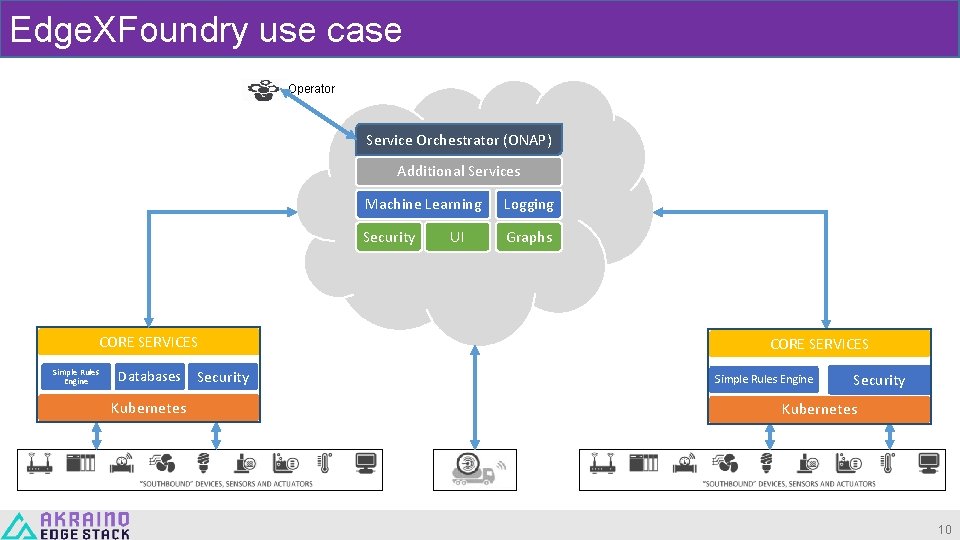

EdgeX Foundry use case

*Figure: the operator deploys EdgeX Foundry through the service orchestrator (ONAP) onto Kubernetes. Core services shown: simple rules engine, databases, security. Additional services shown: machine learning, logging, security, graphs, UI.*

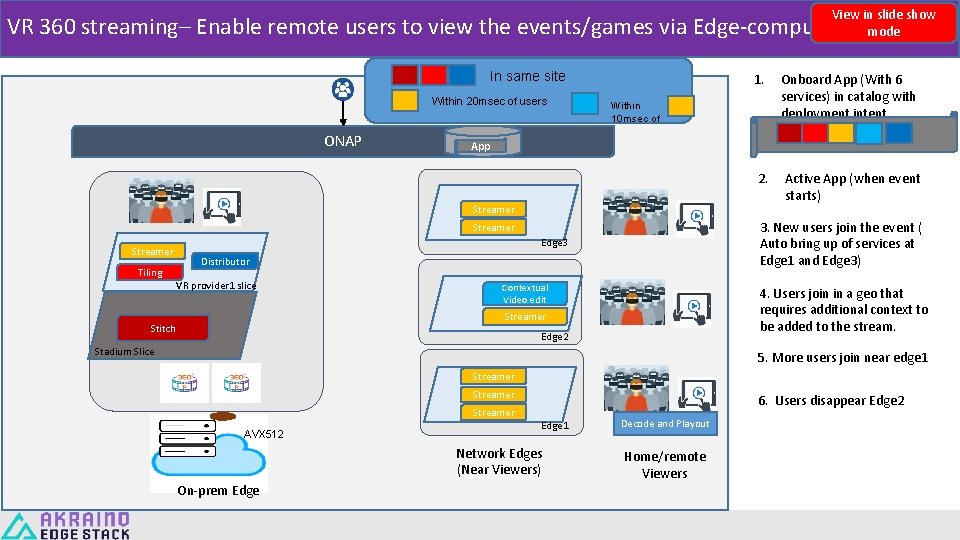

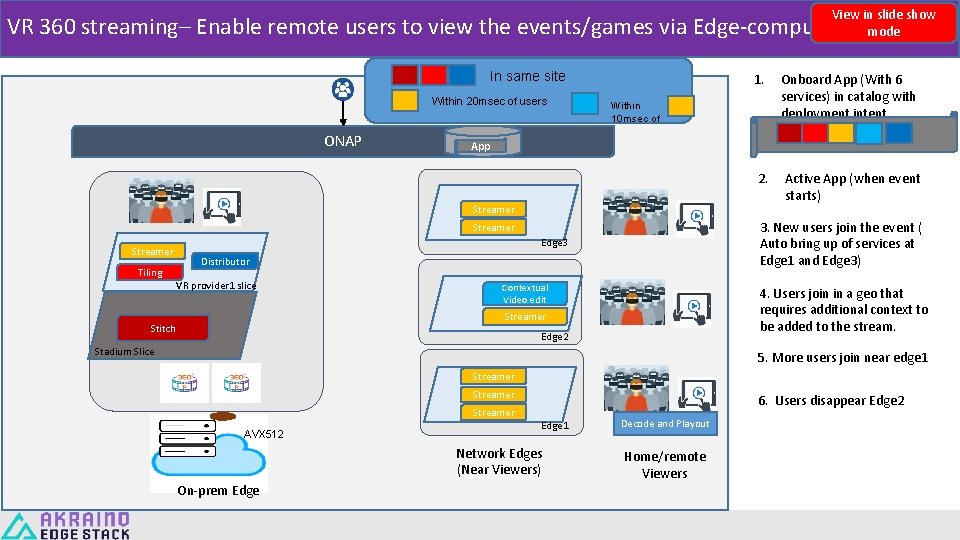

VR 360 streaming: enable remote users to view events/games via edge computing

1. Onboard the app (with 6 services) in the catalog with a deployment intent.
2. Activate the app (when the event starts).
3. New users join the event (automatic bring-up of services at Edge 1 and Edge 3).
4. Users join in a geography that requires additional context to be added to the stream.
5. More users join near Edge 1.
6. Users disappear.

*Figure: ONAP orchestrates a stadium slice and a VR provider 1 slice across an on-prem edge and network edges near viewers (Edge 1, Edge 2, Edge 3). Services shown: streamer, stitch, tiling, distributor, contextual video edit, and decode and playout for home/remote viewers; the slide also marks AVX-512. Placement constraints shown: in the same site, within 10 ms of the streamer, and within 20 ms of users.*
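The "within 20 msec of users" constraint and the automatic bring-up of services at additional edges as users join can be illustrated with a simple nearest-edge assignment. This is a sketch under stated assumptions: the latency table is hypothetical measured input, and the greedy per-user choice is one possible policy, not ONAP's placement algorithm.

```python
USER_LATENCY_BUDGET_MS = 20.0  # "within 20 msec of users" (from the slide)


def edges_to_activate(
    latency_ms: dict[str, dict[str, float]],
    budget_ms: float = USER_LATENCY_BUDGET_MS,
) -> tuple[dict[str, str], list[str]]:
    """Assign each user to the lowest-latency edge within the budget.

    latency_ms[user][edge] is a measured latency (hypothetical input).
    Returns (user -> edge assignment, users no edge can serve within budget).
    The active edges are set(assignment.values()); an edge left with no
    users (step 6, users disappear) can be torn down.
    """
    assignment: dict[str, str] = {}
    unserved: list[str] = []
    for user, per_edge in latency_ms.items():
        candidates = {edge: ms for edge, ms in per_edge.items() if ms <= budget_ms}
        if candidates:
            assignment[user] = min(candidates, key=candidates.get)
        else:
            unserved.append(user)
    return assignment, unserved


if __name__ == "__main__":
    latency = {
        "u1": {"edge-1": 8.0, "edge-3": 15.0},
        "u2": {"edge-1": 30.0, "edge-3": 12.0},
        "u3": {"edge-1": 40.0, "edge-3": 35.0},
    }
    assignment, unserved = edges_to_activate(latency)
    print(assignment)  # {'u1': 'edge-1', 'u2': 'edge-3'}
    print(unserved)    # ['u3']
```

Users left in `unserved` are the signal that another edge would have to be brought up closer to them.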

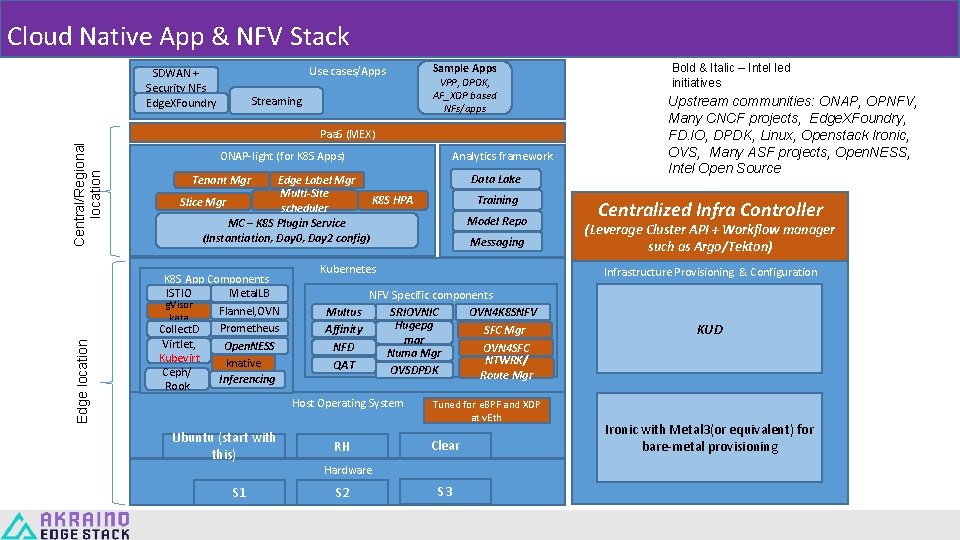

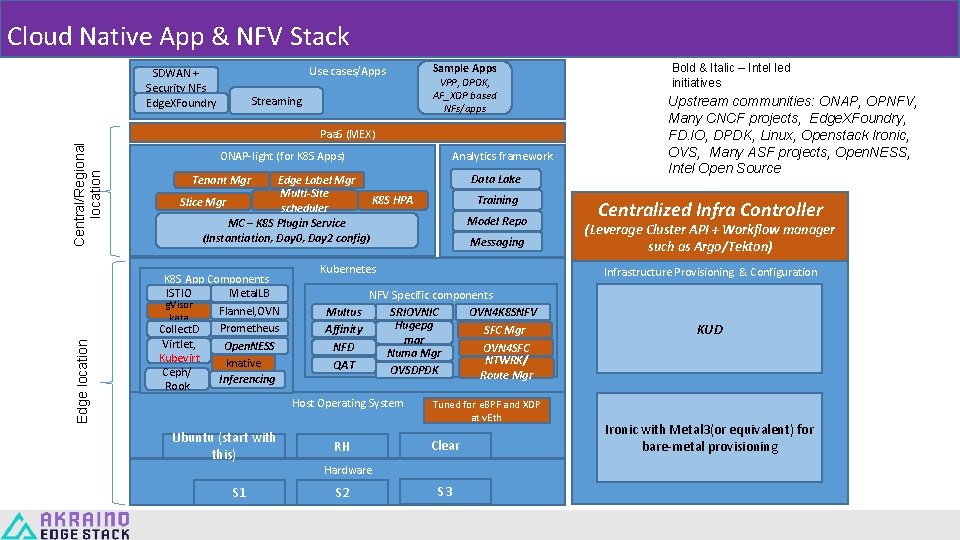

Cloud Native App & NFV Stack

- Use cases/apps: SDWAN + security NFs, EdgeX Foundry, streaming, sample apps, VPP/DPDK/AF_XDP-based NFs/apps
- Central/regional location:
  - PaaS (MEX)
  - ONAP-light (for K8s apps): Tenant Mgr, Edge Label Mgr, Multi-Site K8s scheduler, HPA, Slice Mgr, MC – K8s plugin service (instantiation, Day 0, Day 2 config)
  - Analytics framework: data lake, training
  - Centralized infra controller (leverage Cluster API + a workflow manager such as Argo/Tekton)
- Edge location:
  - K8s app components: Istio, MetalLB, gVisor, Kata, Flannel, OVN, Prometheus, collectd, Virtlet, KubeVirt, OpenNESS, Knative, Ceph/Rook, inferencing, model repo, messaging
  - NFV-specific components: Multus, SR-IOV NIC, OVN4K8sNFV, Hugepage Mgr, Affinity Mgr, SFC Mgr, NFD, OVN4SFC, NUMA Mgr, Network/Route Mgr, QAT, OVS-DPDK
  - Kubernetes
  - Infrastructure provisioning & configuration: KuD; Ironic with Metal3 (or equivalent) for bare-metal provisioning
  - Host operating system: Ubuntu (start with this), RH, Clear; tuned for eBPF and XDP at vEth
  - Hardware
- Upstream communities: ONAP, OPNFV, many CNCF projects, EdgeX Foundry, FD.io, DPDK, Linux, OpenStack Ironic, OVS, many ASF projects, OpenNESS, Intel Open Source

Legend (slide): bold & italic marks Intel-led initiatives.
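Several NFV-specific items in this stack (hugepages, CPU/NUMA affinity, SR-IOV NICs) surface in a workload as Kubernetes resource requests. The sketch builds such a pod spec using standard Kubernetes fields only; it does not model the blueprint's own Hugepage/NUMA managers, and the SR-IOV resource name is an assumption, since that name is defined by the SR-IOV device plugin configuration.

```python
from typing import Optional


def dpdk_pod(name: str, image: str, cpus: int, memory: str, hugepages_1gi: str,
             sriov_resource: Optional[str] = None) -> dict:
    """Pod spec for a DPDK-style packet-processing CNF.

    Requests equal limits with whole CPUs, so the pod gets the Guaranteed QoS
    class (needed for exclusive cores under the static CPU manager policy).
    Hugepages are requested as the `hugepages-1Gi` resource and mounted via
    an emptyDir volume with medium HugePages.
    """
    resources = {"cpu": str(cpus), "memory": memory, "hugepages-1Gi": hugepages_1gi}
    if sriov_resource:
        # Hypothetical: the real name comes from the SR-IOV device plugin config.
        resources[sriov_resource] = "1"
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [{
                "name": name,
                "image": image,
                "resources": {"requests": dict(resources), "limits": dict(resources)},
                "volumeMounts": [{"name": "hugepages", "mountPath": "/dev/hugepages"}],
            }],
            "volumes": [{"name": "hugepages", "emptyDir": {"medium": "HugePages"}}],
        },
    }


if __name__ == "__main__":
    import json
    spec = dpdk_pod("vpp-cnf", "example.org/vpp:latest", 4, "8Gi", "4Gi",
                    sriov_resource="example.com/sriov_vf")  # hypothetical names
    print(json.dumps(spec, indent=2))
```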

BACKUP

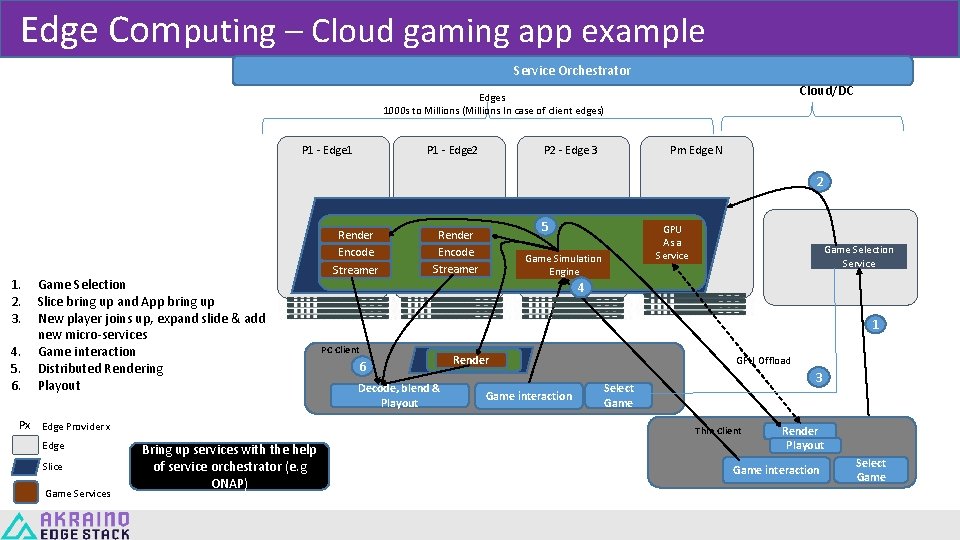

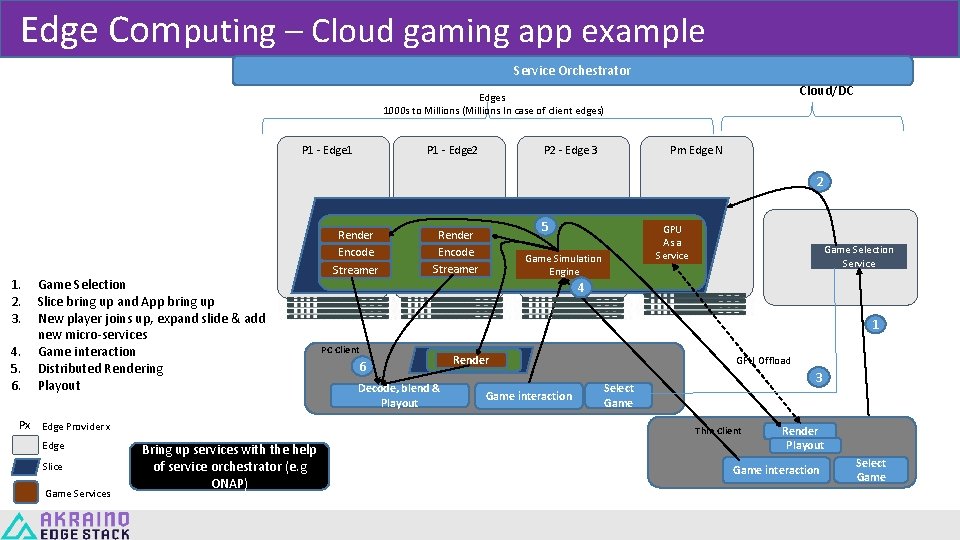

Edge Computing – Cloud gaming app example

1. Game selection
2. Slice bring-up and app bring-up
3. A new player joins: expand the slice and add new micro-services
4. Game interaction
5. Distributed rendering
6. Playout

*Figure: a service orchestrator (e.g. ONAP) brings up services across the cloud/DC and provider edges (1000s to millions of edges; millions in the case of client edges), labeled P1 - Edge 1, P1 - Edge 2, P2 - Edge 3 and Pm - Edge N, where Px Edge denotes a provider-x edge. Components shown: game simulation engine, game services, game selection service, a game slice with render, encode and streamer services, GPU as a service/GPU offload, a PC client (render; decode, blend & playout) and a thin client (select game, game interaction, playout).*
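Step 3 (a new player joins, so the slice expands and new micro-services are added) can be sketched as bookkeeping over which provider edges belong to a game slice. `GameSlice` and the per-edge service list (render, encode, streamer, taken from the slide labels) are hypothetical, and shrinking the slice when the last player at an edge leaves is an added assumption; a real deployment would hand these bring-up/tear-down lists to the service orchestrator.

```python
PER_EDGE_SERVICES = ("render", "encode", "streamer")  # per-edge services (slide labels)


class GameSlice:
    """Hypothetical view of one game session's slice across provider edges.

    Assumes each player joins once and leaves once.
    """

    def __init__(self, game: str) -> None:
        self.game = game
        self.players: dict[str, str] = {}            # player -> edge
        self.edges: dict[str, tuple[str, ...]] = {}  # edge -> services running there

    def join(self, player: str, edge: str) -> list[str]:
        """Add a player; return services newly brought up (empty if edge already in slice)."""
        self.players[player] = edge
        if edge in self.edges:
            return []
        self.edges[edge] = PER_EDGE_SERVICES
        return [f"{edge}/{svc}" for svc in PER_EDGE_SERVICES]

    def leave(self, player: str) -> list[str]:
        """Remove a player; return services torn down if their edge has no players left."""
        edge = self.players.pop(player)
        if edge in self.players.values():
            return []
        services = self.edges.pop(edge)
        return [f"{edge}/{svc}" for svc in services]


if __name__ == "__main__":
    s = GameSlice("demo")
    print(s.join("p1", "p1-edge-1"))  # brings up render/encode/streamer at p1-edge-1
    print(s.join("p2", "p1-edge-1"))  # [] (edge already in the slice)
    print(s.join("p3", "p2-edge-3"))  # expands the slice to p2-edge-3
```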

Thank you