

Homa: A Receiver-Driven Low-Latency Transport Protocol Using Network Priorities
Behnam Montazeri
Joint work with: Yilong Li, Mohammad Alizadeh, John Ousterhout
Aug. 22, 2018
Slide 1

Goals & Key Results
● Goal: a datacenter transport for low latency at high load
▪ Latency close to the hardware limit for short messages
▪ High network bandwidth utilization
▪ Practical design for commodity fabrics
● Key results: 100x faster than the best published testbed implementation
▪ 15 μs tail latency (99th percentile) for short messages at 80% load
▪ High bandwidth utilization: more than 90% of available bandwidth
● Key idea: effective use of network priorities
▪ Priorities dynamically assigned by receivers
▪ Receiver-driven packet scheduling
▪ Controlled overcommitment of the receiver's downlink
Aug. 22, 2018, Homa Transport, SIGCOMM 2018
Slide 2

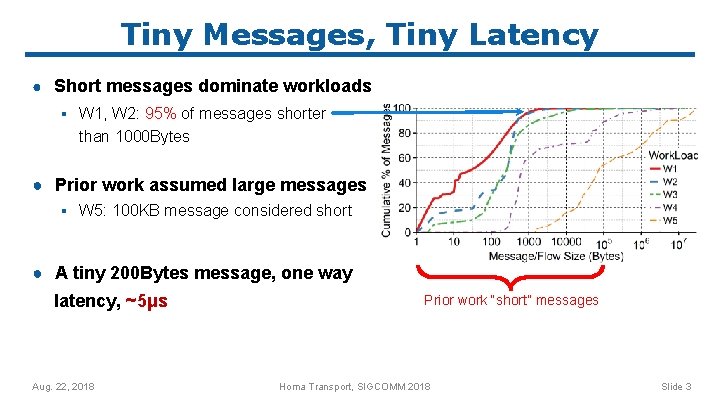

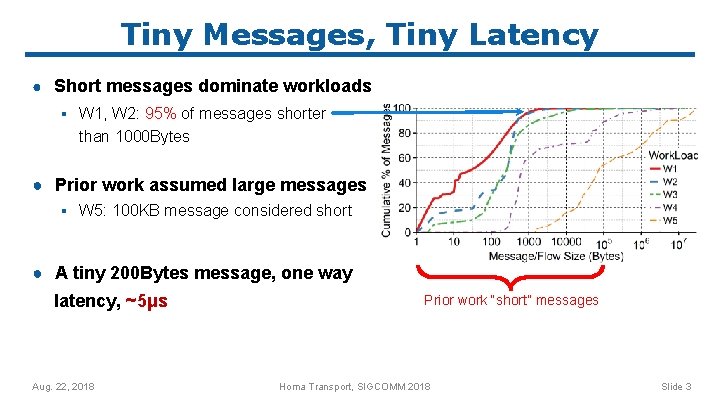

Tiny Messages, Tiny Latency
● Short messages dominate workloads
▪ W1, W2: 95% of messages are shorter than 1000 bytes
● Prior work assumed large messages
▪ W5: a 100 KB message is considered short
● One-way latency of a tiny 200-byte message: ~5 μs
(Figure label: prior work's “short” messages)
Slide 3

Near-Hardware Tail Latency Is Hard
● For tiny messages, small inefficiencies have a large impact
▪ 8 packets of queueing add 10 μs of latency at 10 Gbps → 3x the minimum completion time of a 200-byte message
● Such queues have a minor impact on larger messages
▪ A 100 KB message takes > 80 μs to transmit
Slide 4
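
The numbers on this slide follow from serialization arithmetic. The sketch below reproduces them under two assumptions the slide does not state: packets are full-size 1500-byte Ethernet frames, and "KB" means 1000 bytes.

```python
# Back-of-the-envelope check of the slide's numbers.
# Assumptions (not stated on the slide): 1500-byte packets, decimal KB.
LINK_BPS = 10e9       # 10 Gbps link
PACKET_BYTES = 1500   # assumed full-size Ethernet packet

def transmit_time_us(num_bytes: float, link_bps: float = LINK_BPS) -> float:
    """Time in microseconds to serialize num_bytes onto the link."""
    return num_bytes * 8 / link_bps * 1e6

# Queueing delay seen by a packet stuck behind 8 full packets.
queue_delay_us = transmit_time_us(8 * PACKET_BYTES)

# ~5 us one-way latency for a 200-byte message in an unloaded network (slide 3).
unloaded_200b_us = 5.0
inflation = (unloaded_200b_us + queue_delay_us) / unloaded_200b_us

# Serialization time of a 100 KB message.
msg_100kb_us = transmit_time_us(100_000)

print(f"8 queued packets: {queue_delay_us:.1f} us")
print(f"200 B message: {inflation:.1f}x its unloaded latency")
print(f"100 KB message: {msg_100kb_us:.0f} us on the wire")
```

This gives 9.6 μs of queueing (the slide's "10 μs"), about 2.9x the unloaded latency of the 200-byte message, and 80 μs of pure serialization for 100 KB, before counting headers.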

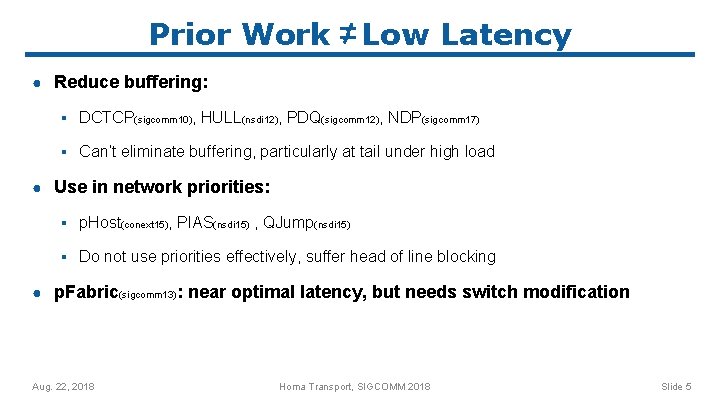

Prior Work on Low Latency
● Reduce buffering:
▪ DCTCP (SIGCOMM '10), HULL (NSDI '12), PDQ (SIGCOMM '12), NDP (SIGCOMM '17)
▪ Can't eliminate buffering, particularly at the tail under high load
● Use in-network priorities:
▪ pHost (CoNEXT '15), PIAS (NSDI '15), QJump (NSDI '15)
▪ Do not use priorities effectively; suffer head-of-line blocking
● pFabric (SIGCOMM '13): near-optimal latency, but needs switch modifications
Slide 5

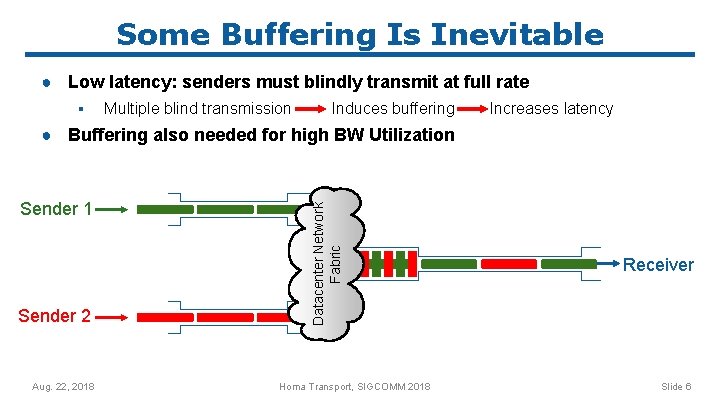

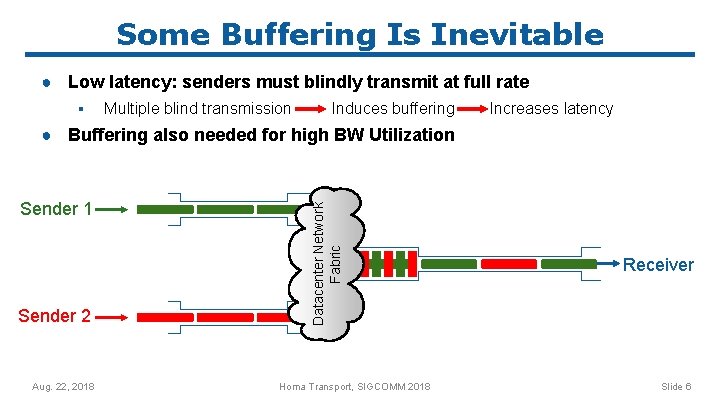

Some Buffering Is Inevitable
● Low latency: senders must transmit blindly at full rate
▪ Multiple blind transmissions → induce buffering → increase latency
● Buffering is also needed for high bandwidth utilization
(Figure: Sender 1 and Sender 2 transmit through the datacenter network fabric to one receiver)
Slide 6
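
Why blind transmission builds a queue can be seen in a toy slotted model. This is an illustrative assumption, not Homa's simulator: every sender and the receiver's downlink run at the same line rate, so each sender emits one packet per slot and the downlink forwards one packet per slot.

```python
def peak_queue(num_senders: int, packets_per_sender: int) -> int:
    """Peak downlink queue (in packets) when senders transmit blindly.

    Slotted model (illustrative assumption): every sender emits one packet
    per slot at full line rate; the receiver's downlink drains one per slot.
    """
    remaining = [packets_per_sender] * num_senders
    queue = peak = 0
    while any(remaining) or queue:
        # Every sender that still has data transmits one packet this slot.
        queue += sum(1 for r in remaining if r > 0)
        remaining = [max(r - 1, 0) for r in remaining]
        # The downlink forwards one packet per slot.
        if queue > 0:
            queue -= 1
        peak = max(peak, queue)
    return peak

for senders in (1, 2, 3):
    print(senders, "sender(s):", peak_queue(senders, packets_per_sender=8),
          "packets queued at peak")
```

A single sender never queues, while each additional blind sender adds 8 packets of buffering at the peak. With 1500-byte packets at 10 Gbps, 8 packets is roughly the 10 μs of extra latency discussed on slide 4.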

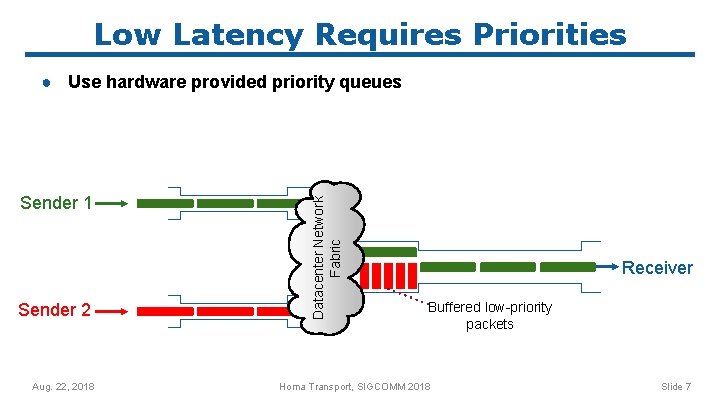

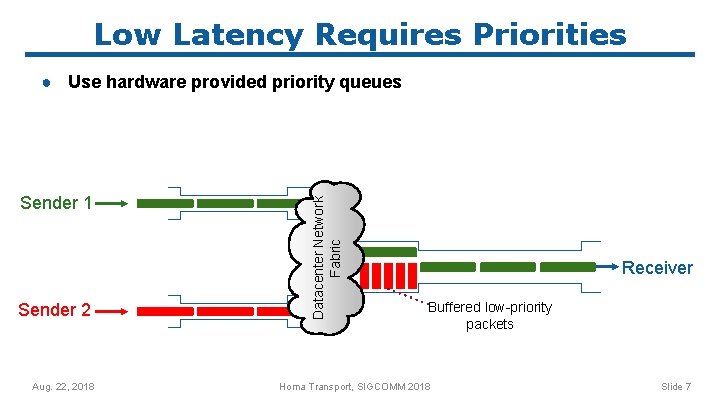

Low Latency Requires Priorities
● Use hardware-provided priority queues
(Figure: Sender 1 and Sender 2 transmit through the datacenter network fabric to the receiver; low-priority packets are buffered)
Slide 7
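
A strict-priority egress port, the kind of hardware queue this slide relies on, can be modeled in a few lines. The class below is a hypothetical model, not switch code: level 7 is the highest priority (mirroring P7 to P0 on later slides), and the port always serves the highest non-empty queue, FIFO within a level.

```python
from collections import deque
from typing import Deque, List, Optional

class StrictPriorityPort:
    """Hypothetical model of a switch egress port with strict-priority queues."""

    def __init__(self, num_levels: int = 8) -> None:
        self.queues: List[Deque[str]] = [deque() for _ in range(num_levels)]

    def enqueue(self, packet: str, priority: int) -> None:
        self.queues[priority].append(packet)

    def dequeue(self) -> Optional[str]:
        # Scan from the highest level down; FIFO within each level.
        for queue in reversed(self.queues):
            if queue:
                return queue.popleft()
        return None

port = StrictPriorityPort()
for i in range(3):
    port.enqueue(f"long-{i}", priority=0)   # buffered low-priority packets
port.enqueue("short-0", priority=7)         # arrives last but bypasses them
print([port.dequeue() for _ in range(4)])
```

The short message's packet leaves first even though it arrived after three buffered low-priority packets; that bypass is what lets buffering coexist with low latency.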

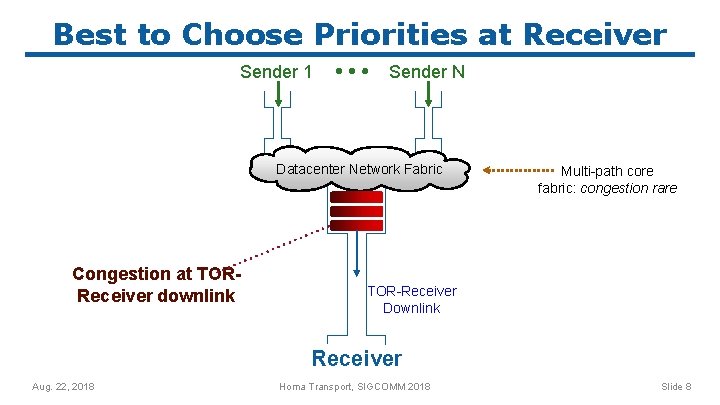

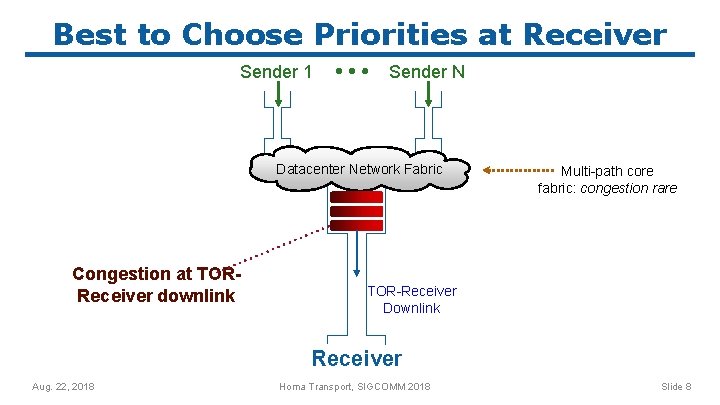

Best to Choose Priorities at Receiver
● Multi-path core fabric: congestion is rare
● Congestion occurs at the TOR-to-receiver downlink
(Figure: Senders 1 to N send through the datacenter network fabric to the receiver's TOR)
Slide 8

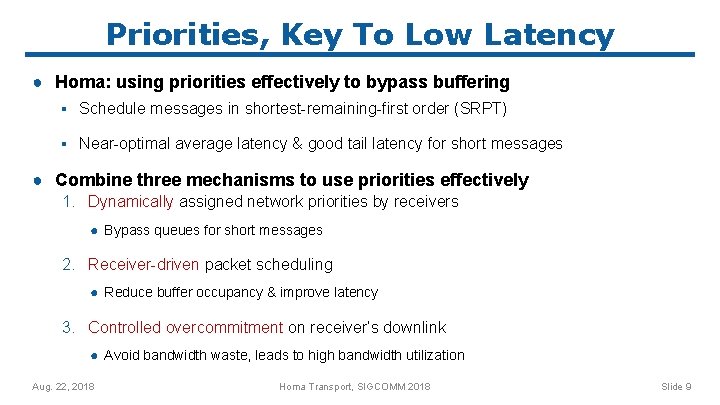

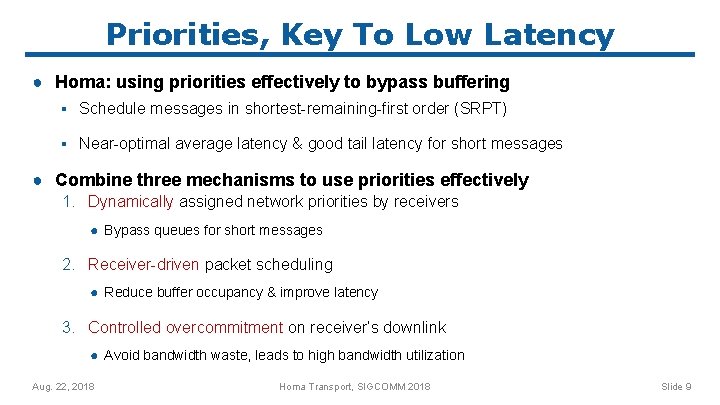

Priorities, Key to Low Latency
● Homa uses priorities effectively to bypass buffering
▪ Schedule messages in shortest-remaining-processing-time-first (SRPT) order
▪ Near-optimal average latency and good tail latency for short messages
● Combine three mechanisms to use priorities effectively:
1. Priorities dynamically assigned by receivers
▪ Short messages bypass queues
2. Receiver-driven packet scheduling
▪ Reduces buffer occupancy and improves latency
3. Controlled overcommitment of the receiver's downlink
▪ Avoids wasted bandwidth, leading to high bandwidth utilization
Slide 9
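
The benefit of SRPT over first-come-first-served can be shown on a toy single link that carries one packet per time slot. The function and workload below are hypothetical illustrations of the SRPT policy, not Homa's scheduler.

```python
def message_latencies(messages, policy):
    """Latency of each message on a single link, one packet per time slot.

    messages: list of (arrival_slot, size_in_packets); policy: "srpt" or "fifo".
    """
    remaining = [size for _, size in messages]
    finish = [None] * len(messages)
    t = 0
    while None in finish:
        ready = [i for i, (arrival, _) in enumerate(messages)
                 if arrival <= t and finish[i] is None]
        if ready:
            if policy == "srpt":
                # Fewest remaining packets first (preemptive); ties by arrival.
                i = min(ready, key=lambda j: (remaining[j], messages[j][0], j))
            else:
                # First come, first served.
                i = min(ready, key=lambda j: (messages[j][0], j))
            remaining[i] -= 1
            if remaining[i] == 0:
                finish[i] = t + 1
        t += 1
    return [finish[i] - messages[i][0] for i in range(len(messages))]

workload = [(0, 8), (2, 1), (3, 2)]   # one long message, then two short ones
for policy in ("fifo", "srpt"):
    lat = message_latencies(workload, policy)
    print(policy, lat, "mean =", round(sum(lat) / len(lat), 2))
```

FIFO yields latencies [8, 7, 8] (mean 7.67 slots); SRPT yields [11, 1, 2] (mean 4.67). The long message pays 3 extra slots so that both short messages finish almost immediately, which is the trade SRPT makes.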

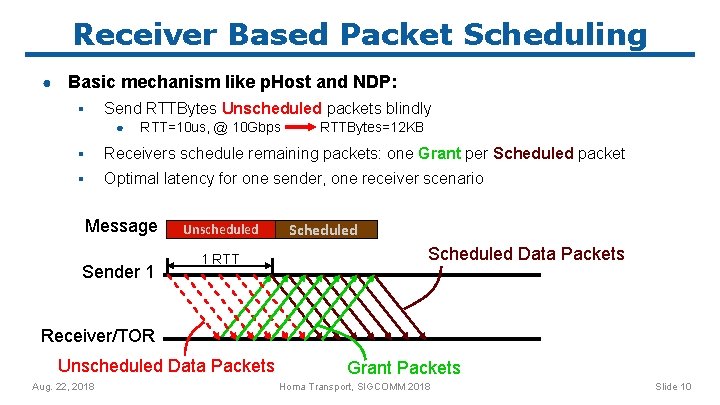

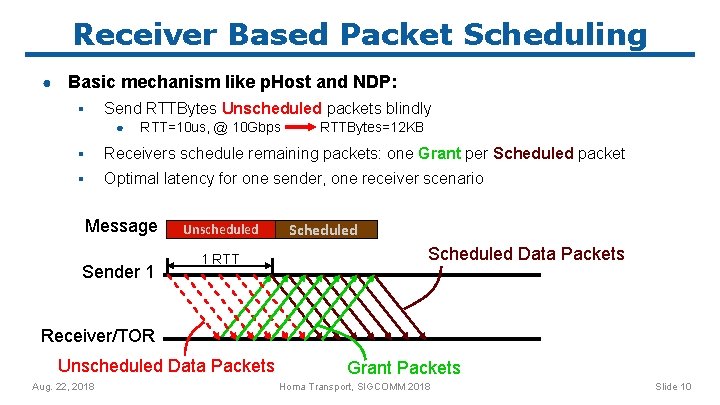

Receiver-Based Packet Scheduling
● Basic mechanism, like pHost and NDP:
▪ Send RTTBytes of unscheduled packets blindly (RTT = 10 μs at 10 Gbps → RTTBytes ≈ 12 KB)
▪ Receivers schedule the remaining packets: one grant per scheduled packet
▪ Optimal latency for the one-sender, one-receiver scenario
(Figure: Sender 1 sends a message to the receiver/TOR; unscheduled data packets cover the first RTT, after which grant packets from the receiver trigger scheduled data packets)
Slide 10
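
RTTBytes and the unscheduled/scheduled split can be computed directly. This is a sketch: `plan_message` and the 1460-byte per-packet payload are hypothetical, and for simplicity the unscheduled portion is not rounded to whole packets as a real implementation might do.

```python
def rtt_bytes(rtt_ns: int, link_gbps: int) -> int:
    """Bytes a sender can transmit in one round trip (1 Gbps = 1 bit per ns)."""
    return rtt_ns * link_gbps // 8

def ceil_div(a: int, b: int) -> int:
    return -(-a // b)

def plan_message(size: int, budget: int, payload: int = 1460):
    """Split a message into blind (unscheduled) and granted (scheduled) packets.

    budget is RTTBytes; payload is a hypothetical per-packet payload size.
    Returns (unscheduled_packets, scheduled_packets). The receiver sends one
    grant per scheduled packet.
    """
    unscheduled = min(size, budget)
    scheduled = size - unscheduled
    return ceil_div(unscheduled, payload), ceil_div(scheduled, payload)

RTT_BYTES = rtt_bytes(rtt_ns=10_000, link_gbps=10)
print(RTT_BYTES)                        # bytes sent blindly per message
print(plan_message(200, RTT_BYTES))     # tiny message: entirely unscheduled
print(plan_message(40_000, RTT_BYTES))  # larger message: needs grants
```

With a 10 μs RTT at 10 Gbps, RTTBytes is 12,500 bytes (the slide's "≈12 KB"). A 200-byte message needs no grants at all, while a 40 KB message sends 9 packets blindly and waits for 19 grants.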

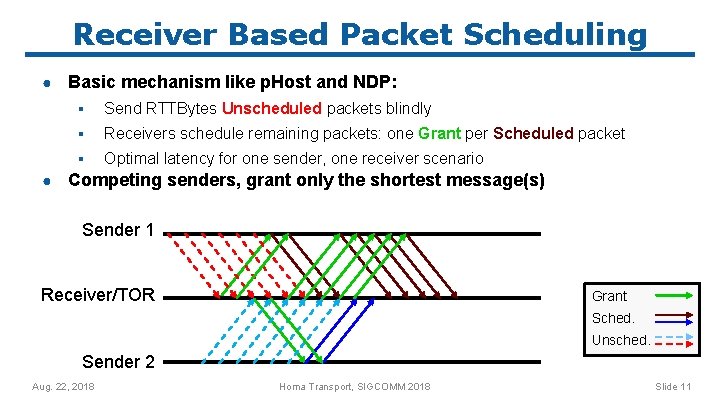

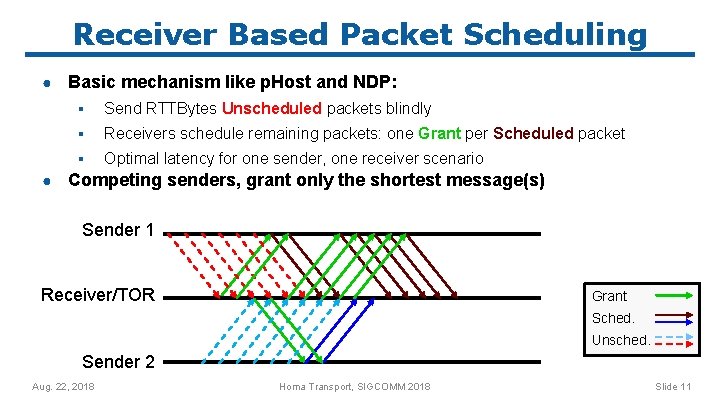

Receiver-Based Packet Scheduling
● Basic mechanism, like pHost and NDP:
▪ Send RTTBytes of unscheduled packets blindly
▪ Receivers schedule the remaining packets: one grant per scheduled packet
▪ Optimal latency for the one-sender, one-receiver scenario
● Competing senders: grant only the shortest message(s)
(Figure: Sender 1 and Sender 2 send to the receiver/TOR; legend: grant, scheduled, and unscheduled packets)
Slide 11
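
The receiver's rule for competing senders amounts to an SRPT choice over inbound messages. The sketch below assumes a hypothetical `InboundMessage` record holding the scheduled bytes not yet granted. It also ignores grant pacing: a real receiver keeps only about RTTBytes outstanding per message rather than issuing every grant up front.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class InboundMessage:
    sender: str
    ungranted_bytes: int  # scheduled bytes not yet granted (hypothetical field)

def next_grant(messages: List[InboundMessage]) -> Optional[InboundMessage]:
    """SRPT choice: the message with the fewest ungranted bytes, if any."""
    active = [m for m in messages if m.ungranted_bytes > 0]
    return min(active, key=lambda m: m.ungranted_bytes, default=None)

def grant_sequence(messages: List[InboundMessage], payload: int = 1460) -> List[str]:
    """Issue one grant per packet, always to the shortest remaining message."""
    order = []
    while (m := next_grant(messages)) is not None:
        m.ungranted_bytes -= min(payload, m.ungranted_bytes)
        order.append(m.sender)
    return order

order = grant_sequence([InboundMessage("Sender 1", 50_000),
                        InboundMessage("Sender 2", 8_000)])
print(order[:7])
```

All 6 grants for Sender 2's shorter message are issued before Sender 1 receives any. The walrus operator requires Python 3.8 or later.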

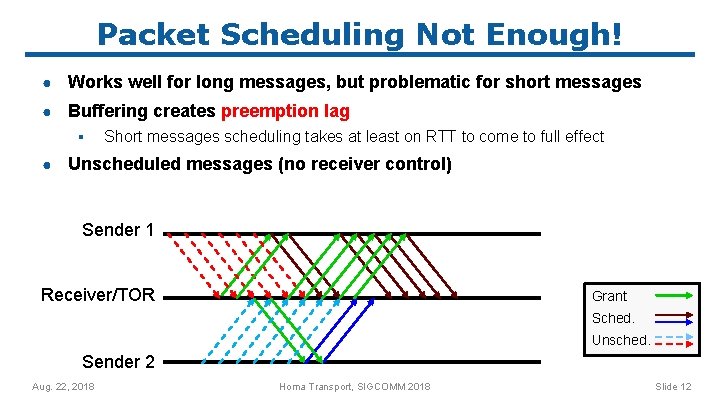

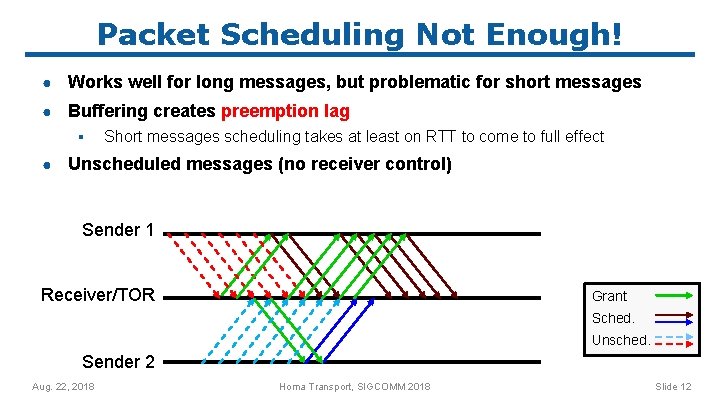

Packet Scheduling Not Enough!
● Works well for long messages, but is problematic for short messages
● Buffering creates preemption lag
▪ Scheduling a short message takes at least one RTT to take full effect
▪ Unscheduled messages are not under receiver control
(Figure: Sender 1 and Sender 2 send to the receiver/TOR; legend: grant, scheduled, and unscheduled packets)
Slide 12

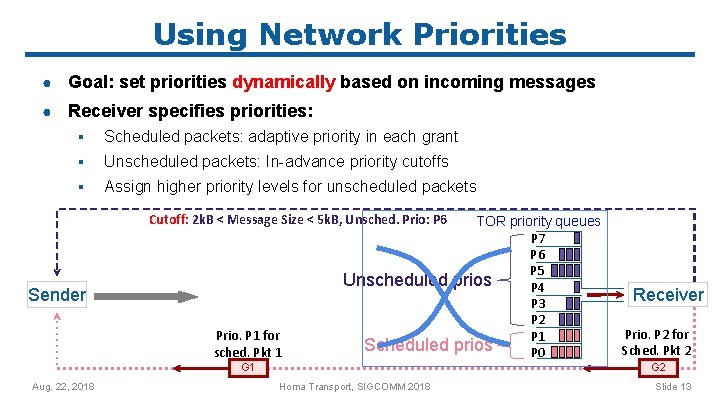

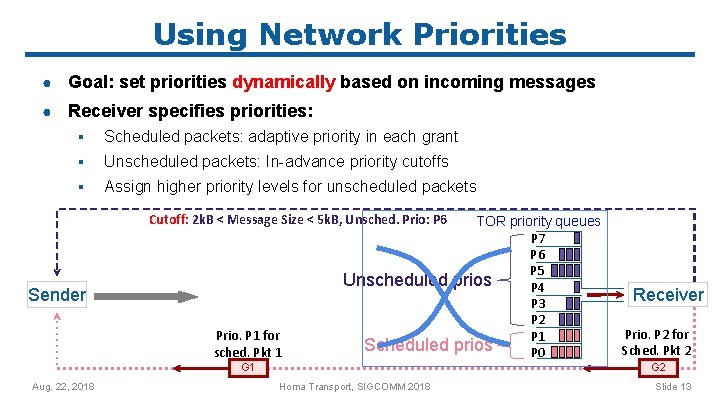

Using Network Priorities
● Goal: set priorities dynamically based on incoming messages
● Receiver specifies priorities:
▪ Scheduled packets: adaptive priority carried in each grant
▪ Unscheduled packets: priority cutoffs set in advance
▪ Assign higher priority levels to unscheduled packets
(Figure: TOR priority queues P7 to P0 in front of the receiver. Example unscheduled cutoff: 2 kB < message size < 5 kB → unscheduled priority P6. Grant G1 assigns priority P1 to scheduled packet 1; grant G2 assigns priority P2 to scheduled packet 2.)
Slide 13
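
On the sender side, in-advance cutoffs reduce to a table lookup. The cutoff values below are hypothetical, chosen so that the slide's example (2 kB < size < 5 kB → P6) holds. Scheduled packets are not covered here, because their priority arrives in each grant.

```python
import bisect

# Hypothetical cutoffs (bytes) a receiver could publish ahead of time.
# Entry i is the largest message size that still gets priority P(7 - i);
# messages larger than the last cutoff fall to the next level down.
UNSCHED_CUTOFFS = [2_000, 5_000, 10_000]
HIGHEST_PRIORITY = 7

def unscheduled_priority(message_size: int, cutoffs=UNSCHED_CUTOFFS,
                         highest: int = HIGHEST_PRIORITY) -> int:
    """Priority a sender stamps on a message's unscheduled packets."""
    level = bisect.bisect_left(cutoffs, message_size)
    return highest - level

for size in (500, 3_000, 8_000, 50_000):
    print(size, "bytes -> P%d" % unscheduled_priority(size))
```

Smaller messages map to higher levels: 500 bytes → P7, 3,000 bytes → P6, 8,000 bytes → P5, and anything above 10,000 bytes → P4.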

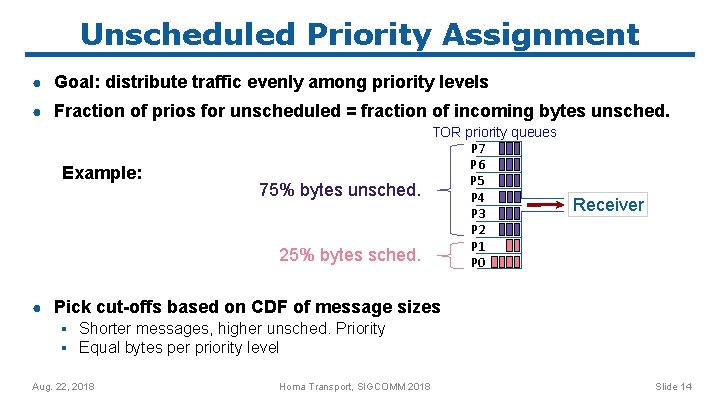

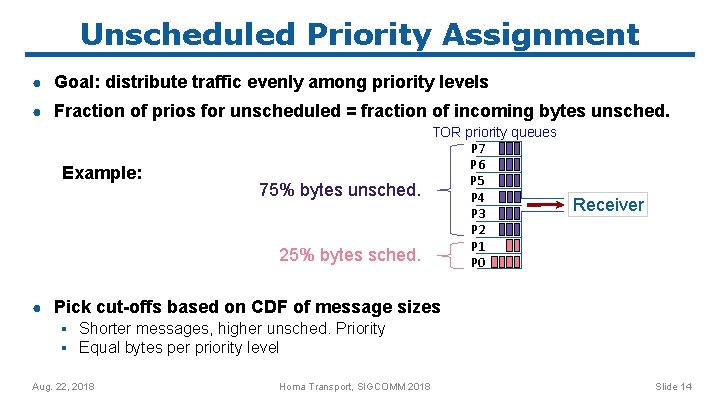

Unscheduled Priority Assignment
● Goal: distribute traffic evenly among priority levels
● Fraction of priority levels for unscheduled packets = fraction of incoming bytes that are unscheduled
▪ Example: 75% of bytes unscheduled, 25% scheduled (with 8 levels: P7 to P2 unscheduled, P1 to P0 scheduled)
● Pick cutoffs based on the CDF of message sizes
▪ Shorter messages get higher unscheduled priority
▪ Equal bytes per priority level
(Figure: TOR priority queues P7 to P0 in front of the receiver)
Slide 14
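
The two rules on this slide (split levels by unscheduled byte share, then place cutoffs for equal bytes per level) can be sketched over a sample of message sizes. The workload, the 10,000-byte RTTBytes, and the clamp that reserves at least one level for each class are all hypothetical choices for illustration.

```python
def plan_priorities(sizes, rtt_bytes, num_levels=8):
    """Return (num_unscheduled_levels, cutoffs) from a sample of message sizes."""
    total = sum(sizes)
    total_unsched = sum(min(s, rtt_bytes) for s in sizes)
    # Levels for unscheduled traffic ~ share of unscheduled bytes.
    # Assumption: keep at least one level for each class.
    n_u = round(num_levels * total_unsched / total)
    n_u = max(1, min(num_levels - 1, n_u))
    cutoffs, cum, k = [], 0, 1
    for size in sorted(sizes):              # walk the size CDF, smallest first
        cum += min(size, rtt_bytes)
        # Close level k once it holds k/n_u of all unscheduled bytes
        # (integer comparison avoids float rounding).
        while k < n_u and cum * n_u >= k * total_unsched:
            cutoffs.append(size)
            k += 1
    return n_u, cutoffs

sizes = [500] * 20 + [1_000] * 10 + [2_000] * 5 + [5_000] * 2 + [10_000, 30_000]
print(plan_priorities(sizes, rtt_bytes=10_000))
```

In this sample, 75% of bytes are unscheduled, so 6 of 8 levels go to unscheduled traffic (matching the slide's example). The cutoffs [500, 1000, 2000, 5000, 10000] give each unscheduled level exactly 10,000 bytes. With coarser samples, one large message can cross several targets and repeat a cutoff, leaving a level empty.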

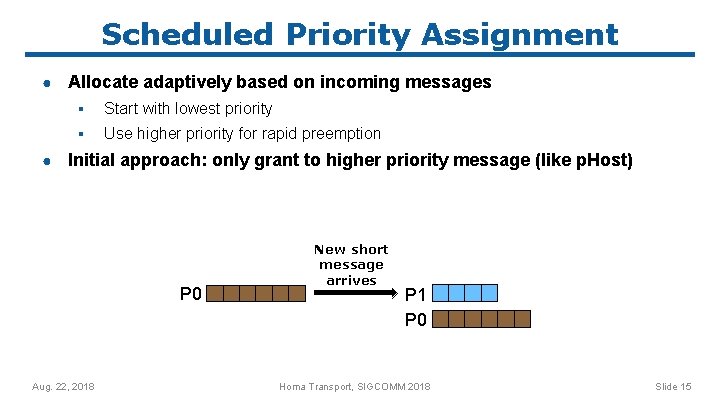

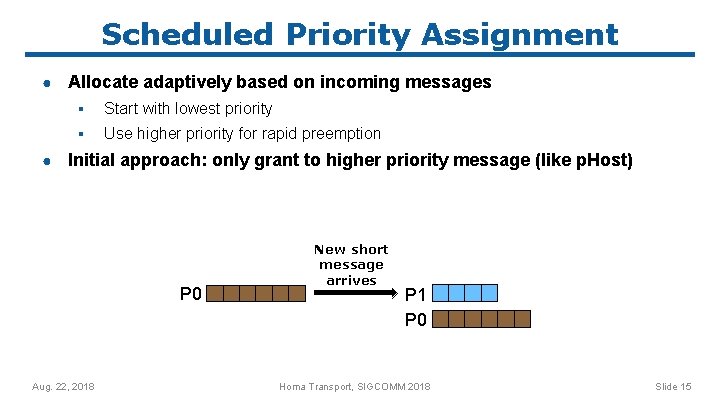

Scheduled Priority Assignment
● Allocate adaptively based on incoming messages
▪ Start with the lowest priority
▪ Use higher priorities for rapid preemption
● Initial approach: grant only to the higher-priority message (like pHost)
(Figure: a message is scheduled at P0; when a new short message arrives, it is scheduled at P1, above the existing P0 message)
Slide 15

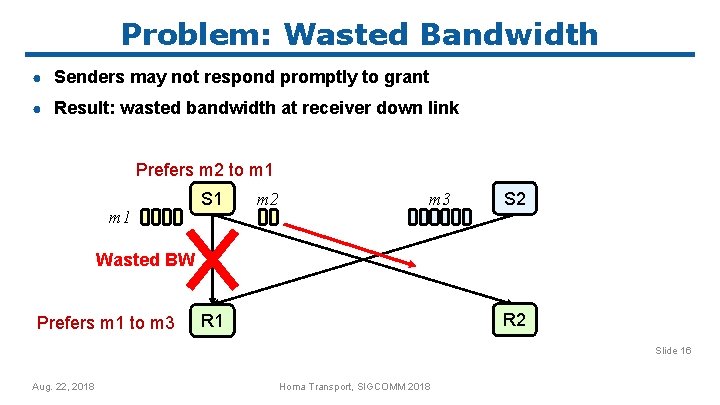

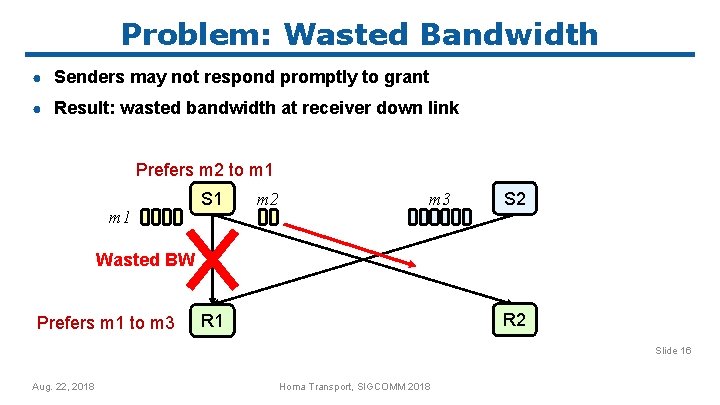

Problem: Wasted Bandwidth
● Senders may not respond promptly to grants
● Result: wasted bandwidth on the receiver's downlink
(Figure: senders S1, S2; receivers R1, R2; messages m1, m2, m3. Labels: "Prefers m2 to m1" (S1), "Prefers m1 to m3", "Wasted BW")
Slide 16

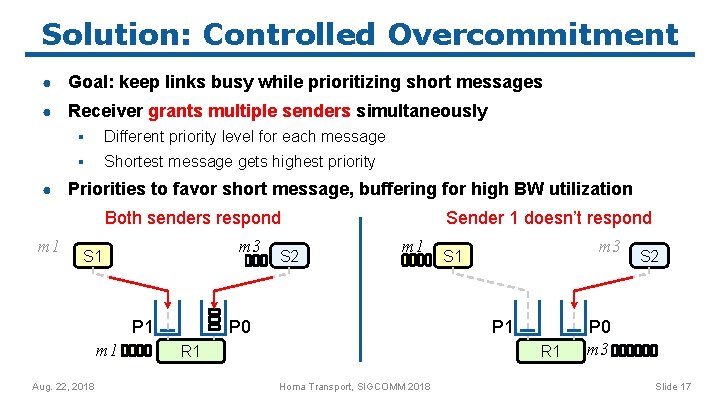

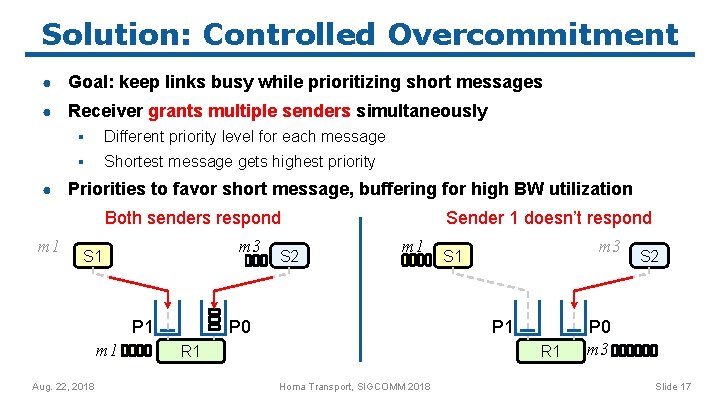

Solution: Controlled Overcommitment
● Goal: keep links busy while prioritizing short messages
● Receiver grants to multiple senders simultaneously
▪ Different priority level for each message
▪ Shortest message gets the highest priority
● Priorities favor short messages; buffering enables high bandwidth utilization
(Figure: R1 grants m1 from S1 at P1 and m3 from S2 at P0. If both senders respond, m1 takes precedence over m3; if Sender 1 doesn't respond, m3 keeps R1's downlink busy.)
Slide 17
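
Controlled overcommitment can be sketched as "grant the k shortest messages, each at its own scheduled level". The functions, the remaining sizes of m1 and m3, and the choice to cap k at the number of scheduled levels are hypothetical. Using the lowest levels first follows the "start with the lowest priority" rule from slide 15.

```python
from typing import Dict, Set

def assign_grants(remaining: Dict[str, int], sched_levels: int,
                  overcommit: int) -> Dict[str, int]:
    """Grant up to `overcommit` messages at once, each at its own priority.

    remaining maps message name -> bytes still to receive. Scheduled levels
    are P0..P(sched_levels-1); the lowest levels are used first, and the
    shortest granted message gets the highest of them.
    """
    active = sorted((size, name) for name, size in remaining.items() if size > 0)
    granted = active[:min(overcommit, sched_levels)]
    top = len(granted) - 1
    return {name: top - rank for rank, (_, name) in enumerate(granted)}

def downlink_busy(grants: Dict[str, int], responding: Set[str]) -> bool:
    """The downlink carries traffic if at least one granted sender responds."""
    return any(name in responding for name in grants)

inbound = {"m1": 20_000, "m3": 60_000}   # hypothetical remaining sizes at R1
for k in (1, 2):
    grants = assign_grants(inbound, sched_levels=2, overcommit=k)
    # Sender 1 (owner of m1) is busy elsewhere and ignores its grant.
    state = "busy" if downlink_busy(grants, responding={"m3"}) else "idle"
    print(k, grants, state)
```

With k = 1, only m1 is granted and R1's downlink sits idle when Sender 1 ignores the grant, which is the wasted bandwidth of slide 16. With k = 2, m1 gets P1 and m3 gets P0 (as in the figure), so m3 fills the link.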

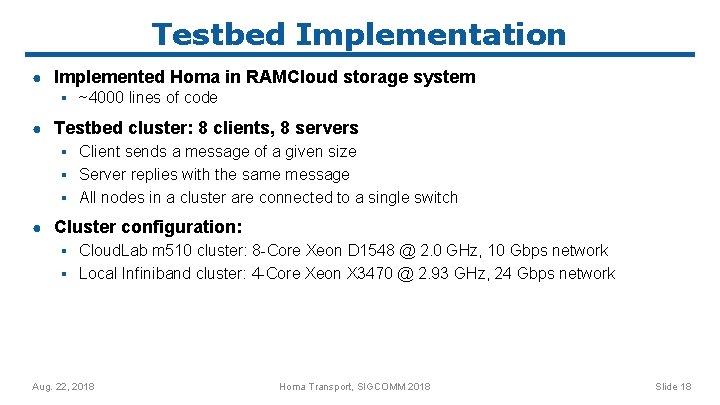

Testbed Implementation
● Implemented Homa in the RAMCloud storage system
▪ ~4000 lines of code
● Testbed cluster: 8 clients, 8 servers
▪ Client sends a message of a given size
▪ Server replies with the same message
▪ All nodes in a cluster are connected to a single switch
● Cluster configurations:
▪ CloudLab m510 cluster: 8-core Xeon D-1548 @ 2.0 GHz, 10 Gbps network
▪ Local InfiniBand cluster: 4-core Xeon X3470 @ 2.93 GHz, 24 Gbps network
Slide 18

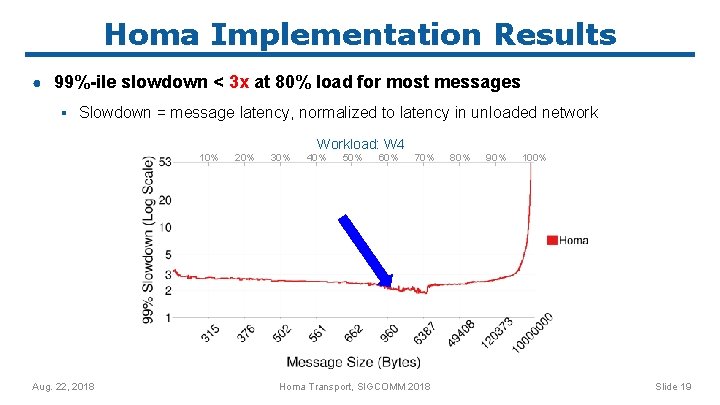

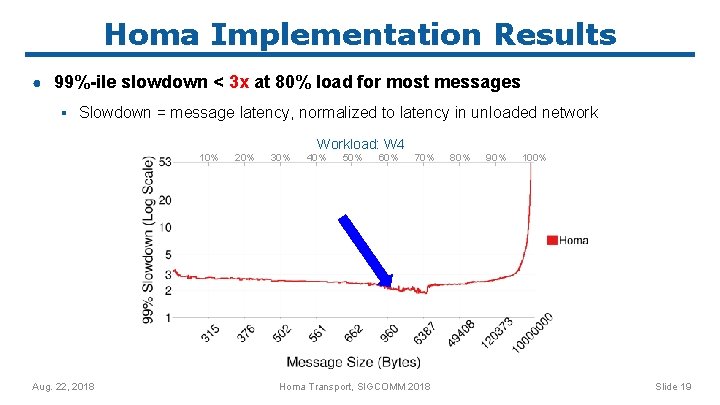

Homa Implementation Results
● 99th-percentile slowdown < 3x at 80% load for most messages
▪ Slowdown = message latency normalized to its latency in an unloaded network
(Figure: workload W4; x-axis from 10% to 100%)
Slide 19
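
The slowdown metric is a ratio of tail latency to unloaded latency. The sketch below uses hypothetical latency samples and the nearest-rank percentile definition; the slides do not say which percentile definition the measurements used.

```python
from typing import List

def nearest_rank_percentile(values: List[float], p: int) -> float:
    """Nearest-rank percentile for integer p in 1..100."""
    ordered = sorted(values)
    rank = -(-p * len(ordered) // 100)   # ceil(p * n / 100) with integer math
    return ordered[rank - 1]

def p99_slowdown(latencies_us: List[float], unloaded_us: float) -> float:
    """99th-percentile slowdown for messages of one size."""
    return nearest_rank_percentile(latencies_us, 99) / unloaded_us

# Hypothetical samples: 100 messages of one size under load.
samples = [5.5] * 98 + [12.0, 20.0]
print(p99_slowdown(samples, unloaded_us=5.0))
```

Here the 99th-percentile latency is 12 μs against a 5 μs unloaded latency, a slowdown of 2.4x, which would fall under the slide's "< 3x" bar.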

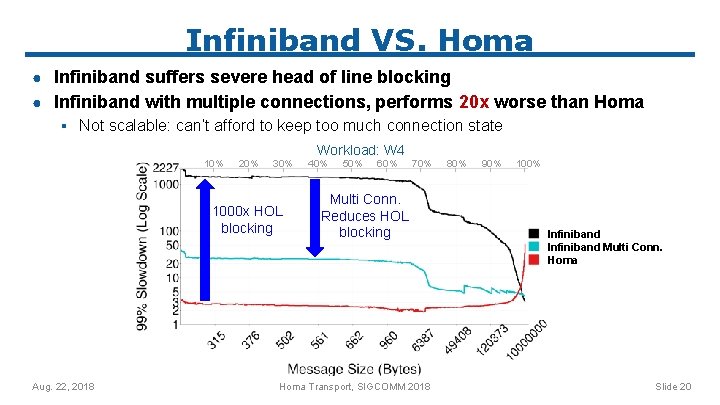

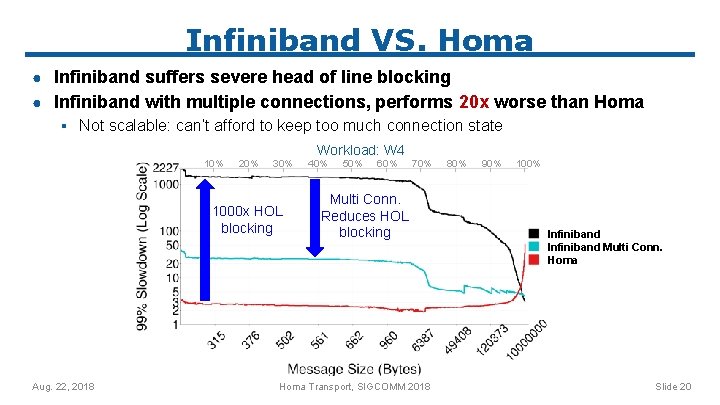

InfiniBand vs. Homa
● InfiniBand suffers severe head-of-line blocking
● InfiniBand with multiple connections performs 20x worse than Homa
▪ Not scalable: can't afford to keep too much connection state
(Figure: workload W4; series: InfiniBand, Multi Conn., Homa; annotations: "1000x HOL blocking", "Multi Conn. reduces HOL blocking")
Slide 20

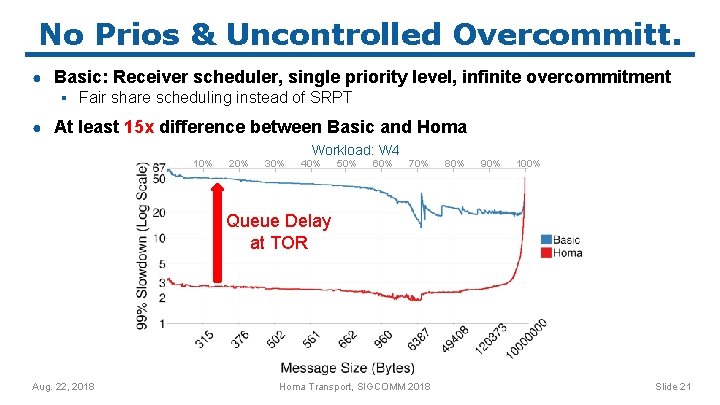

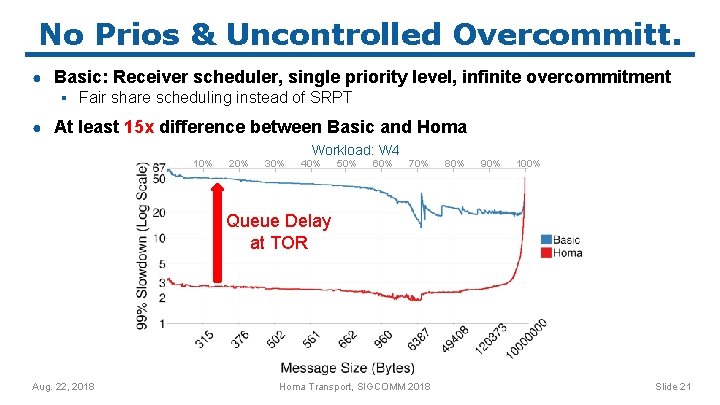

No Priorities & Uncontrolled Overcommitment
● Basic: receiver scheduler, single priority level, infinite overcommitment
▪ Fair-share scheduling instead of SRPT
● At least 15x difference between Basic and Homa
(Figure: workload W4; annotation: queueing delay at TOR)
Slide 21

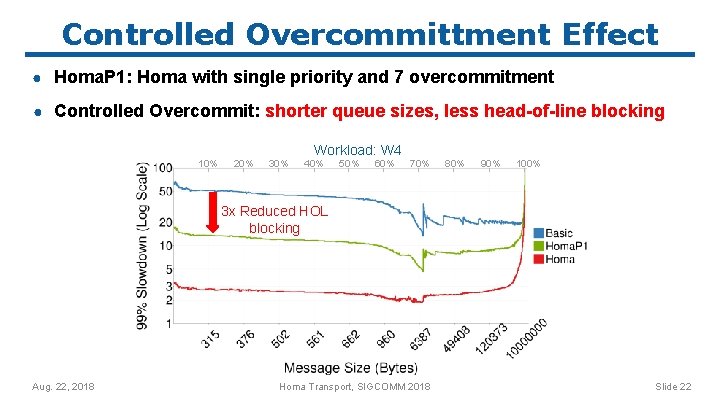

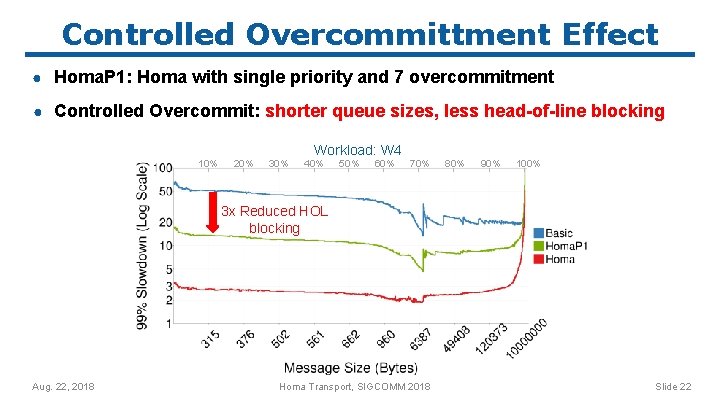

Controlled Overcommitment Effect
● HomaP1: Homa with a single priority level and degree of overcommitment 7
● Controlled overcommitment: shorter queues, less head-of-line blocking
(Figure: workload W4; annotation: 3x reduced HOL blocking)
Slide 22

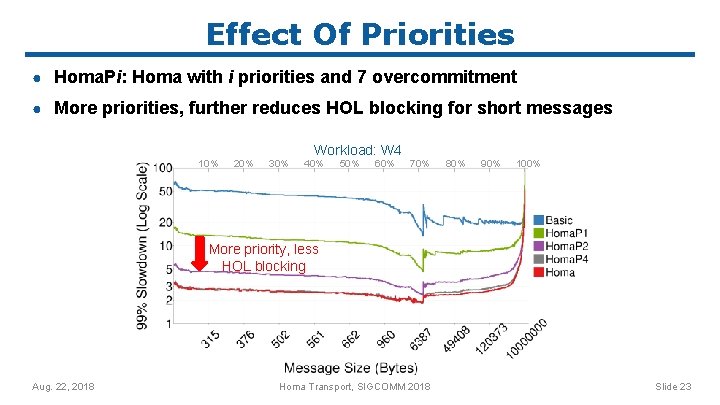

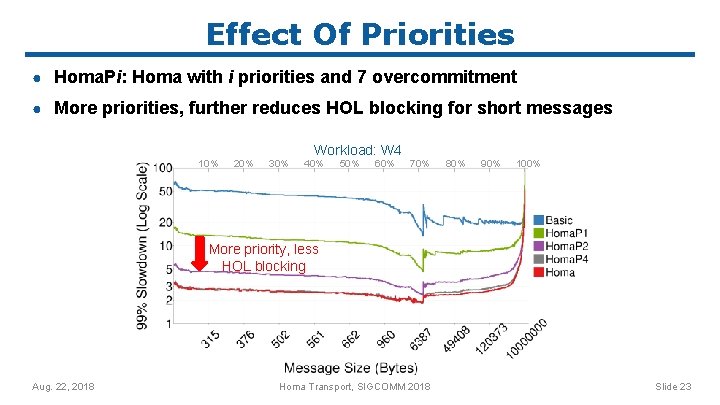

Effect of Priorities
● HomaPi: Homa with i priority levels and degree of overcommitment 7
● More priorities further reduce HOL blocking for short messages
(Figure: workload W4; annotation: more priorities, less HOL blocking)
Slide 23

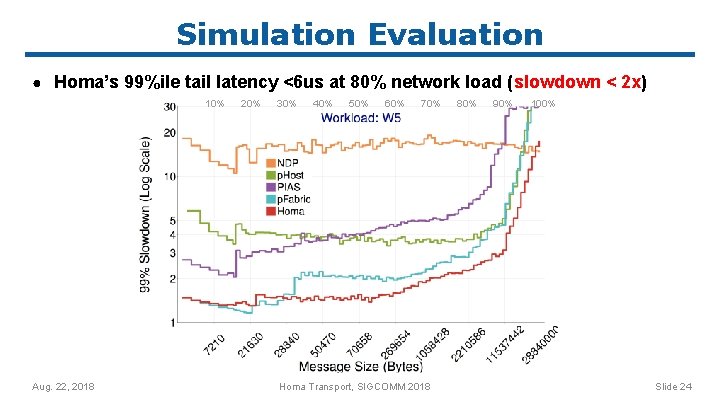

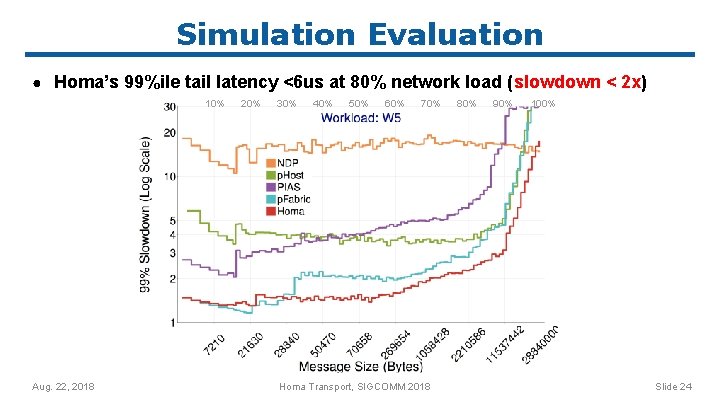

Simulation Evaluation
● Homa's 99th-percentile tail latency < 6 μs at 80% network load (slowdown < 2x)
Slide 24

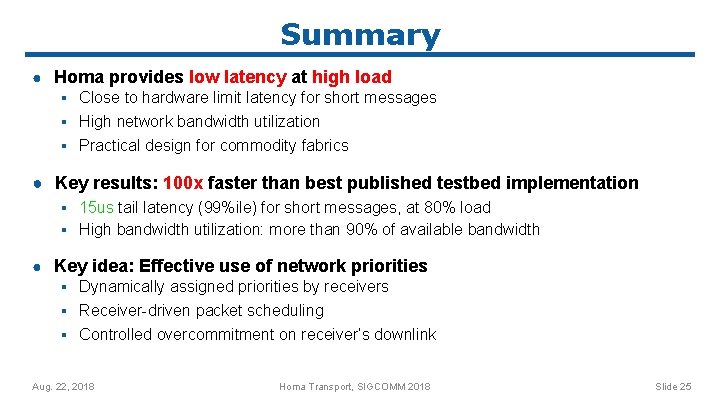

Summary
● Homa provides low latency at high load
▪ Latency close to the hardware limit for short messages
▪ High network bandwidth utilization
▪ Practical design for commodity fabrics
● Key results: 100x faster than the best published testbed implementation
▪ 15 μs tail latency (99th percentile) for short messages at 80% load
▪ High bandwidth utilization: more than 90% of available bandwidth
● Key idea: effective use of network priorities
▪ Priorities dynamically assigned by receivers
▪ Receiver-driven packet scheduling
▪ Controlled overcommitment of the receiver's downlink
Slide 25

Homa Source Code
● Homa simulator:
▪ https://github.com/PlatformLab/HomaSimulation
● Testbed implementation in RAMCloud:
▪ https://github.com/PlatformLab/RAMCloud
● Stand-alone implementations (under development):
▪ Linux kernel: https://github.com/PlatformLab/HomaModule
▪ Userspace RPC: https://github.com/PlatformLab/Homa
Slide 26