HEPiX Spring 2012 Summary


Site: http://indico.cern.ch/contributionListDisplay.py?confId=160737

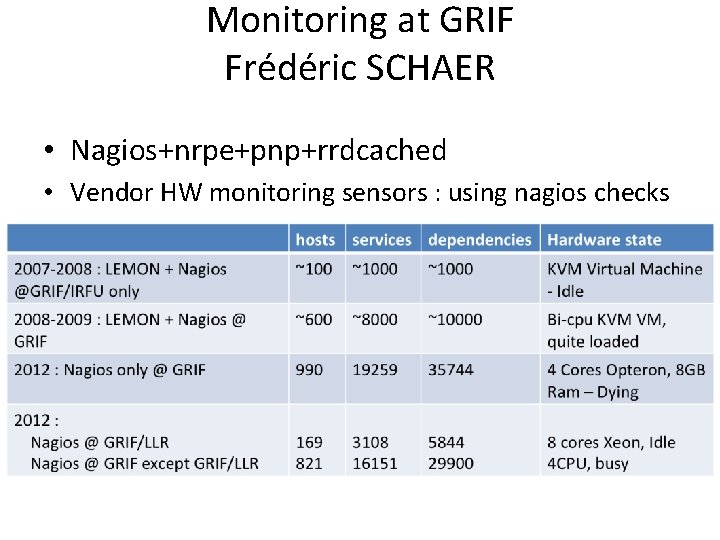

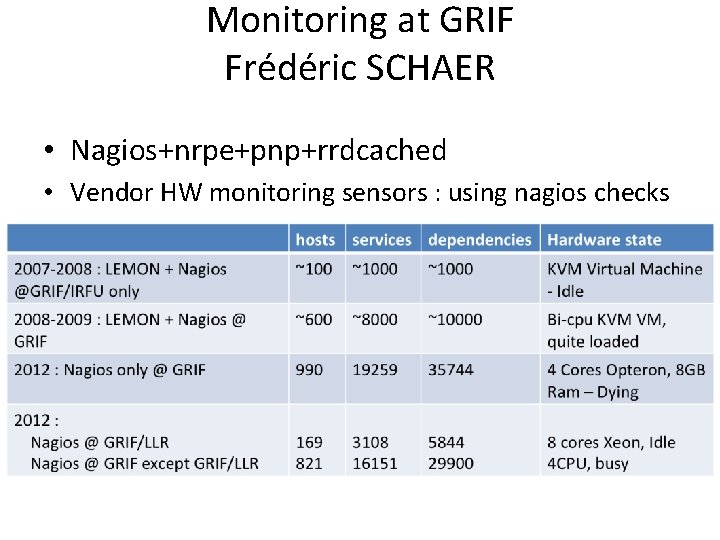

Monitoring at GRIF (Frédéric SCHAER)

- Nagios + NRPE + PNP + rrdcached
- Vendor hardware monitoring sensors: read using Nagios checks

Nagios tricks

- Compression:
  - NRPE side: check output | bzip2 | uuencode
  - Nagios side: check_nrpe | uudecode | bunzip2
- RRD cache daemon: http://docs.pnp4nagios.org/pnp-0.4/rrdcached
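The compression trick can be sketched in Python as a round trip. This is a minimal sketch, assuming the plugin output is UTF-8 text; it uses the standard-library `bz2` and `binascii` modules in place of the `bzip2`/`uuencode` command-line tools, and the sample check output is hypothetical. Decoding has to undo the steps in reverse order: uudecode first, then bunzip2.

```python
import binascii
import bz2

UU_CHUNK = 45  # binascii.b2a_uu encodes at most 45 bytes per line


def pack_output(text: str) -> str:
    """Agent side: bzip2-compress the plugin output, then uuencode it."""
    compressed = bz2.compress(text.encode("utf-8"))
    lines = [
        binascii.b2a_uu(compressed[i:i + UU_CHUNK])
        for i in range(0, len(compressed), UU_CHUNK)
    ]
    return b"".join(lines).decode("ascii")


def unpack_output(payload: str) -> str:
    """Server side: uudecode each line first, then bunzip2 the result."""
    compressed = b"".join(
        binascii.a2b_uu(line) for line in payload.splitlines() if line
    )
    return bz2.decompress(compressed).decode("utf-8")


if __name__ == "__main__":
    # Hypothetical verbose check output: one status line per filesystem.
    output = "".join(f"OK: /data{i:03d} 42% used\n" for i in range(200))
    payload = pack_output(output)
    assert unpack_output(payload) == output
    print(len(output), "->", len(payload), "bytes")
```

Uuencoding makes the compressed bytes safe to pass as plain text, at the cost of roughly a third more bytes, so the trick only pays off for long, repetitive output.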

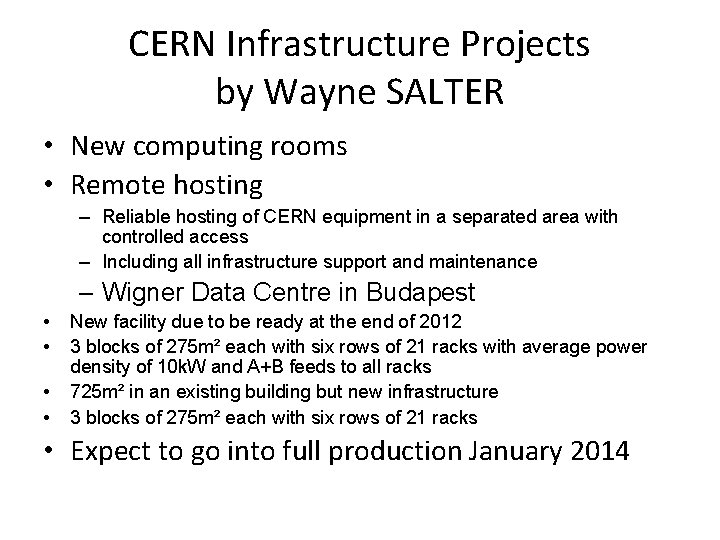

CERN Infrastructure Projects (Wayne SALTER)

- New computing rooms
- Remote hosting
  - Reliable hosting of CERN equipment in a separate area with controlled access
  - Including all infrastructure support and maintenance
  - Wigner Data Centre in Budapest
    - New facility due to be ready at the end of 2012
    - 3 blocks of 275 m² each, with six rows of 21 racks, an average power density of 10 kW and A+B feeds to all racks
    - 725 m² in an existing building, but new infrastructure
    - Expected to go into full production in January 2014
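A back-of-the-envelope sizing of the Wigner blocks follows from the numbers above. This sketch assumes that the six rows of 21 racks apply to each of the three blocks and that 10 kW is the average load per rack; both are interpretations of the slide, not figures stated on it.

```python
# Assumptions (interpretation of the slide): "six rows of 21 racks" applies
# to each of the 3 blocks, and "10 kW" is the average power per rack.
BLOCKS = 3
ROWS_PER_BLOCK = 6
RACKS_PER_ROW = 21
AVG_KW_PER_RACK = 10

racks = BLOCKS * ROWS_PER_BLOCK * RACKS_PER_ROW
it_load_kw = racks * AVG_KW_PER_RACK

print(f"{racks} racks, ~{it_load_kw / 1000:.2f} MW average IT load")
# -> 378 racks, ~3.78 MW average IT load
```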

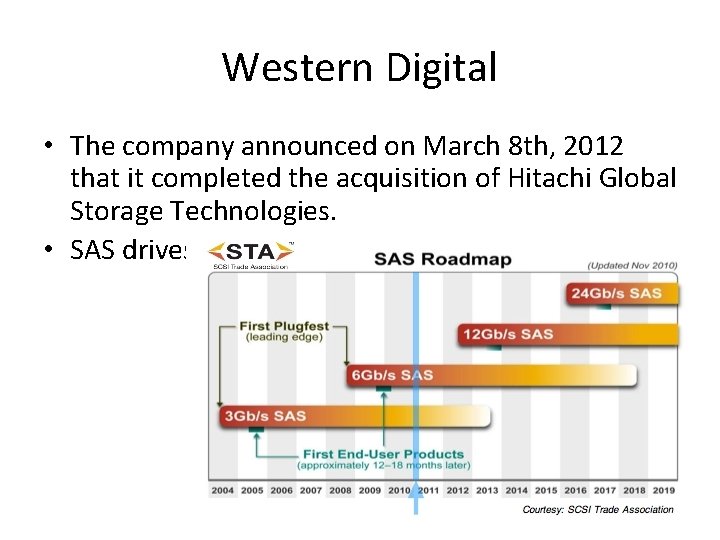

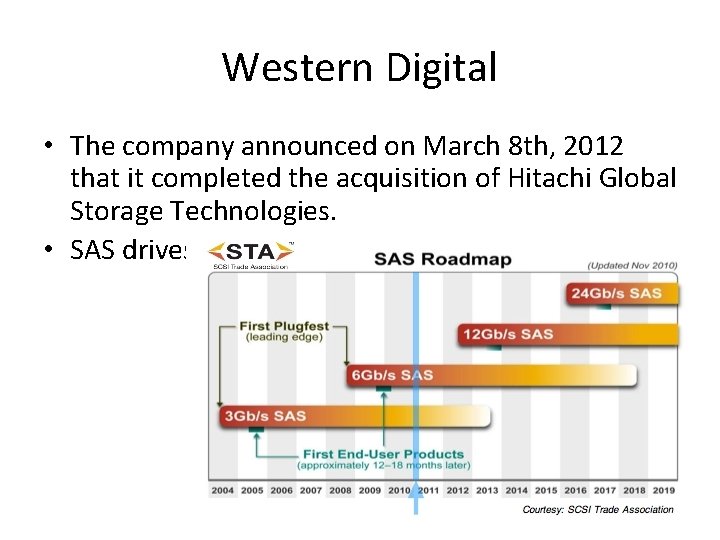

Western Digital

- The company announced on March 8th, 2012, that it had completed the acquisition of Hitachi Global Storage Technologies.
- SAS drives

CHROOT OS (CHOS) (L. Pezzaglia, NERSC)

- If we run one job per core on a 100-node cluster with 24 cores per node, we will have 2400 VMs to manage:
  - Each VM mounts and unmounts parallel filesystems
  - Each VM joins and leaves shared services with each reboot
  - Shared services (including filesystems) must maintain state for all these VMs
- CHOS fulfills most of the use cases for virtualization in HPC with minimal administrative overhead and negligible performance impact
- Users do not interact directly with the "base" OS
- CHOS provides a seamless user experience: users manipulate only one file ($HOME/.chos), and the desired environment is automatically activated for all interactive and batch work
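The single-file user interface can be illustrated with a small lookup from the name in `$HOME/.chos` to an environment root. This is a hypothetical sketch of the selection logic only: the environment names, chroot paths and the fallback to a default are assumptions, and the actual environment switch is performed by CHOS itself, not by code like this.

```python
import tempfile
from pathlib import Path

# Hypothetical table of environment names -> chroot trees; a real CHOS
# installation defines its own names and paths.
ENVIRONMENTS = {
    "default": "/",
    "sl5": "/chos/sl5",
    "sl6": "/chos/sl6",
}


def select_environment(home: Path, environments: dict = ENVIRONMENTS) -> str:
    """Return the root directory for the environment named in $HOME/.chos.

    Falls back to "default" when the file is missing, empty, or names an
    unknown environment.
    """
    chos_file = home / ".chos"
    try:
        name = chos_file.read_text().strip()
    except FileNotFoundError:
        name = ""
    return environments.get(name, environments["default"])


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        home = Path(tmp)
        print(select_environment(home))      # no .chos file: default root
        (home / ".chos").write_text("sl6\n")
        print(select_environment(home))      # /chos/sl6
```

Falling back to the default keeps a typo in `.chos` from breaking a user's login or batch job.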

IPv6 (David Kelsey, F. Prelz)

- Already found a few problems: OpenAFS, dCache, UberFTP, FTS & globus_url_copy
- Large sites (e.g. CERN and DESY) wish to manage the allocation of addresses
  - They do not like autoconfiguration (SLAAC)
- World IPv6 Launch Day: 6 June 2012, "The Future is Forever"
  - Permanently enable IPv6 by 6 June 2012
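Under SLAAC the host, not the site, chooses its own address; in the classic scheme the interface identifier is derived from the network card's MAC address. The sketch derives that modified EUI-64 identifier (flip the universal/local bit, insert `ff:fe` in the middle) and appends it to a /64 prefix. The prefix and MAC are example values; many current operating systems use randomized or stable-private identifiers (RFC 4941, RFC 7217) instead.

```python
import ipaddress


def slaac_eui64(prefix: str, mac: str) -> ipaddress.IPv6Address:
    """Classic SLAAC address: /64 prefix + modified EUI-64 from a MAC."""
    net = ipaddress.IPv6Network(prefix)
    if net.prefixlen != 64:
        raise ValueError("SLAAC needs a /64 prefix")
    octets = bytes(int(part, 16) for part in mac.split(":"))
    if len(octets) != 6:
        raise ValueError("expected a 48-bit MAC address")
    # Flip the universal/local bit and insert ff:fe between the two halves.
    eui64 = bytes([octets[0] ^ 0x02]) + octets[1:3] + b"\xff\xfe" + octets[3:]
    return net.network_address + int.from_bytes(eui64, "big")


if __name__ == "__main__":
    print(slaac_eui64("2001:db8::/64", "00:1b:21:3a:4f:5c"))
    # -> 2001:db8::21b:21ff:fe3a:4f5c
```

Because the address follows from hardware the site does not track centrally, sites that want to control allocation typically turn to DHCPv6 or static assignment instead.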

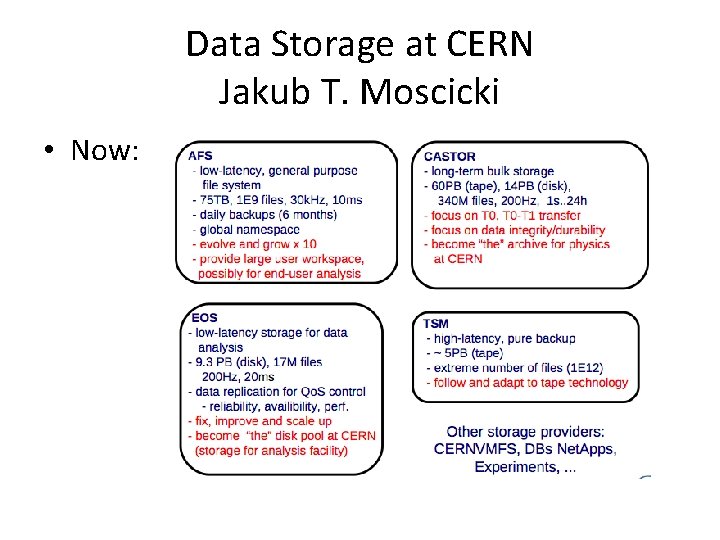

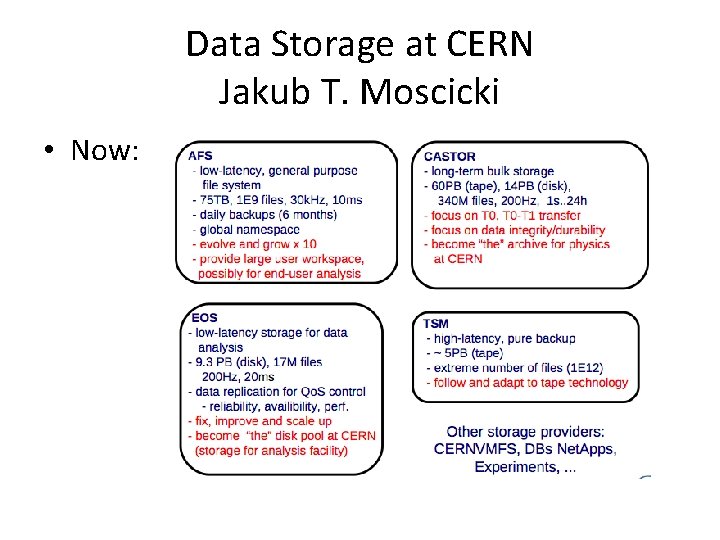

Data Storage at CERN (Jakub T. Moscicki): now

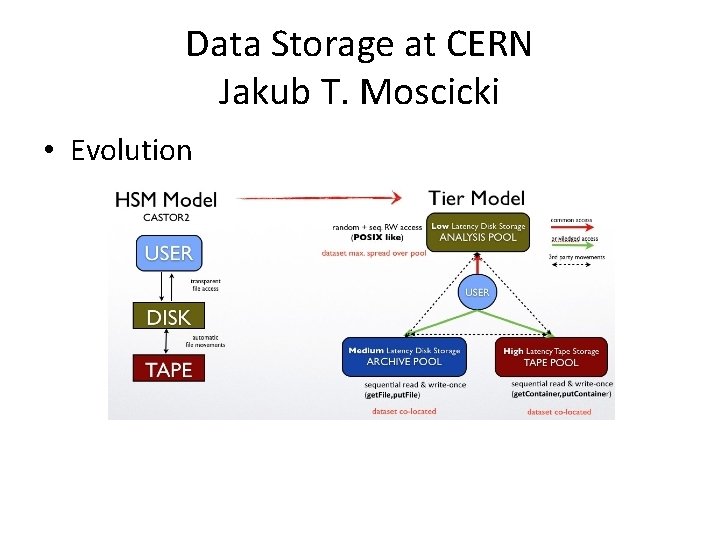

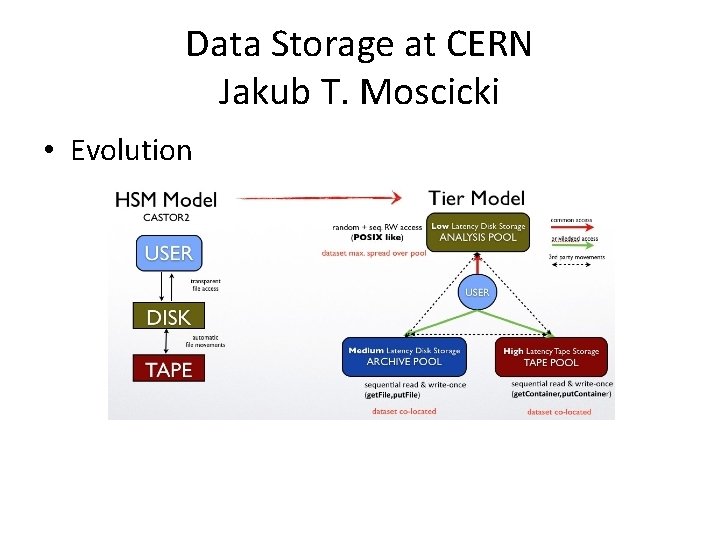

Data Storage at CERN (Jakub T. Moscicki): evolution

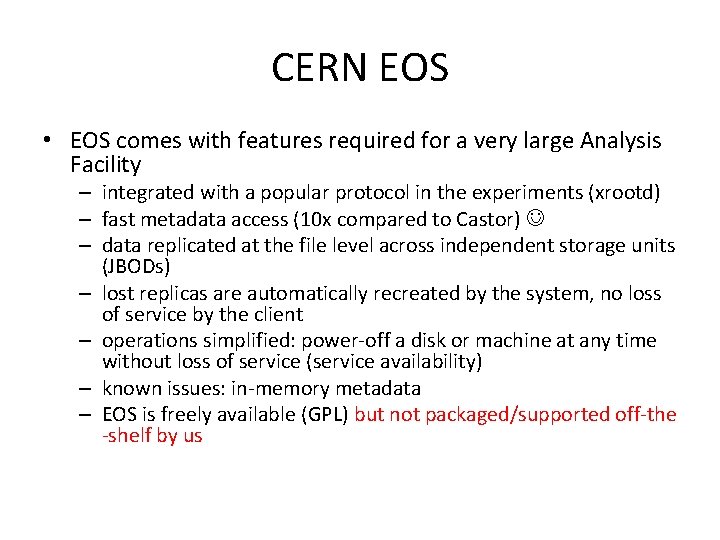

CERN EOS

- EOS comes with the features required for a very large analysis facility:
  - integrated with a protocol popular in the experiments (xrootd)
  - fast metadata access (10× compared to CASTOR)
  - data replicated at the file level across independent storage units (JBODs)
  - lost replicas are automatically recreated by the system, with no loss of service for the client
  - simplified operations: a disk or machine can be powered off at any time without loss of service (service availability)
  - known issue: in-memory metadata
- EOS is freely available (GPL) but not packaged or supported off the shelf by us
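The automatic recreation of lost replicas can be modelled as a placement problem: each file keeps its copies on distinct storage units, and when a unit fails, its replicas are rebuilt on other healthy units. This is a toy model under assumed parameters (two copies per file, least-loaded placement, hypothetical unit and file names), not the actual EOS scheduler.

```python
from collections import Counter


def repair_replicas(placement: dict, units: list, failed_unit: str,
                    copies: int = 2) -> dict:
    """Re-create the replicas lost on failed_unit on other healthy units.

    placement maps a file name to the set of units holding a replica.
    Each new replica goes to the least-loaded healthy unit that does not
    already hold that file, so copies stay on independent units.
    """
    healthy = [u for u in units if u != failed_unit]
    repaired = {f: set(locs) - {failed_unit} for f, locs in placement.items()}
    load = Counter(u for locs in repaired.values() for u in locs)
    for name in sorted(repaired):
        locs = repaired[name]
        while len(locs) < copies:
            candidates = [u for u in healthy if u not in locs]
            if not candidates:
                raise RuntimeError(f"not enough independent units for {name}")
            target = min(candidates, key=lambda u: (load[u], u))
            locs.add(target)
            load[target] += 1
    return repaired


if __name__ == "__main__":
    placement = {
        "a.root": {"jbod1", "jbod2"},
        "b.root": {"jbod2", "jbod3"},
        "c.root": {"jbod1", "jbod3"},
    }
    units = ["jbod1", "jbod2", "jbod3", "jbod4"]
    for name, locs in sorted(repair_replicas(placement, units, "jbod2").items()):
        print(name, sorted(locs))
```

Clients keep reading from the surviving copy while the missing one is rebuilt, which is what allows a disk or server to be powered off without loss of service.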

EOS: Future

- m+n block replication
  - stored on independent storage units (disks/servers)
  - different algorithms possible: double or triple parity, LDPC, Reed-Solomon
- Simple replication: 3 copies, 200% overhead, 3× streaming performance
- 10+3 replication: can lose any 3 blocks, the remaining 10 are enough to reconstruct, 30% storage overhead
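The overhead figures follow from overhead = redundancy blocks / data blocks: three full copies store 2 extra blocks per data block (200%), while 10+3 stores 3 parity blocks per 10 data blocks (30%). The sketch checks that arithmetic and, for recovery, uses plain XOR parity, the simplest m+1 case, which tolerates only one lost block. Tolerating any 3 losses as in 10+3 needs a code such as Reed-Solomon, which is not shown here.

```python
from functools import reduce


def storage_overhead(data_blocks: int, redundancy_blocks: int) -> float:
    """Extra raw capacity as a fraction of the user data stored."""
    return redundancy_blocks / data_blocks


def xor_parity(blocks: list) -> bytes:
    """Single parity block: byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def rebuild_missing(surviving: list, parity: bytes) -> bytes:
    """Recover one lost data block by XOR-ing the parity with the survivors."""
    return xor_parity(surviving + [parity])


if __name__ == "__main__":
    # Simple replication = 1 data block + 2 extra full copies.
    print(f"3 copies: {storage_overhead(1, 2):.0%} overhead")   # 200%
    print(f"10+3:     {storage_overhead(10, 3):.0%} overhead")  # 30%

    data = [b"blk0", b"blk1", b"blk2", b"blk3"]
    parity = xor_parity(data)
    lost = data.pop(2)  # pretend the unit holding block 2 died
    assert rebuild_missing(data, parity) == lost
```

The trade-off shown on the slide is visible here: parity schemes save capacity, but rebuilding a block requires reading all the surviving blocks of its group instead of one copy.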

Hardware evaluation 2012 (Jiří Horky)

- Deduplication test:
  - Hardware: Fujitsu ETERNUS CS800 S2
  - All zeros: dedup ratio 1:1045
  - /dev/urandom, ATLAS data: 1:1.07
  - Backups: 1:2.8
  - Snapshots of VMs: 1:11.7
- How many disks are needed for 64-core worker nodes?
  - 3-4 SAS 2.5” 300 GB 10k RPM drives in RAID 0
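The spread of deduplication ratios, very high for zeros and about 1 for random-like data, is what block-level deduplication predicts. A minimal sketch, assuming fixed-size 4 KiB chunks identified by SHA-256; the tested appliance's chunking method and sizes are not given on the slide, so the absolute numbers will differ.

```python
import hashlib
import os

BLOCK = 4096  # assumed fixed chunk size; real appliances may chunk differently


def dedup_ratio(data: bytes, block_size: int = BLOCK) -> float:
    """Logical bytes divided by bytes stored when each unique chunk is kept once."""
    unique = {}
    for i in range(0, len(data), block_size):
        chunk = data[i:i + block_size]
        unique.setdefault(hashlib.sha256(chunk).digest(), len(chunk))
    stored = sum(unique.values())
    return len(data) / stored if stored else 1.0


if __name__ == "__main__":
    one_mib = 1 << 20
    print(dedup_ratio(bytes(one_mib)))        # 256 identical chunks -> 256.0
    print(dedup_ratio(os.urandom(one_mib)))   # every chunk unique -> 1.0
```

With all-zero input the ratio grows with the amount written, since every chunk after the first is a duplicate; random or already-compressed data such as physics files has almost no repeated chunks.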

Cyber security

- http://www.sourceconference.com/boston/speakers_2012.asp