Heads you’re in; tails you’re out: How RCTs have evolved in DWP

- Slides: 56

Heads you’re in; tails you’re out: How RCTs have evolved in DWP. Jane Hall, jane.hall@dwp.gsi.gov.uk

Background § Increasing use of RCTs § Emphasis on evidence-based policy § Limited UK experience in social policy arena § Practical lessons not theoretical debate

Overview § Chronology of RCTs in DWP § Site selection and preparation § Identifying the eligible population § Dealing with resistance § Performing the random assignment § Monitoring take-up

Chronology of RCTs § Restart § Various New Deals § Employment Zones § JRRP § ERA § JSA Intervention Pilots § ND 50+ Mandatory IAP

Site Selection and Preparation § Need commitment from the top § All parties need to buy-in § Set-up is resource intensive § Personal visits § Pilot the approach

Identifying and recruiting the eligible population § Can they be easily identified? § Self-selection § Suitability of the population § Monitor P & C Group characteristics § Selling techniques § Sample sizes: Sub-group analysis

Dealing with resistance § Busting the myth § Significant investment in training at all levels § Aides and FAQs § Scripts

Performing the Random Allocation § Needs to be sophisticated § Not open to sabotage/gaming § Block allocation: Maintain P:C ratio § Different techniques – NINO – Call Centre – On-line algorithm – Random numbers
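A block allocation of the kind named on this slide could be sketched as follows. This is a minimal illustration, not the procedure used in any DWP trial: the function name `block_allocate`, the block size of 4 and the 1:1 P:C ratio are assumptions made for the example.

```python
import random


def block_allocate(n_customers, block_size=4, seed=None):
    """Assign customers to 'P' (programme) or 'C' (control) in balanced blocks.

    Each complete block holds equal numbers of P and C, so the running
    P:C ratio never drifts far from 1:1. All names and defaults here are
    hypothetical, chosen for illustration only.
    """
    if block_size % 2 != 0:
        raise ValueError("block_size must be even for a 1:1 ratio")
    rng = random.Random(seed)
    assignments = []
    while len(assignments) < n_customers:
        # Build one balanced block, then shuffle the order within it.
        block = ["P"] * (block_size // 2) + ["C"] * (block_size // 2)
        rng.shuffle(block)
        assignments.extend(block)
    # The final block may be cut short if n_customers is not a multiple
    # of block_size, so the last few assignments can be slightly unbalanced.
    return assignments[:n_customers]


allocation = block_allocate(10, block_size=4, seed=1)
print(allocation)
```

Small fixed blocks make the last assignment in each block predictable to anyone who can see the earlier ones, so trials concerned about gaming often vary the block size or keep it hidden from those doing the recruiting.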

Monitoring take-up § Keep track of P & C Group § Ensure only P Group receive the treatment § Monitor key characteristics of P & C Group § Be prepared to redesign the random allocation

Expect the Unexpected § Results may not be what you anticipate § A fair allocation of resources? § Participation rates can be disappointing

Operational Challenges: The ERA Experience. Jenny Carrino

Overview § The ERA Policy § Key Challenges – Random Assignment (RA) Process – Customer Understanding of RA – Creation of ‘Informal’ Refusers – Jobcentre Plus Target Structure – Technical Assistance

The ERA Policy § To test interventions to improve retention and advancement – Adviser support – Funding for training – Financial incentives § 6 Jobcentre Plus districts § Three customer groups: NDLP, ND 25+ and WTC § To test the effectiveness of using RA to evaluate social policy in the UK

Random Assignment 1 § Issue: The random allocation process § Lessons Learnt – Importance of transparency in the allocation process – Avoiding contamination § Outcomes – Most customers and staff viewed random assignment as fair and justified

Random Assignment 2 § Issue: The Informed Consent Process § Lessons Learnt – Standardisation - adviser scripts and leaflets § Outcomes – Not everyone fully understood what they had signed up for – Too much information at initial interviews – conduct RA as a stand alone interview

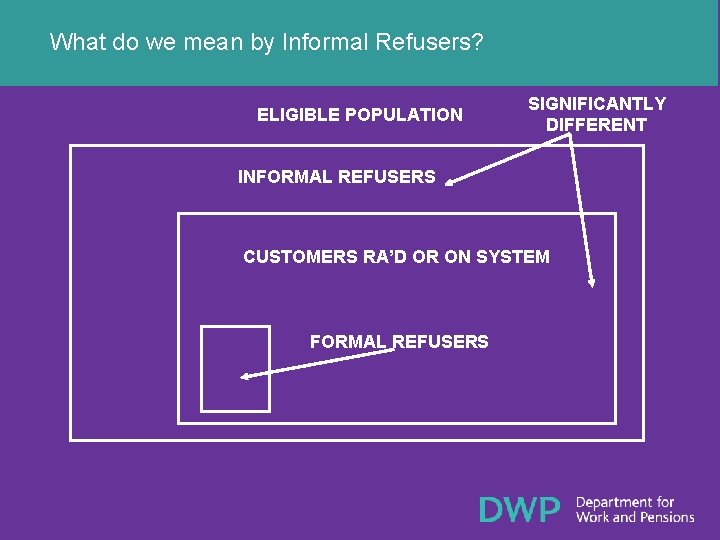

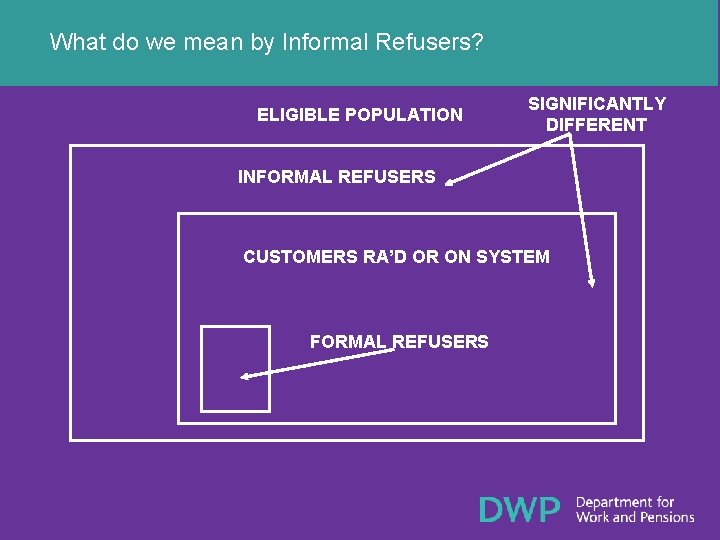

Random Assignment 3 § Issue: Creation of a group of ‘informal’ refusers

What do we mean by Informal Refusers? [Diagram labels: Eligible population; Informal refusers; Customers RA’d or on system; Formal refusers; "Significantly different"]

Informal Refusers § Why this happened – The decision to use RA – RA to ERA was voluntary – Influences from both advisers and customers § Outcomes – Creation of a ‘third’ group – Analysis to identify whether this group is different from the ERA population § Lesson Learnt – If possible, monitor intake closely against the eligible population

Ensuring a Treatment - Targets § Issue: The Jobcentre Plus Target Structure – Some adviser behaviour negatively affected – Senior management buy-in affected § Lessons Learnt – Policies need to reflect the organisation’s reward system – Need to be able to monitor and feed back to implementation managers

Technical Assistance § Issue: Ensuring the effective delivery of RA § Lessons Learnt – US model of on-site RA assistance – Avoiding contamination – Monitoring Performance § Outcomes – Advisers felt supported during the RA period – Initial confusion over the role of TAs – Some districts deferred responsibility of ERA implementation

Summary of Key Challenges § RA Process § Informed Consent § Creation of informal refusers § Ensuring a Treatment § Providing effective support to delivery agents

Jobseeker’s Allowance (JSA) Intervention Pilots. Jayne Middlemas

JSA Intervention Pilots § JSA Intervention Regime § The Pilots § Evaluation § Random assignment § The data § Did Random Assignment work? § Results

JSA Intervention Regime § First Contact § New Jobseeker Interview (NJI) § Financial Assessor Interview § Fortnightly Jobsearch Review (FJR) § 13 week review

The Pilots § Introduced in January 2005 § 108 Jobcentres in 10 Districts took part § Each Jobcentre took part in a single pilot § Aim to deliver resource savings on the FJR without reducing unemployment off-flow rates

The pilots (cont) Five different approaches: § Excusal of signing for first 13 wks of claim § Excusal of signing for first 7 wks of claim § Telephone signing § Shortened FJR § Group signing

The pilots (cont) Some groups excluded: § Part-time workers § 16 and 17 year olds § People with no fixed abode § People known to have had a fraudulent claim in the past

Evaluation § Customers randomly allocated § Work study to record resources used § Comparison of off-flows § Qualitative evaluation

Random Assignment § 50% programme, 50% control § ORC International Call Centre § Two methods: – Adviser calls immediately prior to each NJI – Jobcentre calls at start of day with details of all clients due to attend an NJI that day § ORC also provided random call-in date

Data § Data collected during random assignment § JUVOS data – derived from the Jobseekers Allowance Payment System (JSAPS) § HMRC Employment Data

Did Random Assignment Work? § 66,600 randomly assigned § 33,100 programme & 33,400 control § All pilots and Districts close to 50/50 split. Was everyone assigned? § Difficult to answer precisely § Number randomly assigned around 90% of total new claims. Excluded groups likely to account for 8 to 12%
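One way to check whether an observed split is consistent with 50/50 allocation is a normal-approximation z-score on the programme share. The sketch below uses the rounded counts quoted on the slide, so the result is indicative only; `split_z_score` is a hypothetical helper name, and the slides do not say what check, if any, was actually run.

```python
import math


def split_z_score(n_programme, n_control, expected_share=0.5):
    """Return the observed programme share and its z-score.

    The z-score measures how far the observed share lies from
    expected_share, in standard errors, under the normal approximation
    to the binomial distribution.
    """
    n = n_programme + n_control
    observed = n_programme / n
    standard_error = math.sqrt(expected_share * (1 - expected_share) / n)
    return observed, (observed - expected_share) / standard_error


# Rounded counts from the slide (programme, control).
share, z = split_z_score(33_100, 33_400)
print(f"programme share = {share:.4f}, z = {z:.2f}")
```

With these rounded figures the programme share is just under 50% and the absolute z-score is below 1.96, the conventional 5% threshold, which fits the slide's description of a split close to 50/50.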

Were People Wrongly Assigned? § 19% had no new JSA claim during the pilot § Incorrect NI numbers may mean we can’t find some claims § Jobcentres didn’t always inform us of those who failed to attend § Can’t identify excluded groups in the data

Internal Validity § Compared characteristics for programme and control groups § Very little difference was found by gender, age or ethnic origin § Concluded that the control group is well suited to providing a counterfactual for the programme group
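A comparison of group characteristics like the one described here is often summarised with a standardised mean difference per characteristic. The slides do not say which method was used; the sketch below is one common option, and the ages in it are made-up illustrative values, not pilot data.

```python
import math
from statistics import mean, variance


def standardised_difference(programme, control):
    """Standardised mean difference between two groups.

    Difference in means divided by the pooled standard deviation.
    Values near zero suggest the groups are balanced on this
    characteristic.
    """
    pooled_sd = math.sqrt((variance(programme) + variance(control)) / 2)
    if pooled_sd == 0:
        return 0.0
    return (mean(programme) - mean(control)) / pooled_sd


# Hypothetical ages, for illustration only.
programme_ages = [22, 35, 41, 29, 53, 38]
control_ages = [24, 33, 44, 27, 50, 46]

smd = standardised_difference(programme_ages, control_ages)
print(f"standardised difference in age = {smd:.3f}")
```

A frequently quoted rule of thumb treats an absolute standardised difference below about 0.1 as negligible, though the threshold is a convention rather than a test.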

External Validity § Pilot Jobcentres account for small proportion of all new JSA claims across the country § Gender, age & ethnicity of new claimants in pilot areas different to country as a whole § Some difference in local unemployment rates § Weighted results to take account of differences
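The slides do not describe how the weighting was done. A simple post-stratification approach, shown here as a sketch under that caveat, weights each group by the ratio of its national share to its pilot share. The group labels, counts, shares and claim lengths are hypothetical values chosen to make the arithmetic easy to follow.

```python
def poststratification_weights(pilot_counts, national_shares):
    """Weight for each group = national share / pilot share."""
    total = sum(pilot_counts.values())
    return {
        group: national_shares[group] / (count / total)
        for group, count in pilot_counts.items()
    }


def weighted_mean(group_means, pilot_counts, weights):
    """Mean outcome across groups after applying the weights."""
    numerator = sum(
        weights[g] * pilot_counts[g] * group_means[g] for g in pilot_counts
    )
    denominator = sum(weights[g] * pilot_counts[g] for g in pilot_counts)
    return numerator / denominator


# Hypothetical inputs, for illustration only.
pilot_counts = {"under_25": 300, "25_plus": 700}
national_shares = {"under_25": 0.25, "25_plus": 0.75}
mean_claim_days = {"under_25": 80.0, "25_plus": 100.0}

weights = poststratification_weights(pilot_counts, national_shares)
national_estimate = weighted_mean(mean_claim_days, pilot_counts, weights)
print(f"weighted mean claim length = {national_estimate:.1f} days")
```

In this made-up example the pilot over-represents under-25s, so weighting shifts the estimate towards the older group's mean.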

Results 13 week excusal pilot

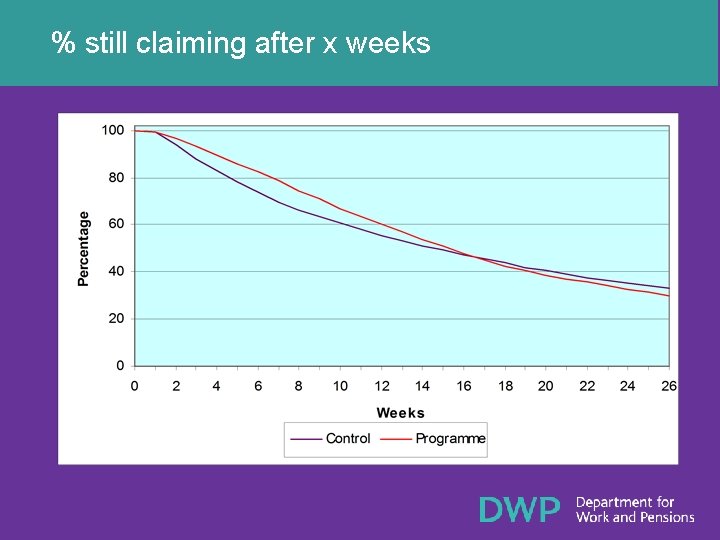

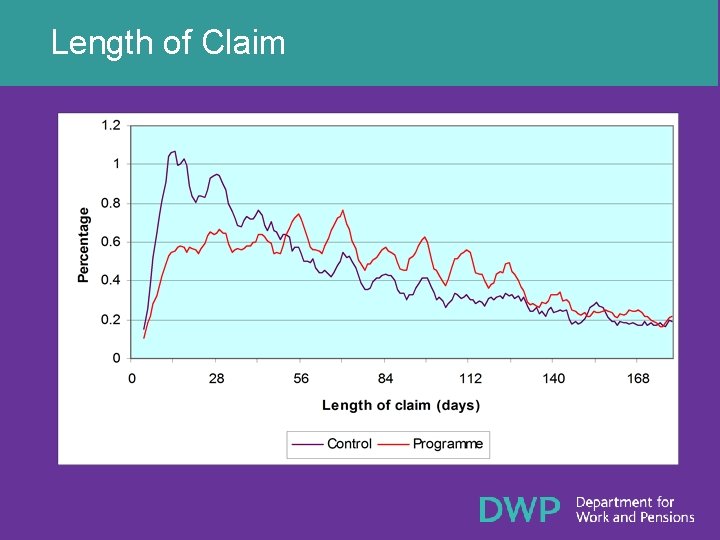

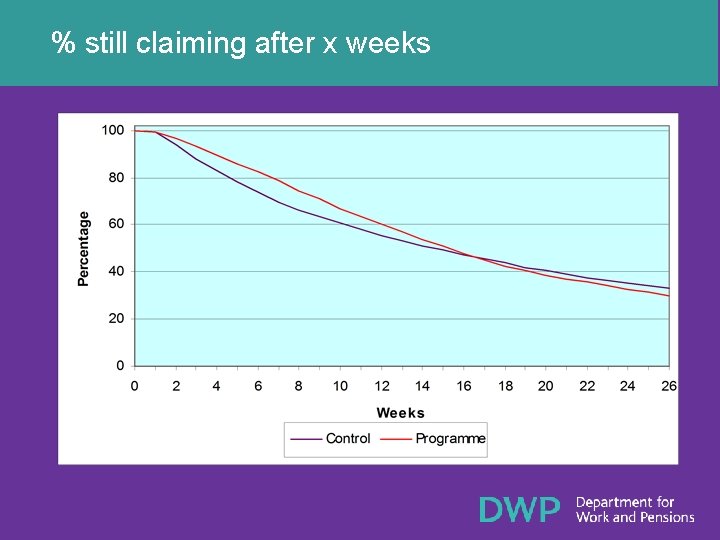

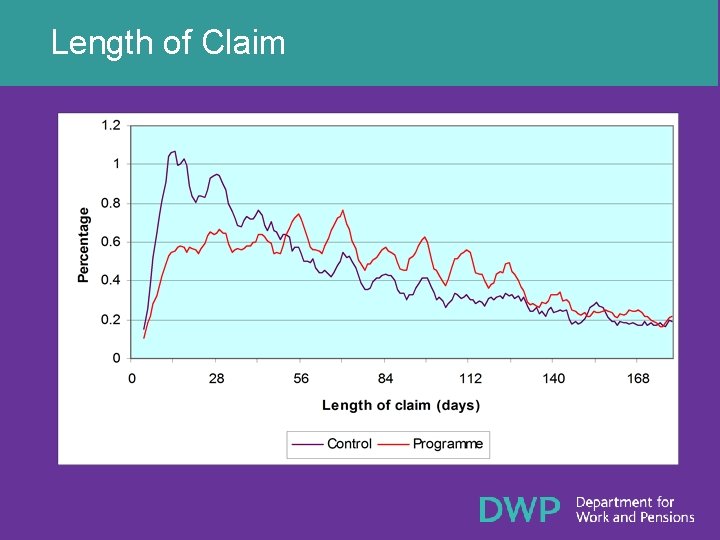

[Chart: % still claiming after x weeks, by length of claim]

Results § Average length of claim is 5.9 days greater in programme group than in control group § Weighting the results to be representative nationally suggests an increase of 6.1 days in average length of claim § No difference in the proportions who moved into work

Results (cont) 4 reasons for difference in length of claim: § Some people take longer to find work § Some people take longer to tell us they have found work § Some control group customers fail to attend and have to start a new claim § Some people fail to sign off for other reasons

Results (cont) § Work study provided estimates of savings § Extra benefits paid as a result of increase in average length of claim exceed savings § Qualitative evaluation suggested that the pilot was implemented well § Customers were happy not to have to attend every fortnight

More information § DWP Research Report 300: The Qualitative Evaluation of the JSA Intervention Regime Pilots § DWP Research Report 382: Jobseeker’s Allowance Intervention Pilots Quantitative Evaluation § Available on DWP Website: http://www.dwp.gov.uk/asd5/rrs-index.asp

Job Retention and Rehabilitation Pilot: Lessons learnt in running an RCT. James Holland

Structure 1. Background to JRRP 2. Results of the trial 3. Hypotheses 4. Conclusions: Importance of complementary methodologies

Design § 4-way trial § To test the effectiveness of a person-centred case management approach and increased range of treatments in helping people retain work – Health care focused – Workplace focused – Combined health care and/or workplace focused – Control group § Four service providers in six parts of the country § Participants were people off work sick and unlikely to return to work without help

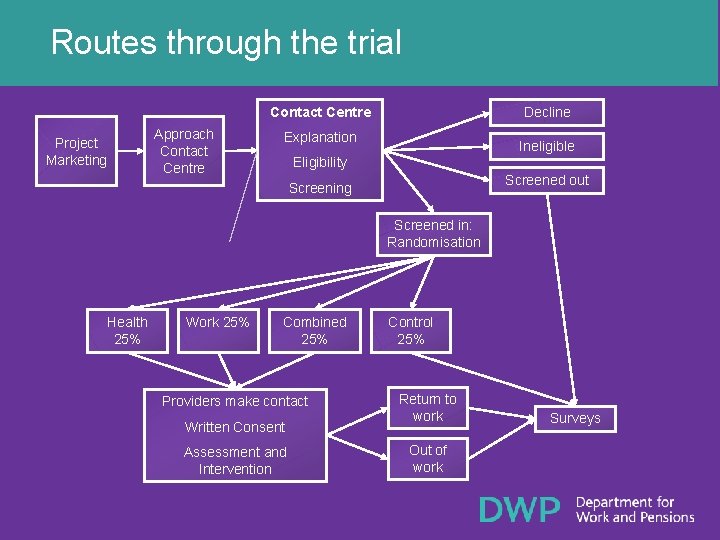

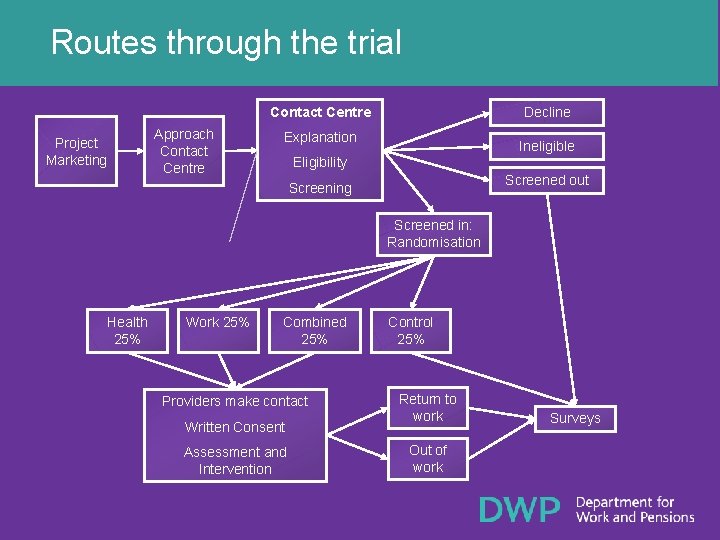

Routes through the trial [Flow chart labels: Project marketing; Approach Contact Centre; Contact Centre explanation (or decline); Eligibility (or ineligible); Screening (or screened out); Screened in: Randomisation to Health 25%, Work 25%, Combined 25%, Control 25%; Providers make contact; Written consent; Assessment and intervention; Return to work / Out of work; Surveys]

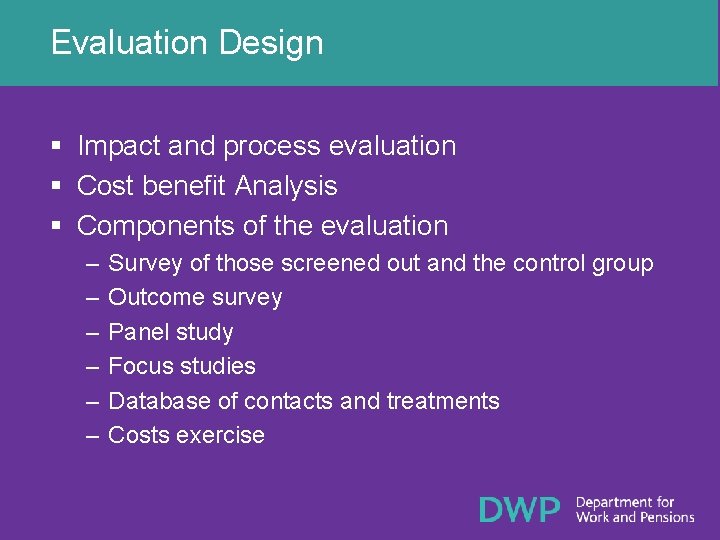

Evaluation Design § Impact and process evaluation § Cost benefit analysis § Components of the evaluation – Survey of those screened out and the control group – Outcome survey – Panel study – Focus studies – Database of contacts and treatments – Costs exercise

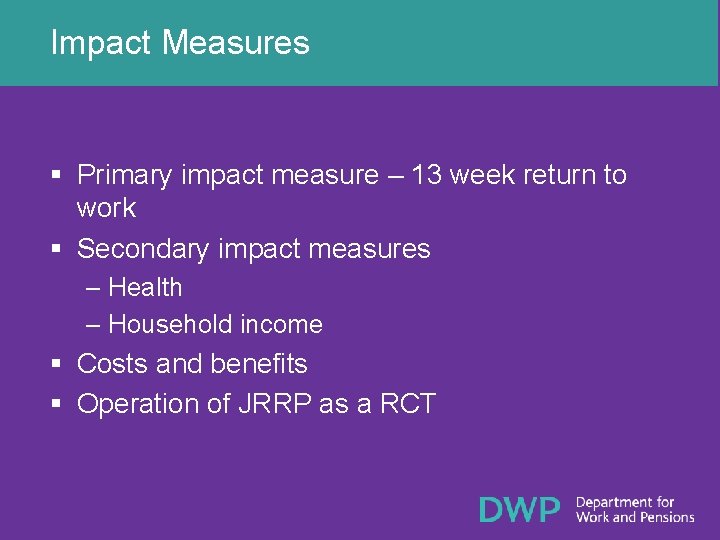

Impact Measures § Primary impact measure – 13 week return to work § Secondary impact measures – Health – Household income § Costs and benefits § Operation of JRRP as an RCT

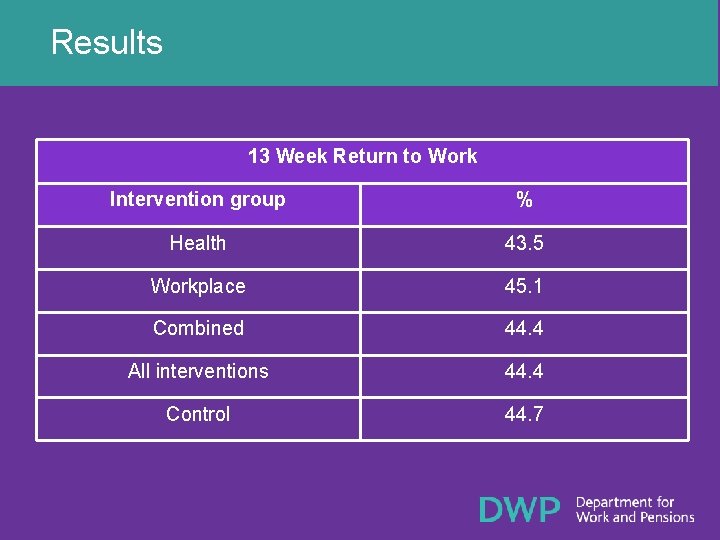

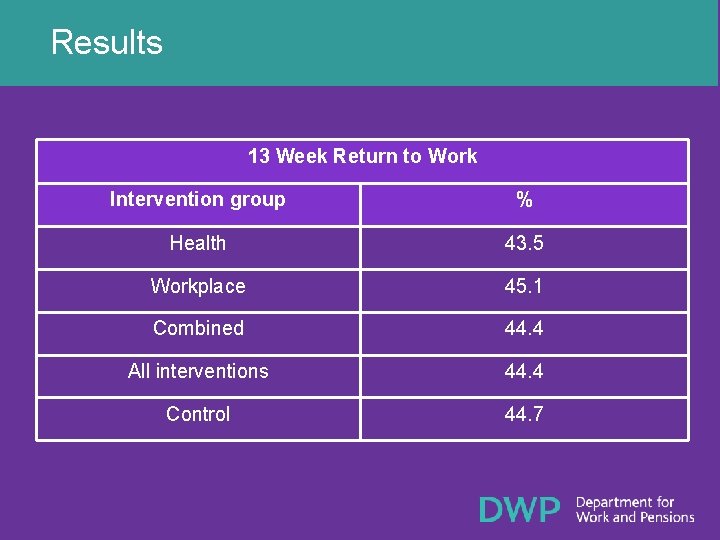

Results: 13 Week Return to Work

| Intervention group | % |
|---|---|
| Health | 43.5 |
| Workplace | 45.1 |
| Combined | 44.4 |
| All interventions | 44.4 |
| Control | 44.7 |

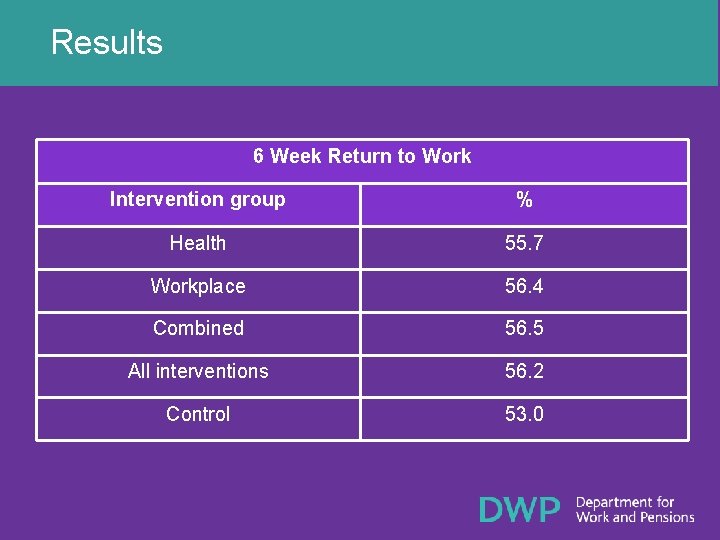

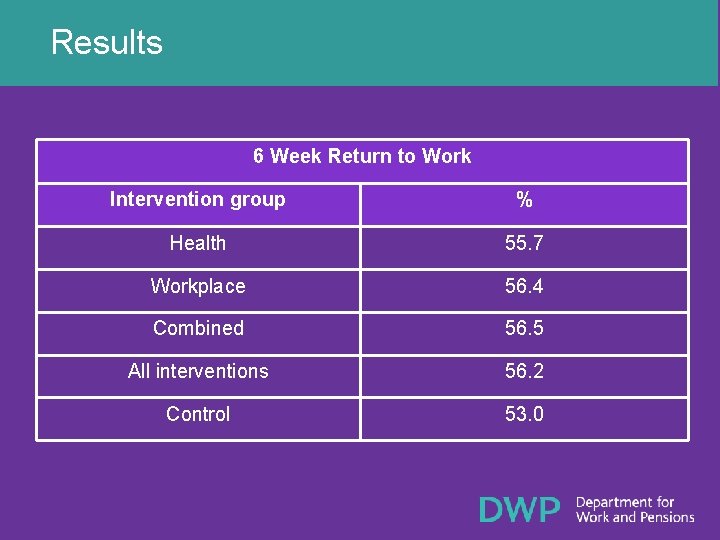

Results: 6 Week Return to Work

| Intervention group | % |
|---|---|
| Health | 55.7 |
| Workplace | 56.4 |
| Combined | 56.5 |
| All interventions | 56.2 |
| Control | 53.0 |

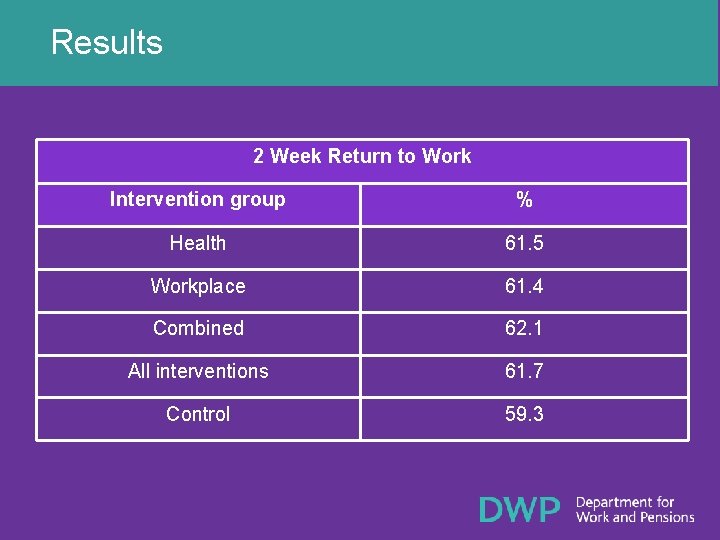

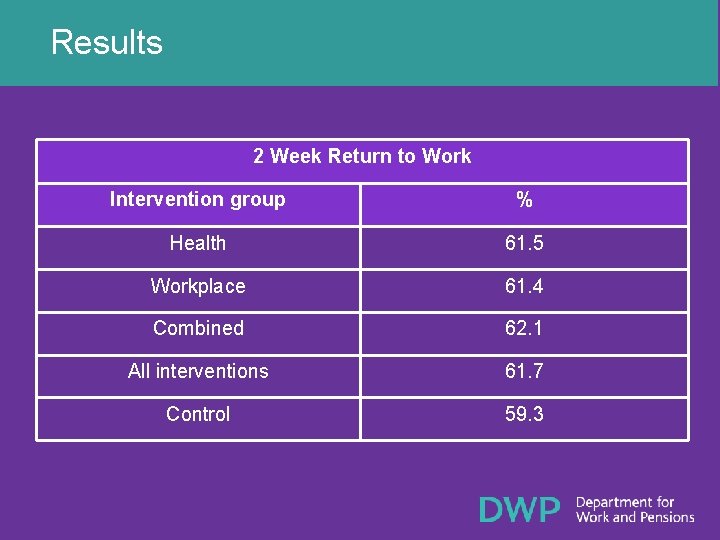

Results: 2 Week Return to Work

| Intervention group | % |
|---|---|
| Health | 61.5 |
| Workplace | 61.4 |
| Combined | 62.1 |
| All interventions | 61.7 |
| Control | 59.3 |

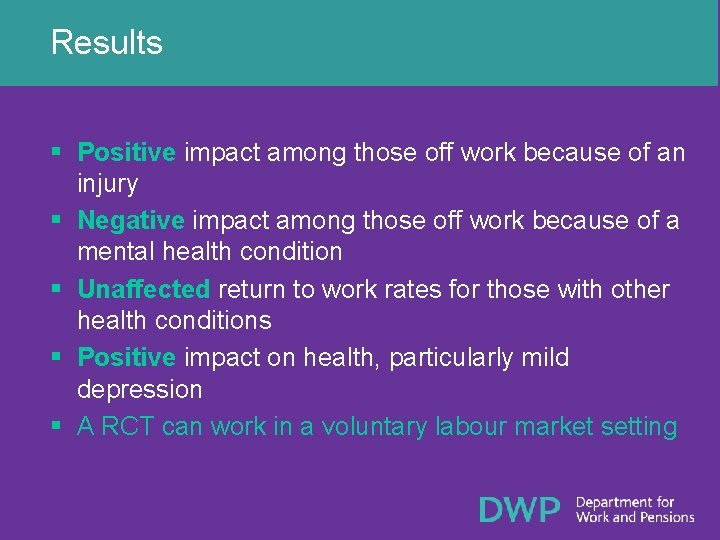

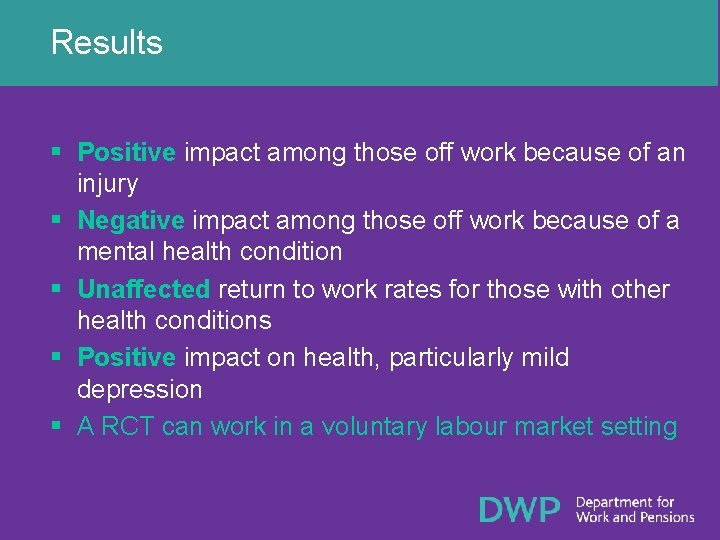

Results § Positive impact among those off work because of an injury § Negative impact among those off work because of a mental health condition § Unaffected return to work rates for those with other health conditions § Positive impact on health, particularly mild depression § An RCT can work in a voluntary labour market setting

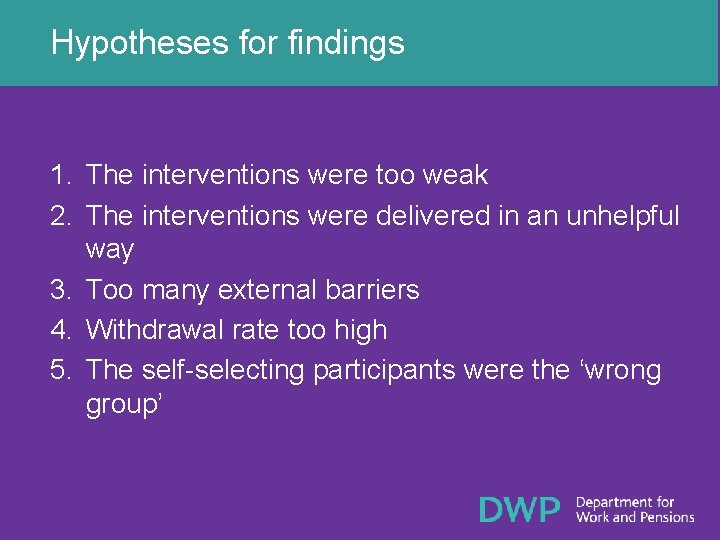

Hypotheses for findings 1. The interventions were too weak 2. The interventions were delivered in an unhelpful way 3. Too many external barriers 4. Withdrawal rate too high 5. The self-selecting participants were the ‘wrong group’

Missing evidence § Evidence of problems mostly drawn from qual research § This generates hypotheses/explanations but does not allow for quantification § Biggest gap is (quant) understanding of behaviour of control group § In retrospect, needed data on self-motivation and better understanding of participant/provider interaction

Thoughts on how to do it better § Set out possible scenarios at start § Early qual research on behaviours of participants and control group § Early impact estimates so that later research can be adapted

Conclusions § RCTs are the gold standard for programme evaluation § But there are a number of problems, both practical and methodological § They need supplementing with a good quality process evaluation

Contact Details: James Holland, Disability and Work Division, DWP, James.holland1@dwp.gsi.gov.uk, 0114 209 8280. Reports available via www.dwp.gsi.gov.uk/asd5

Put apostrophe: mices tails are shorter than rats tails.

Put apostrophe: mices tails are shorter than rats tails. A pilot sets out from an airport and heads in the direction

A pilot sets out from an airport and heads in the direction Figurative language in the song one thing by one direction

Figurative language in the song one thing by one direction Review and revise your tentative goal statement

Review and revise your tentative goal statement My hope and firm foundation

My hope and firm foundation Do asexual people have sex

Do asexual people have sex I am writing email

I am writing email And the tree was happy

And the tree was happy Use the labels to draw and annotate a cell membrane

Use the labels to draw and annotate a cell membrane Tlri generator services

Tlri generator services If love is the answer you are wrong

If love is the answer you are wrong How to act angry

How to act angry You're my master

You're my master If less is more how you keeping score

If less is more how you keeping score Hello hello good afternoon

Hello hello good afternoon Cybersmart definition

Cybersmart definition How tall are caribou

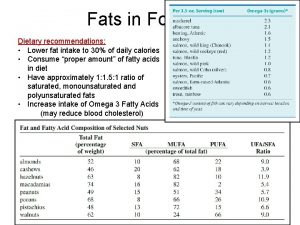

How tall are caribou Nonpolar tails

Nonpolar tails Common fairy tails

Common fairy tails Split laceration

Split laceration Tails graph acronym

Tails graph acronym Animals with prehensile tails

Animals with prehensile tails Phospholipid bilayer tails

Phospholipid bilayer tails Tails acronym graphs

Tails acronym graphs Tails roadmap

Tails roadmap Macrocytic anemia

Macrocytic anemia Tails operációs rendszer

Tails operációs rendszer Plantlike organisms that live on dead organic matter

Plantlike organisms that live on dead organic matter Someone left their backpack in the library pronoun shift

Someone left their backpack in the library pronoun shift Tails graph

Tails graph Semen analysis

Semen analysis This cloud sometimes resembles mare's tails.

This cloud sometimes resembles mare's tails. Okay coconut man

Okay coconut man Hydrophilic heads

Hydrophilic heads A motorboat heads due east at 16 m/s

A motorboat heads due east at 16 m/s Water supply requirement

Water supply requirement Heads up academy

Heads up academy Heads up tackling circuit

Heads up tackling circuit Heads down digitizing

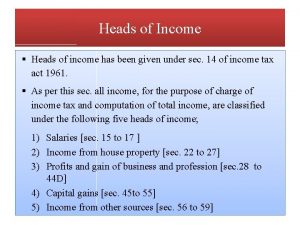

Heads down digitizing Income tax slab

Income tax slab Technical assistance for teachers

Technical assistance for teachers Cc: all deans and heads

Cc: all deans and heads The chief story

The chief story Mcq on single point cutting tool

Mcq on single point cutting tool Types of screw heads

Types of screw heads Easter island stonehenge

Easter island stonehenge Feed enzyme

Feed enzyme Personification about a house

Personification about a house Van gogh silent night

Van gogh silent night Heads paradoxical reflex

Heads paradoxical reflex Wheel and axle examples around the house

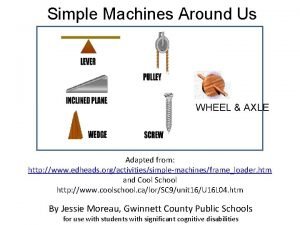

Wheel and axle examples around the house Why do irish have big heads

Why do irish have big heads Daimyo were heads of noble families who

Daimyo were heads of noble families who Boundary line between lower limb and pelvis

Boundary line between lower limb and pelvis Running head apa

Running head apa Hydrophilic heads

Hydrophilic heads Heads up tackling

Heads up tackling