ESPC Infrastructure Update on HYCOM in CESM Alex

- Slides: 28

ESPC Infrastructure
- Update on HYCOM in CESM (Alex Bozec, Fei Liu, Kathy Saint, Mat Rothstein, Mariana Vertenstein, Jim Edwards, …)
- Update on ESMF integration with MOAB (Bob Oehmke)
- Update on accelerator-aware ESMF (Jayesh Krishna, Gerhard Theurich)
- Update on the Earth System Prediction Suite (Cecelia DeLuca)

ESPC Air-Ocean-Land-Ice Global Coupled Prediction Project Meeting, 19-20 Nov 2014, University of Colorado UMC, Boulder

ESPC Infrastructure: Update on HYCOM in CESM (Alex Bozec, Fei Liu, Kathy Saint, Mat Rothstein, Mariana Vertenstein, Jim Edwards, …)

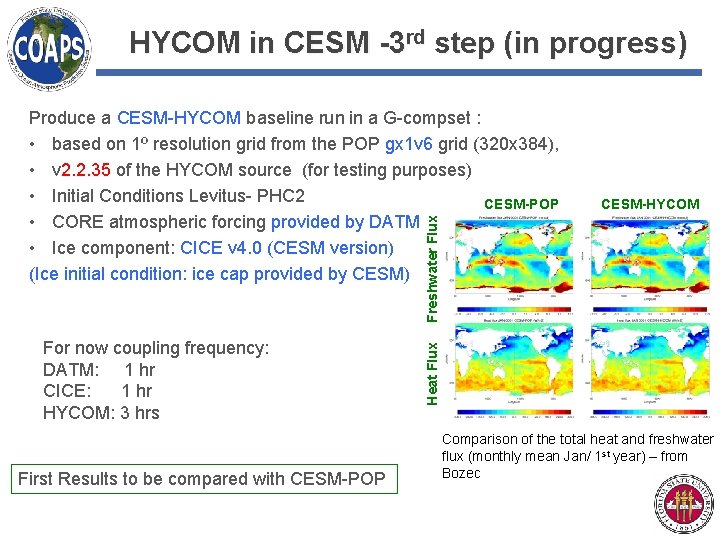

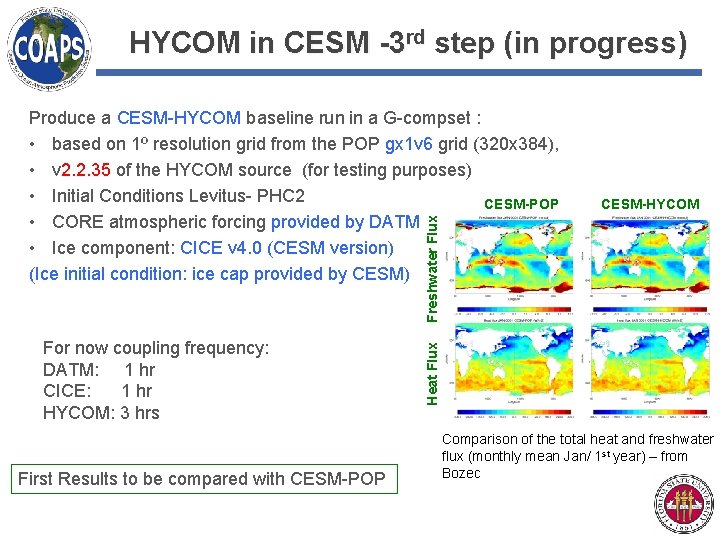

HYCOM in CESM: 3rd step (in progress)

Produce a CESM-HYCOM baseline run in a G compset:
- based on the 1º resolution POP gx1v6 grid (320 x 384)
- v2.2.35 of the HYCOM source (for testing purposes)
- Initial conditions: Levitus-PHC2
- CORE atmospheric forcing provided by DATM
- Ice component: CICE v4.0 (CESM version); ice initial condition: ice cap provided by CESM

Coupling frequency for now: DATM 1 hr, CICE 1 hr, HYCOM 3 hrs.

First results, to be compared with CESM-POP: total heat flux and freshwater flux, monthly mean for January of the 1st year, CESM-HYCOM vs. CESM-POP (from Bozec).
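To make the mixed coupling frequencies concrete, here is a small Python sketch that lists which components exchange fields with the coupler at each hour. It assumes all components start aligned at t = 0 and exchange exactly at integer multiples of their interval; the real CESM sequencing (lags, and averaging of the faster components' fluxes over the slower HYCOM interval) is not modeled.

```python
from math import lcm

# Coupling intervals in hours, as listed on the slide.
COUPLING_INTERVALS_H = {"DATM": 1, "CICE": 1, "HYCOM": 3}


def coupling_events(intervals, hours):
    """Return (hour, [components]) for each hour in [0, hours) at which
    at least one component exchanges fields with the coupler."""
    events = []
    for t in range(hours):
        due = sorted(name for name, dt in intervals.items() if t % dt == 0)
        if due:
            events.append((t, due))
    return events


# The exchange pattern repeats every lcm of the intervals.
period = lcm(*COUPLING_INTERVALS_H.values())

# Number of exchanges per component in one model day.
per_day = {name: 24 // dt for name, dt in COUPLING_INTERVALS_H.items()}

if __name__ == "__main__":
    print("pattern period (h):", period)
    for t, comps in coupling_events(COUPLING_INTERVALS_H, period):
        print(t, comps)
    print("exchanges per day:", per_day)
```

With these intervals the pattern repeats every 3 hours, and HYCOM exchanges 8 times per model day versus 24 for DATM and CICE.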

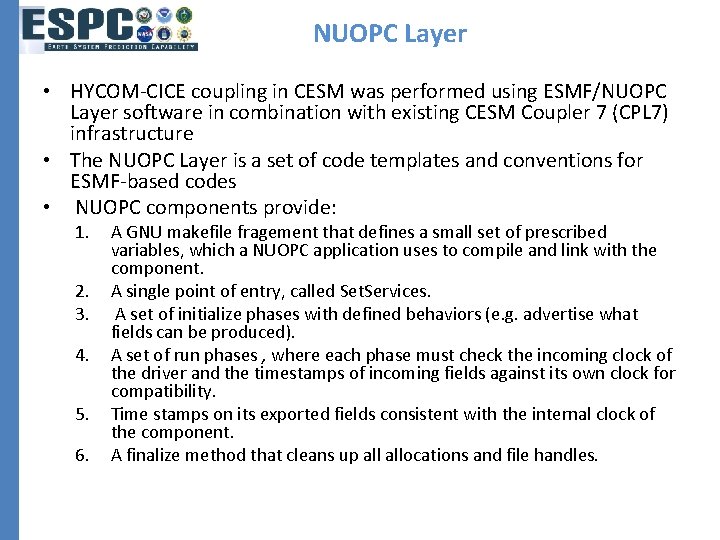

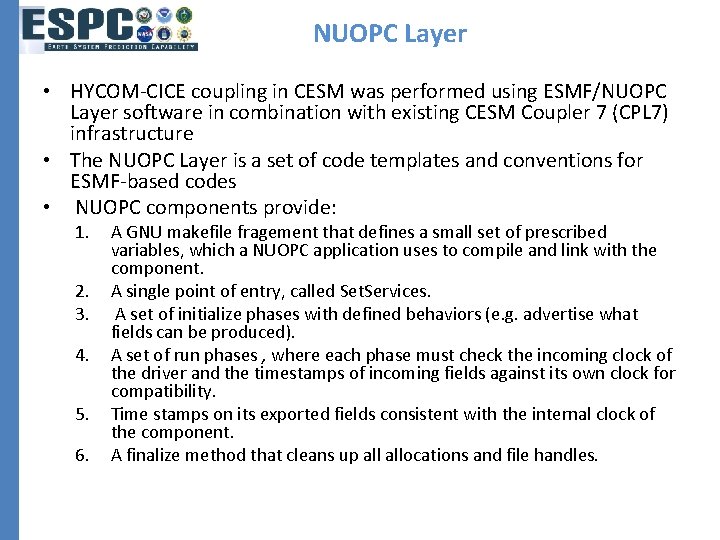

NUOPC Layer
- HYCOM-CICE coupling in CESM was performed using the ESMF/NUOPC Layer software in combination with the existing CESM Coupler 7 (CPL7) infrastructure.
- The NUOPC Layer is a set of code templates and conventions for ESMF-based codes.
- NUOPC components provide:
  1. A GNU makefile fragment that defines a small set of prescribed variables, which a NUOPC application uses to compile and link with the component.
  2. A single point of entry, called SetServices.
  3. A set of initialize phases with defined behaviors (e.g., advertising which fields can be produced).
  4. A set of run phases, where each phase must check the incoming clock of the driver and the timestamps of incoming fields against its own clock for compatibility.
  5. Time stamps on its exported fields that are consistent with the internal clock of the component.
  6. A finalize method that cleans up allocations and file handles.
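The component rules above (single entry point, advertise phase, clock checks in the run phase, timestamped exports, finalize) can be mimicked in a few lines of Python. This is a hypothetical toy, not the ESMF/NUOPC API: `ToyModel`, `ClockMismatch`, the phase names, and the field names `sst`/`taux` are all illustrative, and stamping exports with the post-step time is an assumption of the sketch.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


class ClockMismatch(RuntimeError):
    """Raised when the driver clock or an import timestamp disagrees with the model."""


@dataclass
class ToyModel:
    """Hypothetical Python analogue of a NUOPC Model (not the ESMF API)."""
    current: datetime
    step: timedelta
    advertised: list = field(default_factory=list)
    exports: dict = field(default_factory=dict)   # name -> (timestamp, value)
    phases: dict = field(default_factory=dict)

    def set_services(self):
        # Single point of entry: register phase methods by name.
        self.phases = {
            "initialize_advertise": self.initialize_advertise,
            "run": self.run,
            "finalize": self.finalize,
        }
        return self.phases

    def initialize_advertise(self, names):
        # Advertise which fields this component can produce.
        self.advertised = list(names)

    def run(self, driver_time, imports):
        # Check the incoming driver clock against our own clock.
        if driver_time != self.current:
            raise ClockMismatch(f"driver at {driver_time}, model at {self.current}")
        # Check timestamps of incoming fields against our own clock.
        for name, (stamp, _value) in imports.items():
            if stamp != self.current:
                raise ClockMismatch(f"import {name} stamped {stamp}")
        self.current += self.step
        # Stamp exports with the updated internal clock (sketch assumption).
        for name in self.advertised:
            self.exports[name] = (self.current, 0.0)

    def finalize(self):
        # Release everything the component holds.
        self.exports.clear()
        self.advertised.clear()
```

A driver would call `set_services()` once, then the advertise phase, then `run` each coupling step; a mismatched clock or stale import raises immediately instead of silently exchanging out-of-sync data.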

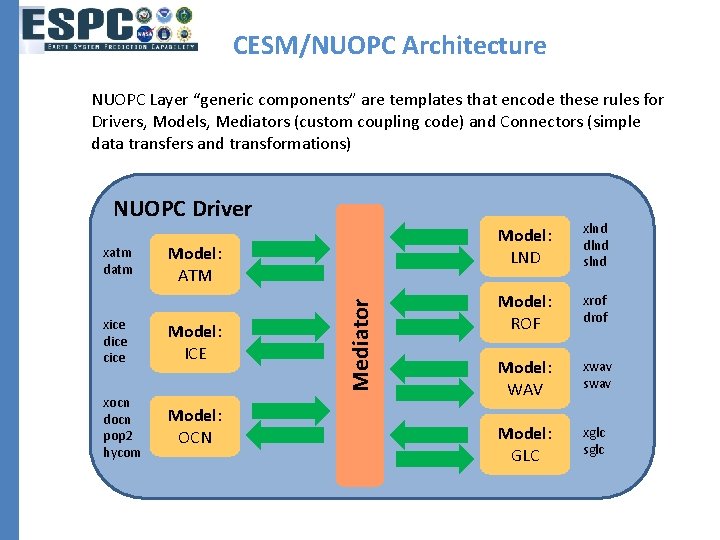

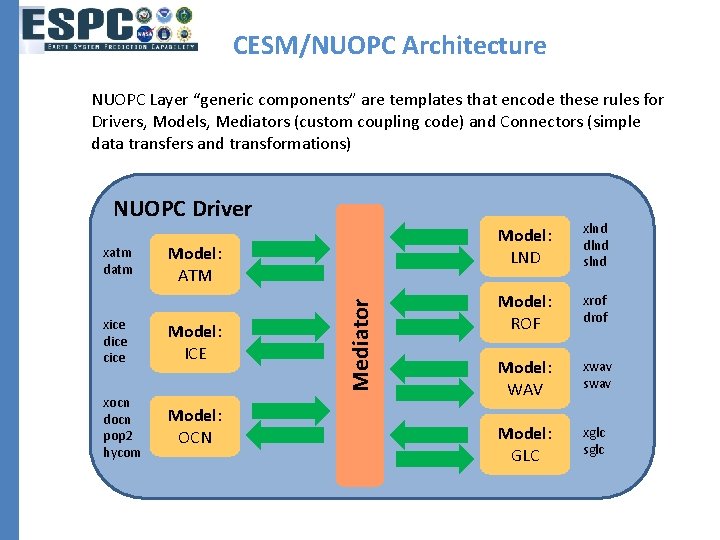

CESM/NUOPC Architecture

NUOPC Layer "generic components" are templates that encode these rules for Drivers, Models, Mediators (custom coupling code), and Connectors (simple data transfers and transformations).

Diagram: a NUOPC Driver with a Mediator and the following Models, each listed with its available implementations:
- Model: ATM (xatm, datm)
- Model: ICE (xice, dice, cice)
- Model: OCN (xocn, docn, pop2, hycom)
- Model: LND (xlnd, dlnd, slnd)
- Model: ROF (xrof, drof)
- Model: WAV (xwav, swav)
- Model: GLC (xglc, sglc)
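The diagram can be read as a table of model slots, each filled by one implementation (following the CESM naming habit of x = dead, d = data, s = stub). The Python sketch below validates a selection against that table and builds Connector pairs, assuming a hub-and-spoke layout in which every Model exchanges data only with the Mediator; the slide does not spell out the connectivity, and `build_connectors` is a hypothetical helper.

```python
# Model slots and implementations as listed on the slide.
MODEL_SLOTS = {
    "ATM": ["xatm", "datm"],
    "ICE": ["xice", "dice", "cice"],
    "OCN": ["xocn", "docn", "pop2", "hycom"],
    "LND": ["xlnd", "dlnd", "slnd"],
    "ROF": ["xrof", "drof"],
    "WAV": ["xwav", "swav"],
    "GLC": ["xglc", "sglc"],
}


def build_connectors(selection):
    """Given {slot: implementation}, return (src, dst) Connector pairs for an
    assumed hub-and-spoke layout: each Model talks only to the Mediator."""
    connectors = []
    for slot, impl in sorted(selection.items()):
        if impl not in MODEL_SLOTS.get(slot, []):
            raise ValueError(f"{impl!r} is not an option for {slot}")
        connectors.append((slot, "MED"))   # Model -> Mediator
        connectors.append(("MED", slot))   # Mediator -> Model
    return connectors


# Example: the G-compset-like configuration from the HYCOM slides.
g_like = {"ATM": "datm", "ICE": "cice", "OCN": "hycom"}
print(build_connectors(g_like))
```

Swapping `"hycom"` for `"pop2"` changes only the OCN slot; the Connector topology stays the same, which is the point of routing all exchanges through the Mediator.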

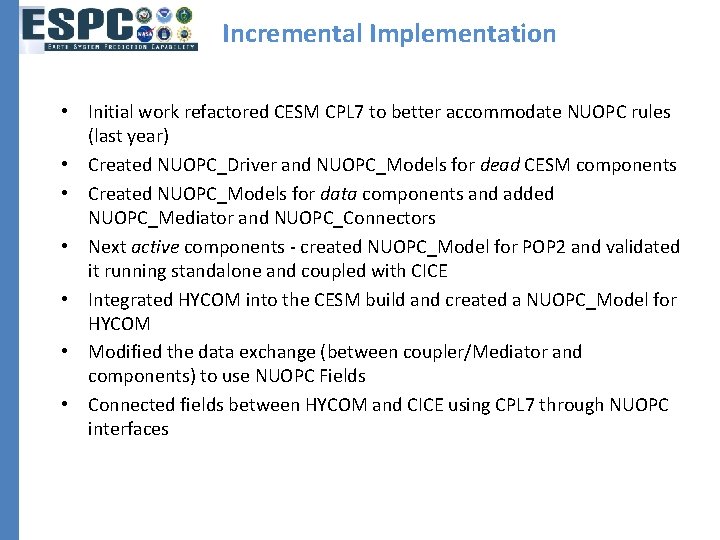

Incremental Implementation
- Initial work (last year) refactored CESM CPL7 to better accommodate NUOPC rules.
- Created a NUOPC_Driver and NUOPC_Models for the dead CESM components.
- Created NUOPC_Models for the data components, and added a NUOPC_Mediator and NUOPC_Connectors.
- Next, active components: created a NUOPC_Model for POP2 and validated it running standalone and coupled with CICE.
- Integrated HYCOM into the CESM build and created a NUOPC_Model for HYCOM.
- Modified the data exchange (between the coupler/Mediator and components) to use NUOPC Fields.
- Connected fields between HYCOM and CICE using CPL7 through NUOPC interfaces.

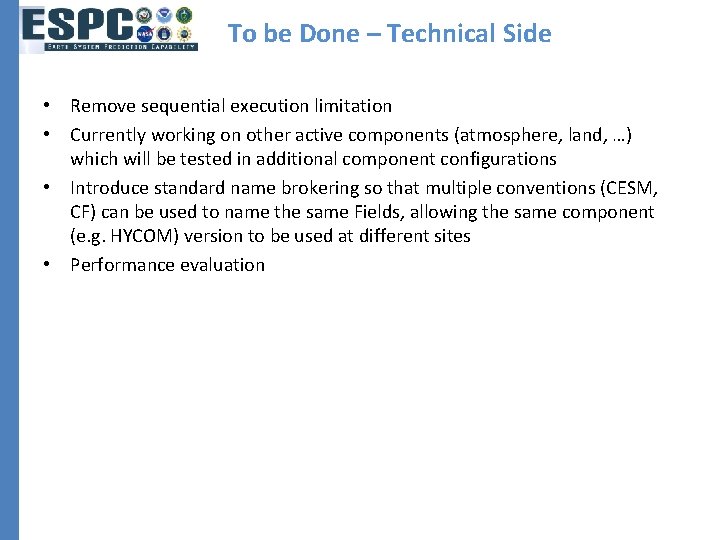

To Be Done: Technical Side
- Remove the sequential execution limitation.
- Currently working on other active components (atmosphere, land, …), which will be tested in additional component configurations.
- Introduce standard name brokering so that multiple conventions (CESM, CF) can be used to name the same Fields, allowing the same version of a component (e.g., HYCOM) to be used at different sites.
- Performance evaluation.
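Standard name brokering can be pictured as an alias table that maps each convention's field name to one canonical quantity, so that a producer and consumer using different conventions can still be paired. The Python sketch below assumes such a table; the CF names are real CF standard names, while the CESM-style names and the `broker` helper are illustrative placeholders, not an authoritative mapping or the planned NUOPC implementation.

```python
# Hypothetical alias table: canonical quantity -> name in each convention.
# The CESM-style names are illustrative placeholders.
ALIASES = {
    "sea_surface_temperature": {"CESM": "So_t", "CF": "sea_surface_temperature"},
    "sea_ice_area_fraction": {"CESM": "Si_ifrac", "CF": "sea_ice_area_fraction"},
}


def build_index(aliases):
    """Map (convention, name) -> canonical quantity."""
    index = {}
    for canonical, names in aliases.items():
        for convention, name in names.items():
            index[(convention, name)] = canonical
    return index


INDEX = build_index(ALIASES)


def broker(exports, export_conv, imports, import_conv):
    """Pair exported names with imported names that denote the same
    canonical quantity, even when the two sides use different conventions."""
    offered = {INDEX[(export_conv, n)]: n for n in exports if (export_conv, n) in INDEX}
    pairs = []
    for n in imports:
        canonical = INDEX.get((import_conv, n))
        if canonical in offered:
            pairs.append((offered[canonical], n))
    return pairs


# A component exporting CF names, consumed by a CESM-named coupler.
print(broker(["sea_surface_temperature"], "CF", ["So_t", "Si_ifrac"], "CESM"))
```

Unmatched imports (here the ice fraction, which nobody exports) are simply left unpaired, which a real broker would presumably report as an unsatisfied connection.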

ESPC Infrastructure: Update on ESMF integration with MOAB (Bob Oehmke)

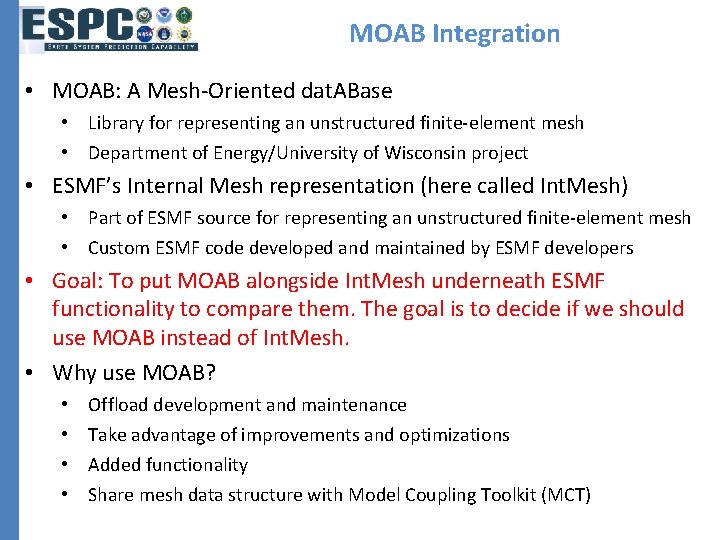

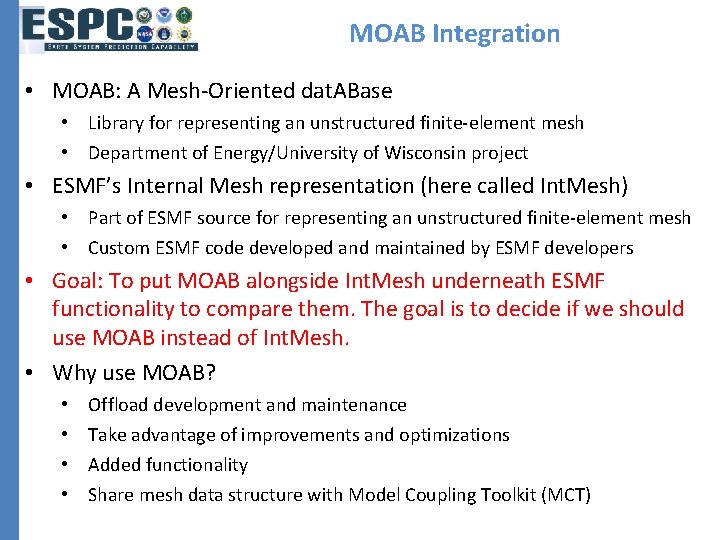

MOAB Integration
- MOAB: A Mesh-Oriented datABase
  - Library for representing an unstructured finite-element mesh
  - Department of Energy/University of Wisconsin project
- ESMF's internal mesh representation (here called IntMesh)
  - Part of the ESMF source for representing an unstructured finite-element mesh
  - Custom ESMF code developed and maintained by ESMF developers
- Goal: put MOAB alongside IntMesh underneath ESMF functionality to compare them, and decide whether MOAB should replace IntMesh.
- Why use MOAB?
  - Offload development and maintenance
  - Take advantage of improvements and optimizations
  - Added functionality
  - Share the mesh data structure with the Model Coupling Toolkit (MCT)

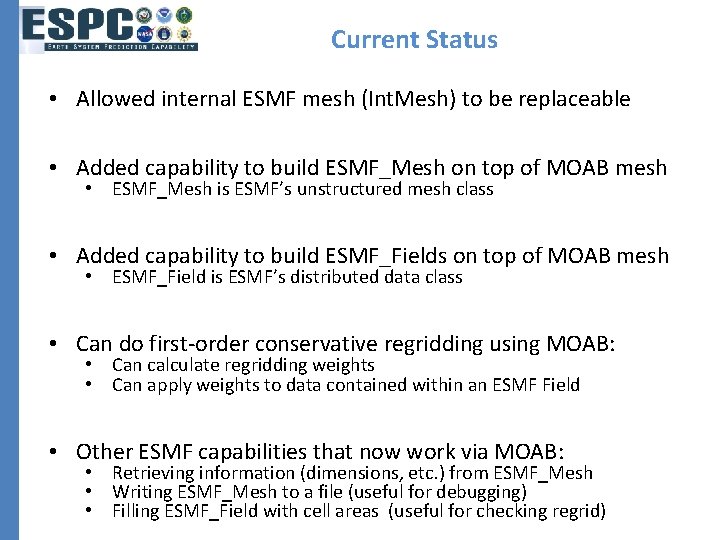

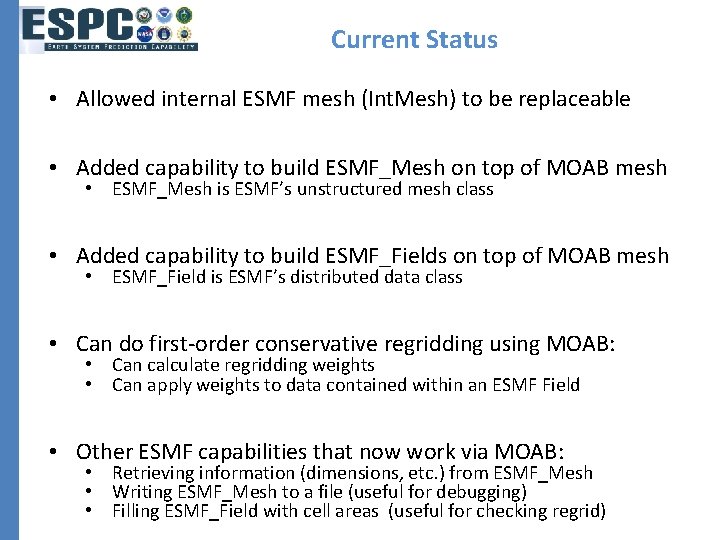

Current Status
- Made the internal ESMF mesh (IntMesh) replaceable
- Added capability to build an ESMF_Mesh on top of a MOAB mesh
  - ESMF_Mesh is ESMF's unstructured mesh class
- Added capability to build ESMF_Fields on top of a MOAB mesh
  - ESMF_Field is ESMF's distributed data class
- Can do first-order conservative regridding using MOAB:
  - Can calculate regridding weights
  - Can apply weights to data contained within an ESMF_Field
- Other ESMF capabilities that now work via MOAB:
  - Retrieving information (dimensions, etc.) from an ESMF_Mesh
  - Writing an ESMF_Mesh to a file (useful for debugging)
  - Filling an ESMF_Field with cell areas (useful for checking regridding)
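First-order conservative regridding computes each weight as the overlap between a destination cell and a source cell, divided by the destination cell's size, then applies the weights as a sparse matrix-vector product. The Python sketch below shows this on 1-D cells (overlap lengths standing in for the overlap areas ESMF computes on 2-D spherical cells); the helper names are hypothetical. The final check mirrors why cell areas are useful: the area-weighted integral should be preserved.

```python
def overlap(a0, a1, b0, b1):
    """Length of the intersection of [a0, a1] and [b0, b1] (0 if disjoint)."""
    return max(0.0, min(a1, b1) - max(a0, b0))


def conservative_weights(src_edges, dst_edges):
    """First-order conservative weights between 1-D cells given by sorted edges:
    w[(i, j)] = |dst_i intersect src_j| / |dst_i|."""
    weights = {}
    for i in range(len(dst_edges) - 1):
        d0, d1 = dst_edges[i], dst_edges[i + 1]
        for j in range(len(src_edges) - 1):
            ov = overlap(d0, d1, src_edges[j], src_edges[j + 1])
            if ov > 0:
                weights[(i, j)] = ov / (d1 - d0)
    return weights


def apply_weights(weights, src_values, n_dst):
    """Sparse matrix-vector product: dst_i = sum_j w_ij * src_j."""
    dst = [0.0] * n_dst
    for (i, j), w in weights.items():
        dst[i] += w * src_values[j]
    return dst


def cell_sizes(edges):
    return [edges[k + 1] - edges[k] for k in range(len(edges) - 1)]


def integral(edges, values):
    """Size-weighted sum, the 1-D analogue of an area-weighted integral."""
    return sum(s * v for s, v in zip(cell_sizes(edges), values))


src_edges = [0.0, 1.0, 2.0, 3.0, 4.0]
dst_edges = [0.0, 1.5, 4.0]
src_vals = [1.0, 2.0, 3.0, 4.0]
w = conservative_weights(src_edges, dst_edges)
dst_vals = apply_weights(w, src_vals, len(dst_edges) - 1)
print(dst_vals, integral(src_edges, src_vals), integral(dst_edges, dst_vals))
```

Because the destination grid covers the source domain exactly, both integrals come out to 10 here; a mismatch in such a check is a quick signal that weights or cell areas are wrong.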

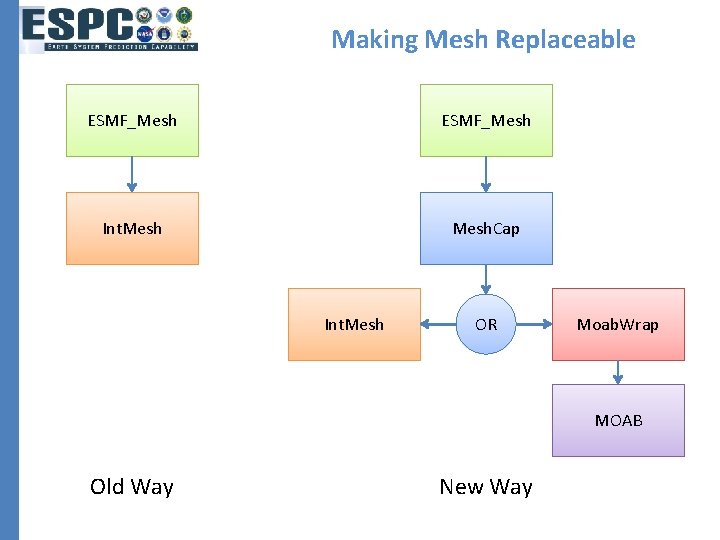

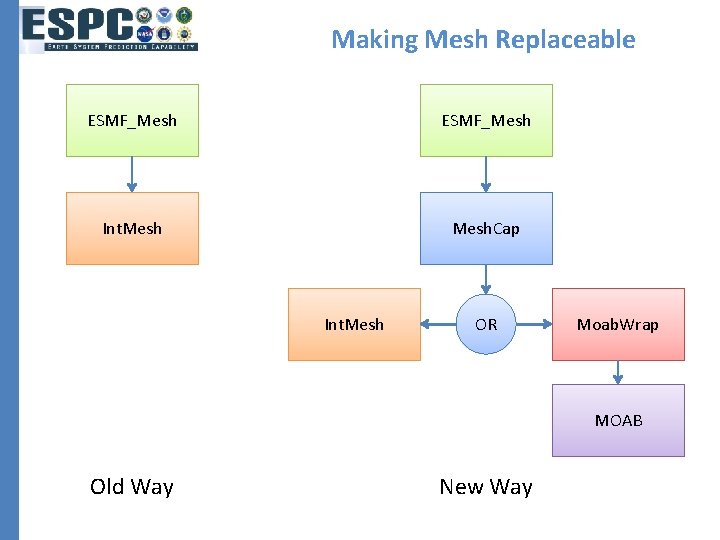

Making Mesh Replaceable
- Old way: ESMF_Mesh is built directly on IntMesh.
- New way: ESMF_Mesh sits on either IntMeshCap (wrapping IntMesh) OR MoabWrap (wrapping MOAB).
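The "new way" is an adapter layer: ESMF_Mesh-level code talks to one interface, and each backend wraps its own mesh library. The Python sketch below illustrates that pattern with toy classes named after IntMeshCap and MoabWrap; the actual ESMF classes are C++ and their methods are not shown on the slide, so every method here is hypothetical. The MOAB-like backend stores integer handles plus a separate global-id table to echo MOAB's handle-and-tag style.

```python
from abc import ABC, abstractmethod


class MeshBackend(ABC):
    """Hypothetical interface that mesh-level code depends on."""

    @abstractmethod
    def add_node(self, gid, coords): ...

    @abstractmethod
    def add_element(self, gid, node_gids): ...

    @abstractmethod
    def num_elements(self): ...


class IntMeshCap(MeshBackend):
    """Toy stand-in for the internal mesh: objects keyed directly by global id."""

    def __init__(self):
        self._nodes, self._elems = {}, {}

    def add_node(self, gid, coords):
        self._nodes[gid] = tuple(coords)

    def add_element(self, gid, node_gids):
        self._elems[gid] = tuple(node_gids)

    def num_elements(self):
        return len(self._elems)


class MoabWrap(MeshBackend):
    """Toy stand-in for a MOAB-backed mesh: entities are integer handles,
    and global ids live in a separate table (like a MOAB tag)."""

    def __init__(self):
        self._next = 1
        self._gid_tag = {}       # handle -> global id
        self._coords = {}        # node handle -> coordinates
        self._conn = {}          # element handle -> node handles
        self._node_by_gid = {}   # global id -> node handle

    def _new_handle(self):
        h = self._next
        self._next += 1
        return h

    def add_node(self, gid, coords):
        h = self._new_handle()
        self._gid_tag[h] = gid
        self._coords[h] = tuple(coords)
        self._node_by_gid[gid] = h

    def add_element(self, gid, node_gids):
        h = self._new_handle()
        self._gid_tag[h] = gid
        self._conn[h] = tuple(self._node_by_gid[g] for g in node_gids)

    def num_elements(self):
        return len(self._conn)


class Mesh:
    """Toy analogue of ESMF_Mesh: identical code path for either backend."""

    def __init__(self, backend: MeshBackend):
        self._b = backend

    def build(self, nodes, elements):
        for gid, xy in nodes.items():
            self._b.add_node(gid, xy)
        for gid, conn in elements.items():
            self._b.add_element(gid, conn)
        return self

    def num_elements(self):
        return self._b.num_elements()
```

Because `Mesh` only sees `MeshBackend`, comparing the two backends for accuracy or performance reduces to running the same calls with a different constructor argument.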

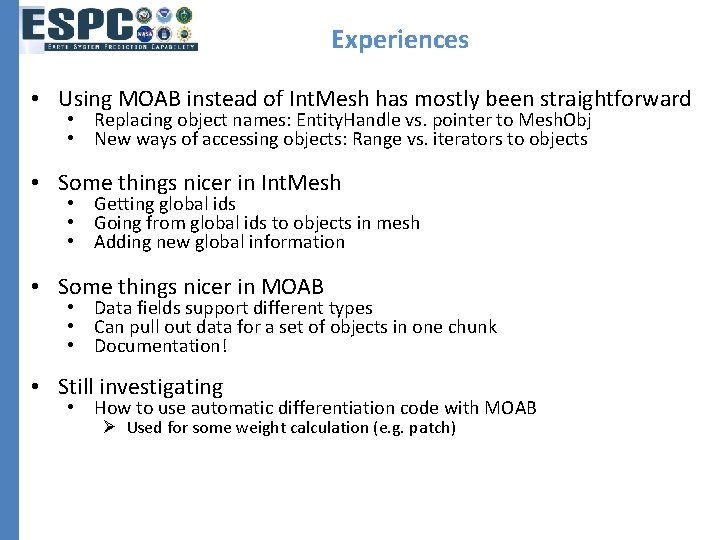

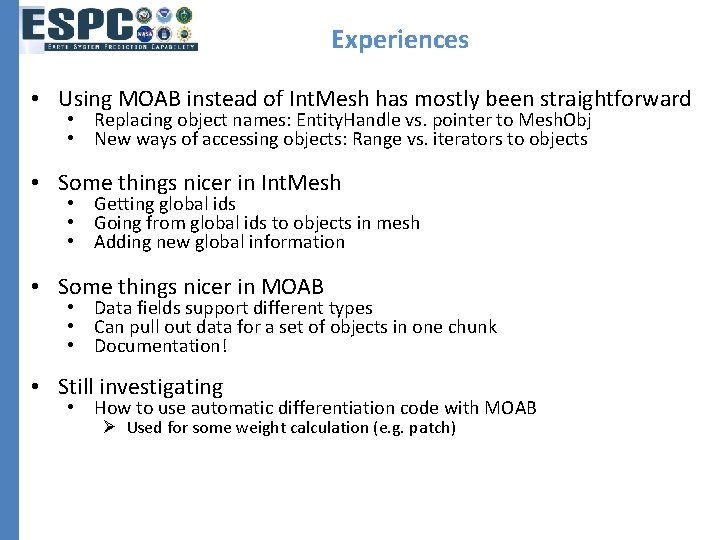

Experiences
- Using MOAB instead of IntMesh has mostly been straightforward:
  - Replacing object names: EntityHandle vs. pointer to MeshObj
  - New ways of accessing objects: Range vs. iterators to objects
- Some things are nicer in IntMesh:
  - Getting global ids
  - Going from global ids to objects in the mesh
  - Adding new global information
- Some things are nicer in MOAB:
  - Data fields support different types
  - Can pull out data for a set of objects in one chunk
  - Documentation!
- Still investigating:
  - How to use the automatic differentiation code with MOAB
    - Used for some weight calculations (e.g., patch)
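Two of the MOAB differences above, accessing objects through a Range of handles and pulling data for a whole set of objects at once, can be illustrated with a toy in Python. MOAB's Range stores handles compactly as runs of consecutive values; `HandleRange` and `tag_get_data` below are simplified stand-ins (the latter is named after MOAB's `tag_get_data`, but this is not its signature).

```python
class HandleRange:
    """Toy MOAB-style Range: a sorted set of integer handles stored as
    closed [first, last] runs instead of one entry per handle."""

    def __init__(self, handles=()):
        self.runs = []
        for h in sorted(set(handles)):
            if self.runs and h == self.runs[-1][1] + 1:
                self.runs[-1][1] = h          # extend the current run
            else:
                self.runs.append([h, h])      # start a new run

    def __iter__(self):
        for first, last in self.runs:
            yield from range(first, last + 1)

    def __len__(self):
        return sum(last - first + 1 for first, last in self.runs)


def tag_get_data(tag, rng):
    """Return the tag values for every handle in rng, in range order,
    as one chunk (toy version of a bulk tag query)."""
    return [tag[h] for h in rng]


r = HandleRange([5, 1, 2, 3, 7, 8])
print(r.runs, len(r), list(r))
```

Meshes usually create entities in long consecutive blocks, so a run-based range stays small even for millions of handles, which is what makes bulk queries over a Range cheap.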

Wrapping Up
- In-depth comparison of ESMF capabilities using MOAB vs. IntMesh:
  - Accuracy
  - Performance
- Decision about whether to replace IntMesh with MOAB

ESPC Infrastructure: Update on accelerator-aware ESMF (Jayesh Krishna, Gerhard Theurich)

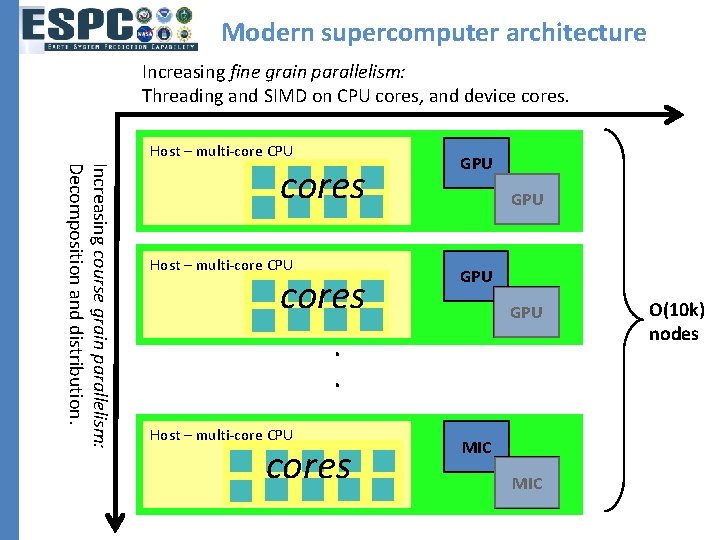

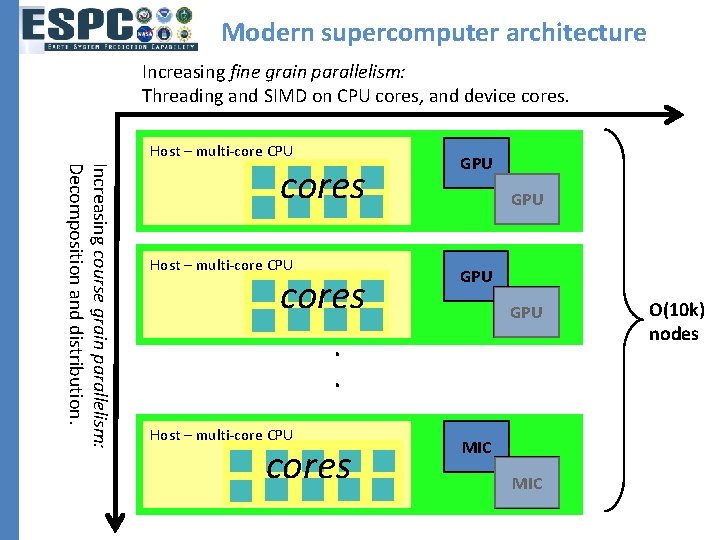

Modern supercomputer architecture
- Increasing fine-grain parallelism: threading and SIMD on CPU cores, and device cores.
- Increasing coarse-grain parallelism: decomposition and distribution.
- Diagram: O(10k) nodes, each a host multi-core CPU with attached accelerator devices (GPU or MIC).
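The two levels of parallelism can be illustrated with a simple two-level block decomposition: the coarse-grain level splits a 1-D index space across nodes, and the fine-grain level splits each node's block across its cores. This Python sketch assumes even block splitting and a 1-D domain purely for illustration; it is not how ESMF distributes data.

```python
def block_ranges(n, parts):
    """Split range(n) into `parts` contiguous blocks whose sizes differ by at most 1."""
    base, extra = divmod(n, parts)
    ranges, start = [], 0
    for p in range(parts):
        size = base + (1 if p < extra else 0)
        ranges.append(range(start, start + size))
        start += size
    return ranges


def two_level(n, nodes, cores_per_node):
    """Coarse grain: one block per node; fine grain: sub-blocks per core.
    Returns {(node, core): range of global indices}."""
    layout = {}
    for node, node_block in enumerate(block_ranges(n, nodes)):
        for core, sub in enumerate(block_ranges(len(node_block), cores_per_node)):
            layout[(node, core)] = range(node_block.start + sub.start,
                                         node_block.start + sub.stop)
    return layout


for key, idx in two_level(10, nodes=2, cores_per_node=2).items():
    print(key, list(idx))
```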

ESMF/NUOPC accelerator project
Goal: To support migration of optimization strategies from the ESPC Coupling Testbed to infrastructure packages and coupled model applications, and to provide support for coupling of optimized components in the ESPC program.

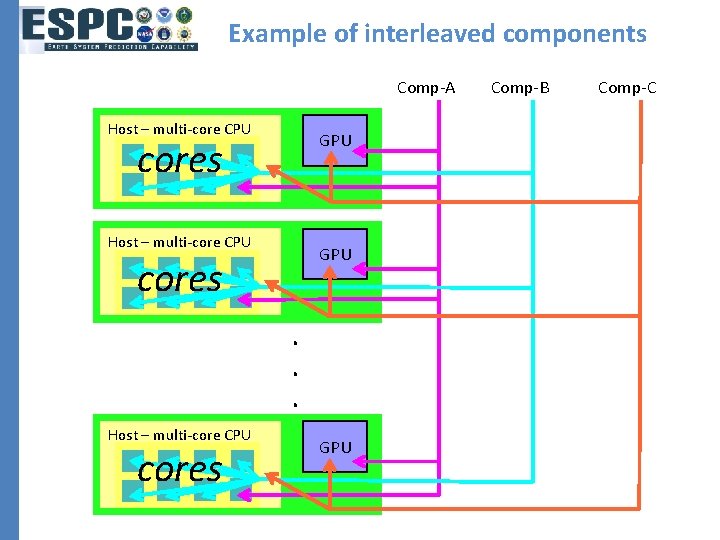

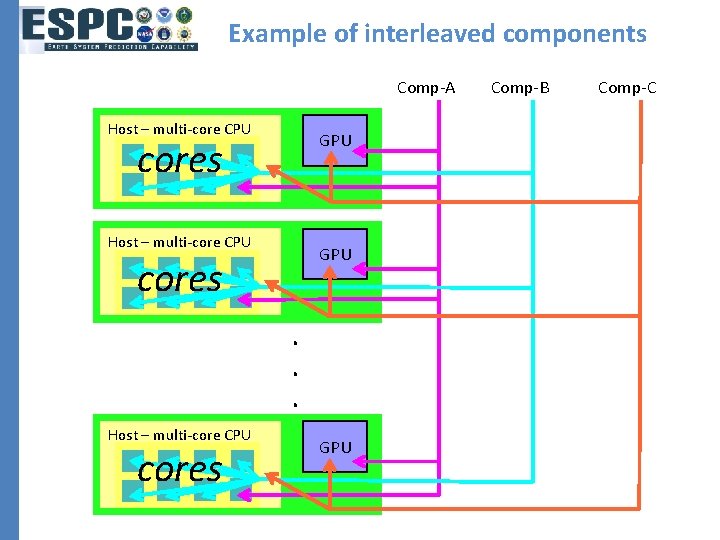

Example of interleaved components: diagram showing Comp-A, Comp-B, and Comp-C interleaved across the host multi-core CPU cores and GPUs of the nodes.
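One way to picture interleaving on a single node: the accelerated component gets one host core per GPU to drive the device, and the remaining cores are shared out among CPU-only components, so all of them can run concurrently rather than one after another. The Python sketch below is a hypothetical placement policy; the diagram does not say which component uses the GPUs, so assigning them to Comp-B is an assumption, as is the round-robin core split.

```python
def interleave(cores, gpus, accel_component, cpu_components):
    """Hypothetical per-node placement: one host core drives each GPU for the
    accelerated component; remaining cores go round-robin to CPU-only components."""
    if gpus > cores:
        raise ValueError("need at least one host core per GPU")
    plan = {accel_component: {"cores": list(range(gpus)), "gpus": list(range(gpus))}}
    free = list(range(gpus, cores))
    k = len(cpu_components)
    for idx, name in enumerate(cpu_components):
        plan[name] = {"cores": free[idx::k], "gpus": []}
    return plan


# Assumed example: an 8-core node with 2 GPUs, Comp-B on the GPUs.
print(interleave(8, 2, "Comp-B", ["Comp-A", "Comp-C"]))
```

A framework-guided version would derive `cores` and `gpus` from a device query rather than hard-coding them.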

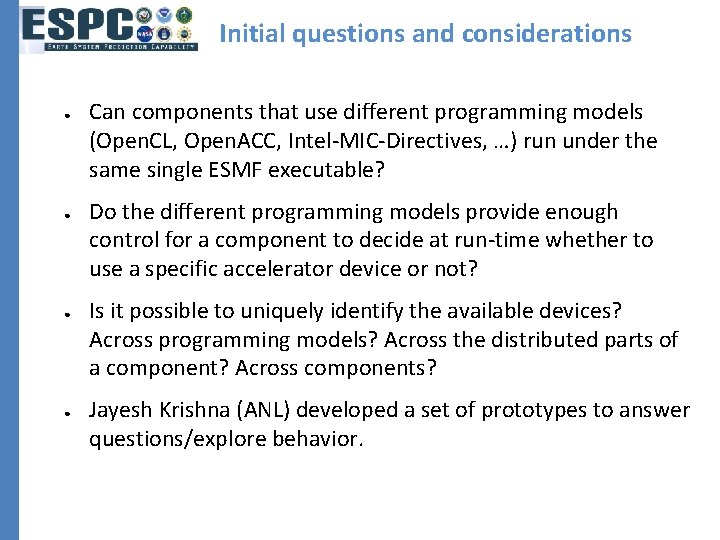

Initial questions and considerations
- Can components that use different programming models (OpenCL, OpenACC, Intel-MIC-Directives, …) run under the same single ESMF executable?
- Do the different programming models provide enough control for a component to decide at run time whether or not to use a specific accelerator device?
- Is it possible to uniquely identify the available devices? Across programming models? Across the distributed parts of a component? Across components?

Jayesh Krishna (ANL) developed a set of prototypes to answer these questions and explore the behavior.

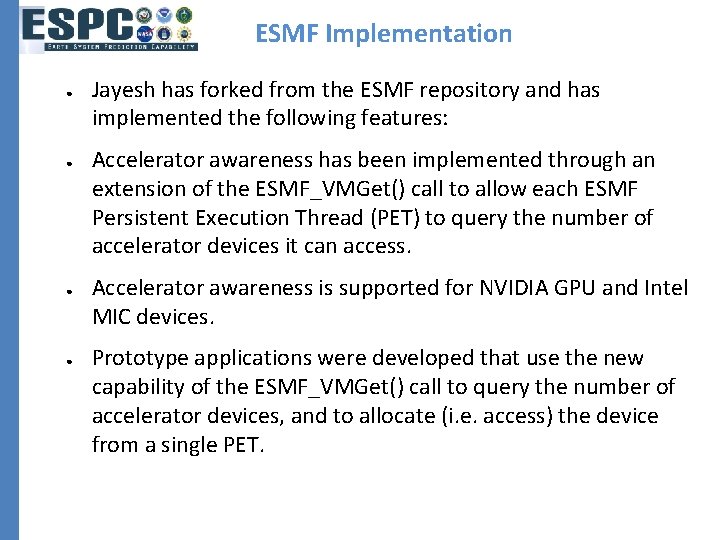

ESMF Implementation

Jayesh has forked the ESMF repository and implemented the following features:
- Accelerator awareness has been implemented through an extension of the ESMF_VMGet() call that allows each ESMF Persistent Execution Thread (PET) to query the number of accelerator devices it can access. Accelerator awareness is supported for NVIDIA GPU and Intel MIC devices.
- Prototype applications were developed that use the new capability of the ESMF_VMGet() call to query the number of accelerator devices, and to allocate (i.e., access) the device from a single PET.

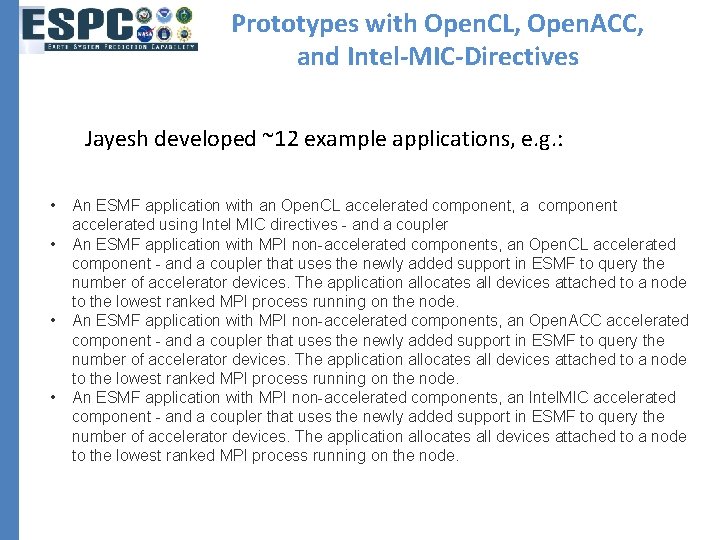

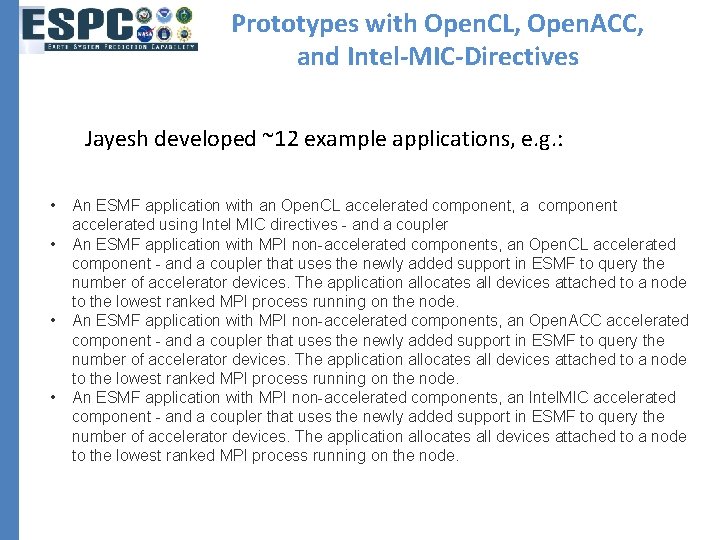

Prototypes with OpenCL, OpenACC, and Intel-MIC-Directives

Jayesh developed ~12 example applications, e.g.:
- An ESMF application with an OpenCL-accelerated component, a component accelerated using Intel MIC directives, and a coupler.
- An ESMF application with MPI non-accelerated components, an OpenCL-accelerated component, and a coupler.
- An ESMF application with MPI non-accelerated components, an OpenACC-accelerated component, and a coupler.
- An ESMF application with MPI non-accelerated components, an Intel MIC-accelerated component, and a coupler.

In the last three, the coupler uses the newly added support in ESMF to query the number of accelerator devices, and the application allocates all devices attached to a node to the lowest-ranked MPI process running on that node.
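The allocation policy used by these prototypes, all devices on a node going to the lowest-ranked MPI process on that node, is easy to state as a function. The Python sketch below models only the policy: it assumes the rank-to-host mapping and per-host device counts are already known (in the ESMF prototypes the count comes from the extended ESMF_VMGet(); in plain MPI the per-node grouping could come from `MPI_Comm_split_type` with `MPI_COMM_TYPE_SHARED`). `assign_devices` is a hypothetical helper.

```python
from collections import defaultdict


def assign_devices(rank_hosts, devices_per_host):
    """rank_hosts: {mpi_rank: hostname}; devices_per_host: {hostname: count}.
    Give every device on a host to the lowest-ranked process on that host;
    all other processes on the host get no devices."""
    by_host = defaultdict(list)
    for rank, host in rank_hosts.items():
        by_host[host].append(rank)
    assignment = {rank: [] for rank in rank_hosts}
    for host, ranks in by_host.items():
        owner = min(ranks)
        assignment[owner] = list(range(devices_per_host.get(host, 0)))
    return assignment


# Two nodes: n0 has 2 GPUs, n1 has 1 MIC card.
print(assign_devices({0: "n0", 1: "n0", 2: "n1", 3: "n1"}, {"n0": 2, "n1": 1}))
```

The policy is simple and avoids device contention, at the cost of leaving the other processes on each node CPU-only.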

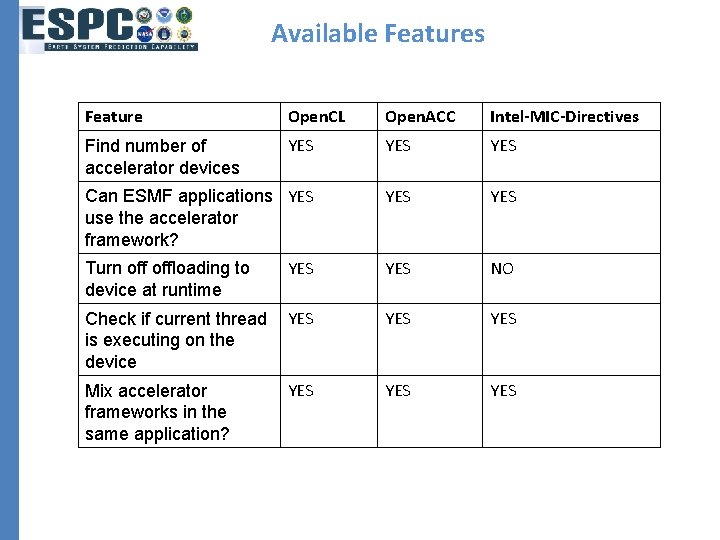

Available Features Feature OpenCL OpenACC Intel-MIC-Directives Find number of accelerator devices YES YES Can ESMF applications YES use the accelerator framework? YES YES NO Check if current thread YES is executing on the device YES YES YES Turn offloading to device at runtime Mix accelerator frameworks in the same application?

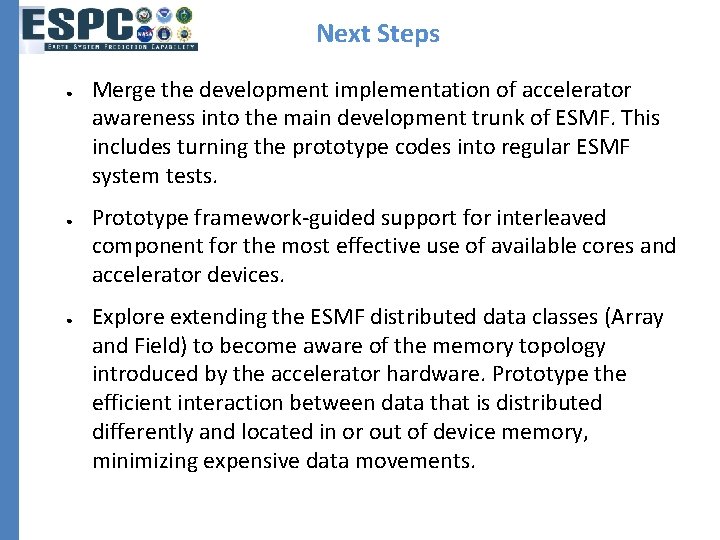

Next Steps
- Merge the development implementation of accelerator awareness into the main development trunk of ESMF. This includes turning the prototype codes into regular ESMF system tests.
- Prototype framework-guided support for interleaved components for the most effective use of available cores and accelerator devices.
- Explore extending the ESMF distributed data classes (Array and Field) to become aware of the memory topology introduced by the accelerator hardware. Prototype the efficient interaction between data that is distributed differently and located in or out of device memory, minimizing expensive data movements.
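A common way to minimize host-device data movement is to keep a host copy and a device copy of an array, track which copies are current, and transfer only when a stale copy is requested. The Python sketch below shows that lazy-mirroring idea with device memory simulated by a plain list; it is an illustration of the general technique, not a design for ESMF Array/Field, and `MirroredArray` is hypothetical.

```python
class MirroredArray:
    """Toy host/device mirror: tracks which copies hold current data and
    copies only when the requested side is stale. Device memory is simulated."""

    def __init__(self, values):
        self.host = list(values)
        self.device = None
        self.valid = {"host"}   # sides holding current data
        self.transfers = 0      # number of simulated host<->device copies

    def _sync_to(self, side):
        if side in self.valid:
            return              # already current: no transfer needed
        if side == "device":
            self.device = list(self.host)
        else:
            self.host = list(self.device)
        self.transfers += 1
        self.valid.add(side)

    def read(self, side):
        self._sync_to(side)
        return self.host if side == "host" else self.device

    def write(self, side, fn):
        """Apply fn elementwise on `side`; the other copy becomes stale."""
        data = self.read(side)
        data[:] = [fn(x) for x in data]
        self.valid = {side}


a = MirroredArray([1, 2, 3])
a.write("device", lambda x: x * 2)   # one host->device copy
a.write("device", lambda x: x + 1)   # stays on device, no copy
print(a.read("host"), a.transfers)   # one device->host copy
```

Two device-side updates followed by a host read cost two transfers in total instead of four, which is the saving a topology-aware Array or Field would aim for.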

ESPC Infrastructure: Update on the Earth System Prediction Suite (Cecelia DeLuca)

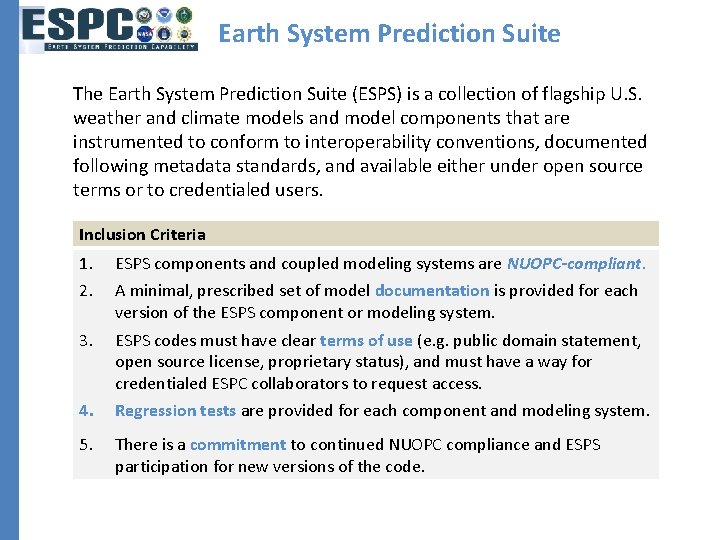

Earth System Prediction Suite

The Earth System Prediction Suite (ESPS) is a collection of flagship U.S. weather and climate models and model components that are instrumented to conform to interoperability conventions, documented following metadata standards, and available either under open source terms or to credentialed users.

Inclusion Criteria
1. ESPS components and coupled modeling systems are NUOPC-compliant.
2. A minimal, prescribed set of model documentation is provided for each version of the ESPS component or modeling system.
3. ESPS codes must have clear terms of use (e.g., public domain statement, open source license, proprietary status), and must have a way for credentialed ESPC collaborators to request access.
4. Regression tests are provided for each component and modeling system.
5. There is a commitment to continued NUOPC compliance and ESPS participation for new versions of the code.

Status of Modeling Systems using NUOPC Conventions

1. ESPS components and coupled modeling systems are NUOPC-compliant.
   - NOAA Environmental Modeling System (NEMS): now producing early results for the Global Spectral Model atmosphere coupled to HYCOM and MOM5 oceans; ice coupling results anticipated soon
   - CESM: now producing early results for HYCOM-CICE
   - COAMPS: system in use includes coupled ocean, atmosphere, and wave components
   - NavGEM-HYCOM: system in use; served as a model for other efforts
   - Model E: atmosphere and ocean components can be driven, but not yet producing results

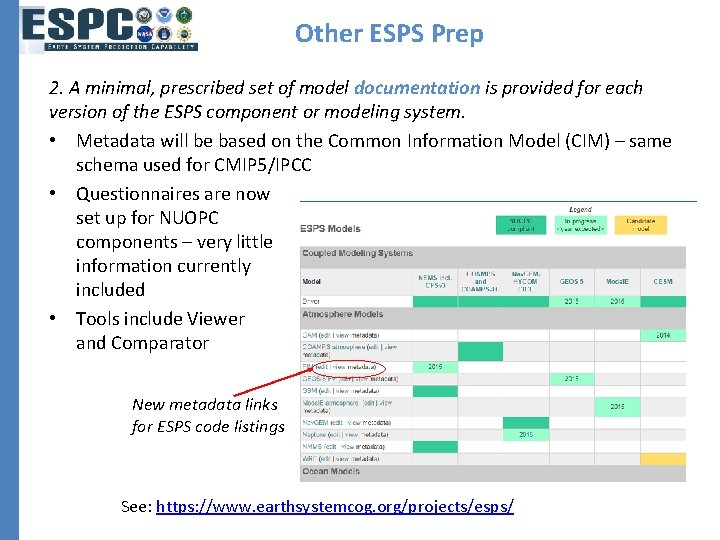

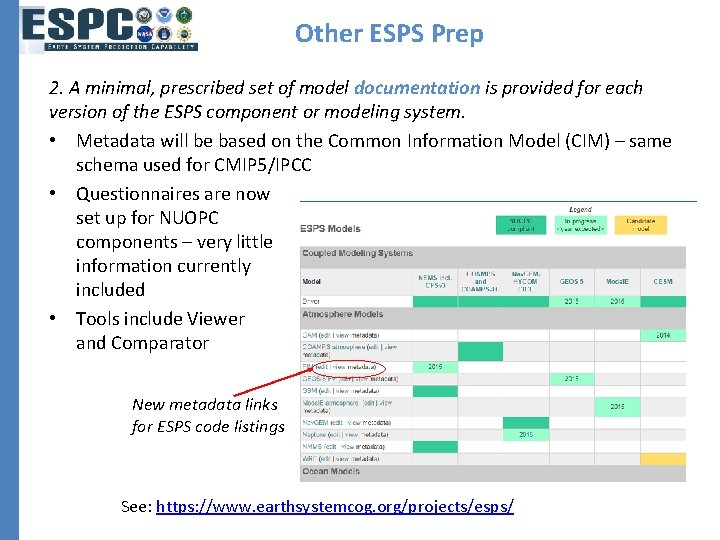

Other ESPS Prep

2. A minimal, prescribed set of model documentation is provided for each version of the ESPS component or modeling system.
   - Metadata will be based on the Common Information Model (CIM), the same schema used for CMIP5/IPCC
   - Questionnaires are now set up for NUOPC components; very little information is currently included
   - Tools include a Viewer and a Comparator
   - New metadata links for ESPS code listings; see: https://www.earthsystemcog.org/projects/esps/

Other ESPS Prep

3. ESPS codes must have clear terms of use (e.g., public domain statement, open source license, proprietary status), and must have a way for credentialed ESPC collaborators to request access.
   - Now reviewing and documenting code access paths

Have not yet focused on these inclusion criteria …
4. Regression tests are provided for each component and modeling system.
5. There is a commitment to continued NUOPC compliance and ESPS participation for new versions of the code.

Next Steps
- ESPS BAMS paper proposal accepted in July 2014; have 6 months to submit
- Finalizing paper for submission; had waited on:
  - More coupled codes producing results
  - At least one open source coupled code available for examination
  - Initial metadata setup
  - Greater precision in the discussion, especially around behavioral guarantees
  - Reformatting and images suitable for BAMS
- Last version here: https://www.earthsystemcog.org/projects/esps/resources/
- Keep working toward fulfilling the ESPS criteria