EECS 583 Class 20 Research Topic 2 Stream

- Slides: 30

EECS 583 – Class 20. Research Topic 2: Stream Compilation, Stream Graph Modulo Scheduling. University of Michigan, November 30, 2011. Guest speaker today: Daya Khudia.

Announcements & Reading Material

- This class
  - “Orchestrating the Execution of Stream Programs on Multicore Platforms,” M. Kudlur and S. Mahlke, Proc. ACM SIGPLAN 2008 Conference on Programming Language Design and Implementation, Jun. 2008.
- Next class: GPU compilation
  - “Program optimization space pruning for a multithreaded GPU,” S. Ryoo, C. Rodrigues, S. Stone, S. Baghsorkhi, S. Ueng, J. Straton, and W. Hwu, Proc. Intl. Sym. on Code Generation and Optimization, Mar. 2008.

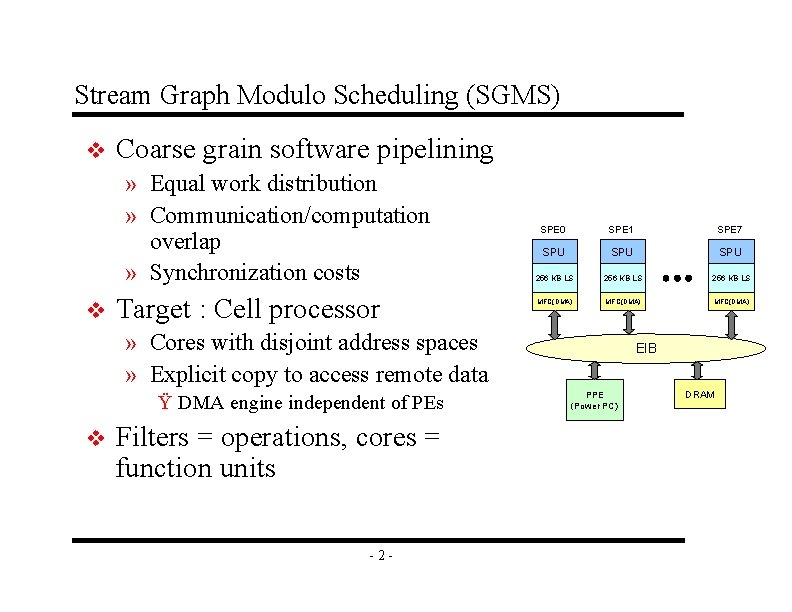

Stream Graph Modulo Scheduling (SGMS)

- Coarse-grain software pipelining
  - Equal work distribution
  - Communication/computation overlap
  - Synchronization costs
- Target: Cell processor
  - Cores with disjoint address spaces
  - Explicit copy to access remote data
    - DMA engine independent of PEs
- Filters = operations, cores = function units

[Figure: Cell block diagram. SPE 0 through SPE 7, each with an SPU, a 256 KB local store (LS), and an MFC (DMA), connected through the EIB to the PPE (PowerPC) and DRAM.]

![Preliminaries v v Synchronous Data Flow SDF Lee 87 Stream It Thies Preliminaries v v Synchronous Data Flow (SDF) [Lee ’ 87] Stream. It [Thies ’](https://slidetodoc.com/presentation_image/51a8882532378e5daf654bbfdec35e91/image-4.jpg)

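Preliminaries

- Synchronous Data Flow (SDF) [Lee ’87]
- StreamIt [Thies ’02]
- Push and pop items from input/output FIFOs

The slide labels two variants of the filter below, stateless and stateful. The `wgts` field and the `wgts = adapt(wgts)` update carry state from one firing to the next; without those two lines the filter is stateless.

    int->int filter FIR(int N, int wgts[N]) {
      int wgts[N];
      work pop 1 push 1 {
        int i, sum = 0;
        wgts = adapt(wgts);
        for (i = 0; i < N; i++)
          sum += peek(i) * wgts[i];
        push(sum);
        pop();
      }
    }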
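To make the peek/pop/push semantics concrete, here is a minimal C sketch of the stateless FIR filter firing repeatedly over an input tape. The `InFifo` type and the `fifo_peek`, `fifo_pop`, `fir_work`, and `fir_run` names are hypothetical helpers invented for this illustration; they are not part of StreamIt or of the lecture.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical input FIFO: a read cursor over an array of items. */
typedef struct {
    const int *items;  /* the input tape */
    size_t head;       /* index of the next item to pop */
    size_t len;        /* total number of items on the tape */
} InFifo;

/* peek(i): read the i-th unread item without consuming it. */
static int fifo_peek(const InFifo *f, size_t i) { return f->items[f->head + i]; }

/* pop(): consume one item. */
static void fifo_pop(InFifo *f) { f->head++; }

/* One firing of the stateless FIR work function: peek n items, pop 1. */
static int fir_work(InFifo *in, const int *wgts, size_t n) {
    int sum = 0;
    for (size_t i = 0; i < n; i++)
        sum += fifo_peek(in, i) * wgts[i];
    fifo_pop(in);
    return sum;  /* the value the StreamIt filter would push() */
}

/* Fire the filter while n items are available; returns the number of outputs. */
size_t fir_run(const int *input, size_t len, const int *wgts, size_t n, int *out) {
    assert(input != NULL && wgts != NULL && out != NULL);
    if (n == 0) return 0;
    InFifo in = { input, 0, len };
    size_t produced = 0;
    while (in.head + n <= in.len)
        out[produced++] = fir_work(&in, wgts, n);
    return produced;
}
```

Because each firing consumes one item but inspects `n`, a tape of `len` items yields `len - n + 1` outputs. The filter keeps nothing between firings, which is what allows a scheduler to replicate it freely.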

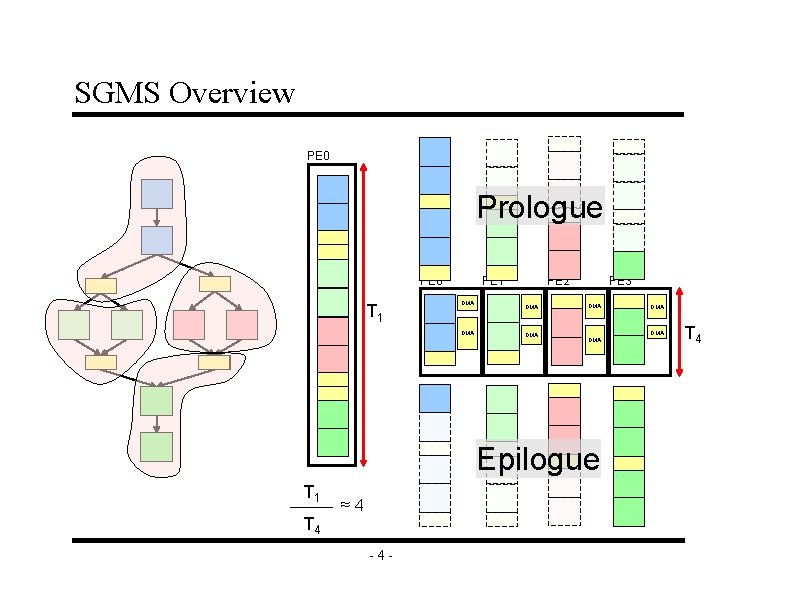

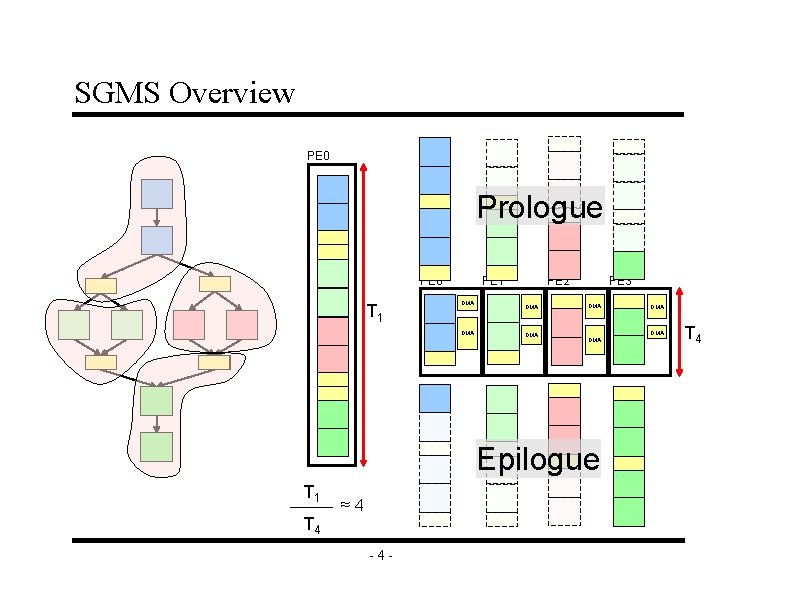

SGMS Overview

[Figure: execution timeline on PE 0 through PE 3 with DMA transfers between them, showing the prologue, the steady state, and the epilogue; T1/T4 ≈ 4.]

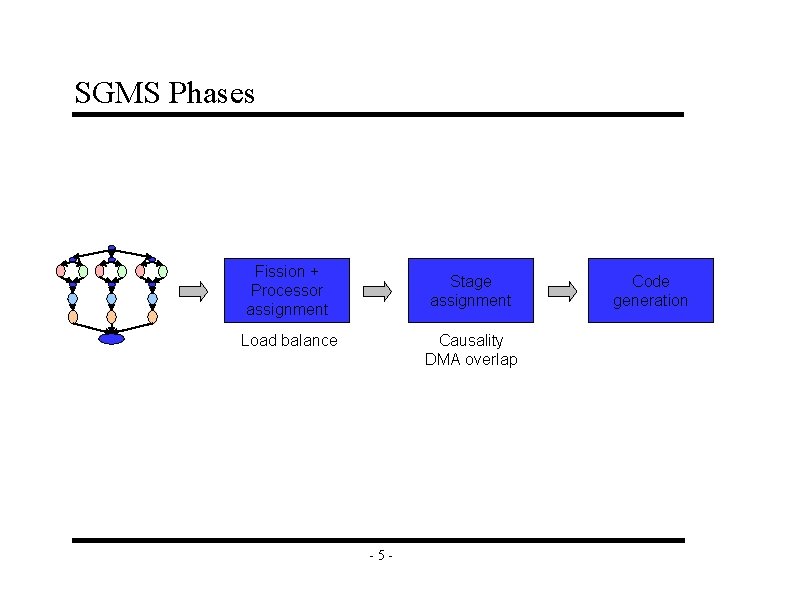

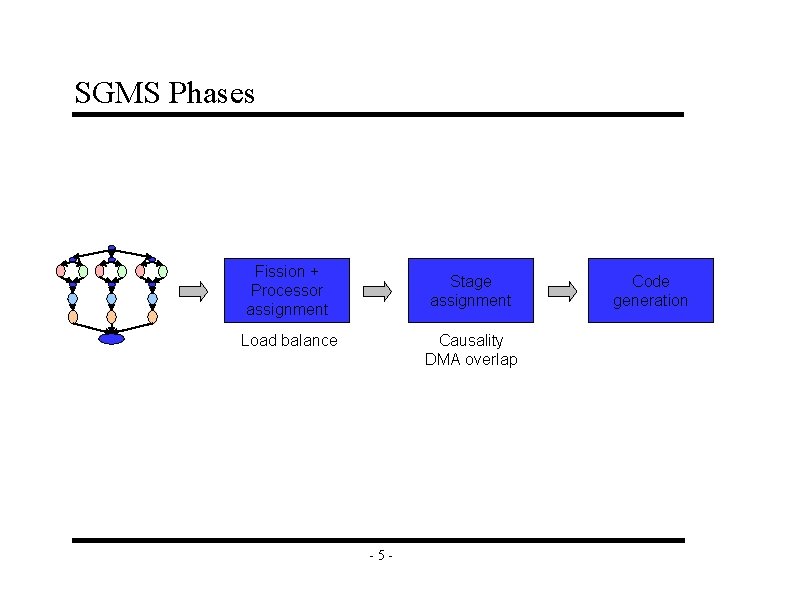

SGMS Phases

- Fission + processor assignment (load balance)
- Stage assignment (causality, DMA overlap)
- Code generation

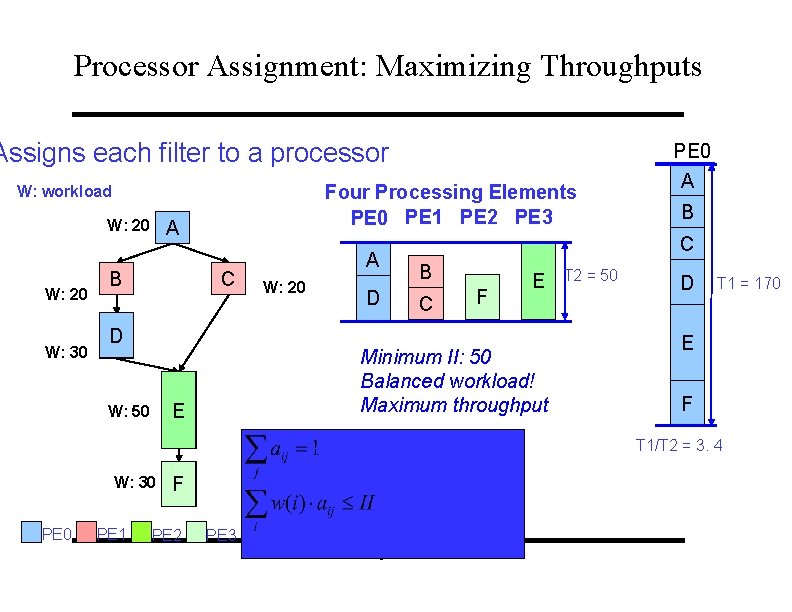

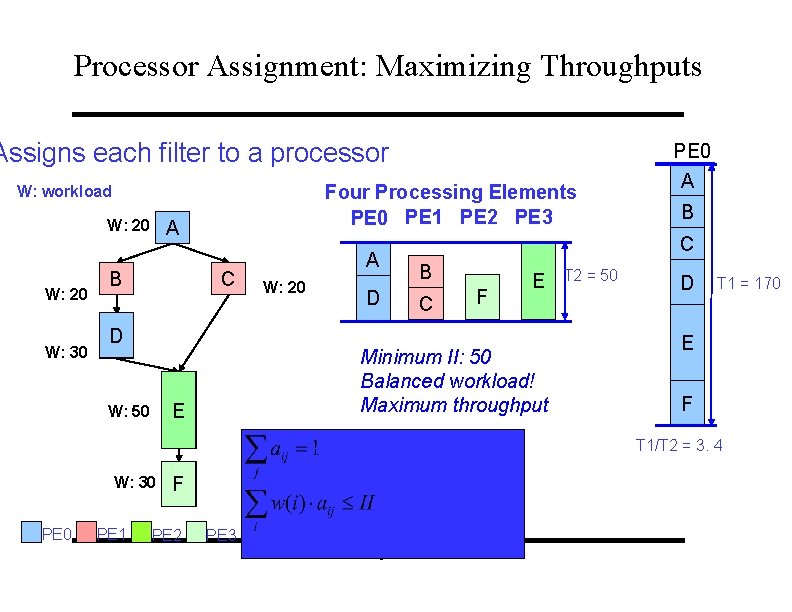

Processor Assignment: Maximizing Throughput

- Assigns each filter to a processor.
- Constraints are stated for all filters i = 1, …, N and for all PEs j = 1, …, P; the objective is to minimize II.
- Example: six filters A through F, with workloads W between 20 and 50, on four processing elements PE 0 through PE 3.
  - Single-processor time: T1 = 170
  - Minimum II: 50 (balanced workload, maximum throughput), so T2 = 50
  - T1/T2 = 3.4
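The quantifiers on the slide match the usual assignment formulation, sketched below. The binary variable $a_{ij}$ (filter $i$ is placed on PE $j$) and the workload symbol $w_i$ are notation assumed here for illustration; the slide's own equations did not survive extraction.

```latex
\begin{aligned}
\text{minimize}\quad   & II \\
\text{subject to}\quad & \sum_{j=1}^{P} a_{ij} = 1          && \text{for all filters } i = 1,\dots,N \\
                       & \sum_{i=1}^{N} w_i\, a_{ij} \le II && \text{for all PEs } j = 1,\dots,P \\
                       & a_{ij} \in \{0, 1\}
\end{aligned}
```

For the example, two lower bounds apply: $II \ge T_1 / P = 170 / 4 = 42.5$, and $II \ge \max_i w_i = 50$ because no filter is split at this point. An II of 50 meets the larger bound, which gives the speedup $T_1 / T_2 = 170 / 50 = 3.4$.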

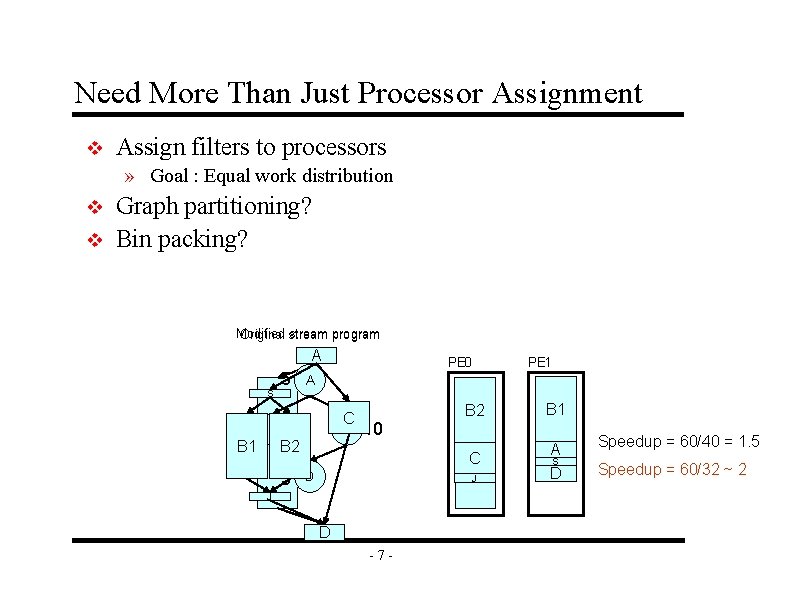

Need More Than Just Processor Assignment

- Assign filters to processors
  - Goal: equal work distribution
- Graph partitioning?
- Bin packing?

[Figure: the original stream program (filters A, B, C, D with workloads 5, 40, 10, 5, total 60) mapped onto PE 0 and PE 1, speedup = 60/40 = 1.5; the modified stream program with B split into B1 and B2 between a splitter S and a joiner J, speedup = 60/32 ~ 2.]
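The two speedup figures follow from dividing the total work by the load of the busiest PE. A small C sketch of that arithmetic is shown here; `partition_speedup` is a name made up for this illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Speedup of a partition = total sequential work / load of the busiest PE. */
double partition_speedup(const double *pe_load, size_t num_pes, double total_work) {
    assert(pe_load != NULL);
    double max_load = 0.0;
    for (size_t j = 0; j < num_pes; j++)
        if (pe_load[j] > max_load)
            max_load = pe_load[j];
    /* Guard against an empty or all-zero partition. */
    return max_load > 0.0 ? total_work / max_load : 0.0;
}
```

With the 40-unit filter alone on one PE and the remaining 20 units on the other (an assumed placement consistent with the slide's 60/40), the result is 1.5. After fission the busiest PE carries 32 units, so the result is 60/32 = 1.875, which the slide rounds to about 2. The heavy filter bounds the speedup until it is split.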

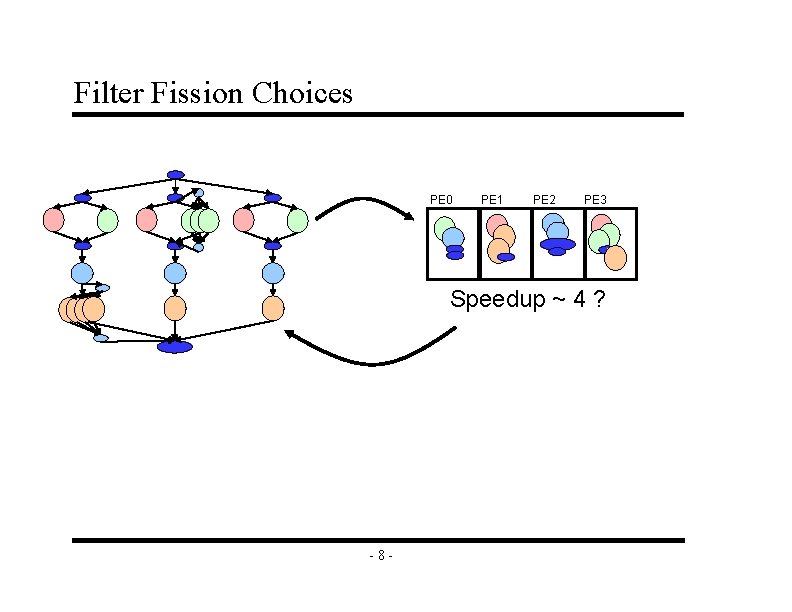

Filter Fission Choices

[Figure: candidate fissions of the stream graph mapped onto PE 0 through PE 3; speedup ~ 4?]

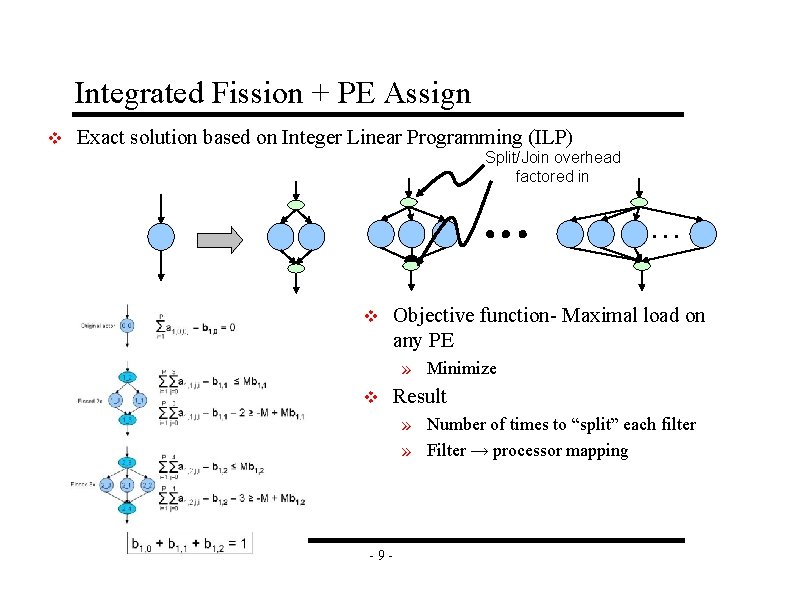

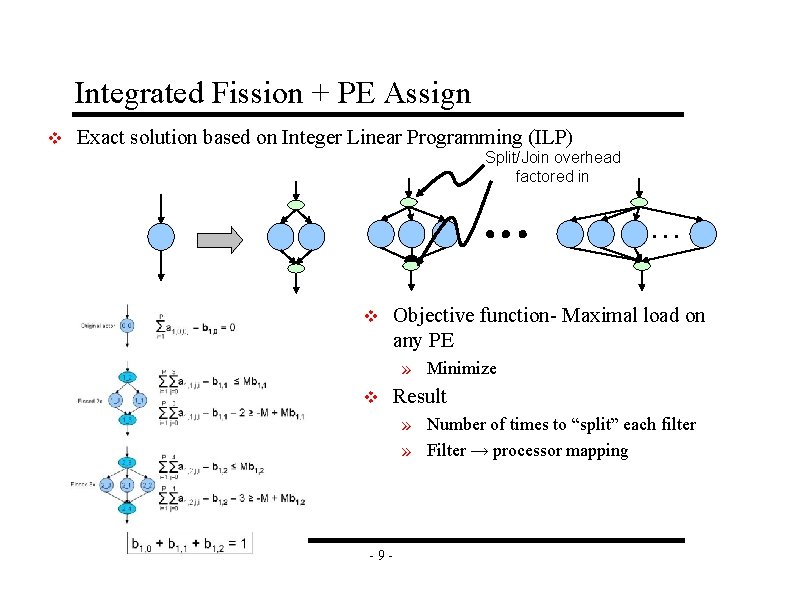

Integrated Fission + PE Assign

- Exact solution based on Integer Linear Programming (ILP)
  - Split/join overhead factored in
- Objective function: minimize the maximal load on any PE
- Result
  - Number of times to “split” each filter
  - Filter → processor mapping

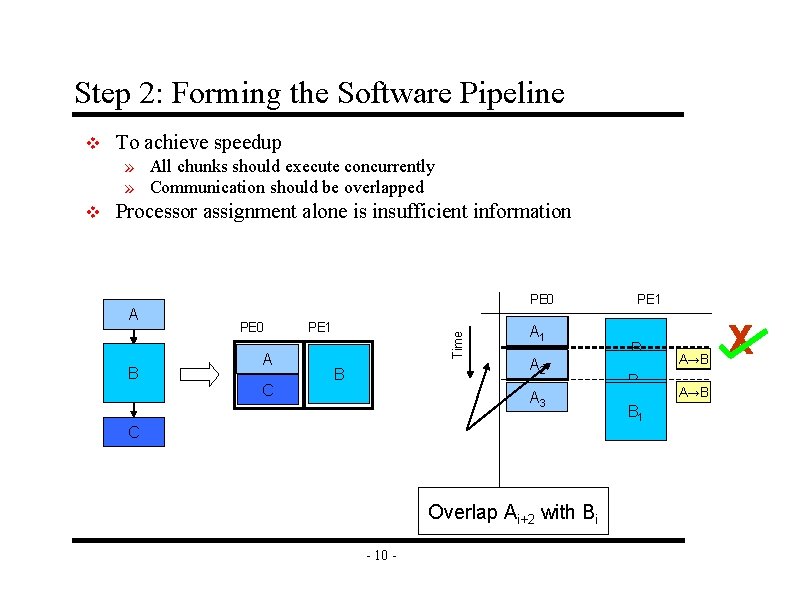

Step 2: Forming the Software Pipeline

- To achieve speedup
  - All chunks should execute concurrently
  - Communication should be overlapped
- Processor assignment alone is insufficient information

[Figure: timeline with A on PE 0 and B on PE 1 and the A→B transfer between them; the pipelined schedule overlaps A(i+2) with B(i).]

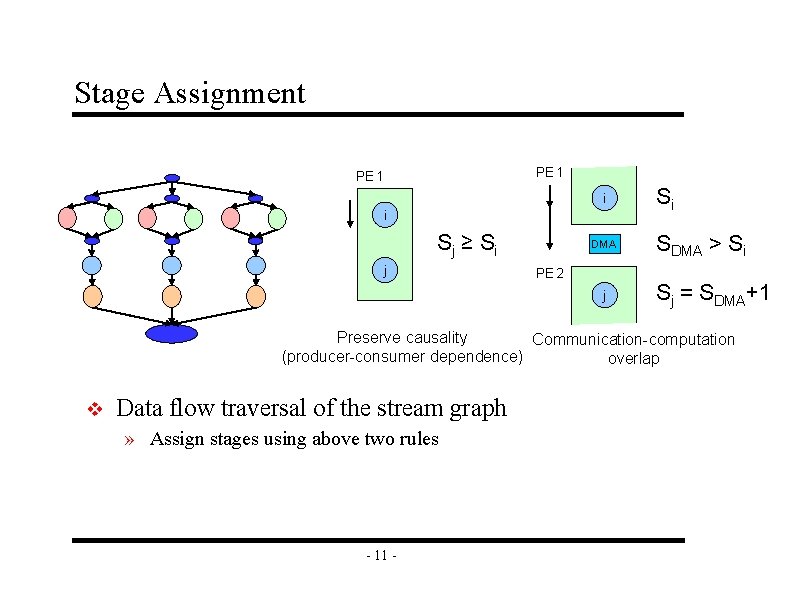

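Stage Assignment

- Preserve causality (producer-consumer dependence): for a producer i and a consumer j on the same PE, $S_j \ge S_i$.
- Communication-computation overlap: for a producer i on one PE and a consumer j on another PE, with a DMA transfer between them, $S_{DMA} > S_i$ and $S_j = S_{DMA} + 1$.
- Data flow traversal of the stream graph
  - Assign stages using the above two rules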

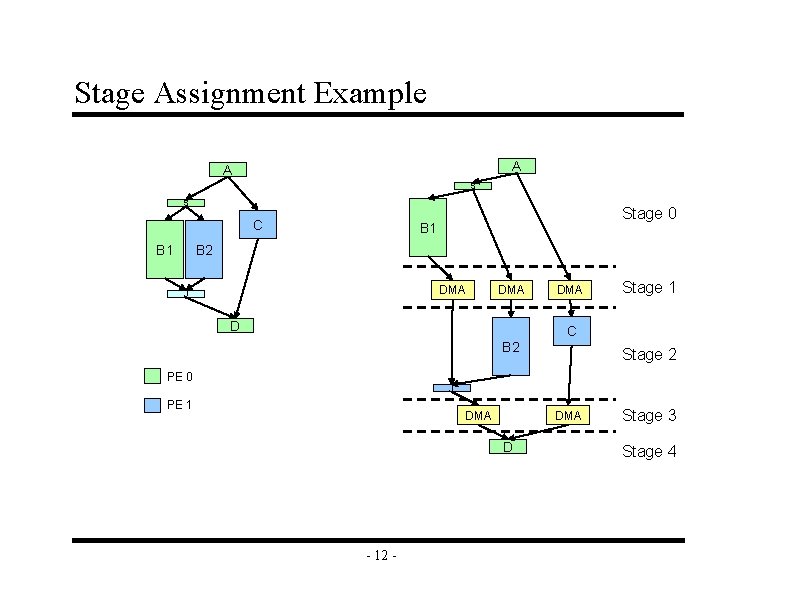

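Stage Assignment Example

[Figure: the fissed stream graph (A, S, B1, B2, J, C, D) partitioned across PE 0 and PE 1, with DMA transfers on the edges that cross processors.]

- Stage 0: A, S, B1 (PE 0)
- Stage 1: DMA
- Stage 2: B2, J, C (PE 1)
- Stage 3: DMA
- Stage 4: D (PE 0)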
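The two stage-assignment rules (a consumer on the same PE gets a stage no earlier than its producer; across PEs the DMA gets a stage strictly after the producer and the consumer runs one stage after the DMA) can be applied mechanically in data-flow order. The C sketch below is an illustration under assumptions: `Edge` and `assign_stages` are invented names, the DMA is given the earliest legal stage (producer stage + 1), and the caller must list edges so that every edge into an actor precedes the edges leaving it.

```c
#include <assert.h>
#include <stddef.h>

typedef struct { int src, dst; } Edge;  /* producer -> consumer */

/*
 * Assign pipeline stages by visiting edges in data-flow (topological) order.
 * pe[v] is the processor of actor v; stage[v] receives its stage number.
 *   Same PE:      stage[dst] >= stage[src]
 *   Different PE: DMA stage = stage[src] + 1, stage[dst] >= DMA stage + 1
 * Returns the largest actor stage number used.
 */
int assign_stages(const Edge *edges, size_t num_edges,
                  const int *pe, int *stage, size_t num_actors) {
    assert(edges != NULL && pe != NULL && stage != NULL);
    int max_stage = 0;
    for (size_t v = 0; v < num_actors; v++)
        stage[v] = 0;
    for (size_t e = 0; e < num_edges; e++) {
        int s = edges[e].src, d = edges[e].dst;
        assert(s >= 0 && (size_t)s < num_actors);
        assert(d >= 0 && (size_t)d < num_actors);
        /* A cross-PE edge costs two stages: one for the DMA, one to start after it. */
        int needed = (pe[s] == pe[d]) ? stage[s] : stage[s] + 2;
        if (needed > stage[d])
            stage[d] = needed;
        if (stage[d] > max_stage)
            max_stage = stage[d];
    }
    return max_stage;
}
```

Numbering the actors A=0, S=1, B1=2, B2=3, J=4, C=5, D=6, placing A, S, B1, D on PE 0 and B2, J, C on PE 1, and using the edges A→S, A→C, S→B1, S→B2, B1→J, B2→J, J→D, C→D (a graph shape inferred from the slide's DMA names), the function returns 4 with B2, J, C in stage 2 and D in stage 4. The DMA transfers then occupy stages 1 and 3.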

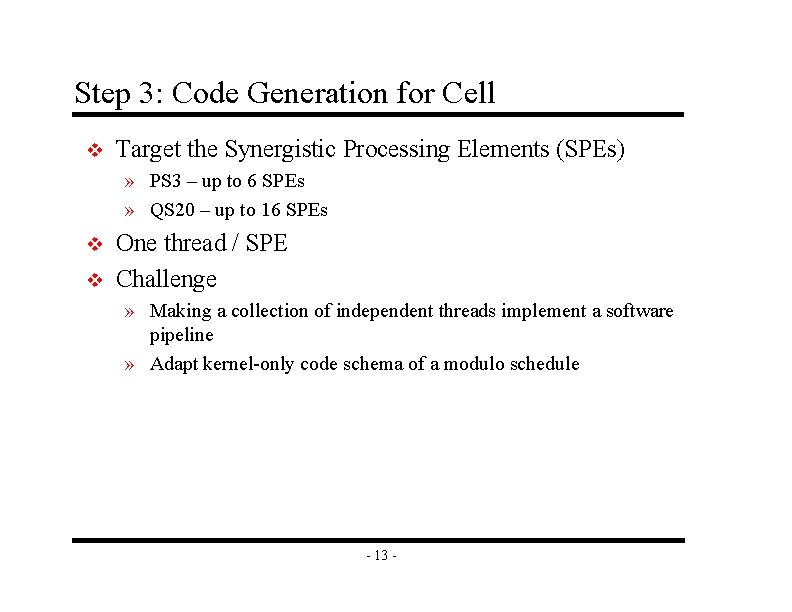

Step 3: Code Generation for Cell

- Target the Synergistic Processing Elements (SPEs)
  - PS3: up to 6 SPEs
  - QS20: up to 16 SPEs
- One thread per SPE
- Challenge
  - Making a collection of independent threads implement a software pipeline
  - Adapt the kernel-only code schema of a modulo schedule

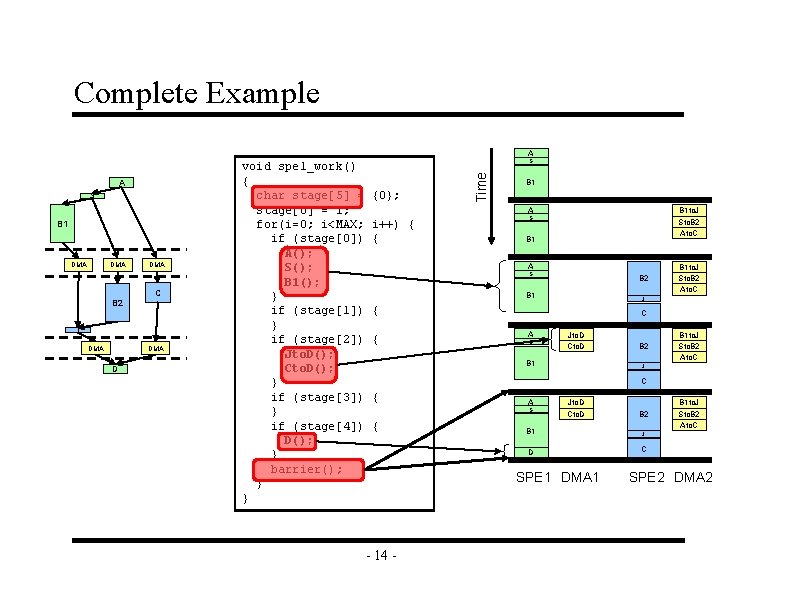

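Complete Example

[Figure: the stream graph (A, S, B1, B2, J, C, D with DMA transfers) next to a timeline with columns SPE1, DMA1, SPE2, DMA2. SPE1 runs A, S, B1 and later D; SPE2 runs B2, J, C; the DMA transfers are B1toJ, StoB2, AtoC and then JtoD, CtoD.]

Code for SPE1 as it appears on the slide; the slide text does not show how the `stage` flags are updated between iterations:

    void spe1_work() {
      char stage[5] = {0};
      stage[0] = 1;
      for (i = 0; i < MAX; i++) {
        if (stage[0]) { A(); S(); B1(); }    /* stage 0 filters */
        if (stage[1]) { }                    /* nothing for this SPE */
        if (stage[2]) { JtoD(); CtoD(); }    /* DMA transfers feeding D */
        if (stage[3]) { }                    /* nothing for this SPE */
        if (stage[4]) { D(); }               /* stage 4 filter */
        barrier();                           /* synchronize all SPEs */
      }
    }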
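The kernel-only schema relies on the stage flags switching on one by one during the prologue and off one by one during the epilogue. The C sketch below simulates one way to advance the flags; it is an assumption made for illustration, and `run_pipeline` and `NUM_STAGES` are invented names rather than code from the lecture or the paper.

```c
#include <assert.h>
#include <stddef.h>

#define NUM_STAGES 5

/*
 * Simulate the stage predicates of a kernel-only software pipeline.
 * On loop trip t, stage s is active exactly when iteration (t - s) exists,
 * i.e. 0 <= t - s < iterations. fired[s] counts the activations of stage s.
 * Returns the number of loop trips executed.
 */
size_t run_pipeline(size_t iterations, size_t fired[NUM_STAGES]) {
    assert(fired != NULL);
    char stage[NUM_STAGES] = {0};
    for (size_t s = 0; s < NUM_STAGES; s++)
        fired[s] = 0;
    if (iterations == 0)
        return 0;

    /* The prologue and epilogue together add NUM_STAGES - 1 extra trips. */
    size_t total = iterations + NUM_STAGES - 1;
    for (size_t trip = 0; trip < total; trip++) {
        /* Shift: whatever ran in stage s-1 on the last trip runs in stage s now. */
        for (size_t s = NUM_STAGES - 1; s > 0; s--)
            stage[s] = stage[s - 1];
        /* Start a new iteration only while iterations remain. */
        stage[0] = (char)(trip < iterations);

        for (size_t s = 0; s < NUM_STAGES; s++)
            if (stage[s])
                fired[s]++;  /* the filters of stage s would execute here */
        /* On real hardware a barrier would separate consecutive trips. */
    }
    return total;
}
```

For three iterations the loop runs seven trips and every stage fires three times: only stage 0 is active on the first trip, and only stage 4 on the last. One loop body therefore serves as prologue, steady state, and epilogue.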

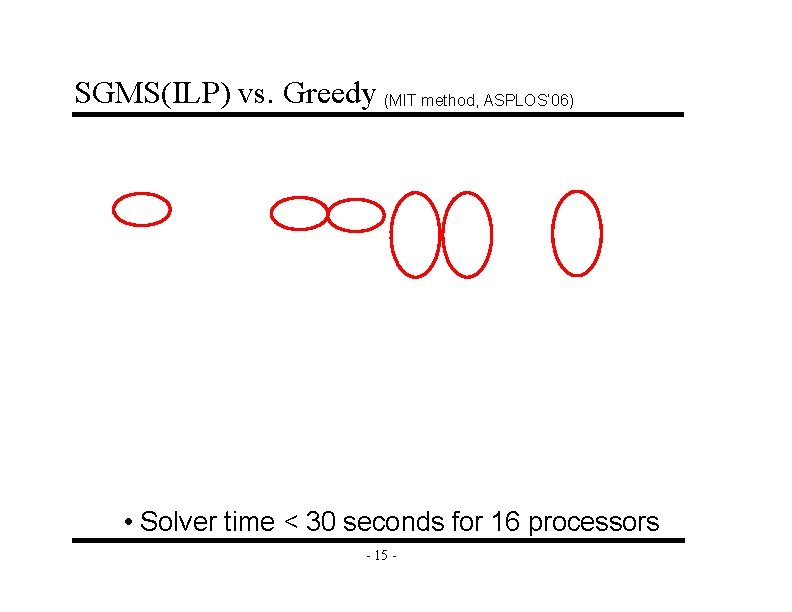

SGMS (ILP) vs. Greedy (MIT method, ASPLOS ’06)

- Solver time < 30 seconds for 16 processors

SGMS Conclusions

- Streamroller
  - Efficient mapping of stream programs to multicore
  - Coarse-grain software pipelining
- Performance summary
  - 14.7x speedup on 16 cores
  - Up to 35% better than greedy solution (11% on average)
- Scheduling framework
  - Tradeoff memory space vs. load balance
    - Memory constrained (embedded) systems
    - Cache based system

Discussion Points

- Is it possible to convert stateful filters into stateless?
- What if the application does not behave as you expect?
  - Filters change execution time?
  - Memory faster/slower than expected?
- Could this be adapted for a more conventional multiprocessor with caches?
- Can C code be automatically streamized?
- Now you have seen 3 forms of software pipelining:
  - 1) Instruction level modulo scheduling, 2) Decoupled software pipelining, 3) Stream graph modulo scheduling
  - Where else can it be used?

“Flextream: Adaptive Compilation of Streaming Applications for Heterogeneous Architectures,”

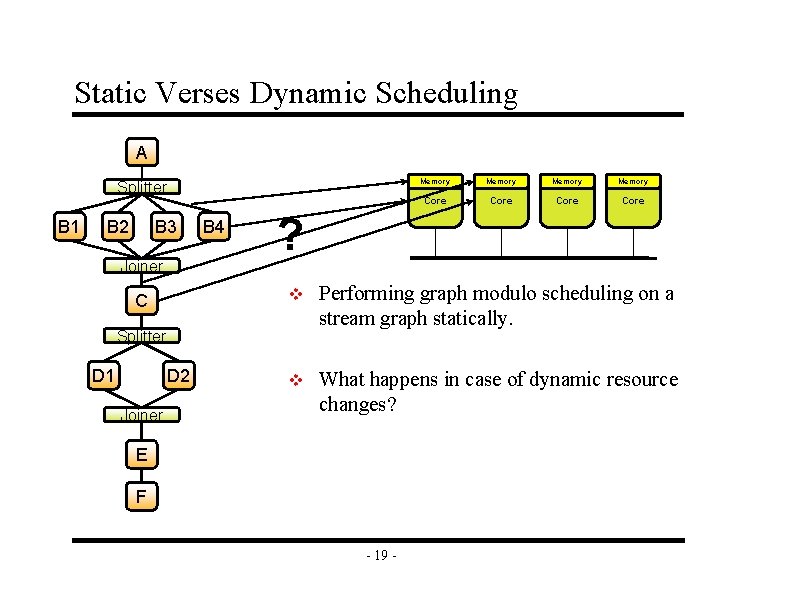

Static Versus Dynamic Scheduling

- Performing graph modulo scheduling on a stream graph statically.
- What happens in case of dynamic resource changes?

[Figure: stream graph with actors A, B1 to B4, C, D1, D2, E, F and splitter/joiner nodes, mapped onto cores and memories; one core is marked “?”.]

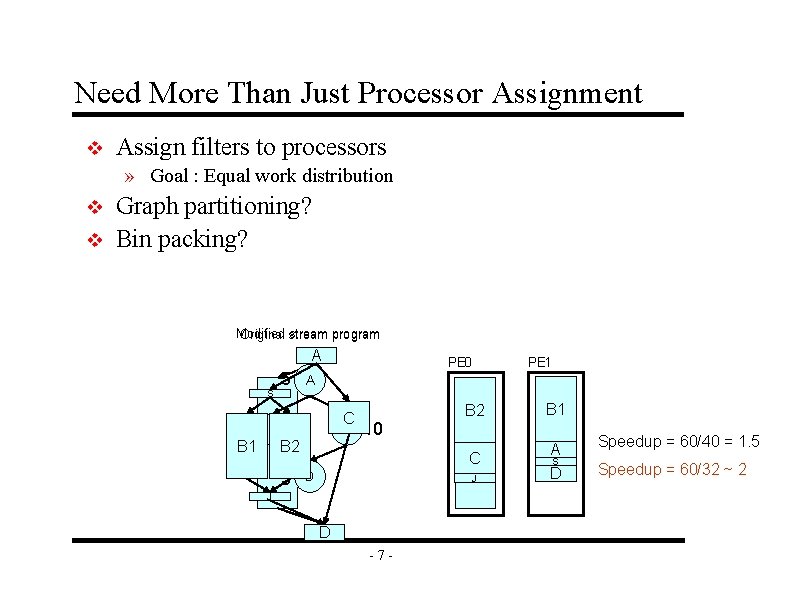

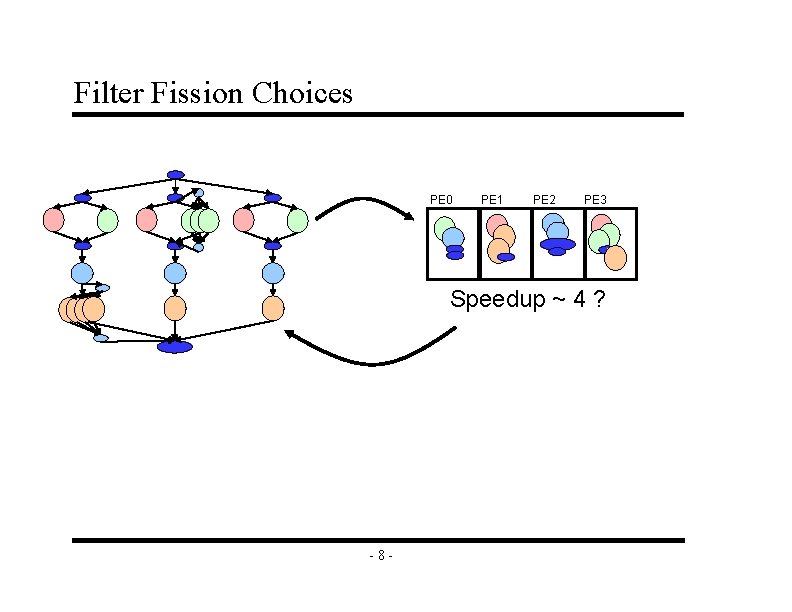

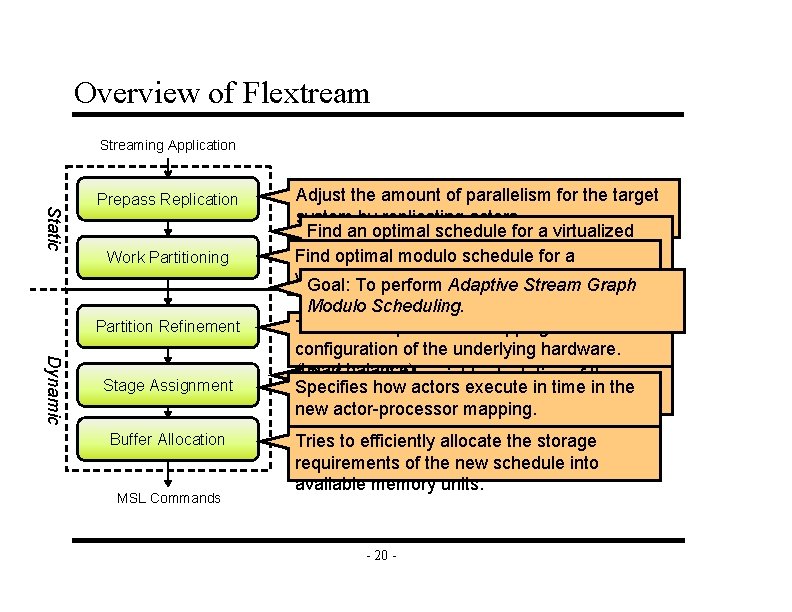

Overview of Flextream

Goal: to perform adaptive Stream Graph Modulo Scheduling.

Flow: streaming application → static phase → dynamic phase → MSL commands.

- Static: find an optimal modulo schedule for a virtualized member of a family of processors.
  - Prepass Replication: adjust the amount of parallelism for the target system by replicating actors.
  - Work Partitioning: find an optimal schedule for a virtualized member of a family of processors.
- Dynamic: perform light-weight adaptation of the schedule for the current configuration of the target hardware.
  - Partition Refinement: tune the actor-processor mapping to the real configuration of the underlying hardware (load balance).
  - Stage Assignment: specify how actors execute in time in the new actor-processor mapping.
  - Buffer Allocation: try to efficiently allocate the storage requirements of the new schedule into available memory units.

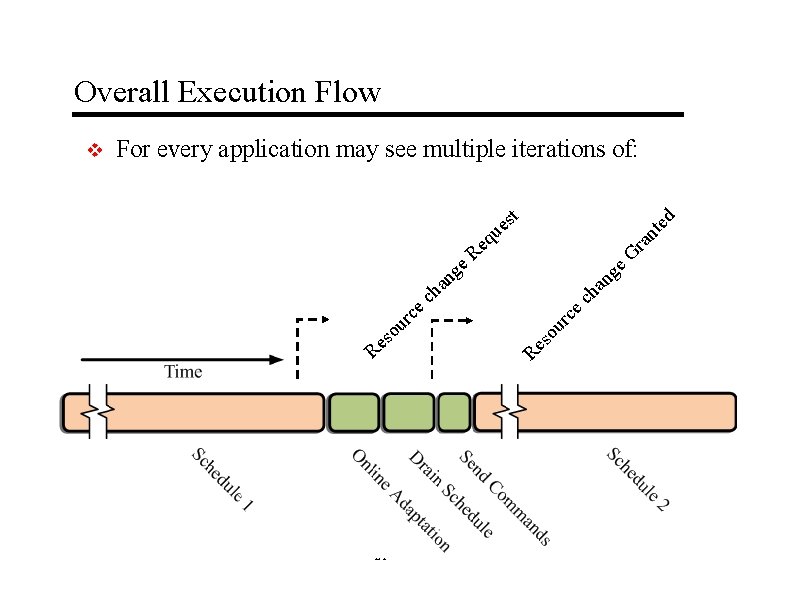

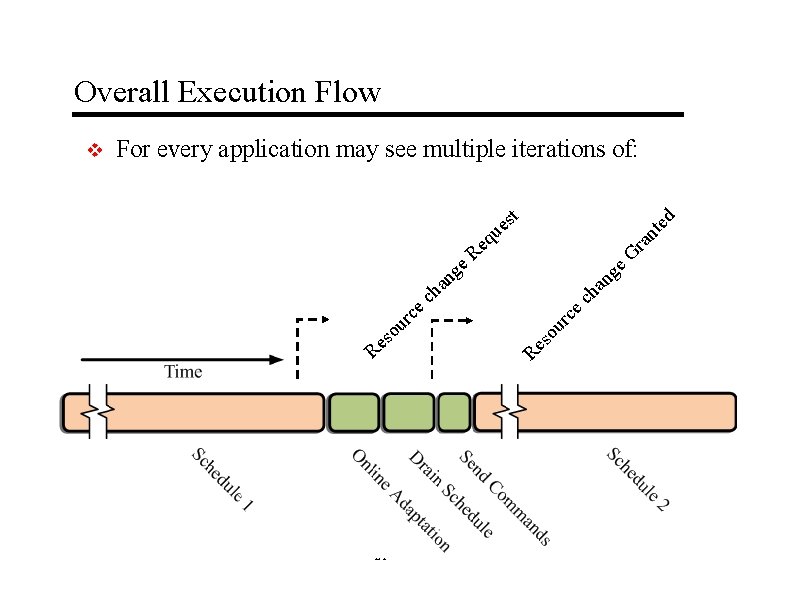

Overall Execution Flow

Every application may see multiple iterations of:

- Resource change request
- Resource change granted

![Prepass Replication static S 0 6 22 10 A C 0 C 1 A Prepass Replication [static] S 0 6 22 10 A C 0 C 1 A](https://slidetodoc.com/presentation_image/51a8882532378e5daf654bbfdec35e91/image-23.jpg)

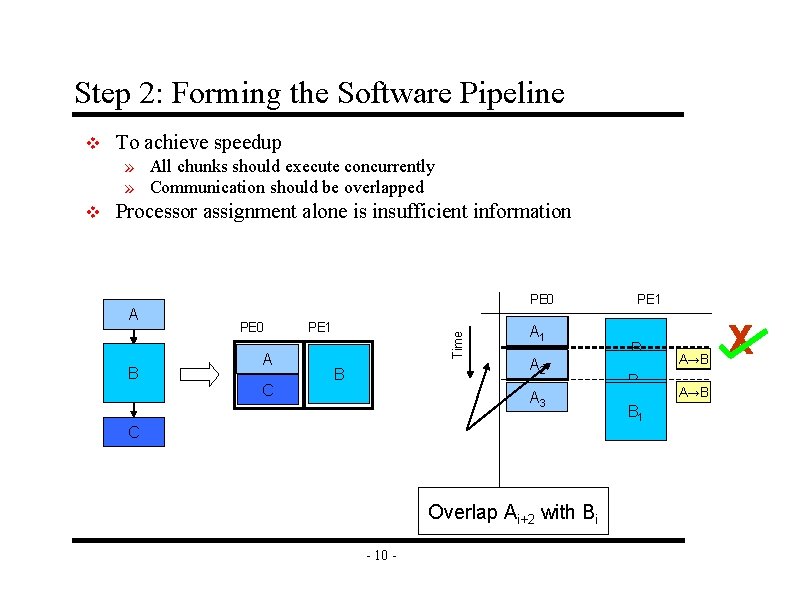

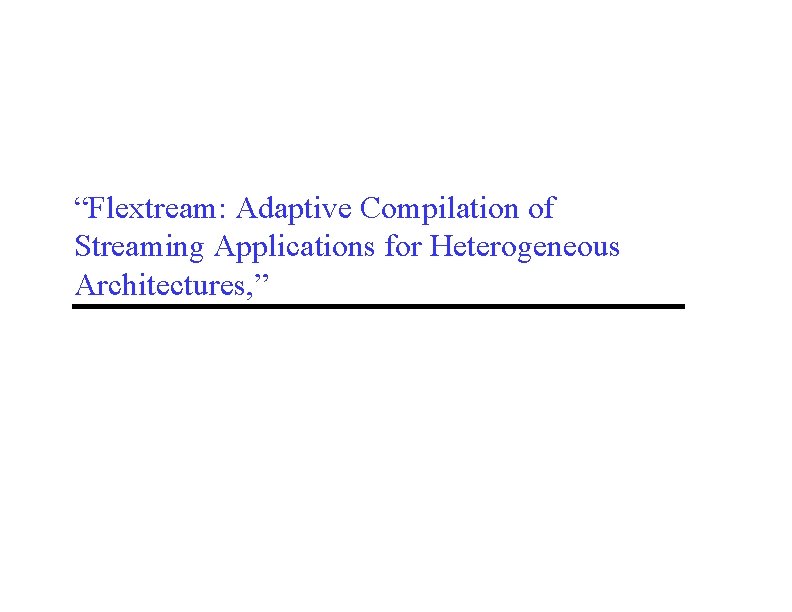

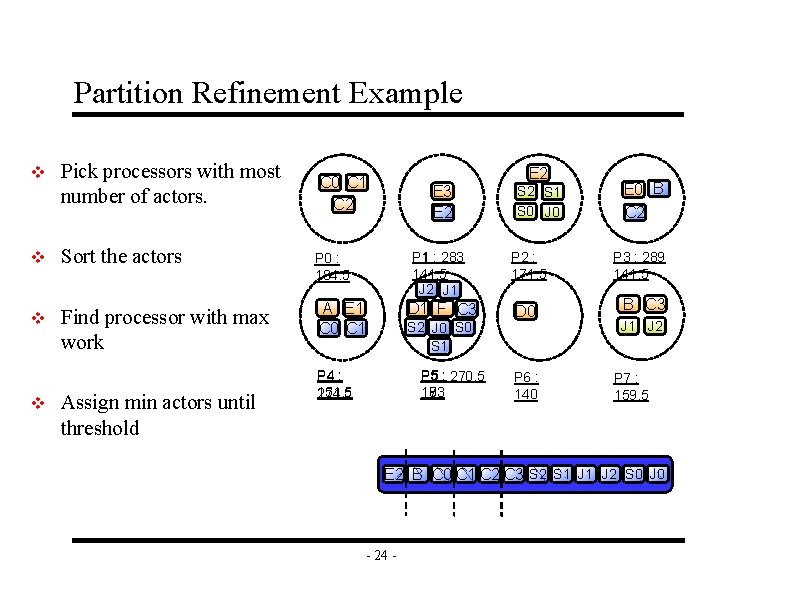

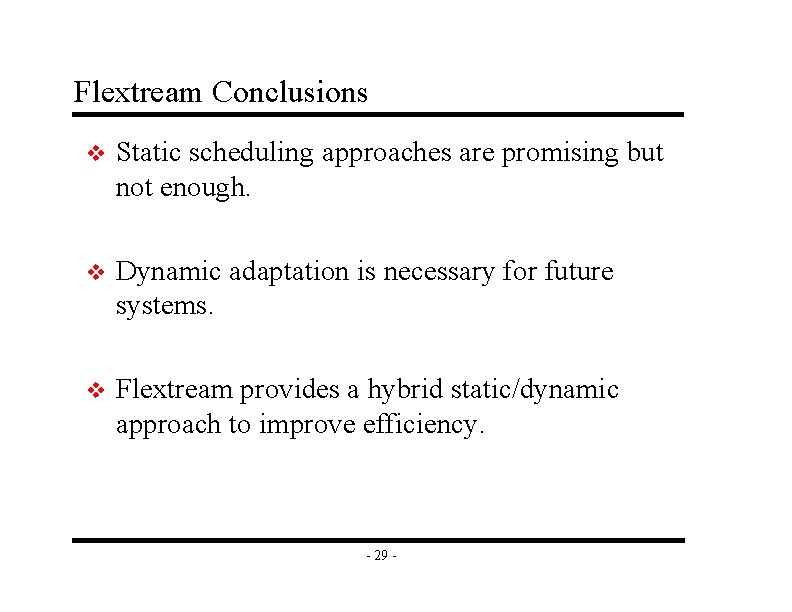

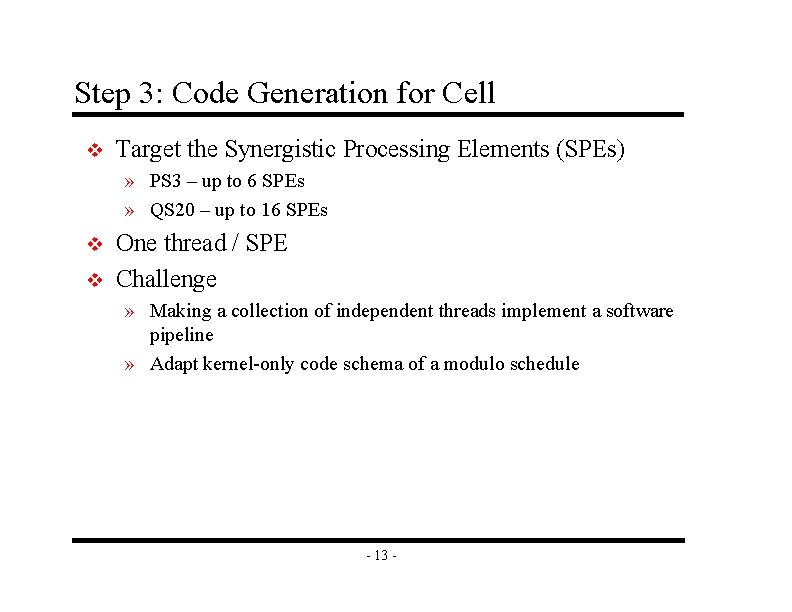

Prepass Replication [static]

[Figure: stream graph with actor workloads A: 10, B: 86, C: 246, D: 326, E: 566, F: 10, initially one actor per processor on P0 through P5 with P6 and P7 idle. After replication C is split into C0 to C3 (61.5 each), D into D0 and D1 (163 each), and E into E0 to E3 (141.5 each), with splitters S0 to S2 and joiners J0 to J2 added; the replicated actors are then spread across P0 through P7.]

![Partition Refinement dynamic 1 v Available resources at runtime can be more limited than Partition Refinement [dynamic 1] v Available resources at runtime can be more limited than](https://slidetodoc.com/presentation_image/51a8882532378e5daf654bbfdec35e91/image-24.jpg)

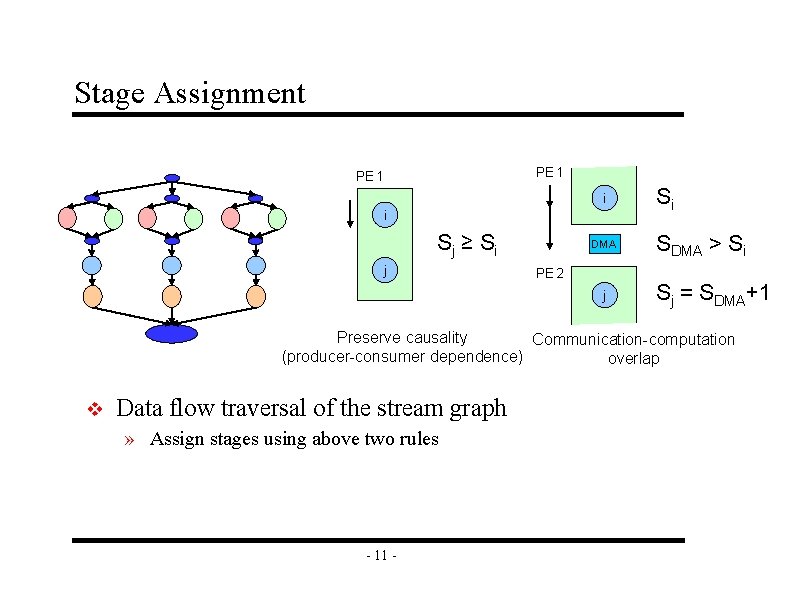

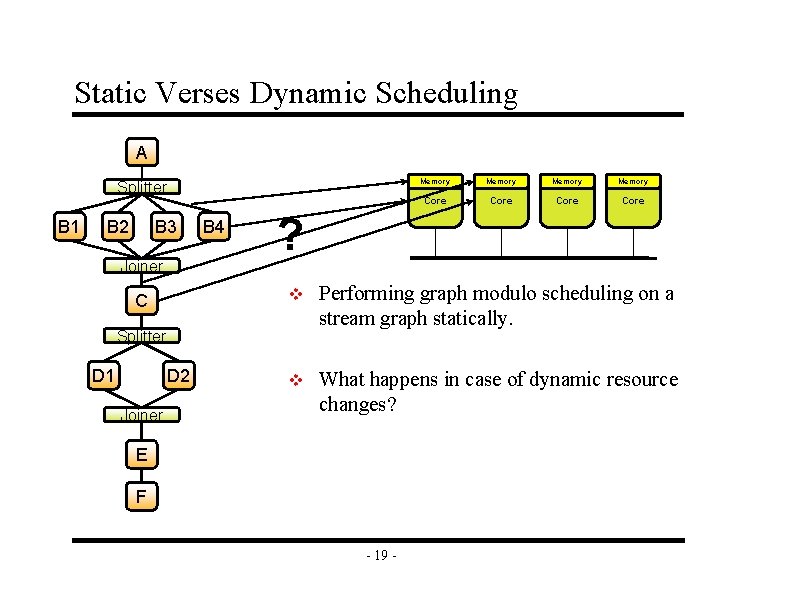

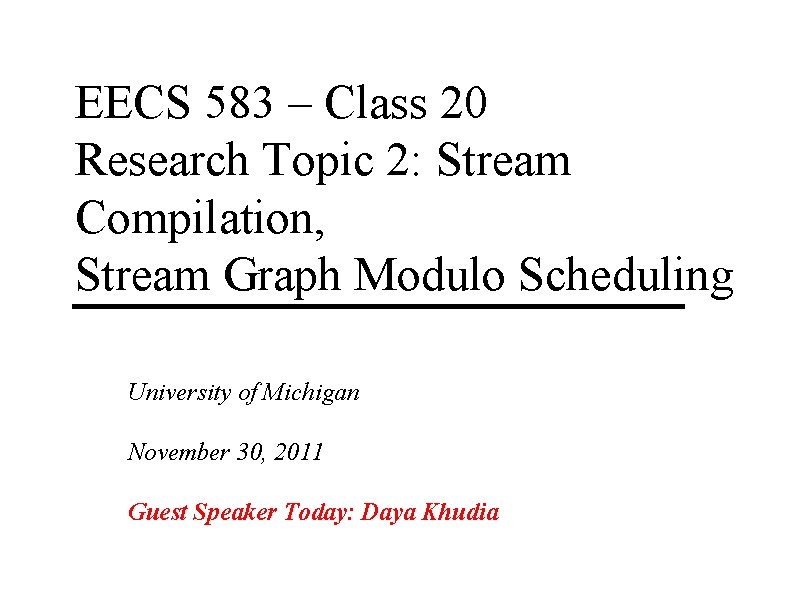

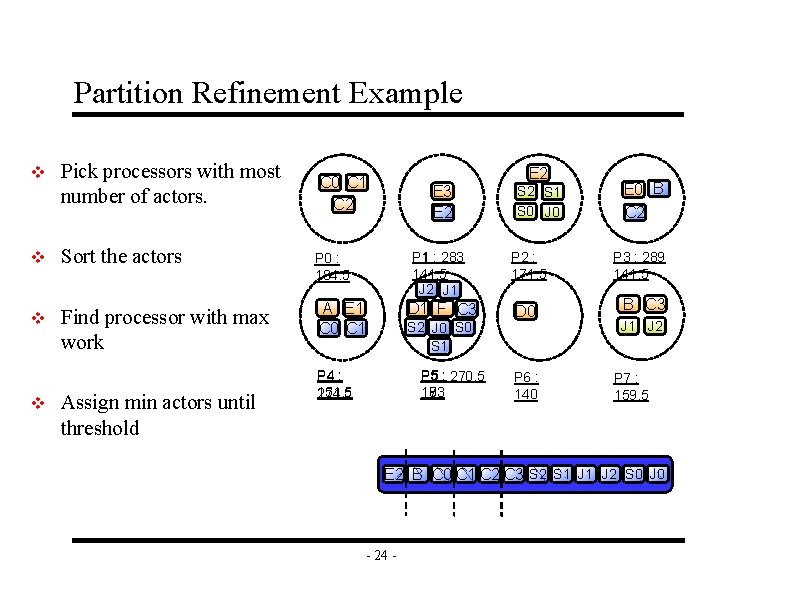

Partition Refinement [dynamic 1]

- Available resources at runtime can be more limited than resources in the static target architecture.
- Partition refinement tunes the actor-to-processor mapping for the active configuration.
- A greedy iterative algorithm is used to achieve this goal.

Partition Refinement Example

- Pick the processors with the largest number of actors.
- Sort the actors.
- Find the processor with the maximum work.
- Assign the minimum-work actors until the threshold is reached.

[Figure: actor-to-processor mapping and per-processor work for P0 through P7, updated as actors are reassigned.]
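The four steps leave several details open, so the C sketch below is only one plausible reading and not the algorithm from the Flextream paper. It assumes that the actors of a processor being vacated are sorted by work, that the threshold is the work of the most loaded remaining processor, and that each actor goes to the first processor that stays within the threshold (falling back to the least loaded one). `refine_partition` and `cmp_work` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* qsort comparator: ascending by work. */
static int cmp_work(const void *a, const void *b) {
    double x = *(const double *)a, y = *(const double *)b;
    return (x > y) - (x < y);
}

/*
 * Redistribute the actors of processor `victim` over the remaining processors.
 * load[p] is the current work on processor p; actor_work[] holds the work of
 * each actor being moved (sorted in place). Returns the new maximum load.
 */
double refine_partition(double *load, size_t num_pes, size_t victim,
                        double *actor_work, size_t num_actors) {
    assert(load != NULL && actor_work != NULL && victim < num_pes);
    qsort(actor_work, num_actors, sizeof *actor_work, cmp_work);

    /* Assumed threshold: work of the busiest remaining processor. */
    double threshold = 0.0;
    for (size_t p = 0; p < num_pes; p++)
        if (p != victim && load[p] > threshold)
            threshold = load[p];

    for (size_t a = 0; a < num_actors; a++) {
        size_t target = num_pes, least = num_pes;  /* num_pes means "none" */
        for (size_t p = 0; p < num_pes; p++) {
            if (p == victim)
                continue;
            if (least == num_pes || load[p] < load[least])
                least = p;
            if (target == num_pes && load[p] + actor_work[a] <= threshold)
                target = p;
        }
        if (target == num_pes)
            target = least;   /* nothing fits: use the least loaded processor */
        if (target == num_pes)
            break;            /* no processor remains besides the victim */
        load[target] += actor_work[a];
    }
    load[victim] = 0.0;

    double max_load = 0.0;
    for (size_t p = 0; p < num_pes; p++)
        if (load[p] > max_load)
            max_load = load[p];
    return max_load;
}
```

With loads {100, 60, 80, 30}, processor 3 vacated, and moved actors of work 20 and 10, both actors land on processor 1 and the maximum load stays at 100. Placing the smallest actors first keeps the busiest processor unchanged for as long as possible, so the II does not grow.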

![Stage Assignment dynamic 2 v Processor assignment only specifies how actors are overlapped across Stage Assignment [dynamic 2] v Processor assignment only specifies how actors are overlapped across](https://slidetodoc.com/presentation_image/51a8882532378e5daf654bbfdec35e91/image-26.jpg)

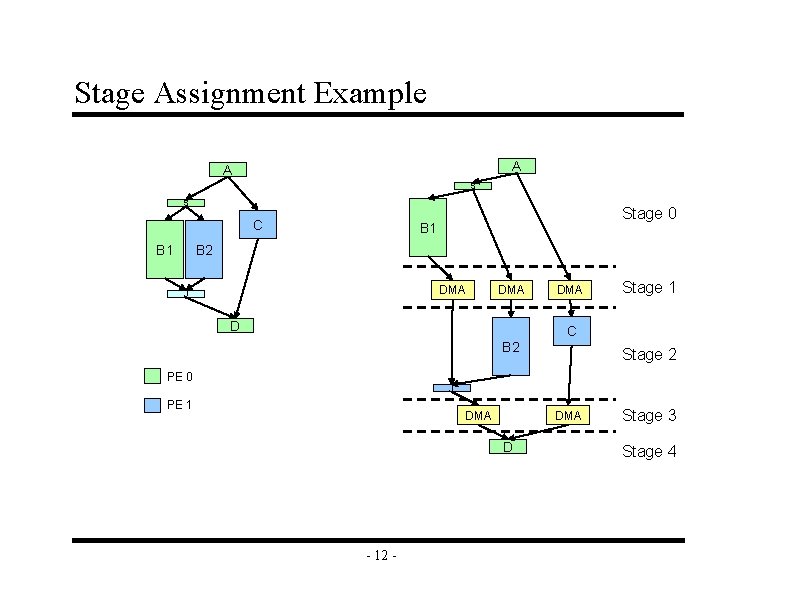

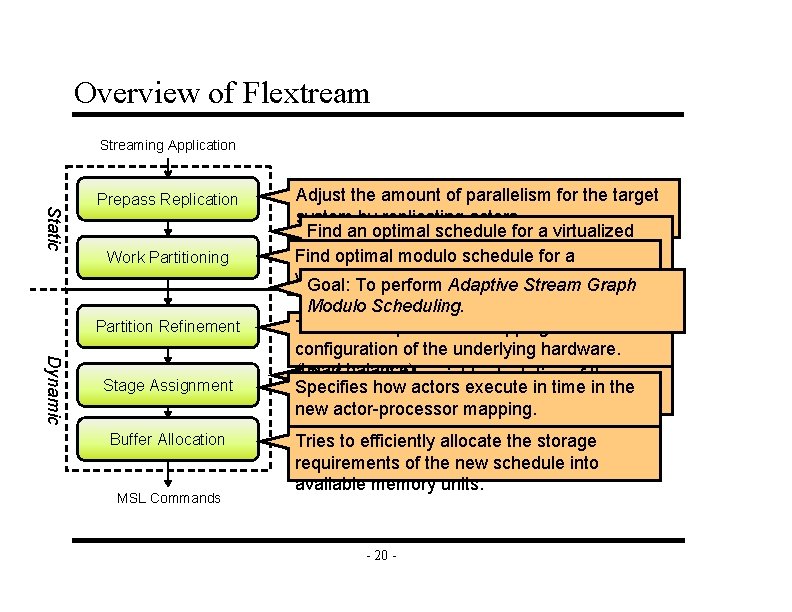

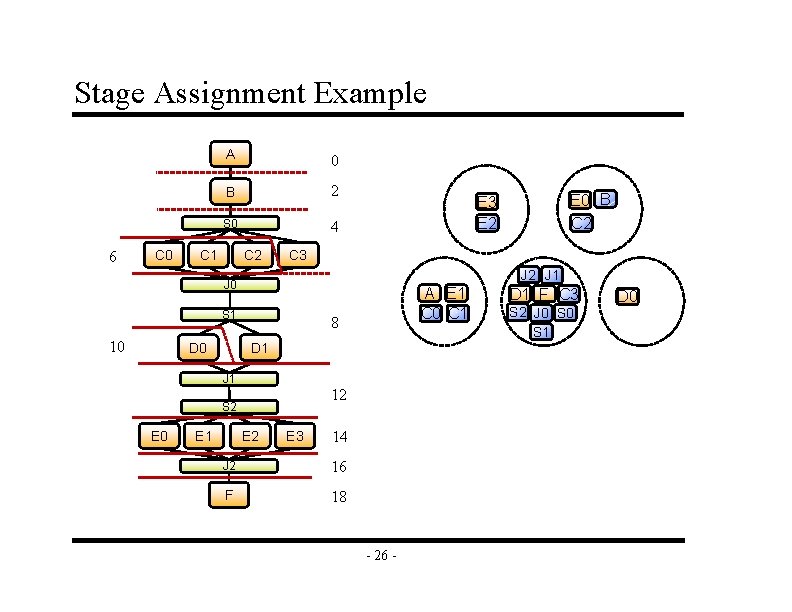

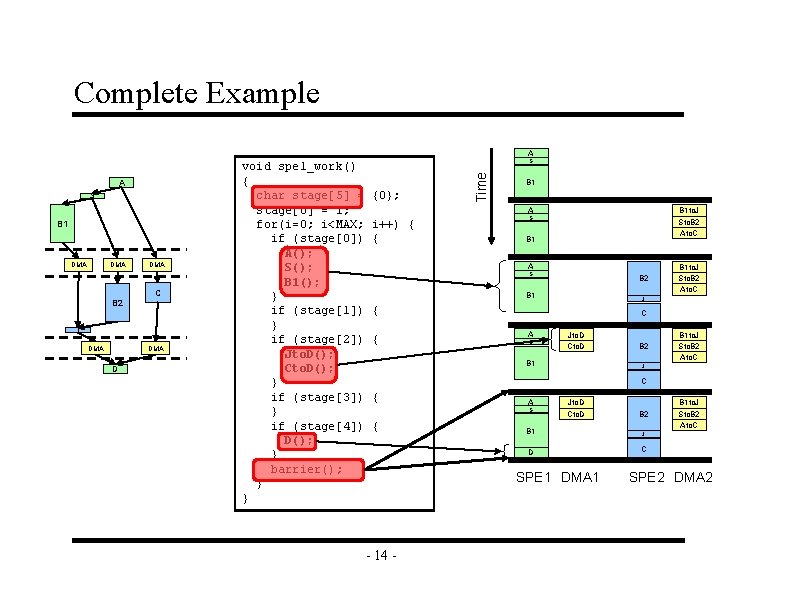

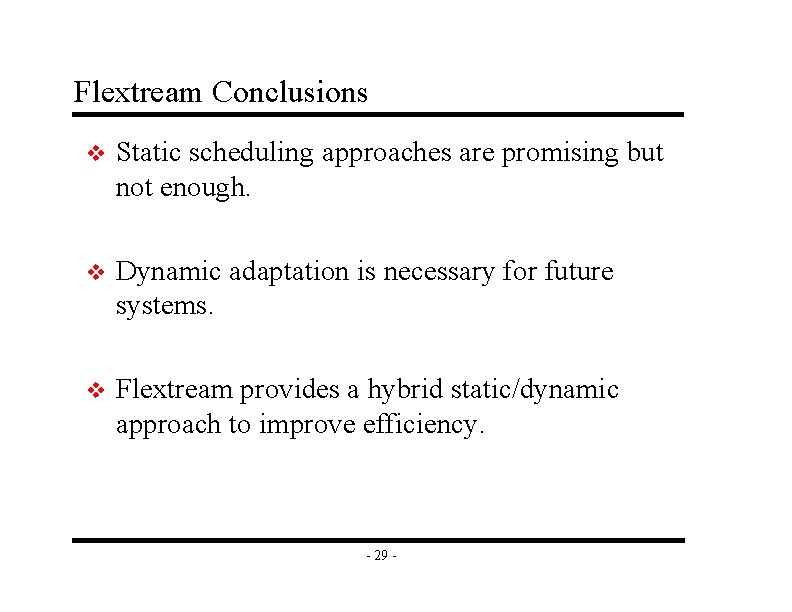

Stage Assignment [dynamic 2]

- Processor assignment only specifies how actors are overlapped across processors.
- Stage assignment finds how actors are overlapped in time.
- Relative start time of the actors is based on stage numbers.
- DMA operations will have a separate stage.

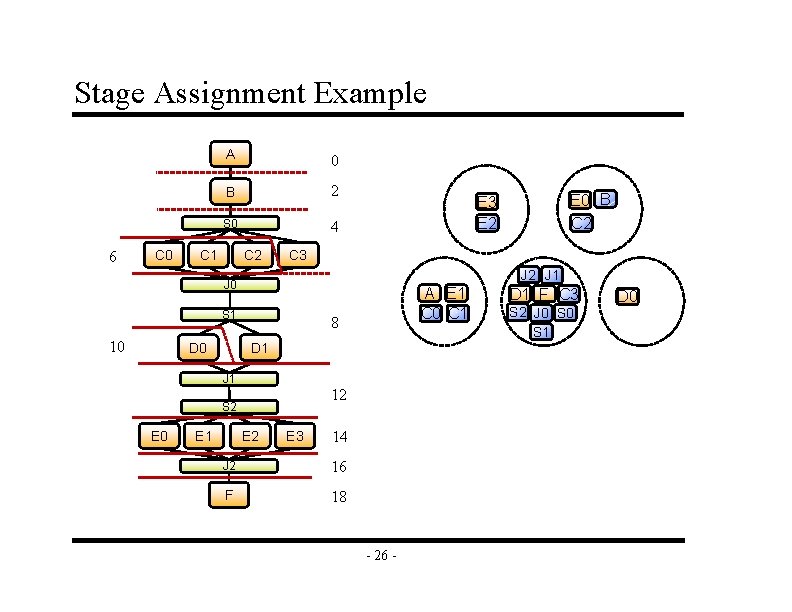

Stage Assignment Example

[Figure: the replicated stream graph (A, B, C0 to C3, D0, D1, E0 to E3, F, splitters S0 to S2, joiners J0 to J2) annotated with even stage numbers from 0 to 18.]

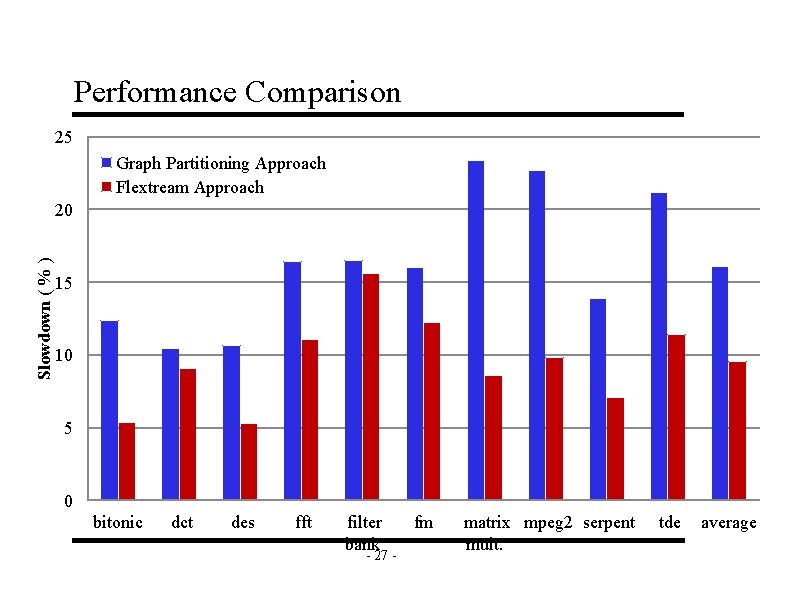

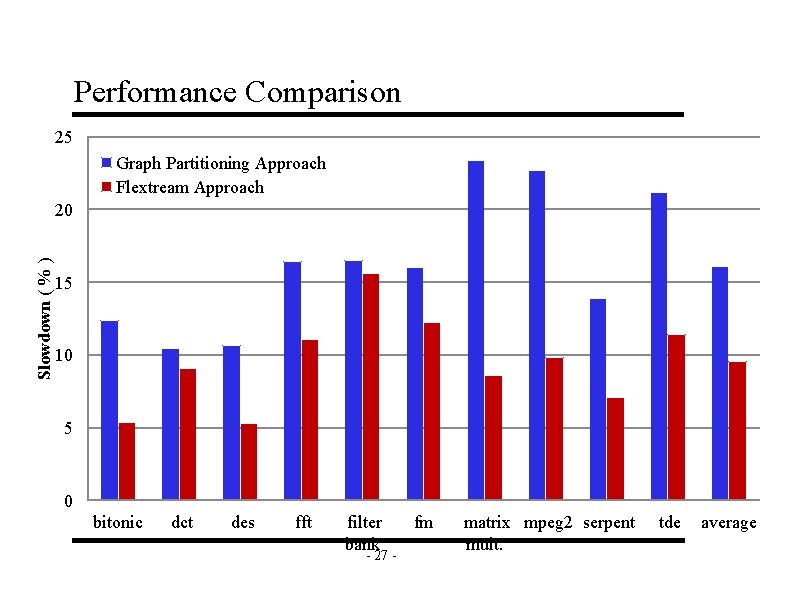

Performance Comparison

[Figure: bar chart of slowdown (%), y-axis from 0 to 25, comparing the graph partitioning approach with the Flextream approach on bitonic, dct, des, fft, filterbank, fm, matrix mult., mpeg2, serpent, tde, and the average.]

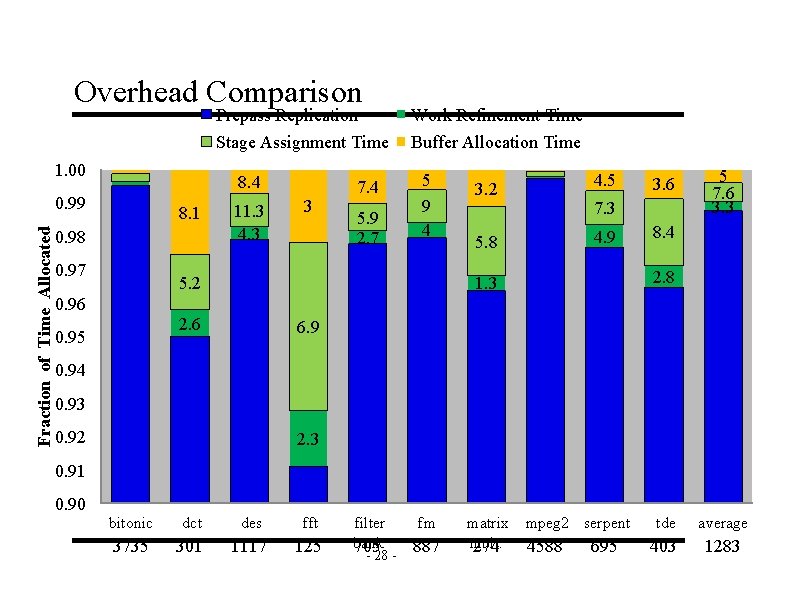

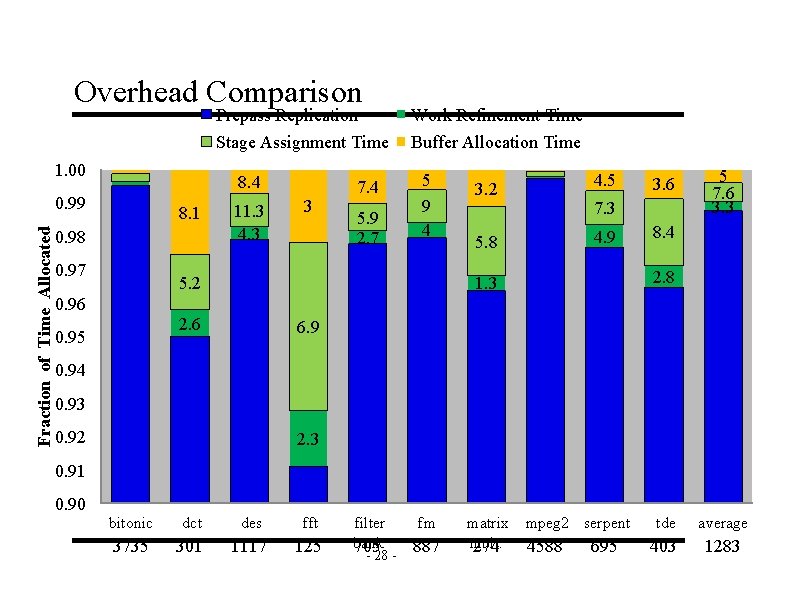

Overhead Comparison

[Figure: stacked bar chart of the fraction of time allocated (y-axis from 0.90 to 1.00) to Prepass Replication, Work Refinement Time, Stage Assignment Time, and Buffer Allocation Time per benchmark. The value printed under each benchmark (unit not stated on the slide): bitonic 3735, dct 301, des 1117, fft 125, filterbank 705, fm 887, matrix mult. 274, mpeg2 4588, serpent 695, tde 403, average 1283.]

Flextream Conclusions

- Static scheduling approaches are promising but not enough.
- Dynamic adaptation is necessary for future systems.
- Flextream provides a hybrid static/dynamic approach to improve efficiency.