Dynamic Hardware/Software Partitioning: A First Approach, Greg Stitt


Dynamic Hardware/Software Partitioning: A First Approach

Greg Stitt, Roman Lysecky, Frank Vahid*
Department of Computer Science and Engineering, University of California, Riverside
*Also with the Center for Embedded Computer Systems at UC Irvine

Introduction

- Dynamic optimizations an increasing trend
  - Examples
    - Dynamo: dynamic software optimizations
    - Transmeta Crusoe: dynamic code morphing
    - Just-in-time compilation: interpreted languages
  - Advantages
    - Transparent optimizations
      - No designer effort
      - No tool restrictions
    - Adapts to actual usage

Introduction

- Drawbacks of current dynamic optimizations
  - Currently limited to software optimizations
    - Limited speedup (1.1x to 1.3x common)
- Alternatively, we could perform hw/sw partitioning
  - Achieve large speedups (2x to 10x common)
  - However, presently dynamic optimization not possible

[Figure: a profiler identifies critical regions of the software; those regions move to hardware (ASIC/FPGA) while the rest remains software on the processor]

Introduction

- Ideally, we would perform hardware/software partitioning dynamically
  - Transparent partitioning
    - Supports all sw languages/tools
    - Most partitioning approaches have complex tool flows
  - Achieves better results than software optimizations
    - >2x speedup, energy savings
  - Adapts to actual usage
- Appropriate architecture required
  - Requires a processor and configurable logic

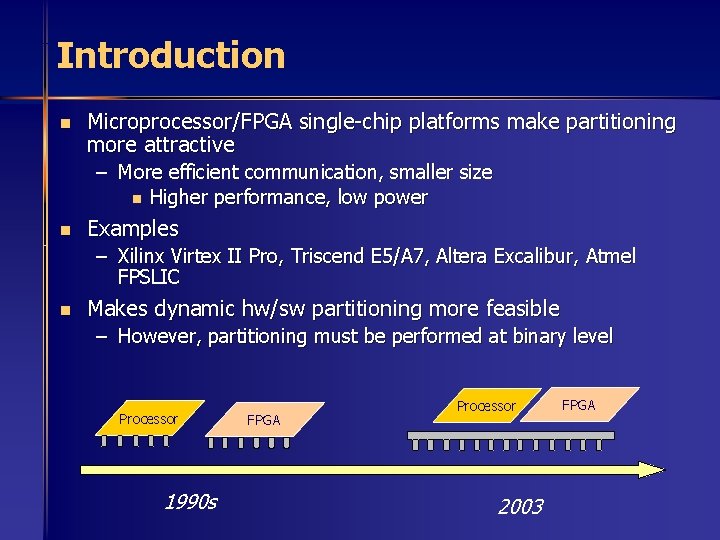

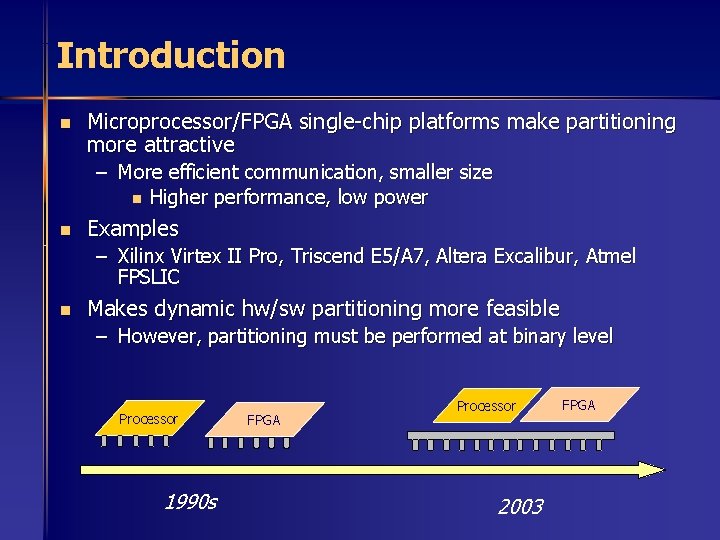

Introduction

- Microprocessor/FPGA single-chip platforms make partitioning more attractive
  - More efficient communication, smaller size
    - Higher performance, low power
  - Examples
    - Xilinx Virtex II Pro, Triscend E5/A7, Altera Excalibur, Atmel FPSLIC
- Makes dynamic hw/sw partitioning more feasible
  - However, partitioning must be performed at binary level

[Figure: processor and FPGA, 1990s versus 2003]

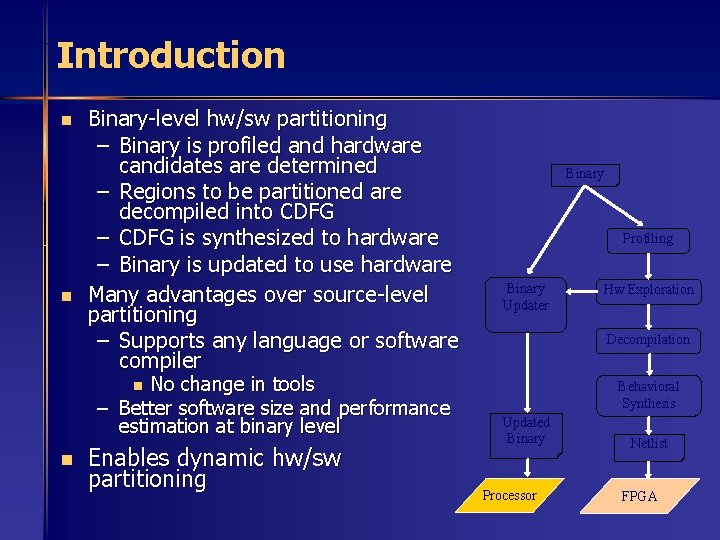

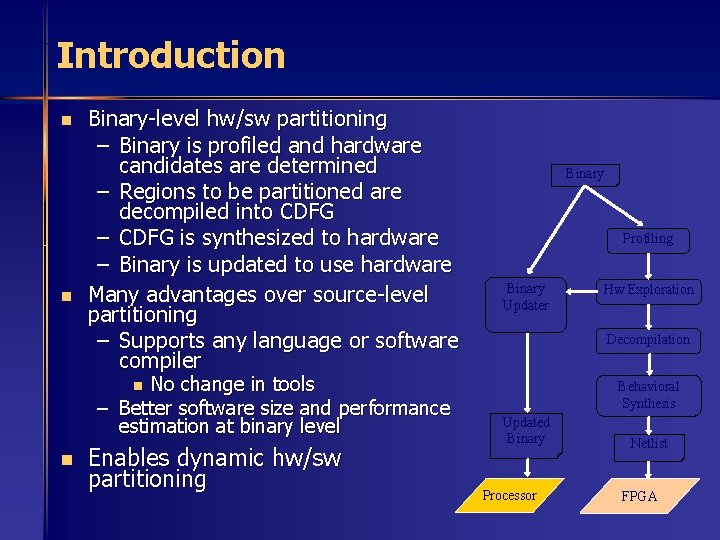

Introduction

- Binary-level hw/sw partitioning
  - Binary is profiled and hardware candidates are determined
  - Regions to be partitioned are decompiled into a CDFG
  - CDFG is synthesized to hardware
  - Binary is updated to use hardware
- Many advantages over source-level partitioning
  - Supports any language or software compiler
    - No change in tools
  - Better software size and performance estimation at binary level
- Enables dynamic hw/sw partitioning

[Figure: flow from Binary through Profiling, Decompilation, Hw Exploration, and Behavioral Synthesis to a Netlist for the FPGA, with a Binary Updater producing the Updated Binary for the Processor]

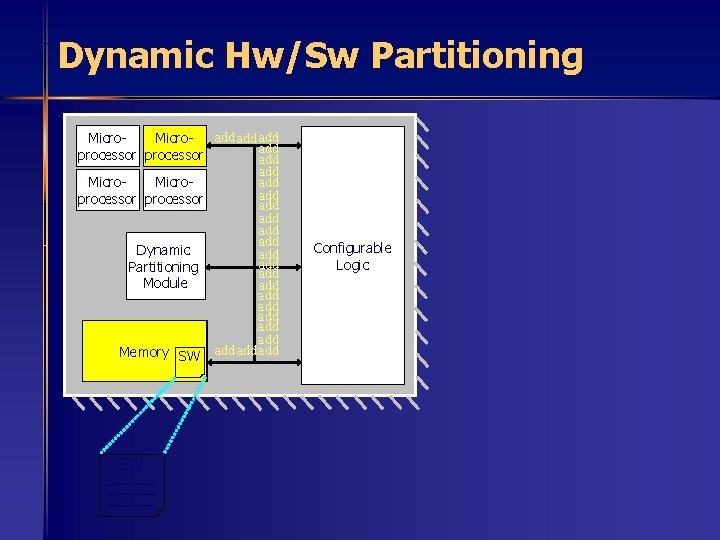

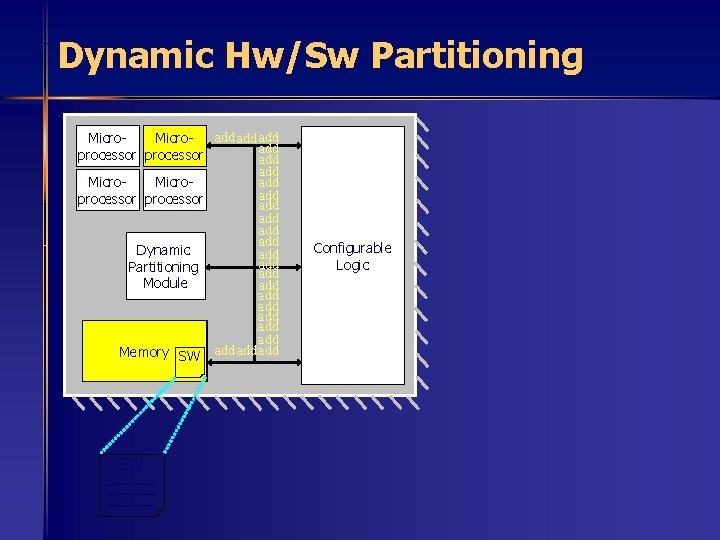

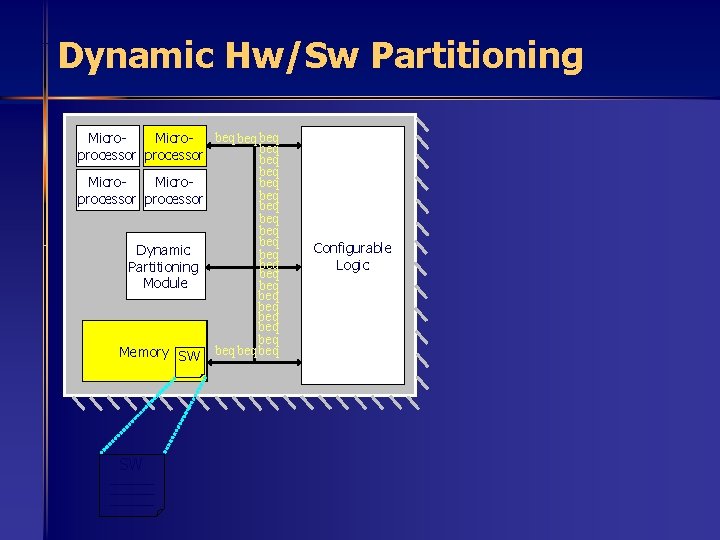

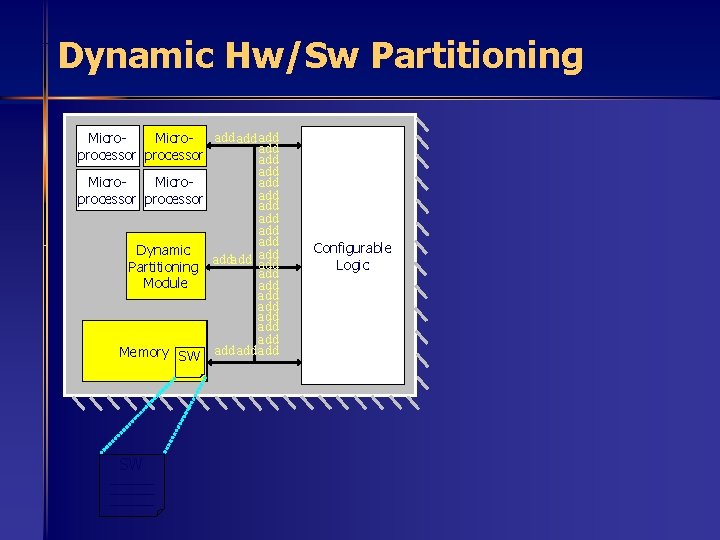

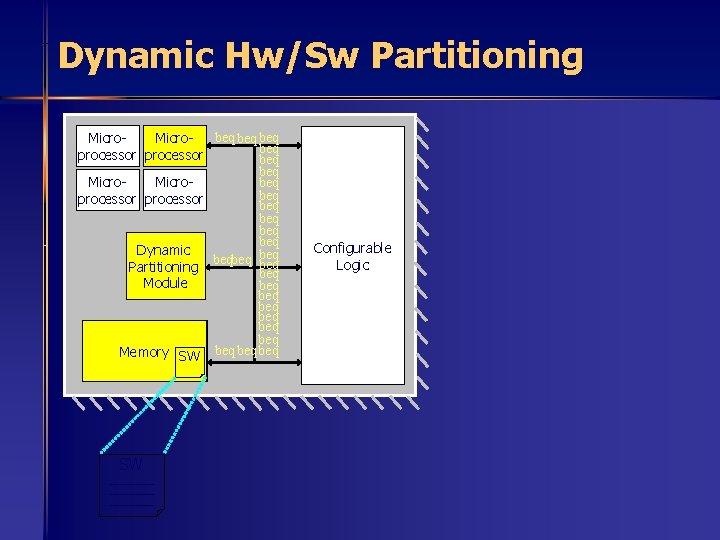

Dynamic Hw/Sw Partitioning

[Figure: animation frame showing add instructions in flight. Labels: Microprocessor, Dynamic Partitioning Module, Memory (SW), Configurable Logic]

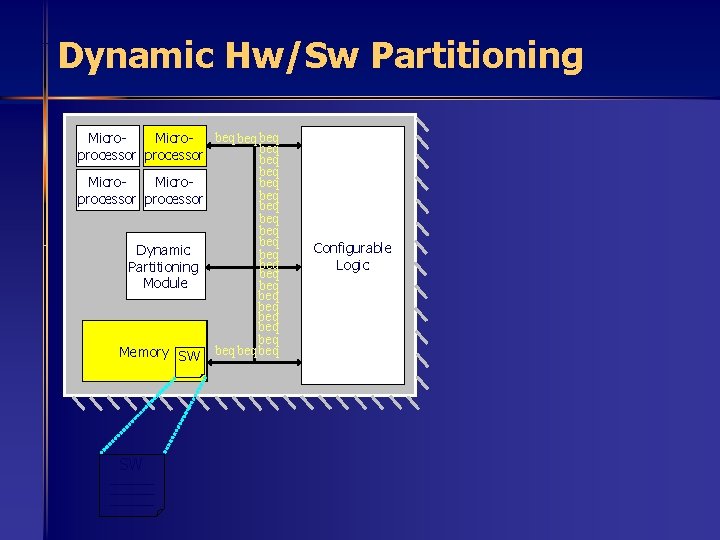

Dynamic Hw/Sw Partitioning

[Figure: animation frame showing beq instructions in flight. Labels: Microprocessor, Dynamic Partitioning Module, Memory (SW), Configurable Logic]

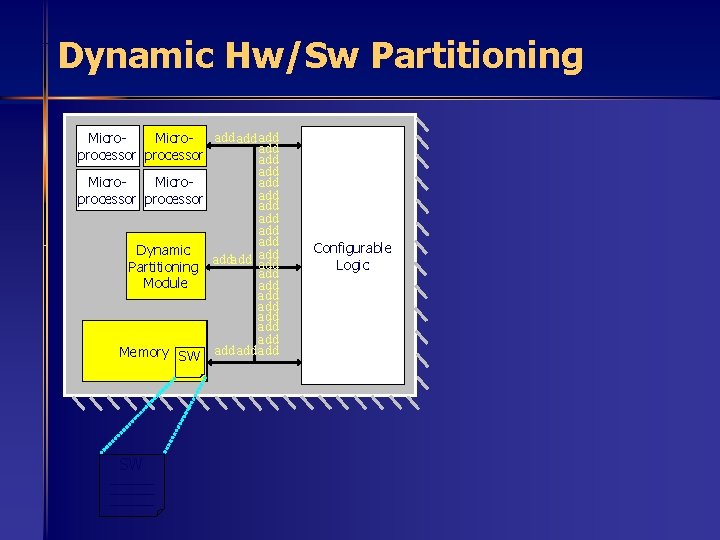

Dynamic Hw/Sw Partitioning

[Figure: later animation frame showing add instructions, now also drawn at the Dynamic Partitioning Module. Labels: Microprocessor, Dynamic Partitioning Module, Memory (SW), Configurable Logic]

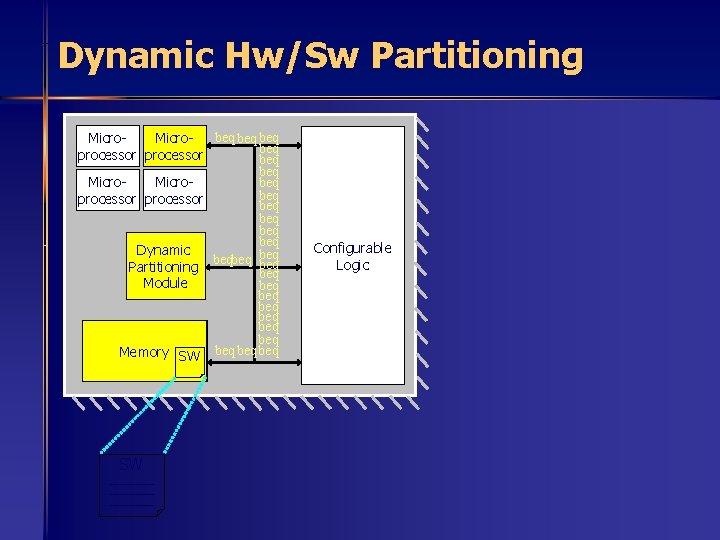

Dynamic Hw/Sw Partitioning

[Figure: later animation frame showing beq instructions, now also drawn at the Dynamic Partitioning Module. Labels: Microprocessor, Dynamic Partitioning Module, Memory (SW), Configurable Logic]

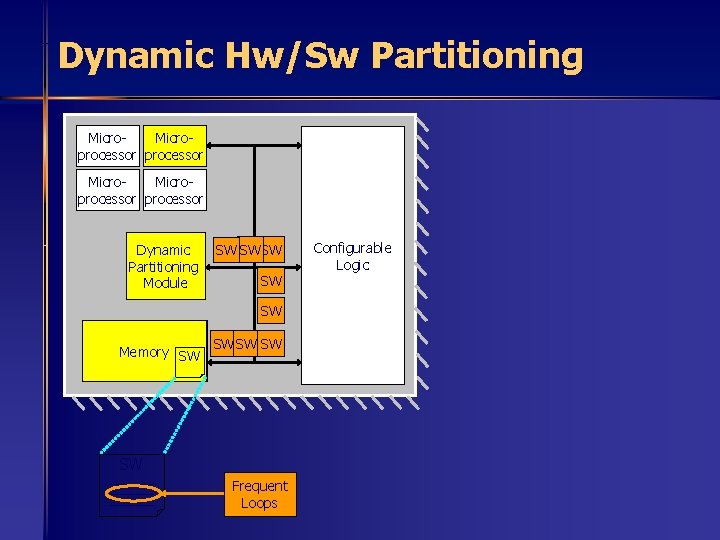

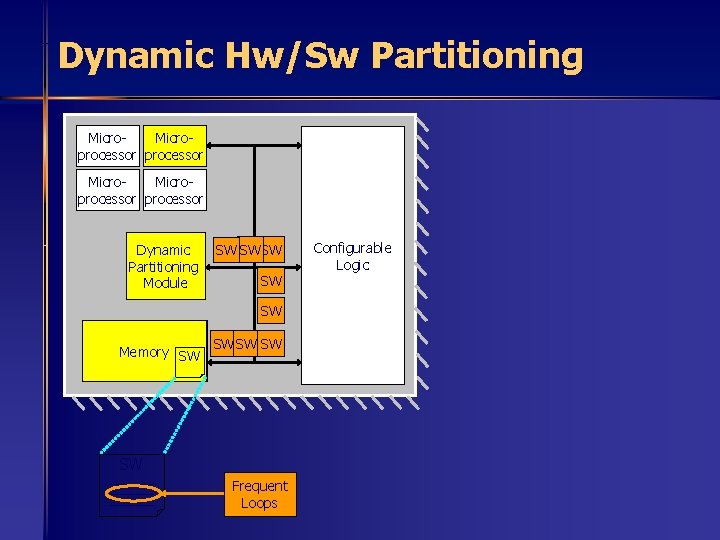

Dynamic Hw/Sw Partitioning

[Figure: frequent loops are marked within the software (SW) held in memory. Labels: Microprocessor, Dynamic Partitioning Module, Memory, Configurable Logic, Frequent Loops]

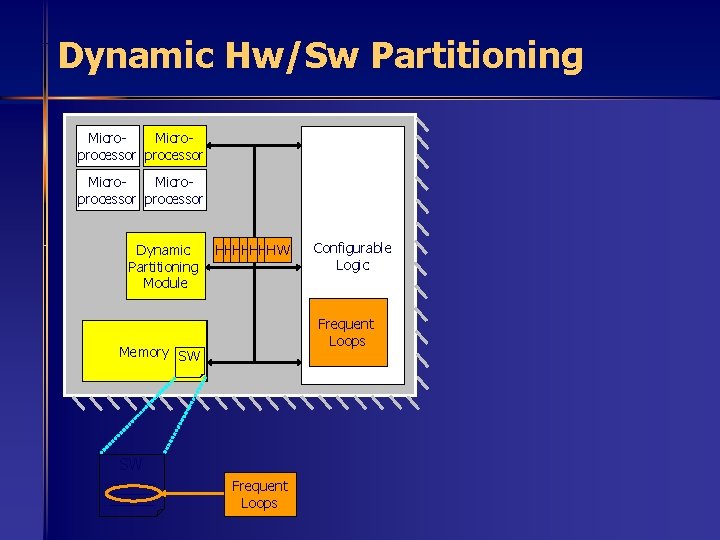

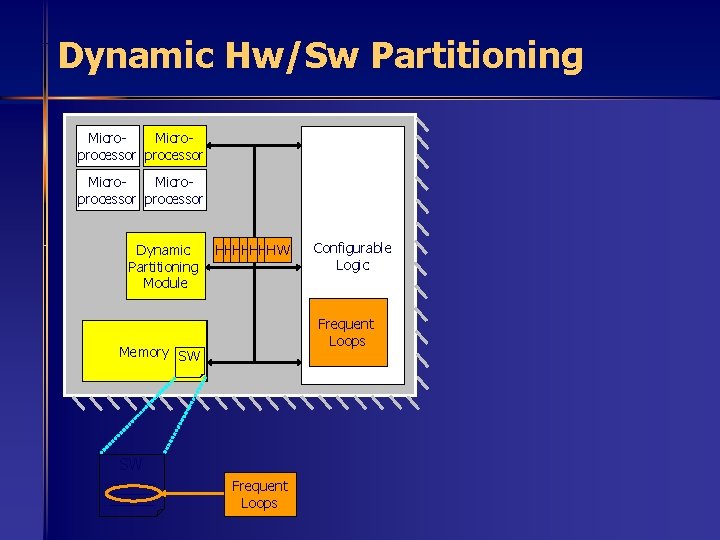

Dynamic Hw/Sw Partitioning

[Figure: the frequent loops are shown as HW at the Dynamic Partitioning Module. Labels: Microprocessor, Dynamic Partitioning Module, Memory (SW), Configurable Logic, Frequent Loops]

Dynamic Hw/Sw Partitioning

[Figure: the frequent loops are shown in the Configurable Logic. Labels: Microprocessor, Dynamic Partitioning Module, Memory (SW), Configurable Logic, Frequent Loops]

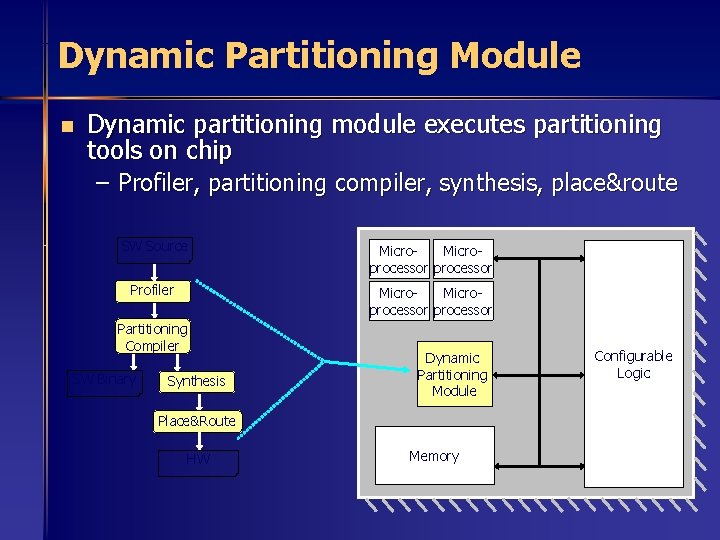

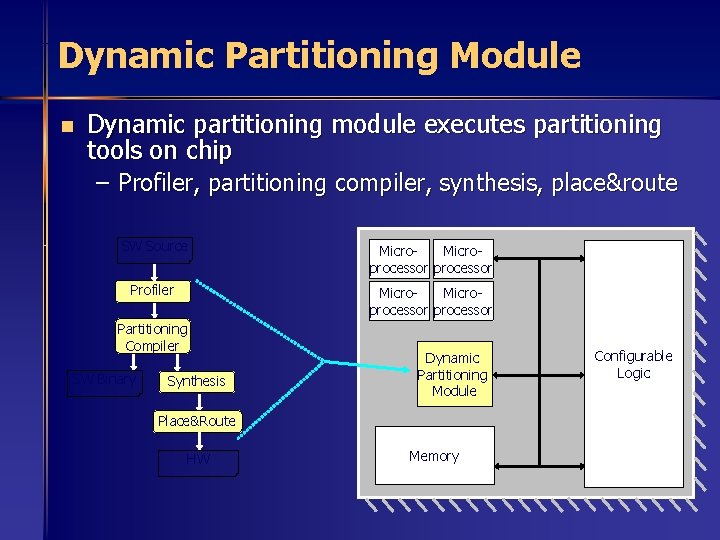

Dynamic Partitioning Module

- Dynamic partitioning module executes partitioning tools on chip
  - Profiler, partitioning compiler, synthesis, place & route

[Figure: tool flow (SW Source, Profiler, Partitioning Compiler, SW Binary, Synthesis, Place & Route, HW) shown inside the Dynamic Partitioning Module, alongside Microprocessor, Memory, and Configurable Logic]

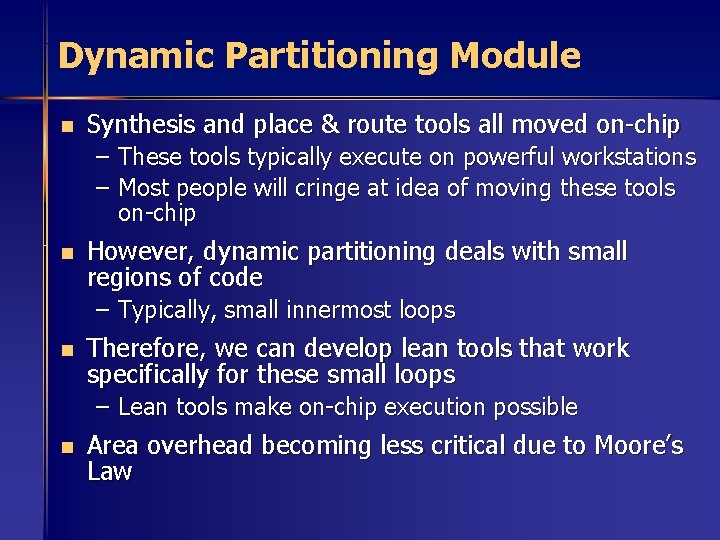

Dynamic Partitioning Module

- Synthesis and place & route tools all moved on-chip
  - These tools typically execute on powerful workstations
  - Most people will cringe at the idea of moving these tools on-chip
- However, dynamic partitioning deals with small regions of code
  - Typically, small innermost loops
- Therefore, we can develop lean tools that work specifically for these small loops
  - Lean tools make on-chip execution possible
- Area overhead becoming less critical due to Moore's Law

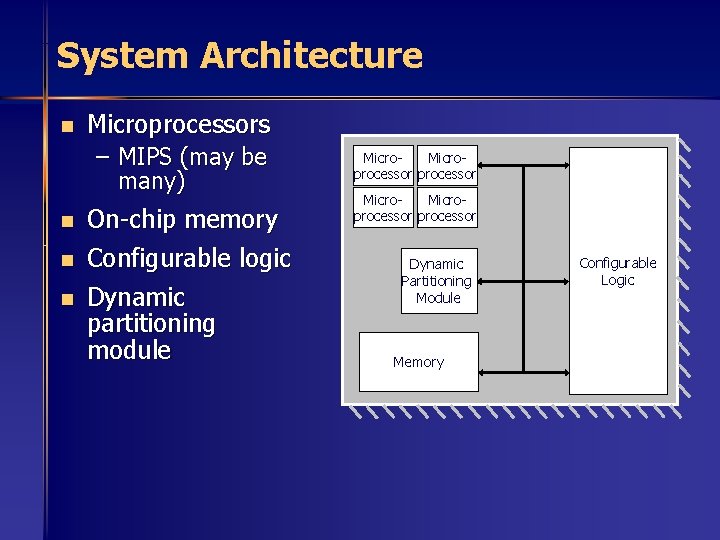

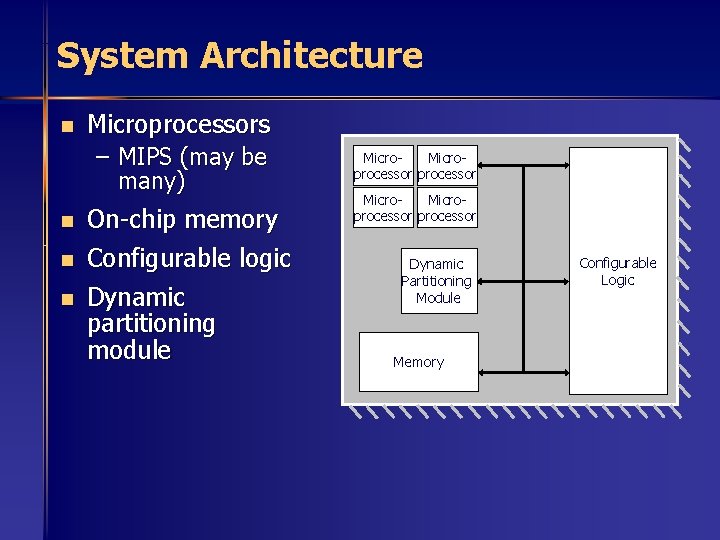

System Architecture

- Microprocessors
  - MIPS (may be many)
- On-chip memory
- Configurable logic
- Dynamic partitioning module

[Figure: Microprocessor, Dynamic Partitioning Module, Memory, Configurable Logic]

Dynamic Partitioning Module

- Dynamically detects frequent loops and then reimplements the loops in hardware running on the configurable logic
- Architectural components
  - Profiler
  - Additional processor and memory
    - But SOCs may have dozens anyway
    - Alternatively, we could share the main processor

[Figure: Profiler, Memory, Partitioning Co-Processor]

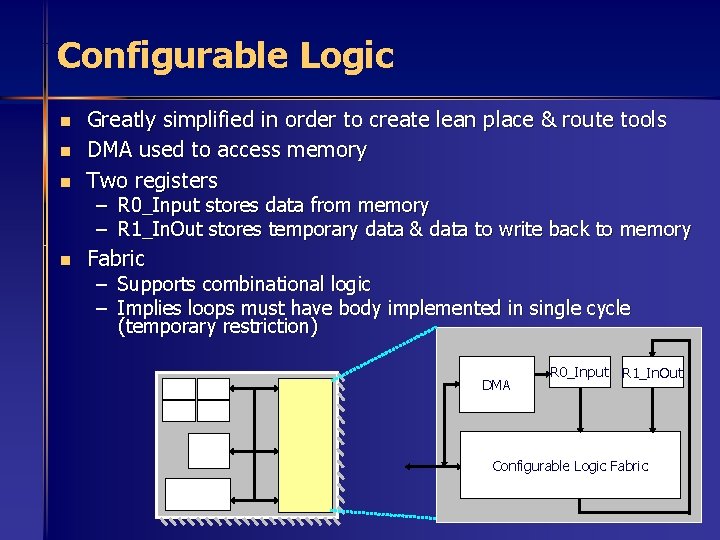

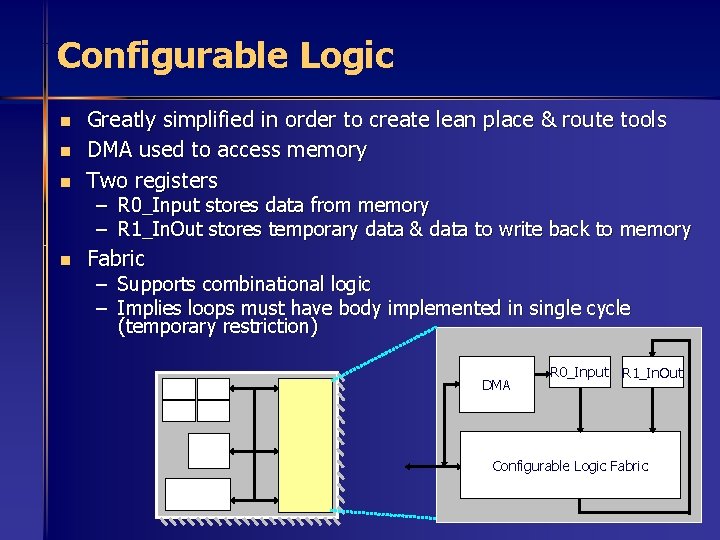

Configurable Logic

- Greatly simplified in order to create lean place & route tools
- DMA used to access memory
- Two registers
  - R0_Input stores data from memory
  - R1_InOut stores temporary data and data to write back to memory
- Fabric
  - Supports combinational logic
  - Implies loops must have body implemented in single cycle (temporary restriction)

[Figure: DMA, R0_Input, R1_InOut, Configurable Logic Fabric]

Configurable Logic Fabric

- Fabric
  - 3-input, 2-output LUTs surrounded by switch matrices
- Switch matrix
  - Connects a wire to the same channel on a different side
- LUT
  - 3-input (8-word), 2-output SRAM

[Figure: fabric of LUTs and switch matrices (SM); a switch matrix with channels 0 to 3 on each side; a LUT drawn as an 8 x 2 SRAM with inputs and outputs]
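To make the LUT description concrete, here is a minimal Python sketch that models a 3-input, 2-output LUT as an 8-word by 2-bit table. The class name `Lut3x2`, the bit ordering of the address, and the full-adder configuration are illustrative assumptions, not details taken from the slides.

```python
class Lut3x2:
    """A 3-input, 2-output LUT modeled as an 8-word by 2-bit SRAM."""

    def __init__(self, f0, f1):
        # Precompute one 2-bit word for each of the 8 input combinations.
        self.sram = []
        for addr in range(8):
            a, b, c = (addr >> 2) & 1, (addr >> 1) & 1, addr & 1
            self.sram.append((f0(a, b, c) & 1, f1(a, b, c) & 1))

    def read(self, a, b, c):
        # The three inputs form the SRAM address; the stored word is the output.
        return self.sram[(a << 2) | (b << 1) | c]


# Hypothetical configuration: sum and carry-out of a full adder in one LUT.
full_adder = Lut3x2(
    f0=lambda a, b, cin: a ^ b ^ cin,
    f1=lambda a, b, cin: (a & b) | (cin & (a ^ b)),
)
```

Packing two functions of the same three inputs into one table is what makes the second output essentially free in this model.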

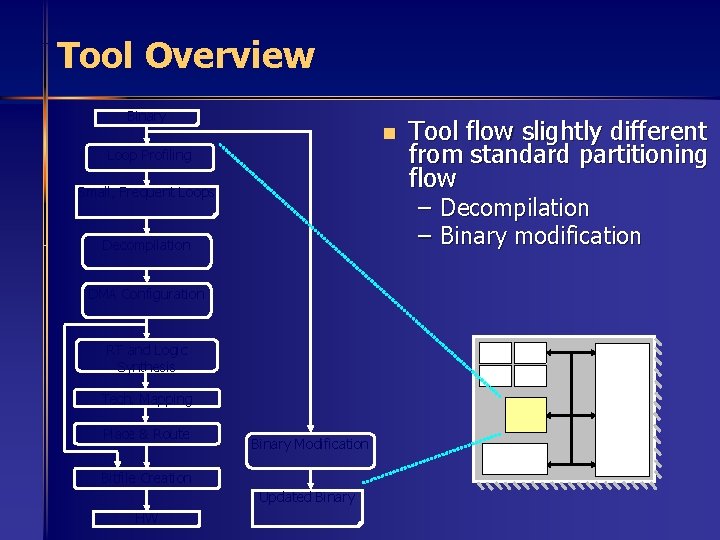

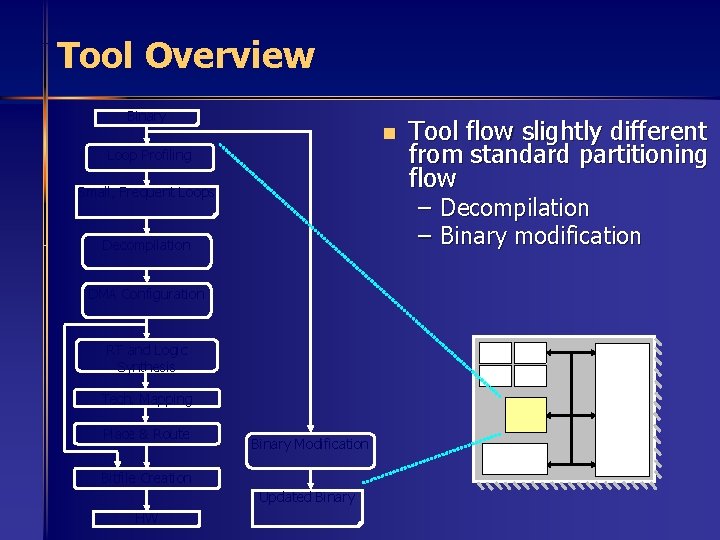

Tool Overview

- Tool flow slightly different from standard partitioning flow
  - Decompilation
  - Binary modification

[Figure: Binary, Loop Profiling, Small Frequent Loops, Decompilation, DMA Configuration, RT and Logic Synthesis, Tech. Mapping, Place & Route, Bitfile Creation, HW; Binary Modification produces the Updated Binary]

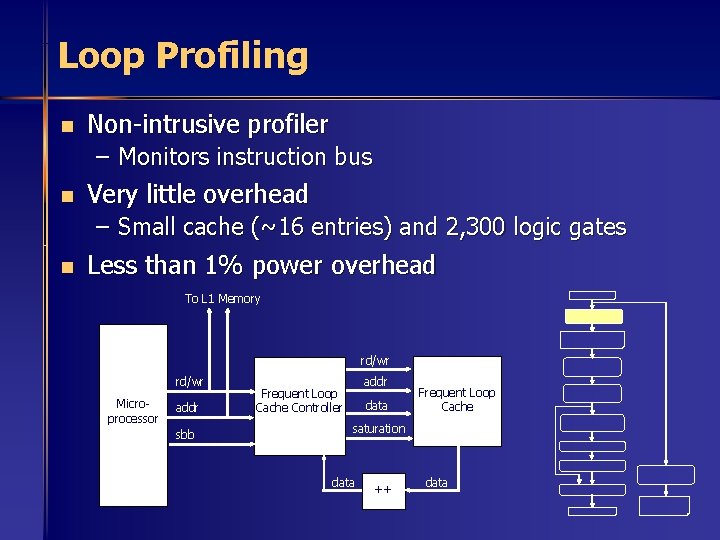

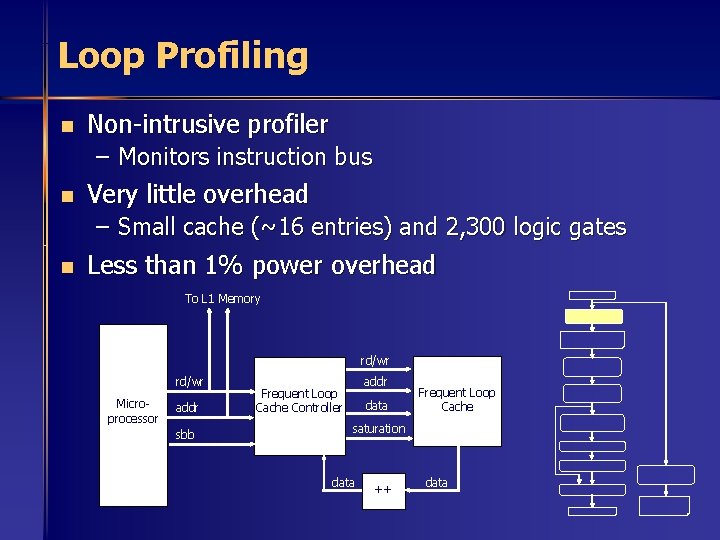

Loop Profiling

- Non-intrusive profiler
  - Monitors instruction bus
- Very little overhead
  - Small cache (~16 entries) and 2,300 logic gates
  - Less than 1% power overhead

[Figure: the microprocessor connects to L1 memory (rd/wr, addr, data); sbb and addr signals feed the Frequent Loop Cache Controller; the Frequent Loop Cache has an incrementer (++) and a saturation signal]
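The following Python sketch is one way to picture the frequent loop cache: a small table of saturating counters that is bumped each time a short backward branch (the `sbb` signal in the figure) is seen. Keying entries by branch target address, the 8-bit counter width, and the evict-the-coldest replacement policy are all assumptions made for illustration; the slides only give the entry count, the incrementer, and the saturation signal.

```python
class FrequentLoopCache:
    """Small table of saturating counters, one per observed loop."""

    def __init__(self, entries=16, counter_bits=8):
        self.entries = entries
        self.max_count = (1 << counter_bits) - 1
        self.counts = {}  # branch target address -> saturating counter

    def observe_sbb(self, target_addr):
        """Record one taken short backward branch to target_addr."""
        if target_addr in self.counts:
            # Increment, but saturate instead of wrapping around.
            self.counts[target_addr] = min(
                self.counts[target_addr] + 1, self.max_count
            )
        elif len(self.counts) < self.entries:
            self.counts[target_addr] = 1
        else:
            # Assumed policy: evict the entry with the lowest count.
            victim = min(self.counts, key=self.counts.get)
            del self.counts[victim]
            self.counts[target_addr] = 1

    def hottest(self, n=1):
        """Return the n loop addresses with the highest counts."""
        return sorted(self.counts, key=self.counts.get, reverse=True)[:n]
```

Because the model only reacts to branch events, it mirrors the non-intrusive idea: nothing in the monitored program has to change.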

Decompilation

- Decompilation recovers high-level information
- Creates optimized CDFG
  - All instruction-set inefficiencies are removed
- Binary partitioning has been shown to achieve similar results to source-level partitioning for many applications
  - [Greg Stitt, Frank Vahid, ICCAD 2002]

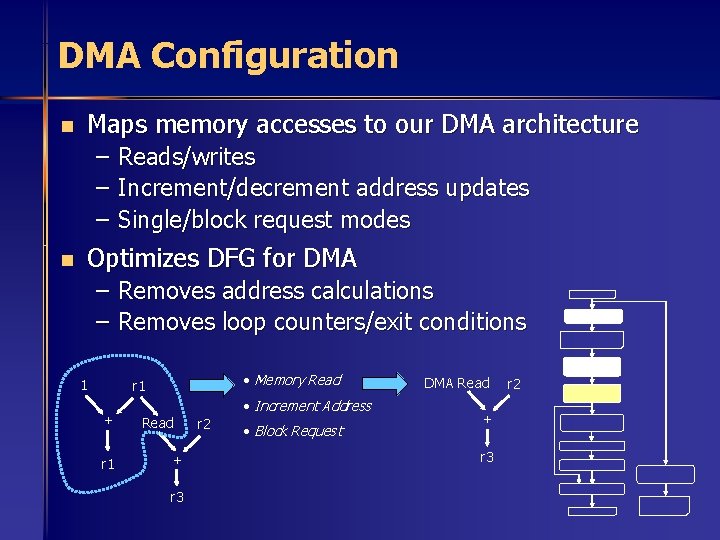

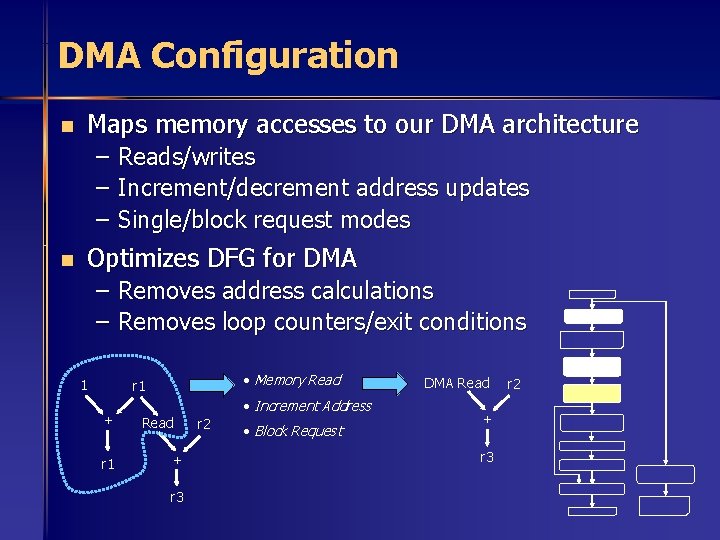

DMA Configuration

- Maps memory accesses to our DMA architecture
  - Reads/writes
  - Increment/decrement address updates
  - Single/block request modes
- Optimizes DFG for DMA
  - Removes address calculations
  - Removes loop counters/exit conditions

[Figure: a DFG containing an address increment (r1 + 1), a memory read, and an add (r2 + r3) is reduced to a DMA read feeding the add; DMA settings: Memory Read, Increment Address, Block Request]

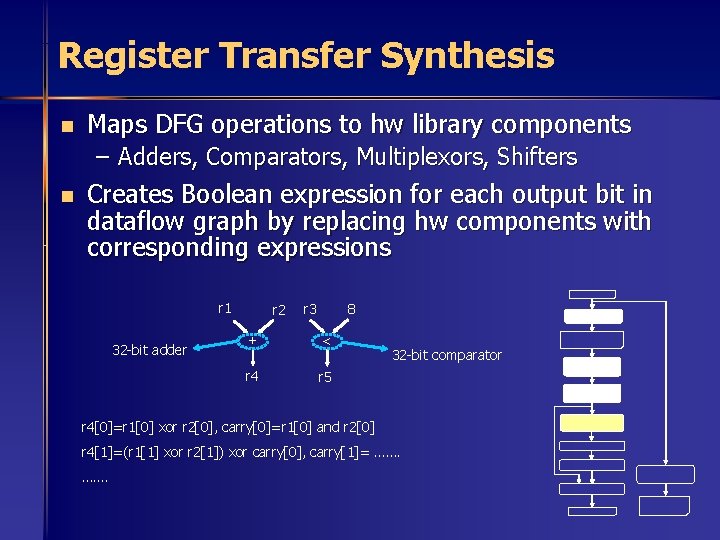

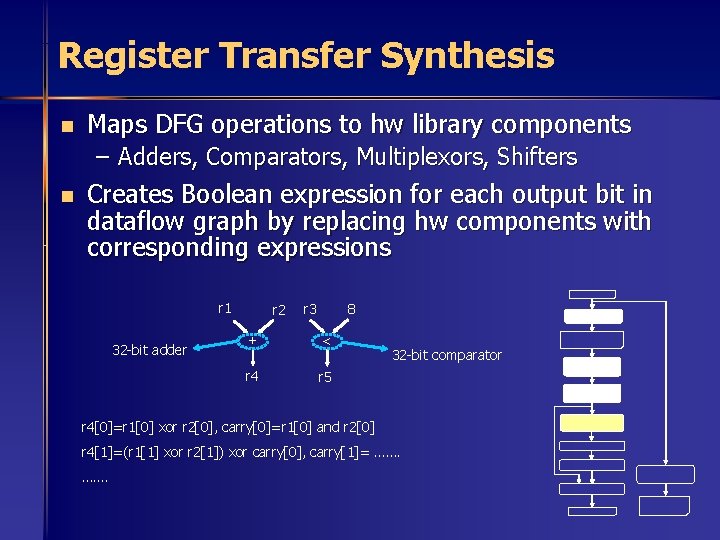

Register Transfer Synthesis

- Maps DFG operations to hw library components
  - Adders, comparators, multiplexors, shifters
- Creates a Boolean expression for each output bit in the dataflow graph by replacing hw components with corresponding expressions

[Figure: DFG with r4 = r1 + r2 (32-bit adder) and r5 = r3 < 8 (32-bit comparator)]

- r4[0] = r1[0] xor r2[0], carry[0] = r1[0] and r2[0]
- r4[1] = (r1[1] xor r2[1]) xor carry[0], carry[1] = ...
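As a rough illustration of expanding an adder into per-bit Boolean expressions, the Python sketch below emits equations in the slide's notation and then evaluates the same logic on integers as a sanity check. The function names are hypothetical, and the carry expression for bits above 0 is the standard full-adder carry, which the slide elides rather than states.

```python
def ripple_carry_equations(a, b, out, width):
    """Return Boolean equation strings for out = a + b, bit by bit."""
    eqs = []
    for i in range(width):
        if i == 0:
            eqs.append(f"{out}[0] = {a}[0] xor {b}[0]")
            eqs.append(f"carry[0] = {a}[0] and {b}[0]")
        else:
            eqs.append(
                f"{out}[{i}] = ({a}[{i}] xor {b}[{i}]) xor carry[{i - 1}]"
            )
            # Standard full-adder carry (an assumption; elided on the slide).
            eqs.append(
                f"carry[{i}] = ({a}[{i}] and {b}[{i}]) or "
                f"(carry[{i - 1}] and ({a}[{i}] xor {b}[{i}]))"
            )
    return eqs


def evaluate_adder(x, y, width):
    """Evaluate the same per-bit logic on integers (result modulo 2**width)."""
    result, carry = 0, 0
    for i in range(width):
        xi, yi = (x >> i) & 1, (y >> i) & 1
        result |= (xi ^ yi ^ carry) << i
        carry = (xi & yi) | (carry & (xi ^ yi))
    return result
```

Calling `ripple_carry_equations("r1", "r2", "r4", 32)` would produce the full set of equations for the 32-bit adder in the figure.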

Logic Synthesis

- Optimizes Boolean equations from RT synthesis
  - Large opportunity for logic minimization due to use of immediate values in the binary
- Simple on-chip 2-level logic minimization method
  - Lysecky/Vahid, DAC'03, session 20.4 (9:45 Wed)

Example: r2 = r1 + 4

- Before minimization
  - r2[0] = r1[0] xor 0
  - r2[1] = r1[1] xor 0 xor carry[0]
  - r2[2] = r1[2] xor 1 xor carry[1]
  - r2[3] = r1[3] xor 0 xor carry[2]
  - ...
- After minimization
  - r2[0] = r1[0]
  - r2[1] = r1[1] xor carry[0]
  - r2[2] = r1[2]' xor carry[1]
  - r2[3] = r1[3] xor carry[2]
  - ...
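The simplification in the r1 + 4 example can be reproduced with plain constant folding over XOR terms, as in this Python sketch. It is not the two-level minimization method cited on the slide; it only applies the identities x xor 0 = x and x xor 1 = x'. All function names are hypothetical.

```python
def simplify_xor(terms):
    """Fold constants out of an XOR of terms.

    terms: variable names (str) or the constants 0 and 1.
    Returns (variables, inverted): the XOR of `variables`, complemented
    when `inverted` is True.
    """
    variables, inverted = [], False
    for t in terms:
        if t == 0:
            continue                 # x xor 0 = x
        elif t == 1:
            inverted = not inverted  # x xor 1 = x'
        else:
            variables.append(t)
    return variables, inverted


def sum_bit_terms(reg, const, i):
    """XOR terms for bit i of reg + const, in the slide's carry notation."""
    terms = [f"{reg}[{i}]", (const >> i) & 1]
    if i > 0:
        terms.append(f"carry[{i - 1}]")
    return terms


def render(variables, inverted):
    """Print the simplified XOR, marking a complement with a trailing '."""
    if not variables:
        return "1" if inverted else "0"
    head = variables[0] + ("'" if inverted else "")
    return " xor ".join([head] + variables[1:])
```

For instance, `render(*simplify_xor(sum_bit_terms("r1", 4, 2)))` yields `r1[2]' xor carry[1]`, matching the third simplified equation on the slide.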

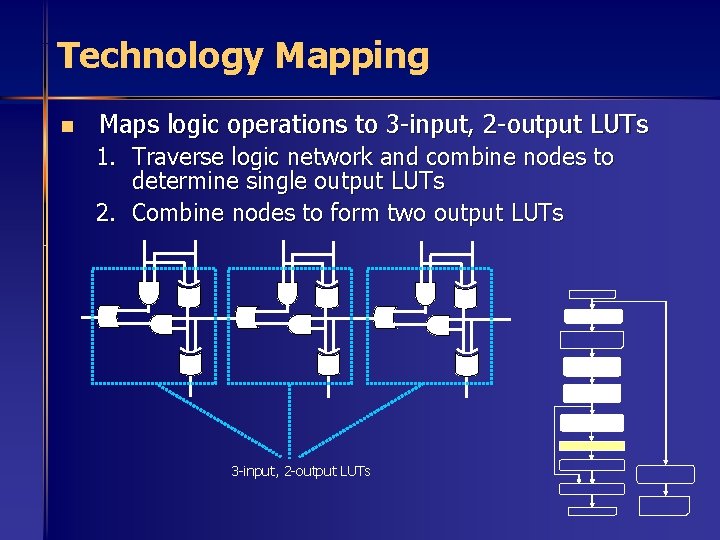

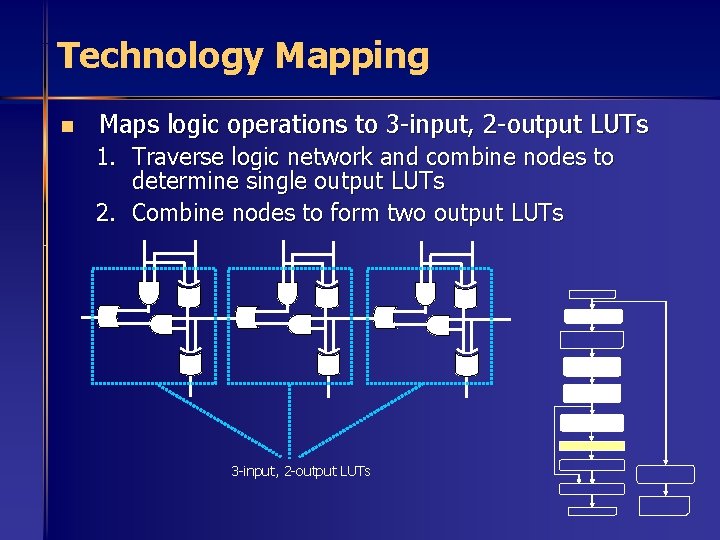

Technology Mapping

- Maps logic operations to 3-input, 2-output LUTs
  1. Traverse logic network and combine nodes to determine single-output LUTs
  2. Combine nodes to form two-output LUTs

[Figure: 3-input, 2-output LUTs]
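Step 2 can be sketched as a greedy pairing pass: two single-output LUTs may share one physical LUT when the union of their inputs still fits in three inputs. The Python below is a minimal sketch under that assumption; the pairing order, the data layout, and the function name are illustrative and not taken from the slides.

```python
def pair_single_output_luts(luts, max_inputs=3):
    """Greedily pair single-output LUTs whose combined inputs fit one LUT.

    luts: dict mapping an output name to the set of its input names.
    Returns a list of (outputs, inputs) tuples, one per physical LUT.
    """
    unplaced = sorted(luts)  # deterministic order for the sketch
    packed = []
    while unplaced:
        first = unplaced.pop(0)
        inputs = set(luts[first])
        partner = None
        for cand in unplaced:
            if len(inputs | luts[cand]) <= max_inputs:
                partner = cand
                break
        if partner is None:
            packed.append(((first,), frozenset(inputs)))
        else:
            unplaced.remove(partner)
            packed.append(
                ((first, partner), frozenset(inputs | luts[partner]))
            )
    return packed
```

A first-fit pass like this is cheap, which suits the lean on-chip setting, though it can miss pairings that a global matching would find.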

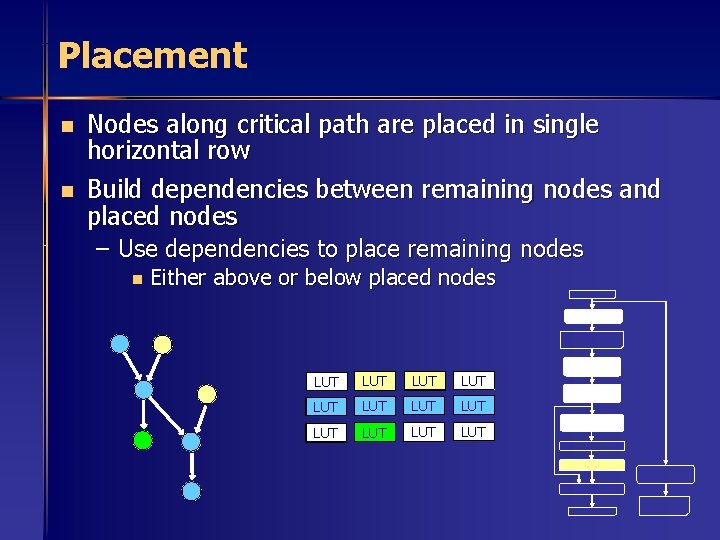

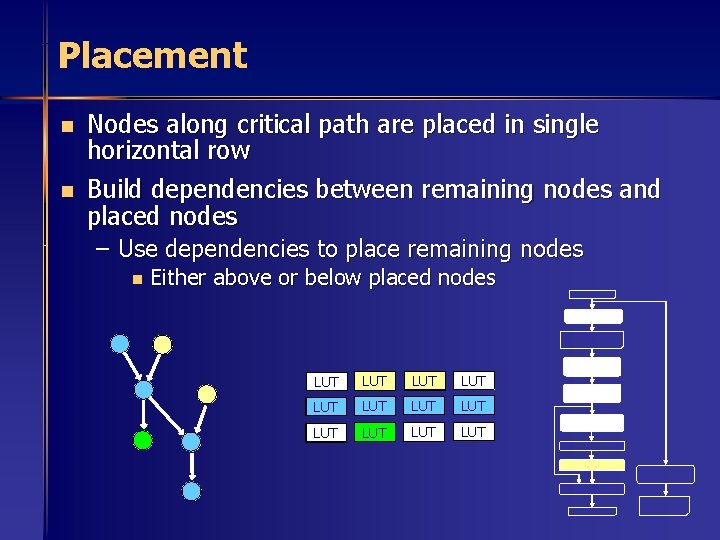

Placement

- Nodes along critical path are placed in a single horizontal row
- Build dependencies between remaining nodes and placed nodes
  - Use dependencies to place remaining nodes
    - Either above or below placed nodes

[Figure: row of LUTs]
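A minimal Python sketch of this placement idea follows. It assumes unit delay per node when finding the critical path, places that path in row 0, and then puts each remaining node in the nearest free slot above or below a placed neighbor. The tie-breaking rules, the coordinate scheme, and the function name are assumptions for illustration.

```python
import itertools


def place(deps):
    """deps: node -> list of nodes it depends on (a DAG).

    Returns node -> (row, col), with the critical path in row 0.
    """
    depth = {}

    def longest(n):
        # Length of the longest dependency chain ending at n (unit delays).
        if n not in depth:
            depth[n] = 1 + max((longest(p) for p in deps[n]), default=0)
        return depth[n]

    for n in deps:
        longest(n)

    # Walk back from the deepest node to recover one critical path.
    node = max(sorted(deps), key=depth.get)
    path = [node]
    while deps[node]:
        node = max(sorted(deps[node]), key=depth.get)
        path.append(node)
    path.reverse()
    pos = {n: (0, col) for col, n in enumerate(path)}
    used = set(pos.values())

    # Undirected neighbor map: dependencies and dependents.
    neighbors = {n: set(deps[n]) for n in deps}
    for n, preds in deps.items():
        for p in preds:
            neighbors[p].add(n)

    pending = [n for n in sorted(deps) if n not in pos]
    while pending:
        ready = [n for n in pending if any(m in pos for m in neighbors[n])]
        if not ready:  # node unconnected to anything placed so far
            ready = pending[:1]
        for n in ready:
            anchors = [m for m in sorted(neighbors[n]) if m in pos]
            col = pos[anchors[0]][1] if anchors else 0
            # Nearest free slot in that column, trying above before below.
            slot = next(
                (r, col)
                for k in itertools.count(1)
                for r in (k, -k)
                if (r, col) not in used
            )
            pos[n] = slot
            used.add(slot)
        pending = [n for n in pending if n not in pos]
    return pos
```

Keeping the critical path in one row means its nets only need horizontal hops, which is the property the slide's rule appears to be after.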

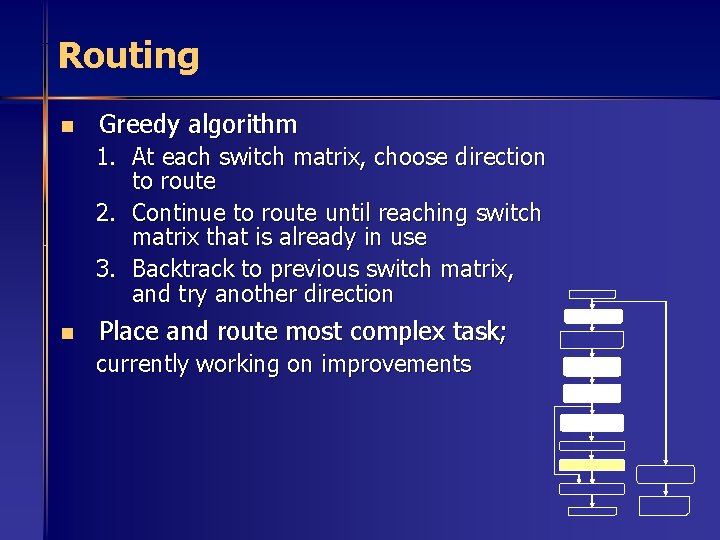

Routing

- Greedy algorithm
  1. At each switch matrix, choose direction to route
  2. Continue to route until reaching a switch matrix that is already in use
  3. Backtrack to previous switch matrix, and try another direction
- Place and route is the most complex task; currently working on improvements
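The three steps above can be sketched as a depth-first search over a grid of switch matrices. This Python version simplifies heavily: each switch matrix is treated as either free or fully in use (ignoring individual channels), and "choose direction" is interpreted as trying the neighbor nearest the destination first. Both choices, and the function name, are assumptions.

```python
def route(src, dst, width, height, used):
    """Route from src to dst on a width x height grid of switch matrices.

    used: set of (x, y) switch matrices already taken; updated on success.
    Returns the list of coordinates on the route, or None if none is found.
    """
    path = [src]
    visited = {src}

    def step(cur):
        if cur == dst:
            return True
        x, y = cur
        # Greedy choice: try the neighbor closest to the destination first.
        options = sorted(
            [(x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)],
            key=lambda p: abs(p[0] - dst[0]) + abs(p[1] - dst[1]),
        )
        for nxt in options:
            nx, ny = nxt
            if not (0 <= nx < width and 0 <= ny < height):
                continue
            if nxt in used or nxt in visited:
                continue  # already in use: try another direction
            visited.add(nxt)
            path.append(nxt)
            if step(nxt):
                return True
            path.pop()  # backtrack to the previous switch matrix
        return False

    if not step(src):
        return None
    used.update(path)
    return path
```

With `used = {(1, 0)}` on a 3 x 2 grid, a route from `(0, 0)` to `(2, 0)` detours through the second row instead of crossing the occupied switch matrix.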

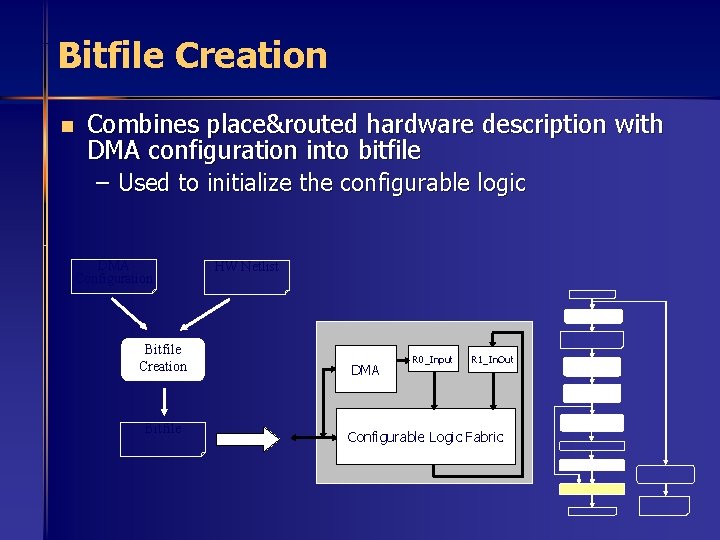

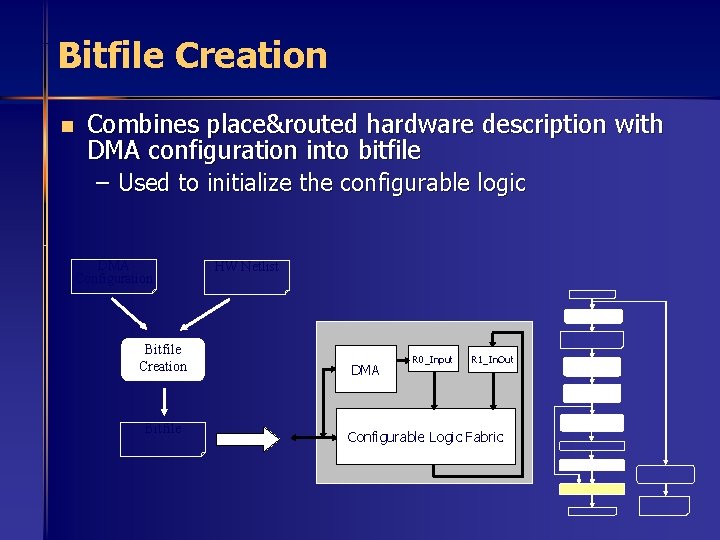

Bitfile Creation

- Combines placed-and-routed hardware description with DMA configuration into a bitfile
  - Used to initialize the configurable logic

[Figure: the DMA Configuration and HW Netlist feed Bitfile Creation, which produces the Bitfile; target shown as DMA, R0_Input, R1_InOut, Configurable Logic Fabric]

Binary Modification

- Updates the application binary in order to utilize the new hardware
  - Loop replaced with jump to hw initialization code
  - Wisconsin Architectural Research Tool Set (WARTS)
    - EEL (Executable Editing Library)
  - We assume memory is RAM or programmable ROM

- Original code
  - loop: Load r2, 0(r1)
  - Add r1, 1
  - Add r3, r2
  - Blt r1, 8, loop
  - after_loop: ...
- Modified code
  - loop: Jump hw_init
  - ...
  - after_loop: ...
- hw_init:
  1. Initialize HW registers
  2. Enable HW
  3. Shut down processor (woken up by HW interrupt)
  4. Store any results
  5. Jump to after_loop
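The patching step can be pictured with a toy model of the binary as a list of labeled instructions. This Python sketch overwrites only the first instruction of the loop with a jump and appends a stub, leaving the rest of the loop body in place so that no addresses shift. It does not use EEL; the data layout, the function name, and the stub text are hypothetical.

```python
def patch_loop(program, loop_label, after_label, hw_stub):
    """Redirect a loop to a hardware initialization stub.

    program: list of (label or None, instruction string) tuples.
    hw_stub: list of instruction strings for the stub body.
    Returns a new program; the input list is not modified.
    """
    patched = []
    for label, instr in program:
        if label == loop_label:
            # Overwrite the loop's first instruction in place.
            patched.append((label, "Jump hw_init"))
        else:
            patched.append((label, instr))
    # Append the stub after the existing code.
    patched.append(("hw_init", hw_stub[0]))
    patched.extend((None, s) for s in hw_stub[1:])
    patched.append((None, f"Jump {after_label}"))
    return patched
```

Overwriting a single instruction keeps the edit small, which is presumably why the slide requires the program memory to be RAM or programmable ROM.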

Tool Statistics

- Executed on SimpleScalar
  - Similar to a MIPS instruction set
  - Used 60 MHz clock (like Triscend A7 device)
- Statistics
  - Total run time of only 1.09 seconds
  - Requires less than ½ megabyte of RAM
  - Code size much smaller than standard synthesis tools

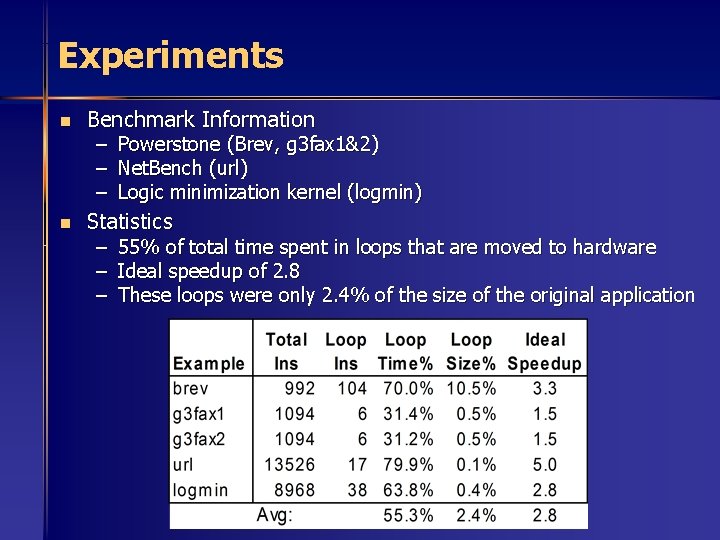

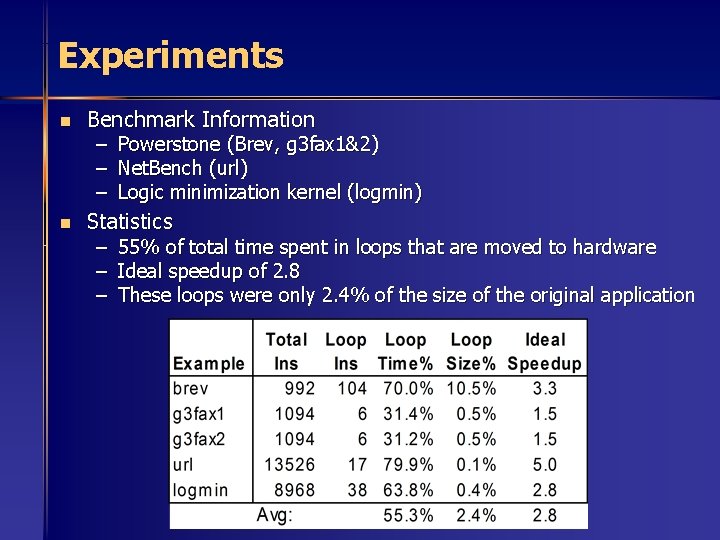

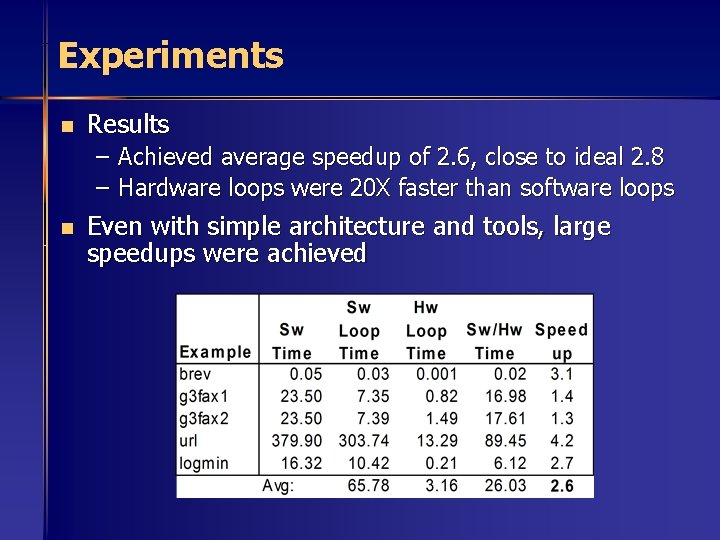

Experiments

- Benchmark information
  - Powerstone (Brev, g3fax1&2)
  - NetBench (url)
  - Logic minimization kernel (logmin)
- Statistics
  - 55% of total time spent in loops that are moved to hardware
  - Ideal speedup of 2.8
  - These loops were only 2.4% of the size of the original application

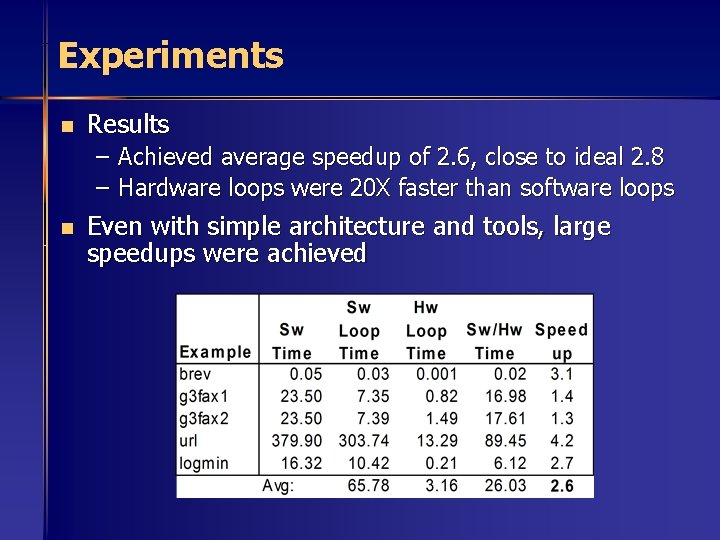

Experiments

- Results
  - Achieved average speedup of 2.6, close to ideal 2.8
  - Hardware loops were 20X faster than software loops
- Even with simple architecture and tools, large speedups were achieved
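For readers who want to relate the loop-time fraction to overall speedup, Amdahl's law gives the usual back-of-the-envelope estimate. The Python sketch below is a generic formula, not the authors' measurement method. Plugging in the aggregate figures (55% of time in loops, 20X faster loops) gives about 2.1, and the ideal case gives about 2.2, so the reported averages of 2.6 and 2.8 are presumably means of per-benchmark speedups rather than the formula applied to averaged inputs.

```python
def amdahl_speedup(fraction_in_hw, hw_speedup):
    """Overall speedup when a fraction of run time is accelerated.

    fraction_in_hw: share of original run time spent in the moved loops.
    hw_speedup: how many times faster those loops run in hardware.
    """
    return 1.0 / ((1.0 - fraction_in_hw) + fraction_in_hw / hw_speedup)


# Aggregate figures from the slides, used here only as sample inputs.
estimate = amdahl_speedup(0.55, 20)          # about 2.09
ideal = amdahl_speedup(0.55, float("inf"))   # about 2.22
```

Averaging per-benchmark speedups weights loop-heavy benchmarks more than averaging their loop fractions does, which is consistent with the reported numbers being higher.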

Conclusion

- Dynamic hardware/software partitioning has advantages over other partitioning approaches
  - Completely transparent
  - Designers get performance/energy benefits of hw/sw partitioning by simply writing software
  - Quality likely not as good as desktop CAD for some applications, so most suitable when transparency is critical (very often!)
- Achieved average speedup of 2.6
  - Very close to ideal speedup of 2.8
- Future work
  - More complex configurable logic fabric
    - Designed in close conjunction with on-chip CAD tools
    - Sequential logic and increased inputs/outputs
  - Support larger hardware regions, not just simple loops
  - Improved algorithms (especially place and route)
  - Handle more complex memory access patterns