
Hardware/Software Partitioning

Greg Stitt, ECE Department, University of Florida

Introduction
- FPGAs are often much faster than software
- However, most real designs that use FPGAs still include microprocessors
  - Why? It is difficult and inefficient to implement an entire application as a custom circuit on an FPGA
  - FPGAs typically implement "kernels" efficiently
  - Certain regions can afford to be slow
- Common case
  - Implement performance-critical code on the FPGA
  - Implement everything else on microprocessors

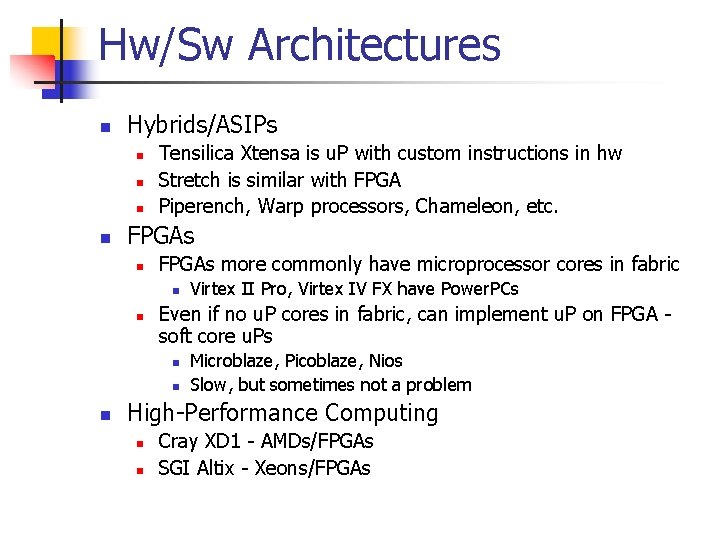

Hw/Sw Architectures
- Hybrids/ASIPs
  - Tensilica Xtensa is a µP with custom instructions in hw
  - Stretch is similar, but with an FPGA
  - PipeRench, Warp processors, Chameleon, etc.
- FPGAs
  - FPGAs more commonly have microprocessor cores in the fabric
    - Virtex II Pro and Virtex IV FX have PowerPCs
  - Even if there are no µP cores in the fabric, a µP can be implemented on the FPGA as a soft core
    - MicroBlaze, PicoBlaze, Nios
    - Slow, but sometimes that is not a problem
- High-Performance Computing
  - Cray XD1: AMD Opterons + FPGAs
  - SGI Altix: Xeons + FPGAs

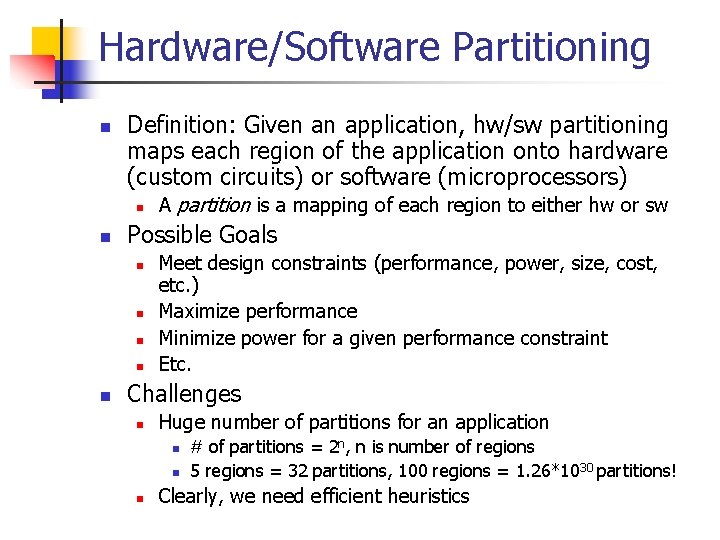

Hardware/Software Partitioning
- Definition: Given an application, hw/sw partitioning maps each region of the application onto hardware (custom circuits) or software (microprocessors)
  - A partition is a mapping of each region to either hw or sw
- Possible goals
  - Meet design constraints (performance, power, size, cost, etc.)
  - Maximize performance
  - Minimize power for a given performance constraint
  - Etc.
- Challenges
  - Huge number of partitions for an application
    - Number of partitions $= 2^n$, where $n$ is the number of regions
    - 5 regions = 32 partitions; 100 regions $\approx 1.26 \times 10^{30}$ partitions!
  - Clearly, we need efficient heuristics
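To make the $2^n$ growth concrete, here is a minimal brute-force sketch in C. The helper names are hypothetical. It encodes a partition as an $n$-bit mask, where bit $i$ set means region $i$ goes to hw, and visits every mask. It is only usable for tiny $n$: a 64-bit mask cannot even represent 100 regions, and at one partition per nanosecond, $2^{100}$ partitions would take roughly $4 \times 10^{13}$ years.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>

/* Count partitions by visiting every n-bit mask.
   Bit i set = region i mapped to hw; bit i clear = region i stays in sw. */
uint64_t count_partitions(unsigned n_regions)
{
    assert(n_regions < 64); /* the mask must fit in a uint64_t */
    uint64_t count = 0;
    for (uint64_t mask = 0; mask < ((uint64_t)1 << n_regions); mask++)
        count++; /* a real partitioner would evaluate this partition here */
    return count;
}

/* Print the mapping encoded by one mask, e.g. "hw sw hw" for mask 0x5, n = 3. */
void print_partition(uint64_t mask, unsigned n_regions)
{
    for (unsigned i = 0; i < n_regions; i++)
        printf("%s%s", ((mask >> i) & 1) ? "hw" : "sw",
               (i + 1 < n_regions) ? " " : "\n");
}
```

`count_partitions(5)` returns 32, matching the 5-region case above.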

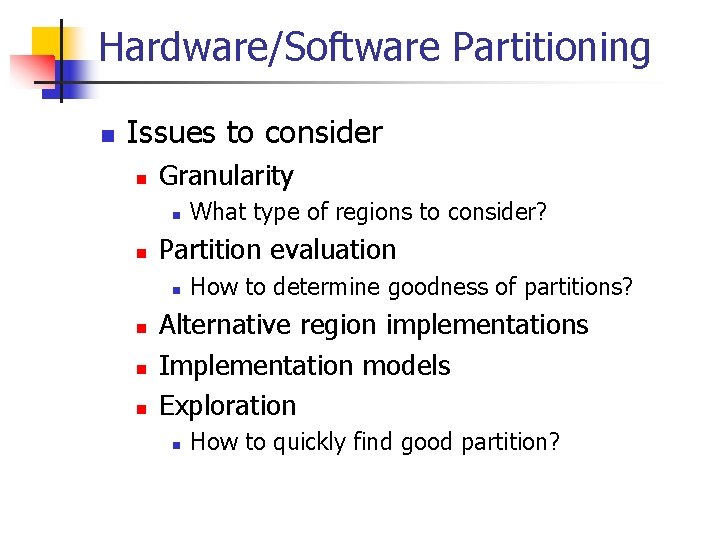

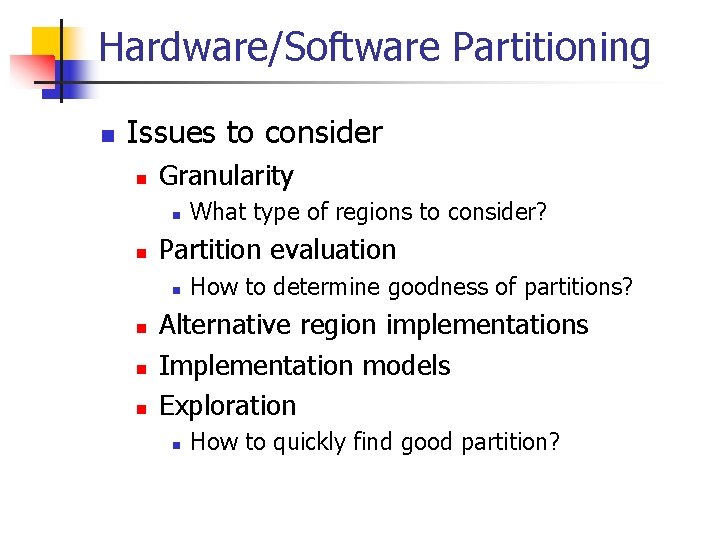

Hardware/Software Partitioning
- Issues to consider
  - Granularity
    - What type of regions to consider?
  - Partition evaluation
    - How to determine the goodness of partitions?
  - Alternative region implementations
  - Implementation models
  - Exploration
    - How to quickly find a good partition?

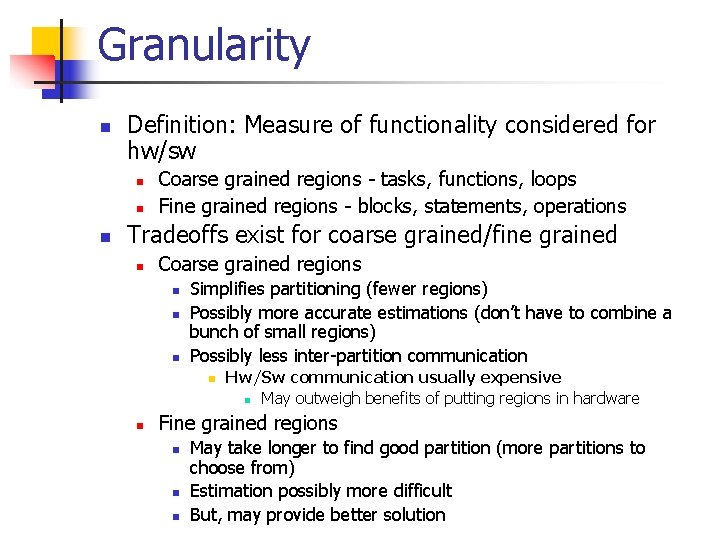

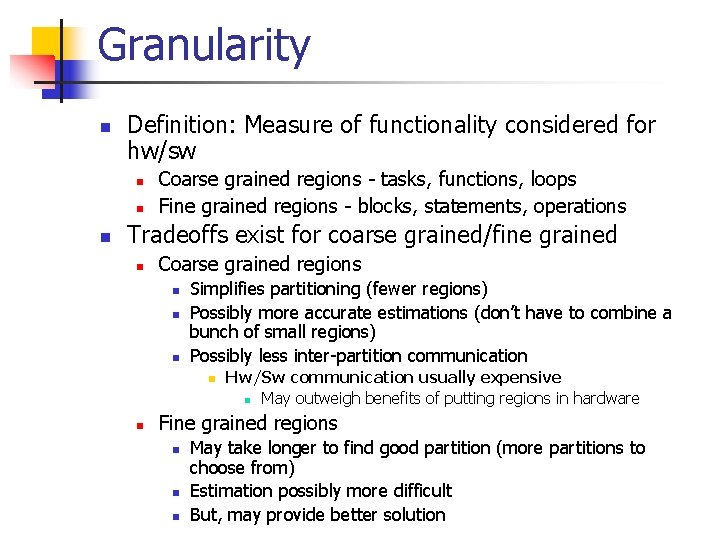

Granularity
- Definition: Measure of functionality considered for hw/sw
  - Coarse-grained regions: tasks, functions, loops
  - Fine-grained regions: blocks, statements, operations
- Tradeoffs exist between coarse-grained and fine-grained regions
- Coarse-grained regions
  - Simplify partitioning (fewer regions)
  - Possibly more accurate estimations (don't have to combine many small regions)
  - Possibly less inter-partition communication
    - Hw/sw communication is usually expensive
    - May outweigh the benefits of putting regions in hardware
- Fine-grained regions
  - May take longer to find a good partition (more partitions to choose from)
  - Estimation possibly more difficult
  - But may provide a better solution

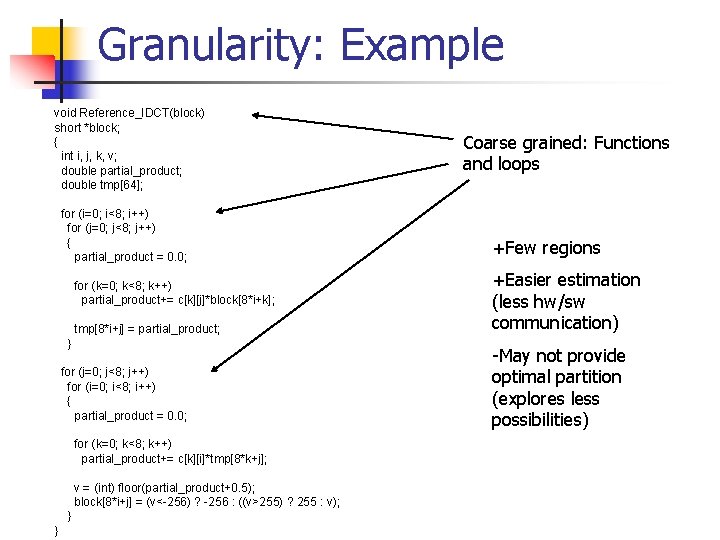

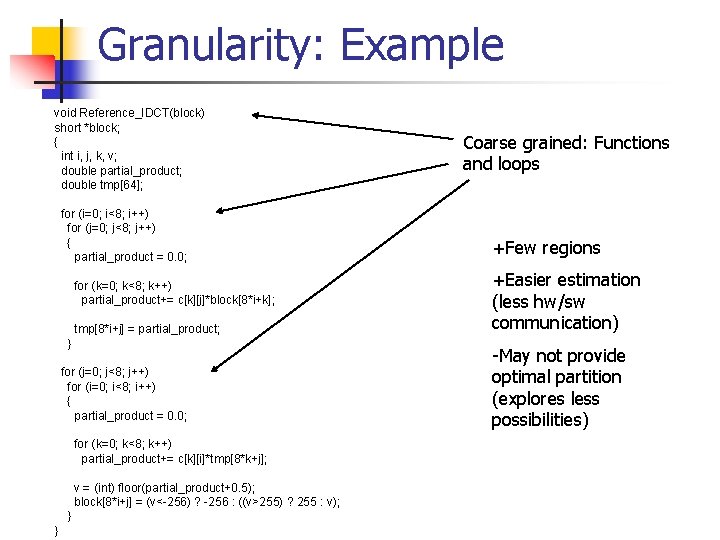

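Granularity: Example

```c
#include <math.h>

/* 8x8 IDCT cosine coefficient table; assumed to be filled in before
   Reference_IDCT is called (the initialization code is not shown). */
static double c[8][8];

void Reference_IDCT(short *block)
{
    int i, j, k, v;
    double partial_product;
    double tmp[64];

    /* Pass 1: 1-D transform of each row of block, results stored in tmp */
    for (i = 0; i < 8; i++)
        for (j = 0; j < 8; j++) {
            partial_product = 0.0;
            for (k = 0; k < 8; k++)
                partial_product += c[k][j] * block[8*i + k];
            tmp[8*i + j] = partial_product;
        }

    /* Pass 2: 1-D transform of each column of tmp, then round and
       clip the result to [-256, 255] */
    for (j = 0; j < 8; j++)
        for (i = 0; i < 8; i++) {
            partial_product = 0.0;
            for (k = 0; k < 8; k++)
                partial_product += c[k][i] * tmp[8*k + j];
            v = (int) floor(partial_product + 0.5);
            block[8*i + j] = (v < -256) ? -256 : ((v > 255) ? 255 : v);
        }
}
```

Coarse grained: functions and loops
- Pro: Few regions
- Pro: Easier estimation (less hw/sw communication)
- Con: May not provide the optimal partition (explores fewer possibilities)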

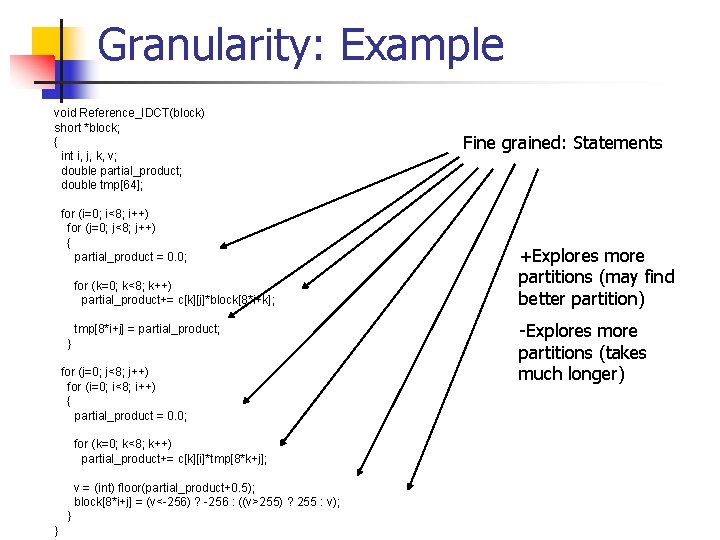

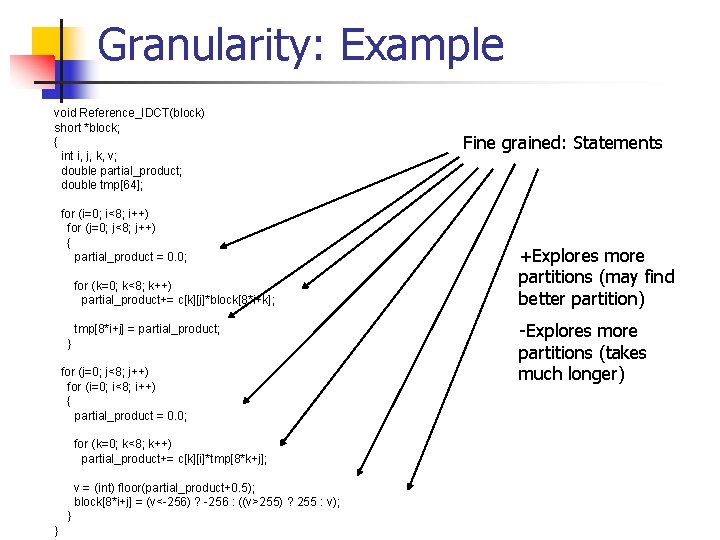

Granularity: Example (same Reference_IDCT code as above)

Fine grained: statements
- Pro: Explores more partitions (may find a better partition)
- Con: Explores more partitions (takes much longer)

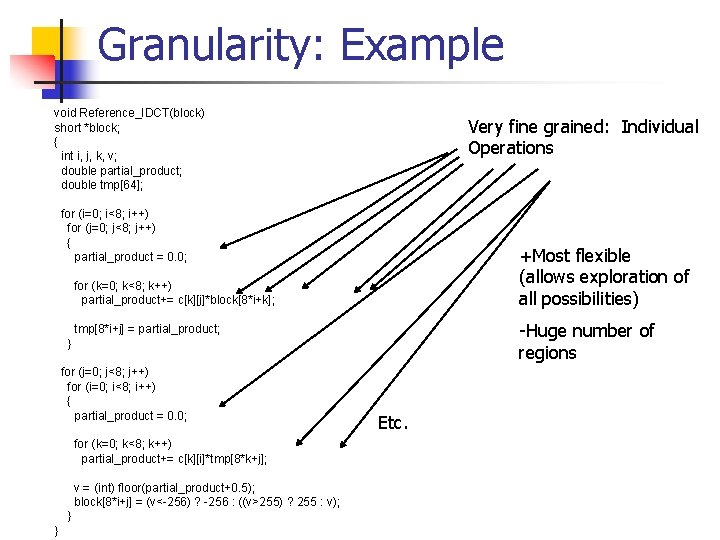

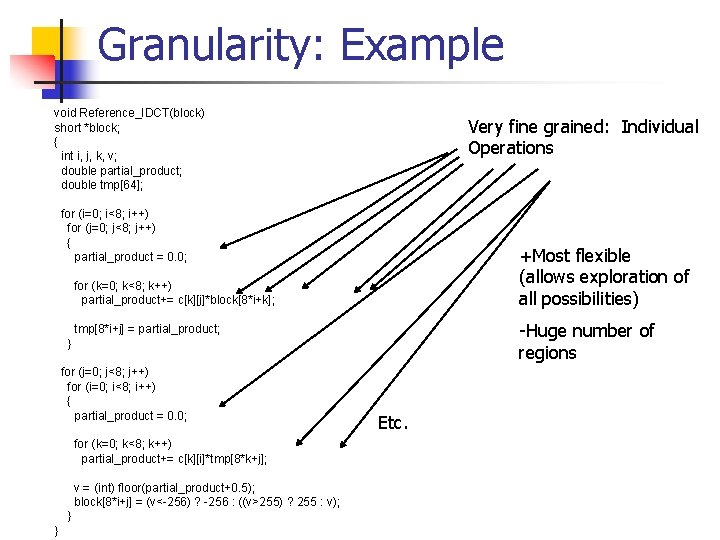

Granularity: Example (same Reference_IDCT code as above)

Very fine grained: individual operations
- Pro: Most flexible (allows exploration of all possibilities)
- Con: Huge number of regions

Etc.

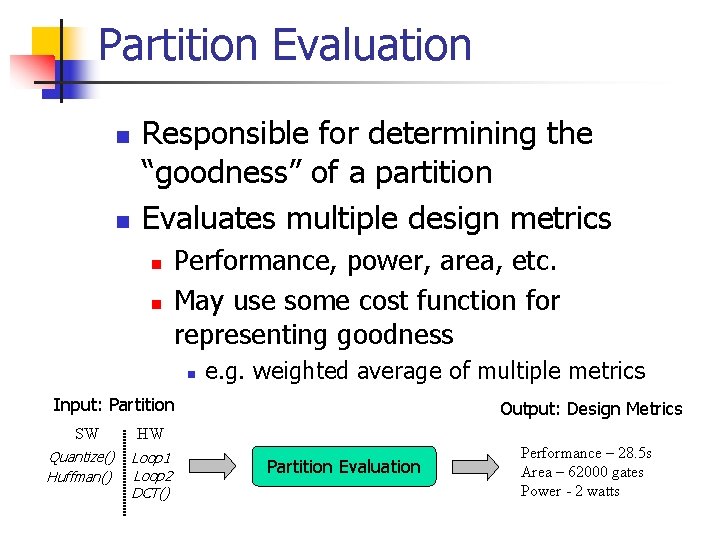

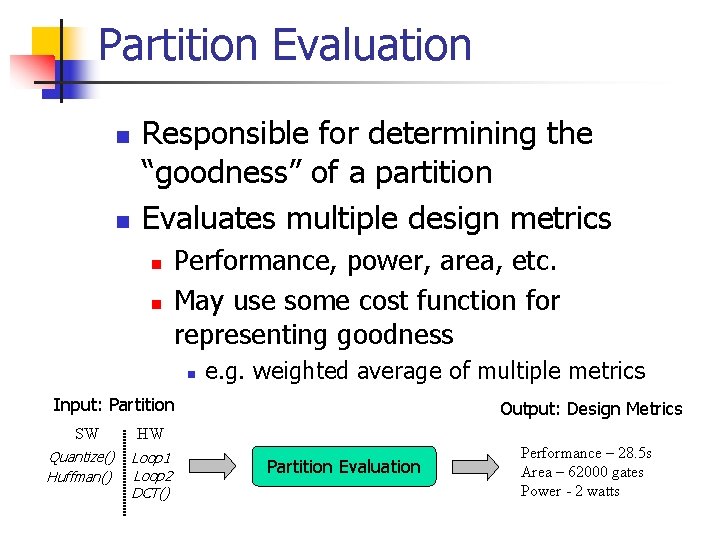

Partition Evaluation
- Responsible for determining the "goodness" of a partition
- Evaluates multiple design metrics
  - Performance, power, area, etc.
- May use a cost function to represent goodness
  - e.g., a weighted average of multiple metrics

Figure: Input partition (SW: Quantize(), Huffman(); HW: Loop 1, Loop 2, DCT()) → Partition Evaluation → Output design metrics (Performance: 28.5 s; Area: 62,000 gates; Power: 2 W)
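One way to realize the weighted-average cost function is sketched below in C. The struct and function names, the weights, and the reference values used for normalization are all hypothetical. Each metric is divided by a reference value so that seconds, gates, and watts are comparable, and then the results are averaged with the given weights. Lower cost means a better partition.

```c
/* Design metrics produced by partition evaluation. */
typedef struct {
    double time_s;     /* execution time in seconds */
    double area_gates; /* hardware area in gates */
    double power_w;    /* power in watts */
} Metrics;

/* Hypothetical weights and normalization references; a real flow would
   derive these from the design constraints. */
typedef struct {
    double w_time, w_area, w_power;
    double ref_time_s, ref_area_gates, ref_power_w;
} CostWeights;

/* Weighted average of normalized metrics (assumes positive weights). */
double partition_cost(Metrics m, CostWeights w)
{
    double sum_w = w.w_time + w.w_area + w.w_power;
    return (w.w_time  * m.time_s     / w.ref_time_s +
            w.w_area  * m.area_gates / w.ref_area_gates +
            w.w_power * m.power_w    / w.ref_power_w) / sum_w;
}
```

For example, if the references are set to the metrics in the figure, that partition scores exactly 1.0. A partition that halves execution time at the same area and power would score 0.75 with time weighted 2 and area and power weighted 1 each.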

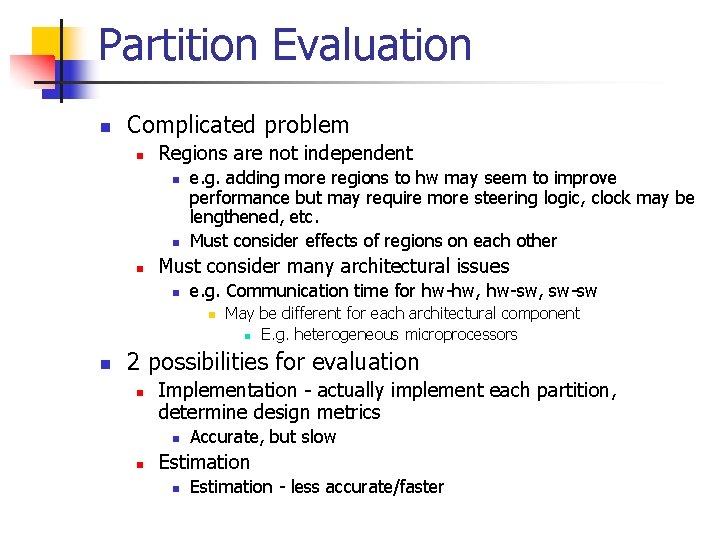

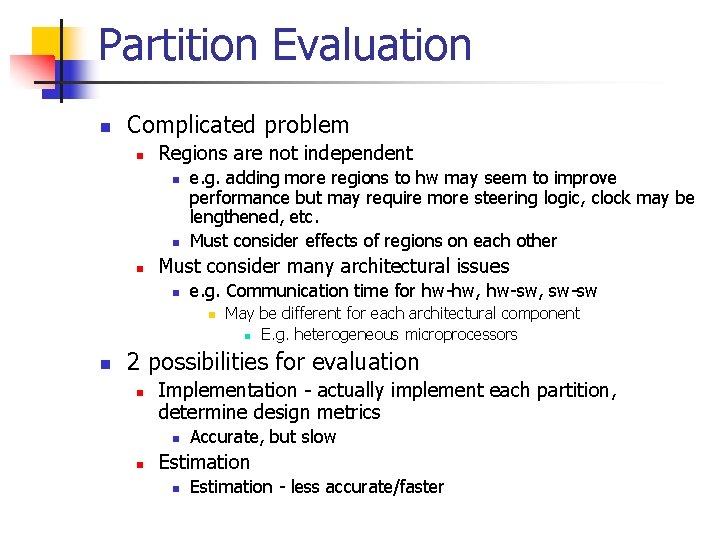

Partition Evaluation
- Complicated problem
- Regions are not independent
  - e.g., adding more regions to hw may seem to improve performance, but may require more steering logic, may lengthen the clock period, etc.
  - Must consider the effects of regions on each other
- Must consider many architectural issues
  - e.g., communication time for hw-hw, hw-sw, and sw-sw
  - May be different for each architectural component
    - e.g., heterogeneous microprocessors
- Two possibilities for evaluation
  - Implementation: actually implement each partition and determine its design metrics
    - Accurate, but slow
  - Estimation
    - Less accurate, but faster

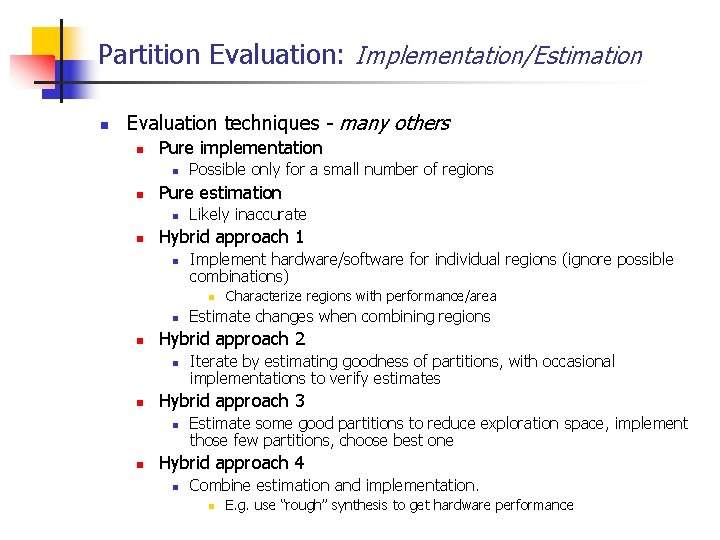

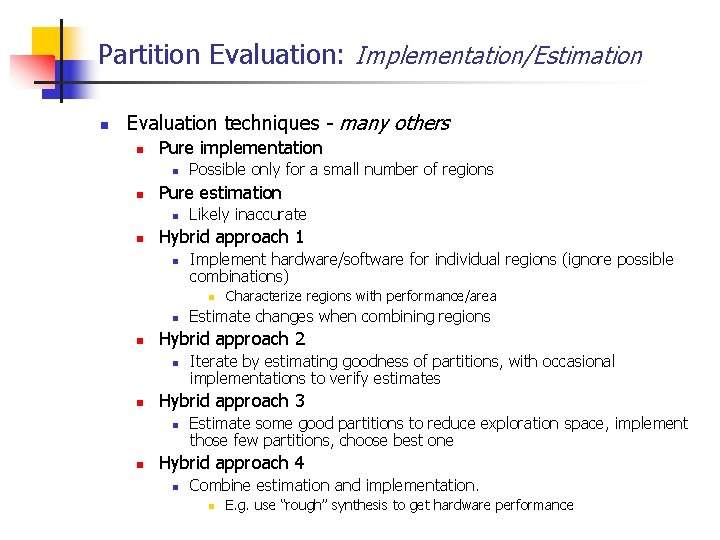

Partition Evaluation: Implementation/Estimation
- Evaluation techniques (many others exist)
  - Pure implementation
    - Possible only for a small number of regions
  - Pure estimation
    - Likely inaccurate
  - Hybrid approach 1
    - Implement hardware/software for individual regions (ignore possible combinations)
    - Characterize regions with performance/area
    - Estimate changes when combining regions
  - Hybrid approach 2
    - Iterate by estimating the goodness of partitions, with occasional implementations to verify estimates
  - Hybrid approach 3
    - Estimate some good partitions to reduce the exploration space, implement those few partitions, and choose the best one
  - Hybrid approach 4
    - Combine estimation and implementation
    - e.g., use "rough" synthesis to get hardware performance

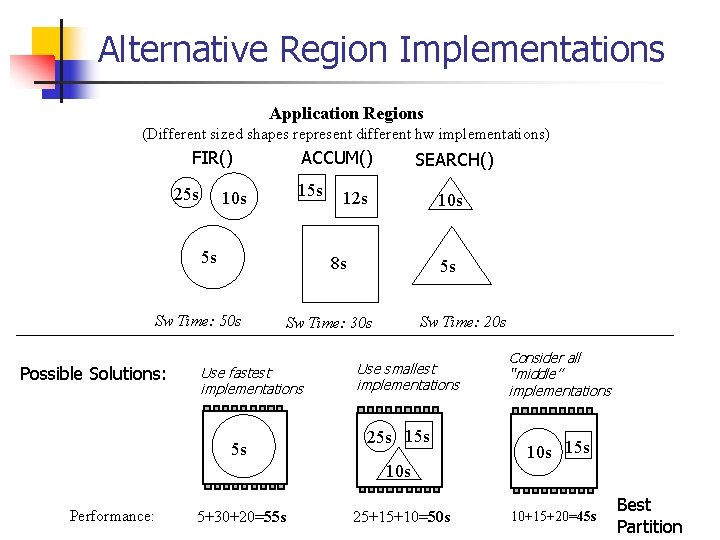

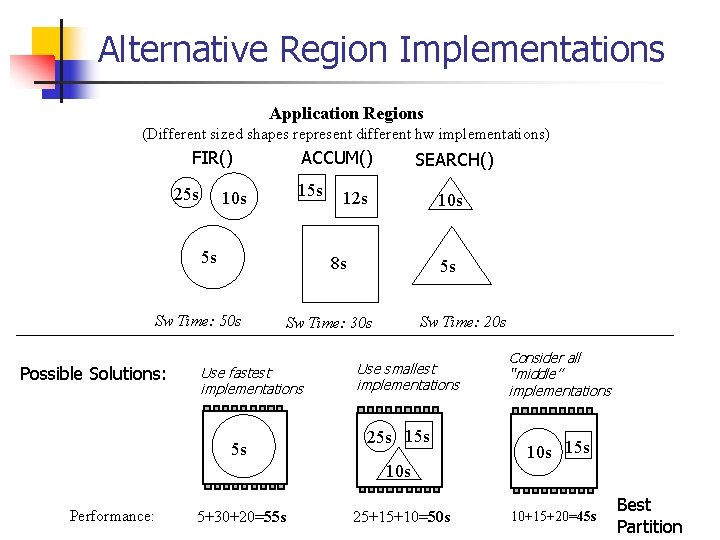

Alternative Region Implementations

Figure: application regions FIR(), ACCUM(), and SEARCH() (software-only times in the figure: 50 s, 30 s, 20 s); different sized shapes represent different hw implementations of each region.

Possible solutions (performance):
- Use fastest implementations: 5 + 30 + 20 = 55 s
- Use smallest implementations: 25 + 15 + 10 = 50 s
- Consider all "middle" implementations: 10 + 15 + 20 = 45 s (best partition)

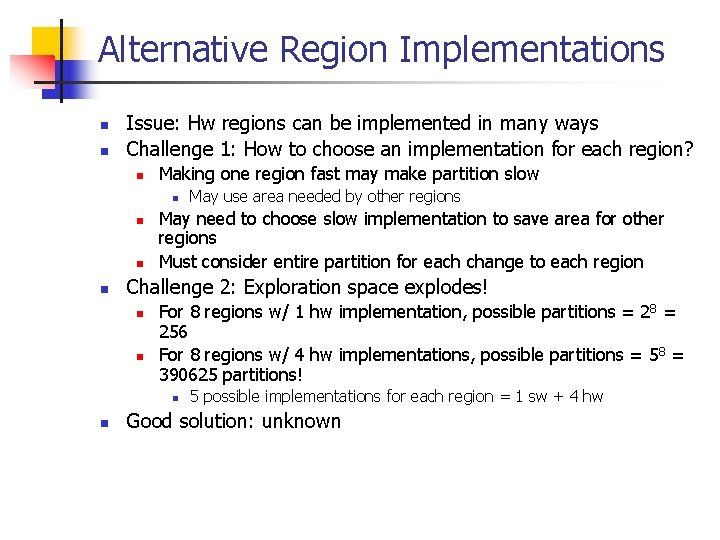

Alternative Region Implementations
- Issue: Hw regions can be implemented in many ways
- Challenge 1: How to choose an implementation for each region?
  - Making one region fast may make the partition slow
    - May use area needed by other regions
  - May need to choose a slow implementation to save area for other regions
  - Must consider the entire partition for each change to each region
- Challenge 2: The exploration space explodes!
  - For 8 regions with 1 hw implementation each, possible partitions $= 2^8 = 256$
  - For 8 regions with 4 hw implementations each, possible partitions $= 5^8 = 390{,}625$!
    - 5 possible implementations for each region = 1 sw + 4 hw
- Good solution: unknown
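Both challenges can be seen in a small exhaustive search, sketched here in C under the simple additive model (partition time = sum of chosen region times, partition area = sum of chosen hw areas). The names and the area values in the usage example are hypothetical. Each region lists one sw implementation (area 0) plus its hw alternatives. The search steps through every combination like an odometer, which is exactly the $\prod_i (1 + k_i)$ space that explodes.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_IMPLS   5   /* 1 sw + up to 4 hw implementations */
#define MAX_REGIONS 16

typedef struct { double time_s; int area; } Impl;

/* impls[0] is the sw implementation (area 0); impls[1..] are hw versions. */
typedef struct {
    int n_impls;
    Impl impls[MAX_IMPLS];
} MultiImplRegion;

/* Size of the exploration space: product of (1 + k_i) over all regions. */
unsigned long long count_choices(const MultiImplRegion *r, size_t n)
{
    unsigned long long total = 1;
    for (size_t i = 0; i < n; i++)
        total *= (unsigned long long)r[i].n_impls;
    return total;
}

/* Try every combination of implementations (odometer over choice[]) and keep
   the fastest one whose total hw area fits in area_budget.
   Writes the chosen implementation index per region into best[]. */
double best_partition(const MultiImplRegion *r, size_t n, int area_budget, int *best)
{
    assert(n <= MAX_REGIONS);
    int choice[MAX_REGIONS] = {0};
    double best_time = -1.0;
    for (;;) {
        double t = 0.0;
        int area = 0;
        for (size_t i = 0; i < n; i++) {
            t += r[i].impls[choice[i]].time_s;
            area += r[i].impls[choice[i]].area;
        }
        if (area <= area_budget && (best_time < 0.0 || t < best_time)) {
            best_time = t;
            for (size_t i = 0; i < n; i++) best[i] = choice[i];
        }
        /* advance to the next combination; stop once every digit wraps */
        size_t i = 0;
        while (i < n && ++choice[i] == r[i].n_impls)
            choice[i++] = 0;
        if (i == n) break;
    }
    return best_time;
}
```

As an illustration with made-up areas, take FIR() with options {50 s/0, 25 s/2, 10 s/4, 5 s/10}, ACCUM() with {30 s/0, 15 s/3, 8 s/8}, SEARCH() with {20 s/0, 10 s/4}, and an area budget of 10. The search examines 24 combinations and returns the 45 s partition (FIR in its 10 s version, ACCUM in its 15 s version, SEARCH in sw). This approach is only practical for very small inputs.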

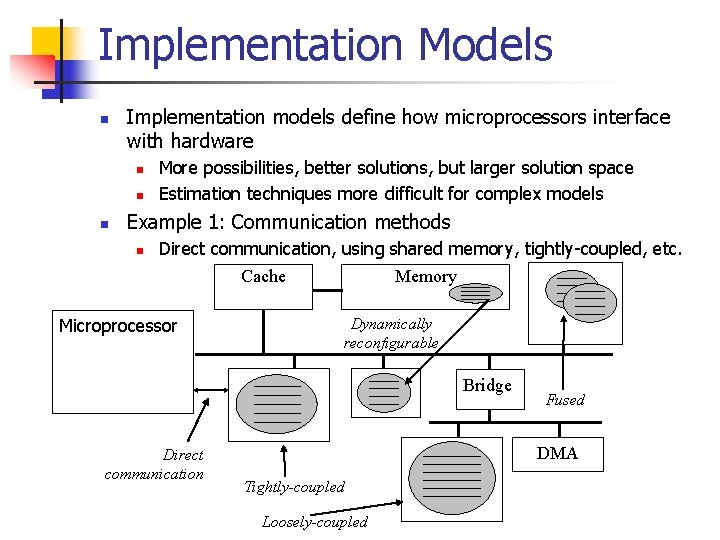

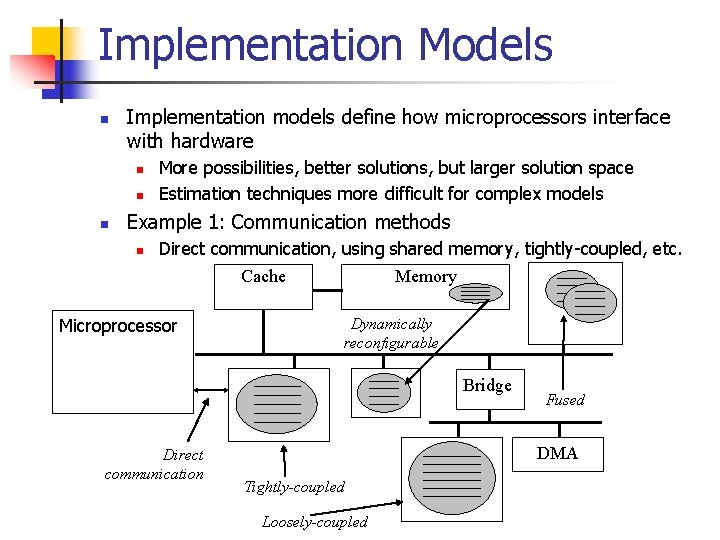

Implementation Models
- Implementation models define how microprocessors interface with hardware
  - More possibilities give better solutions, but a larger solution space
  - Estimation techniques are more difficult for complex models
- Example 1: Communication methods
  - Direct communication, shared memory, tightly coupled, etc.

Figure: microprocessor/cache/memory/hardware organizations: direct communication, bridge, DMA, fused, tightly coupled, loosely coupled, dynamically reconfigurable

Implementation Models
- Example 2: Execution models
  - Mutually exclusive: FPGA and µP never execute simultaneously
    - May be appropriate for sequential applications
    - Advantage: easier estimations
    - Disadvantage: decreased performance
  - Parallel
    - Advantage: improved performance
    - Disadvantage: estimates are much more difficult
      - Must take into account memory contention, cache coherency, synchronization, etc.
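The performance gap between the two execution models can be approximated with idealized end-to-end times. This C sketch uses hypothetical function names and assumes one sw portion and one independent hw portion that can overlap perfectly. It deliberately ignores memory contention, cache coherency, and synchronization, and modeling those effects is what makes real parallel estimates hard.

```c
/* Mutually exclusive: uP and FPGA take turns, so their times add. */
double mutually_exclusive_time(double sw_s, double hw_s, double comm_s)
{
    return sw_s + hw_s + comm_s;
}

/* Parallel (idealized): uP and FPGA overlap, so the slower side dominates. */
double parallel_time(double sw_s, double hw_s, double comm_s)
{
    return (sw_s > hw_s ? sw_s : hw_s) + comm_s;
}
```

With 10 s of sw work, 4 s of hw work, and 1 s of communication, the mutually exclusive model gives 15 s and the idealized parallel model gives 11 s.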

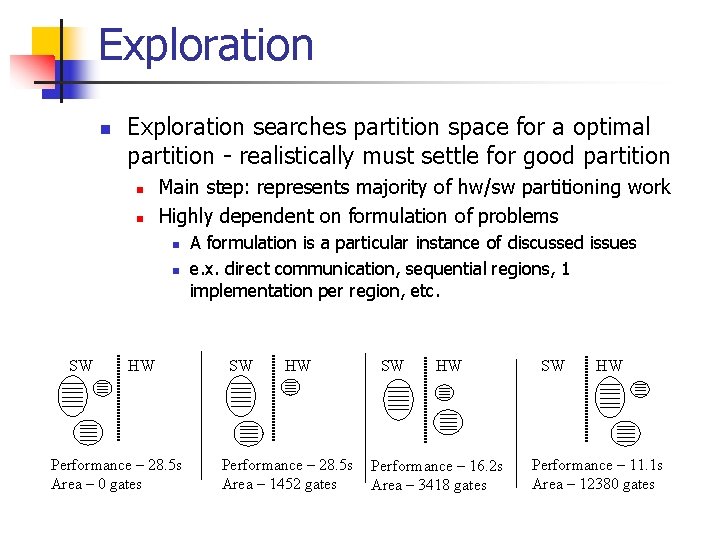

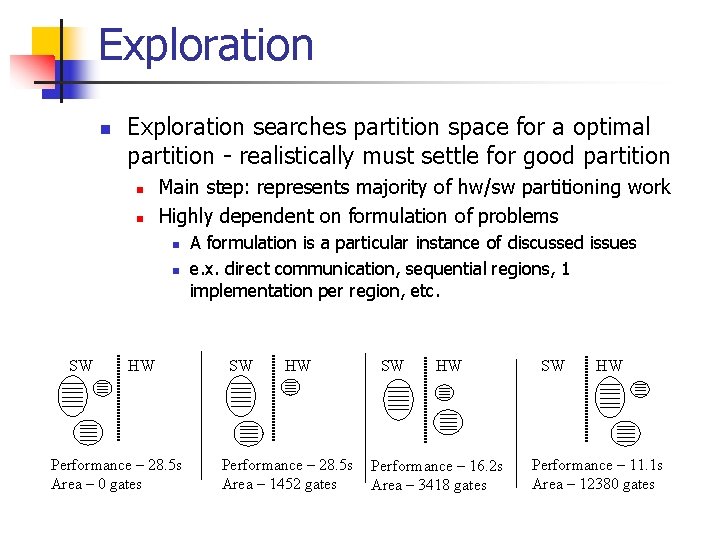

Exploration
- Exploration searches the partition space for an optimal partition; realistically, we must settle for a good partition
  - Main step: represents the majority of hw/sw partitioning work
- Highly dependent on the formulation of the problem
  - A formulation is a particular instance of the issues discussed, e.g., direct communication, sequential regions, 1 implementation per region, etc.

Figure: successive candidate partitions (SW/HW) and their metrics: 28.5 s / 0 gates; 28.5 s / 1,452 gates; 16.2 s / 3,418 gates; 11.1 s / 12,380 gates

Exploration
- Simple formulation: n regions, each with a sw time, a hw time, and a hw area
- Assumptions
  - Adding hw regions together doesn't change area/performance
    - Obviously not true
    - But may be good enough in some situations
  - Communication time of a region is the same for hw or sw
    - Often not true, but may be true if the µP and hw have the same interface to memory
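Under these assumptions, evaluating a partition is just a sum. The C sketch below captures the simple formulation; `SimpleRegion` and `simple_eval` are hypothetical names. Because communication is assumed to cost the same in hw or sw, it is left out entirely.

```c
#include <stddef.h>

/* Simple formulation: each region has a sw time, a hw time, and a hw area. */
typedef struct {
    double sw_time_s; /* time if the region runs on the uP */
    double hw_time_s; /* time if the region is moved to hw */
    int hw_area;      /* area of the region's single hw implementation */
} SimpleRegion;

/* Evaluate a partition: region times and areas simply add (no interaction
   between hw regions). in_hw[i] != 0 maps region i to hw.
   Returns total time; total hw area is written to *area_out. */
double simple_eval(const SimpleRegion *r, const int *in_hw, size_t n, int *area_out)
{
    double t = 0.0;
    int area = 0;
    for (size_t i = 0; i < n; i++) {
        if (in_hw[i]) {
            t += r[i].hw_time_s;
            area += r[i].hw_area;
        } else {
            t += r[i].sw_time_s;
        }
    }
    *area_out = area;
    return t;
}
```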

Exploration
- A solution for the simple formulation:
  - The problem is identical to the 0-1 knapsack problem
- 0-1 knapsack
  - Input: a knapsack with a weight capacity, and a set of items, each with a profit and a weight
  - Problem: Determine which items should be placed in the knapsack
  - Goal: maximize profit without violating the weight capacity
  - NP-complete
- Mapping to hw/sw partitioning
  - Knapsack is hw (the FPGA in our case)
  - Weight capacity is hw area
  - Items are program regions
  - Profit is the speedup (time saved) from implementing the region in hw
  - Weight is the area of the hw implementation
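When region areas are integers (e.g., gates), the simple formulation can be solved exactly for modest area budgets with the standard dynamic-programming algorithm for 0-1 knapsack. It runs in pseudo-polynomial $O(n \cdot A)$ time for an area budget $A$, so it does not contradict NP-completeness. The C sketch below is one way to write it. The names are hypothetical, and the profit of a region is taken to be its time saved, `sw_time_s - hw_time_s`.

```c
#include <stddef.h>
#include <stdlib.h>

typedef struct { double sw_time_s, hw_time_s; int hw_area; } SimpleRegion;

/* Exact 0-1 knapsack over integer area. Returns the maximum total time saved
   (-1.0 on allocation failure); in_hw[] receives the chosen regions. */
double knapsack_partition(const SimpleRegion *r, size_t n, int capacity, int *in_hw)
{
    size_t cols = (size_t)capacity + 1;
    /* best[i*cols + a] = max time saved using the first i regions within area a */
    double *best = calloc((n + 1) * cols, sizeof *best);
    if (!best) return -1.0;
    for (size_t i = 1; i <= n; i++) {
        double profit = r[i-1].sw_time_s - r[i-1].hw_time_s;
        int w = r[i-1].hw_area;
        for (int a = 0; a <= capacity; a++) {
            double skip = best[(i-1)*cols + a];
            double take = (w <= a && profit > 0.0)
                        ? best[(i-1)*cols + (size_t)(a - w)] + profit : skip;
            best[i*cols + a] = take > skip ? take : skip;
        }
    }
    /* Walk back through the table: a value change means region i-1 was taken. */
    int a = capacity;
    for (size_t i = n; i > 0; i--) {
        in_hw[i-1] = best[i*cols + a] != best[(i-1)*cols + a];
        if (in_hw[i-1]) a -= r[i-1].hw_area;
    }
    double result = best[n*cols + capacity];
    free(best);
    return result;
}
```

For three hypothetical regions {50 s, 5 s, area 10}, {30 s, 10 s, area 4}, {20 s, 15 s, area 6} with an area budget of 10, the optimum moves only the first region to hw and saves 45 s.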

Exploration: Heuristics for simple formulation
- Problem: 0-1 knapsack is NP-complete
  - We likely need to use a heuristic
- Need a way of focusing on moving regions to hw that provide large speedup
  - How do we know if a region potentially provides large speedup?

Exploration: Heuristics for simple formulation
- Amdahl's Law
  - Originally stated how much performance could be improved by parallelization
  - Can be generalized to state how much speedup is achieved based on the percentage of the application that is optimized
  - $p$ is the fraction of the application that is optimized, $s = 1 - p$ is the fraction left unoptimized, and $n$ is the speedup of the optimized region
  - $\text{Speedup} = \dfrac{1}{s + p/n}$
  - $\text{Ideal speedup} = \dfrac{1}{s} = \dfrac{1}{1 - p}$ (speedup assuming hw runs infinitely fast, i.e., $n \to \infty$)
- From these equations, heuristics should focus on regions that account for a large % of execution time
  - The larger $p$ is for a region, the larger the potential speedup
    - $p = 90\%$: ideal speedup $= 1/(1 - 0.9) = 10\times$
    - $p = 10\%$: ideal speedup $= 1/(1 - 0.1) \approx 1.1\times$
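The two formulas translate directly into C. The function names are hypothetical. `p` is the optimized fraction and `n` is the speedup of the optimized region, exactly as defined above.

```c
/* Amdahl's Law: p = optimized fraction, s = 1 - p, n = region speedup. */
double amdahl_speedup(double p, double n)
{
    double s = 1.0 - p;
    return 1.0 / (s + p / n);
}

/* Ideal speedup: the optimized region runs infinitely fast (p/n -> 0). */
double amdahl_ideal(double p)
{
    return 1.0 / (1.0 - p);
}
```

Even a 10x hw implementation of a region covering 90% of execution time yields only about 5.3x overall, since $1/(0.1 + 0.9/10) = 1/0.19$. This is why the 10% left in software matters.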

Exploration: Heuristics for simple formulation
- 90-10 rule
  - Observation that for many applications, 90% of execution time is spent in 10% of the code
- Good news for the heuristic
  - Suggests the heuristic can achieve most of the potential speedup by focusing on moving this 10% of code to hardware
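A profile can show how concentrated execution time is. This C sketch uses a hypothetical helper name. It sorts per-region time fractions, hottest first, and counts how many of the hottest regions are needed to reach a target share of total time. Under the 90-10 rule, that count would be small relative to the number of regions.

```c
#include <stdlib.h>

/* qsort comparator: larger fractions first */
static int by_fraction_desc(const void *a, const void *b)
{
    double x = *(const double *)a, y = *(const double *)b;
    return (x < y) - (x > y);
}

/* Sorts fraction[] in place (hottest first) and returns how many of the
   hottest regions together reach at least `target` of total execution time. */
size_t regions_to_cover(double *fraction, size_t n, double target)
{
    qsort(fraction, n, sizeof *fraction, by_fraction_desc);
    double covered = 0.0;
    for (size_t i = 0; i < n; i++) {
        covered += fraction[i];
        if (covered >= target) return i + 1;
    }
    return n;
}
```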

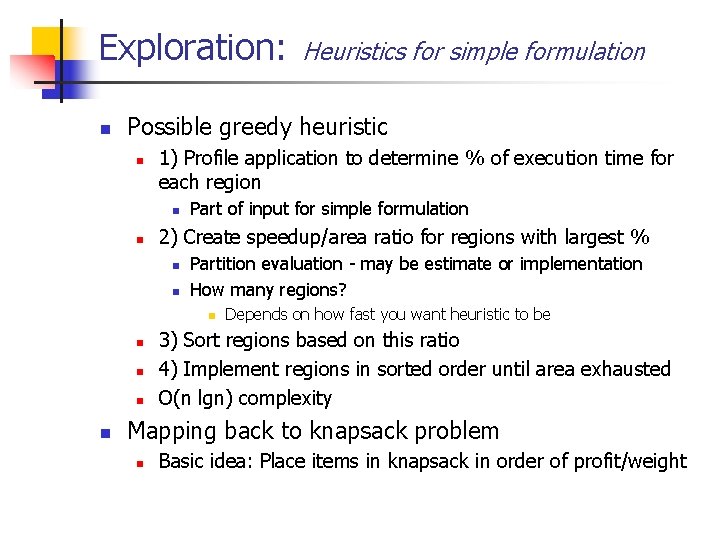

Exploration: Heuristics for simple formulation
- Possible greedy heuristic
  1. Profile the application to determine the % of execution time for each region
     - Part of the input for the simple formulation
  2. Compute a speedup/area ratio for the regions with the largest %
     - Uses partition evaluation: may be an estimate or an implementation
     - How many regions? Depends on how fast you want the heuristic to be
  3. Sort regions based on this ratio
  4. Implement regions in sorted order until area is exhausted
- Complexity: $O(n \lg n)$, dominated by the sort
- Mapping back to the knapsack problem
  - Basic idea: place items in the knapsack in order of profit/weight
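Steps 2 to 4 can be sketched in C as follows, assuming the simple formulation with a positive hw area for every region. The names are hypothetical. The ratio is time saved per unit area, the sort is the $O(n \lg n)$ step, and regions are then added in sorted order. This variant skips a region that does not fit and keeps trying smaller ones, which is one common way to read "until area is exhausted".

```c
#include <stddef.h>
#include <stdlib.h>

typedef struct { double sw_time_s, hw_time_s; int hw_area; } SimpleRegion;

typedef struct { size_t idx; double ratio; } Ranked;

/* qsort comparator: larger speedup/area ratio first */
static int by_ratio_desc(const void *a, const void *b)
{
    double x = ((const Ranked *)a)->ratio, y = ((const Ranked *)b)->ratio;
    return (x < y) - (x > y);
}

/* Greedy partitioning; returns total time saved (-1.0 on allocation failure).
   Assumes hw_area > 0 for every region. in_hw[] receives the chosen regions. */
double greedy_partition(const SimpleRegion *r, size_t n, int capacity, int *in_hw)
{
    Ranked *order = malloc(n * sizeof *order);
    if (!order) return -1.0;
    for (size_t i = 0; i < n; i++) {
        order[i].idx = i;
        order[i].ratio = (r[i].sw_time_s - r[i].hw_time_s) / r[i].hw_area;
        in_hw[i] = 0;
    }
    qsort(order, n, sizeof *order, by_ratio_desc);
    double saved = 0.0;
    int left = capacity;
    for (size_t k = 0; k < n; k++) {
        const SimpleRegion *reg = &r[order[k].idx];
        double gain = reg->sw_time_s - reg->hw_time_s;
        if (gain > 0.0 && reg->hw_area <= left) {
            in_hw[order[k].idx] = 1;
            left -= reg->hw_area;
            saved += gain;
        }
    }
    free(order);
    return saved;
}
```

Being a heuristic, it can miss the optimum. On three hypothetical regions {50 s, 5 s, area 10}, {30 s, 10 s, area 4}, {20 s, 15 s, area 6} with an area budget of 10, it takes the second region first (best ratio). After that the first no longer fits, so it saves 25 s, while the exact knapsack optimum saves 45 s.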

Exploration
- More complicated formulations
  - More complex implementation models
    - Asymmetric communication
    - Multiple processors
    - Multiple FPGAs
    - Tightly coupled vs. loosely coupled
  - Multiple implementations
  - Etc.
- Common exploration techniques:
  - ILP
  - Simulated annealing/genetic algorithms/hill climbing
  - Group migration (Kernighan-Lin)
  - Graph bipartitioning (read paper on website)
  - Tabu search (read paper on website)
    - Similar to simulated annealing, but maintains a "Tabu" list to improve the diversity of solutions
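Of the listed techniques, tabu search is compact enough to sketch for the simple formulation. The C code below is a minimal, deterministic version under these assumptions, which are not taken from the paper on the course website: hypothetical names; a move flips one region between sw and hw; moves that exceed the area budget are rejected; a recently flipped region is tabu for a fixed `tenure`, unless flipping it would beat the best solution so far (aspiration). The search accepts the best admissible move even when it is worse than the current solution, which is what lets it escape local minima.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_REGIONS 32

typedef struct { double sw_time_s, hw_time_s; int hw_area; } SimpleRegion;

/* Total time under the simple (additive) formulation. */
static double total_time(const SimpleRegion *r, const int *in_hw, size_t n)
{
    double t = 0.0;
    for (size_t i = 0; i < n; i++) t += in_hw[i] ? r[i].hw_time_s : r[i].sw_time_s;
    return t;
}

/* Returns the best total time found; best_hw[] receives that partition. */
double tabu_partition(const SimpleRegion *r, size_t n, int capacity,
                      int iterations, int tenure, int *best_hw)
{
    assert(n <= MAX_REGIONS);
    int cur[MAX_REGIONS] = {0}, tabu_until[MAX_REGIONS] = {0};
    int area = 0;                                  /* start with everything in sw */
    for (size_t i = 0; i < n; i++) best_hw[i] = 0;
    double best = total_time(r, cur, n);
    for (int it = 1; it <= iterations; it++) {
        long move = -1;
        double move_time = 0.0;
        for (size_t i = 0; i < n; i++) {
            int new_area = area + (cur[i] ? -r[i].hw_area : r[i].hw_area);
            if (new_area > capacity) continue;     /* infeasible move */
            cur[i] ^= 1;                           /* try the flip */
            double t = total_time(r, cur, n);
            cur[i] ^= 1;                           /* undo it */
            int allowed = tabu_until[i] < it || t < best;  /* aspiration */
            if (allowed && (move < 0 || t < move_time)) { move = (long)i; move_time = t; }
        }
        if (move < 0) break;                       /* no admissible move left */
        area += cur[move] ? -r[move].hw_area : r[move].hw_area;
        cur[move] ^= 1;
        tabu_until[move] = it + tenure;            /* forbid undoing it for a while */
        if (move_time < best) {
            best = move_time;
            for (size_t i = 0; i < n; i++) best_hw[i] = cur[i];
        }
    }
    return best;
}
```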

Exploration
- There is no known efficient solution for considering all possible issues
  - Ridiculously large exploration space
  - Problem is becoming harder with more complex architectures
- State of the art:
  - Granularity
    - Consider coarse- and fine-grained partitions
  - Partition evaluation
    - Estimation and "rough" implementation
  - Alternative region implementations
    - Typically only consider a single implementation of each region
    - Area for future improvements: a lot of interesting problems
      - How to decide how many implementations to consider?
      - How to decide which implementations to consider?
  - Implementation models
    - Typically assume architectures with few options
      - One type of communication, no dynamic reconfiguration, etc.
    - Future architectures will increase options
      - Should improve partitions, but increase the exploration space

Summary
- Applications are often not efficient in pure hw
- Hw/sw partitioning maps regions of an application onto sw (microprocessors) and hw (custom circuits)
  - Goal: maximize performance, meet design constraints, etc.
- Issues
  - Granularity of regions
  - Partition evaluation
  - Alternative region implementations
  - Implementation models
  - Exploration techniques
    - Focus of most work