Differentially Private Marginals Release with Mutual Consistency and Error Independent of Sample Size


- Slides: 25

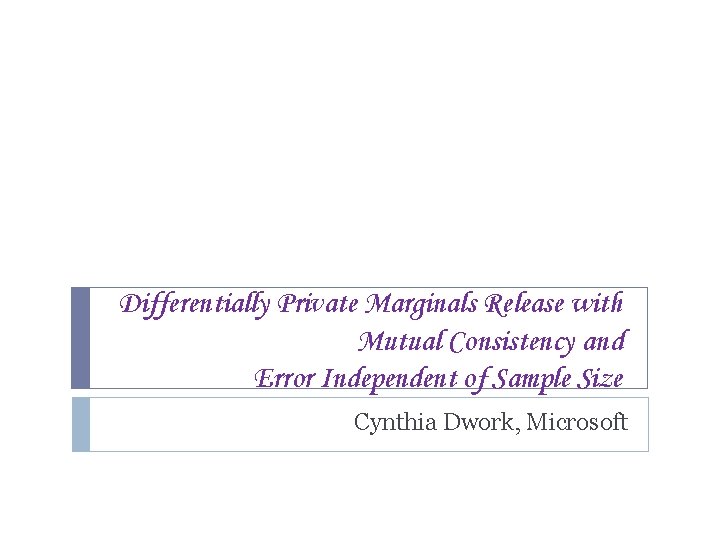

Differentially Private Marginals Release with Mutual Consistency and Error Independent of Sample Size. Cynthia Dwork, Microsoft.

Full papers:

- "Privacy, Accuracy, and Consistency Too: A Holistic Solution to Contingency Table Release." Barak, Chaudhuri, Dwork, Kale, McSherry, and Talwar. ACM SIGMOD/PODS 2007.
- "Differentially Private Marginals Release with Mutual Consistency and Error Independent of Sample Size." Dwork, McSherry, and Talwar. This workshop.


Release of Contingency Table Marginals: simultaneously ensure consistency, accuracy, and differential privacy. Terms to define: contingency table, marginal, consistency, accuracy, differential privacy.


Contingency Tables and Marginals: a contingency table is a histogram, or table of counts. Each respondent (member of the data set) is described by a vector of k binary attributes, and the table gives the population in each of the $2^k$ cells, one cell for each setting of the k attributes. A marginal is a sub-table specified by a set of $j \le k$ attributes: the histogram of the population in each of $2^j$ cells, one cell for each setting of the j selected attributes. For example, with j = 1 and the single attribute $A_1$ there are 2 cells; if 3 respondents have $A_1 = 0$ and 4 have $A_1 = 1$, the $A_1$ marginal is (3, 4).
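A minimal Python sketch of these two definitions follows. The function names and the seven records are hypothetical, chosen to reproduce the slide's example. Attributes are indexed from 0, so $A_1$ is index 0.

```python
from itertools import product


def contingency_table(records, k):
    """Count respondents in each of the 2**k cells, one per setting of k binary attributes."""
    table = {cell: 0 for cell in product((0, 1), repeat=k)}
    for r in records:
        table[tuple(r)] += 1
    return table


def marginal(table, attrs):
    """Marginal on the attribute indices `attrs`: 2**len(attrs) cells, summing out the others."""
    out = {cell: 0 for cell in product((0, 1), repeat=len(attrs))}
    for cell, count in table.items():
        out[tuple(cell[a] for a in attrs)] += count
    return out


# Hypothetical data: 7 respondents with k = 3 attributes (A1, A2, A3 at indices 0, 1, 2).
records = [(0, 0, 1), (0, 1, 0), (0, 1, 1), (1, 0, 0), (1, 0, 1), (1, 1, 1), (1, 1, 0)]
T = contingency_table(records, k=3)
print(marginal(T, [0]))  # {(0,): 3, (1,): 4}, i.e. the A1 marginal (3, 4)
```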

All the notation for the entire talk:

- D: the real data set
- n: number of respondents
- k: number of attributes
- T = T(D): the contingency table representing D ($2^k$ cells)
- $T^*$: contingency table of a fictional data set
- M = M(T): a collection of marginals of T
- R = R(M) = R(M(T)): reported marginals; typically noisy, to protect privacy, so $R(M(T)) \neq M(T)$
- $M_3$: collection of all 3-way marginals
- c = c(M): total number of cells in M
- α: name of a marginal (i.e., a set of attributes)
- ε: a privacy parameter

Consistency Across Reported Marginals: there exists a fictional contingency table $T^*$ whose marginals equal the reported marginals, $M(T^*) = R(M(T))$. $T^*$ and $M(T^*)$ may have negative and/or non-integral counts. Who cares about consistency? Not we. Software? Who cares about integrality and non-negativity? Not we. Software? See the paper.

![Accuracy of Reported Values](https://slidetodoc.com/presentation_image_h2/df7d09b91baef8f30f6e24f18388637e/image-10.jpg)

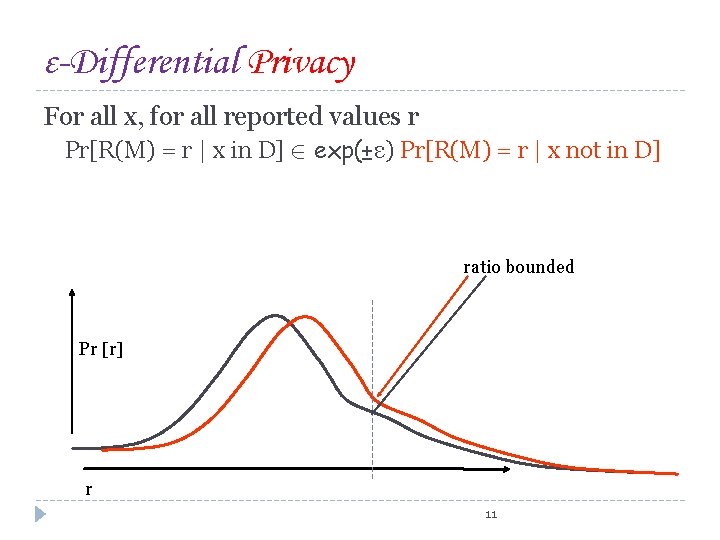

Accuracy of Reported Values: roughly described by $\mathbb{E}\left[\|R(M(T)) - M(T)\|_1\right]$. The expected error in each cell is proportional to $c(M)/\varepsilon$. There are also probabilistic guarantees on the size of the maximum error, which are a little worse. This is similar to the change obtained by randomly adding or deleting $c(M)/\varepsilon$ respondents to T and then computing M. Key point: the error is independent of n (and of k). It depends on the "complexity" of M and on the privacy parameter ε.

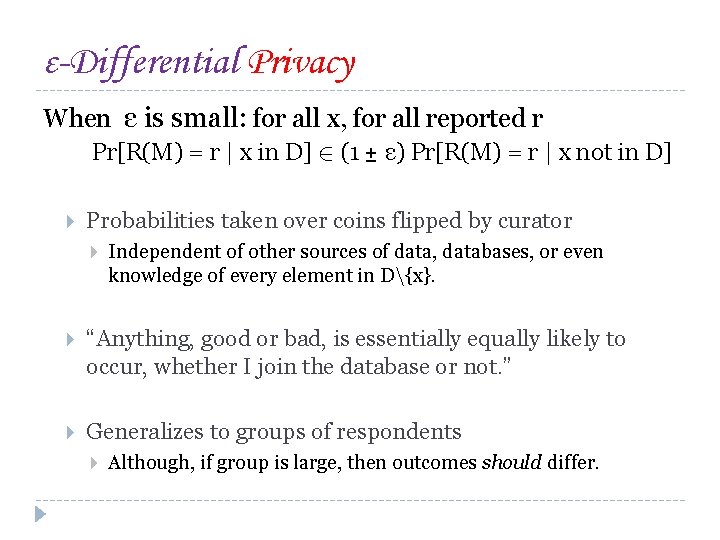

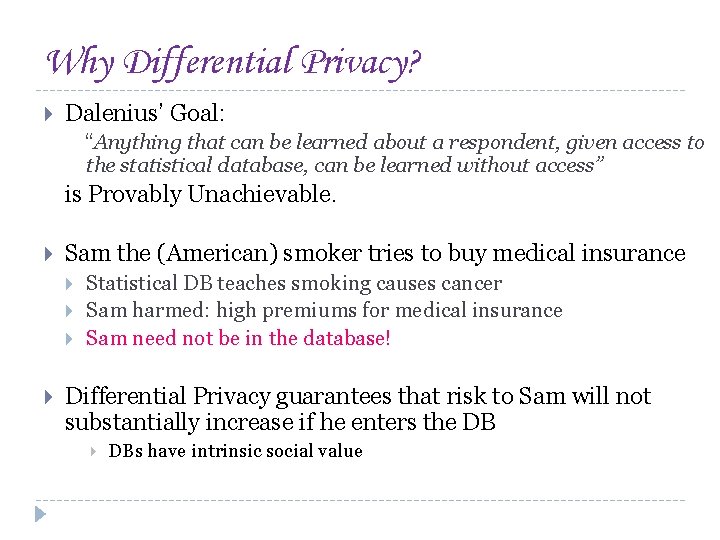

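ε-Differential Privacy: for all x and for all reported values r,

$$\Pr[R(M) = r \mid x \in D] \in e^{\pm\varepsilon} \cdot \Pr[R(M) = r \mid x \notin D],$$

that is, the ratio of the two probabilities is bounded. (Figure: Pr[r] plotted against r, with and without x in D.)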

ε-Differential Privacy when ε is small: for all x and for all reported r,

$$\Pr[R(M) = r \mid x \in D] \in (1 \pm \varepsilon) \cdot \Pr[R(M) = r \mid x \notin D].$$

Probabilities are taken over the coins flipped by the curator. The guarantee is independent of other sources of data, other databases, or even knowledge of every element in $D \setminus \{x\}$. "Anything, good or bad, is essentially equally likely to occur, whether I join the database or not." The definition generalizes to groups of respondents, although, if the group is large, then the outcomes should differ.
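To see the definition in action, here is a small numerical check for a single count released with Laplace noise of scale 1/ε, the mechanism the talk introduces for achieving differential privacy. The check is an illustration, not from the talk, and the counts 41 and 42 are hypothetical. Whether x is in D changes the count by at most 1, so for every reported value r the density ratio stays within $e^{\pm\varepsilon}$.

```python
import math


def laplace_density(x, loc, scale):
    """Density at x of the Laplace distribution centred at loc with scale `scale`."""
    return math.exp(-abs(x - loc) / scale) / (2 * scale)


eps = 0.1
scale = 1 / eps          # the count moves by at most 1 when x joins or leaves D
count_without_x = 41     # hypothetical true count when x is not in D
count_with_x = 42        # the same count when x is in D

# For every reported value r, the density ratio stays within exp(+/- eps).
ratios = []
for r in [-100.0, 0.0, 41.5, 42.0, 300.0]:
    ratio = laplace_density(r, count_with_x, scale) / laplace_density(r, count_without_x, scale)
    ratios.append(ratio)
    print(f"r = {r:7.1f}   ratio = {ratio:.4f}")

assert all(math.exp(-eps) - 1e-12 <= q <= math.exp(eps) + 1e-12 for q in ratios)
```

The ratio equals $e^{\varepsilon(|r-41| - |r-42|)}$, and the exponent's factor $|r-41| - |r-42|$ always lies in [-1, 1].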

Why Differential Privacy? Dalenius' goal, "Anything that can be learned about a respondent, given access to the statistical database, can be learned without access", is provably unachievable. Sam, the (American) smoker, tries to buy medical insurance. The statistical DB teaches that smoking causes cancer, so Sam is harmed: he faces high premiums for medical insurance. Sam need not be in the database! Differential privacy guarantees that the risk to Sam will not substantially increase if he enters the DB. DBs have intrinsic social value.

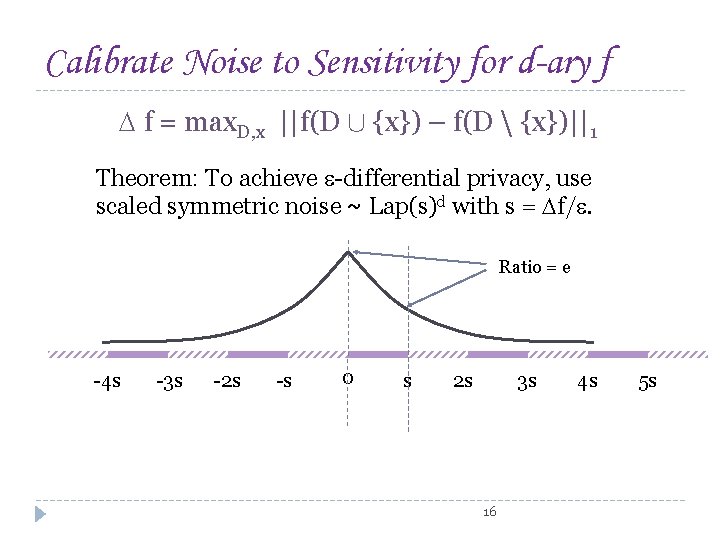

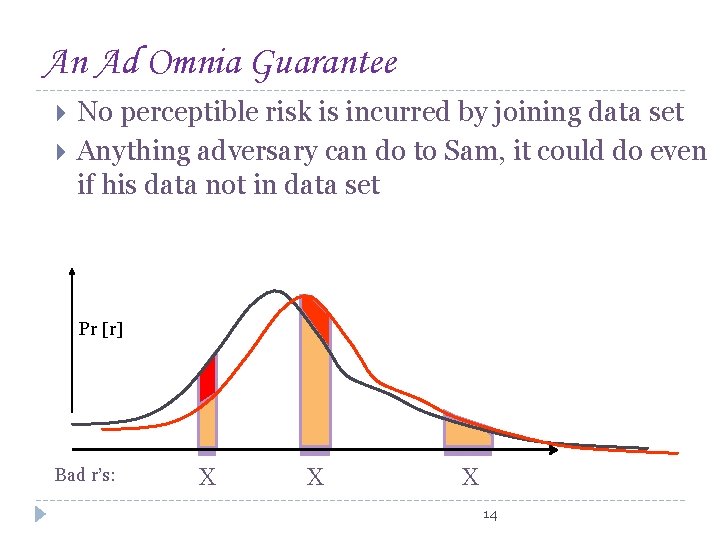

An Ad Omnia Guarantee: no perceptible risk is incurred by joining the data set. Anything the adversary can do to Sam, it could do even if his data were not in the data set. (Figure: Pr[r] plotted against outcomes r, with the "bad" outcomes marked.)

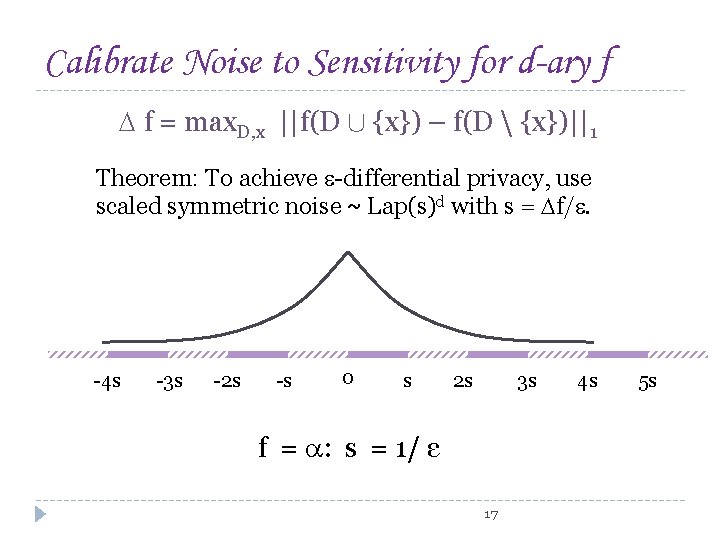

Achieving Differential Privacy for d-ary f: the curator adds noise according to the Laplace distribution, which "hides" the presence or absence of any individual. How much can the data of one person affect M(T)? For every $\alpha \in M$, one person can affect one cell of $\alpha(T)$, by 1. The sensitivity of f is

$$\Delta f = \max_{D, x} \|f(D \cup \{x\}) - f(D \setminus \{x\})\|_1,$$

e.g., $\Delta\alpha = 1$ and $\Delta M \le |M|$.
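The sensitivity bound can be checked by brute force on a tiny example. This hypothetical sketch measures the L1 change when one respondent x is added, comparing $D \cup \{x\}$ with D. For counts this has the same magnitude as removing x. Because marginals are counts, the change does not depend on which D is used.

```python
from itertools import product


def marginal_vector(records, attrs):
    """Counts of the marginal on `attrs`, as a flat list of its 2**len(attrs) cells."""
    cells = list(product((0, 1), repeat=len(attrs)))
    counts = {c: 0 for c in cells}
    for r in records:
        counts[tuple(r[a] for a in attrs)] += 1
    return [counts[c] for c in cells]


def collection(records, M):
    """f = M: concatenate the marginals named in M (each a tuple of attribute indices)."""
    return [v for attrs in M for v in marginal_vector(records, attrs)]


def l1_change(f, records, x):
    """||f(D with x added) - f(D)||_1 for a single added respondent x."""
    return sum(abs(a - b) for a, b in zip(f(records + [x]), f(records)))


# Hypothetical data set with k = 3 attributes; M = all three 2-way marginals.
D = [(0, 1, 1), (1, 0, 0), (1, 1, 0)]
M = [(0, 1), (0, 2), (1, 2)]


def f_M(d):
    return collection(d, M)


worst = max(l1_change(f_M, D, x) for x in product((0, 1), repeat=3))
print(worst)  # 3 == |M|: x moves exactly one cell by 1 in each marginal
```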

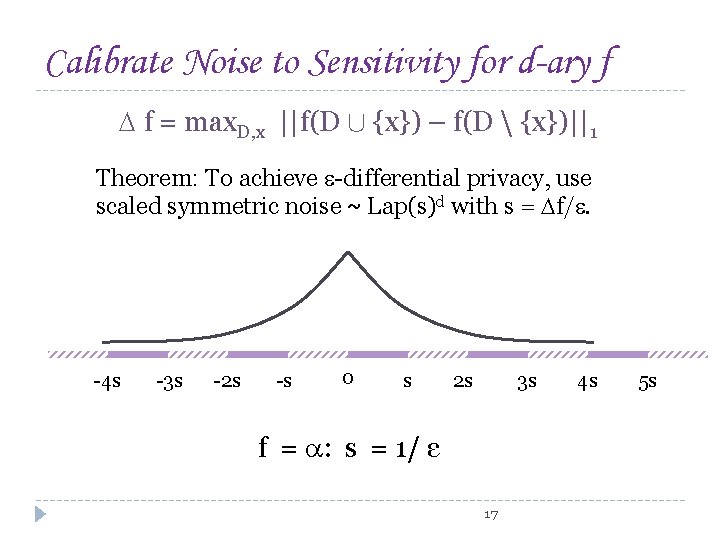

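Calibrate Noise to Sensitivity for d-ary f, where $\Delta f = \max_{D, x} \|f(D \cup \{x\}) - f(D \setminus \{x\})\|_1$.

Theorem: To achieve ε-differential privacy, use scaled symmetric noise $\sim \mathrm{Lap}(s)^d$ with $s = \Delta f/\varepsilon$.

(Figure: the Laplace density Lap(s), with the axis marked in multiples of s. The ratio of the densities at any two points at distance at most Δf is at most $e^{\varepsilon}$.)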
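Below is a minimal Python sketch of the theorem's mechanism, assuming real-valued outputs. The helper names and the seed are hypothetical. Laplace noise is drawn as the difference of two independent exponential variables, a standard identity, using only the standard library's `random.expovariate`.

```python
import random


def laplace_noise(scale, rng):
    """One draw from Lap(scale): the difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def laplace_mechanism(true_values, sensitivity, eps, rng):
    """Add i.i.d. Lap(s) noise, s = sensitivity / eps, to each of the d coordinates of f(D)."""
    s = sensitivity / eps
    return [v + laplace_noise(s, rng) for v in true_values]


rng = random.Random(2007)  # fixed seed so the sketch is reproducible
# Release the A1 marginal (3, 4); a single marginal has sensitivity 1.
print(laplace_mechanism([3, 4], sensitivity=1, eps=0.5, rng=rng))

# Sanity check: E|Lap(s)| = s, so the average absolute noise should be close to s = 2.
draws = [laplace_noise(2.0, rng) for _ in range(100_000)]
print(sum(abs(z) for z in draws) / len(draws))
```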

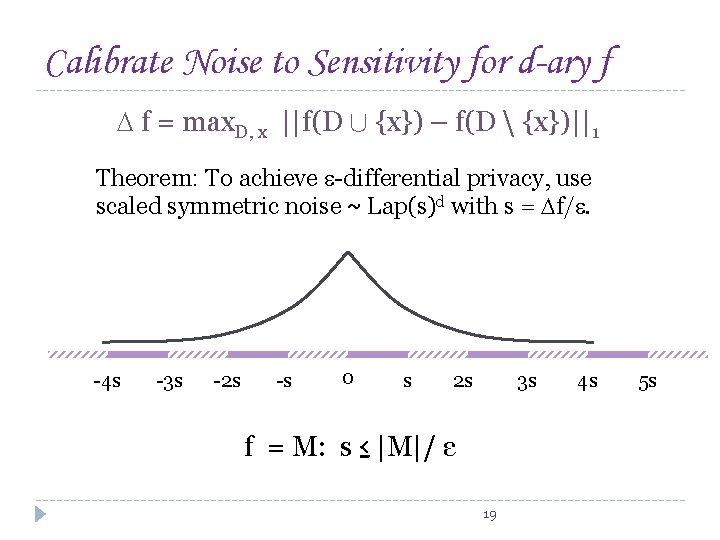

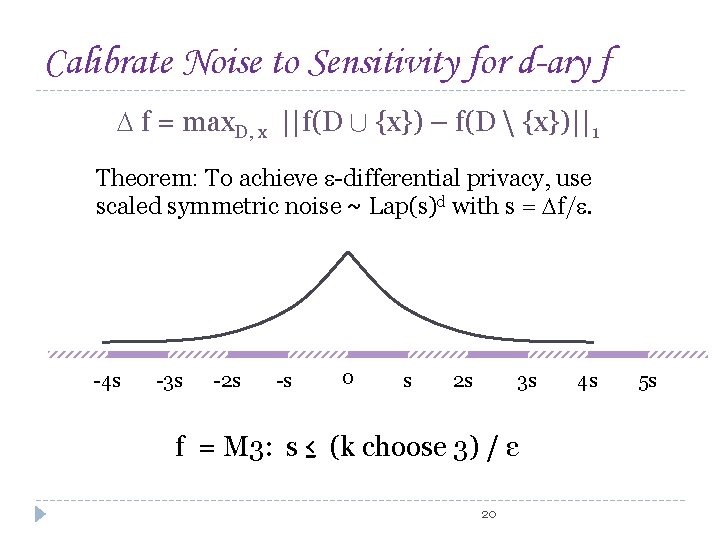

For f = α, a single marginal ($\Delta\alpha = 1$): s = 1/ε.

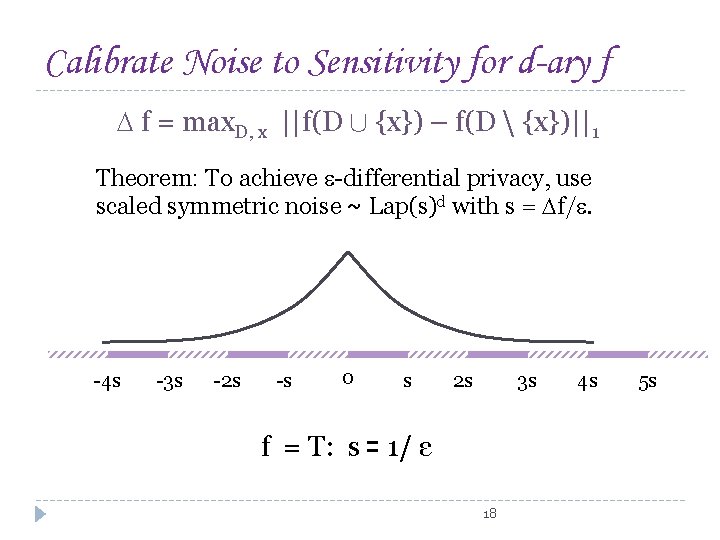

For f = T, the full contingency table (one respondent changes a single cell by 1): s = 1/ε.

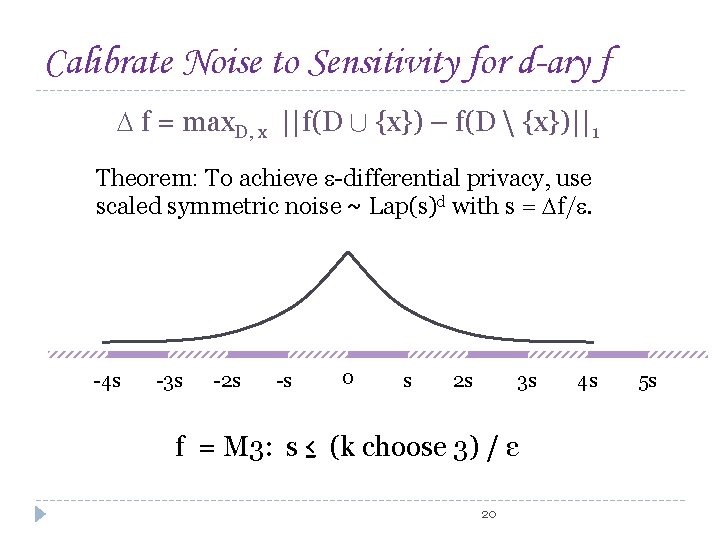

For f = M: $s \le |M|/\varepsilon$.

For f = $M_3$, all $\binom{k}{3}$ three-way marginals: $s \le \binom{k}{3}/\varepsilon$.
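Plugging these cases into $s = \Delta f/\varepsilon$ shows how quickly the noise grows with the collection. This short sketch uses hypothetical values k = 20 and ε = 0.5, and the upper bound $\Delta M_3 \le \binom{k}{3}$.

```python
from math import comb


def laplace_scale(sensitivity, eps):
    """Noise scale s = Delta f / eps from the calibration theorem."""
    return sensitivity / eps


k, eps = 20, 0.5  # hypothetical number of attributes and privacy parameter
print(laplace_scale(1, eps))           # f = alpha or f = T: 1/eps = 2.0
print(laplace_scale(comb(k, 3), eps))  # f = M_3: (k choose 3)/eps = 2280.0
```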

Application: Release of Marginals M.

- Release a noisy contingency table, then compute the marginals from it? This gives consistency and differential privacy. The noise per cell of T is Lap(1/ε), but the noise per cell of M is about $2^{k/2}/\varepsilon$ for low-order marginals.
- Release noisy versions of all marginals in M? The noise per cell of M is Lap(|M|/ε). This gives differential privacy and better accuracy, but the marginals are inconsistent.
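The trade-off between the two options can be seen on a toy table. In this hypothetical sketch (the table, seed, and helper names are ours), both option-1 marginals come from one noisy table, so their totals agree. Independently noised marginals generally report different totals, which no single table $T^*$ could produce.

```python
import random
from itertools import product


def marginal(table, attrs):
    """Marginal on attribute indices `attrs`, summing the (possibly noisy) cell values."""
    out = {c: 0.0 for c in product((0, 1), repeat=len(attrs))}
    for cell, value in table.items():
        out[tuple(cell[a] for a in attrs)] += value
    return out


rng = random.Random(1)


def lap(s):
    """One draw from Lap(s) as a difference of two exponentials with mean s."""
    return rng.expovariate(1 / s) - rng.expovariate(1 / s)


k, eps = 3, 1.0
T = {c: 10 for c in product((0, 1), repeat=k)}  # hypothetical table: 10 respondents per cell
M = [(0,), (1,)]                                 # two 1-way marginals, A1 and A2

# Option 1: Lap(1/eps) noise on every cell of T, then compute marginals from the noisy table.
T_noisy = {c: v + lap(1 / eps) for c, v in T.items()}
opt1 = [marginal(T_noisy, a) for a in M]

# Option 2: Lap(|M|/eps) noise on every cell of each marginal, independently.
opt2 = [{c: v + lap(len(M) / eps) for c, v in marginal(T, a).items()} for a in M]

# Both option-1 marginals sum to the same noisy total; the option-2 totals disagree.
print([sum(m.values()) for m in opt1])
print([sum(m.values()) for m in opt2])
```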

Move to the Fourier Domain: it is just a change of basis. Why bother? T is represented by $2^k$ Fourier coefficients (it has $2^k$ cells), but to compute a j-ary marginal $\alpha(T)$ we only need $2^j$ coefficients. For any M, the expected noise per cell depends on the number of coefficients needed to compute M(T). For example, for $M_3$, $\mathbb{E}[\text{noise/cell}] \approx \binom{k}{3}/\varepsilon$.

The algorithm for R(M(T)):

1. Compute the set of Fourier coefficients of T needed for M(T).
2. Add noise; this gives the Fourier coefficients for $M(T^*)$. The 1-1 mapping between sets of Fourier coefficients and tables ensures consistency!
3. Convert back to obtain $M(T^*)$.
4. Release $R(M(T)) = M(T^*)$.
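The four steps can be sketched in Python for small k. This is a hypothetical illustration, not the authors' implementation. Cells and marginals are bitmasks. The coefficients are unnormalised Walsh-Hadamard sums $\hat{T}(\beta) = \sum_x (-1)^{|\beta \cap x|} T(x)$. As an assumption of this sketch, each of the m needed coefficients gets independent Lap(m/ε) noise. That is ε-differentially private because one respondent changes each unnormalised coefficient by exactly 1. The conversion back uses $\alpha(T)[\gamma] = 2^{-|\alpha|}\sum_{\beta \subseteq \alpha} (-1)^{|\beta \cap \gamma|}\hat{T}(\beta)$. The paper's own normalisation and its handling of non-negativity and integrality are not reproduced.

```python
import random


def popcount(v):
    return bin(v).count("1")


def subsets(alpha):
    """All bitmasks beta contained in alpha, including 0 and alpha itself."""
    beta = alpha
    while True:
        yield beta
        if beta == 0:
            return
        beta = (beta - 1) & alpha


def fourier_coeff(T, beta):
    """Unnormalised Walsh-Hadamard coefficient: sum over cells x of (-1)^popcount(beta & x) * T[x]."""
    return sum((-1) ** popcount(beta & x) * t for x, t in T.items())


def marginal_from_coeffs(coeffs, alpha):
    """Every cell gamma of the alpha-marginal, from the 2^|alpha| coefficients beta inside alpha."""
    return {
        gamma: sum((-1) ** popcount(beta & gamma) * coeffs[beta] for beta in subsets(alpha))
        / 2 ** popcount(alpha)
        for gamma in subsets(alpha)
    }


def release(T, M, eps, rng):
    """Steps 1-4: noise only the needed coefficients, then convert back to marginals."""
    needed = {beta for alpha in M for beta in subsets(alpha)}
    s = len(needed) / eps  # each unnormalised coefficient has sensitivity 1
    noisy = {b: fourier_coeff(T, b) + rng.expovariate(1 / s) - rng.expovariate(1 / s) for b in needed}
    return {alpha: marginal_from_coeffs(noisy, alpha) for alpha in M}


# Hypothetical table over k = 3 attributes; bit i of a cell index is attribute A_{i+1}.
rng = random.Random(0)
k = 3
T = {x: rng.randint(0, 20) for x in range(2 ** k)}
A1, A2, A3 = 1, 2, 4

# Without noise, the Fourier route reproduces the exact A1A2 marginal.
exact = {b: fourier_coeff(T, b) for b in range(2 ** k)}
direct = {g: sum(t for x, t in T.items() if (x & (A1 | A2)) == g) for g in subsets(A1 | A2)}
assert marginal_from_coeffs(exact, A1 | A2) == direct

# With noise, the A1A2 and A1A3 marginals still agree on the A1 marginal they share.
R = release(T, [A1 | A2, A1 | A3], eps=1.0, rng=rng)
via_a2 = [sum(v for c, v in R[A1 | A2].items() if (c & A1) == g) for g in (0, A1)]
via_a3 = [sum(v for c, v in R[A1 | A3].items() if (c & A1) == g) for g in (0, A1)]
print(via_a2, via_a3)  # equal up to floating-point rounding
```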

![Improving Accuracy](https://slidetodoc.com/presentation_image_h2/df7d09b91baef8f30f6e24f18388637e/image-23.jpg)

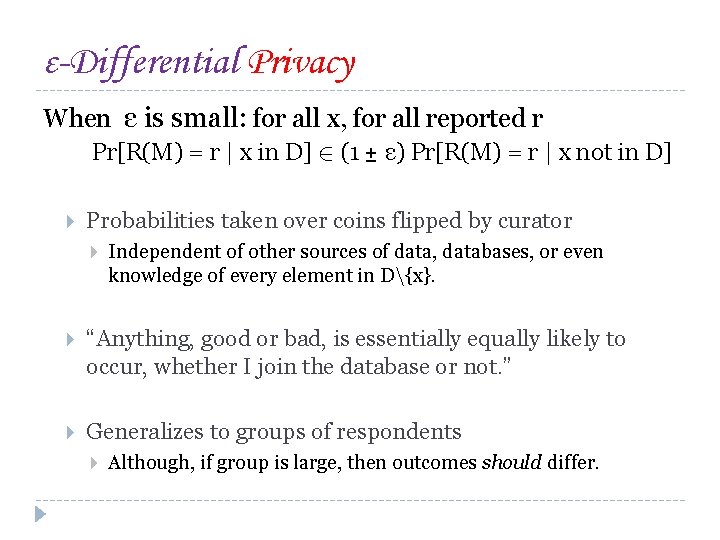

Improving Accuracy: use Gaussian noise instead of Laplacian. Then E[noise/cell] for $M_3$ looks more like $O(\log(1/\delta)\, k^{3/2}/\varepsilon)$, with a probabilistic ($1 - \delta$) guarantee of ε-differential privacy. Use domain-specific knowledge (we have, so far, avoided this!): if most attributes are considered (socially) insensitive, we can add less noise, and to fewer coefficients. For example, for $M_3$ with 1 sensitive attribute the noise is ≈ $k^2$ (instead of $k^3$).
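To see where the $k^{3/2}$ comes from, compare the per-coefficient noise for $M_3$ under the two distributions. This sketch substitutes the standard Gaussian-mechanism calibration $\sigma = \Delta_2 \sqrt{2\ln(1.25/\delta)}/\varepsilon$ from Dwork and Roth's textbook. That calibration gives (ε, δ)-differential privacy for ε < 1, and its constants are not necessarily those of the paper. $M_3$ needs $m = \sum_{i \le 3}\binom{k}{i} = \Theta(k^3)$ unit-sensitivity coefficients, so their L1 sensitivity is m and their L2 sensitivity is $\sqrt{m}$. The Laplace scale therefore grows like $k^3$, while the Gaussian σ grows like $k^{3/2}$. The parameter values are hypothetical.

```python
import math


def num_coeffs_m3(k):
    """Fourier coefficients needed for all 3-way marginals: every beta with |beta| <= 3."""
    return sum(math.comb(k, i) for i in range(4))


def laplace_scale(m, eps):
    """Lap(m/eps) per coefficient: m unit-sensitivity coefficients have L1 sensitivity m."""
    return m / eps


def gaussian_sigma(m, eps, delta):
    """Classic (eps, delta) Gaussian calibration (stated for eps < 1): L2 sensitivity sqrt(m)."""
    return math.sqrt(m) * math.sqrt(2 * math.log(1.25 / delta)) / eps


eps, delta = 0.5, 1e-6  # hypothetical privacy parameters
for k in (10, 20, 40):
    m = num_coeffs_m3(k)
    print(k, m, laplace_scale(m, eps), round(gaussian_sigma(m, eps, delta), 1))
```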

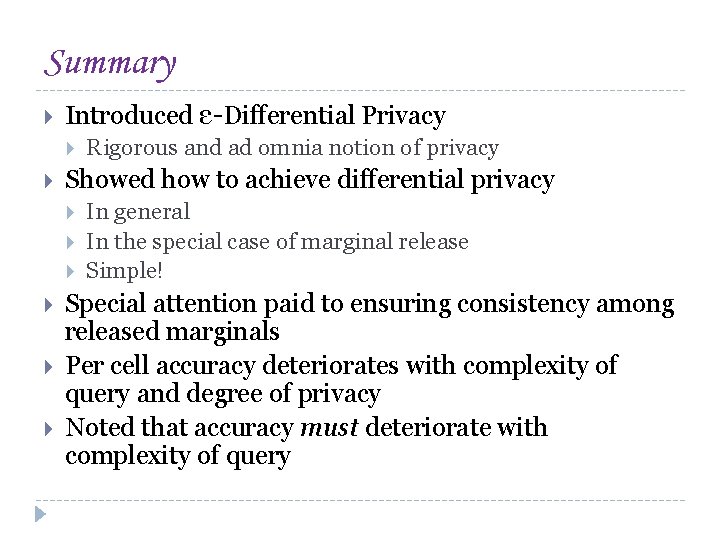

In Theory, Noise Must Depend on the Set M: "Dinur-Nissim"-style results, with 1 sensitive attribute, show that overly accurate answers to too many questions permit reconstruction of the sensitive attributes of almost the entire database, say, 99%. The attacks use no linkage, external, or auxiliary information. Rough translation: there are "bad" databases in which a sensitive binary attribute can be learned for all respondents from, say, $2\sqrt{n}$ degree-2 marginals, if the per-cell errors are strictly less than $\sqrt{n}$. The "badness" relates to the distribution of the occurrence of the insensitive attributes!

Summary:

- Introduced ε-differential privacy, a rigorous and ad omnia notion of privacy
- Showed how to achieve differential privacy, in general and in the special case of marginal release. Simple!
- Paid special attention to ensuring consistency among the released marginals
- Per-cell accuracy deteriorates with the complexity of the query and the degree of privacy
- Noted that accuracy must deteriorate with the complexity of the query