Developing STANAG 6001 Language Proficiency Tests with Less Inference and More Evidence

- Slides: 30

Developing STANAG 6001 Language Proficiency Tests with Less Inference and More Evidence. Ray Clifford, Brno, 6 September.

Language testing is a professional discipline. • Language is the most complex of observable human behaviors. • Testing is a complex science. • Language testing is a professional discipline that requires scientific expertise. – Every test development effort is a research project. – Every research project should be conducted in a precise, disciplined manner.

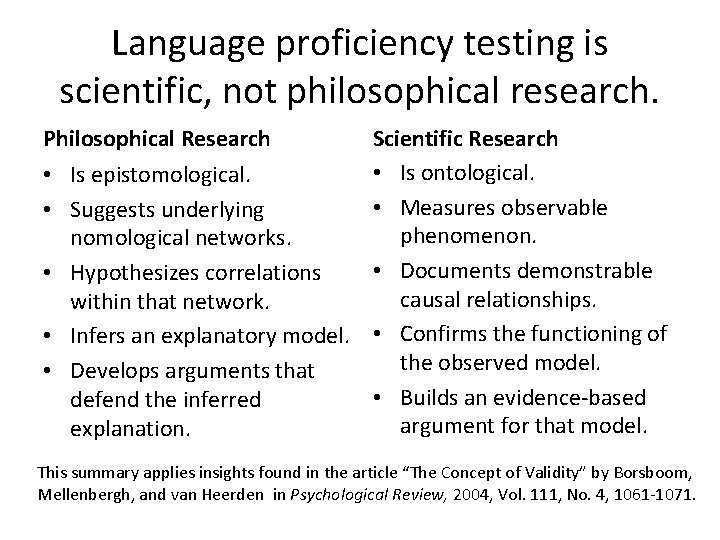

But language testing is not as complex as we have been told it is. • Language testing is not a philosophical, epistemological exercise that must infer an unobservable latent trait from a hypothesized nomological network of correlational relationships. • Language testing is a scientific, ontological effort that describes an observable human trait by measuring causal relationships.

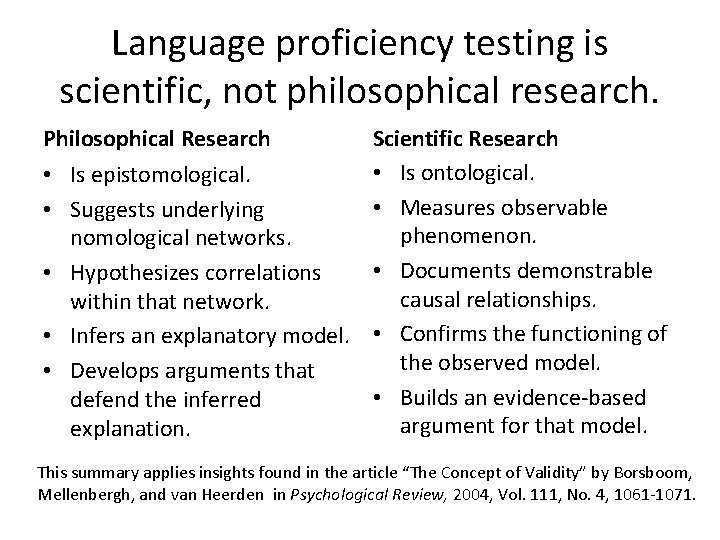

Language proficiency testing is scientific, not philosophical research.

Philosophical Research

- Is epistemological.
- Suggests underlying nomological networks.
- Hypothesizes correlations within that network.
- Infers an explanatory model.
- Develops arguments that defend the inferred explanation.

Scientific Research

- Is ontological.
- Measures observable phenomena.
- Documents demonstrable causal relationships.
- Confirms the functioning of the observed model.
- Builds an evidence-based argument for that model.

This summary applies insights found in the article “The Concept of Validity” by Borsboom, Mellenbergh, and van Heerden in Psychological Review, 2004, Vol. 111, No. 4, 1061-1071.

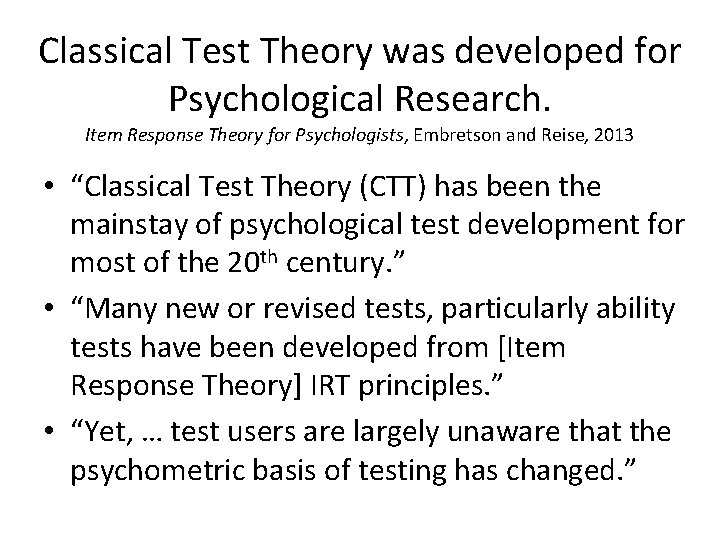

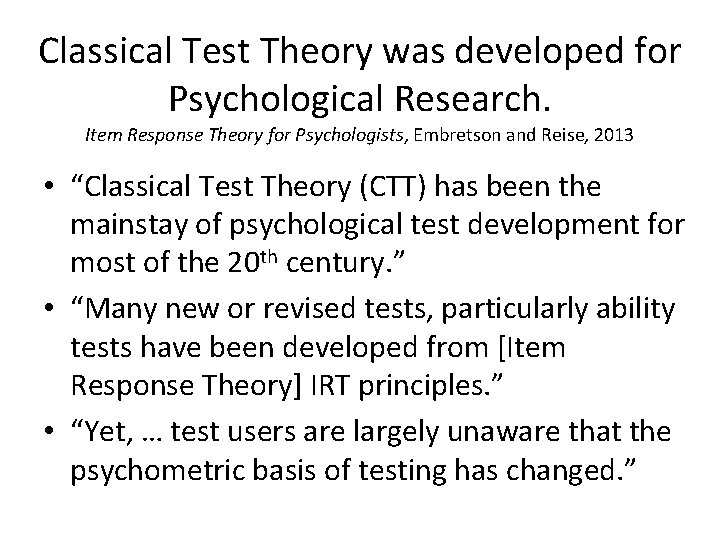

Classical Test Theory was developed for Psychological Research.

Item Response Theory for Psychologists, Embretson and Reise, 2013

- “Classical Test Theory (CTT) has been the mainstay of psychological test development for most of the 20th century.”
- “Many new or revised tests, particularly ability tests have been developed from [Item Response Theory] IRT principles.”
- “Yet, … test users are largely unaware that the psychometric basis of testing has changed.”

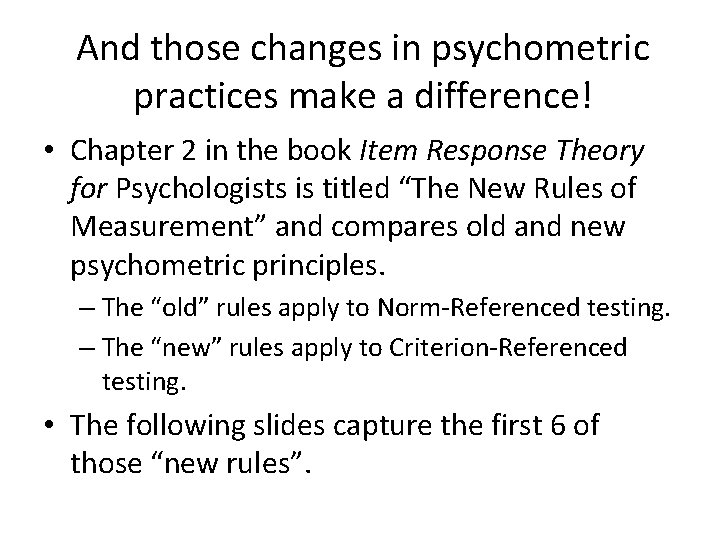

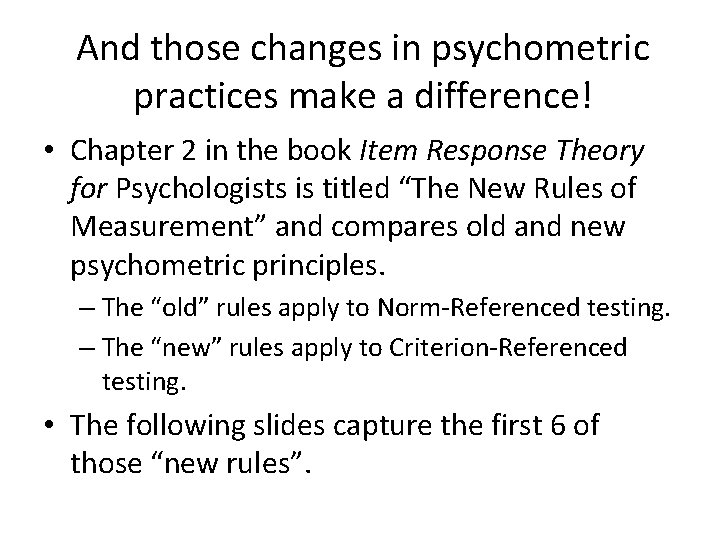

And those changes in psychometric practices make a difference! • Chapter 2 in the book Item Response Theory for Psychologists is titled “The New Rules of Measurement” and compares old and new psychometric principles. – The “old” rules apply to Norm-Referenced testing. – The “new” rules apply to Criterion-Referenced testing. • The following slides capture the first 6 of those “new rules”.

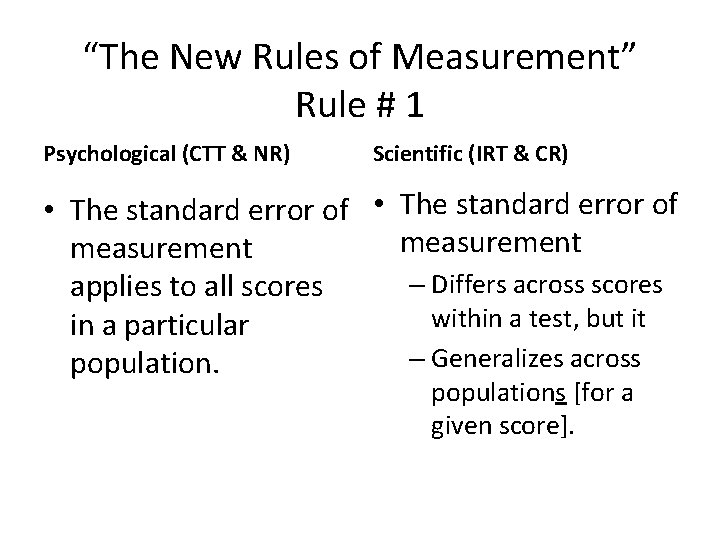

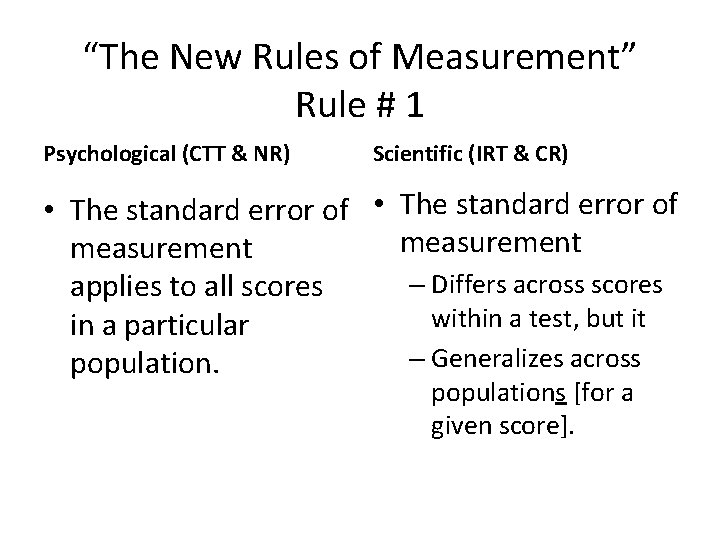

“The New Rules of Measurement” Rule # 1

Psychological (CTT & NR)

- The standard error of measurement applies to all scores in a particular population.

Scientific (IRT & CR)

- The standard error of measurement
  - differs across scores within a test, but it
  - generalizes across populations [for a given score].
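To make the contrast in Rule # 1 concrete, here is a minimal Python sketch. It assumes the textbook CTT formula (SEM = SD × √(1 − reliability)) and, for the IRT side, a Rasch (one-parameter) model in which the standard error at an ability level is 1/√(test information). The function names, item difficulties, and score values are illustrative assumptions, not taken from the slides.

```python
import math


def ctt_sem(score_sd: float, reliability: float) -> float:
    """CTT: a single standard error shared by every score in the norm group."""
    return score_sd * math.sqrt(1.0 - reliability)


def rasch_information(theta: float, difficulties: list[float]) -> float:
    """Rasch test information at ability theta: sum of p * (1 - p) over items."""
    total = 0.0
    for b in difficulties:
        p = 1.0 / (1.0 + math.exp(-(theta - b)))
        total += p * (1.0 - p)
    return total


def irt_se(theta: float, difficulties: list[float]) -> float:
    """IRT: the standard error depends on where the test taker sits on the scale."""
    return 1.0 / math.sqrt(rasch_information(theta, difficulties))


# Hypothetical item difficulties (in logits) clustered around 0.
items = [-1.0, -0.5, 0.0, 0.5, 1.0]

print("CTT SEM (same for every score):", ctt_sem(10.0, 0.91))
for theta in (-3.0, 0.0, 3.0):
    print(f"IRT SE at theta={theta:+.1f}:", round(irt_se(theta, items), 3))
```

Under these assumptions the CTT value is a single number tied to the norm group's score spread, while the IRT standard error is smallest where the items are targeted and grows toward the extremes of the scale.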

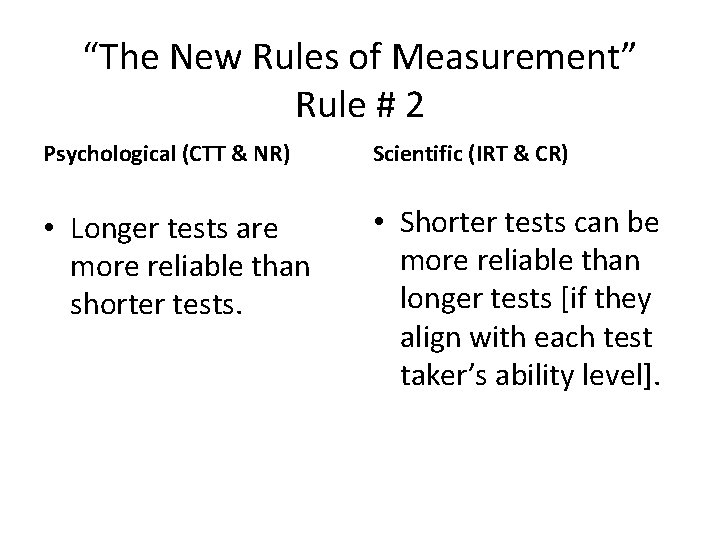

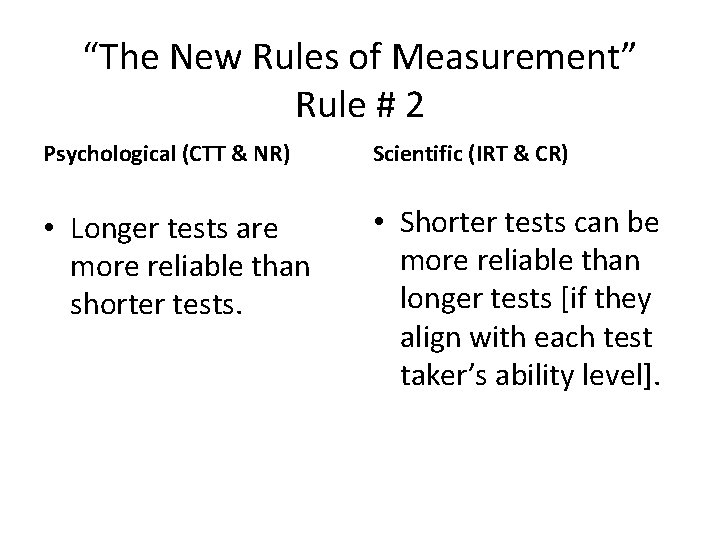

“The New Rules of Measurement” Rule # 2 Psychological (CTT & NR) Scientific (IRT & CR) • Longer tests are more reliable than shorter tests. • Shorter tests can be more reliable than longer tests [if they align with each test taker’s ability level].

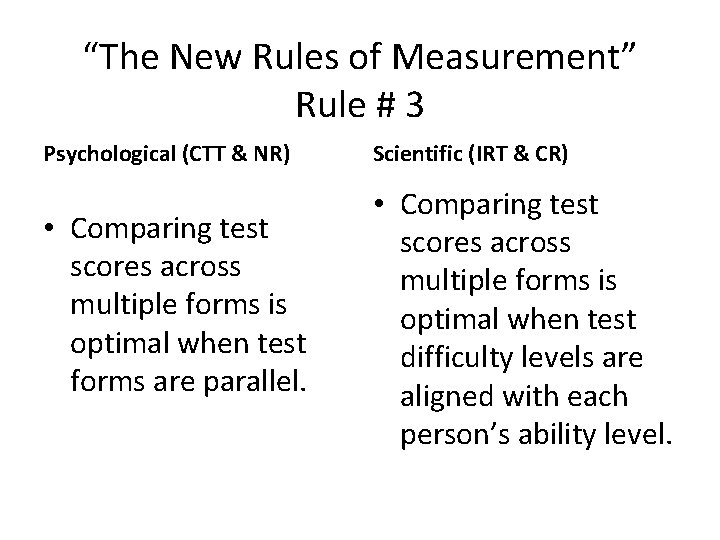

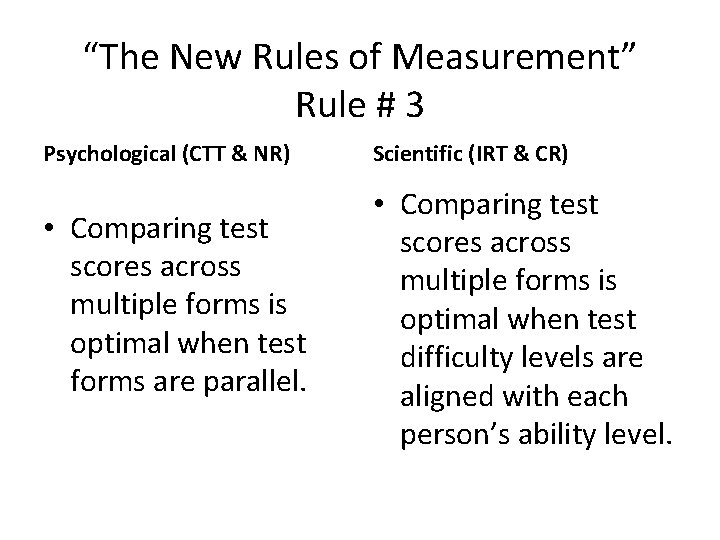

“The New Rules of Measurement” Rule # 3 Psychological (CTT & NR) • Comparing test scores across multiple forms is optimal when test forms are parallel. Scientific (IRT & CR) • Comparing test scores across multiple forms is optimal when test difficulty levels are aligned with each person’s ability level.

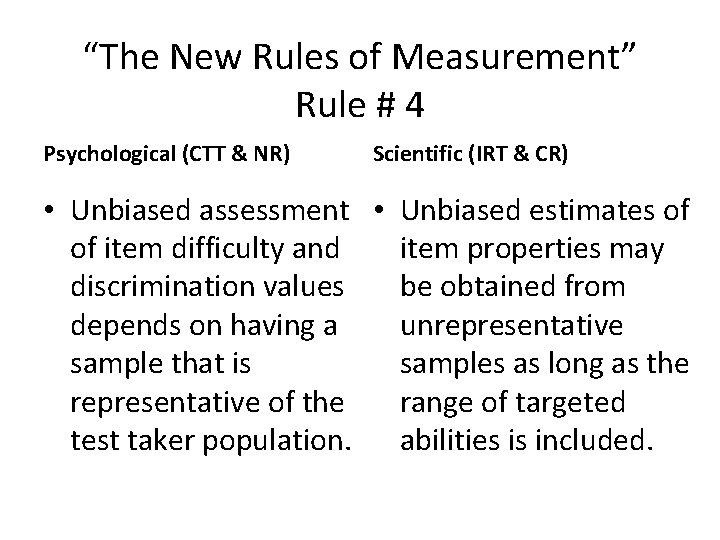

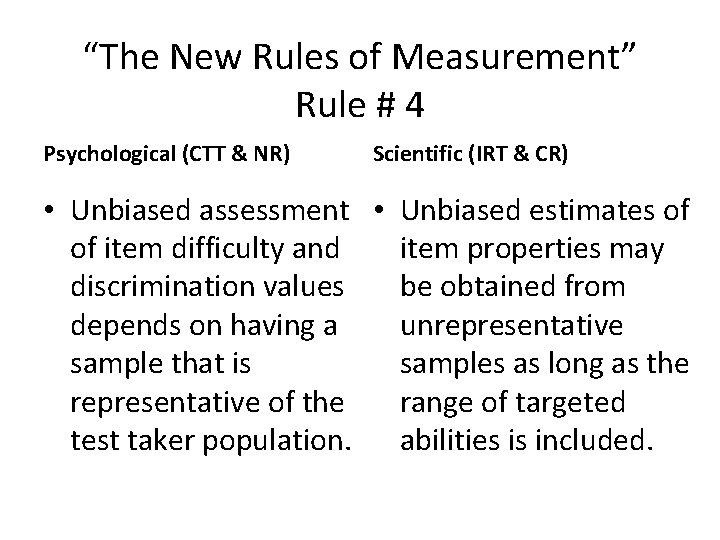

“The New Rules of Measurement” Rule # 4

Psychological (CTT & NR)

- Unbiased assessment of item difficulty and discrimination values depends on having a sample that is representative of the targeted test taker population.

Scientific (IRT & CR)

- Unbiased estimates of item properties may be obtained from unrepresentative samples as long as the range of abilities is included.

“The New Rules of Measurement” Rule # 5

Psychological (CTT & NR)

- Test scores obtain meaning by comparing their position in a norm group.

Scientific (IRT & CR)

- Test scores obtain meaning by comparing their distance from [criterion-referenced] anchor items.
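The two ways of giving a score meaning can be sketched as follows. This is an illustration only: the norm-group scores, the ability estimate, and the anchor difficulties per level are hypothetical values, and the logit scale for abilities and anchors is an assumption.

```python
from bisect import bisect_left


def percentile_rank(score: float, norm_scores: list[float]) -> float:
    """Norm-referenced meaning: percentage of the norm group scoring below `score`."""
    ordered = sorted(norm_scores)
    return 100.0 * bisect_left(ordered, score) / len(ordered)


def distance_from_anchors(theta: float, anchors: dict[str, float]) -> dict[str, float]:
    """Criterion-referenced meaning: signed distance from each anchor difficulty."""
    return {label: theta - difficulty for label, difficulty in anchors.items()}


# Norm-referenced: the same raw score means something different in each group.
print(percentile_rank(70, [50, 60, 70, 80]))
print(percentile_rank(70, [70, 80, 90, 95]))

# Criterion-referenced: the ability estimate is read against fixed anchors.
anchors = {"Level 1": -1.0, "Level 2": 1.5}  # hypothetical anchor difficulties
print(distance_from_anchors(1.0, anchors))
```

A positive distance means the test taker sits above that anchor. Unlike the percentile rank, that reading does not change when the comparison group changes.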

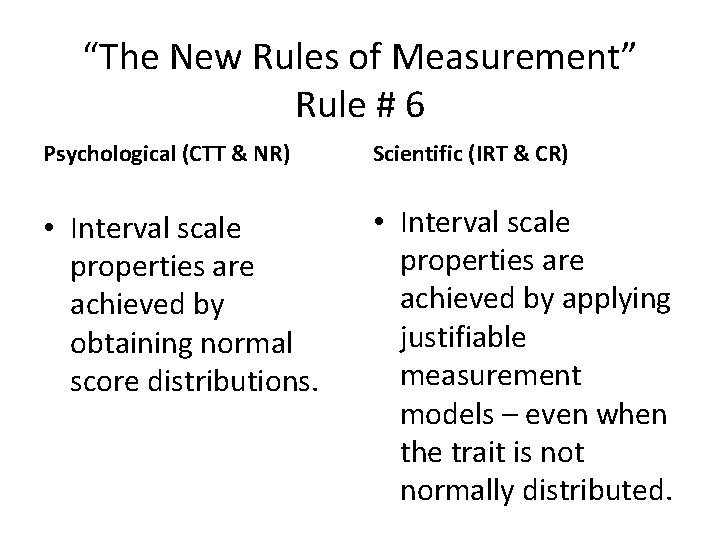

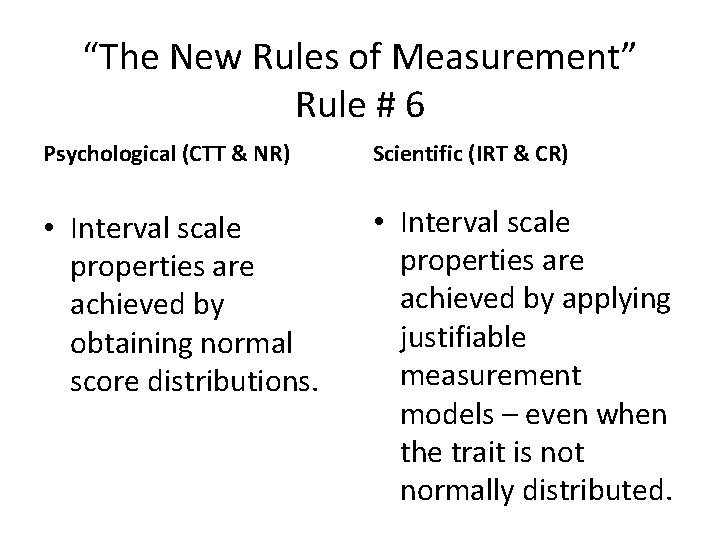

“The New Rules of Measurement” Rule # 6 Psychological (CTT & NR) Scientific (IRT & CR) • Interval scale properties are achieved by obtaining normal score distributions. • Interval scale properties are achieved by applying justifiable measurement models – even when the trait is not normally distributed.

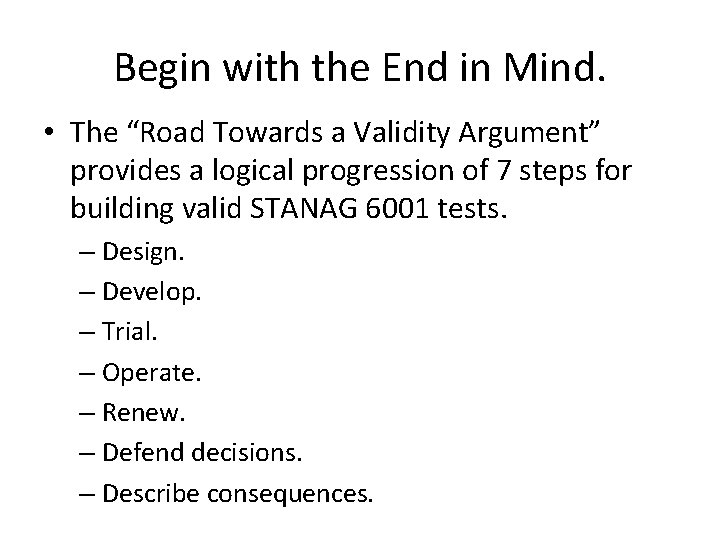

Begin with the End in Mind. • The “Road Towards a Validity Argument” provides a logical progression of 7 steps for building valid STANAG 6001 tests. – Design. – Develop. – Trial. – Operate. – Renew. – Defend decisions. – Describe consequences.

The “Roadmap” reminds us to begin with the end in mind and stay focused on that goal. • Let’s look at how a Criterion-Referenced approach to testing differs from a CTT or NR approach when developing a STANAG 6001 reading test.

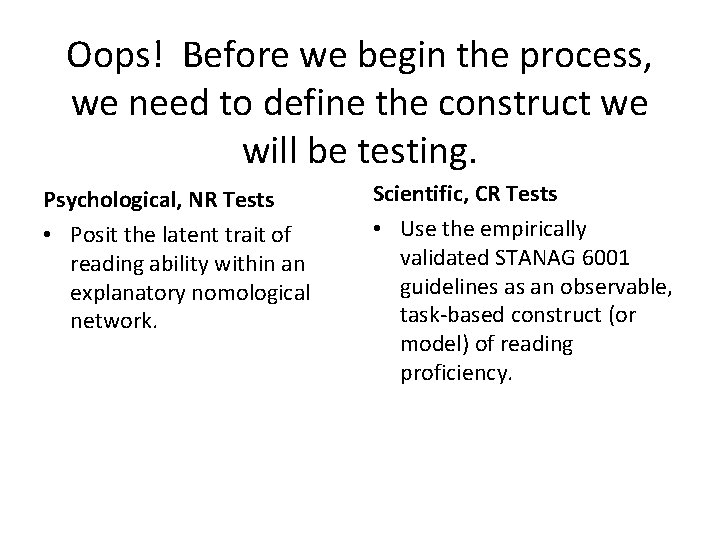

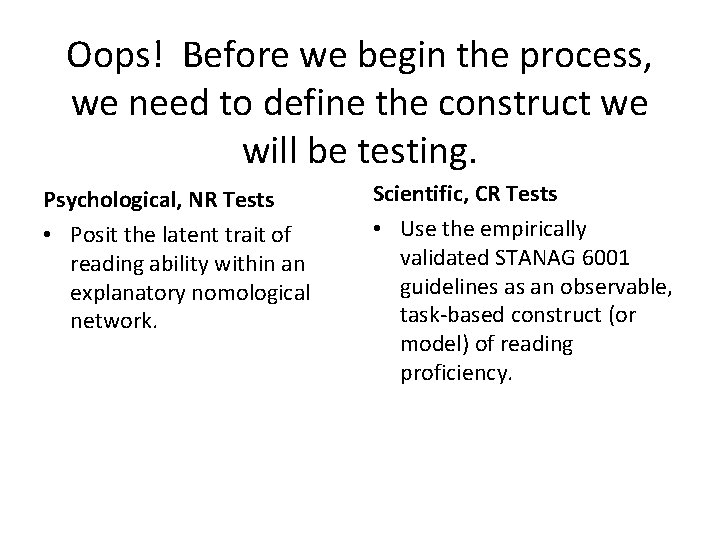

Oops! Before we begin the process, we need to define the construct we will be testing. Psychological, NR Tests • Posit the latent trait of reading ability within an explanatory nomological network. Scientific, CR Tests • Use the empirically validated STANAG 6001 guidelines as an observable, task-based construct (or model) of reading proficiency.

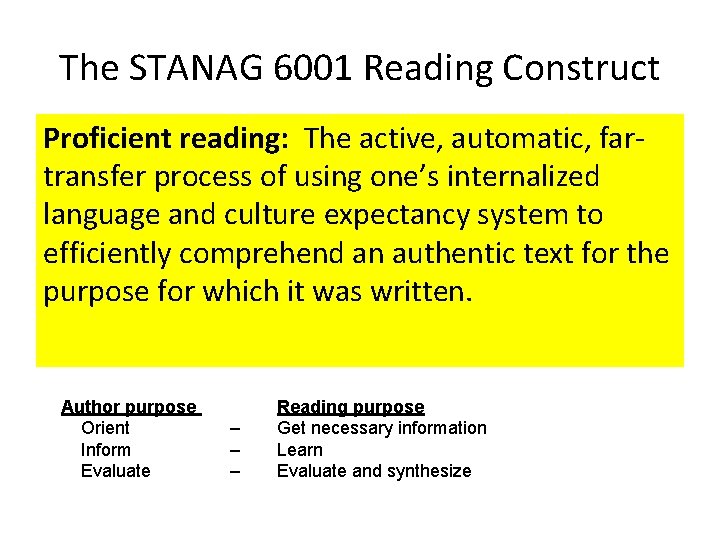

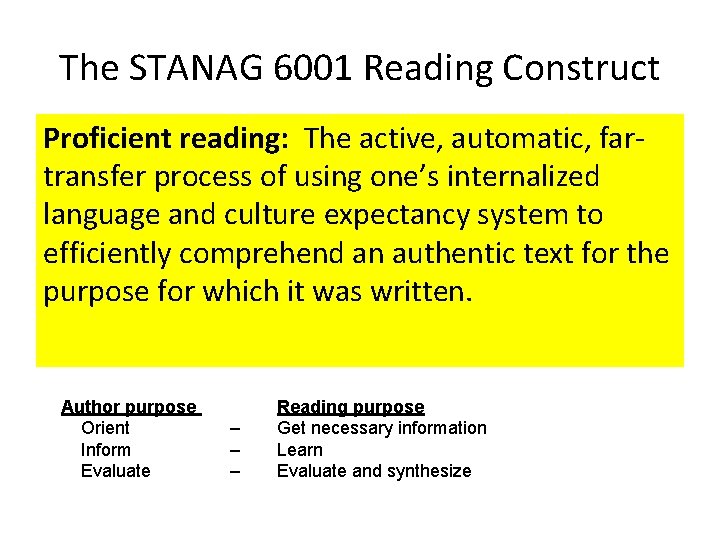

The STANAG 6001 Reading Construct

Proficient reading: The active, automatic, far-transfer process of using one’s internalized language and culture expectancy system to efficiently comprehend an authentic text for the purpose for which it was written.

Author purpose and the matching reading purpose:

- Orient: Get necessary information
- Inform: Learn
- Evaluate: Evaluate and synthesize

Definition of Proficient Reading • Proficient reading is the … – active, – automatic, – far transfer process – of using one’s internalized language and culture expectancy system – to efficiently comprehend – an authentic text – for the purpose for which it was written.

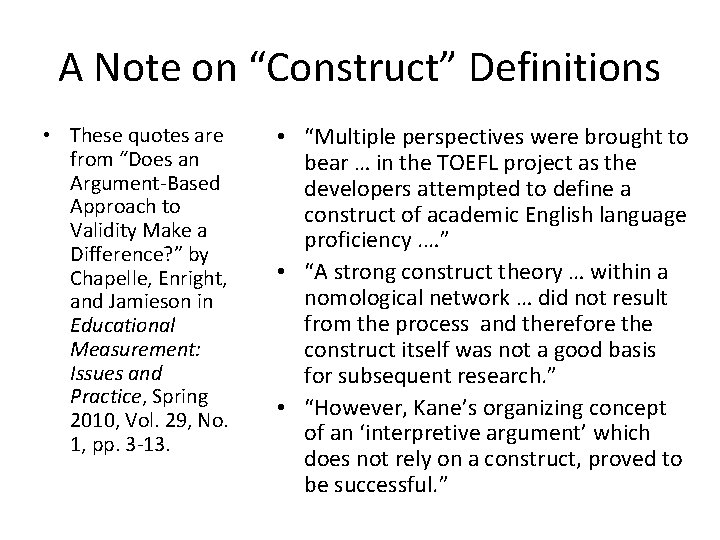

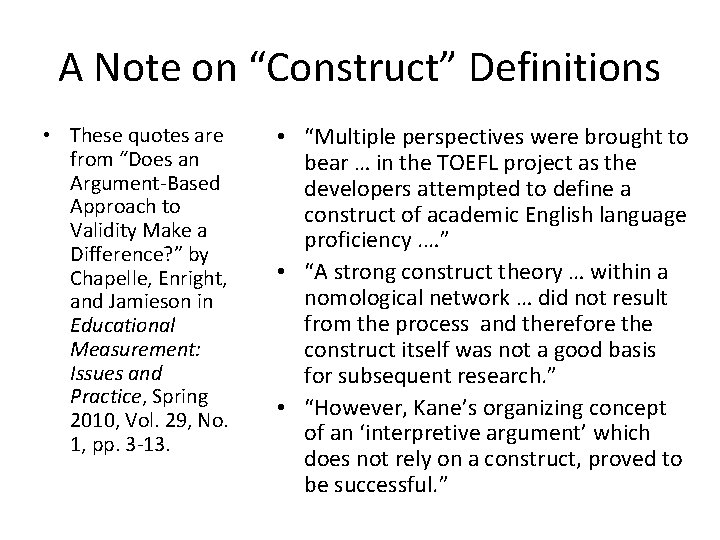

A Note on “Construct” Definitions

- These quotes are from “Does an Argument-Based Approach to Validity Make a Difference?” by Chapelle, Enright, and Jamieson in Educational Measurement: Issues and Practice, Spring 2010, Vol. 29, No. 1, pp. 3-13.
- “Multiple perspectives were brought to bear … in the TOEFL project as the developers attempted to define a construct of academic English language proficiency. …”
- “A strong construct theory … within a nomological network … did not result from the process and therefore the construct itself was not a good basis for subsequent research.”
- “However, Kane’s organizing concept of an ‘interpretive argument’ which does not rely on a construct, proved to be successful.”

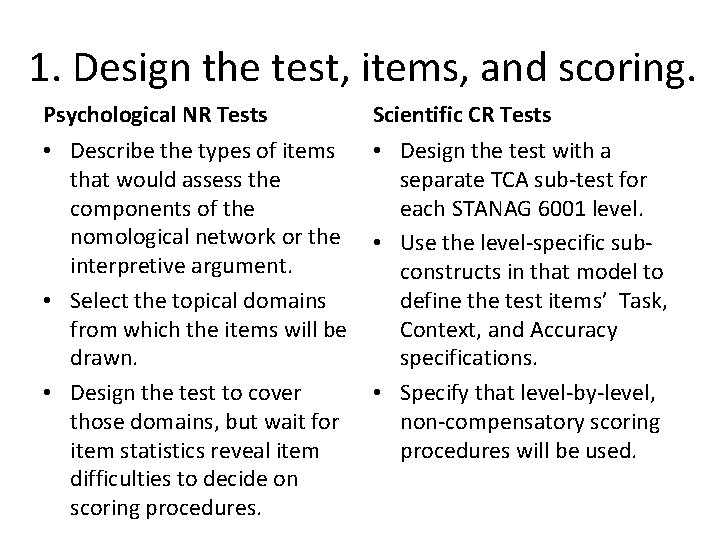

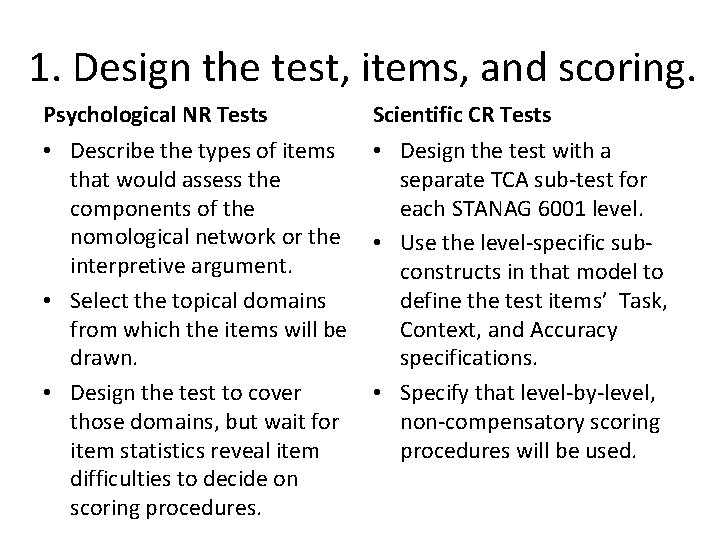

1. Design the test, items, and scoring.

Psychological, NR Tests

- Describe the types of items that would assess the components of the nomological network or the interpretive argument.
- Select the topical domains from which the items will be drawn.
- Design the test to cover those domains, but wait for item statistics to reveal item difficulties before deciding on scoring procedures.

Scientific, CR Tests

- Design the test with a separate TCA sub-test for each STANAG 6001 level.
- Use the level-specific subconstructs in that model to define the test items’ Task, Context, and Accuracy (TCA) specifications.
- Specify that level-by-level, non-compensatory scoring procedures will be used.

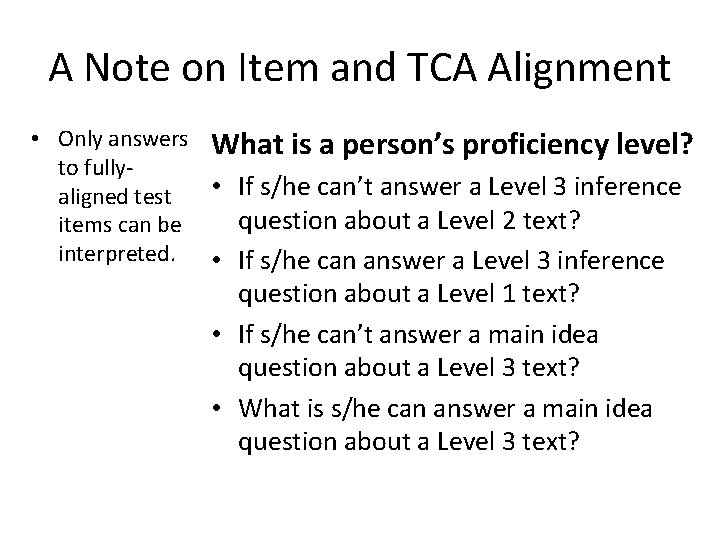

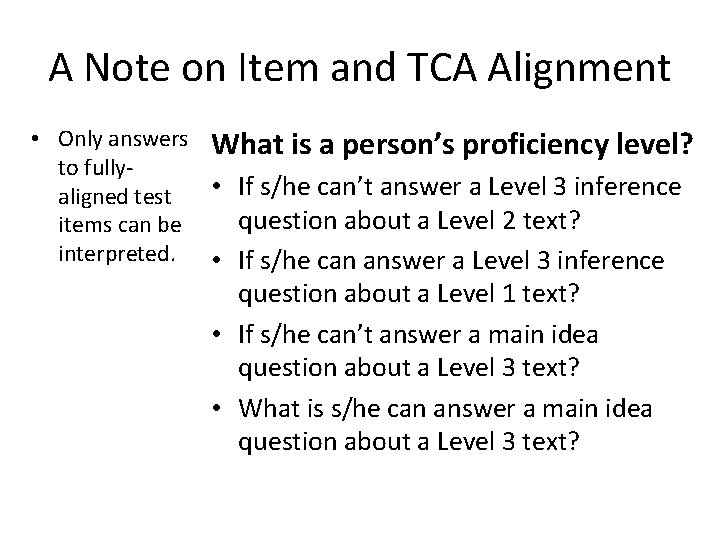

A Note on Item and TCA Alignment

Only answers to fully aligned test items can be interpreted.

What is a person’s proficiency level?

- If s/he can’t answer a Level 3 inference question about a Level 2 text?
- If s/he can answer a Level 3 inference question about a Level 1 text?
- If s/he can’t answer a main idea question about a Level 3 text?
- What if s/he can answer a main idea question about a Level 3 text?

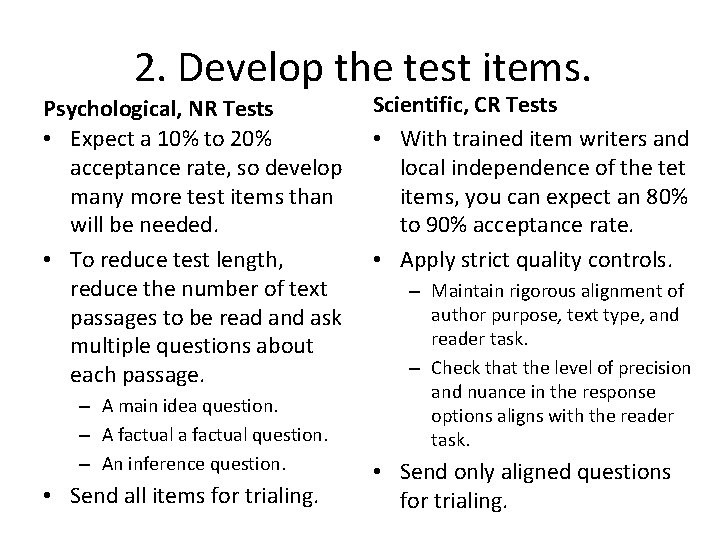

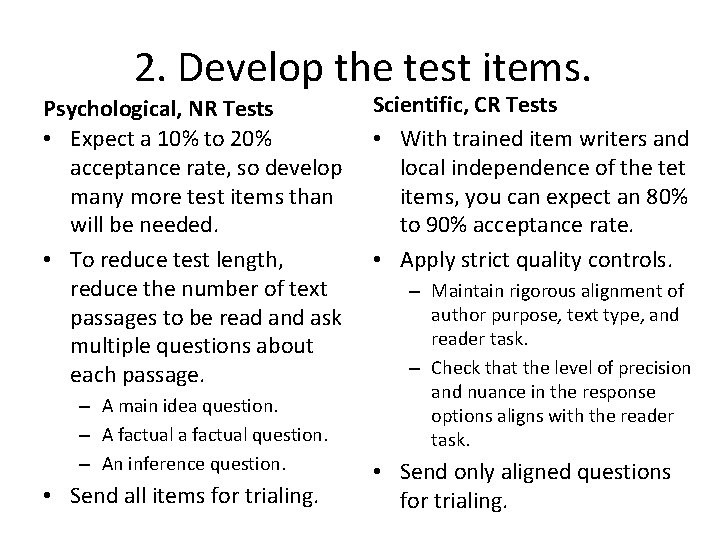

2. Develop the test items.

Psychological, NR Tests

- Expect a 10% to 20% acceptance rate, so develop many more test items than will be needed.
- To reduce test length, reduce the number of text passages to be read and ask multiple questions about each passage.
  - A main idea question.
  - A factual question.
  - An inference question.
- Send all items for trialing.

Scientific, CR Tests

- With trained item writers and local independence of the test items, you can expect an 80% to 90% acceptance rate.
- Apply strict quality controls.
  - Maintain rigorous alignment of author purpose, text type, and reader task.
  - Check that the level of precision and nuance in the response options aligns with the reader task.
- Send only aligned questions for trialing.

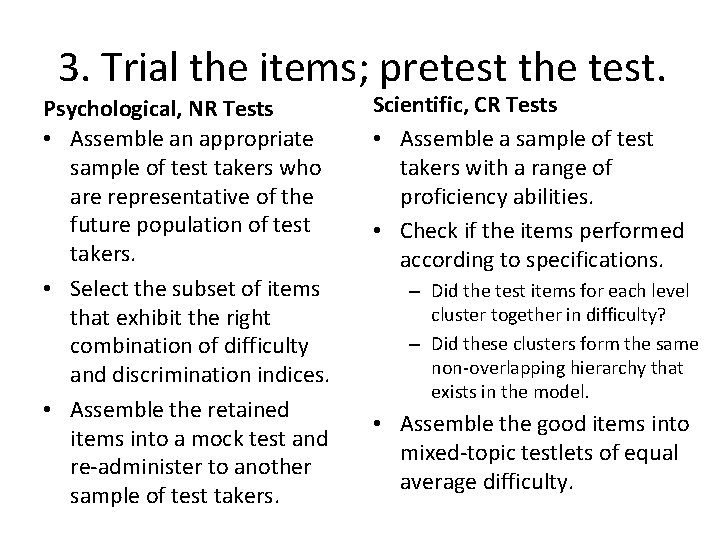

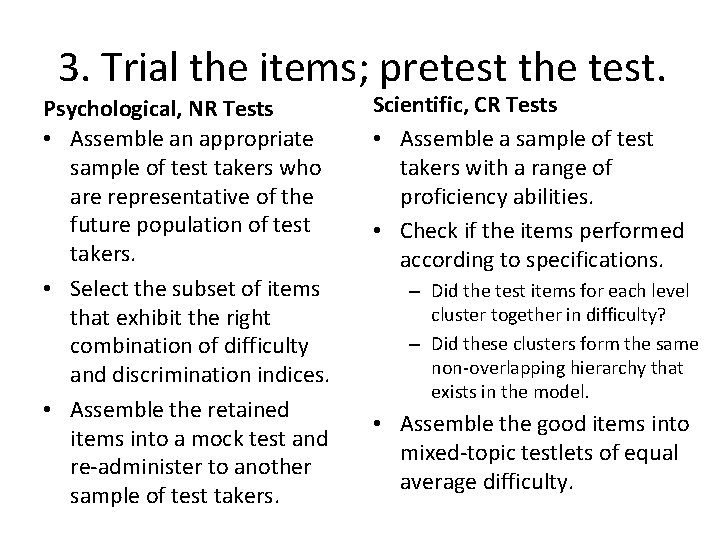

3. Trial the items; pretest the test.

Psychological, NR Tests

- Assemble an appropriate sample of test takers who are representative of the future population of test takers.
- Select the subset of items that exhibit the right combination of difficulty and discrimination indices.
- Assemble the retained items into a mock test and re-administer to another sample of test takers.

Scientific, CR Tests

- Assemble a sample of test takers with a range of proficiency abilities.
- Check if the items performed according to specifications.
  - Did the test items for each level cluster together in difficulty?
  - Did these clusters form the same non-overlapping hierarchy that exists in the model?
- Assemble the good items into mixed-topic testlets of equal average difficulty.
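The two trialing questions for CR tests can be checked mechanically once item difficulties are available. The sketch below is one possible reading: it assumes each trialed item has an estimated difficulty on a common scale (logits here), and it treats "non-overlapping" as "the hardest item at one level is easier than the easiest item at the next level". The function names and difficulty values are hypothetical.

```python
def overlapping_pairs(difficulties_by_level: dict[int, list[float]]) -> list[tuple[int, int]]:
    """Return adjacent level pairs whose item difficulty ranges overlap."""
    levels = sorted(difficulties_by_level)
    overlaps = []
    for lower, upper in zip(levels, levels[1:]):
        hardest_lower = max(difficulties_by_level[lower])
        easiest_upper = min(difficulties_by_level[upper])
        if hardest_lower >= easiest_upper:
            overlaps.append((lower, upper))
    return overlaps


def forms_hierarchy(difficulties_by_level: dict[int, list[float]]) -> bool:
    """True when the level clusters form a strict, non-overlapping hierarchy."""
    return not overlapping_pairs(difficulties_by_level)


# Hypothetical trial results: item difficulties grouped by intended level.
clean_trial = {1: [-2.1, -1.8, -1.6], 2: [-0.2, 0.1, 0.3], 3: [1.6, 1.9, 2.0]}
messy_trial = {1: [-2.0, 0.5], 2: [0.0, 0.4], 3: [1.7, 2.2]}

print(forms_hierarchy(clean_trial), overlapping_pairs(clean_trial))
print(forms_hierarchy(messy_trial), overlapping_pairs(messy_trial))
```

Items that cause an overlap, such as the Level 1 item at 0.5 in the second data set, are candidates for review against their Task, Context, and Accuracy specifications before testlets are assembled.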

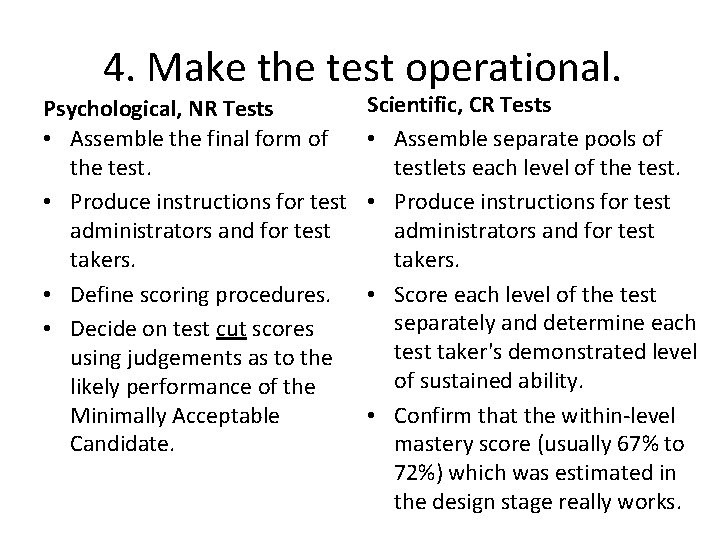

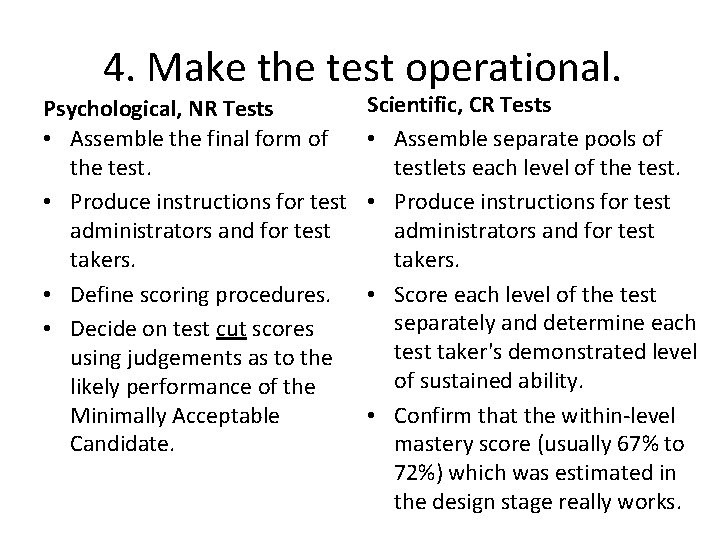

4. Make the test operational.

Psychological, NR Tests

- Assemble the final form of the test.
- Produce instructions for test administrators and for test takers.
- Define scoring procedures.
- Decide on test cut scores using judgements as to the likely performance of the Minimally Acceptable Candidate.

Scientific, CR Tests

- Assemble separate pools of testlets for each level of the test.
- Produce instructions for test administrators and for test takers.
- Score each level of the test separately and determine each test taker's demonstrated level of sustained ability.
- Confirm that the within-level mastery score (usually 67% to 72%), which was estimated in the design stage, really works.
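Level-by-level scoring can be sketched in a few lines. The version below is an assumption-laden illustration rather than an official STANAG 6001 procedure: it uses a mastery threshold of 0.70 (picked from within the 67% to 72% range mentioned above), and it interprets "sustained" as meeting the threshold at a level and at every level below it. The function name and response data are hypothetical.

```python
def sustained_level(responses_by_level: dict[int, list[bool]], mastery: float = 0.70) -> int:
    """Return the highest level sustained, or 0 if Level 1 is not sustained.

    Each level is scored on its own items only (non-compensatory scoring):
    a strong score at one level cannot make up for a weak score at another.
    """
    awarded = 0
    for level in sorted(responses_by_level):
        answers = responses_by_level[level]
        if not answers or sum(answers) / len(answers) < mastery:
            break  # performance is not sustained at this level; stop climbing
        awarded = level
    return awarded


# Hypothetical test taker: 90% at Level 1, 70% at Level 2, 40% at Level 3.
candidate = {
    1: [True] * 9 + [False] * 1,
    2: [True] * 7 + [False] * 3,
    3: [True] * 4 + [False] * 6,
}
print(sustained_level(candidate))
```

Confirming that the mastery score "really works" would then amount to checking, on trial data, that the levels awarded this way agree with independent evidence of each test taker's proficiency.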

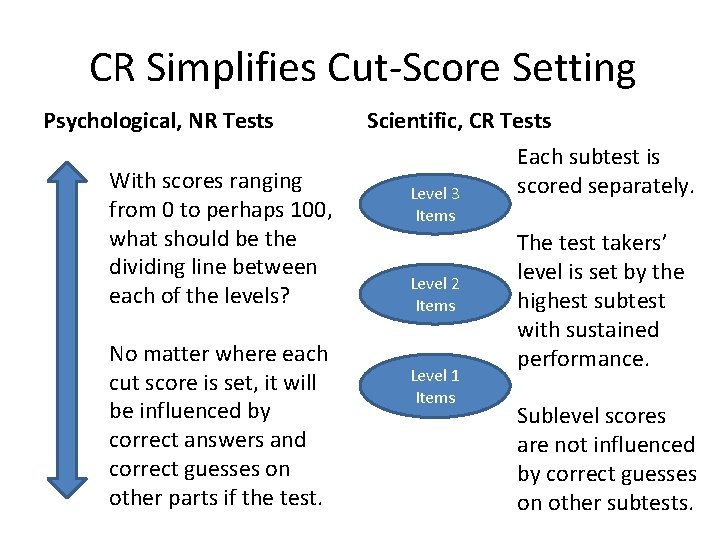

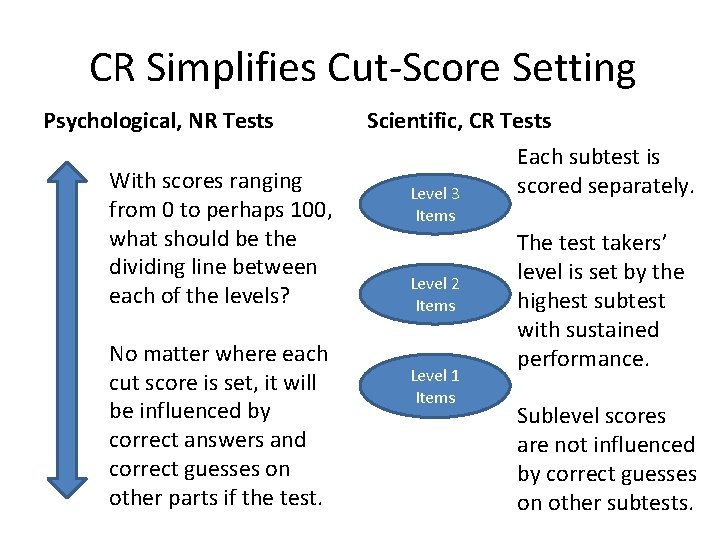

CR Simplifies Cut-Score Setting

Psychological, NR Tests

- With scores ranging from 0 to perhaps 100, what should be the dividing line between each of the levels?
- No matter where each cut score is set, it will be influenced by correct answers and correct guesses on other parts of the test.

Scientific, CR Tests

- The test consists of separate Level 3, Level 2, and Level 1 item sets. Each subtest is scored separately.
- The test taker’s level is set by the highest subtest with sustained performance.
- Sublevel scores are not influenced by correct guesses on other subtests.
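A quick calculation shows how guessing leaks into a single total score. The numbers are assumptions made for illustration: 20 items per level, four-option multiple-choice items (so a 0.25 chance of a correct guess), and 0.90 accuracy on levels the test taker has actually mastered.

```python
def expected_total(items_per_level: dict[int, int], mastered: set[int],
                   p_known: float = 0.90, p_guess: float = 0.25) -> float:
    """Expected number correct when unmastered levels are answered by guessing."""
    total = 0.0
    for level, n_items in items_per_level.items():
        p = p_known if level in mastered else p_guess
        total += n_items * p
    return total


test_layout = {1: 20, 2: 20, 3: 20}  # hypothetical: 20 items at each level

# A solid Level 1 reader who guesses on every Level 2 and Level 3 item.
total = expected_total(test_layout, mastered={1})
from_guessing = expected_total(test_layout, mastered=set()) - test_layout[1] * 0.25

print("Expected total score:", total, "of", sum(test_layout.values()))
print("Points expected from guessing alone:", from_guessing)
```

With a single cut score on the total, those guessed points count toward the decision. With separate subtests, the same test taker's expected Level 2 score stays near the guessing rate, well below any mastery threshold in the 67% to 72% range.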

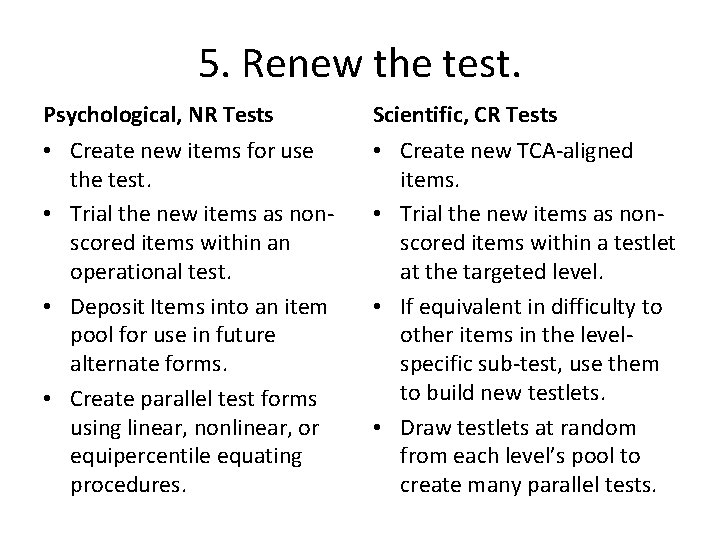

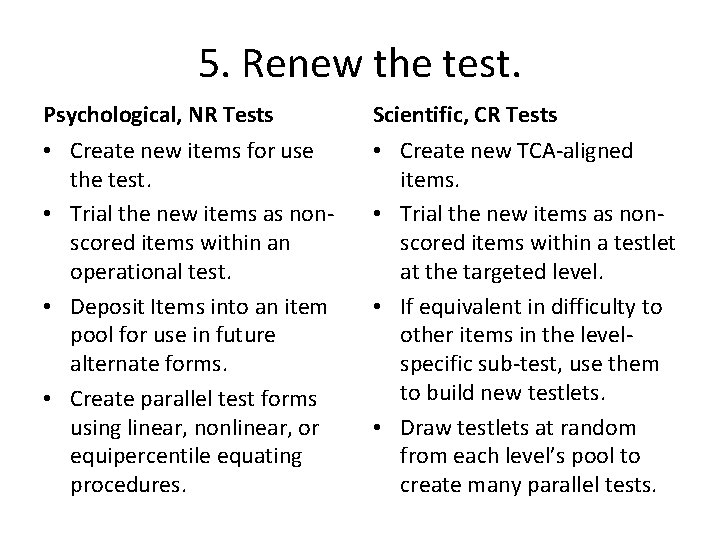

5. Renew the test.

Psychological, NR Tests

- Create new items for use in the test.
- Trial the new items as nonscored items within an operational test.
- Deposit items into an item pool for use in future alternate forms.
- Create parallel test forms using linear, nonlinear, or equipercentile equating procedures.

Scientific, CR Tests

- Create new TCA-aligned items.
- Trial the new items as nonscored items within a testlet at the targeted level.
- If equivalent in difficulty to other items in the level-specific sub-test, use them to build new testlets.
- Draw testlets at random from each level’s pool to create many parallel tests.

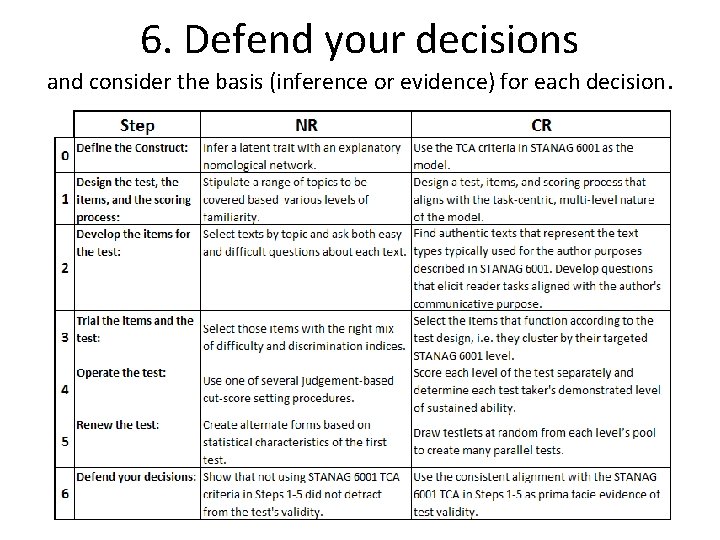

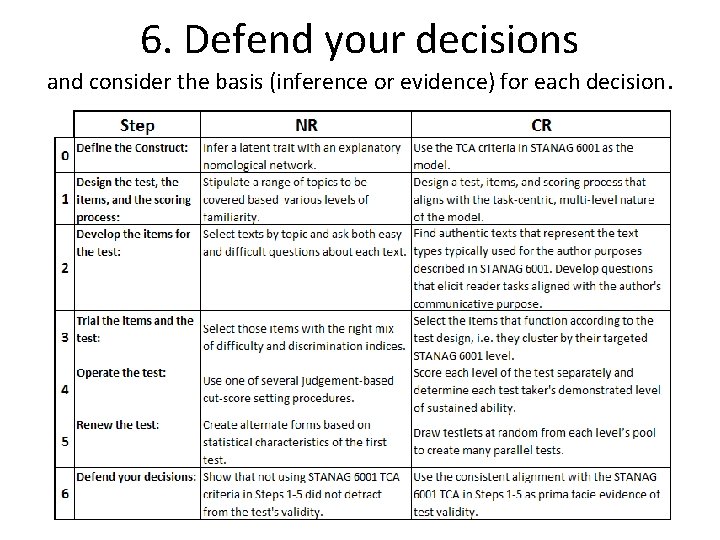

6. Defend your decisions and consider the basis (inference or evidence) for each decision.

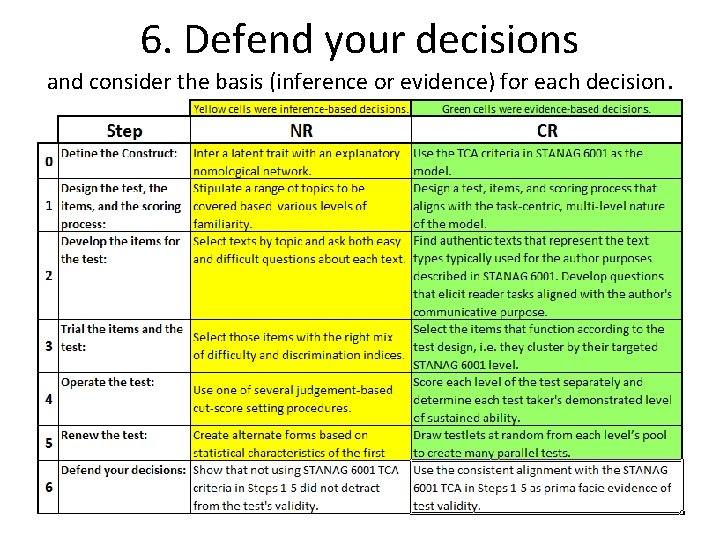

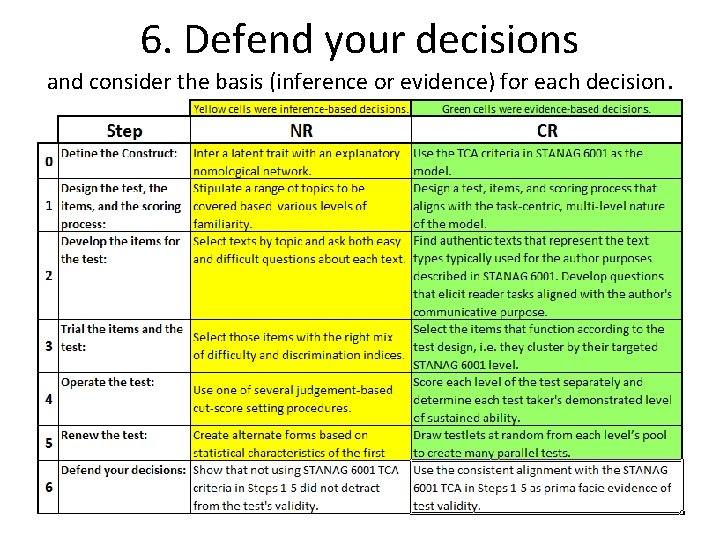

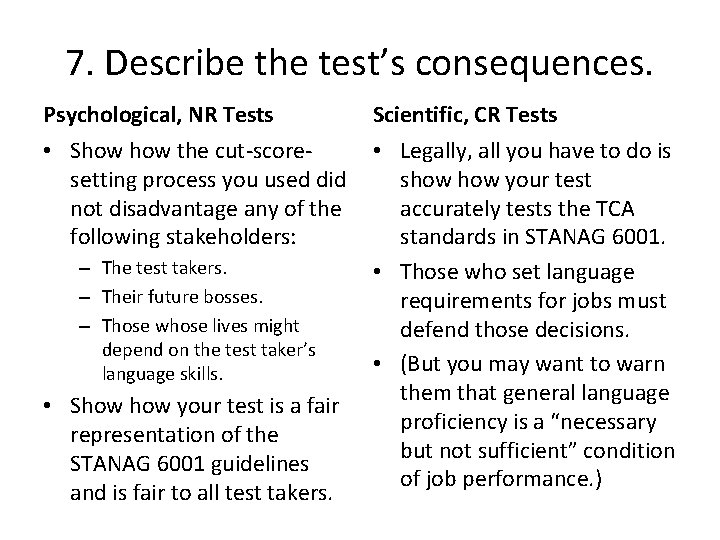

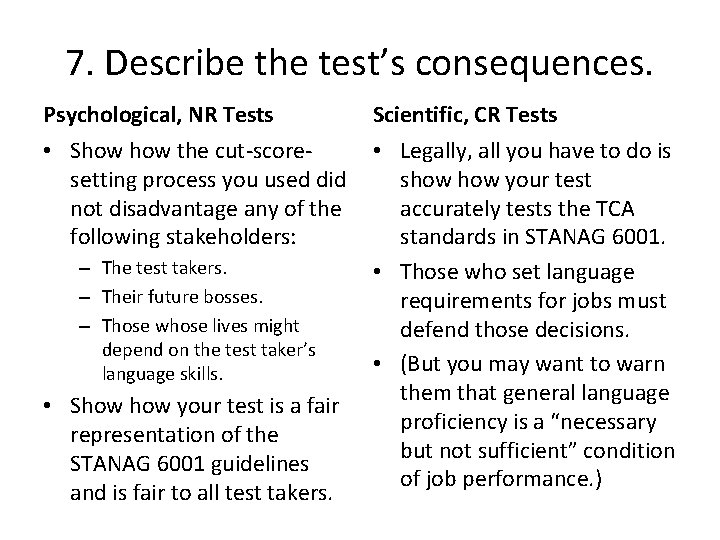

7. Describe the test’s consequences.

Psychological, NR Tests

- Show the cut-score-setting process you used did not disadvantage any of the following stakeholders:
  - The test takers.
  - Their future bosses.
  - Those whose lives might depend on the test taker’s language skills.
- Show your test is a fair representation of the STANAG 6001 guidelines and is fair to all test takers.

Scientific, CR Tests

- Legally, all you have to do is show your test accurately tests the TCA standards in STANAG 6001.
- Those who set language requirements for jobs must defend those decisions.
- (But you may want to warn them that general language proficiency is a “necessary but not sufficient” condition of job performance.)

In Conclusion • When developing language proficiency tests, HOPE is a good companion. • However, HOPE is a lousy substitute for evidence-based decision making. • Criterion-Referenced procedures can provide the evidence that will – Make your language proficiency tests better. – Make your life easier when asked to defend your tests!

Thank you for your interest in the emerging field of Criterion-Referenced Proficiency Testing!