- Slides: 20

Derivatives and Gradients

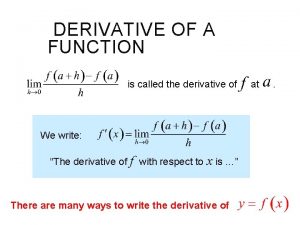

Derivatives
• Derivatives tell us which direction to search for a solution

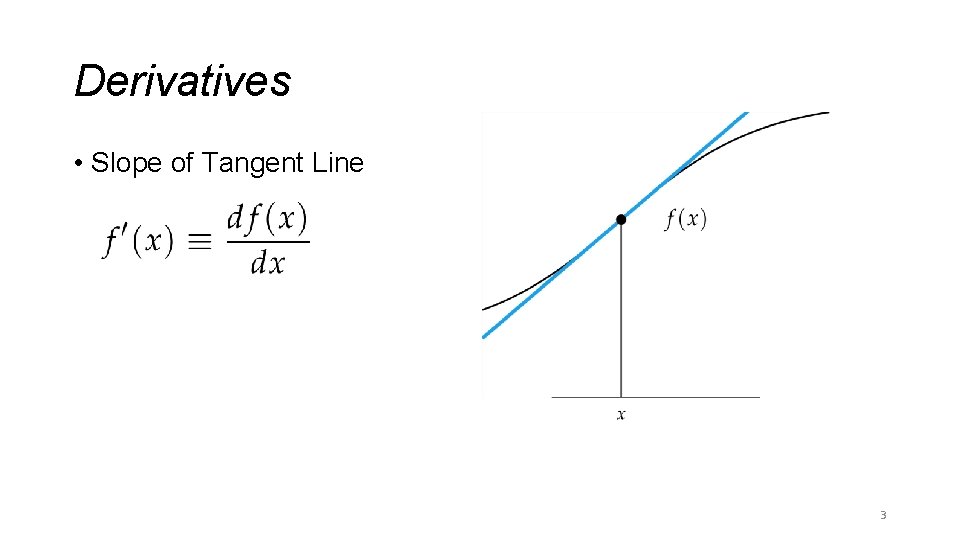

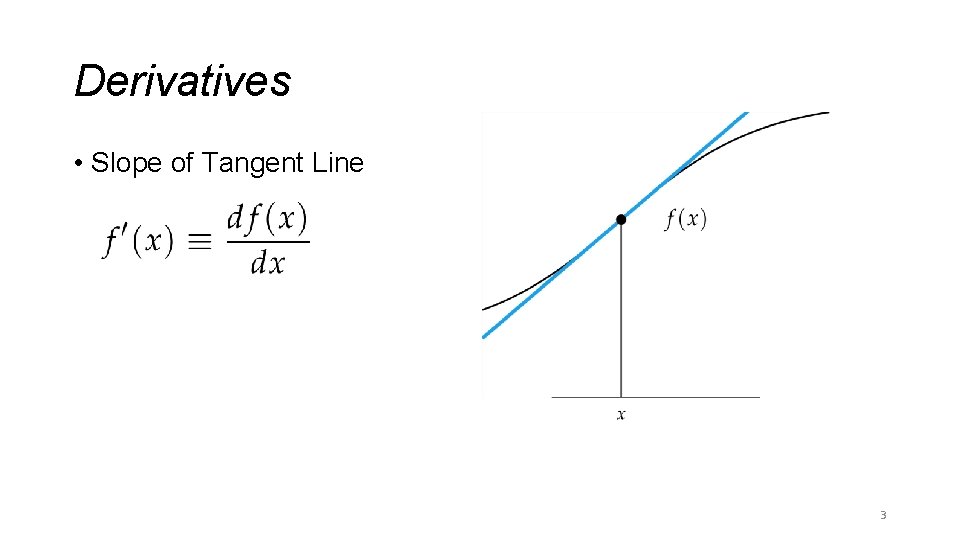

Derivatives
• Slope of the tangent line
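
For reference, the slope of the tangent line at a point is given by the standard limit definition of the derivative. The symbols x and h are notation chosen here and are not taken from the slide:

```latex
f'(x) = \lim_{h \to 0} \frac{f(x + h) - f(x)}{h}
```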

Derivatives

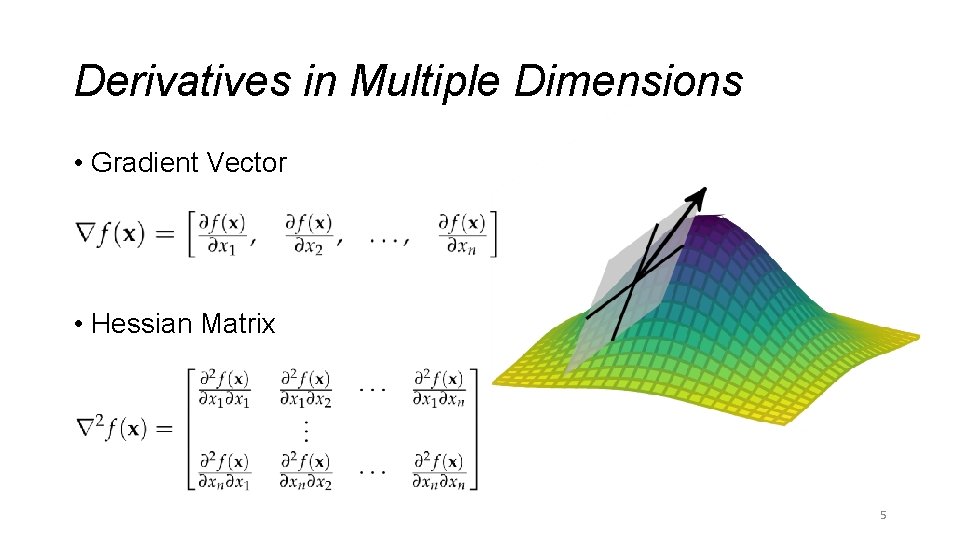

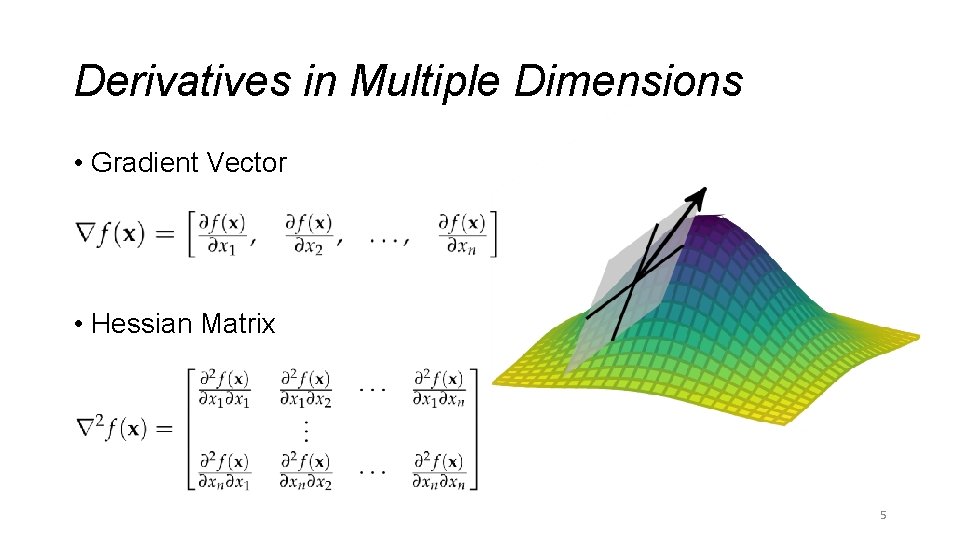

Derivatives in Multiple Dimensions
• Gradient Vector
• Hessian Matrix

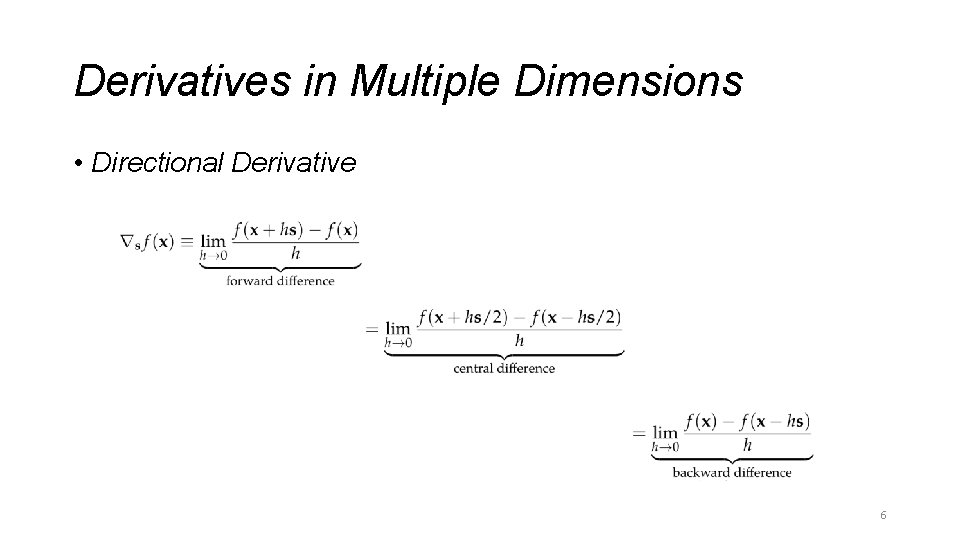

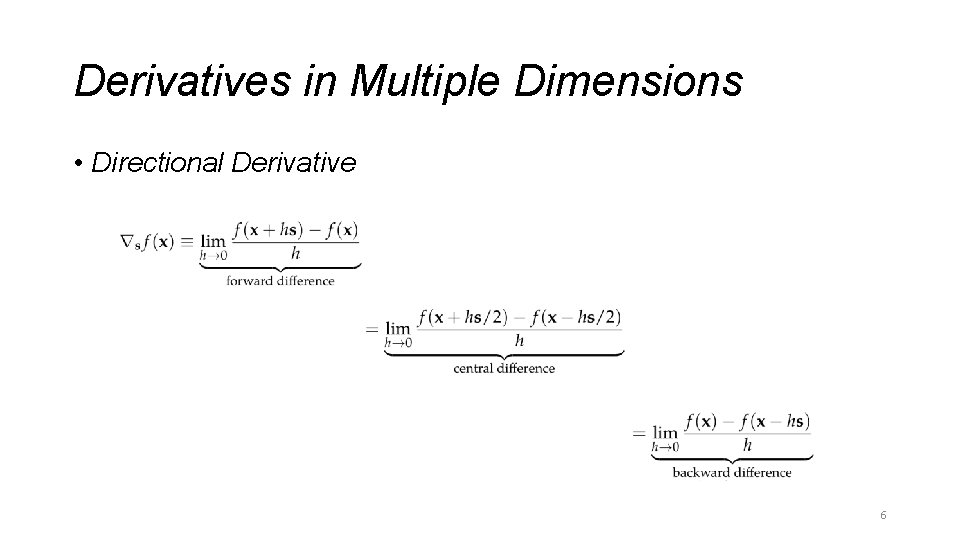
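
As a reminder of what these two objects are, the usual definitions for a function f of n variables can be written as follows. The notation (bold x, components x_1 through x_n) is an assumption made here, since the slide images are not available in this transcript:

```latex
\nabla f(\mathbf{x}) =
\begin{bmatrix}
  \dfrac{\partial f}{\partial x_1} & \dfrac{\partial f}{\partial x_2} & \cdots & \dfrac{\partial f}{\partial x_n}
\end{bmatrix}^{\top}
\qquad
\nabla^2 f(\mathbf{x}) =
\begin{bmatrix}
  \dfrac{\partial^2 f}{\partial x_1 \partial x_1} & \cdots & \dfrac{\partial^2 f}{\partial x_1 \partial x_n} \\
  \vdots & \ddots & \vdots \\
  \dfrac{\partial^2 f}{\partial x_n \partial x_1} & \cdots & \dfrac{\partial^2 f}{\partial x_n \partial x_n}
\end{bmatrix}
```

The gradient collects the first partial derivatives and points in the direction of steepest local increase; the Hessian collects the second partial derivatives and describes local curvature.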

Derivatives in Multiple Dimensions
• Directional Derivative
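
The directional derivative measures the instantaneous rate of change of f when moving from x along a direction s. A common way to write it, with the symbols x, s, and h assumed here for illustration, is:

```latex
\nabla_{\mathbf{s}} f(\mathbf{x})
  = \lim_{h \to 0} \frac{f(\mathbf{x} + h\,\mathbf{s}) - f(\mathbf{x})}{h}
  = \nabla f(\mathbf{x})^{\top} \mathbf{s}
```

The second equality holds when f is differentiable at x, so the directional derivative can be computed from the gradient with a single inner product.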

Numerical Differentiation
• Finite Difference Methods
• Complex Step Method

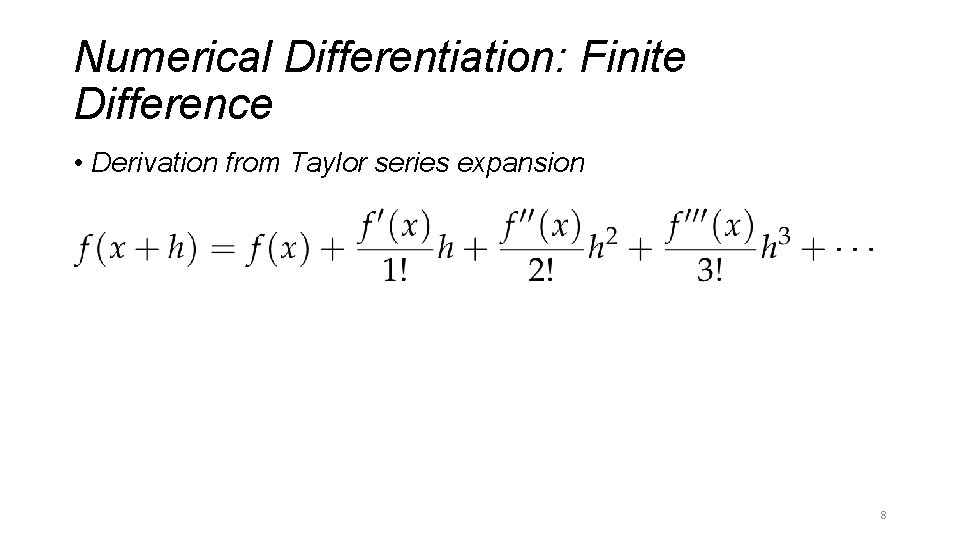

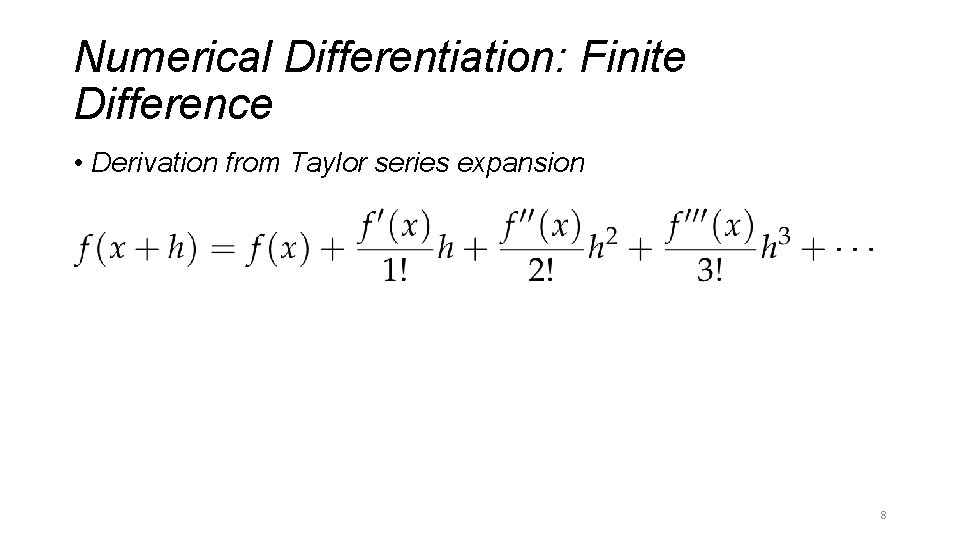

Numerical Differentiation: Finite Difference
• Derivation from Taylor series expansion

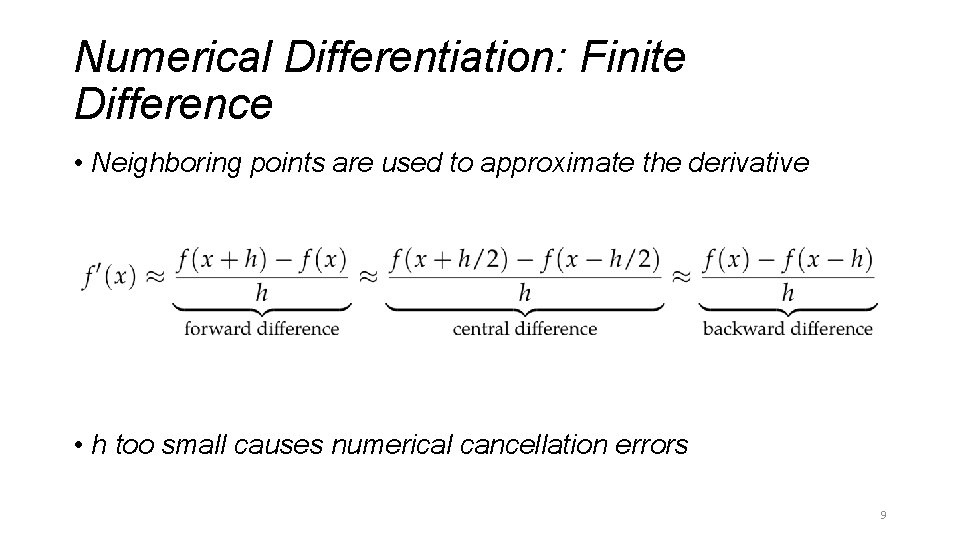

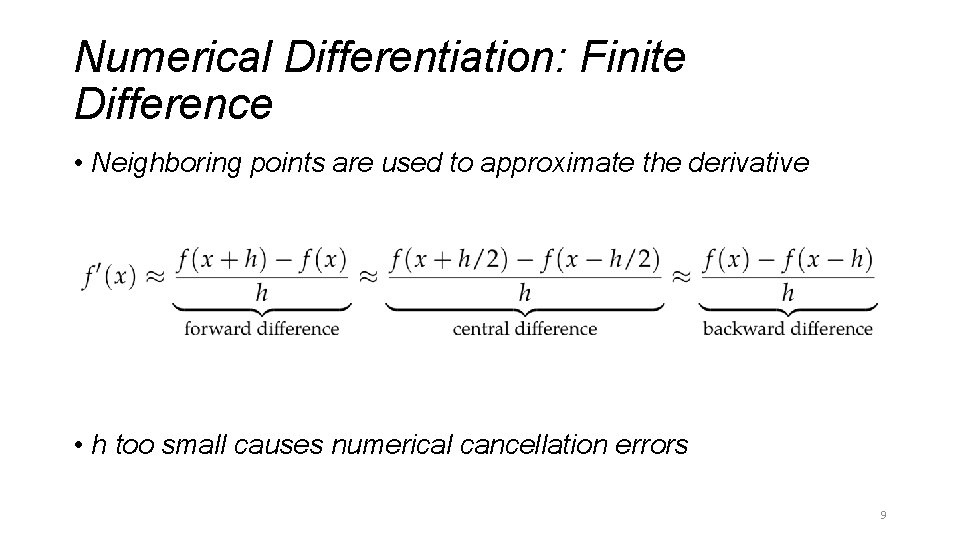

Numerical Differentiation: Finite Difference
• Neighboring points are used to approximate the derivative
• h too small causes numerical cancellation errors

Numerical Differentiation: Finite Difference
• Error Analysis
• Forward Difference: O(h)
• Central Difference: O(h^2)

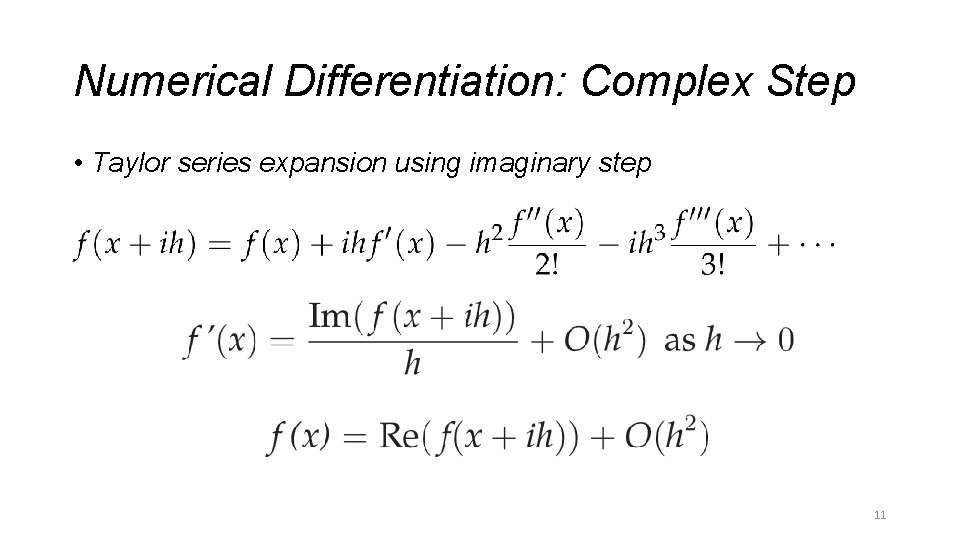

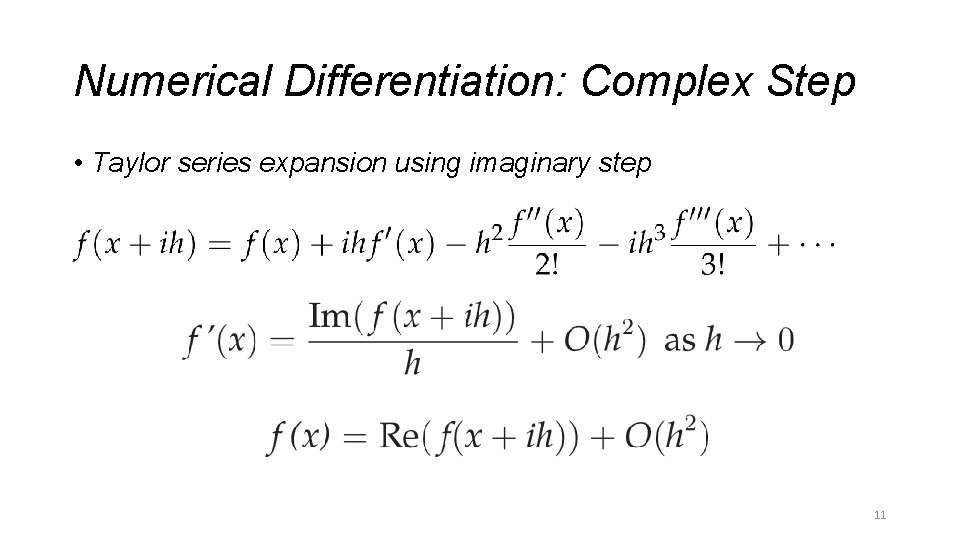
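
A minimal Python sketch of the two finite difference formulas is shown below. The function names, the default step sizes, and the test function sin(x) at x = 0.5 are illustrative choices made here, not taken from the slides:

```python
import math


def forward_difference(f, x, h=1e-8):
    """Forward difference: uses f(x) and f(x + h); truncation error is O(h)."""
    return (f(x + h) - f(x)) / h


def central_difference(f, x, h=1e-5):
    """Central difference: uses f(x - h) and f(x + h); truncation error is O(h^2)."""
    return (f(x + h) - f(x - h)) / (2 * h)


if __name__ == "__main__":
    x = 0.5
    exact = math.cos(x)  # known derivative of sin at x
    # Very small h is included to show subtractive cancellation taking over.
    for h in (1e-1, 1e-2, 1e-4, 1e-8, 1e-15):
        err_fwd = abs(forward_difference(math.sin, x, h) - exact)
        err_ctr = abs(central_difference(math.sin, x, h) - exact)
        print(f"h={h:g}  forward error={err_fwd:.2e}  central error={err_ctr:.2e}")
```

For moderate step sizes the central difference error shrinks faster than the forward difference error as h decreases. Once h approaches machine precision, the subtraction in the numerator loses most of its significant digits and both errors grow again.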

Numerical Differentiation: Complex Step
• Taylor series expansion using imaginary step

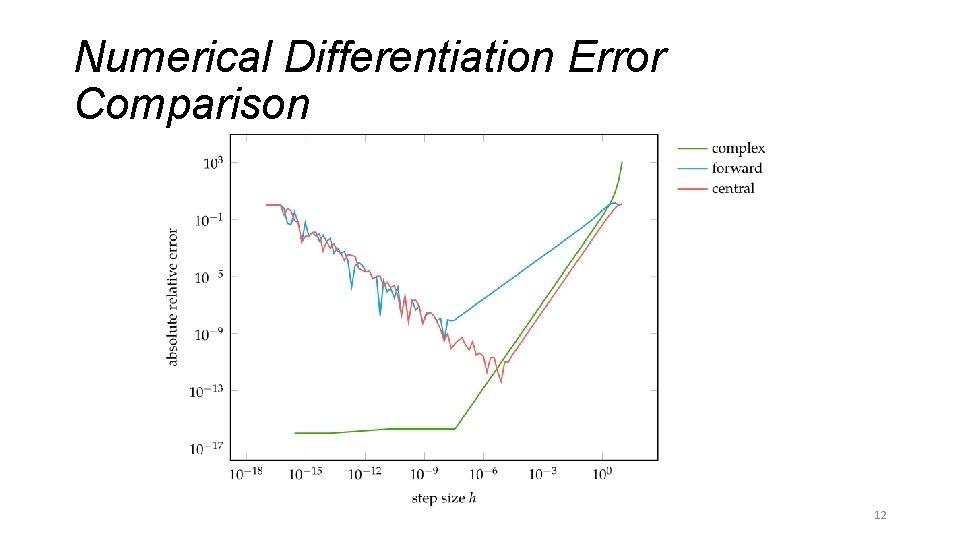

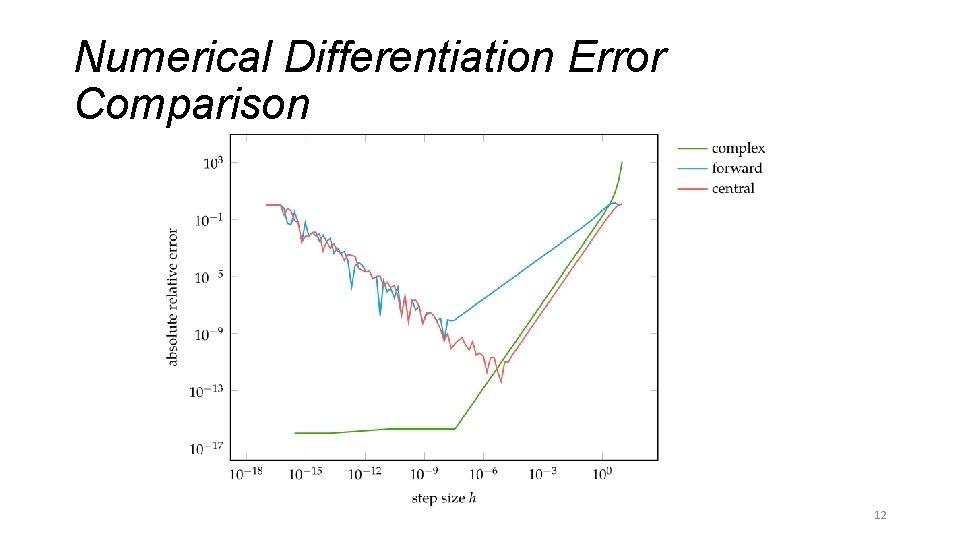
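
Expanding f(x + ih) in a Taylor series and taking the imaginary part gives Im(f(x + ih)) / h = f'(x) + O(h^2), with no subtraction of nearly equal numbers. The sketch below illustrates this in Python; the function name, the default step of 1e-20, and the test function are assumptions made for the example, and the approach only works when f accepts complex arguments:

```python
import cmath
import math


def complex_step_derivative(f, x, h=1e-20):
    """Estimate f'(x) from the imaginary part of f(x + i*h).

    f must be implemented with operations that accept complex inputs.
    """
    return f(complex(x, h)).imag / h


if __name__ == "__main__":
    x = 0.5
    estimate = complex_step_derivative(cmath.sin, x)
    print("complex step estimate:", estimate)
    print("exact derivative:     ", math.cos(x))
```

Because no difference of function values is taken, the step can be made extremely small without triggering the cancellation error that limits finite differences.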

Numerical Differentiation Error Comparison

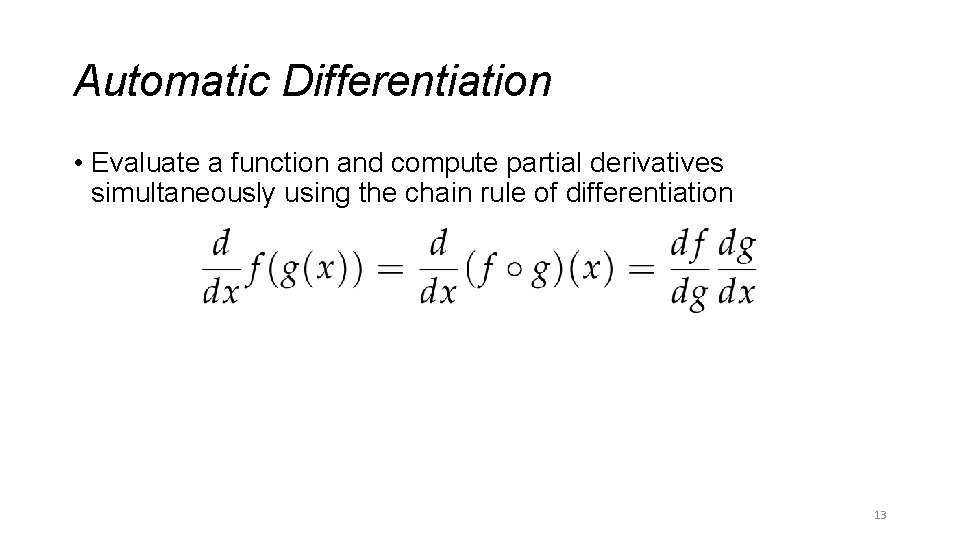

Automatic Differentiation
• Evaluate a function and compute partial derivatives simultaneously using the chain rule of differentiation

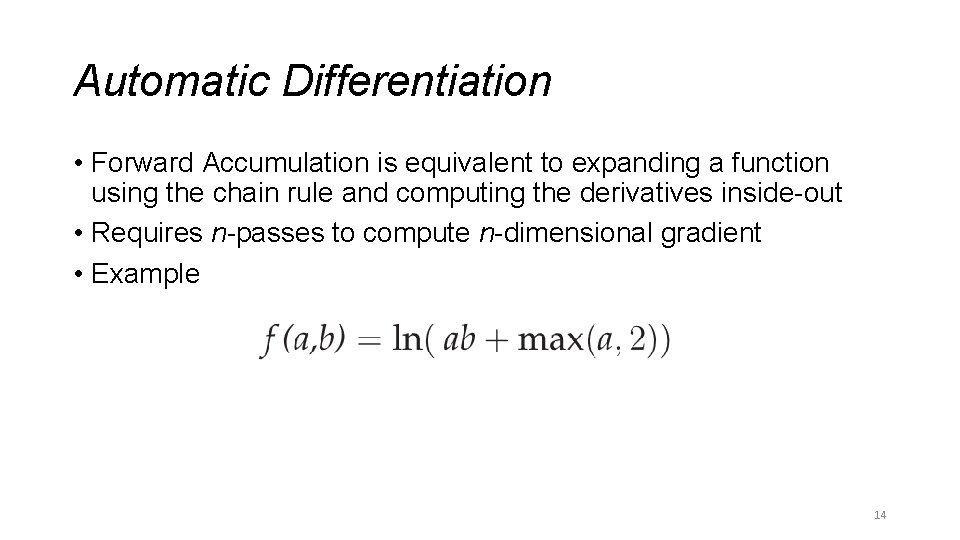

Automatic Differentiation
• Forward accumulation is equivalent to expanding a function using the chain rule and computing the derivatives inside-out
• Requires n passes to compute an n-dimensional gradient
• Example

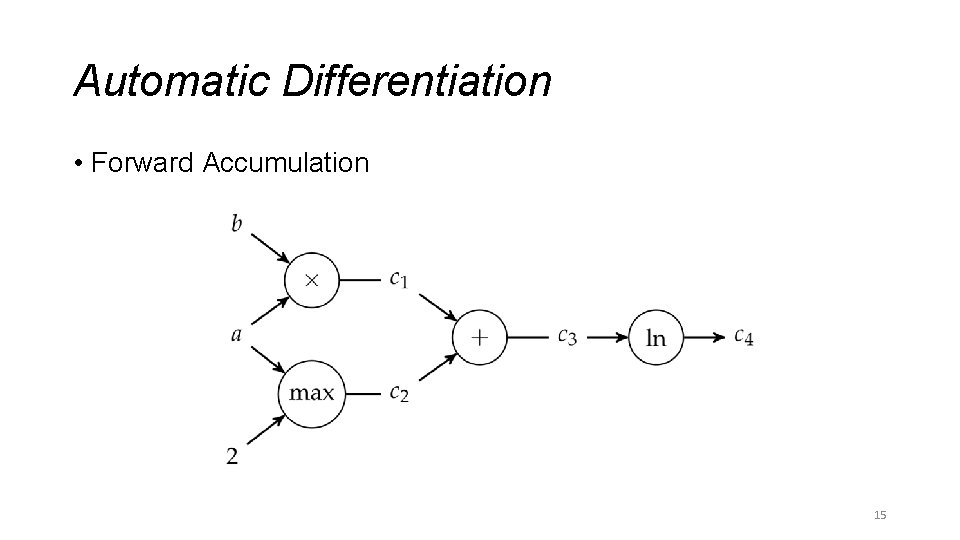

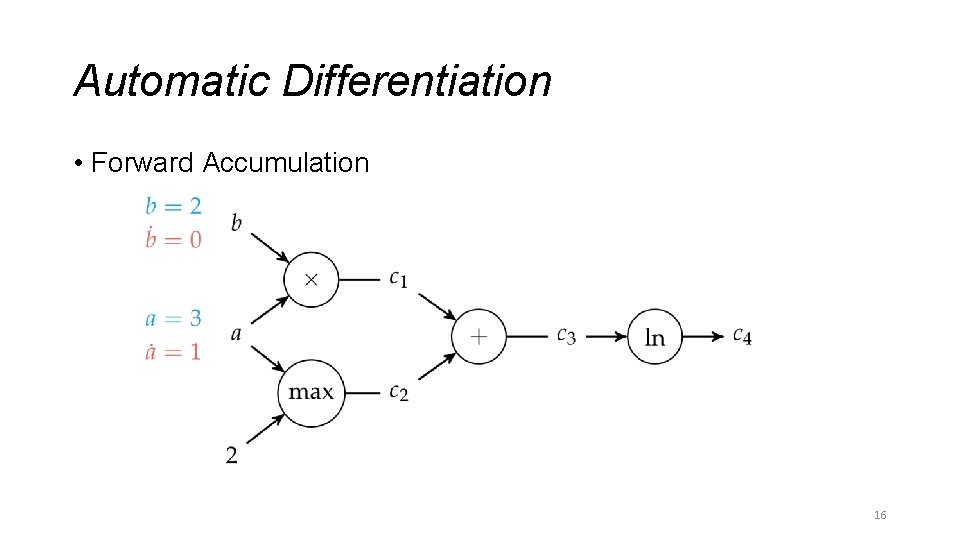

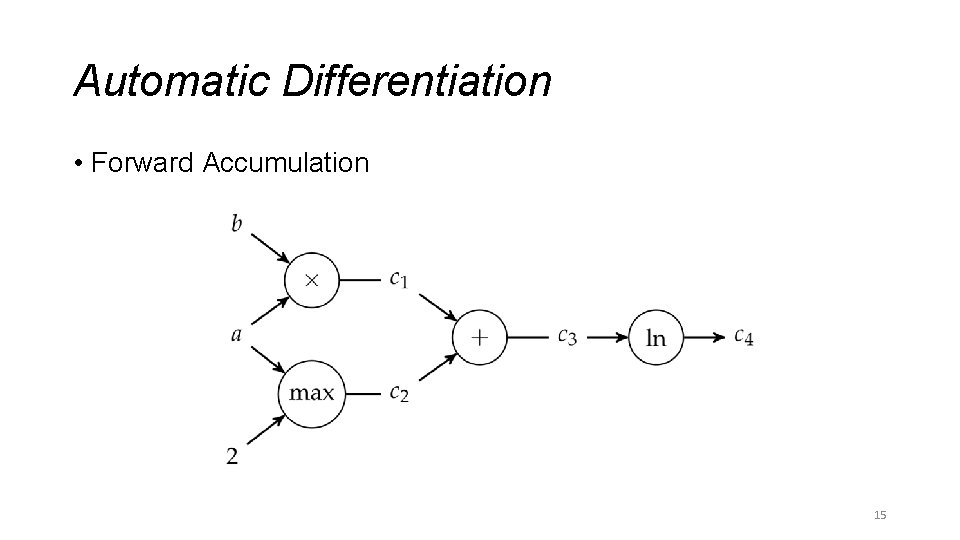
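
One common way to implement forward accumulation is with dual numbers, where every value carries its derivative with respect to one chosen input. The sketch below is a minimal illustration under that assumption; the `Dual` class, the `log` helper, `gradient_forward`, and the example function f(a, b) = a*b + log(a) are all hypothetical names and choices made here, not the example shown in the slide images:

```python
import math
from dataclasses import dataclass


@dataclass
class Dual:
    """A value paired with its derivative with respect to one seeded input."""
    value: float
    deriv: float = 0.0

    def __add__(self, other):
        other = _lift(other)
        return Dual(self.value + other.value, self.deriv + other.deriv)

    __radd__ = __add__

    def __mul__(self, other):
        other = _lift(other)
        # Product rule applied alongside the ordinary multiplication.
        return Dual(self.value * other.value,
                    self.deriv * other.value + self.value * other.deriv)

    __rmul__ = __mul__


def _lift(x):
    """Treat plain numbers as constants (derivative zero)."""
    return x if isinstance(x, Dual) else Dual(float(x), 0.0)


def log(x):
    """Natural log with the chain rule: d/dx log(u) = u' / u."""
    return Dual(math.log(x.value), x.deriv / x.value)


def gradient_forward(f, point):
    """One forward pass per input: seed that input's derivative with 1."""
    grad = []
    for i in range(len(point)):
        args = [Dual(v, 1.0 if j == i else 0.0) for j, v in enumerate(point)]
        grad.append(f(*args).deriv)
    return grad


def f(a, b):
    return a * b + log(a)


if __name__ == "__main__":
    print(gradient_forward(f, [3.0, 2.0]))  # partials are b + 1/a and a
```

The loop in `gradient_forward` makes the cost visible: each input needs its own pass through the function, which is why an n-dimensional gradient takes n passes.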

Automatic Differentiation
• Forward Accumulation

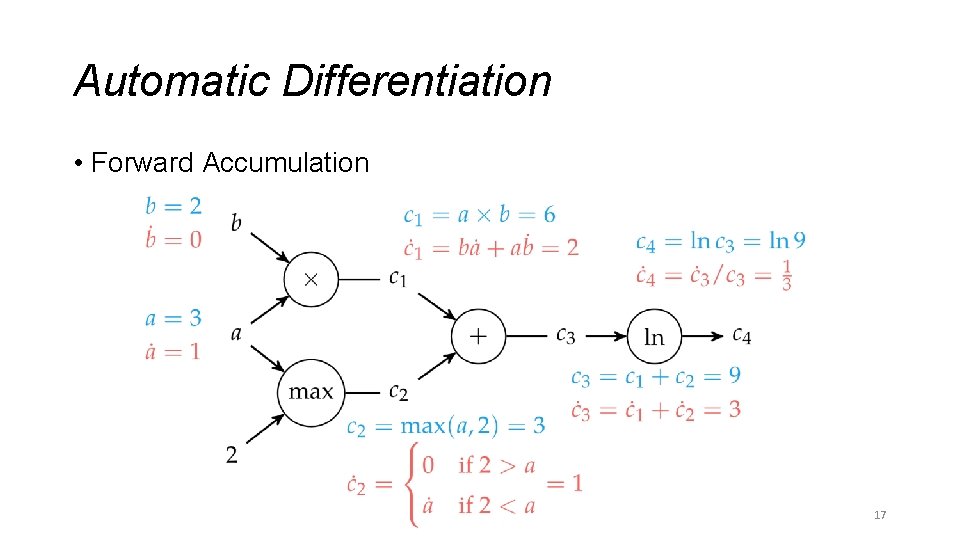

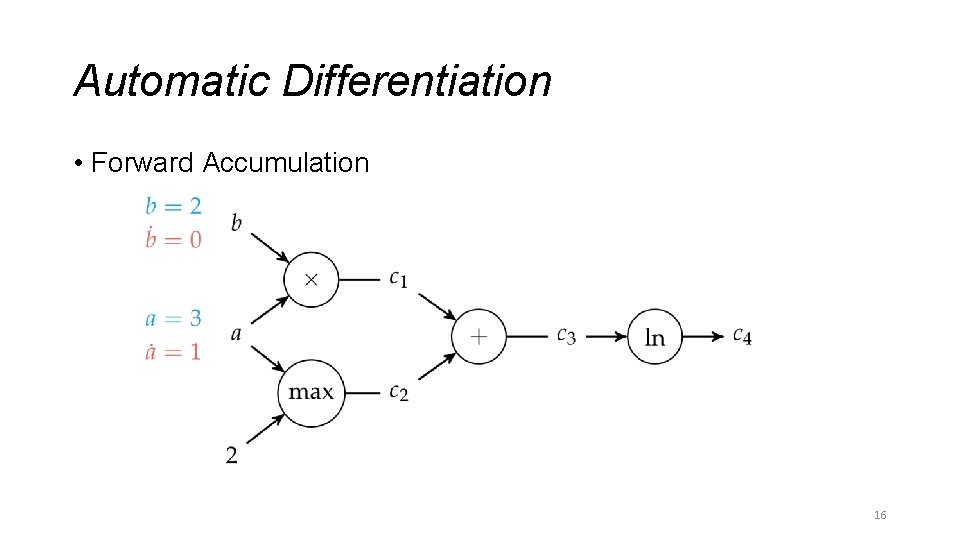

Automatic Differentiation
• Forward Accumulation

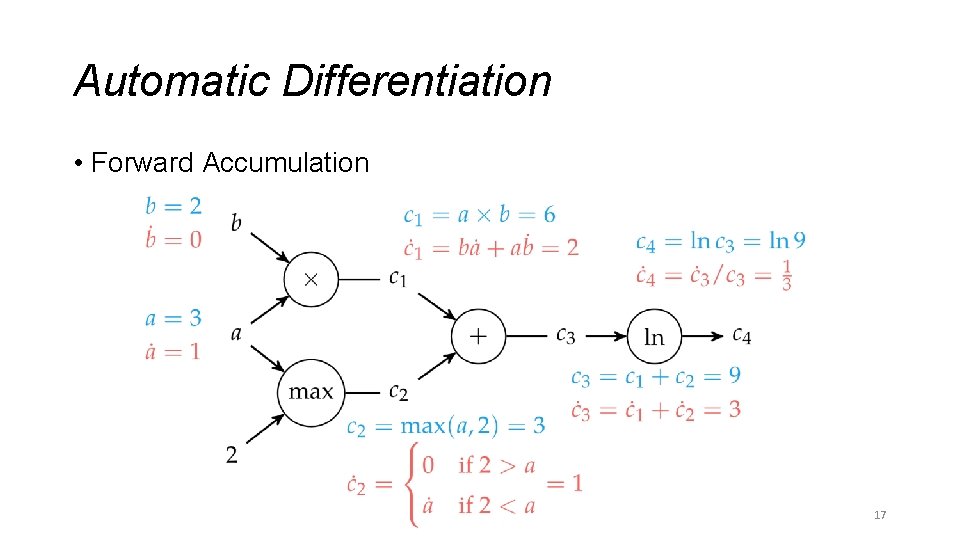

Automatic Differentiation
• Reverse accumulation is performed in a single run using two passes over an n-dimensional function (forward and back)
• Note: this is central to the backpropagation algorithm used to train neural networks
• Many open-source software implementations are available
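
The two passes can be sketched in Python as follows. This is a minimal illustration rather than any particular library's implementation; the `Var` class, the `log` helper, `backward`, and the example function a*b + log(a) are hypothetical names and choices made here:

```python
import math


class Var:
    """A node in the computational graph, recorded during the forward pass."""

    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # tuples of (parent node, local partial derivative)
        self.grad = 0.0         # filled in during the backward pass

    def __add__(self, other):
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))


def log(x):
    return Var(math.log(x.value), ((x, 1.0 / x.value),))


def backward(output):
    """Backward pass: propagate d(output)/d(node) from the output to the inputs."""
    order, seen = [], set()

    def visit(node):
        # Post-order traversal, so each node appears after all of its parents.
        if id(node) in seen:
            return
        seen.add(id(node))
        for parent, _ in node.parents:
            visit(parent)
        order.append(node)

    visit(output)
    output.grad = 1.0
    # Reversed order guarantees a node's gradient is complete before it is used.
    for node in reversed(order):
        for parent, local in node.parents:
            parent.grad += local * node.grad


if __name__ == "__main__":
    a, b = Var(3.0), Var(2.0)
    y = a * b + log(a)   # forward pass builds the graph and computes the value
    backward(y)          # single backward pass yields every partial derivative
    print(y.value, a.grad, b.grad)
```

Unlike forward accumulation, one forward pass plus one backward pass produces the partial derivatives with respect to all inputs at once, at the cost of storing the graph built during the forward pass.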

Summary
• Derivatives are useful in optimization because they provide information about how to change a given point in order to improve the objective function
• For multivariate functions, various derivative-based concepts are useful for directing the search for an optimum, including the gradient, the Hessian, and the directional derivative
• One approach to numerical differentiation includes finite difference approximations

Summary
• The complex step method can eliminate the effect of subtractive cancellation error when taking small steps
• Analytic differentiation methods include forward and reverse accumulation on computational graphs
