Deconstructing Standard 2c
Laura Frizzell, Coastal Plains RESA

- Slides: 18

2c: Use of Data for Program Improvement

Element 2c focuses on the regular and systematic use of data to evaluate and improve the efficacy and effectiveness of programs and unit operations. Performance assessment data are shared and used to support candidate and faculty growth and development. Data-informed changes are initiated to improve the unit and its programs.

2c: Use of Data for Program Improvement, p. 2

Key criteria for meeting the expectations of element 2c:
• The unit systematically and regularly uses data to evaluate the efficacy of courses, programs, and clinical experiences.
• Changes in the unit are discussed and made based on systematic use of data.
• Candidate and faculty data are shared with candidates and faculty to encourage reflection and improvement.
• Faculty have access to data or data systems.

Sub-elements of Standard 2c

(1) The professional education unit regularly and systematically uses data, including candidate and graduate performance information, to evaluate the efficacy of its courses, preparation programs, and clinical experiences.
• 3 AFIs (Areas for Improvement) cited

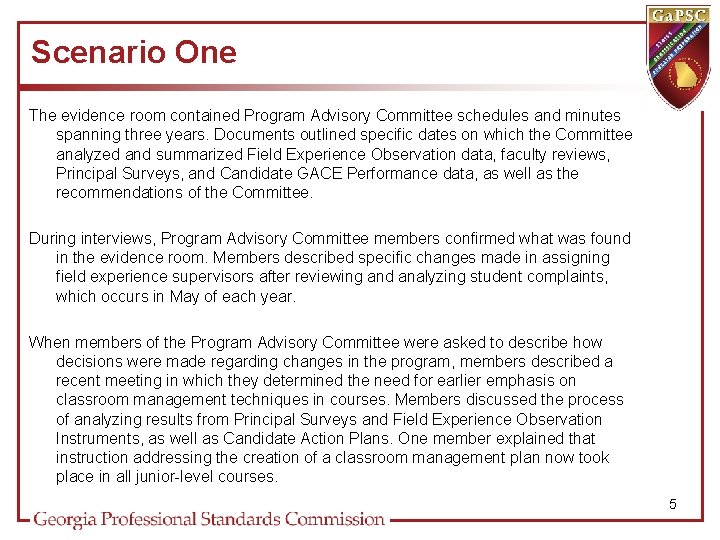

Scenario One

The evidence room contained Program Advisory Committee schedules and minutes spanning three years. The documents listed the specific dates on which the Committee analyzed and summarized Field Experience Observation data, faculty reviews, Principal Surveys, and Candidate GACE Performance data, along with the Committee's resulting recommendations. During interviews, Program Advisory Committee members confirmed what was found in the evidence room. Members described specific changes made in assigning field experience supervisors after the annual May review and analysis of student complaints. When asked how decisions about program changes were made, members described a recent meeting at which they determined that courses needed to emphasize classroom management techniques earlier. Members discussed the process of analyzing results from Principal Surveys, Field Experience Observation Instruments, and Candidate Action Plans. One member explained that instruction on creating a classroom management plan now takes place in all junior-level courses.

List of Evidence (Scenario One)
• Analysis of Candidate Action Plans
• Analysis of Field Experience Observation Instruments
• Analysis of Principal Surveys
• Department Meeting Minutes (9/5/11)
• Department Meeting Minutes (10/2/11)
• Department Meeting Minutes (11/4/11)
• Department Meeting Minutes (12/1/11)
• Faculty Assessment Tables
• Program Advisory Committee Meeting Minutes (5/24/11)
• Program Advisory Committee Meeting Minutes (11/15/11)

Unacceptable, Acceptable, or Target?

Sub-elements of Standard 2c

(2) The professional education unit analyzes preparation program evaluation and performance assessment data to initiate changes in the program and professional unit operations.
• 2 AFIs cited

Scenario Two

The evidence room contained descriptions of the roles and responsibilities of unit faculty and P-12 partners in administering and evaluating the unit's assessment system, but interviewees were unable to articulate their roles. The evidence room also housed three years of aggregated data from the Field Experience Observation Instrument. These data indicated that candidates struggled to incorporate technology. When faculty and clinical supervisors were asked about these data during interviews, none of them appeared to be aware of any resulting changes to courses. When asked in interviews how decisions about changes in curriculum or assessment were made, members of the Program Advisory Committee struggled to answer.

List of Evidence (Scenario Two)
• Field Experience Observation Data (3 years)
• Field Experience Observation Form
• Program Advisory Committee Minutes (2/25/11)
• Roles and Responsibilities of Stakeholders

Unacceptable, Acceptable, or Target?

Sub-elements of Standard 2c

(3) Faculty have access to candidate assessment data and/or data systems.
• 0 AFIs cited

Scenario Three

The Institutional Report described the unit's assessment system in detail. It included dates for completing assessments and the roles of faculty, candidates, and other stakeholders in reviewing specific assessment data. During interviews, faculty and clinical supervisors articulated the changes made to processes and courses as a result of data reviews. In interviews, current and past candidates discussed their work on Student Study Teams. They described the process of analyzing results from faculty members' evaluations of candidate performance over the past three years. These analyses revealed a weakness in candidates' use of technology, and candidates had the opportunity to suggest specific ways to improve in this area. One resulting improvement was a new technology assessment, which is now completed during a pedagogy course (EDUC 3212). In interviews, faculty described the value of the training on the Data Collection System and the Candidate Data Review. They stated that they now better understand how their work fits into the bigger picture of candidate assessment.

List of Evidence (Scenario Three)
• Minutes from Faculty Training: Candidate Data Review (1/25/11)
• Minutes from Faculty Training: Data Collection System (3/30/11)
• Student Study Team Groups (List)
• Student Study Team Minutes (5/18/10)
• Student Study Team Minutes (5/20/11)
• Syllabus: EDUC 3212 (Pedagogy Methods)
• Teacher Education Program Timeline for Assessment (2010-2011)
• Teacher Education Program Timeline for Assessment (2011-2012)
• Technology Assessment (completed in EDUC 3212)

Unacceptable, Acceptable, or Target?

Sub-elements of Standard 2c

(4) Candidate assessment data are regularly shared with candidates and faculty to help them reflect on and improve their performance and preparation programs.
• 0 AFIs cited

Scenario Four

The evidence room contained minutes from the most recent Program Advisory Committee meeting. The minutes revealed that the current sequence of coursework did not serve candidates well in the field and that a different sequence would better support their field experiences. All stakeholders had been made aware of the concerns, and the process for changing the order of coursework had begun. The evidence room also contained minutes from a department meeting held the previous year showing that GACE data had been examined. ECE candidates consistently struggled to pass the language arts/social studies portion of the test, and an analysis of the sub-element data identified social studies as the area of concern. The social studies methods courses were then revised to align more closely with the GACE frameworks. During interviews, current and previous candidates described how their performance assessments were shared with them in End-of-Semester Conferences.

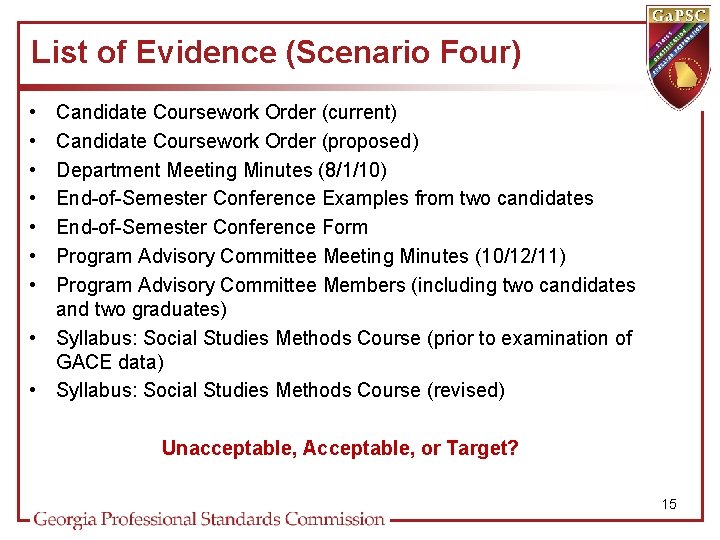

List of Evidence (Scenario Four)
• Candidate Coursework Order (current)
• Candidate Coursework Order (proposed)
• Department Meeting Minutes (8/1/10)
• End-of-Semester Conference Examples (from two candidates)
• End-of-Semester Conference Form
• Program Advisory Committee Meeting Minutes (10/12/11)
• Program Advisory Committee Members (including two candidates and two graduates)
• Syllabus: Social Studies Methods Course (prior to examination of GACE data)
• Syllabus: Social Studies Methods Course (revised)

Unacceptable, Acceptable, or Target?

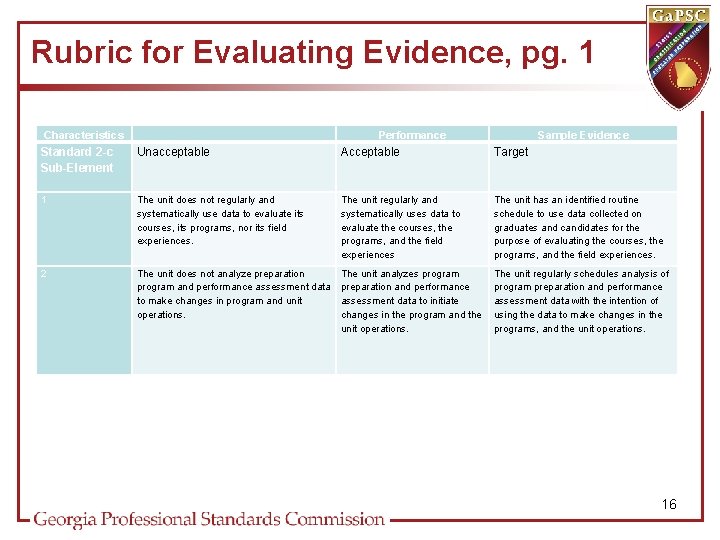

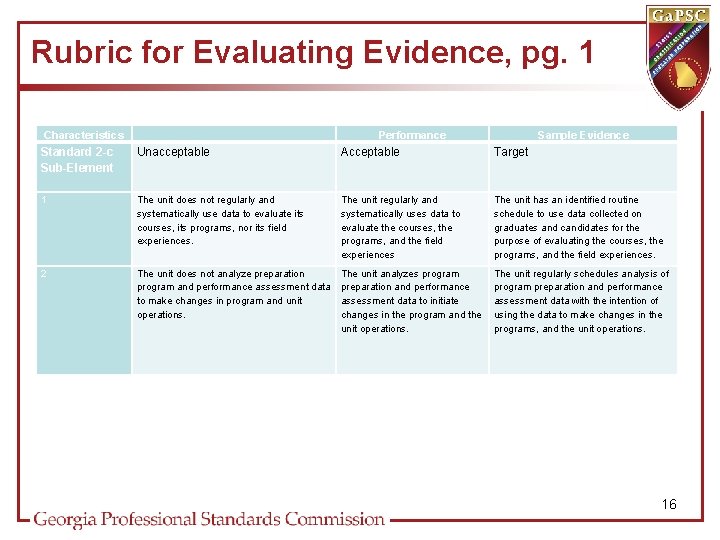

Rubric for Evaluating Evidence, pg. 1

| Standard 2c Sub-element (Characteristics) | Unacceptable | Acceptable | Target | Sample Evidence |
|---|---|---|---|---|
| 1 | The unit does not regularly and systematically use data to evaluate its courses, programs, or field experiences. | The unit regularly and systematically uses data to evaluate the courses, the programs, and the field experiences. | The unit has an identified routine schedule for using data collected on graduates and candidates to evaluate the courses, the programs, and the field experiences. | |
| 2 | The unit does not analyze preparation program and performance assessment data to make changes in program and unit operations. | The unit analyzes preparation program and performance assessment data to initiate changes in the program and the unit operations. | The unit regularly schedules analysis of preparation program and performance assessment data with the intention of using the data to make changes in the programs and the unit operations. | |

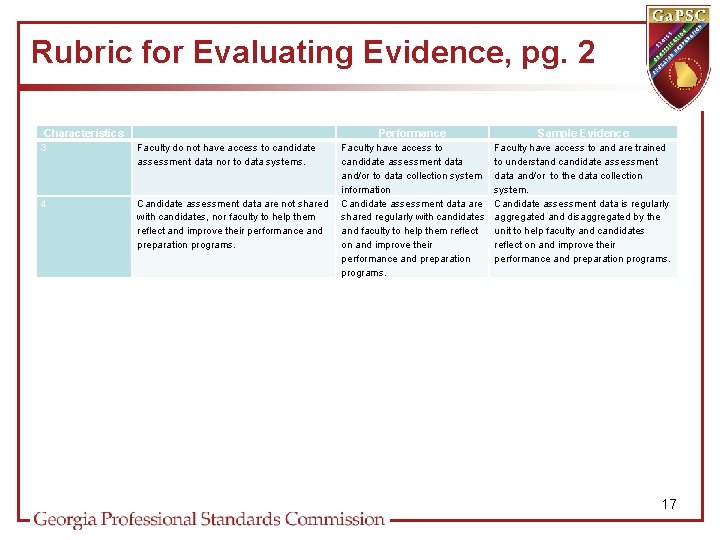

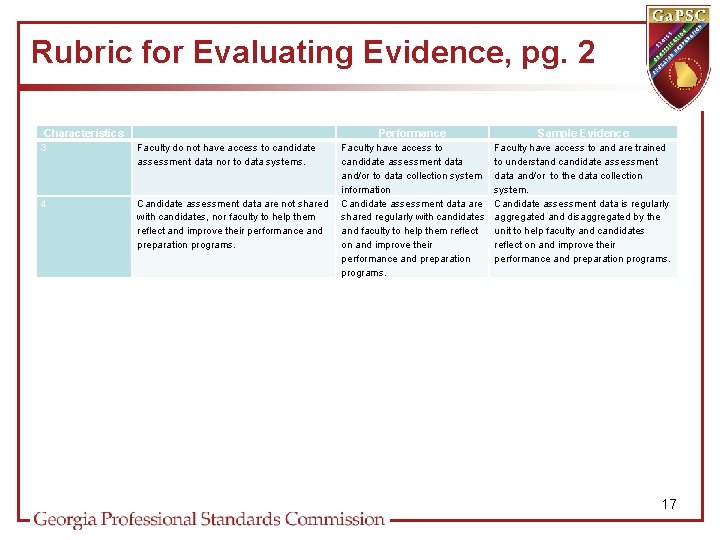

Rubric for Evaluating Evidence, pg. 2

| Standard 2c Sub-element (Characteristics) | Unacceptable | Acceptable | Target | Sample Evidence |
|---|---|---|---|---|
| 3 | Faculty do not have access to candidate assessment data or to data systems. | Faculty have access to candidate assessment data and/or to data collection system information. | Faculty have access to, and are trained to understand, candidate assessment data and/or the data collection system. | |
| 4 | Candidate assessment data are not shared with candidates or faculty to help them reflect on and improve their performance and preparation programs. | Candidate assessment data are shared regularly with candidates and faculty to help them reflect on and improve their performance and preparation programs. | Candidate assessment data are regularly aggregated and disaggregated by the unit to help faculty and candidates reflect on and improve their performance and preparation programs. | |

Thank You!

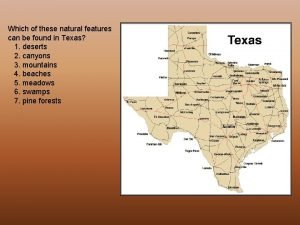

Atlantic coastal plains facts

Atlantic coastal plains facts Balcones escarpment

Balcones escarpment Oak woods and prairies water features

Oak woods and prairies water features Coastal region of texas

Coastal region of texas Coastal plains attractions

Coastal plains attractions Coastal region of india

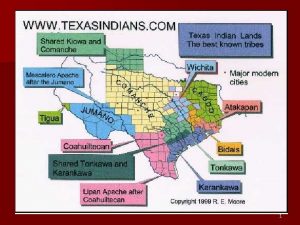

Coastal region of india Ecoregion of texas

Ecoregion of texas What are the 5 subregions of the coastal plains

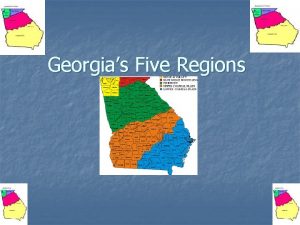

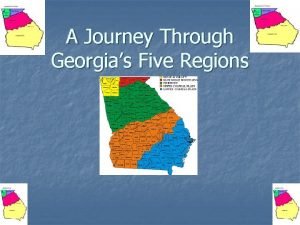

What are the 5 subregions of the coastal plains Coastal plains ga to columbus georgia gps

Coastal plains ga to columbus georgia gps Coastal plains ga to columbus georgia gps

Coastal plains ga to columbus georgia gps What does the coastal plains look like

What does the coastal plains look like Examples of erosion in texas

Examples of erosion in texas Central plains texas major cities

Central plains texas major cities Rolling plains weathering erosion and deposition

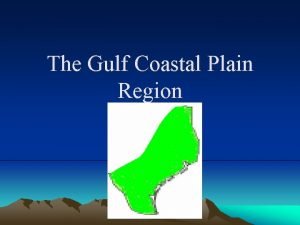

Rolling plains weathering erosion and deposition Gulf coastal plains

Gulf coastal plains 7 regions of texas

7 regions of texas Valley and ridge animals

Valley and ridge animals Central plains center of amarillo, tx

Central plains center of amarillo, tx Laura veia a elian todos los dias

Laura veia a elian todos los dias