CSI 5388 A Critique of our Evaluation Practices

- Slides: 14

CSI 5388: A Critique of our Evaluation Practices in Machine Learning

Observations
- The way in which evaluation is conducted in Machine Learning/Data Mining has not been a primary concern in the community.
- This is very different from the way evaluation is approached in other applied fields such as Economics, Psychology and Sociology.
- In such fields, researchers have been more concerned with the meaning and validity of their results than researchers in our field have been.

The Problem
- The objective value of our advances in Machine Learning may be different from what we believe it is.
  • Our conclusions may be flawed or meaningless.
  • ML methods may get undue credit or not be sufficiently recognized.
  • The field may start stagnating.
  • Practitioners in other fields or potential business partners may dismiss our approaches/results.
- We hope that, with better evaluation practices, we can help the field of machine learning focus on more effective research and encourage more cross-discipline or cross-purpose exchanges.

Organization of the Lecture
- A review of the shortcomings of current evaluation methods:
  • Problems with Performance Evaluation
  • Problems with Confidence Estimation
  • Problems with Data Sets

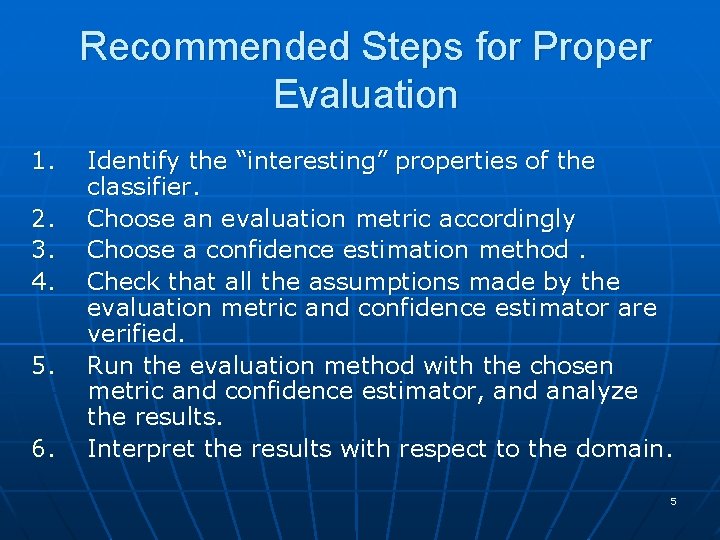

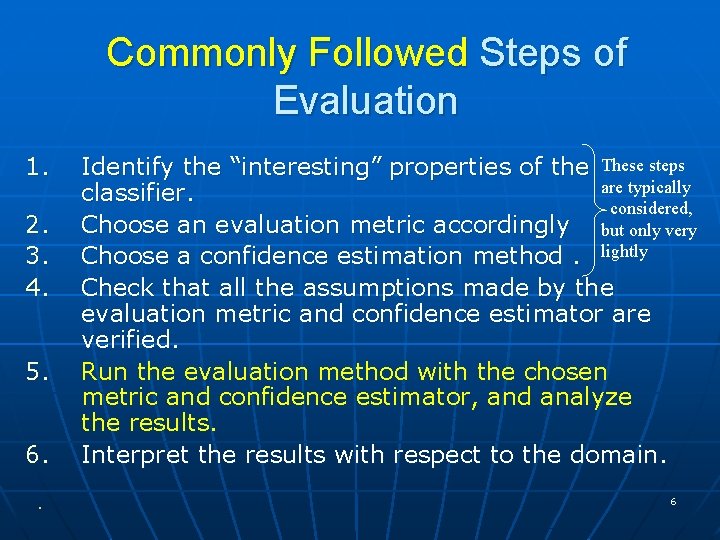

Recommended Steps for Proper Evaluation
1. Identify the “interesting” properties of the classifier.
2. Choose an evaluation metric accordingly.
3. Choose a confidence estimation method.
4. Check that all the assumptions made by the evaluation metric and confidence estimator are verified.
5. Run the evaluation method with the chosen metric and confidence estimator, and analyze the results.
6. Interpret the results with respect to the domain.

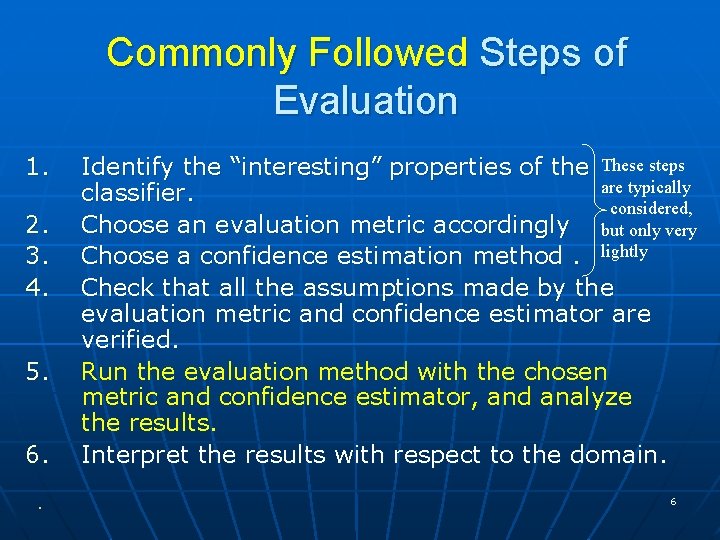

Commonly Followed Steps of Evaluation
1. Identify the “interesting” properties of the classifier.
2. Choose an evaluation metric accordingly.
3. Choose a confidence estimation method.
4. Check that all the assumptions made by the evaluation metric and confidence estimator are verified.
5. Run the evaluation method with the chosen metric and confidence estimator, and analyze the results.
6. Interpret the results with respect to the domain.

Steps 1 to 3: these steps are typically considered, but only very lightly.

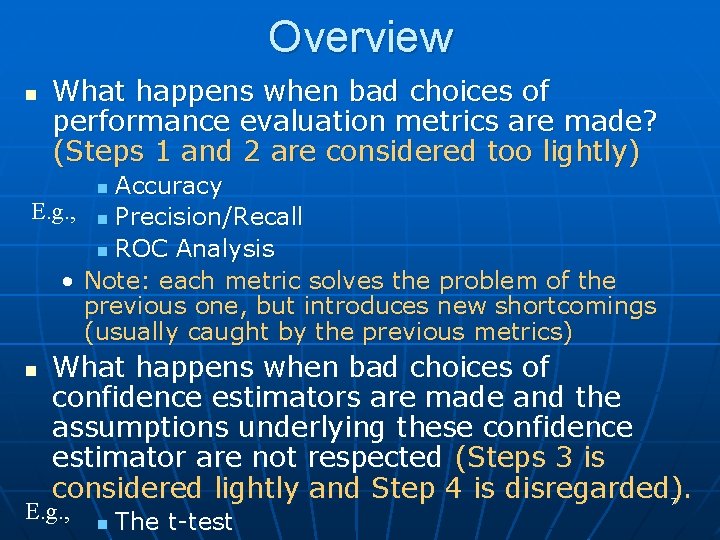

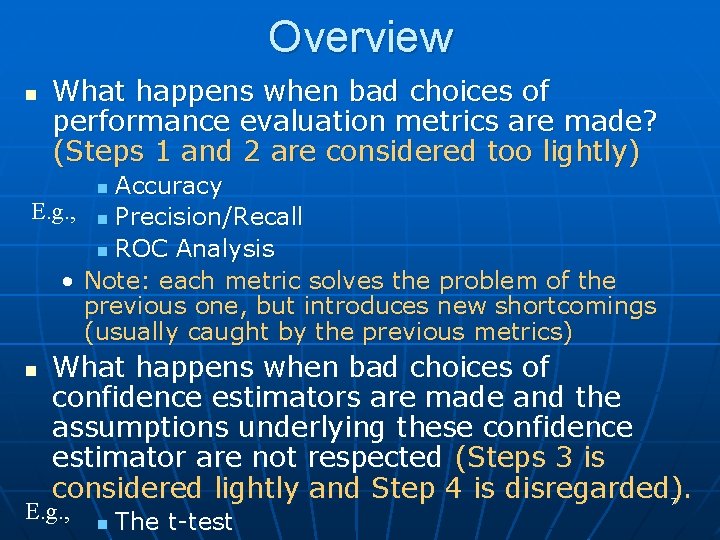

Overview
- What happens when bad choices of performance evaluation metrics are made? (Steps 1 and 2 are considered too lightly.) E.g.:
  • Accuracy
  • Precision/Recall
  • ROC Analysis
  Note: each metric solves the problem of the previous one, but introduces new shortcomings (usually caught by the previous metrics).
- What happens when bad choices of confidence estimators are made and the assumptions underlying these confidence estimators are not respected? (Step 3 is considered lightly and Step 4 is disregarded.) E.g.:
  • The t-test

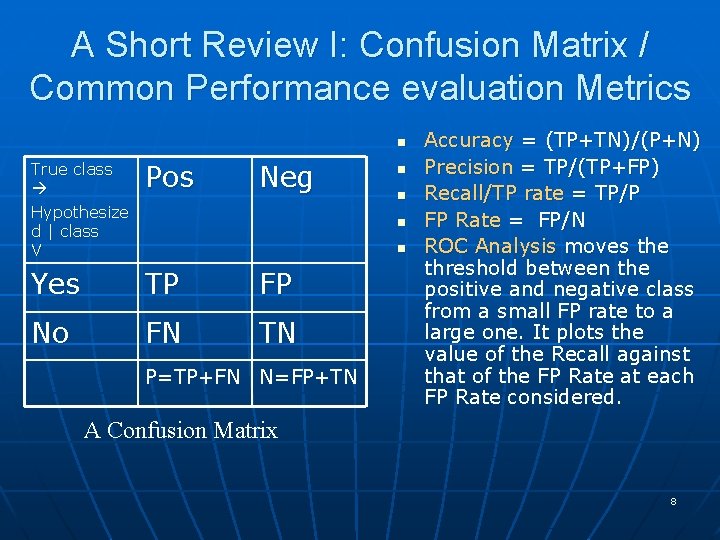

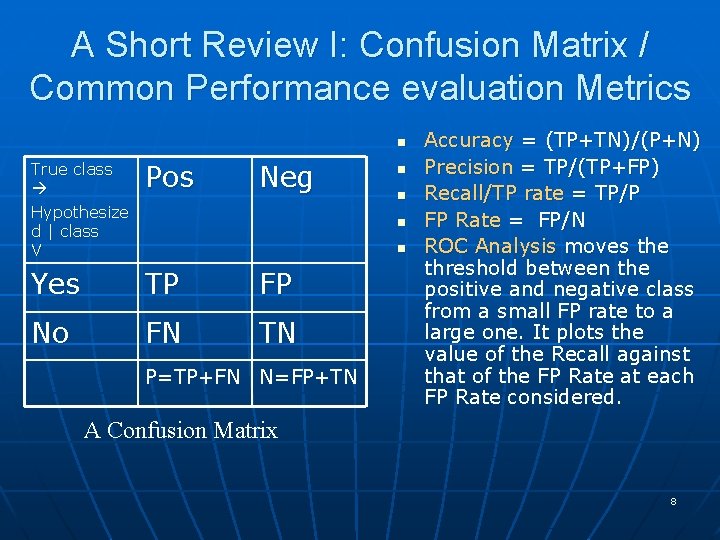

A Short Review I: Confusion Matrix / Common Performance Evaluation Metrics

A Confusion Matrix:

| Hypothesized class | True Pos | True Neg |
|---|---|---|
| Yes | TP | FP |
| No | FN | TN |

- P = TP + FN, N = FP + TN
- Accuracy = (TP + TN) / (P + N)
- Precision = TP / (TP + FP)
- Recall / TP Rate = TP / P
- FP Rate = FP / N
- ROC Analysis moves the threshold between the positive and negative class from a small FP rate to a large one. It plots the value of the Recall against that of the FP Rate at each FP Rate considered.
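
The definitions above can be sketched in Python. This is a minimal illustration, not code from the course: the `ConfusionMatrix` class and the `roc_points` helper are hypothetical names, and the ROC sweep assumes the classifier outputs a real-valued score where a higher score means "more positive", with each distinct score used in turn as the threshold.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    """Counts from a confusion matrix (hypothetical helper class)."""
    tp: int  # hypothesized Yes, true Pos
    fp: int  # hypothesized Yes, true Neg
    fn: int  # hypothesized No, true Pos
    tn: int  # hypothesized No, true Neg

    @property
    def p(self) -> int:
        return self.tp + self.fn  # P = TP + FN

    @property
    def n(self) -> int:
        return self.fp + self.tn  # N = FP + TN

    def accuracy(self) -> float:
        return (self.tp + self.tn) / (self.p + self.n)

    def precision(self) -> float:
        return self.tp / (self.tp + self.fp)

    def recall(self) -> float:
        """Recall, also called the TP rate."""
        return self.tp / self.p

    def fp_rate(self) -> float:
        return self.fp / self.n


def roc_points(scores, labels):
    """(FP rate, TP rate) points obtained by lowering the decision threshold.

    Assumes higher scores mean "more positive" and labels are True for Pos.
    """
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    p = sum(1 for _, is_pos in ranked if is_pos)
    n = len(ranked) - p
    tp = fp = 0
    points = [(0.0, 0.0)]  # threshold above every score: everything is "No"
    for i, (score, is_pos) in enumerate(ranked):
        if is_pos:
            tp += 1
        else:
            fp += 1
        # Tied scores share one threshold, so emit a point only after the last of them.
        if i + 1 == len(ranked) or ranked[i + 1][0] != score:
            points.append((fp / n, tp / p))
    return points
```

For instance, `ConfusionMatrix(tp=200, fp=100, fn=300, tn=400)` gives an accuracy of 0.6 and a precision of about 0.667. Sorting by score and lowering the threshold one distinct score at a time is one common way to trace the curve; tied scores are grouped so that they produce a single point.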

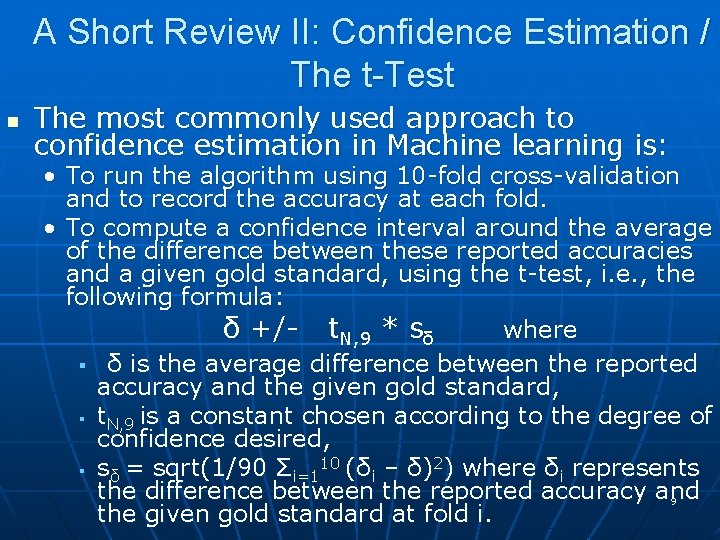

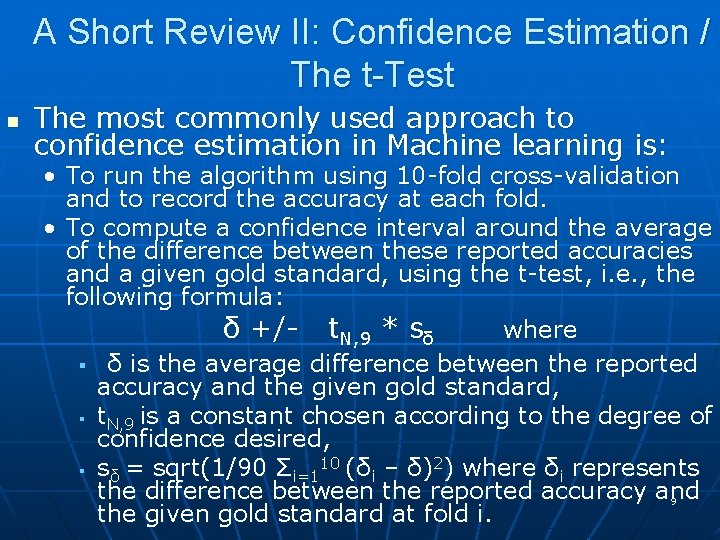

A Short Review II: Confidence Estimation / The t-Test
- The most commonly used approach to confidence estimation in Machine Learning is:
  • To run the algorithm using 10-fold cross-validation and to record the accuracy at each fold.
  • To compute a confidence interval around the average of the differences between these reported accuracies and a given gold standard, using the t-test, i.e., the following formula:

$$\bar{\delta} \pm t_{N,9} \, s_{\delta}$$

where
  • $\bar{\delta}$ is the average difference between the reported accuracy and the given gold standard,
  • $t_{N,9}$ is a constant chosen according to the degree of confidence desired (with 9 degrees of freedom, i.e., 10 folds minus one),
  • $s_{\delta} = \sqrt{\frac{1}{90}\sum_{i=1}^{10}(\delta_i - \bar{\delta})^2}$, where $\delta_i$ represents the difference between the reported accuracy and the given gold standard at fold $i$. Since $90 = 10 \times 9$, $s_{\delta}$ is the estimated standard error of $\bar{\delta}$.
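
A standard-library-only sketch of this interval follows. It assumes a 95% confidence level and hard-codes the corresponding two-sided critical value of Student's t with 9 degrees of freedom (about 2.262) instead of looking it up; the function name `t_interval` and the example accuracies are hypothetical. The divisor k(k - 1) reduces to the slide's 90 when k = 10.

```python
import math
import statistics

# Assumed two-sided 95% critical value of Student's t with 9 degrees of freedom
# (10 folds - 1). Other confidence levels or fold counts need a different constant.
T_95_DF9 = 2.262


def t_interval(fold_accuracies, gold_standard, t_crit=T_95_DF9):
    """Confidence interval around the mean per-fold difference from a gold standard."""
    k = len(fold_accuracies)
    # delta_i: difference between the accuracy at fold i and the gold standard
    deltas = [acc - gold_standard for acc in fold_accuracies]
    mean_delta = statistics.fmean(deltas)
    # s_delta = sqrt(1 / (k (k - 1)) * sum_i (delta_i - mean_delta)^2); 1/90 when k = 10
    s_delta = math.sqrt(sum((d - mean_delta) ** 2 for d in deltas) / (k * (k - 1)))
    return mean_delta - t_crit * s_delta, mean_delta + t_crit * s_delta


# Hypothetical per-fold accuracies from 10-fold cross-validation.
accs = [0.80, 0.82, 0.78, 0.81, 0.79, 0.80, 0.83, 0.77, 0.80, 0.80]
low, high = t_interval(accs, gold_standard=0.75)
print(f"mean difference in [{low:.4f}, {high:.4f}]")
```

If the interval excludes 0, the usual reading is that the classifier differs from the gold standard at the chosen confidence level; that reading is only as good as the normality assumption behind the t-test.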

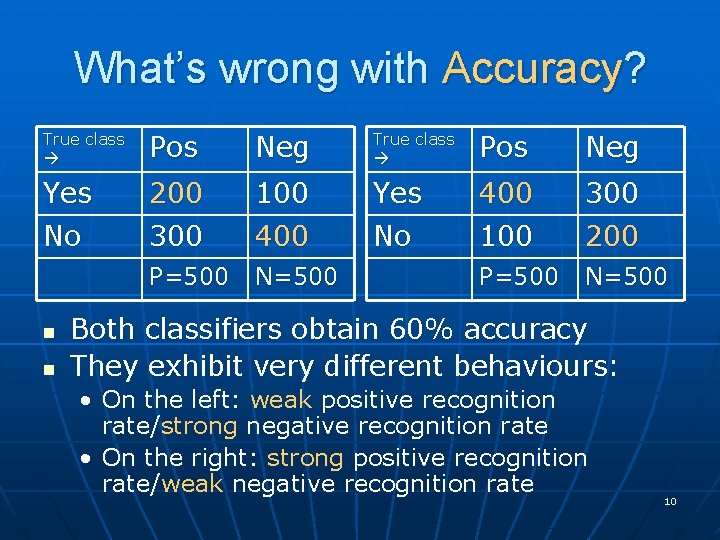

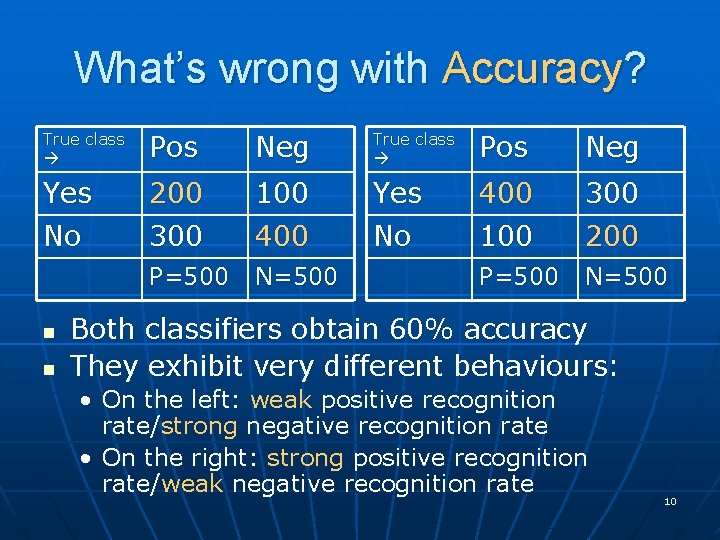

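What’s wrong with Accuracy?

Left classifier (P = 500, N = 500):

| Hypothesized class | True Pos | True Neg |
|---|---|---|
| Yes | 200 | 100 |
| No | 300 | 400 |

Right classifier (P = 500, N = 500):

| Hypothesized class | True Pos | True Neg |
|---|---|---|
| Yes | 400 | 300 |
| No | 100 | 200 |

- Both classifiers obtain 60% accuracy.
- They exhibit very different behaviours:
  • On the left: weak positive recognition rate / strong negative recognition rate
  • On the right: strong positive recognition rate / weak negative recognition rate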
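
The two matrices can be checked with a few lines of Python. This is a sketch: `rates` is a hypothetical helper, and the positive and negative recognition rates are read here as TP/P and TN/N.

```python
def rates(tp, fp, fn, tn):
    """Accuracy plus positive (TP/P) and negative (TN/N) recognition rates."""
    p, n = tp + fn, fp + tn
    return {
        "accuracy": (tp + tn) / (p + n),
        "pos_rate": tp / p,  # positive recognition rate (recall)
        "neg_rate": tn / n,  # negative recognition rate
    }


left = rates(tp=200, fp=100, fn=300, tn=400)   # counts from the left matrix
right = rates(tp=400, fp=300, fn=100, tn=200)  # counts from the right matrix
print("left ", left)
print("right", right)
```

Accuracy collapses both recognition rates into one number, so the 40%/80% split on the left and the 80%/40% split on the right produce the same 60%.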

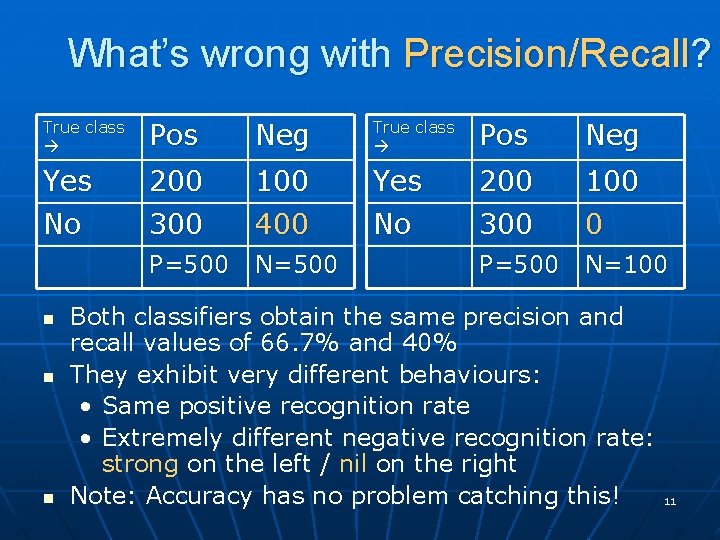

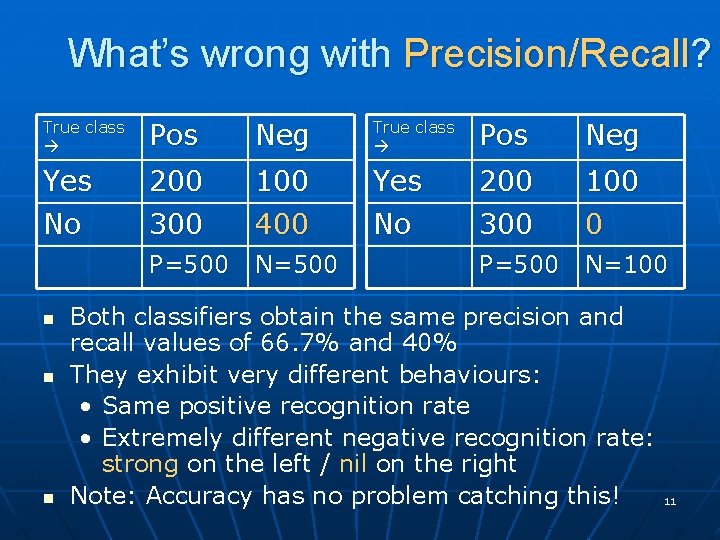

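What’s wrong with Precision/Recall?

Left classifier (P = 500, N = 500):

| Hypothesized class | True Pos | True Neg |
|---|---|---|
| Yes | 200 | 100 |
| No | 300 | 400 |

Right classifier (P = 500, N = 100):

| Hypothesized class | True Pos | True Neg |
|---|---|---|
| Yes | 200 | 100 |
| No | 300 | 0 |

- Both classifiers obtain the same precision and recall values: 66.7% and 40%, respectively.
- They exhibit very different behaviours:
  • Same positive recognition rate
  • Extremely different negative recognition rates: strong on the left / nil on the right
- Note: Accuracy has no problem catching this (60% on the left vs. 33.3% on the right)!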
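
A short sketch makes the blind spot concrete; `summarize` is a hypothetical helper computing the slide's metrics from the two matrices.

```python
def summarize(tp, fp, fn, tn):
    """Precision, recall, negative recognition rate (TN/N) and accuracy."""
    p, n = tp + fn, fp + tn
    return {
        "precision": tp / (tp + fp),
        "recall": tp / p,
        "neg_rate": tn / n,
        "accuracy": (tp + tn) / (p + n),
    }


left = summarize(tp=200, fp=100, fn=300, tn=400)  # P = 500, N = 500
right = summarize(tp=200, fp=100, fn=300, tn=0)   # P = 500, N = 100

# Precision and recall cannot tell the two classifiers apart...
assert left["precision"] == right["precision"] and left["recall"] == right["recall"]
# ...but the negative recognition rate and accuracy can.
print(f"neg_rate: {left['neg_rate']:.1%} vs {right['neg_rate']:.1%}")
print(f"accuracy: {left['accuracy']:.1%} vs {right['accuracy']:.1%}")
```

Neither precision nor recall uses the TN cell, which is why the collapse of the right classifier's negative recognition goes unnoticed.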

What’s wrong with ROC Analysis? (We consider single points in ROC space, not the entire ROC curve.)

Left classifier (P = 500, N = 4,010):

| Hypothesized class | True Pos | True Neg |
|---|---|---|
| Yes | 200 | 10 |
| No | 300 | 4,000 |

Right classifier (P = 800, N = 401,000):

| Hypothesized class | True Pos | True Neg |
|---|---|---|
| Yes | 500 | 1,000 |
| No | 300 | 400,000 |

- ROC Analysis and Precision yield contradictory results:
  • In terms of ROC Analysis, the classifier on the right is a significantly better choice than the one on the left. [The point representing the right classifier lies on the same vertical line (both FP rates equal 1/401, about 0.25%) but 22.5 percentage points higher (TP rate 62.5% vs. 40%) than the point representing the left classifier.]
  • Yet, the classifier on the right has ridiculously low precision (33.3%), while the classifier on the left has excellent precision (95.24%).
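
The two ROC points and their precision can be recomputed directly from the matrices. A sketch, with `roc_point` as a hypothetical helper:

```python
def roc_point(tp, fp, fn, tn):
    """Position in ROC space (FP rate, TP rate) together with precision."""
    p, n = tp + fn, fp + tn
    return {"fp_rate": fp / n, "tp_rate": tp / p, "precision": tp / (tp + fp)}


left = roc_point(tp=200, fp=10, fn=300, tn=4_000)        # P = 500, N = 4,010
right = roc_point(tp=500, fp=1_000, fn=300, tn=400_000)  # P = 800, N = 401,000

# Same FP rate (1/401), so both points lie on the same vertical line.
print(f"FP rate:   {left['fp_rate']:.4%} vs {right['fp_rate']:.4%}")
# TP rate 40% vs 62.5%: the right point is 22.5 percentage points higher.
print(f"TP rate:   {left['tp_rate']:.1%} vs {right['tp_rate']:.1%}")
# Precision tells the opposite story.
print(f"precision: {left['precision']:.2%} vs {right['precision']:.2%}")
```

Because the FP rate divides by N, the right classifier's 1,000 false positives are diluted by its 401,000 negatives; precision divides by the number of Yes predictions instead, so they dominate.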

What’s wrong with the t-test?

Per-fold differences in accuracy from the gold standard, Folds 1 to 10: C1 +5% -5% +5% C2 +10% -5% 0% -5% +5% 0% -5% +5% -5% 0% 0% 0%

- Classifiers 1 and 2 yield the same mean and confidence interval.
- Yet, Classifier 1 is relatively stable, while Classifier 2 is not.
- Problem: the t-test assumes a normal distribution. The difference in accuracy between Classifier 2 and the gold standard is not normally distributed.
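
The underlying issue is that the t-interval depends only on the mean and spread of the per-fold differences. The sketch below uses two hypothetical sequences (not the slide's values, chosen here for illustration): one alternates between +3 and -3 points, the other sits at -1 point on nine folds and +9 on one. Both have mean 0 and the same spread, so they get exactly the same interval, while their kurtosis (3 for a normal distribution) shows very different shapes. The critical value 2.262 is again an assumed 95% constant for 9 degrees of freedom.

```python
import math
import statistics

T_95_DF9 = 2.262  # assumed two-sided 95% critical value of t with 9 degrees of freedom


def t_interval(deltas, t_crit=T_95_DF9):
    """t-based interval around the mean of per-fold differences (10 folds assumed)."""
    k = len(deltas)
    mean = statistics.fmean(deltas)
    s = math.sqrt(sum((d - mean) ** 2 for d in deltas) / (k * (k - 1)))
    return mean - t_crit * s, mean + t_crit * s


def kurtosis(xs):
    """Population kurtosis m4 / m2**2; a normal distribution has kurtosis 3."""
    mean = statistics.fmean(xs)
    m2 = statistics.fmean([(x - mean) ** 2 for x in xs])
    m4 = statistics.fmean([(x - mean) ** 4 for x in xs])
    return m4 / m2 ** 2


# Hypothetical per-fold differences from a gold standard, in percentage points.
steady = [3, -3] * 5       # alternates around 0
spiky = [9] + [-1] * 9     # one fold far off, the rest slightly below

print(t_interval(steady), t_interval(spiky))  # identical intervals
print(kurtosis(steady), kurtosis(spiky))      # very different shapes
```

Looking at the per-fold values, or at a shape statistic such as kurtosis, is one way to check the normality assumption before trusting the interval.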

Discussion
- There is nothing intrinsically wrong with any of the performance evaluation measures or confidence tests discussed. It’s all a matter of thinking about which one to use when, and what the results mean (both in terms of added value and limitations).
- Simple conceptualization of the problem with current evaluation practices:
  • Evaluation metrics and confidence measures summarize the results.
  • ML practitioners must understand the terms of these summarizations and check that their assumptions hold.
- In certain cases, however, it is necessary to look further and, possibly, borrow practices from other disciplines. In yet other cases, it pays to devise our own methods. Both instances are discussed in what follows.
