CSI 5388 Putting it all together Error Estimation

- Slides: 30

CSI 5388 Putting it all together: Error Estimation of Machine Learning Algorithms

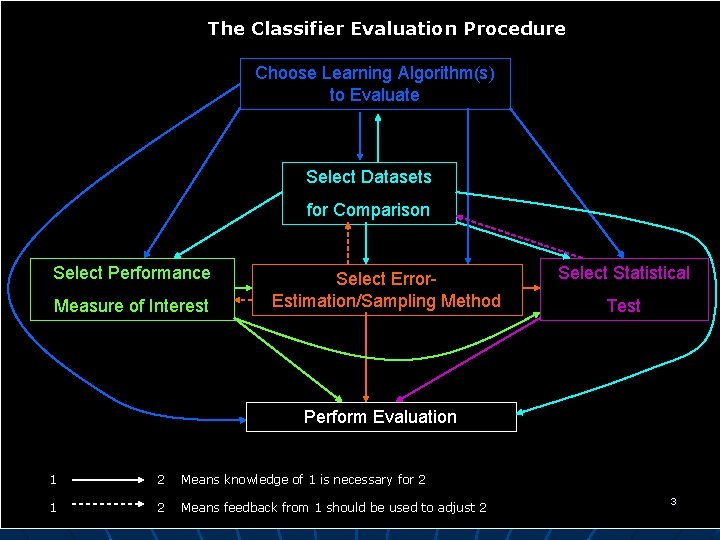

Testing Learning Algorithms

The process of testing the performance of our learning algorithms is very intricate. A number of decisions must be made, and these are, for the most part, inter-related. Ideally, the evaluation process would include the steps described on the next slide.

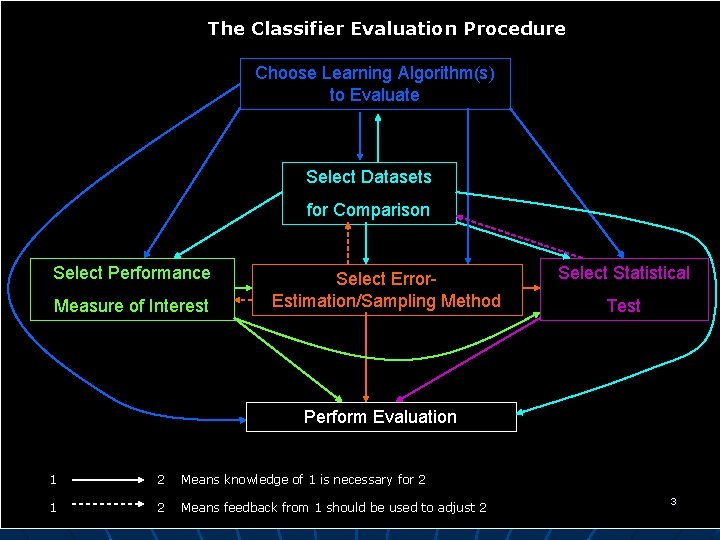

The Classifier Evaluation Procedure

[Figure: flowchart of the evaluation steps]
• Choose Learning Algorithm(s) to Evaluate
• Select Datasets for Comparison
• Select Performance Measure of Interest
• Select Error Estimation/Sampling Method
• Select Statistical Test
• Perform Evaluation

Legend: one arrow style from 1 to 2 means that knowledge of 1 is necessary for 2; the other arrow style means that feedback from 1 should be used to adjust 2.

I. Choosing Learning Algorithm(s) to Evaluate

The user should decide which learning algorithms s/he is trying to evaluate. The kinds of questions that come up at this level are:
• Is it a new algorithm?
• What other algorithms will it be pitted against?
• Is there no new algorithm, with the user instead trying to establish the strengths and weaknesses of various standard algorithms?
• Is the user trying to determine the best parameter settings for a single standard algorithm?

The decision of which algorithm(s) to choose does not depend on any other component of our scheme except, in certain cases, the choice of data sets.

II. Selecting Data Sets for Comparison

This procedure is simple when the purpose of the study is a particular application, but more complex when the purpose is to test learning algorithms. When the focus is a particular application, we simply select the data set of interest. In the other cases, the choice of data sets will depend in great part on the purpose of the evaluation and, as a result, on all the other components of the figure describing the evaluation process.

II. Selecting Data Sets for Comparison (Cont’d)

For example, if we are testing how well a new algorithm fares on domains presenting a particular characteristic, then we should choose domains that present this characteristic.

If we want certain statistical guarantees on our results, however, we should also make sure that our domains present the characteristics necessary for our performance measures, error estimation method, and statistical tests to succeed.

In turn, our choices of performance measure, error estimation method, and statistical test will also affect our choices of data sets.

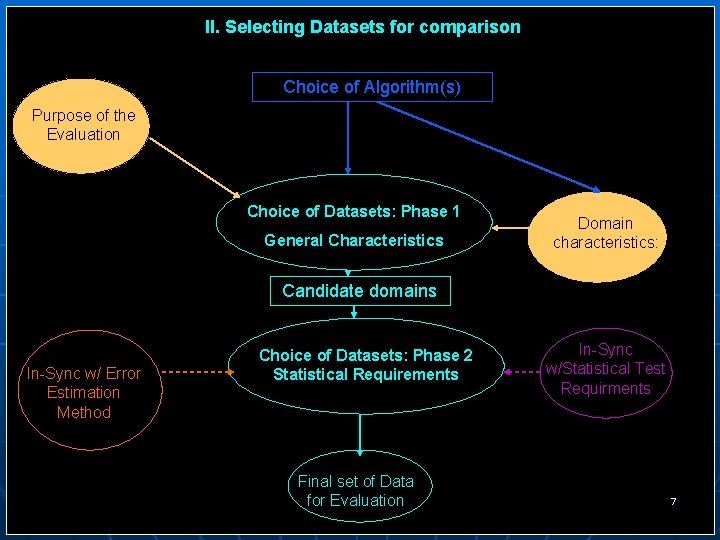

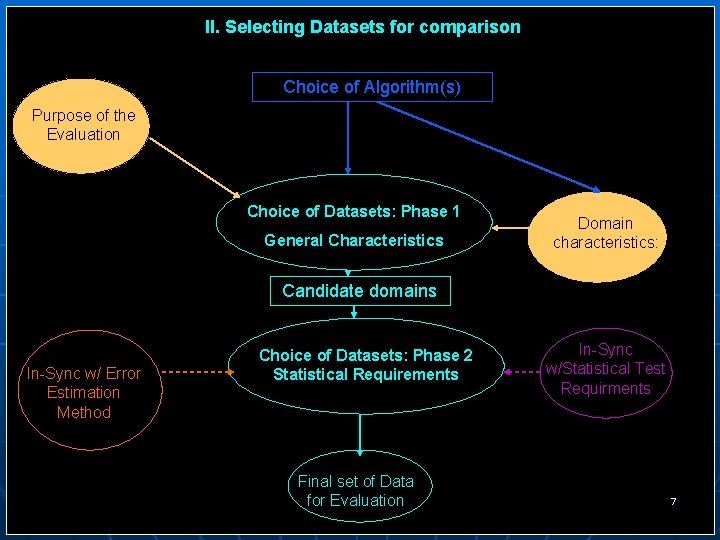

II. Selecting Datasets for Comparison

[Figure: flowchart. Inputs: choice of algorithm(s), purpose of the evaluation, domain characteristics. Choice of Datasets, Phase 1 (general characteristics) yields the candidate domains. Choice of Datasets, Phase 2 (statistical requirements, in sync with the error estimation method and with the statistical test requirements) yields the final set of data for evaluation.]

II. Selecting Data Sets for Comparison: Suggested Procedure

We focus on the case where we are not working in the context of a particular application.

The first consideration concerns the identity of the algorithms we intend to evaluate:
• Do these algorithms claim to perform well on particular domains? Examples: face recognition tasks, domains with high dimensionality, overlaps, noisy domains, cost, domains with missing values, and so on.
• If that is the case, the data sets will need to present the kind of properties we are interested in testing.

II. Selecting Data Sets for Comparison: Suggested Procedure (Cont’d)

Second, our choices of data sets will have to be synchronized with our choices of an error estimation method and of a statistical test.
• For example, if we choose a leave-one-out error estimation method, we should make sure that our data sets are not too large, since leave-one-out is very computationally intensive.
• Alternatively, if 10-fold cross-validation is our error estimation method of choice, we should make sure to have enough samples in the data set.
• Similarly, the number of domains that we choose will depend on our chosen error estimation method, since issues of time complexity will arise.
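To make the cost and sample-size concerns above concrete, here is a minimal sketch that counts how many models each scheme has to train and how small the test folds become. The helper names `k_fold_indices` and `count_model_fits` are hypothetical, introduced only for illustration; leave-one-out is treated as k-fold cross-validation with k equal to the number of samples.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx) pairs for k contiguous, near-equal folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        # The first `extra` folds receive one additional sample.
        size = base + (1 if fold < extra else 0)
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size


def count_model_fits(n_samples, k):
    """Each fold requires training one model, so the number of fits equals k."""
    return sum(1 for _ in k_fold_indices(n_samples, k))


n = 1000  # hypothetical data set size
loo_fits = count_model_fits(n, n)       # leave-one-out: one fit per sample
tenfold_fits = count_model_fits(n, 10)  # 10-fold: ten fits regardless of n

# On a very small data set, 10-fold test folds hold only a couple of samples.
small_fold_sizes = [len(test) for _, test in k_fold_indices(25, 10)]

print(loo_fits, tenfold_fits, small_fold_sizes)
```

The number of fits grows linearly with the data set size for leave-one-out but stays fixed for 10-fold cross-validation, while the per-fold test size shrinks as the data set gets smaller.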

II. Selecting Data Sets for Comparison: Suggested Procedure (Cont’d)

• On the other hand, the number of domains we choose is also related to the statistical confidence we wish to establish: we can be more confident in our approach if it succeeds on many domains than if it succeeds on only a few.
• Similarly, given the degree of confidence in our results that we wish to establish, the sample size of our data sets will also matter.
• In certain cases, so will the distribution of our data sets. For example, paired t-tests will not fare well on highly imbalanced domains. If the paired t-test is the chosen statistical test, the experiment designer should stay away from imbalanced data sets.

II. Selecting Data Sets for Comparison: Suggested Procedure (Cont’d)

Because data set selection can be slightly confusing, we suggest a two-phase approach.
• During the first phase, a subset of domains is selected based on simple, "human-level" considerations such as the purpose of the evaluation and the desired domain characteristics.
• During the second phase, the selected domains are filtered according to more algorithmic considerations such as their sample size and distribution.

III. Selecting a Performance Measure of Interest

The performance measure we choose will depend mainly on the algorithms we wish to test, the data sets we are testing them on, and the focus of our evaluation.

The focus of our evaluation is linked to our application. If, for example, we are testing an algorithm designed to perform face recognition, we may be interested in testing that algorithm's precision.

III. Selecting a Performance Measure of Interest (Cont’d)

In another example, if we are testing algorithms designed to deal with the class imbalance problem on domains that present a class imbalance, it would be inappropriate to choose accuracy as a performance measure, since accuracy is dominated by the majority class and would give us optimistically biased assessments of performance on the minority class. A better choice would be ROC analysis.

The choice of a performance measure, in turn, will affect our choices of an error estimation method and of a statistical test.
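The following sketch illustrates why accuracy can mislead on an imbalanced domain. It uses a made-up data set with 5 positives and 95 negatives and a trivial classifier that ignores its input; the function names are hypothetical. The area under the ROC curve is computed here through its rank interpretation (the probability that a random positive is scored above a random negative), which is one standard way of summarizing ROC analysis in a single number.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def auc(y_true, scores):
    """Area under the ROC curve via pairwise ranking; ties count as 0.5."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical imbalanced domain: 5 minority (1) and 95 majority (0) examples.
y_true = [1] * 5 + [0] * 95

# A classifier that always predicts the majority class with a constant score.
y_pred = [0] * 100
constant_scores = [0.0] * 100

acc = accuracy(y_true, y_pred)        # 0.95, although no positive is ever found
area = auc(y_true, constant_scores)   # 0.5, i.e. no better than chance

print(acc, area)
```

The same useless classifier looks excellent under accuracy and uninformative under the ROC-based measure, which is the behaviour the slide warns about.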

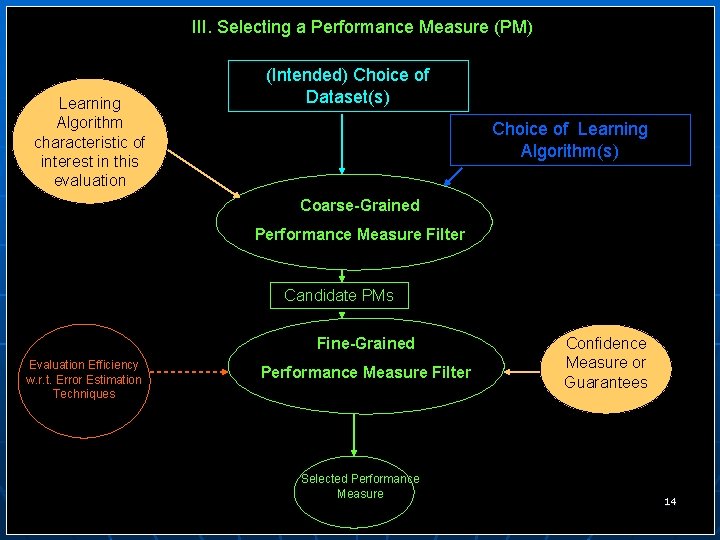

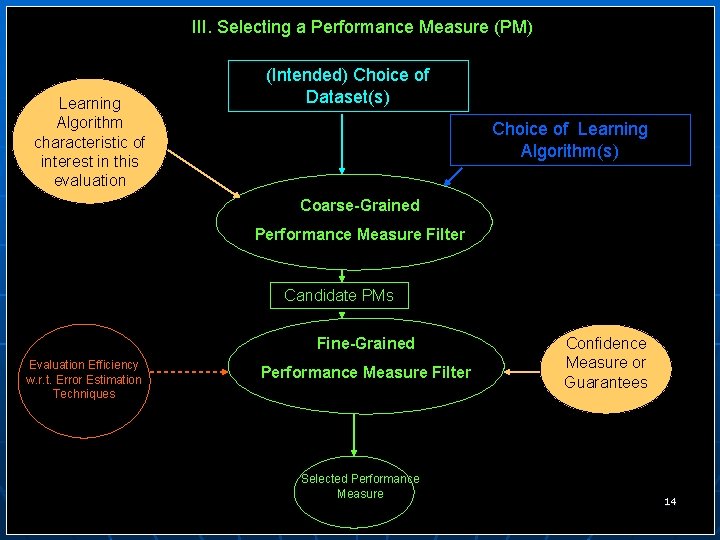

III. Selecting a Performance Measure (PM)

[Figure: flowchart. Inputs: learning algorithm characteristic of interest in this evaluation, (intended) choice of dataset(s), choice of learning algorithm(s). A coarse-grained performance measure filter yields the candidate PMs. A fine-grained performance measure filter (efficiency w.r.t. error estimation techniques; confidence measure or guarantees) yields the selected performance measure.]

III. Selecting a Performance Measure of Interest: Suggested Procedure

Once again, we suggest dividing the selection process into two phases: a coarse-grained phase and a finer-grained phase.

In the coarse-grained phase, the three criteria that determine the first subset of performance measures are:
• The choice of a learning algorithm,
• The choice of data sets, and
• The characteristic of interest in the evaluation.

III. Selecting a Performance Measure of Interest: Suggested Procedure (Cont’d)

The choice of a learning algorithm helps determine our choice of performance measure. For example, if we do not have enough data to allow a formal threshold-setting procedure to take place (i.e., there is not enough data for a validation set), then it is better to use a threshold-insensitive evaluation measure, such as ROC analysis, than a threshold-sensitive measure, such as accuracy.

The choice of data sets also helps determine our choice of an evaluation measure. If, for example, some of the data sets we selected are multi-class domains, a measure that applies to multi-class domains, such as accuracy, would have to be employed. The same holds for hierarchical classification, where specialized hierarchical measures must be employed.

III. Selecting a Performance Measure of Interest: Suggested Procedure (Cont’d)

The characteristic of interest in the evaluation is obviously very important. In medical applications, for example, the sensitivity and specificity of a test matter much more than its overall accuracy. The performance measure will thus have to be chosen accordingly.

Once several candidate performance measures have been chosen, a finer-grained filter should be applied to ensure that the chosen performance measure is synchronized with our choice of an error estimation technique and that it is associated with appropriate confidence measures or guarantees.
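As a small illustration of the medical example, the sketch below computes sensitivity and specificity next to overall accuracy. The screening data are invented for the example (10 diseased and 90 healthy patients), and the function name is hypothetical. The code assumes that both classes are present, since otherwise one of the ratios is undefined.

```python
def sensitivity_specificity(y_true, y_pred, positive=1):
    """Return (sensitivity, specificity); assumes both classes occur in y_true."""
    tp = fn = tn = fp = 0
    for t, p in zip(y_true, y_pred):
        if t == positive:
            if p == positive:
                tp += 1  # diseased and detected
            else:
                fn += 1  # diseased but missed
        else:
            if p == positive:
                fp += 1  # healthy but flagged
            else:
                tn += 1  # healthy and cleared
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical screening test: 10 diseased (1) and 90 healthy (0) patients.
y_true = [1] * 10 + [0] * 90
# The test detects 6 of the 10 diseased patients and wrongly flags 2 healthy ones.
y_pred = [1] * 6 + [0] * 4 + [1] * 2 + [0] * 88

sens, spec = sensitivity_specificity(y_true, y_pred)
overall_accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

print(sens, spec, overall_accuracy)
```

In this made-up case the overall accuracy is 94%, yet the test misses 4 of the 10 diseased patients, which only the sensitivity reveals.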

III. Selecting a Performance Measure of Interest: Suggested Procedure (Cont’d)

With respect to synchronization with the error estimation technique, we are concerned about the time efficiency of the performance measure. If, for example, the measure takes too long to compute, it could make an already long and repetitive error estimation process intractable.

The confidence measures or guarantees that dictate the finer-grained choice of a performance measure can be explained as follows: assume that we want to derive a tight confidence interval as a guarantee that our results are meaningful. Not all performance measures have means of computing tight confidence intervals associated with them (e.g., ROC bands). As well, a measure such as the F1 measure does not have confidence intervals associated with it. (These measures do not have known or easy-to-compute standard deviations associated with them.)
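For contrast, accuracy is a measure whose standard deviation is easy to compute, because the number of correct predictions on an independent test set can be modelled as a binomial count. The sketch below uses the normal approximation to the binomial (the Wald interval), which is an assumption that is reasonable only for fairly large test sets and accuracies not too close to 0 or 1. The function name and the counts are hypothetical.

```python
import math
from statistics import NormalDist


def accuracy_confidence_interval(n_correct, n_total, confidence=0.95):
    """Normal-approximation (Wald) confidence interval for an accuracy estimate."""
    p = n_correct / n_total
    # Two-sided critical value of the standard normal distribution.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    half_width = z * math.sqrt(p * (1 - p) / n_total)
    # Clip to [0, 1], since an accuracy cannot leave that range.
    return max(0.0, p - half_width), min(1.0, p + half_width)


# Hypothetical result: 850 correct predictions out of 1000 test examples.
low, high = accuracy_confidence_interval(850, 1000)

# The same observed accuracy on a test set ten times smaller.
low_small, high_small = accuracy_confidence_interval(85, 100)

print((low, high), (low_small, high_small))
```

The interval tightens as the test set grows, which ties the confidence guarantee back to the sample size of the chosen data sets.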

IV. Selecting an Error Estimation/Sampling Method

The purpose of an error estimation method is to ensure that the models are tested on data that is as representative as possible of the overall population.

An important feature of error estimation procedures is that they are repetitive, some more so than others. Thus the selection of an error estimation method will depend very heavily on the time efficiency of the algorithms being tested as well as on the time efficiency of the performance measure used.

The choice of an error estimation method will also depend on the kind of statistical guarantees we wish to obtain.

IV. Selecting an Error Estimation/Sampling Method (Cont’d)

For example, in health-sensitive applications, it is of paramount importance to have very high confidence in our results. This is not as important in other applications, where mistakes are not as costly. This, in turn, will affect our choice of a statistical test.

As well, our choice of an error estimation method may cause us to reconsider our choices of data sets (to the extent possible) to make sure that we have enough data available for use with our error estimation method.

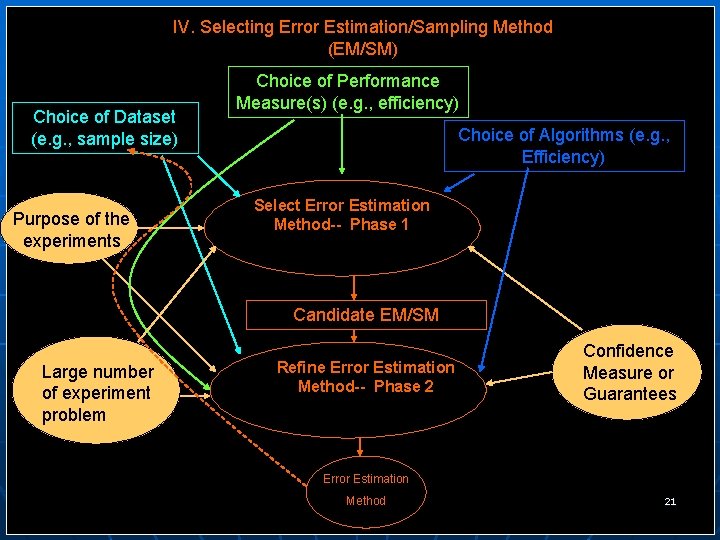

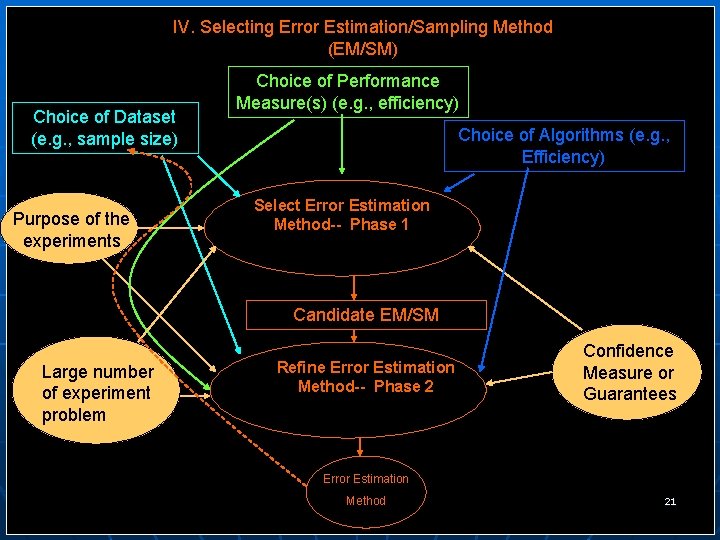

IV. Selecting Error Estimation/Sampling Method (EM/SM)

[Figure: flowchart. Inputs: choice of dataset (e.g., sample size), purpose of the experiments, choice of performance measure(s) (e.g., efficiency), choice of algorithms (e.g., efficiency). Select Error Estimation Method, Phase 1 yields the candidate EM/SM. Refine Error Estimation Method, Phase 2 (large number of experiments problem; confidence measure or guarantees) yields the error estimation method.]

IV. Selecting an Error-Estimation / Sampling Method: Suggested Procedure

Once again, we propose a two-stage selection, although this time the purpose of the first stage is to select an approach, while the purpose of the second stage is to fine-tune that approach.

For example, the first stage may select a bootstrapping rather than a sampling regimen, and the second stage will decide whether or not balanced bootstrapping is necessary.

IV. Selecting an Error-Estimation / Sampling Method: Suggested Procedure (Cont’d)

The purpose of the experiments will dictate what kind of constraints are put on the error estimation method. These constraints concern the tendencies of the method: its bias and variance, or its computational intensity.

For example, leave-one-out is nearly unbiased but has moderate to high variance, and it is very computationally intensive. The .632 bootstrap is not as computationally intensive and has low variance, but it can be optimistically biased.
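A minimal sketch of the .632 bootstrap estimate may help make the comparison concrete. It combines the resubstitution error (measured on the training data) with the average out-of-bag error over bootstrap resamples, weighted 0.368 and 0.632. The nearest-mean learner, the synthetic one-dimensional data, and all function names are assumptions chosen only to keep the example self-contained; any learner could be substituted.

```python
import random


def fit_nearest_mean(xs, ys):
    """Toy learner (illustrative assumption): store the mean of each class."""
    means = {}
    for label in set(ys):
        values = [x for x, y in zip(xs, ys) if y == label]
        means[label] = sum(values) / len(values)
    return means


def predict(means, x):
    """Assign x to the class whose mean is closest."""
    return min(means, key=lambda label: abs(x - means[label]))


def error_rate(means, xs, ys):
    return sum(predict(means, x) != y for x, y in zip(xs, ys)) / len(xs)


def bootstrap_632(xs, ys, n_rounds=200, seed=0):
    """.632 bootstrap: 0.368 * resubstitution error + 0.632 * out-of-bag error."""
    rng = random.Random(seed)
    n = len(xs)
    resubstitution = error_rate(fit_nearest_mean(xs, ys), xs, ys)
    oob_errors = []
    for _ in range(n_rounds):
        # Draw n indices with replacement; unseen indices form the test set.
        idx = [rng.randrange(n) for _ in range(n)]
        chosen = set(idx)
        out = [i for i in range(n) if i not in chosen]
        if not out:
            continue  # extremely unlikely unless n is tiny
        model = fit_nearest_mean([xs[i] for i in idx], [ys[i] for i in idx])
        oob_errors.append(error_rate(model, [xs[i] for i in out], [ys[i] for i in out]))
    out_of_bag = sum(oob_errors) / len(oob_errors)
    return 0.368 * resubstitution + 0.632 * out_of_bag


# Synthetic two-class data: class 0 centred at 0, class 1 centred at 2.
data_rng = random.Random(42)
xs = [data_rng.gauss(0.0, 1.0) for _ in range(20)] + [data_rng.gauss(2.0, 1.0) for _ in range(20)]
ys = [0] * 20 + [1] * 20

estimate = bootstrap_632(xs, ys)
print(estimate)
```

The weight on the resubstitution error is what pulls the estimate toward optimism, since a model is usually more accurate on the data it was trained on.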

IV. Selecting an Error-Estimation / Sampling Method: Suggested Procedure (Cont’d)

The large number of experiments problem, also known as the multiplicity effect, refers to the fact that if too many experiments are run, there is a greater chance that some of the observed results occur by chance.

Some of the ways to deal with this problem involve the choice of an error estimation technique. For example, randomization testing may provide a solution to this issue.
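The multiplicity effect can be quantified with a short calculation. Assuming the experiments are independent and each is tested at significance level alpha (both simplifying assumptions), the probability of at least one spurious "significant" result among k experiments is 1 - (1 - alpha)^k. The function name below is hypothetical.

```python
def family_wise_error_rate(alpha, n_tests):
    """Probability of at least one false positive among independent tests."""
    return 1.0 - (1.0 - alpha) ** n_tests


# Chance of at least one spurious finding at alpha = 0.05.
for n_tests in (1, 5, 20, 100):
    print(n_tests, round(family_wise_error_rate(0.05, n_tests), 3))
```

With 20 independent experiments at the 0.05 level, the chance of at least one false positive is already close to two in three.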

IV. Selecting an Error-Estimation / Sampling Method: Suggested Procedure (Cont’d)

Confidence measure or guarantee refers to the kind of statistical guarantees sought by the user. Statistical tests associated with different error estimation regimens may not have the characteristics sought by the user.

For example, the statistical guarantees derived from bootstrapping have much lower power than those derived from t-tests applied after sampling methods. If power is important for the particular application, then a sampling error estimation regimen is preferred to a bootstrapping one.

V. Selecting a Statistical Test

The main driver of our choice of a statistical test is the kind of statistical guarantees we wish to obtain for our results. In fact, the error estimation procedure and the statistical test are very closely related.

Each statistical test is associated with a number of characteristics, such as its behaviour with respect to Type I and Type II errors, its consistency, its discriminance, etc.

This choice, however, also depends on the assumptions the statistical test makes. In order for the test to be valid, these assumptions have to be verified. For example, the t-test is valid only if the data it is applied to are normally distributed.

V. Selecting a Statistical Test (Cont’d)

Our choice of a statistical test will also depend on:
• our choice of a performance measure (certain measures do not have statistical tests associated with them, for example measures depending on the median rather than the average),
• the number of algorithms we are comparing (for example, should we run a paired t-test or perform an ANOVA test?), and
• our choices of data sets, especially their sample size (for example, a t-test will not be valid on a data set that is too small if the normality assumptions cannot be verified).
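For the two-algorithm case mentioned above, the paired t-test statistic can be sketched with the standard library alone. The per-fold accuracies are invented for illustration, and the function name is hypothetical. The sketch returns only the statistic and its degrees of freedom; turning these into a p-value requires a Student t table or a statistics library, and the result is meaningful only if the paired differences are roughly normally distributed.

```python
import math
from statistics import mean, stdev


def paired_t_statistic(scores_a, scores_b):
    """Return (t, degrees_of_freedom) for paired scores of two algorithms."""
    if len(scores_a) != len(scores_b):
        raise ValueError("paired scores must have the same length")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    # Standard error of the mean difference, using the sample standard deviation.
    standard_error = stdev(diffs) / math.sqrt(n)
    return mean(diffs) / standard_error, n - 1


# Hypothetical accuracies of two algorithms on the same 10 folds.
acc_a = [0.81, 0.79, 0.84, 0.80, 0.83, 0.78, 0.82, 0.85, 0.80, 0.81]
acc_b = [0.78, 0.77, 0.80, 0.79, 0.80, 0.77, 0.79, 0.81, 0.78, 0.80]

t_value, dof = paired_t_statistic(acc_a, acc_b)
print(t_value, dof)
```

Comparing more than two algorithms by running this test on every pair multiplies the number of tests, which is one reason the slide raises ANOVA as the alternative.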

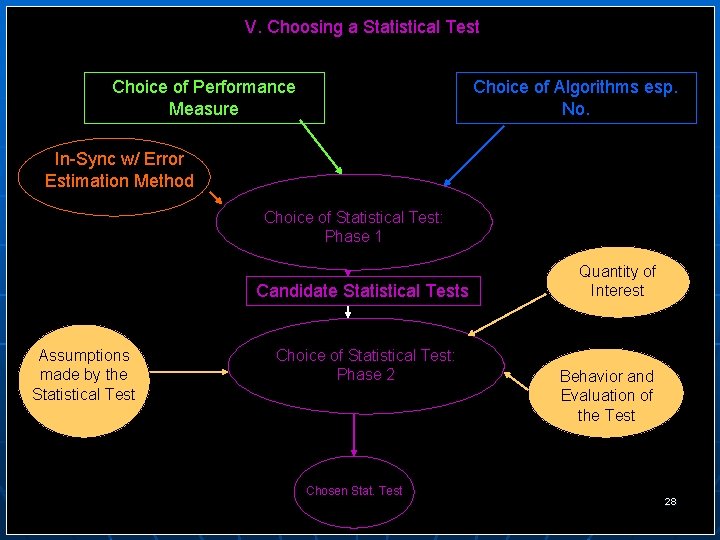

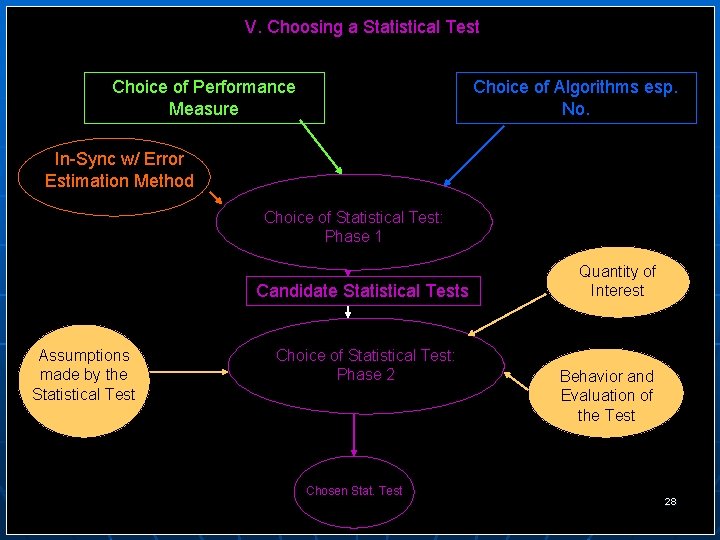

V. Choosing a Statistical Test

[Figure: flowchart. Inputs: choice of performance measure, choice of algorithms (especially their number), in sync with the error estimation method. Choice of Statistical Test, Phase 1 yields the candidate statistical tests. Choice of Statistical Test, Phase 2 (assumptions made by the statistical test; quantity of interest; behavior and evaluation of the test) yields the chosen statistical test.]

V. Selecting a Statistical Test: Suggested Procedure

As in all the previous steps, the process is divided into two stages. The first stage selects a subset of candidate statistical tests, and the second stage selects one test from among these candidates.

The first stage is quite constrained by all the other choices that were previously made. For example, the number of algorithms being compared will help select the test: paired tests versus ANOVA. The choice of a performance measure will indicate what types of tests are available for the statistics computed by that measure.

The error estimation method will also determine whether a parametric test (based on sampling approaches) or a nonparametric test needs to be used. Nonparametric tests are based either on bootstrapping approaches, which come with no assumptions, or on randomization, which comes with some assumptions about variance.
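As one possible instance of a randomization-based test, the sketch below applies a sign-flip randomization test to paired scores. It is offered as an illustration, not as the specific procedure the slides have in mind. The assumption made here is that, if the two algorithms perform equally well, each paired difference is equally likely to be positive or negative. The data and the function name are hypothetical.

```python
import random


def sign_flip_randomization_test(scores_a, scores_b, n_permutations=10000, seed=0):
    """Two-sided p-value for the mean paired difference under random sign flips."""
    rng = random.Random(seed)
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    observed = abs(sum(diffs) / len(diffs))
    hits = 0
    for _ in range(n_permutations):
        # Under the null hypothesis, the sign of each difference is arbitrary.
        flipped = [d if rng.random() < 0.5 else -d for d in diffs]
        if abs(sum(flipped) / len(flipped)) >= observed:
            hits += 1
    # Add one to numerator and denominator so the p-value is never exactly zero.
    return (hits + 1) / (n_permutations + 1)


# Hypothetical accuracies of two algorithms on the same 10 folds.
acc_a = [0.81, 0.79, 0.84, 0.80, 0.83, 0.78, 0.82, 0.85, 0.80, 0.81]
acc_b = [0.78, 0.77, 0.80, 0.79, 0.80, 0.77, 0.79, 0.81, 0.78, 0.80]

p_value = sign_flip_randomization_test(acc_a, acc_b)
print(p_value)
```

Unlike the t-test, this procedure does not require the differences to be normally distributed, at the cost of many more computations per comparison.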

V. Selecting a Statistical Test: Suggested Procedure (Cont’d)

Once a number of candidate tests have been selected by the first stage, a second-stage filter is applied to restrict the choice to a single test. This is done using the following user constraints and preferences, as well as procedural constraints:
• The quantity of interest, which corresponds to the kind of statistical justification sought by the user. Is s/he interested only in a measure of statistical significance, or is some sort of quantification of the results necessary as well (e.g., in the form of a confidence interval)?
• Certain user preferences and constraints regarding the behaviour and evaluation of the test. As in the case of the choice of a performance measure, which is related to the issue of statistical test selection, we are interested in the test's performance with respect to Type I/II errors, consistency, discriminance, and so on.
• Finally, the choice of the test necessarily has to depend on whether the assumptions made by the test are verified by the experimental setting. For example, if the results are not normally distributed, the t-test cannot be applied.