CS 7810 Lecture 7 Trace Cache A Low

- Slides: 15

CS 7810 Lecture 7

Trace Cache: A Low Latency Approach to High Bandwidth Instruction Fetching

E. Rotenberg, S. Bennett, J. E. Smith

Proceedings of MICRO-29, 1996

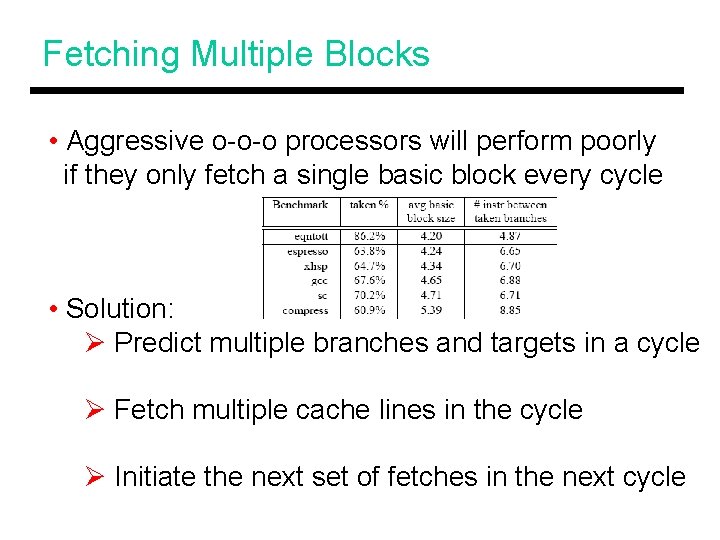

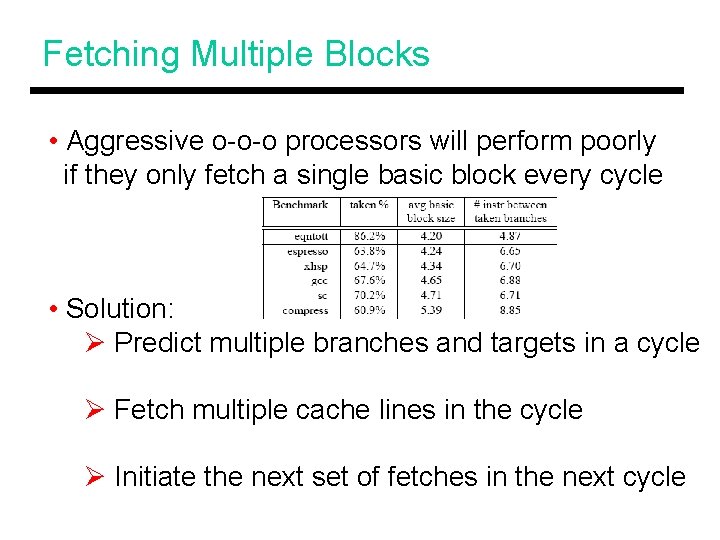

Fetching Multiple Blocks

- Aggressive out-of-order (o-o-o) processors perform poorly if they fetch only a single basic block every cycle
- Solution:
  - Predict multiple branches and targets in a cycle
  - Fetch multiple cache lines in the cycle
  - Initiate the next set of fetches in the next cycle

Without the Trace Cache

- Stage 1 requires identification of predictions and target addresses
- Stage 2 requires multi-ported access of the I-cache
- Stage 3 requires shifting and alignment

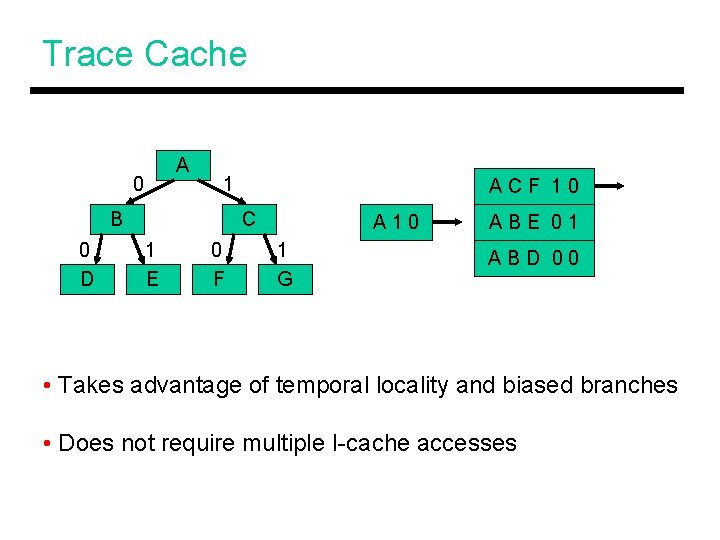

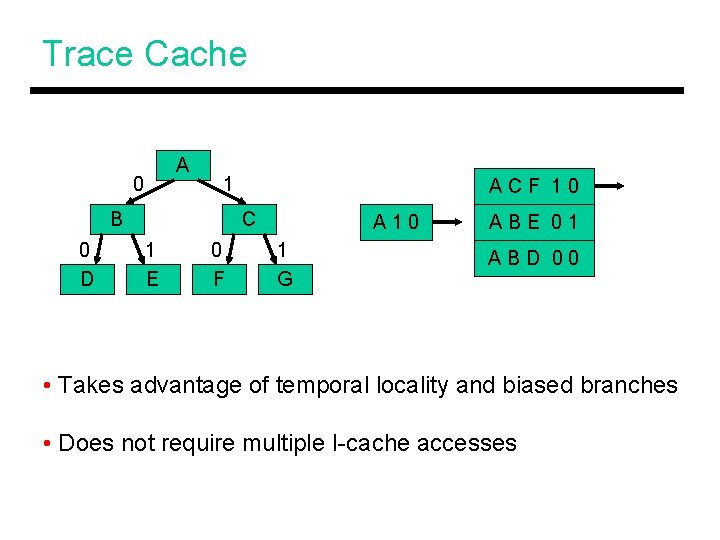

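Trace Cache

[Figure: control-flow graph of basic blocks A through G with 0/1 edge labels, and the stored traces with their branch outcomes: ACF (10), ABE (01), ABD (00)]

- Takes advantage of temporal locality and biased branches
- Does not require multiple I-cache accesses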
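A minimal Python sketch of the lookup idea, using the three example traces from this slide (ACF 10, ABE 01, ABD 00). The names `Trace`, `TraceCache`, `fill`, and `lookup` are hypothetical. Keying a dictionary on (start block, branch outcomes) is an assumption made for brevity: it behaves like a cache that may hold several paths from the same start address, which is not necessarily how the paper's base design is organized.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Sequence, Tuple


@dataclass(frozen=True)
class Trace:
    start: str                 # label (stand-in for the address) of the first block
    outcomes: Tuple[int, ...]  # outcomes of the branches inside the trace, 1 = taken
    blocks: Tuple[str, ...]    # basic blocks stored contiguously in one line


class TraceCache:
    def __init__(self) -> None:
        self._lines: Dict[Tuple[str, Tuple[int, ...]], Trace] = {}

    def fill(self, trace: Trace) -> None:
        # Store the trace under its start block and embedded branch outcomes.
        self._lines[(trace.start, trace.outcomes)] = trace

    def lookup(self, fetch_block: str, predictions: Sequence[int]) -> Optional[Trace]:
        # Hit only if both the start block and the predicted path match.
        return self._lines.get((fetch_block, tuple(predictions)))


tc = TraceCache()
for blocks, outcomes in [("ACF", (1, 0)), ("ABE", (0, 1)), ("ABD", (0, 0))]:
    tc.fill(Trace(start=blocks[0], outcomes=outcomes, blocks=tuple(blocks)))

hit = tc.lookup("A", [1, 0])   # predicted path matches the stored trace ACF
miss = tc.lookup("A", [1, 1])  # no stored trace follows this path
```

On a hit, all three blocks come from a single structure in one access, which is the point of the second bullet: no multi-ported or multi-bank I-cache read is needed for that cycle.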

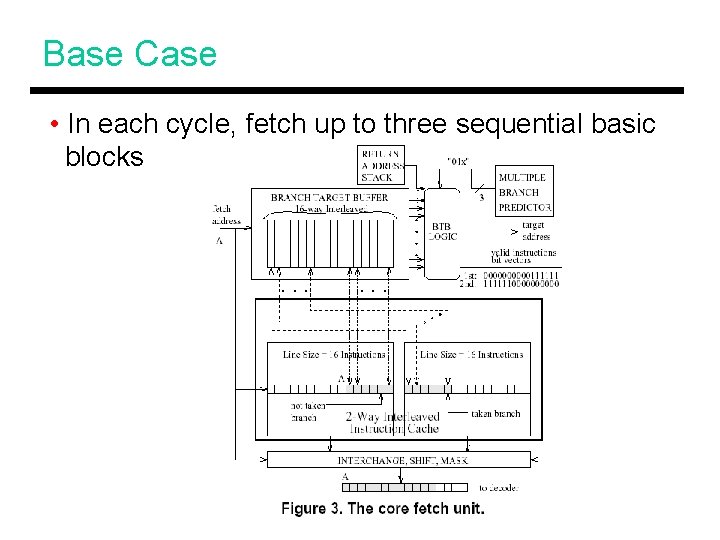

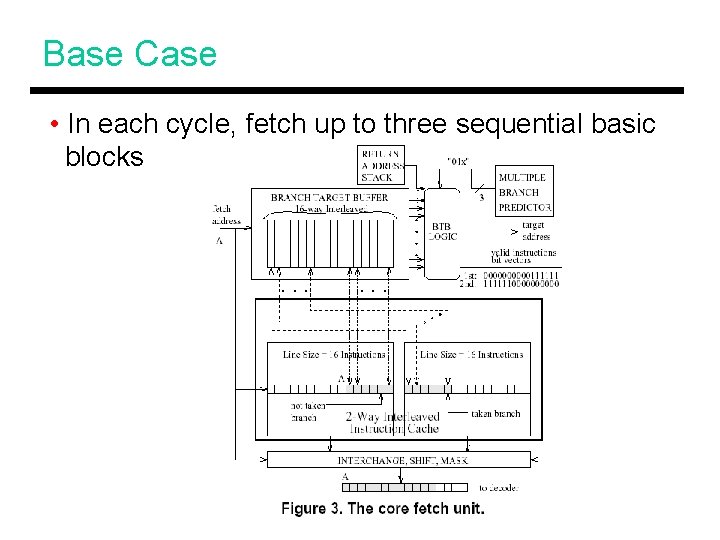

Base Case

- In each cycle, fetch up to three sequential basic blocks

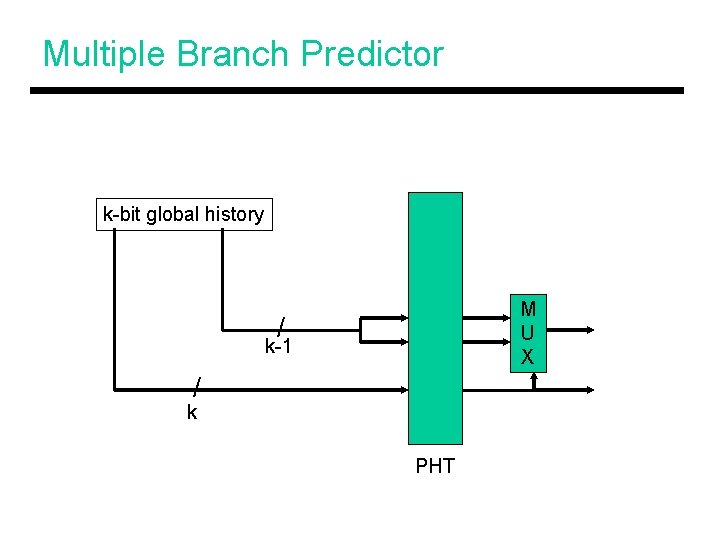

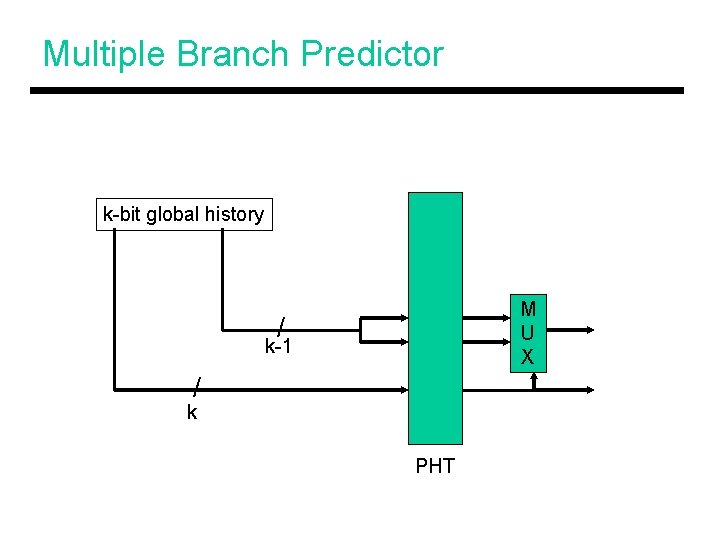

Multiple Branch Predictor

[Figure: multiple branch predictor; labels: k-bit global history, MUX, k-1, k, PHT]
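The figure labels suggest a global-history predictor that produces several predictions from one pattern history table (PHT) in a cycle. The sketch below is one way to model that in Python, under stated assumptions: 2-bit saturating counters, three predictions per cycle, and a sequential loop standing in for the hardware MUX. Reading `pht[((h << 1) | p) & mask]` is equivalent to reading the two adjacent counters addressed by the low k-1 history bits and selecting one with the earlier prediction `p`. The class name `MultiBranchPredictor` and its methods are hypothetical.

```python
class MultiBranchPredictor:
    def __init__(self, k: int = 4, n_preds: int = 3) -> None:
        self.mask = (1 << k) - 1
        self.history = 0             # k-bit global history register
        self.pht = [1] * (1 << k)    # 2-bit counters, start weakly not-taken
        self.n_preds = n_preds

    def predict(self) -> list:
        # Each later prediction uses the history extended (speculatively)
        # with the predictions made before it in the same cycle.
        h, preds = self.history, []
        for _ in range(self.n_preds):
            taken = 1 if self.pht[h] >= 2 else 0
            preds.append(taken)
            h = ((h << 1) | taken) & self.mask
        return preds

    def update(self, outcomes) -> None:
        # Train with resolved outcomes, oldest branch first.
        for taken in outcomes:
            c = self.pht[self.history]
            self.pht[self.history] = min(3, c + 1) if taken else max(0, c - 1)
            self.history = ((self.history << 1) | taken) & self.mask


bp = MultiBranchPredictor(k=2)
bp.pht = [3, 0, 3, 0]   # hand-set counters for illustration
preds = bp.predict()    # three predictions from one history value
```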

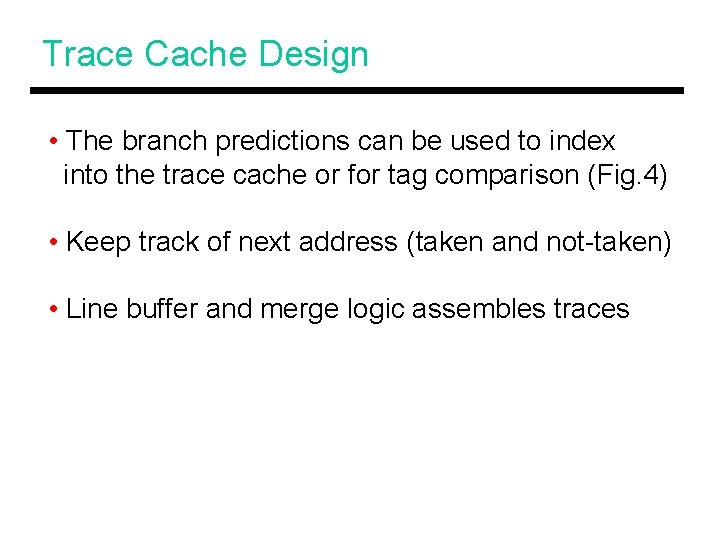

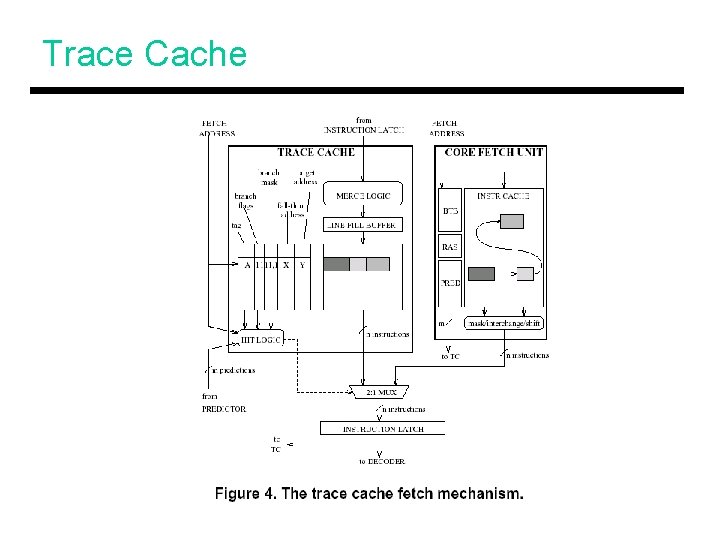

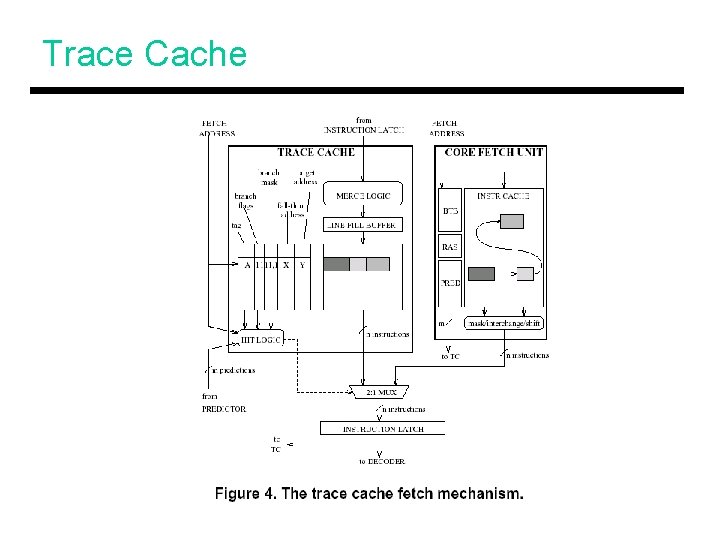

Trace Cache Design

- The branch predictions can be used to index into the trace cache or for tag comparison (Fig. 4)
- Keep track of the next address (taken and not-taken)
- Line buffer and merge logic assemble traces
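The trace assembly step can be sketched as follows, using the limits of 16 instructions and 3 branches per entry given in the Methodology slide of this lecture. This is a simplified model, not the paper's line-fill logic: it assumes every block ends in a conditional branch and cuts traces only at whole-block boundaries. The function name `build_trace` and the tuple layout are hypothetical.

```python
MAX_INSTRS = 16    # instructions per trace cache entry
MAX_BRANCHES = 3   # branches (basic blocks) per trace cache entry


def build_trace(retired_blocks):
    """retired_blocks: sequence of (label, n_instrs, taken) in execution order.

    Returns (labels, branch_flags, n_instrs) for the trace that starts at the
    first block.
    """
    labels, outcomes, n_instrs = [], [], 0
    for label, size, taken in retired_blocks:
        if len(outcomes) == MAX_BRANCHES or n_instrs + size > MAX_INSTRS:
            break
        labels.append(label)
        outcomes.append(taken)
        n_instrs += size
    # The last branch's outcome is not part of the path inside the trace;
    # it only chooses between the stored taken and not-taken next addresses.
    return labels, tuple(outcomes[:-1]), n_instrs


full = build_trace([("A", 5, 1), ("C", 4, 0), ("F", 6, 1), ("G", 3, 0)])
short = build_trace([("A", 10, 0), ("B", 8, 1)])
```

The first call stops at the three-branch limit, the second at the instruction limit, which is why some entries hold fewer than three blocks.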

Trace Cache

Design Alternatives

- Associativity (including paths)
- Partial matches: use all instructions up to the first mispredict
- Multiple line-fill buffers
- Trace selection to reduce conflicts
- Multi-cycle trace caches?
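Partial matching can be illustrated with a small Python sketch. It assumes that "mispredict" here means the first point where the current predictions disagree with the branch outcomes embedded in the stored trace; the function name `partial_match` is hypothetical.

```python
def partial_match(blocks, flags, predictions):
    """Return the prefix of a stored trace that agrees with the predictions.

    blocks: basic blocks in the stored trace, e.g. ["A", "B", "D"]
    flags: embedded outcome of the branch ending each block except the last
    predictions: current predictions for those same branches
    """
    usable = [blocks[0]]  # the first block is valid once the start address matches
    for next_block, flag, pred in zip(blocks[1:], flags, predictions):
        if flag != pred:
            break         # the stored path diverges from the predicted path here
        usable.append(next_block)
    return usable


whole = partial_match(["A", "B", "D"], (0, 0), (0, 0))
two = partial_match(["A", "B", "D"], (0, 0), (0, 1))
one = partial_match(["A", "B", "D"], (0, 0), (1, 0))
```

Without partial matching, the second and third lookups would be treated as misses and the blocks already sitting in the line would be refetched from the I-cache.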

Branch Address Cache

- The BTB maintains 14 addresses (tree of basic blocks)
- Based on the branch prediction, three addresses are forwarded to the I-Cache
- BTB extension that allows multiple target prediction
  - adds pipeline stages
  - can still have I-Cache bank contention

Collapsing Buffer

- Can detect taken branches within a single cache line
- Also suffers from merge logic and bank contention

Methodology

- Very aggressive o-o-o processor: large window (2048 instrs), unlimited resources, no artificial dependences, no cache misses
- SPEC92 Int and Instruction Benchmark Suite (IBS)
- Trace cache:
  - 64 entries, 16 instrs and 3 branches per entry
  - 712 tag bytes and 4 KB worth of instructions
  - ICache is 128 KB
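The storage figures above can be checked with a few lines of arithmetic. The only assumption not stated on the slide is a fixed 4-byte instruction encoding; the variable names are illustrative.

```python
ENTRIES = 64
INSTRS_PER_ENTRY = 16
BYTES_PER_INSTR = 4      # assumption: fixed 4-byte instructions
TAG_BYTES_TOTAL = 712
ICACHE_BYTES = 128 * 1024

instr_bytes = ENTRIES * INSTRS_PER_ENTRY * BYTES_PER_INSTR   # instruction storage
tag_bits_per_entry = TAG_BYTES_TOTAL * 8 / ENTRIES           # tag and control bits per line
icache_ratio = ICACHE_BYTES / instr_bytes                    # I-cache size relative to trace cache
```

Under that assumption the instruction storage comes to exactly 4 KB, the tag overhead to 89 bits per entry, and the I-cache is 32 times larger than the trace cache's instruction storage.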

Results

- Fetching three sequential basic blocks (SEQ.3) is not much more complex than fetching one, and improves IPC by ~15%
- Trace cache outperforms BAC and CB; note that the latter two can't handle all kinds of trace patterns and suffer from ICache bank contention
- TC outperforms SEQ.3 by 12%
- BAC and CB do worse than SEQ.3 if they increase front-end latency

Ideal Fetch

- The trace cache is within 20% of ideal fetch
- The trace miss rate is fairly high: 18-76%
- Up to 60% of instructions do not come from the trace cache
- A larger trace cache comes within 10% of ideal fetch; note that the front-end is the bottleneck in this processor
