CS 519 Advanced Operating Systems Multithreading and Synchronization

CS 519 Advanced Operating Systems Multi-threading and Synchronization Yeongjin Jang 02/21/19

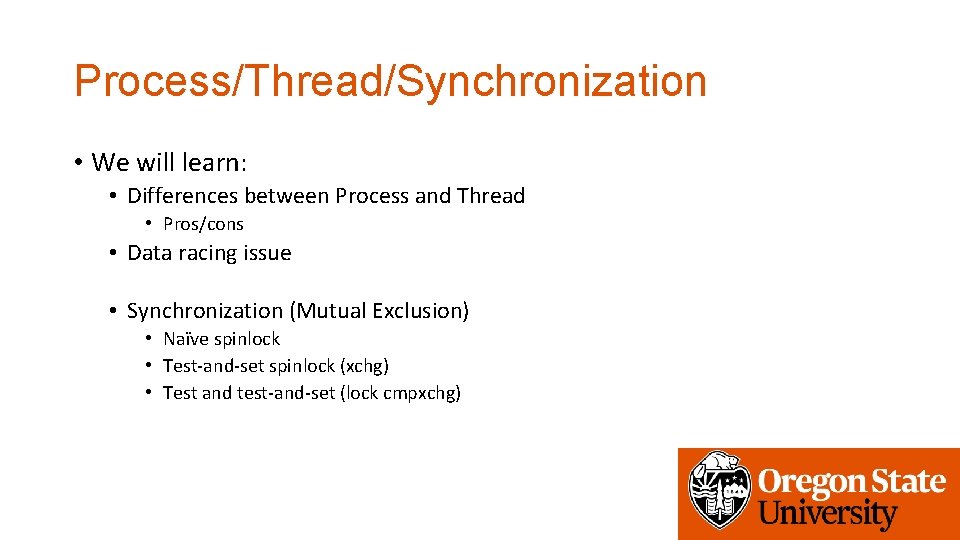

Process/Thread/Synchronization

- We will learn:
  - Differences between process and thread
    - Pros/cons
  - Data race issue
  - Synchronization (mutual exclusion)
    - Naïve spinlock
    - Test-and-set spinlock (xchg)
    - Test and test-and-set (lock cmpxchg)

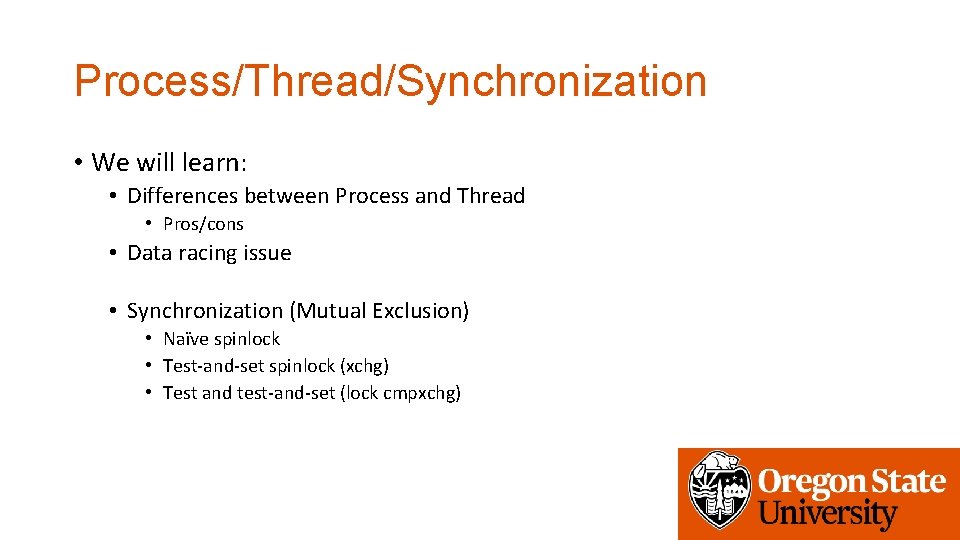

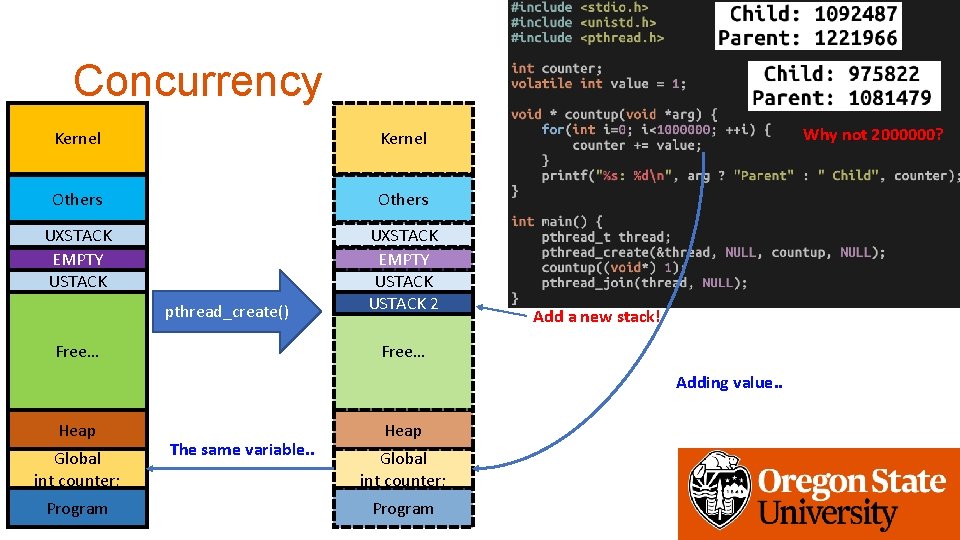

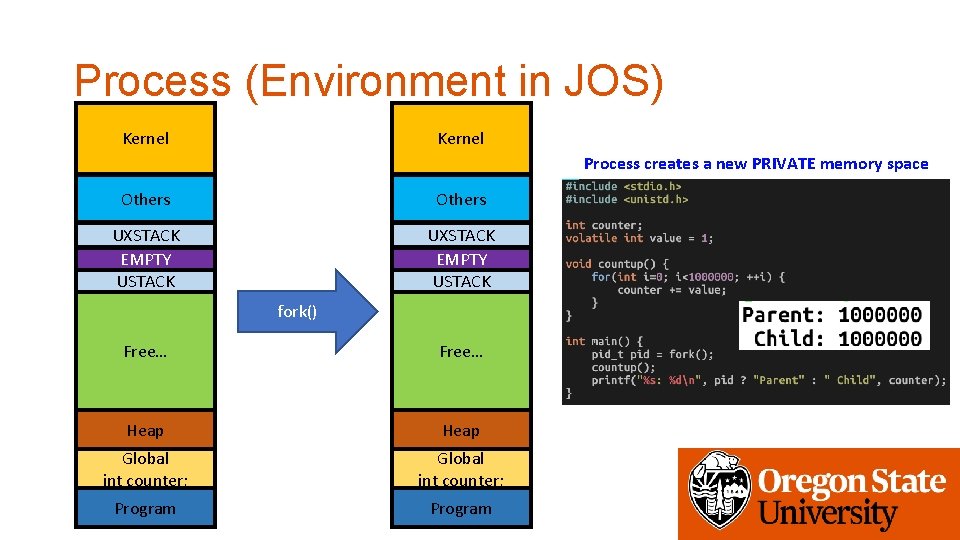

Process (Environment in JOS)

- A process creates a new PRIVATE memory space

[Diagram: the address space duplicated by fork(): Kernel, Others, UXSTACK, EMPTY, USTACK, Free…, Heap, Global (int counter;), Program]

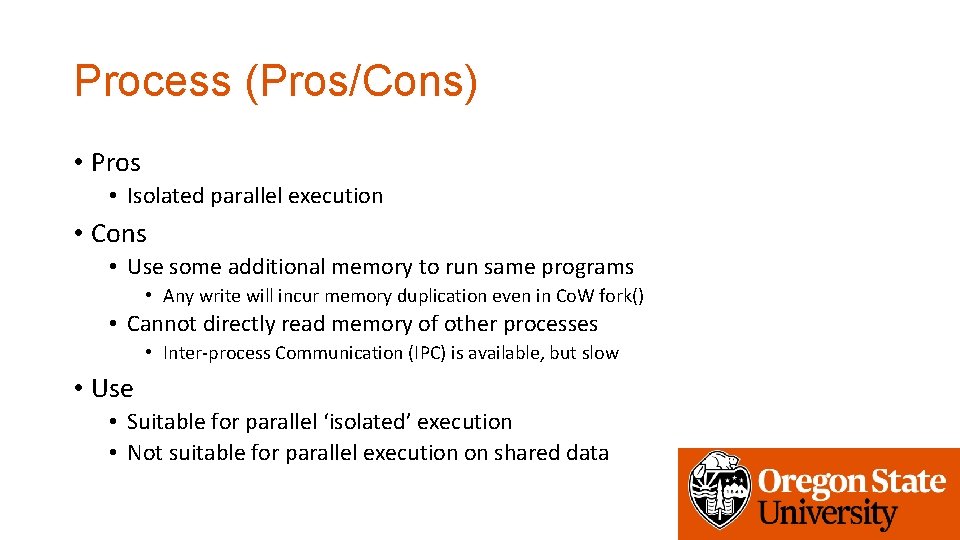

Process (Pros/Cons)

- Pros
  - Isolated parallel execution
- Cons
  - Uses additional memory to run the same program
    - Any write incurs a memory copy, even with a CoW fork()
  - Cannot directly read the memory of other processes
    - Inter-process communication (IPC) is available, but slow
- Use
  - Suitable for parallel ‘isolated’ execution
  - Not suitable for parallel execution on shared data
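The isolation described above can be observed directly. The following is a minimal sketch, assuming a POSIX system; the function name `parent_view_after_child_write` is hypothetical and not part of the lecture's code. The child writes to the global `counter`, and the parent still sees the old value because the write lands in the child's private copy.

```c
#include <assert.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

int counter = 0;  /* global; after fork() each process has its own copy */

/* Forks a child that modifies counter, waits for the child to exit,
 * and returns the value that the parent still sees. */
int parent_view_after_child_write(void) {
    pid_t pid = fork();
    assert(pid >= 0);
    if (pid == 0) {
        counter += 100;  /* lands in the child's private (CoW) page */
        _exit(0);
    }
    int status = 0;
    pid_t done = waitpid(pid, &status, 0);
    assert(done == pid);
    return counter;      /* unchanged in the parent */
}
```

Calling `parent_view_after_child_write()` returns 0: the child's update is invisible to the parent, which is why processes need IPC to share results.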

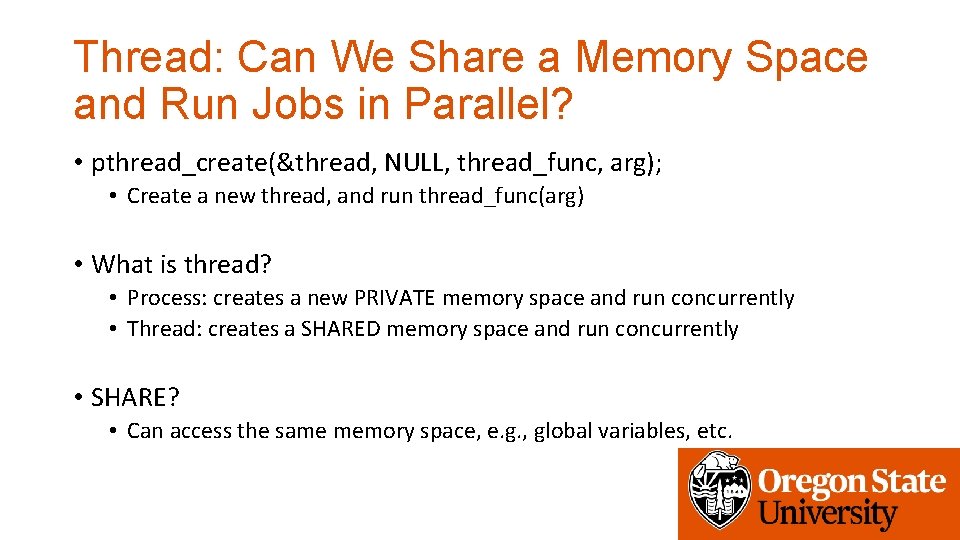

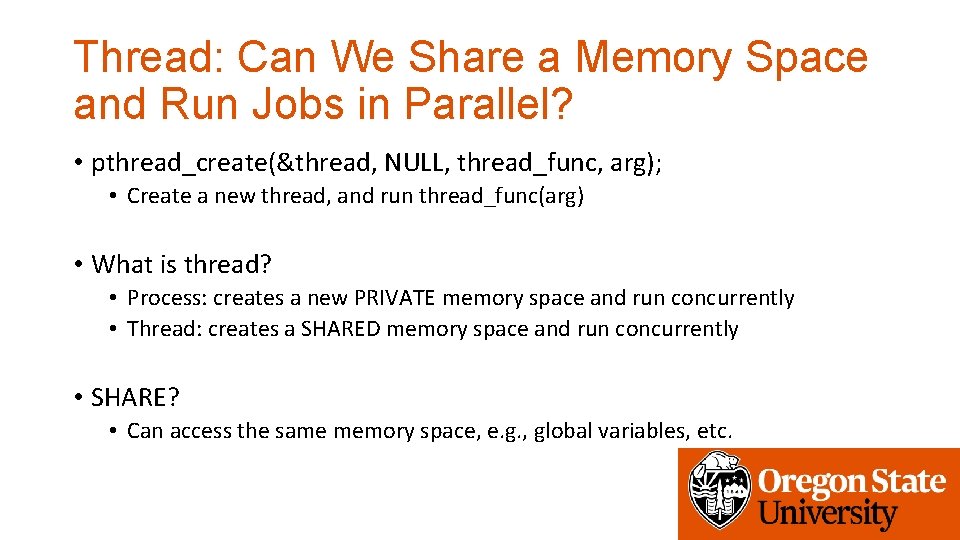

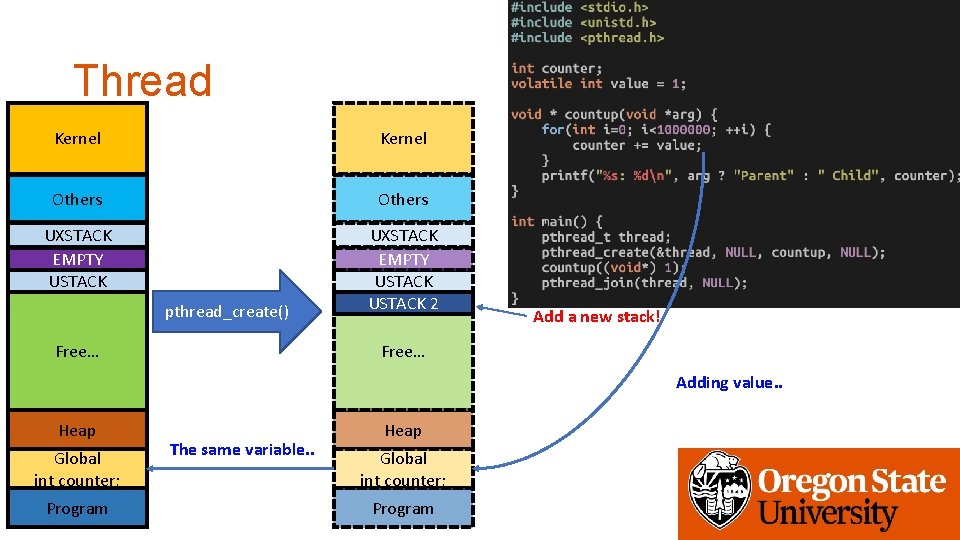

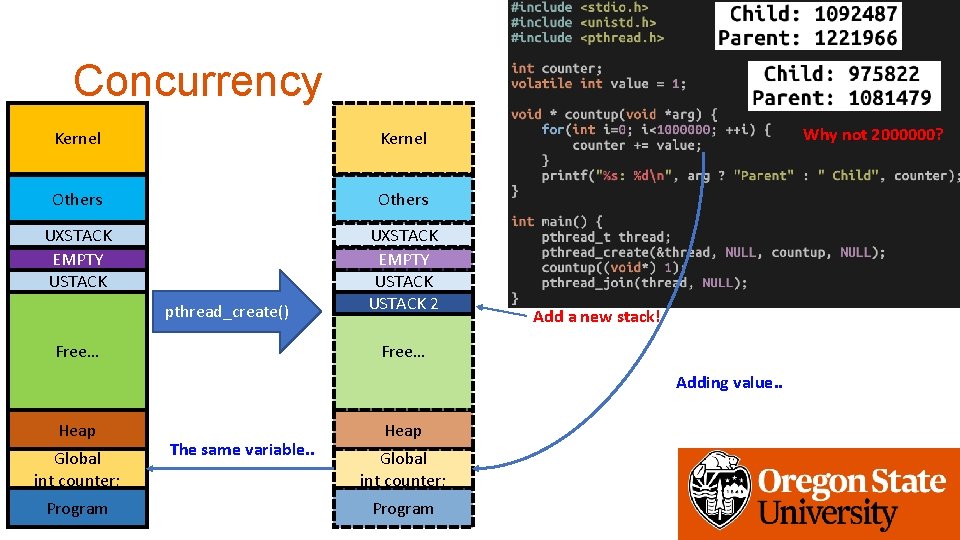

Thread: Can We Share a Memory Space and Run Jobs in Parallel?

- pthread_create(&thread, NULL, thread_func, arg);
  - Creates a new thread and runs thread_func(arg)
- What is a thread?
  - Process: creates a new PRIVATE memory space and runs concurrently
  - Thread: runs concurrently in a SHARED memory space
- SHARE?
  - Threads can access the same memory space, e.g., global variables
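A minimal sketch of the `pthread_create` call above, assuming POSIX threads. The helper `run_one_thread` is a hypothetical name introduced for illustration. The new thread writes to the same global variable that the creating thread reads afterwards.

```c
#include <assert.h>
#include <pthread.h>

int counter = 0;  /* global, visible to every thread in the process */

static void *thread_func(void *arg) {
    counter += *(int *)arg;  /* writes the variable the caller reads */
    return NULL;
}

/* Creates one thread that adds value to counter, waits for it to
 * finish, and returns the counter as seen by the calling thread. */
int run_one_thread(int value) {
    pthread_t thread;
    int rc = pthread_create(&thread, NULL, thread_func, &value);
    assert(rc == 0);
    rc = pthread_join(thread, NULL);
    assert(rc == 0);
    return counter;
}
```

With `counter` starting at 0, `run_one_thread(5)` returns 5. No IPC is involved; the join is what makes it safe for the caller to read the result.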

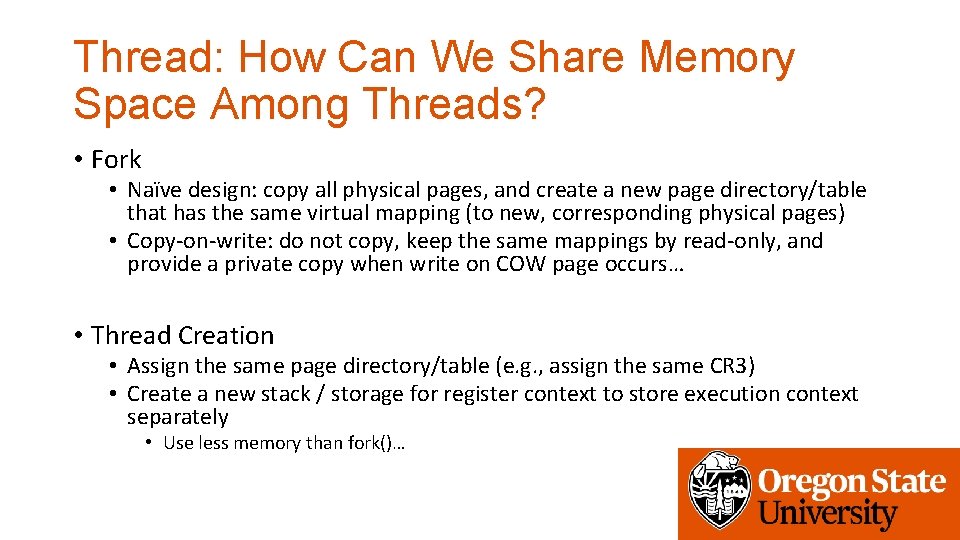

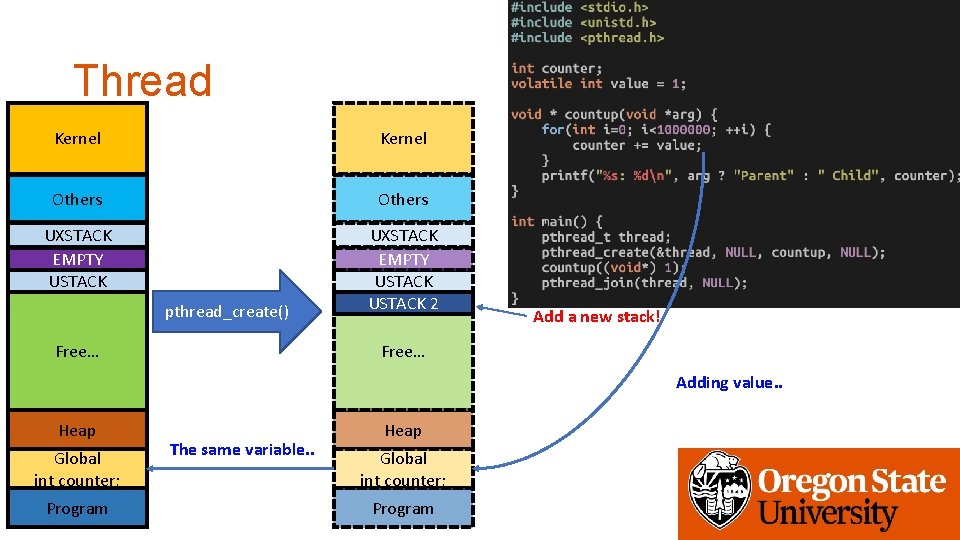

Thread: How Can We Share Memory Space Among Threads?

- Fork
  - Naïve design: copy all physical pages, and create a new page directory/table that has the same virtual mappings (to the new, corresponding physical pages)
  - Copy-on-write: do not copy; keep the same mappings as read-only, and provide a private copy when a write to a COW page occurs
- Thread creation
  - Assign the same page directory/table (e.g., assign the same CR3)
  - Create a new stack and storage for the register context, so that each thread's execution context is stored separately
  - Uses less memory than fork()

Thread

[Diagram: pthread_create() adds a new stack (USTACK 2) to the same address space (Kernel, Others, UXSTACK, EMPTY, USTACK, Free…, Heap, Global, Program). Both threads add a value to the same global variable, int counter;]

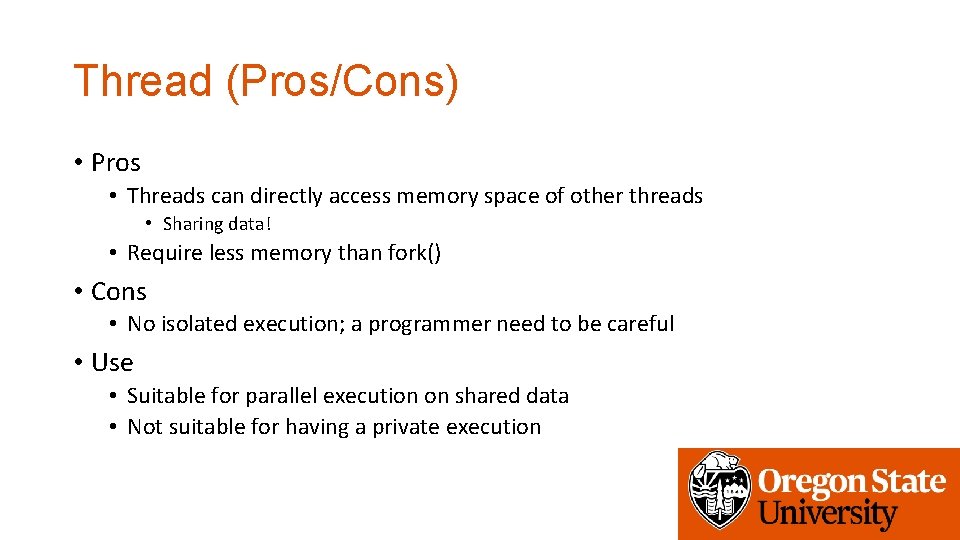

Thread (Pros/Cons)

- Pros
  - Threads can directly access the memory space of other threads
    - Sharing data!
  - Requires less memory than fork()
- Cons
  - No isolated execution; the programmer needs to be careful
- Use
  - Suitable for parallel execution on shared data
  - Not suitable for private (isolated) execution

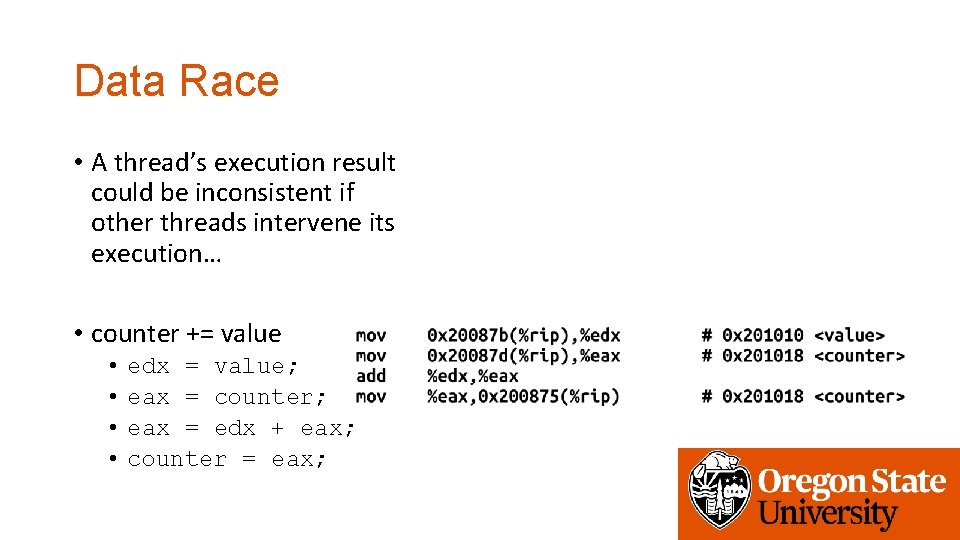

Concurrency

[Diagram: the same address space with two stacks after pthread_create(). Both threads add values to the same global variable, int counter;, and the result is not what we expect. Why not 2000000?]

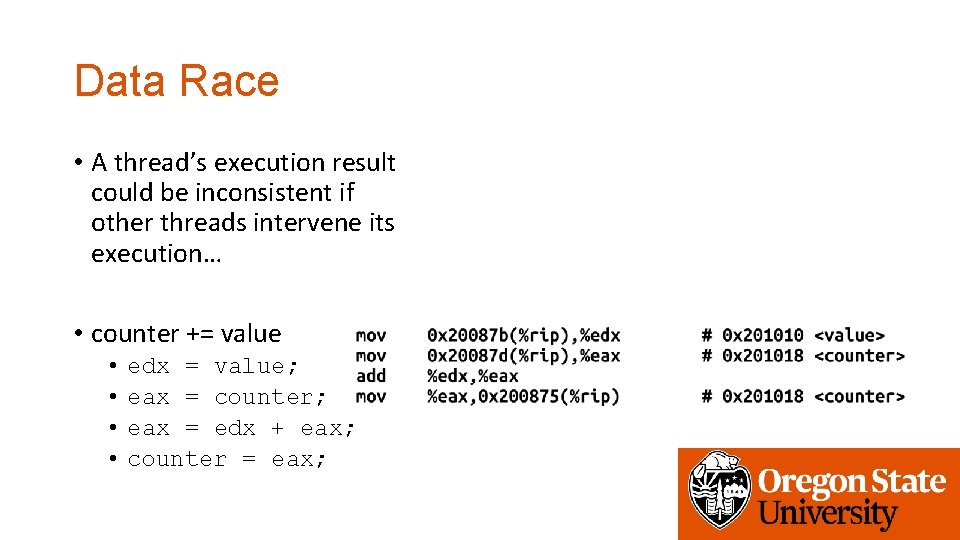

Data Race

- A thread's execution result can be inconsistent if other threads interleave with its execution
- counter += value is executed as several steps:
  - edx = value;
  - eax = counter;
  - eax = edx + eax;
  - counter = eax;

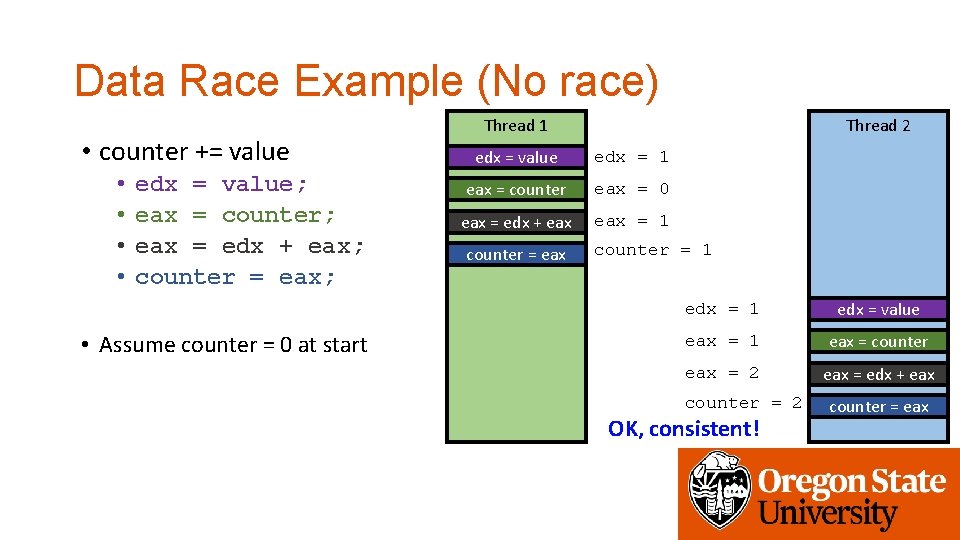

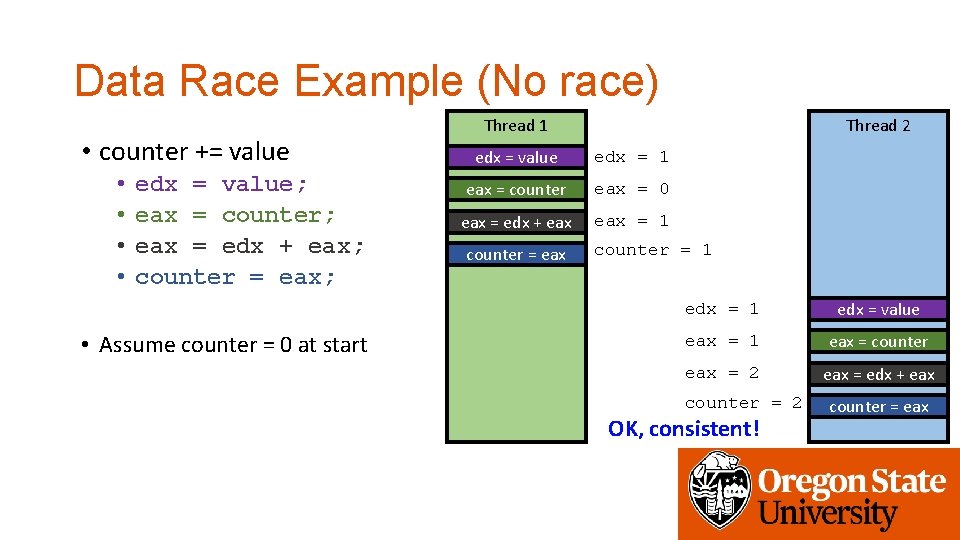

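Data Race Example (No race)

- counter += value
  - edx = value; eax = counter; eax = edx + eax; counter = eax;
- Assume counter = 0 at start (value is 1 in this trace)

| Step | Thread 1 | Thread 2 | Result |
|---|---|---|---|
| 1 | edx = value | | edx = 1 |
| 2 | eax = counter | | eax = 0 |
| 3 | eax = edx + eax | | eax = 1 |
| 4 | counter = eax | | counter = 1 |
| 5 | | edx = value | edx = 1 |
| 6 | | eax = counter | eax = 1 |
| 7 | | eax = edx + eax | eax = 2 |
| 8 | | counter = eax | counter = 2 |

OK, consistent!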

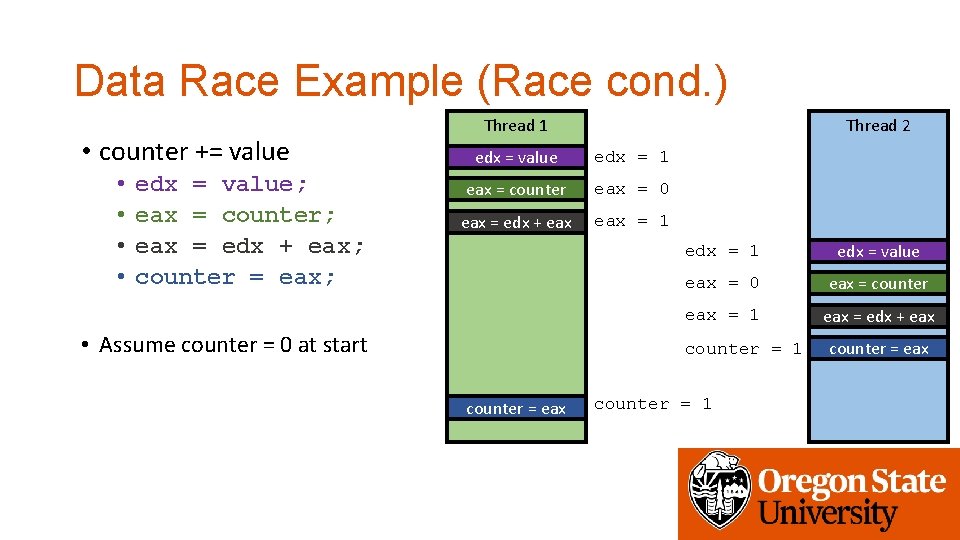

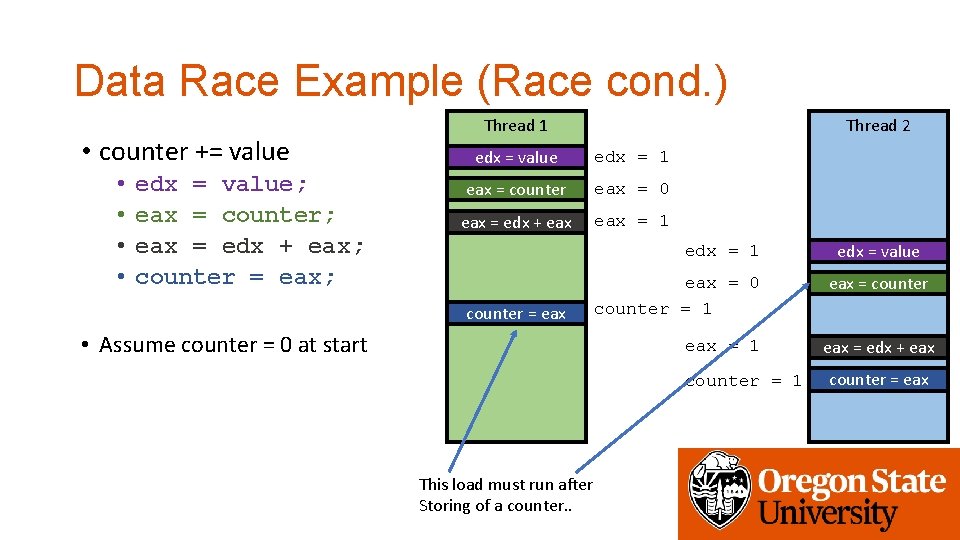

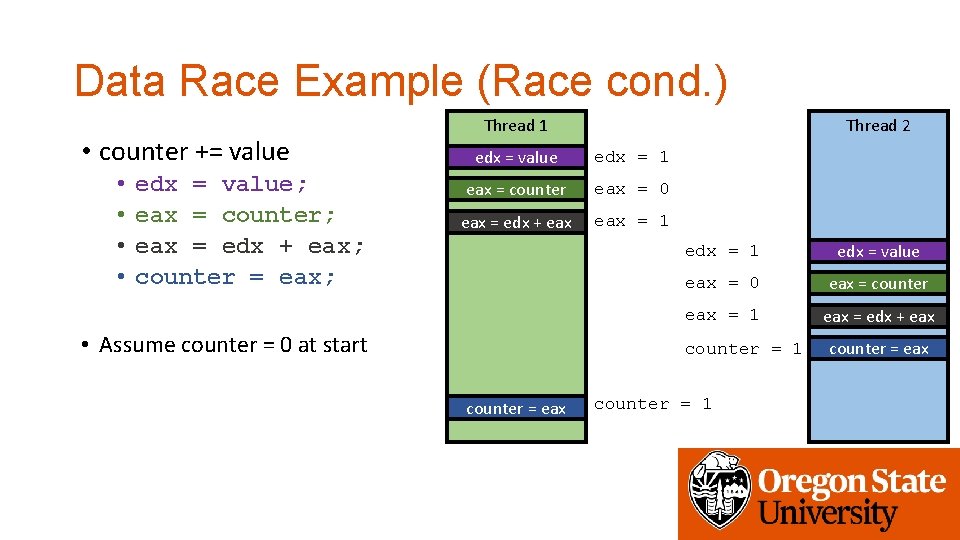

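Data Race Example (Race cond.)

- counter += value
  - edx = value; eax = counter; eax = edx + eax; counter = eax;
- Assume counter = 0 at start

| Step | Thread 1 | Thread 2 | Result |
|---|---|---|---|
| 1 | edx = value | | edx = 1 |
| 2 | eax = counter | | eax = 0 |
| 3 | eax = edx + eax | | eax = 1 |
| 4 | | edx = value | edx = 1 |
| 5 | | eax = counter | eax = 0 |
| 6 | | eax = edx + eax | eax = 1 |
| 7 | | counter = eax | counter = 1 |

Thread 1 has not stored its result yet, so Thread 2 reads counter = 0.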

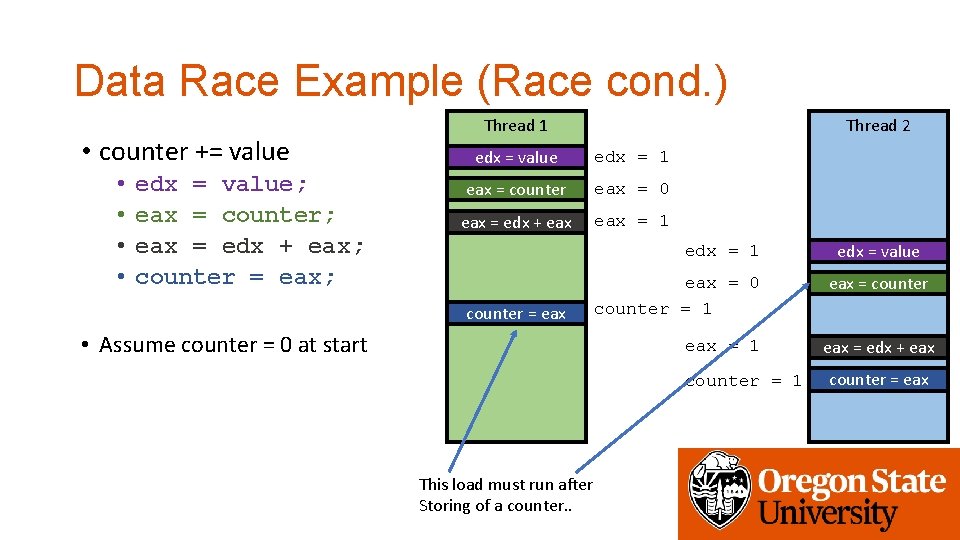

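Data Race Example (Race cond.)

- counter += value
  - edx = value; eax = counter; eax = edx + eax; counter = eax;
- Assume counter = 0 at start

| Step | Thread 1 | Thread 2 | Result |
|---|---|---|---|
| 1 | edx = value | | edx = 1 |
| 2 | eax = counter | | eax = 0 |
| 3 | eax = edx + eax | | eax = 1 |
| 4 | | edx = value | edx = 1 |
| 5 | | eax = counter | eax = 0 |
| 6 | counter = eax | | counter = 1 |
| 7 | | eax = edx + eax | eax = 1 |
| 8 | | counter = eax | counter = 1 |

Both threads store counter = 1, so one update is lost. Thread 2's load of counter must run after Thread 1's store to counter.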

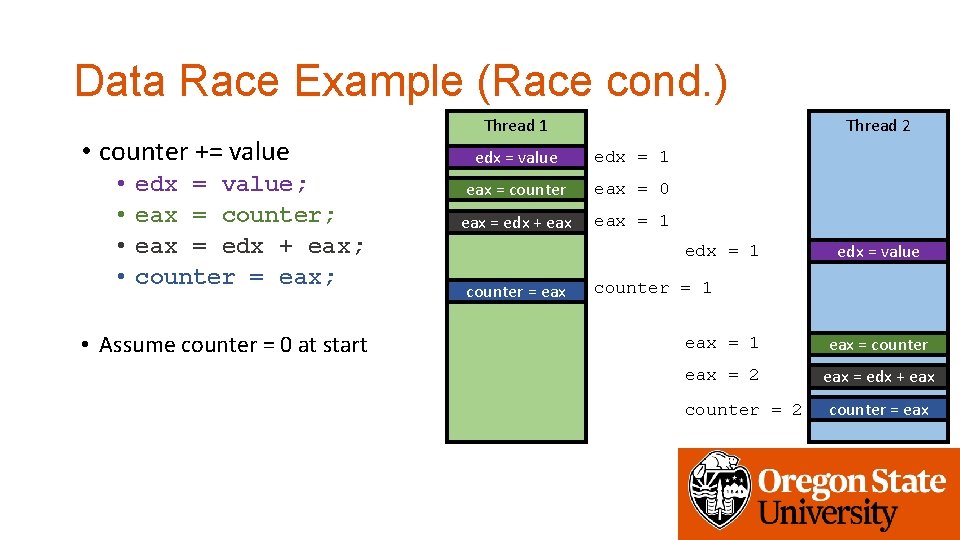

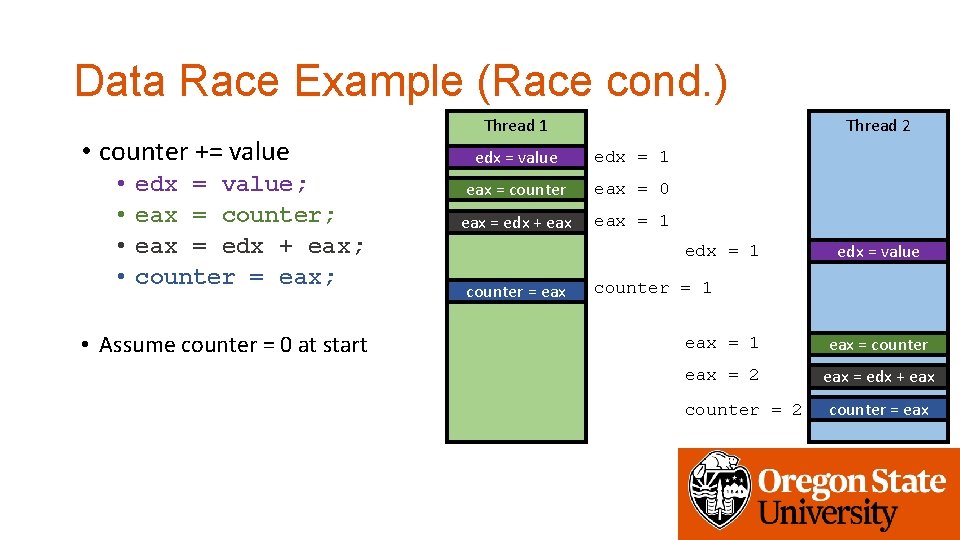

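Data Race Example (Race cond.)

- counter += value
  - edx = value; eax = counter; eax = edx + eax; counter = eax;
- Assume counter = 0 at start

| Step | Thread 1 | Thread 2 | Result |
|---|---|---|---|
| 1 | edx = value | | edx = 1 |
| 2 | eax = counter | | eax = 0 |
| 3 | eax = edx + eax | | eax = 1 |
| 4 | | edx = value | edx = 1 |
| 5 | counter = eax | | counter = 1 |
| 6 | | eax = counter | eax = 1 |
| 7 | | eax = edx + eax | eax = 2 |
| 8 | | counter = eax | counter = 2 |

When Thread 2 loads counter after Thread 1's store, the result is consistent (counter = 2).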
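The interleavings traced on these slides can be replayed deterministically. The sketch below is only a model, not real concurrent code: `struct regs` and the step functions are hypothetical names, and each "thread" is a pair of simulated registers so that the order of the four steps can be chosen by hand.

```c
#include <assert.h>

/* Simulated per-thread register context (a model, not real registers). */
struct regs { int eax, edx; };

static int counter;

static void load_value(struct regs *r, int value) { r->edx = value; }
static void load_counter(struct regs *r)  { r->eax = counter; }
static void add(struct regs *r)           { r->eax = r->edx + r->eax; }
static void store_counter(struct regs *r) { counter = r->eax; }

/* Thread 1 finishes all four steps before thread 2 starts. */
int replay_serial(void) {
    struct regs t1, t2;
    counter = 0;
    load_value(&t1, 1); load_counter(&t1); add(&t1); store_counter(&t1);
    load_value(&t2, 1); load_counter(&t2); add(&t2); store_counter(&t2);
    return counter;
}

/* Thread 2 loads counter before thread 1 stores it: one update is lost. */
int replay_race(void) {
    struct regs t1, t2;
    counter = 0;
    load_value(&t1, 1); load_counter(&t1); add(&t1);
    load_value(&t2, 1); load_counter(&t2); add(&t2);
    store_counter(&t1);
    store_counter(&t2);
    return counter;
}
```

`replay_serial()` returns 2 and `replay_race()` returns 1. The only difference between the two is where thread 2's load falls relative to thread 1's store.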

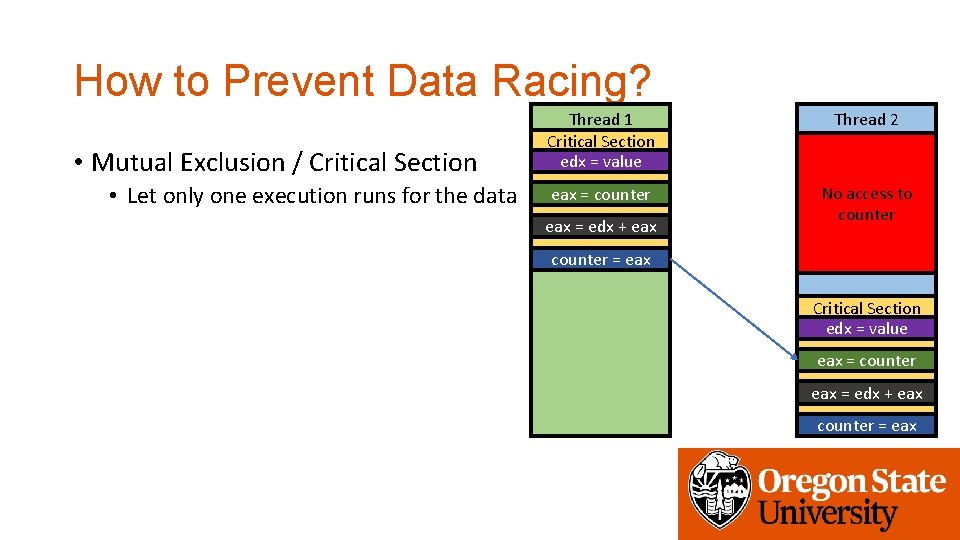

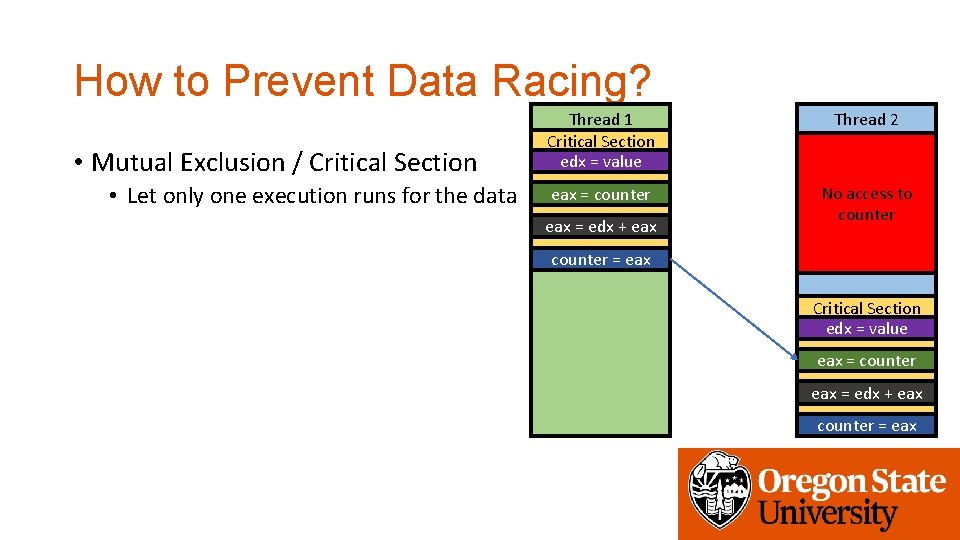

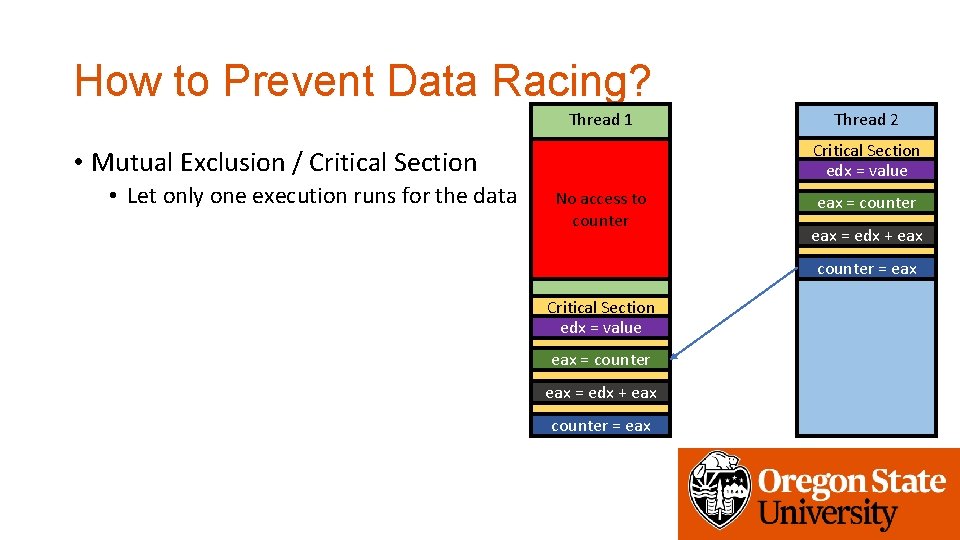

How to Prevent Data Racing?

- Mutual Exclusion / Critical Section
  - Let only one thread at a time execute on the data

[Diagram: Thread 1 runs its critical section (edx = value; eax = counter; eax = edx + eax; counter = eax) while Thread 2 has no access to counter. Thread 2 then runs the same critical section.]

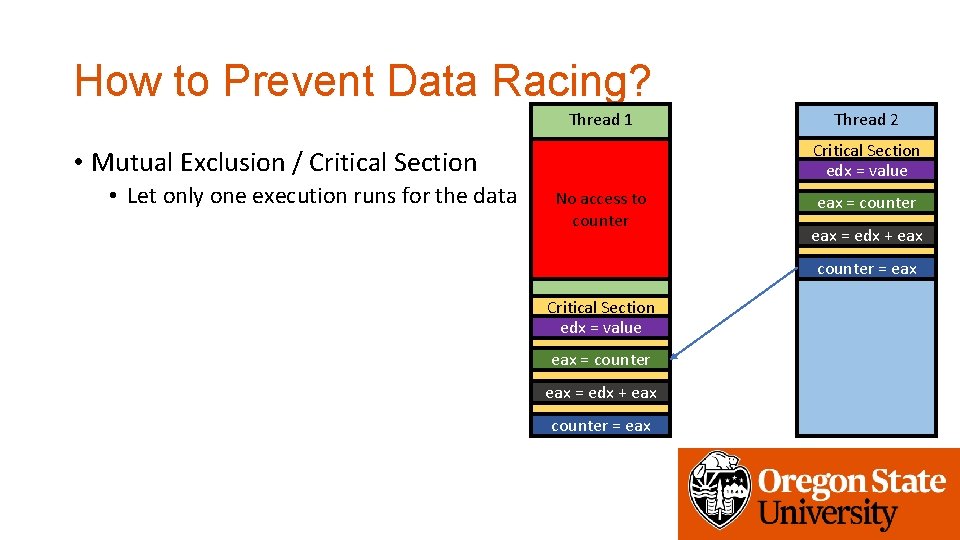

How to Prevent Data Racing?

- Mutual Exclusion / Critical Section
  - Let only one thread at a time execute on the data

[Diagram, next frame: while Thread 1 is inside its critical section, Thread 2 has no access to counter. Thread 2's critical section (edx = value; eax = counter; eax = edx + eax; counter = eax) starts only after Thread 1 has stored counter.]

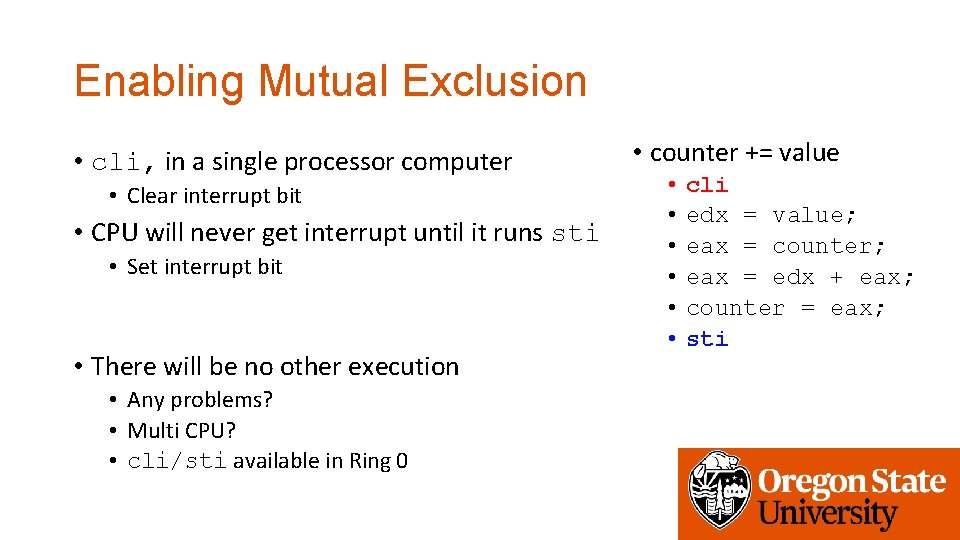

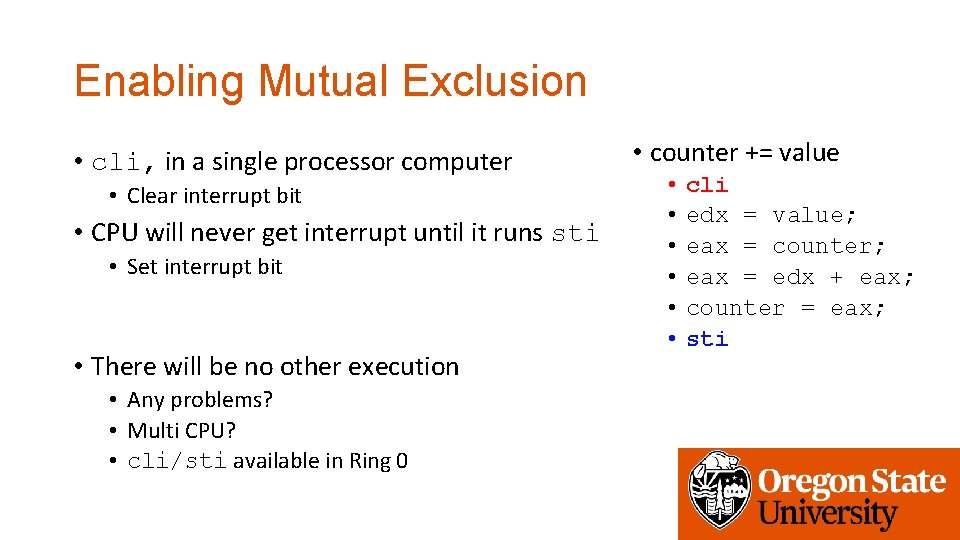

Enabling Mutual Exclusion

- cli, on a single-processor computer
  - Clears the interrupt flag
  - The CPU will not take (maskable) interrupts until it runs sti
    - sti sets the interrupt flag
  - There will be no other execution in between
- counter += value
  - cli
  - edx = value; eax = counter; eax = edx + eax; counter = eax;
  - sti
- Any problems?
  - Multiple CPUs?
  - cli/sti are available only in Ring 0

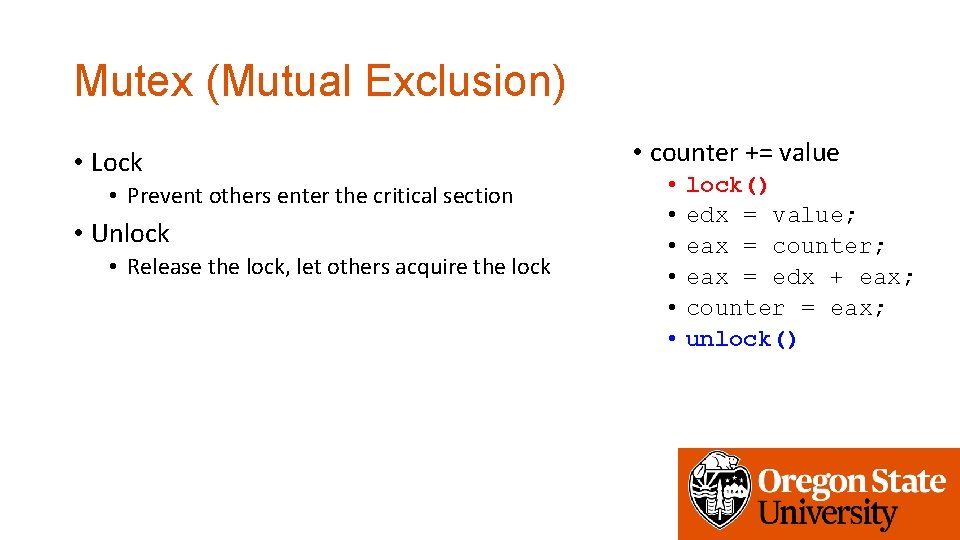

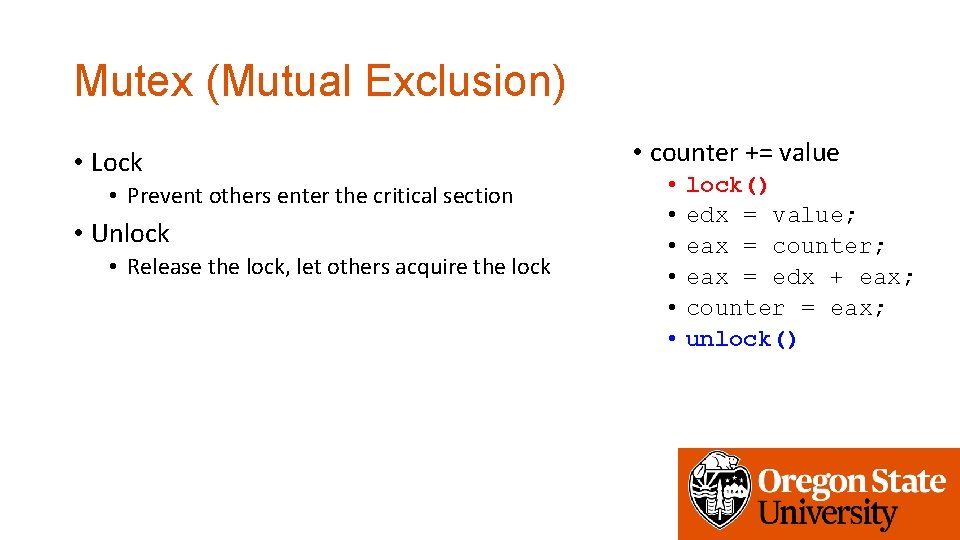

Mutex (Mutual Exclusion)

- Lock
  - Prevents others from entering the critical section
- Unlock
  - Releases the lock so that others can acquire it
- counter += value
  - lock()
  - edx = value; eax = counter; eax = edx + eax; counter = eax;
  - unlock()
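The lock()/unlock() pattern above maps onto the POSIX mutex API. A minimal sketch follows, assuming pthreads; `NTHREADS`, `NITERS`, `worker`, and `run_locked_counter` are illustrative names, not the lecture's test program.

```c
#include <assert.h>
#include <pthread.h>

#define NTHREADS 4
#define NITERS   100000

static int counter = 0;
static pthread_mutex_t counter_lock = PTHREAD_MUTEX_INITIALIZER;

static void *worker(void *arg) {
    (void)arg;
    for (int i = 0; i < NITERS; i++) {
        pthread_mutex_lock(&counter_lock);    /* lock() */
        counter += 1;                         /* critical section */
        pthread_mutex_unlock(&counter_lock);  /* unlock() */
    }
    return NULL;
}

/* Runs NTHREADS workers to completion and returns the final counter. */
int run_locked_counter(void) {
    pthread_t threads[NTHREADS];
    counter = 0;
    for (int i = 0; i < NTHREADS; i++) {
        int rc = pthread_create(&threads[i], NULL, worker, NULL);
        assert(rc == 0);
    }
    for (int i = 0; i < NTHREADS; i++)
        pthread_join(threads[i], NULL);
    return counter;
}
```

Because every increment happens with the mutex held, `run_locked_counter()` returns `NTHREADS * NITERS` regardless of how the threads are scheduled.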

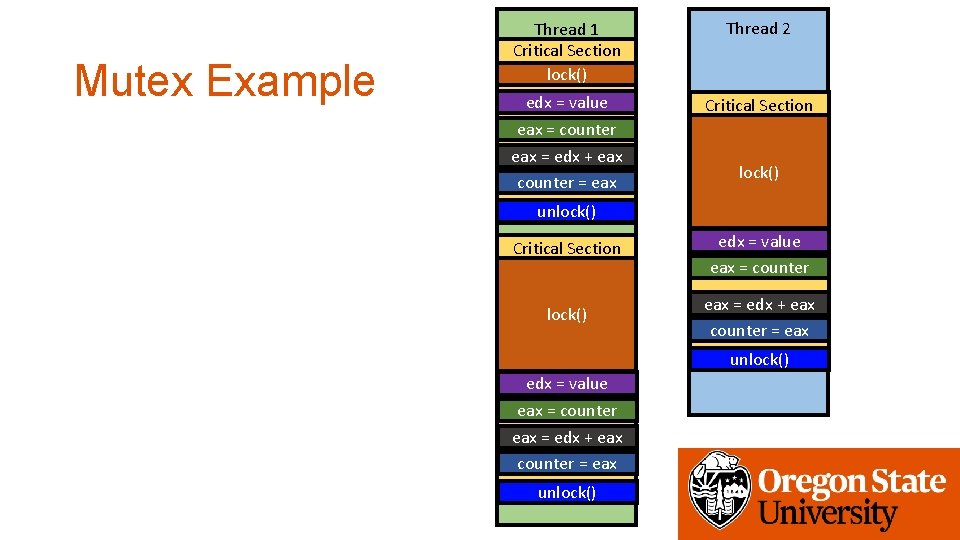

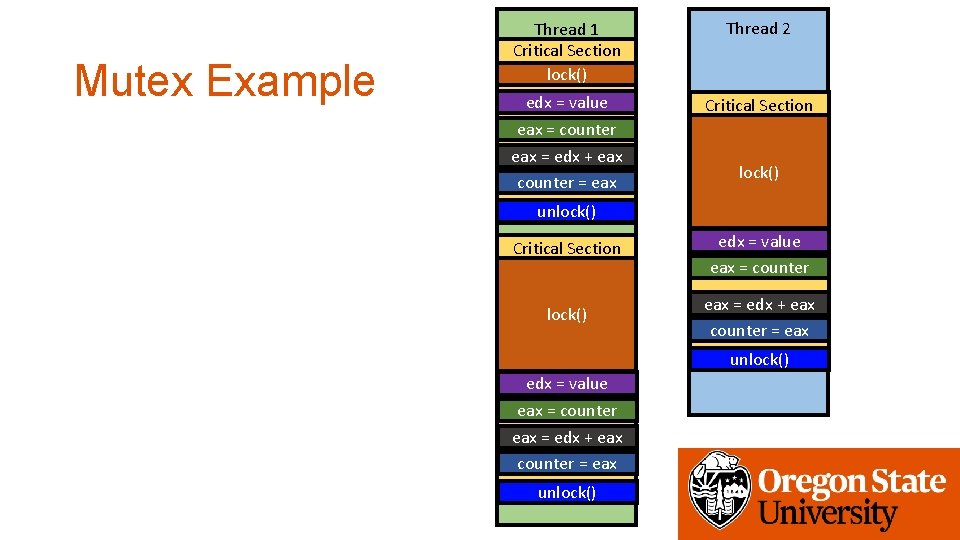

Mutex Example

[Diagram: Thread 1 calls lock(), runs its critical section (edx = value; eax = counter; eax = edx + eax; counter = eax), and calls unlock(). Threads that call lock() in the meantime wait; after the unlock(), each one in turn acquires the lock, runs the same critical section, and calls unlock().]

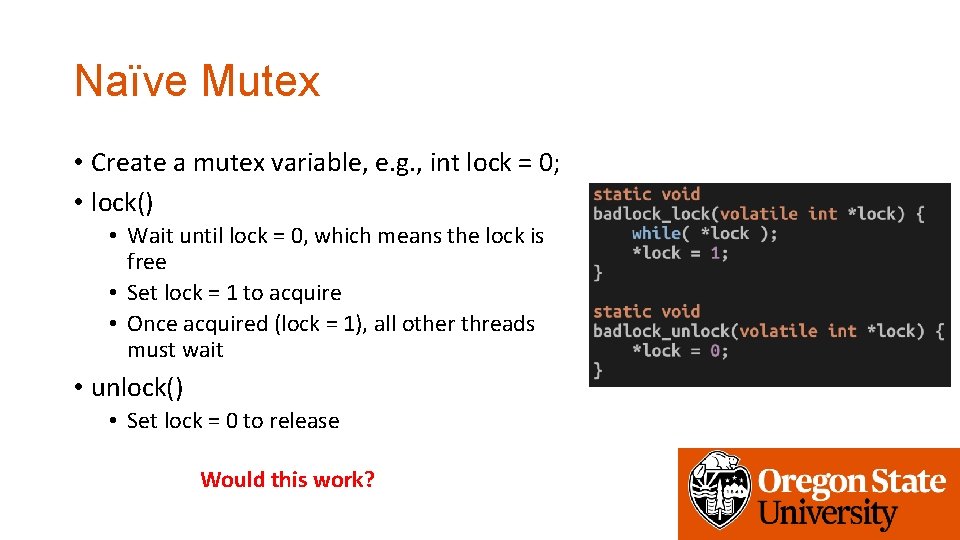

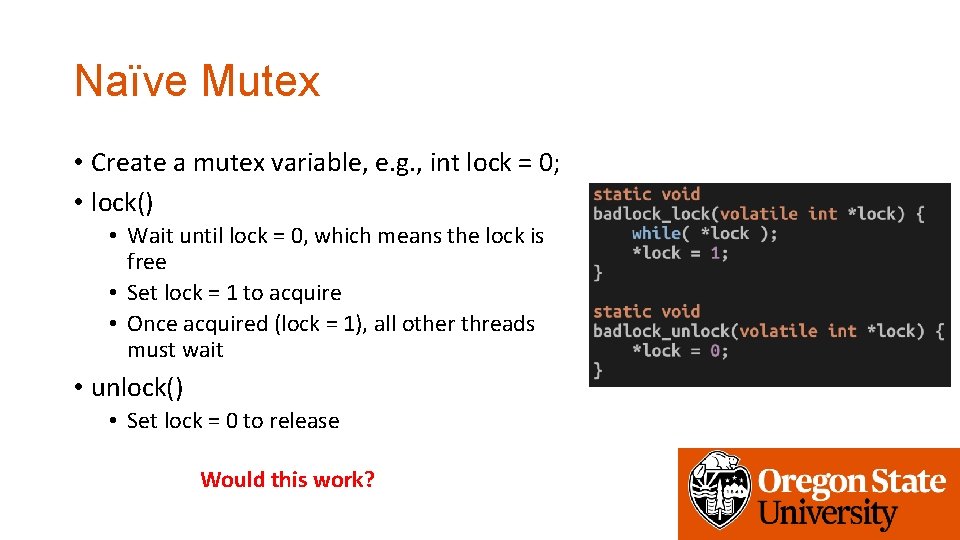

Naïve Mutex

- Create a mutex variable, e.g., int lock = 0;
- lock()
  - Wait until lock == 0, which means the lock is free
  - Set lock = 1 to acquire
    - Once acquired (lock == 1), all other threads must wait
- unlock()
  - Set lock = 0 to release
- Would this work?

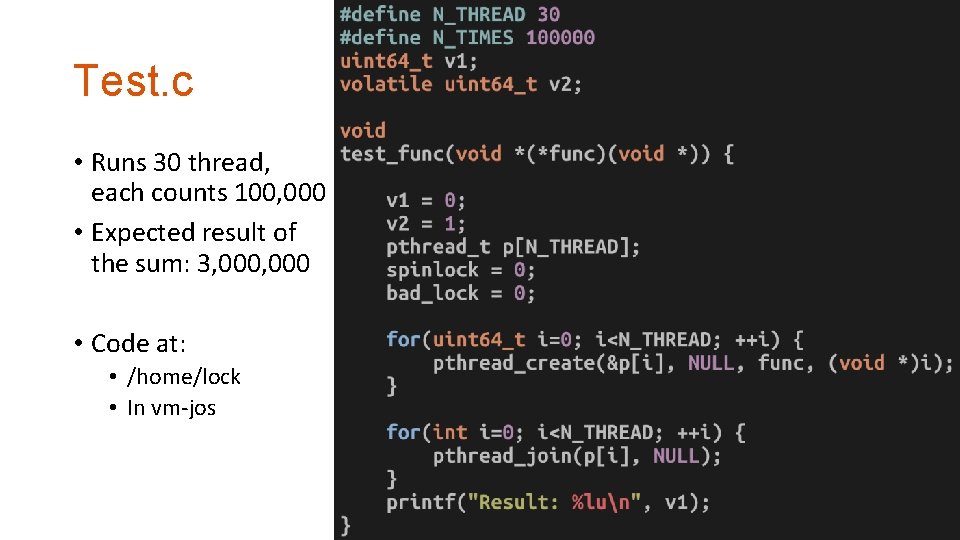

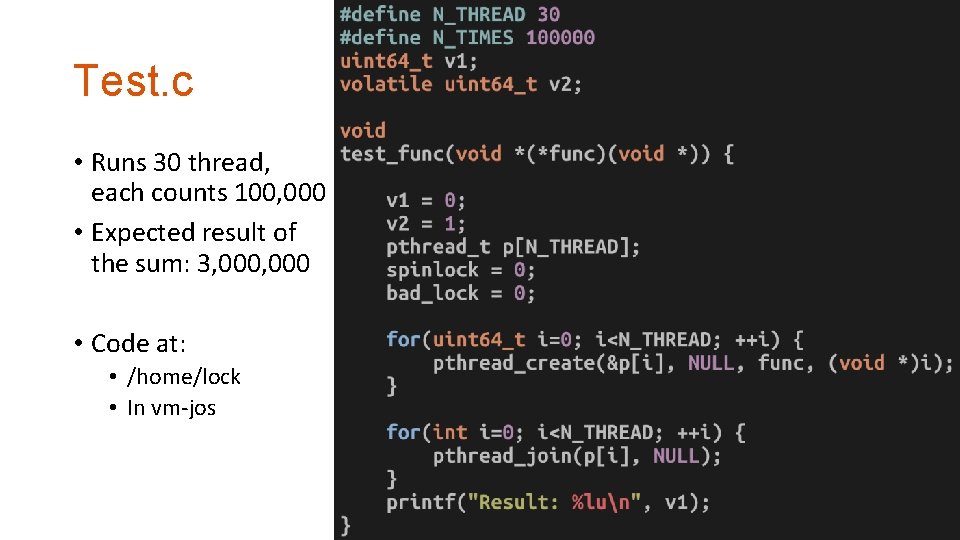

Test.c

- Runs 30 threads, each counting 100,000
- Expected result of the sum: 3,000,000
- Code at:
  - /home/lock
  - In vm-jos

Run Badlock with Test.c: Critical Section

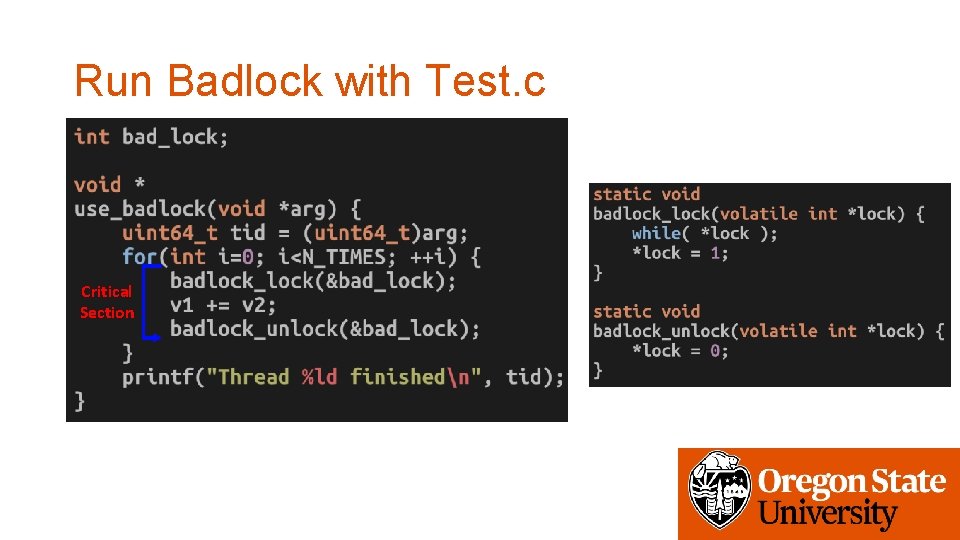

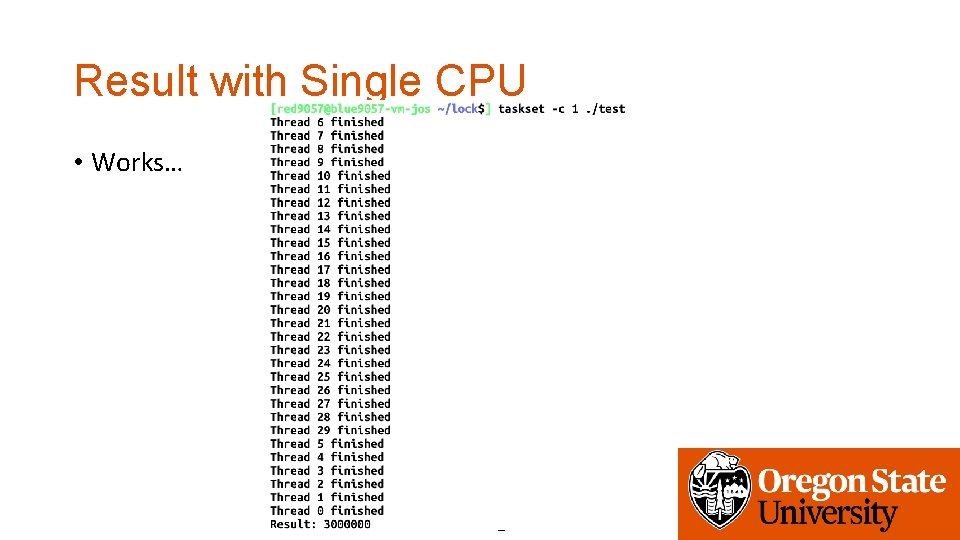

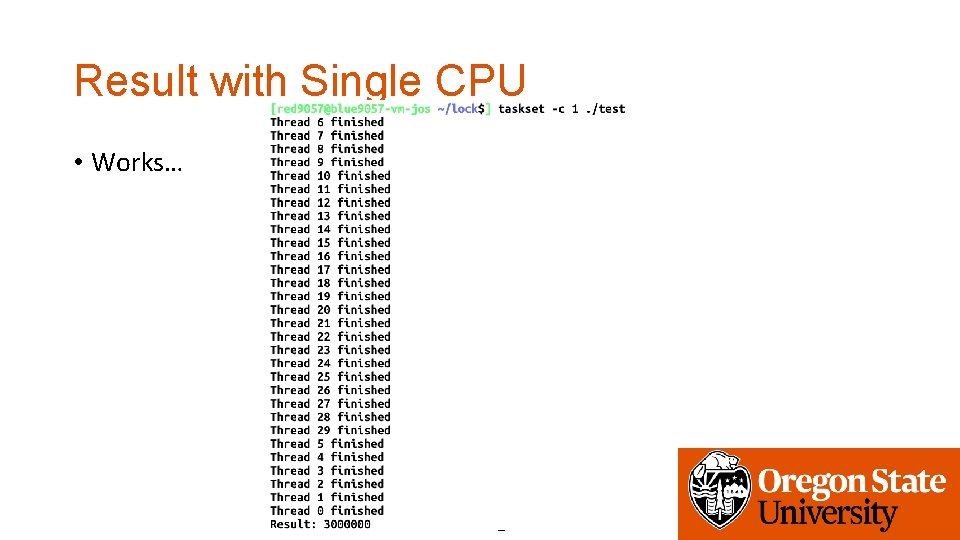

Result with Single CPU • Works…

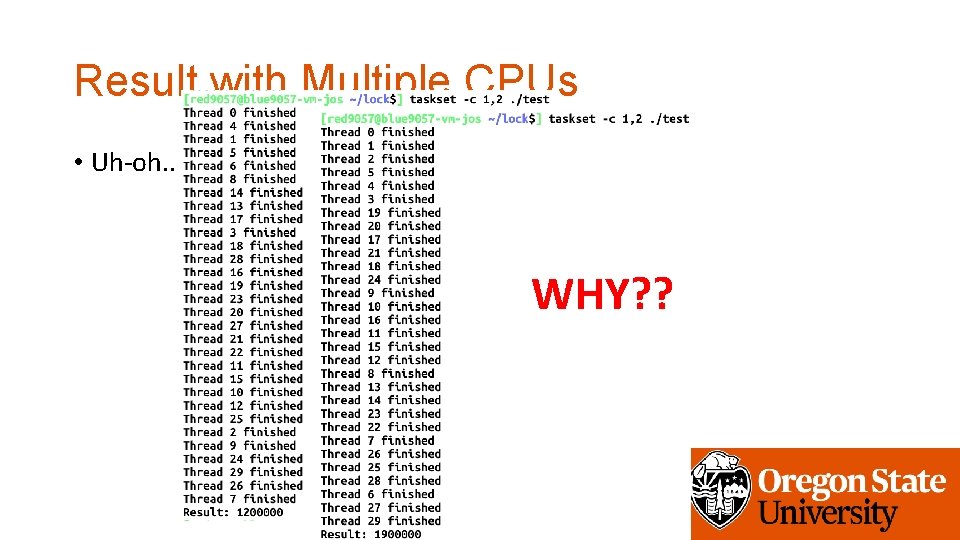

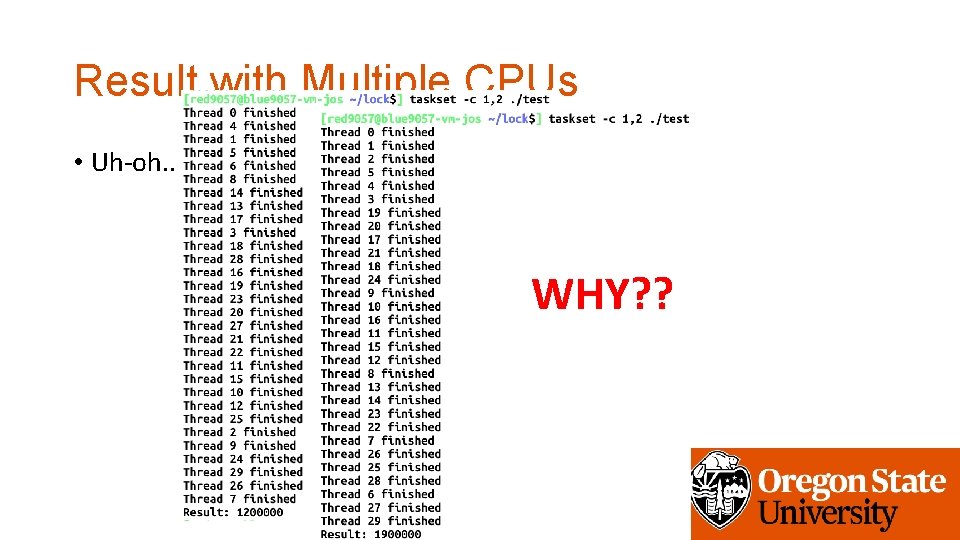

Result with Multiple CPUs • Uh-oh... WHY??

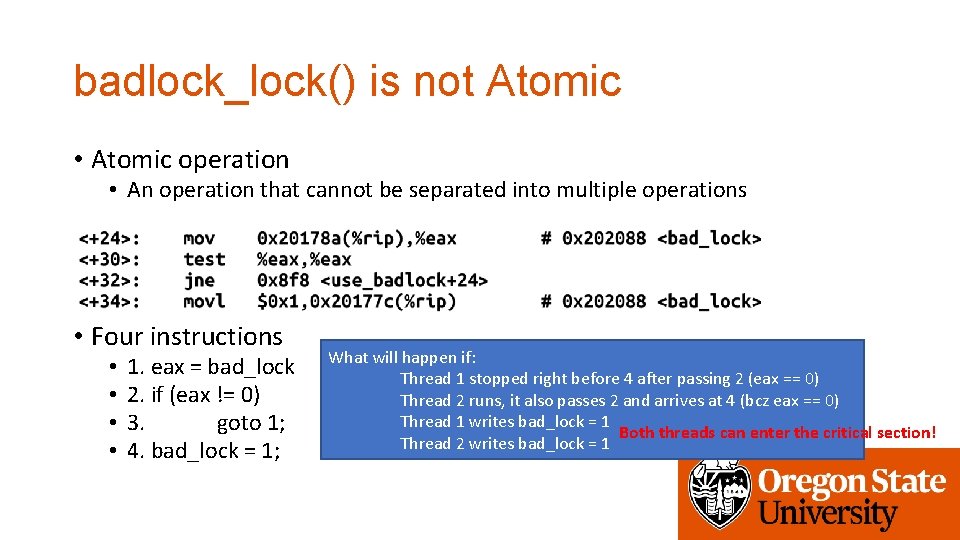

badlock_lock() is not Atomic

- Atomic operation
  - An operation that cannot be separated into multiple operations
- Four instructions
  1. eax = bad_lock
  2. if (eax != 0)
  3. goto 1;
  4. bad_lock = 1;
- What will happen if:
  - Thread 1 is stopped right before 4, after passing 2 (eax == 0)
  - Thread 2 runs; it also passes 2 and arrives at 4 (because eax == 0)
  - Thread 1 writes bad_lock = 1
  - Thread 2 writes bad_lock = 1
  - Both threads can enter the critical section!
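The failing schedule above can be replayed step by step. This is a single-threaded model under stated assumptions, not the lecture's badlock code: `test_step`, `set_step`, and `replay_bad_lock` are hypothetical names, and the test and the set are split into separate functions so that the preemption point can be placed between them.

```c
#include <assert.h>

static int bad_lock = 0;

/* Instructions 1-2: read the lock and test whether it is free. */
static int test_step(void) { return bad_lock == 0; }

/* Instruction 4: claim the lock. */
static void set_step(void) { bad_lock = 1; }

/* Replays the schedule in which thread 1 is stopped between the test
 * and the set. Returns how many threads believe they own the lock. */
int replay_bad_lock(void) {
    int owners = 0;
    bad_lock = 0;
    int t1_passed = test_step();  /* thread 1 sees 0, then is stopped */
    int t2_passed = test_step();  /* thread 2 also sees 0 */
    if (t1_passed) { set_step(); owners++; }  /* thread 1 writes 1 */
    if (t2_passed) { set_step(); owners++; }  /* thread 2 writes 1 */
    return owners;
}
```

`replay_bad_lock()` returns 2: both threads pass the test before either one sets the lock, so mutual exclusion is broken.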

Atomic Test-and-Set

- We need a way to test
  - if lock == 0
- And we would like to set
  - lock = 1
- And do this atomically
- Hardware support is required
  - xchg in x86 does this
  - An atomic test-and-set operation

![xchg xchg memory reg Atomically exchange the content in memory with the xchg • xchg [memory], %reg • Atomically exchange the content in memory with the](https://slidetodoc.com/presentation_image_h2/b414361e4c0ad3fa3d7eae57adab61f8/image-27.jpg)

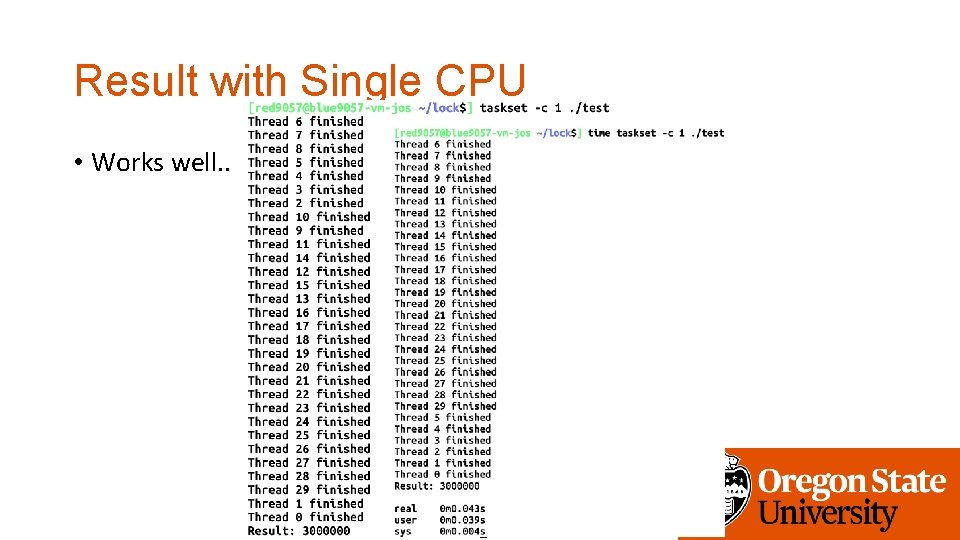

xchg

- xchg [memory], %reg
  - Atomically exchanges the content in memory with the value in reg
- E.g.,
  - mov $1, %eax
  - xchg lock, %eax
    - This sets %eax to the value that was in lock
    - At the same time, it sets lock = 1 (the value that was in %eax)
- With a memory operand, the CPU applies ‘lock’ implicitly at the hardware level (cache/memory) to do this
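The exchange semantics can be tried from C without inline assembly. The sketch below uses C11 `atomic_exchange` as a stand-in for the instruction (on x86, compilers typically emit `xchg` for it, but that is an assumption about the toolchain). The `xchg` wrapper and the two demo functions are hypothetical names.

```c
#include <assert.h>
#include <stdatomic.h>

/* Hypothetical helper mirroring xchg(lock, new value). */
static int xchg(atomic_int *addr, int newval) {
    /* Stores newval and returns the previous content in one atomic step. */
    return atomic_exchange(addr, newval);
}

/* Lock was free: the old value 0 comes back, and the lock is now 1. */
int xchg_on_free_lock(void) {
    atomic_int lock = 0;
    int old = xchg(&lock, 1);
    assert(atomic_load(&lock) == 1);
    return old;
}

/* Lock was held: the old value 1 comes back, and the lock stays 1. */
int xchg_on_held_lock(void) {
    atomic_int lock = 1;
    int old = xchg(&lock, 1);
    assert(atomic_load(&lock) == 1);
    return old;
}
```

`xchg_on_free_lock()` returns 0 and `xchg_on_held_lock()` returns 1. The returned old value is the "test", and the unconditional store is the "set".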

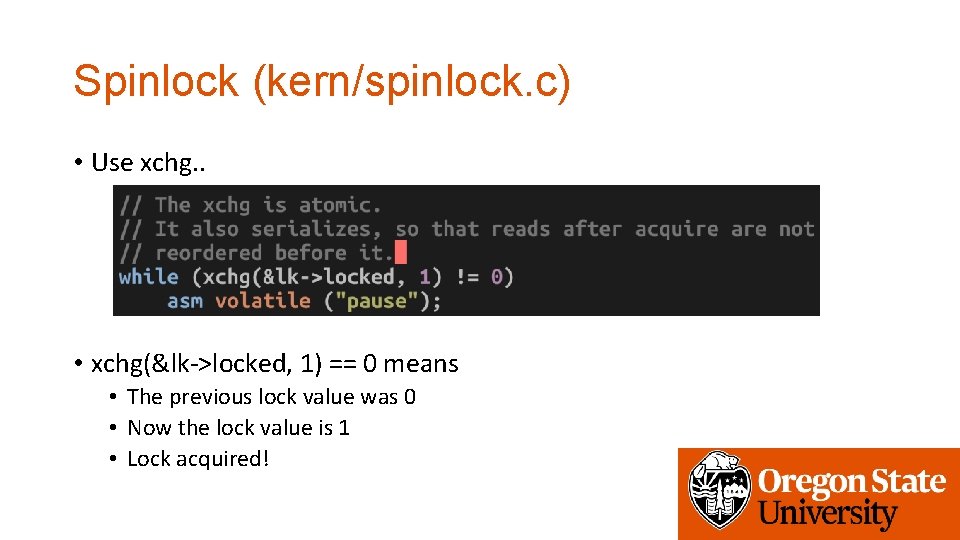

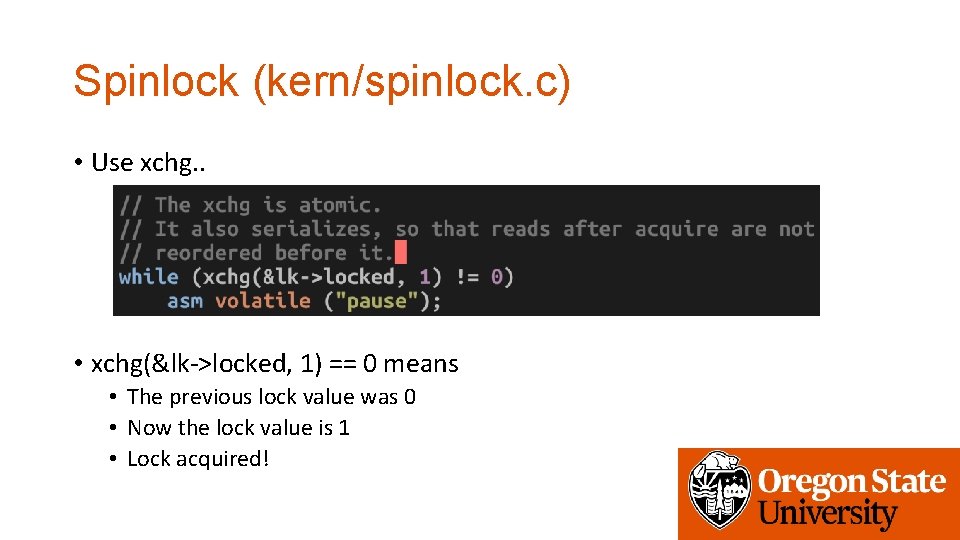

Spinlock (kern/spinlock.c)

- Use xchg
- xchg(&lk->locked, 1) == 0 means
  - The previous lock value was 0
  - Now the lock value is 1
  - Lock acquired!
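A test-and-set spinlock built on that check might look like the following. This is a sketch using C11 atomics and pthreads, not the code in kern/spinlock.c; `spin_lock`, `spin_unlock`, `worker`, and `run_spinlock_counter` are illustrative names.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>

struct spinlock { atomic_int locked; };  /* 0 = free, 1 = held */

static void spin_lock(struct spinlock *lk) {
    /* Keep exchanging 1 into the lock until the old value was 0. */
    while (atomic_exchange(&lk->locked, 1) != 0)
        ;  /* spin */
}

static void spin_unlock(struct spinlock *lk) {
    atomic_store(&lk->locked, 0);
}

static struct spinlock lk;  /* zero-initialized, so it starts free */
static int counter;

static void *worker(void *arg) {
    (void)arg;
    for (int i = 0; i < 50000; i++) {
        spin_lock(&lk);
        counter += 1;  /* critical section */
        spin_unlock(&lk);
    }
    return NULL;
}

/* Runs two workers to completion and returns the final counter. */
int run_spinlock_counter(void) {
    pthread_t t[2];
    counter = 0;
    for (int i = 0; i < 2; i++) {
        int rc = pthread_create(&t[i], NULL, worker, NULL);
        assert(rc == 0);
    }
    for (int i = 0; i < 2; i++)
        pthread_join(t[i], NULL);
    return counter;
}
```

`run_spinlock_counter()` returns 100000. Note that every iteration of the spin loop performs a store, even while the lock is held by another thread.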

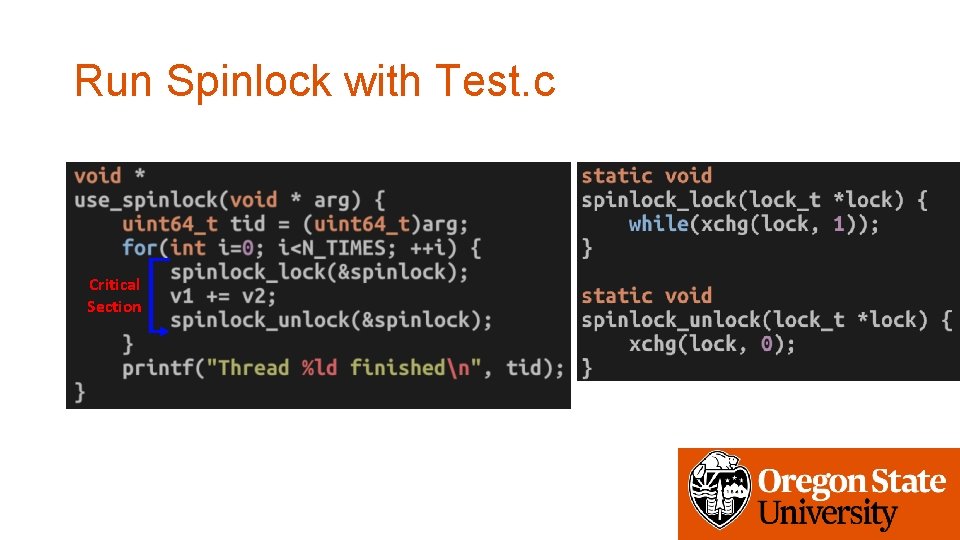

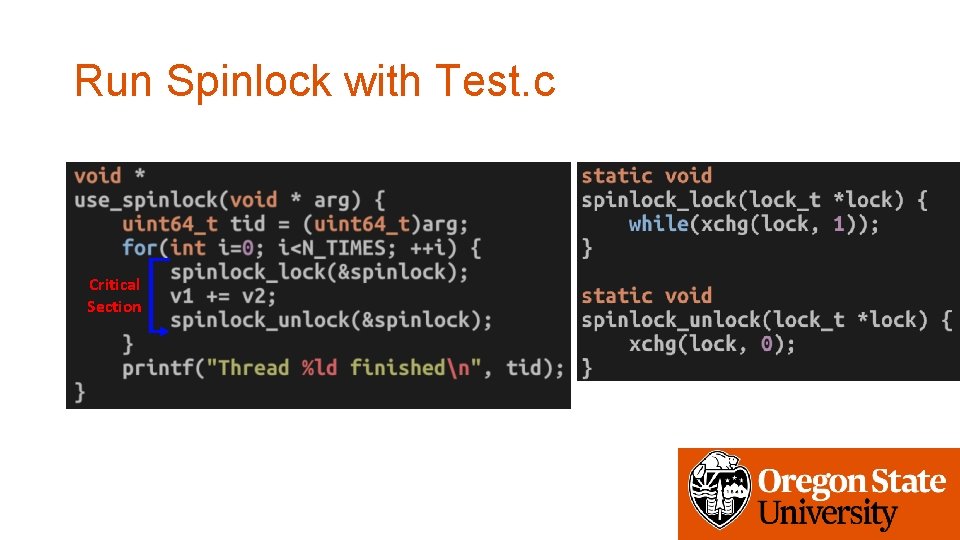

Run Spinlock with Test.c: Critical Section

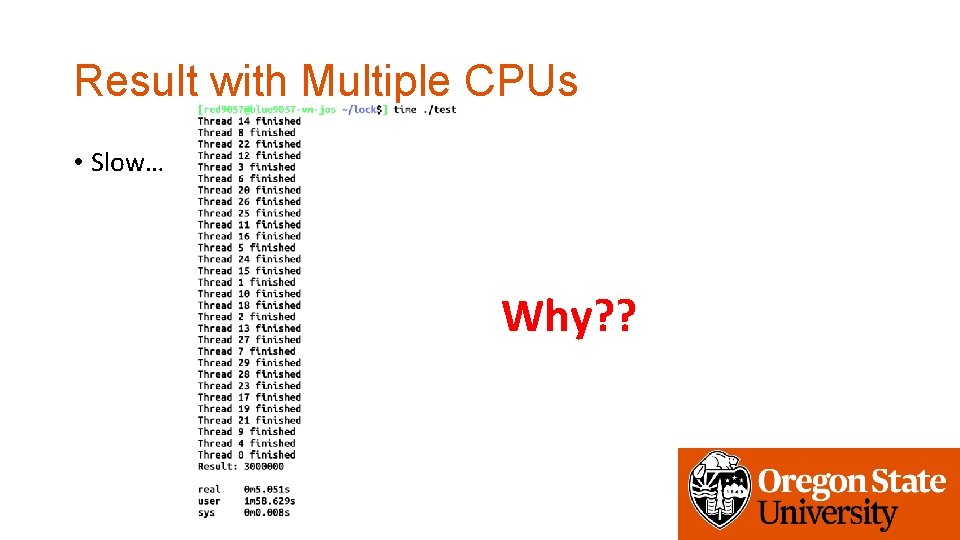

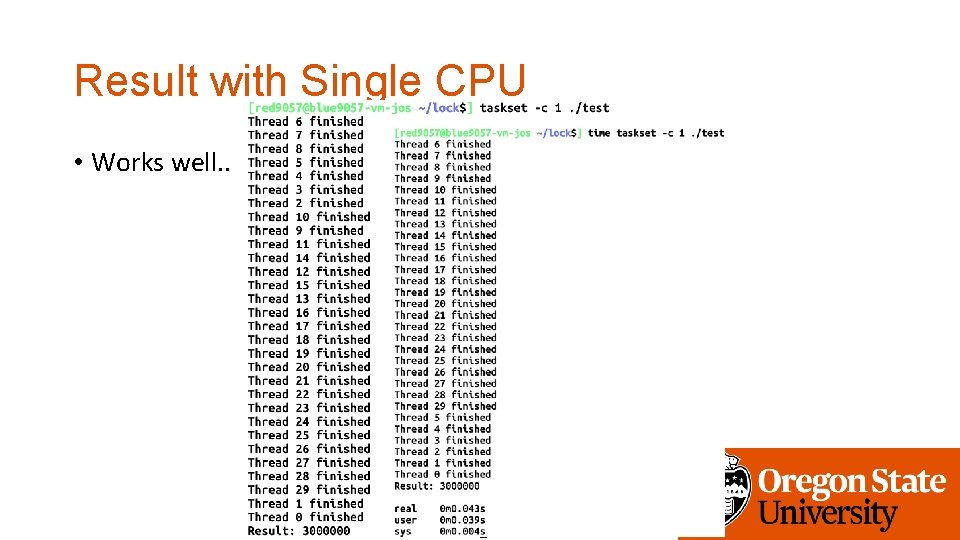

Result with Single CPU • Works well..

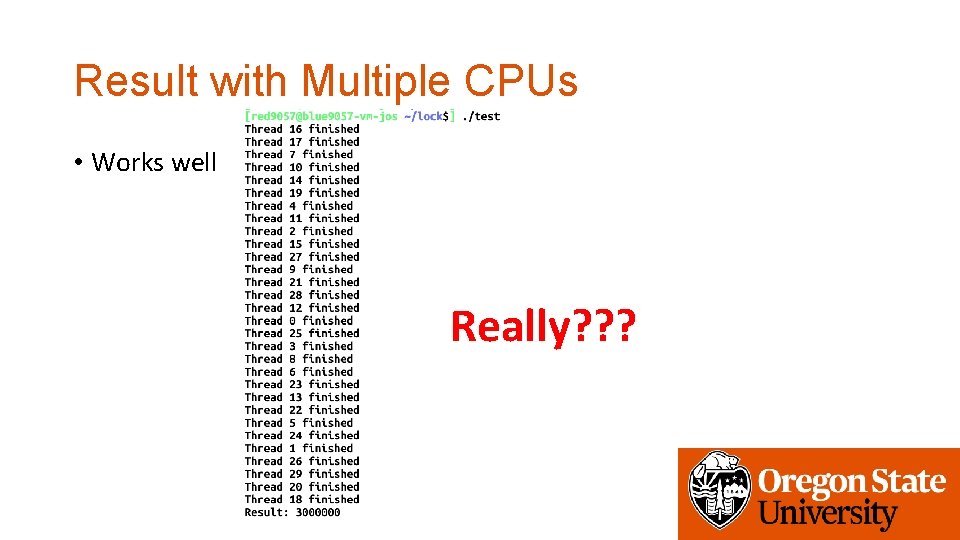

Result with Multiple CPUs • Works well. Really???

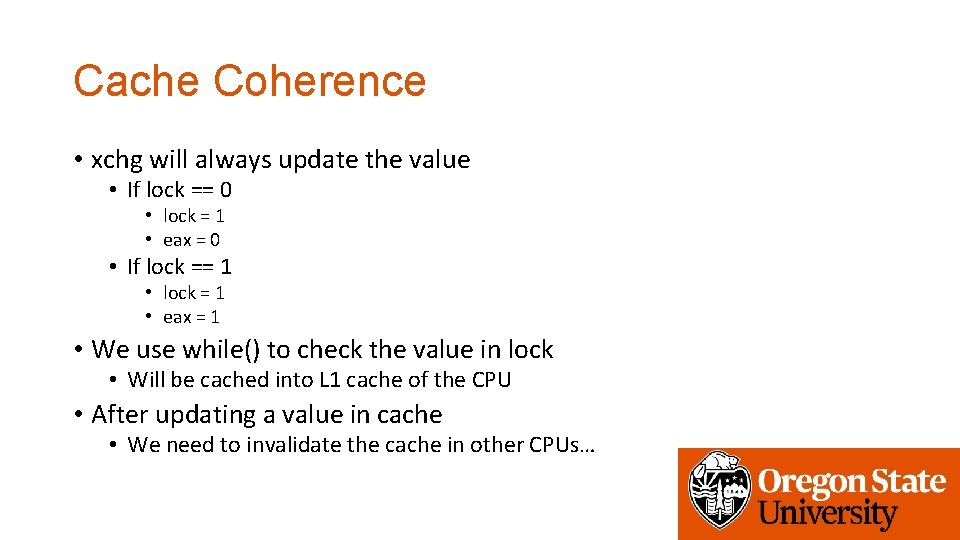

Result with Multiple CPUs • Slow… Why??

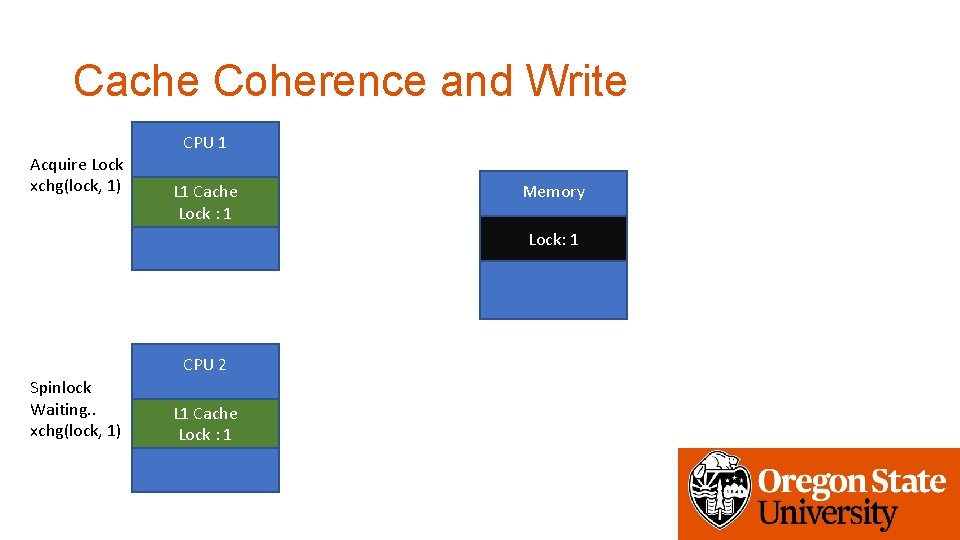

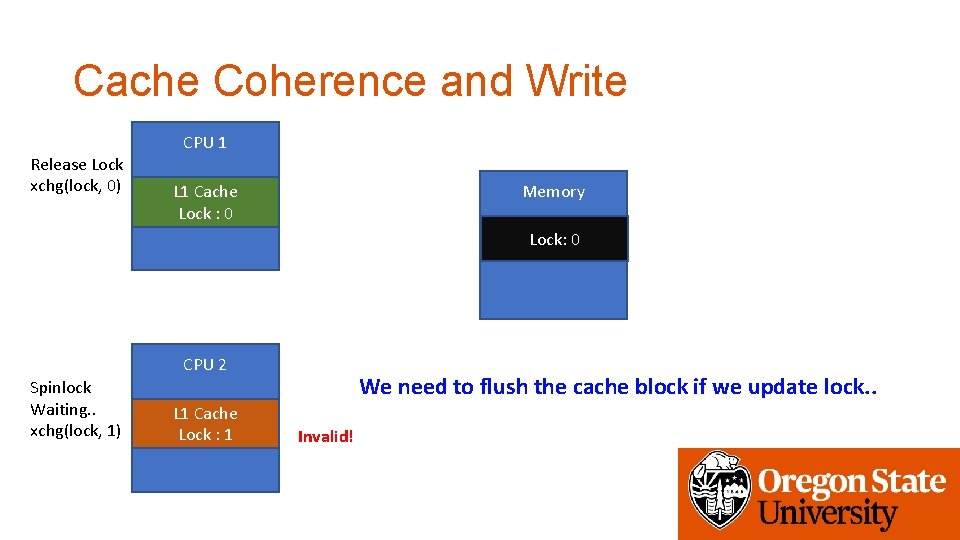

Cache Coherence

- xchg will always update the value
  - If lock == 0
    - lock = 1
    - eax = 0
  - If lock == 1
    - lock = 1
    - eax = 1
- We use while() to check the value in lock
  - lock will be cached in the L1 cache of the CPU
- After updating a value in the cache
  - We need to invalidate the copies in the caches of other CPUs…

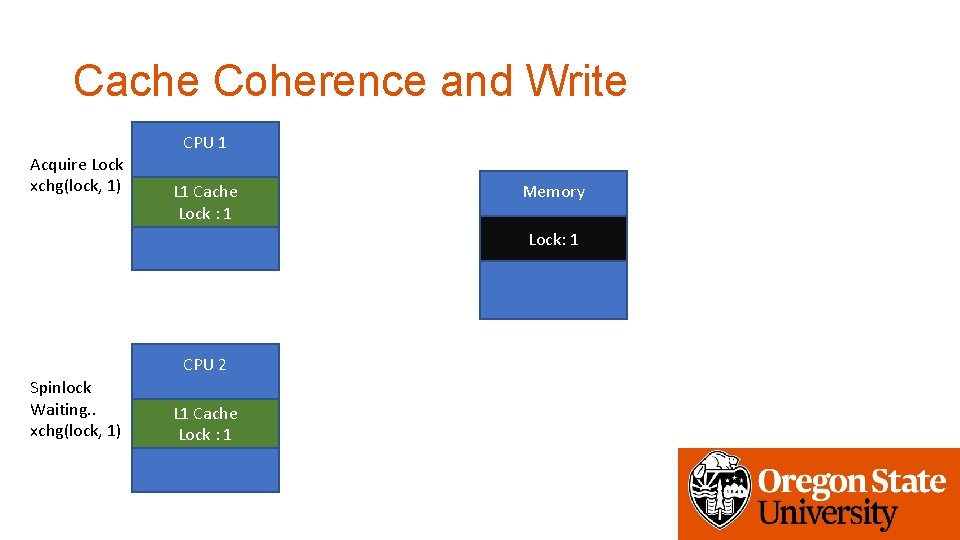

Cache Coherence and Write

[Diagram: Acquire Lock. CPU 1 runs xchg(lock, 1), and its L1 cache holds Lock: 1. CPU 2 is spinning (Spinlock Waiting..) on xchg(lock, 1), and its L1 cache also holds Lock: 1. Memory holds the lock value.]

Cache Coherence and Write

[Diagram: Release Lock. CPU 1 runs xchg(lock, 0), and the Lock value in its L1 cache changes from 1 to 0. CPU 2 is still spinning (Spinlock Waiting..) on xchg(lock, 1), and the Lock: 1 in its L1 cache is now invalid!]

We need to flush the cache block if we update lock.

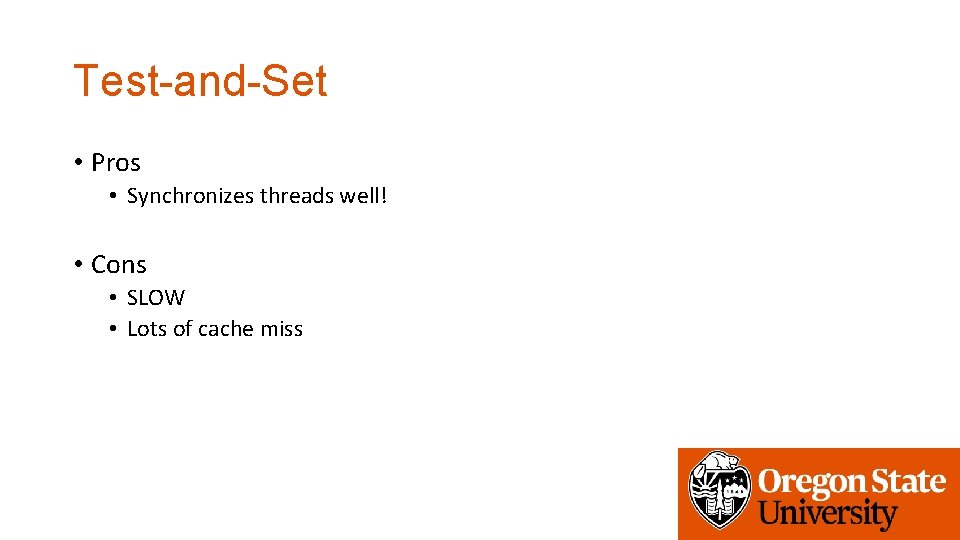

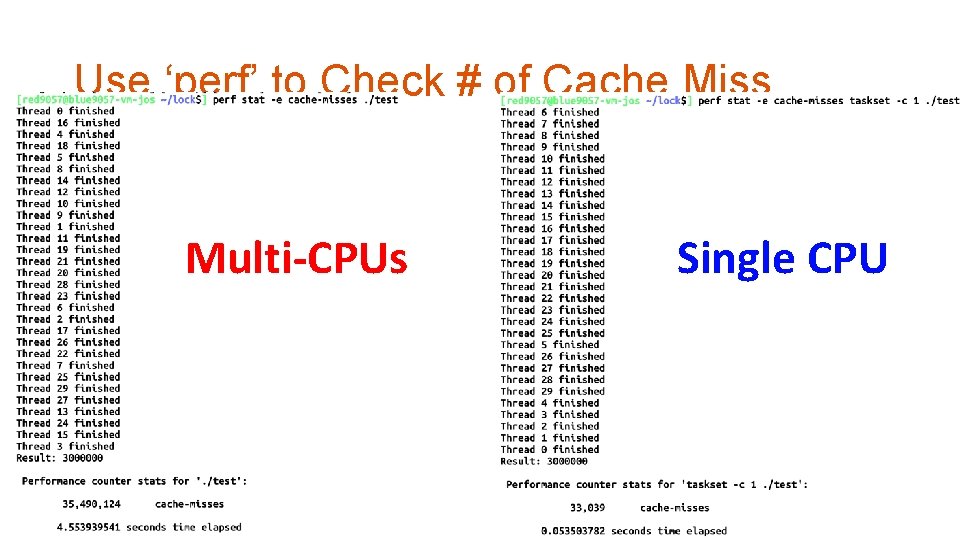

Test-and-Set

- Pros
  - Synchronizes threads well!
- Cons
  - SLOW
  - Lots of cache misses

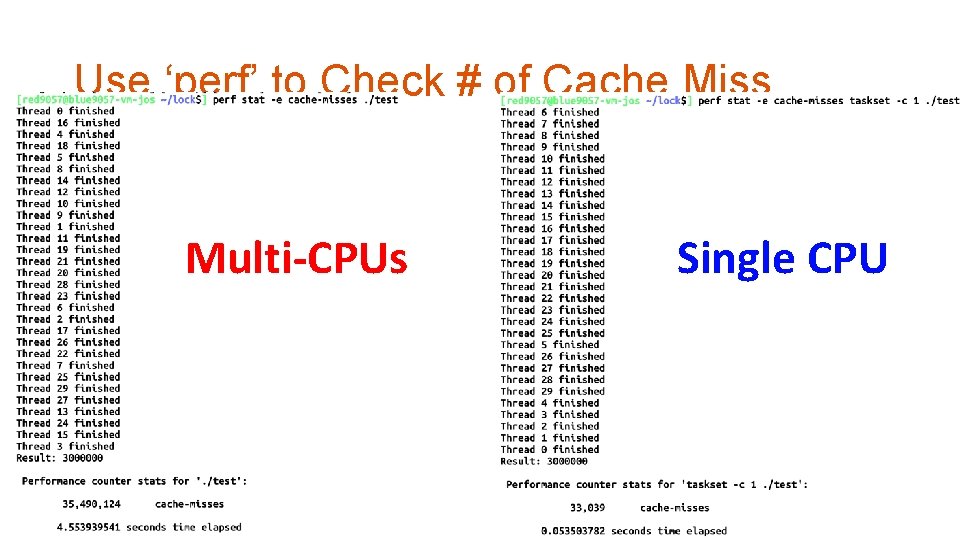

Use ‘perf’ to Check # of Cache Misses (Multiple CPUs vs. Single CPU)

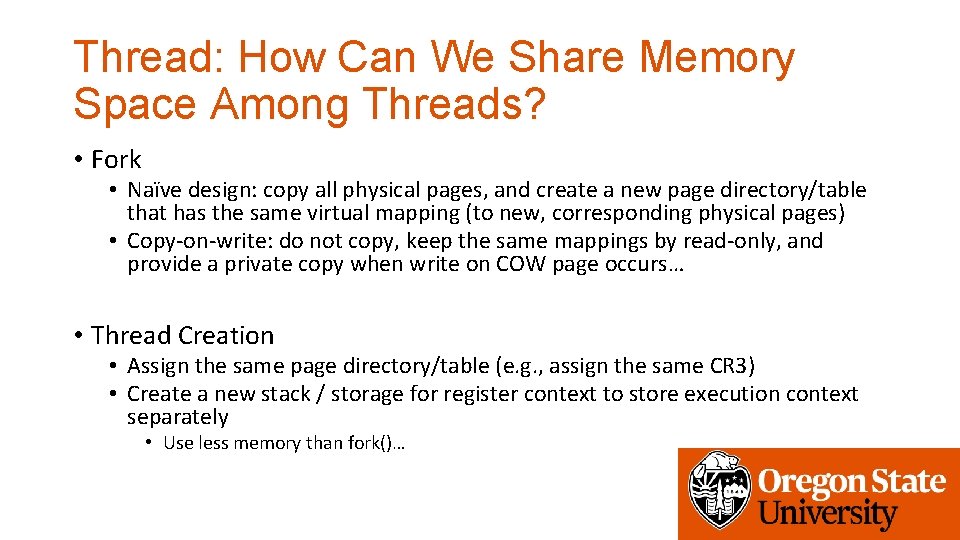

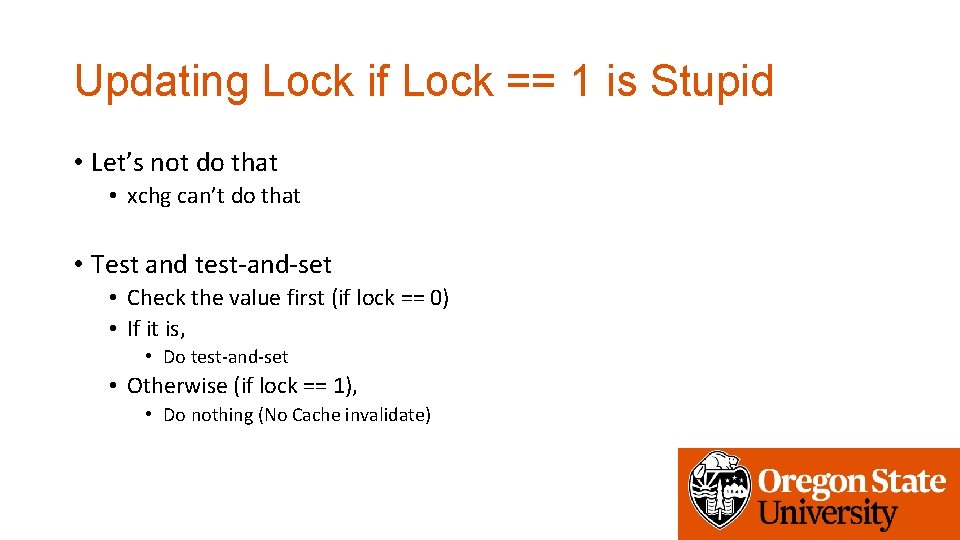

Updating Lock if Lock == 1 is Stupid

- Let's not do that
  - xchg can't do that
- Test and test-and-set
  - Check the value first (if lock == 0)
    - If it is, do test-and-set
    - Otherwise (if lock == 1), do nothing (no cache invalidation)
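One common way to write test and test-and-set is a read-only spin followed by a single exchange attempt. The sketch below takes that approach with C11 atomics and pthreads; it is an illustration of the idea, not the lecture's implementation, and `ttas_lock`, `ttas_unlock`, `worker`, and `run_ttas_counter` are hypothetical names.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>

static atomic_int lock;  /* 0 = free, 1 = held; zero-initialized */
static int counter;

static void ttas_lock(atomic_int *l) {
    for (;;) {
        /* Test: spin with plain loads only, which do not write. */
        while (atomic_load(l) != 0)
            ;
        /* Test-and-set: attempted only when the lock looked free. */
        if (atomic_exchange(l, 1) == 0)
            return;
    }
}

static void ttas_unlock(atomic_int *l) {
    atomic_store(l, 0);
}

static void *worker(void *arg) {
    (void)arg;
    for (int i = 0; i < 50000; i++) {
        ttas_lock(&lock);
        counter += 1;  /* critical section */
        ttas_unlock(&lock);
    }
    return NULL;
}

/* Runs two workers to completion and returns the final counter. */
int run_ttas_counter(void) {
    pthread_t t[2];
    counter = 0;
    for (int i = 0; i < 2; i++) {
        int rc = pthread_create(&t[i], NULL, worker, NULL);
        assert(rc == 0);
    }
    for (int i = 0; i < 2; i++)
        pthread_join(t[i], NULL);
    return counter;
}
```

`run_ttas_counter()` returns 100000, the same result as a plain test-and-set lock. The difference is in the waiting path: while the lock is held, waiters only read, so they can keep hitting their own cached copy.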

![lock cmpxchg cmpxchg memory value Compare the value in memory with rax lock cmpxchg • cmpxchg [memory], [value] • Compare the value in memory with %rax](https://slidetodoc.com/presentation_image_h2/b414361e4c0ad3fa3d7eae57adab61f8/image-39.jpg)

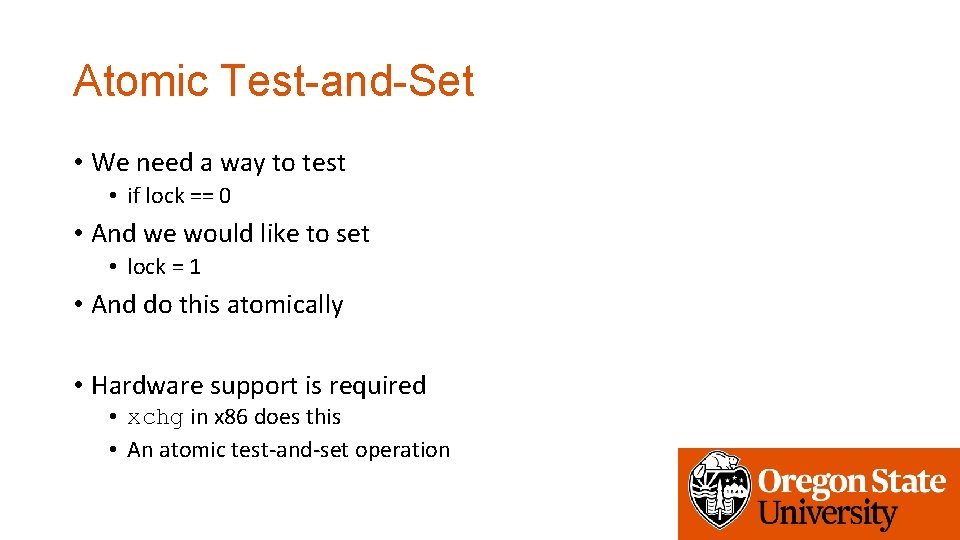

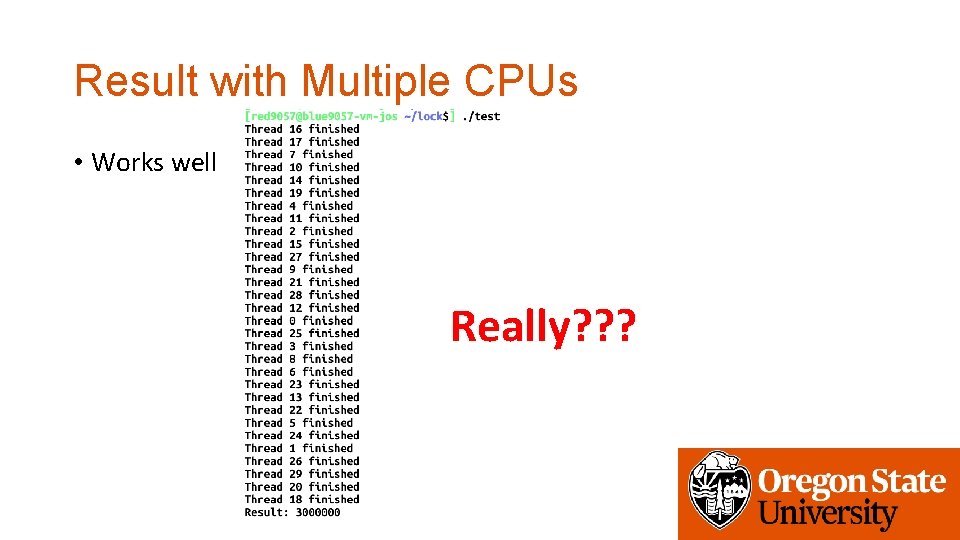

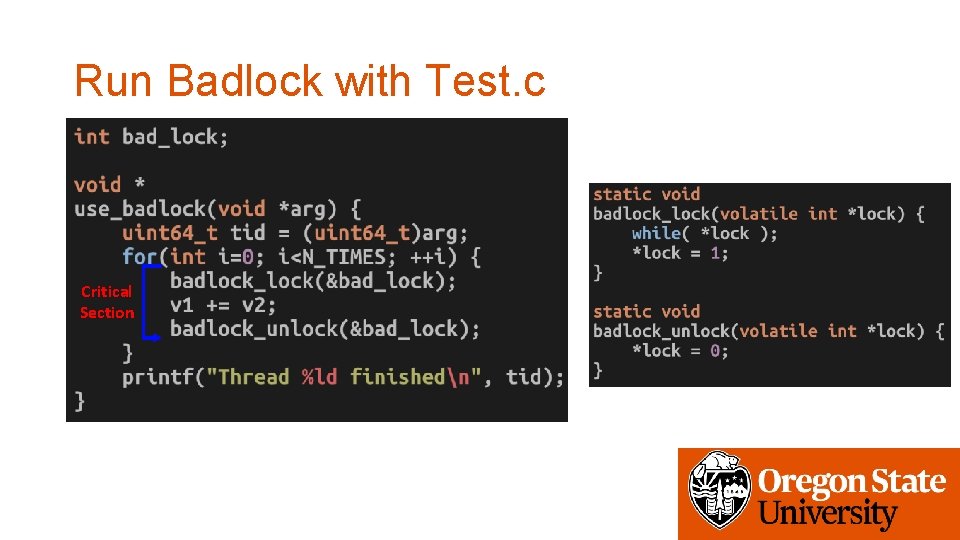

lock cmpxchg

- cmpxchg [memory], [value]
  - Compares the value in memory with %rax
  - If they match, stores [value] into memory (the exchange is performed)
  - Otherwise, memory is left unchanged and %rax receives the value in memory
- xchg(lock, 1)
  - Arguments: lock, new value
  - Returns the old value
- cmpxchg(lock, 0, 1)
  - Arguments: lock, old value, new value
  - Returns the old value
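The cmpxchg(lock, old value, new value) interface can be sketched with C11 `atomic_compare_exchange_strong`, which on x86 is typically compiled to `lock cmpxchg` (an assumption about the toolchain). The wrapper `cmpxchg`, the lock function `cas_lock`, and the demo functions are hypothetical names.

```c
#include <assert.h>
#include <stdatomic.h>

/* Hypothetical helper mirroring cmpxchg(lock, old value, new value).
 * Stores newval only if *addr == oldval; always returns the value
 * that was in memory before the call. */
static int cmpxchg(atomic_int *addr, int oldval, int newval) {
    int expected = oldval;
    /* On failure, expected is overwritten with the current value. */
    atomic_compare_exchange_strong(addr, &expected, newval);
    return expected;
}

/* Acquire: retry until the lock is changed from 0 to 1 by this caller. */
void cas_lock(atomic_int *lock) {
    while (cmpxchg(lock, 0, 1) != 0)
        ;  /* spin */
}

/* Lock was free: returns 0 and the lock becomes 1. */
int cmpxchg_on_free_lock(void) {
    atomic_int lock = 0;
    int old = cmpxchg(&lock, 0, 1);
    assert(atomic_load(&lock) == 1);
    return old;
}

/* Lock was held: returns 1, and the comparison fails so nothing new
 * is stored. */
int cmpxchg_on_held_lock(void) {
    atomic_int lock = 1;
    int old = cmpxchg(&lock, 0, 1);
    assert(atomic_load(&lock) == 1);
    return old;
}
```

`cmpxchg_on_free_lock()` returns 0 and `cmpxchg_on_held_lock()` returns 1. As with xchg, a return value of 0 means the caller acquired the lock.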

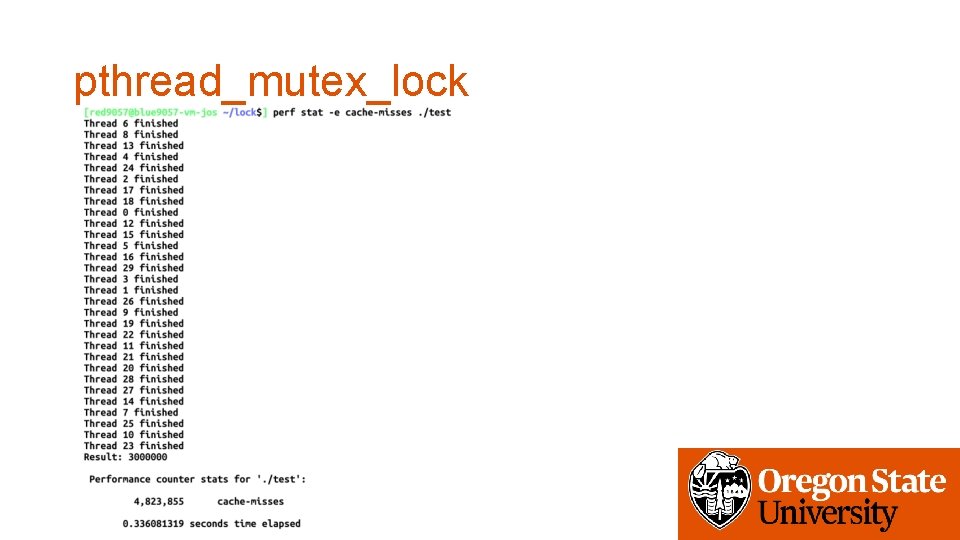

pthread_mutex_lock