
- Slides: 22

CS 444/CS 544 Operating Systems Synchronization 2/21/2006 Prof. Searleman jets@clarkson.edu

Outline

- Synchronization

NOTE:

- Return & discuss HW#4
- Lab#2 posted, due Thurs, 3/9
- Read: Chapter 7
- HW#5 posted, due Friday, 2/24/06
- Exam#1, Wednesday, March 1, 7:00 pm, SC 162, SGG: Chapters 5 & 6

Last time

- Need for synchronization primitives
- Locks and building locks from HW primitives

Criteria for a Good Solution to the Critical Section Problem

- Mutual Exclusion
  - Only one process is allowed to be in its critical section at once
  - All other processes are forced to wait on entry
  - When one process leaves, another may enter
- Progress
  - If a process is in the critical section, it should not be able to stop another process from entering it indefinitely
  - The decision of who will be next can’t be delayed indefinitely
  - Can’t just give one process access; can’t deny access to everyone
- Bounded Waiting
  - After a process has made a request to enter its critical section, there should be a bound on the number of times other processes can enter their critical sections

Synchronization Primitives

- Synchronization primitives are used to implement a solution to the critical section problem
- OS uses HW primitives
  - Disable interrupts
  - HW test-and-set
- OS exports primitives to user applications; user level can build more complex primitives from simpler OS primitives
  - Locks
  - Semaphores
  - Monitors
  - Messages

Implementing Locks

- OK, so now we have seen that all is well *if* we have these objects called locks
- How do we implement locks?
  - Recall: the implementation of a lock has a critical section too (read lock; if lock free, write lock taken)
- Need help from hardware
  - Make the basic lock primitive atomic
    - Atomic instructions like test-and-set, read-modify-write, compare-and-swap
  - Prevent context switches
    - Disable/enable interrupts

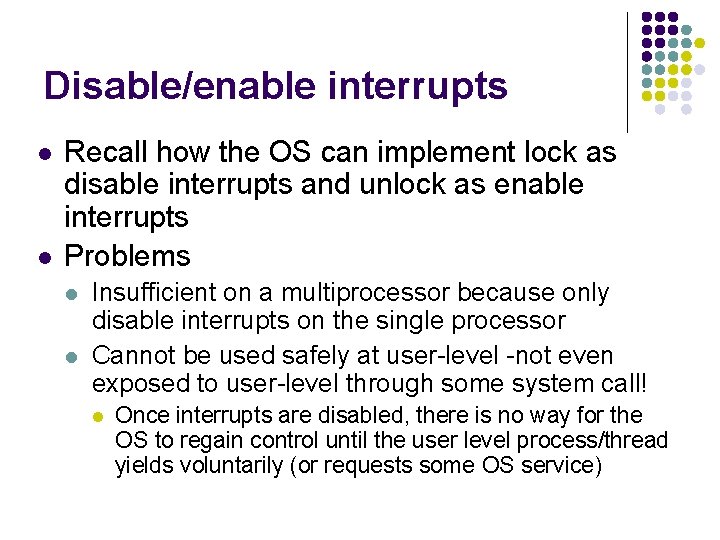

Disable/enable interrupts

- Recall how the OS can implement lock as disable interrupts and unlock as enable interrupts
- Problems
  - Insufficient on a multiprocessor, because it only disables interrupts on the single processor
  - Cannot be used safely at user level (not even exposed to user level through some system call!)
    - Once interrupts are disabled, there is no way for the OS to regain control until the user-level process/thread yields voluntarily (or requests some OS service)

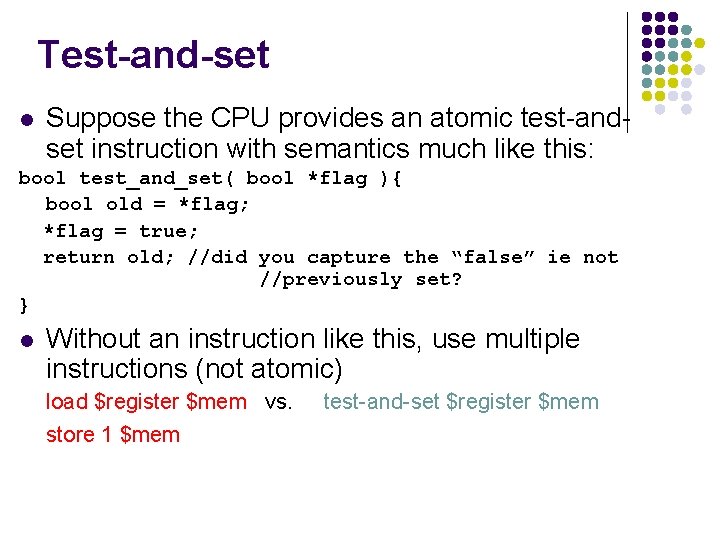

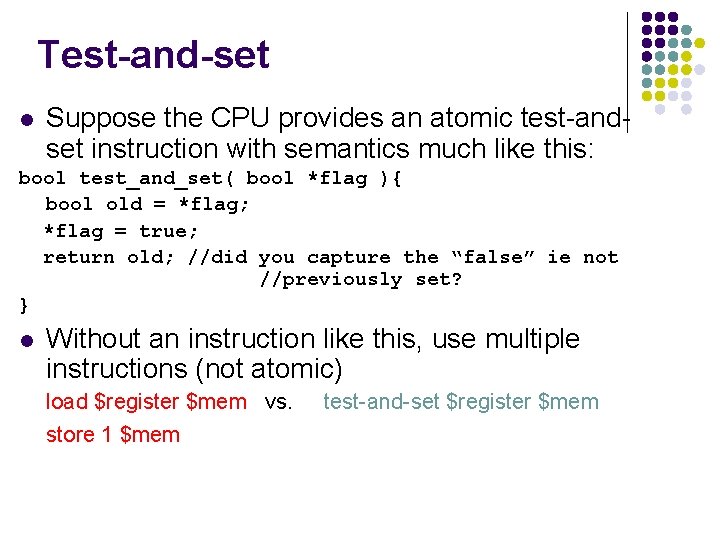

Test-and-set

- Suppose the CPU provides an atomic test-and-set instruction with semantics much like this:

      bool test_and_set(bool *flag) {
          bool old = *flag;
          *flag = true;
          return old;   // did you capture the “false”, i.e. not previously set?
      }

- Without an instruction like this, use multiple instructions (not atomic):

      load $register $mem
      store 1 $mem

  vs.

      test-and-set $register $mem
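As a rough illustration of the semantics above, C11 exposes a portable test-and-set through `atomic_flag_test_and_set` in `<stdatomic.h>`. The sketch below is only a single-threaded demonstration; the wrapper `tas` and the helper `count_winners` are hypothetical names introduced here, not part of the slides.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical wrapper: returns the previous value of the flag and leaves
   the flag set, as one atomic step. */
bool tas(atomic_flag *flag) {
    return atomic_flag_test_and_set(flag);
}

/* Hypothetical helper: calls tas() n times on a fresh (clear) flag and
   counts how many calls observed "previously clear". */
int count_winners(int n) {
    atomic_flag flag = ATOMIC_FLAG_INIT;
    int winners = 0;
    assert(n >= 0);
    for (int i = 0; i < n; i++) {
        if (!tas(&flag)) {
            winners++;   /* this caller captured the "false" */
        }
    }
    return winners;
}
```

However many times the flag is tested, only the first caller sees it clear; every later caller sees it already set. That property is what a lock can be built on.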

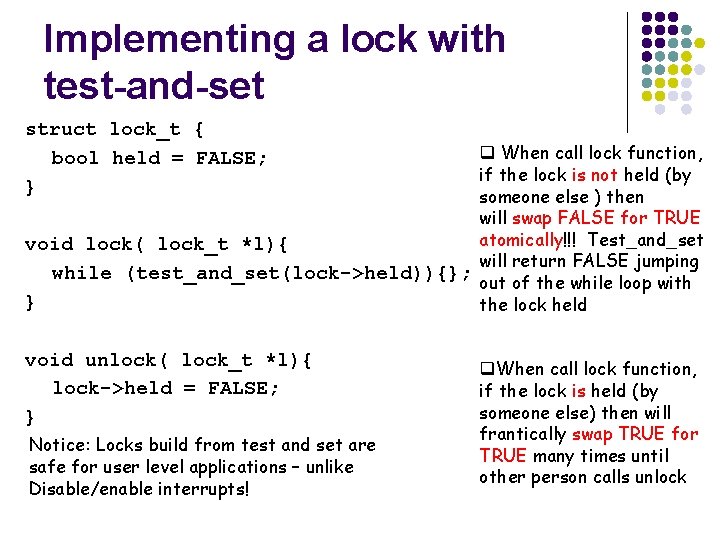

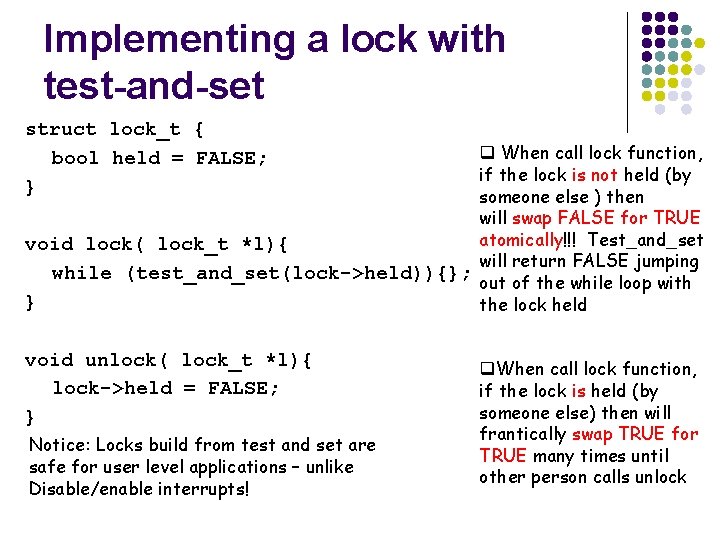

Implementing a lock with test-and-set

    typedef struct {
        bool held;   // initialized to FALSE: the lock starts out free
    } lock_t;

    void lock(lock_t *l) {
        // spin until test_and_set returns FALSE
        while (test_and_set(&l->held)) {}
    }

    void unlock(lock_t *l) {
        l->held = FALSE;
    }

- When the lock function is called and the lock is not held (by someone else), test_and_set swaps FALSE for TRUE atomically! It returns FALSE, so the caller jumps out of the while loop with the lock held.
- When the lock function is called and the lock is held (by someone else), the caller frantically swaps TRUE for TRUE many times until the other thread calls unlock.
- Notice: locks built from test-and-set are safe for user-level applications, unlike disable/enable interrupts!
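The lock above could be sketched with C11 atomics and POSIX threads roughly as follows. This assumes a platform that provides `<stdatomic.h>` and pthreads; `tas_lock_t`, `tas_lock`, `tas_unlock`, and `run_counter_demo` are illustrative names, not from the slides.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stddef.h>

typedef struct { atomic_flag held; } tas_lock_t;

static tas_lock_t counter_lock = { ATOMIC_FLAG_INIT };
static long counter = 0;

void tas_lock(tas_lock_t *l) {
    /* Spin until the previous value was "clear", i.e. we took the lock. */
    while (atomic_flag_test_and_set(&l->held)) { /* busy wait */ }
}

void tas_unlock(tas_lock_t *l) {
    atomic_flag_clear(&l->held);
}

static void *worker(void *arg) {
    long n = *(long *)arg;
    for (long i = 0; i < n; i++) {
        tas_lock(&counter_lock);
        counter++;                 /* critical section */
        tas_unlock(&counter_lock);
    }
    return NULL;
}

/* Runs `threads` workers that each add `per_thread` to the shared counter
   and returns the final count. */
long run_counter_demo(int threads, long per_thread) {
    pthread_t tid[16];
    assert(threads > 0 && threads <= 16);
    counter = 0;
    for (int i = 0; i < threads; i++) {
        int rc = pthread_create(&tid[i], NULL, worker, &per_thread);
        assert(rc == 0);
        (void)rc;
    }
    for (int i = 0; i < threads; i++) {
        pthread_join(tid[i], NULL);
    }
    return counter;
}
```

With the lock in place no increment is lost, so `run_counter_demo(4, 10000)` should return 40000. Removing the lock and unlock calls would typically lose updates.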

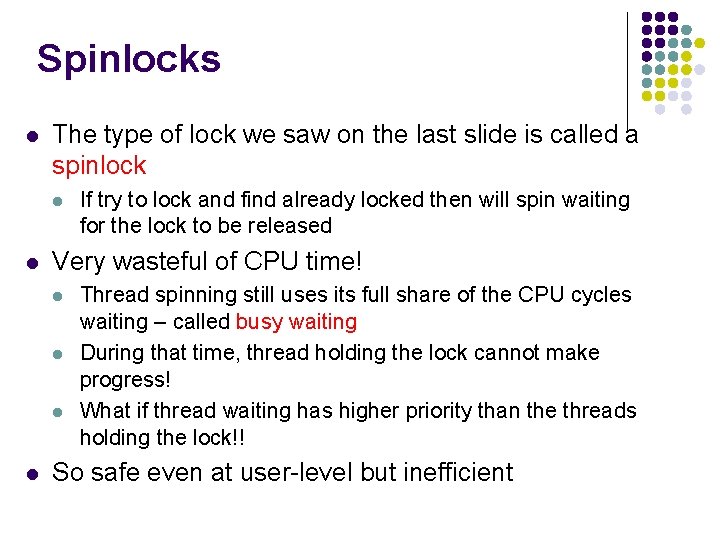

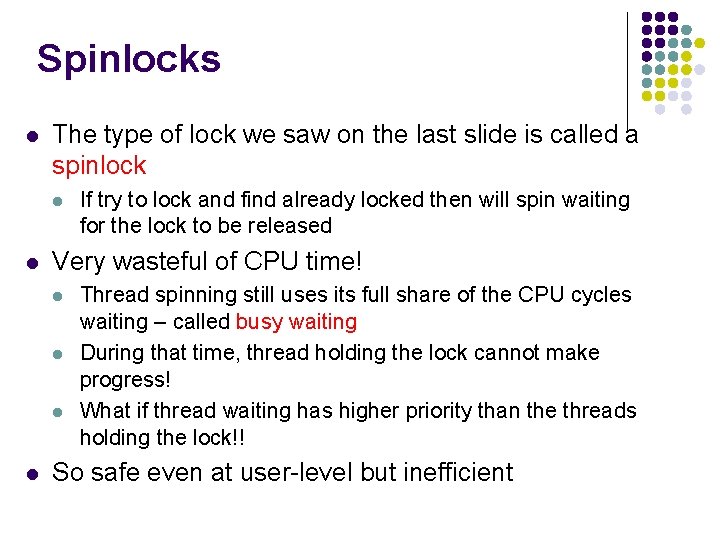

Spinlocks

- The type of lock we saw on the last slide is called a spinlock
  - If a thread tries to lock and finds it already locked, it will spin waiting for the lock to be released
- Very wasteful of CPU time!
  - A spinning thread still uses its full share of the CPU cycles while waiting; this is called busy waiting
  - During that time, the thread holding the lock cannot make progress!
  - What if the waiting thread has higher priority than the thread holding the lock?!
- So safe even at user level, but inefficient

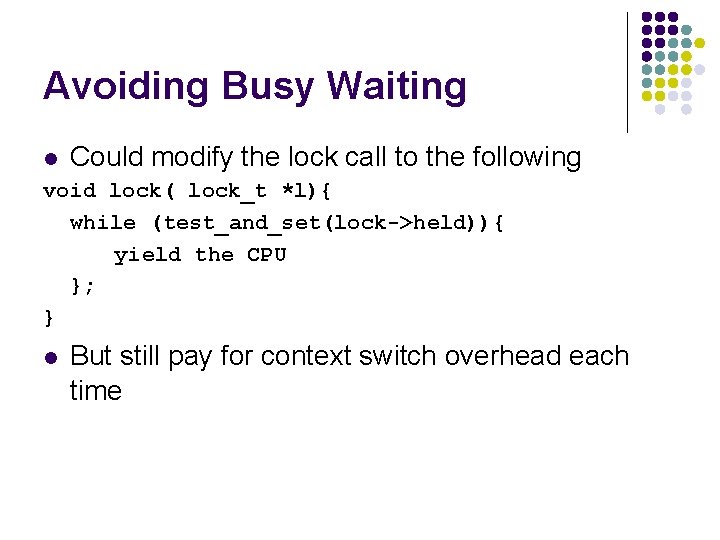

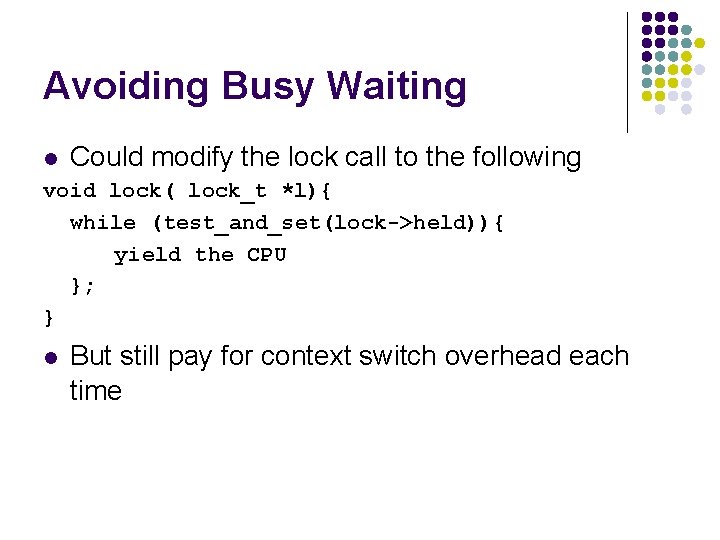

Avoiding Busy Waiting

- Could modify the lock call to the following:

      void lock(lock_t *l) {
          while (test_and_set(&l->held)) {
              yield();   // yield the CPU
          }
      }

- But we still pay for context switch overhead each time
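One way to try the yielding variant at user level is POSIX `sched_yield()`. The following is a minimal sketch under that assumption; the function names and the two-thread demo are made up for illustration.

```c
#include <assert.h>
#include <pthread.h>
#include <sched.h>
#include <stdatomic.h>
#include <stddef.h>

static atomic_flag held = ATOMIC_FLAG_INIT;
static long shared_total = 0;

void yielding_lock(void) {
    while (atomic_flag_test_and_set(&held)) {
        sched_yield();   /* give up the CPU instead of spinning */
    }
}

void yielding_unlock(void) {
    atomic_flag_clear(&held);
}

static void *adder(void *arg) {
    (void)arg;
    for (int i = 0; i < 5000; i++) {
        yielding_lock();
        shared_total++;          /* critical section */
        yielding_unlock();
    }
    return NULL;
}

/* Two threads each add 5000 to the shared total; returns the final total. */
long run_yield_demo(void) {
    pthread_t a, b;
    shared_total = 0;
    int rc = pthread_create(&a, NULL, adder, NULL);
    assert(rc == 0);
    rc = pthread_create(&b, NULL, adder, NULL);
    assert(rc == 0);
    (void)rc;
    pthread_join(a, NULL);
    pthread_join(b, NULL);
    return shared_total;
}
```

The result is the same as with the pure spinlock. The difference is that a waiting thread hands the processor back on each failed attempt, at the price of a trip through the scheduler.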

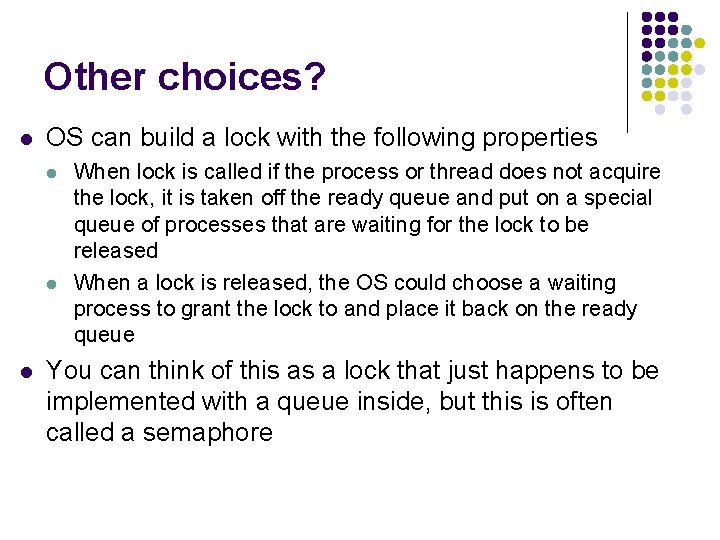

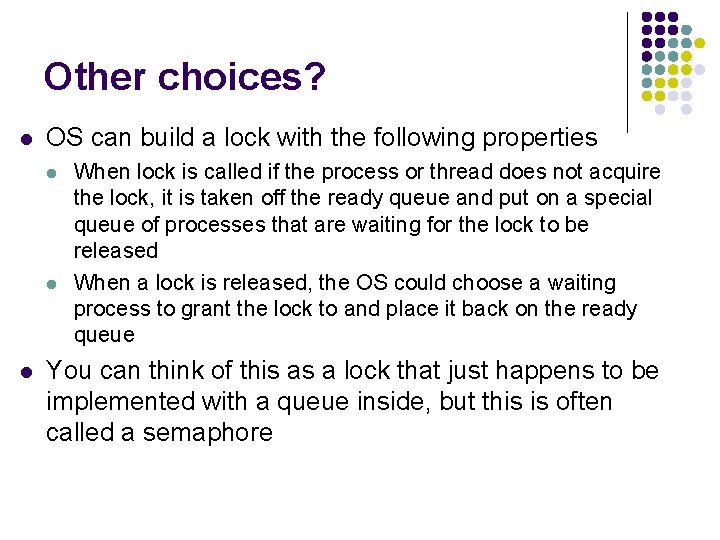

Other choices?

- The OS can build a lock with the following properties
  - When lock is called, if the process or thread does not acquire the lock, it is taken off the ready queue and put on a special queue of processes that are waiting for the lock to be released
  - When a lock is released, the OS can choose a waiting process to grant the lock to and place it back on the ready queue
  - You can think of this as a lock that just happens to be implemented with a queue inside, but this is often called a semaphore

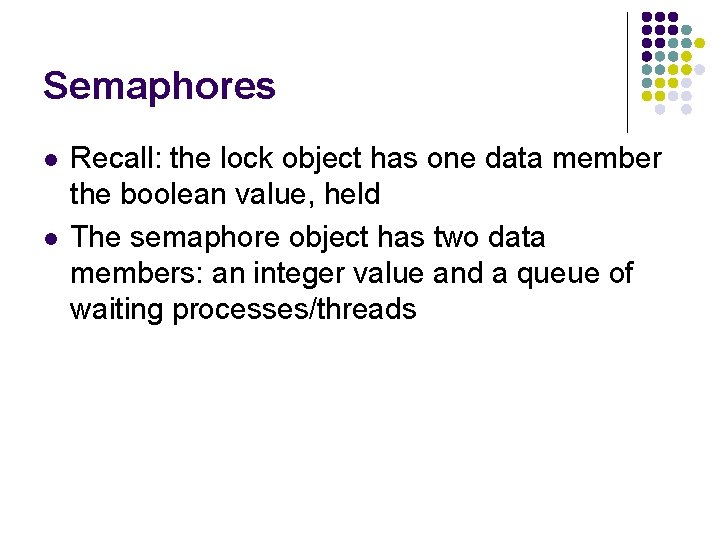

Semaphores

- Recall: the lock object has one data member, the boolean value held
- The semaphore object has two data members: an integer value and a queue of waiting processes/threads

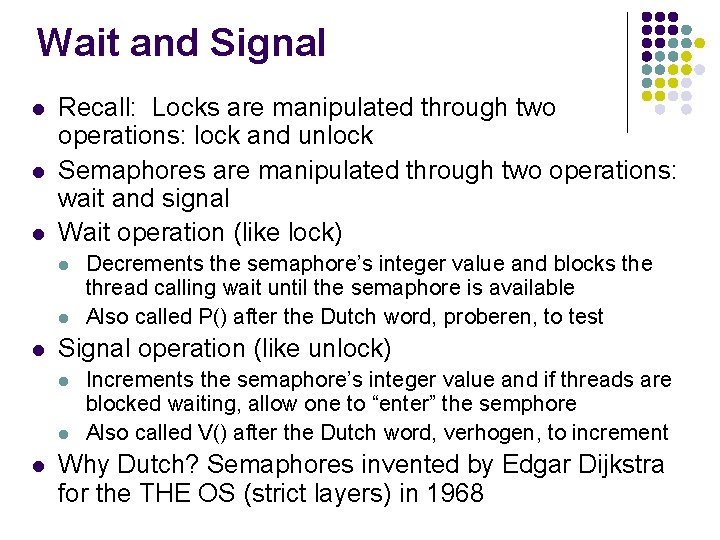

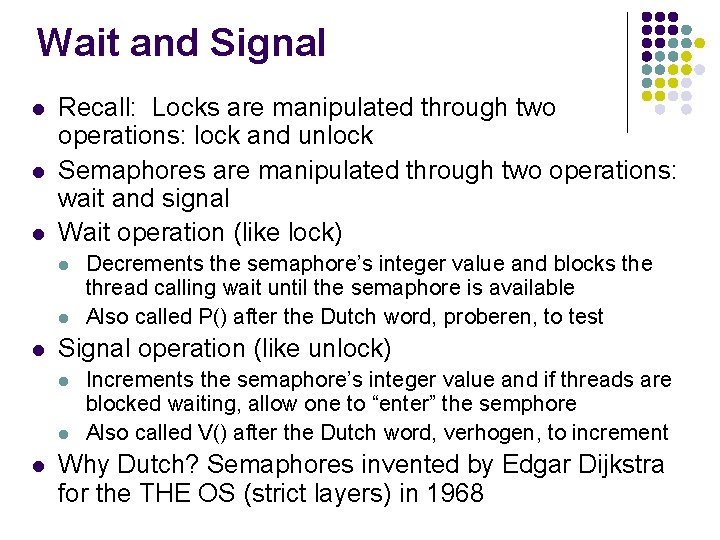

Wait and Signal

- Recall: locks are manipulated through two operations: lock and unlock
- Semaphores are manipulated through two operations: wait and signal
- Wait operation (like lock)
  - Decrements the semaphore’s integer value and blocks the thread calling wait until the semaphore is available
  - Also called P() after the Dutch word proberen, to test
- Signal operation (like unlock)
  - Increments the semaphore’s integer value and, if threads are blocked waiting, allows one to “enter” the semaphore
  - Also called V() after the Dutch word verhogen, to increment
- Why Dutch? Semaphores were invented by Edsger Dijkstra for the THE OS (strict layers) in 1968

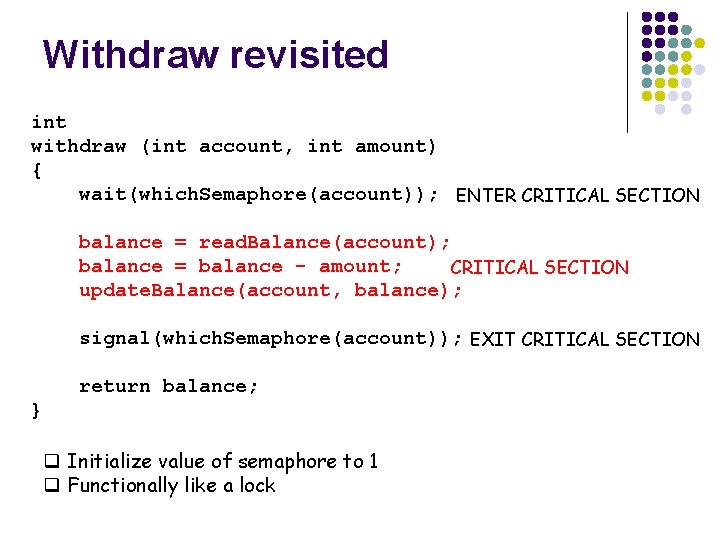

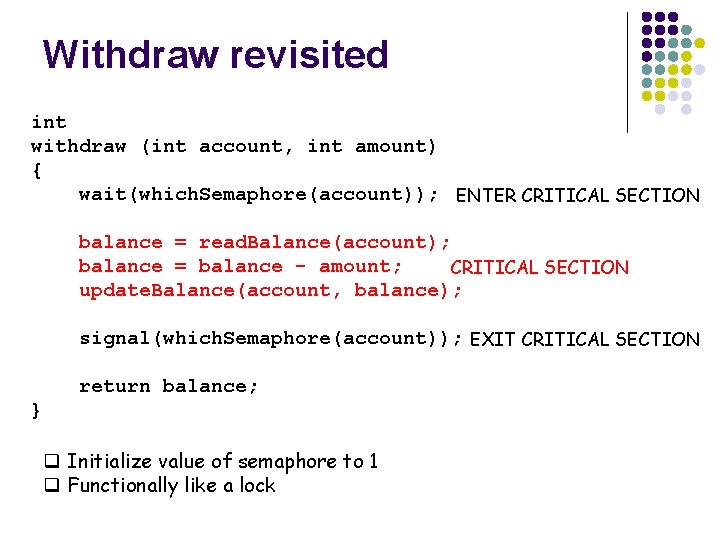

Withdraw revisited

    int withdraw(int account, int amount) {
        wait(whichSemaphore(account));      // ENTER CRITICAL SECTION
        int balance = readBalance(account); // CRITICAL SECTION
        balance = balance - amount;
        updateBalance(account, balance);
        signal(whichSemaphore(account));    // EXIT CRITICAL SECTION
        return balance;
    }

- Initialize the value of the semaphore to 1
- Functionally like a lock
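For comparison, here is a sketch of the same pattern using POSIX unnamed semaphores (`sem_init`, `sem_wait`, `sem_post`), where `sem_wait` plays the role of wait and `sem_post` the role of signal. It assumes a system that supports unnamed semaphores (Linux does). The single global account and the names `withdraw_one`, `teller`, and `run_withdraw_demo` are simplifications invented for this example.

```c
#include <assert.h>
#include <pthread.h>
#include <semaphore.h>
#include <stddef.h>

static sem_t account_sem;        /* binary semaphore guarding the balance */
static int account_balance;

int withdraw_one(int amount) {
    sem_wait(&account_sem);          /* enter critical section */
    int balance = account_balance;   /* read */
    balance = balance - amount;
    account_balance = balance;       /* update */
    sem_post(&account_sem);          /* exit critical section */
    return balance;
}

static void *teller(void *arg) {
    (void)arg;
    for (int i = 0; i < 1000; i++) {
        withdraw_one(1);
    }
    return NULL;
}

/* Four tellers each withdraw 1 unit 1000 times from a balance of 10000;
   returns the final balance. */
int run_withdraw_demo(void) {
    pthread_t t[4];
    account_balance = 10000;
    int rc = sem_init(&account_sem, 0, 1);   /* initial value 1 */
    assert(rc == 0);
    for (int i = 0; i < 4; i++) {
        rc = pthread_create(&t[i], NULL, teller, NULL);
        assert(rc == 0);
    }
    (void)rc;
    for (int i = 0; i < 4; i++) {
        pthread_join(t[i], NULL);
    }
    sem_destroy(&account_sem);
    return account_balance;
}
```

Because each read-modify-write of the balance happens between wait and signal, all 4000 withdrawals are applied and the final balance is 6000.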

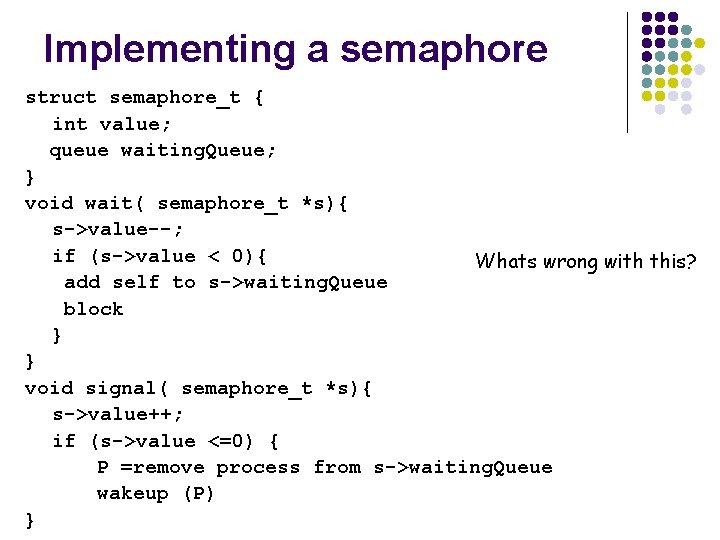

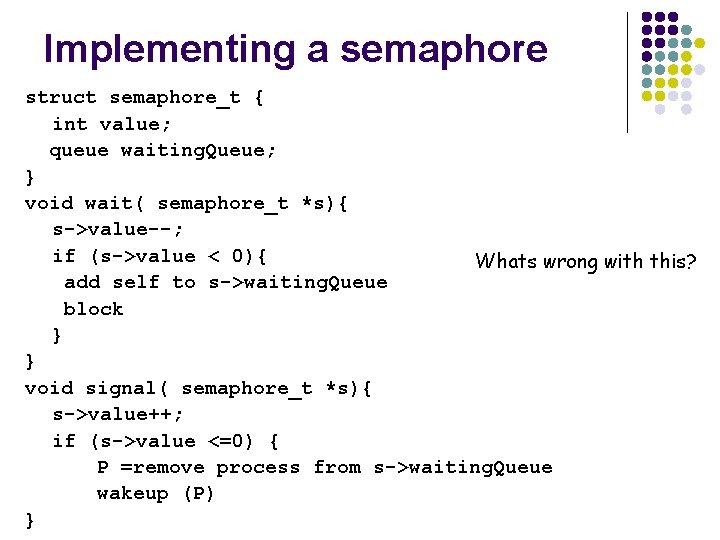

Implementing a semaphore

    struct semaphore_t {
        int value;
        queue waitingQueue;
    }

    void wait(semaphore_t *s) {
        s->value--;
        if (s->value < 0) {
            add self to s->waitingQueue
            block
        }
    }

    void signal(semaphore_t *s) {
        s->value++;
        if (s->value <= 0) {
            P = remove process from s->waitingQueue
            wakeup(P)
        }
    }

What’s wrong with this?

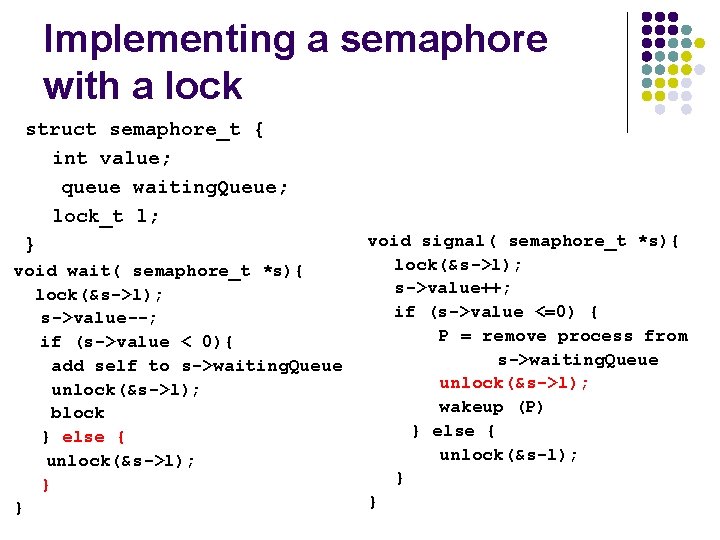

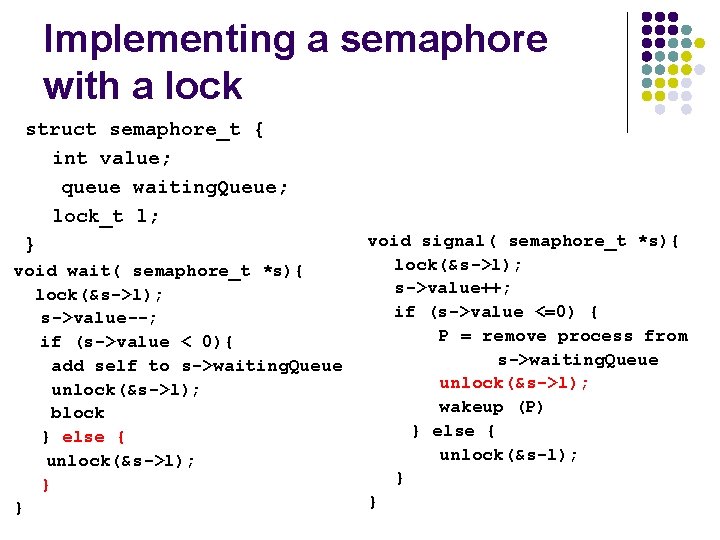

Implementing a semaphore with a lock

    struct semaphore_t {
        int value;
        queue waitingQueue;
        lock_t l;
    }

    void wait(semaphore_t *s) {
        lock(&s->l);
        s->value--;
        if (s->value < 0) {
            add self to s->waitingQueue
            unlock(&s->l);
            block
        } else {
            unlock(&s->l);
        }
    }

    void signal(semaphore_t *s) {
        lock(&s->l);
        s->value++;
        if (s->value <= 0) {
            P = remove process from s->waitingQueue
            unlock(&s->l);
            wakeup(P)
        } else {
            unlock(&s->l);
        }
    }
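The slide's `block` and `wakeup` need cooperation from a scheduler, so they cannot be written directly in portable user code. As a hedged approximation, the sketch below swaps in a pthread mutex for `lock_t` and a condition variable for the waiting queue. The `wakeups` counter is an addition not on the slide; it guards against spurious wakeups. `pthread_cond_wait` releases the mutex and blocks as one step, which stands in for the slide's "unlock, then block" sequence. All `ksem_*` names are hypothetical.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

typedef struct {
    int value;               /* may go negative: -value = number of waiters */
    int wakeups;             /* signals not yet consumed by a waiter */
    pthread_mutex_t lock;    /* protects value and wakeups */
    pthread_cond_t  queue;   /* stands in for the waiting queue */
} ksem_t;

void ksem_init(ksem_t *s, int initial) {
    s->value = initial;
    s->wakeups = 0;
    pthread_mutex_init(&s->lock, NULL);
    pthread_cond_init(&s->queue, NULL);
}

void ksem_wait(ksem_t *s) {
    pthread_mutex_lock(&s->lock);
    s->value--;
    if (s->value < 0) {
        do {
            /* atomically releases the mutex and blocks */
            pthread_cond_wait(&s->queue, &s->lock);
        } while (s->wakeups < 1);
        s->wakeups--;
    }
    pthread_mutex_unlock(&s->lock);
}

void ksem_signal(ksem_t *s) {
    pthread_mutex_lock(&s->lock);
    s->value++;
    if (s->value <= 0) {
        s->wakeups++;                    /* hand one waiter a wakeup */
        pthread_cond_signal(&s->queue);
    }
    pthread_mutex_unlock(&s->lock);
}

static ksem_t guard;
static long guarded_count;

static void *bump(void *arg) {
    (void)arg;
    for (int i = 0; i < 2000; i++) {
        ksem_wait(&guard);
        guarded_count++;                 /* critical section */
        ksem_signal(&guard);
    }
    return NULL;
}

/* Four threads each increment the guarded counter 2000 times. */
long run_ksem_demo(void) {
    pthread_t t[4];
    guarded_count = 0;
    ksem_init(&guard, 1);
    for (int i = 0; i < 4; i++) {
        int rc = pthread_create(&t[i], NULL, bump, NULL);
        assert(rc == 0);
        (void)rc;
    }
    for (int i = 0; i < 4; i++) {
        pthread_join(t[i], NULL);
    }
    return guarded_count;
}
```

The structure mirrors the slide: take the inner lock, adjust `value`, and either continue or wait. The inner critical section is only a few instructions long, which is why contention on it is brief.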

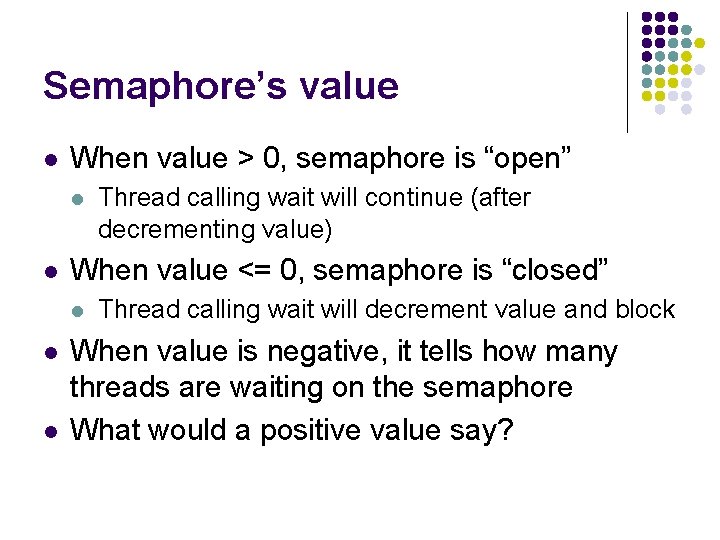

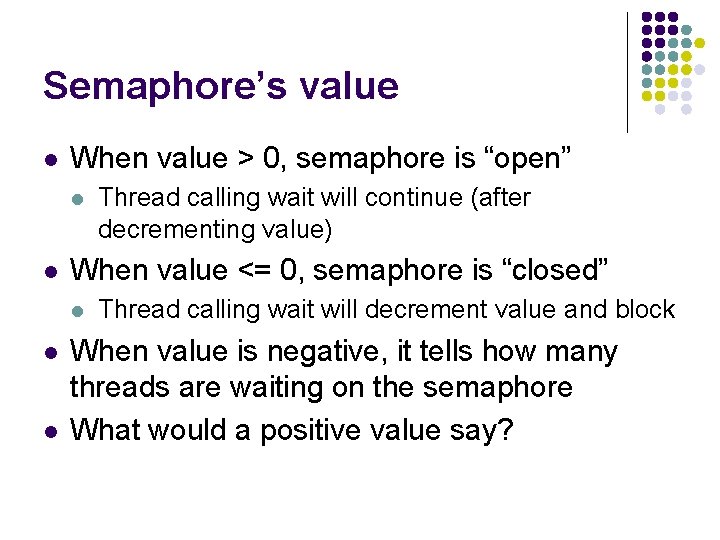

Semaphore’s value

- When value > 0, semaphore is “open”
  - Thread calling wait will continue (after decrementing value)
- When value <= 0, semaphore is “closed”
  - Thread calling wait will decrement value and block
  - When value is negative, it tells how many threads are waiting on the semaphore
- What would a positive value say?
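The bookkeeping can be traced with a tiny single-threaded model that only tracks `value` and never actually blocks. This is a hypothetical sketch for reasoning about the numbers; `sem_model_t` and the `model_*` functions are not real semaphore operations.

```c
#include <assert.h>
#include <stdbool.h>

/* Bookkeeping-only model of a semaphore: tracks the value, blocks nobody. */
typedef struct { int value; } sem_model_t;

/* Returns true if a real caller of wait would have to block. */
bool model_wait(sem_model_t *s) {
    s->value--;
    return s->value < 0;
}

/* Returns true if a real signal would wake a blocked thread. */
bool model_signal(sem_model_t *s) {
    s->value++;
    return s->value <= 0;
}

/* Number of threads that would currently be waiting. */
int model_waiters(const sem_model_t *s) {
    return s->value < 0 ? -s->value : 0;
}

/* Starts at `initial`, performs `waits` wait operations, and reports how
   many callers would be blocked afterwards. */
int trace_waiters(int initial, int waits) {
    sem_model_t s = { initial };
    assert(initial >= 0 && waits >= 0);
    for (int i = 0; i < waits; i++) {
        model_wait(&s);
    }
    return model_waiters(&s);
}
```

Starting from 2, five waits leave the value at -3, so three callers would be waiting. Starting from 3, two waits leave the value at 1: under this model a positive value is the number of further waits that can proceed without blocking.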

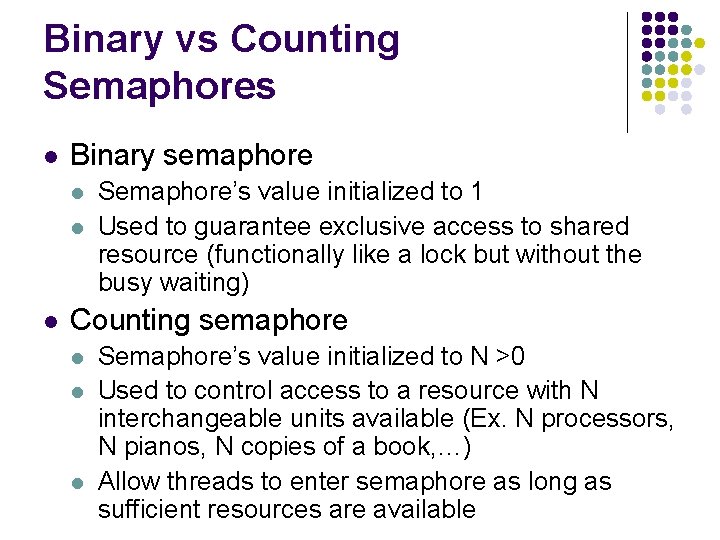

Binary vs Counting Semaphores

- Binary semaphore
  - Semaphore’s value initialized to 1
  - Used to guarantee exclusive access to a shared resource (functionally like a lock but without the busy waiting)
- Counting semaphore
  - Semaphore’s value initialized to N > 0
  - Used to control access to a resource with N interchangeable units available (e.g. N processors, N pianos, N copies of a book, …)
  - Allows threads to enter the semaphore as long as sufficient resources are available
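A counting semaphore can be sketched with a POSIX semaphore initialized to N. In the example below, `borrower` threads compete for `n_units` interchangeable units, and a mutex-protected high-water mark records the most units ever in use at once. The names and the demo harness are assumptions made for illustration.

```c
#include <assert.h>
#include <pthread.h>
#include <sched.h>
#include <semaphore.h>
#include <stddef.h>

static sem_t units;                       /* counting semaphore */
static pthread_mutex_t stats = PTHREAD_MUTEX_INITIALIZER;
static int in_use, peak;                  /* protected by stats */

static void *borrower(void *arg) {
    (void)arg;
    for (int i = 0; i < 200; i++) {
        sem_wait(&units);                 /* take one unit, or block */
        pthread_mutex_lock(&stats);
        in_use++;
        if (in_use > peak) peak = in_use; /* record high-water mark */
        pthread_mutex_unlock(&stats);

        sched_yield();                    /* pretend to use the unit */

        pthread_mutex_lock(&stats);
        in_use--;
        pthread_mutex_unlock(&stats);
        sem_post(&units);                 /* return the unit */
    }
    return NULL;
}

/* Runs n_threads borrowers over n_units units; returns the largest number
   of units that were ever held at the same time. */
int run_pool_demo(int n_units, int n_threads) {
    pthread_t t[16];
    assert(n_units > 0 && n_threads > 0 && n_threads <= 16);
    in_use = 0;
    peak = 0;
    int rc = sem_init(&units, 0, (unsigned)n_units);
    assert(rc == 0);
    for (int i = 0; i < n_threads; i++) {
        rc = pthread_create(&t[i], NULL, borrower, NULL);
        assert(rc == 0);
    }
    (void)rc;
    for (int i = 0; i < n_threads; i++) {
        pthread_join(t[i], NULL);
    }
    sem_destroy(&units);
    return peak;
}
```

With 3 units and 8 threads, the reported peak never exceeds 3. How close it gets to 3 depends on scheduling.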

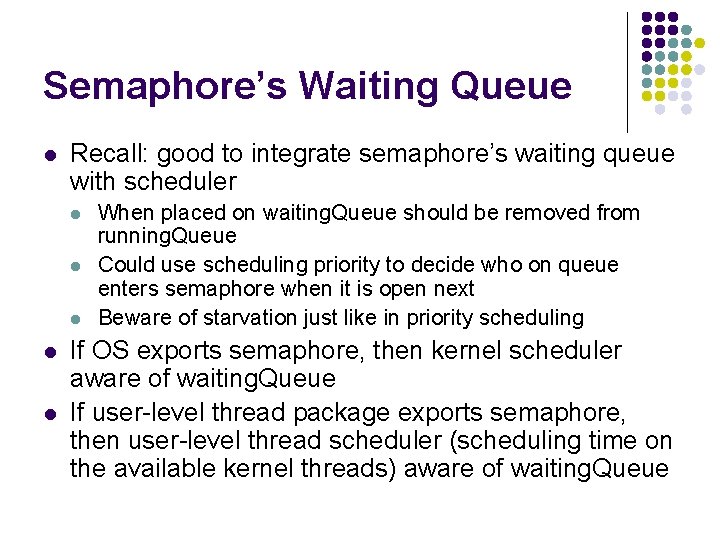

Semaphore’s Waiting Queue

- Recall: good to integrate the semaphore’s waiting queue with the scheduler
  - A thread placed on waitingQueue should be removed from runningQueue
  - Could use scheduling priority to decide who on the queue enters the semaphore when it is next open
  - Beware of starvation, just like in priority scheduling
- If the OS exports the semaphore, then the kernel scheduler is aware of waitingQueue
- If a user-level thread package exports the semaphore, then the user-level thread scheduler (scheduling time on the available kernel threads) is aware of waitingQueue

Is busy-waiting eliminated?

- Threads block on the queue associated with the semaphore instead of busy waiting
- Busy waiting is not gone completely
  - When accessing the semaphore’s critical section, a thread holds the semaphore’s lock, and another process that tries to call wait or signal at the same time will busy wait
  - The semaphore’s critical section is normally much smaller than the critical section it is protecting, so busy waiting is greatly minimized
  - Also avoids context switch overhead when just checking whether a thread can enter the critical section, and the semaphore knows all threads that are blocked on this object

Are spin locks always bad?

- Adaptive locking in Solaris
- Adaptive mutexes
  - Multiprocessor system, if can’t get lock
    - And thread with lock is not running, then sleep
    - And thread with lock is running, spin wait
  - Uniprocessor, if can’t get lock
    - Immediately sleep (no hope for the lock to be released while you are running)
- Programmers choose adaptive mutexes for short code segments and semaphores or condition variables for longer ones
- Blocked threads are placed on a separate queue for the desired object
  - The thread to gain access next is chosen by priority, and priority inheritance is implemented to counter priority inversion
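User-level code generally cannot ask whether the lock holder is currently running, so the Solaris policy cannot be reproduced exactly outside the kernel. A loose approximation is "spin briefly, then block": try `pthread_mutex_trylock` a bounded number of times and fall back to the blocking `pthread_mutex_lock`. The sketch below shows that swapped-in technique; `SPIN_LIMIT` and all function names are assumptions.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

#define SPIN_LIMIT 200   /* assumed tuning constant, not from the slides */

static pthread_mutex_t hits_mutex = PTHREAD_MUTEX_INITIALIZER;
static long hits;

/* Spin for a bounded number of attempts, then sleep until the mutex is free. */
void spin_then_block_lock(pthread_mutex_t *mu) {
    for (int i = 0; i < SPIN_LIMIT; i++) {
        if (pthread_mutex_trylock(mu) == 0) {
            return;              /* acquired while spinning */
        }
    }
    pthread_mutex_lock(mu);      /* give up spinning and block */
}

static void *hitter(void *arg) {
    (void)arg;
    for (int i = 0; i < 3000; i++) {
        spin_then_block_lock(&hits_mutex);
        hits++;                  /* short critical section */
        pthread_mutex_unlock(&hits_mutex);
    }
    return NULL;
}

/* Four threads each record 3000 hits; returns the total. */
long run_spin_then_block_demo(void) {
    pthread_t t[4];
    hits = 0;
    for (int i = 0; i < 4; i++) {
        int rc = pthread_create(&t[i], NULL, hitter, NULL);
        assert(rc == 0);
        (void)rc;
    }
    for (int i = 0; i < 4; i++) {
        pthread_join(t[i], NULL);
    }
    return hits;
}
```

The trade-off matches the slide: for short critical sections the lock is often released within the spin window, avoiding a context switch, while long waits end up sleeping instead of burning CPU.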