
Collectives Pattern
Parallel Computing CIS 410/510
Department of Computer and Information Science
Lecture 8 – Collective Pattern

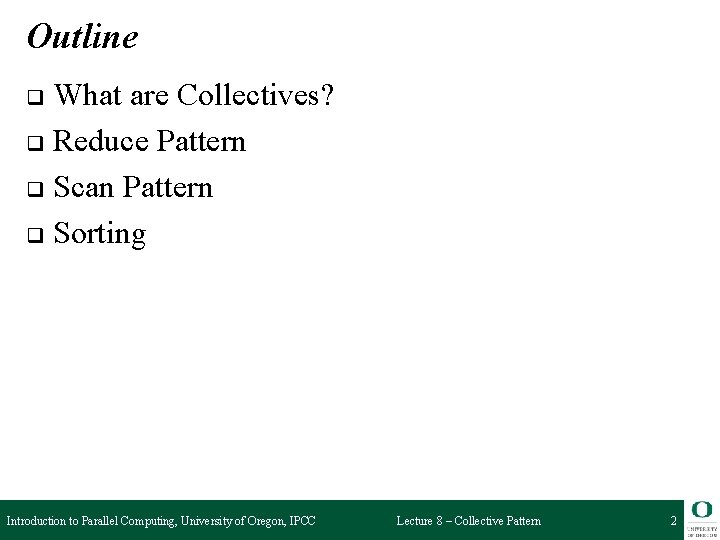

Outline
- What are Collectives?
- Reduce Pattern
- Scan Pattern
- Sorting

Introduction to Parallel Computing, University of Oregon, IPCC. Lecture 8 – Collective Pattern

Collectives
- Collective operations deal with a collection of data as a whole, rather than as separate elements.
- Collective patterns include:
  - Reduce
  - Scan
  - Partition
  - Scatter
  - Gather
- Reduce and Scan will be covered in this lecture.

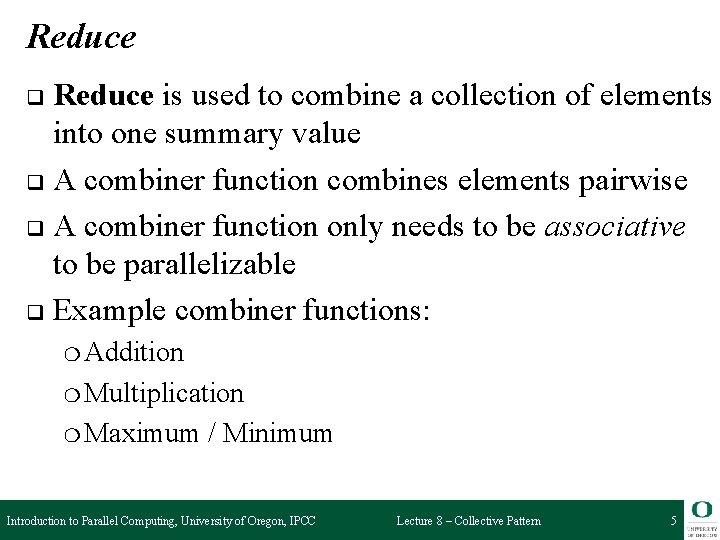

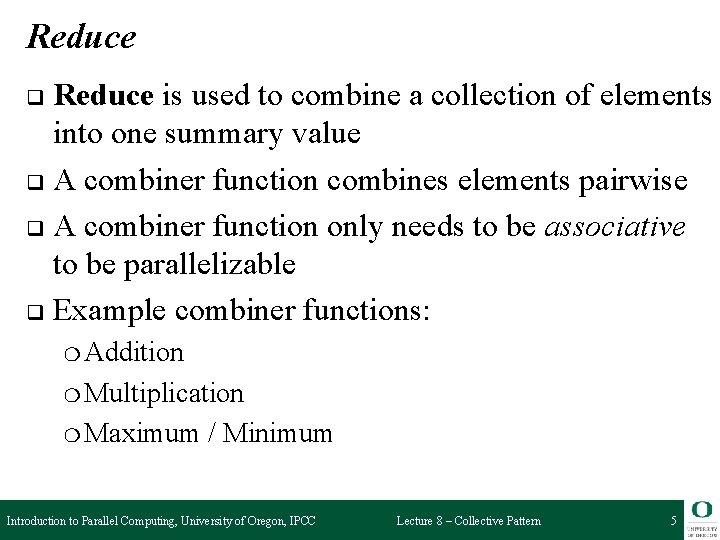

Reduce
- Reduce is used to combine a collection of elements into one summary value.
- A combiner function combines elements pairwise.
- A combiner function only needs to be associative for the reduction to be parallelizable.
- Example combiner functions:
  - Addition
  - Multiplication
  - Maximum / Minimum
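A minimal Python sketch of why associativity is enough: the same combiner applied serially (left to right) and in a balanced tree (neighbours combined pairwise, the shape a parallel reduction uses) gives the same answer. The names `serial_reduce` and `tree_reduce` are hypothetical, and the tree version runs sequentially here; it only models the order in which a parallel implementation would combine elements.

```python
import operator
from functools import reduce
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")

def serial_reduce(op: Callable[[T, T], T], xs: Sequence[T]) -> T:
    # ((x0 op x1) op x2) op ... : one dependency chain of n - 1 steps
    return reduce(op, xs)

def tree_reduce(op: Callable[[T, T], T], xs: Sequence[T]) -> T:
    # Combine neighbours pairwise, level by level (about log2(n) levels).
    # The combines within one level are independent, so they could run in parallel.
    level = list(xs)
    if not level:
        raise ValueError("reducing an empty sequence needs an identity element")
    while len(level) > 1:
        nxt = [op(level[i], level[i + 1]) for i in range(0, len(level) - 1, 2)]
        if len(level) % 2 == 1:
            nxt.append(level[-1])  # an odd element carries over to the next level
        level = nxt
    return level[0]

data = [1, 2, 5, 4, 9, 7, 0, 1]
for op in (operator.add, operator.mul, max):
    assert serial_reduce(op, data) == tree_reduce(op, data)
```

Because only neighbours are ever combined, element order is preserved, so even a non-commutative but associative combiner such as string concatenation gives the same result both ways.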

Reduce: serial reduction vs. parallel reduction (figures)

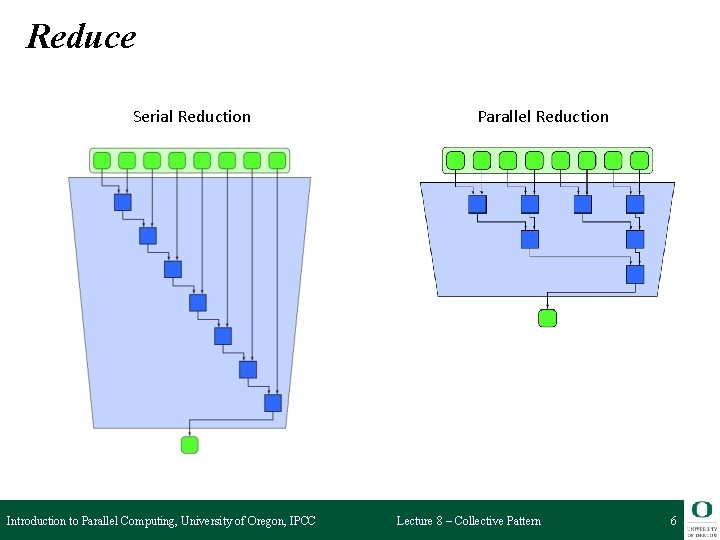

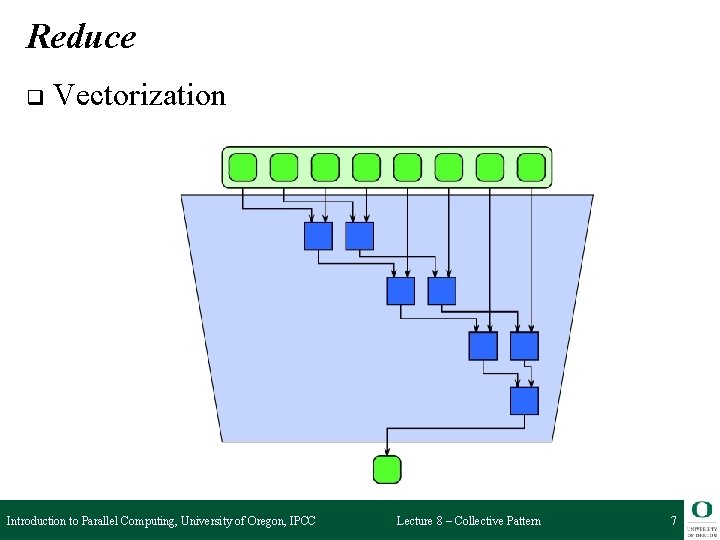

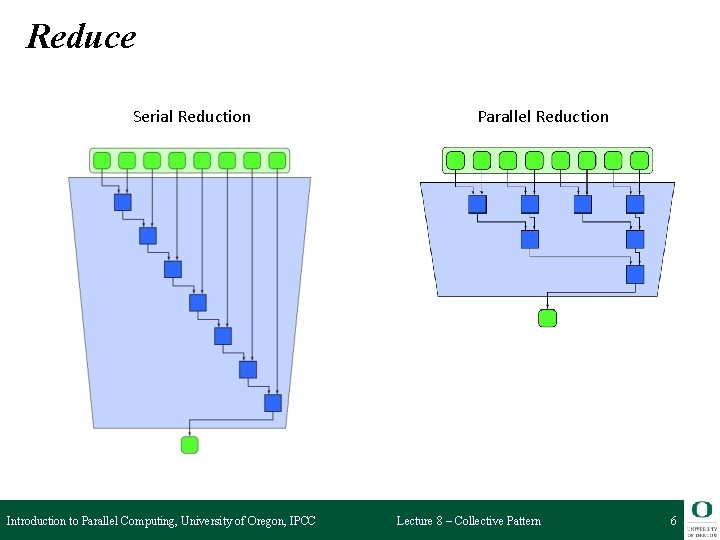

Reduce
- Vectorization
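One way to picture a vectorized reduction, sketched in Python under stated assumptions: keep one accumulator per SIMD lane, let each "vector step" update all lanes at once, then combine the lanes at the end. The `width` parameter stands in for a hypothetical vector length, and the inner loop stands in for a single SIMD instruction. Note that lane k accumulates elements k, k + width, ..., so this reorders the input; that is safe for combiners that are also commutative (such as +, *, max).

```python
import operator

def vectorized_reduce(op, xs, identity, width=4):
    # One accumulator per lane, all starting at the identity.
    lanes = [identity] * width
    # Main loop: each iteration processes one "vector" of up to `width` elements;
    # the last chunk may be shorter, which handles the tail.
    for start in range(0, len(xs), width):
        chunk = xs[start:start + width]
        for k, x in enumerate(chunk):  # models one SIMD operation across lanes
            lanes[k] = op(lanes[k], x)
    # Final horizontal reduction across the lanes.
    result = identity
    for acc in lanes:
        result = op(result, acc)
    return result

data = [1, 2, 5, 4, 9, 7, 0, 1, 3]
print(vectorized_reduce(operator.add, data, 0))  # 32
```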

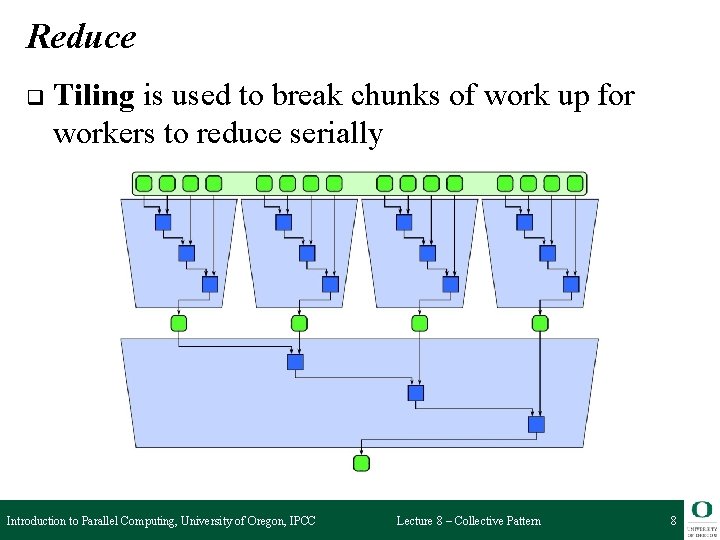

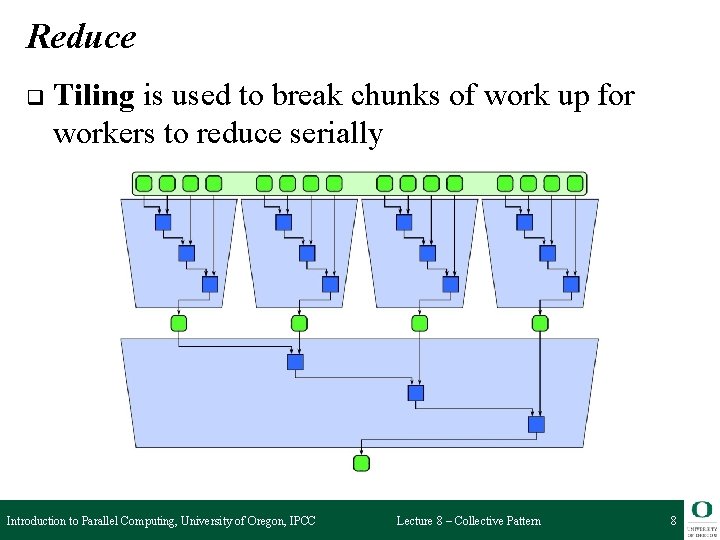

Reduce
- Tiling is used to break the work into chunks (tiles) that workers reduce serially.
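A minimal sketch of tiled reduction, assuming contiguous tiles and a thread pool as the "workers": each worker reduces one tile serially, and the per-tile partial results are then combined. `tiled_reduce`, `tile_size`, and `workers` are hypothetical names. In CPython the GIL means threads will not speed up pure-Python arithmetic; the point is the structure, not the timing.

```python
import operator
from concurrent.futures import ThreadPoolExecutor
from functools import reduce

def tiled_reduce(op, xs, identity, tile_size=4, workers=4):
    # Split the input into contiguous tiles; contiguity keeps element order,
    # so the combiner only needs to be associative.
    tiles = [xs[i:i + tile_size] for i in range(0, len(xs), tile_size)]

    def reduce_tile(tile):
        # Each worker reduces its own tile serially.
        return reduce(op, tile, identity)

    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(reduce_tile, tiles))  # results come back in tile order
    # Few partial results remain, so combine them serially.
    return reduce(op, partials, identity)

print(tiled_reduce(operator.add, list(range(1, 101)), 0, tile_size=7))  # 5050
```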

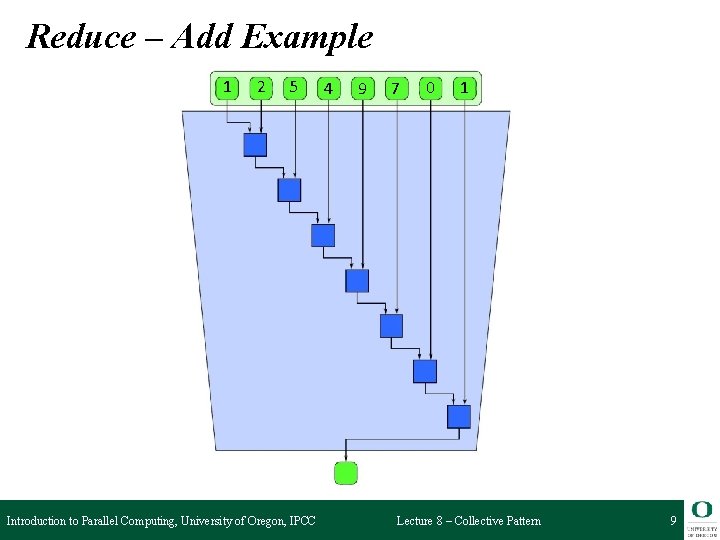

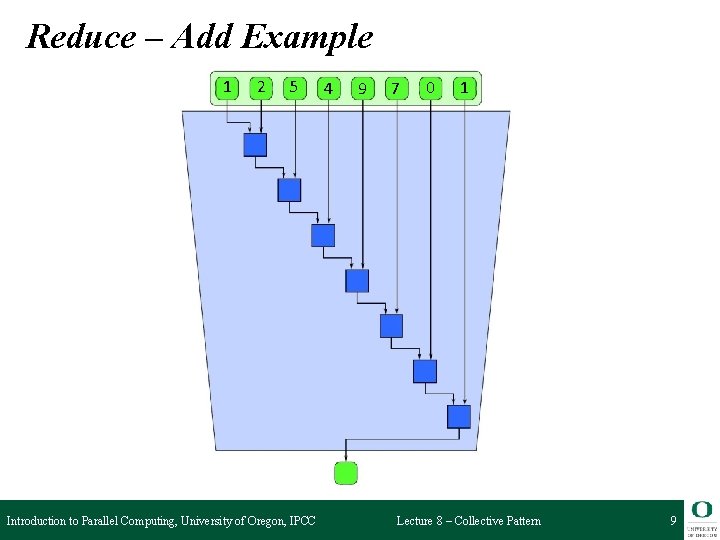

Reduce – Add Example (serial order)
- Input: 1 2 5 4 9 7 0 1
- Partial sums, left to right: 3 8 12 21 28 28 29
- Result: 29
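The serial add example on input 1 2 5 4 9 7 0 1 can be reproduced with a running fold; the values after the first element are exactly the partial sums a serial reduction computes, seven dependent additions for eight inputs. This uses only the standard library.

```python
import operator
from itertools import accumulate

xs = [1, 2, 5, 4, 9, 7, 0, 1]
# accumulate yields xs[0], xs[0]+xs[1], ...; drop the first value (just xs[0])
steps = list(accumulate(xs, operator.add))[1:]
total = steps[-1]
print(steps)  # [3, 8, 12, 21, 28, 28, 29]
print(total)  # 29
```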

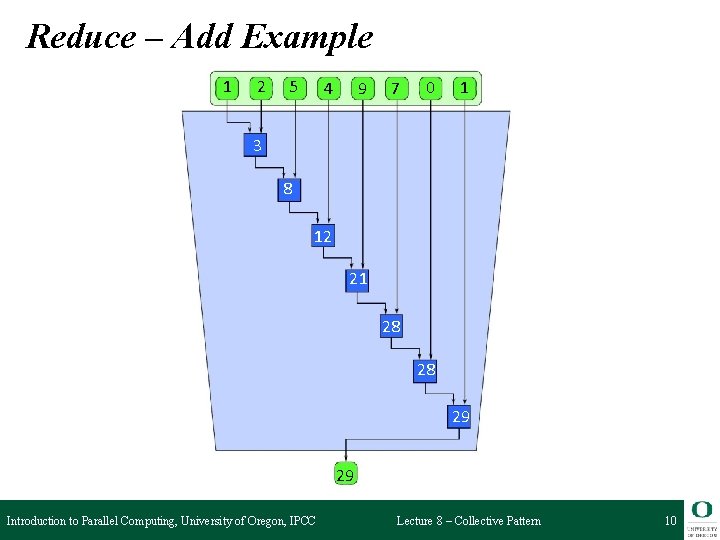

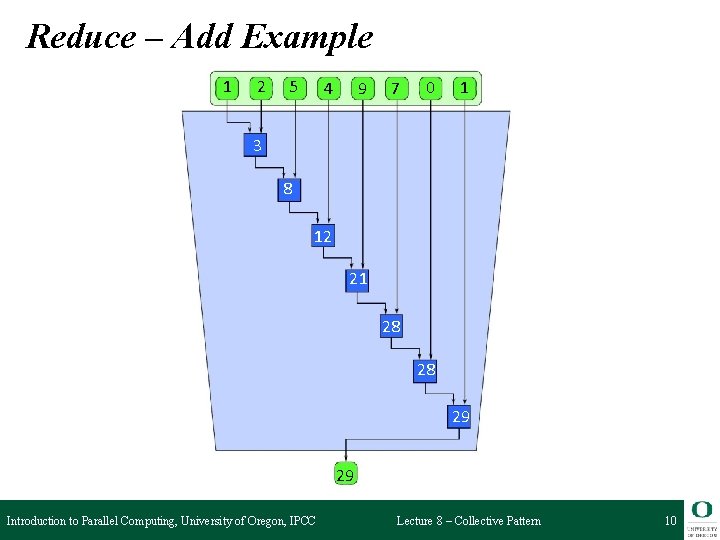

Reduce – Add Example 1 2 5 4 Introduction to Parallel Computing, University of Oregon, IPCC 9 7 0 Lecture 8 – Collective Pattern 1 11

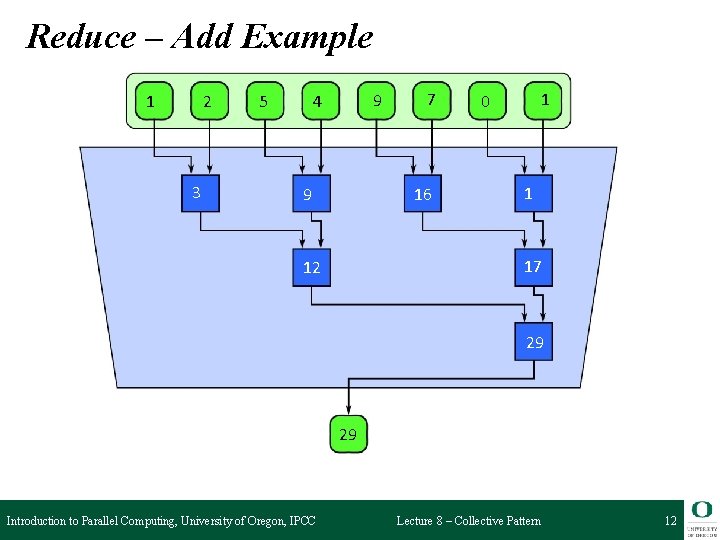

Reduce – Add Example 1 2 3 5 9 4 9 7 16 1 0 1 17 12 29 29 Introduction to Parallel Computing, University of Oregon, IPCC Lecture 8 – Collective Pattern 12
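The tree-order add example can be checked level by level. This sketch assumes a power-of-two input length (true for the eight-element example); `tree_levels` is a hypothetical helper name. Each level halves the number of values, so eight inputs take three levels instead of seven serial steps.

```python
def tree_levels(xs):
    # Return every level of a pairwise (tree-shaped) addition.
    n = len(xs)
    if n & (n - 1):
        raise ValueError("this sketch assumes a power-of-two length")
    levels = [list(xs)]
    while len(levels[-1]) > 1:
        prev = levels[-1]
        # All additions in one level are independent of each other.
        levels.append([prev[i] + prev[i + 1] for i in range(0, len(prev), 2)])
    return levels

for level in tree_levels([1, 2, 5, 4, 9, 7, 0, 1]):
    print(level)
# [1, 2, 5, 4, 9, 7, 0, 1]
# [3, 9, 16, 1]
# [12, 17]
# [29]
```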

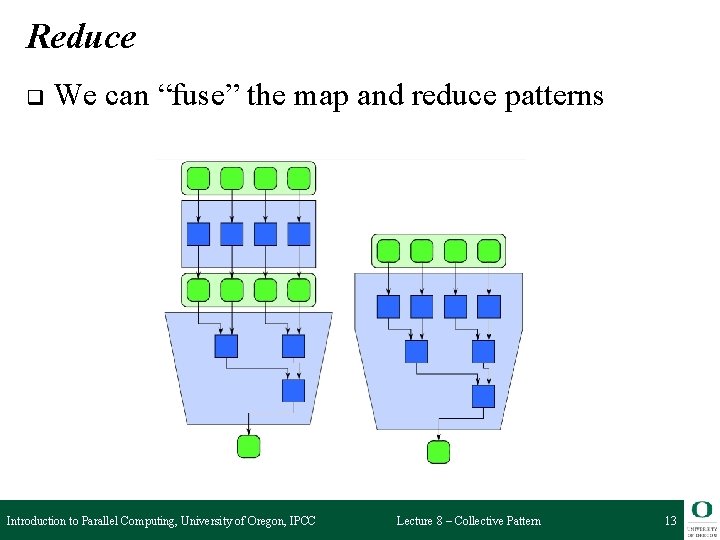

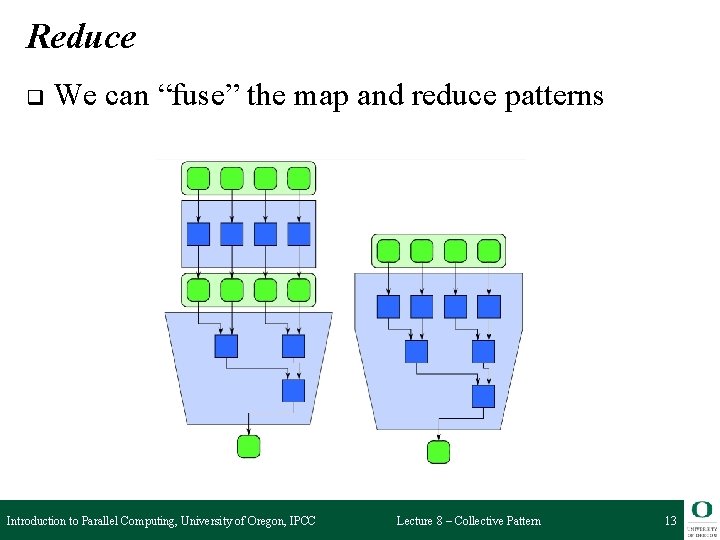

Reduce
- We can “fuse” the map and reduce patterns.
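A small sketch of what fusion buys, using a sum of squares as an assumed example: the unfused version materializes the mapped collection and then makes a second pass over it, while the fused version applies the map inside the combiner in a single pass. In a parallel setting the same idea would put the map inside each worker's serial tile reduction; the function names here are hypothetical.

```python
import operator
from functools import reduce

def unfused_sum_of_squares(xs):
    squares = [x * x for x in xs]             # map: writes a temporary list
    return reduce(operator.add, squares, 0)   # reduce: second pass over that list

def fused_sum_of_squares(xs):
    # Map and combine in one pass: each element is squared and folded in
    # immediately, so no intermediate collection is created.
    return reduce(lambda acc, x: acc + x * x, xs, 0)

print(fused_sum_of_squares([1, 2, 3, 4]))  # 30
```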

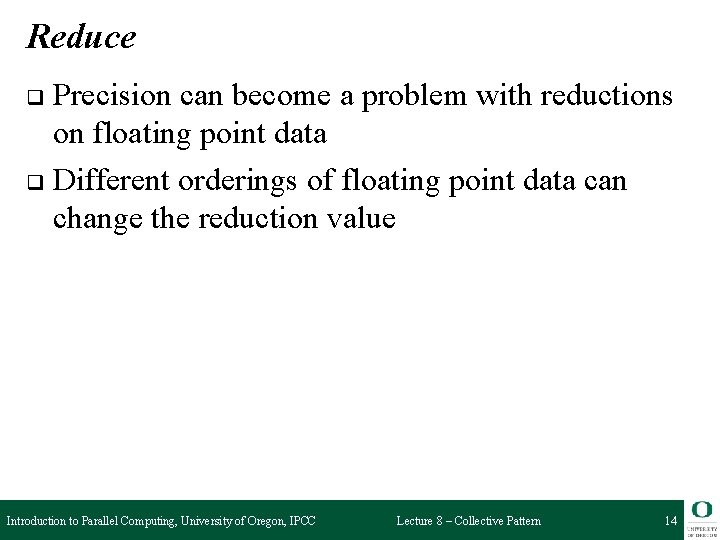

Reduce
- Precision can become a problem with reductions on floating-point data.
- Different orderings of floating-point data can change the reduction value, because floating-point addition is not exactly associative (each operation rounds its result).
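A three-element example of the ordering problem with IEEE double precision: grouping the same values differently gives different sums, which is exactly what happens when a parallel reduction uses a different combining order than a serial loop. `math.fsum` is shown as one standard-library mitigation (it tracks exact partial sums); it is not something the slides prescribe.

```python
import math

xs = [1e16, -1e16, 1.0]

left_to_right = (xs[0] + xs[1]) + xs[2]   # (1e16 - 1e16) + 1.0 = 1.0
right_to_left = xs[0] + (xs[1] + xs[2])   # -1e16 + 1.0 rounds back to -1e16, giving 0.0
print(left_to_right, right_to_left)       # 1.0 0.0
print(math.fsum(xs))                      # 1.0
```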

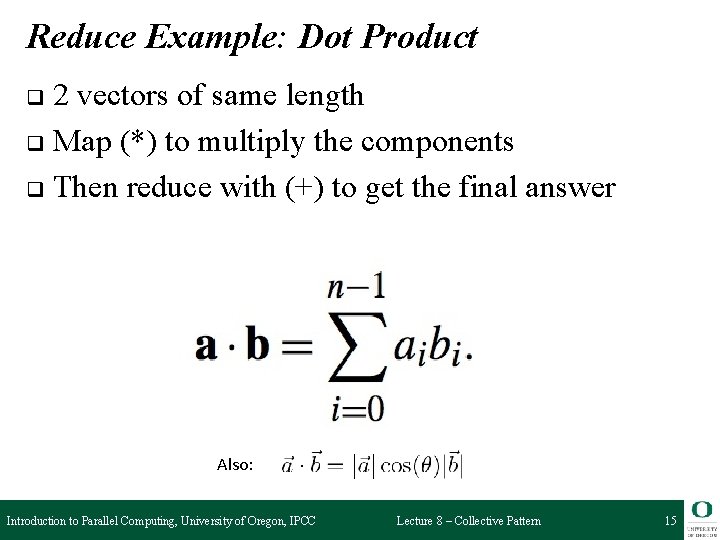

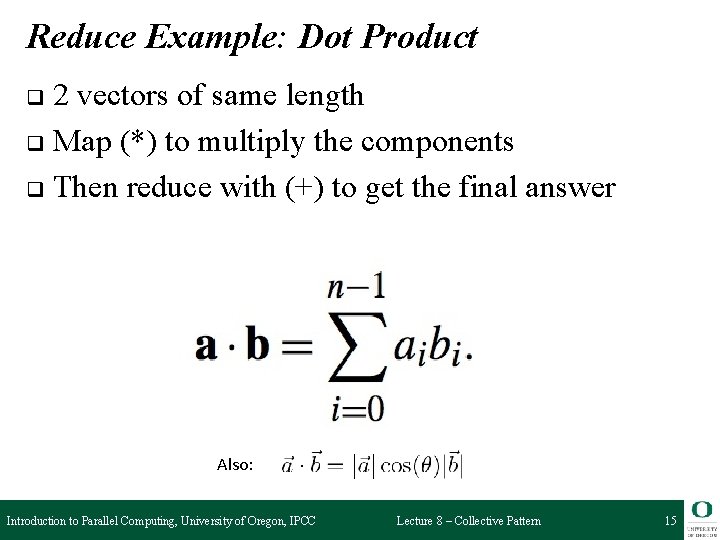

Reduce Example: Dot Product
- Input: two vectors of the same length
- Map (*) to multiply the components
- Then reduce with (+) to get the final answer
- Also:
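The map-then-reduce recipe for the dot product translates directly into Python. Because Python's built-in `map` is lazy, the products are consumed one at a time by `reduce`, so this version is effectively a fused map-reduce. The function name `dot` is an illustrative choice.

```python
import operator
from functools import reduce

def dot(a, b):
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    products = map(operator.mul, a, b)         # map (*) over component pairs
    return reduce(operator.add, products, 0)   # reduce with (+)

print(dot([1, 2, 3], [4, 5, 6]))  # 32
print(dot([0, 5], [2, 0]))        # 0: perpendicular vectors
```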

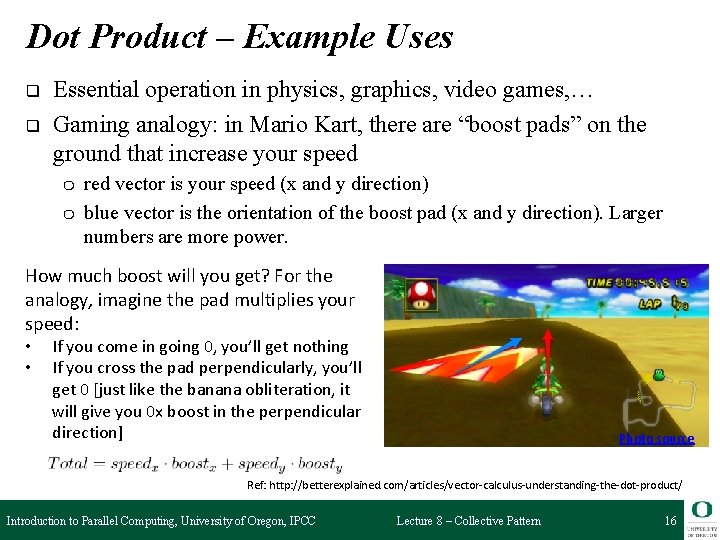

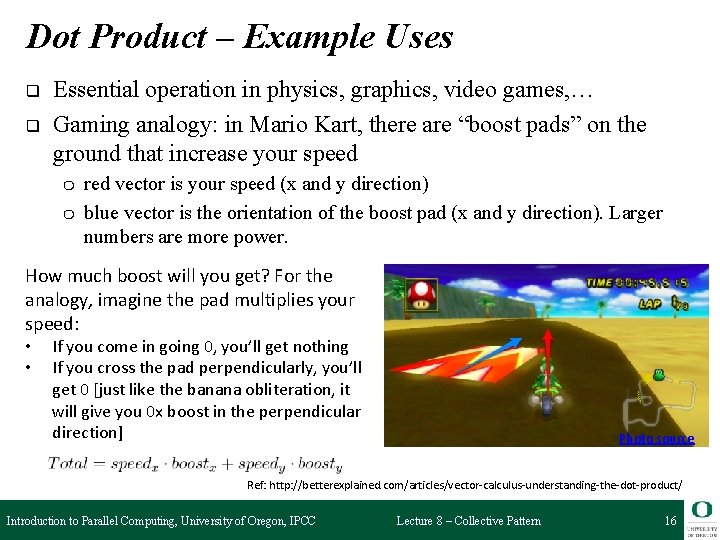

Dot Product – Example Uses
- Essential operation in physics, graphics, video games, …
- Gaming analogy: in Mario Kart, there are “boost pads” on the ground that increase your speed.
  - The red vector is your speed (x and y direction).
  - The blue vector is the orientation of the boost pad (x and y direction). Larger numbers mean more power.
- How much boost will you get? For the analogy, imagine the pad multiplies your speed:
  - If you come in going 0, you’ll get nothing.
  - If you cross the pad perpendicularly, you’ll get 0 [just like the banana obliteration, it will give you 0 x boost in the perpendicular direction].

Photo source
Ref: http://betterexplained.com/articles/vector-calculus-understanding-the-dot-product/

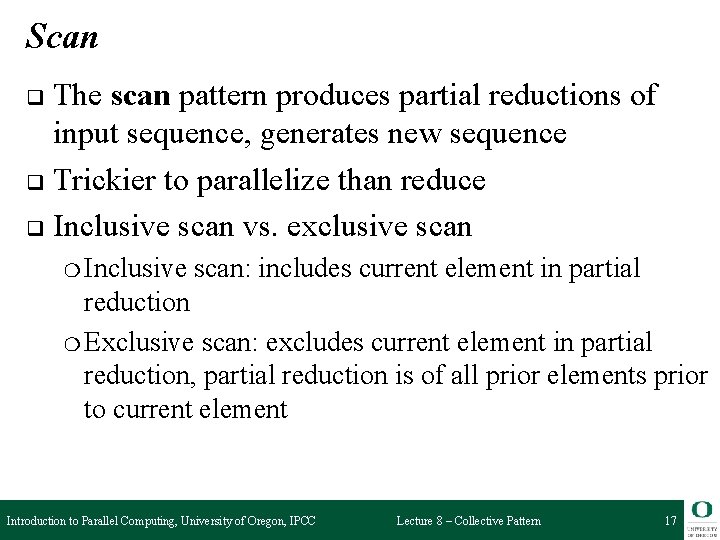

Scan
- The scan pattern produces the partial reductions of an input sequence, generating a new sequence.
- Trickier to parallelize than reduce.
- Inclusive scan vs. exclusive scan:
  - Inclusive scan: includes the current element in the partial reduction.
  - Exclusive scan: excludes the current element; the partial reduction covers only the elements before the current element.
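Inclusive and exclusive scans can be sketched with `itertools.accumulate` (the `initial` argument requires Python 3.8 or later). The exclusive version seeds the scan with the combiner's identity and drops the final total, so each output covers only the elements before it. The helper names are hypothetical.

```python
import operator
from itertools import accumulate

def inclusive_scan(xs, op=operator.add):
    # out[i] = xs[0] op xs[1] op ... op xs[i]
    return list(accumulate(xs, op))

def exclusive_scan(xs, identity=0, op=operator.add):
    # out[i] = identity op xs[0] op ... op xs[i-1]; the overall total is dropped
    return list(accumulate(xs, op, initial=identity))[:-1]

xs = [1, 2, 5, 4]
print(inclusive_scan(xs))  # [1, 3, 8, 12]
print(exclusive_scan(xs))  # [0, 1, 3, 8]
```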

Scan – Example Uses
- Lexical comparison of strings, e.g., determine that “strategy” should appear before “stratification” in a dictionary
- Add multi-precision numbers (those that cannot be represented in a single machine word)
- Evaluate polynomials
- Implement radix sort or quicksort
- Delete marked elements in an array
- Dynamically allocate processors
- Lexical analysis: parsing programs into tokens
- Searching for regular expressions
- Labeling components in 2-D images
- Some tree algorithms, e.g., finding the depth of every vertex in a tree
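To make one item from this list concrete, here is a minimal sketch of deleting marked elements with an exclusive scan (often called stream compaction). The scan of the keep-flags tells every surviving element its output position, so all writes are independent and could happen in parallel. `compact` and its `keep` argument are hypothetical names.

```python
from itertools import accumulate

def compact(xs, keep):
    # keep[i] is True for elements that survive (are not marked for deletion)
    flags = [1 if k else 0 for k in keep]
    # Exclusive prefix sum of the flags; the extra last entry is the survivor count.
    positions = list(accumulate(flags, initial=0))
    out = [None] * positions[-1]
    for x, f, pos in zip(xs, flags, positions):
        if f:
            out[pos] = x  # each survivor writes to its own slot: a parallel scatter
    return out

print(compact([14, 3, 4, 8, 7, 52, 1], [True, False, True, True, False, True, False]))
# [14, 4, 8, 52]
```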

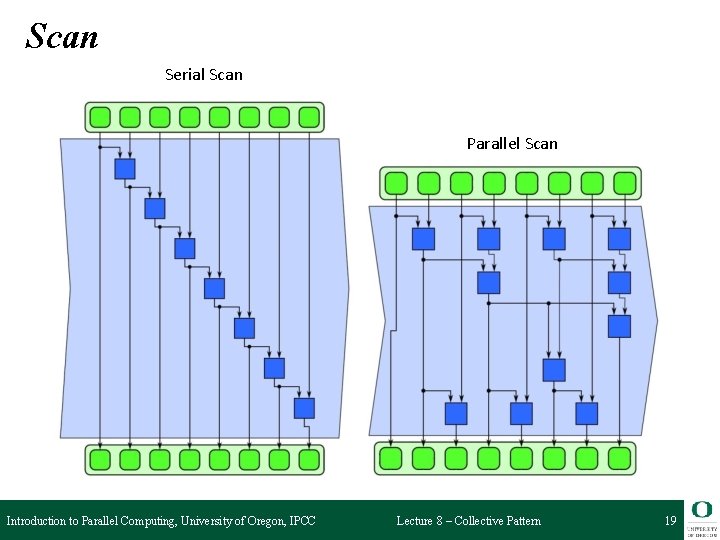

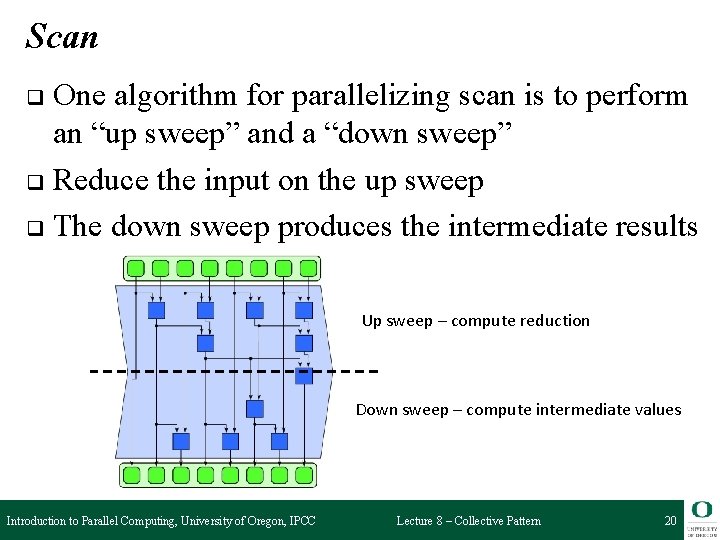

Scan: serial scan vs. parallel scan (figures)

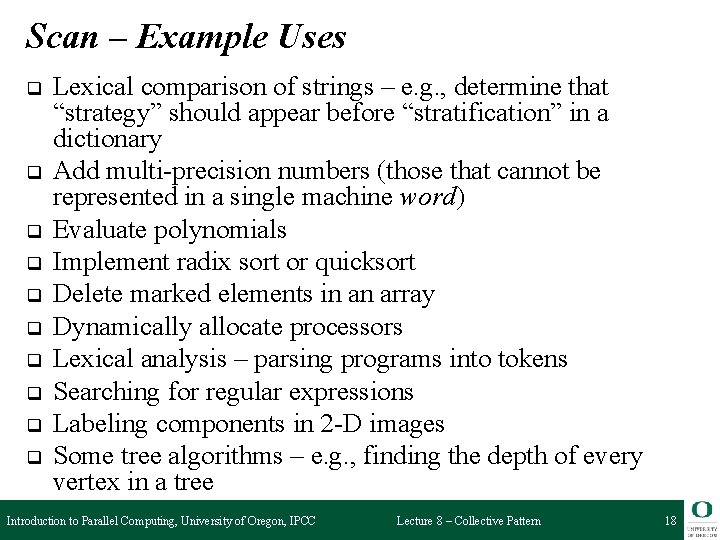

Scan
- One algorithm for parallelizing scan is to perform an “up sweep” followed by a “down sweep”.
  - The up sweep reduces the input (computes the reduction).
  - The down sweep produces the intermediate results (computes the intermediate values).
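A sketch of one well-known up-sweep/down-sweep formulation (the work-efficient exclusive scan usually attributed to Blelloch); the figures in the slides may use a different variant. It assumes a power-of-two input length, runs sequentially, and treats each inner `for` loop as one parallel step whose iterations are independent. The function name is hypothetical.

```python
import operator

def blelloch_exclusive_scan(xs, op=operator.add, identity=0):
    n = len(xs)
    if n == 0 or n & (n - 1):
        raise ValueError("this sketch assumes a power-of-two length")
    a = list(xs)
    # Up sweep: build a reduction tree in place; a[n - 1] ends up holding the total.
    d = 1
    while d < n:
        for i in range(2 * d - 1, n, 2 * d):
            a[i] = op(a[i - d], a[i])
        d *= 2
    total = a[n - 1]
    # Down sweep: replace the root with the identity and push prefixes down the tree.
    a[n - 1] = identity
    d = n // 2
    while d >= 1:
        for i in range(2 * d - 1, n, 2 * d):
            left = a[i - d]
            a[i - d] = a[i]        # left child receives its parent's prefix
            a[i] = op(a[i], left)  # right child: parent prefix op left-subtree sum
        d //= 2
    return a, total

prefix, total = blelloch_exclusive_scan([1, 2, 5, 4, 9, 7, 0, 1])
print(prefix, total)  # [0, 1, 3, 8, 12, 21, 28, 28] 29
```

The operands are kept in input order (prefix on the left), so the sketch also works for combiners that are associative but not commutative.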

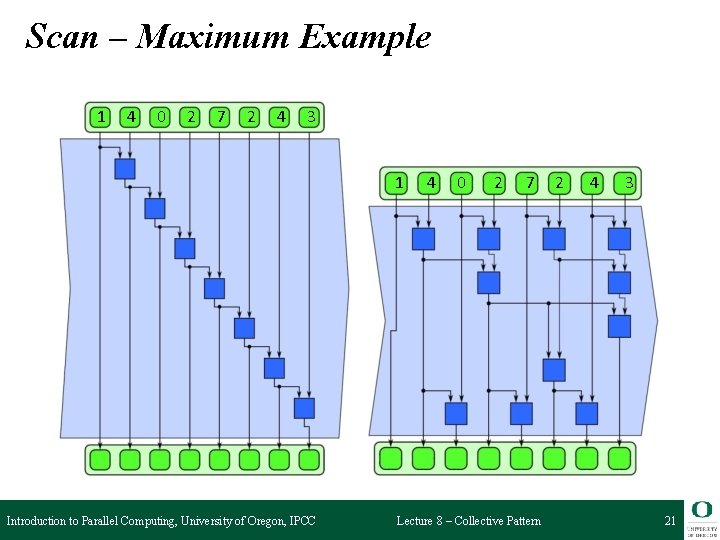

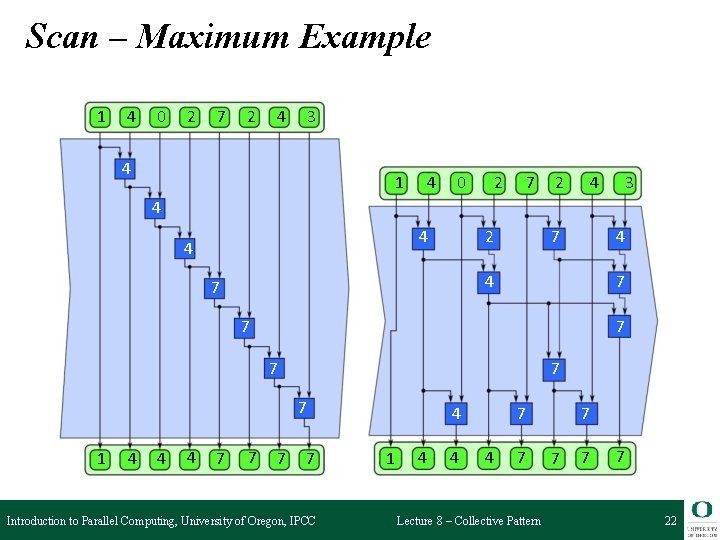

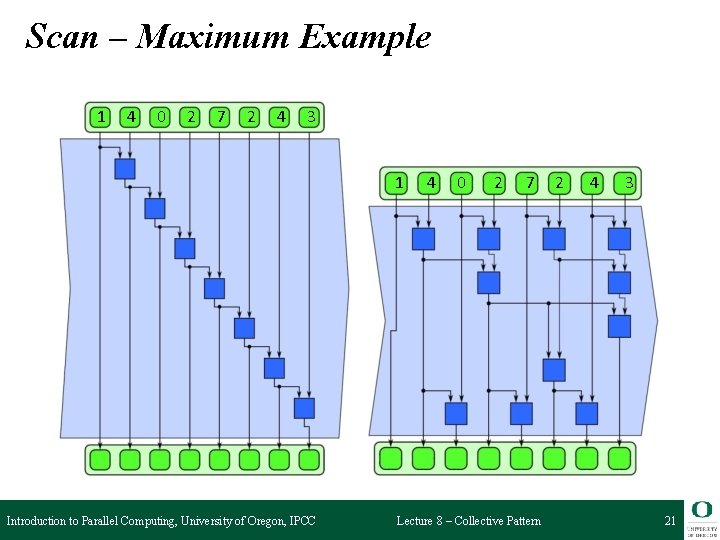

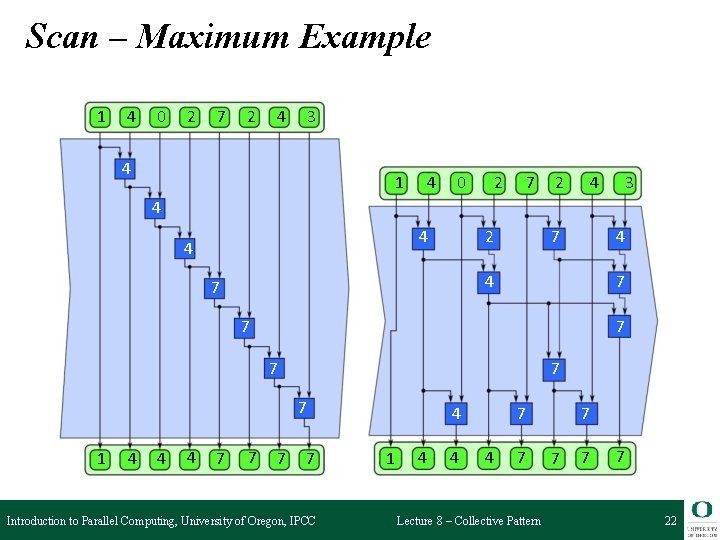

Scan – Maximum Example
- Input: 1 4 0 2 7 2 4 3
- Inclusive max-scan result: 1 4 4 4 7 7 7 7
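The maximum example can be checked with a different, simpler parallel scan than up sweep/down sweep: the Hillis-Steele scan, which takes about log2(n) steps but does O(n log n) work. It is named here plainly as a swapped-in technique for illustration, not the algorithm in the slide figures. In each step every element combines with the element d positions to its left, reading only the previous step's values, so all updates within a step are independent.

```python
import operator

def hillis_steele_inclusive_scan(xs, op):
    a = list(xs)
    d = 1
    while d < len(a):
        # New array built entirely from the previous step's values.
        a = [a[i] if i < d else op(a[i - d], a[i]) for i in range(len(a))]
        d *= 2
    return a

print(hillis_steele_inclusive_scan([1, 4, 0, 2, 7, 2, 4, 3], max))
# [1, 4, 4, 4, 7, 7, 7, 7]
print(hillis_steele_inclusive_scan([1, 2, 5, 4, 9, 7, 0, 1], operator.add))
# [1, 3, 8, 12, 21, 28, 28, 29]
```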

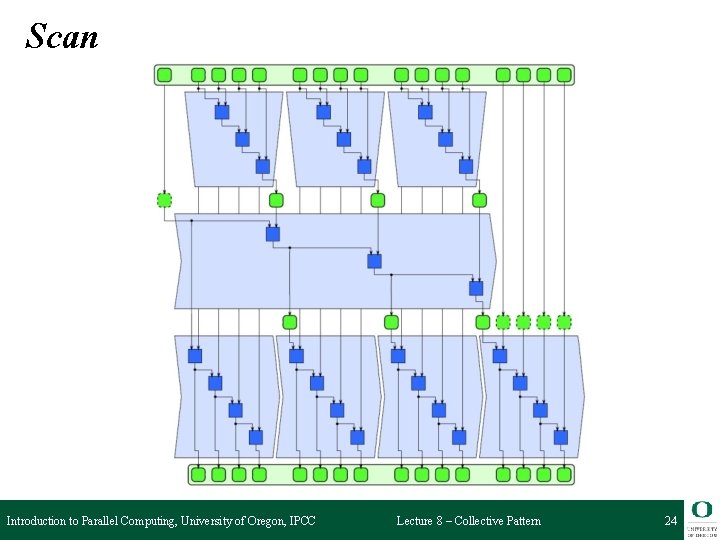

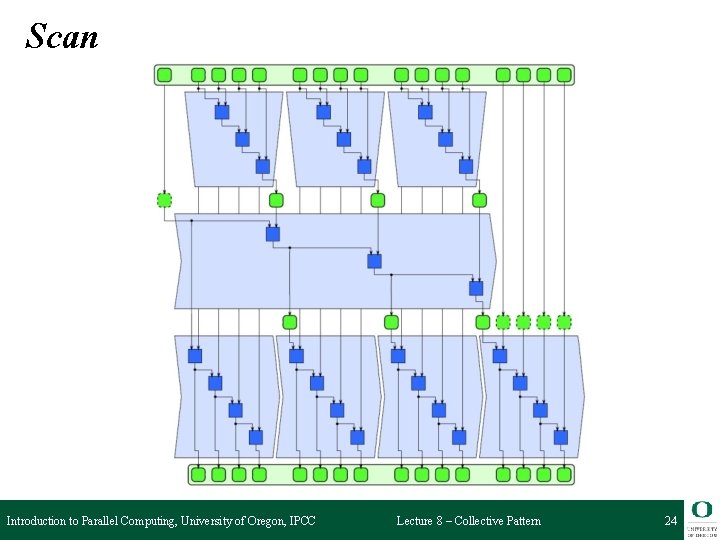

Scan
- Three-phase scan with tiling
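A minimal sketch of a three-phase tiled scan, assuming the common reduce-then-scan arrangement (the slides' exact phase split may differ): reduce each tile, scan the small array of tile totals to get each tile's starting offset, then scan each tile seeded with its offset. Phases 1 and 3 are independent across tiles; phase 2 touches only one value per tile. `three_phase_scan` and `tile_size` are hypothetical names.

```python
import operator
from functools import reduce
from itertools import accumulate

def three_phase_scan(xs, tile_size=3, op=operator.add, identity=0):
    tiles = [xs[i:i + tile_size] for i in range(0, len(xs), tile_size)]
    # Phase 1 (parallel over tiles): reduce each tile to a single value.
    tile_totals = [reduce(op, t, identity) for t in tiles]
    # Phase 2 (small, serial): exclusive scan of tile totals gives each tile's offset.
    offsets = list(accumulate(tile_totals, op, initial=identity))[:-1]
    # Phase 3 (parallel over tiles): inclusive scan of each tile, seeded with its offset.
    out = []
    for off, t in zip(offsets, tiles):
        out.extend(list(accumulate(t, op, initial=off))[1:])
    return out

print(three_phase_scan([1, 2, 5, 4, 9, 7, 0, 1]))  # [1, 3, 8, 12, 21, 28, 28, 29]
```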

Scan

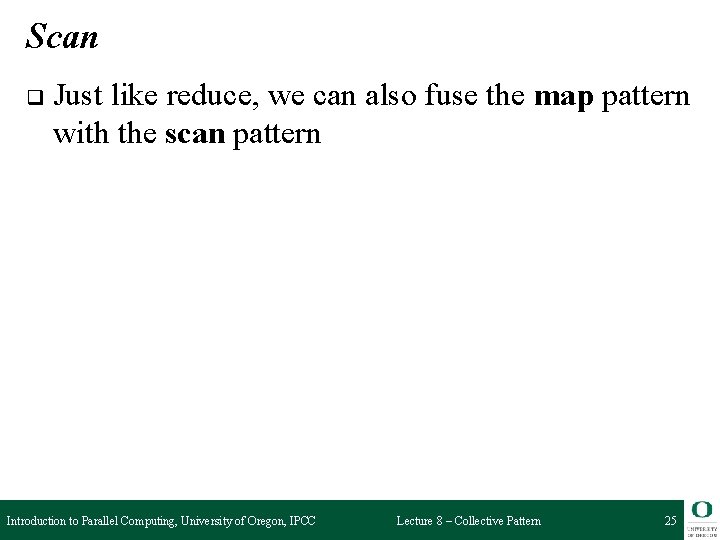

Scan
- Just like reduce, we can also fuse the map pattern with the scan pattern.
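A small fused map-scan sketch, using prefix sums of squares as an assumed example: the map (squaring) is applied inside the scan loop, so the squared values are never stored as a separate collection before being scanned. The function name is hypothetical.

```python
def fused_prefix_sum_of_squares(xs):
    out, acc = [], 0
    for x in xs:
        acc += x * x      # map (square) applied inside the scan
        out.append(acc)   # emit the running partial result
    return out

print(fused_prefix_sum_of_squares([1, 2, 3, 4]))  # [1, 5, 14, 30]
```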

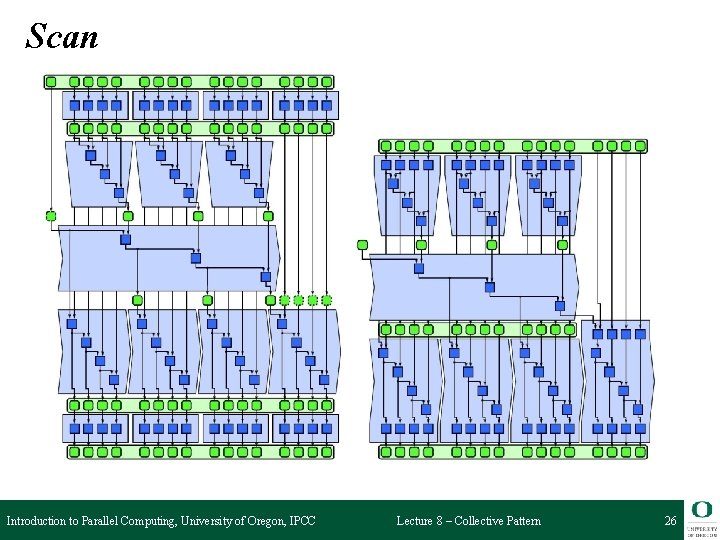

Scan

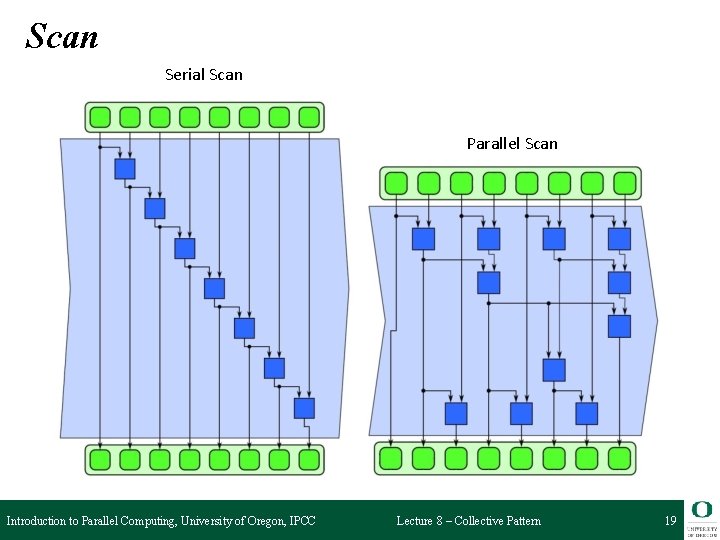

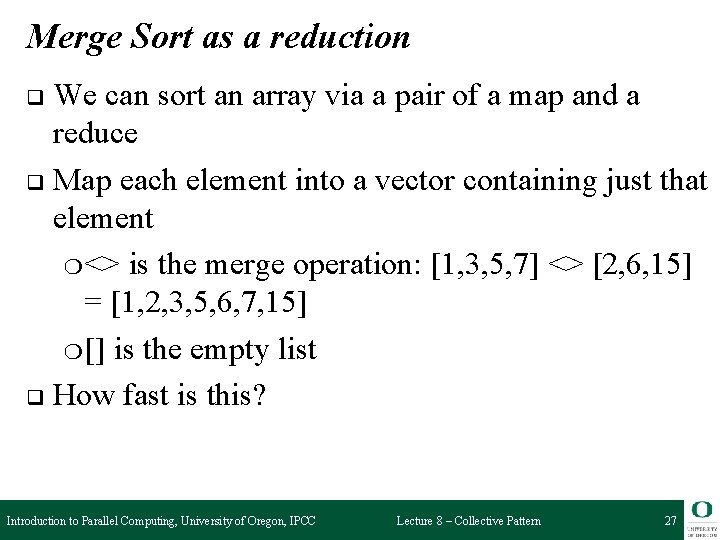

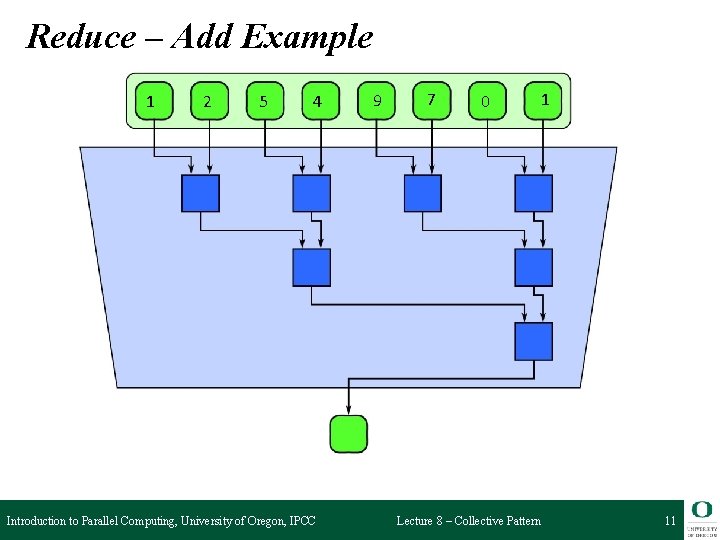

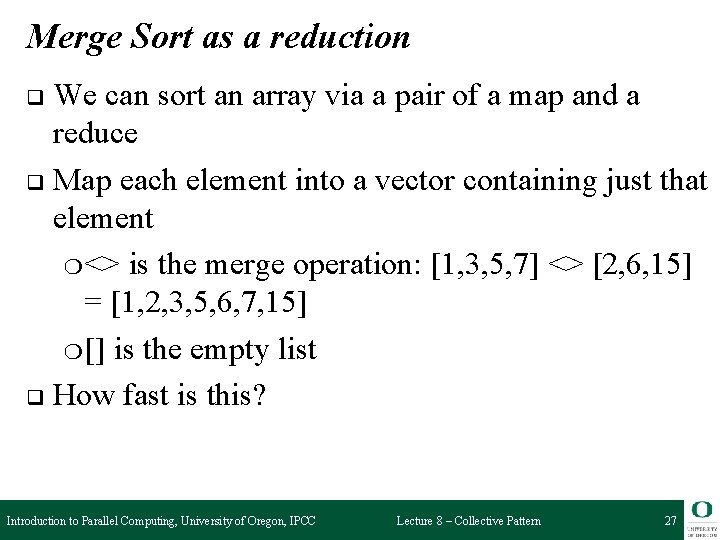

Merge Sort as a Reduction
- We can sort an array via a pair of a map and a reduce.
- Map each element into a vector containing just that element.
  - <> is the merge operation: [1, 3, 5, 7] <> [2, 6, 15] = [1, 2, 3, 5, 6, 7, 15]
  - [] is the empty list
- How fast is this?
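A sketch of sorting as map plus reduce: `merge` implements <> as a linear two-way merge, [] is its identity, and merge is associative, so it is a valid combiner. `functools.reduce` is a left fold, so this is one particular reduction shape; the helper names are hypothetical.

```python
from functools import reduce

def merge(a, b):
    # The <> operation: merge two sorted lists in O(len(a) + len(b)) time.
    out, i, j = [], 0, 0
    while i < len(a) and j < len(b):
        if a[i] <= b[j]:
            out.append(a[i])
            i += 1
        else:
            out.append(b[j])
            j += 1
    out.extend(a[i:])  # at most one of these two tails is non-empty
    out.extend(b[j:])
    return out

def merge_sort_via_reduce(xs):
    singletons = [[x] for x in xs]        # map: each element -> one-element list
    return reduce(merge, singletons, [])  # reduce with <>, identity []

print(merge([1, 3, 5, 7], [2, 6, 15]))                 # [1, 2, 3, 5, 6, 7, 15]
print(merge_sort_via_reduce([14, 3, 4, 8, 7, 52, 1]))  # [1, 3, 4, 7, 8, 14, 52]
```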

![Right Biased Sort](https://slidetodoc.com/presentation_image_h2/62b6947ce9641ae1ad195b6b4413b92f/image-28.jpg)

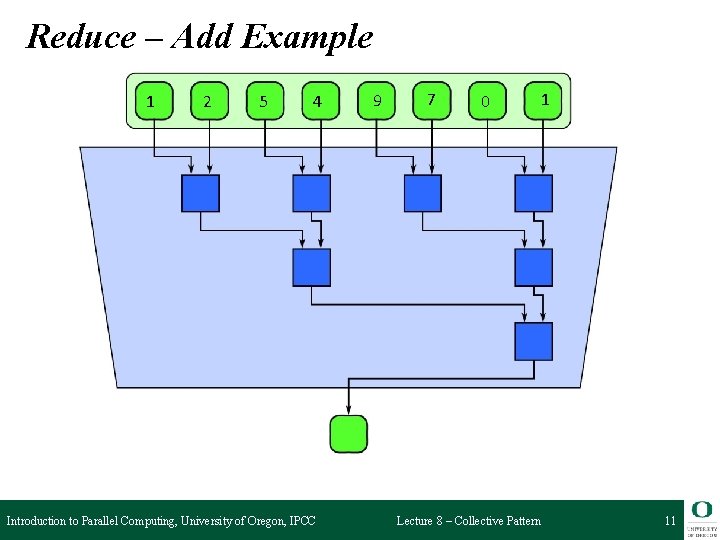

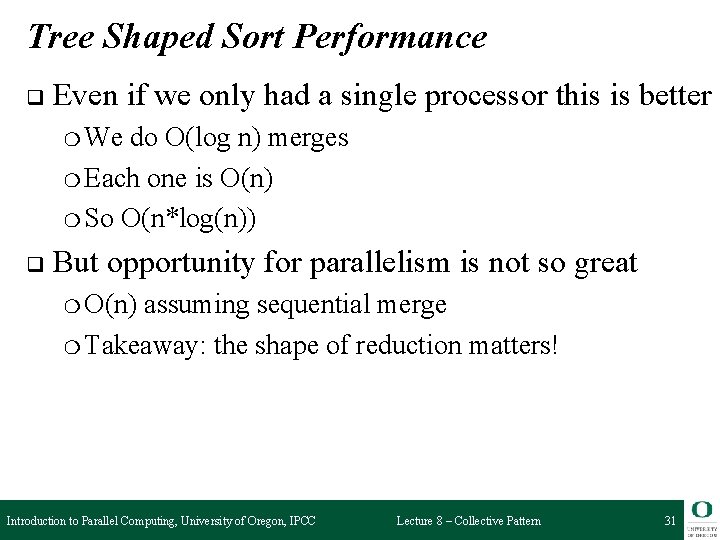

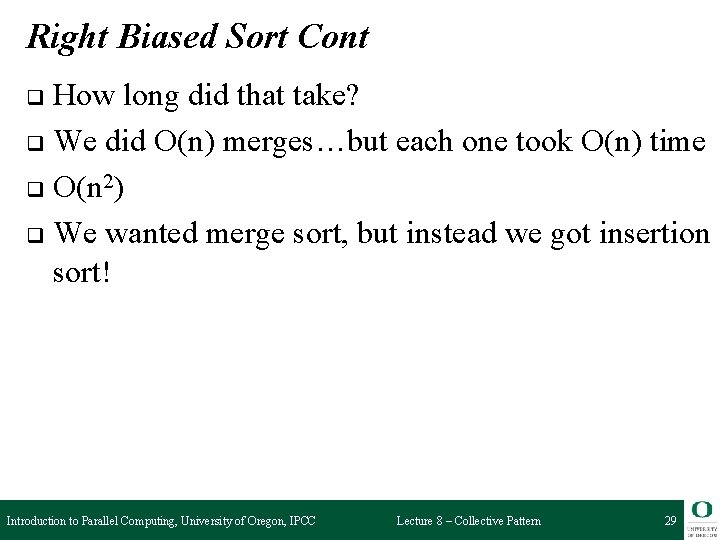

Right Biased Sort
Start with [14, 3, 4, 8, 7, 52, 1]
Map to [[14], [3], [4], [8], [7], [52], [1]]
Reduce:
  [14] <> ([3] <> ([4] <> ([8] <> ([7] <> ([52] <> [1])))))
= [14] <> ([3] <> ([4] <> ([8] <> ([7] <> [1, 52]))))
= [14] <> ([3] <> ([4] <> ([8] <> [1, 7, 52])))
= [14] <> ([3] <> ([4] <> [1, 7, 8, 52]))
= [14] <> ([3] <> [1, 4, 7, 8, 52])
= [14] <> [1, 3, 4, 7, 8, 52]
= [1, 3, 4, 7, 8, 14, 52]
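The right-biased reduction can be traced in Python. This sketch uses `heapq.merge` from the standard library to perform the linear two-way merge for <>, and starts from the identity [] (so the first step is [1] <> [], which just yields [1]). `right_biased_sort` is a hypothetical name. Each step merges a single element into an ever-growing list, which is why the shape behaves like insertion sort.

```python
import heapq

def merge(a, b):
    # <> : merge two sorted lists
    return list(heapq.merge(a, b))

def right_biased_sort(xs, trace=False):
    acc = []                    # [] is the identity for <>
    for x in reversed(xs):      # innermost merge first, then [52] <> ..., [7] <> ..., ...
        acc = merge([x], acc)
        if trace:
            print(acc)
    return acc

right_biased_sort([14, 3, 4, 8, 7, 52, 1], trace=True)
# prints [1], then [1, 52], [1, 7, 52], ..., and finally [1, 3, 4, 7, 8, 14, 52]
```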

Right Biased Sort (cont.)
- How long did that take?
- We did O(n) merges, but each one took O(n) time.
- Total: O(n²)
- We wanted merge sort, but instead we got insertion sort!

![Tree Shape Sort](https://slidetodoc.com/presentation_image_h2/62b6947ce9641ae1ad195b6b4413b92f/image-30.jpg)

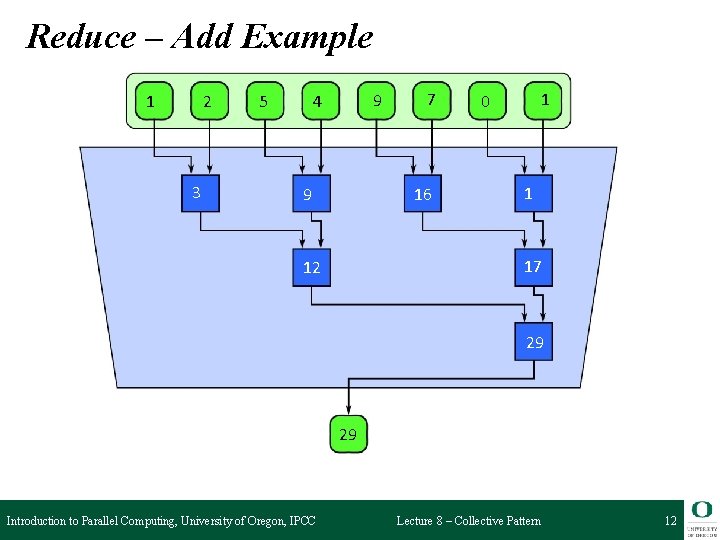

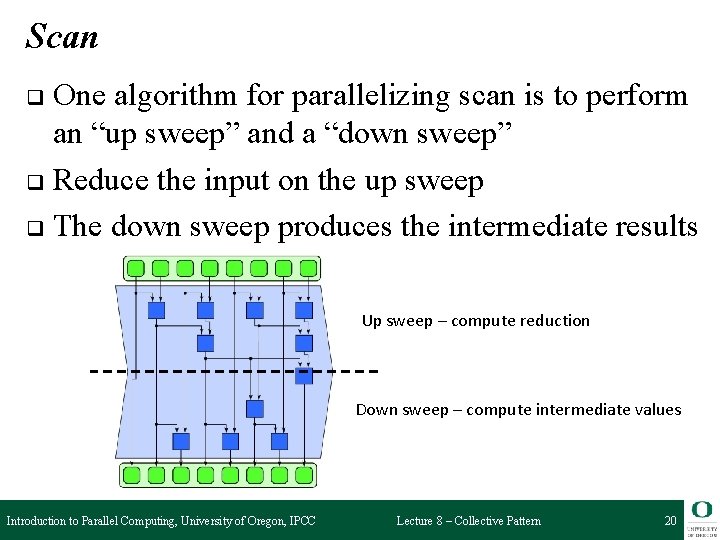

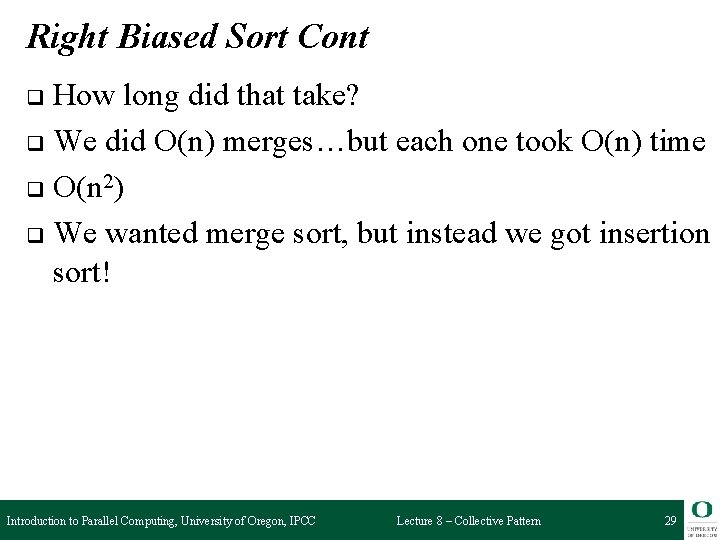

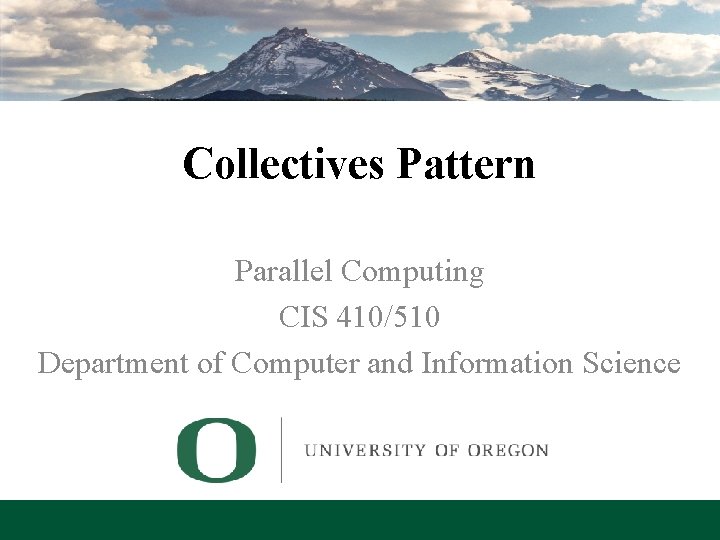

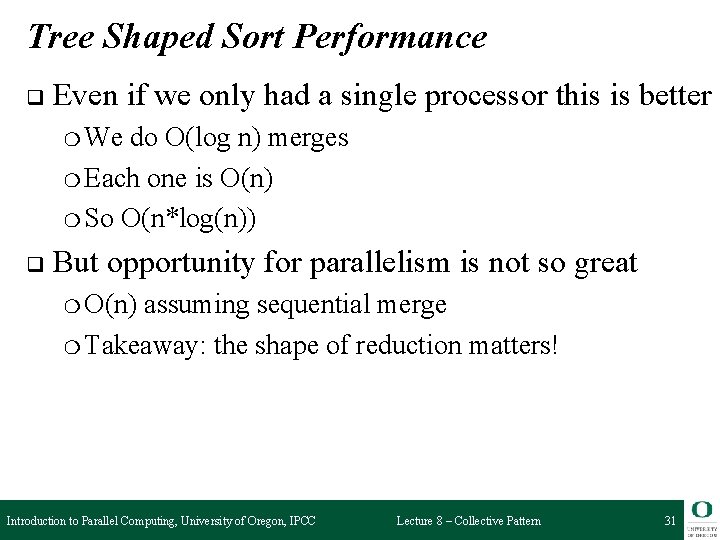

Tree Shape Sort
Start with [14, 3, 4, 8, 7, 52, 1]
Map to [[14], [3], [4], [8], [7], [52], [1]]
Reduce:
  (([14] <> [3]) <> ([4] <> [8])) <> (([7] <> [52]) <> [1])
= ([3, 14] <> [4, 8]) <> ([7, 52] <> [1])
= [3, 4, 8, 14] <> [1, 7, 52]
= [1, 3, 4, 7, 8, 14, 52]
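A sketch of the tree-shaped reduction: recursively split the list of singletons in half, reduce each half (the two recursive calls are independent and could run in parallel), then merge once. Rounding the split point up reproduces the slide's shape ((14 3)(4 8))((7 52) 1) for seven elements. `heapq.merge` performs <>, and `tree_sort` is a hypothetical name.

```python
import heapq

def merge(a, b):
    # <> : merge two sorted lists
    return list(heapq.merge(a, b))

def tree_sort(xs):
    singletons = [[x] for x in xs]   # map: each element -> one-element list

    def reduce_range(lo, hi):
        if hi == lo:
            return []                # identity for <>
        if hi - lo == 1:
            return singletons[lo]
        mid = lo + (hi - lo + 1) // 2  # round up so n = 7 splits as 4 + 3
        # The two halves are independent: a parallel version could run them concurrently.
        return merge(reduce_range(lo, mid), reduce_range(mid, hi))

    return reduce_range(0, len(singletons))

print(tree_sort([14, 3, 4, 8, 7, 52, 1]))  # [1, 3, 4, 7, 8, 14, 52]
```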

Tree Shaped Sort Performance
- Even if we only had a single processor, this is better:
  - The merges form O(log n) levels.
  - Each level does O(n) total work.
  - So the total work is O(n log n).
- But the opportunity for parallelism is not so great:
  - The span is O(n), assuming a sequential merge, because the final merge alone takes O(n) time.
- Takeaway: the shape of the reduction matters!
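A rough cost model, not taken from the slides, that makes these bounds concrete. "Work" counts the elements written by all merges; "span" is the longest chain of sequential merge work when the two halves of every tree node run in parallel and each merge itself is sequential. The function names are hypothetical.

```python
def right_biased_cost(n):
    # Merging [x] into a list of k - 1 elements writes k elements, for k = 2..n.
    return sum(range(2, n + 1))

def tree_cost(n):
    # Balanced tree: the merge at each node writes all n of its elements.
    if n <= 1:
        return 0
    half = (n + 1) // 2
    return tree_cost(half) + tree_cost(n - half) + n

def tree_span(n):
    # Halves run in parallel, so only the larger one counts; then one sequential merge.
    if n <= 1:
        return 0
    half = (n + 1) // 2
    return max(tree_span(half), tree_span(n - half)) + n

for n in (8, 1024):
    print(n, right_biased_cost(n), tree_cost(n), tree_span(n))
# 8 35 24 14
# 1024 524799 10240 2046
```

For n = 1024 the right-biased fold writes 524,799 elements against 10,240 for the tree, but the tree's span is still 2,046 (n + n/2 + ... + 2, which is under 2n), i.e., linear in n.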