159.403/703 Parallel Computing, Section 3: Decomposition Techniques (1)

- Slides: 14

Section 3: Decomposition Techniques (1)

- Recursive decomposition
- Data decomposition

159.403/703 Parallel Computing, 2/24/2021

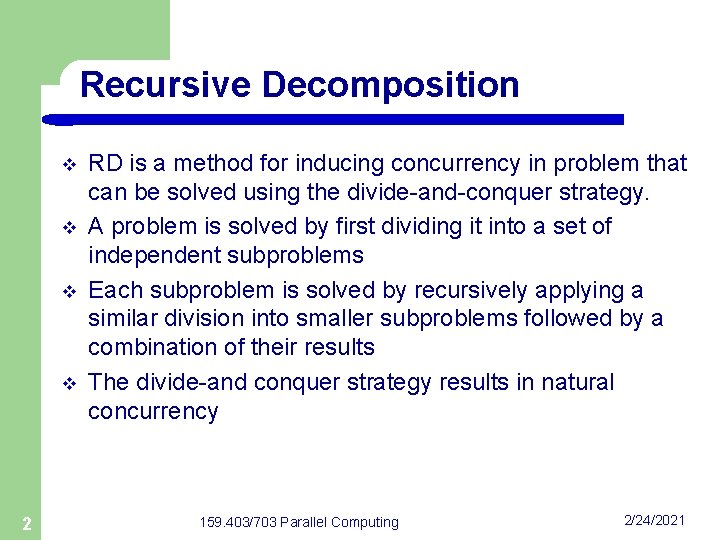

Recursive Decomposition

- Recursive decomposition (RD) is a method for inducing concurrency in problems that can be solved using the divide-and-conquer strategy.
- A problem is solved by first dividing it into a set of independent subproblems.
- Each subproblem is solved by recursively applying a similar division into smaller subproblems, followed by a combination of their results.
- The divide-and-conquer strategy results in natural concurrency.

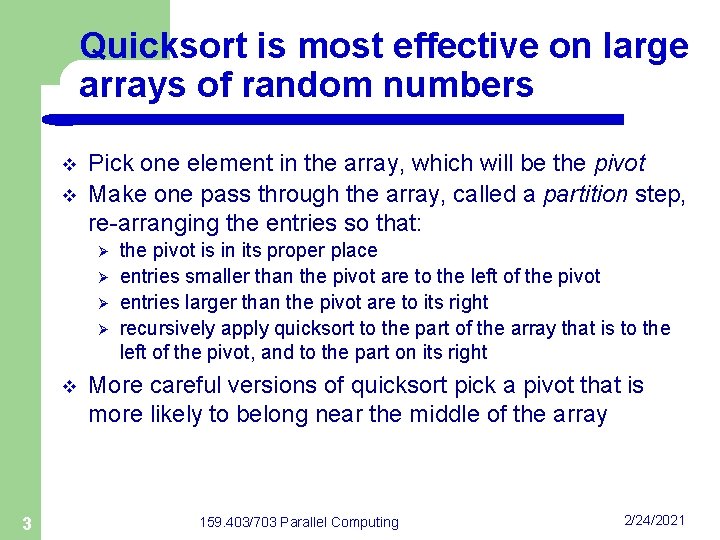

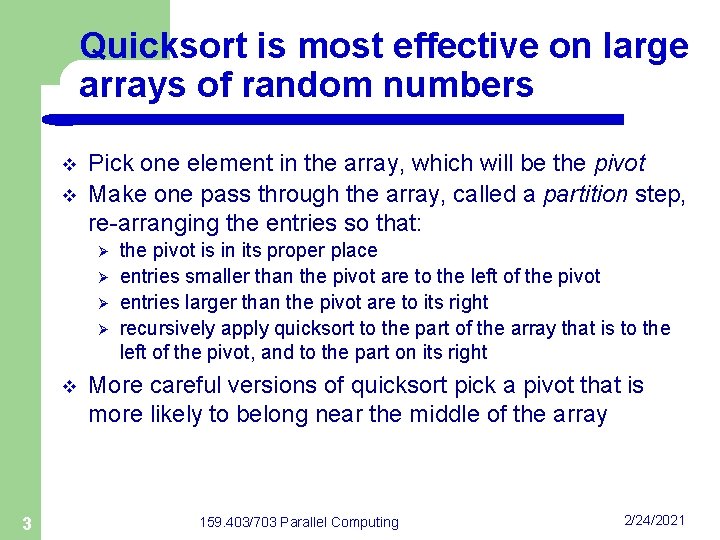

Quicksort is most effective on large arrays of random numbers.

- Pick one element in the array, which will be the pivot.
- Make one pass through the array, called a partition step, rearranging the entries so that:
  - the pivot is in its proper place;
  - entries smaller than the pivot are to the left of the pivot;
  - entries larger than the pivot are to its right.
- Recursively apply quicksort to the part of the array that is to the left of the pivot, and to the part on its right.

More careful versions of quicksort pick a pivot that is more likely to belong near the middle of the array.
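The pick-pivot, partition, and recurse steps can be sketched in C roughly as follows. This is a minimal illustration, not the slides' own code: it assumes the last element as pivot (the Lomuto scheme), and the names `swap_int`, `partition` and `quicksort` are chosen here for the example. Entries equal to the pivot end up on its right.

```c
/* Swap two ints in place. */
static void swap_int(int *a, int *b) {
    int t = *a;
    *a = *b;
    *b = t;
}

/* Partition step over a[lo..hi] (inclusive), using a[hi] as the pivot
   (an assumption of this sketch). Returns the pivot's final index:
   smaller entries are left of it, the rest are right of it. */
static int partition(int a[], int lo, int hi) {
    int pivot = a[hi];
    int i = lo;                      /* next slot for a "smaller" entry */
    for (int j = lo; j < hi; j++) {
        if (a[j] < pivot) {
            swap_int(&a[i], &a[j]);
            i++;
        }
    }
    swap_int(&a[i], &a[hi]);         /* put the pivot in its proper place */
    return i;
}

/* Sort a[lo..hi] by recursing on both sides of the pivot. */
void quicksort(int a[], int lo, int hi) {
    if (lo >= hi) return;            /* zero or one element: already sorted */
    int p = partition(a, lo, hi);
    quicksort(a, lo, p - 1);
    quicksort(a, p + 1, hi);
}
```

An array `a` of length `n` would be sorted with `quicksort(a, 0, n - 1)`.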

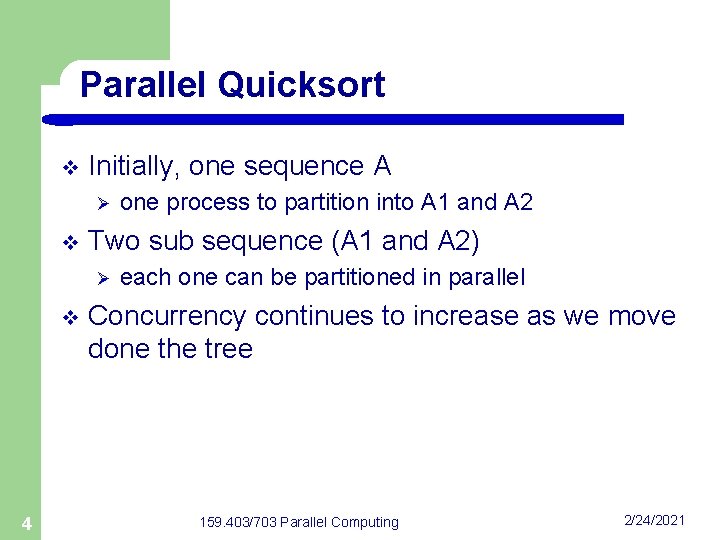

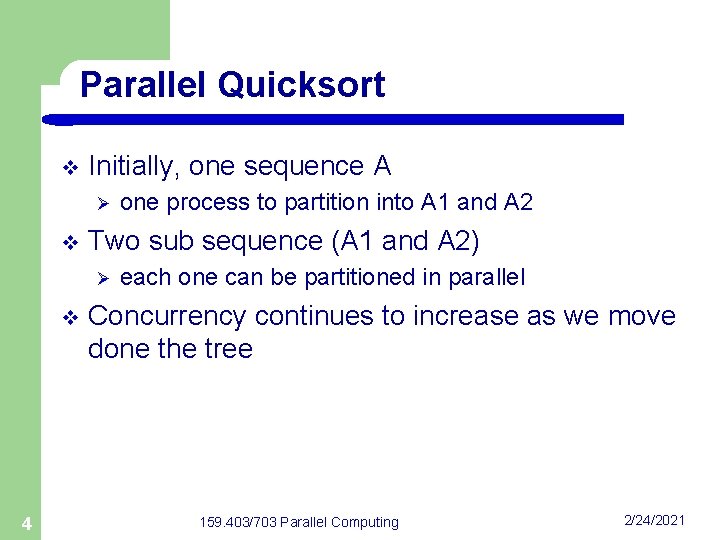

Parallel Quicksort

- Initially, there is one sequence A:
  - one process partitions it into A1 and A2.
- Then there are two subsequences (A1 and A2):
  - each one can be partitioned in parallel.
- Concurrency continues to increase as we move down the tree.
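One way to express this task tree is with OpenMP tasks, which the slides do not prescribe; the sketch below assumes an OpenMP-capable C compiler (for example with `-fopenmp`). Without OpenMP enabled the pragmas are ignored and the code runs serially. The names `pq_partition`, `pq_sort`, `parallel_quicksort` and the cutoff `TASK_CUTOFF` are hypothetical.

```c
/* Below this sub-sequence length the task runs immediately in the
   encountering thread (arbitrary value, an assumption). */
#define TASK_CUTOFF 1000

/* Partition a[lo..hi] around a[hi]; returns the pivot's final index. */
static int pq_partition(int a[], int lo, int hi) {
    int pivot = a[hi];
    int i = lo;
    for (int j = lo; j < hi; j++) {
        if (a[j] < pivot) {
            int t = a[i]; a[i] = a[j]; a[j] = t;
            i++;
        }
    }
    int t = a[i]; a[i] = a[hi]; a[hi] = t;
    return i;
}

/* One process partitions the sequence; the two resulting sub-sequences
   are independent and become separate tasks. */
static void pq_sort(int a[], int lo, int hi) {
    if (lo >= hi) return;
    int p = pq_partition(a, lo, hi);

    #pragma omp task if (hi - lo > TASK_CUTOFF)
    pq_sort(a, lo, p - 1);           /* left sub-sequence A1 */

    #pragma omp task if (hi - lo > TASK_CUTOFF)
    pq_sort(a, p + 1, hi);           /* right sub-sequence A2 */

    #pragma omp taskwait             /* wait for both halves */
}

/* Entry point: one thread starts the recursion, the team runs the tasks. */
void parallel_quicksort(int a[], int n) {
    #pragma omp parallel
    #pragma omp single
    pq_sort(a, 0, n - 1);
}
```

The first partition step is still serial, so the available concurrency only grows level by level, as the slide notes.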

![Find the minimum in an array A of length n](https://slidetodoc.com/presentation_image_h/0fe8d4f2becc5ada3fb43d2c5ea9b216/image-5.jpg)

Find the minimum in an array A of length n

    int Finding_the_minimum(int A[], int n)
    {
        int min = A[0];
        /* Loop up to j < n so that the last element A[n-1] is also checked. */
        for (int j = 1; j < n; j++) {
            if (A[j] < min)
                min = A[j];
        }
        return min;
    }

![A Serial Program: procedure SERIAL_MIN(A, n)](https://slidetodoc.com/presentation_image_h/0fe8d4f2becc5ada3fb43d2c5ea9b216/image-6.jpg)

A Serial Program

    procedure SERIAL_MIN(A, n)
    begin
        min := A[0];
        for i := 1 to n-1 do
            if (A[i] < min) min := A[i];
        endfor;
        return min;
    end SERIAL_MIN

![A Recursive Program: procedure RECURSIVE_MIN(A, n)](https://slidetodoc.com/presentation_image_h/0fe8d4f2becc5ada3fb43d2c5ea9b216/image-7.jpg)

A Recursive Program

    procedure RECURSIVE_MIN(A, n)
    begin
        if (n = 1) then
            min := A[0];
        else
            lmin := RECURSIVE_MIN(A, n/2);
            rmin := RECURSIVE_MIN(&(A[n/2]), n - n/2);
            if (lmin < rmin) then
                min := lmin;
            else
                min := rmin;
            endelse;
        endelse;
        return min;
    end RECURSIVE_MIN

See: construct a task-dependency graph.
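The two recursive calls are independent, so each can become a task, with the comparison as the combine step. The following C sketch assumes OpenMP tasks (not something the slides specify) and `n >= 1`; the names `recursive_min` and `parallel_min` are hypothetical. Spawning a task per element is far too fine-grained for real use and is kept only to mirror the pseudocode.

```c
/* Minimum of A[0..n-1] by recursive decomposition; requires n >= 1. */
int recursive_min(const int A[], int n) {
    if (n == 1) return A[0];

    int lmin, rmin;

    /* The two halves are independent subproblems. */
    #pragma omp task shared(lmin)
    lmin = recursive_min(A, n / 2);

    #pragma omp task shared(rmin)
    rmin = recursive_min(A + n / 2, n - n / 2);

    /* Both results are needed before they can be combined. */
    #pragma omp taskwait
    return (lmin < rmin) ? lmin : rmin;
}

/* Entry point: a single thread starts the recursion inside a parallel
   region so that the generated tasks can be picked up by the team. */
int parallel_min(const int A[], int n) {
    int result = 0;
    #pragma omp parallel
    #pragma omp single
    result = recursive_min(A, n);
    return result;
}
```

Each task waits on its two children, so the call tree of `recursive_min` has the same shape as the task-dependency graph.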

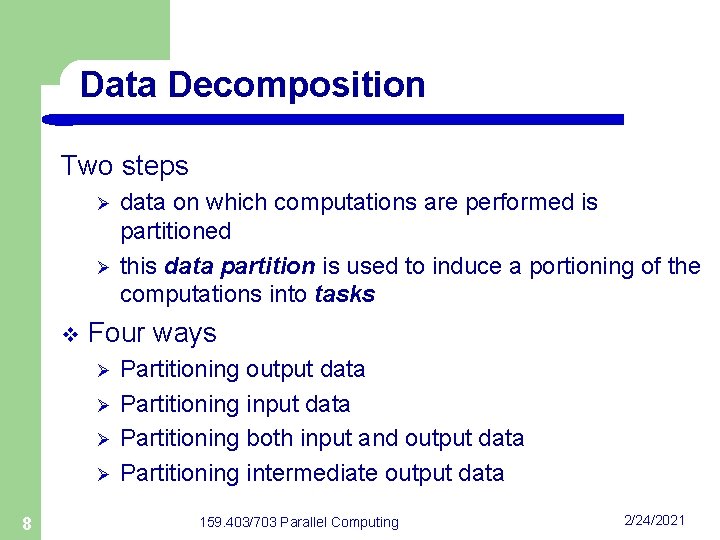

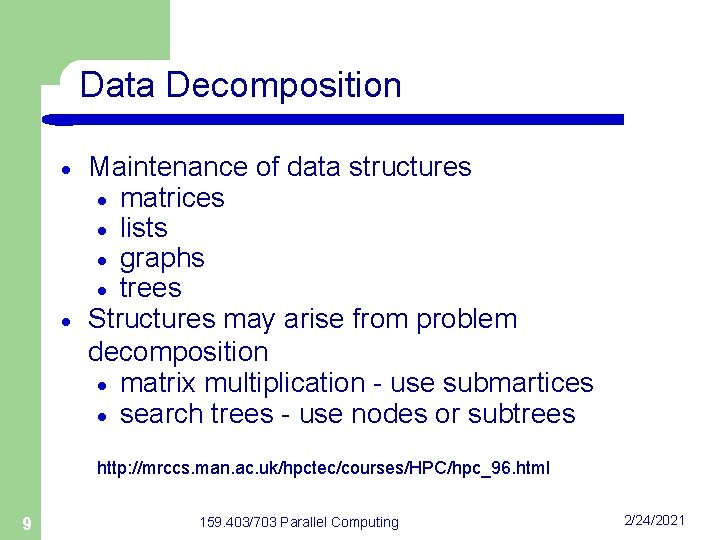

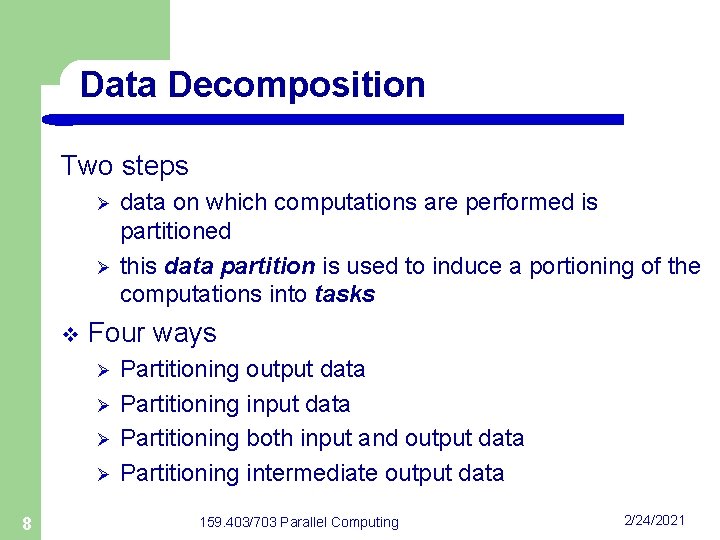

Data Decomposition

- Two steps:
  - the data on which the computations are performed is partitioned;
  - this data partitioning is used to induce a partitioning of the computations into tasks.
- Four ways:
  - partitioning output data
  - partitioning input data
  - partitioning both input and output data
  - partitioning intermediate output data

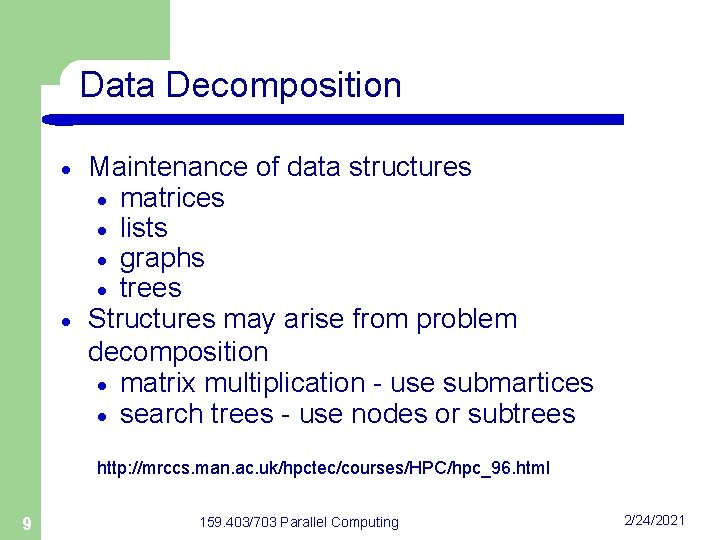

Data Decomposition

- Maintenance of data structures:
  - matrices
  - lists
  - graphs
  - trees
- Structures may arise from problem decomposition:
  - matrix multiplication: use submatrices
  - search trees: use nodes or subtrees

http://mrccs.man.ac.uk/hpctec/courses/HPC/hpc_96.html

- More parallelism is good.
- More interprocessor communication is bad.
- The data needs to be distributed such that each processor is allocated a similar amount of work.
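A common way to give each processor a similar amount of work is a block distribution in which block sizes differ by at most one item. The C sketch below is one such scheme under that assumption; the type `block_range` and the function `block_of` are hypothetical names, not from the slides.

```c
/* Half-open index range [start, end) owned by one process. */
typedef struct {
    int start;
    int end;
} block_range;

/* Range of items owned by process r (0 <= r < p) when n items are split
   into p contiguous blocks whose sizes differ by at most one. */
block_range block_of(int n, int p, int r) {
    int base = n / p;        /* every process gets at least this many */
    int extra = n % p;       /* the first `extra` processes get one more */
    block_range b;
    b.start = r * base + (r < extra ? r : extra);
    b.end = b.start + base + (r < extra ? 1 : 0);
    return b;
}
```

For `n = 10` and `p = 4` this gives the ranges [0, 3), [3, 6), [6, 8) and [8, 10), so no process holds more than one item above any other.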

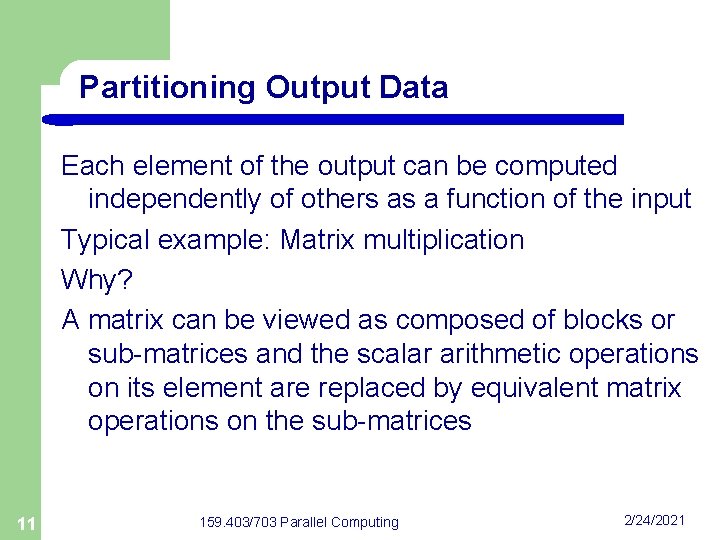

Partitioning Output Data

- Each element of the output can be computed independently of the others as a function of the input.
- Typical example: matrix multiplication.
- Why? A matrix can be viewed as composed of blocks or submatrices, and the scalar arithmetic operations on its elements are replaced by equivalent matrix operations on the submatrices.

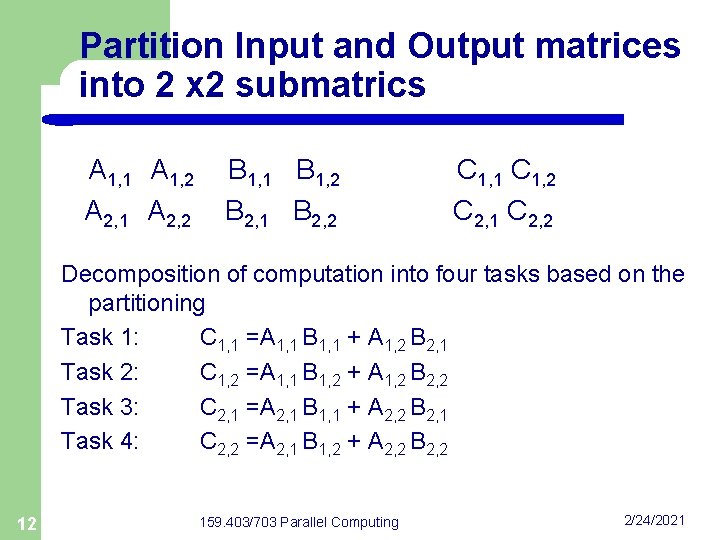

Partition the input and output matrices into 2 x 2 submatrices:

$$
\begin{pmatrix} A_{1,1} & A_{1,2} \\ A_{2,1} & A_{2,2} \end{pmatrix}
\cdot
\begin{pmatrix} B_{1,1} & B_{1,2} \\ B_{2,1} & B_{2,2} \end{pmatrix}
=
\begin{pmatrix} C_{1,1} & C_{1,2} \\ C_{2,1} & C_{2,2} \end{pmatrix}
$$

Decomposition of the computation into four tasks based on the partitioning:

- Task 1: $C_{1,1} = A_{1,1} B_{1,1} + A_{1,2} B_{2,1}$
- Task 2: $C_{1,2} = A_{1,1} B_{1,2} + A_{1,2} B_{2,2}$
- Task 3: $C_{2,1} = A_{2,1} B_{1,1} + A_{2,2} B_{2,1}$
- Task 4: $C_{2,2} = A_{2,1} B_{1,2} + A_{2,2} B_{2,2}$
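The four tasks can be sketched in C with one function call per output block. The fixed size `N = 4`, the names `block_task` and `block_matmul`, and the use of an OpenMP `parallel for` are all assumptions made for this illustration; without OpenMP the pragma is ignored and the four tasks run one after another.

```c
#define N 4          /* matrix dimension (assumed even for this sketch) */
#define H (N / 2)    /* dimension of each of the 2 x 2 blocks */

/* Task for output block (bi, bj), with bi, bj in {0, 1}.
   Letting k run over the whole row and column covers both block
   products, A_{bi,1} B_{1,bj} + A_{bi,2} B_{2,bj}. */
static void block_task(double A[N][N], double B[N][N], double C[N][N],
                       int bi, int bj) {
    for (int i = bi * H; i < (bi + 1) * H; i++) {
        for (int j = bj * H; j < (bj + 1) * H; j++) {
            double sum = 0.0;
            for (int k = 0; k < N; k++)
                sum += A[i][k] * B[k][j];
            C[i][j] = sum;
        }
    }
}

/* Four independent tasks, one per output block. Each task writes only
   its own block of C, so no synchronisation between tasks is needed. */
void block_matmul(double A[N][N], double B[N][N], double C[N][N]) {
    #pragma omp parallel for collapse(2)
    for (int bi = 0; bi < 2; bi++)
        for (int bj = 0; bj < 2; bj++)
            block_task(A, B, C, bi, bj);
}
```

Every task reads a full block row of A and a full block column of B, which is the price of partitioning on the output alone.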

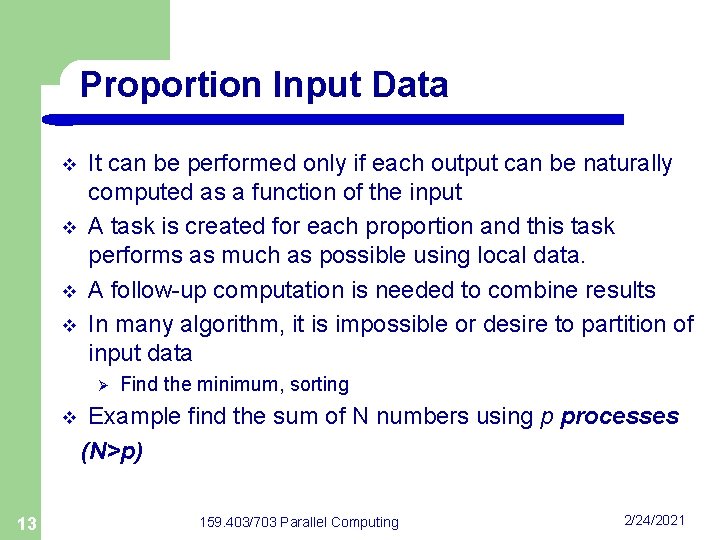

Partitioning Input Data

- Partitioning of output data can be performed only if each output can be naturally computed as a function of the input.
- A task is created for each input partition, and this task performs as much computation as possible using local data. A follow-up computation is needed to combine the results.
- In many algorithms it is not possible or desirable to partition the output data, so the input is partitioned instead:
  - finding the minimum, sorting
- Example: find the sum of N numbers using p processes (N > p).
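The sum example might look like the following C sketch: each of the p tasks sums its own block of the input using only local data, and a follow-up step combines the p partial results. The function name `partitioned_sum` and the caller-supplied `partial` buffer (length at least p) are assumptions of this example, as is the OpenMP pragma.

```c
/* Sum of x[0..n-1] using p tasks over a partitioned input.
   partial[] must have room for p entries; it receives the per-task sums. */
long partitioned_sum(const int x[], int n, int p, long partial[]) {
    /* Step 1: task t sums its own contiguous block of the input. */
    #pragma omp parallel for
    for (int t = 0; t < p; t++) {
        int start = (int)((long)t * n / p);
        int end = (int)((long)(t + 1) * n / p);
        long s = 0;
        for (int i = start; i < end; i++)
            s += x[i];
        partial[t] = s;
    }

    /* Step 2: follow-up computation that combines the partial results. */
    long total = 0;
    for (int t = 0; t < p; t++)
        total += partial[t];
    return total;
}
```

The combine step here is serial over p values; for large p it could itself be organised as a tree, which this sketch does not attempt.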

- Partitioning both input and output data:
  - to get additional concurrency.
- Partitioning intermediate data:
  - Algorithms are often structured as multi-stage computations, i.e. the output of one stage is the input to the subsequent stage.
  - Decomposition of such an algorithm can be done by partitioning the intermediate data.
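As an illustration that goes beyond what the slide states, matrix multiplication can be restructured as a two-stage computation: stage 1 produces an intermediate array of products, and stage 2 sums them. The C sketch below uses 2 x 2 matrices of scalars for brevity; the array `D` and the function names `stage1_products` and `stage2_sums` are hypothetical.

```c
/* Stage 1: eight independent tasks, one per intermediate element
   D[k][i][j] = A[i][k] * B[k][j]. */
void stage1_products(double A[2][2], double B[2][2], double D[2][2][2]) {
    #pragma omp parallel for collapse(3)
    for (int k = 0; k < 2; k++)
        for (int i = 0; i < 2; i++)
            for (int j = 0; j < 2; j++)
                D[k][i][j] = A[i][k] * B[k][j];
}

/* Stage 2: four independent tasks, each summing the two intermediate
   values that belong to one output element. */
void stage2_sums(double D[2][2][2], double C[2][2]) {
    #pragma omp parallel for collapse(2)
    for (int i = 0; i < 2; i++)
        for (int j = 0; j < 2; j++)
            C[i][j] = D[0][i][j] + D[1][i][j];
}
```

Partitioning `D` exposes eight tasks in the first stage instead of the four obtained from partitioning the output alone, at the cost of extra memory for the intermediate data.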
