Chunk Parsing II: Chunking as Tagging

- Slides: 22


Chunk Parsing

“Shallow parsing has become an interesting alternative to full parsing. The main goal of a shallow parser is to divide a text into segments which correspond to certain syntactic units. Although the detailed information from a full parse is lost, shallow parsing can be done on nonrestricted texts in an efficient and reliable way. In addition, partial syntactical information can help to solve many natural language processing tasks, such as information extraction, text summarization, machine translation and spoken language understanding.” (Molina & Pla 2002)

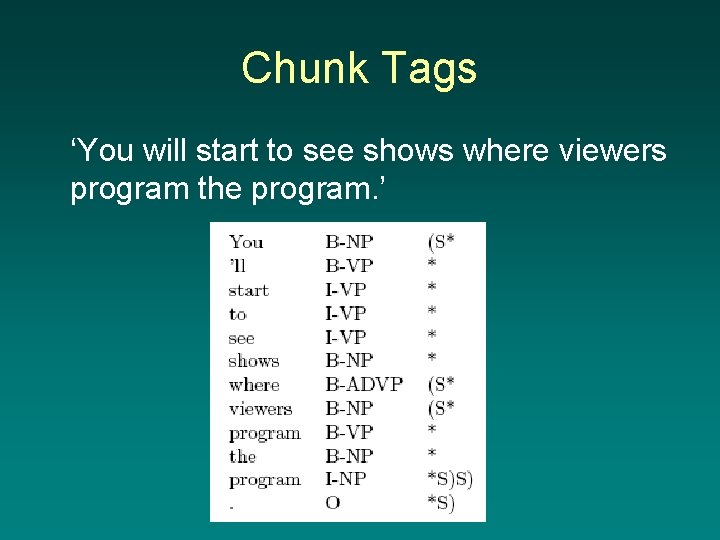

Molina & Pla

- Definitions:
  - Text chunking: dividing input text into non-overlapping segments
  - Clause identification: detecting the start and end boundaries of each clause
- What are the chunks of the following? What are the clauses?
  - ‘You will start to see shows where viewers program the program.’

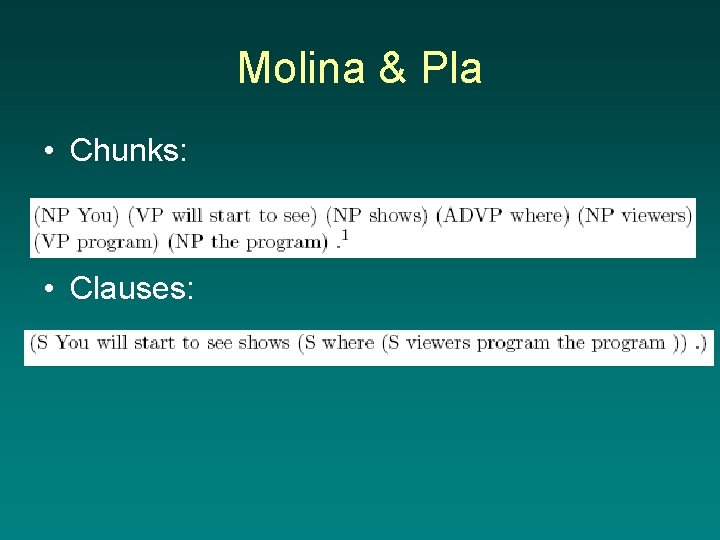

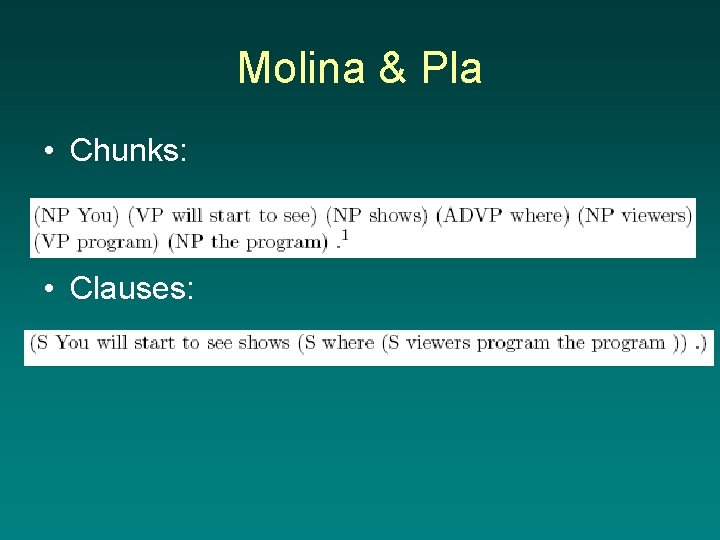

Molina & Pla

- Chunks:
- Clauses:

Chunk Tags

- Chunks and clauses can be represented using tags.
- Tags from Sang et al. (2000):
  - B-X: first word of a chunk of type X
  - I-X: non-initial word of a chunk of type X
  - O: words or material outside any chunk

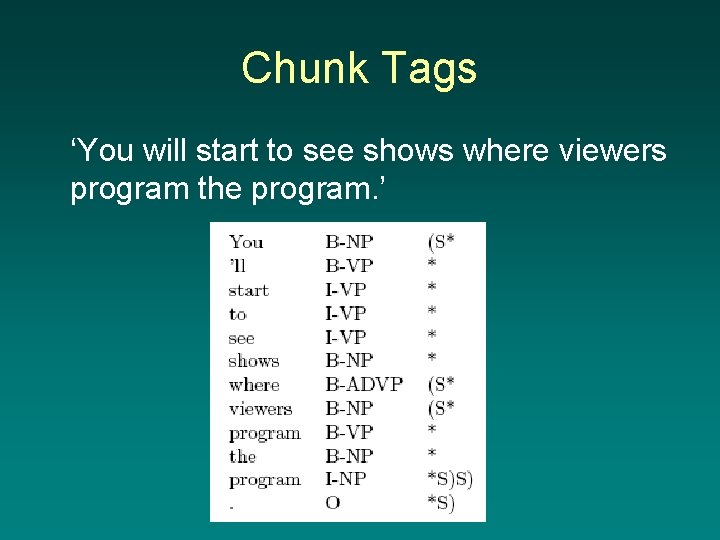

Chunk Tags

‘You will start to see shows where viewers program the program.’

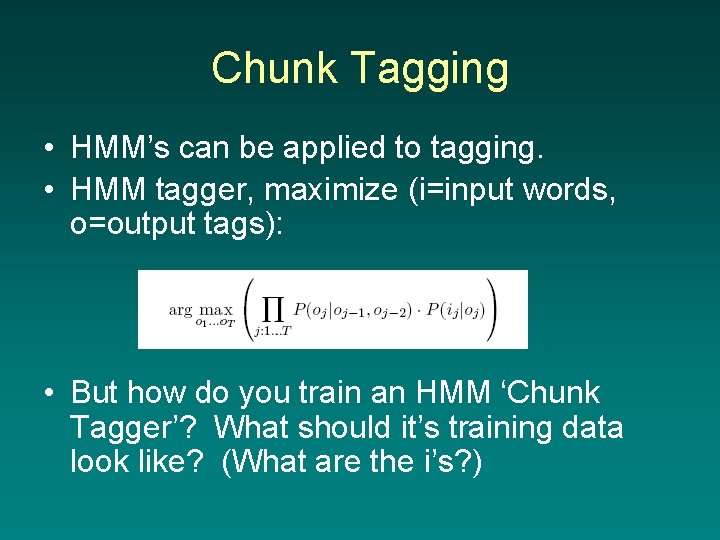

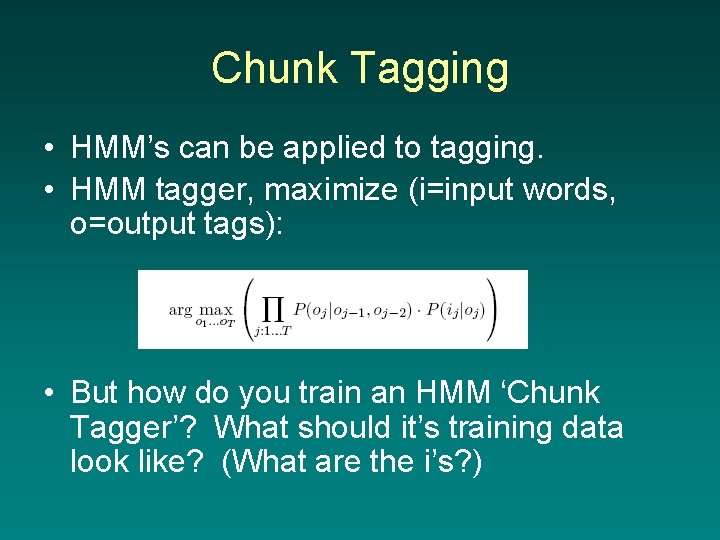
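To make the B-X / I-X / O scheme concrete, here is a minimal Python sketch that groups tagged words back into chunks. The function name `bio_to_chunks` is hypothetical, and the tag sequence for the example sentence is an illustrative assumption: only `You`/B-NP and `where`/B-ADVP are given in these slides.

```python
from typing import List, Tuple

def bio_to_chunks(words: List[str], tags: List[str]) -> List[Tuple[str, List[str]]]:
    """Group words into (chunk_type, words) spans from B-X / I-X / O tags."""
    chunks = []
    current_type, current_words = None, []
    for word, tag in zip(words, tags):
        # I-X continues the open chunk only if the chunk type matches.
        if tag.startswith("I-") and current_type == tag[2:]:
            current_words.append(word)
            continue
        # Any other tag closes the chunk that is currently open.
        if current_type is not None:
            chunks.append((current_type, current_words))
            current_type, current_words = None, []
        # B-X (or a stray I-X) opens a new chunk; O opens nothing.
        if tag != "O":
            current_type, current_words = tag[2:], [word]
    if current_type is not None:
        chunks.append((current_type, current_words))
    return chunks

words = "You will start to see shows where viewers program the program .".split()
# Assumed tagging, for illustration only.
tags = ["B-NP", "B-VP", "I-VP", "I-VP", "I-VP", "B-NP",
        "B-ADVP", "B-NP", "B-VP", "B-NP", "I-NP", "O"]

for chunk_type, chunk_words in bio_to_chunks(words, tags):
    print(chunk_type, " ".join(chunk_words))
```

Because every word carries exactly one tag, chunking becomes an ordinary sequence-tagging problem, which is what lets a tagger do the work of a shallow parser.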

Chunk Tagging

- HMMs can be applied to tagging.
- HMM tagger, maximize (i = input words, o = output tags):
- But how do you train an HMM ‘Chunk Tagger’? What should its training data look like? (What are the i’s?)
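One standard way to write the quantity an HMM tagger maximizes, in the notation above, is sketched below. This is a reconstruction under the usual HMM independence assumptions, with a second-order (trigram) transition model assumed; it is not copied from the slide. Here $I = i_1 \ldots i_T$ is the input sequence and $O = o_1 \ldots o_T$ the output tag sequence.

```latex
\hat{O} \;=\; \arg\max_{O} P(O \mid I)
        \;=\; \arg\max_{O} P(I \mid O)\, P(O)
        \;\approx\; \arg\max_{o_1 \ldots o_T} \prod_{j=1}^{T} P(i_j \mid o_j)\, P(o_j \mid o_{j-1}, o_{j-2})
```

Under this reading, the training data must supply pairs of inputs $i_j$ and outputs $o_j$, so the open question is what the inputs should be when the outputs are chunk tags.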

Tagging

- From Molina and Pla:
  - POS tagging considers only words as input.
  - Chunking considers words and POS tags as input.
  - Clause identification considers words, POS tags and chunks as input.
- Problem: the input vocabulary could get very large, and the model would be poorly estimated.

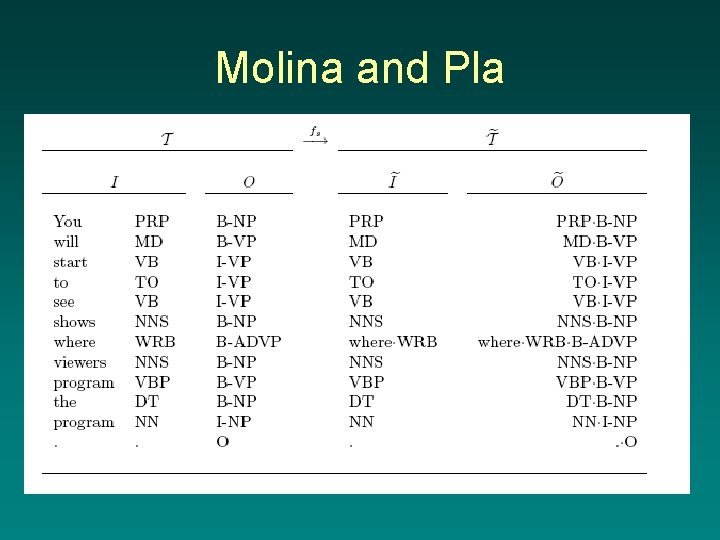

Molina & Pla

- Solution:
  - Enrich chunk tags by adding POS information and selected words
  - Define a specialization function $f_s$ on the original training set $T$ to produce a new set $\tilde{T}$, essentially transforming every training tuple $\langle i_j, o_j \rangle$ into $\langle \tilde{i}_j, \tilde{o}_j \rangle$
  - Training is then done over the new training set

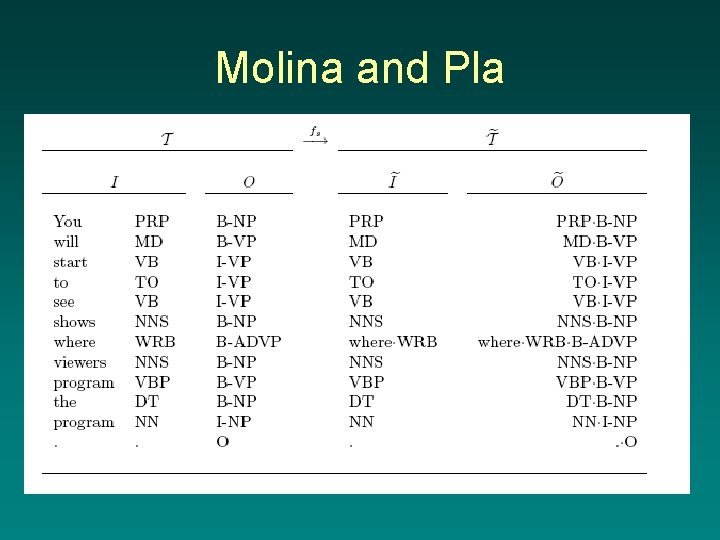

Molina and Pla

- Examples:
  - <You PRP, B-NP> → <PRP, PRP B-NP>
    - Considering only POS information
  - <where WRB, B-ADVP> → <where WRB, where WRB B-ADVP>
    - Considering lexical information as well
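The two examples above can be reproduced with a small Python sketch of a specialization function. The name `specialize`, the `lexical_words` parameter, and the use of a space as the separator are assumptions made for illustration; the slides do not say how Molina and Pla select the words to keep.

```python
from typing import Iterable, Tuple

def specialize(word: str, pos: str, chunk: str,
               lexical_words: Iterable[str] = ()) -> Tuple[str, str]:
    """Map one training tuple <word pos, chunk> to <new_input, new_output>."""
    if word in set(lexical_words):
        # Selected words keep their lexical identity in the input.
        new_input = f"{word} {pos}"
    else:
        # All other words are represented by their POS tag alone.
        new_input = pos
    # The output tag is enriched with whatever the input retained.
    new_output = f"{new_input} {chunk}"
    return new_input, new_output

print(specialize("You", "PRP", "B-NP"))
print(specialize("where", "WRB", "B-ADVP", lexical_words={"where"}))
```

Dropping most words from the input keeps the input vocabulary small, while the enriched output tags let the model distinguish, for example, a B-NP that starts with a pronoun from one that starts with a determiner.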

Molina and Pla

Molina & Pla

- Training process:
  1. POS-tag the corpus to get the word and tag associations. The words and tags become the new input (e.g., You PRP, where WRB).
  2. Chunk a portion of the corpus using the Sang et al. (2000) chunk tags. These are the new outputs (e.g., B-NP, I-NP, …).
  3. Apply the specialization function across the training corpus to transform the training set.
  4. Train the HMM tagger on the transformed set.
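Steps 3 and 4 can be sketched as follows. This is a simplified illustration, not Molina and Pla's implementation: it collects raw bigram transition counts and emission counts rather than the smoothed trigram statistics a tagger such as TnT would use, and the names `specialize`, `train_counts`, and the three-word training sentence are hypothetical.

```python
from collections import Counter

def specialize(word, pos, chunk, lexical_words=frozenset()):
    """Transform <word pos, chunk> into <new_input, new_output>."""
    new_input = f"{word} {pos}" if word in lexical_words else pos
    return new_input, f"{new_input} {chunk}"

def train_counts(tagged_sentences, lexical_words=frozenset()):
    """Count HMM transitions and emissions over the transformed training set."""
    transitions, emissions = Counter(), Counter()
    for sentence in tagged_sentences:
        prev = "<s>"  # sentence-start state
        for word, pos, chunk in sentence:
            new_in, new_out = specialize(word, pos, chunk, lexical_words)
            transitions[(prev, new_out)] += 1   # state -> state
            emissions[(new_out, new_in)] += 1   # state -> observed input
            prev = new_out
    return transitions, emissions

# Toy training data (word, POS, chunk tag); assumed for illustration.
corpus = [[("You", "PRP", "B-NP"), ("will", "MD", "B-VP"), ("start", "VB", "I-VP")]]
transitions, emissions = train_counts(corpus)
print(transitions)
print(emissions)
```

Relative frequencies of these counts would give unsmoothed estimates of the transition and emission probabilities.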

Molina & Pla

- Tagging:
  1. POS-tag a corpus.
  2. Apply the trained tagger to the POS-tagged corpus.
  3. Take into account the input transformations done in $f_s$.
  4. Map relevant information in the input to the modified output $\tilde{O}$.
  5. Map the output tags $\tilde{O}$ back to $O$.
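The input transformation and the final mapping back to plain chunk tags might look like the sketch below. It assumes specialized tags are space-separated with the original chunk tag last, as in the `PRP B-NP` example; `transform_input` and `restore_chunk_tag` are hypothetical names, and the `predicted` list stands in for the output of a trained tagger.

```python
def transform_input(word, pos, lexical_words=frozenset()):
    """Apply the same input transformation that was used at training time."""
    return f"{word} {pos}" if word in lexical_words else pos

def restore_chunk_tag(specialized_tag):
    """Recover the plain chunk tag, assumed to be the last field."""
    return specialized_tag.split()[-1]

pos_tagged = [("You", "PRP"), ("where", "WRB")]
inputs = [transform_input(w, p, lexical_words={"where"}) for w, p in pos_tagged]

# Hypothetical tagger output over the specialized tag set.
predicted = ["PRP B-NP", "where WRB B-ADVP"]
chunk_tags = [restore_chunk_tag(tag) for tag in predicted]

print(inputs)
print(chunk_tags)
```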

Molina and Pla

- Give brief discussions of other approaches to chunking
- Compare the relative performances of the other systems
- Compare systems with different specialization functions (different $f_s$)
- BTW, they used the TnT tagger developed by Thorsten Brants, which can be downloaded from the Web: http://www.coli.uni-sb.de/thorsten/tnt (hardcopy licensing and registration required)

N-grams

N-grams

- An N-gram, or N-grammar, represents an (N-1)th-order Markov language model
- Bigram = first order
- Trigram = second order

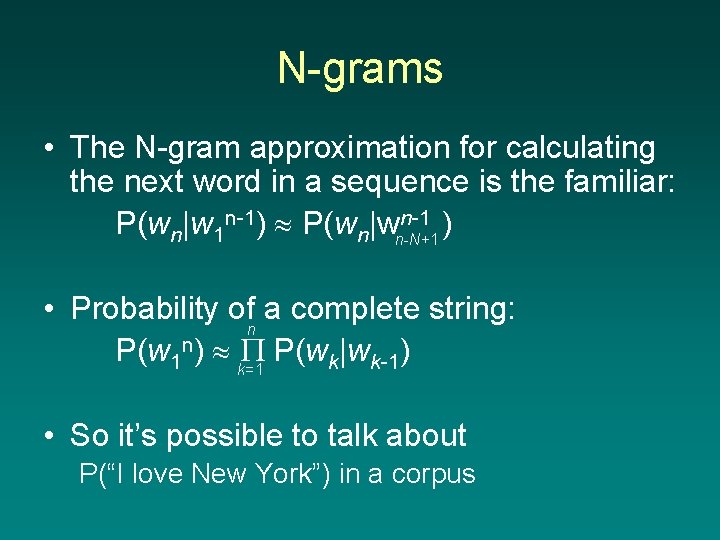

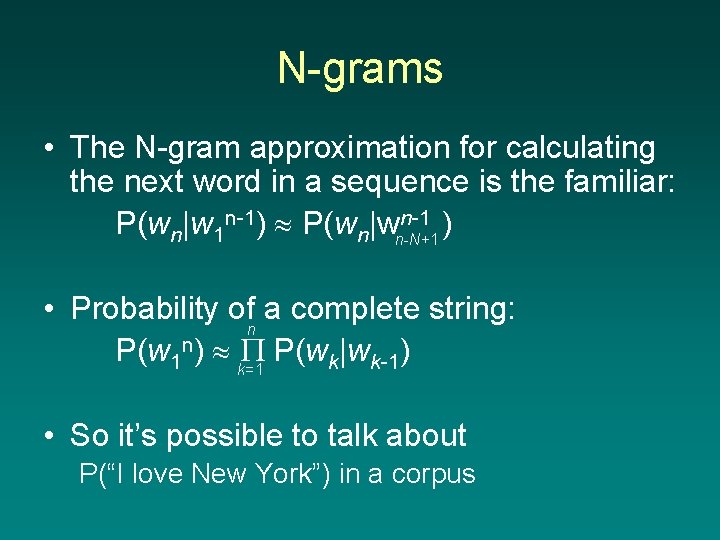

N-grams

- The N-gram approximation for calculating the probability of the next word in a sequence is the familiar: $P(w_n \mid w_1^{n-1}) \approx P(w_n \mid w_{n-N+1}^{n-1})$
- Probability of a complete string (bigram case): $P(w_1^n) \approx \prod_{k=1}^{n} P(w_k \mid w_{k-1})$
- So it’s possible to talk about P(“I love New York”) in a corpus
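As a worked illustration of the bigram product, the sketch below estimates P(“I love New York”) by maximum likelihood from a three-sentence toy corpus. The corpus, the `<s>` start symbol, and the function names are assumptions for illustration, and no smoothing is applied, so any unseen bigram gives probability zero.

```python
from collections import Counter

def train_bigram(sentences):
    """Collect context counts and bigram counts, with <s> as a start symbol."""
    contexts, bigrams = Counter(), Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split()
        contexts.update(tokens[:-1])             # every token that has a successor
        bigrams.update(zip(tokens, tokens[1:]))  # (previous word, word) pairs
    return contexts, bigrams

def string_prob(text, contexts, bigrams):
    """Product over k of the MLE estimate of P(w_k | w_{k-1})."""
    tokens = ["<s>"] + text.split()
    prob = 1.0
    for prev, word in zip(tokens, tokens[1:]):
        if contexts[prev] == 0:
            return 0.0  # unseen context, no estimate available
        prob *= bigrams[(prev, word)] / contexts[prev]
    return prob

corpus = ["I love New York", "I love New Jersey", "I live in New York"]
contexts, bigrams = train_bigram(corpus)
p = string_prob("I love New York", contexts, bigrams)
print(p)  # 1 * 2/3 * 1 * 2/3 = 4/9
```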

N-grams

- Important to recognize: N-grams don’t just apply to words!
- We can have n-grams of
  - Words, POS tags, chunks (M&P 02)
  - Characters (Cavnar & Trenkle 94)
  - Phones (Jurafsky & colleagues, and loads more)
  - Binary sequences (for file type identification) (Li et al 2005)
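Since an n-gram is just a window over a sequence, one small function covers all of these cases. The sketch below is illustrative: the helper name `ngrams`, the POS tags, and the byte string are assumptions chosen for the example.

```python
def ngrams(seq, n):
    """Return all contiguous n-grams of any sliceable sequence as tuples."""
    return [tuple(seq[i:i + n]) for i in range(len(seq) - n + 1)]

print(ngrams("You will start".split(), 2))  # word bigrams
print(ngrams(["PRP", "MD", "VB"], 2))       # POS-tag bigrams
print(ngrams("chunk", 3))                   # character trigrams
print(ngrams(b"\x89PNG", 2))                # byte bigrams (as integers)
```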

N-grams

- The higher the order of the model, the more specific that model becomes to the source.
- Note the discussion in J&M re: sensitivity to training corpus

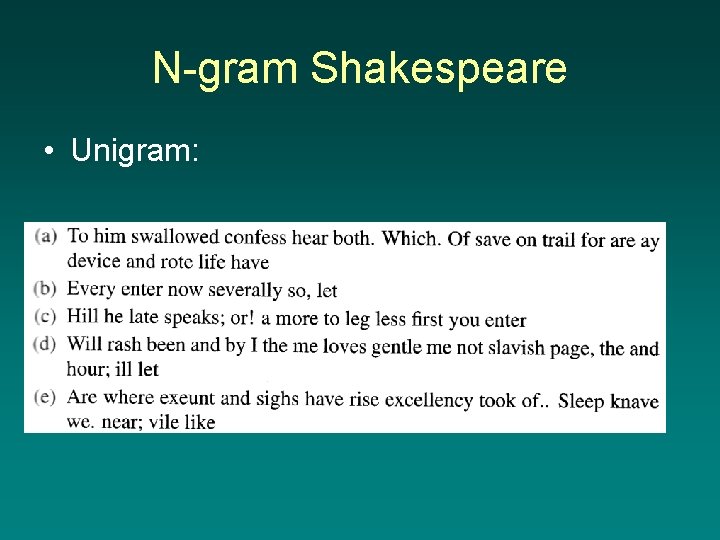

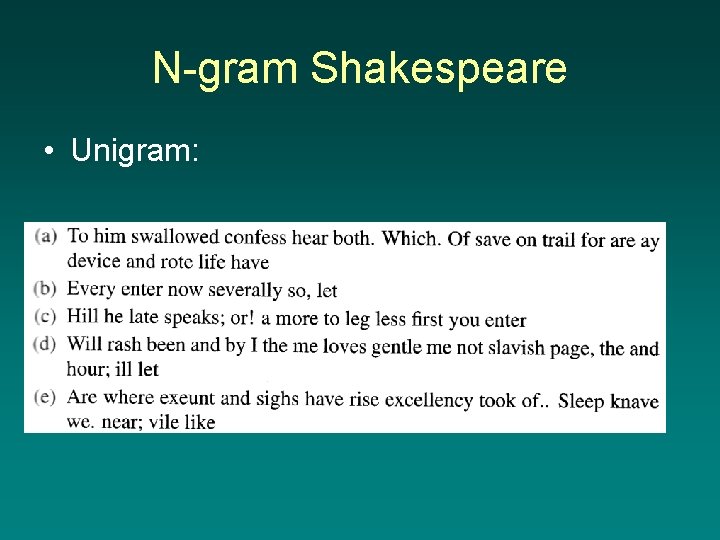

N-gram Shakespeare

- Unigram:

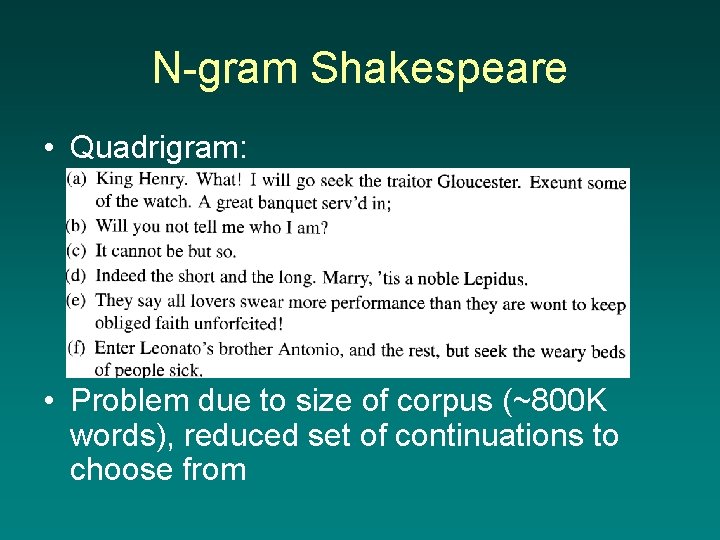

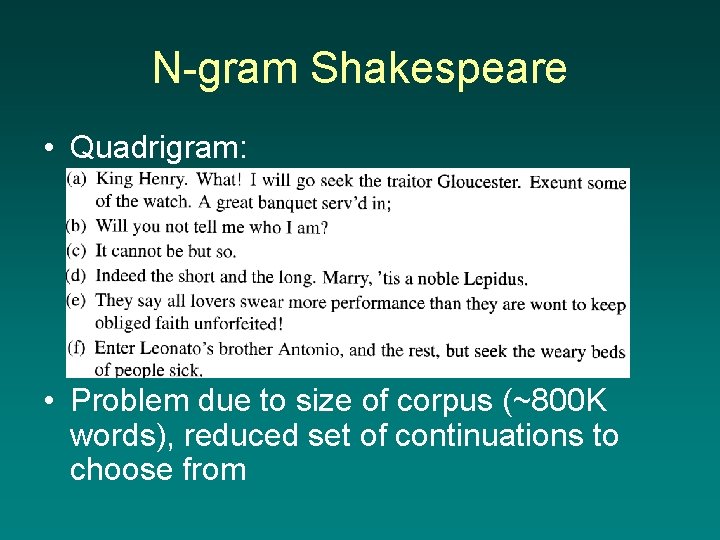

N-gram Shakespeare

- Quadrigram:
- Problem: because the corpus is small (~800K words), the model has only a reduced set of continuations to choose from
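The shrinking set of continuations can be seen even on a single line of text. The sketch below counts how many distinct words follow each context at different orders, then generates text from the model. The function names and the one-line "corpus" are assumptions for illustration; a real experiment would use the full Shakespeare corpus.

```python
import random
from collections import defaultdict

def continuation_sets(tokens, n):
    """Map each (n-1)-word context to the set of words observed after it."""
    conts = defaultdict(set)
    for i in range(len(tokens) - n + 1):
        context = tuple(tokens[i:i + n - 1])
        conts[context].add(tokens[i + n - 1])
    return conts

def mean_continuations(tokens, n):
    """Average number of distinct continuations per context."""
    conts = continuation_sets(tokens, n)
    return sum(len(words) for words in conts.values()) / len(conts)

def generate(tokens, n, length, seed=0):
    """Sample words one at a time, conditioning on the previous n-1 words."""
    rng = random.Random(seed)
    conts = continuation_sets(tokens, n)
    out = list(tokens[:n - 1])  # seed the output with the first context
    while len(out) < length:
        context = tuple(out[len(out) - (n - 1):])
        options = sorted(conts.get(context, ()))
        if not options:
            break  # dead end: this context was never followed by anything
        out.append(rng.choice(options))
    return out

tokens = "to be or not to be that is the question".split()
for n in (1, 2, 4):
    print(n, mean_continuations(tokens, n))
print(" ".join(generate(tokens, 4, len(tokens))))
```

At order 4 every context in this toy text has exactly one continuation, so "generation" simply replays the source, which is the same effect the slide describes at corpus scale.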

N-grams & Language ID

- If N-gram models represent “language” models, can we use N-gram models for Language Identification?
- For example, can we use them to differentiate between text in German, text in English, text in Czech, etc.?
- If so, how?
- What’s the lower threshold for the size of text that can ensure successful ID?
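One possible answer to "how" is to compare ranked character n-gram profiles, in the spirit of the Cavnar & Trenkle work cited earlier. The sketch below is a simplified variant under stated assumptions, not their exact procedure: the n-gram lengths, the profile size, the tie-breaking rule, and the one-sentence training samples are all illustrative choices, and samples this small are far too little data for reliable identification.

```python
from collections import Counter

def profile(text, n_max=3, top_k=300):
    """Rank the most frequent character n-grams (n = 1..n_max) of a text."""
    text = " ".join(text.lower().split())  # normalize case and whitespace
    counts = Counter()
    for n in range(1, n_max + 1):
        for i in range(len(text) - n + 1):
            counts[text[i:i + n]] += 1
    # Most frequent first; ties broken alphabetically for determinism.
    ranked = sorted(counts, key=lambda g: (-counts[g], g))[:top_k]
    return {gram: rank for rank, gram in enumerate(ranked)}

def out_of_place(doc_profile, lang_profile):
    """Sum of rank differences; n-grams missing from the language profile get a fixed penalty."""
    max_penalty = len(lang_profile)
    distance = 0
    for gram, rank in doc_profile.items():
        if gram in lang_profile:
            distance += abs(rank - lang_profile[gram])
        else:
            distance += max_penalty
    return distance

def identify(text, lang_profiles):
    """Return the language whose profile is closest to the text's profile."""
    doc = profile(text)
    return min(lang_profiles, key=lambda lang: out_of_place(doc, lang_profiles[lang]))

# Toy training samples, assumed for illustration only.
samples = {
    "english": "the quick brown fox jumps over the lazy dog and runs through the forest",
    "german": "der schnelle braune fuchs springt über den faulen hund und läuft durch den wald",
    "czech": "rychlá hnědá liška skáče přes líného psa a běží lesem",
}
profiles = {lang: profile(text) for lang, text in samples.items()}

print(identify("the dog runs over the fox", profiles))
```

Shrinking the input text and watching where the predictions start to fail is one practical way to explore the threshold question on a real corpus.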