Chapter 6 Synchronization Tools Operating System Concepts 10

- Slides: 48

Chapter 6: Synchronization Tools

Operating System Concepts – 10th Edition, Silberschatz, Galvin and Gagne © 2018

Chapter 6: Synchronization Tools

- Background
- The Critical-Section Problem
- Peterson’s Solution
- Hardware Support for Synchronization
- Mutex Locks
- Semaphores
- Monitors
- Liveness
- Evaluation

Objectives

- Describe the critical-section problem and illustrate a race condition
- Illustrate hardware solutions to the critical-section problem using:
  - memory barriers
  - compare-and-swap operations
  - atomic variables
- Demonstrate how mutex locks, semaphores, monitors, and condition variables can be used to solve the critical-section problem
- Evaluate tools that solve the critical-section problem in low-, moderate-, and high-contention scenarios

Background

- We’ve already seen that processes can execute concurrently or in parallel
- A cooperating process:
  - is one that can affect or be affected by other processes executing in the system
  - can either directly share a logical address space (that is, both code and data) or be allowed to share data only through shared memory or message passing
- A process may be interrupted at any point in its instruction stream
  - The CPU may then be assigned to execute instructions of another process
  - Thus, concurrent access to shared data may result in data inconsistency
- Various mechanisms exist to ensure the orderly execution of cooperating processes that share a logical address space, so that data consistency is maintained

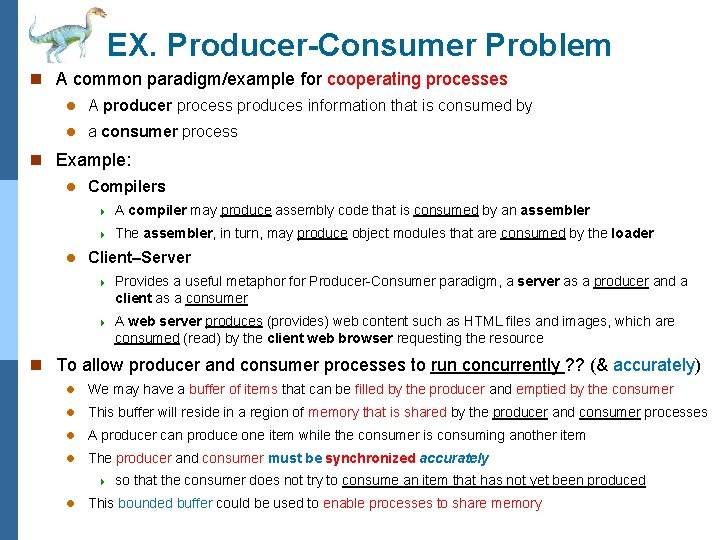

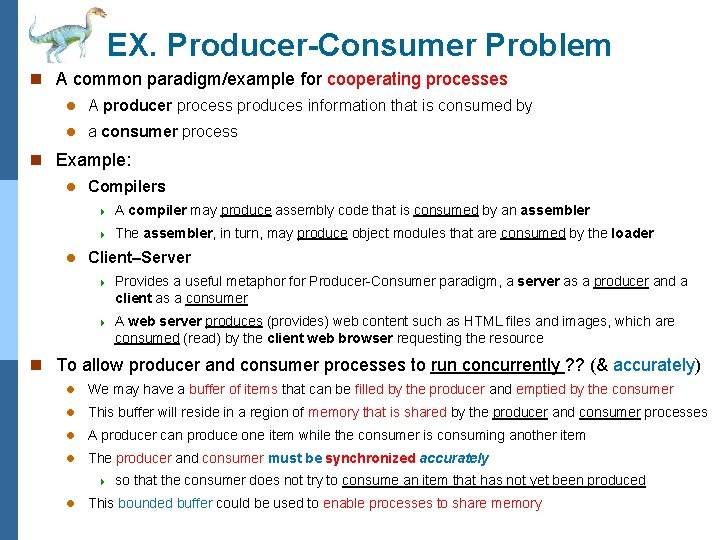

Example: Producer-Consumer Problem

- A common paradigm for cooperating processes: a producer process produces information that is consumed by a consumer process
- Examples:
  - Compilers
    - A compiler may produce assembly code that is consumed by an assembler
    - The assembler, in turn, may produce object modules that are consumed by the loader
  - Client-server
    - Provides a useful metaphor for the producer-consumer paradigm, with a server as the producer and a client as the consumer
    - A web server produces (provides) web content such as HTML files and images, which are consumed (read) by the client web browser requesting the resource
- To allow producer and consumer processes to run concurrently (and correctly):
  - We may have a buffer of items that can be filled by the producer and emptied by the consumer
  - This buffer resides in a region of memory that is shared by the producer and consumer processes
  - A producer can produce one item while the consumer is consuming another item
  - The producer and consumer must be synchronized, so that the consumer does not try to consume an item that has not yet been produced
- This bounded buffer could be used to enable processes to share memory

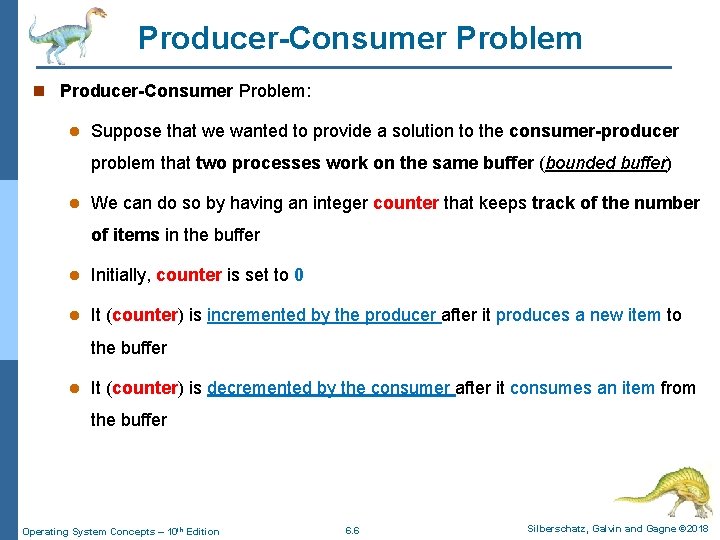

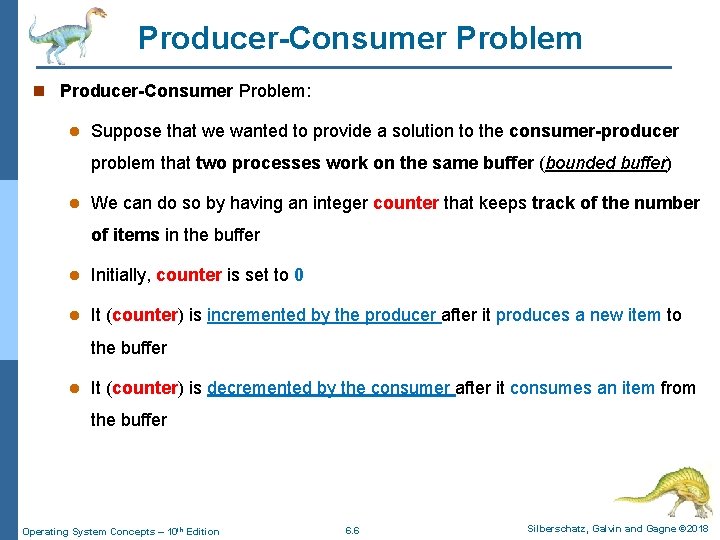

Producer-Consumer Problem

- Suppose that we want a solution to the producer-consumer problem in which two processes work on the same bounded buffer
- We can do so by having an integer counter that keeps track of the number of items in the buffer
  - Initially, counter is set to 0
  - counter is incremented by the producer after it adds a new item to the buffer
  - counter is decremented by the consumer after it removes an item from the buffer
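The counter-based scheme described above might be sketched as follows. This is only an illustration under assumptions: `BUFFER_SIZE`, `in`, `out`, and the function names are hypothetical, and the routines return `false` instead of busy-waiting so that the sketch can be stepped through sequentially. Nothing here is synchronized, so it is correct only when the two routines never overlap in time.

```c
#include <stdbool.h>

#define BUFFER_SIZE 4            /* assumed capacity, chosen for illustration */

static int buffer[BUFFER_SIZE];  /* shared circular buffer */
static int in = 0;               /* next free slot (producer side) */
static int out = 0;              /* next full slot (consumer side) */
static int counter = 0;          /* number of items currently in the buffer */

/* Producer step: returns false when the buffer is full. */
bool produce(int item) {
    if (counter == BUFFER_SIZE)
        return false;
    buffer[in] = item;
    in = (in + 1) % BUFFER_SIZE;
    counter++;                   /* unsynchronized update of shared state */
    return true;
}

/* Consumer step: returns false when the buffer is empty. */
bool consume(int *item) {
    if (counter == 0)
        return false;
    *item = buffer[out];
    out = (out + 1) % BUFFER_SIZE;
    counter--;                   /* unsynchronized update of shared state */
    return true;
}

/* Sequential walk-through: two items in, one out; returns items left. */
int producer_consumer_demo(void) {
    int item = 0;
    produce(10);
    produce(20);
    consume(&item);              /* removes 10, the oldest item */
    return counter;
}
```

Calling `producer_consumer_demo()` from a single thread leaves one item in the buffer. The trouble starts only when `counter++` and `counter--` can interleave.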

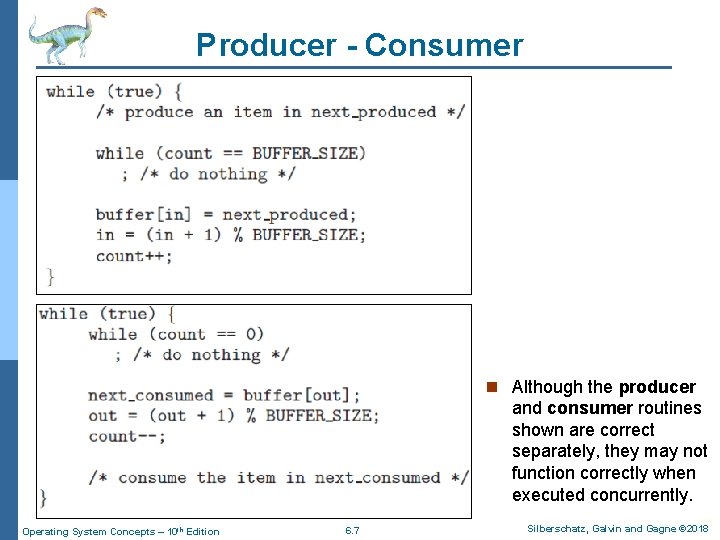

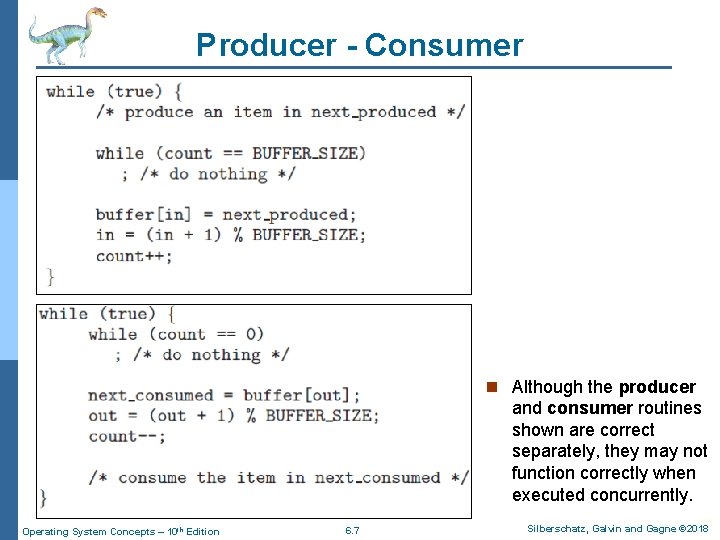

Producer-Consumer

- Although the producer and consumer routines shown are correct separately, they may not function correctly when executed concurrently.

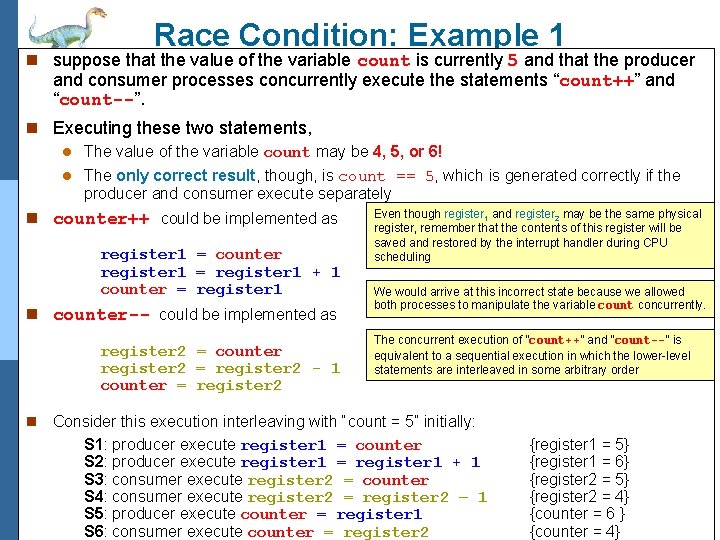

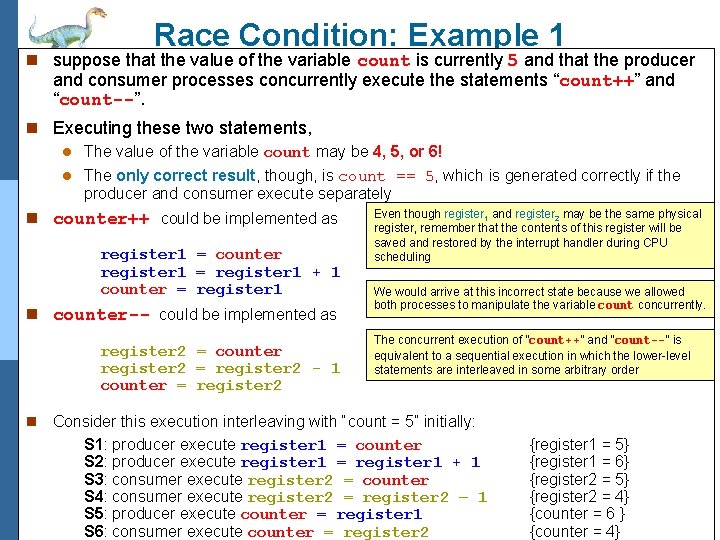

Race Condition: Example 1

- Suppose that the value of the variable counter is currently 5 and that the producer and consumer processes concurrently execute the statements “counter++” and “counter--”
- After these two statements execute, the value of counter may be 4, 5, or 6!
  - The only correct result is counter == 5, which is produced if the producer and consumer execute separately
- counter++ could be implemented as:

    register1 = counter
    register1 = register1 + 1
    counter = register1

- counter-- could be implemented as:

    register2 = counter
    register2 = register2 - 1
    counter = register2

- Even though register1 and register2 may be the same physical register, the contents of this register are saved and restored by the interrupt handler during CPU scheduling
- The concurrent execution of “counter++” and “counter--” is equivalent to a sequential execution in which the lower-level statements are interleaved in some arbitrary order
- Consider this interleaving, with counter = 5 initially:

    S1: producer executes register1 = counter          {register1 = 5}
    S2: producer executes register1 = register1 + 1    {register1 = 6}
    S3: consumer executes register2 = counter          {register2 = 5}
    S4: consumer executes register2 = register2 - 1    {register2 = 4}
    S5: producer executes counter = register1          {counter = 6}
    S6: consumer executes counter = register2          {counter = 4}

- We arrive at this incorrect state (counter == 4) because we allowed both processes to manipulate the variable counter concurrently
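The interleaving S1 to S6 can be replayed by hand in ordinary sequential code, which makes the lost update easy to see. The function below is a hypothetical illustration, not a concurrent program: the local variables stand in for the two processes' register copies, and the `producer_stores_last` parameter is an assumption added to show the other incorrect outcome.

```c
/* Replays the two three-instruction sequences in an interleaved order.
 * Returns the final value of counter. */
int replay_interleaving(int counter, int producer_stores_last) {
    int register1, register2;       /* per-process register copies */

    register1 = counter;            /* S1: producer loads counter        */
    register1 = register1 + 1;      /* S2: producer increments its copy  */
    register2 = counter;            /* S3: consumer loads the old value  */
    register2 = register2 - 1;      /* S4: consumer decrements its copy  */

    if (producer_stores_last) {
        counter = register2;        /* consumer's store lands first ...  */
        counter = register1;        /* ... and the producer overwrites it */
    } else {
        counter = register1;        /* S5: producer stores               */
        counter = register2;        /* S6: consumer overwrites it        */
    }
    return counter;
}
```

Starting from 5, the S1 to S6 order yields 4, and swapping the last two stores yields 6. In both cases one of the two updates is lost.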

Process Synchronization & Coordination

- A race condition is a situation where:
  - several processes are allowed to access and manipulate the same shared data concurrently, and
  - the outcome of the execution depends on the particular order in which the accesses take place
- To guard against the race condition above and guarantee correctness, we need to:
  - ensure that only one process at a time can manipulate the variable counter
  - synchronize these processes in some way
- These situations occur frequently in operating systems, as different parts of the system manipulate resources
  - The prominence of multicore systems has brought an increased emphasis on developing multithreaded applications
  - Several threads, quite possibly sharing data, run in parallel on different processing cores
  - Clearly, we want any changes that result from such activities not to interfere with one another

Critical Section Problem

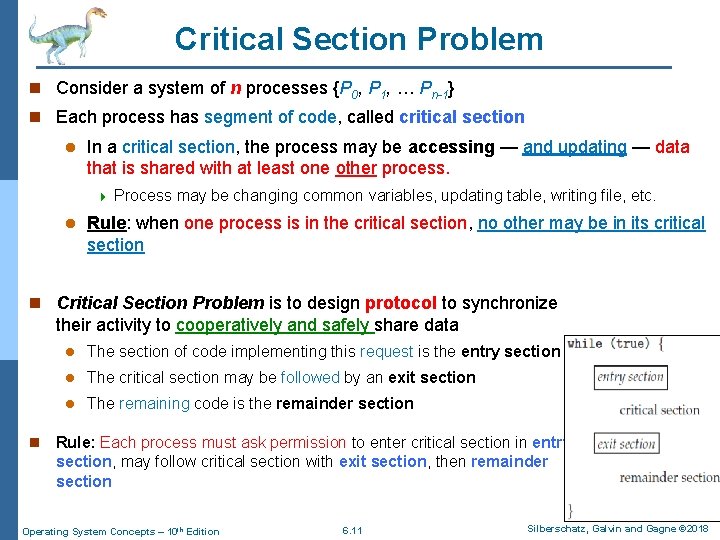

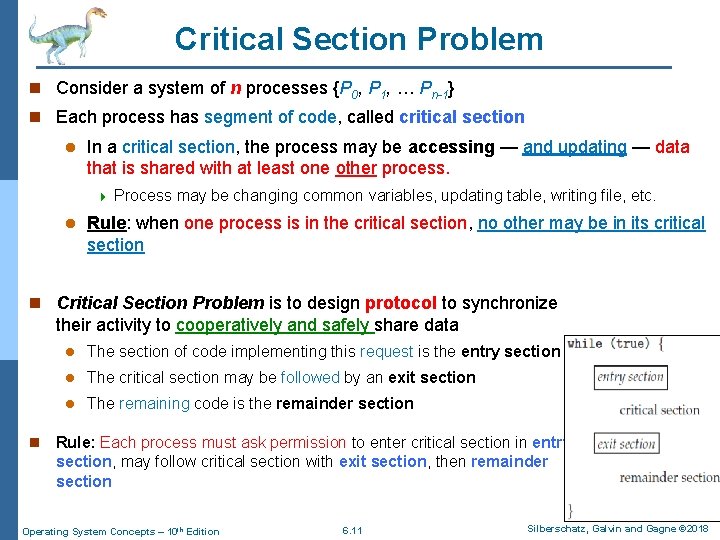

Critical Section Problem

- Consider a system of n processes {P0, P1, ..., Pn-1}
- Each process has a segment of code, called a critical section
  - In a critical section, the process may be accessing, and updating, data that is shared with at least one other process
  - The process may be changing common variables, updating a table, writing a file, etc.
- Rule: when one process is in its critical section, no other process may be in its critical section
- The critical-section problem is to design a protocol that the processes can use to synchronize their activity so as to cooperatively and safely share data
- Rule: each process must ask permission to enter its critical section
  - The section of code implementing this request is the entry section
  - The critical section may be followed by an exit section
  - The remaining code is the remainder section

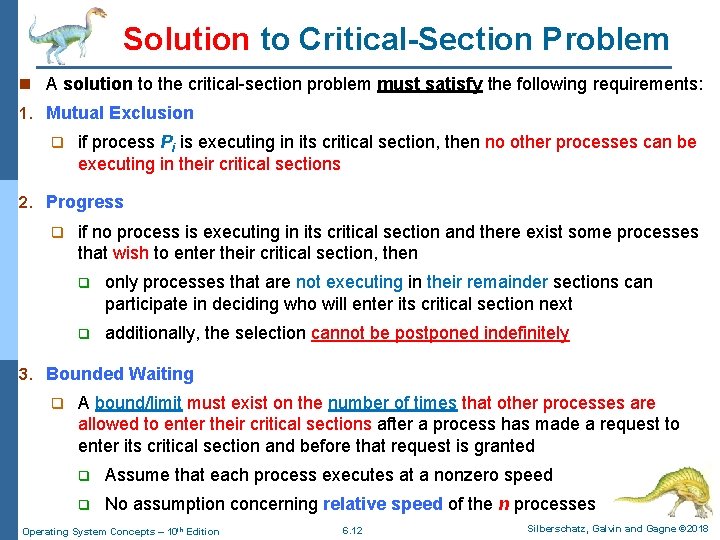

Solution to Critical-Section Problem

- A solution to the critical-section problem must satisfy the following requirements:
  1. Mutual exclusion
     - If process Pi is executing in its critical section, then no other processes can be executing in their critical sections
  2. Progress
     - If no process is executing in its critical section and some processes wish to enter their critical sections, then only those processes that are not executing in their remainder sections can participate in deciding which will enter its critical section next
     - Additionally, this selection cannot be postponed indefinitely
  3. Bounded waiting
     - A bound (limit) must exist on the number of times that other processes are allowed to enter their critical sections after a process has made a request to enter its critical section and before that request is granted
- Assumptions:
  - Each process executes at a nonzero speed
  - No assumption is made concerning the relative speed of the n processes

Critical-Section Handling in OS

- At a given point in time, many kernel-mode processes may be active in the OS
- As a result, the code implementing an OS (kernel code) is subject to several possible race conditions
- Example 1:
  - A kernel data structure that maintains the list of all open files
  - This list must be updated when a file is opened or closed
  - If two processes were to open files simultaneously, the separate updates to this list could result in a race condition
- Example 2:
  - The kernel structures for maintaining memory allocation are likewise prone to race conditions
- Kernel developers have to ensure that the operating system is free from such race conditions
- The critical-section problem could be solved simply in a single-core environment if we could prevent interrupts from occurring while a shared variable is being modified
- Unfortunately, this solution is not as feasible in a multiprocessor environment

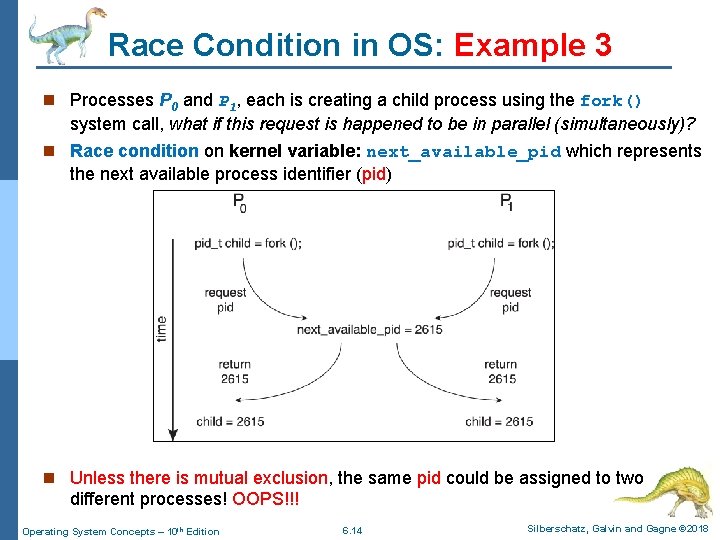

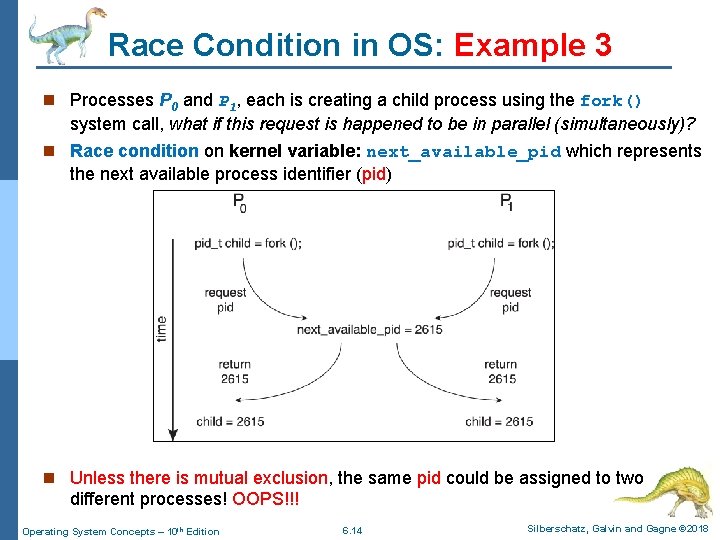

Race Condition in OS: Example 3

- Processes P0 and P1 each create a child process using the fork() system call. What if the two requests happen in parallel (simultaneously)?
- There is a race condition on the kernel variable next_available_pid, which represents the next available process identifier (pid)
- Unless there is mutual exclusion, the same pid could be assigned to two different processes!

Critical-Section Handling in OS

- Two general approaches are used to handle critical sections in operating systems:
  1. Non-preemptive kernels
     - Do not allow a process running in kernel mode to be preempted
     - A kernel-mode process runs until it exits kernel mode, blocks, or voluntarily yields control of the CPU
     - This approach is essentially free of race conditions in kernel mode, as only one process is active in the kernel at a time
  2. Preemptive kernels
     - Allow a process to be preempted while it is running in kernel mode
     - A preemptive kernel may be more responsive, since no kernel-mode process will run for an arbitrarily long period of time
     - A preemptive kernel is more suitable for real-time programming
     - However, preemptive kernels are especially difficult to design for SMP architectures, since in these environments it is possible for two kernel-mode processes to run simultaneously on different CPU cores

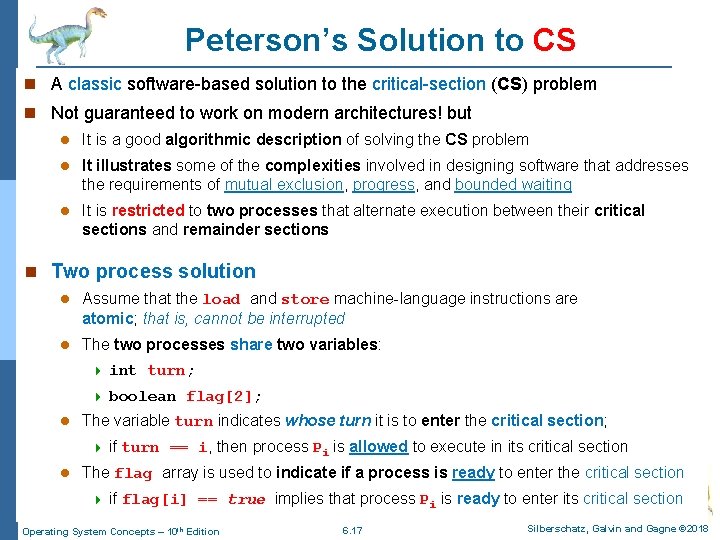

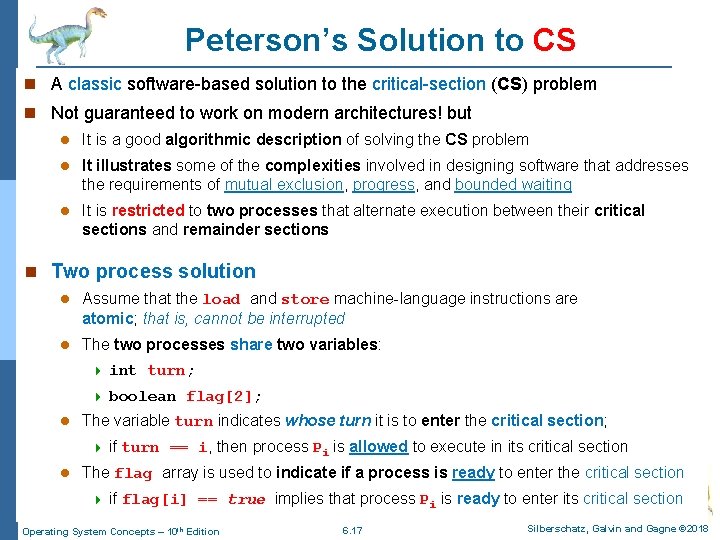

Peterson’s Solution

One of the software-based solutions to the critical-section (CS) problem. We refer to it as a software-based solution because it involves no special support from the operating system, nor does it require any specific hardware instructions to ensure mutual exclusion.

Peterson’s Solution to CS

- A classic software-based solution to the critical-section (CS) problem
- Not guaranteed to work on modern architectures! However:
  - It is a good algorithmic description of solving the CS problem
  - It illustrates some of the complexities involved in designing software that addresses the requirements of mutual exclusion, progress, and bounded waiting
  - It is restricted to two processes that alternate execution between their critical sections and remainder sections
- Two-process solution
  - Assume that the load and store machine-language instructions are atomic; that is, they cannot be interrupted
  - The two processes share two variables:
    - int turn;
    - boolean flag[2];
  - The variable turn indicates whose turn it is to enter the critical section
    - If turn == i, then process Pi is allowed to execute in its critical section
  - The flag array is used to indicate whether a process is ready to enter its critical section
    - flag[i] == true implies that process Pi is ready to enter its critical section

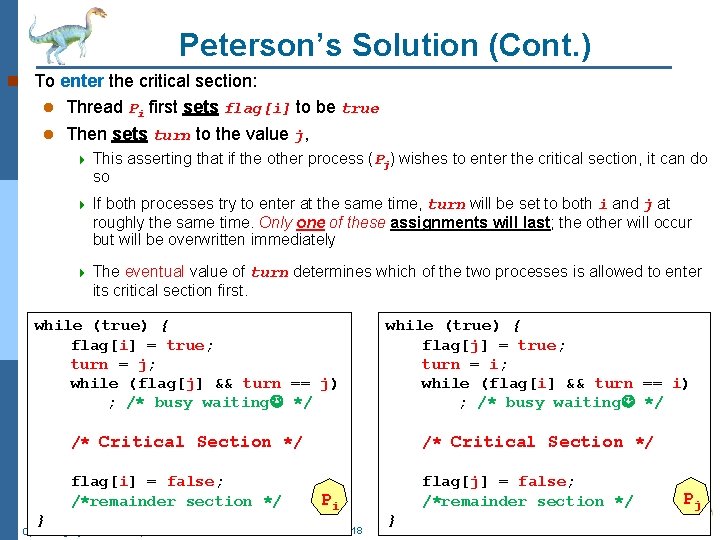

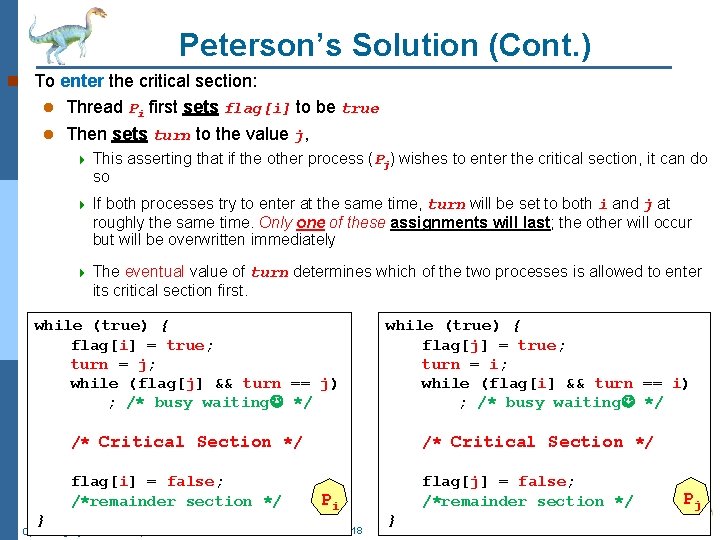

Peterson’s Solution (Cont.)

- To enter the critical section:
  - Process Pi first sets flag[i] to true
  - It then sets turn to the value j, thereby asserting that if the other process (Pj) wishes to enter the critical section, it can do so
  - If both processes try to enter at the same time, turn will be set to both i and j at roughly the same time. Only one of these assignments will last; the other will occur but will be overwritten immediately
  - The eventual value of turn determines which of the two processes is allowed to enter its critical section first

Code for Pi:

    while (true) {
        flag[i] = true;
        turn = j;
        while (flag[j] && turn == j)
            ;  /* busy waiting */
        /* critical section */
        flag[i] = false;
        /* remainder section */
    }

Code for Pj:

    while (true) {
        flag[j] = true;
        turn = i;
        while (flag[i] && turn == i)
            ;  /* busy waiting */
        /* critical section */
        flag[j] = false;
        /* remainder section */
    }
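The entry and exit sections above can be written as two small functions. The sketch below assumes a C11 compiler with `<stdatomic.h>`; the function names are hypothetical. It uses the default sequentially consistent atomic operations so that the stores to `flag[i]` and `turn` are not reordered, which plain variables would not guarantee. The demo function only steps through the protocol in one thread to show when the waiting condition holds; in real use, two threads would call `peterson_enter(0)` and `peterson_enter(1)`.

```c
#include <stdatomic.h>
#include <stdbool.h>

static atomic_bool flag[2];   /* flag[i]: process i is ready to enter */
static atomic_int turn;       /* which process is allowed to go first */

/* True if process i would have to keep spinning right now. */
bool peterson_must_wait(int i) {
    int j = 1 - i;
    return atomic_load(&flag[j]) && atomic_load(&turn) == j;
}

/* Entry section for process i (i is 0 or 1). */
void peterson_enter(int i) {
    atomic_store(&flag[i], true);     /* announce intent               */
    atomic_store(&turn, 1 - i);       /* give way to the other process */
    while (peterson_must_wait(i))
        ;                             /* busy waiting */
}

/* Exit section for process i. */
void peterson_exit(int i) {
    atomic_store(&flag[i], false);
}

/* Single-threaded walk-through of the protocol's state. */
int peterson_demo(void) {
    peterson_enter(0);                        /* P0 enters: flag[1] is false */
    atomic_store(&flag[1], true);             /* P1 announces intent ...     */
    atomic_store(&turn, 0);                   /* ... and gives way to P0     */
    bool p1_blocked = peterson_must_wait(1);  /* P0 is inside, so P1 waits   */
    peterson_exit(0);
    bool p1_free = !peterson_must_wait(1);    /* now P1 may proceed          */
    atomic_store(&flag[1], false);
    return p1_blocked && p1_free;
}
```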

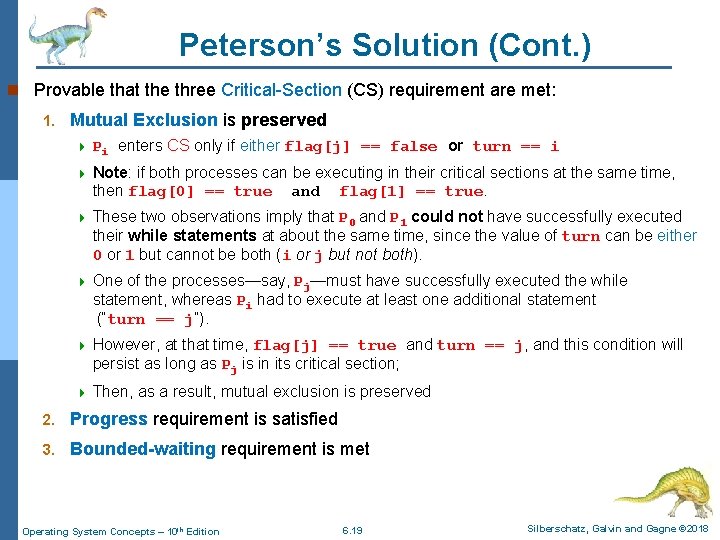

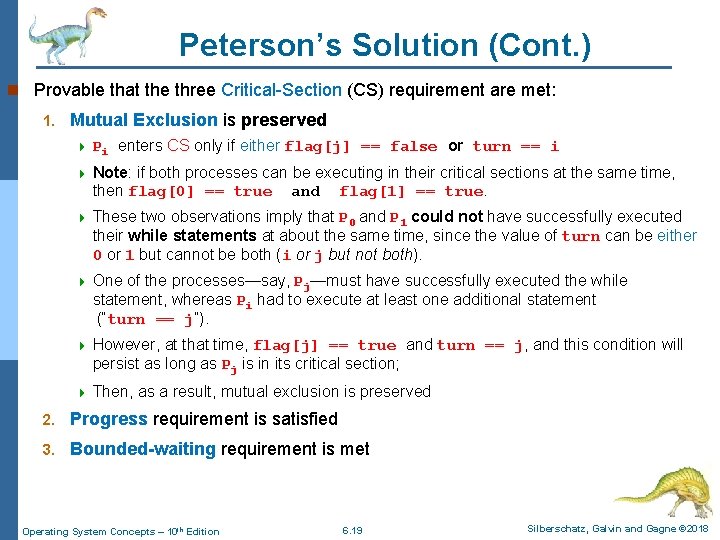

Peterson’s Solution (Cont.)

- It can be proved that the three critical-section (CS) requirements are met:
  1. Mutual exclusion is preserved
     - Pi enters its CS only if either flag[j] == false or turn == i
     - Note: if both processes were executing in their critical sections at the same time, then flag[0] == true and flag[1] == true
     - These two observations imply that P0 and P1 could not both have successfully executed their while statements at about the same time, since the value of turn can be either 0 or 1 but cannot be both
     - Hence one of the processes, say Pj, must have successfully executed the while statement, whereas Pi had to execute at least one additional statement (“turn == j”)
     - However, at that time, flag[j] == true and turn == j, and this condition persists as long as Pj is in its critical section
     - As a result, mutual exclusion is preserved
  2. The progress requirement is satisfied
  3. The bounded-waiting requirement is met

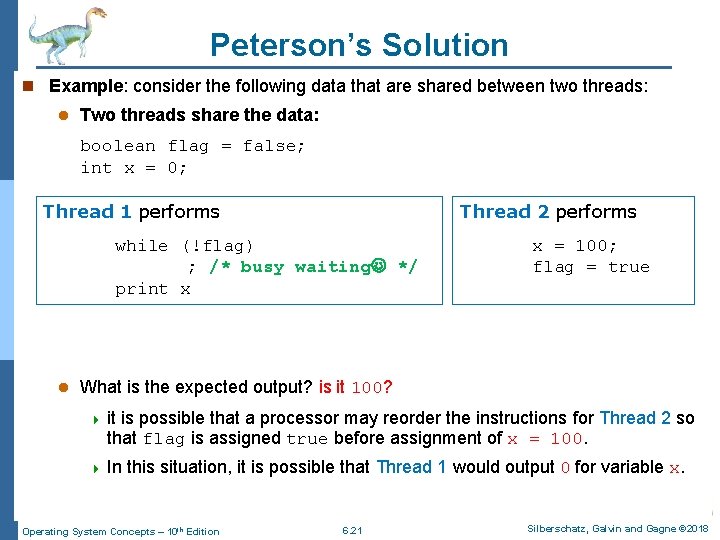

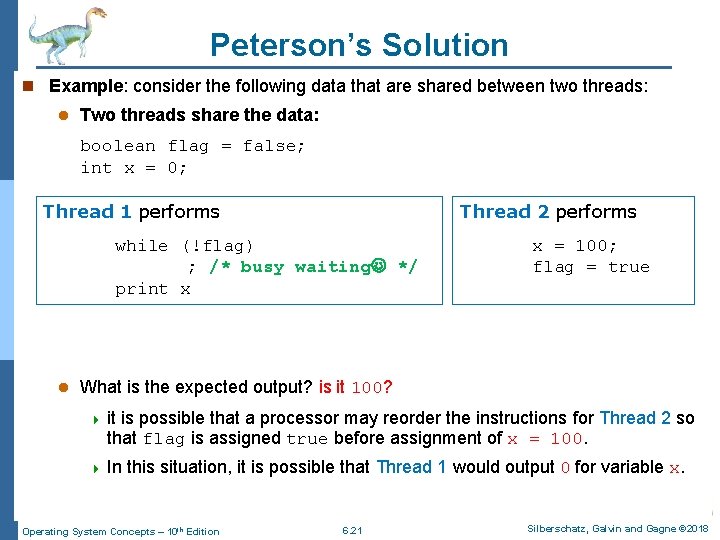

Peterson’s Solution

- Although useful for demonstrating an algorithm, Peterson’s solution is not guaranteed to work on modern architectures
- Understanding why it may not work is also useful for better understanding race conditions
- To improve performance, processors and/or compilers may reorder operations that have no dependencies
  - For single-threaded code this is fine, as the result will always be the same
  - For multithreaded code the reordering may produce inconsistent or unexpected results!

Peterson’s Solution

- Example: consider the following data that are shared between two threads:

    boolean flag = false;
    int x = 0;

- Thread 1 performs:

    while (!flag)
        ;  /* busy waiting */
    print x

- Thread 2 performs:

    x = 100;
    flag = true

- What is the expected output? Is it 100?
  - It is possible that a processor may reorder the instructions for Thread 2 so that flag is assigned true before x is assigned 100
  - In this situation, it is possible that Thread 1 would output 0 for variable x

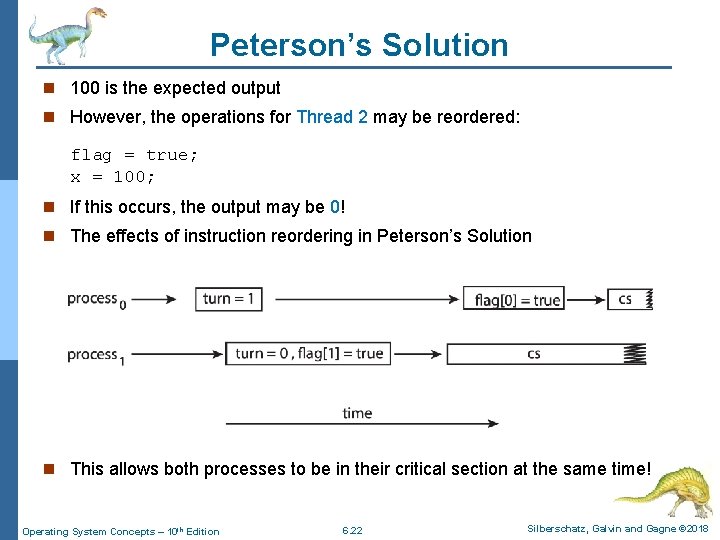

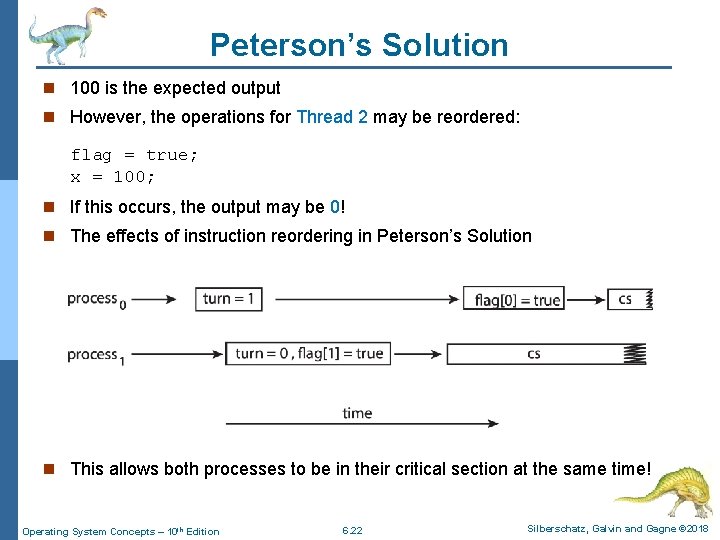

Peterson’s Solution

- 100 is the expected output
- However, the operations for Thread 2 may be reordered:

    flag = true;
    x = 100;

- If this occurs, the output may be 0!
- Instruction reordering has a similar effect on Peterson’s solution: if the assignments to flag[i] and turn in the entry section are reordered, both processes may be in their critical sections at the same time!

Hardware Support for Synchronization

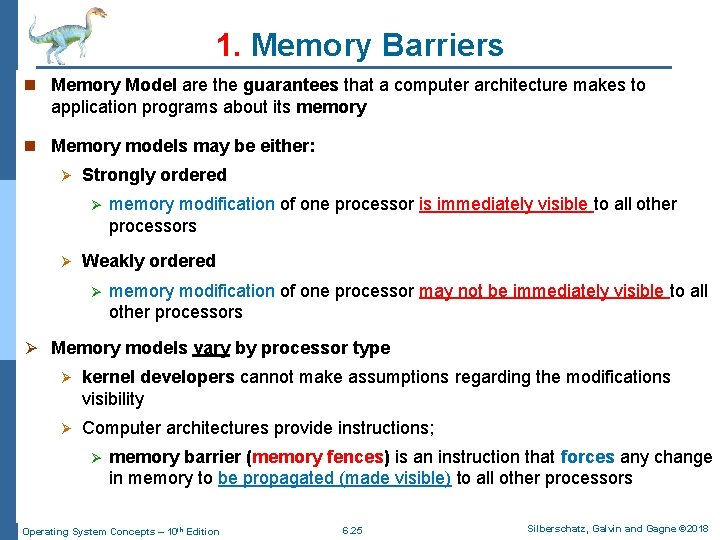

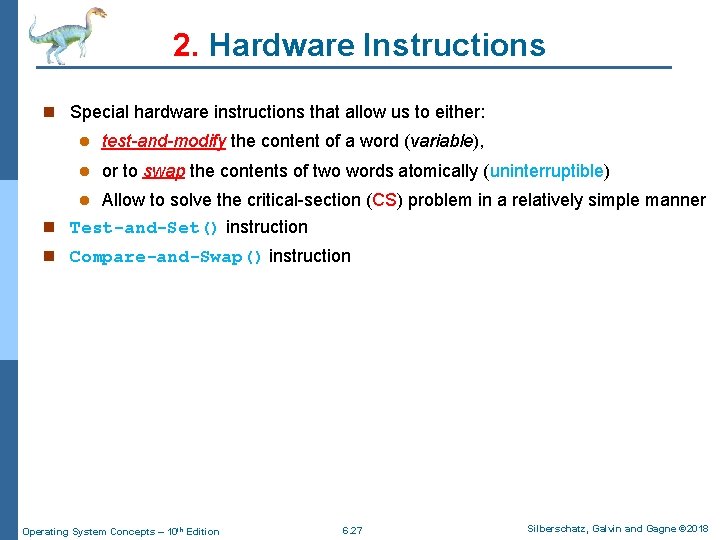

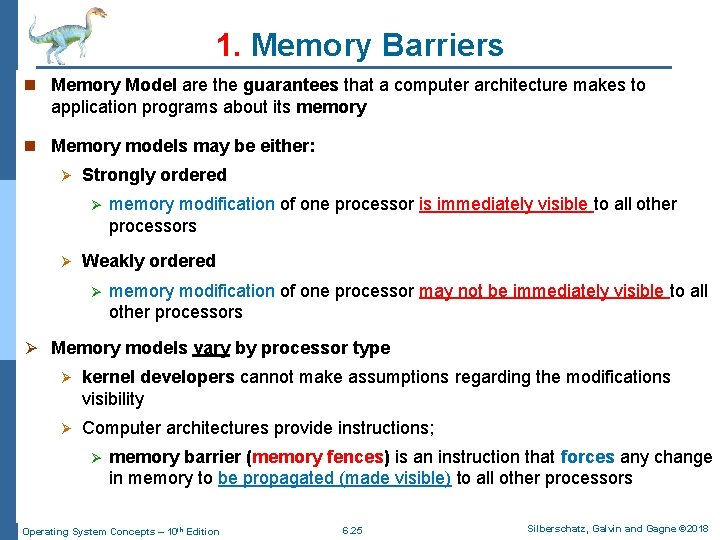

Synchronization Hardware

- Uniprocessors: could disable interrupts
  - Currently running code would execute without preemption
  - Generally too inefficient on multiprocessor systems
    - Operating systems using this approach are not broadly scalable
- Multiprocessors: systems provide hardware support for implementing the critical-section code
  - Presented as primitive operations that provide support for solving the critical-section problem
  - These primitive operations can be used directly as synchronization tools
  - They can also form the foundation of more abstract synchronization mechanisms (high-level software tools)
- We will look at three forms of hardware support:
  1. Memory barriers
  2. Hardware instructions
  3. Atomic variables

1. Memory Barriers

- A memory model is the set of guarantees that a computer architecture makes to application programs about its memory
- Memory models may be either:
  - Strongly ordered: a memory modification on one processor is immediately visible to all other processors
  - Weakly ordered: a memory modification on one processor may not be immediately visible to all other processors
- Memory models vary by processor type, so kernel developers cannot make assumptions regarding the visibility of modifications
- To address this, computer architectures provide memory barriers (memory fences): instructions that force any change in memory to be propagated (made visible) to all other processors

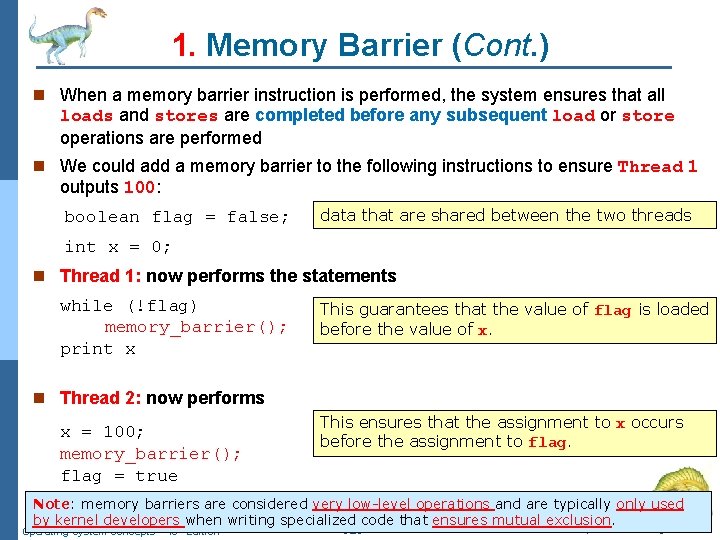

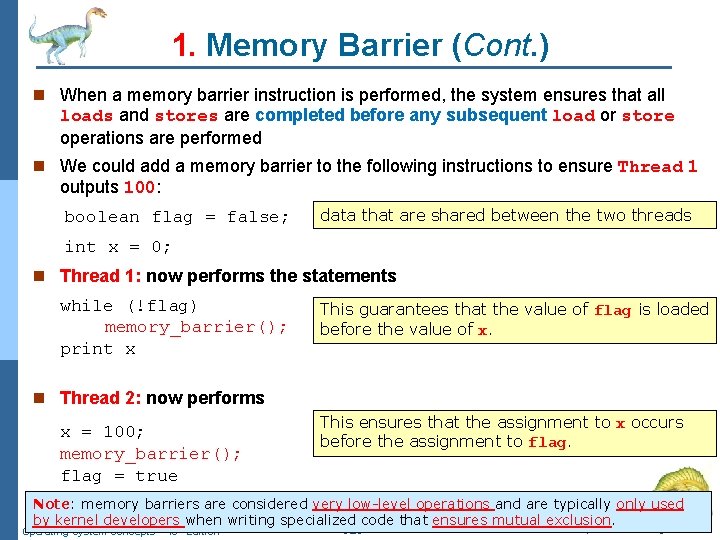

1. Memory Barrier (Cont.)

- When a memory barrier instruction is performed, the system ensures that all loads and stores are completed before any subsequent load or store operations are performed
- We could add a memory barrier to the following instructions to ensure that Thread 1 outputs 100. The data shared between the two threads are:

    boolean flag = false;
    int x = 0;

- Thread 1 now performs:

    while (!flag)
        memory_barrier();
    print x

  This guarantees that the value of flag is loaded before the value of x.
- Thread 2 now performs:

    x = 100;
    memory_barrier();
    flag = true

  This ensures that the assignment to x occurs before the assignment to flag.
- Note: memory barriers are considered very low-level operations and are typically used only by kernel developers when writing specialized code that ensures mutual exclusion.
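In portable C, the role of `memory_barrier()` in the example above can be approximated with C11 fences. The sketch below is an assumption-laden illustration, not the slides' own code: it maps the barrier in Thread 2 to a release fence and the barrier in Thread 1 to an acquire fence, and the function names are hypothetical. The demo calls the two halves in sequence on one thread only to show the values involved; the ordering guarantee matters when they run on different threads.

```c
#include <stdatomic.h>
#include <stdbool.h>

static int x = 0;                   /* ordinary shared data            */
static atomic_bool flag = false;    /* signals that x has been written */

/* Thread 2's side: write x, then publish the flag. */
void publish(void) {
    x = 100;
    atomic_thread_fence(memory_order_release);  /* x is written before flag */
    atomic_store_explicit(&flag, true, memory_order_relaxed);
}

/* Thread 1's side: wait for the flag, then read x. */
int wait_and_read(void) {
    while (!atomic_load_explicit(&flag, memory_order_relaxed))
        ;                                       /* busy waiting */
    atomic_thread_fence(memory_order_acquire);  /* flag is read before x */
    return x;
}

/* Sequential walk-through: publish first, then read. */
int barrier_demo(void) {
    publish();
    return wait_and_read();
}
```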

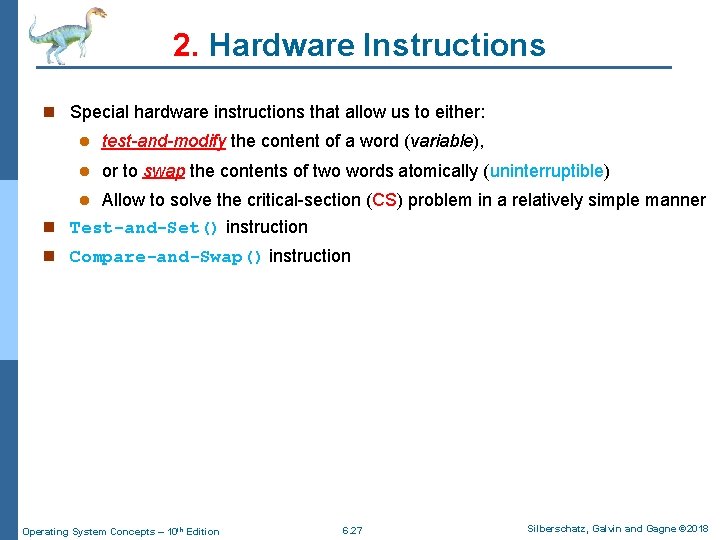

2. Hardware Instructions

- Special hardware instructions allow us either:
  - to test and modify the content of a word (variable), or
  - to swap the contents of two words,

  atomically (that is, as one uninterruptible unit)
- They allow us to solve the critical-section (CS) problem in a relatively simple manner
- Two such instructions:
  - test_and_set()
  - compare_and_swap()

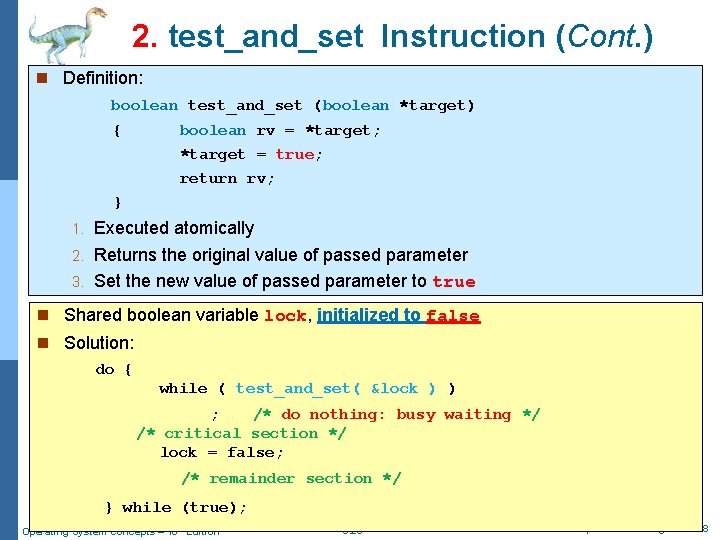

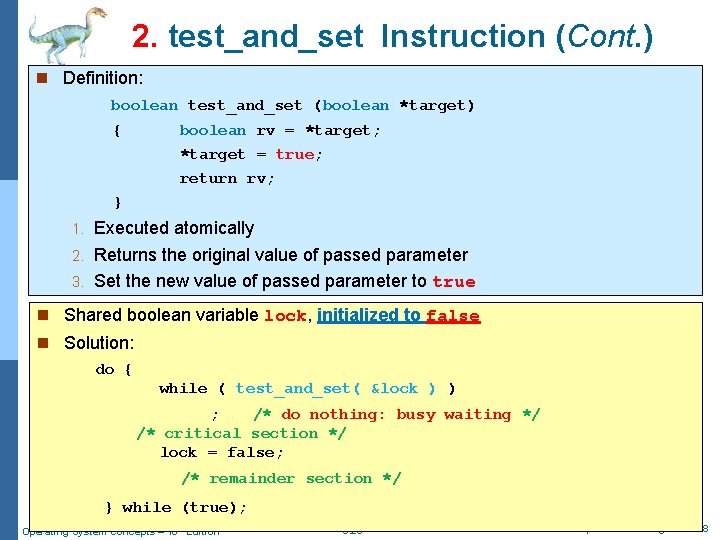

2. test_and_set Instruction (Cont.)

Definition:

    boolean test_and_set(boolean *target) {
        boolean rv = *target;
        *target = true;
        return rv;
    }

1. Executed atomically
2. Returns the original value of the passed parameter
3. Sets the new value of the passed parameter to true

Solution, using a shared boolean variable lock initialized to false:

    do {
        while (test_and_set(&lock))
            ;  /* do nothing: busy waiting */
        /* critical section */
        lock = false;
        /* remainder section */
    } while (true);
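C11 exposes a test-and-set primitive directly through `atomic_flag`, so the lock above can be sketched without writing any assembly. The names `spin_acquire`, `spin_release`, and `tas_demo` are hypothetical; `atomic_flag_test_and_set` and `atomic_flag_clear` are the standard library calls.

```c
#include <stdatomic.h>
#include <stdbool.h>

static atomic_flag lock = ATOMIC_FLAG_INIT;   /* clear means "unlocked" */

/* Entry section: spin until the previous value was false. */
void spin_acquire(void) {
    while (atomic_flag_test_and_set(&lock))
        ;  /* do nothing: busy waiting */
}

/* Exit section: equivalent to lock = false. */
void spin_release(void) {
    atomic_flag_clear(&lock);
}

/* Single-threaded walk-through of the return values. */
int tas_demo(void) {
    bool first = atomic_flag_test_and_set(&lock);   /* was free: returns false */
    bool second = atomic_flag_test_and_set(&lock);  /* already set: returns true */
    spin_release();
    spin_acquire();                                 /* free again, no spinning */
    spin_release();
    return !first && second;
}
```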

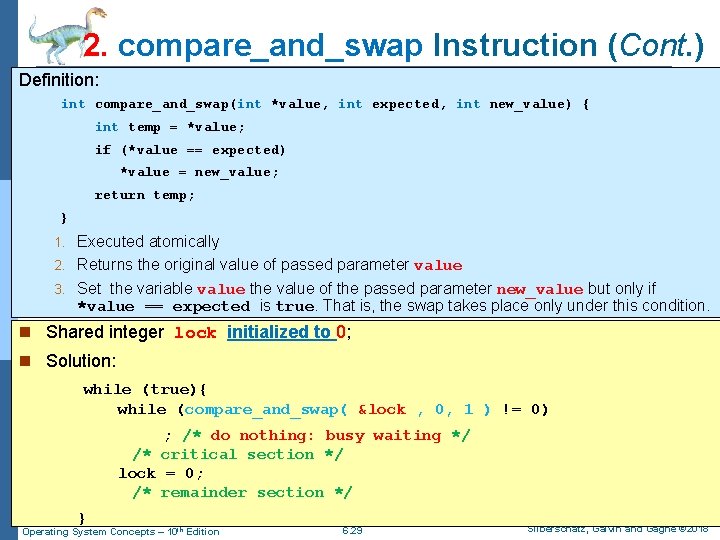

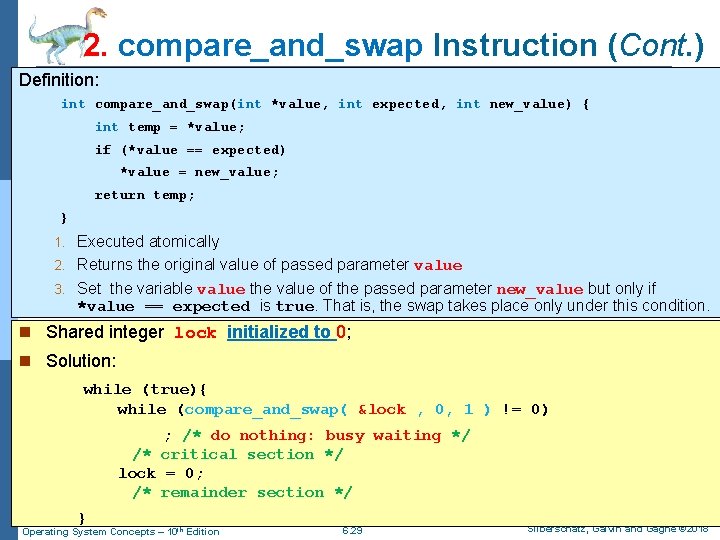

2. compare_and_swap Instruction (Cont.)

Definition:

    int compare_and_swap(int *value, int expected, int new_value) {
        int temp = *value;
        if (*value == expected)
            *value = new_value;
        return temp;
    }

1. Executed atomically
2. Returns the original value of the passed parameter value
3. Sets the variable value to the passed parameter new_value, but only if *value == expected is true. That is, the swap takes place only under this condition.

Solution, using a shared integer lock initialized to 0:

    while (true) {
        while (compare_and_swap(&lock, 0, 1) != 0)
            ;  /* do nothing: busy waiting */
        /* critical section */
        lock = 0;
        /* remainder section */
    }
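The same interface can be layered on C11’s `atomic_compare_exchange_strong`, which is what makes the operation genuinely atomic. This is a sketch under the assumption that `<stdatomic.h>` is available; `cas_lock` and `cas_demo` are hypothetical names. The standard call writes the observed value back into `expected` on failure and leaves it unchanged on success, so returning `expected` reproduces the "return the original value" behavior of the definition above.

```c
#include <stdatomic.h>

/* compare_and_swap with the semantics defined above, built on C11 atomics. */
int compare_and_swap(atomic_int *value, int expected, int new_value) {
    atomic_compare_exchange_strong(value, &expected, new_value);
    return expected;   /* original contents of *value in both cases */
}

static atomic_int cas_lock = 0;   /* 0 = free, 1 = held */

/* Single-threaded walk-through of three attempts to take the lock. */
int cas_demo(void) {
    int a = compare_and_swap(&cas_lock, 0, 1);  /* returns 0: lock acquired     */
    int b = compare_and_swap(&cas_lock, 0, 1);  /* returns 1: lock already held */
    atomic_store(&cas_lock, 0);                 /* release                      */
    int c = compare_and_swap(&cas_lock, 0, 1);  /* returns 0: acquired again    */
    atomic_store(&cas_lock, 0);
    return a * 100 + b * 10 + c;                /* encodes the three results    */
}
```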

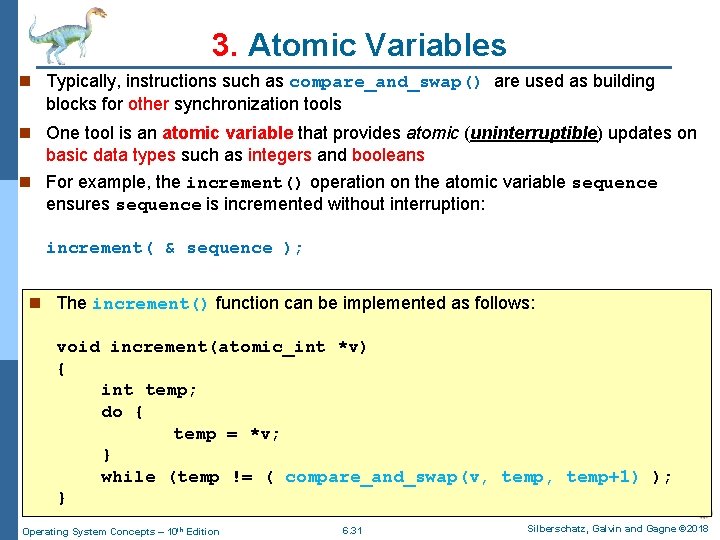

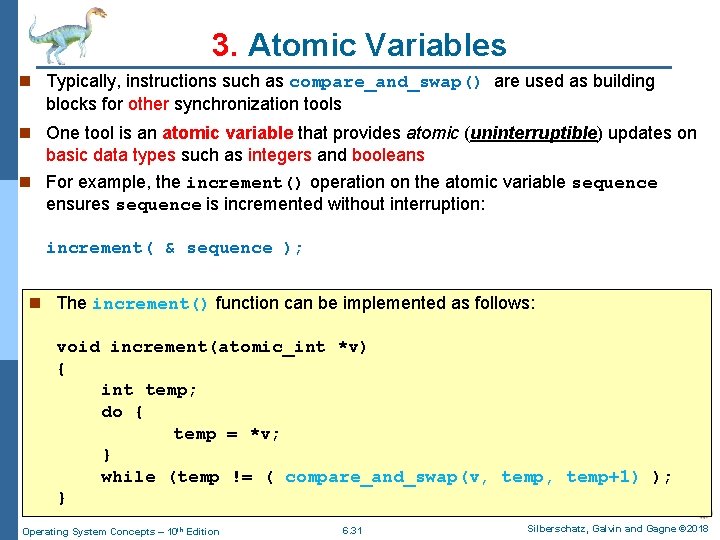

3. Atomic Variables

- Typically, instructions such as compare_and_swap() are used as building blocks for other synchronization tools
- One such tool is an atomic variable, which provides atomic (uninterruptible) updates on basic data types such as integers and booleans
- For example, the increment() operation on the atomic variable sequence ensures that sequence is incremented without interruption:

    increment(&sequence);

- The increment() function can be implemented as follows:

    void increment(atomic_int *v) {
        int temp;
        do {
            temp = *v;
        } while (temp != compare_and_swap(v, temp, temp + 1));
    }
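A runnable variant of the retry loop above, again assuming C11 `<stdatomic.h>`. It uses `atomic_compare_exchange_weak`, which reloads `temp` with the current value whenever the exchange fails, so the loop retries with fresh data. `increment_demo` is a hypothetical helper for illustration.

```c
#include <stdatomic.h>

/* Atomically add 1 to *v using a compare-and-swap retry loop. */
void increment(atomic_int *v) {
    int temp = atomic_load(v);
    /* On failure the call stores the current value of *v into temp,
     * so the next attempt computes temp + 1 from up-to-date data. */
    while (!atomic_compare_exchange_weak(v, &temp, temp + 1))
        ;
}

/* Increment a fresh counter three times and return its value. */
int increment_demo(void) {
    atomic_int sequence;
    atomic_init(&sequence, 0);
    for (int k = 0; k < 3; k++)
        increment(&sequence);
    return atomic_load(&sequence);
}
```

In everyday code the library call `atomic_fetch_add(v, 1)` does the same job; the explicit loop is shown only because it mirrors the construction described above.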

Higher-Level Software Tools to the CS Problem

1. Mutex Locks
2. Semaphores
3. Monitors

Software-Based Tools for CS

- The hardware-based solutions to the critical-section problem presented so far are:
  - complicated
  - generally inaccessible to application programmers
- Instead, OS designers build higher-level software tools to solve the critical-section problem
- Examples:
  1. Mutex locks
  2. Semaphores
  3. Monitors

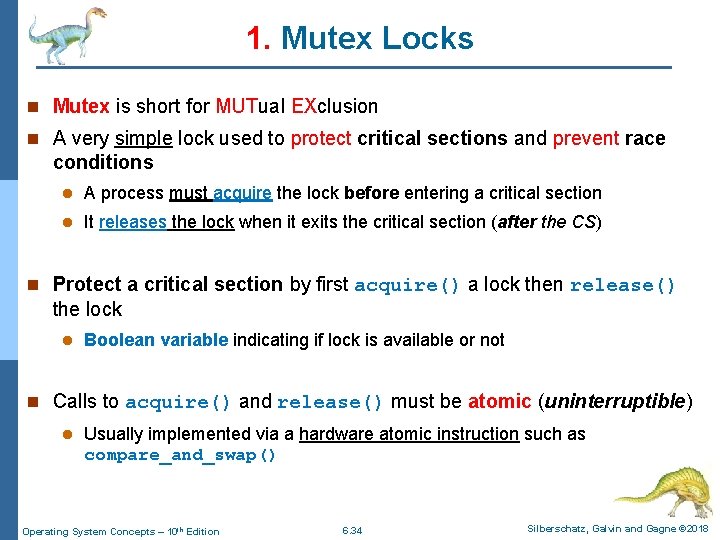

1. Mutex Locks

- Mutex is short for MUTual EXclusion
- A very simple lock used to protect critical sections and prevent race conditions
  - A process must acquire the lock before entering a critical section
  - It releases the lock when it exits the critical section
- Protect a critical section by first calling acquire() on a lock and then calling release() on it
  - A boolean variable indicates whether the lock is available or not
- Calls to acquire() and release() must be atomic (uninterruptible)
  - Usually implemented via a hardware atomic instruction such as compare_and_swap()

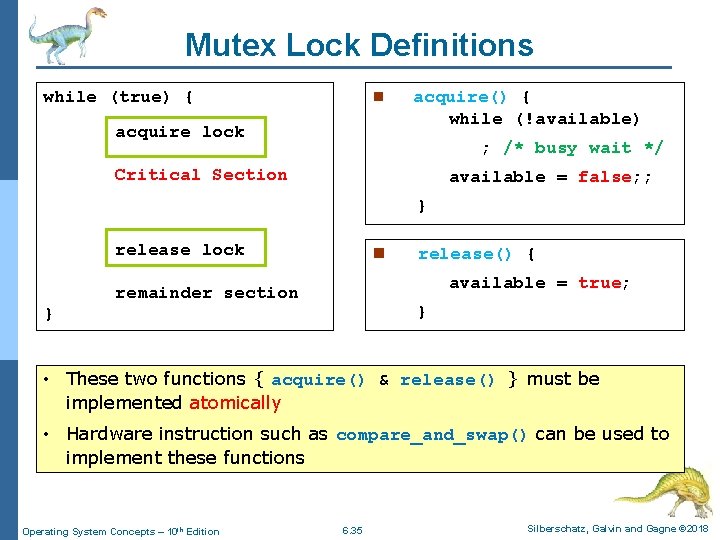

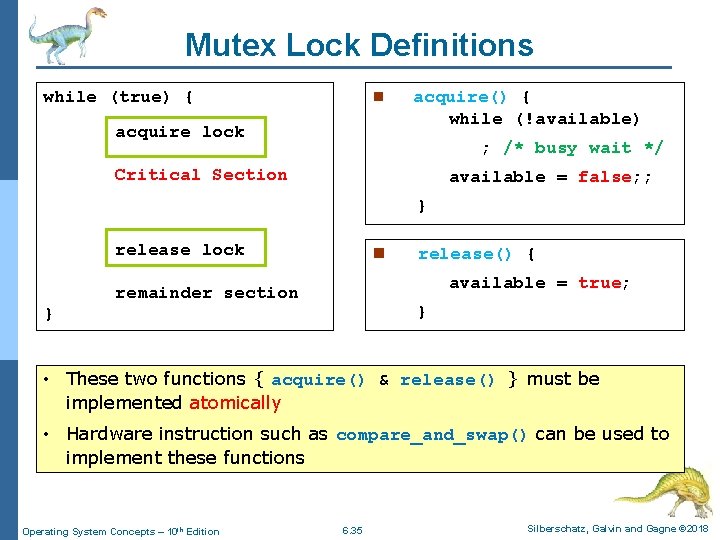

Mutex Lock Definitions

Definitions of acquire() and release():

    acquire() {
        while (!available)
            ;  /* busy wait */
        available = false;
    }

    release() {
        available = true;
    }

Usage:

    while (true) {
        acquire lock
            critical section
        release lock
            remainder section
    }

- These two functions, acquire() and release(), must be implemented atomically
- A hardware instruction such as compare_and_swap() can be used to implement these functions
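One way the atomicity requirement could be met is to fold the test of `available` and the assignment `available = false` into a single compare-and-swap. The sketch below assumes C11 atomics; the type `simple_mutex` and the helper `try_acquire` are hypothetical names introduced for illustration, and real mutex implementations differ.

```c
#include <stdatomic.h>
#include <stdbool.h>

typedef struct {
    atomic_bool available;   /* true when the lock is free */
} simple_mutex;

void mutex_init(simple_mutex *m) {
    atomic_init(&m->available, true);
}

/* One atomic step: if available is true, set it to false and succeed. */
bool try_acquire(simple_mutex *m) {
    bool expected = true;
    return atomic_compare_exchange_strong(&m->available, &expected, false);
}

void acquire(simple_mutex *m) {
    while (!try_acquire(m))
        ;  /* busy wait */
}

void release(simple_mutex *m) {
    atomic_store(&m->available, true);
}

/* Single-threaded walk-through: a held lock cannot be taken twice. */
int mutex_demo(void) {
    simple_mutex m;
    mutex_init(&m);
    acquire(&m);
    bool second = try_acquire(&m);   /* false: lock is held       */
    release(&m);
    bool third = try_acquire(&m);    /* true: lock is free again  */
    release(&m);
    return !second && third;
}
```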

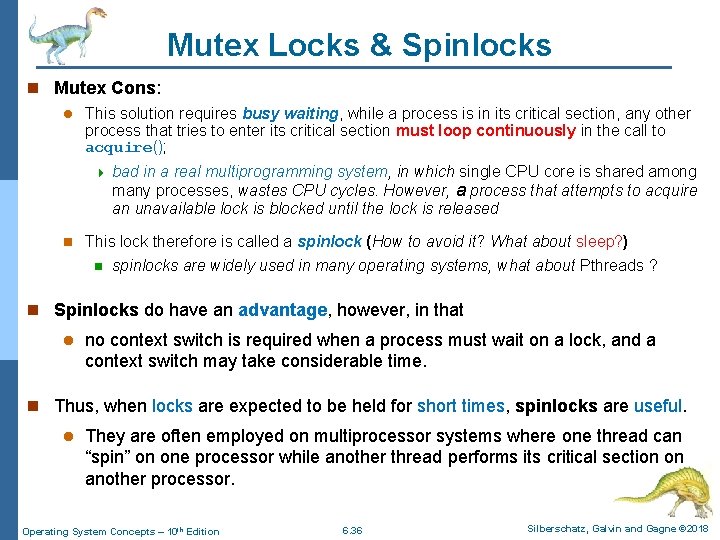

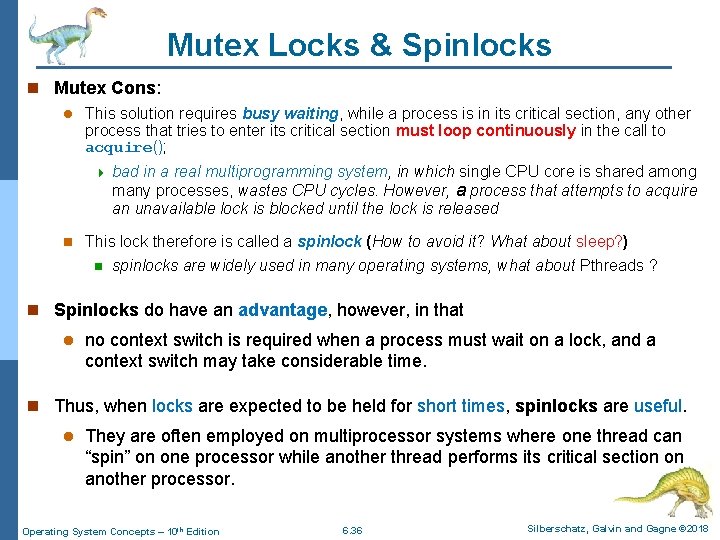

Mutex Locks & Spinlocks

- Disadvantage of this mutex implementation:
  - It requires busy waiting: while a process is in its critical section, any other process that tries to enter its critical section must loop continuously in the call to acquire()
    - This is bad in a real multiprogramming system, in which a single CPU core is shared among many processes, because it wastes CPU cycles
  - A process that attempts to acquire an unavailable lock spins (loops) until the lock is released; this type of lock is therefore called a spinlock (How can spinning be avoided? What about sleeping?)
- Spinlocks are widely used in many operating systems. What about Pthreads?
- Spinlocks do have an advantage, however:
  - No context switch is required when a process must wait on a lock, and a context switch may take considerable time
- Thus, when locks are expected to be held for short times, spinlocks are useful
  - They are often employed on multiprocessor systems, where one thread can “spin” on one processor while another thread performs its critical section on another processor

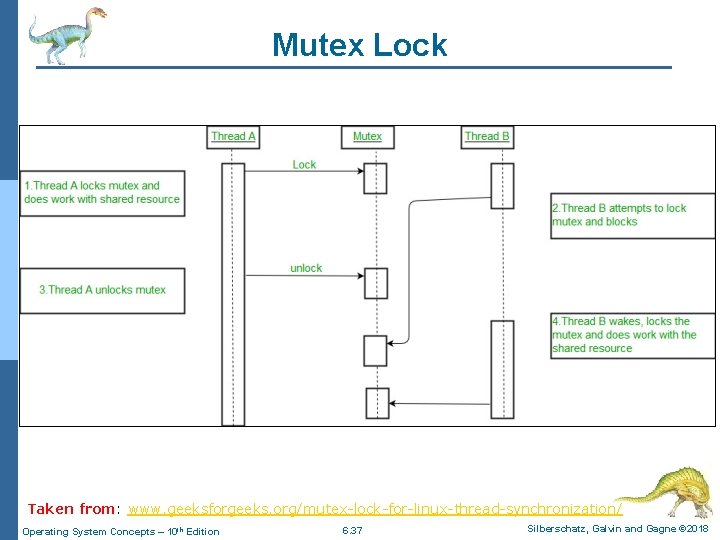

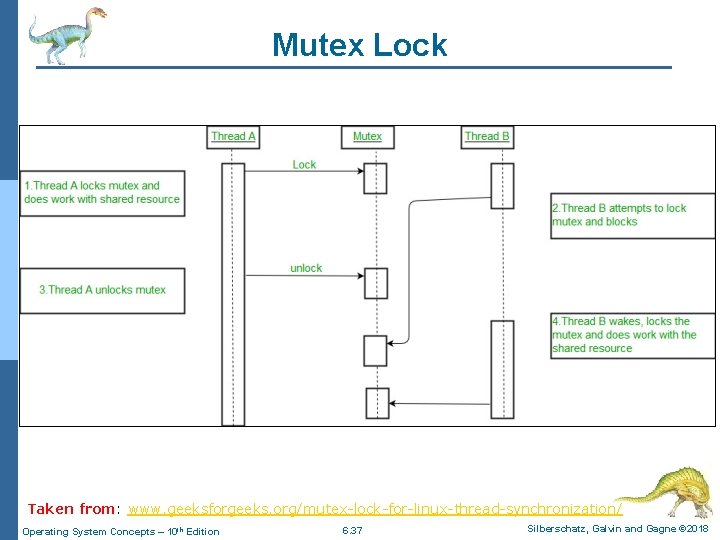

Mutex Lock

Taken from: www.geeksforgeeks.org/mutex-lock-for-linux-thread-synchronization/

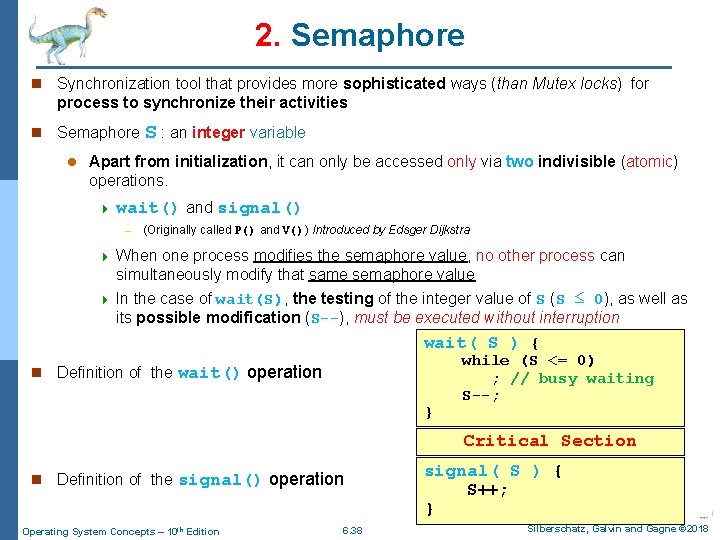

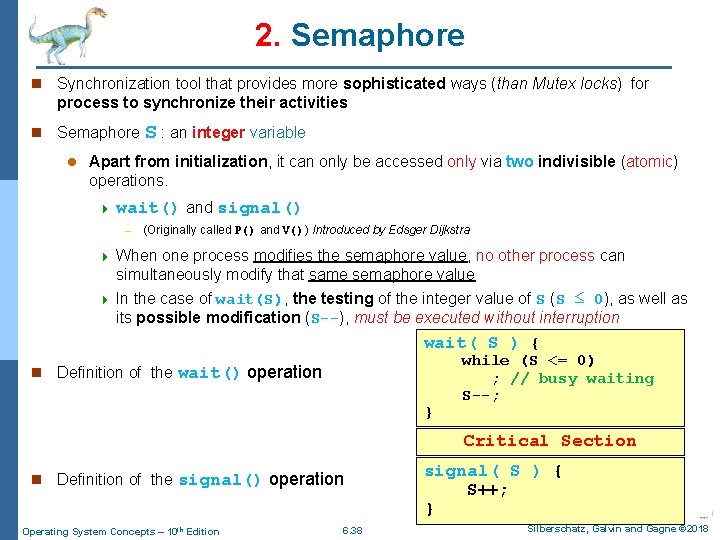

2. Semaphore

- A synchronization tool that provides more sophisticated ways (than mutex locks) for processes to synchronize their activities
- Semaphore S: an integer variable
  - Apart from initialization, it can be accessed only via two indivisible (atomic) operations: wait() and signal()
    - Originally called P() and V()
    - Introduced by Edsger Dijkstra
  - When one process modifies the semaphore value, no other process can simultaneously modify that same semaphore value
  - In the case of wait(S), the testing of the integer value of S (S ≤ 0), as well as its possible modification (S--), must be executed without interruption
- Definition of the wait() operation:

    wait(S) {
        while (S <= 0)
            ;  // busy waiting
        S--;
    }

- Definition of the signal() operation:

    signal(S) {
        S++;
    }
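The requirement that the test of S and the decrement happen without interruption can be sketched with a compare-and-swap loop: the decrement is applied only if the value is still the positive value that was tested. This assumes C11 atomics; `spin_semaphore`, `spin_try_wait`, `spin_wait`, and `spin_signal` are hypothetical names, not a standard API.

```c
#include <stdatomic.h>
#include <stdbool.h>

typedef atomic_int spin_semaphore;

/* Test S > 0 and decrement as one atomic step; false if S <= 0. */
bool spin_try_wait(spin_semaphore *S) {
    int v = atomic_load(S);
    while (v > 0) {
        if (atomic_compare_exchange_weak(S, &v, v - 1))
            return true;
        /* v now holds the current value; the loop re-tests it */
    }
    return false;
}

/* wait(S): busy-wait until the atomic test-and-decrement succeeds. */
void spin_wait(spin_semaphore *S) {
    while (!spin_try_wait(S))
        ;  /* busy waiting */
}

/* signal(S): atomic increment. */
void spin_signal(spin_semaphore *S) {
    atomic_fetch_add(S, 1);
}

/* Single-threaded walk-through with a semaphore initialized to 2. */
int semaphore_demo(void) {
    spin_semaphore S;
    atomic_init(&S, 2);
    spin_wait(&S);
    spin_wait(&S);                        /* S is now 0                   */
    bool blocked = !spin_try_wait(&S);    /* a third wait() would spin    */
    spin_signal(&S);                      /* S is 1                       */
    bool ok = spin_try_wait(&S);          /* succeeds, S back to 0        */
    return blocked && ok && atomic_load(&S) == 0;
}
```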

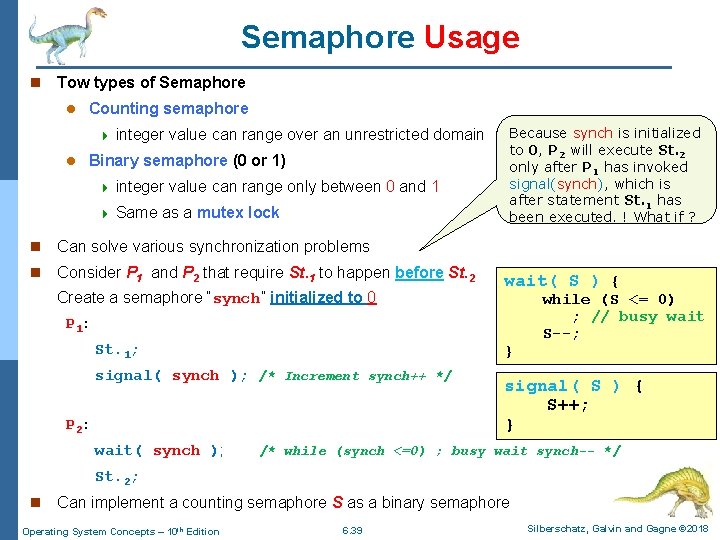

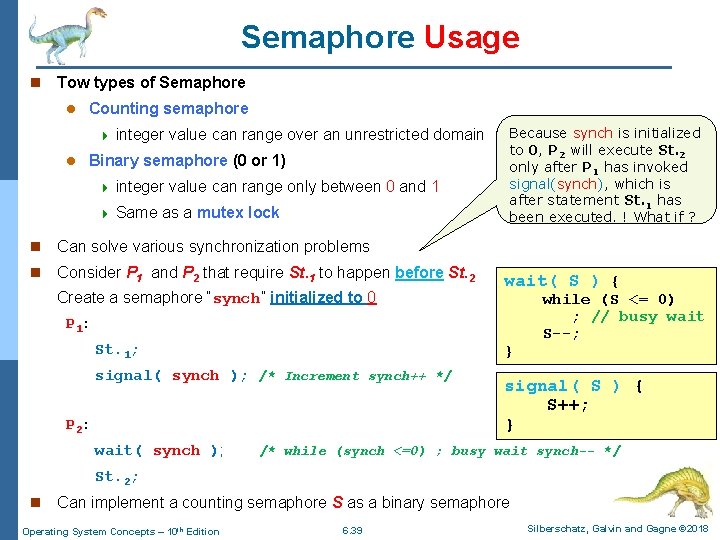

Semaphore Usage

- Two types of semaphore:
  - Counting semaphore: the integer value can range over an unrestricted domain
  - Binary semaphore: the integer value can range only between 0 and 1
    - Behaves similarly to a mutex lock
- Semaphores can solve various synchronization problems
- Consider P1 and P2, which require statement St.1 to happen before statement St.2
  - Create a semaphore synch initialized to 0

    P1:
        St.1;
        signal(synch);   /* synch++ */

    P2:
        wait(synch);     /* while (synch <= 0) ; busy wait, then synch-- */
        St.2;

  - Because synch is initialized to 0, P2 will execute St.2 only after P1 has invoked signal(synch), which is after statement St.1 has been executed
- A counting semaphore S can be implemented using binary semaphores
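The ordering guarantee can be checked with a small single-threaded simulation in which each process is a step function and a hand-written "schedule" decides who runs. Everything here is a hypothetical illustration: `p1_step`, `p2_step`, and the `order` log are assumptions, and a plain `int` stands in for the semaphore because only one thread is involved.

```c
#include <stdbool.h>
#include <string.h>

static int synch = 0;        /* semaphore value, initialized to 0  */
static char order[8] = "";   /* log of which statements have run   */

static void log_stmt(char c) {
    size_t n = strlen(order);
    if (n + 1 < sizeof order) {
        order[n] = c;
        order[n + 1] = '\0';
    }
}

/* P1: St.1; signal(synch); */
void p1_step(void) {
    log_stmt('1');           /* St.1 */
    synch++;                 /* signal(synch) */
}

/* P2: wait(synch); St.2;  Returns false if P2 would still be waiting. */
bool p2_step(void) {
    if (synch <= 0)
        return false;        /* wait(synch) cannot complete yet */
    synch--;
    log_stmt('2');           /* St.2 */
    return true;
}

/* Schedule P2 first, then P1, then P2 again; returns the statement log. */
const char *ordering_demo(void) {
    bool early = p2_step();  /* P2 runs first but cannot pass wait() */
    p1_step();
    bool late = p2_step();   /* now P2 proceeds */
    return (!early && late) ? order : "unexpected";
}
```

Even though P2 is scheduled first, the log shows St.1 before St.2.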

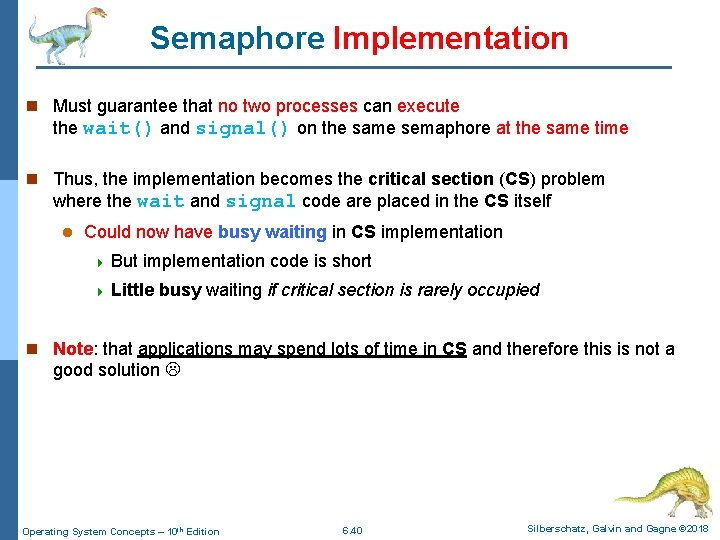

Semaphore Implementation

- Must guarantee that no two processes can execute wait() and signal() on the same semaphore at the same time
- Thus, the implementation itself becomes a critical-section (CS) problem, where the wait and signal code are placed in the critical section
  - We could now have busy waiting in the critical-section implementation
    - But the implementation code is short
    - There is little busy waiting if the critical section is rarely occupied
- Note that applications may spend lots of time in critical sections, and therefore this is not a good solution

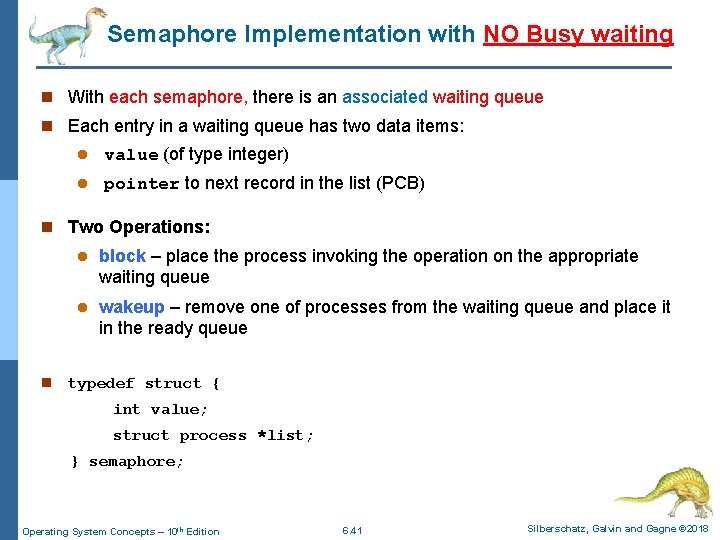

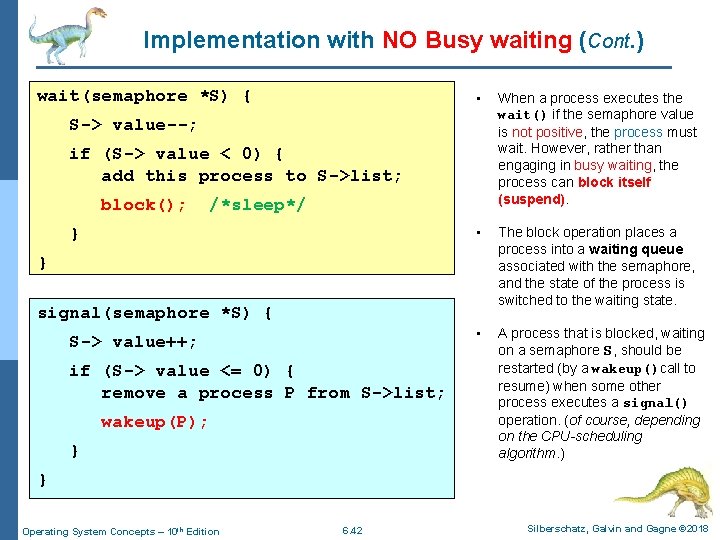

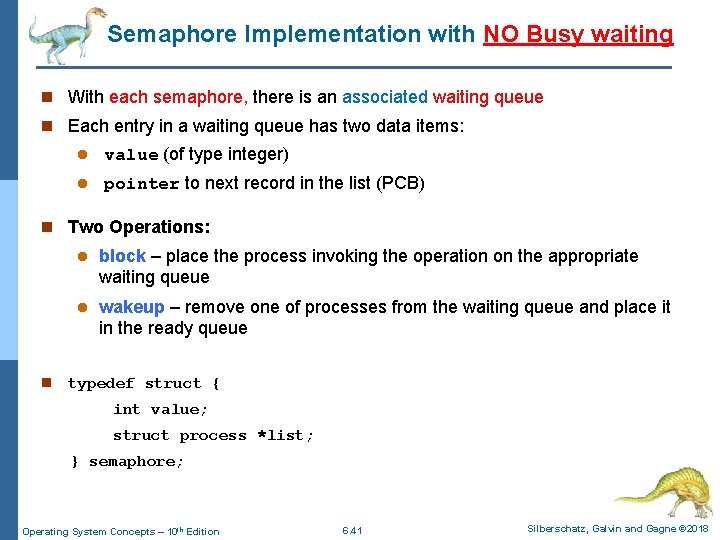

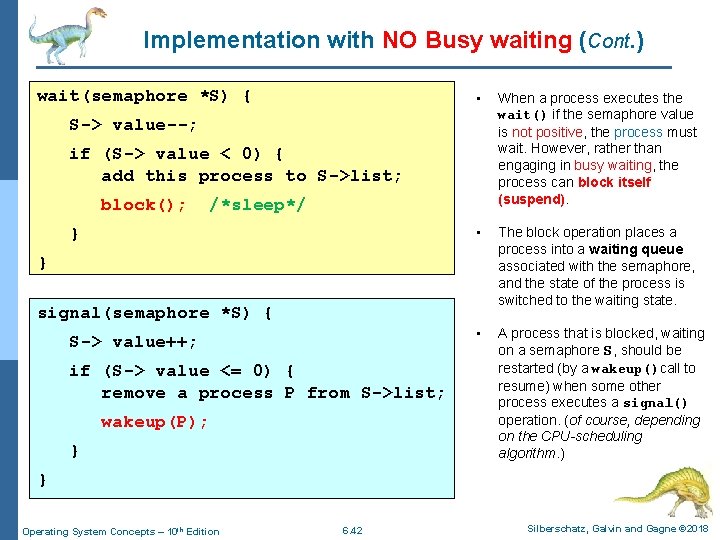

Semaphore Implementation with No Busy Waiting

- With each semaphore, there is an associated waiting queue
- Each entry in a waiting queue has two data items:
  - value (of type integer)
  - pointer to the next record in the list (PCB)
- Two operations:
  - block: place the process invoking the operation on the appropriate waiting queue
  - wakeup: remove one of the processes from the waiting queue and place it in the ready queue

Definition of the semaphore type:

    typedef struct {
        int value;
        struct process *list;
    } semaphore;

Implementation with No Busy Waiting (Cont.)

- When a process executes wait() and the semaphore value is not positive, the process must wait. However, rather than engaging in busy waiting, the process can block (suspend) itself.
- The block operation places a process into a waiting queue associated with the semaphore, and the state of the process is switched to the waiting state.
- A process that is blocked, waiting on a semaphore S, should be restarted (by a wakeup() call) when some other process executes a signal() operation. When it actually runs again depends on the CPU-scheduling algorithm.

Definitions of wait() and signal():

    wait(semaphore *S) {
        S->value--;
        if (S->value < 0) {
            add this process to S->list;
            block();  /* sleep */
        }
    }

    signal(semaphore *S) {
        S->value++;
        if (S->value <= 0) {
            remove a process P from S->list;
            wakeup(P);
        }
    }
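The bookkeeping in these two operations can be traced with a small simulation. The sketch below is hypothetical throughout: processes are represented by integer pids, block() and wakeup() are reduced to return values, the waiting list is a fixed-size FIFO array (the slides do not specify a queueing order), and `MAX_WAITERS` is an arbitrary limit that is not checked.

```c
#include <stdbool.h>

#define MAX_WAITERS 8   /* assumed upper bound on blocked processes */

typedef struct {
    int value;
    int list[MAX_WAITERS];   /* FIFO queue of blocked pids */
    int head, count;
} semaphore;

/* wait(): returns true if the calling process must block. */
bool sem_wait_sim(semaphore *S, int pid) {
    S->value--;
    if (S->value < 0) {
        S->list[(S->head + S->count) % MAX_WAITERS] = pid;  /* enqueue */
        S->count++;
        return true;          /* stands in for block() */
    }
    return false;
}

/* signal(): returns the pid that is woken, or -1 if nobody was waiting. */
int sem_signal_sim(semaphore *S) {
    S->value++;
    if (S->value <= 0) {
        int pid = S->list[S->head];                          /* dequeue */
        S->head = (S->head + 1) % MAX_WAITERS;
        S->count--;
        return pid;           /* stands in for wakeup(P) */
    }
    return -1;
}

/* Three processes contend for a semaphore initialized to 1. */
int blocking_demo(void) {
    semaphore S = { .value = 1 };
    bool b1 = sem_wait_sim(&S, 1);    /* value 0: process 1 proceeds  */
    bool b2 = sem_wait_sim(&S, 2);    /* value -1: process 2 blocks   */
    bool b3 = sem_wait_sim(&S, 3);    /* value -2: process 3 blocks   */
    int woken = sem_signal_sim(&S);   /* value -1: wakes process 2    */
    /* Encode (woken pid, number still waiting) as a two-digit number. */
    return (!b1 && b2 && b3) ? woken * 10 + (-S.value) : -1;
}
```

Note that a negative `value` equals the number of processes still waiting, which is why signal() tests `value <= 0` after the increment.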

Problems with Semaphores

- Although semaphores provide a convenient and effective mechanism for process synchronization, using them incorrectly can result in timing errors that are difficult to detect
- Suppose all processes share a binary semaphore variable mutex, initialized to 1
  - Each process must execute wait(mutex) before entering the critical section and signal(mutex) afterward
  - If this sequence is not observed, two processes may be in their critical sections simultaneously
- Examples of incorrect use of semaphore operations:
  - Example 1: a program interchanges the order of wait() and signal()

        signal(mutex);
        ... critical section ...
        wait(mutex);

    Several processes may be executing in their critical sections simultaneously, violating mutual exclusion
  - Example 2: a program replaces signal(mutex) with wait(mutex)

        wait(mutex);
        ... critical section ...
        wait(mutex);

    The process will permanently block on the second call to wait()
  - Example 3: a process omits wait(mutex) and/or signal(mutex)
    - Either mutual exclusion is violated or the process will permanently block
- These, and others, are examples of what can occur when semaphores and other synchronization tools are used incorrectly

Liveness

Liveness

- Processes may have to wait indefinitely while trying to acquire a synchronization tool such as a mutex lock or semaphore
- Waiting indefinitely violates the progress and bounded-waiting criteria discussed at the beginning of this chapter
- Liveness refers to a set of properties that a system must satisfy to ensure that processes make progress
- Indefinite waiting is an example of a liveness failure

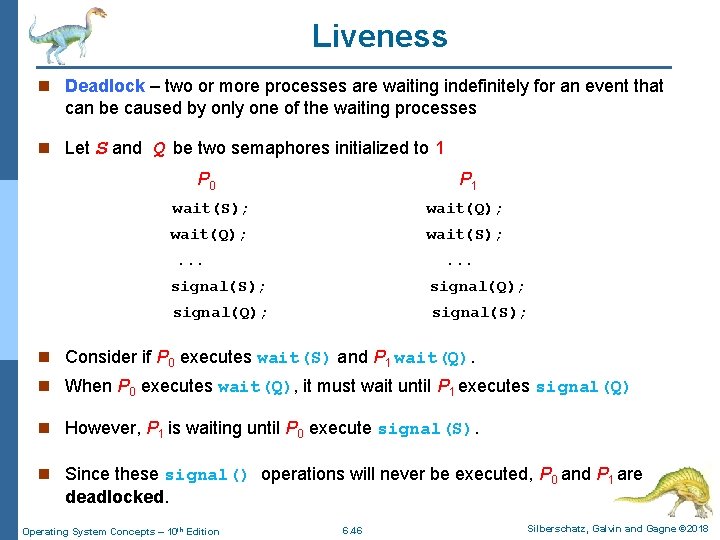

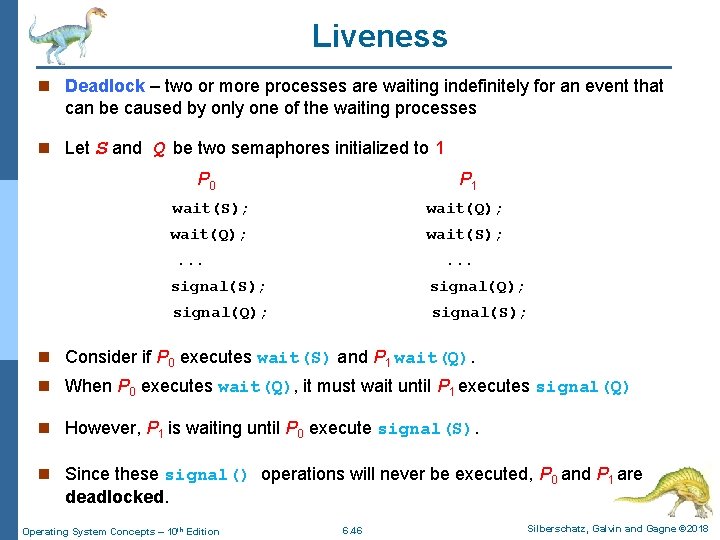

Liveness

- Deadlock: two or more processes are waiting indefinitely for an event that can be caused by only one of the waiting processes
- Let S and Q be two semaphores initialized to 1

    P0              P1
    wait(S);        wait(Q);
    wait(Q);        wait(S);
    ...             ...
    signal(S);      signal(Q);
    signal(Q);      signal(S);

- Suppose P0 executes wait(S) and P1 executes wait(Q)
- When P0 executes wait(Q), it must wait until P1 executes signal(Q)
- However, P1 is waiting until P0 executes signal(S)
- Since these signal() operations will never be executed, P0 and P1 are deadlocked
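The four wait() calls in this scenario can be traced with a tiny simulation in which a wait that would block simply reports failure. The helper `try_wait` and the function `deadlock_demo` are hypothetical, and plain integers stand in for the semaphores since the trace runs on one thread.

```c
#include <stdbool.h>

/* Non-blocking stand-in for wait(): false means the caller would block. */
static bool try_wait(int *sem) {
    if (*sem <= 0)
        return false;
    (*sem)--;
    return true;
}

/* Replays the interleaving described above; true if both are stuck. */
bool deadlock_demo(void) {
    int S = 1, Q = 1;
    bool p0_has_S = try_wait(&S);    /* P0: wait(S) succeeds        */
    bool p1_has_Q = try_wait(&Q);    /* P1: wait(Q) succeeds        */
    bool p0_gets_Q = try_wait(&Q);   /* P0: wait(Q) would block     */
    bool p1_gets_S = try_wait(&S);   /* P1: wait(S) would block     */
    /* Each process holds one semaphore and waits for the other one. */
    return p0_has_S && p1_has_Q && !p0_gets_Q && !p1_gets_S;
}
```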

Liveness

- Other liveness hazards:
  - Starvation: indefinite blocking
    - A process may never be removed from the semaphore queue in which it is suspended
  - Priority inversion: a scheduling problem that arises when a lower-priority process holds a lock needed by a higher-priority process
    - Solved via a priority-inheritance protocol

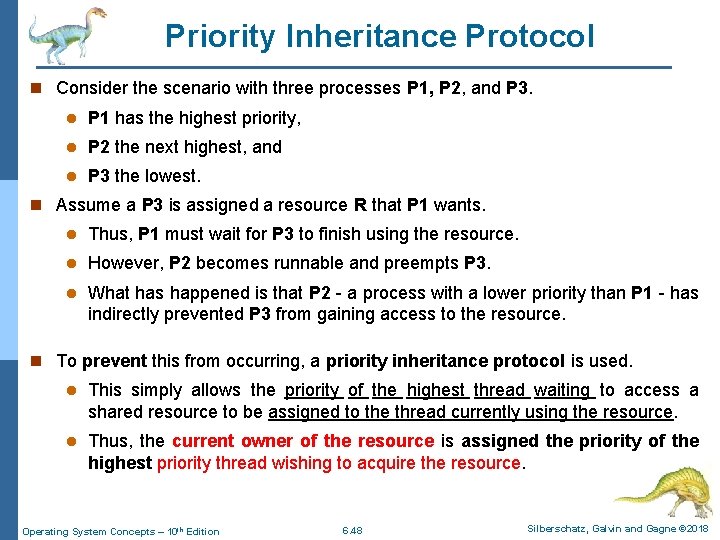

Priority Inheritance Protocol

- Consider the scenario with three processes P1, P2, and P3
  - P1 has the highest priority,
  - P2 the next highest, and
  - P3 the lowest
- Assume that P3 holds a resource R that P1 wants
  - Thus, P1 must wait for P3 to finish using the resource
  - However, P2 becomes runnable and preempts P3
  - What has happened is that P2, a process with a lower priority than P1, has indirectly prevented P1 from gaining access to the resource
- To prevent this from occurring, a priority-inheritance protocol is used
  - The highest priority among the threads waiting to access a shared resource is assigned to the thread currently using the resource
  - Thus, the current owner of the resource runs at the priority of the highest-priority thread wishing to acquire the resource
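The P1/P2/P3 scenario can be traced with a small simulation. All names below (`task`, `resource`, `request`, `release_resource`, `pick_next`) are hypothetical, larger numbers are assumed to mean higher priority, and the "scheduler" simply picks the runnable task with the highest effective priority. The sketch also restores the owner's original priority on release, which is the usual companion rule to inheritance.

```c
#include <stddef.h>

typedef struct {
    int base_priority;        /* assumption: larger means more important */
    int effective_priority;   /* may be raised by inheritance            */
    int runnable;
} task;

typedef struct {
    task *owner;              /* NULL when the resource is free */
} resource;

/* A task asks for r. If r is held, the owner inherits the waiter's
 * priority when that is higher, and the waiter blocks. */
void request(resource *r, task *waiter) {
    if (r->owner == NULL) {
        r->owner = waiter;
        return;
    }
    if (waiter->effective_priority > r->owner->effective_priority)
        r->owner->effective_priority = waiter->effective_priority;
    waiter->runnable = 0;
}

/* The owner gives up r and drops back to its original priority. */
void release_resource(resource *r) {
    r->owner->effective_priority = r->owner->base_priority;
    r->owner = NULL;
}

/* Pick the runnable task with the highest effective priority. */
task *pick_next(task *tasks[], int n) {
    task *best = NULL;
    for (int k = 0; k < n; k++)
        if (tasks[k]->runnable &&
            (best == NULL || tasks[k]->effective_priority > best->effective_priority))
            best = tasks[k];
    return best;
}

int inheritance_demo(void) {
    task p1 = {3, 3, 1}, p2 = {2, 2, 1}, p3 = {1, 1, 1};
    task *all[] = {&p1, &p2, &p3};
    resource R = {NULL};

    request(&R, &p3);         /* P3 acquires R                       */
    request(&R, &p1);         /* P1 blocks; P3 inherits priority 3   */
    int p3_runs_first = (pick_next(all, 3) == &p3);   /* not P2      */

    release_resource(&R);     /* P3 reverts to its base priority     */
    int reverted = (p3.effective_priority == p3.base_priority);
    return p3_runs_first && reverted;
}
```

Without the inheritance step in `request`, `pick_next` would choose P2 while P1 is blocked, which is exactly the inversion described above.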

End of Chapter 6