Chapter 5 Process Synchronization Silberschatz Galvin and Gagne

Chapter 5: Process Synchronization
Silberschatz, Galvin and Gagne © 2009, Operating System Concepts – 9th Edition. Edited by Khoury, 2015

Module 5: Process Synchronization

- Background
- The Critical-Section Problem
- Peterson’s Solution
- Synchronization Hardware
- Semaphores
- Classic Problems of Synchronization
- Examples

Objectives

- To introduce the critical-section problem, whose solutions can be used to ensure the consistency of shared data
- To present both software and hardware solutions to the critical-section problem

Background

- Multitasking: multiple cooperating processes running concurrently
  - The need to access shared data can lead to data inconsistency
  - The OS needs to maintain data consistency
  - This requires mechanisms to ensure the orderly execution of cooperating processes
- We saw one in Chapter 3: the producer-consumer bounded buffer
  - We’ll modify it to keep track of the count of items produced

Shared declarations:

    #define BUFFER_SIZE 10
    typedef struct { ... } item;
    item buffer[BUFFER_SIZE];
    int in = 0;
    int out = 0;
    int counter = 0;

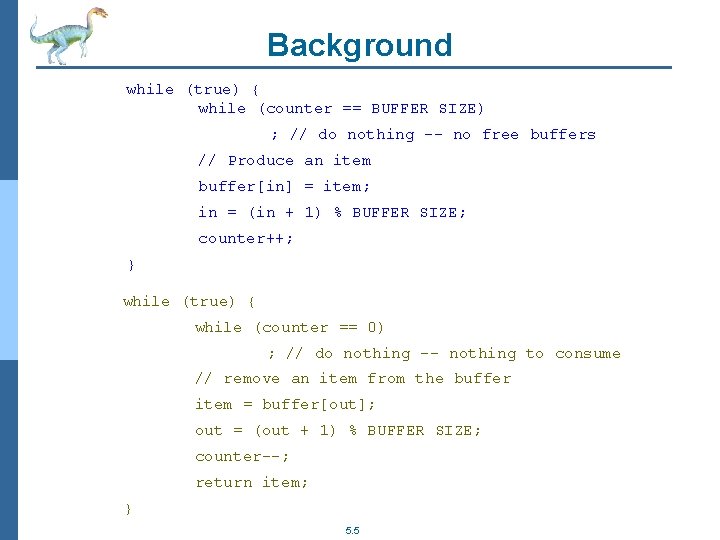

Background

Producer:

    while (true) {
        while (counter == BUFFER_SIZE)
            ; // do nothing -- no free buffers
        // produce an item in next_produced
        buffer[in] = next_produced;
        in = (in + 1) % BUFFER_SIZE;
        counter++;
    }

Consumer:

    while (true) {
        while (counter == 0)
            ; // do nothing -- nothing to consume
        // remove an item from the buffer
        next_consumed = buffer[out];
        out = (out + 1) % BUFFER_SIZE;
        counter--;
        // consume the item in next_consumed
    }


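Background

Producer’s counter++ could be implemented as:

    MOV AX, [counter]
    INC AX
    MOV [counter], AX

Consumer’s counter-- could be implemented as:

    MOV AX, [counter]
    DEC AX
    MOV [counter], AX

Concurrent execution with counter = 5 initially, each process preempted after 2 CPU instructions (each process keeps its own saved copy of AX):

    Producer: MOV AX, [counter]     AX = 5
    Producer: INC AX                AX = 6
    Consumer: MOV AX, [counter]     AX = 5
    Consumer: DEC AX                AX = 4
    Producer: MOV [counter], AX     counter = 6
    Consumer: MOV [counter], AX     counter = 4

The final value is 4 (or 6 if the two stores run in the opposite order), although one increment and one decrement should leave counter at 5.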
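The interleaving on this slide can be replayed deterministically. The following is a minimal sketch, not part of the original slides: the function name `replay_interleaving` and the two local variables standing in for each process's saved copy of AX are illustrative assumptions.

```c
#include <assert.h>

/* Replays the slide's interleaving with explicit "registers".
 * producer_ax and consumer_ax model each process's private copy of AX,
 * as saved and restored across a context switch. */
int replay_interleaving(int counter) {
    int producer_ax, consumer_ax;

    producer_ax = counter;   /* producer: MOV AX, [counter] */
    producer_ax += 1;        /* producer: INC AX            */
    /* producer is preempted here */
    consumer_ax = counter;   /* consumer: MOV AX, [counter] */
    consumer_ax -= 1;        /* consumer: DEC AX            */
    /* consumer is preempted here */
    counter = producer_ax;   /* producer: MOV [counter], AX */
    counter = consumer_ax;   /* consumer: MOV [counter], AX */
    return counter;
}
```

Calling `replay_interleaving(5)` returns 4: the producer's update is overwritten, so one increment is lost.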

Background

- Race condition: when several processes manipulate the same data, and the outcome depends on the particular (and often unpredictable) execution order
  - Unavoidable consequence of multitasking and multithreading with shared data and resources
  - Made worse by multicore systems, where several threads of a process are literally running at the same time using the same global data
- Solution: process synchronization, finding ways to coordinate multiple cooperating processes so that they do not interfere with each other

Critical Section

- A process’ critical section is the segment of code in which it modifies common variables
- Solving the race condition requires ensuring that no two processes can execute their critical sections at once
  - Designing a protocol to do this is the critical section problem
- Basic idea:
  - Before running the critical section, request permission and wait for it in an entry section
  - After running the critical section, release permission in an exit section
  - The rest of the program after the exit section is the remainder section

General structure of a process:

    do {
        entry section
            critical section
        exit section
            remainder section
    } while (true);

Critical Section

- Mutual Exclusion
  - If a process is executing in its critical section, then no other processes can be executing in their critical sections
- Progress
  - If no process is executing in its critical section and there exist some processes that wish to enter their critical section
  - The selection of the next process to enter its critical section cannot be postponed indefinitely
- Bounded Waiting
  - When a process requests to enter its critical section
  - There is a bound on the number of times other processes are allowed to enter before its request is granted

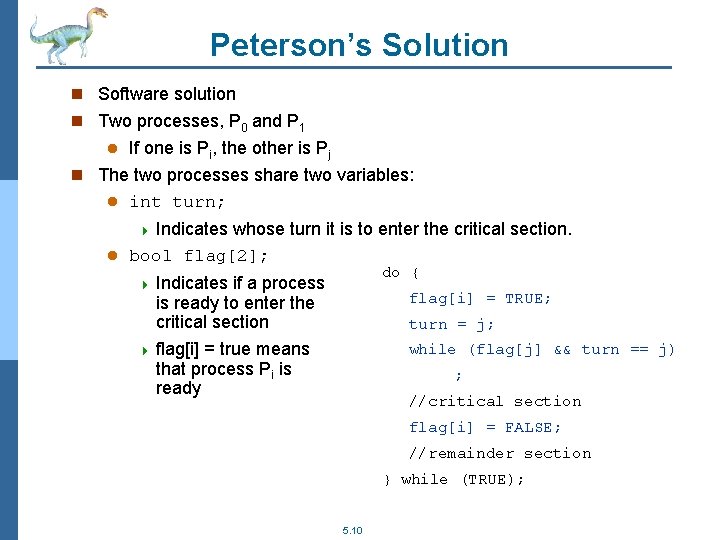

Peterson’s Solution

- Software solution
- Two processes, P0 and P1
  - If one is Pi, the other is Pj
- The two processes share two variables:
  - int turn;
    - Indicates whose turn it is to enter the critical section
  - bool flag[2];
    - Indicates if a process is ready to enter the critical section
    - flag[i] = true means that process Pi is ready

Structure of process Pi:

    do {
        flag[i] = TRUE;
        turn = j;
        while (flag[j] && turn == j)
            ;
        // critical section
        flag[i] = FALSE;
        // remainder section
    } while (TRUE);
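Peterson's algorithm as written assumes that loads and stores happen in program order, which modern compilers and CPUs do not guarantee for plain variables. The following is a minimal sketch under that caveat: it uses C11 sequentially consistent atomics for `flag` and `turn` and POSIX threads in place of processes. The names `worker`, `run_peterson_demo`, `shared_counter`, and `ITERATIONS` are illustrative assumptions, not part of the slides.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stdbool.h>

#define ITERATIONS 100000

static atomic_bool flag[2];   /* flag[i]: thread i is ready to enter */
static atomic_int turn;       /* which thread must wait if both are ready */
static long shared_counter;   /* plain variable protected by the protocol */

static void *worker(void *arg) {
    int i = *(int *)arg;
    int j = 1 - i;
    for (int k = 0; k < ITERATIONS; k++) {
        atomic_store(&flag[i], true);              /* entry section */
        atomic_store(&turn, j);
        while (atomic_load(&flag[j]) && atomic_load(&turn) == j)
            ;                                      /* busy wait */
        shared_counter++;                          /* critical section */
        atomic_store(&flag[i], false);             /* exit section */
    }
    return NULL;
}

/* Runs two threads through the protocol and returns the final counter. */
long run_peterson_demo(void) {
    pthread_t t[2];
    int id[2] = {0, 1};
    shared_counter = 0;
    atomic_store(&flag[0], false);
    atomic_store(&flag[1], false);
    atomic_store(&turn, 0);
    for (int i = 0; i < 2; i++)
        pthread_create(&t[i], NULL, worker, &id[i]);
    for (int i = 0; i < 2; i++)
        pthread_join(t[i], NULL);
    return shared_counter;
}
```

If mutual exclusion holds, `run_peterson_demo()` returns exactly `2 * ITERATIONS`, since no increment is lost.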

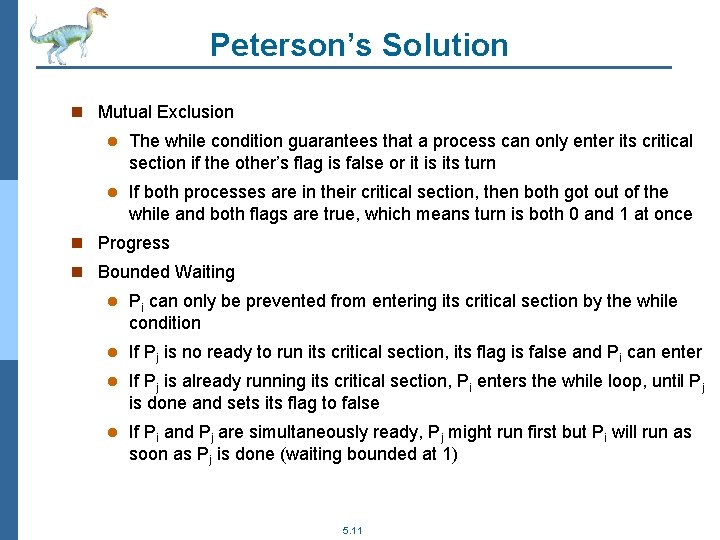

Peterson’s Solution

- Mutual Exclusion
  - The while condition guarantees that a process can only enter its critical section if the other’s flag is false or it is its turn
  - If both processes were in their critical sections, then both got out of the while and both flags are true, which means turn would be both 0 and 1 at once, which is impossible
- Progress and Bounded Waiting
  - Pi can only be prevented from entering its critical section by the while condition
  - If Pj is not ready to run its critical section, its flag is false and Pi can enter
  - If Pj is already running its critical section, Pi stays in the while loop until Pj is done and sets its flag to false
  - If Pi and Pj are simultaneously ready, Pj might run first but Pi will run as soon as Pj is done (waiting bounded at 1)

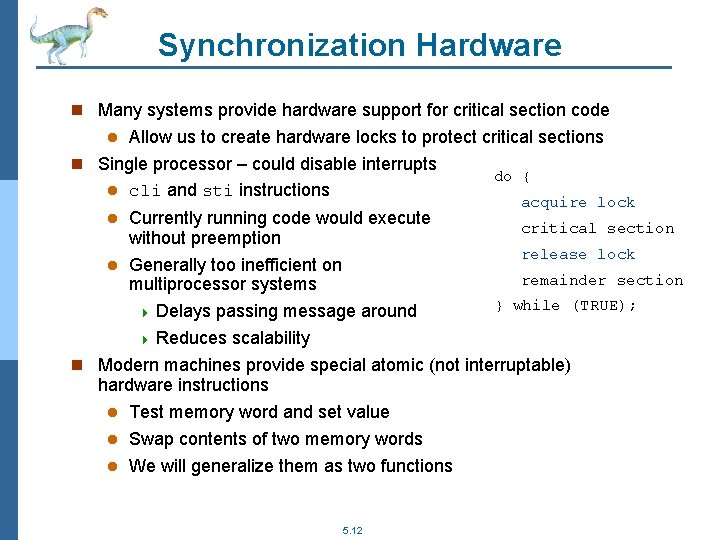

Synchronization Hardware

- Many systems provide hardware support for critical section code
  - Allows us to create hardware locks to protect critical sections
- Single processor: could disable interrupts
  - cli and sti instructions
  - Currently running code would execute without preemption
  - Generally too inefficient on multiprocessor systems
    - Delay from passing the message around to every processor
    - Reduces scalability
- Modern machines provide special atomic (not interruptible) hardware instructions
  - Test memory word and set value
  - Swap contents of two memory words
  - We will generalize them as two functions

General structure of a solution using locks:

    do {
        acquire lock
            critical section
        release lock
            remainder section
    } while (TRUE);

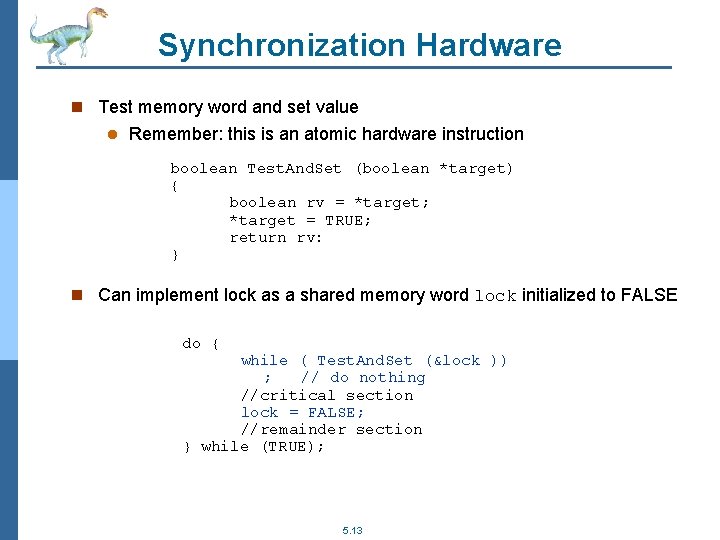

Synchronization Hardware

- Test memory word and set value
  - Remember: this is an atomic hardware instruction

Definition:

    boolean TestAndSet(boolean *target) {
        boolean rv = *target;
        *target = TRUE;
        return rv;
    }

- Can implement the lock as a shared memory word lock initialized to FALSE

Structure of a process:

    do {
        while (TestAndSet(&lock))
            ; // do nothing
        // critical section
        lock = FALSE;
        // remainder section
    } while (TRUE);
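In portable C, the closest standard counterpart to the TestAndSet instruction is `atomic_flag_test_and_set` from C11 `<stdatomic.h>`. The following is a minimal sketch of a spinlock built on it, with POSIX threads standing in for processes. The names `tas_lock`, `worker`, `run_tas_demo`, `NTHREADS`, and `ITERATIONS` are illustrative assumptions.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>

#define NTHREADS 4
#define ITERATIONS 50000

static atomic_flag tas_lock = ATOMIC_FLAG_INIT;  /* clear means unlocked (FALSE) */
static long shared_counter;                      /* protected by tas_lock */

static void *worker(void *arg) {
    (void)arg;
    for (int k = 0; k < ITERATIONS; k++) {
        /* Atomically sets the flag and returns its previous value:
         * a previous value of true means someone else holds the lock. */
        while (atomic_flag_test_and_set(&tas_lock))
            ;                                    /* do nothing (spin) */
        shared_counter++;                        /* critical section */
        atomic_flag_clear(&tas_lock);            /* lock = FALSE */
    }
    return NULL;
}

/* Starts NTHREADS workers and returns the final counter value. */
long run_tas_demo(void) {
    pthread_t t[NTHREADS];
    shared_counter = 0;
    for (int i = 0; i < NTHREADS; i++)
        pthread_create(&t[i], NULL, worker, NULL);
    for (int i = 0; i < NTHREADS; i++)
        pthread_join(t[i], NULL);
    return shared_counter;
}
```

With the lock in place, the result equals `NTHREADS * ITERATIONS`. Like the slide's version, this lock gives mutual exclusion but makes no fairness promise about which spinning thread wins.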

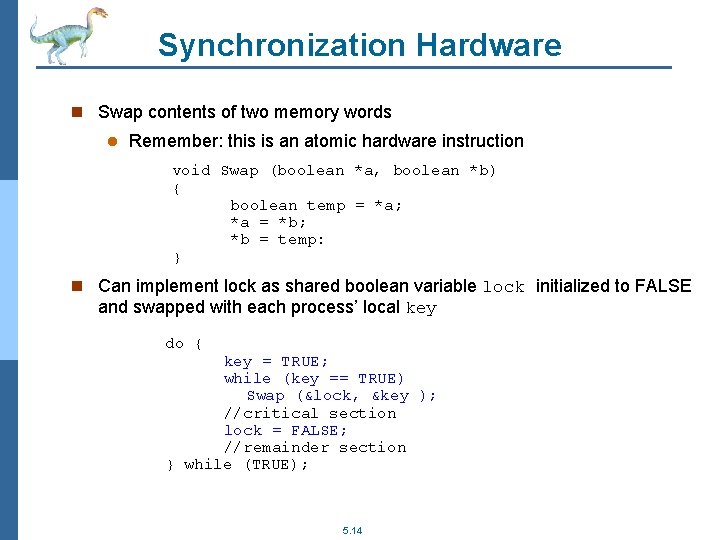

Synchronization Hardware

- Swap contents of two memory words
  - Remember: this is an atomic hardware instruction

Definition:

    void Swap(boolean *a, boolean *b) {
        boolean temp = *a;
        *a = *b;
        *b = temp;
    }

- Can implement the lock as a shared boolean variable lock initialized to FALSE and swapped with each process’ local key

Structure of a process:

    do {
        key = TRUE;
        while (key == TRUE)
            Swap(&lock, &key);
        // critical section
        lock = FALSE;
        // remainder section
    } while (TRUE);
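The Swap-based lock maps naturally onto `atomic_exchange` from C11 `<stdatomic.h>`, which writes a new value into a shared word and returns the old one in a single atomic step. The sketch below is one possible rendering; the names `swap_lock`, `try_acquire`, `acquire`, and `release` are illustrative assumptions. Only the shared word needs to be atomic, because `key` is local to the caller.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

/* Shared lock word; static storage starts out false (unlocked). */
static atomic_bool swap_lock;

/* One pass of the slide's loop body, Swap(&lock, &key) with key == TRUE.
 * Returns true if the caller now holds the lock. */
bool try_acquire(void) {
    bool key = true;
    key = atomic_exchange(&swap_lock, key);  /* lock <- TRUE, key <- old lock */
    return key == false;                     /* old value FALSE: lock was free */
}

/* Entry section: keep swapping until the old value comes back FALSE. */
void acquire(void) {
    while (!try_acquire())
        ;  /* spin */
}

/* Exit section: lock = FALSE. */
void release(void) {
    atomic_store(&swap_lock, false);
}
```

A first `try_acquire()` succeeds, a second one fails while the lock is held, and it succeeds again after `release()`.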

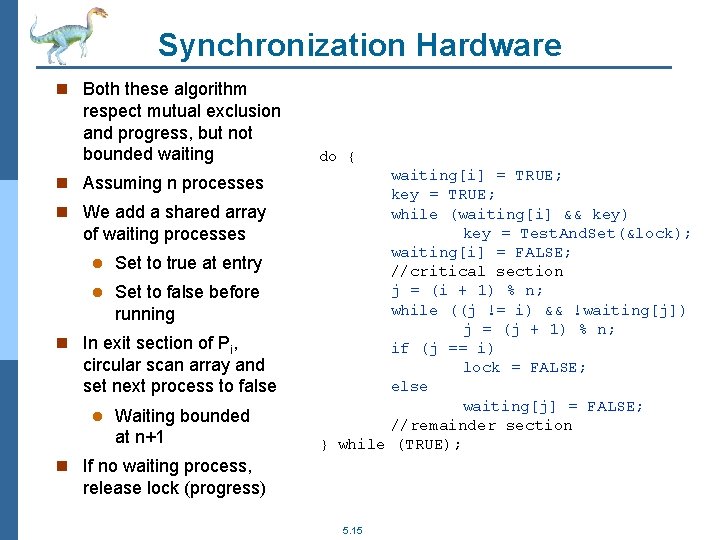

Synchronization Hardware

- Both of these algorithms satisfy mutual exclusion and progress, but not bounded waiting
- Assuming n processes
- We add a shared array of waiting processes
  - Set to true at entry
  - Set to false before running
- In the exit section of Pi, scan the array circularly and set the next waiting process to false
  - Waiting bounded at n - 1 turns
- If there is no waiting process, release the lock (progress)

Structure of process Pi:

    do {
        waiting[i] = TRUE;
        key = TRUE;
        while (waiting[i] && key)
            key = TestAndSet(&lock);
        waiting[i] = FALSE;
        // critical section
        j = (i + 1) % n;
        while ((j != i) && !waiting[j])
            j = (j + 1) % n;
        if (j == i)
            lock = FALSE;
        else
            waiting[j] = FALSE;
        // remainder section
    } while (TRUE);
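The exit-section scan is what makes waiting bounded, and it can be examined in isolation. The following single-threaded sketch is a simulation, not a working lock: `next_to_enter` reproduces the circular scan from the slide, and `handoffs_before` (a hypothetical helper, as are `MAX_PROCS` and the parameter names) counts how many other processes are handed the critical section before a given process, even when every process that leaves immediately asks to enter again.

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_PROCS 16

/* Exit-section scan for process i among n processes.
 * Returns the next waiting process in cyclic order, or -1 when
 * nobody is waiting (the real code would then set lock = FALSE). */
int next_to_enter(const bool waiting[], int n, int i) {
    int j = (i + 1) % n;
    while (j != i && !waiting[j])
        j = (j + 1) % n;
    return (j == i) ? -1 : j;
}

/* Simulates repeated hand-offs starting with `holder` in its critical
 * section and all other processes waiting. Returns how many other
 * processes enter before `who` does.
 * Assumes 2 <= n <= MAX_PROCS and who != holder. */
int handoffs_before(int n, int holder, int who) {
    bool waiting[MAX_PROCS];
    int count = 0;
    for (int p = 0; p < n; p++)
        waiting[p] = (p != holder);     /* everyone but the holder waits */
    for (;;) {
        int next = next_to_enter(waiting, n, holder);
        if (next == who)
            return count;
        waiting[next] = false;          /* next enters its critical section */
        waiting[holder] = true;         /* old holder immediately asks again */
        holder = next;
        count++;
    }
}
```

With 5 processes, process 0 in its critical section and process 4 last in cyclic order, `handoffs_before(5, 0, 4)` returns 3: processes 1, 2, and 3 go first, and re-requests by earlier processes cannot jump the queue, which stays within the bound stated above.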

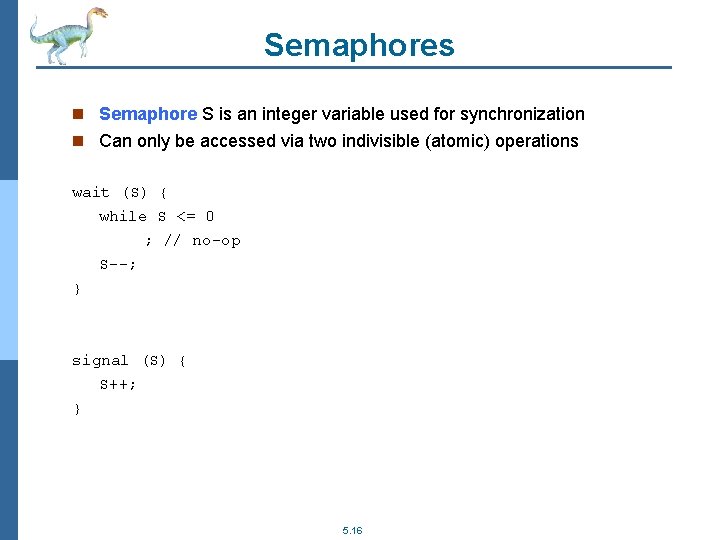

Semaphores

- Semaphore S is an integer variable used for synchronization
- Can only be accessed via two indivisible (atomic) operations

Definitions:

    wait(S) {
        while (S <= 0)
            ; // no-op
        S--;
    }

    signal(S) {
        S++;
    }

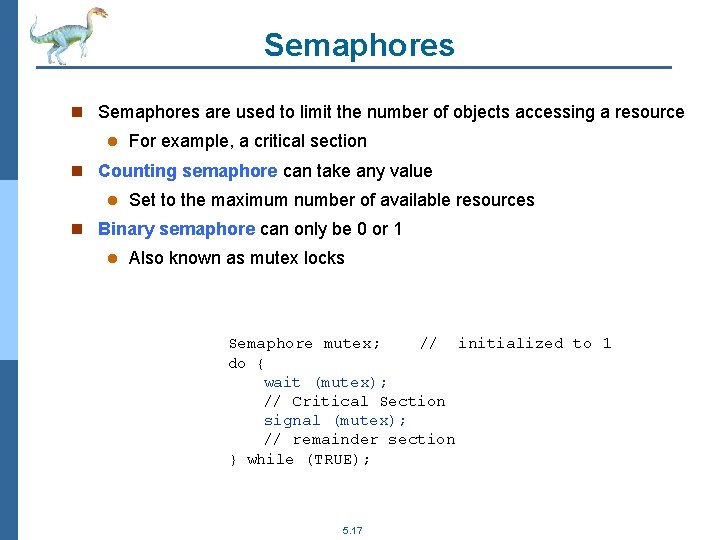

Semaphores

- Semaphores are used to limit the number of objects accessing a resource
  - For example, a critical section
- Counting semaphore can take any value
  - Set to the maximum number of available resources
- Binary semaphore can only be 0 or 1
  - Also known as mutex locks

Mutual exclusion with a binary semaphore:

    Semaphore mutex; // initialized to 1
    do {
        wait(mutex);
        // critical section
        signal(mutex);
        // remainder section
    } while (TRUE);
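On a POSIX system, the wait/signal pattern above corresponds to `sem_wait` and `sem_post` from `<semaphore.h>`. The following is a minimal sketch, assuming a platform with unnamed POSIX semaphores (for example Linux) and using threads rather than processes. The names `worker`, `run_semaphore_demo`, `NTHREADS`, and `ITERATIONS` are illustrative assumptions.

```c
#include <assert.h>
#include <pthread.h>
#include <semaphore.h>

#define NTHREADS 4
#define ITERATIONS 50000

static sem_t mutex;          /* binary semaphore, initialized to 1 below */
static long shared_counter;  /* protected by mutex */

static void *worker(void *arg) {
    (void)arg;
    for (int k = 0; k < ITERATIONS; k++) {
        sem_wait(&mutex);    /* wait(mutex) */
        shared_counter++;    /* critical section */
        sem_post(&mutex);    /* signal(mutex) */
    }
    return NULL;
}

/* Starts NTHREADS workers and returns the final counter value. */
long run_semaphore_demo(void) {
    pthread_t t[NTHREADS];
    shared_counter = 0;
    sem_init(&mutex, 0, 1);  /* 0: shared between threads of one process */
    for (int i = 0; i < NTHREADS; i++)
        pthread_create(&t[i], NULL, worker, NULL);
    for (int i = 0; i < NTHREADS; i++)
        pthread_join(t[i], NULL);
    sem_destroy(&mutex);
    return shared_counter;
}
```

Initializing the semaphore to a value larger than 1 would turn it into a counting semaphore that admits that many threads at once.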

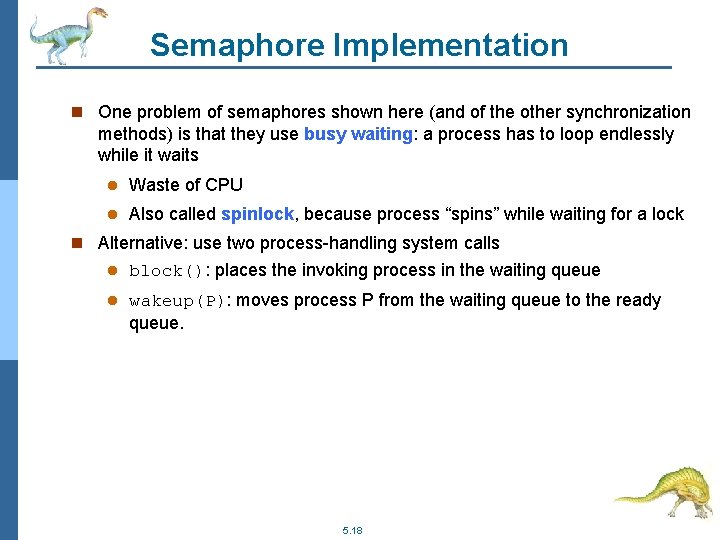

Semaphore Implementation

- One problem of semaphores as shown here (and of the other synchronization methods) is that they use busy waiting: a process has to loop endlessly while it waits
  - Waste of CPU
  - Also called a spinlock, because the process “spins” while waiting for a lock
- Alternative: use two process-handling system calls
  - block(): places the invoking process in the waiting queue
  - wakeup(P): moves process P from the waiting queue to the ready queue

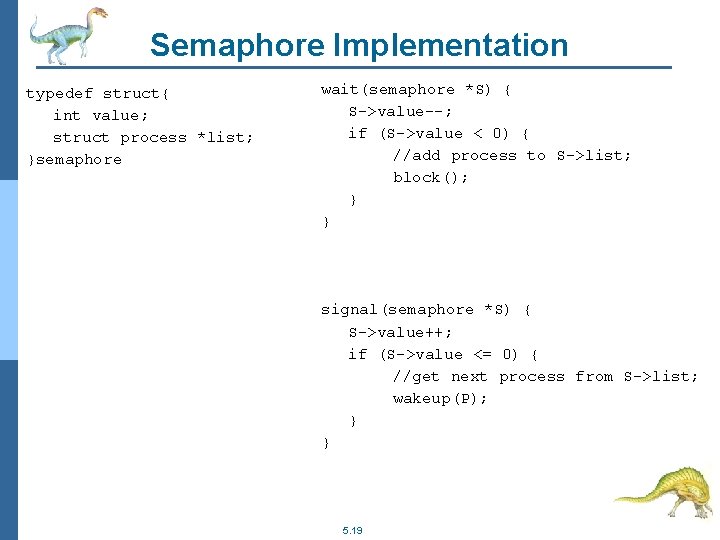

Semaphore Implementation

    typedef struct {
        int value;
        struct process *list;
    } semaphore;

    wait(semaphore *S) {
        S->value--;
        if (S->value < 0) {
            // add this process to S->list
            block();
        }
    }

    signal(semaphore *S) {
        S->value++;
        if (S->value <= 0) {
            // remove a process P from S->list
            wakeup(P);
        }
    }
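User code cannot call the kernel's block() and wakeup() directly, but the same idea (sleep instead of spin) can be sketched with a pthread mutex and condition variable. This is a swapped-in technique, not the slide's implementation: here `value` never goes negative and the waiting list is kept implicitly by the condition variable. The type `blocking_sem` and the functions `bsem_init`, `bsem_wait`, and `bsem_signal` are hypothetical names.

```c
#include <assert.h>
#include <pthread.h>

typedef struct {
    int value;                /* available permits; never negative here */
    pthread_mutex_t guard;    /* makes wait/signal atomic w.r.t. each other */
    pthread_cond_t nonzero;   /* waiters sleep here instead of spinning */
} blocking_sem;

void bsem_init(blocking_sem *s, int value) {
    s->value = value;
    pthread_mutex_init(&s->guard, NULL);
    pthread_cond_init(&s->nonzero, NULL);
}

void bsem_wait(blocking_sem *s) {
    pthread_mutex_lock(&s->guard);
    while (s->value == 0)                            /* nothing available */
        pthread_cond_wait(&s->nonzero, &s->guard);   /* plays the role of block() */
    s->value--;
    pthread_mutex_unlock(&s->guard);
}

void bsem_signal(blocking_sem *s) {
    pthread_mutex_lock(&s->guard);
    s->value++;
    pthread_cond_signal(&s->nonzero);                /* plays the role of wakeup(P) */
    pthread_mutex_unlock(&s->guard);
}
```

The `while` loop around `pthread_cond_wait` matters: a woken thread rechecks the count, because another thread may have taken the permit first or the wakeup may be spurious.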

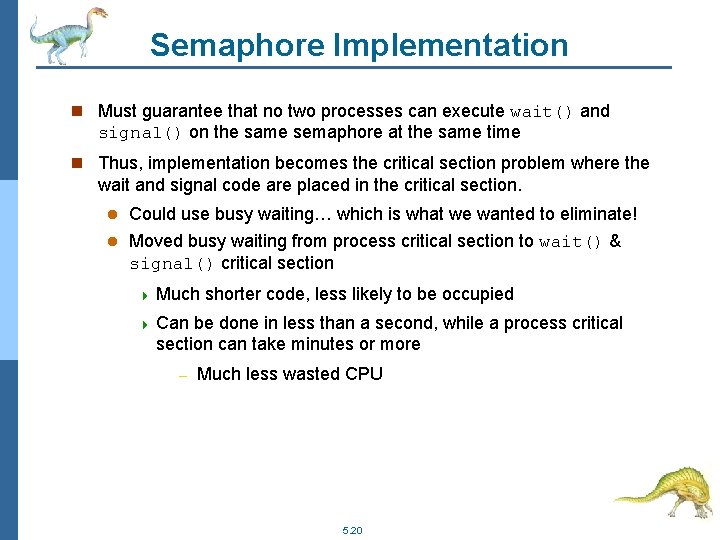

Semaphore Implementation

- Must guarantee that no two processes can execute wait() and signal() on the same semaphore at the same time
- Thus, the implementation becomes the critical section problem, where the wait and signal code are placed in the critical section
  - Could use busy waiting… which is what we wanted to eliminate!
  - Moved busy waiting from the process critical section to the wait() and signal() critical section
    - Much shorter code, less likely to be occupied
    - Can be done in less than a second, while a process critical section can take minutes or more
    - Much less wasted CPU

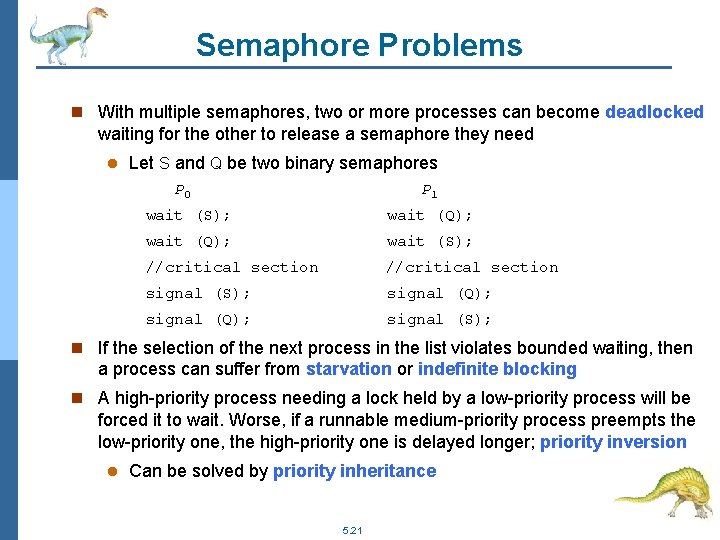

Semaphore Problems

- With multiple semaphores, two or more processes can become deadlocked, each waiting for the other to release a semaphore it needs
  - Let S and Q be two binary semaphores

Example:

    P0                      P1
    wait(S);                wait(Q);
    wait(Q);                wait(S);
    // critical section     // critical section
    signal(S);              signal(Q);
    signal(Q);              signal(S);

- If the selection of the next process in the list violates bounded waiting, then a process can suffer from starvation or indefinite blocking
- A high-priority process needing a lock held by a low-priority process will be forced to wait. Worse, if a runnable medium-priority process preempts the low-priority one, the high-priority one is delayed even longer: this is priority inversion
  - Can be solved by priority inheritance

Classical Problems of Synchronization

- Three typical synchronization problems used as benchmarks and tests
  - Bounded-Buffer Problem
  - Readers and Writers Problem
  - Dining-Philosophers Problem

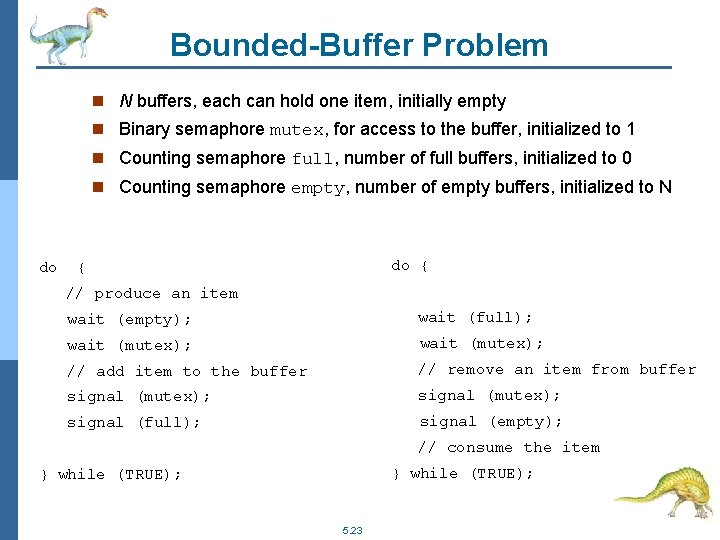

Bounded-Buffer Problem

- N buffers, each can hold one item, initially empty
- Binary semaphore mutex, for access to the buffer, initialized to 1
- Counting semaphore full, number of full buffers, initialized to 0
- Counting semaphore empty, number of empty buffers, initialized to N

Producer:

    do {
        // produce an item
        wait(empty);
        wait(mutex);
        // add item to the buffer
        signal(mutex);
        signal(full);
    } while (TRUE);

Consumer:

    do {
        wait(full);
        wait(mutex);
        // remove an item from buffer
        signal(mutex);
        signal(empty);
        // consume the item
    } while (TRUE);
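The three-semaphore scheme can be tried out with POSIX semaphores and one producer and one consumer thread. The following is a minimal sketch, assuming unnamed POSIX semaphores are available. The item type (plain `int`), the constant `NUM_ITEMS`, the variable `consumed_sum`, and the function `run_bounded_buffer_demo` are illustrative assumptions.

```c
#include <assert.h>
#include <pthread.h>
#include <semaphore.h>

#define BUFFER_SIZE 10
#define NUM_ITEMS 1000

static int buffer[BUFFER_SIZE];
static int in, out;
static sem_t mutex, empty, full;
static long consumed_sum;            /* only touched by the consumer */

static void *producer(void *arg) {
    (void)arg;
    for (int item = 1; item <= NUM_ITEMS; item++) {  /* produce an item */
        sem_wait(&empty);            /* wait for a free slot */
        sem_wait(&mutex);
        buffer[in] = item;           /* add item to the buffer */
        in = (in + 1) % BUFFER_SIZE;
        sem_post(&mutex);
        sem_post(&full);             /* one more full slot */
    }
    return NULL;
}

static void *consumer(void *arg) {
    (void)arg;
    for (int k = 0; k < NUM_ITEMS; k++) {
        sem_wait(&full);             /* wait for an item */
        sem_wait(&mutex);
        int item = buffer[out];      /* remove an item from the buffer */
        out = (out + 1) % BUFFER_SIZE;
        sem_post(&mutex);
        sem_post(&empty);            /* one more free slot */
        consumed_sum += item;        /* consume the item */
    }
    return NULL;
}

/* Runs one producer and one consumer; returns the sum of consumed items. */
long run_bounded_buffer_demo(void) {
    pthread_t p, c;
    in = out = 0;
    consumed_sum = 0;
    sem_init(&mutex, 0, 1);
    sem_init(&empty, 0, BUFFER_SIZE);
    sem_init(&full, 0, 0);
    pthread_create(&p, NULL, producer, NULL);
    pthread_create(&c, NULL, consumer, NULL);
    pthread_join(p, NULL);
    pthread_join(c, NULL);
    sem_destroy(&mutex);
    sem_destroy(&empty);
    sem_destroy(&full);
    return consumed_sum;
}
```

If every item is delivered exactly once, the returned sum is 1 + 2 + ... + `NUM_ITEMS`. Note the order of the waits: taking `mutex` before `empty` or `full` could deadlock when the buffer is full or empty.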

Readers-Writers Problem

- Data is shared among a number of concurrent processes
  - Readers that only read the data, but never write or update it
  - Writers that both read and write the data
- Problem: multiple readers should be allowed simultaneously, but each writer should have exclusive access
  - First variation: new readers can read while a writer waits (writer might starve)
  - Second variation: FCFS, new readers wait after a writer (reader might starve)
- The second variation has a simple solution with a single semaphore
- Solution for the first variation:
  - Binary semaphore mutex initialized to 1
  - Binary semaphore wrt initialized to 1
  - Integer readcount initialized to 0

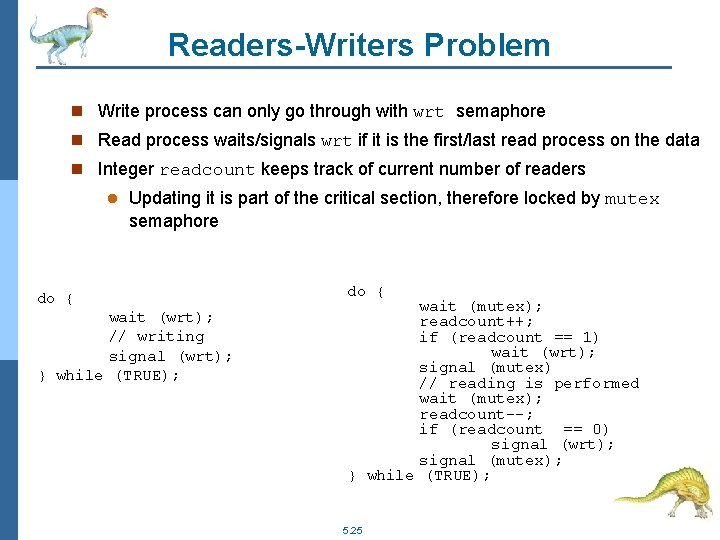

Readers-Writers Problem

- A write process can only go through with the wrt semaphore
- A read process waits on/signals wrt if it is the first/last read process on the data
- Integer readcount keeps track of the current number of readers
  - Updating it is part of a critical section, therefore locked by the mutex semaphore

Writer:

    do {
        wait(wrt);
        // writing
        signal(wrt);
    } while (TRUE);

Reader:

    do {
        wait(mutex);
        readcount++;
        if (readcount == 1)
            wait(wrt);
        signal(mutex);
        // reading is performed
        wait(mutex);
        readcount--;
        if (readcount == 0)
            signal(wrt);
        signal(mutex);
    } while (TRUE);
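The reader and writer protocols can be exercised with POSIX semaphores and threads. In the sketch below, writers keep two variables equal and readers check that they never observe them out of step, which would indicate a writer running alongside a reader. All names outside the slide (`data_a`, `data_b`, `inconsistencies`, `run_readers_writers_demo`, and the constants) are illustrative assumptions.

```c
#include <assert.h>
#include <pthread.h>
#include <semaphore.h>

#define NREADERS 3
#define NWRITERS 2
#define ITERATIONS 20000

static sem_t mutex, wrt;
static int readcount;              /* protected by mutex */
static long data_a, data_b;        /* writers always keep these equal */
static long inconsistencies;       /* protected by mutex */

static void *writer(void *arg) {
    (void)arg;
    for (int k = 0; k < ITERATIONS; k++) {
        sem_wait(&wrt);
        data_a++;                  /* writing */
        data_b++;
        sem_post(&wrt);
    }
    return NULL;
}

static void *reader(void *arg) {
    (void)arg;
    for (int k = 0; k < ITERATIONS; k++) {
        sem_wait(&mutex);
        if (++readcount == 1)
            sem_wait(&wrt);        /* first reader locks out writers */
        sem_post(&mutex);

        int ok = (data_a == data_b);   /* reading is performed */

        sem_wait(&mutex);
        if (!ok)
            inconsistencies++;
        if (--readcount == 0)
            sem_post(&wrt);        /* last reader lets writers in */
        sem_post(&mutex);
    }
    return NULL;
}

/* Returns the final data value, or -1 if any reader saw a torn update. */
long run_readers_writers_demo(void) {
    pthread_t r[NREADERS], w[NWRITERS];
    readcount = 0;
    data_a = data_b = inconsistencies = 0;
    sem_init(&mutex, 0, 1);
    sem_init(&wrt, 0, 1);
    for (int i = 0; i < NWRITERS; i++)
        pthread_create(&w[i], NULL, writer, NULL);
    for (int i = 0; i < NREADERS; i++)
        pthread_create(&r[i], NULL, reader, NULL);
    for (int i = 0; i < NWRITERS; i++)
        pthread_join(w[i], NULL);
    for (int i = 0; i < NREADERS; i++)
        pthread_join(r[i], NULL);
    sem_destroy(&mutex);
    sem_destroy(&wrt);
    return inconsistencies ? -1 : data_a;
}
```

This is the first variation, so writers can be delayed for as long as readers keep arriving; the demo terminates only because each reader performs a fixed number of reads.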

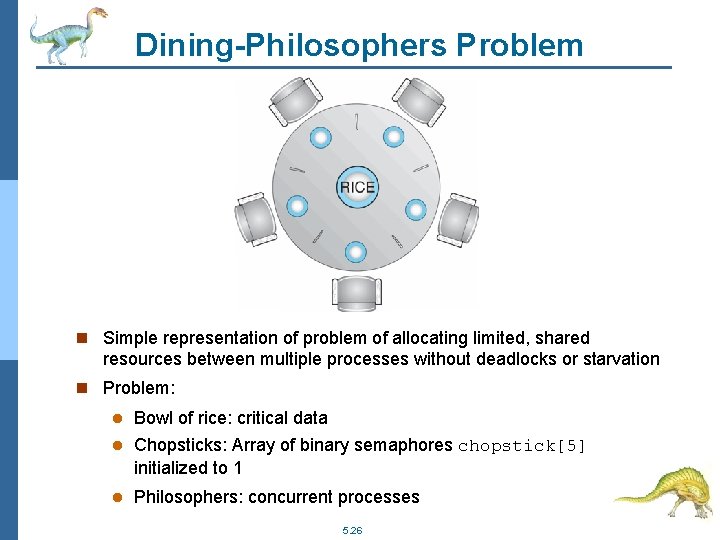

Dining-Philosophers Problem

- Simple representation of the problem of allocating limited, shared resources between multiple processes without deadlocks or starvation
- Problem:
  - Bowl of rice: critical data
  - Chopsticks: array of binary semaphores chopstick[5] initialized to 1
  - Philosophers: concurrent processes

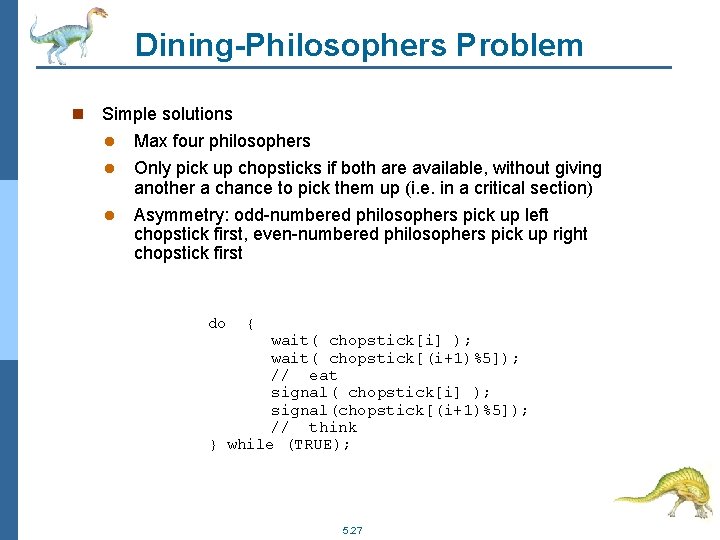

Dining-Philosophers Problem

- Simple solutions
  - Allow at most four philosophers at the table at once
  - Only pick up chopsticks if both are available, without giving another philosopher a chance to pick them up (i.e. in a critical section)
  - Asymmetry: odd-numbered philosophers pick up the left chopstick first, even-numbered philosophers pick up the right chopstick first

Basic structure of philosopher i (without one of the fixes above, this can deadlock if all five pick up their first chopstick at once):

    do {
        wait(chopstick[i]);
        wait(chopstick[(i + 1) % 5]);
        // eat
        signal(chopstick[i]);
        signal(chopstick[(i + 1) % 5]);
        // think
    } while (TRUE);
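Of the simple solutions listed, the asymmetric one needs the smallest change to the basic structure: only the order of the two wait() calls differs by parity. The following is a minimal sketch with POSIX semaphores and threads, taking chopstick[i] as philosopher i's left chopstick (an assumption, since the slides do not fix the orientation). The names `philosopher`, `run_philosophers_demo`, `meals_eaten`, and `MEALS` are illustrative.

```c
#include <assert.h>
#include <pthread.h>
#include <semaphore.h>

#define NUM_PHILOSOPHERS 5
#define MEALS 10000

static sem_t chopstick[NUM_PHILOSOPHERS];     /* binary semaphores, init 1 */
static int meals_eaten[NUM_PHILOSOPHERS];     /* slot i only used by philosopher i */

static void *philosopher(void *arg) {
    int i = *(int *)arg;
    int left = i;
    int right = (i + 1) % NUM_PHILOSOPHERS;
    /* Asymmetry: odd philosophers take left first, even ones right first. */
    int first = (i % 2 == 1) ? left : right;
    int second = (i % 2 == 1) ? right : left;
    for (int k = 0; k < MEALS; k++) {
        sem_wait(&chopstick[first]);
        sem_wait(&chopstick[second]);
        meals_eaten[i]++;                     /* eat */
        sem_post(&chopstick[second]);
        sem_post(&chopstick[first]);
        /* think */
    }
    return NULL;
}

/* Runs all philosophers to completion and returns the total meals eaten. */
int run_philosophers_demo(void) {
    pthread_t t[NUM_PHILOSOPHERS];
    int id[NUM_PHILOSOPHERS];
    int total = 0;
    for (int i = 0; i < NUM_PHILOSOPHERS; i++) {
        sem_init(&chopstick[i], 0, 1);
        meals_eaten[i] = 0;
        id[i] = i;
    }
    for (int i = 0; i < NUM_PHILOSOPHERS; i++)
        pthread_create(&t[i], NULL, philosopher, &id[i]);
    for (int i = 0; i < NUM_PHILOSOPHERS; i++)
        pthread_join(t[i], NULL);
    for (int i = 0; i < NUM_PHILOSOPHERS; i++) {
        total += meals_eaten[i];
        sem_destroy(&chopstick[i]);
    }
    return total;
}
```

The demo finishing at all is the interesting result: with the asymmetric order, a circular wait cannot form, so every philosopher eventually completes its `MEALS` meals. The scheme avoids deadlock but does not by itself guarantee fairness.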

Problems with Semaphores

- Incorrect use of semaphore operations:
  - signal(mutex) … wait(mutex) (reversed order: mutual exclusion is lost)
  - wait(mutex) … wait(mutex) (the second wait never returns: deadlock)
  - Omitting wait(mutex) or signal(mutex) (or both)
- Causes timing and synchronization errors that can be difficult to detect
  - Errors in the entire system (no mutual exclusion, deadlocks) caused by only one poorly programmed user process
  - Might only occur given a specific execution sequence

Synchronization Examples

- Solaris
- Windows XP
- Linux
- Pthreads

Solaris Synchronization

- Implements a variety of locks to support multitasking, multithreading (including real-time threads), and multiprocessing
- Implements semaphores as we studied
- Adaptive mutexes are used to protect critical data accessed by short code segments (<100 instructions) in multi-CPU systems
  - If the process holding the lock is currently running on another CPU, spin (spinlock)
  - Otherwise, the process holding the lock is not currently running, so sleep
- Reader-writer locks are used to protect long critical data that is often read (best for multithreading)
- Turnstiles are used to order the list of threads waiting to acquire a lock
  - A thread needs to enter a turnstile for each object it is waiting for
  - Implemented as one turnstile per kernel thread rather than per object
    - The turnstile of the first thread to block on an object becomes the turnstile for that object
    - Since a thread can only be waiting on one object at a time, this is more efficient

Windows XP Synchronization

- Multithreaded kernel
  - On a single-processor system, simply masks all interrupts whose interrupt handlers can access the critical resource
  - On multiprocessor systems, uses spinlocks and prevents preemption of the thread using the resource
- Outside the kernel, synchronization is done with dispatcher objects
  - Can make use of mutexes, semaphores, timers
  - A dispatcher object can be signaled (available to be used by a thread) or nonsignaled (already used, the new thread must wait)
  - When a dispatcher object moves to the signaled state, the kernel checks for waiting threads and moves a number of them (depending on the nature of the dispatcher object) to the ready queue

Linux Synchronization

- For short-term locks
  - On single-processor systems: disable kernel preemption
  - On multi-processor systems: spinlock
- For long-term locks: semaphores

Pthreads Synchronization

- The Pthreads API is an IEEE standard, OS-independent
- The Pthreads standard includes:
  - mutex locks
  - reader-writer locks
- Certain non-standard extensions add:
  - semaphores
  - spinlocks

Review

- Any solution to the critical section problem has to respect three properties. What are they and why are they important?
- What is a spinlock, and why is it often used in multi-CPU systems but must be avoided in single-CPU systems?
- What is priority inheritance; what problem does it solve and how?

Exercises

- Read sections 5.1 to 5.7 and 5.9
  - If you have the “with Java” textbook, skip the Java sections and subtract 1 from the following section numbers
- 5.1
- 5.3
- 5.4
- 5.5
- 5.7
- 5.8
- 5.9
- 5.10
- 5.11
- 5.12
- 5.16
- 5.17
- 5.28

End of Chapter 5
