Chapter 6 Standardized Measurement and Assessment

- Slides: 43

Chapter 6 - Standardized Measurement and Assessment
http://www.offthemarkcartoons.com/cartoons/2005-08-21.gif

What is measurement?
• the act of measuring
• assigning symbols or numbers to something according to a specific set of rules

What are the four different levels or scales of measurement?
• Nominal Scale
• Ordinal Scale
• Interval Scale
• Ratio Scale

What is essential to know about the Nominal Scale?
• it’s the simplest form of measurement
• it uses symbols, such as words or numbers
• it measures categorical variables
• it is used to identify, label, and classify

What is essential to know about the Ordinal Scale?
• it’s a rank-order scale
• it doesn’t indicate how much greater one ranking is over another

What is essential to know about the Interval Scale?
• it’s also a rank-order scale
• it includes equal distances or intervals between adjacent numbers
• the absence of a true zero point means you cannot make “ratio statements”

What is essential to know about the Ratio Scale?
• it’s the highest level of quantitative measure
• it has all the properties of the nominal, ordinal, and interval scales, plus it has a true zero point
• it is not often used in educational research
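Why ratio statements need a true zero can be illustrated with a short Python sketch. It assumes temperature in degrees Celsius as the interval-scale example and weight in kilograms as the ratio-scale example; the numbers are invented for illustration. Converting an interval-scale variable to other units changes the ratio between two scores, while rescaling a ratio-scale variable leaves it unchanged.

```python
def celsius_to_fahrenheit(c: float) -> float:
    # Interval scale: the conversion shifts the zero point (adds 32).
    return c * 9 / 5 + 32


def kg_to_lb(kg: float) -> float:
    # Ratio scale: the conversion only rescales; zero stays zero.
    return kg * 2.20462


# Interval: 20 degrees C looks "twice" 10 degrees C only in Celsius units.
ratio_c = 20 / 10
ratio_f = celsius_to_fahrenheit(20) / celsius_to_fahrenheit(10)

# Ratio: 20 kg is twice 10 kg in any unit of weight.
ratio_kg = 20 / 10
ratio_lb = kg_to_lb(20) / kg_to_lb(10)

print(f"Celsius ratio: {ratio_c:.2f}, Fahrenheit ratio: {ratio_f:.2f}")
print(f"Kilogram ratio: {ratio_kg:.2f}, Pound ratio: {ratio_lb:.2f}")
```

The Celsius ratio is 2.00 but the Fahrenheit ratio is 1.36, so "twice as hot" is not a meaningful statement on an interval scale; the weight ratio stays 2.00 in both units.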

How do we define testing?
• the measurement of variables

How do we define assessment?
• gathering data to make evaluations

How do we define error?
• the difference between true scores and observed scores
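Classical test theory (a framework the slide does not name, but whose definition of error matches it) models each observed score X as a true score T plus error E, so E = X − T. True scores cannot be observed in practice; this minimal sketch simply invents them for five hypothetical test takers to make the definition concrete.

```python
# Invented true and observed scores for five hypothetical test takers.
true_scores = [80, 72, 90, 65, 85]
observed_scores = [83, 70, 88, 66, 85]

# Error = observed score minus true score, computed person by person.
errors = [x - t for x, t in zip(observed_scores, true_scores)]
print(errors)  # [3, -2, -2, 1, 0]
```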

How do we define traits?
• distinguishable, enduring ways in which one individual differs from another

How do we define states?
• distinguishable but less enduring ways in which individuals vary

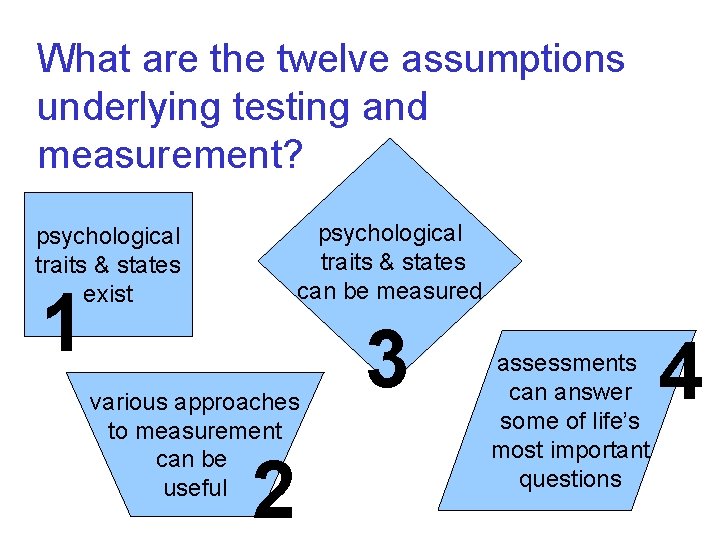

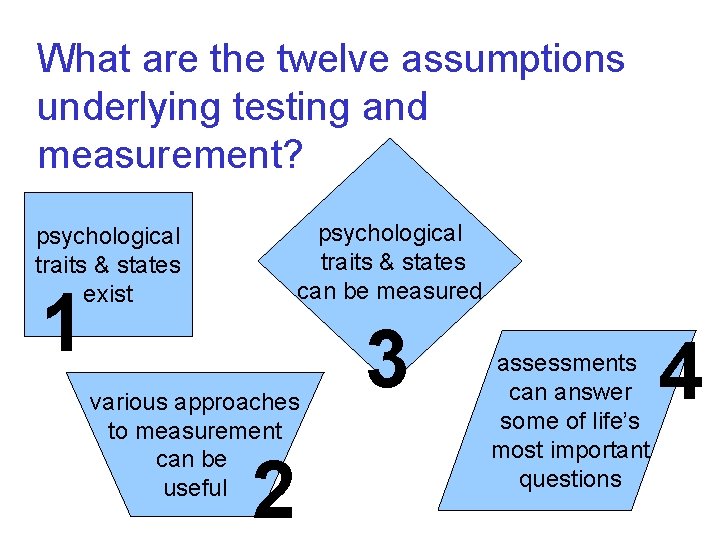

What are the twelve assumptions underlying testing and measurement?
1. psychological traits & states exist
2. psychological traits & states can be measured
3. various approaches to measurement can be useful
4. assessments can answer some of life’s most important questions

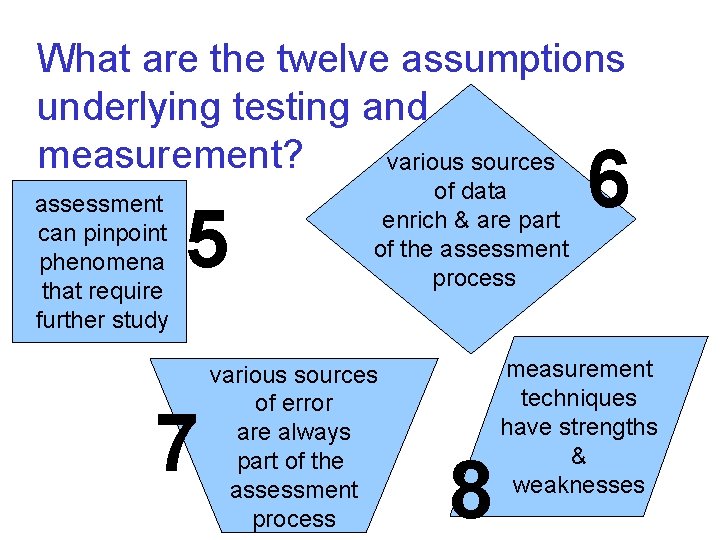

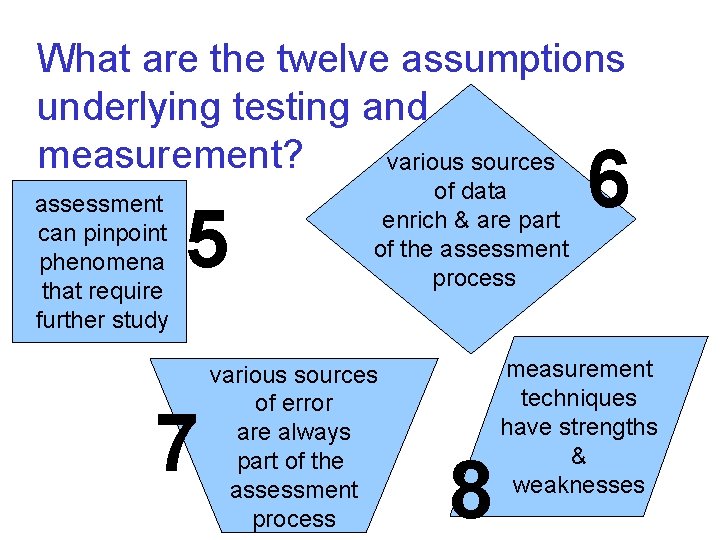

What are the twelve assumptions underlying testing and measurement?
5. assessment can pinpoint phenomena that require further study
6. various sources of data enrich & are part of the assessment process
7. various sources of error are always part of the assessment process
8. measurement techniques have strengths & weaknesses

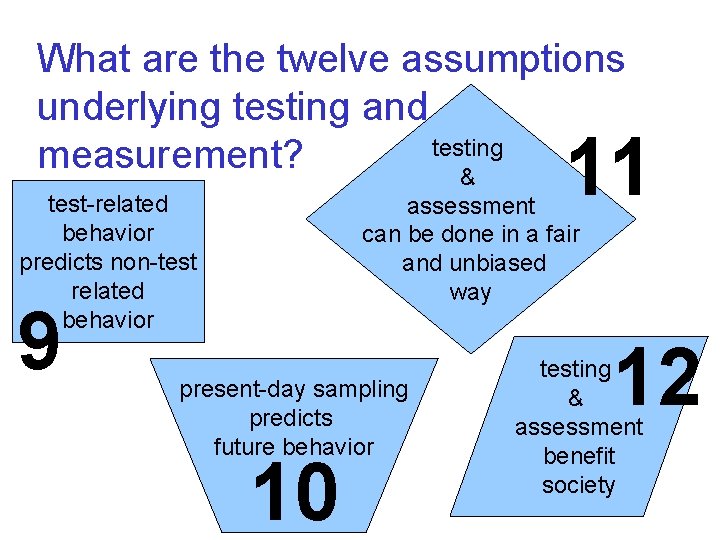

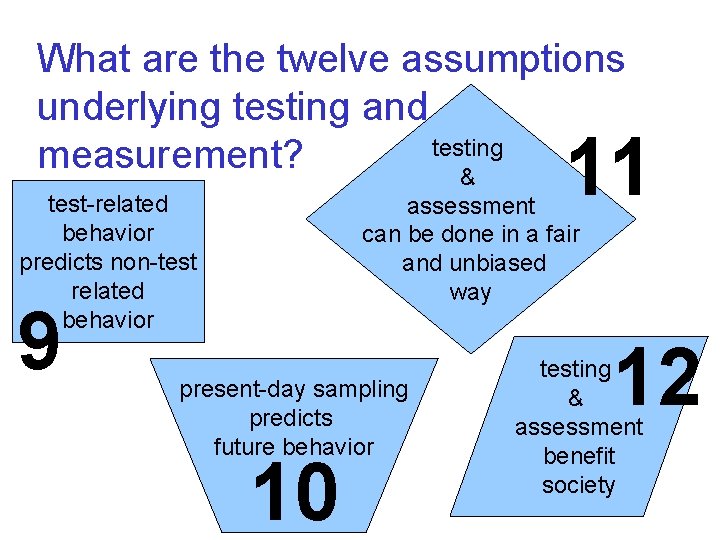

What are the twelve assumptions underlying testing and measurement?
9. test-related behavior predicts non-test-related behavior
10. present-day sampling predicts future behavior
11. testing & assessment can be done in a fair and unbiased way
12. testing & assessment benefit society

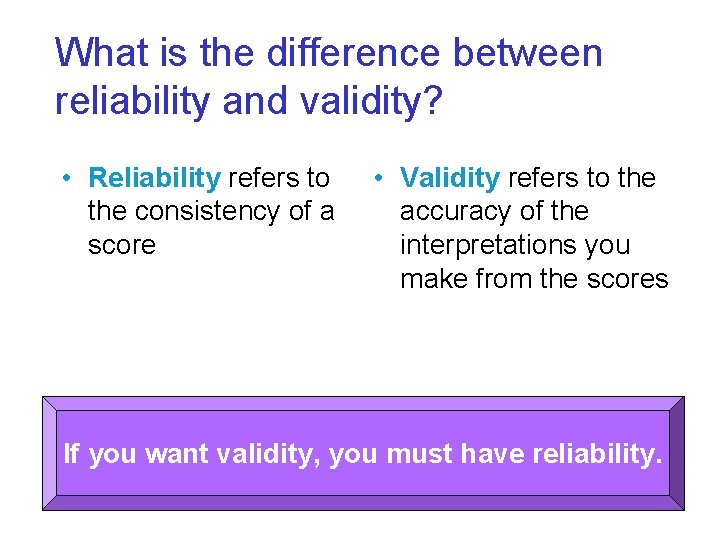

What is the difference between reliability and validity?
• Reliability refers to the consistency of a score
• Validity refers to the accuracy of the interpretations you make from the scores
If you want validity, you must have reliability.

What is a reliability coefficient?
• a correlation coefficient that is used as an index of reliability
• researchers want reliability coefficients to be as close to +1.00 as possible

What are four different ways of assessing reliability?
1. Test-Retest Reliability
2. Equivalent Forms Reliability
3. Internal Consistency Reliability
4. Interscorer Reliability

What is test-retest reliability?
• a measure of the consistency of scores over time
• the time interval can have an effect on test-retest reliability because people change over time

What is equivalent forms reliability?
• the consistency of a group of individuals’ scores on two equivalent forms of a test measuring the same thing
• the success of this method depends on the ability to construct two equivalent forms of the same test

What is internal consistency reliability?
• the consistency with which the items on a test measure a single construct

What is split-half reliability?
• splitting a test into two equivalent halves and then assessing the consistency of the scores across the two halves of the test
• each half needs to be equal to the other in format, style, content, and other aspects

What is coefficient alpha?
• a formula that provides an estimate of the reliability of a homogeneous test or an estimate of the reliability of each dimension in a multidimensional test
• it tells you the degree to which the items are interrelated
• you need to consider the number of items: alpha tends to rise as items are added, so don’t assume that because coefficient alpha is large, the items are strongly related
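The sketch below computes Cronbach's coefficient alpha with the standard formula α = k/(k − 1) × (1 − Σ item variances / total-score variance), using invented ratings. The second function, standardized alpha k·r̄ / (1 + (k − 1)·r̄), is an addition used here only to illustrate the warning about the number of items: with the same weak average inter-item correlation r̄, a longer test yields a larger alpha.

```python
from statistics import pvariance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Coefficient alpha for a respondents-by-items score matrix."""
    k = len(scores[0])                    # number of items
    item_columns = list(zip(*scores))     # one tuple of scores per item
    sum_item_var = sum(pvariance(col) for col in item_columns)
    total_var = pvariance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum_item_var / total_var)


def standardized_alpha(k: int, mean_r: float) -> float:
    """Alpha implied by k items with average inter-item correlation mean_r."""
    return k * mean_r / (1 + (k - 1) * mean_r)


# Invented 1-5 ratings: 4 respondents x 3 items.
ratings = [
    [4, 5, 4],
    [2, 3, 2],
    [5, 5, 4],
    [3, 2, 3],
]
print(f"alpha = {cronbach_alpha(ratings):.2f}")

# Same weak average inter-item correlation (0.2), more items -> larger alpha.
for k in (5, 20, 40):
    print(k, round(standardized_alpha(k, 0.2), 2))
```

With an average inter-item correlation of only 0.2, the loop prints 0.56 for 5 items, 0.83 for 20 items, and 0.91 for 40 items.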

What is interscorer reliability?
• the degree of agreement or consistency between two or more scorers, judges, or raters
• some degree of training and practice for the scorers is advised to improve the reliability of an evaluation
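For two scorers making categorical judgments, a simple index of agreement is the proportion of cases on which they agree. The slide does not name a statistic; Cohen's kappa, shown here as one common choice, additionally corrects for the agreement expected by chance. A minimal sketch with invented pass/fail judgments on ten hypothetical essays:

```python
from collections import Counter


def percent_agreement(rater_a, rater_b):
    """Share of cases on which two raters assign the same category."""
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


def cohens_kappa(rater_a, rater_b):
    """Agreement corrected for the agreement expected by chance."""
    n = len(rater_a)
    p_observed = percent_agreement(rater_a, rater_b)
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    categories = set(rater_a) | set(rater_b)
    # Chance agreement: product of each rater's marginal proportions, summed.
    p_chance = sum(counts_a[c] * counts_b[c] for c in categories) / n**2
    return (p_observed - p_chance) / (1 - p_chance)


# Invented pass/fail judgments by two scorers on 10 essays.
scorer_1 = ["pass", "pass", "fail", "pass", "fail", "pass", "pass", "fail", "pass", "pass"]
scorer_2 = ["pass", "fail", "fail", "pass", "fail", "pass", "pass", "pass", "pass", "pass"]

print(f"agreement = {percent_agreement(scorer_1, scorer_2):.2f}")
print(f"kappa = {cohens_kappa(scorer_1, scorer_2):.2f}")
```

The scorers agree on 80% of the essays, but because both pass most essays, kappa is a more modest 0.52.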

What is the definition of validity?
• the accuracy of the inferences, interpretations, or actions made on the basis of test scores
• we need to make sure that our test measures what we intend it to measure for particular people in a particular context, and that the interpretations we make on the basis of the test scores are correct
• we want our inferences to be accurate and our actions to be appropriate

What is the definition of validity evidence?
• the empirical evidence and theoretical rationales that support the inferences or interpretations made from the test scores

What is the definition of validation?
• the process of gathering evidence that supports inferences made on the basis of test scores
• the best rule is to collect multiple sources of evidence
• validation should be viewed as a never-ending process

What are the characteristics of the different ways of obtaining validity evidence?
• Evidence based on content
• Evidence based on internal structure
• Evidence based on relations to other variables

What are the characteristics of evidence based on content?
• content-related evidence is based on a judgment of the degree to which the items, tasks, or questions on the test adequately represent the domain of interest
• this judgment is based on item content, but also on the formatting, wording, administration, and scoring of the test

What are the characteristics of evidence based on internal structure?
• Factor Analysis – a statistical procedure that analyzes the relationships among items to determine whether a test is unidimensional or multidimensional
• Homogeneity – refers to how well the different items in a test measure the same construct or trait

What are the characteristics of evidence based on relations to other variables?
• Criterion-related evidence
  – validity coefficient
  – concurrent evidence
  – predictive evidence
• Convergent evidence
• Discriminant evidence
• Known groups evidence

Define criterion.
• the standard or benchmark that you want to predict accurately on the basis of the test scores

Define criterion-related evidence.
• validity evidence based on the extent to which scores from a test can be used to predict or infer performance on some criterion, such as a test or future performance

Define validity coefficient.
• a correlation coefficient that is computed to provide validity evidence, such as the correlation between test scores and criterion scores

Define concurrent evidence.
• validity evidence based on the relationship between test scores and criterion scores obtained at the same time

Define predictive evidence.
• validity evidence based on the relationship between test scores collected at one point in time and criterion scores obtained at a later time

Define convergent evidence.
• validity evidence based on the relationship between the focal test scores and an independent measure of the same construct

Define discriminant evidence.
• evidence that the scores on your focal test are not highly related to the scores from other tests that are designed to measure theoretically different constructs

Define known groups evidence.
• evidence that groups that are known to differ on the construct do differ on the test in the hypothesized direction
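A known-groups check asks whether the group expected to score higher on the construct actually does. This minimal sketch assumes a hypothetical math-anxiety scale and invented scores for two groups; the slide prescribes no statistic, so Welch's t (the mean difference divided by its estimated standard error) is used here as one common way to judge the difference against score variability.

```python
from math import sqrt
from statistics import mean, variance

# Invented scores on a hypothetical math-anxiety scale for two groups expected
# to differ: students referred for math anxiety vs. a comparison group.
referred = [34, 38, 31, 40, 36, 35]
comparison = [22, 27, 25, 30, 24, 26]

# Hypothesized direction: the referred group should score higher.
diff = mean(referred) - mean(comparison)

# Welch's t statistic: mean difference over its estimated standard error.
standard_error = sqrt(variance(referred) / len(referred)
                      + variance(comparison) / len(comparison))
t = diff / standard_error
print(f"mean difference = {diff:.1f}, Welch t = {t:.2f}")
```

A positive, sizable difference supports the known-groups evidence; a difference near zero or in the opposite direction would count against the intended interpretation.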

What are the characteristics of the different ways of obtaining validity evidence?
• norming group - the specific group for which the test publishers or researchers provide evidence for test validity and reliability
• it is not wise to rely solely on previously reported reliability and validity information
• the characteristics of your participants should closely match the characteristics of the norming group

What are some different types of psychological tests?
• intelligence tests - measure intelligence, the ability to think abstractly and to learn readily from experience
• personality tests - measure personality, the patterns that characterize and classify people
  – self-report - participants rate themselves
  – performance measures - participants perform some real-life, observable behavior
  – projective measures - participants provide responses to ambiguous stimuli

What are some different types of educational assessment tests?
• preschool assessment tests - assess the various behaviors and cognitive skills of young children
• achievement tests - designed to measure the degree of learning that has taken place after a person is exposed to a specific learning experience
• aptitude tests - focus on the information acquired through the informal learning that goes on throughout life
• diagnostic tests - identify where a student is having difficulty