Cache Simulations and Application Performance

- Slides: 18

Cache Simulations and Application Performance
Christopher Kerr (kerr@gfdl.gov)
Philip Mucci (mucci@cs.utk.edu)
Jeff Brown (jeffb@lanl.gov)
Los Alamos, Sandia National Laboratories

Goal
• To optimize large, numerically intensive applications with poor cache utilization.
• By taking advantage of the memory hierarchy, we can often achieve the greatest performance improvement for the time invested.

Philosophy
• By simulating the cache hierarchy, we wish to understand how the application’s data maps to a specific cache architecture.
• In addition, we wish to understand the application’s reference pattern and its relationship to that mapping.
• Performance improvements can then be derived from this information algorithmically.

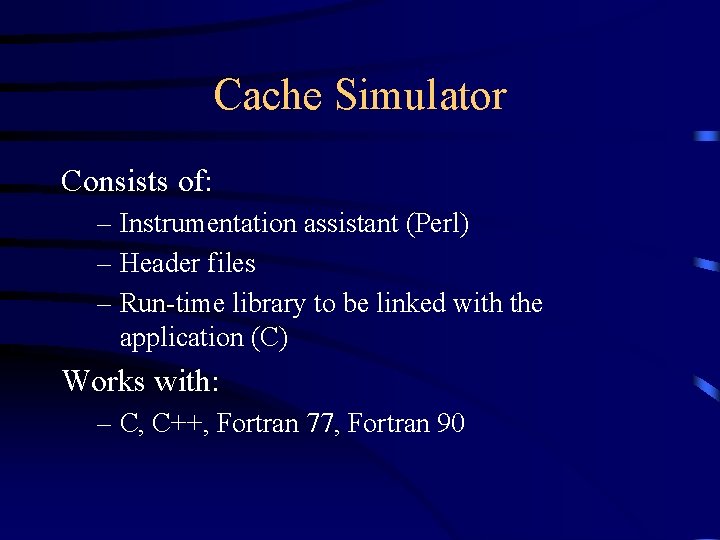

Cache Simulator
Consists of:
– Instrumentation assistant (Perl)
– Header files
– Run-time library to be linked with the application (C)
Works with:
– C, C++, Fortran 77, Fortran 90

How it works
• Cache simulator is called on memory (array) references.
• Cache simulator reads a configuration file containing an architectural description of the memory hierarchy for multiple machines.
• Environment variables enable different options.

How it works (cont)
• Each call to the simulator provides as input:
– Address of the reference
– Size of the datum being accessed
– Symbolic name consisting of the name, file and line number
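The call interface described above can be illustrated with a minimal sketch. This is not the simulator's actual code (the run-time library is written in C): the class `CacheLevel`, its method names, the LRU replacement policy, and the modulo set indexing are all assumptions made for illustration. Only the three inputs (address, size, symbolic name) come from the slides.

```python
from collections import Counter, OrderedDict


class CacheLevel:
    """One set-associative cache level. LRU replacement is an assumption."""

    def __init__(self, size_bytes, line_bytes, associativity):
        self.line_bytes = line_bytes
        self.associativity = associativity
        self.num_sets = size_bytes // (line_bytes * associativity)
        # One ordered dict per set: keys are line tags, oldest entry first.
        self.sets = [OrderedDict() for _ in range(self.num_sets)]
        self.hits = 0
        self.misses = 0
        self.misses_by_name = Counter()

    def _touch_line(self, line_number):
        """Look up one cache line, loading it on a miss. Return True on a hit."""
        index = line_number % self.num_sets
        tag = line_number // self.num_sets
        lines = self.sets[index]
        if tag in lines:
            lines.move_to_end(tag)       # mark as most recently used
            return True
        if len(lines) >= self.associativity:
            lines.popitem(last=False)    # evict the least recently used line
        lines[tag] = True
        return False

    def cache_sim(self, address, size, name):
        """Record one reference: byte address, datum size, symbolic name."""
        first = address // self.line_bytes
        last = (address + size - 1) // self.line_bytes
        # A datum that straddles a line boundary touches every line it covers.
        hit = all([self._touch_line(n) for n in range(first, last + 1)])
        if hit:
            self.hits += 1
        else:
            self.misses += 1
            self.misses_by_name[name] += 1
        return hit


# Hypothetical usage: eight consecutive 8-byte elements starting on a line boundary.
cache = CacheLevel(size_bytes=32 * 1024, line_bytes=32, associativity=2)
for i in range(8):
    cache.cache_sim(0x32d60 + 8 * i, 8, "R(i,j):13:stencil.F")
print(cache.hits, cache.misses)  # 6 hits, 2 misses: one miss per 32-byte line
```

Keeping the symbolic name with every call is what makes per-reference reports possible: the miss counter is keyed by the same string the instrumentation passes in.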

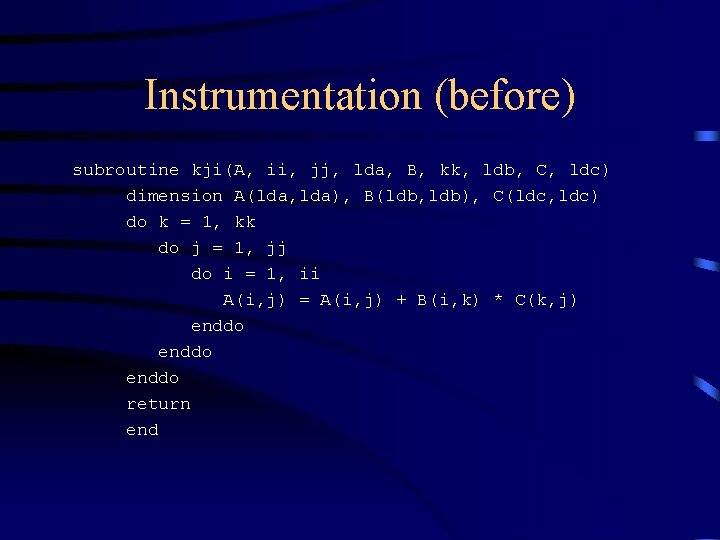

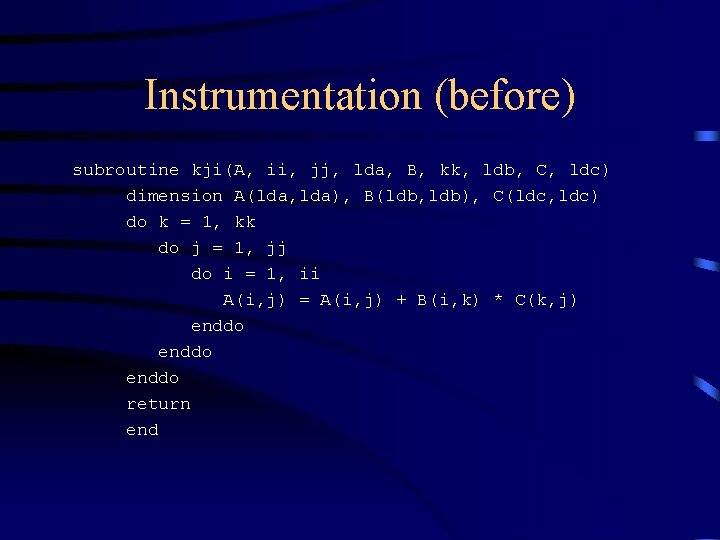

Instrumentation (before)

    subroutine kji(A, ii, jj, lda, B, kk, ldb, C, ldc)
    dimension A(lda, lda), B(ldb, ldb), C(ldc, ldc)
    do k = 1, kk
      do j = 1, jj
        do i = 1, ii
          A(i, j) = A(i, j) + B(i, k) * C(k, j)
        enddo
      enddo
    enddo
    return
    end

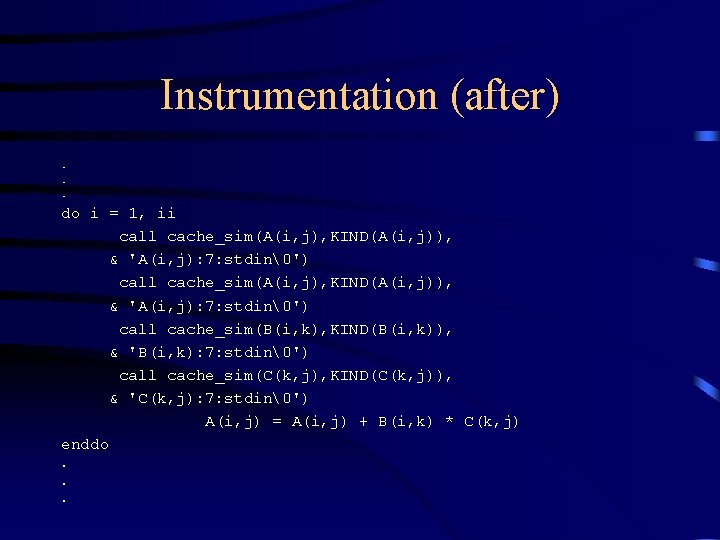

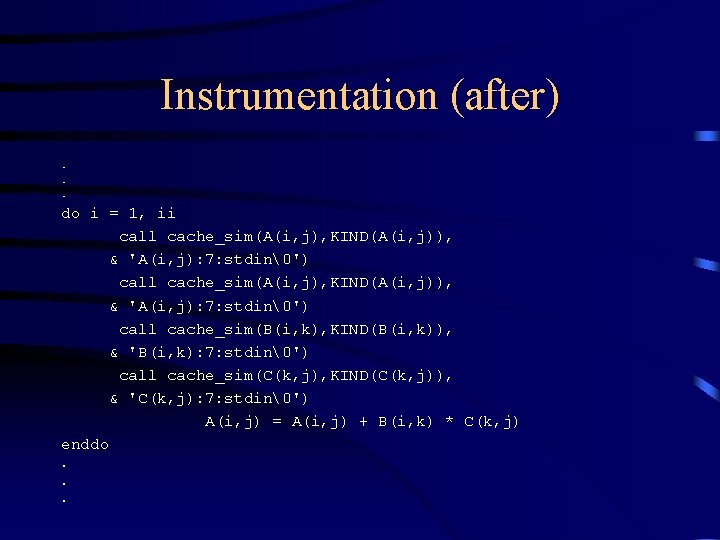

Instrumentation (after)

    ...
    do i = 1, ii
      call cache_sim(A(i, j), KIND(A(i, j)), &
                     'A(i,j):7:stdin')
      call cache_sim(B(i, k), KIND(B(i, k)), &
                     'B(i,k):7:stdin')
      call cache_sim(C(k, j), KIND(C(k, j)), &
                     'C(k,j):7:stdin')
      A(i, j) = A(i, j) + B(i, k) * C(k, j)
    enddo
    ...
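The transformation from the "before" to the "after" form can be sketched as a small source rewriter. The real instrumentation assistant is a Perl script whose implementation is not shown in the slides; the function `instrument`, the regular expression, and the requirement to pass the set of known array names are assumptions of this sketch. It handles only simple, non-nested subscripts and treats names as case-sensitive, which Fortran does not.

```python
import re

# Matches "name(subscripts)" where the subscripts contain no nested parentheses.
ARRAY_REF = re.compile(r"\b([A-Za-z_]\w*)\s*\(([^()]*)\)")


def instrument(statement, line_number, filename, arrays):
    """Return cache_sim calls for each distinct array reference, then the statement."""
    indent = statement[: len(statement) - len(statement.lstrip())]
    refs = []
    for match in ARRAY_REF.finditer(statement):
        # Skip function calls and anything else that is not a known array.
        if match.group(1) in arrays and match.group(0) not in refs:
            refs.append(match.group(0))
    calls = []
    for ref in refs:
        label = f"{ref.replace(' ', '')}:{line_number}:{filename}"
        calls.append(f"{indent}call cache_sim({ref}, KIND({ref}), '{label}')")
    return calls + [statement]


statement = "      A(i, j) = A(i, j) + B(i, k) * C(k, j)"
lines = instrument(statement, 7, "stdin", {"A", "B", "C"})
print("\n".join(lines))
```

As in the slide, `A(i, j)` is reported once even though the statement both reads and writes it.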

Output
• Summary
• Misses by name
• Misses by address
• Conflict matrix
• Address trace

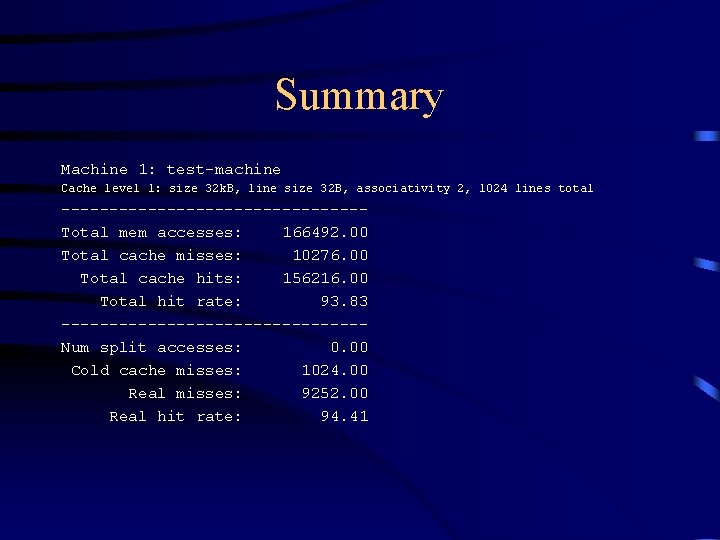

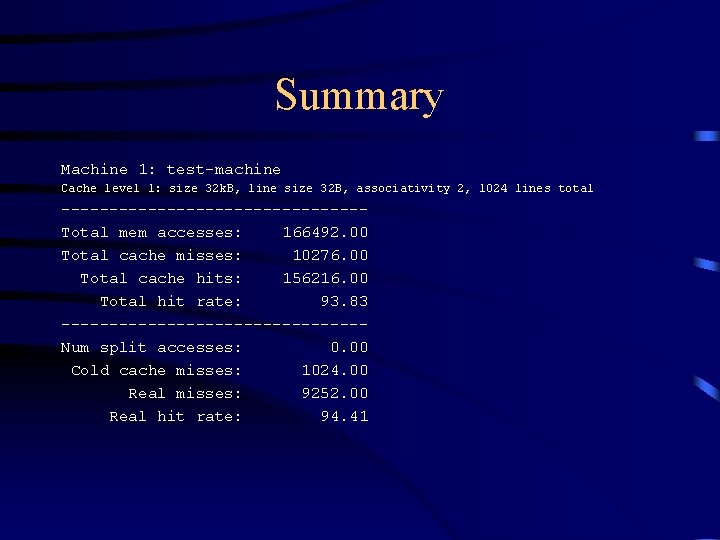

Summary

    Machine 1: test-machine
    Cache level 1: size 32 kB, line size 32 B, associativity 2, 1024 lines total
    ----------------
    Total mem accesses:   166492.00
    Total cache misses:    10276.00
    Total cache hits:     156216.00
    Total hit rate:           93.83
    ----------------
    Num split accesses:        0.00
    Cold cache misses:      1024.00
    Real misses:            9252.00
    Real hit rate:            94.41
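The relationships between the Summary figures can be checked with a few lines of arithmetic. The slides do not define "Real misses" or "Real hit rate"; the formulas below are inferred from the printed numbers (they reproduce them to two decimals) and should be read as an assumption about how the report is computed.

```python
# Figures copied from the Summary report.
total_accesses = 166492
total_misses = 10276
cold_misses = 1024

total_hits = total_accesses - total_misses
real_misses = total_misses - cold_misses            # misses excluding cold misses
total_hit_rate = 100.0 * total_hits / total_accesses
# Inferred: cold misses are removed from the access count, not added to the hits.
real_hit_rate = 100.0 * total_hits / (total_accesses - cold_misses)

# Cache geometry: 32 kB of 32-byte lines, 2-way set associative.
lines_total = 32 * 1024 // 32
num_sets = lines_total // 2

print(total_hits, real_misses)
print(f"{total_hit_rate:.2f} {real_hit_rate:.2f}")
print(lines_total, num_sets)
```

Note that the cold miss count equals the number of cache lines (1024), which suggests a cold miss is counted when an empty line is filled for the first time.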

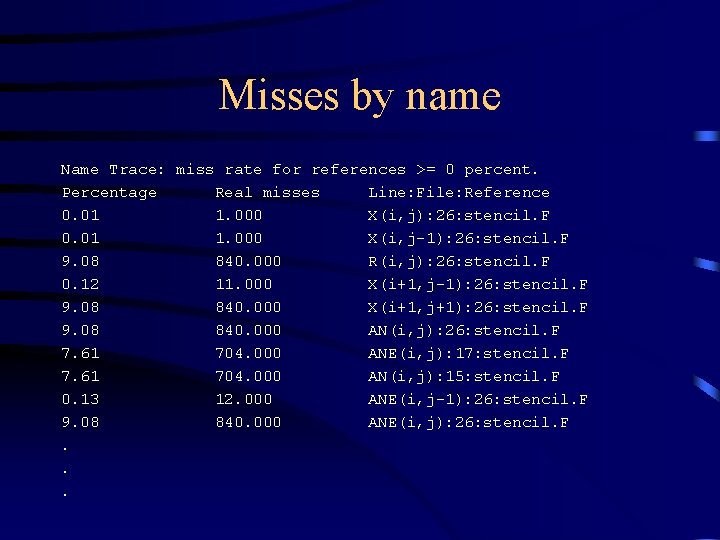

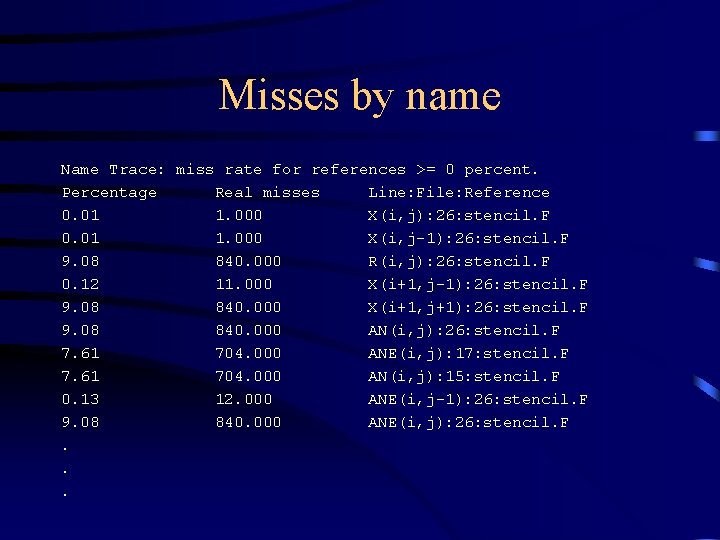

Misses by name

    Name Trace: miss rate for references >= 0 percent.
    Percentage  Real misses  Line:File:Reference
          0.01        1.000  X(i,j):26:stencil.F
          0.01        1.000  X(i,j-1):26:stencil.F
          9.08      840.000  R(i,j):26:stencil.F
          0.12       11.000  X(i+1,j-1):26:stencil.F
          9.08      840.000  X(i+1,j+1):26:stencil.F
          9.08      840.000  AN(i,j):26:stencil.F
          7.61      704.000  ANE(i,j):17:stencil.F
          7.61      704.000  AN(i,j):15:stencil.F
          0.13       12.000  ANE(i,j-1):26:stencil.F
          9.08      840.000  ANE(i,j):26:stencil.F
    ...
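One way to read this report is to rank references by their share of the real misses. The sketch below assumes the Percentage column is each reference's real misses divided by the total of 9252 real misses from the Summary report; the slides do not state this, but the assumption reproduces the printed percentages. Only a subset of the rows is included, and the helper `percentage` is a hypothetical name.

```python
total_real_misses = 9252  # from the Summary report; assumed to be the same run

# Real misses per reference, copied from a subset of the report rows.
real_misses = {
    "X(i,j):26:stencil.F": 1,
    "R(i,j):26:stencil.F": 840,
    "X(i+1,j-1):26:stencil.F": 11,
    "ANE(i,j):17:stencil.F": 704,
    "ANE(i,j-1):26:stencil.F": 12,
}


def percentage(misses, total):
    """Share of all real misses attributed to one reference, in percent."""
    return 100.0 * misses / total


# Worst offenders first: these are the references worth restructuring.
ranked = sorted(real_misses.items(), key=lambda item: item[1], reverse=True)
for name, misses in ranked:
    print(f"{percentage(misses, total_real_misses):6.2f} {misses:8.3f}  {name}")
```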

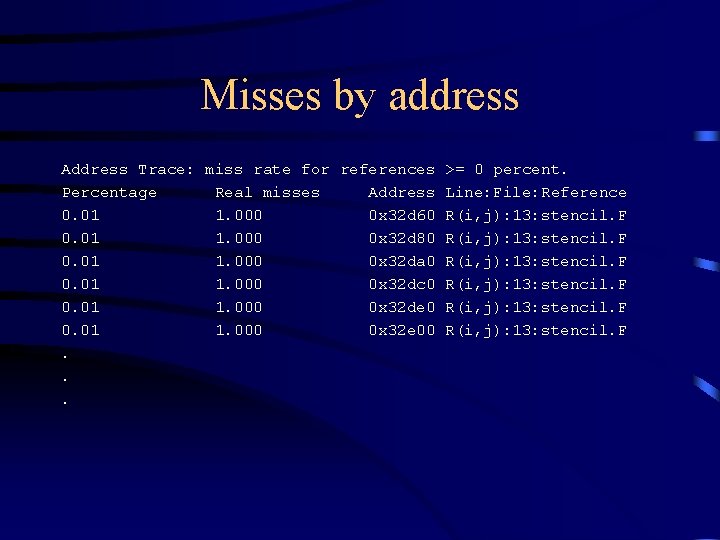

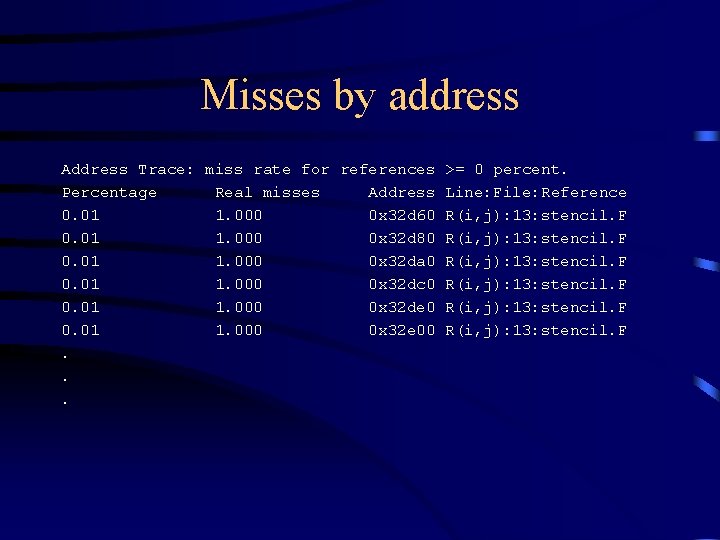

Misses by address

    Address Trace: miss rate for references >= 0 percent.
    Percentage  Real misses  Address  Line:File:Reference
          0.01        1.000  0x32d60  R(i,j):13:stencil.F
          0.01        1.000  0x32d80  R(i,j):13:stencil.F
          0.01        1.000  0x32da0  R(i,j):13:stencil.F
          0.01        1.000  0x32dc0  R(i,j):13:stencil.F
          0.01        1.000  0x32de0  R(i,j):13:stencil.F
          0.01        1.000  0x32e00  R(i,j):13:stencil.F
    ...
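The addresses in this report are spaced exactly one cache line apart, which is what a sequential sweep through `R` produces: one miss per 32-byte line. The sketch below checks the stride and maps each address to a line number and set. The modulo set mapping and the 512-set geometry (32 kB, 32-byte lines, 2-way) are assumptions taken from the Summary report, not something the address report itself states.

```python
LINE_BYTES = 32
NUM_SETS = 512  # 32 kB / (32 B per line * 2 ways)

addresses = [0x32d60, 0x32d80, 0x32da0, 0x32dc0, 0x32de0, 0x32e00]

# Distance between consecutive missing addresses.
strides = [b - a for a, b in zip(addresses, addresses[1:])]
# Memory line each address falls in, and the set that line maps to.
line_numbers = [a // LINE_BYTES for a in addresses]
set_indices = [n % NUM_SETS for n in line_numbers]

for a, n, s in zip(addresses, line_numbers, set_indices):
    print(f"{a:#x}  line {n}  set {s}")
```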

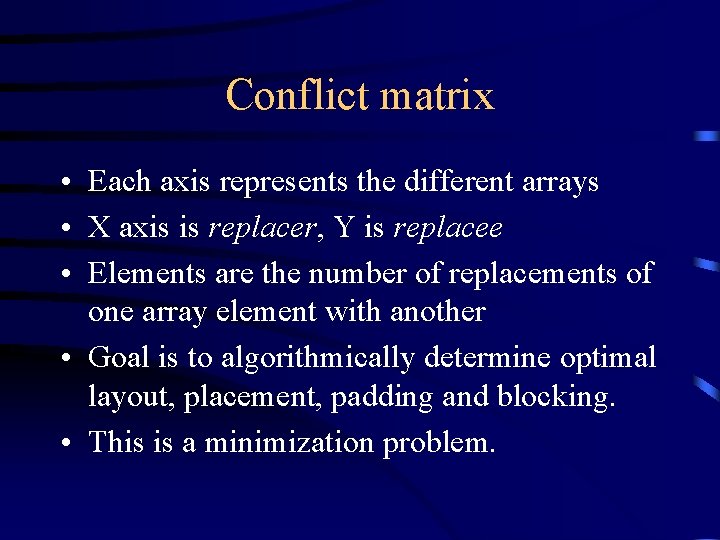

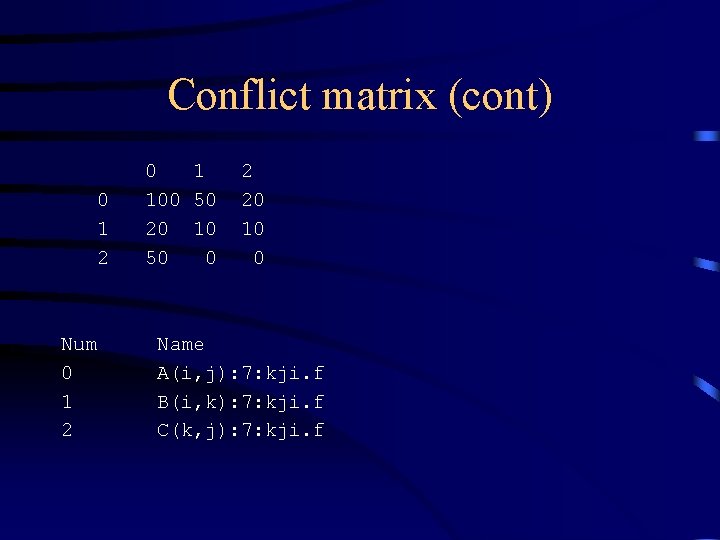

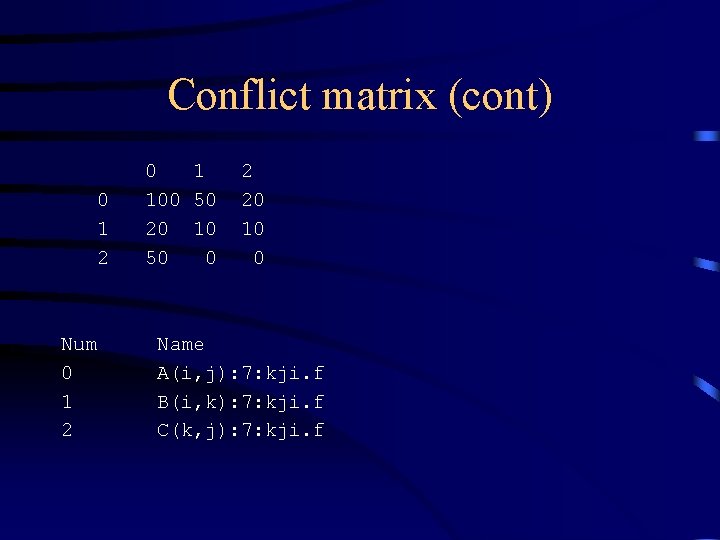

Conflict matrix
• Each axis represents the different arrays.
• X axis is replacer, Y is replacee.
• Elements are the number of replacements of one array element with another.
• Goal is to algorithmically determine optimal layout, placement, padding and blocking.
• This is a minimization problem.

Conflict matrix (cont)

           0    1    2
      0  100   50   20
      1   10   50    0
      2   20   10    0

    Num  Name
    0    A(i,j):7:kji.f
    1    B(i,k):7:kji.f
    2    C(k,j):7:kji.f
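A conflict matrix of this kind can be accumulated by remembering which reference owns each resident line and counting every replacement. The sketch below is an illustration under stated assumptions, not the simulator's method: it uses a direct-mapped cache for brevity, and the function `conflict_matrix` and its `(replacer, replacee)` key layout are hypothetical.

```python
from collections import Counter


def conflict_matrix(trace, num_lines, line_bytes):
    """Count replacements in a direct-mapped cache.

    trace is an iterable of (address, name) pairs. The result maps
    (replacer, replacee) to the number of times a line owned by
    replacee was replaced by a reference from replacer.
    """
    resident = {}         # slot index -> (line number, owning name)
    conflicts = Counter()
    for address, name in trace:
        line = address // line_bytes
        slot = line % num_lines
        current = resident.get(slot)
        if current is not None and current[0] == line:
            continue      # hit: nothing is replaced
        if current is not None:
            conflicts[(name, current[1])] += 1
        resident[slot] = (line, name)
    return conflicts


# A and B start exactly one cache size apart, so they fight over slot 0.
clashing = conflict_matrix([(0, "A"), (128, "B"), (0, "A"), (128, "B")], 4, 32)
# Padding B by one line (32 bytes) moves it to slot 1 and removes the conflicts.
padded = conflict_matrix([(0, "A"), (160, "B"), (0, "A"), (160, "B")], 4, 32)
print(dict(clashing), dict(padded))
```

Minimizing the off-diagonal entries over candidate paddings and layouts is one concrete form of the minimization problem mentioned above.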

Address dump
• Symbolic name
• Virtual address
• Cache line
• Goal is to use this for replay.

Future
• Handle nonblocking caches, replacement policies, write strategies and buffering.
• Add output file with starting address and extents for each array.
• Facility for replay of the simulator using the address dump, and data regarding padding, blocking and alignment. This will eliminate the need for additional runs.

Future (cont)
• Categorize cold misses for repeatedly accessed data items.
• Provide cost metrics to analyze approximate performance loss due to poor locality.
• Full Lex/Yacc based parser.
• Perl/Tk GUI for finer control of instrumentation.

Future (cont)
• MPI, thread aware
• Reduction in run-time requirements
• MUT integration
• Tools to compare data sets