Automatic Keyphrase Extraction via Topic Decomposition


Presenter: WU, MIN-CONG
Authors: Zhiyuan Liu, Wenyi Huang, Yabin Zheng and Maosong Sun
2010, ACM
Intelligent Database Systems Lab

Outline
• Motivation
• Objectives
• Methodology
• Experiments
• Conclusions
• Comments

Motivation
• Existing graph-based ranking methods for keyphrase extraction compute only a single importance score for each word via a single random walk.
• This work is motivated by the fact that both documents and words can be represented by a mixture of semantic topics.

Objectives
• Build a Topical PageRank (TPR) on the word graph to measure word importance with respect to different topics.
• Then calculate the ranking scores of words and extract the top-ranked ones as keyphrases.

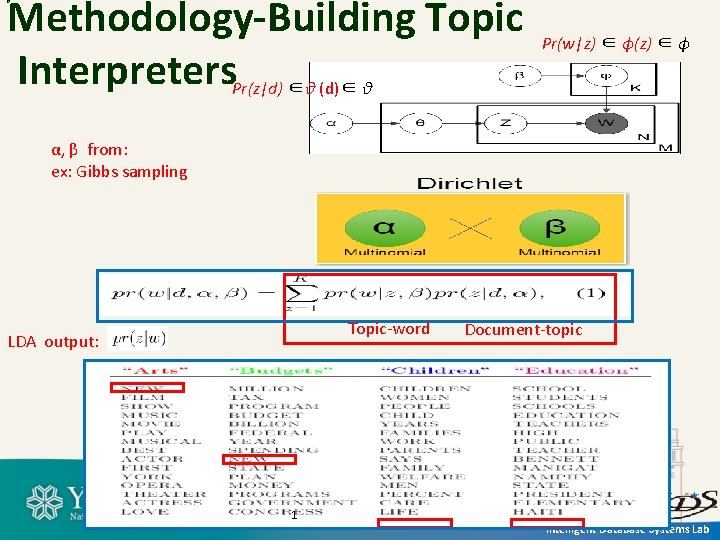

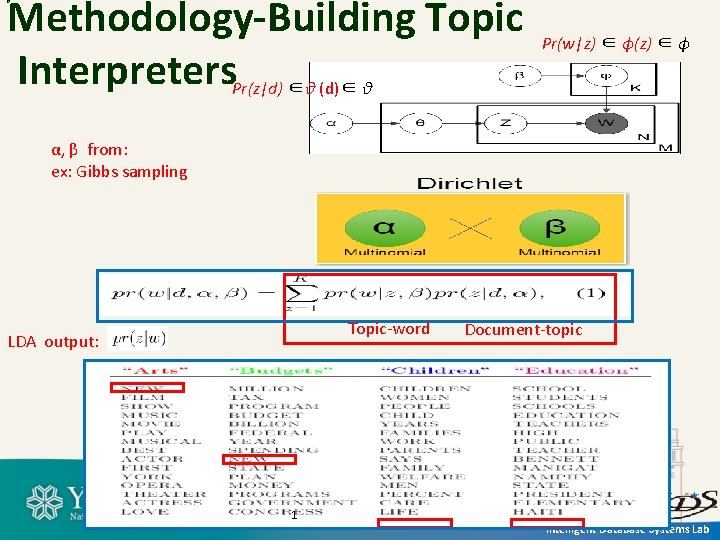

Methodology - Building Topic Interpreters
• Topic model: LDA with hyperparameters α, β, learned by, e.g., Gibbs sampling.
• Output (topic-word): Pr(w|z) ∈ ϕ(z), ϕ(z) ∈ ϕ
• Output (document-topic): Pr(z|d) ∈ θ(d), θ(d) ∈ θ

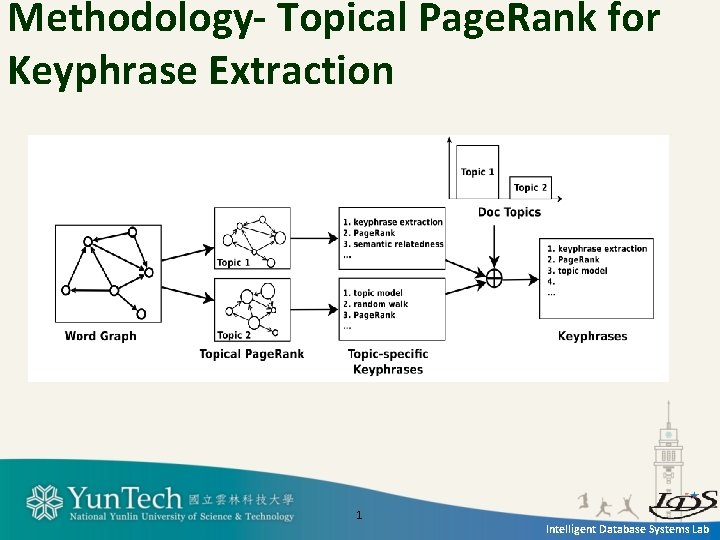

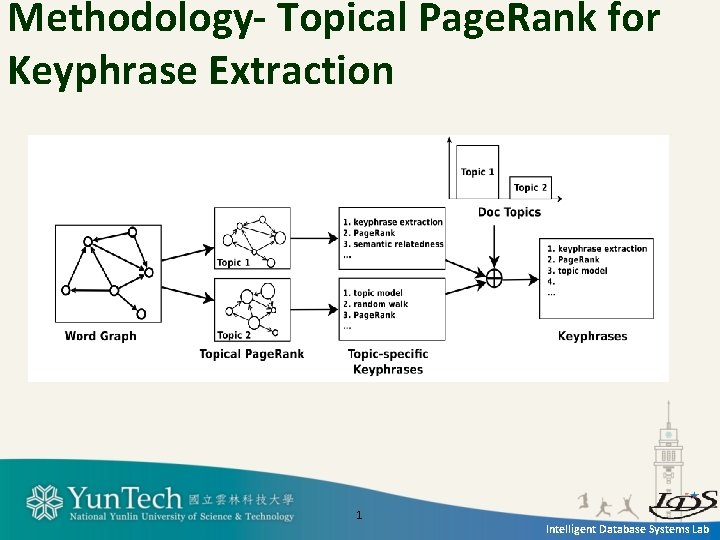

Methodology - Topical PageRank for Keyphrase Extraction

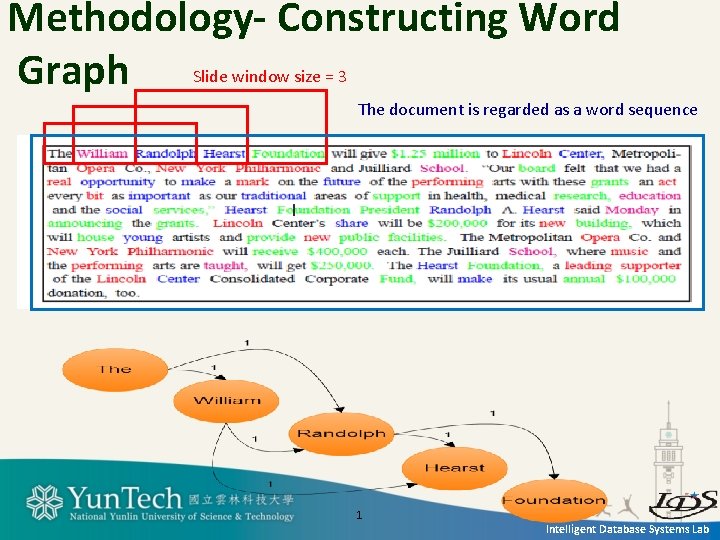

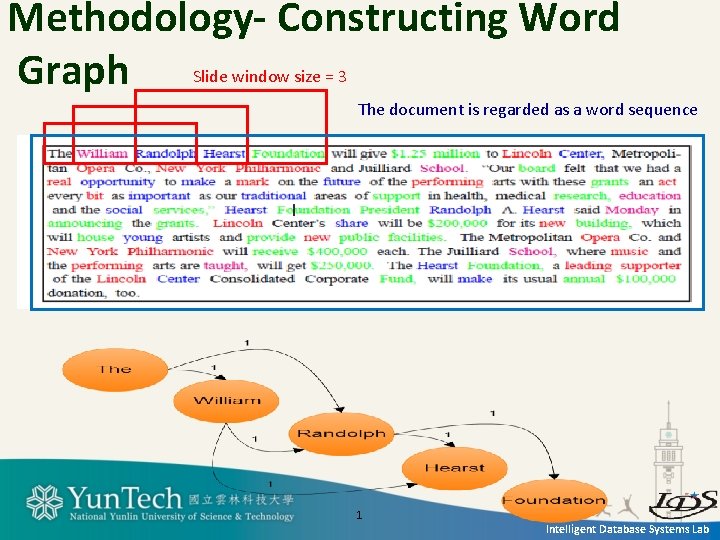

Methodology - Constructing Word Graph
• The document is regarded as a word sequence.
• Sliding window size = 3.
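The word-graph construction above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: the function name `build_word_graph`, the choice to link each word to the words that follow it inside the window, and the use of co-occurrence counts as link weights are all assumptions made for the example.

```python
from collections import defaultdict


def build_word_graph(words, window=3):
    """Build a directed word graph from a word sequence.

    Assumption for this sketch: at each position, the first word of the
    window links to the other words inside the window, and the weight of
    a link is the number of times that co-occurrence is observed.
    """
    edges = defaultdict(int)
    for i, first in enumerate(words):
        # The window covers positions i .. i + window - 1.
        for other in words[i + 1 : i + window]:
            if other != first:  # skip self-links
                edges[(first, other)] += 1
    return dict(edges)


# Toy word sequence (hypothetical data).
sequence = ["a", "b", "c", "a", "b"]
graph = build_word_graph(sequence, window=3)
```

With a window of 3, each word is linked to at most the two words that follow it, so a larger window yields a denser graph.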

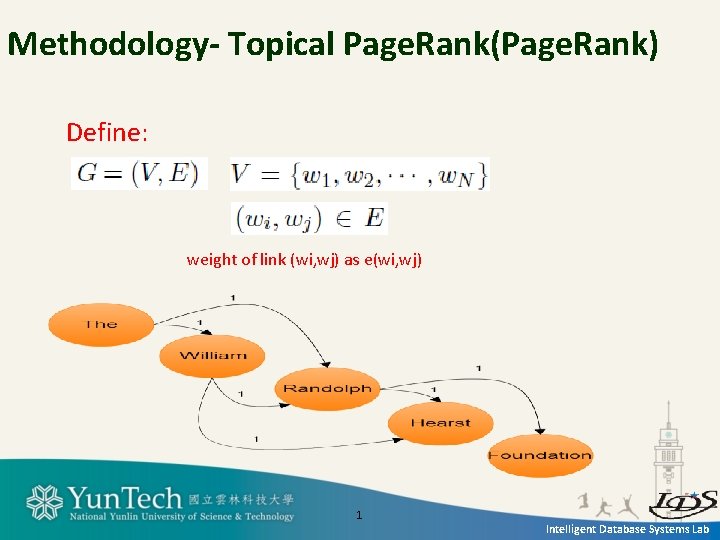

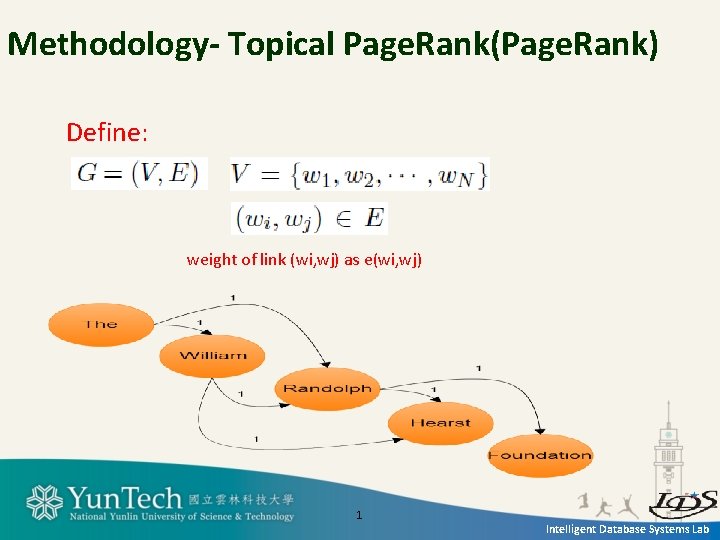

Methodology - Topical PageRank (PageRank)
• Define the weight of link (wi, wj) as e(wi, wj).

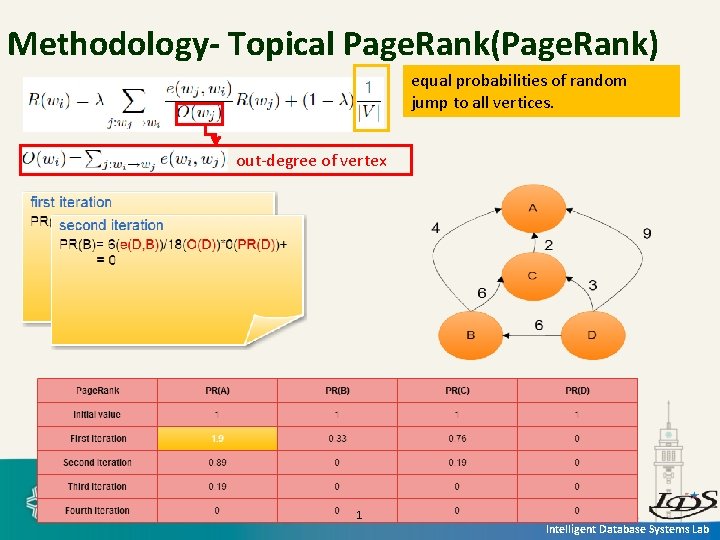

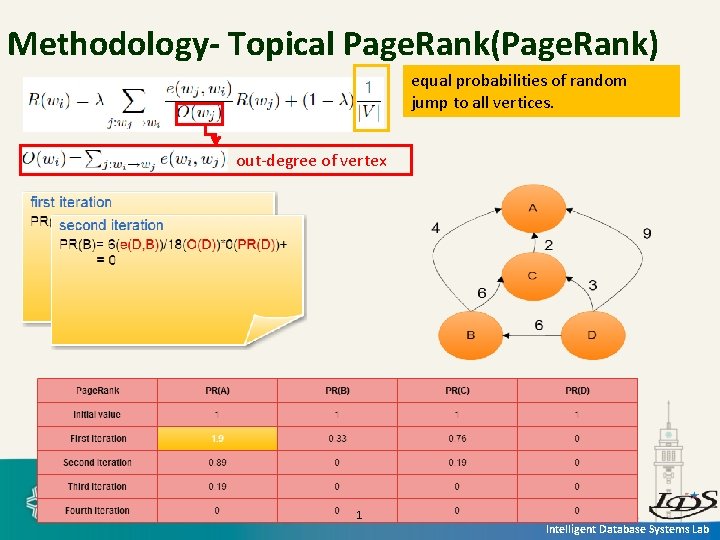

Methodology - Topical PageRank (PageRank)
• Random jumps go to all vertices with equal probability.
• Link weights are normalized by the out-degree of the source vertex.
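The plain PageRank step described on these slides can be sketched as a power iteration over the weighted word graph. The sketch below is illustrative only: the function name `pagerank`, the default damping value 0.85, the fixed iteration count, and the treatment of the out-degree as the sum of outgoing link weights are assumptions, not values taken from the slides.

```python
def pagerank(edges, damping=0.85, iterations=100):
    """Weighted PageRank with a uniform random jump.

    edges maps (source, target) pairs to link weights e(source, target).
    Assumption: the out-degree of a vertex is the sum of its outgoing
    link weights. Vertices without out-links simply leak their score.
    """
    vertices = sorted({v for edge in edges for v in edge})
    n = len(vertices)
    out_weight = {v: 0.0 for v in vertices}
    for (src, _dst), weight in edges.items():
        out_weight[src] += weight

    scores = {v: 1.0 / n for v in vertices}
    for _ in range(iterations):
        # Random jump: every vertex receives the same share.
        new_scores = {v: (1.0 - damping) / n for v in vertices}
        # Random walk: each vertex passes its score along its out-links.
        for (src, dst), weight in edges.items():
            new_scores[dst] += damping * scores[src] * weight / out_weight[src]
        scores = new_scores
    return scores


# Toy graph (hypothetical data): a three-word cycle.
cycle = {("a", "b"): 1, ("b", "c"): 1, ("c", "a"): 1}
scores = pagerank(cycle)
```

On a symmetric graph like this cycle every word ends up with the same score, which is exactly the limitation the topical variant addresses.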

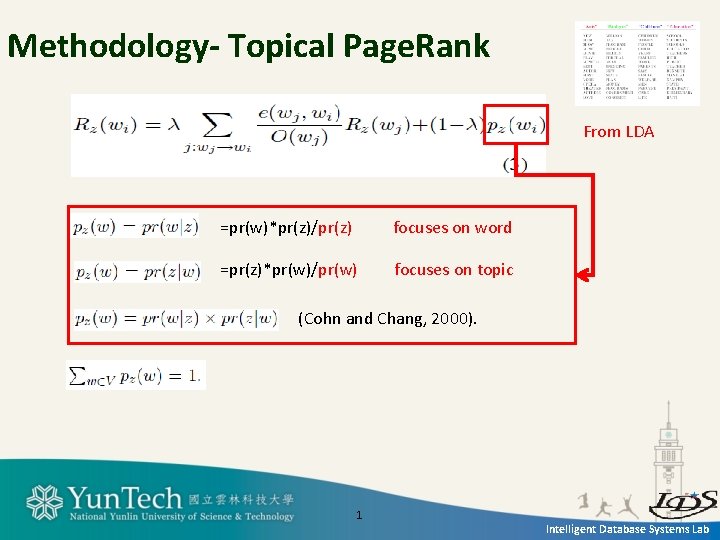

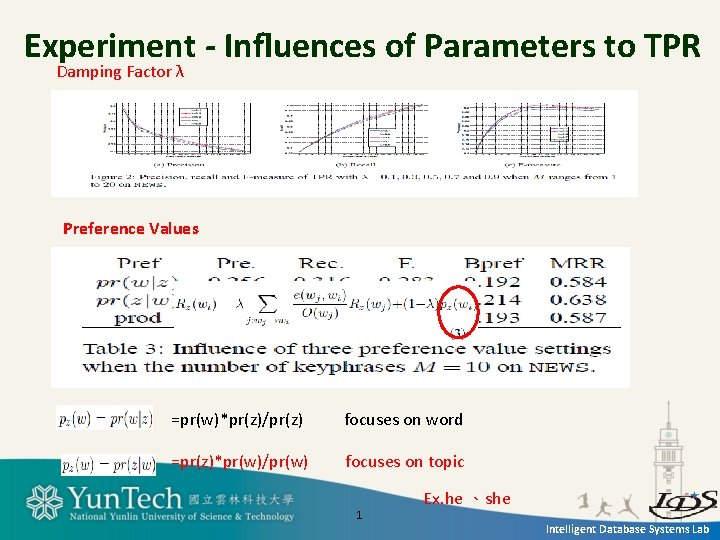

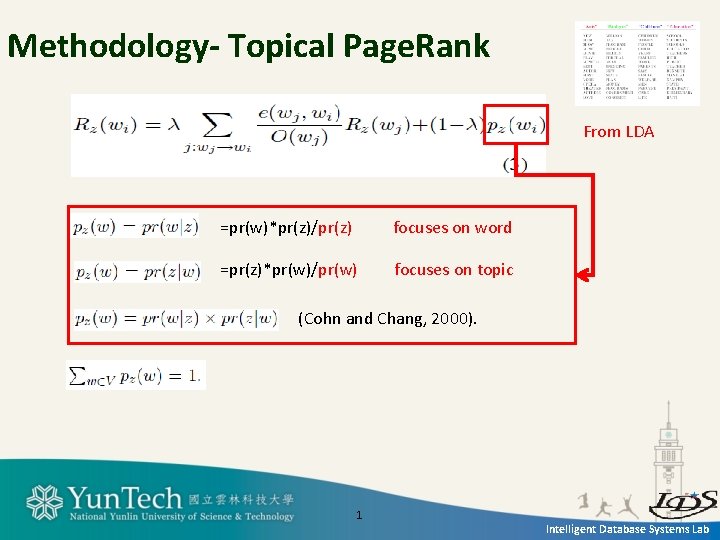

Methodology - Topical PageRank
Preference values, from LDA:
• pr(w|z) = pr(w, z) / pr(z): how much topic z focuses on word w
• pr(z|w) = pr(w, z) / pr(w): how much word w focuses on topic z
• The product of the two, pr(w|z) × pr(z|w) (Cohn and Chang, 2000)
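To make the role of the preference values concrete, here is a hedged sketch of a topic-specific random walk in which the uniform random jump is replaced by a jump distribution built from the preference values of one topic. The name `topical_pagerank`, the default damping value, and the normalization of the preference values to sum to 1 are assumptions for illustration.

```python
def topical_pagerank(edges, preference, damping=0.85, iterations=100):
    """PageRank biased toward one topic.

    edges maps (source, target) pairs to link weights.
    preference maps words to non-negative preference values for the
    topic, e.g. pr(w|z); words missing from it get preference 0.
    Assumption: preferences are normalized so they sum to 1.
    """
    vertices = sorted({v for edge in edges for v in edge} | set(preference))
    total = sum(preference.values())
    if total <= 0:
        raise ValueError("preference values must have a positive sum")
    jump = {v: preference.get(v, 0.0) / total for v in vertices}

    out_weight = {v: 0.0 for v in vertices}
    for (src, _dst), weight in edges.items():
        out_weight[src] += weight

    scores = {v: 1.0 / len(vertices) for v in vertices}
    for _ in range(iterations):
        # Random jump: biased toward words the topic prefers.
        new_scores = {v: (1.0 - damping) * jump[v] for v in vertices}
        for (src, dst), weight in edges.items():
            new_scores[dst] += damping * scores[src] * weight / out_weight[src]
        scores = new_scores
    return scores


# Toy graph (hypothetical data): the topic prefers word "a" only.
cycle = {("a", "b"): 1, ("b", "c"): 1, ("c", "a"): 1}
topic_scores = topical_pagerank(cycle, {"a": 1.0})
```

Running one such walk per topic gives each word a separate score for every topic, rather than the single score of plain PageRank.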

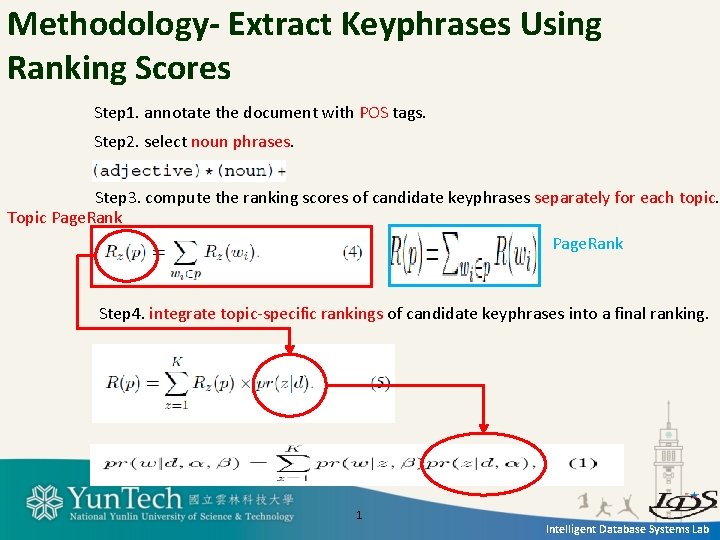

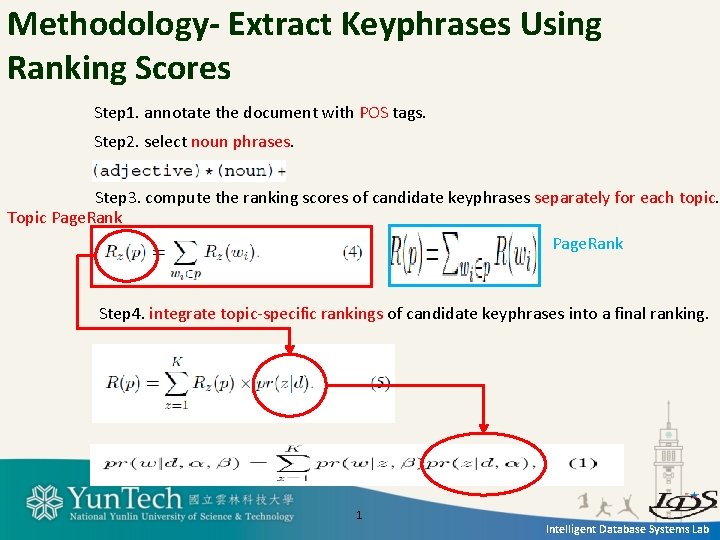

Methodology - Extract Keyphrases Using Ranking Scores
Step 1. Annotate the document with POS tags.
Step 2. Select noun phrases as candidate keyphrases.
Step 3. Compute the ranking scores of candidate keyphrases separately for each topic, using Topical PageRank.
Step 4. Integrate the topic-specific rankings of candidate keyphrases into a final ranking.
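Steps 3 and 4 above can be illustrated with a short Python sketch. It assumes that a candidate phrase's topic-specific score is the sum of the topic-specific scores of its words, and that the final score weights each topic by the document's topic probability pr(z|d). The function name `rank_keyphrases` and all data are hypothetical.

```python
def rank_keyphrases(candidates, topic_word_scores, doc_topic_probs):
    """Combine topic-specific word scores into one phrase ranking.

    candidates: list of candidate phrases (space-separated words).
    topic_word_scores: {topic: {word: score}} from the per-topic walks.
    doc_topic_probs: {topic: pr(z|d)} for the document being processed.
    Assumption: a phrase score is the sum of its word scores.
    """
    final = {}
    for phrase in candidates:
        words = phrase.split()
        final[phrase] = sum(
            doc_topic_probs[topic] * sum(scores.get(w, 0.0) for w in words)
            for topic, scores in topic_word_scores.items()
        )
    # Highest final score first.
    return sorted(final.items(), key=lambda item: item[1], reverse=True)


# Toy inputs (hypothetical data): two topics, two candidate phrases.
word_scores = {
    0: {"topic": 0.5, "model": 0.3, "news": 0.2},
    1: {"topic": 0.1, "model": 0.1, "news": 0.8},
}
ranking = rank_keyphrases(["topic model", "news"], word_scores, {0: 0.9, 1: 0.1})
```

The same candidates can rank differently for another document, because the document-topic probabilities decide which topic-specific ranking dominates.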

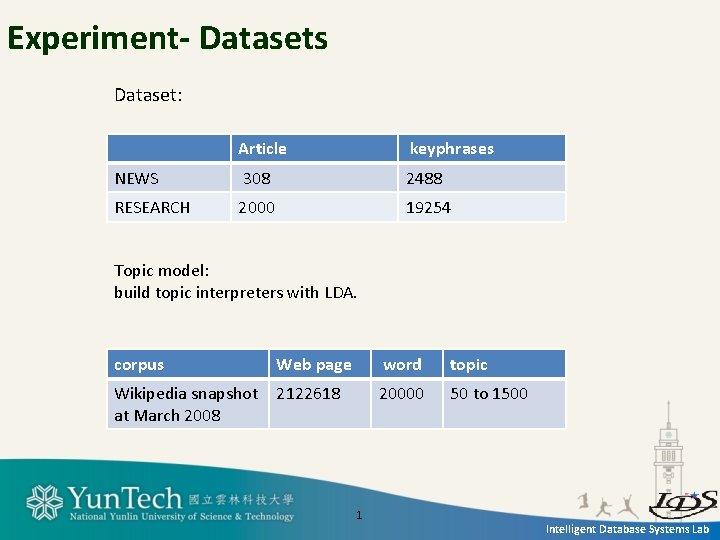

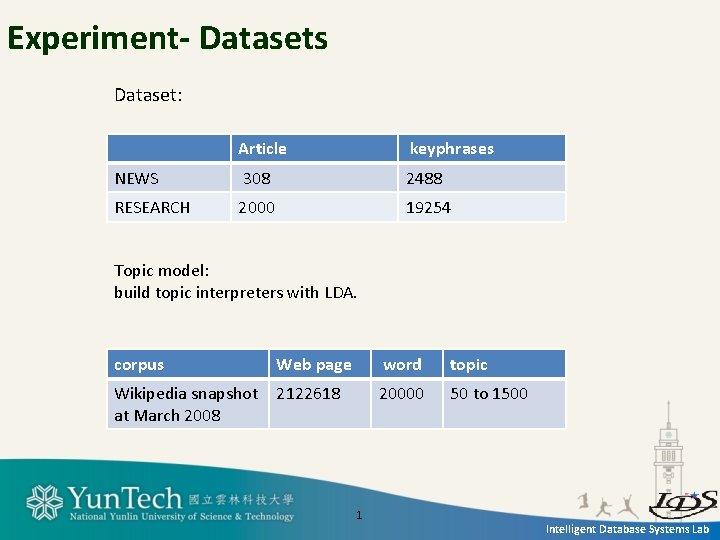

Experiment - Datasets
Datasets:
• NEWS: 308 articles, 2488 keyphrases
• RESEARCH: 2000 articles, 19254 keyphrases
Topic model: build topic interpreters with LDA.
• Corpus: Wikipedia snapshot at March 2008
• Web pages: 2122618
• Words: 20000
• Topics: 50 to 1500

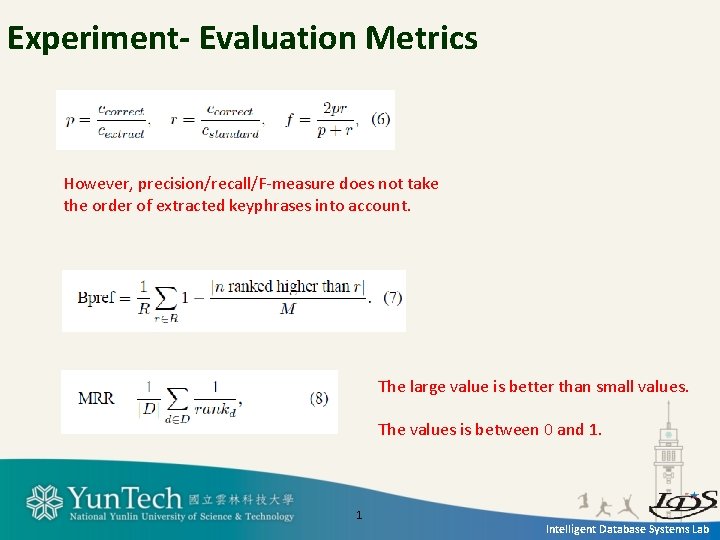

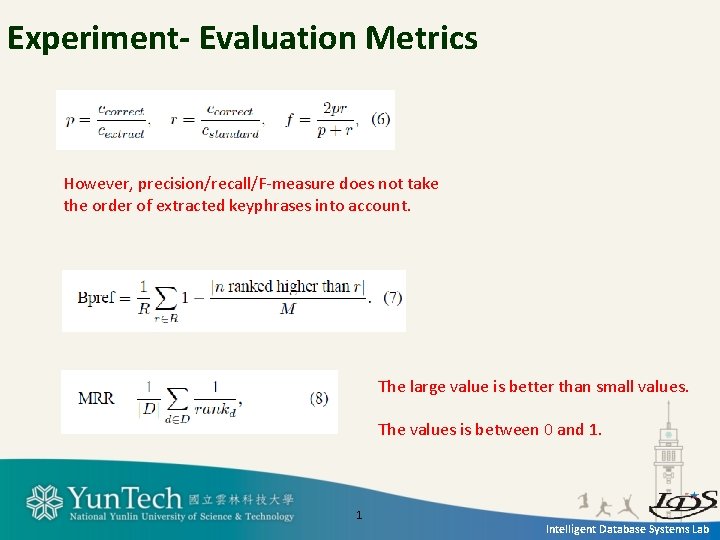

Experiment - Evaluation Metrics
• However, precision, recall, and F-measure do not take the order of extracted keyphrases into account.
• Metric values lie between 0 and 1; larger values are better.
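The point about ordering can be checked with a small Python sketch of precision, recall, and F-measure computed on sets of keyphrases. The function name and the example keyphrases are hypothetical; the formulas are the standard set-based definitions.

```python
def precision_recall_f1(extracted, gold):
    """Set-based precision, recall, and F-measure for keyphrases."""
    extracted_set, gold_set = set(extracted), set(gold)
    correct = len(extracted_set & gold_set)
    precision = correct / len(extracted_set) if extracted_set else 0.0
    recall = correct / len(gold_set) if gold_set else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical keyphrases: the same extraction in two different orders.
gold = ["topic model", "keyphrase extraction", "random walk"]
good_order = ["topic model", "random walk", "graph", "window"]
bad_order = ["graph", "window", "random walk", "topic model"]

# Both orders give identical scores, because sets ignore ranking.
same = precision_recall_f1(good_order, gold) == precision_recall_f1(bad_order, gold)
```

Because the correct keyphrases score the same whether they appear first or last, a rank-sensitive metric is needed alongside these three.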

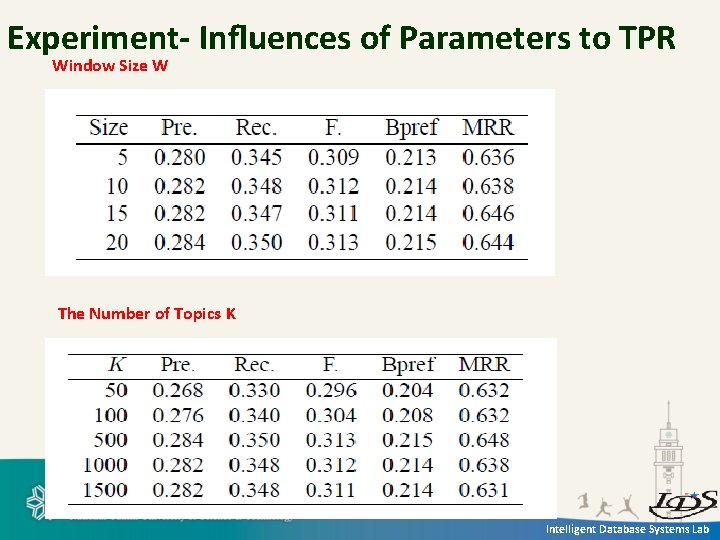

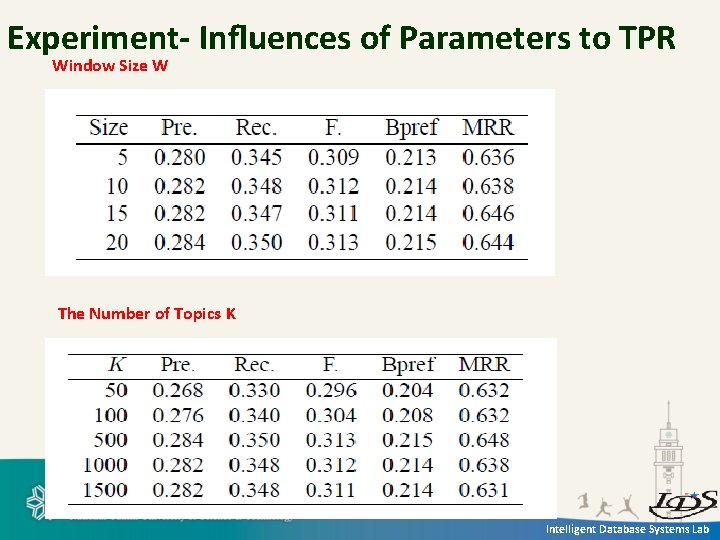

Experiment - Influences of Parameters on TPR
• Window Size W
• The Number of Topics K

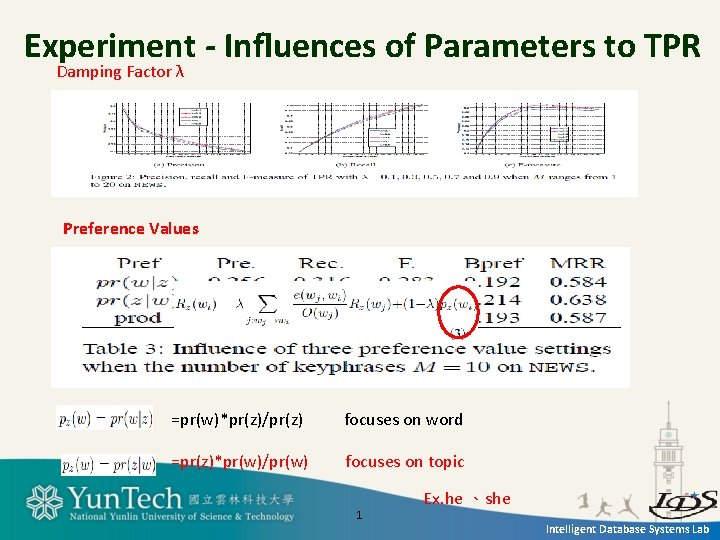

Experiment - Influences of Parameters on TPR
• Damping Factor λ
• Preference Values
• pr(w|z) = pr(w, z) / pr(z): how much topic z focuses on word w
• pr(z|w) = pr(w, z) / pr(w): how much word w focuses on topic z
• Ex. he, she

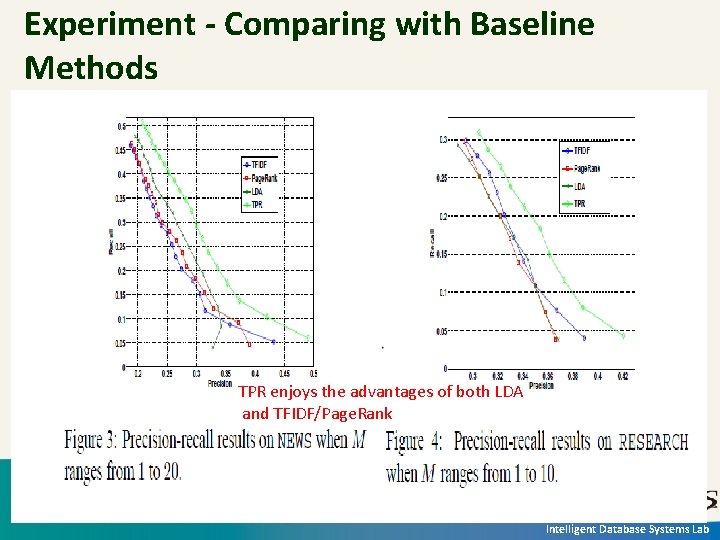

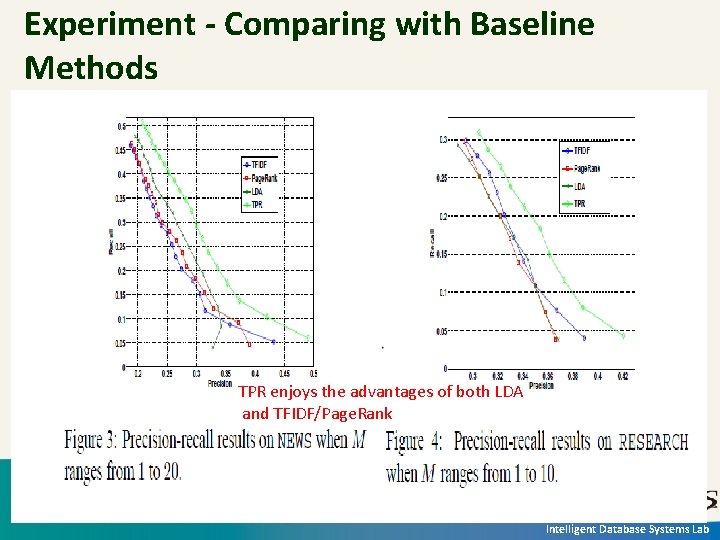

Experiment - Comparing with Baseline Methods
• TFIDF and PageRank do not use topic information.
• TPR enjoys the advantages of both LDA and TFIDF/PageRank.

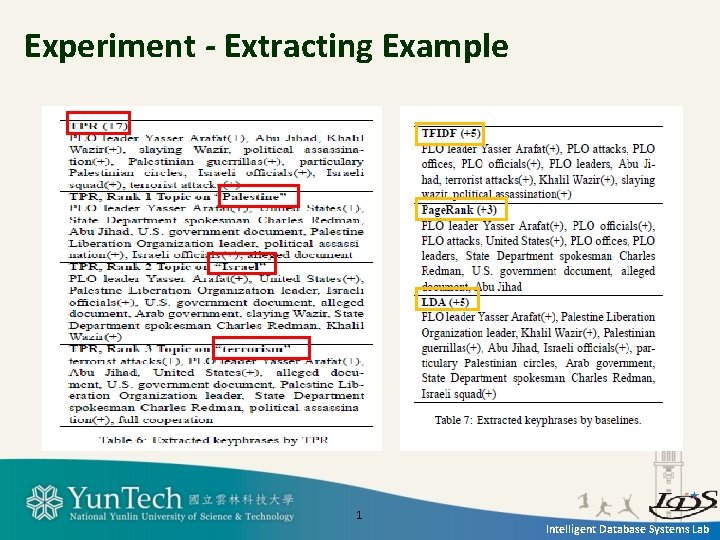

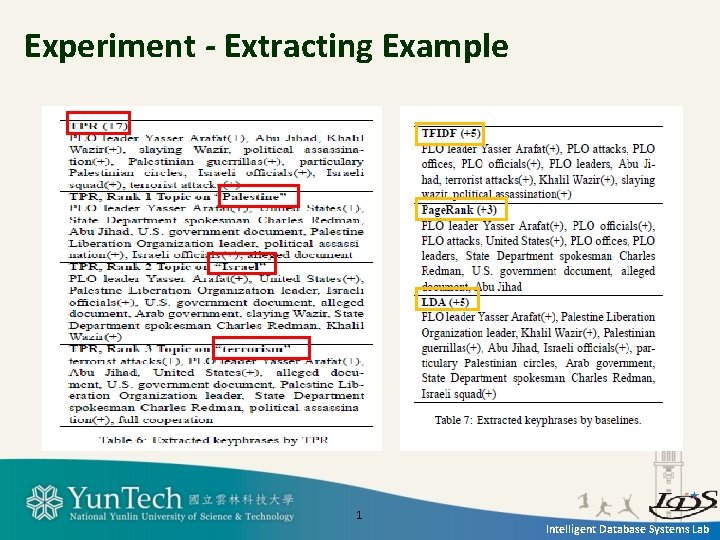

Experiment - Extracting Example

Conclusions
• Experiments on two datasets show that TPR achieves better performance than the other baseline methods.

Comments
• Advantages
  – TPR incorporates topic information within the random walk for keyphrase extraction.
• Applications
  – Automatic keyphrase extraction.