
ATLAS Computing
Alessandro De Salvo
CCR Workshop, 18-5-2016

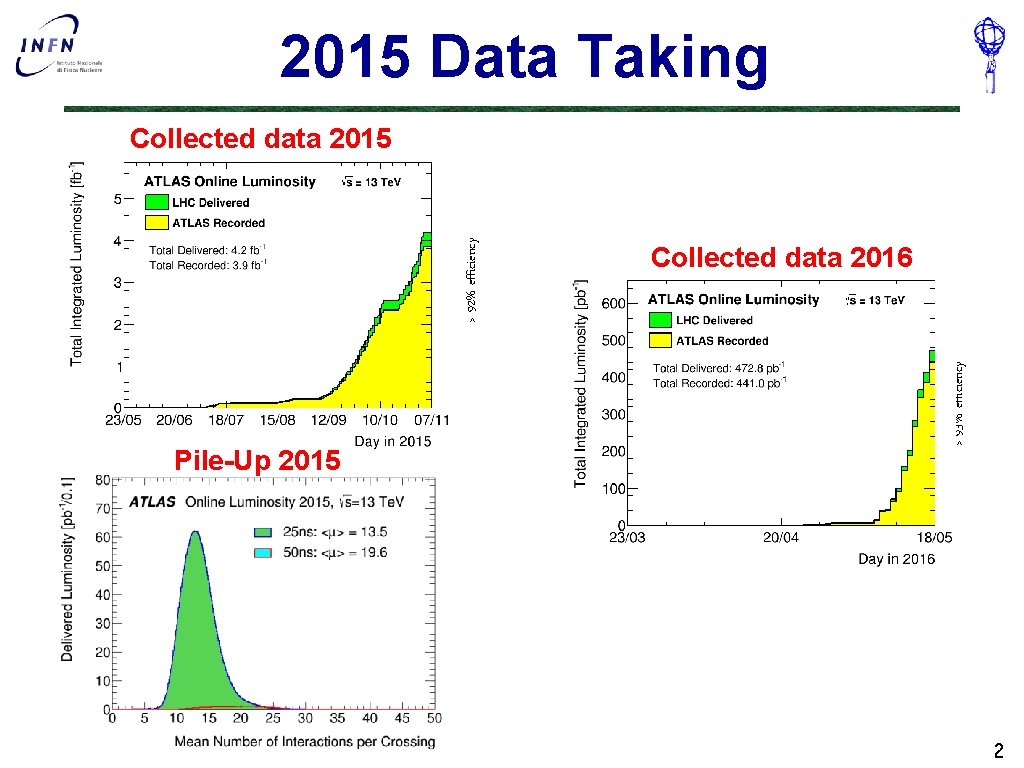

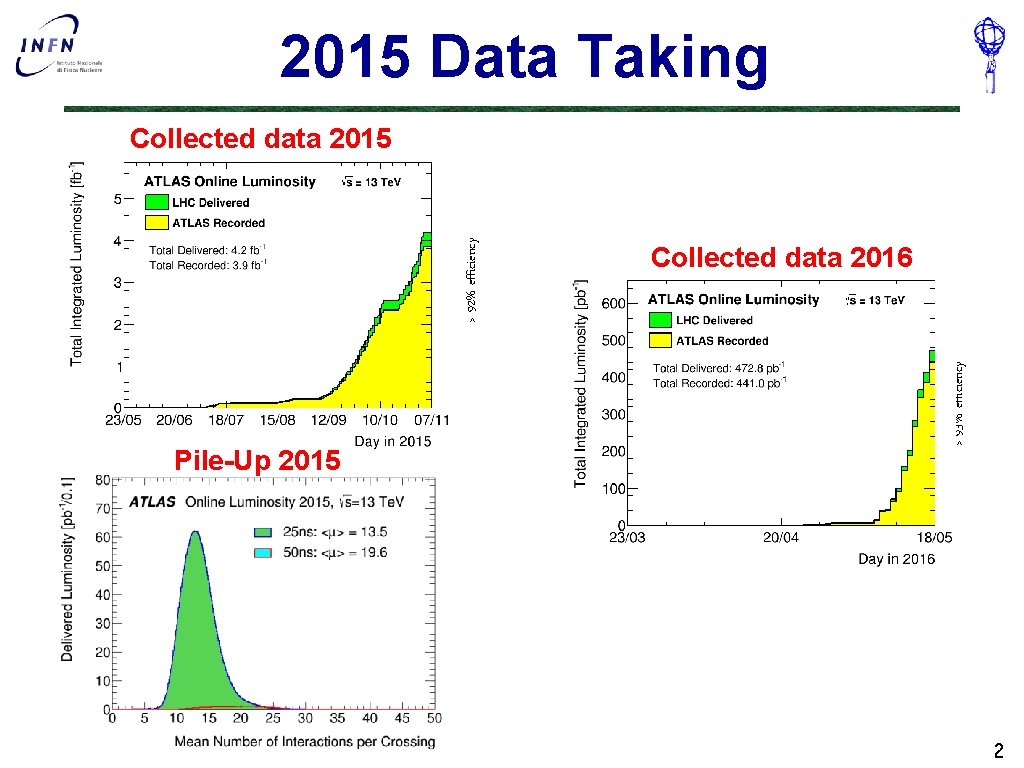

2015 Data Taking

[Figures: pile-up in 2015; collected data in 2015 and 2016, annotated with > 93% and > 92% data-taking efficiency]

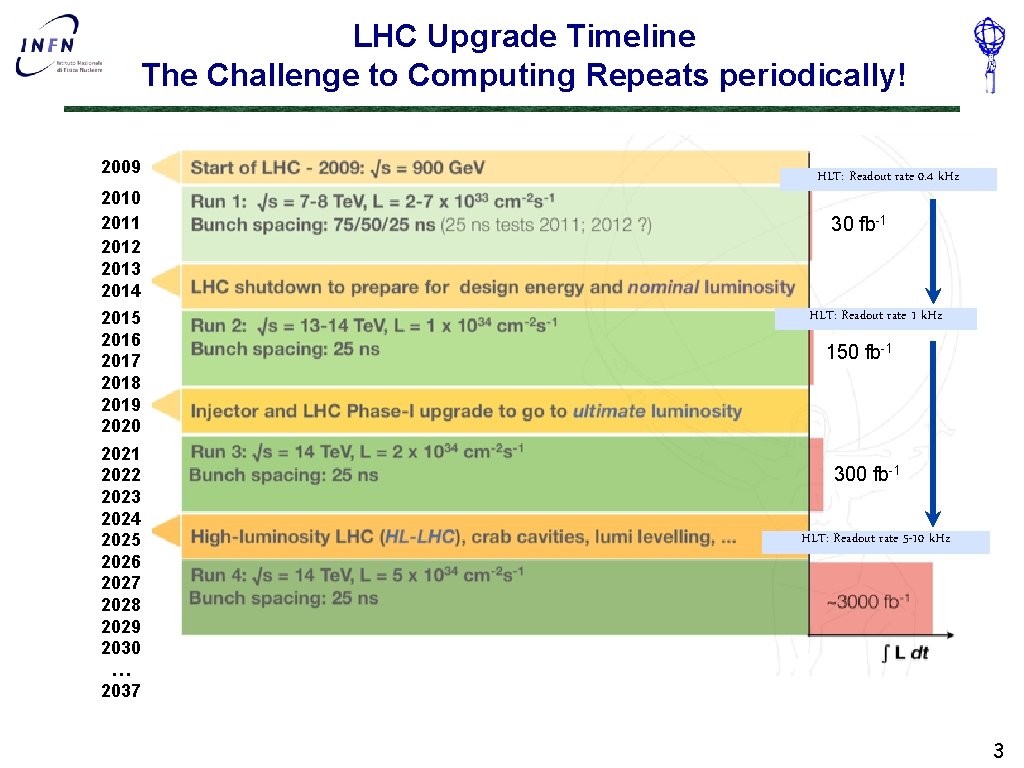

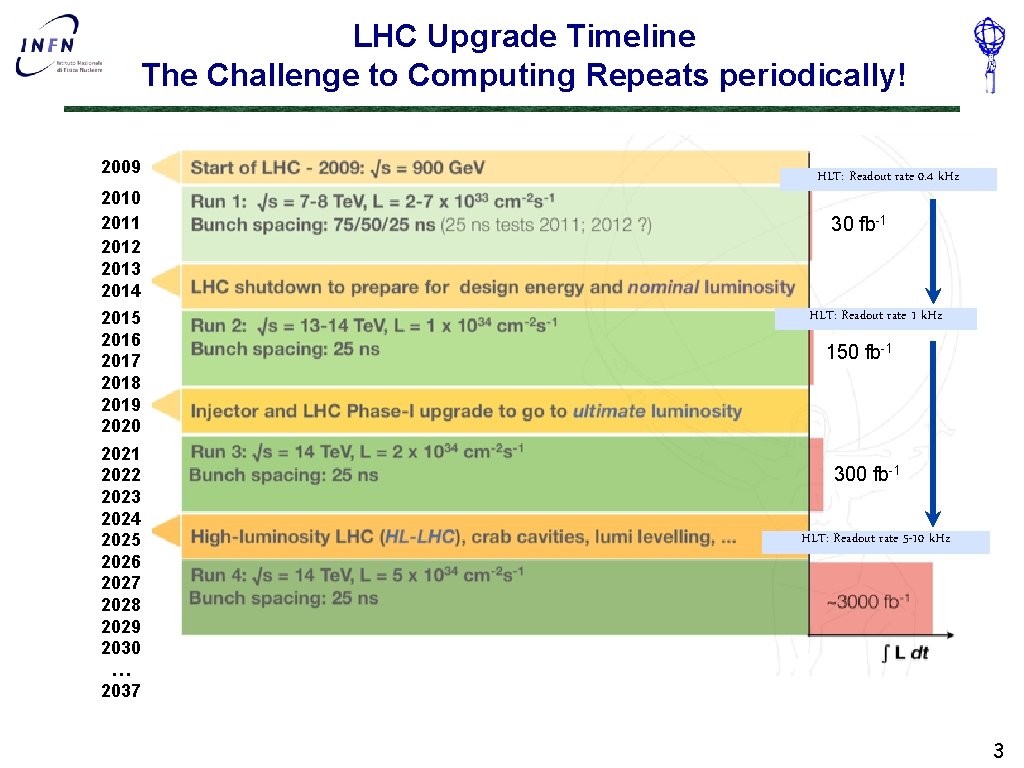

LHC Upgrade Timeline

The challenge to computing repeats periodically!

[Timeline figure, 2009 to 2037: HLT readout rate 0.4 kHz (30 fb⁻¹), then 1 kHz (150 fb⁻¹, 300 fb⁻¹), then 5-10 kHz]

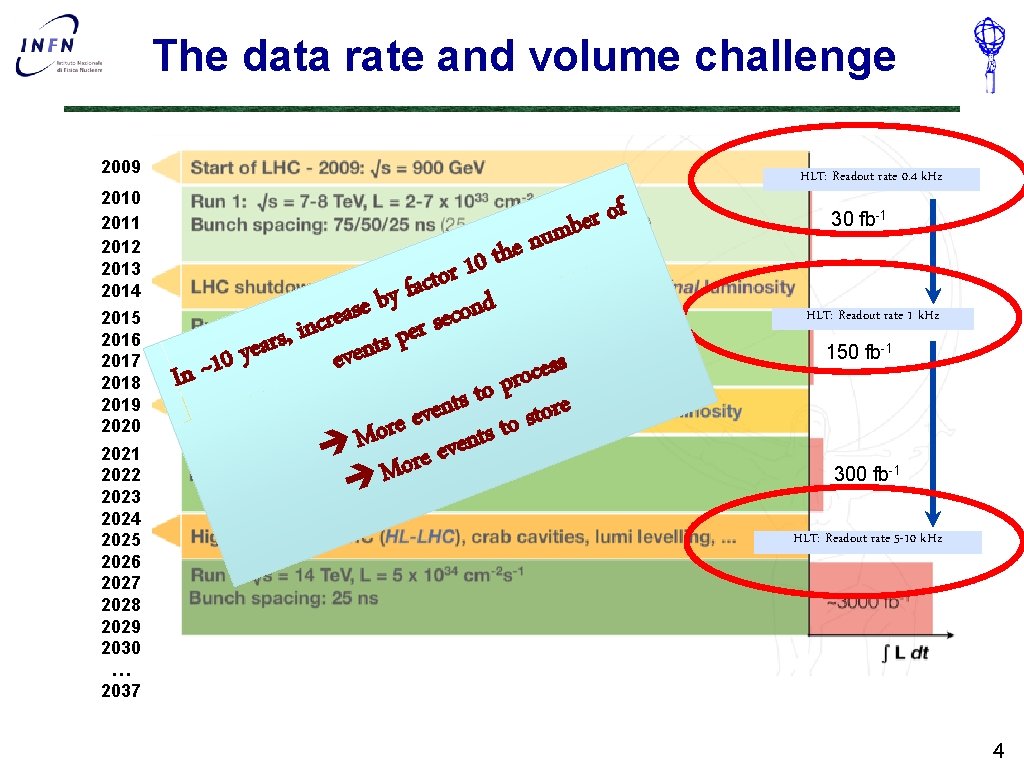

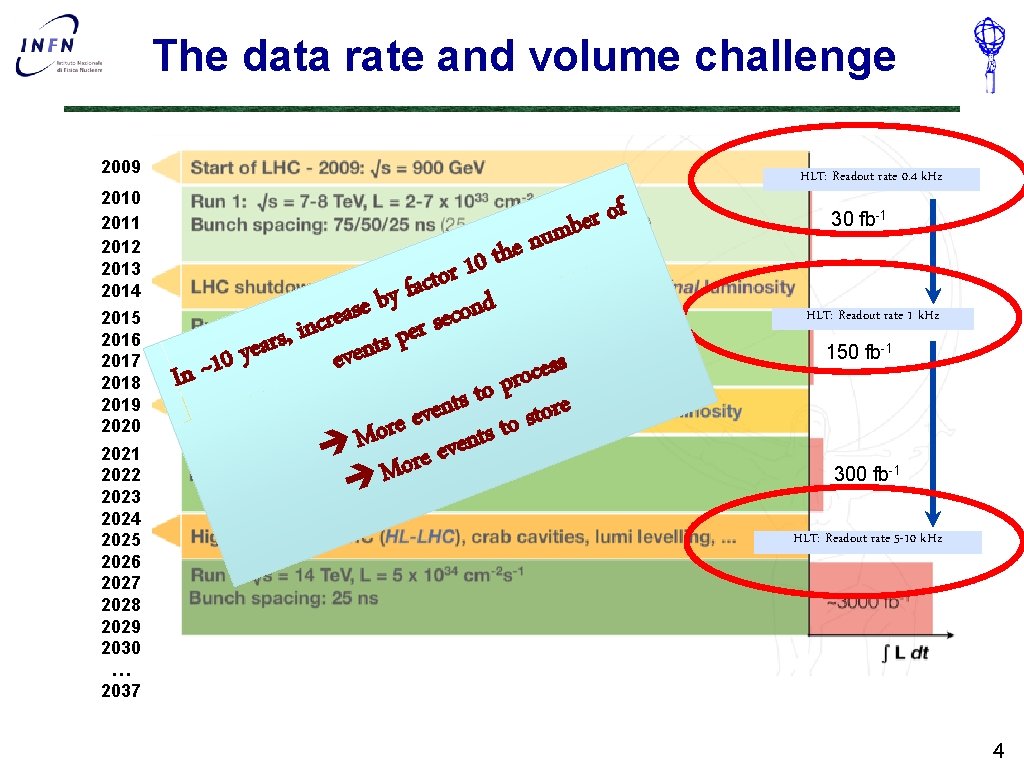

The data rate and volume challenge

In ~10 years, the number of events per second increases by a factor of 10 → more events to process, more events to store.

[Timeline figure, 2009 to 2037: HLT readout rate 0.4 kHz (30 fb⁻¹), then 1 kHz (150 fb⁻¹, 300 fb⁻¹), then 5-10 kHz]
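To make the "factor 10" concrete, the sketch below multiplies each HLT readout rate from the timeline by a yearly live time. The live time of 7 × 10^6 s is a hypothetical round number chosen only for illustration, not an ATLAS figure, so only the ratios between the scenarios are meaningful.

```python
# HLT readout rates (Hz) from the timeline; 10 kHz is the upper end of
# the quoted 5-10 kHz range.
READOUT_RATE_HZ = {"Run 1": 400, "Runs 2-3": 1_000, "HL-LHC": 10_000}

# HYPOTHETICAL: seconds of data taking per year, for illustration only.
LIVE_SECONDS_PER_YEAR = 7e6

def events_per_year(rate_hz, live_seconds=LIVE_SECONDS_PER_YEAR):
    """Number of events written out per year at a given HLT readout rate."""
    return rate_hz * live_seconds

if __name__ == "__main__":
    for period, rate in READOUT_RATE_HZ.items():
        print(f"{period:9s} {rate:>6} Hz -> {events_per_year(rate):.1e} events/year")
    ratio = READOUT_RATE_HZ["HL-LHC"] / READOUT_RATE_HZ["Runs 2-3"]
    print(f"HL-LHC vs Runs 2-3: x{ratio:.0f} more events to process and store")
```

Whatever live time is assumed, the event count scales linearly with the readout rate, which is why the jump to 5-10 kHz translates directly into processing and storage needs.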

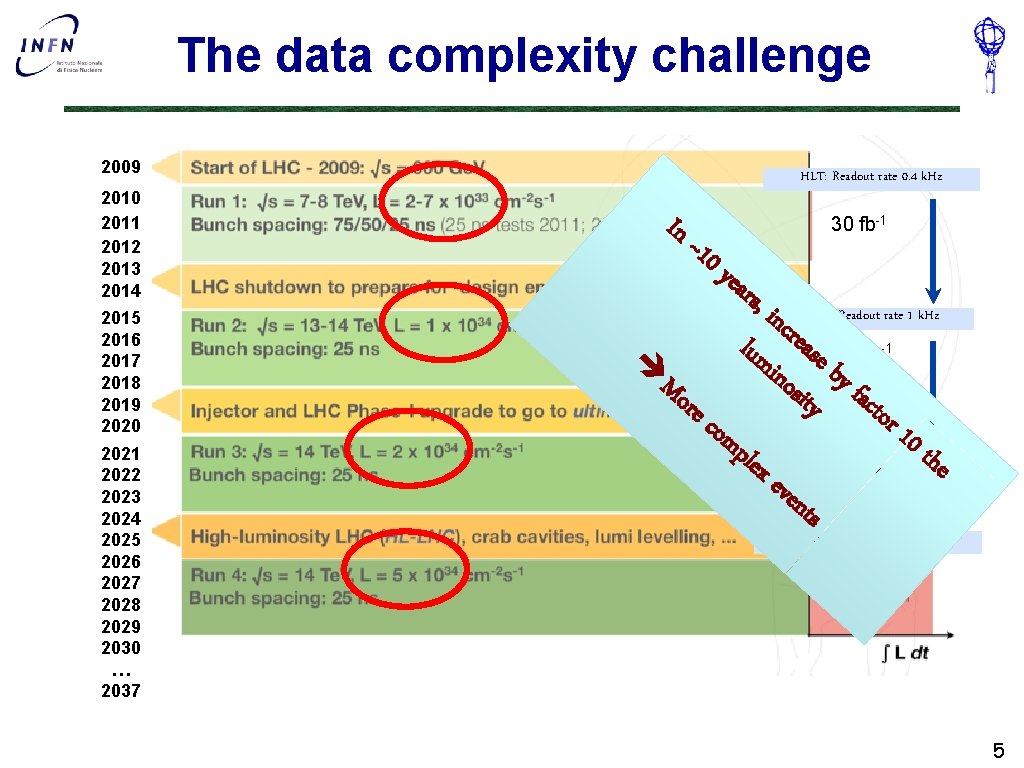

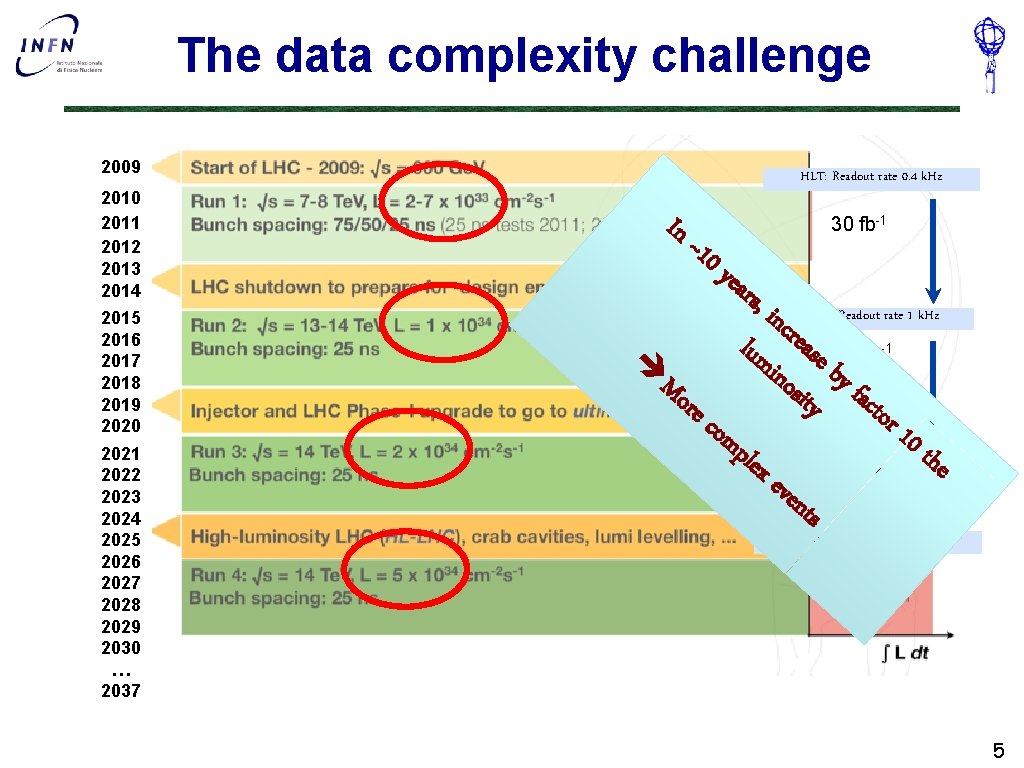

The data complexity challenge

In ~10 years, the luminosity increases by a factor of 10 → more complex events.

[Timeline figure, 2009 to 2037: HLT readout rate 0.4 kHz (30 fb⁻¹), then 1 kHz (150 fb⁻¹, 300 fb⁻¹), then 5-10 kHz]

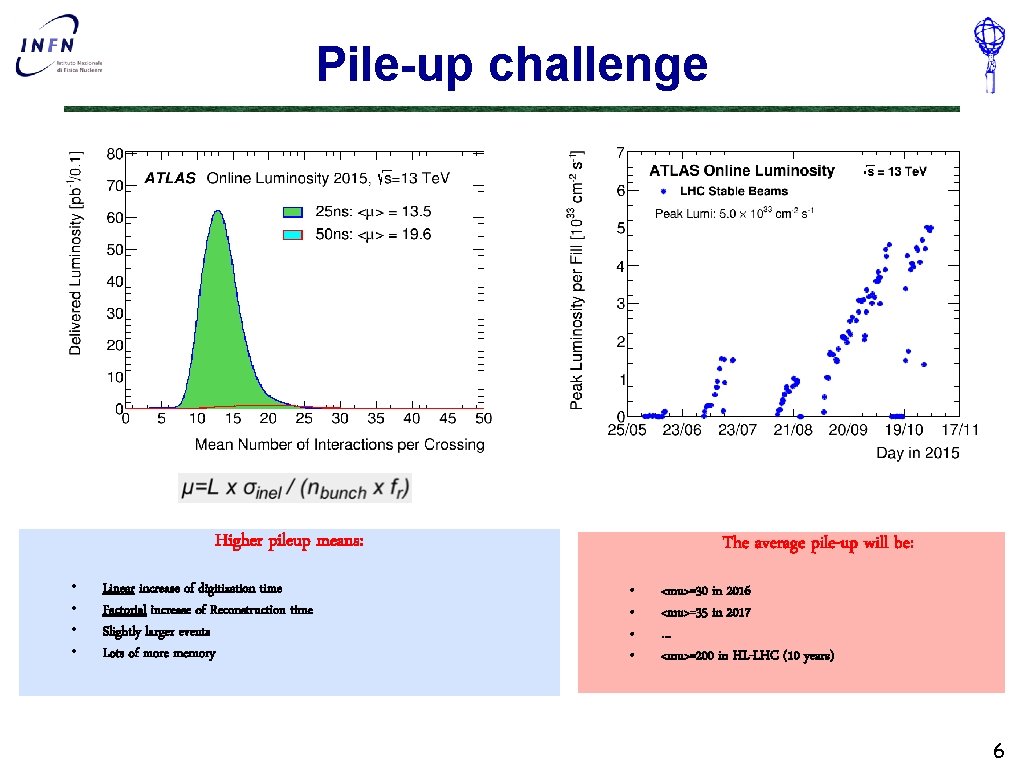

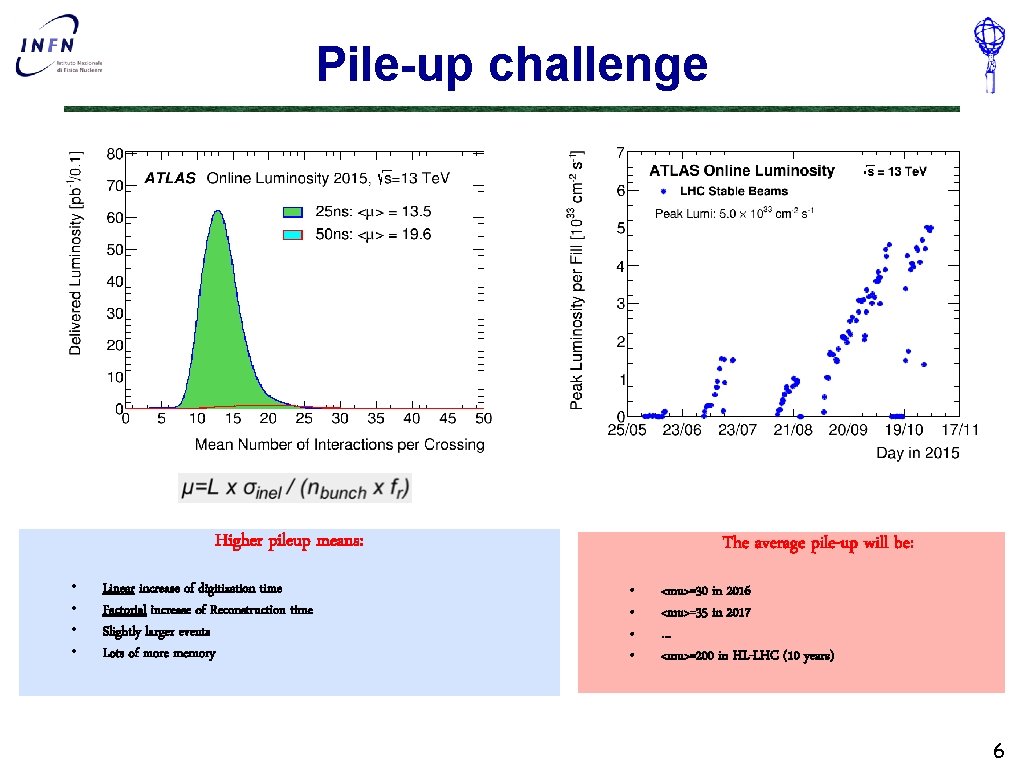

Pile-up challenge

Higher pile-up means:
• Linear increase of digitization time
• Factorial increase of reconstruction time
• Slightly larger events
• Much more memory

The average pile-up will be:
• ⟨μ⟩ = 30 in 2016
• ⟨μ⟩ = 35 in 2017
• …
• ⟨μ⟩ = 200 at HL-LHC (in ~10 years)
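The linear part of the pile-up argument can be quantified directly. Assuming, as the slide states, that digitization time grows proportionally with ⟨μ⟩, and taking ⟨μ⟩ = 30 as the reference, the sketch computes the relative digitization cost at each quoted pile-up value. Reconstruction, which grows much faster than linearly, is deliberately not modelled here.

```python
# <mu> values quoted on the slide.
PILEUP = {"2016": 30, "2017": 35, "HL-LHC": 200}

def relative_digitization_time(mu, mu_ref=30):
    """Digitization cost relative to mu_ref, assuming linear scaling in <mu>."""
    return mu / mu_ref

if __name__ == "__main__":
    for period, mu in PILEUP.items():
        print(f"{period:7s} <mu>={mu:3d}  x{relative_digitization_time(mu):.2f}")
```

Even under this optimistic linear assumption, HL-LHC digitization costs roughly 6.7 times more per event than in 2016.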

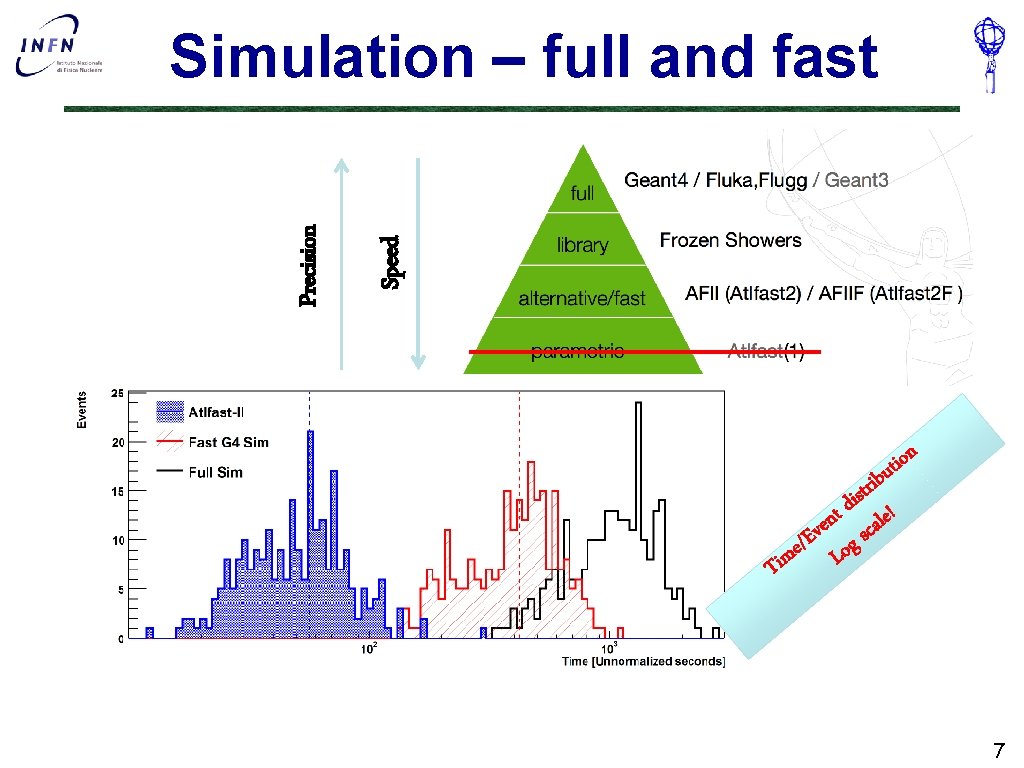

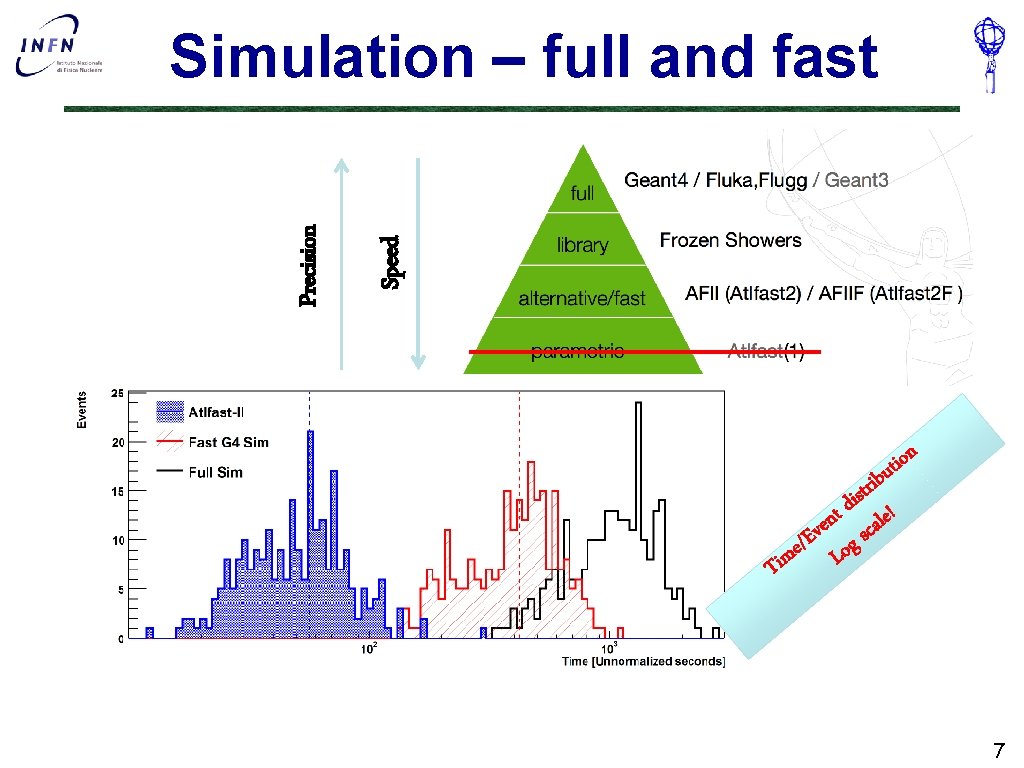

Simulation: full and fast

[Figure: simulation flavours ordered by speed vs. precision; time/event distribution (log scale!)]

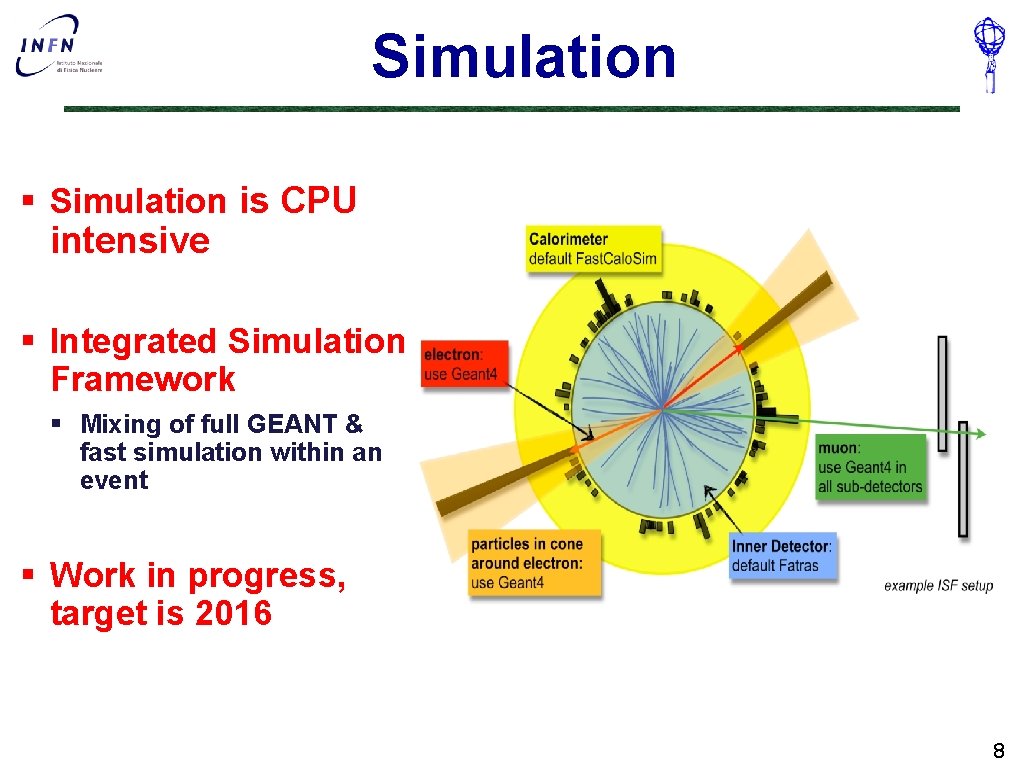

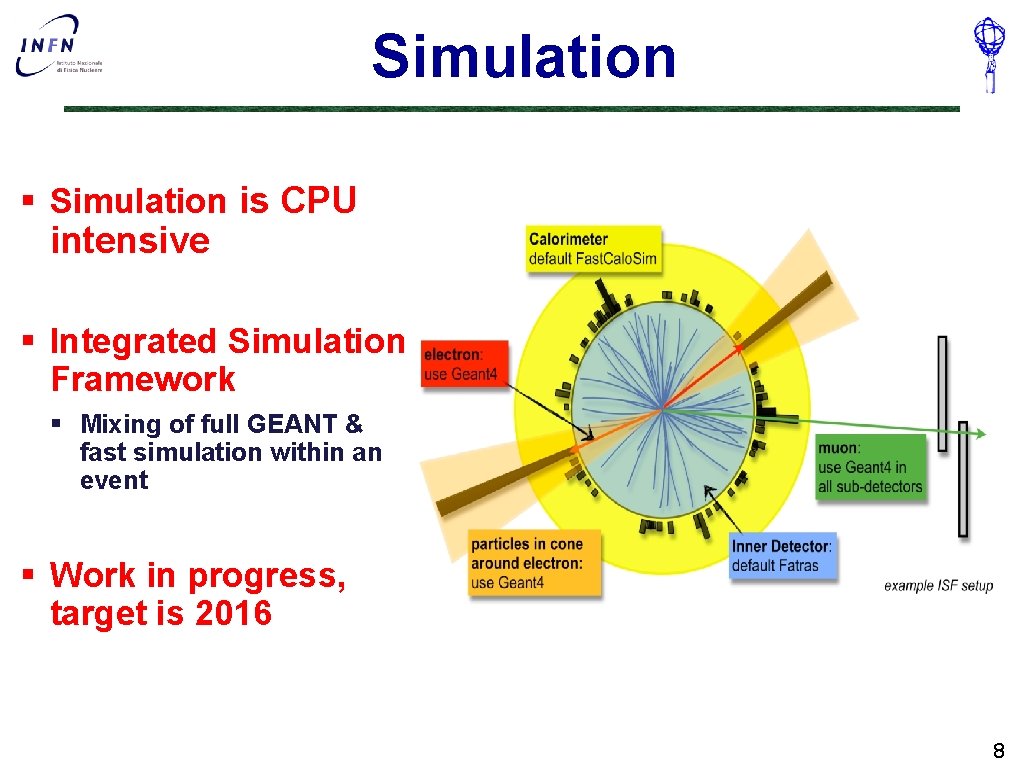

Simulation

§ Simulation is CPU intensive
§ Integrated Simulation Framework
§ Mixing of full GEANT and fast simulation within an event
§ Work in progress, target is 2016
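The core idea of mixing full and fast simulation within an event is that the choice of simulator is made per particle rather than per event. The router below sketches that idea. The routing rules, detector-region names and simulator labels are hypothetical and do not reproduce the actual Integrated Simulation Framework configuration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Particle:
    pdg_id: int        # particle type (PDG code)
    region: str        # detector region the particle is entering
    energy_gev: float

# HYPOTHETICAL routing policy, checked in order: precise (slow) simulation
# where it matters most, fast parametrised showers for e/gamma in the calorimeter.
RULES = [
    (lambda p: p.region == "inner_detector", "full"),
    (lambda p: p.region == "calorimeter" and abs(p.pdg_id) in (11, 22), "fast_calo"),
]
DEFAULT_SIMULATOR = "full"

def route(particle):
    """Return the name of the simulator that should handle this particle."""
    for matches, simulator in RULES:
        if matches(particle):
            return simulator
    return DEFAULT_SIMULATOR

def simulate_event(particles):
    """Group the particles of one event by the simulator that handles them."""
    groups = {}
    for p in particles:
        groups.setdefault(route(p), []).append(p)
    return groups

if __name__ == "__main__":
    event = [Particle(211, "inner_detector", 5.0),
             Particle(22, "calorimeter", 50.0),
             Particle(211, "calorimeter", 30.0)]
    for simulator, parts in simulate_event(event).items():
        print(simulator, [p.pdg_id for p in parts])
```

Keeping the rules as an ordered list makes the speed/precision trade-off a configuration choice rather than a code change.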

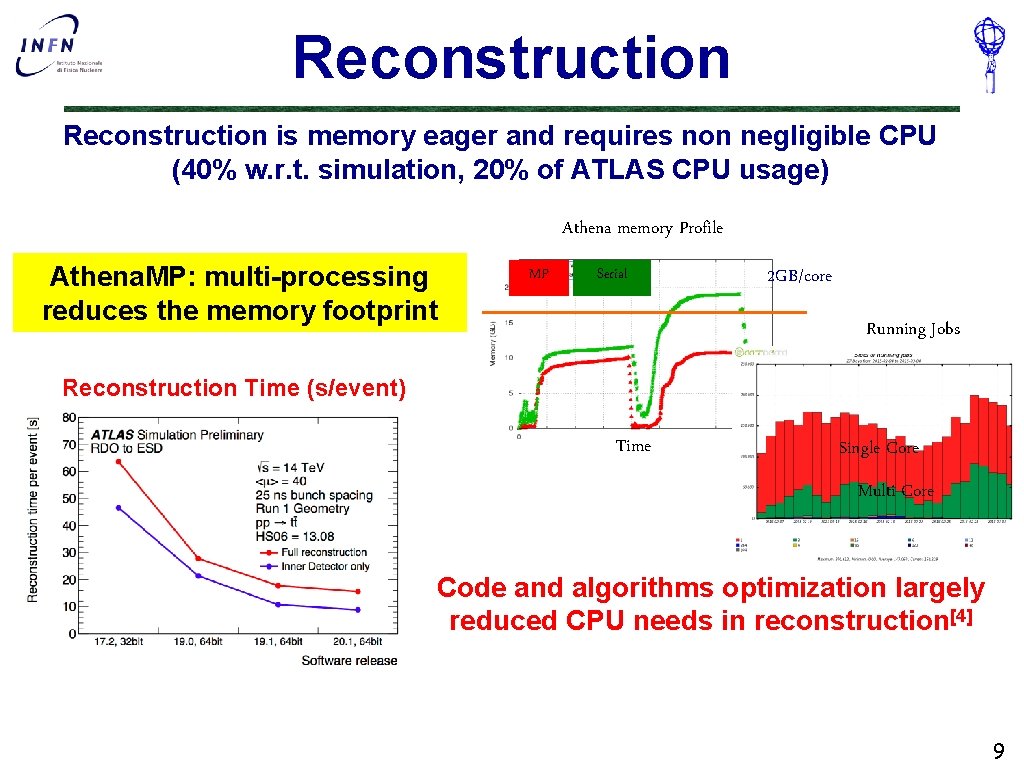

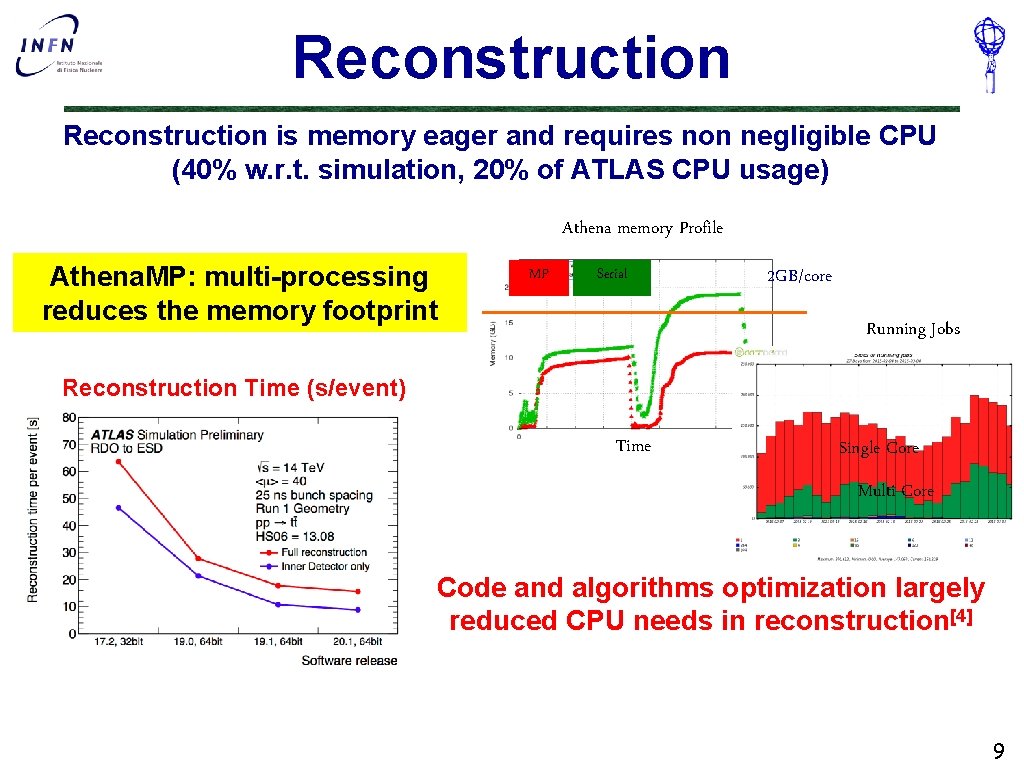

Reconstruction is memory-hungry and requires non-negligible CPU (40% with respect to simulation, 20% of ATLAS CPU usage).

AthenaMP: multi-processing reduces the memory footprint.

[Figures: Athena memory profile, serial vs. MP, against the 2 GB/core limit; running jobs, single-core vs. multi-core; reconstruction time (s/event) vs. time]

Code and algorithm optimization has greatly reduced the CPU needs of reconstruction [4].
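AthenaMP saves memory by forking worker processes after the expensive initialization, so that data loaded once by the parent are shared with the workers copy-on-write instead of being duplicated. The sketch below reproduces that pattern with Python's `multiprocessing` "fork" context (POSIX only). `DETECTOR_DATA` is a hypothetical stand-in for geometry and conditions data. In CPython, reference counting writes to object headers, so page sharing is less effective than in a C++ framework such as Athena; the example shows the structure, not the actual savings.

```python
import multiprocessing as mp

# HYPOTHETICAL stand-in for large read-only data (geometry, conditions,
# field map...) that a reconstruction job loads during initialization.
DETECTOR_DATA = None

def initialize():
    """Expensive set-up, done once in the parent before any worker exists."""
    global DETECTOR_DATA
    DETECTOR_DATA = list(range(1_000_000))

def process_event(event_id):
    # Forked workers inherit DETECTOR_DATA from the parent: the OS shares the
    # pages copy-on-write instead of each worker loading its own copy.
    return event_id, DETECTOR_DATA[event_id % len(DETECTOR_DATA)]

def run(n_events, n_workers=4):
    initialize()
    ctx = mp.get_context("fork")      # fork *after* initialization
    with ctx.Pool(n_workers) as pool:
        return dict(pool.map(process_event, range(n_events)))

if __name__ == "__main__":
    print(len(run(16)))
```

By contrast, running N independent serial jobs would load N separate copies of the same data.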

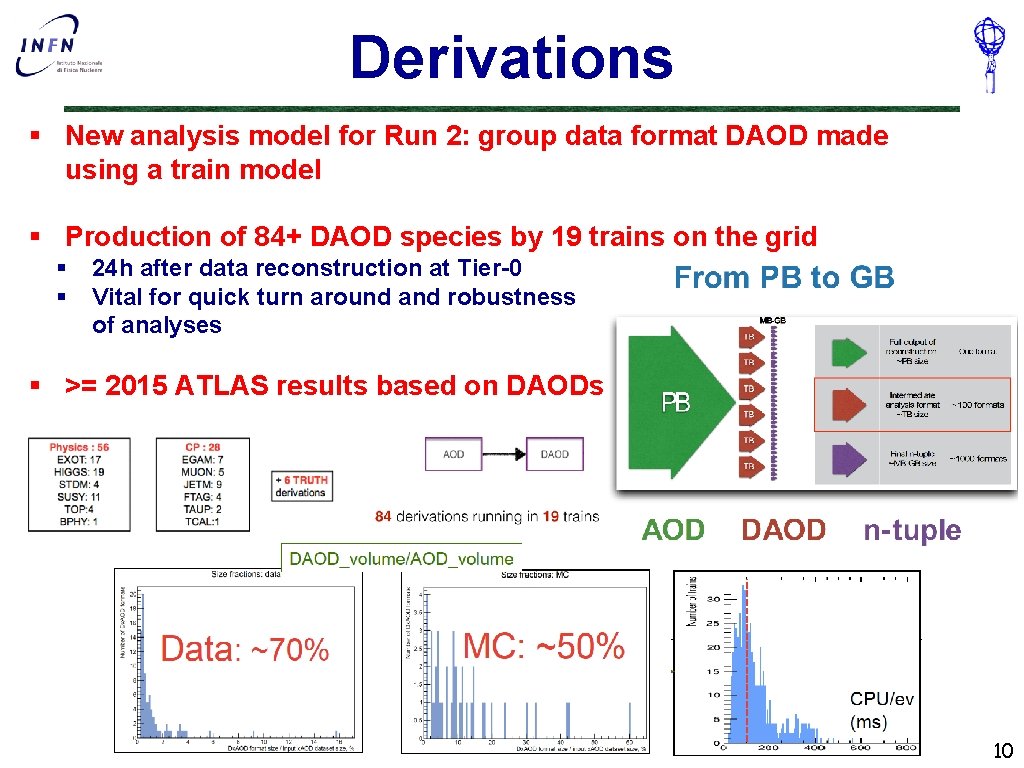

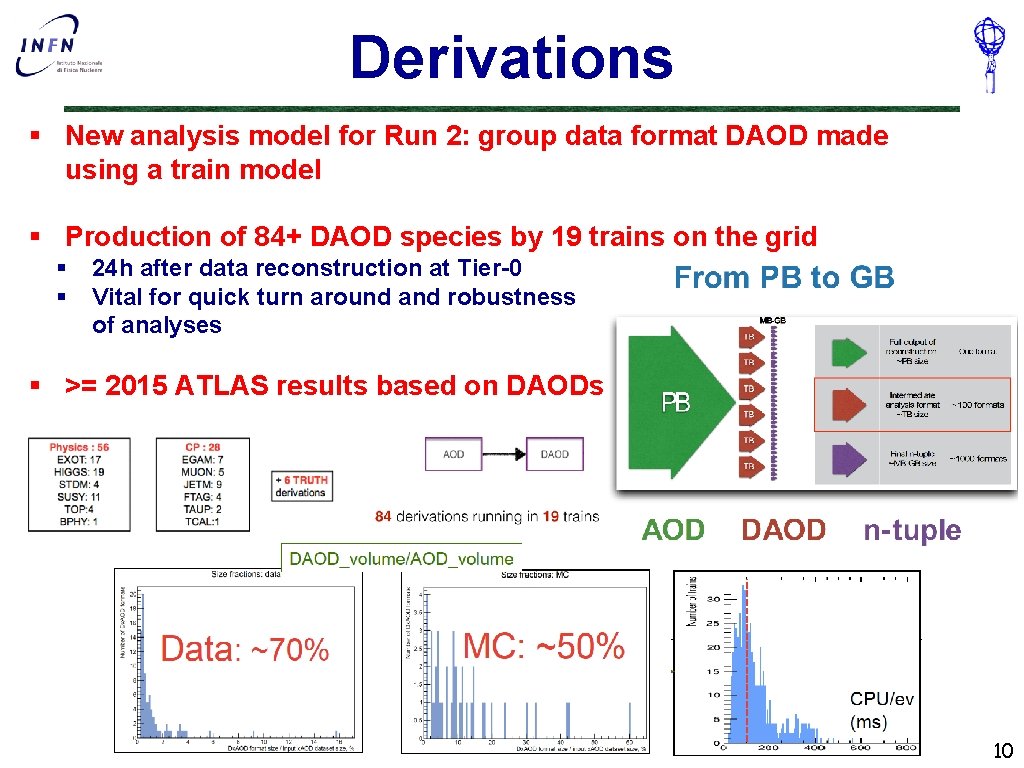

Derivations

§ New analysis model for Run 2: group data format DAOD, made using a train model
§ Production of 84+ DAOD species by 19 trains on the Grid
§ 24 h after data reconstruction at Tier-0
§ Vital for quick turnaround and robustness of analyses
§ ATLAS results from 2015 onwards are based on DAODs
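In a train model, many derivations ("carriages") share a single read of the input, which is what makes producing dozens of DAOD formats affordable. A minimal sketch follows. Each carriage pairs an event selection (skimming) with the variables it keeps (slimming). The format names, selections and variables are hypothetical and are not actual ATLAS derivation definitions.

```python
from typing import Dict, List

Event = Dict[str, float]

# HYPOTHETICAL carriages: name -> (event selection, variables to keep).
CARRIAGES = {
    "DAOD_MUON": (lambda e: e["n_muons"] >= 1, ["n_muons", "met"]),
    "DAOD_JET": (lambda e: e["leading_jet_pt"] > 100.0, ["leading_jet_pt"]),
}

def run_train(events, carriages=CARRIAGES):
    """Read the input once and fill every carriage's output in the same pass."""
    outputs: Dict[str, List[Event]] = {name: [] for name in carriages}
    for event in events:                          # single pass over the input
        for name, (select, keep) in carriages.items():
            if select(event):                     # skimming
                outputs[name].append({k: event[k] for k in keep})  # slimming
    return outputs

if __name__ == "__main__":
    events = [{"n_muons": 1, "met": 20.0, "leading_jet_pt": 50.0},
              {"n_muons": 0, "met": 5.0, "leading_jet_pt": 150.0}]
    for name, selected in run_train(events).items():
        print(name, selected)
```

Adding a carriage costs only its own selection and output, not another pass over the input data.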

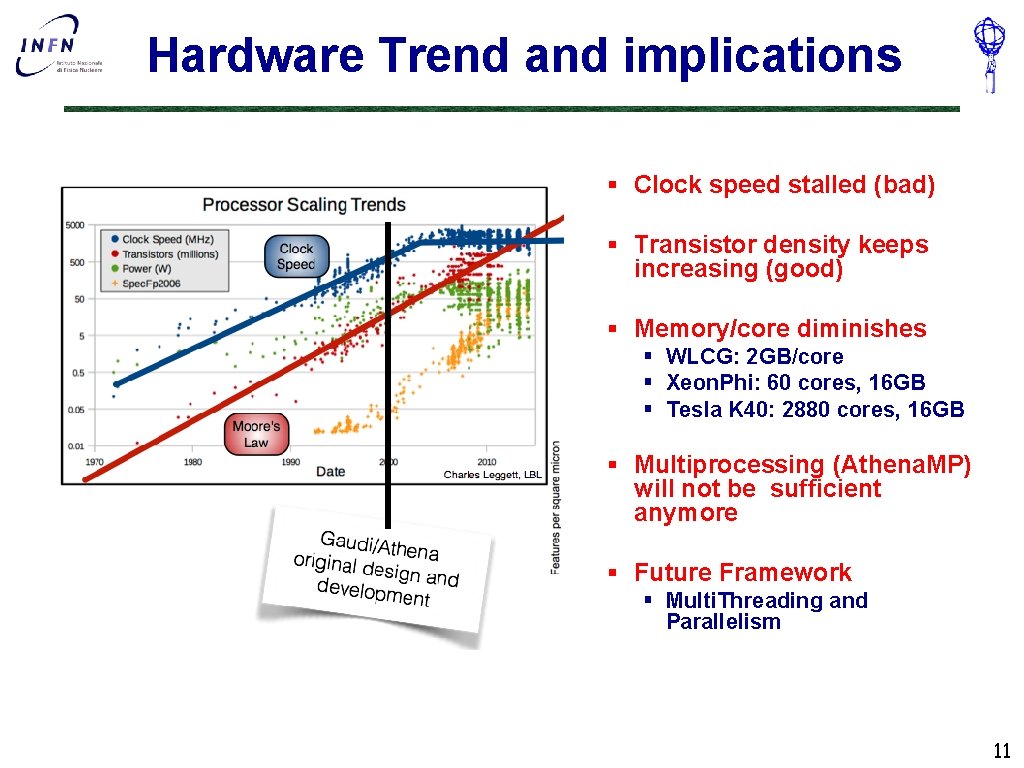

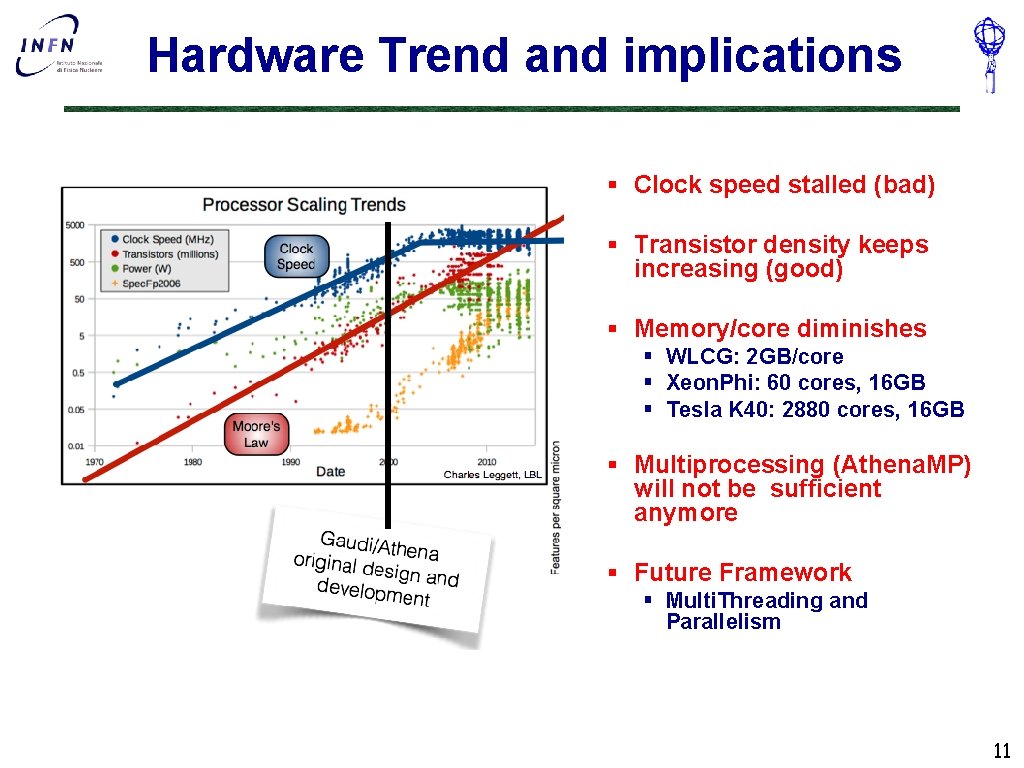

Hardware trends and implications

§ Clock speed stalled (bad)
§ Transistor density keeps increasing (good)
§ Memory/core diminishes
§ WLCG: 2 GB/core
§ Xeon Phi: 60 cores, 16 GB
§ Tesla K40: 2880 cores, 12 GB
§ Multiprocessing (AthenaMP) will no longer be sufficient
§ Future Framework
§ Multi-threading and parallelism
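The "memory/core diminishes" point can be checked with the numbers on the slide. The sketch divides memory by core count for each device and compares the result with the WLCG baseline of 2 GB/core. It uses 12 GB for the Tesla K40, which is that card's on-board memory, and decimal units (1 GB = 1000 MB).

```python
# (memory in GB, number of cores); the WLCG entry is already per core.
DEVICES = {
    "WLCG (per core)": (2.0, 1),
    "Xeon Phi": (16.0, 60),
    "Tesla K40": (12.0, 2880),
}

def memory_per_core_mb(memory_gb, cores):
    """Memory available per core, in MB (decimal units)."""
    return memory_gb * 1000.0 / cores

if __name__ == "__main__":
    baseline = memory_per_core_mb(*DEVICES["WLCG (per core)"])
    for name, (mem, cores) in DEVICES.items():
        per_core = memory_per_core_mb(mem, cores)
        print(f"{name:16s} {per_core:8.1f} MB/core  ({baseline / per_core:.1f}x less than WLCG)")
```

With a few hundred MB per core on a Xeon Phi and a few MB per core on a GPU, one process per core with its own event data no longer fits, which is the motivation for multi-threading.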

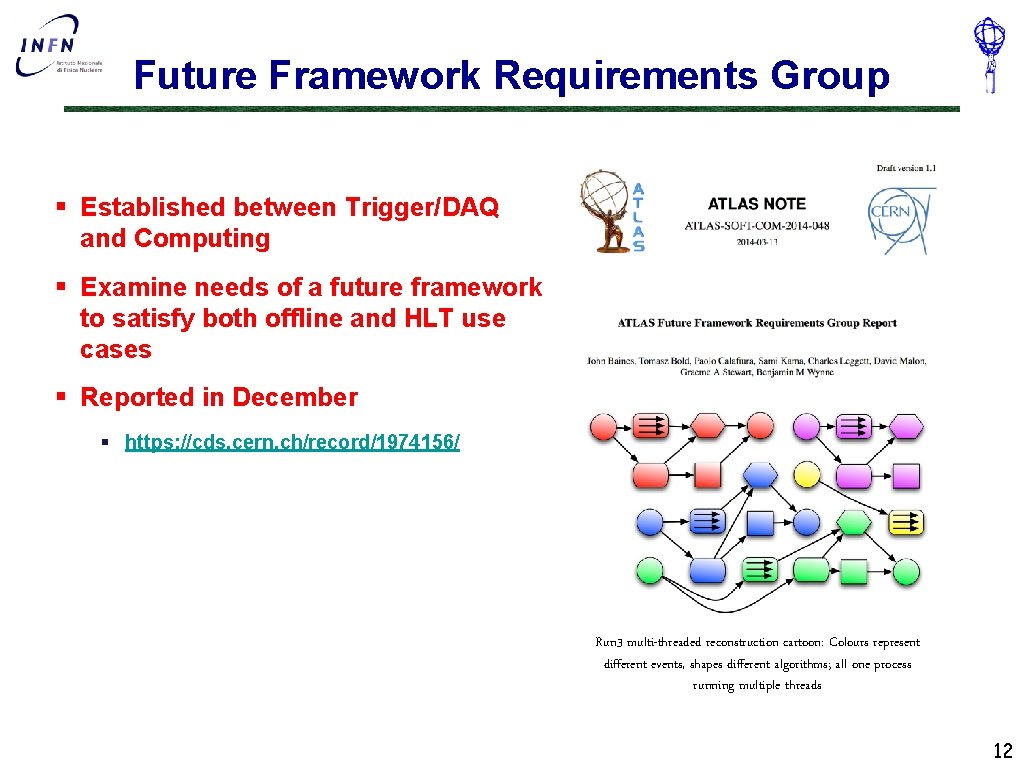

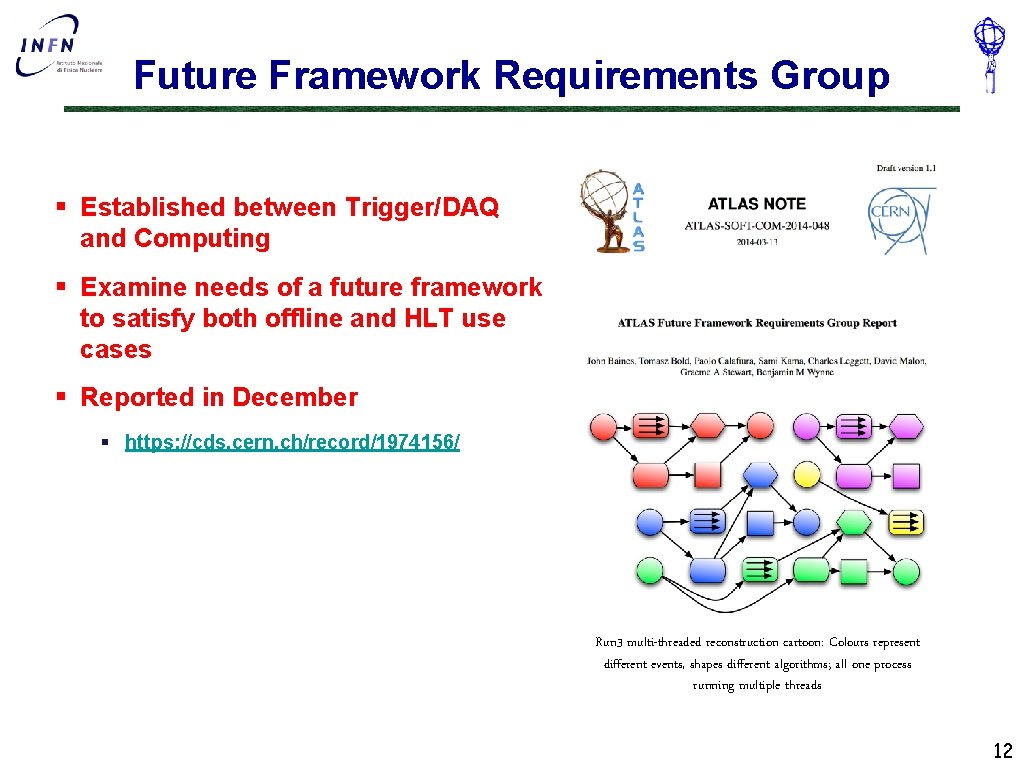

Future Framework Requirements Group

§ Established between Trigger/DAQ and Computing
§ Examine the needs of a future framework to satisfy both offline and HLT use cases
§ Reported in December
§ https://cds.cern.ch/record/1974156/

[Figure: Run 3 multi-threaded reconstruction cartoon. Colours represent different events, shapes different algorithms; all one process running multiple threads]
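The cartoon describes several events in flight at once, with independent algorithms of the same event also running concurrently, all inside one process. The toy data-flow scheduler below follows that idea: an algorithm becomes runnable for an event as soon as the data it reads exist in that event's store. The algorithm names and data products are hypothetical, this is not the Gaudi/AthenaMT scheduler, and CPython threads here illustrate the scheduling pattern, not a real parallel speed-up.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait

# HYPOTHETICAL algorithms: name -> (data they read, function returning new data).
ALGORITHMS = {
    "ReadHits":  ((), lambda s: {"hits": [1, 2, 3]}),
    "Clusters":  (("hits",), lambda s: {"clusters": [h * 10 for h in s["hits"]]}),
    "Tracks":    (("clusters",), lambda s: {"tracks": sum(s["clusters"])}),
    "CaloCells": ((), lambda s: {"cells": 4}),
    "Jets":      (("cells", "tracks"), lambda s: {"jets": s["cells"] + s["tracks"]}),
}

def run(n_events, n_threads=4):
    """Process n_events concurrently in one process with a shared thread pool."""
    stores = [{} for _ in range(n_events)]          # one event store per event
    scheduled = [set() for _ in range(n_events)]    # algorithms already submitted
    pending = {}                                    # future -> event index
    with ThreadPoolExecutor(max_workers=n_threads) as pool:
        while True:
            # Submit every (event, algorithm) pair whose inputs are available.
            for ev, store in enumerate(stores):
                for name, (inputs, algorithm) in ALGORITHMS.items():
                    if name not in scheduled[ev] and all(k in store for k in inputs):
                        scheduled[ev].add(name)
                        # Pass a snapshot so workers never see the store mutate.
                        pending[pool.submit(algorithm, dict(store))] = ev
            if not pending:
                break                               # nothing runnable is left
            done, _ = wait(pending, return_when=FIRST_COMPLETED)
            for future in done:
                stores[pending.pop(future)].update(future.result())
    return stores

if __name__ == "__main__":
    for ev, store in enumerate(run(3)):
        print(ev, store["jets"])
```

Because scheduling is driven by data dependencies, "CaloCells" and the tracking chain of the same event, as well as algorithms of different events, can be in flight at the same time.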

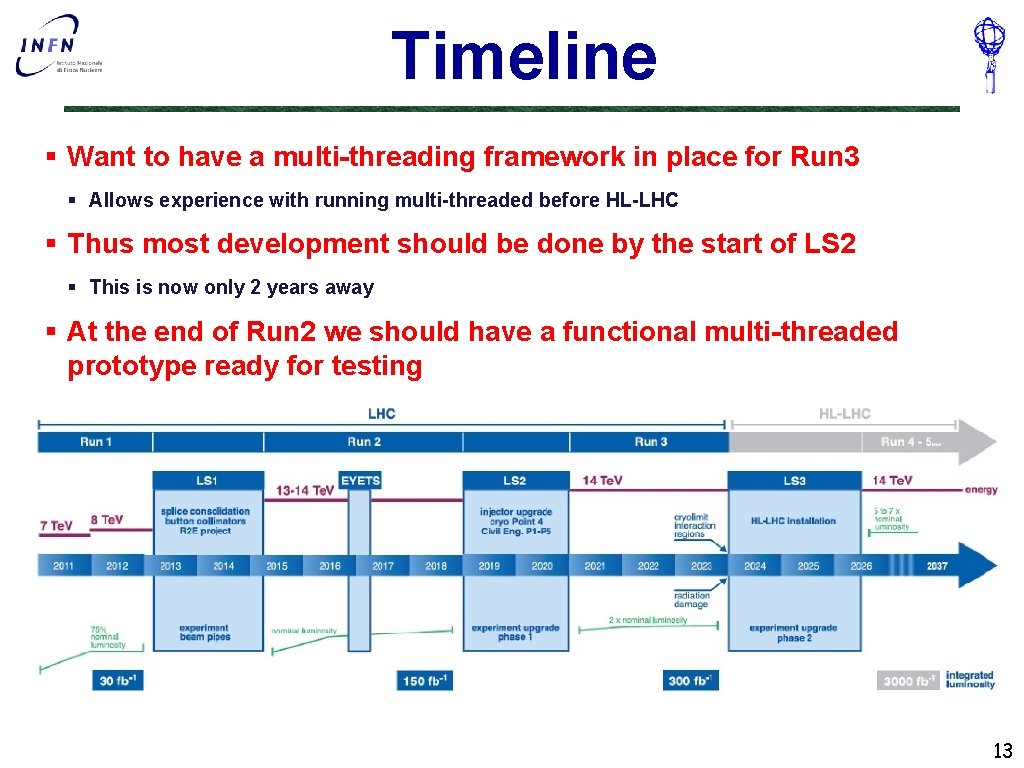

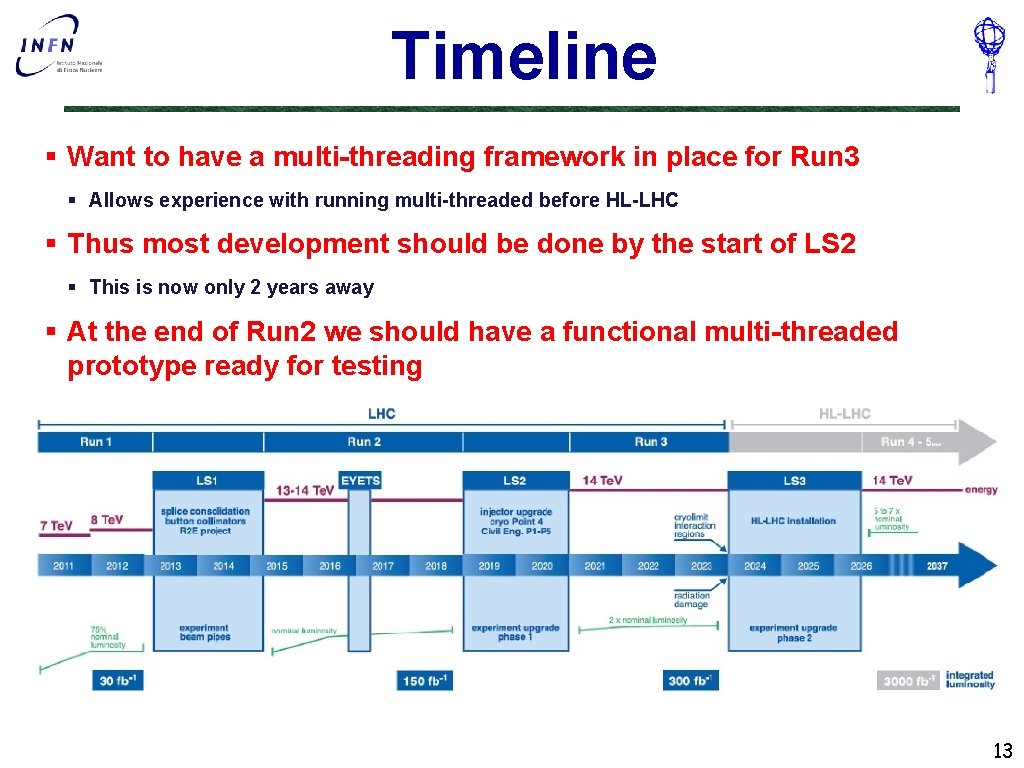

Timeline

§ We want a multi-threaded framework in place for Run 3
§ This gives experience with running multi-threaded before HL-LHC
§ Thus most development should be done by the start of LS2
§ This is now only 2 years away
§ By the end of Run 2 we should have a functional multi-threaded prototype ready for testing

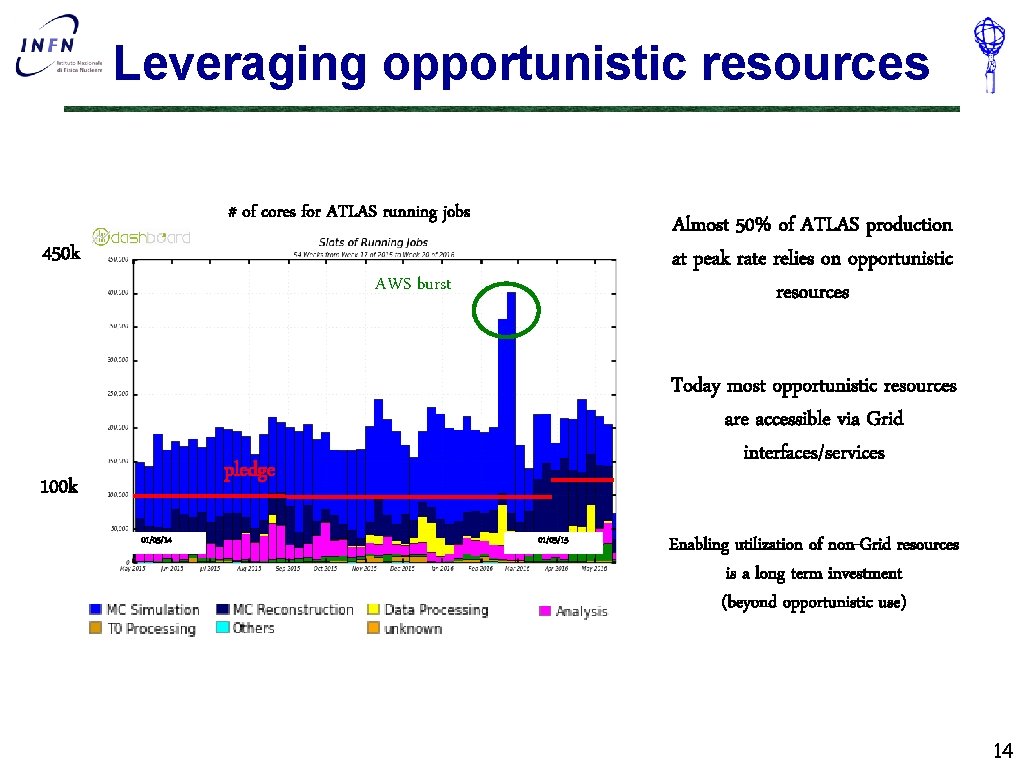

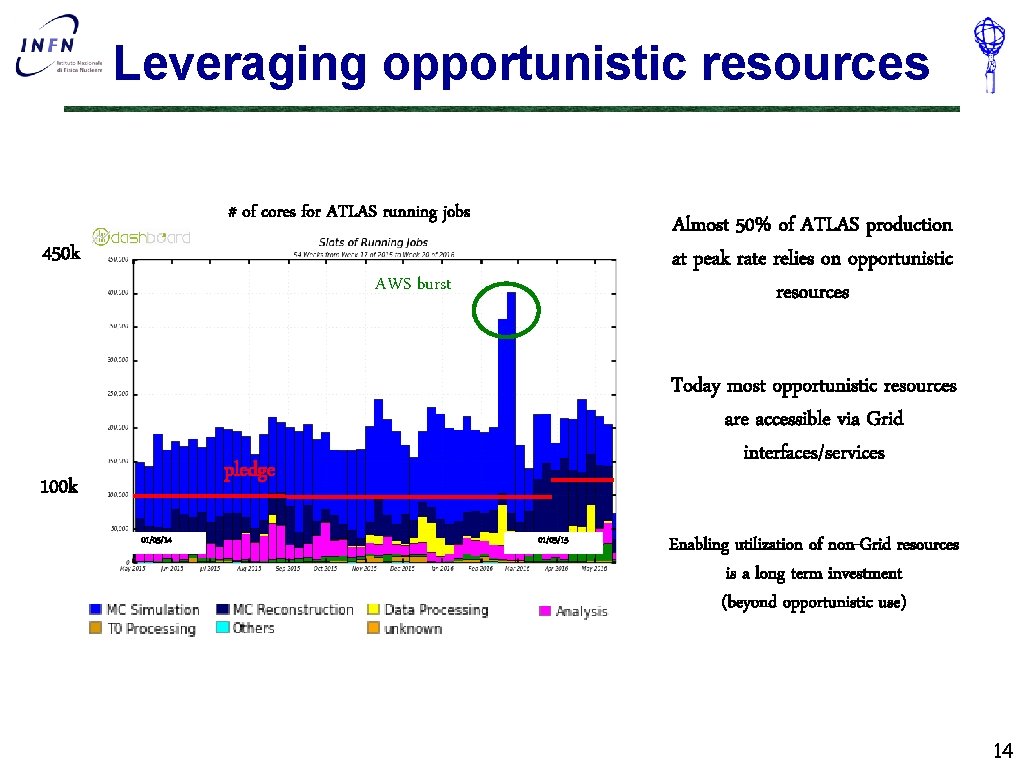

Leveraging opportunistic resources

[Figure: number of cores for ATLAS running jobs, 01/05/14 to 01/03/15; axis labels at 100k and 450k, pledge level and AWS burst marked]

Almost 50% of ATLAS production at peak rate relies on opportunistic resources.

Today most opportunistic resources are accessible via Grid interfaces/services.

Enabling utilization of non-Grid resources is a long-term investment (beyond opportunistic use).

Grid and off-Grid resources

§ The global community did not fully buy into Grid technologies, which were very successful for us
§ We have a dedicated network of sites, using custom software and serving (mostly) the WLCG community
§ Finding opportunistic/common resources:
§ High Performance Computer centers
§ https://en.wikipedia.org/wiki/Supercomputer
§ Opportunistic and commercial cloud resources
§ https://en.wikipedia.org/wiki/Cloud_computing
§ You ask for resources through a defined interface and get access to and control of a (virtual) machine, rather than a job slot on the Grid

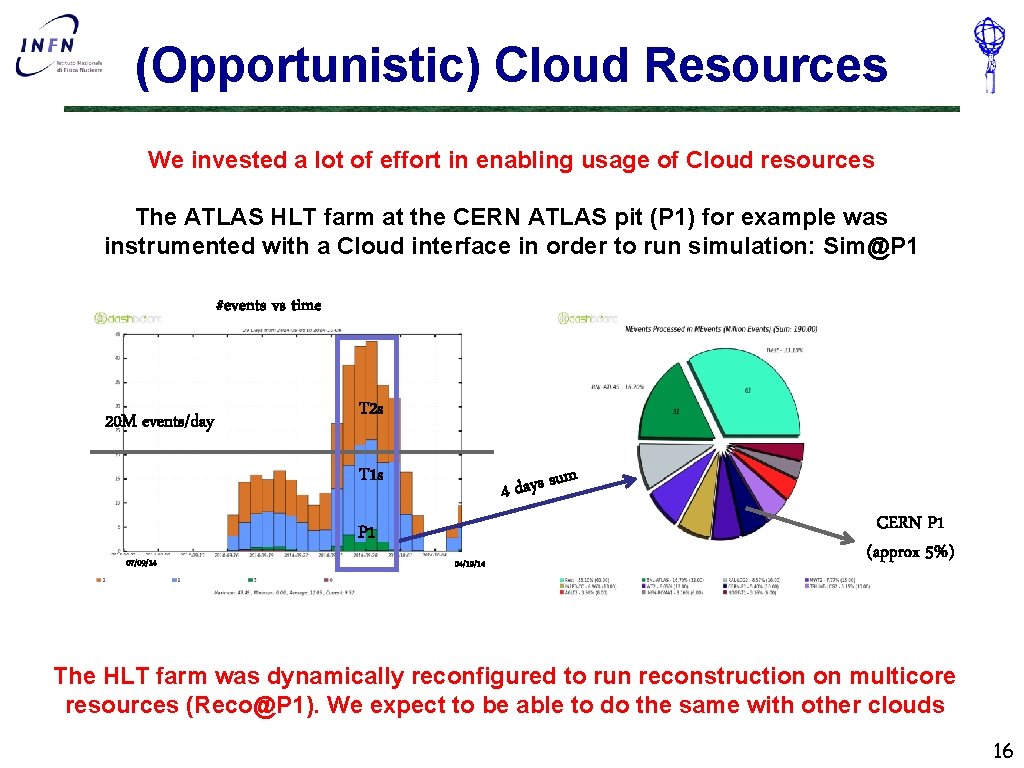

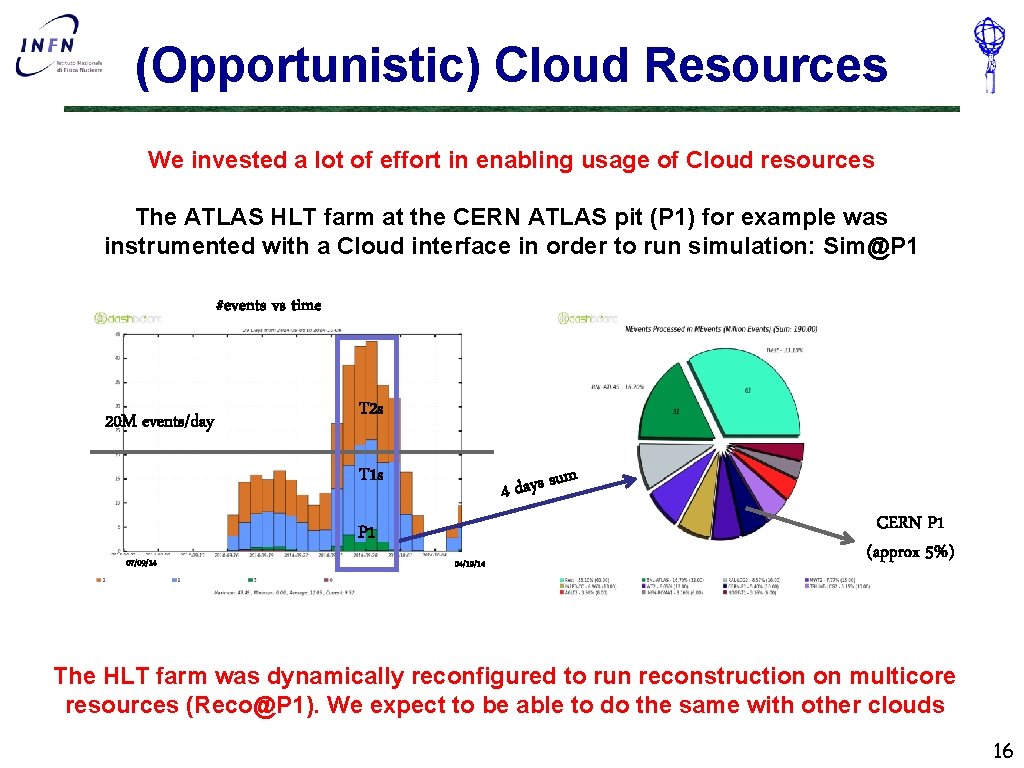

(Opportunistic) Cloud Resources

We invested a lot of effort in enabling the use of Cloud resources. For example, the ATLAS HLT farm at the ATLAS pit at CERN (P1) was instrumented with a Cloud interface in order to run simulation (Sim@P1).

[Figure: Sim@P1, number of events vs. time, 07/09/14 to 04/10/14, ~20M events/day; contributions from T2s, T1s and CERN P1 (approx. 5%)]

The HLT farm was dynamically reconfigured to run reconstruction on multicore resources (Reco@P1). We expect to be able to do the same with other clouds.

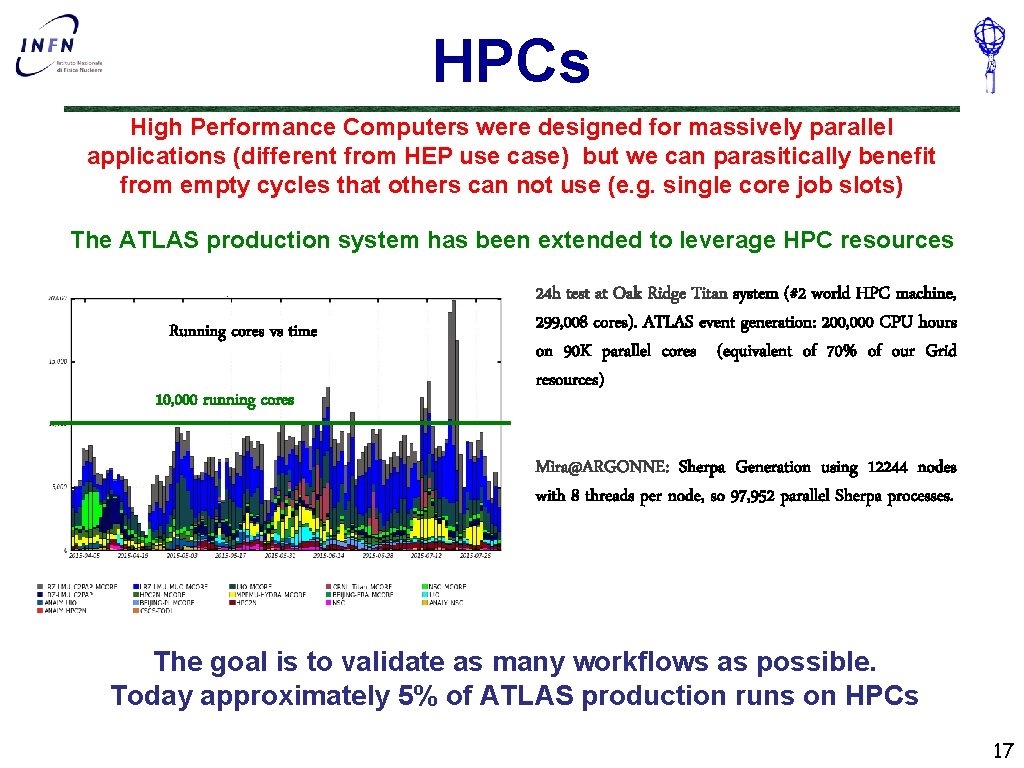

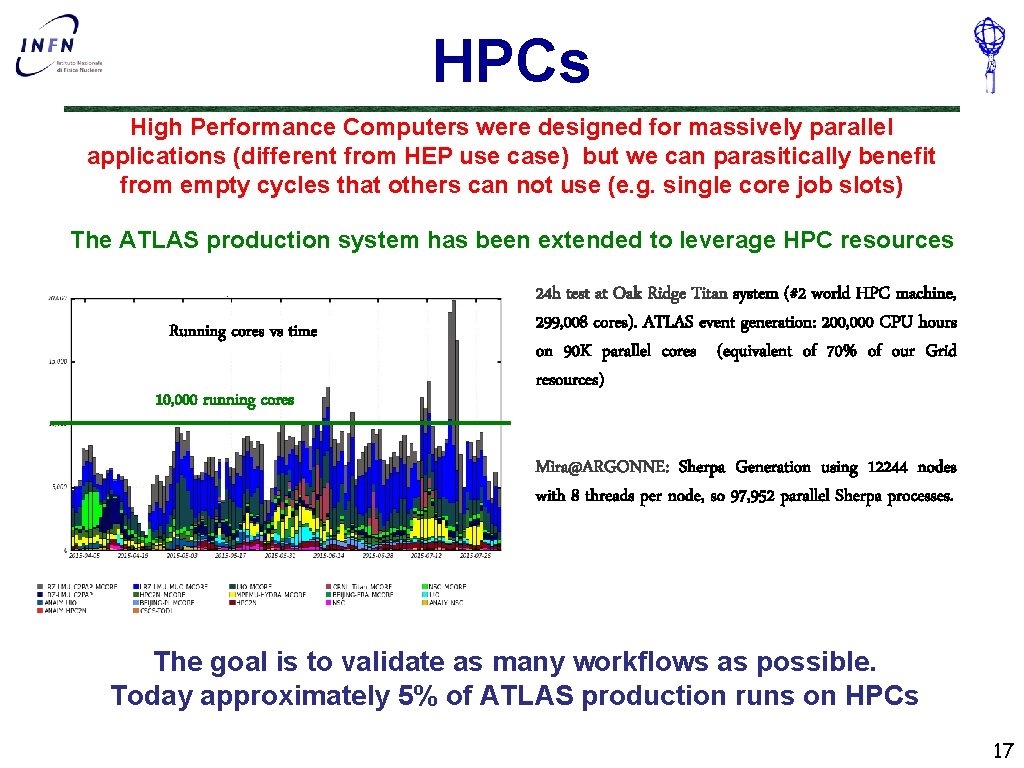

HPCs

High Performance Computers were designed for massively parallel applications (different from the HEP use case), but we can parasitically benefit from empty cycles that others cannot use (e.g. single-core job slots). The ATLAS production system has been extended to leverage HPC resources.

[Figure: running cores vs. time, reaching 10,000 running cores]

24 h test on the Oak Ridge Titan system (#2 world HPC machine, 299,008 cores): ATLAS event generation used 200,000 CPU hours on 90K parallel cores (equivalent to 70% of our Grid resources).

Mira@Argonne: Sherpa generation using 12,244 nodes with 8 threads per node, i.e. 97,952 parallel Sherpa processes.

The goal is to validate as many workflows as possible. Today approximately 5% of ATLAS production runs on HPCs.
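One way to use empty cycles on an HPC is backfilling: size each job so that it fits entirely into nodes that stay idle until the batch system needs them back. The sketch assumes the scheduler can report how many nodes are free and for how long. The function, its parameters and the numbers in the usage line are hypothetical and not taken from the ATLAS production system.

```python
from dataclasses import dataclass

@dataclass
class IdleWindow:
    free_nodes: int      # nodes idle right now
    minutes_free: int    # minutes until the batch system needs them back

def size_backfill_job(window, cores_per_node, minutes_per_event, max_nodes):
    """Return (nodes, events) for a job with one event stream per core that
    finishes before the idle window closes; (0, 0) if nothing fits."""
    nodes = min(window.free_nodes, max_nodes)
    events_per_core = window.minutes_free // minutes_per_event
    if nodes == 0 or events_per_core == 0:
        return 0, 0
    return nodes, nodes * cores_per_node * events_per_core

if __name__ == "__main__":
    # 300 idle nodes for 2 hours, 16 cores per node, 10 minutes per event.
    print(size_backfill_job(IdleWindow(300, 120), 16, 10, max_nodes=1000))
```

Sizing jobs to the idle window is what lets the work run on cycles that would otherwise stay unused, without competing with the centre's primary users.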

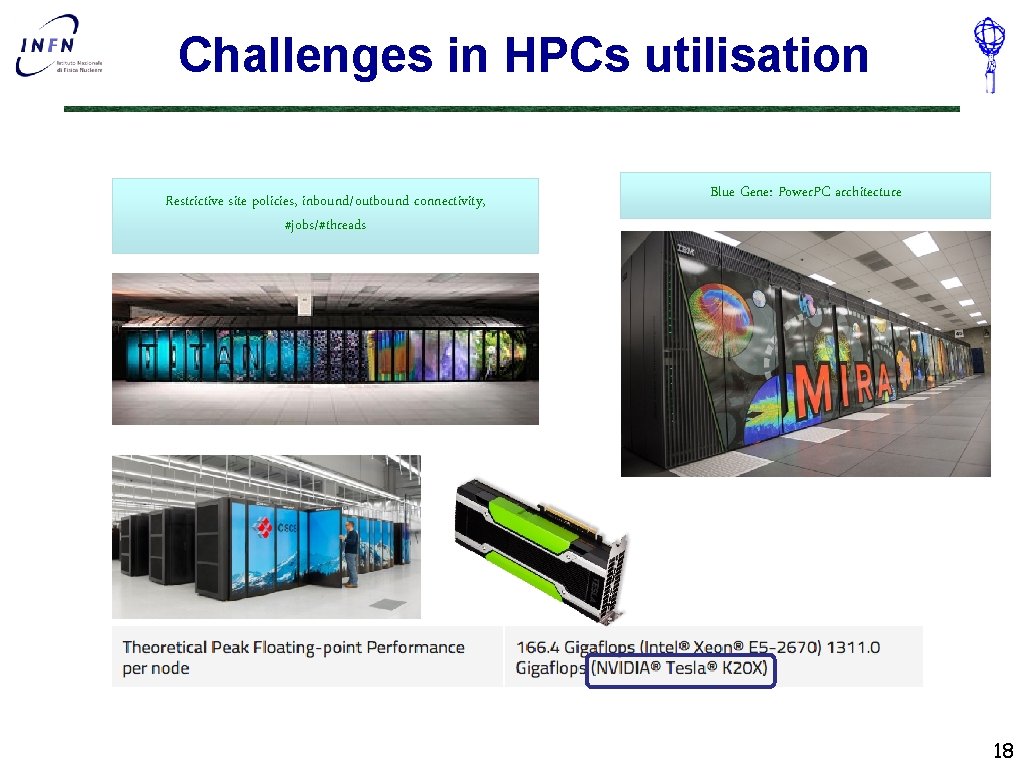

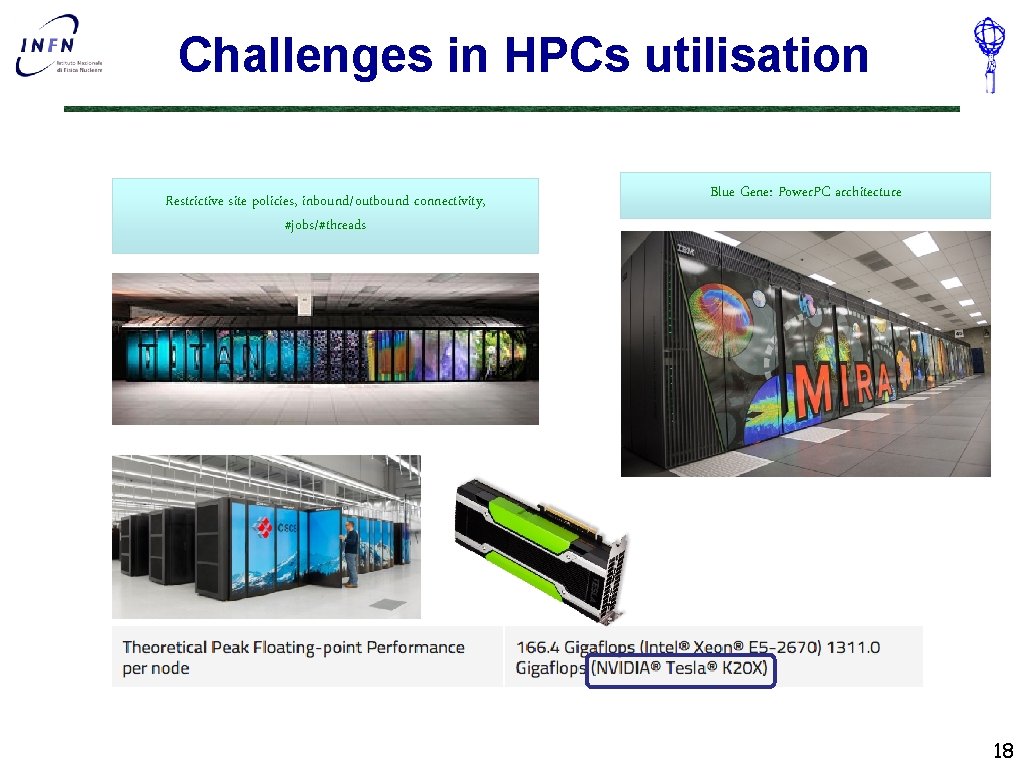

Challenges in HPC utilisation

Restrictive site policies; inbound/outbound connectivity; #jobs/#threads.

Blue Gene: PowerPC architecture

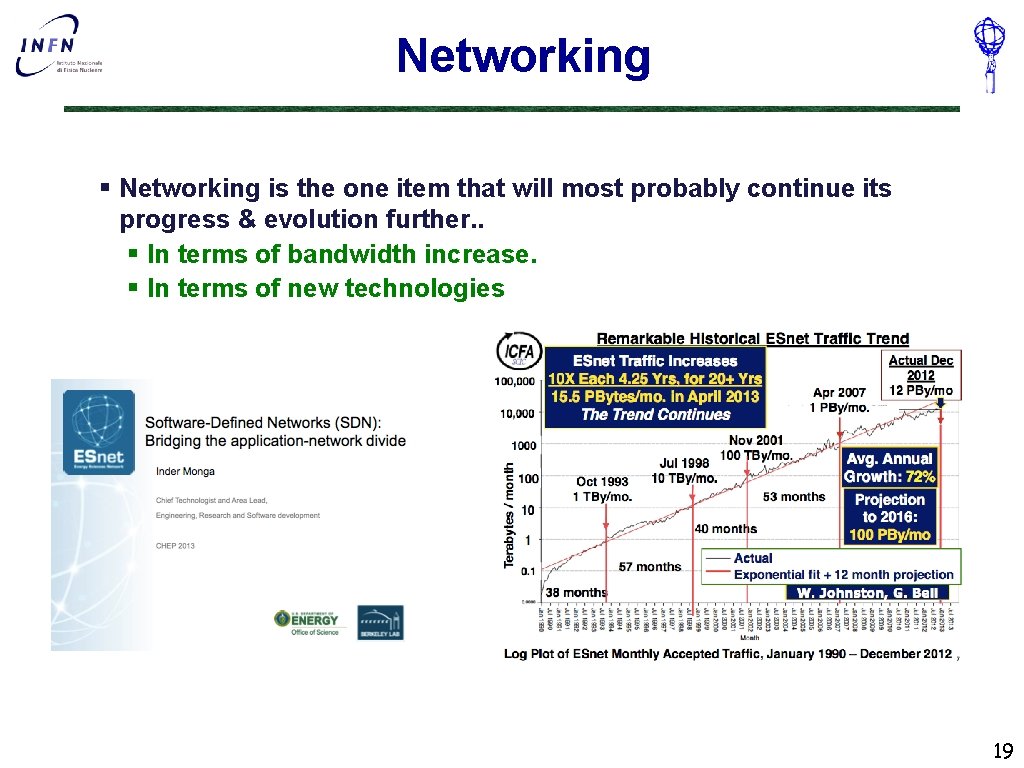

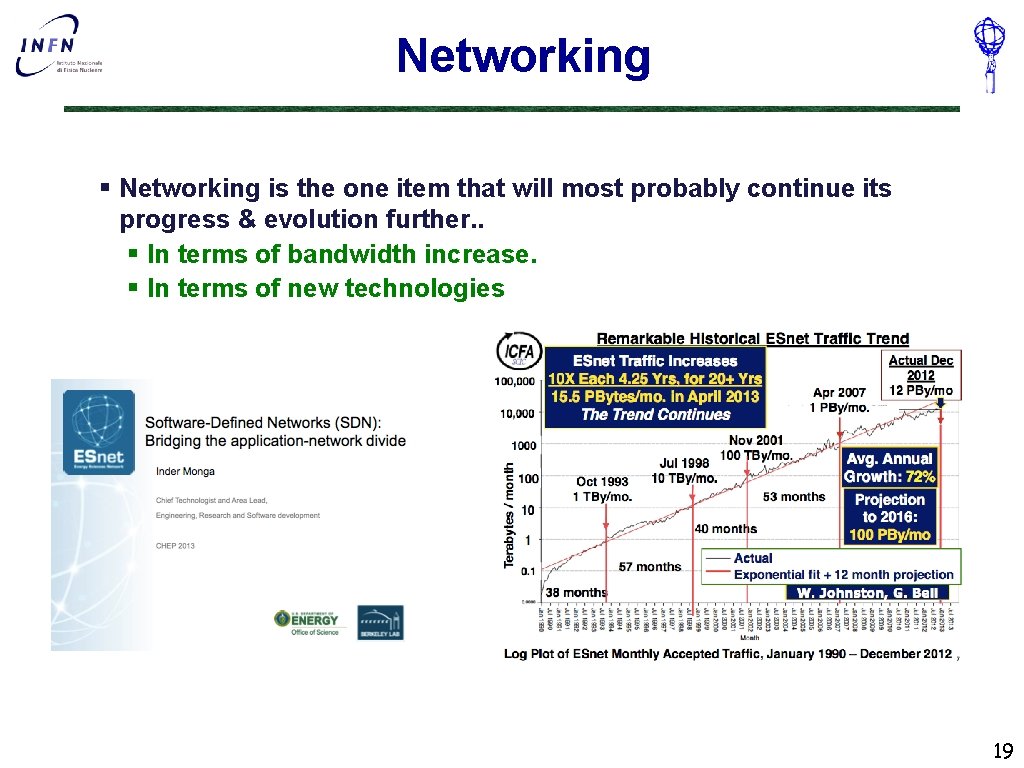

Networking

§ Networking is the one item that will most probably continue to progress and evolve
§ In terms of bandwidth increase
§ In terms of new technologies

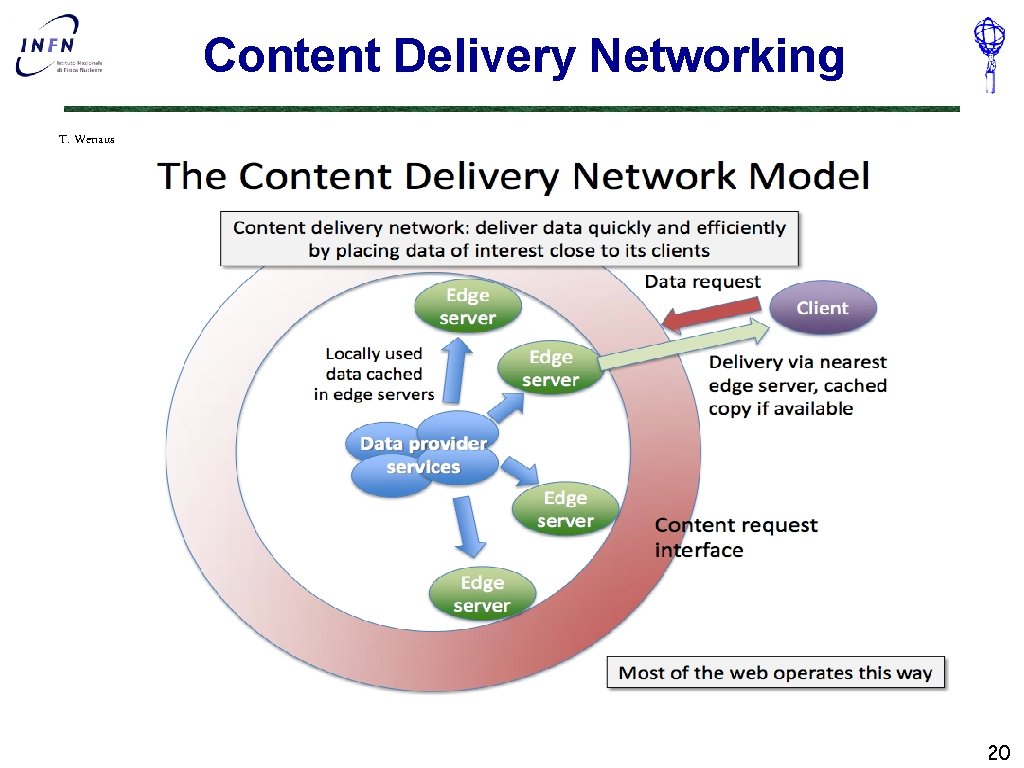

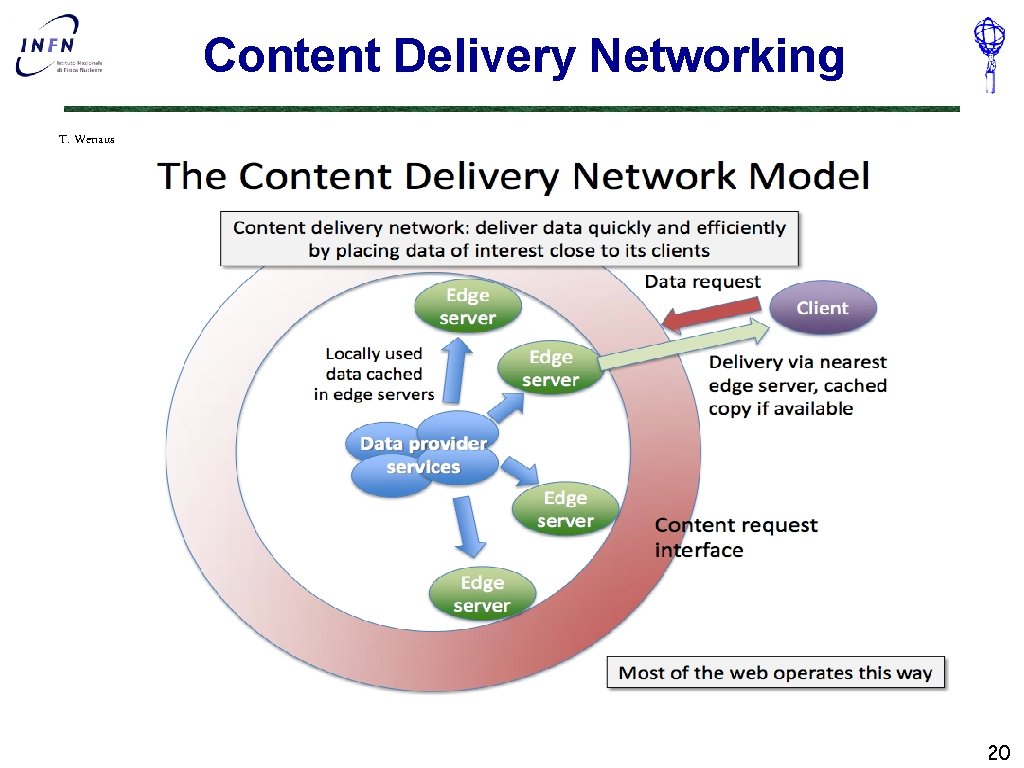

Content Delivery Networking (figure: T. Wenaus)

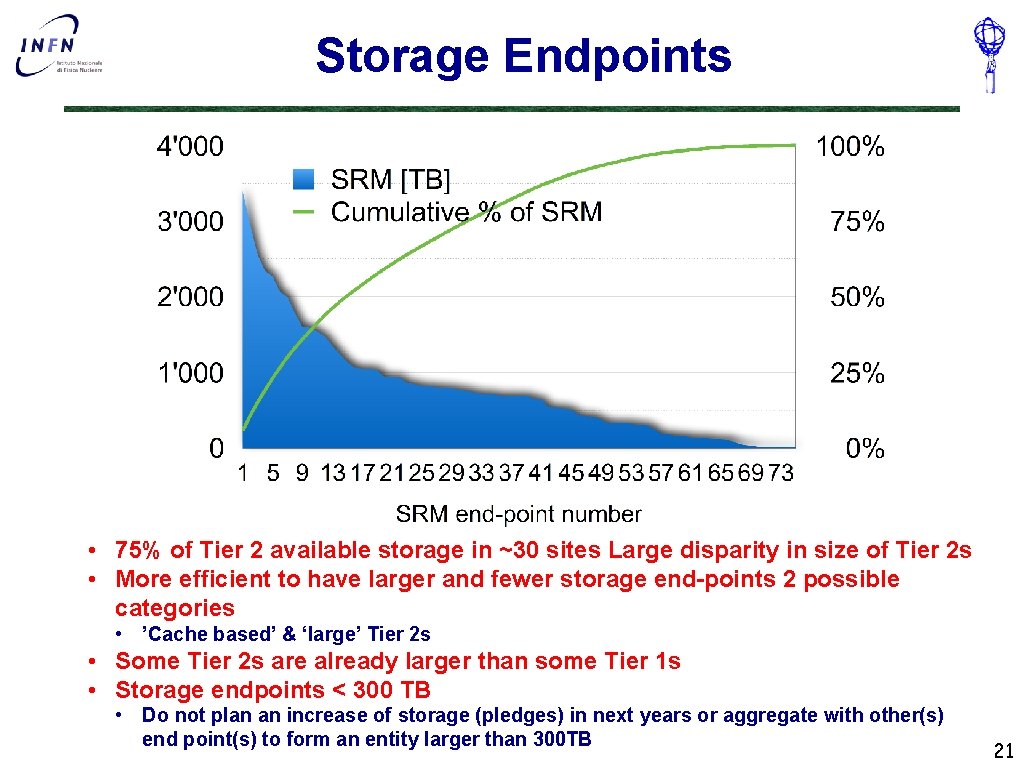

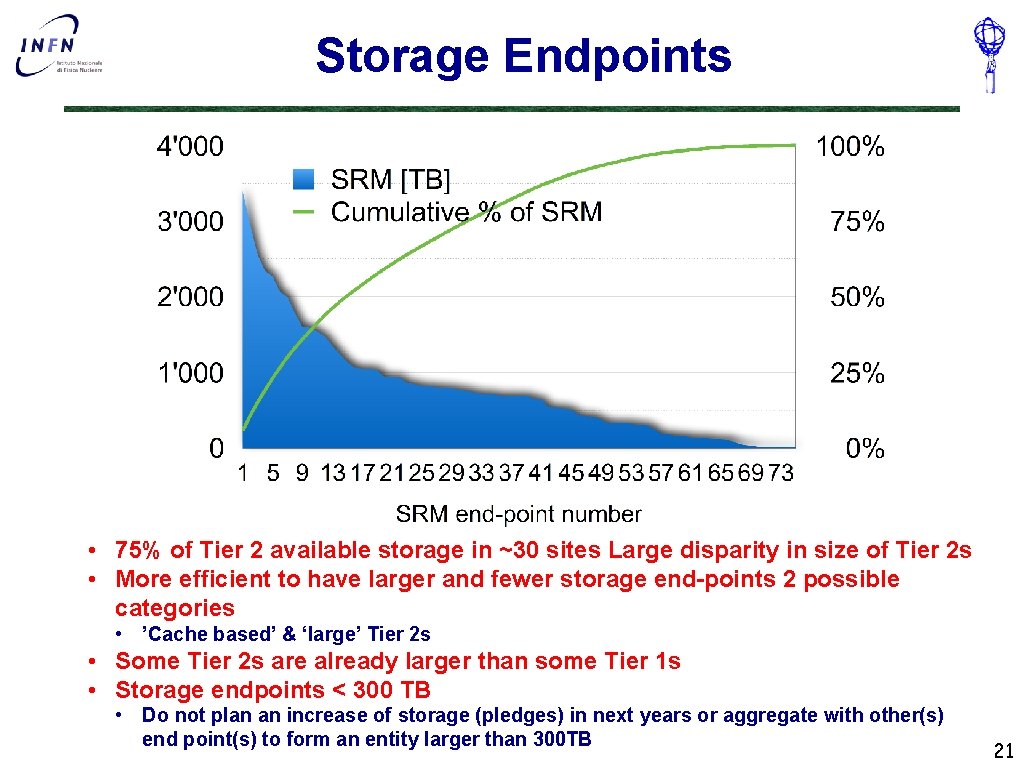

Storage Endpoints

• 75% of Tier-2 available storage is in ~30 sites
• Large disparity in the size of Tier-2s
• It is more efficient to have fewer, larger storage endpoints
• 2 possible categories: 'cache-based' and 'large' Tier-2s
• Some Tier-2s are already larger than some Tier-1s
• Storage endpoints < 300 TB: do not plan an increase of storage (pledges) in the next years, or aggregate with other endpoint(s) to form an entity larger than 300 TB
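The 300 TB rule can be read as a small classification problem: endpoints at or above the threshold stay as they are, while smaller ones are grouped until each group exceeds 300 TB. The greedy grouping below is one hypothetical way to form such aggregates (largest first); site names and sizes are invented for illustration.

```python
THRESHOLD_TB = 300

def plan_endpoints(sizes_tb, threshold=THRESHOLD_TB):
    """Split endpoints into large ones and greedy aggregates of small ones.

    Returns (large, aggregates, leftover). Small endpoints that could not be
    combined into a group above the threshold end up in leftover; under the
    rule on the slide they would not plan any pledge increase.
    """
    large = sorted(n for n, s in sizes_tb.items() if s >= threshold)
    small = sorted(((s, n) for n, s in sizes_tb.items() if s < threshold), reverse=True)
    aggregates, current, total = [], [], 0
    for size, name in small:
        current.append(name)
        total += size
        if total > threshold:          # group is now "larger than 300 TB"
            aggregates.append(current)
            current, total = [], 0
    return large, aggregates, current

if __name__ == "__main__":
    sites = {"SITE_A": 900, "SITE_B": 250, "SITE_C": 120, "SITE_D": 80, "SITE_E": 40}
    print(plan_endpoints(sites))
```

A real plan would also weigh network proximity and funding agencies; the sketch only captures the size criterion.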

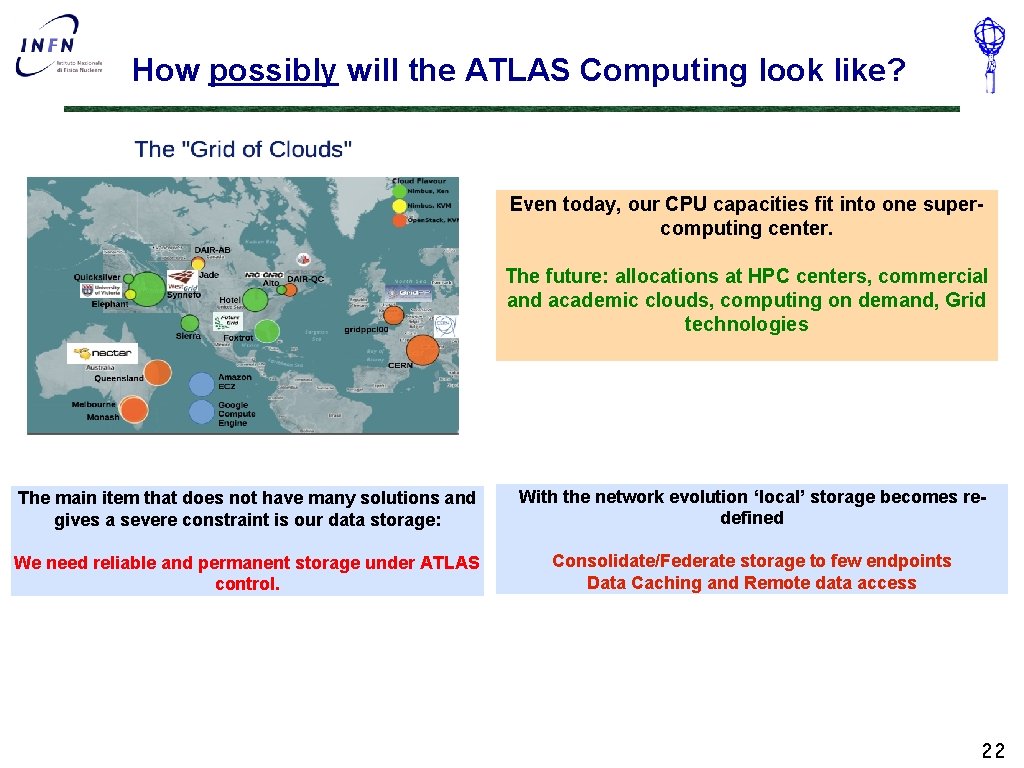

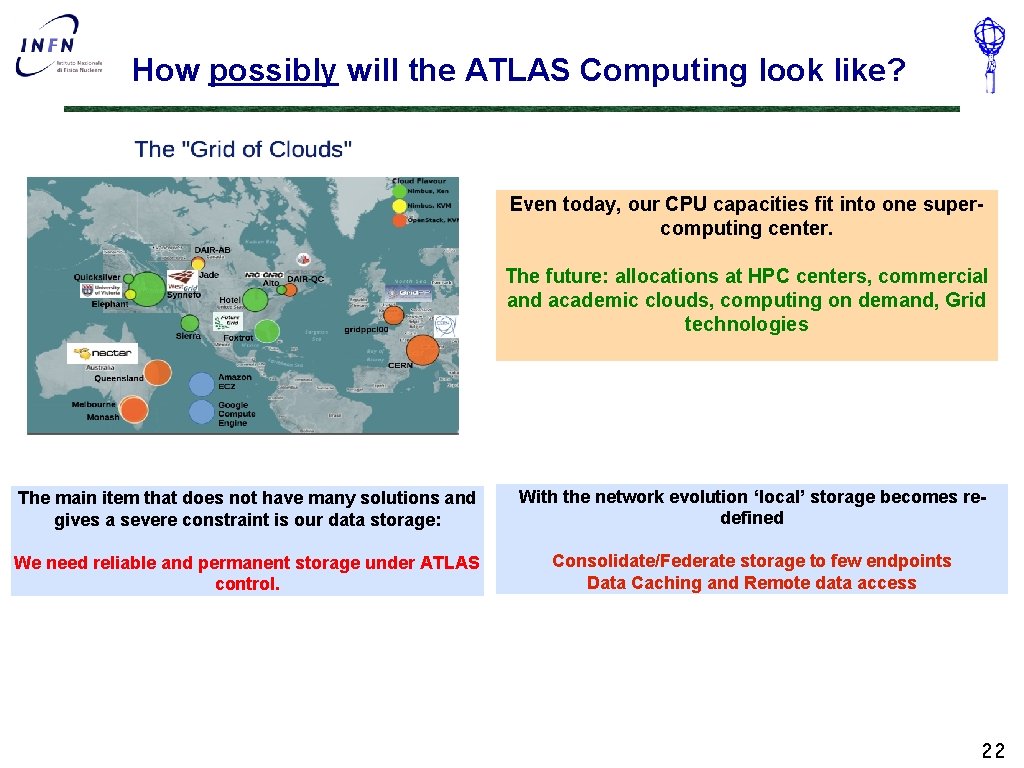

What might ATLAS Computing look like?

Even today, our CPU capacity fits into one supercomputing center.

The future: allocations at HPC centers, commercial and academic clouds, computing on demand, Grid technologies.

The main item that has few solutions and imposes a severe constraint is our data storage:
• With the network evolution, 'local' storage is being redefined
• We need reliable and permanent storage under ATLAS control
• Consolidate/federate storage to a few endpoints
• Data caching and remote data access
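Data caching combined with remote data access means serving a file from a local cache when it is present and fetching it from a remote storage endpoint otherwise. The sketch is a size-bounded LRU cache wrapped around a fetch function. `fetch_remote`, the file names and the byte-based capacity are hypothetical placeholders, not an ATLAS or WLCG interface.

```python
from collections import OrderedDict
from typing import Callable

class FileCache:
    """Size-bounded LRU cache in front of a remote fetch function."""

    def __init__(self, capacity_bytes: int, fetch_remote: Callable[[str], bytes]):
        self.capacity = capacity_bytes
        self.fetch_remote = fetch_remote
        self.files: "OrderedDict[str, bytes]" = OrderedDict()
        self.used = 0
        self.hits = 0
        self.misses = 0

    def get(self, name: str) -> bytes:
        if name in self.files:
            self.files.move_to_end(name)        # mark as most recently used
            self.hits += 1
            return self.files[name]
        self.misses += 1
        data = self.fetch_remote(name)          # remote data access on a miss
        if len(data) <= self.capacity:          # only cache what can fit
            self.files[name] = data
            self.used += len(data)
            while self.used > self.capacity:    # evict least recently used
                _, old = self.files.popitem(last=False)
                self.used -= len(old)
        return data

if __name__ == "__main__":
    remote = {"a": b"x" * 40, "b": b"y" * 40, "c": b"z" * 40}
    cache = FileCache(100, remote.__getitem__)
    for name in ["a", "b", "a", "c"]:
        cache.get(name)
    print(list(cache.files), cache.hits, cache.misses)
```

With such caches at the edge, only the few large endpoints need to hold the permanent, ATLAS-controlled copies.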

Conclusions

§ The computing model has been adapted to Run 2
§ 2015 data processing and distribution has been a success
§ 2016 data taking has started smoothly
§ No big changes envisaged for Run 3
§ In the future: more efficient use of opportunistic resources and reorganization of the global storage facilities