ANSE PanDA Status: Kaushik De, Artem Petrosyan

- Slides: 16

ANSE PanDA Status

Kaushik De, Artem Petrosyan
Univ. of Texas at Arlington

ANSE@Snowmass, UM, July 30, 2013

Introduction

- Started work on integrating network information into PanDA a couple of months ago
- Step 1: Introduce network information into PanDA
  - Start with slowly varying (static) information from external probes
  - Populate the various databases used by PanDA
- Step 2: Use network information for workload management
  - Start with simple use cases that lead to measurable improvements in workflow / user experience
- From the PanDA perspective, we assume that:
  - Network measurements are available
  - Network information is reliable
  - We can examine these assumptions later; we need to start somewhere

Kaushik De, July 30, 2013

Status of Work

- New people
  - Artem Petrosyan and Danila Oleynik started at UTA in June
  - Getting integrated into the core PanDA development team
  - Funding: 0.5 FTE ANSE, 1.5 FTE ASCR
  - Both will be at UTA for ~2 years
  - There is a commitment from Dubna for 1-2 more years
  - Nominally, Artem will be paid by ANSE, but they work as a team
- Step 1: information system changes
  - Work started
- Step 2: WMS improvements
  - Will start in about 1 month

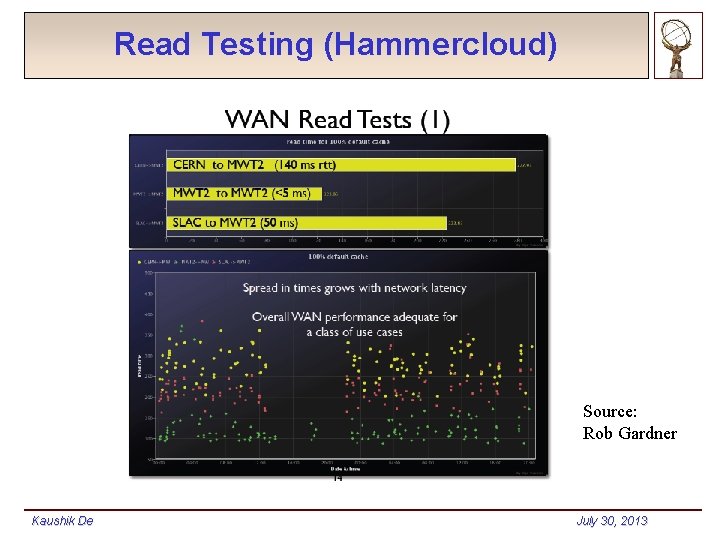

Sources of Network Information

- DDM Sonar tests
  - ATLAS measures file transfer rates between Tier 1 and Tier 2 sites (information used for site whitelisting/blacklisting)
  - Measurements are available for small, medium, and large files
- perfSONAR measurements
  - All WLCG sites are being instrumented with perfSONAR (PS) boxes
  - US sites are already instrumented and fully monitored
- FAX measurements
  - Read times for remote files are probed/measured for pairs of sites
  - Standard PanDA test jobs (HammerCloud jobs) are used

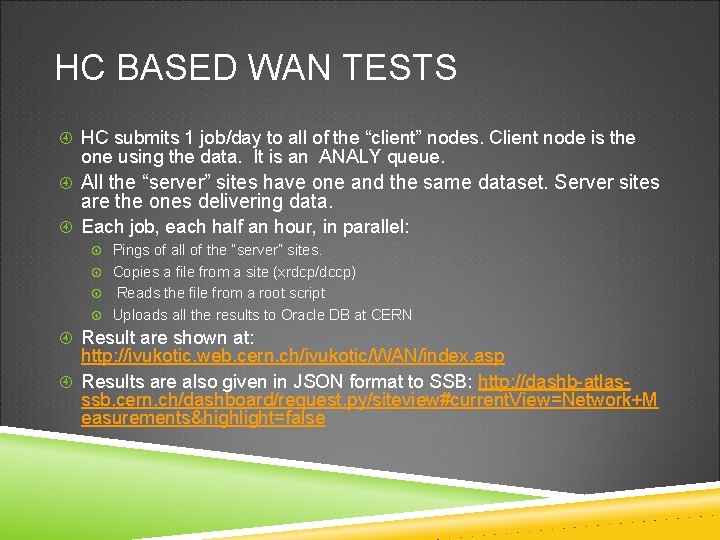

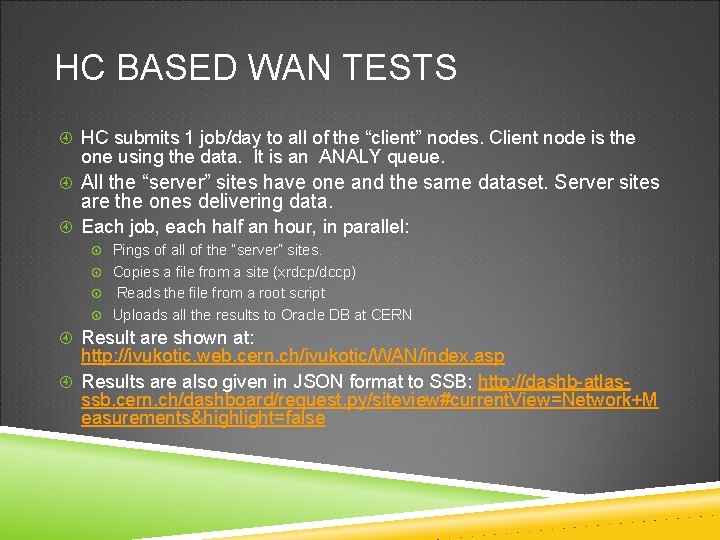

HC-Based WAN Tests

- HC submits 1 job/day to each of the “client” nodes. A client node is the one using the data; it is an ANALY queue.
- All the “server” sites hold one and the same dataset. Server sites are the ones delivering data.
- Every half hour, each job performs, in parallel:
  - Pings of all the “server” sites
  - A copy of a file from a site (xrdcp/dccp)
  - A read of the file from a ROOT script
  - An upload of all the results to an Oracle DB at CERN
- Results are shown at: http://ivukotic.web.cern.ch/ivukotic/WAN/index.asp
- Results are also provided in JSON format to SSB: http://dashb-atlas-ssb.cern.ch/dashboard/request.py/siteview#currentView=Network+Measurements&highlight=false
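The per-job cycle above produces one set of timings per client/server pair every half hour. A minimal sketch of how such probes might be averaged and serialized to JSON is shown below. It is not the HammerCloud test script: the `Measurement` record, its field names, and the JSON layout are hypothetical, and the timings are passed in rather than measured with real ping, xrdcp/dccp, or ROOT calls.

```python
import json
from dataclasses import dataclass
from statistics import mean


@dataclass
class Measurement:
    """One probe from a client (ANALY queue) to a server site.

    Field names are hypothetical; in a real test job the timings would
    come from ping, xrdcp/dccp and a ROOT read script.
    """
    client: str
    server: str
    ping_ms: float
    copy_s: float  # time to copy the file with xrdcp/dccp
    read_s: float  # time to read the file from a ROOT script


def summarize(measurements):
    """Average each metric per (client, server) pair over all probes."""
    by_pair = {}
    for m in measurements:
        by_pair.setdefault((m.client, m.server), []).append(m)
    return [
        {
            "client": client,
            "server": server,
            "n": len(ms),
            "ping_ms": mean(m.ping_ms for m in ms),
            "copy_s": mean(m.copy_s for m in ms),
            "read_s": mean(m.read_s for m in ms),
        }
        # Sort by (client, server) so the output order is deterministic.
        for (client, server), ms in sorted(by_pair.items(), key=lambda kv: kv[0])
    ]


def to_json(summary):
    """Serialize the summary as JSON (hypothetical layout for an SSB feed)."""
    return json.dumps(summary, indent=2)
```

For example, two probes of `ANALY_A` against `SITE_B` with pings of 12 ms and 14 ms summarize to a single entry with `n` equal to 2 and `ping_ms` equal to 13.0.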

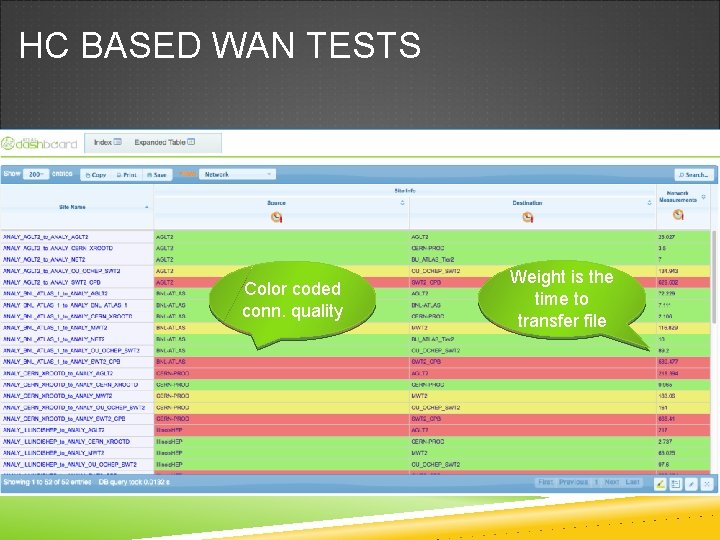

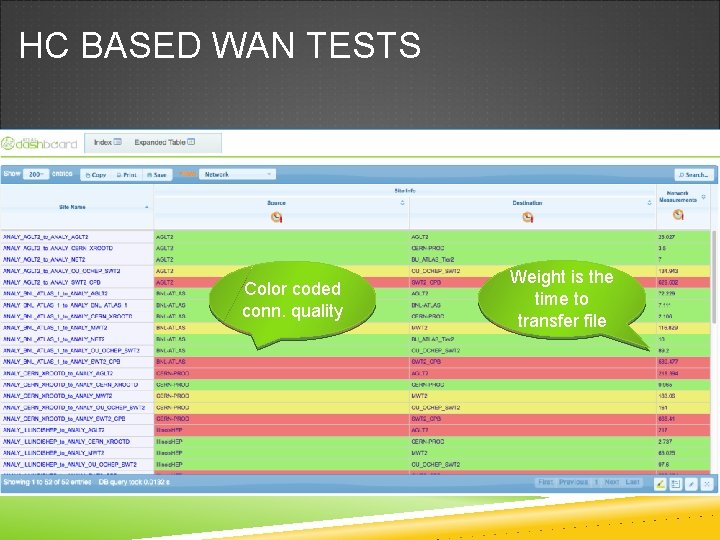

HC-Based WAN Tests

- Color-coded connection quality
- Weight is the time to transfer the file
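The color coding can be thought of as a function from each link's weight (the file transfer time) to a quality class. The sketch below assumes hypothetical thresholds of 60 s and 300 s and a grey class for unmeasured links. The actual color scale used on the dashboard is not given on the slide.

```python
# Hypothetical thresholds in seconds for transferring the test file;
# the real dashboard's color scale is not given on the slide.
THRESHOLDS = ((60.0, "green"), (300.0, "yellow"))


def quality_color(transfer_s):
    """Map a file transfer time (the edge weight) to a quality color."""
    if transfer_s is None:
        return "grey"  # no measurement for this link
    for limit, color in THRESHOLDS:
        if transfer_s <= limit:
            return color
    return "red"  # slower than every threshold


def color_matrix(weights):
    """Color every (source, destination) link in a weight matrix."""
    return {link: quality_color(t) for link, t in weights.items()}
```

Keeping the thresholds in one table makes it easy to retune the scale without touching the classification logic.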

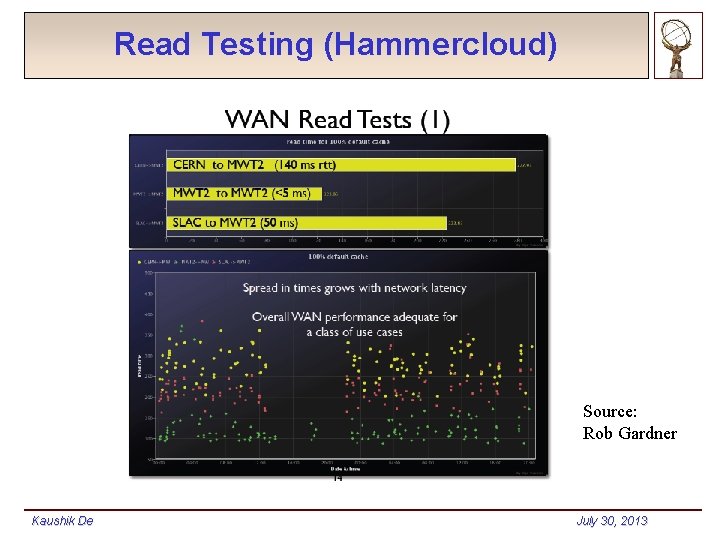

Read Testing (HammerCloud)

Source: Rob Gardner

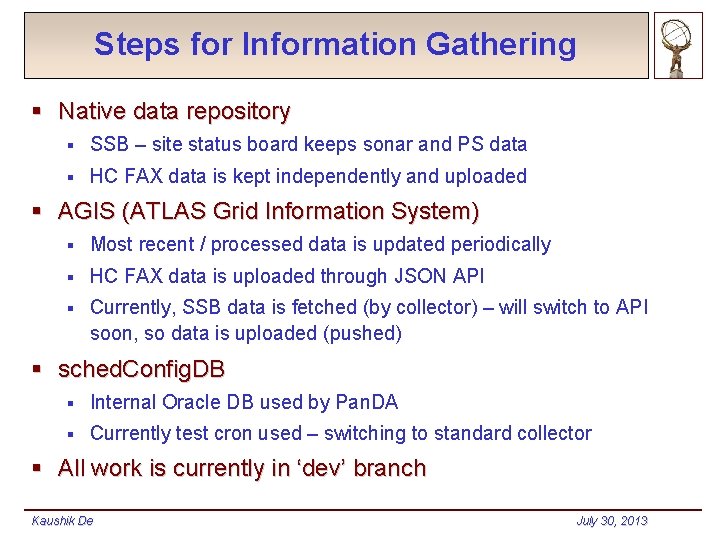

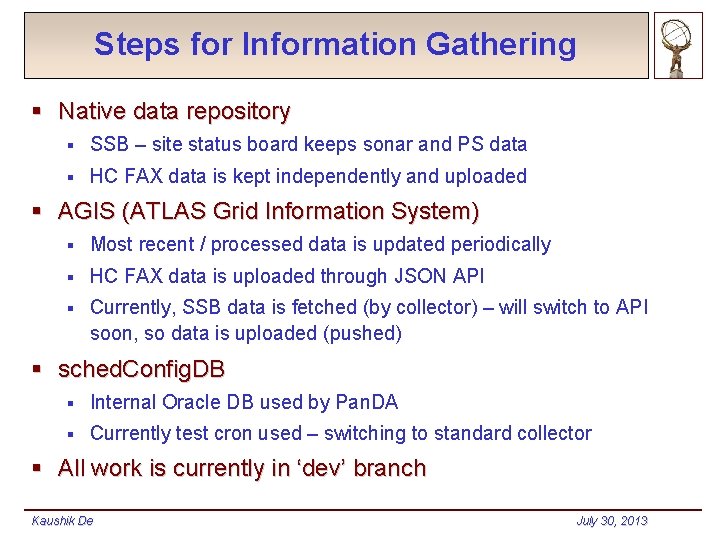

Steps for Information Gathering

- Native data repository
  - SSB (Site Status Board) keeps Sonar and PS data
  - HC FAX data is kept independently and uploaded
- AGIS (ATLAS Grid Information System)
  - The most recent / processed data is updated periodically
  - HC FAX data is uploaded through the JSON API
  - Currently, SSB data is fetched (pulled) by a collector; we will switch to the API soon, so that data is uploaded (pushed)
- schedConfigDB
  - Internal Oracle DB used by PanDA
  - Currently a test cron job is used; switching to the standard collector
- All work is currently in the ‘dev’ branch
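Because AGIS keeps only the most recent, processed data while the native repositories accumulate raw measurements, each periodic update has to reduce many samples to one value per link. A minimal sketch of that reduction is shown below. The record keys (`src`, `dst`, `metric`, `value`, `timestamp`) are hypothetical, and "most recent" is taken literally as the newest timestamp.

```python
from datetime import datetime


def latest_per_link(records):
    """Keep only the newest value per (source, destination, metric).

    Records are dicts with hypothetical keys 'src', 'dst', 'metric',
    'value' and 'timestamp' (an ISO 8601 string).
    """
    latest = {}
    for rec in records:
        key = (rec["src"], rec["dst"], rec["metric"])
        ts = datetime.fromisoformat(rec["timestamp"])
        # Replace the stored entry only if this record is strictly newer.
        if key not in latest or ts > latest[key][0]:
            latest[key] = (ts, rec["value"])
    return {key: value for key, (_, value) in latest.items()}
```

The result does not depend on the order in which records arrive, which matters once data is pushed from several sources rather than fetched by a single collector.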

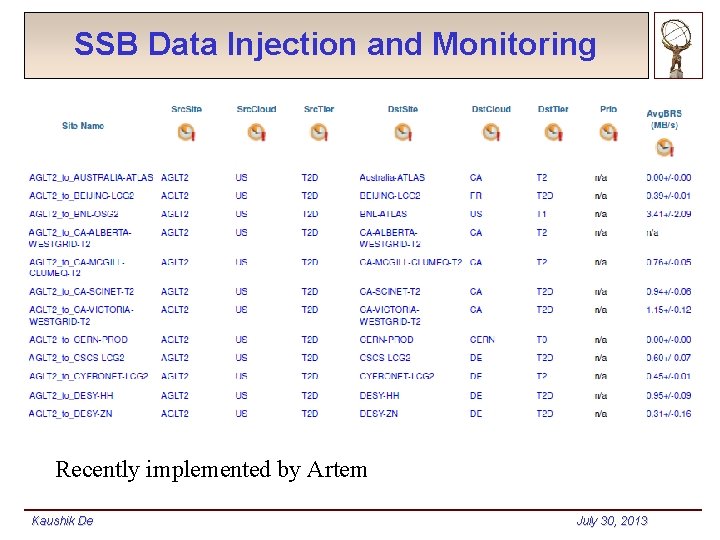

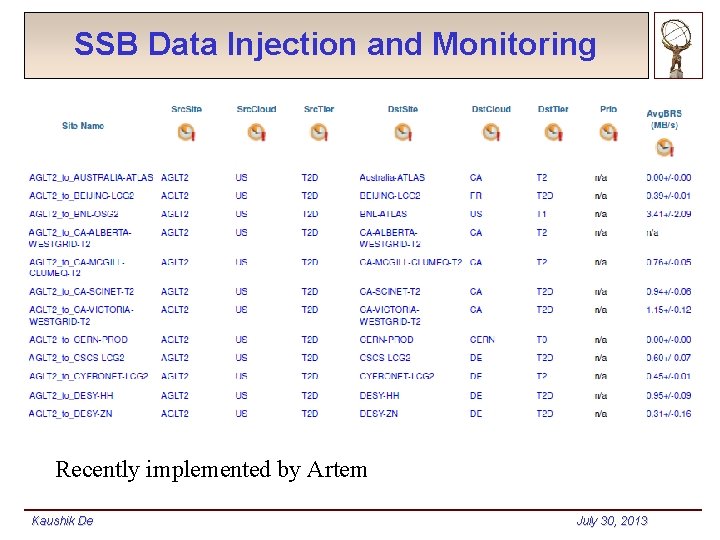

SSB Data Injection and Monitoring

Recently implemented by Artem

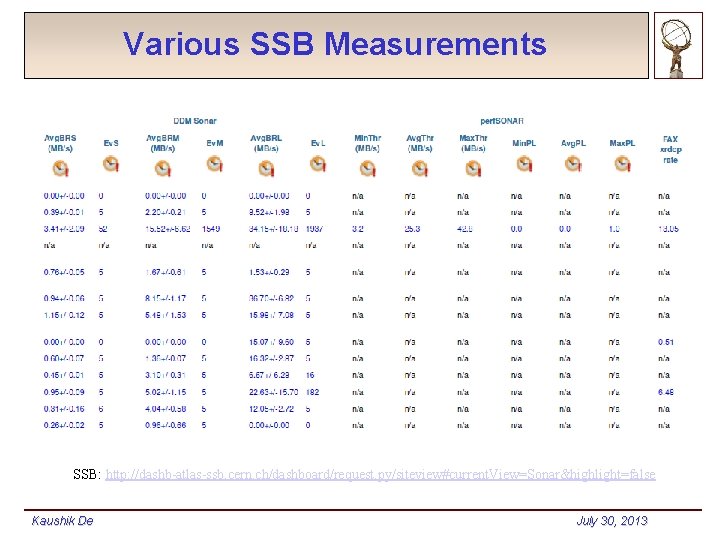

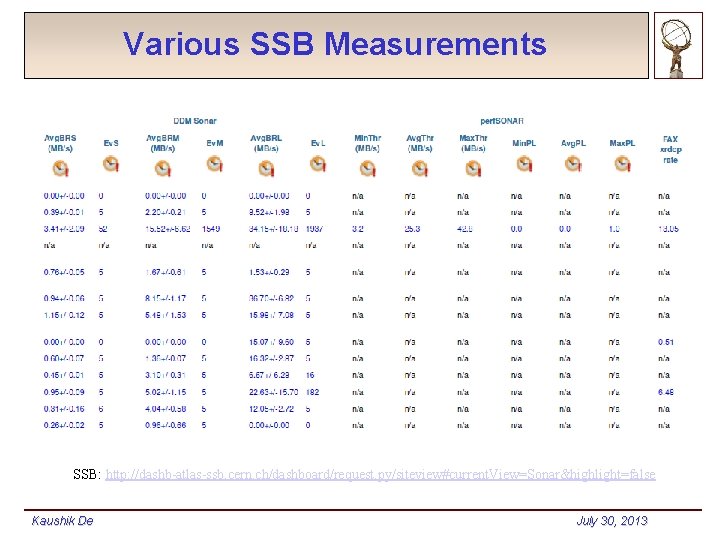

Various SSB Measurements

SSB: http://dashb-atlas-ssb.cern.ch/dashboard/request.py/siteview#currentView=Sonar&highlight=false

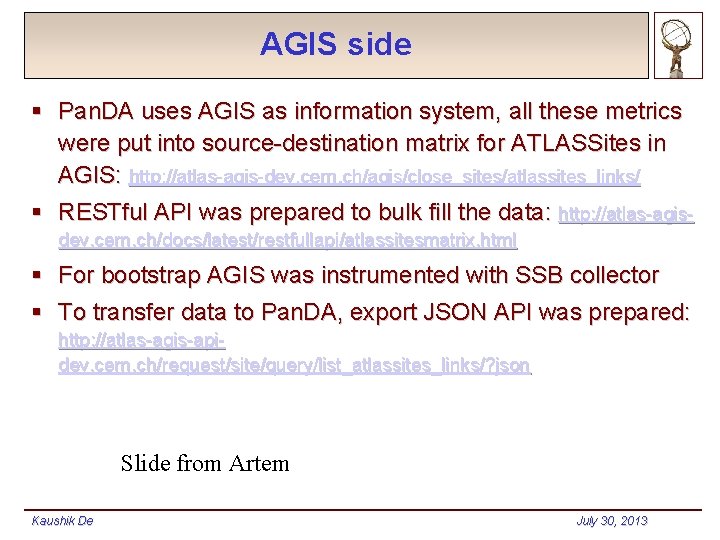

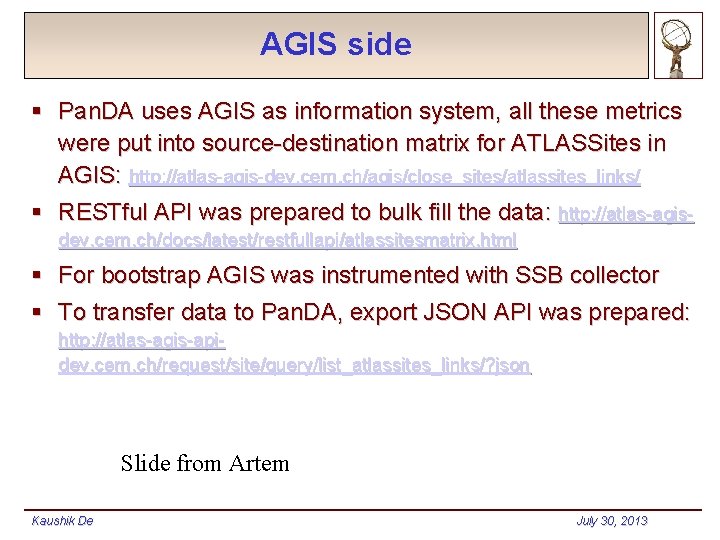

AGIS Side

- PanDA uses AGIS as its information system; all these metrics were put into a source-destination matrix for ATLAS sites in AGIS: http://atlas-agis-dev.cern.ch/agis/close_sites/atlassites_links/
- A RESTful API was prepared to bulk-fill the data: http://atlas-agis-dev.cern.ch/docs/latest/restfullapi/atlassitesmatrix.html
- For bootstrapping, AGIS was instrumented with an SSB collector
- To transfer data to PanDA, a JSON export API was prepared: http://atlas-agis-apidev.cern.ch/request/site/query/list_atlassites_links/?json

Slide from Artem
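On the PanDA side, the JSON export has to be turned back into a source-destination matrix. A minimal sketch of that step is shown below. The record layout `{"src": ..., "dst": ..., "metrics": {...}}` is a hypothetical stand-in, and the real AGIS export format may differ. `fetch_links` uses the standard `urllib.request.urlopen` and is not exercised here.

```python
import json
import urllib.request
from collections import defaultdict


def fetch_links(url, timeout=30):
    """Download the JSON export from the given URL (not exercised offline)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def build_matrix(payload):
    """Turn a JSON list of link records into matrix[src][dst] -> {metric: value}.

    The record layout {"src": ..., "dst": ..., "metrics": {...}} is a
    hypothetical stand-in; the real AGIS export format may differ.
    """
    matrix = defaultdict(dict)
    for rec in json.loads(payload):
        # Links without any metrics are kept with an empty dict, so that
        # missing data stays visible instead of silently disappearing.
        matrix[rec["src"]][rec["dst"]] = dict(rec.get("metrics", {}))
    return dict(matrix)
```

Keeping empty entries for unmeasured links makes gaps in the matrix easy to spot.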

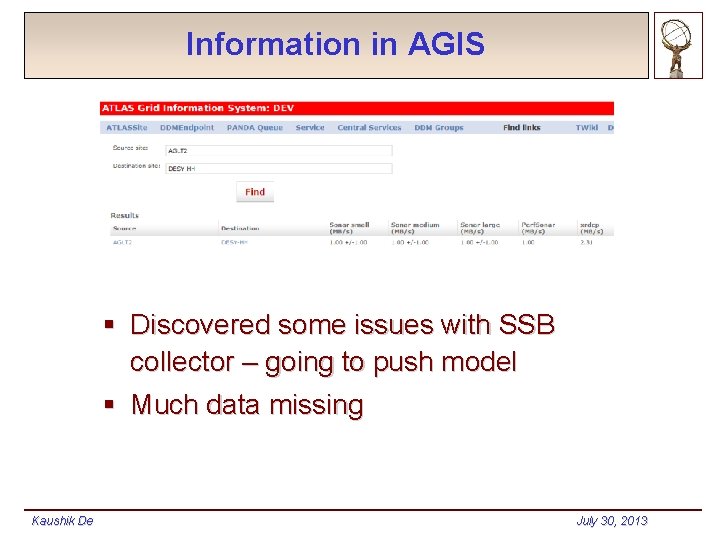

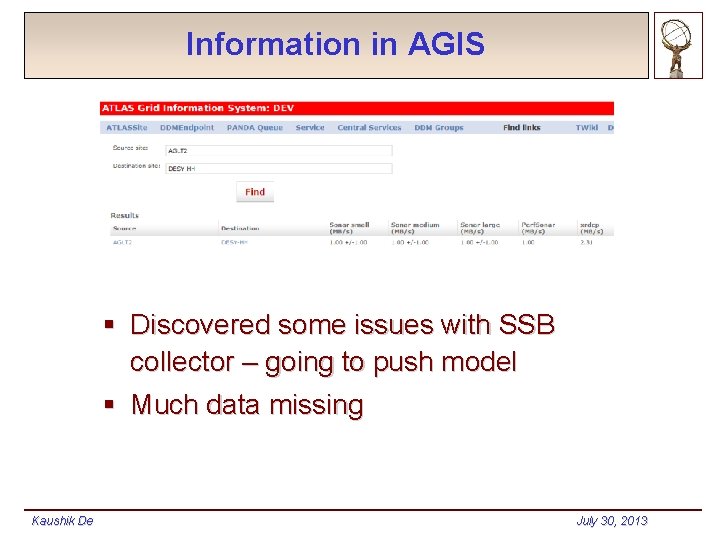

Information in AGIS

- Discovered some issues with the SSB collector; moving to a push model
- Much data is still missing

PanDA schedConfigDB

- The table structure was discussed with Gancho; tables were created in the PanDA DB (development)
- A development machine was requested and received (voatlas142)
- A module to collect data from AGIS and put it into the PanDA DB is in development

Slide from Artem
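The collection module essentially maps the AGIS matrix onto table rows and upserts them, so that repeated runs refresh values instead of duplicating them. A minimal sketch is shown below. It uses SQLite as a stand-in for the PanDA Oracle DB, and the `network_matrix` table and its columns are hypothetical, because the schema agreed with Gancho is not given on the slide.

```python
import sqlite3

# Hypothetical schema; PanDA uses Oracle, and the real table layout is not
# given on the slide. One row per (source, destination, metric).
SCHEMA = """
CREATE TABLE IF NOT EXISTS network_matrix (
    src TEXT NOT NULL,
    dst TEXT NOT NULL,
    metric TEXT NOT NULL,
    value REAL,
    PRIMARY KEY (src, dst, metric)
)
"""


def load_matrix(conn, matrix):
    """Upsert matrix[src][dst][metric] = value into the table; return row count."""
    conn.execute(SCHEMA)
    rows = [
        (src, dst, metric, value)
        for src, dests in matrix.items()
        for dst, metrics in dests.items()
        for metric, value in metrics.items()
    ]
    # INSERT OR REPLACE overwrites existing rows with the same primary key.
    conn.executemany(
        "INSERT OR REPLACE INTO network_matrix (src, dst, metric, value) "
        "VALUES (?, ?, ?, ?)",
        rows,
    )
    conn.commit()
    return len(rows)
```

Run from a collector (or, as currently, a test cron job), the upsert keeps the table at one row per link and metric.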

Short Term Plan

- Finish adding information to PanDA
- Use network information in PanDA
  - Optimize the choice of T1-T2 pairings (cloud selection)
    - In ATLAS, production tasks are assigned to Tier 1s
    - Tier 2s attached to the Tier 1 participate in the processing
    - Any T2 may be attached to multiple T1s
    - The operations team makes this assignment manually
    - This could/should be automated using network information
  - Reduce waiting time for user jobs (job brokerage)
    - User analysis jobs go to sites with local input data
    - This can occasionally lead to long wait times (PD2P will eventually make more copies to reduce congestion)
    - Meanwhile, nearby sites with good network access may have idle CPUs
    - We could use network information to assign work to ‘nearby’ sites
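The job-brokerage idea can be illustrated with a small selection rule. Sites that hold the input data are always candidates. A site without the data also qualifies if the measured throughput from a data-holding site reaches a threshold. The sketch below is hypothetical and not PanDA's brokerage algorithm: the `Site` fields, the 100 Mb/s threshold, and the "most idle CPUs, then shortest queue" ranking are all illustrative choices.

```python
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    idle_cpus: int
    queued_jobs: int


def is_nearby(site, data_sites, throughput, min_mbps):
    """True if some site holding the input data reaches `site` at >= min_mbps."""
    return any(throughput.get((src, site), 0.0) >= min_mbps for src in data_sites)


def choose_site(sites, data_sites, throughput, min_mbps=100.0):
    """Pick a site for a user analysis job (hypothetical rule).

    Sites with a local copy of the input are always candidates; other sites
    qualify only if they are 'nearby' in network terms. Among candidates,
    prefer the most idle CPUs, then the shortest queue.
    """
    candidates = [
        s for s in sites
        if s.name in data_sites or is_nearby(s.name, data_sites, throughput, min_mbps)
    ]
    if not candidates:
        return None
    best = max(candidates, key=lambda s: (s.idle_cpus, -s.queued_jobs))
    return best.name
```

With the data only at a busy site `LOCAL` and a well-connected, idle site `NEAR`, the rule sends the job to `NEAR`. Without throughput data, it falls back to `LOCAL`, which is the current behaviour of sending jobs to the data.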

Medium Term Plan

- Improve sources of information
  - Dynamic information
  - Internal measurements
- Additional uses of the information
  - Other brokerage
  - PD2P
  - Job state transitions

Long Term Plans

- Resource provisioning
