An Optimization Framework for Collective Communication Sameer Kumar

- Slides: 20

An Optimization Framework for Collective Communication

Sameer Kumar, Parallel Programming Laboratory, University of Illinois at Urbana-Champaign

Collective Communication

- A communication operation in which all (or most) of the processors participate
  - Examples: broadcast, barrier, all-reduce, all-to-all communication
  - Applications: NAMD multicast, NAMD PME, CPAIMD
- Issues
  - Performance impediment
  - Naïve implementations often do not scale
  - Synchronous implementations do not utilize the coprocessor effectively

Charm++ Workshop 2003

All-to-All Communication

- All processors send data to all other processors
  - All-to-all personalized communication (AAPC)
    - MPI_Alltoall
  - All-to-all multicast/broadcast (AAMC)
    - MPI_Allgather
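The difference between the two operations can be illustrated with a minimal pure-Python sketch of their data movement. It models only the semantics (who ends up holding what), not the MPI calls themselves; the function names `aapc` and `aamc` and the list-of-lists representation are assumptions made for illustration.

```python
def aapc(send):
    """Personalized all-to-all: send[src][dst] is the block src has for dst.

    Each destination ends up with one distinct block from every source,
    so the result is the transpose of the send matrix.
    """
    P = len(send)
    return [[send[src][dst] for src in range(P)] for dst in range(P)]


def aamc(contrib):
    """All-to-all multicast: contrib[src] is the single block src contributes.

    Every processor ends up with a copy of every contribution.
    """
    return [list(contrib) for _ in contrib]
```

With two processors, `aapc([["a0", "a1"], ["b0", "b1"]])` gives processor 0 the blocks `a0` and `b0`, while `aamc(["a", "b"])` gives both processors the same pair of blocks.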

Optimization Strategies

- Short message optimizations
  - High software overhead (α)
  - Message combining
- Large messages
  - Network contention
- Performance metrics
  - Completion time
  - Compute overhead

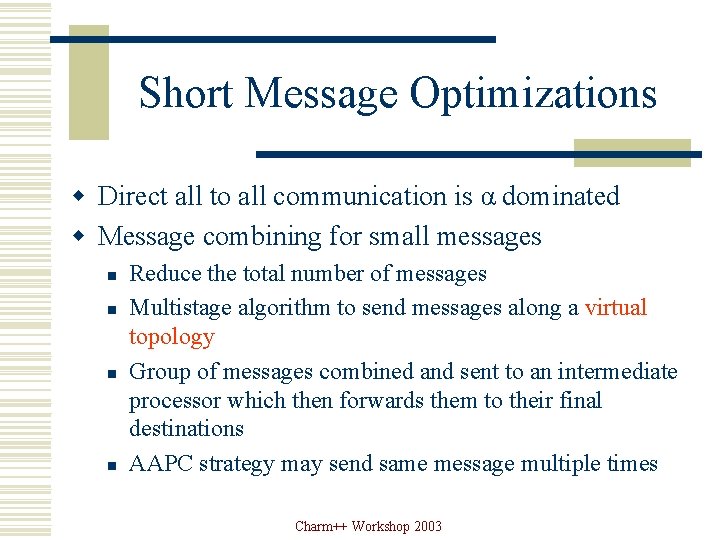

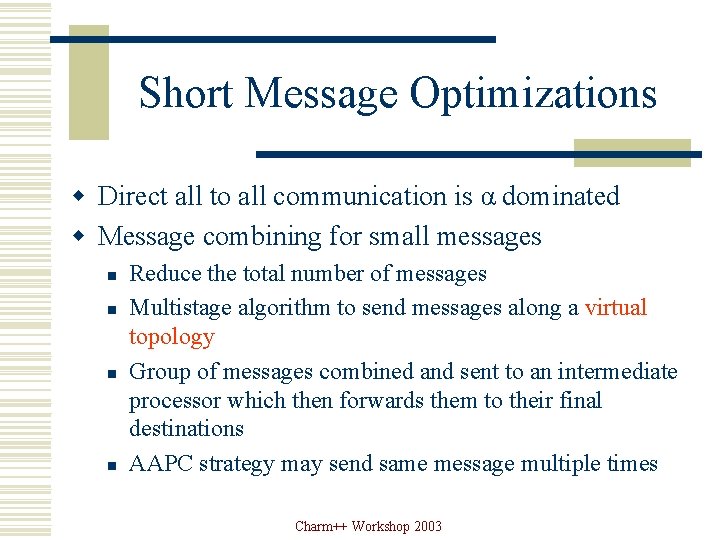

Short Message Optimizations

- Direct all-to-all communication is α dominated
- Message combining for small messages
  - Reduces the total number of messages
  - Multistage algorithm sends messages along a virtual topology
  - A group of messages is combined and sent to an intermediate processor, which then forwards them to their final destinations
  - The AAPC strategy may send the same message multiple times
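To see why combining helps for short messages, here is a rough cost sketch. It assumes a simple linear model in which each message costs α (per-message software overhead) plus m·β (β being a per-byte cost); β, the function names, and the sample values are assumptions and do not come from the slides. The two-stage estimate assumes a √P × √P virtual mesh in which every combined message carries √P items of m bytes.

```python
import math


def direct_time(P, m, alpha, beta):
    """Each processor sends P - 1 separate messages of m bytes."""
    return (P - 1) * (alpha + m * beta)


def mesh_time(P, m, alpha, beta):
    """Two stages of sqrt(P) - 1 combined messages, each sqrt(P) * m bytes.

    Assumes P is a perfect square.
    """
    side = math.isqrt(P)
    if side * side != P:
        raise ValueError("this sketch assumes P is a perfect square")
    return 2 * (side - 1) * (alpha + side * m * beta)


# Hypothetical parameters: 10 microseconds per message, 4 ns per byte.
ALPHA, BETA = 10e-6, 4e-9
small = (direct_time(1024, 100, ALPHA, BETA), mesh_time(1024, 100, ALPHA, BETA))
large = (direct_time(1024, 8192, ALPHA, BETA), mesh_time(1024, 8192, ALPHA, BETA))
```

Under these assumed parameters the combined strategy is cheaper for 100-byte messages, where the α term dominates, and more expensive for 8 KB messages, where each byte is transmitted twice.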

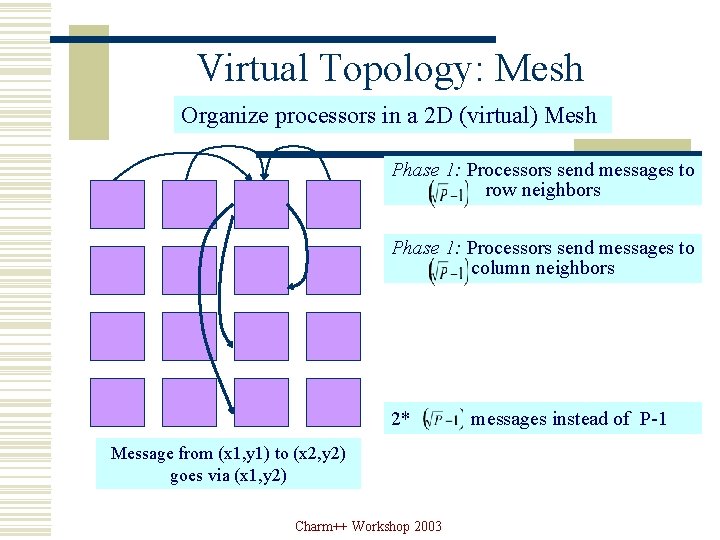

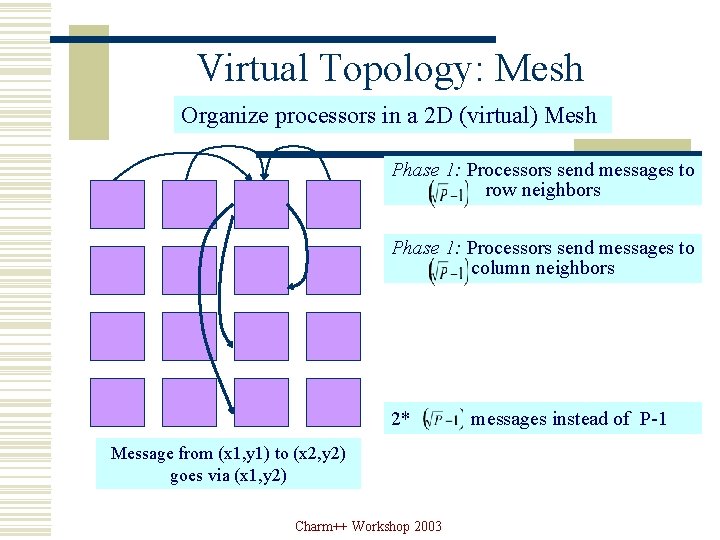

Virtual Topology: Mesh

- Organize processors in a 2-D (virtual) mesh
- Phase 1: Processors send messages to row neighbors
- Phase 2: Processors send messages to column neighbors
- A message from (x1, y1) to (x2, y2) goes via (x1, y2)
- 2(√P - 1) messages instead of P - 1
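The two-phase routing can be checked with a small simulation. This is a minimal sketch, assuming P is a perfect square, that processor (x, y) has rank x·√P + y, and that "row neighbors" share the same x; the function name `mesh_aapc` and its return values are hypothetical.

```python
import math
from collections import defaultdict


def mesh_aapc(P):
    """Simulate two-phase AAPC on a sqrt(P) x sqrt(P) virtual mesh.

    Returns (sent, delivered): sent[p] counts the combined messages that
    processor p sends; delivered[d] is the set of sources whose data reached d.
    """
    side = math.isqrt(P)
    if side * side != P:
        raise ValueError("this sketch assumes P is a perfect square")

    def rank(x, y):
        return x * side + y

    sent = [0] * P
    at_intermediate = defaultdict(list)  # rank -> list of (src, dst) items

    # Phase 1: bundle all items bound for column y2 and hand them to the
    # row neighbor (x1, y2). Items for the sender's own column stay local.
    for src in range(P):
        x1, y1 = divmod(src, side)
        for y2 in range(side):
            bundle = [(src, rank(x2, y2)) for x2 in range(side)]
            at_intermediate[rank(x1, y2)].extend(bundle)
            if y2 != y1:
                sent[src] += 1

    # Phase 2: each intermediate regroups its items by final destination
    # and sends one combined message to each column neighbor.
    delivered = defaultdict(set)
    for mid in range(P):
        by_dest = defaultdict(set)
        for src, dst in at_intermediate[mid]:
            by_dest[dst].add(src)
        for dst, sources in by_dest.items():
            delivered[dst] |= sources
            if dst != mid:
                sent[mid] += 1

    return sent, delivered
```

For P = 16, every processor sends 2(4 - 1) = 6 combined messages rather than 15, and every destination still receives data from all 16 sources.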

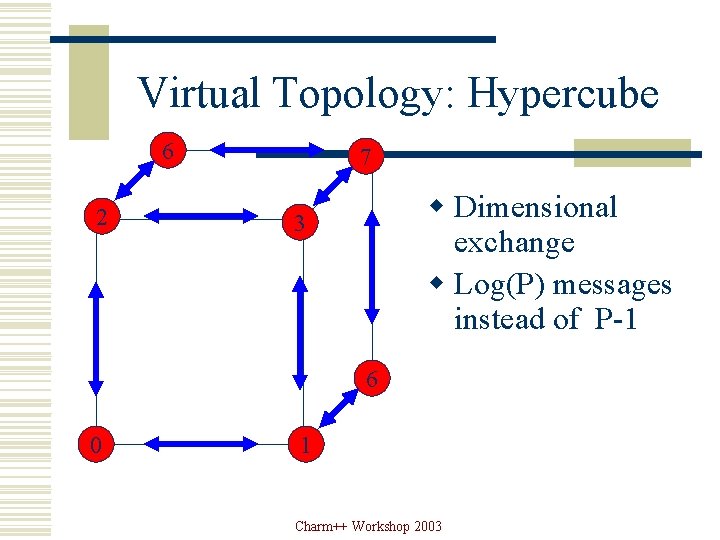

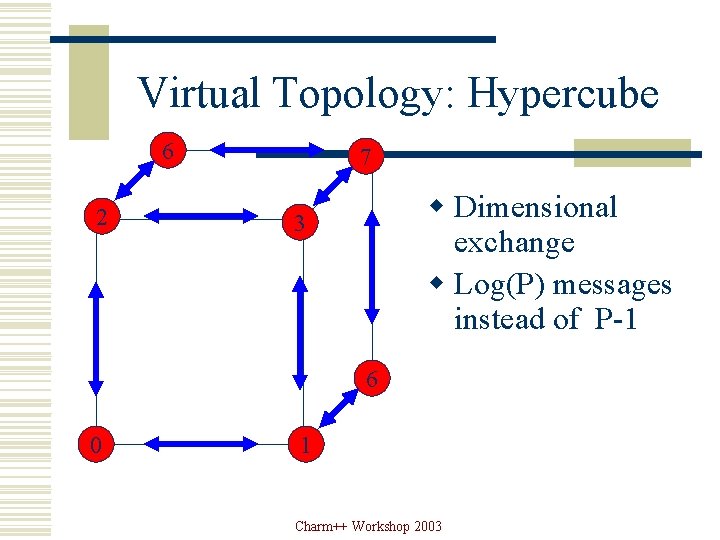

Virtual Topology: Hypercube

- Dimensional exchange
- log(P) messages instead of P - 1
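Dimensional exchange can be sketched in the same way. The simulation below assumes P is a power of two and that in stage j each processor forwards, in one combined message, every item whose destination differs from its own rank in bit j; the function name `hypercube_aapc` is hypothetical.

```python
def hypercube_aapc(P):
    """Simulate AAPC by dimensional exchange on a virtual hypercube.

    Returns (sent, held): sent[p] counts combined messages sent by p;
    held[p] is the set of (src, dst) items at p after the last stage.
    """
    dims = P.bit_length() - 1
    if P < 1 or (1 << dims) != P:
        raise ValueError("this sketch assumes P is a power of two")

    # Initially every processor holds one item for each destination.
    held = [{(p, d) for d in range(P)} for p in range(P)]
    sent = [0] * P

    for j in range(dims):
        bit = 1 << j
        nxt = [set() for _ in range(P)]
        for p in range(P):
            # Items whose destination differs from p in bit j cross dimension j.
            forward = {item for item in held[p] if (item[1] ^ p) & bit}
            nxt[p ^ bit] |= forward
            nxt[p] |= held[p] - forward
            sent[p] += 1  # one combined message per stage
        held = nxt

    return sent, held
```

For P = 8 each processor sends 3 messages instead of 7, and processor p finishes holding exactly the items addressed to p.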

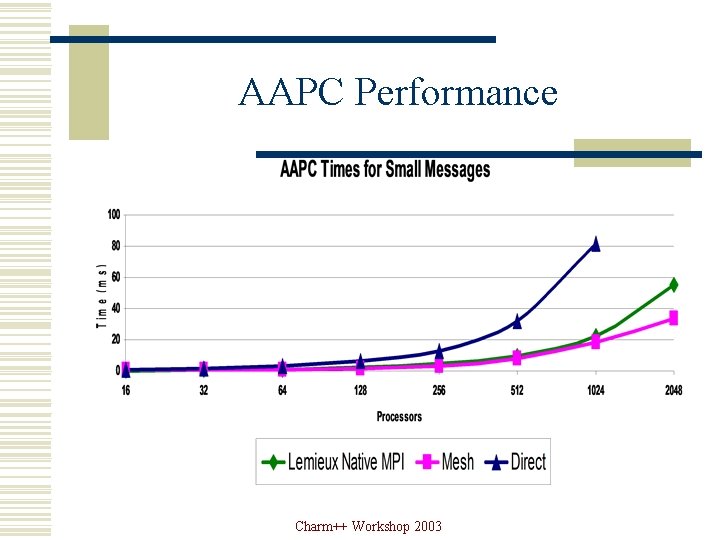

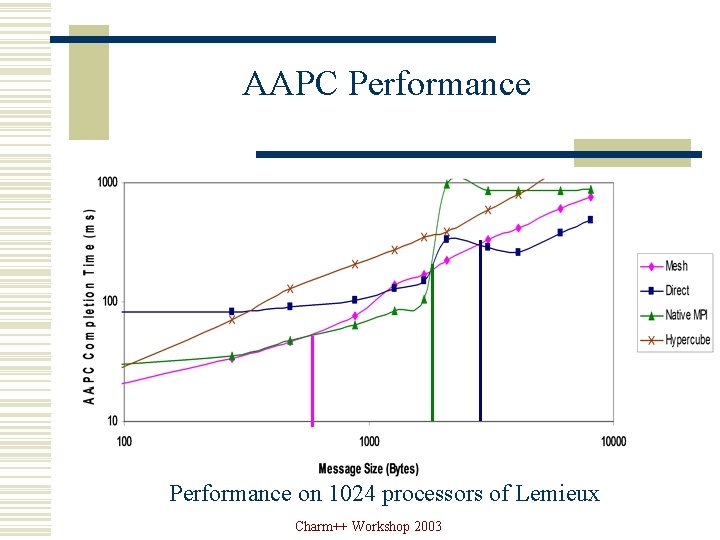

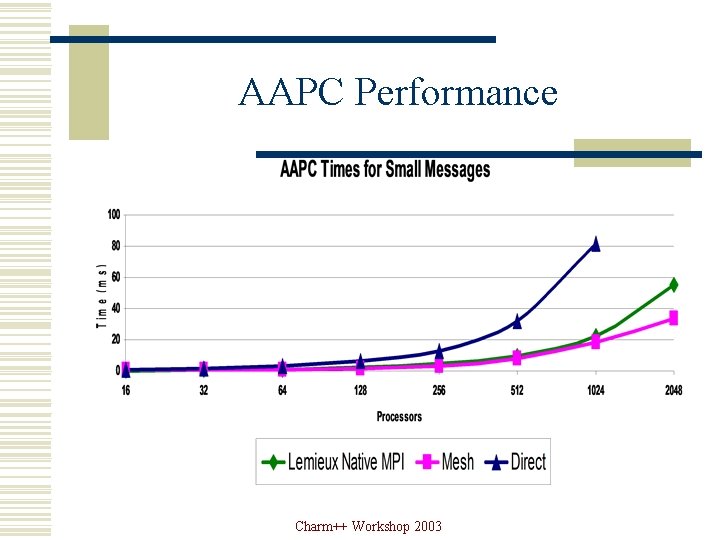

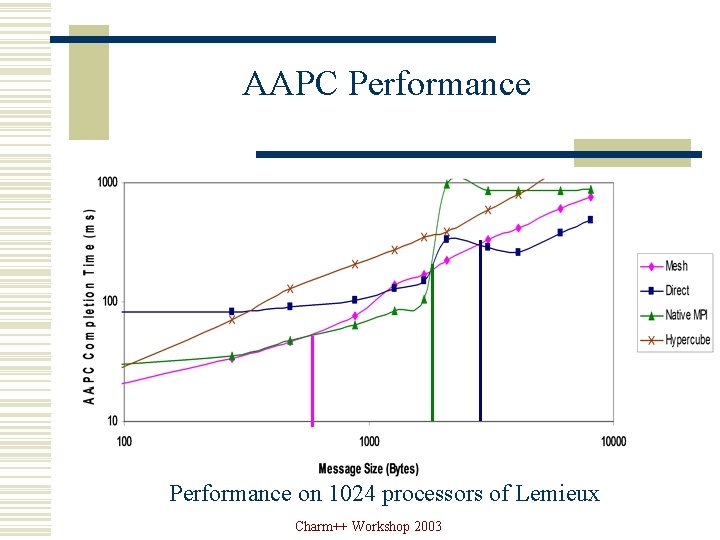

AAPC Performance

AAPC Performance on 1024 processors of Lemieux

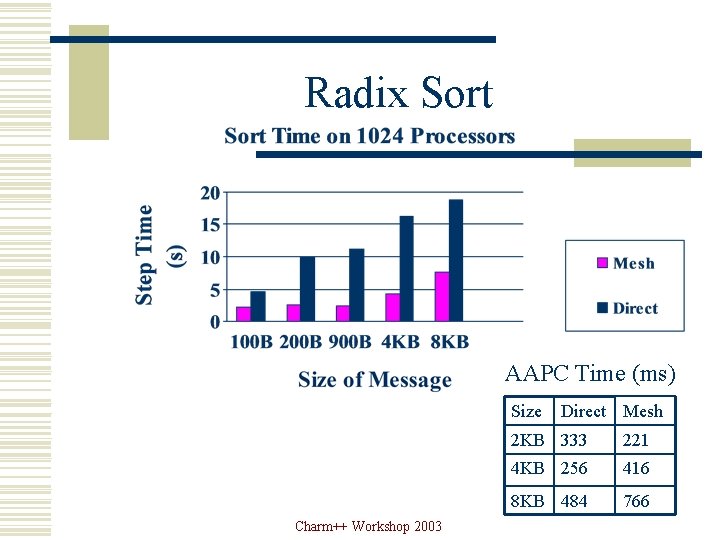

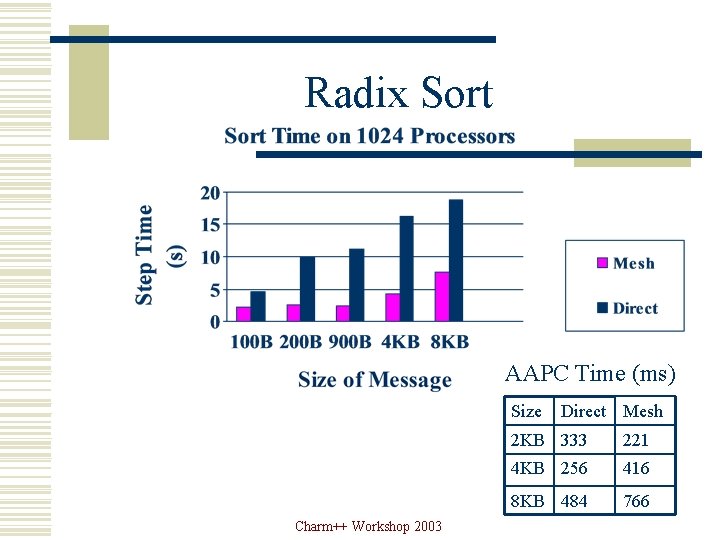

Radix Sort

AAPC time (ms):

| Size | Direct | Mesh |
|------|--------|------|
| 2 KB | 333    | 221  |
| 4 KB | 256    | 416  |
| 8 KB | 484    | 766  |

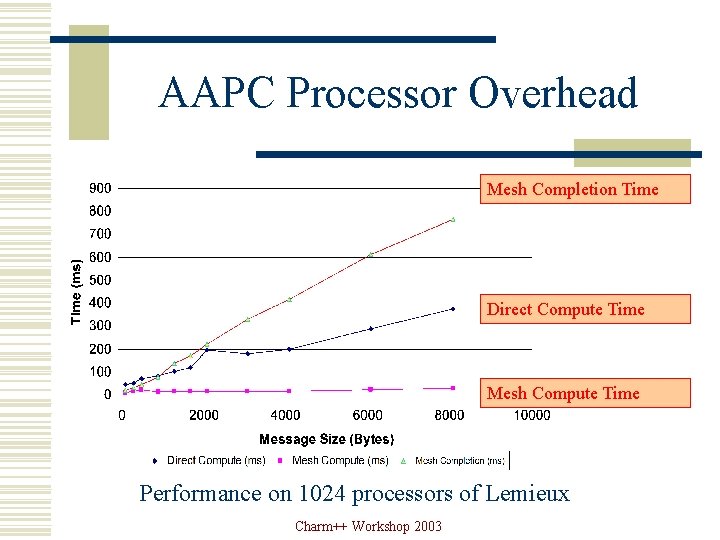

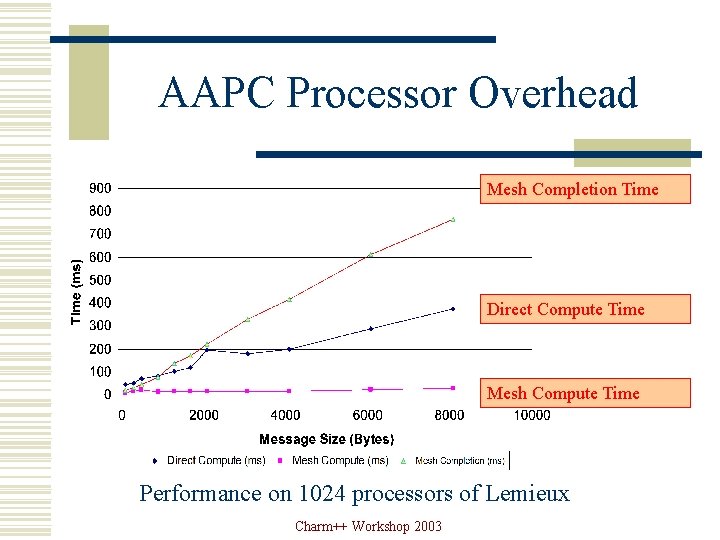

AAPC Processor Overhead

Plotted series: Mesh Completion Time, Direct Compute Time, Mesh Compute Time. Performance on 1024 processors of Lemieux.

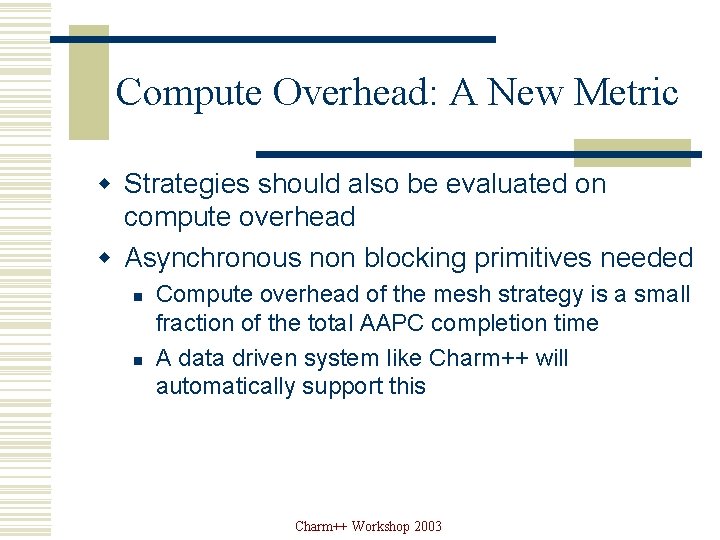

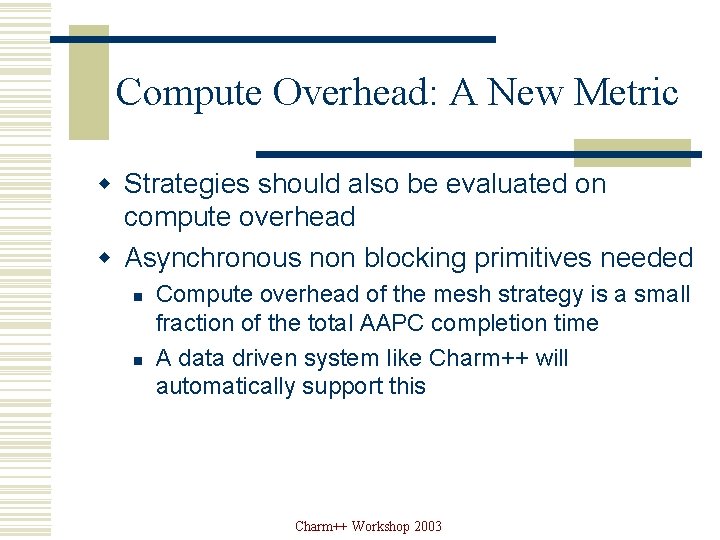

Compute Overhead: A New Metric

- Strategies should also be evaluated on compute overhead
- Asynchronous non-blocking primitives are needed
  - Compute overhead of the mesh strategy is a small fraction of the total AAPC completion time
  - A data-driven system like Charm++ will automatically support this

NAMD

Performance of NAMD with the ATPase molecule. The PME step in NAMD involves a 192 × 144 processor collective operation with 900-byte messages.

Large Message Issues

- Network contention
  - Topology-specific optimizations
  - Contention-free schedules

Fat-tree Topology

- Used by many clustering technologies
- Properties
  - High bisection bandwidth, O(P)
  - Best topology for any volume of hardware
  - Can simulate any other network within a logarithmic factor
  - Many communication patterns are congestion free

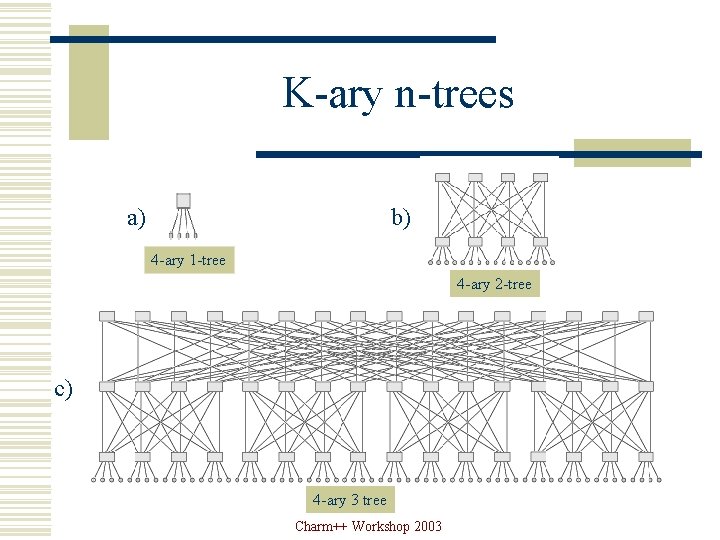

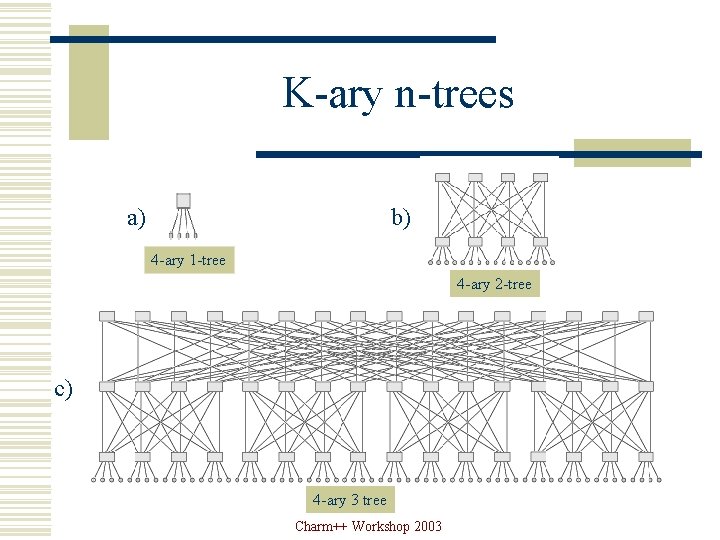

K-ary n-trees

a) 4-ary 1-tree, b) 4-ary 2-tree, c) 4-ary 3-tree

Contention-Free Permutations

- Dimensional exchange
  - In stage j (j = 0, ..., log₂P - 1), processor i sends data to i XOR 2^j
- Prefix send
  - In stage j (j = 1, ..., P - 1), processor i sends data to i XOR j

Prefix Send Strategy

- All-to-all communication with large messages
- P - 1 stages
  - In stage j (j = 0, ..., P - 2), processor i sends a message to processor (i XOR (j+1))
- Can be used for both AAPC and AAMC
- Effective bandwidth of 520 MB/s/node on 64 nodes of Lemieux
  - 80% of the achievable bisection bandwidth
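The prefix send schedule can be generated and sanity-checked in a few lines. This sketch assumes P is a power of two and numbers the stages from 0; the function name `prefix_send_schedule` is hypothetical. It checks only that each stage is a permutation and that all pairs are covered, not that the schedule is contention free on a particular network.

```python
def prefix_send_schedule(P):
    """Return schedule[j][i]: the destination of processor i in stage j.

    Stages are numbered 0 .. P - 2, and processor i sends to i XOR (j + 1).
    """
    if P < 2 or P & (P - 1):
        raise ValueError("this sketch assumes P is a power of two")
    return [[i ^ (j + 1) for i in range(P)] for j in range(P - 1)]


schedule = prefix_send_schedule(8)

# Every stage is a permutation: each processor receives exactly one message.
stages_are_permutations = all(sorted(stage) == list(range(8)) for stage in schedule)

# Over all stages, processor i sends to every other processor exactly once.
covers_all_pairs = all(
    sorted(stage[i] for stage in schedule) == [d for d in range(8) if d != i]
    for i in range(8)
)
```

Because XOR with a fixed nonzero value pairs processors up, each stage is also a pairwise exchange: if i sends to k, then k sends to i in the same stage.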

Summary

- We present optimization strategies for collective communication for both small and large messages
- Small-message collective communication is optimized by message combining
- Large-message performance is enhanced by the use of contention-free schedules
- New performance metric
  - Computation overhead

Future Work

- Optimal strategy depends on (P, m)
  - Develop a learning framework using the principle of persistence
- Physical topologies
  - Blue Gene (3-D grid)
- Many-to-many communication
  - Analysis and new strategies
- Smart strategies for multiple simultaneous AAPCs over sections of processors