Scaling Collective Multicast on Fat-tree Networks, Sameer Kumar

- Slides: 30

Scaling Collective Multicast on Fat-tree Networks
- Sameer Kumar
- Parallel Programming Laboratory, University of Illinois at Urbana-Champaign
- ICPADS'04

Collective Communication
- Communication operation in which all processors, or a large subset of them, participate
  - For example, broadcast
- Performance impediment
- All-to-all communication
  - All-to-all personalized communication (AAPC)
  - All-to-all multicast (AAM)

ICPADS'04, 07/07/04

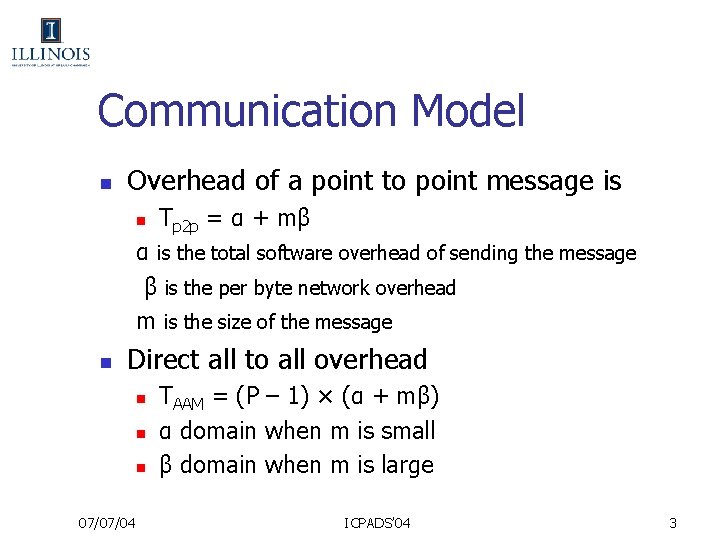

Communication Model
- Overhead of a point-to-point message: $T_{p2p} = \alpha + m\beta$
  - α is the total software overhead of sending the message
  - β is the per-byte network overhead
  - m is the size of the message
- Direct all-to-all overhead: $T_{AAM} = (P - 1) \times (\alpha + m\beta)$
  - α domain when m is small
  - β domain when m is large
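The cost model on this slide can be sketched in a few lines of Python. The parameter values are assumptions for illustration only: they reuse α = 9 μs and 294 MB/s from the "Predicted vs Actual Performance" slide, treat 1 MB as 10^6 bytes, and the function names are hypothetical.

```python
# Linear cost model from the slide: T_p2p = alpha + m * beta.
# ALPHA and BETA are illustrative assumptions, borrowed from the
# "Predicted vs Actual Performance" slide (alpha = 9 us, 294 MB/s).
ALPHA = 9e-6            # seconds of software overhead per message
BETA = 1.0 / 294e6      # seconds per byte, i.e. 1 / (294 MB/s)


def t_p2p(m, alpha=ALPHA, beta=BETA):
    """Time to send one point-to-point message of m bytes."""
    return alpha + m * beta


def t_aam_direct(P, m, alpha=ALPHA, beta=BETA):
    """Direct all-to-all multicast: each processor sends P - 1 messages."""
    return (P - 1) * t_p2p(m, alpha, beta)


def crossover_bytes(alpha=ALPHA, beta=BETA):
    """Message size at which the alpha term equals the beta term."""
    return alpha / beta


if __name__ == "__main__":
    print(f"alpha and beta terms balance near {crossover_bytes():.0f} bytes")
    for m in (64, 1 << 20):
        print(f"P=256, m={m} bytes: {t_aam_direct(256, m):.6f} s")
```

Under these assumed values, messages well below the crossover size fall in the α domain and messages well above it fall in the β domain.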

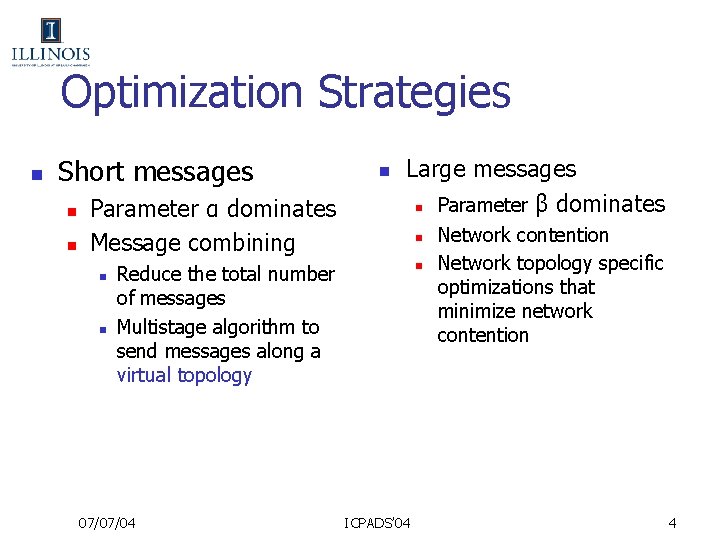

Optimization Strategies
- Short messages
  - Parameter α dominates
  - Message combining
    - Reduce the total number of messages
    - Multistage algorithm to send messages along a virtual topology
- Large messages
  - Parameter β dominates
  - Network contention
    - Network-topology-specific optimizations that minimize network contention

Direct Strategies
- Direct strategies optimize all-to-all multicast for large messages
  - Minimize network contention
  - Topology-specific optimizations that take advantage of contention-free schedules

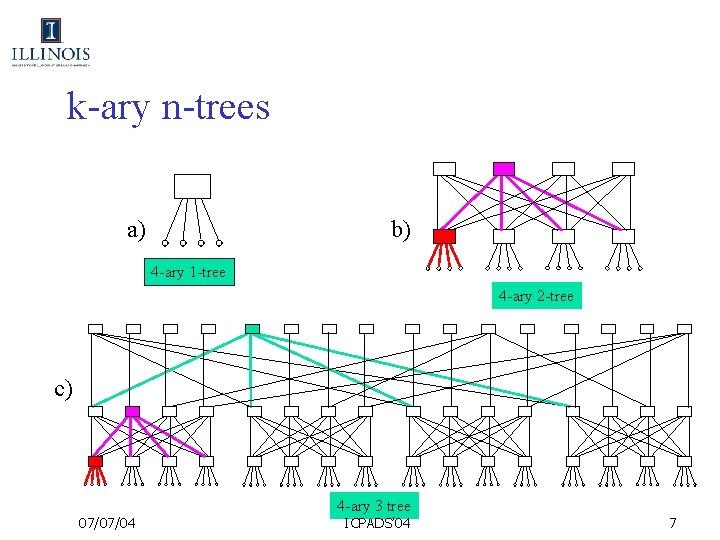

Fat-tree Networks
- Popular network topology for clusters
- Bisection bandwidth O(P)
- Network scales to several thousands of nodes
- Topology: k-ary n-tree

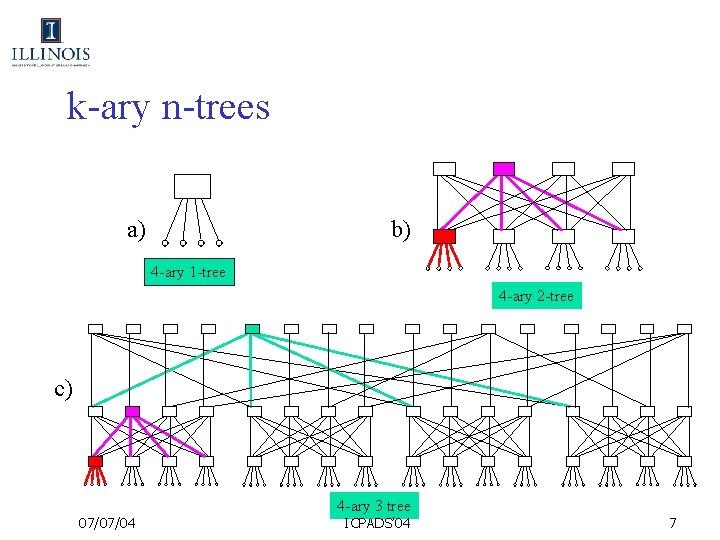

k-ary n-trees
- a) 4-ary 1-tree
- b) 4-ary 2-tree
- c) 4-ary 3-tree
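As a rough aid for reading the three examples, the sketch below computes the size of a k-ary n-tree. It assumes the usual definition (k^n processing nodes and n levels of k^(n-1) switches each); the slide itself only shows the pictures, and the function name is hypothetical.

```python
def kary_ntree_size(k, n):
    """Return (processing_nodes, switches) for a k-ary n-tree.

    Assumes the standard definition: k**n processing nodes and
    n levels with k**(n - 1) switches per level.
    """
    if k < 1 or n < 1:
        raise ValueError("k and n must be positive")
    nodes = k ** n
    switches = n * k ** (n - 1)
    return nodes, switches


if __name__ == "__main__":
    for n in (1, 2, 3):
        nodes, switches = kary_ntree_size(4, n)
        print(f"4-ary {n}-tree: {nodes} nodes, {switches} switches")
```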

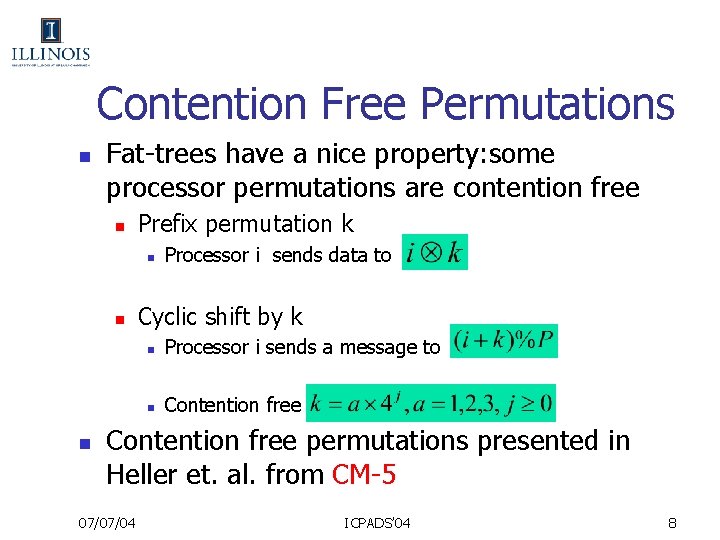

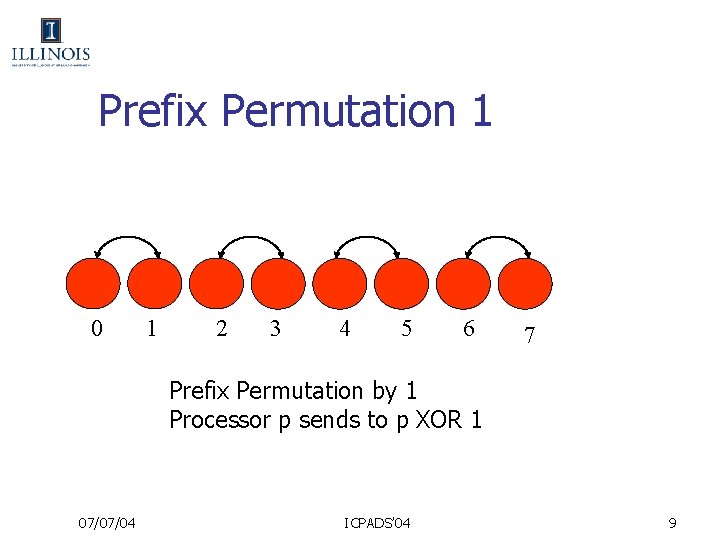

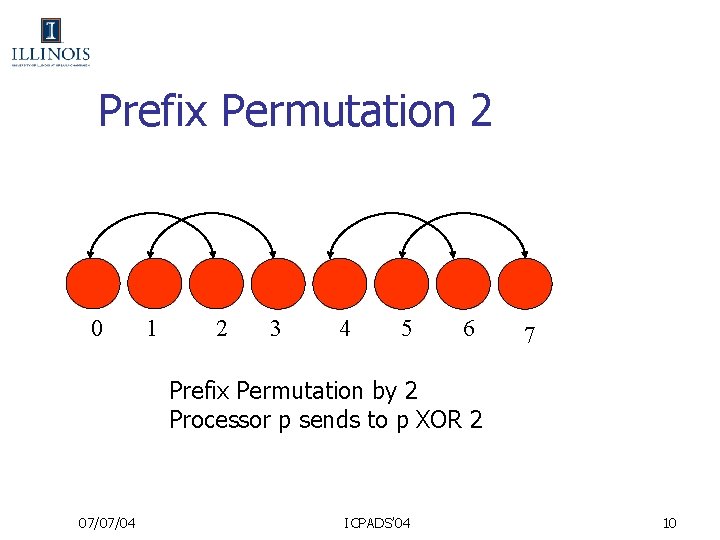

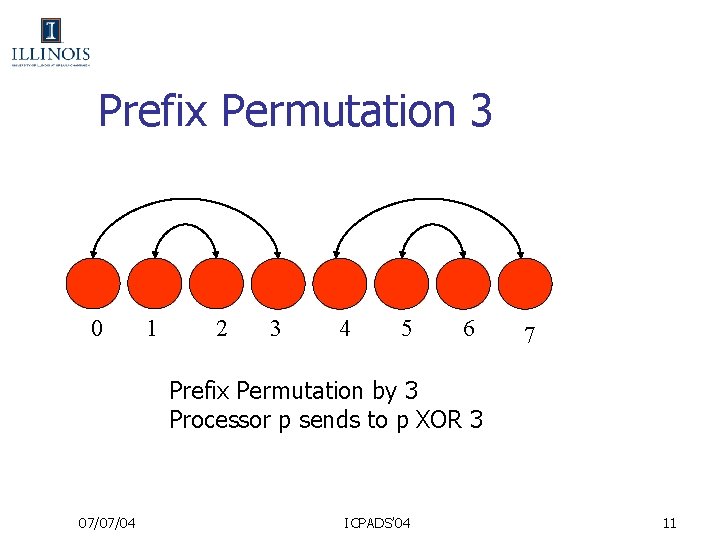

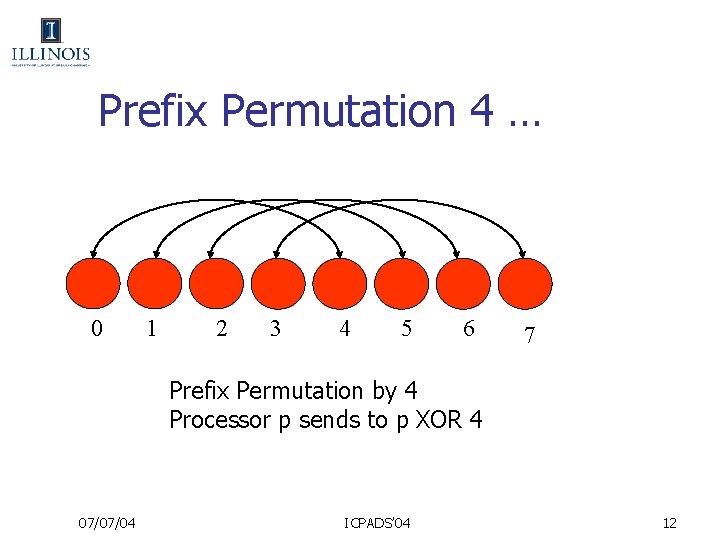

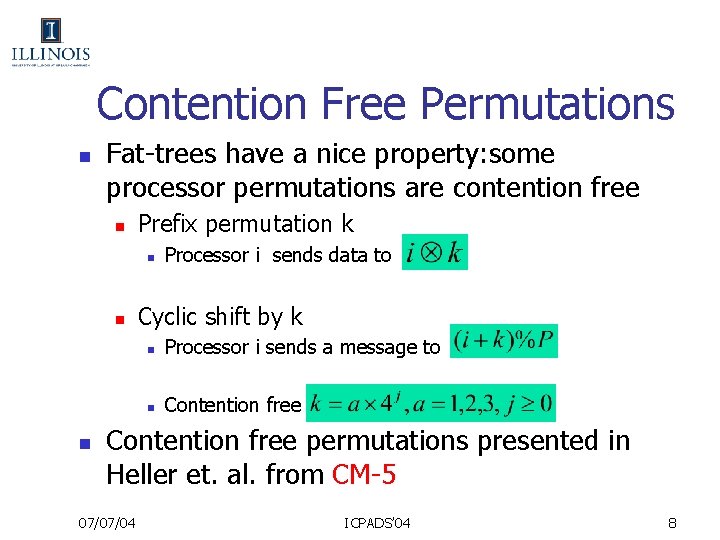

Contention Free Permutations
- Fat-trees have a nice property: some processor permutations are contention free
- Prefix permutation by k
  - Processor i sends data to processor i XOR k
- Cyclic shift by k
  - Processor i sends a message to processor (i + k) mod P
  - Contention free if [condition not preserved]
- Contention-free permutations presented in Heller et al. for the CM-5

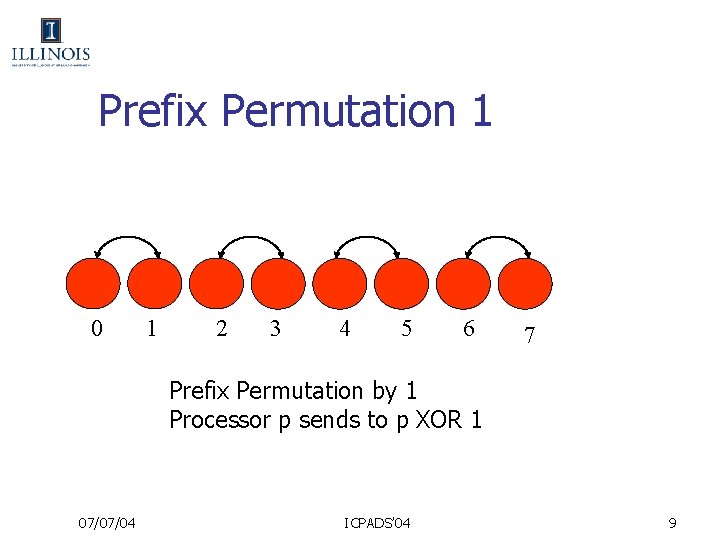

Prefix Permutation 1
- Processors: 0 1 2 3 4 5 6 7
- Prefix permutation by 1: processor p sends to p XOR 1

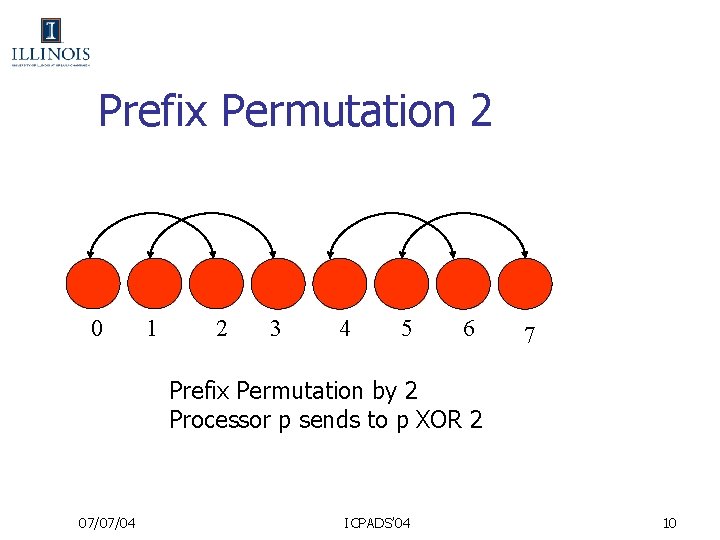

Prefix Permutation 2
- Processors: 0 1 2 3 4 5 6 7
- Prefix permutation by 2: processor p sends to p XOR 2

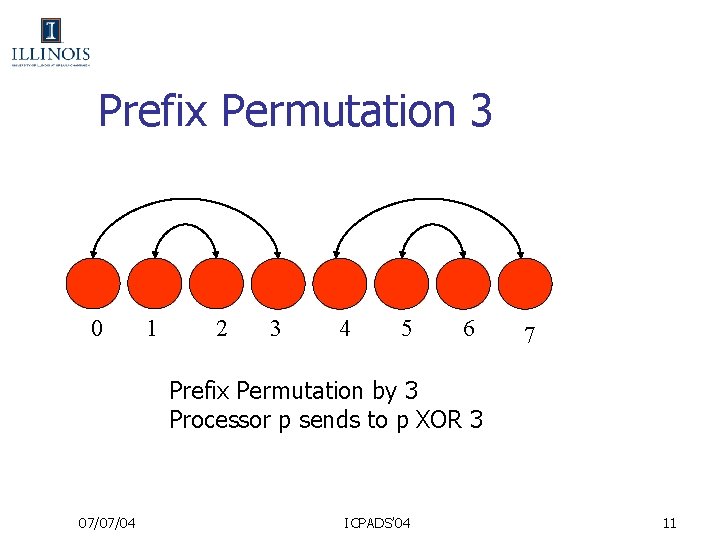

Prefix Permutation 3
- Processors: 0 1 2 3 4 5 6 7
- Prefix permutation by 3: processor p sends to p XOR 3

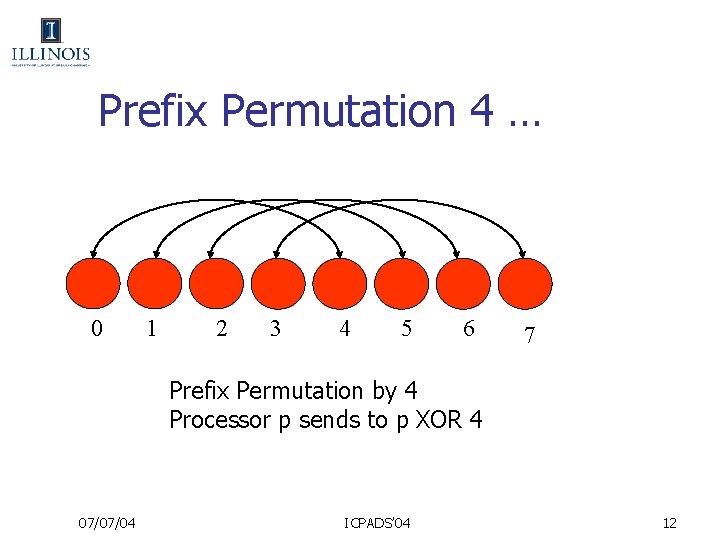

Prefix Permutation 4 …
- Processors: 0 1 2 3 4 5 6 7
- Prefix permutation by 4: processor p sends to p XOR 4
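The four prefix permutations shown on these slides can be reproduced with a short sketch. The function names are hypothetical, and the sketch assumes P is a power of two with k < P, so that p XOR k always stays within 0..P-1.

```python
def prefix_permutation(P, k):
    """Destination of each processor p under prefix permutation by k (p XOR k)."""
    return [p ^ k for p in range(P)]


def is_permutation(dests):
    """True if every processor appears exactly once as a destination."""
    return sorted(dests) == list(range(len(dests)))


if __name__ == "__main__":
    for k in range(1, 5):
        dests = prefix_permutation(8, k)
        print(f"k={k}: {dests} permutation={is_permutation(dests)}")
```

Because XOR with a fixed k is its own inverse, processors are paired up: if p sends to q, then q sends to p.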

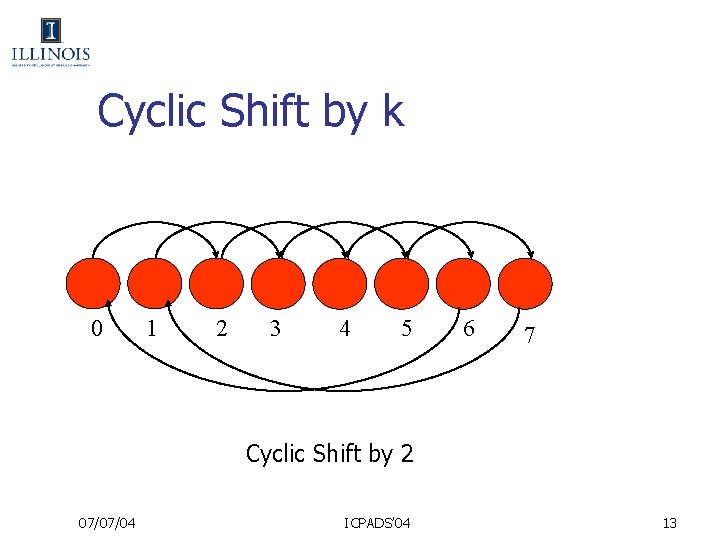

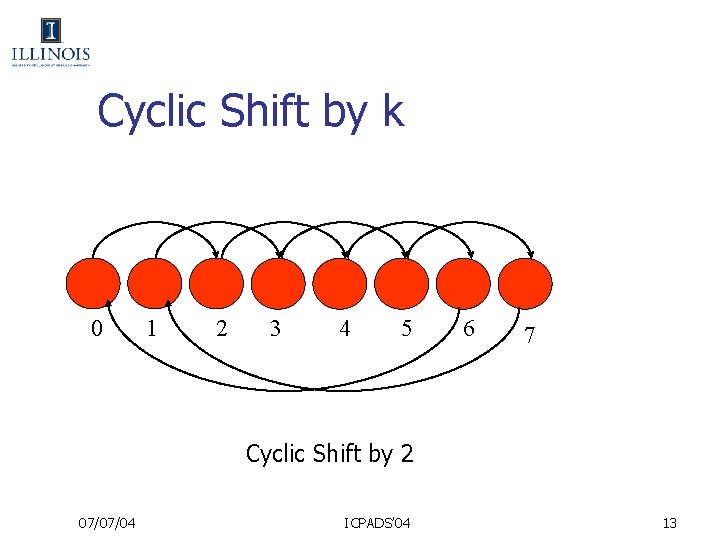

Cyclic Shift by k
- Processors: 0 1 2 3 4 5 6 7
- Example shown: cyclic shift by 2
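A minimal sketch of the cyclic shift, assuming the destination of processor i is (i + k) mod P. The helper `levels_climbed` is a hypothetical addition: it assumes processors are numbered left to right under the tree, so two processors share a level-l subtree when their ranks agree after integer division by arity^l.

```python
def cyclic_shift(P, k):
    """Destination of each processor i under a cyclic shift by k."""
    return [(i + k) % P for i in range(P)]


def levels_climbed(src, dst, arity=4):
    """Tree levels a message climbs before it can turn back down.

    Assumes left-to-right numbering of processors under an
    arity-ary fat-tree; returns 0 when src == dst.
    """
    level = 0
    while src != dst:
        src //= arity
        dst //= arity
        level += 1
    return level


if __name__ == "__main__":
    print(cyclic_shift(8, 2))
    dests = cyclic_shift(64, 1)
    print(max(levels_climbed(i, d) for i, d in enumerate(dests)))
```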

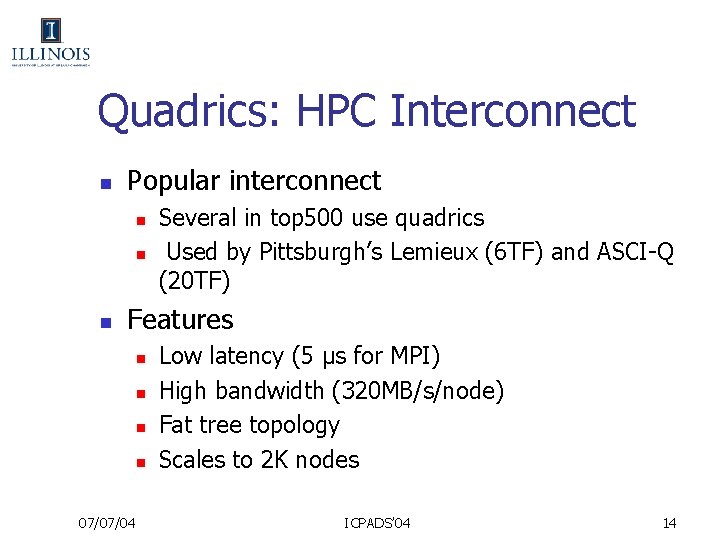

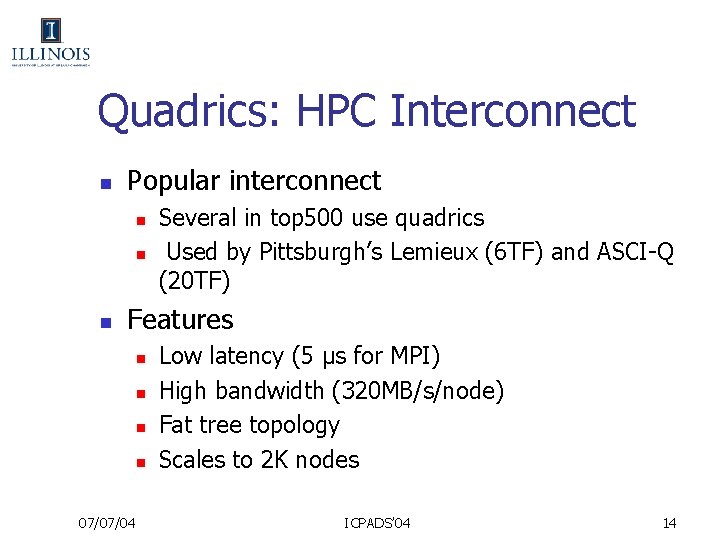

Quadrics: HPC Interconnect
- Popular interconnect
  - Several machines in the Top 500 use Quadrics
  - Used by Pittsburgh's Lemieux (6 TF) and ASCI-Q (20 TF)
- Features
  - Low latency (5 μs for MPI)
  - High bandwidth (320 MB/s/node)
  - Fat-tree topology
  - Scales to 2K nodes

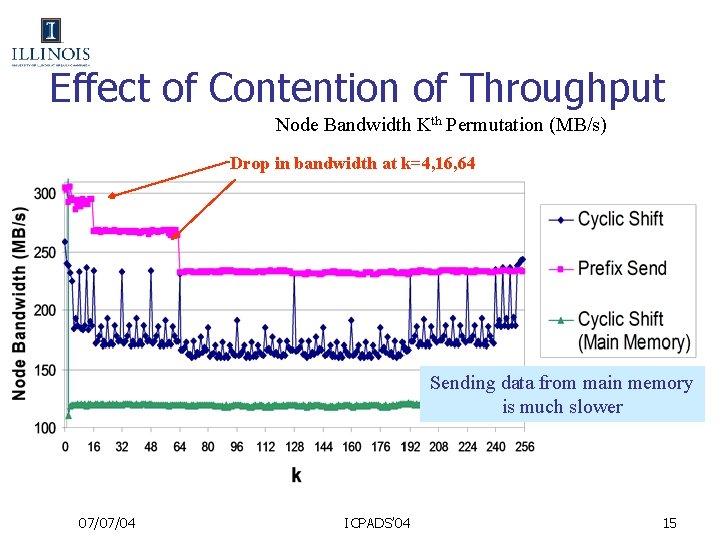

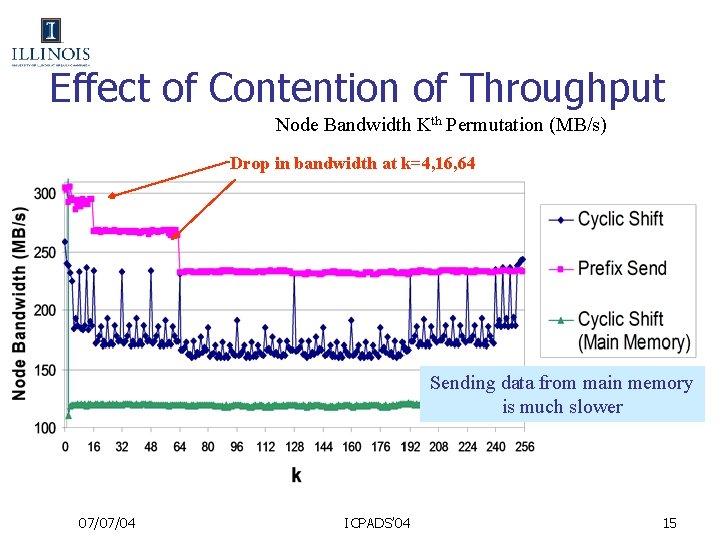

Effect of Contention on Throughput
- Plot: node bandwidth (MB/s) versus the kth permutation
- Drop in bandwidth at k = 4, 16, 64
- Sending data from main memory is much slower

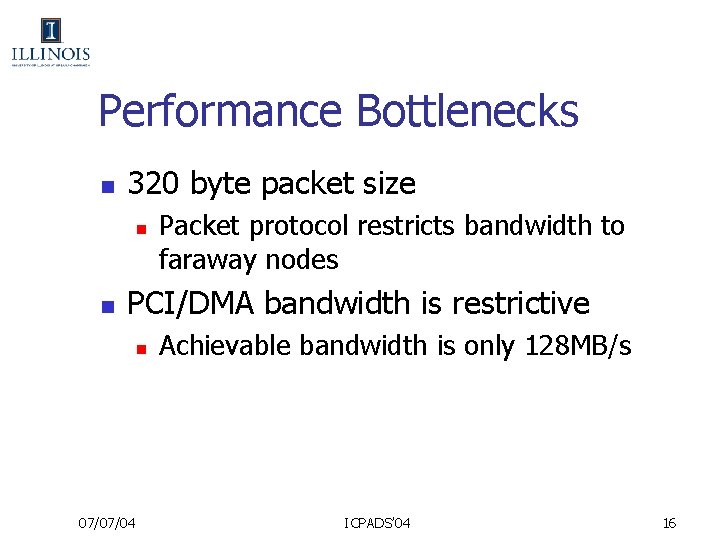

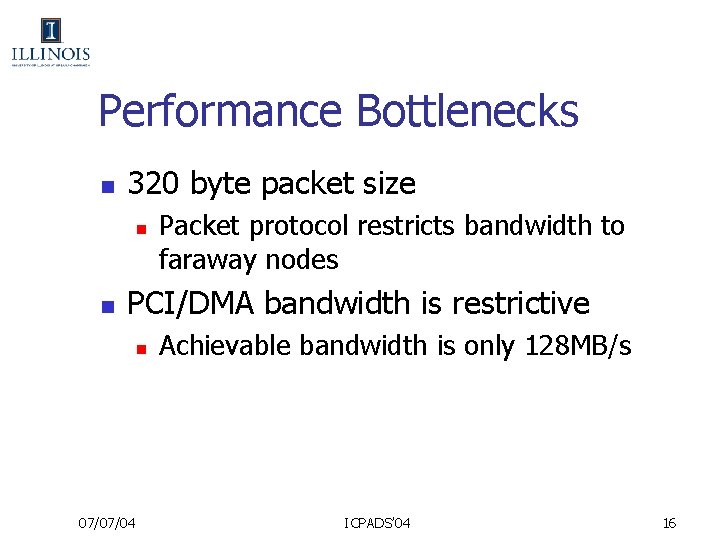

Performance Bottlenecks
- 320-byte packet size
  - Packet protocol restricts bandwidth to faraway nodes
- PCI/DMA bandwidth is restrictive
  - Achievable bandwidth is only 128 MB/s

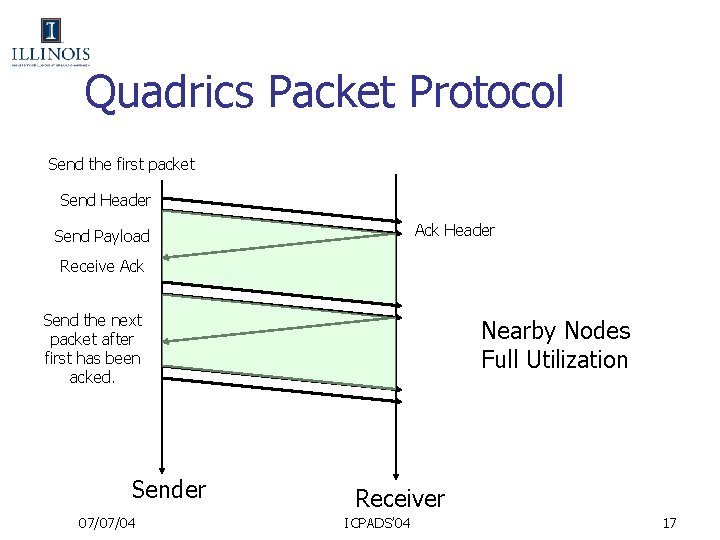

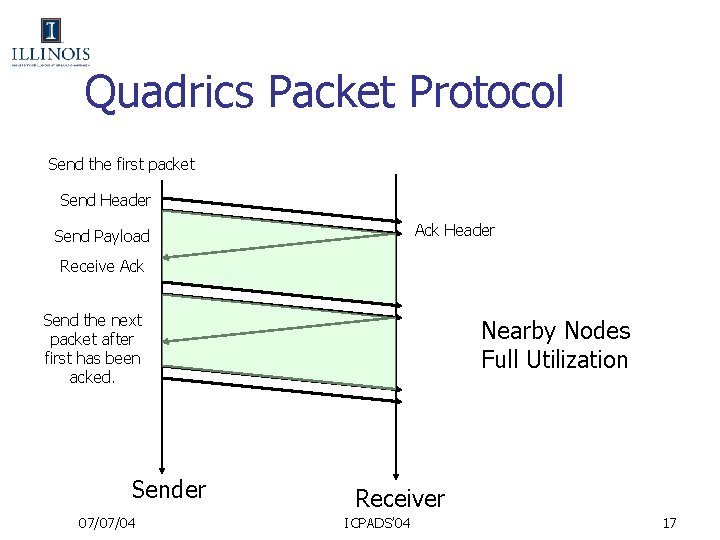

Quadrics Packet Protocol
- Per-packet exchange between sender and receiver: send header, ack header, send payload, receive ack
- Send the first packet, then send the next packet after the first has been acked
- Nearby nodes: full utilization

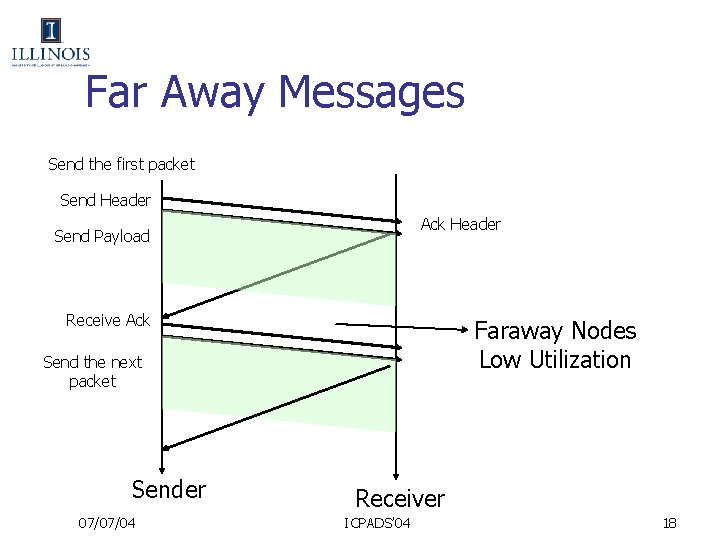

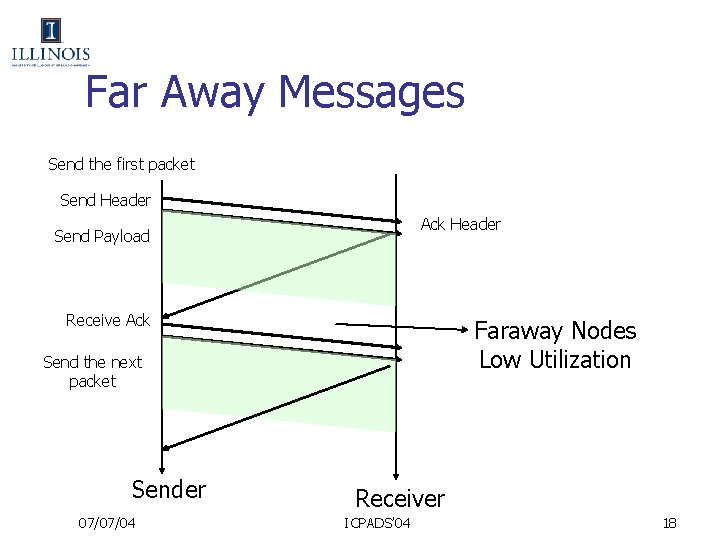

Far Away Messages
- Per-packet exchange between sender and receiver: send header, ack header, send payload, receive ack
- Send the first packet, then send the next packet
- Faraway nodes: low utilization
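The difference between the nearby and faraway cases can be illustrated with a simple stop-and-wait model. This is only a sketch: the packet size (320 bytes) and link rate (320 MB/s, taken as 320 x 10^6 bytes/s) come from the earlier slides, while the per-packet stall time is a hypothetical parameter, not a measured Quadrics figure.

```python
PACKET_BYTES = 320   # packet size quoted on the bottlenecks slide
LINK_BW = 320e6      # bytes/s, the per-node bandwidth quoted earlier


def effective_bandwidth(stall_s, packet_bytes=PACKET_BYTES, link_bw=LINK_BW):
    """Bandwidth when the sender idles stall_s seconds per packet.

    stall_s models the time spent waiting for acknowledgements that is
    not overlapped with transmission (a hypothetical parameter).
    """
    t_send = packet_bytes / link_bw
    return packet_bytes / (t_send + stall_s)


if __name__ == "__main__":
    for stall_us in (0.0, 0.5, 1.0, 2.0):
        bw = effective_bandwidth(stall_us * 1e-6)
        print(f"stall {stall_us} us per packet: {bw / 1e6:.0f} MB/s")
```

In this model the link is fully used only when the stall is zero; any wait per packet lowers throughput, which is consistent with the low utilization the slide reports for faraway nodes.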

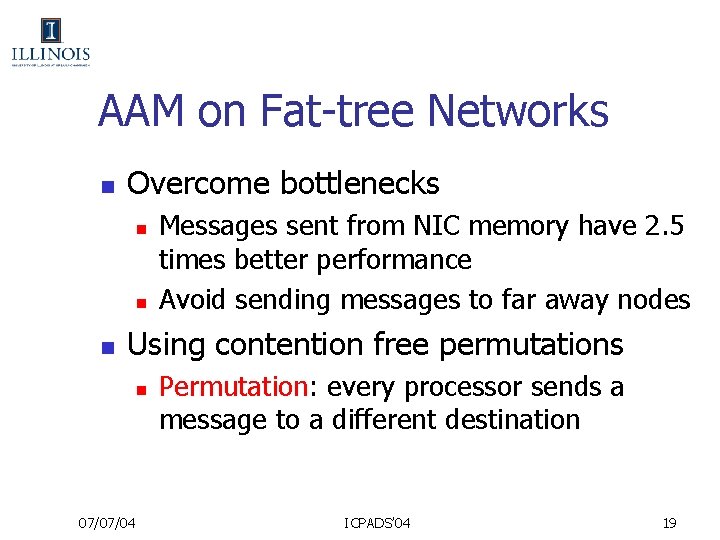

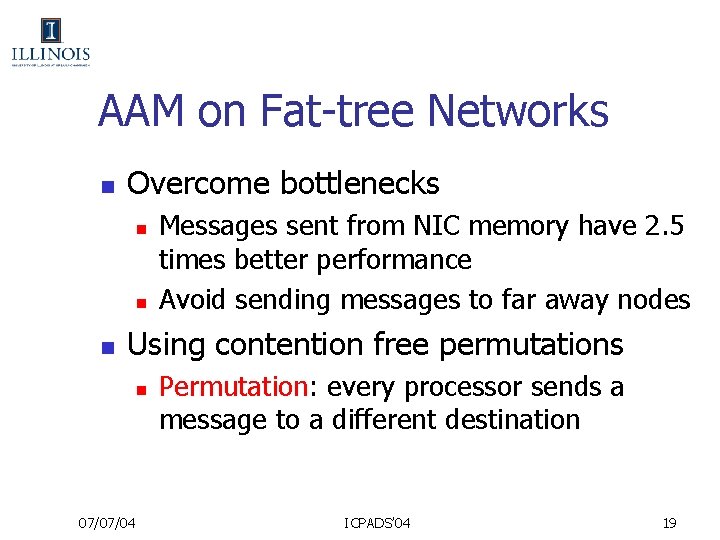

AAM on Fat-tree Networks
- Overcome bottlenecks
  - Messages sent from NIC memory have 2.5 times better performance
  - Avoid sending messages to faraway nodes
- Use contention-free permutations
  - Permutation: every processor sends a message to a different destination

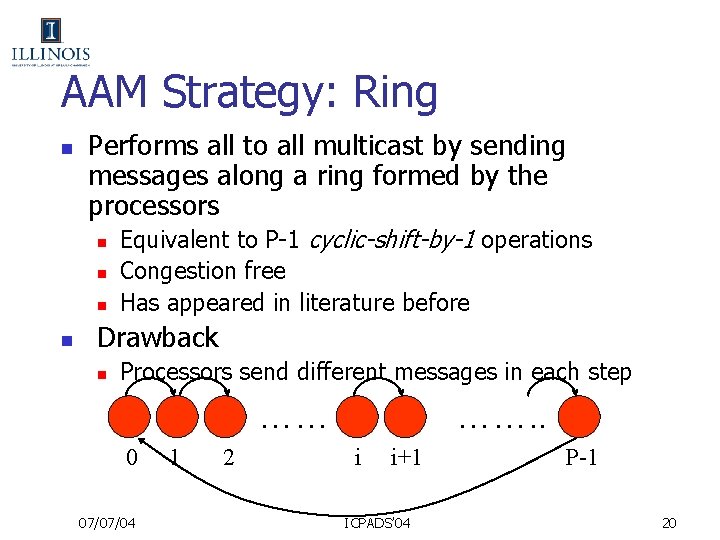

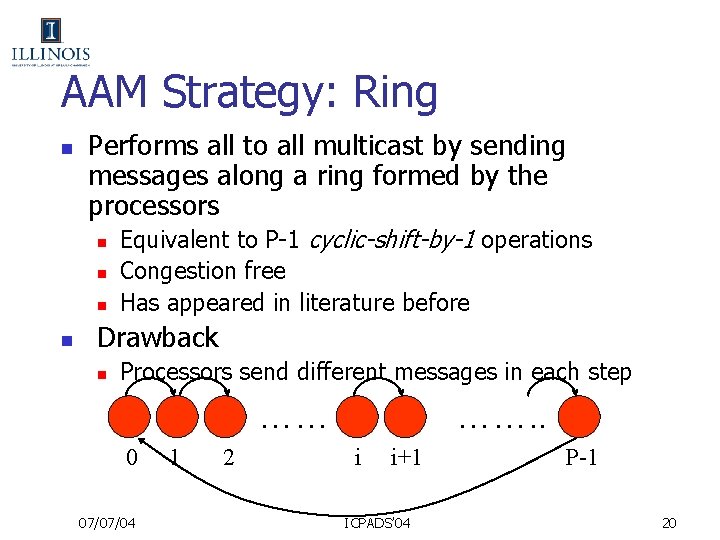

AAM Strategy: Ring
- Performs all-to-all multicast by sending messages along a ring formed by the processors
  - Equivalent to P-1 cyclic-shift-by-1 operations
  - Congestion free
  - Has appeared in the literature before
- Drawback
  - Processors send different messages in each step
- Ring: 0, 1, 2, …, i, i+1, …, P-1
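The ring strategy can be simulated in a few lines to check that P-1 cyclic-shift-by-1 steps deliver every message everywhere. This is a sketch with hypothetical names; messages are represented only by the rank of the processor that originated them.

```python
def ring_all_to_all_multicast(P):
    """Simulate the ring strategy.

    Returns a list where entry i is the set of message origins
    held by processor i after P - 1 steps.
    """
    have = [{i} for i in range(P)]
    outgoing = list(range(P))      # origin of the message each processor sends next
    for _ in range(P - 1):
        incoming = [None] * P
        for i in range(P):
            incoming[(i + 1) % P] = outgoing[i]   # cyclic shift by 1
        for i in range(P):
            have[i].add(incoming[i])
        outgoing = incoming        # forward what was just received
    return have


if __name__ == "__main__":
    result = ring_all_to_all_multicast(8)
    print(all(h == set(range(8)) for h in result))
```

The simulation also shows the drawback named on the slide: `outgoing` changes at every step, so each processor sends a different message each time.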

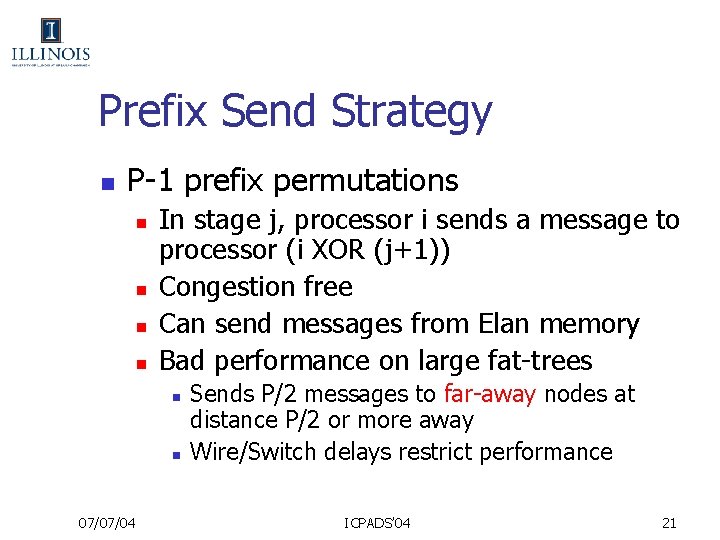

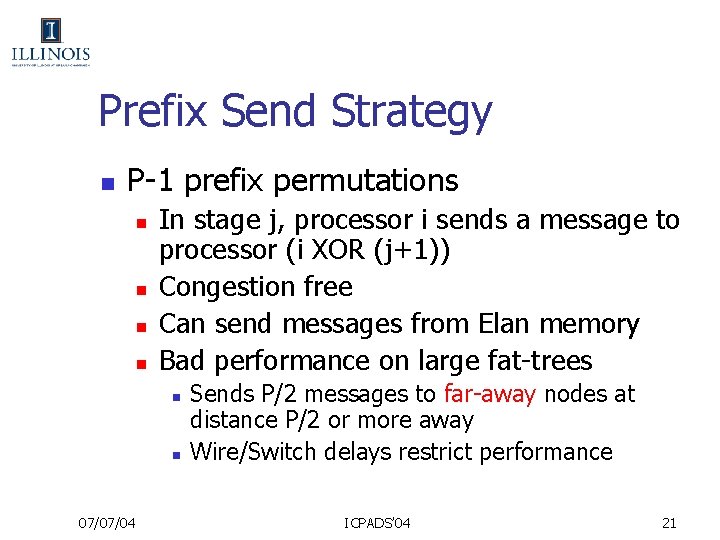

Prefix Send Strategy
- P-1 prefix permutations
  - In stage j, processor i sends a message to processor (i XOR (j+1))
  - Congestion free
  - Can send messages from Elan memory
- Bad performance on large fat-trees
  - Sends P/2 messages to faraway nodes at distance P/2 or more
  - Wire/switch delays restrict performance
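A sketch of the prefix send schedule, with stages numbered j = 0..P-2 as on the slide. It assumes P is a power of two, and it interprets "faraway" as a partner in the other half of the machine (XOR mask of at least P/2); both the function names and that interpretation are assumptions.

```python
def prefix_send_schedule(P):
    """schedule[j][i] is the destination of processor i in stage j."""
    if P < 1 or P & (P - 1):
        raise ValueError("P must be a power of two")
    return [[i ^ (j + 1) for i in range(P)] for j in range(P - 1)]


def far_stages(P):
    """Stages whose XOR mask is at least P/2, so the partner lies in the other half."""
    return [j for j in range(P - 1) if (j + 1) >= P // 2]


if __name__ == "__main__":
    P = 8
    for j, stage in enumerate(prefix_send_schedule(P)):
        print(f"stage {j}: {stage}")
    print(f"{len(far_stages(P))} of {P - 1} stages cross the top of the tree")
```

For P = 8 the last line reports 4 stages, matching the P/2 faraway messages mentioned on the slide.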

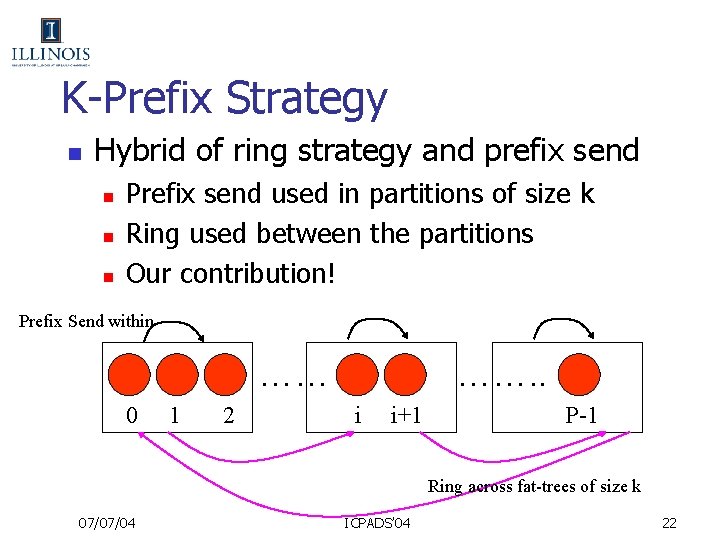

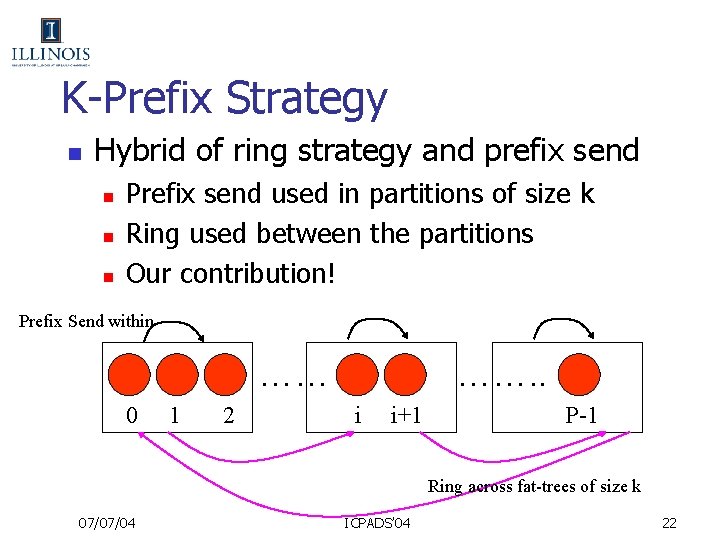

K-Prefix Strategy
- Hybrid of ring strategy and prefix send
  - Prefix send used in partitions of size k
  - Ring used between the partitions
  - Our contribution!
- Diagram: prefix send within, and ring across, fat-trees of size k (processors 0, 1, 2, …, i, i+1, …, P-1)
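The slide does not give a step-by-step schedule, so the following is only one possible reading of the hybrid, not the authors' implementation. It assumes k is a power of two that divides P, that the ring connects processor i to processor (i + k) mod P, and that in each phase a processor relays the message it last received over the ring to its partition peers by prefix send.

```python
def k_prefix_multicast(P, k):
    """Simulate a k-Prefix-style hybrid (an assumed schedule).

    Returns (have, sends): have[i] is the set of message origins held
    by processor i, sends[i] is the number of messages i sent.
    """
    if k < 1 or k & (k - 1) or P % k:
        raise ValueError("k must be a power of two that divides P")
    groups = P // k
    have = [{i} for i in range(P)]
    current = list(range(P))   # origin of the message each node relays this phase
    sends = [0] * P
    for phase in range(groups):
        # Prefix send inside each partition: local rank l pairs with l XOR x.
        for x in range(1, k):
            for i in range(P):
                partner = (i // k) * k + ((i % k) ^ x)
                have[partner].add(current[i])
                sends[i] += 1
        # Ring step across partitions: same local rank, next partition.
        if phase < groups - 1:
            nxt = [None] * P
            for i in range(P):
                dest = (i + k) % P
                nxt[dest] = current[i]
                have[dest].add(current[i])
                sends[i] += 1
            current = nxt
    return have, sends


if __name__ == "__main__":
    have, sends = k_prefix_multicast(16, 4)
    print(all(h == set(range(16)) for h in have), set(sends))
```

Under this reading each processor still sends P-1 messages, but all prefix-send partners stay inside a partition of size k, and only the ring steps leave it.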

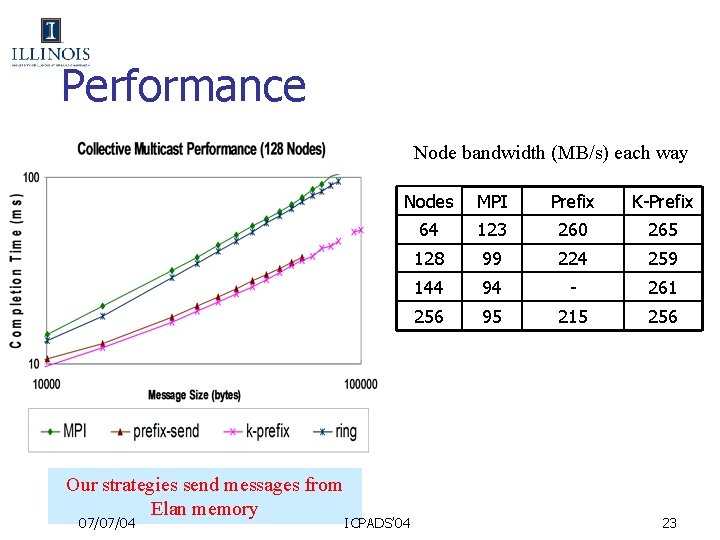

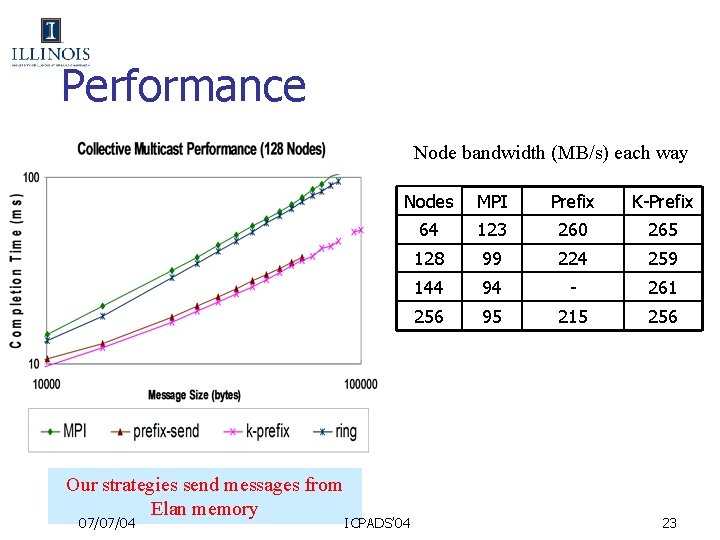

Performance
- Node bandwidth (MB/s) each way
- Our strategies send messages from Elan memory

| Nodes | MPI | Prefix | K-Prefix |
|-------|-----|--------|----------|
| 64    | 123 | 260    | 265      |
| 128   | 99  | 224    | 259      |
| 144   | 94  | -      | 261      |
| 256   | 95  | 215    | 256      |

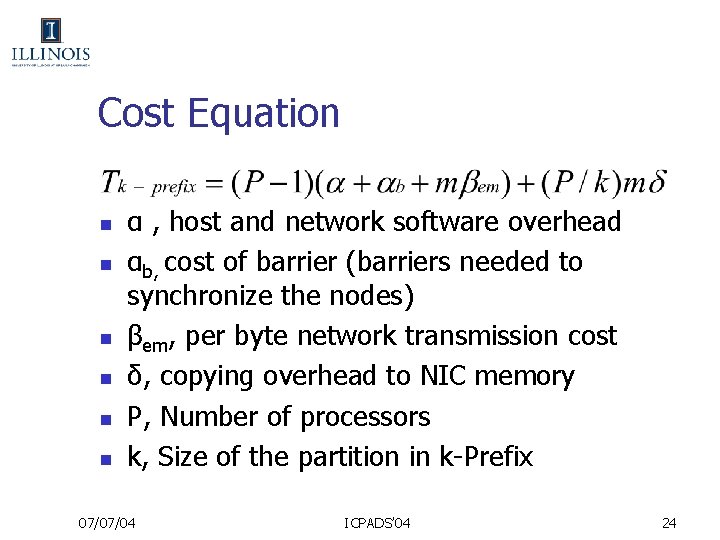

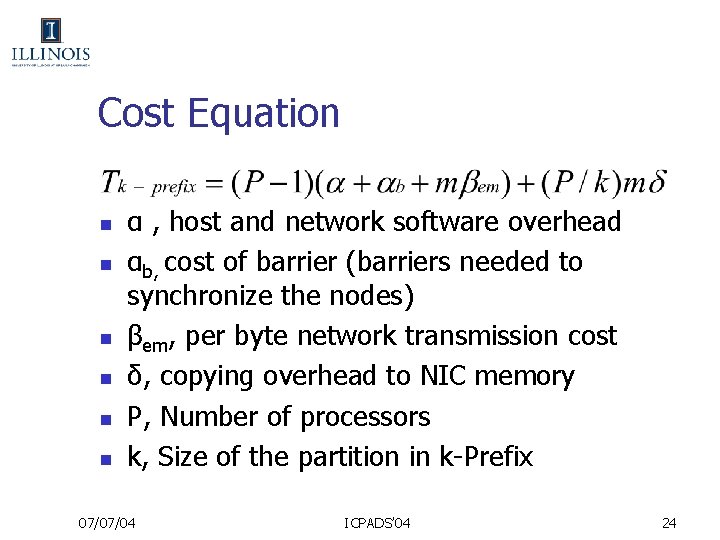

Cost Equation
- α: host and network software overhead
- α_b: cost of a barrier (barriers are needed to synchronize the nodes)
- β_em: per-byte network transmission cost
- δ: copying overhead to NIC memory
- P: number of processors
- k: size of the partition in k-Prefix

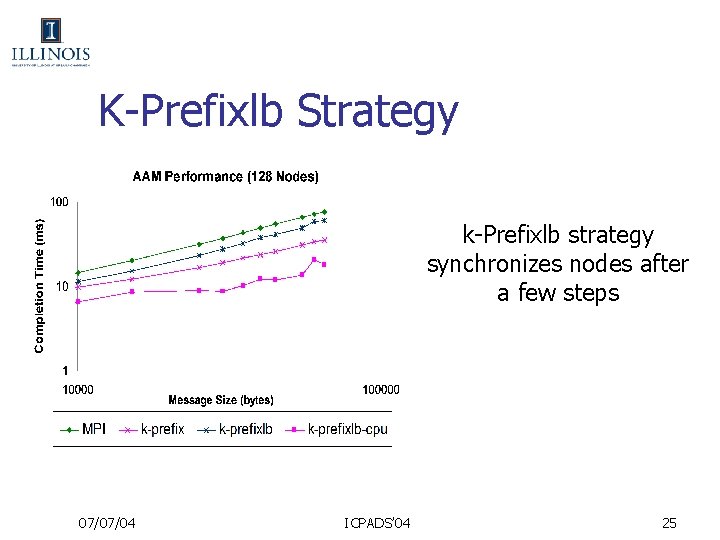

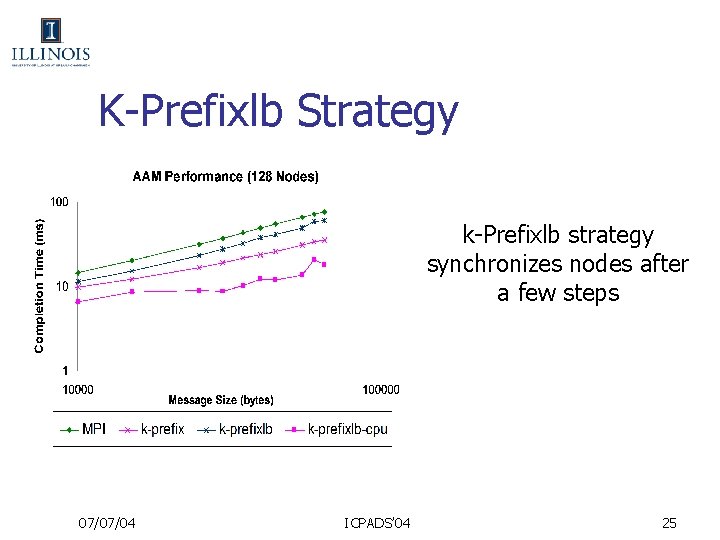

K-Prefixlb Strategy
- The k-Prefixlb strategy synchronizes nodes after a few steps

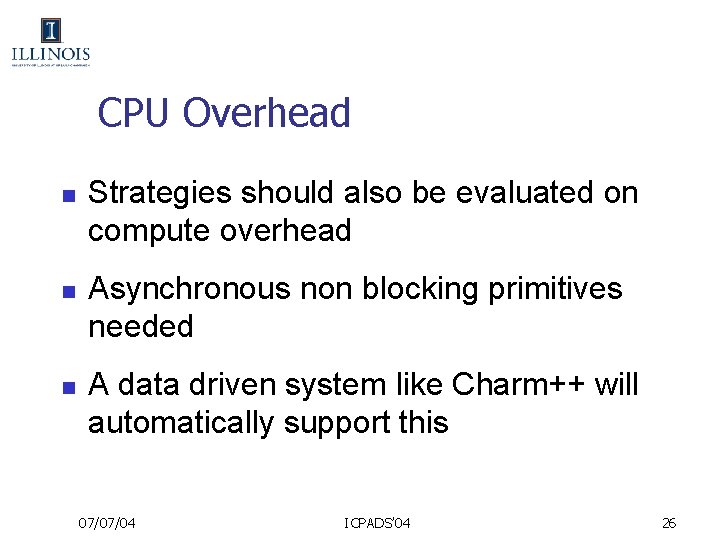

CPU Overhead
- Strategies should also be evaluated on compute overhead
- Asynchronous non-blocking primitives are needed
- A data-driven system like Charm++ will automatically support this

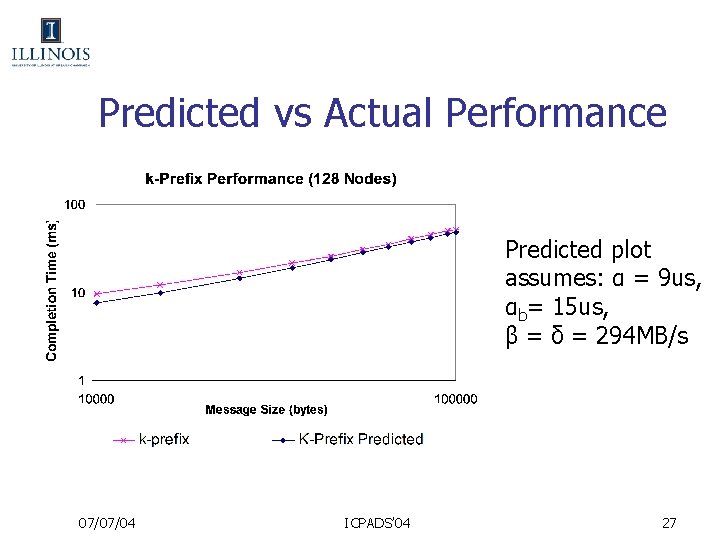

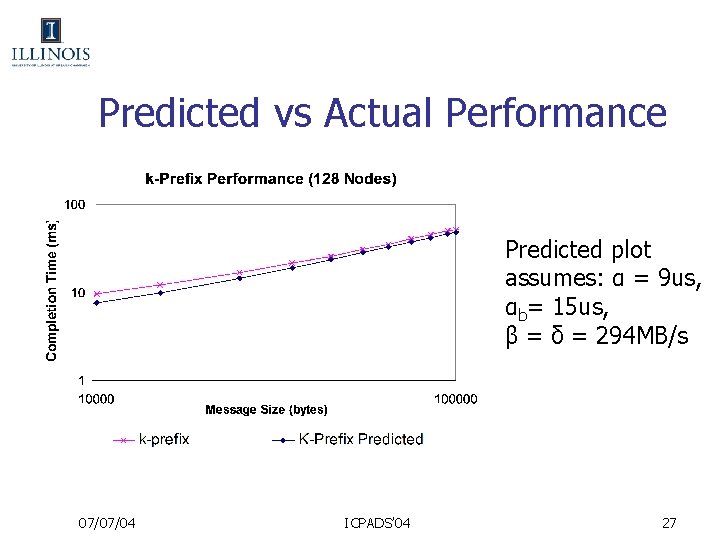

Predicted vs Actual Performance
- Predicted plot assumes: α = 9 μs, α_b = 15 μs, β = δ = 294 MB/s

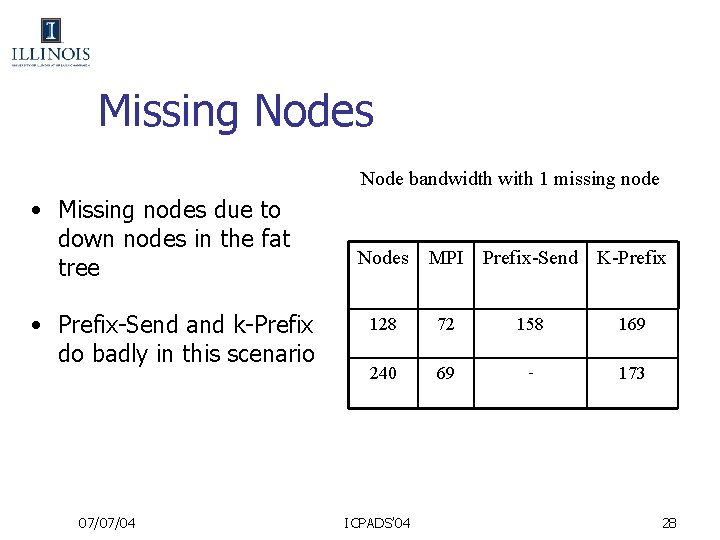

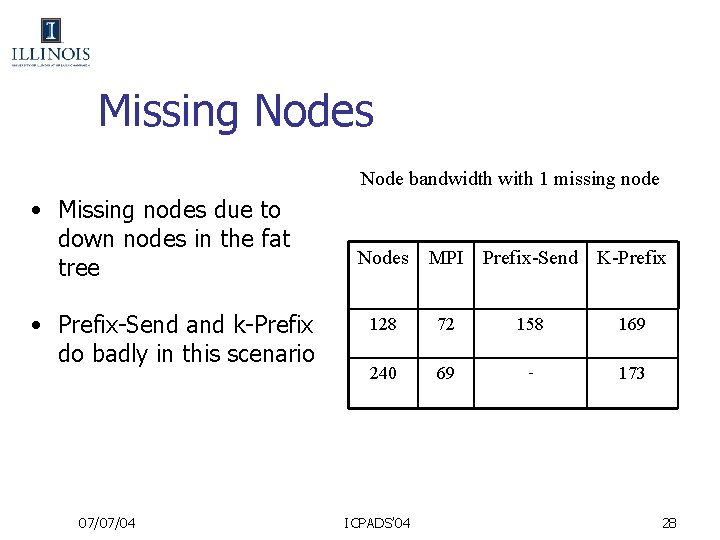

Missing Nodes
- Missing nodes due to down nodes in the fat tree
- Prefix-Send and k-Prefix do badly in this scenario

Node bandwidth (MB/s) with 1 missing node:

| Nodes | MPI | Prefix-Send | K-Prefix |
|-------|-----|-------------|----------|
| 128   | 72  | 158         | 169      |
| 240   | 69  | -           | 173      |

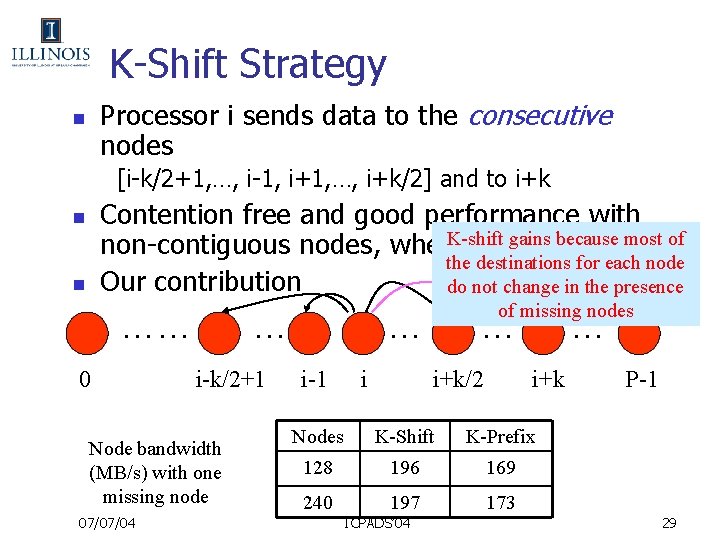

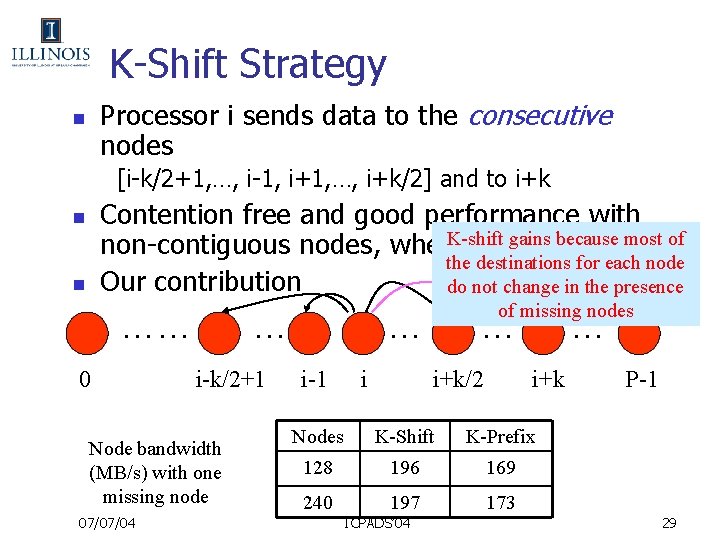

K-Shift Strategy
- Processor i sends data to the consecutive nodes [i-k/2+1, …, i-1, i+1, …, i+k/2] and to i+k
- Contention free, with good performance on non-contiguous nodes, when [condition not preserved]
- K-shift with k=8 gains because most of the destinations for each node do not change in the presence of missing nodes
- Our contribution
- Diagram: 0 … i-k/2+1 … i-1, i, … i+k/2 … i+k … P-1

Node bandwidth (MB/s) with one missing node:

| Nodes | K-Shift | K-Prefix |
|-------|---------|----------|
| 128   | 196     | 169      |
| 240   | 197     | 173      |
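The destination set on this slide can be listed with a short sketch. Wrapping indices modulo P and requiring an even k are assumptions, and the function name is hypothetical; the slide does not say how destinations near the ends of the machine are handled.

```python
def k_shift_destinations(i, k, P):
    """Destinations of processor i: i-k/2+1 .. i+k/2 (excluding i), plus i+k.

    Indices wrap modulo P (an assumption, not stated on the slide).
    """
    if k < 2 or k % 2:
        raise ValueError("k must be an even number >= 2")
    half = k // 2
    offsets = [d for d in range(-half + 1, half + 1) if d != 0] + [k]
    return [(i + d) % P for d in offsets]


if __name__ == "__main__":
    print(k_shift_destinations(10, 8, 128))
```

With k = 8 each processor has eight destinations: seven immediate neighbours and one processor k positions ahead.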

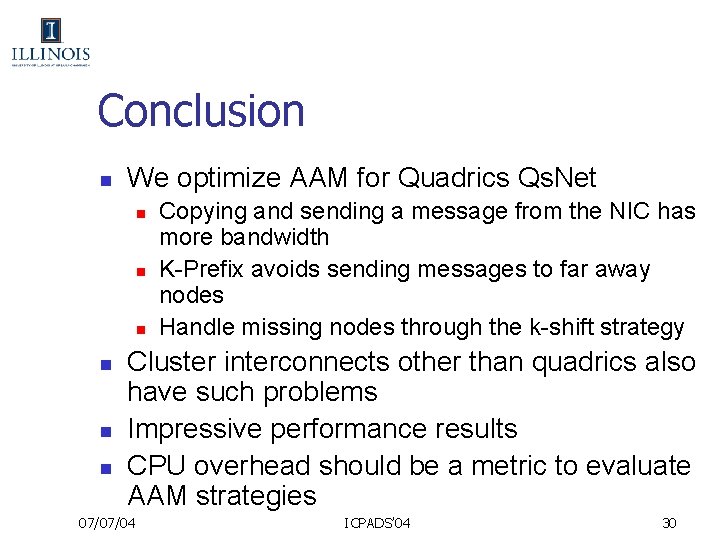

Conclusion
- We optimize AAM for Quadrics QsNet
  - Copying and sending a message from the NIC has more bandwidth
  - K-Prefix avoids sending messages to faraway nodes
  - Handle missing nodes through the k-shift strategy
- Cluster interconnects other than Quadrics also have such problems
- Impressive performance results
- CPU overhead should be a metric to evaluate AAM strategies