4. MEASUREMENT ERRORS

- Slides: 25

Practically all measurements of continuous quantities involve errors. Understanding the nature and source of these errors can help in reducing their impact. In earlier times it was thought that errors in measurement could be eliminated by improvements in technique and equipment; however, most scientists now accept that this is not the case. There are two types of errors: systematic errors and random errors.

Reference: www.capgo.com

4.1. Systematic errors

Systematic errors are deterministic; they may be predicted and hence eventually removed from the data. Systematic errors may be traced by a careful examination of the measurement path: from the measurement object, via the measurement system, to the observer. Another way to reveal a systematic error is to use the repetition method of measurements.

NB: Systematic errors may change with time, so it is important that sufficient reference data be collected to allow the systematic errors to be quantified.

References: www.capgo.com, [1]

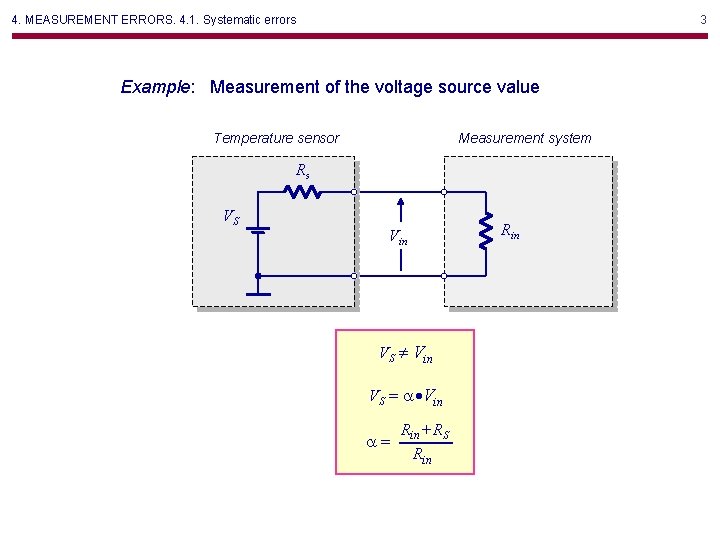

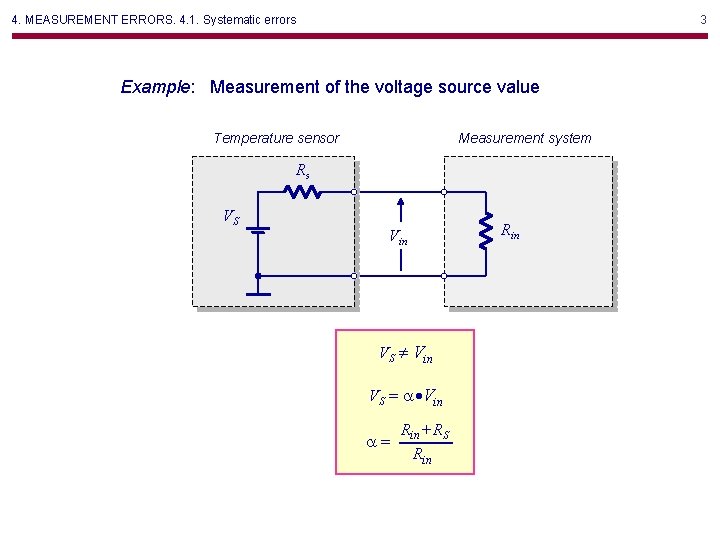

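Example: Measurement of the voltage source value

[Figure: a temperature sensor, modeled as a voltage source $V_S$ with series resistance $R_S$, connected to a measurement system with input resistance $R_{in}$, across which the voltage $V_{in}$ is measured.]

$$V_S = a\,V_{in}, \qquad a = \frac{R_{in} + R_S}{R_{in}}.$$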
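As a rough numerical illustration of this example, the sketch below applies the correction factor a to remove the loading error. The function name and the resistance and voltage values are hypothetical, chosen only to make the effect visible.

```python
def correct_loading_error(v_in: float, r_s: float, r_in: float) -> float:
    """Recover the source voltage V_S from the measured voltage V_in."""
    if r_in <= 0:
        raise ValueError("r_in must be positive")
    a = (r_in + r_s) / r_in  # correction factor of the voltage divider
    return a * v_in


# Hypothetical values: 1 V source, 1 kOhm source resistance, 1 MOhm input resistance.
v_s_true = 1.0
r_s, r_in = 1e3, 1e6

# What the measurement system actually sees across its input resistance.
v_in = v_s_true * r_in / (r_in + r_s)

systematic_error = v_in - v_s_true  # deterministic, always negative here
v_s_corrected = correct_loading_error(v_in, r_s, r_in)

print(f"measured V_in     = {v_in:.6f} V")
print(f"systematic error  = {systematic_error:.6f} V")
print(f"corrected V_S     = {v_s_corrected:.6f} V")
```

Because the error is deterministic, the correction recovers the source voltage exactly, provided that the two resistances are known.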

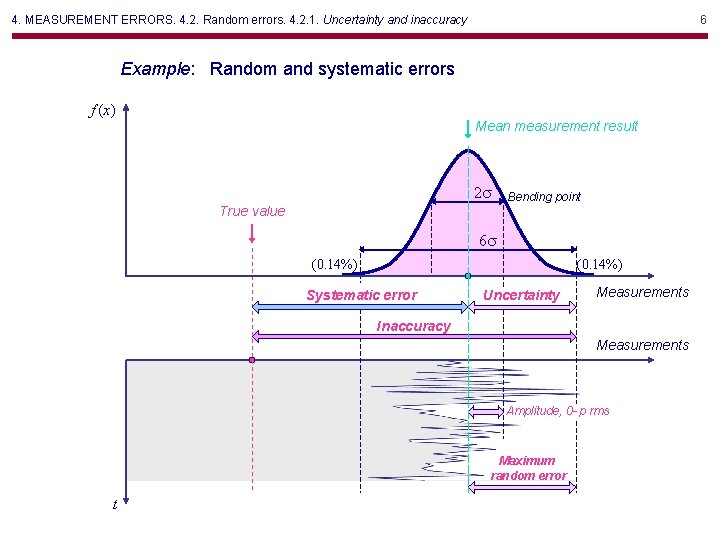

4.2. Random errors

4.2.1. Uncertainty and inaccuracy

Random errors vary unpredictably for every successive measurement of the same physical quantity, made with the same equipment under the same conditions. We cannot correct random errors, since we have no insight into their cause and since they result in random (non-predictable) variations in the measurement result. When dealing with random errors we can only speak of the probability of an error of a given magnitude.

Reference: [1]

NB: Random errors are described in probabilistic terms, while systematic errors are described in deterministic terms. Unfortunately, this deterministic character makes it more difficult to detect systematic errors.

Reference: [1]
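The distinction can be made concrete with a small simulation. This is only a sketch: the true value, the bias and the noise level are assumed numbers, and the true value is available here only because the data are simulated.

```python
import random
import statistics

random.seed(0)

TRUE_VALUE = 10.0  # assumed; unknown in a real measurement
BIAS = 0.3         # assumed systematic error
SIGMA = 0.1        # assumed standard deviation of the random error

# Repeated measurements of the same quantity under the same conditions.
readings = [TRUE_VALUE + BIAS + random.gauss(0.0, SIGMA) for _ in range(10_000)]

mean_reading = statistics.fmean(readings)
systematic_estimate = mean_reading - TRUE_VALUE   # offset of the mean
uncertainty_estimate = statistics.stdev(readings)  # scatter around the mean

print(f"mean reading         = {mean_reading:.4f}")
print(f"systematic error     = {systematic_estimate:.4f}")
print(f"random error (sigma) = {uncertainty_estimate:.4f}")
```

Repetition reveals the scatter directly, but the offset of the mean stays hidden unless a reference value is available, which is why the systematic error is the harder one to detect.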

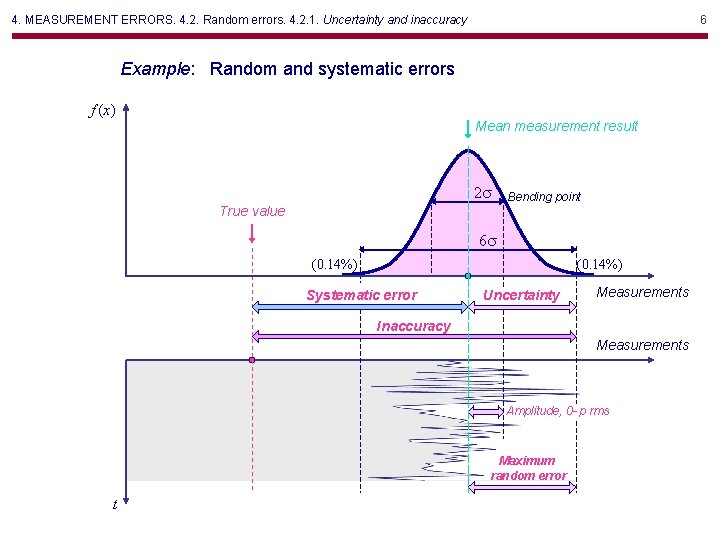

Example: Random and systematic errors

[Figure: probability density function f(x) of the measurements, and the measurements versus time t. Labels: true value, mean measurement result, systematic error, bending point, 2σ, 6σ (0.14%), maximum random error, uncertainty, inaccuracy, amplitude (0-p, rms).]

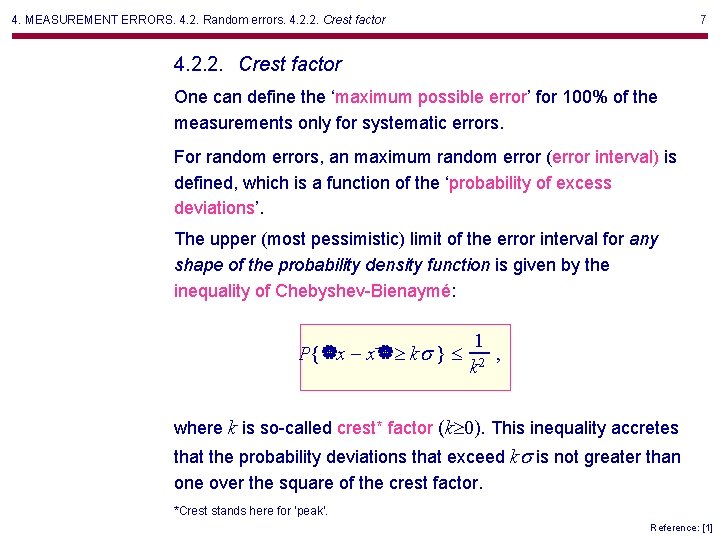

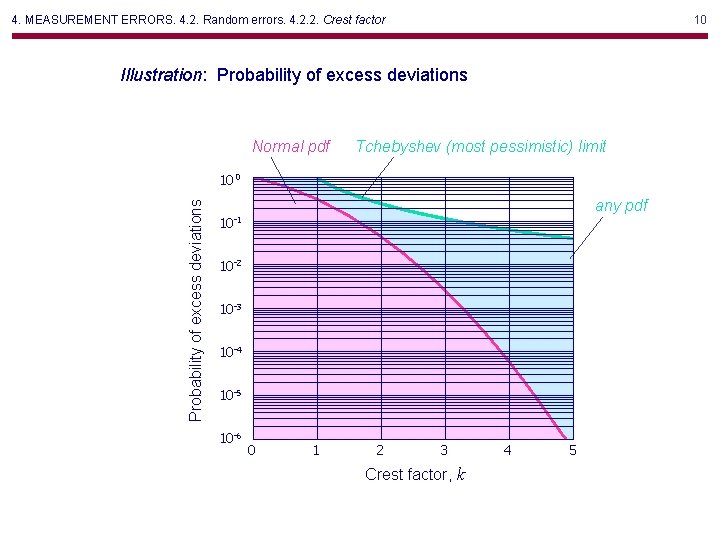

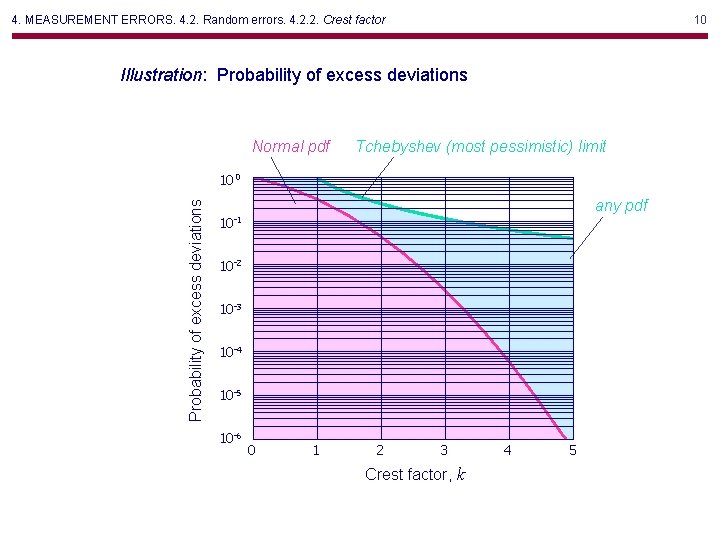

4.2.2. Crest factor

One can define the ‘maximum possible error’ for 100% of the measurements only for systematic errors. For random errors, a maximum random error (error interval) is defined, which is a function of the ‘probability of excess deviations’. The upper (most pessimistic) limit on this probability, valid for any shape of the probability density function, is given by the Chebyshev-Bienaymé inequality:

$$P\{|x - \bar{x}| \ge k\sigma\} \le \frac{1}{k^2},$$

where k is the so-called crest* factor (k > 0). This inequality asserts that the probability of deviations that exceed kσ is not greater than one over the square of the crest factor.

*Crest stands here for ‘peak’.

Reference: [1]
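The inequality can be checked empirically on a strongly non-normal distribution. The sketch below uses exponentially distributed samples as an assumed example; the helper name `excess_fraction` is hypothetical.

```python
import random
import statistics

random.seed(1)

# Exponential samples: a skewed pdf, chosen only as an example of "any shape".
samples = [random.expovariate(1.0) for _ in range(100_000)]
mean = statistics.fmean(samples)
sigma = statistics.pstdev(samples)


def excess_fraction(k: float) -> float:
    """Fraction of samples whose deviation from the mean is at least k*sigma."""
    return sum(abs(x - mean) >= k * sigma for x in samples) / len(samples)


for k in (1.5, 2.0, 3.0, 4.0):
    print(f"k = {k}: observed {excess_fraction(k):.5f}, limit {1.0 / k**2:.5f}")
```

The observed fractions stay below the limit for every k, usually by a wide margin, which reflects how pessimistic the bound is.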

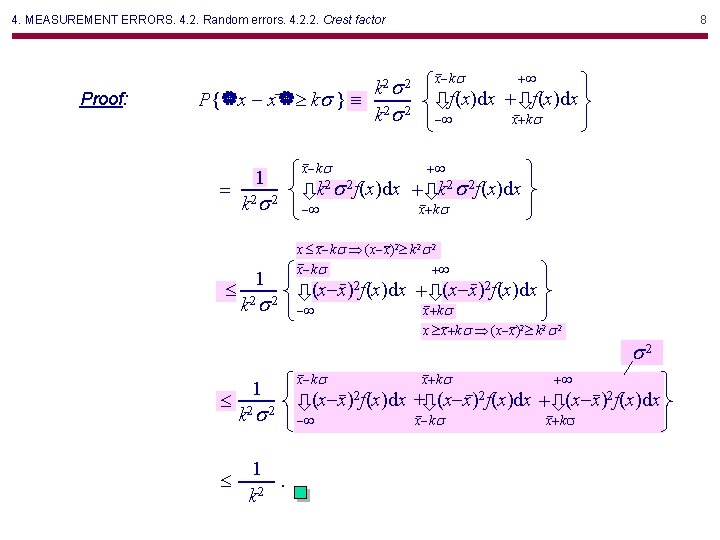

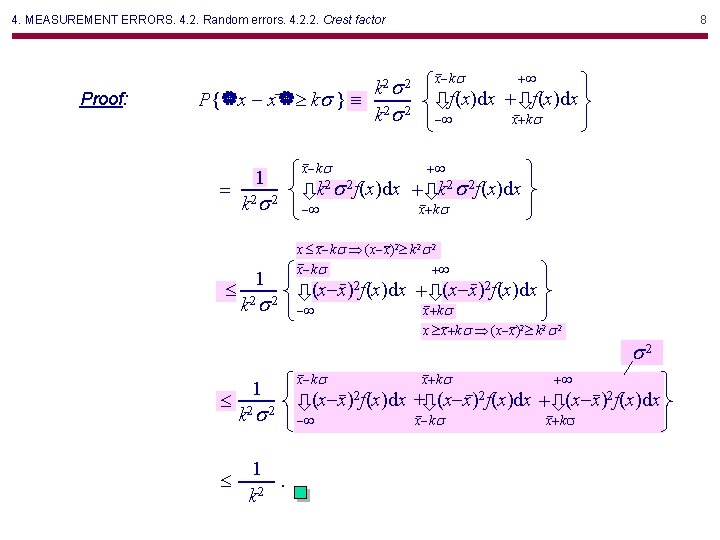

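Proof:

$$\begin{aligned}
k^2\sigma^2\,P\{|x - \bar{x}| \ge k\sigma\}
&= k^2\sigma^2 \int_{-\infty}^{\bar{x}-k\sigma} f(x)\,dx + k^2\sigma^2 \int_{\bar{x}+k\sigma}^{+\infty} f(x)\,dx \\
&\le \int_{-\infty}^{\bar{x}-k\sigma} (x-\bar{x})^2 f(x)\,dx + \int_{\bar{x}+k\sigma}^{+\infty} (x-\bar{x})^2 f(x)\,dx \\
&\le \int_{-\infty}^{+\infty} (x-\bar{x})^2 f(x)\,dx = \sigma^2,
\end{aligned}$$

since $(x - \bar{x})^2 \ge k^2\sigma^2$ both for $x \le \bar{x} - k\sigma$ and for $x \ge \bar{x} + k\sigma$. Dividing by $k^2\sigma^2$ gives

$$P\{|x - \bar{x}| \ge k\sigma\} \le \frac{1}{k^2}.$$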

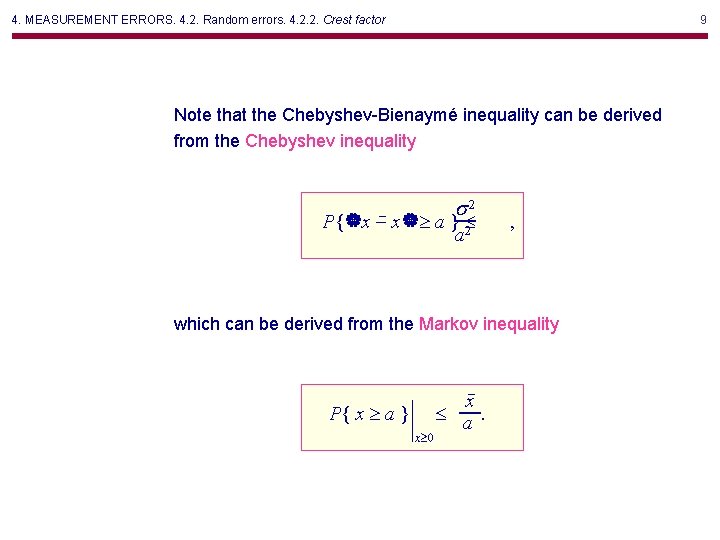

Note that the Chebyshev-Bienaymé inequality can be derived from the Chebyshev inequality

$$P\{|x - \bar{x}| \ge a\} \le \frac{\sigma^2}{a^2},$$

which can be derived from the Markov inequality

$$P\{x \ge a\} \le \frac{\bar{x}}{a}, \qquad x \ge 0.$$

Illustration: Probability of excess deviations

[Figure: probability of excess deviations (logarithmic scale, from 10^0 down to 10^-6) versus crest factor k (0 to 5), comparing the normal pdf with the Chebyshev (most pessimistic) limit, which holds for any pdf.]
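The two curves of this illustration can be tabulated with a few lines of code. This is a sketch using the standard two-sided tail probability of the normal distribution; the function names are hypothetical.

```python
import math


def normal_excess_probability(k: float) -> float:
    """P{|x - mean| >= k*sigma} for a normal pdf (two-sided tail)."""
    return math.erfc(k / math.sqrt(2.0))


def chebyshev_limit(k: float) -> float:
    """Most pessimistic limit for any pdf; a probability cannot exceed 1."""
    return min(1.0, 1.0 / k**2)


print(" k   normal pdf   Chebyshev limit")
for k in (1, 2, 3, 4, 5):
    print(f"{k:2d}   {normal_excess_probability(k):.3e}    {chebyshev_limit(k):.3e}")
```

For a normal pdf the probability of excess deviations falls off far faster than 1/k², so the Chebyshev limit is mainly useful when the shape of the pdf is unknown.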

4.3. Error sensitivity analysis

The analysis of how sensitive a function is to errors in its arguments is called error sensitivity analysis or error propagation analysis. We will discuss this analysis first for systematic errors and then for random errors.

4.3.1. Systematic errors

Let us define the absolute error as the difference between the measured and true values of a physical quantity,

$$\Delta a \equiv a - a_0,$$

Reference: [1]

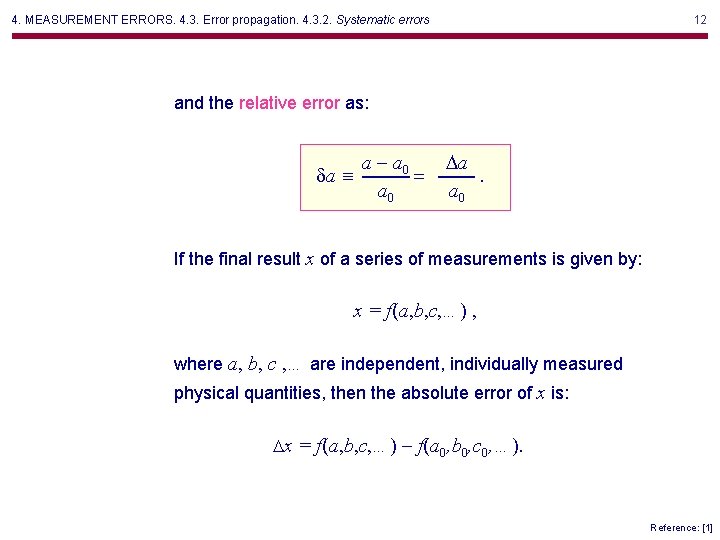

and the relative error as:

$$\delta a \equiv \frac{a - a_0}{a_0} = \frac{\Delta a}{a_0}.$$

If the final result x of a series of measurements is given by:

$$x = f(a, b, c, \ldots),$$

where a, b, c, … are independent, individually measured physical quantities, then the absolute error of x is:

$$\Delta x = f(a, b, c, \ldots) - f(a_0, b_0, c_0, \ldots).$$

Reference: [1]

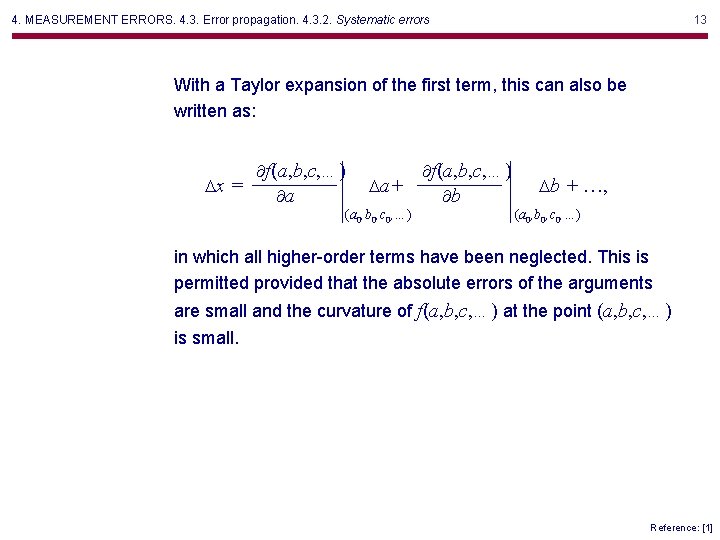

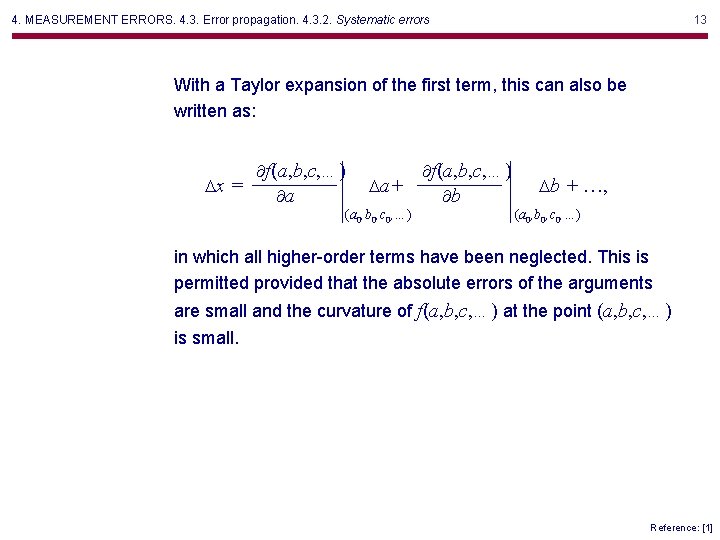

With a Taylor expansion of the first term, this can also be written as:

$$\Delta x = \left.\frac{\partial f(a, b, c, \ldots)}{\partial a}\right|_{(a_0, b_0, c_0, \ldots)} \Delta a + \left.\frac{\partial f(a, b, c, \ldots)}{\partial b}\right|_{(a_0, b_0, c_0, \ldots)} \Delta b + \ldots,$$

in which all higher-order terms have been neglected. This is permitted provided that the absolute errors of the arguments are small and the curvature of f(a, b, c, …) at the point (a, b, c, …) is small.

Reference: [1]
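To see what the neglected higher-order terms amount to, the sketch below compares the exact absolute error with its first-order approximation. The function f(a, b) = a·b, the true values and the errors are all assumed for illustration, and the helper `partial` is a hypothetical central-difference estimate of a partial derivative.

```python
def partial(f, point, index, h=1e-6):
    """Central-difference estimate of the partial derivative of f at point."""
    up, down = list(point), list(point)
    up[index] += h
    down[index] -= h
    return (f(*up) - f(*down)) / (2.0 * h)


def f(a, b):
    # Assumed example function, e.g. a power computed as voltage times current.
    return a * b


true_values = (10.0, 2.0)  # a0, b0 (assumed)
abs_errors = (0.1, 0.02)   # absolute errors of a and b (assumed)
measured = tuple(v + e for v, e in zip(true_values, abs_errors))

# Exact absolute error: f(a, b) - f(a0, b0).
exact_dx = f(*measured) - f(*true_values)

# First-order Taylor approximation evaluated at (a0, b0).
linear_dx = sum(partial(f, true_values, i) * e for i, e in enumerate(abs_errors))

print(f"exact error       = {exact_dx:.6f}")
print(f"first-order error = {linear_dx:.6f}")
print(f"neglected part    = {exact_dx - linear_dx:.6f}")
```

For this product the exact error is about 0.402 and the first-order estimate about 0.4; the neglected part is the second-order term Δa·Δb = 0.002.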

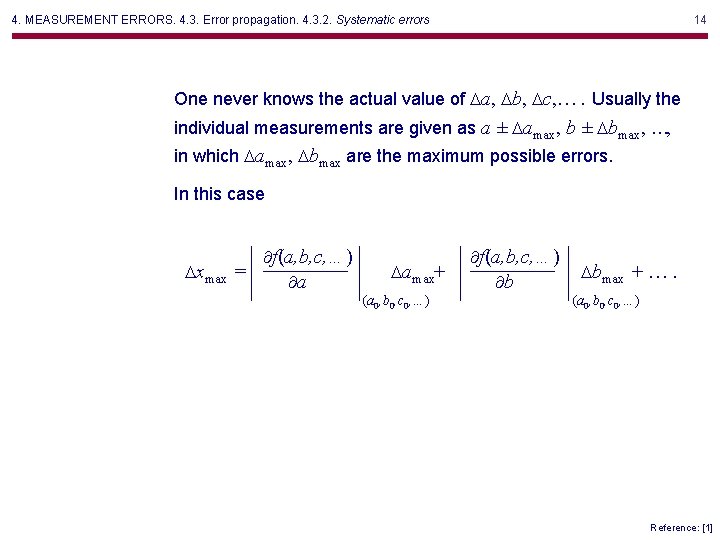

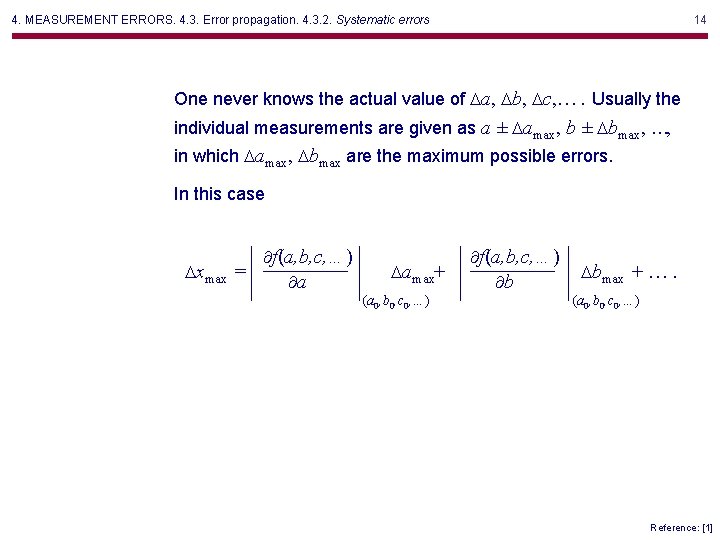

One never knows the actual values of $\Delta a$, $\Delta b$, $\Delta c$, …. Usually the individual measurements are given as $a \pm \Delta a_{max}$, $b \pm \Delta b_{max}$, …, in which $\Delta a_{max}$, $\Delta b_{max}$, … are the maximum possible errors. In this case

$$\Delta x_{max} = \left|\frac{\partial f(a, b, c, \ldots)}{\partial a}\right|_{(a_0, b_0, c_0, \ldots)} \Delta a_{max} + \left|\frac{\partial f(a, b, c, \ldots)}{\partial b}\right|_{(a_0, b_0, c_0, \ldots)} \Delta b_{max} + \ldots.$$

Reference: [1]

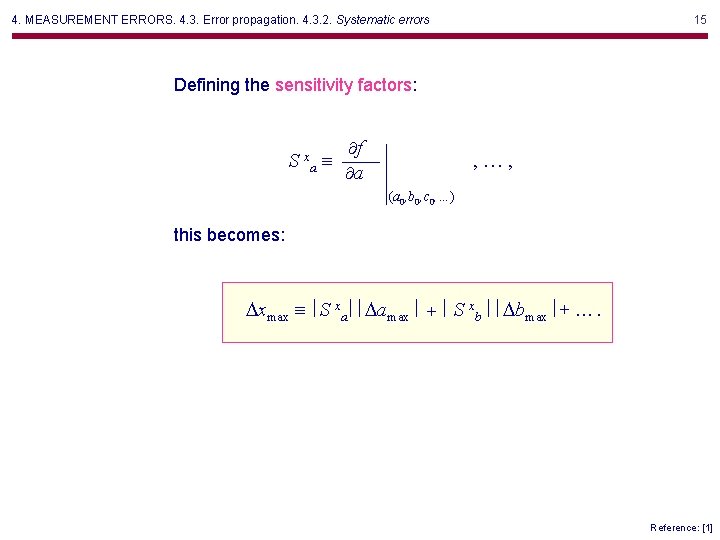

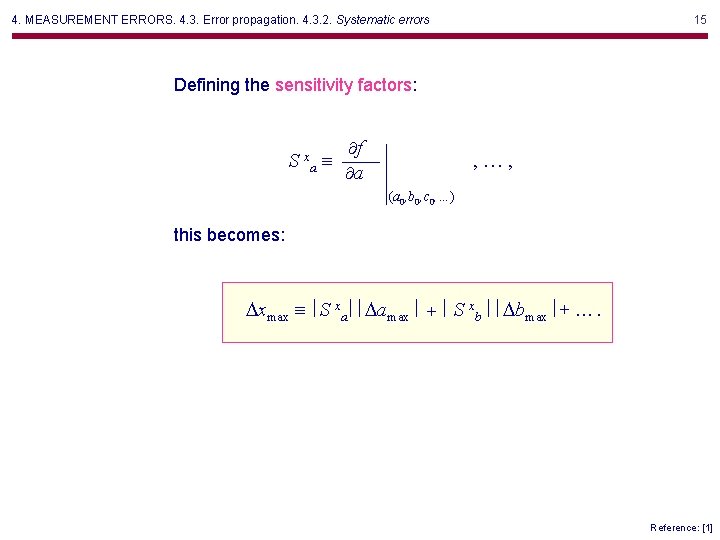

Defining the sensitivity factors:

$$S^x_a \equiv \left.\frac{\partial f}{\partial a}\right|_{(a_0, b_0, c_0, \ldots)}, \ldots,$$

this becomes:

$$\Delta x_{max} = |S^x_a|\,\Delta a_{max} + |S^x_b|\,\Delta b_{max} + \ldots.$$

Reference: [1]
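A minimal sketch of this worst-case calculation follows, for an assumed example in which a resistance is computed as x = V/I. The helper names and the numbers are hypothetical, and the sensitivity factors are evaluated at the measured values because the true values are not available in practice.

```python
def partial(f, point, index, rel_step=1e-6):
    """Central-difference estimate of the partial derivative of f at point."""
    h = rel_step * max(abs(point[index]), 1.0)
    up, down = list(point), list(point)
    up[index] += h
    down[index] -= h
    return (f(*up) - f(*down)) / (2.0 * h)


def max_absolute_error(f, measured, max_errors):
    """Return (maximum absolute error of f, list of sensitivity factors)."""
    sensitivities = [partial(f, measured, i) for i in range(len(measured))]
    dx_max = sum(abs(s) * e for s, e in zip(sensitivities, max_errors))
    return dx_max, sensitivities


def resistance(v, i):
    return v / i


measured = (10.0, 2.0)    # V = 10 V, I = 2 A (assumed readings)
max_errors = (0.1, 0.05)  # maximum possible errors of V and I (assumed)

dx_max, sensitivities = max_absolute_error(resistance, measured, max_errors)
print("sensitivity factors:", [round(s, 6) for s in sensitivities])
print(f"R = {resistance(*measured):.3f} +/- {dx_max:.3f} Ohm")
```

Here the sensitivity factors are 1/I = 0.5 and -V/I² = -2.5, so the maximum absolute error is 0.5·0.1 + 2.5·0.05 = 0.175 Ω. The absolute values ensure that errors of opposite sign are not allowed to cancel.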

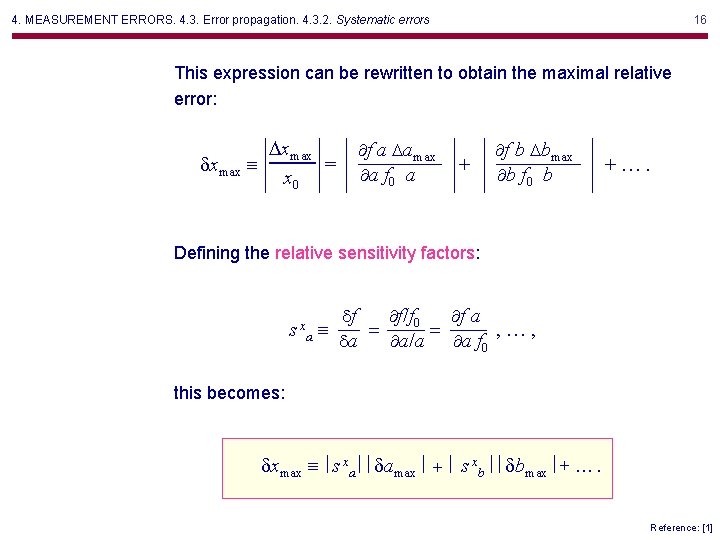

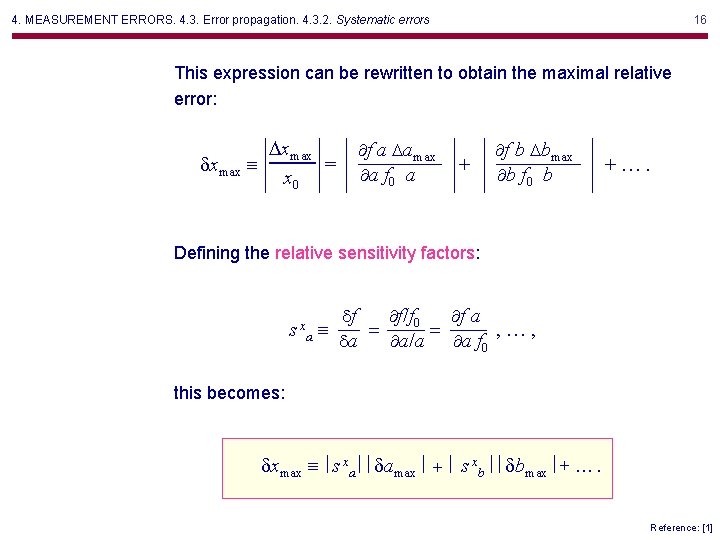

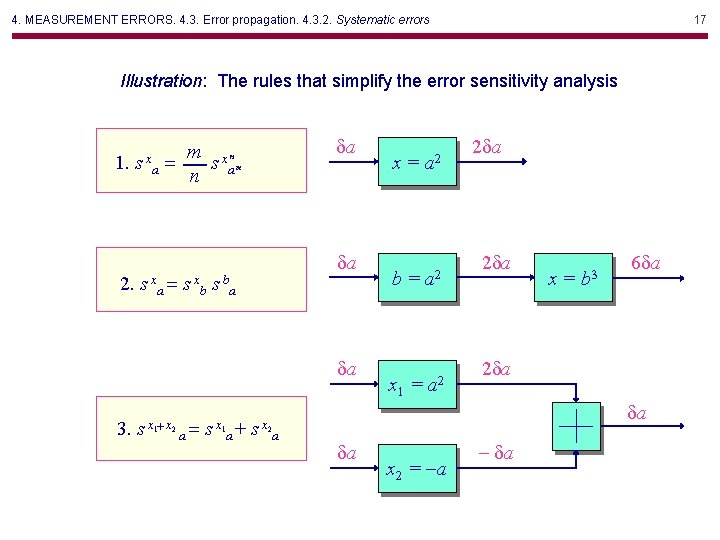

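This expression can be rewritten to obtain the maximal relative error:

$$\delta x_{max} = \frac{\Delta x_{max}}{|x_0|} = \left|\frac{\partial f}{\partial a}\,\frac{a_0}{f_0}\right| \frac{\Delta a_{max}}{|a_0|} + \left|\frac{\partial f}{\partial b}\,\frac{b_0}{f_0}\right| \frac{\Delta b_{max}}{|b_0|} + \ldots,$$

where $f_0 = f(a_0, b_0, c_0, \ldots) = x_0$. Defining the relative sensitivity factors:

$$s^x_a \equiv \frac{\partial f}{\partial a}\,\frac{a_0}{f_0} = \frac{\delta f}{\delta a} = \frac{\partial f / f_0}{\partial a / a_0}, \ldots,$$

this becomes:

$$\delta x_{max} = |s^x_a|\,\delta a_{max} + |s^x_b|\,\delta b_{max} + \ldots.$$

Reference: [1]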
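Relative sensitivity factors are convenient for power-law expressions, where they reduce to the exponents. The sketch below estimates them numerically for an assumed function x = a²/b³; the helper names, the operating point and the relative errors are hypothetical.

```python
def partial(f, point, index, rel_step=1e-6):
    """Central-difference estimate of the partial derivative of f at point."""
    h = rel_step * max(abs(point[index]), 1.0)
    up, down = list(point), list(point)
    up[index] += h
    down[index] -= h
    return (f(*up) - f(*down)) / (2.0 * h)


def relative_sensitivity(f, point, index):
    """(df/da) * a / f, evaluated at the given point."""
    return partial(f, point, index) * point[index] / f(*point)


def f(a, b):
    return a**2 / b**3  # assumed example


point = (3.0, 2.0)              # assumed operating point
max_rel_errors = (0.01, 0.005)  # 1 % on a, 0.5 % on b (assumed)

s_a = relative_sensitivity(f, point, 0)
s_b = relative_sensitivity(f, point, 1)
dx_rel_max = abs(s_a) * max_rel_errors[0] + abs(s_b) * max_rel_errors[1]

print(f"s_a = {s_a:.4f}, s_b = {s_b:.4f}")
print(f"maximum relative error of x = {100.0 * dx_rel_max:.2f} %")
```

The factors come out as 2 and -3, the exponents of a and b, so the maximum relative error is 2·1 % + 3·0.5 % = 3.5 %.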

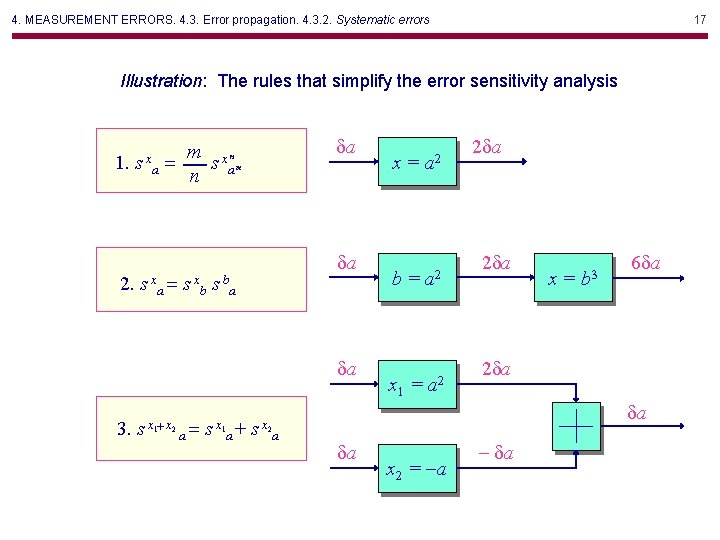

Illustration: The rules that simplify the error sensitivity analysis

1. $s^{x^m}_{a^n} = \dfrac{m}{n}\, s^x_a$
2. $s^x_a = s^x_b\, s^b_a$
3. $s^{x_1 + x_2}_a = s^{x_1}_a + s^{x_2}_a$

[Figure: block-diagram examples of the rules. Labels: $x = a^2$; $b = a^2$, $x = b^3$ (so that $\delta a$ becomes $2\,\delta a$ and then $6\,\delta a$); $x_1 = a^2$, $x_2 = -a^2$.]

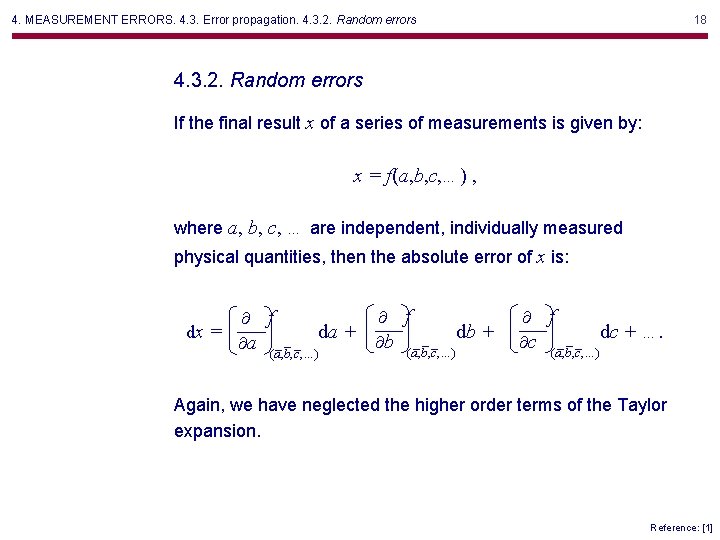

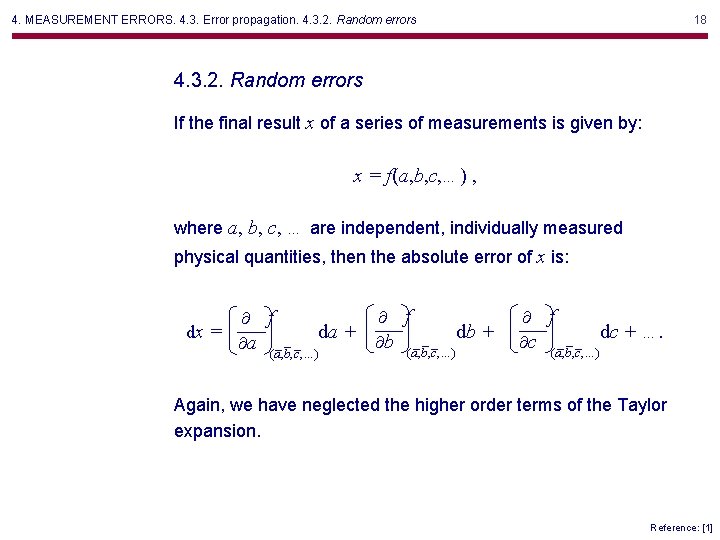

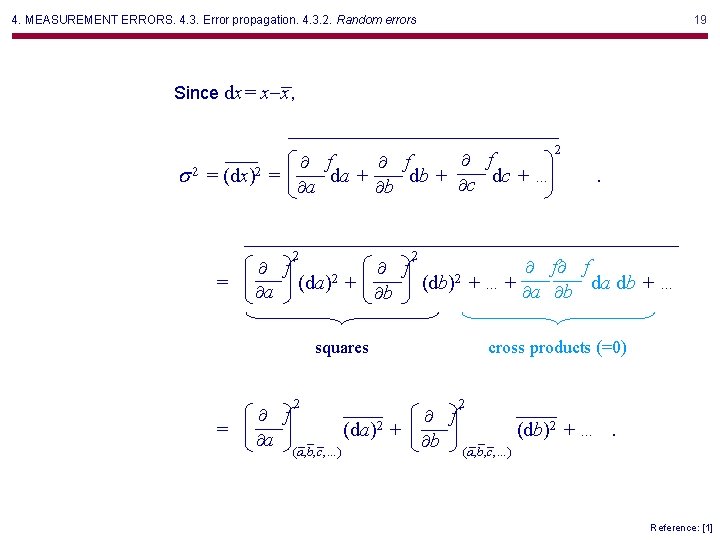

4.3.2. Random errors

If the final result x of a series of measurements is given by:

$$x = f(a, b, c, \ldots),$$

where a, b, c, … are independent, individually measured physical quantities, then the absolute error of x is:

$$dx = \left.\frac{\partial f}{\partial a}\right|_{(\bar{a}, \bar{b}, \bar{c}, \ldots)} da + \left.\frac{\partial f}{\partial b}\right|_{(\bar{a}, \bar{b}, \bar{c}, \ldots)} db + \left.\frac{\partial f}{\partial c}\right|_{(\bar{a}, \bar{b}, \bar{c}, \ldots)} dc + \ldots.$$

Again, we have neglected the higher-order terms of the Taylor expansion.

Reference: [1]

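Since $dx = x - \bar{x}$,

$$\begin{aligned}
\sigma_x^2 = \overline{(dx)^2}
&= \overline{\left(\frac{\partial f}{\partial a}\,da + \frac{\partial f}{\partial b}\,db + \frac{\partial f}{\partial c}\,dc + \ldots\right)^2} \\
&= \underbrace{\left(\frac{\partial f}{\partial a}\right)^2 \overline{(da)^2} + \left(\frac{\partial f}{\partial b}\right)^2 \overline{(db)^2} + \ldots}_{\text{squares}} + \underbrace{2\,\frac{\partial f}{\partial a}\,\frac{\partial f}{\partial b}\,\overline{da\,db} + \ldots}_{\text{cross products } (=0)} \\
&= \left(\frac{\partial f}{\partial a}\right)^2_{(\bar{a}, \bar{b}, \bar{c}, \ldots)} \overline{(da)^2} + \left(\frac{\partial f}{\partial b}\right)^2_{(\bar{a}, \bar{b}, \bar{c}, \ldots)} \overline{(db)^2} + \ldots.
\end{aligned}$$

The cross products average to zero because a, b, c, … are independent.

Reference: [1]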

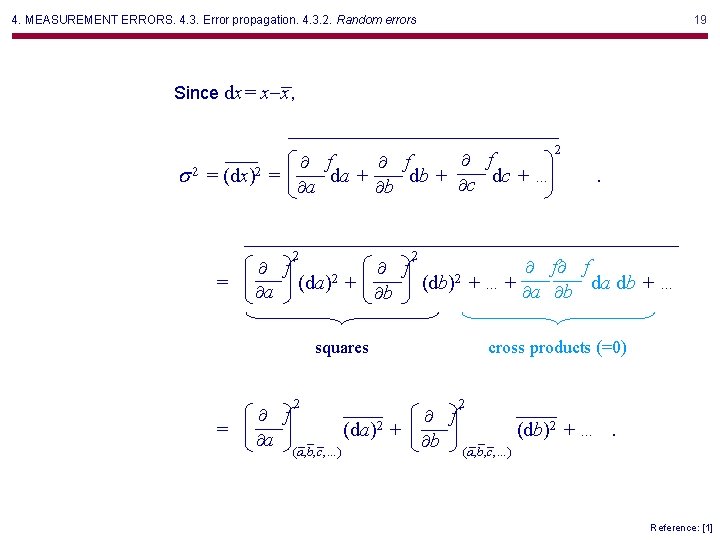

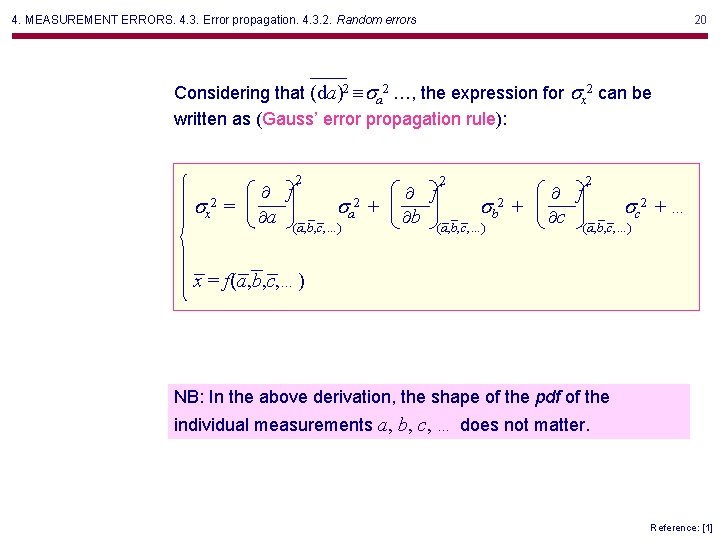

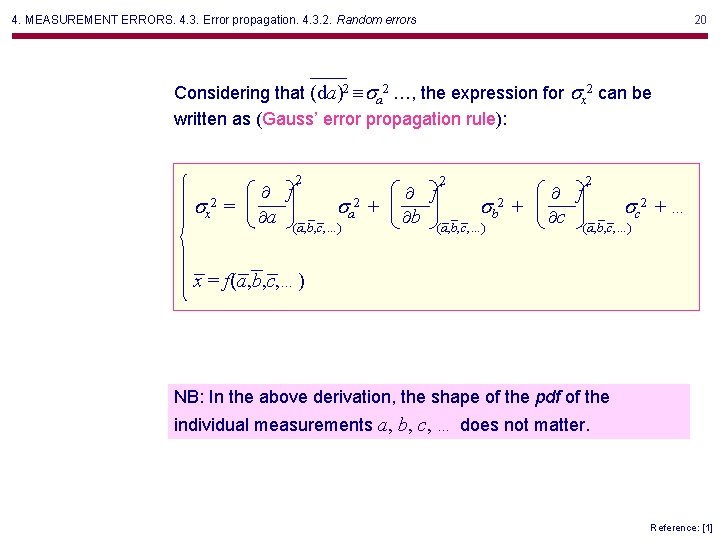

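Considering that $\overline{(da)^2} = \sigma_a^2$, …, the expression for $\sigma_x^2$ can be written as (Gauss’ error propagation rule):

$$\sigma_x^2 = \left(\frac{\partial f}{\partial a}\right)^2_{(\bar{a}, \bar{b}, \bar{c}, \ldots)} \sigma_a^2 + \left(\frac{\partial f}{\partial b}\right)^2_{(\bar{a}, \bar{b}, \bar{c}, \ldots)} \sigma_b^2 + \left(\frac{\partial f}{\partial c}\right)^2_{(\bar{a}, \bar{b}, \bar{c}, \ldots)} \sigma_c^2 + \ldots, \qquad \bar{x} = f(\bar{a}, \bar{b}, \bar{c}, \ldots).$$

NB: In the above derivation, the shape of the pdf of the individual measurements a, b, c, … does not matter.

Reference: [1]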
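Gauss' rule can be compared with a Monte Carlo estimate. In the sketch below the function x = a·b, the means and the standard deviations are assumed values, the helper names are hypothetical, and the inputs are drawn from uniform rather than normal distributions to illustrate that the shape of the pdf does not enter the rule.

```python
import math
import random
import statistics


def partial(f, point, index, rel_step=1e-6):
    """Central-difference estimate of the partial derivative of f at point."""
    h = rel_step * max(abs(point[index]), 1.0)
    up, down = list(point), list(point)
    up[index] += h
    down[index] -= h
    return (f(*up) - f(*down)) / (2.0 * h)


def gauss_propagation(f, means, sigmas):
    """Standard deviation of f for independent inputs (first-order rule)."""
    variance = sum((partial(f, means, i) * s) ** 2 for i, s in enumerate(sigmas))
    return math.sqrt(variance)


def product(a, b):
    return a * b


def uniform_with_sigma(mean, sigma):
    """Uniform random value with the requested mean and standard deviation."""
    half_width = sigma * math.sqrt(3.0)
    return random.uniform(mean - half_width, mean + half_width)


means = (10.0, 2.0)   # assumed mean values of a and b
sigmas = (0.1, 0.05)  # assumed standard deviations of a and b

sigma_x_rule = gauss_propagation(product, means, sigmas)

random.seed(2)
samples = [
    product(*(uniform_with_sigma(m, s) for m, s in zip(means, sigmas)))
    for _ in range(100_000)
]
sigma_x_mc = statistics.pstdev(samples)

print(f"Gauss' rule : sigma_x = {sigma_x_rule:.4f}")
print(f"Monte Carlo : sigma_x = {sigma_x_mc:.4f}")
```

The two estimates should agree closely as long as the standard deviations are small compared with the mean values, so that the neglected higher-order terms stay negligible.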

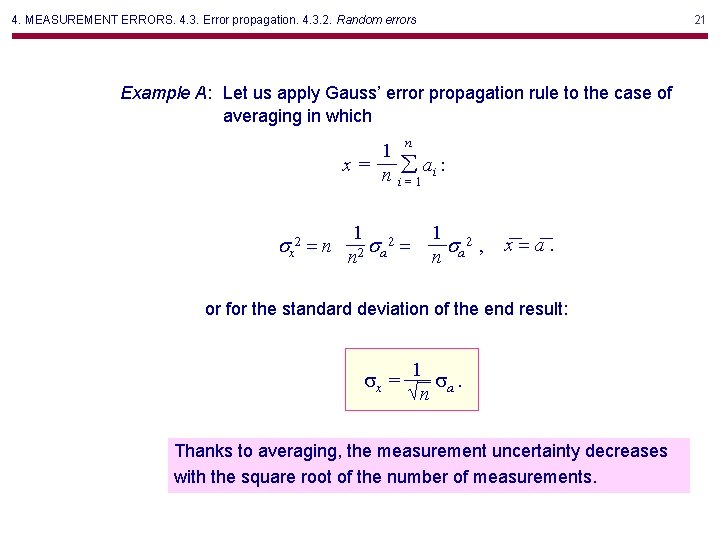

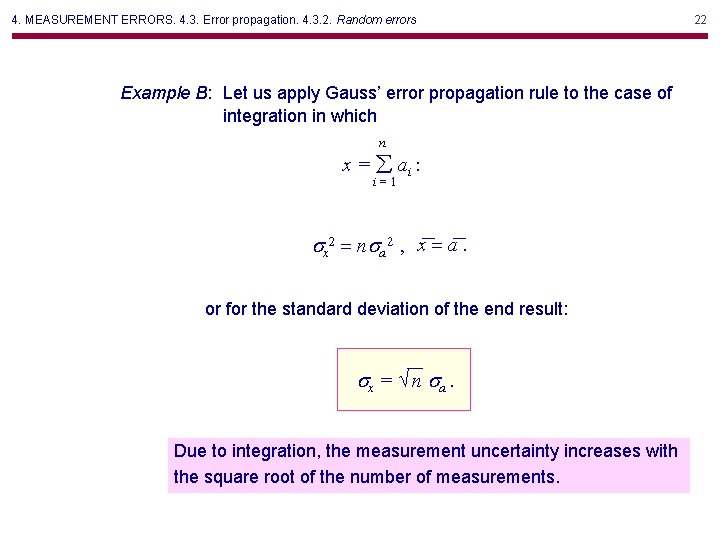

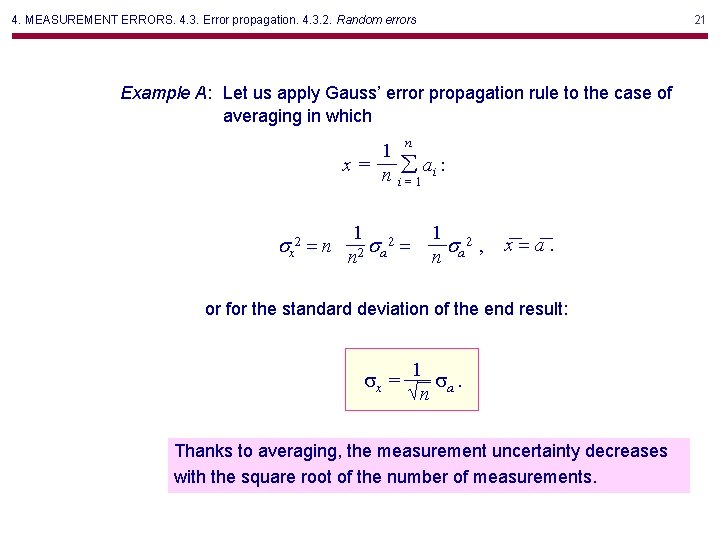

Example A: Let us apply Gauss’ error propagation rule to the case of averaging, in which

$$x = \frac{1}{n} \sum_{i=1}^{n} a_i:$$

$$\sigma_x^2 = n\,\frac{1}{n^2}\,\sigma_a^2 = \frac{1}{n}\,\sigma_a^2, \qquad \bar{x} = \bar{a},$$

or for the standard deviation of the end result:

$$\sigma_x = \frac{1}{\sqrt{n}}\,\sigma_a.$$

Thanks to averaging, the measurement uncertainty decreases in proportion to the inverse square root of the number of measurements.
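The result for averaging can be checked with a short simulation. This is a sketch with assumed parameters (unit-variance Gaussian noise, n = 10, 20 000 repetitions).

```python
import math
import random
import statistics

random.seed(3)

SIGMA_A = 1.0    # assumed standard deviation of a single measurement
N = 10           # assumed number of measurements per average
TRIALS = 20_000  # number of simulated averages

# Each trial averages N independent noisy measurements.
averages = [
    statistics.fmean([random.gauss(0.0, SIGMA_A) for _ in range(N)])
    for _ in range(TRIALS)
]

sigma_x_simulated = statistics.pstdev(averages)
sigma_x_predicted = SIGMA_A / math.sqrt(N)

print(f"simulated sigma_x = {sigma_x_simulated:.4f}")
print(f"predicted sigma_x = {sigma_x_predicted:.4f}")
```

Note the diminishing returns: halving the uncertainty requires four times as many measurements.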

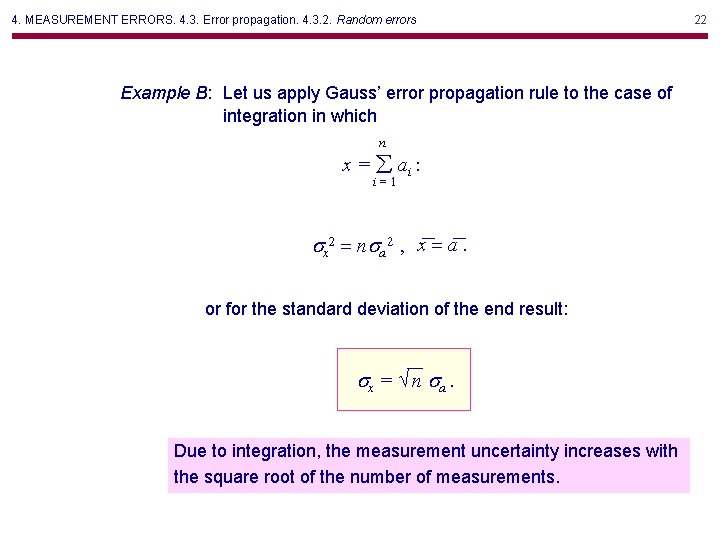

Example B: Let us apply Gauss’ error propagation rule to the case of integration, in which

$$x = \sum_{i=1}^{n} a_i:$$

$$\sigma_x^2 = n\,\sigma_a^2, \qquad \bar{x} = n\,\bar{a},$$

or for the standard deviation of the end result:

$$\sigma_x = \sqrt{n}\,\sigma_a.$$

Due to integration, the measurement uncertainty increases with the square root of the number of measurements.
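The growth of the uncertainty under integration can be observed by accumulating noise samples and recording the spread of the running sum at a few values of n. The parameters and the name `sums_at` in this sketch are assumptions made for illustration.

```python
import itertools
import math
import random
import statistics

random.seed(4)

SIGMA_A = 1.0               # assumed standard deviation of a single measurement
N_MAX = 100                 # longest integration considered
TRIALS = 5_000              # number of simulated integrations
CHECKPOINTS = (1, 25, 100)  # values of n at which the sum is recorded

sums_at = {n: [] for n in CHECKPOINTS}
for _ in range(TRIALS):
    # Running sum of N_MAX independent zero-mean Gaussian noise samples.
    running = list(
        itertools.accumulate(random.gauss(0.0, SIGMA_A) for _ in range(N_MAX))
    )
    for n in CHECKPOINTS:
        sums_at[n].append(running[n - 1])

simulated = {n: statistics.pstdev(values) for n, values in sums_at.items()}
predicted = {n: math.sqrt(n) * SIGMA_A for n in CHECKPOINTS}

for n in CHECKPOINTS:
    print(f"n = {n:3d}: simulated {simulated[n]:.3f}, predicted {predicted[n]:.3f}")
```

The spread grows only as the square root of n, whereas a constant non-zero mean would make the sum itself grow in proportion to n.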

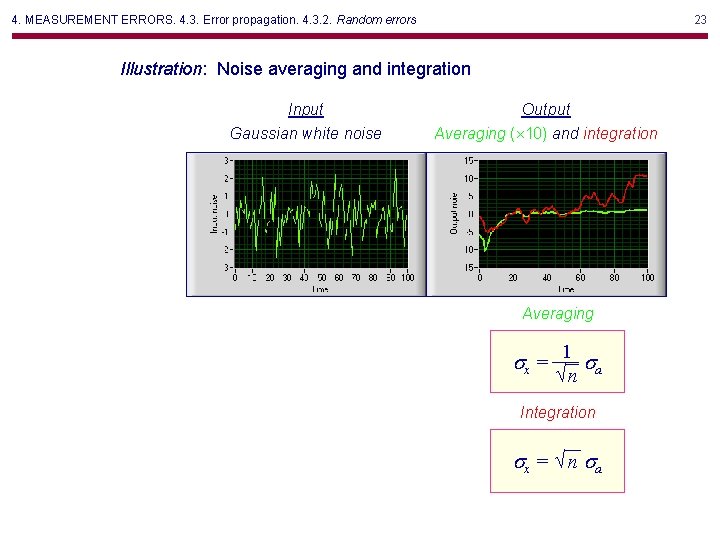

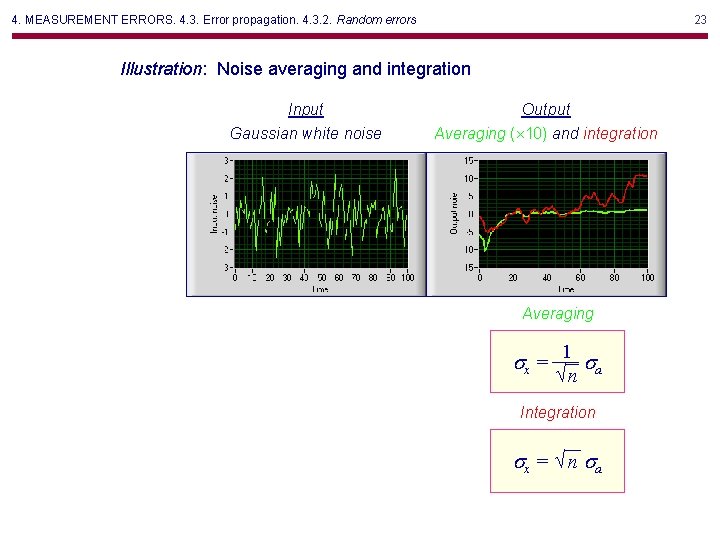

Illustration: Noise averaging and integration

[Figure: input: Gaussian white noise; output: averaging (10) and integration. Annotations: averaging, $\sigma_x = \frac{1}{\sqrt{n}}\,\sigma_a$; integration, $\sigma_x = \sqrt{n}\,\sigma_a$.]

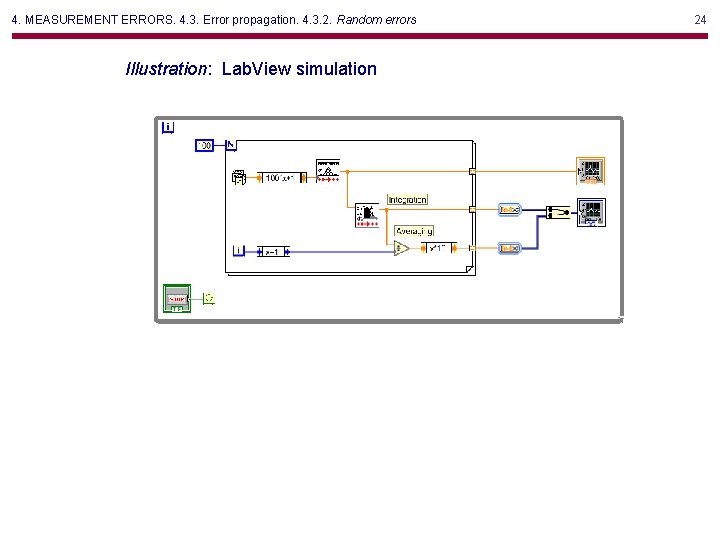

Illustration: LabVIEW simulation
