Trigger Coordination Report, Wesley H. Smith, U. Wisconsin

- Slides: 26

Trigger Coordination Report

Wesley H. Smith, U. Wisconsin, CMS Trigger Coordinator
LHCC Rehearsal Meeting, April 18, 2008

Outline:
- Level 1 Trigger
- DAQ & HLT Infrastructure
- HLT Algorithm performance (Christos Leonidopoulos)

W. Smith, U. Wisconsin, LHCC Rehearse, Apr. 18, 2008 Trigger Coordination - 1

Calorimeter Trigger Primitives

ECAL Barrel:
- All Trigger Concentrator Cards (TCC-68) installed
- Tests with Regional Cal. Trig. ongoing: patterns, timing
- Ready for operation in CR0T

ECAL Endcap:
- TCC-48 submitted for board manufacture
- Present “schedule” (under negotiation!):
  - Qualification: July 1
  - 1st batch: October 15
  - 2nd batch: November 7
  - 3rd batch: December 1

HCAL:
- All installed, operating in Global Runs

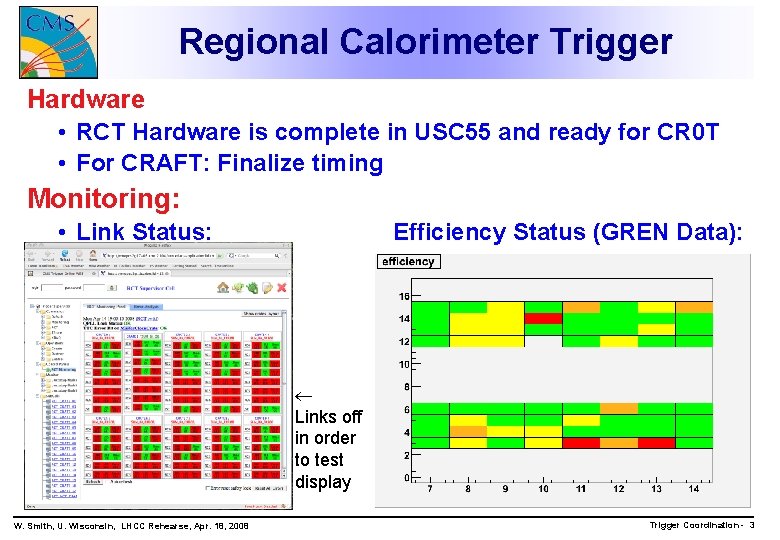

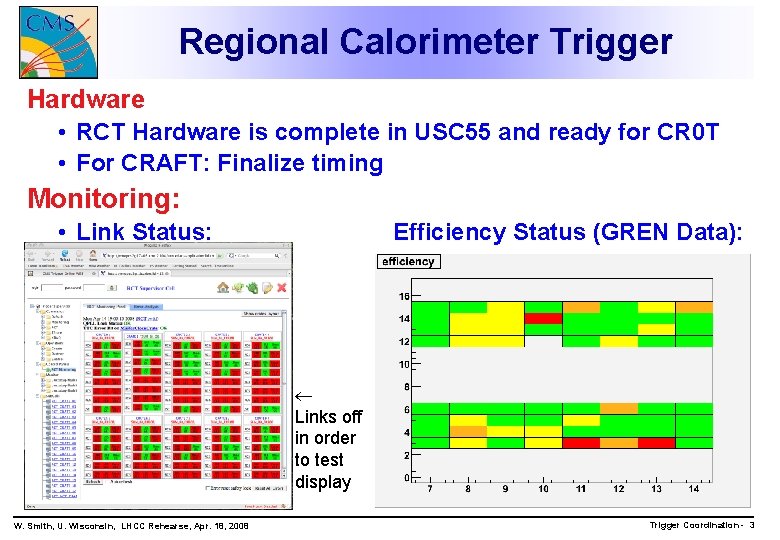

Regional Calorimeter Trigger

Hardware:
- RCT hardware is complete in USC55 and ready for CR0T
- For CRAFT: finalize timing

Monitoring:
- Link Status: Efficiency Status (GREN data): links off in order to test display

Global Calorimeter Trigger

Status:
- All 63 required Source Cards are in the system and debugged
- One Wheel Card with 3 Jet Leaf Cards installed in GCT main crate before Easter & corresponding jet fibres connected

Available for May run:
- The Electron Trigger
- The Positive Rapidity Jet Data
- Use the emulator on these data to produce trigger distributions
- As soon as the second Wheel Card goes in we will have a very good picture of how the Jet-Et distributions look without beam
- All hardware integrated in the Trigger Supervisor
- Source Cards, Electrons and Jets

Available for June run:
- All Electron & Jet Triggers

Drift Tube Trigger

Local Trigger + Sector Collector ready (5 wheels)
- Phi DTTF connection ready
- Eta connection being prepared

All DTTF crates mounted
- All 72 PHTFs will be installed
- 20 spare boards to assemble and test soon
- TIM and DLI are all available + spares

Input fibers
- Input fibers in the final position

CSC connection
- All cables in final position
- Transition Boards being installed

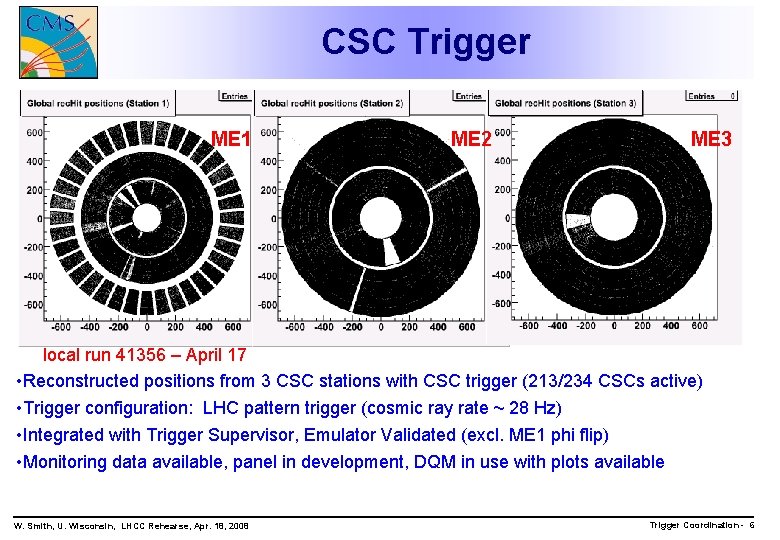

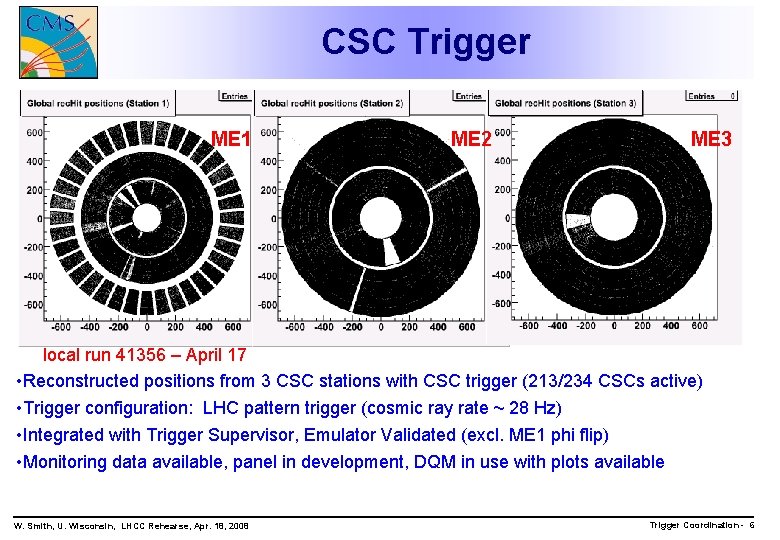

CSC Trigger

Figure panels: ME1, ME2, ME3 (local run 41356, April 17)
- Reconstructed positions from 3 CSC stations with CSC trigger (213/234 CSCs active)
- Trigger configuration: LHC pattern trigger (cosmic ray rate ~28 Hz)
- Integrated with Trigger Supervisor, emulator validated (excl. ME1 phi flip)
- Monitoring data available, panel in development, DQM in use with plots available

RPC Trigger

Link System (UX)
- YB+2, +1, 0: commissioned from USC
- YB-1, -2: in commissioning from USC now
- YE+3, +1: LBBs installed and cabled
- YE+3, +1: 75% of boards installed, waiting for LV
- YE-1, -3: LBBs installed and cabled, boards not installed
- Enough modules for barrel & + endcap installation, BUT lack of modules for - endcap and spare modules: need to fix broken modules or small production!

Trigger System (US)
- Trigger Boards: 64 TBs (all needed for staged RPC trigger system) at CERN
- Trigger Crates (12):
  - 6 installed now in USC (4 with TBs, installed & cabled)
  - 6 will be installed, filled with TBs and cabled in one month
- UX-US cables
  - YE+3: YB-2 - installed, YE-1, -3 - waiting for installation
- USC trigger cabling
  - TTC, GMT, DCs, Slink, DCC cables: installed/on demand
  - Splitter cables delivered to CERN, cabling in April and May

RPC Trigger News

Optical transmission problems between RMB and DCC
- Eliminated by adjusting GOL laser power (default settings too low)
- Minor hardware fix needed
- All recently shipped boards have the fix in; retrofit needed on 3 crates

DCC firmware
- Updated: improved distribution of L1 on board; currently under test

Noise problems
- One sector has fluctuating noise: special rate histograms implemented on LB

Software
- Integrated with Trigger Supervisor
- Emulator has been stable for a year
- Inconsistency in CMSSW geometry: no endcap RPC triggers for the moment
- L1 DQM working with initial histograms available
- Integrated with Configuration Database, preparing for Conditions Database
- Working on masked channels, parallelizing crate configuration

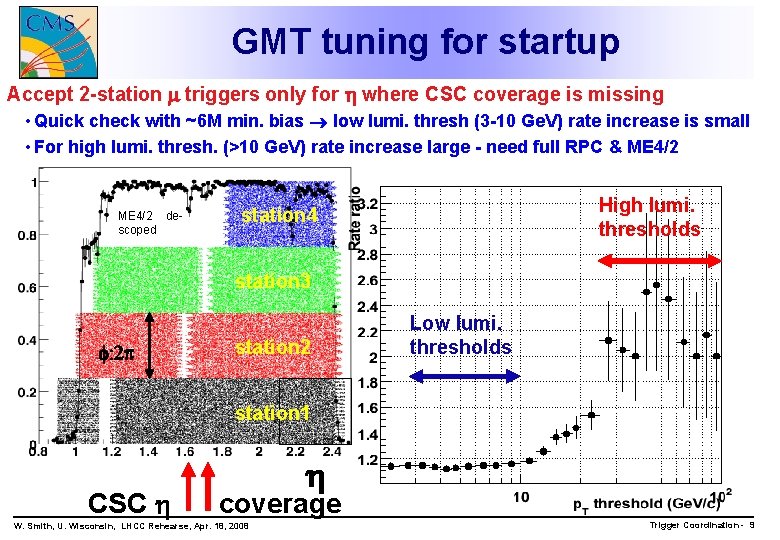

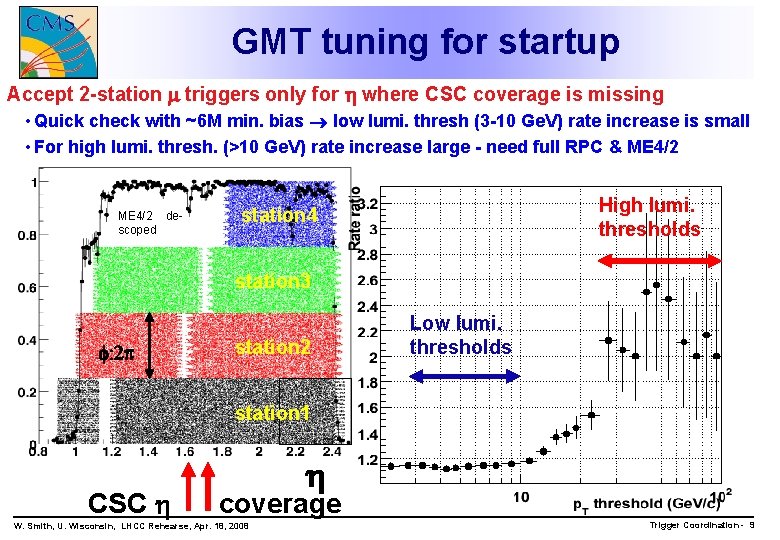

GMT tuning for startup

Accept 2-station triggers only where CSC coverage is missing
- Quick check with ~6M min. bias: for low lumi. thresholds (3-10 GeV) the rate increase is small
- For high lumi. thresholds (>10 GeV) the rate increase is large: need full RPC & ME4/2 descoped

Figure labels: CSC coverage (station 1, station 2, station 3, station 4); low lumi. thresholds; high lumi. thresholds

Global Trigger News

Installed a “hot spare” crate (GT2) in USC55
- On top of “production” crate (GT1)
- Each crate is controlled via CAEN by a separate crate PC
- TTC clock/orbit signals fanned out to serve both crates
- Can quickly switch between crates
- Can test spare modules or upgrade software without interfering with normal operation of Global Trigger

Software:
- Integrated with online configuration database
- New database schema including keys, menus, scales
- Design finished and documented, implementation under way
- XDAQ/Trigger Supervisor monitoring implemented
- Panels for monitoring, control, configuration

Trigger Supervisor

Developments:
- Provision of progress bar
- Improved startup
- Provision/integration of TTCci Cell

New Release:
- Error handling and tracking
- Upgrade to new version of XDAQ
- Reporting and monitoring
- Handling of alarms
- Integration with Configuration & Conditions Databases

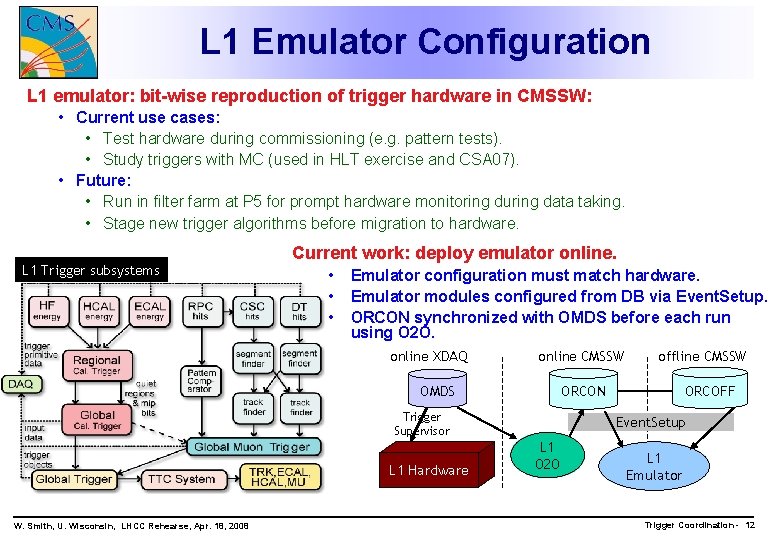

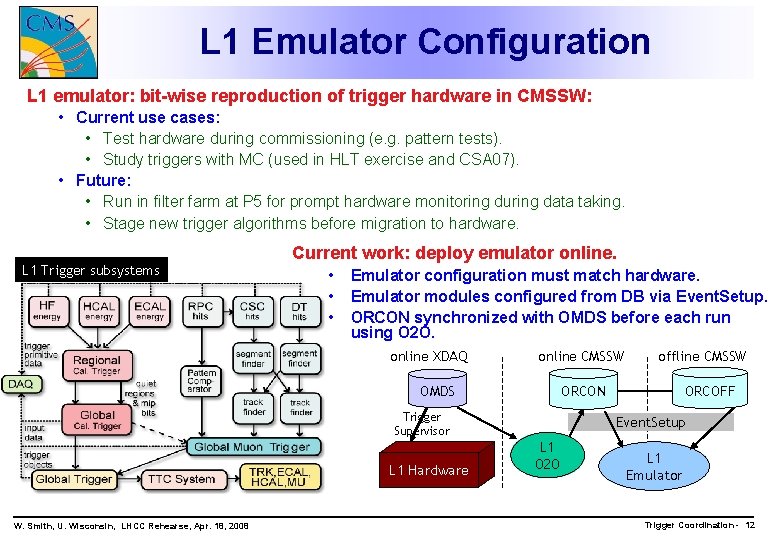

L1 Emulator Configuration

L1 emulator: bit-wise reproduction of trigger hardware in CMSSW
- Current use cases:
  - Test hardware during commissioning (e.g. pattern tests)
  - Study triggers with MC (used in HLT exercise and CSA07)
- Future:
  - Run in filter farm at P5 for prompt hardware monitoring during data taking
  - Stage new trigger algorithms before migration to hardware

Current work: deploy emulator online
- Emulator configuration must match hardware
- Emulator modules configured from DB via EventSetup
- ORCON synchronized with OMDS before each run using O2O

Diagram labels: L1 Trigger subsystems, online XDAQ, online CMSSW, offline CMSSW, OMDS, ORCON, ORCOFF, Trigger Supervisor, L1 Hardware, EventSetup, L1 O2O, L1 Emulator
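
The bit-wise comparison of emulator output against hardware output could be sketched as follows. This is a minimal illustration rather than CMSSW code: the function `compare_words`, the `Mismatch` record, and the 32-bit word width are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Mismatch:
    """One word where hardware and emulator disagree (hypothetical record)."""
    index: int            # position of the word in the readout
    hardware: int         # word read from the trigger hardware
    emulator: int         # word produced by the emulator
    diff_bits: List[int]  # bit positions that differ, LSB = 0


def compare_words(hardware: Sequence[int], emulator: Sequence[int],
                  width: int = 32) -> List[Mismatch]:
    """Compare two equal-length word lists bit by bit.

    The 32-bit default width is an assumption for illustration only.
    """
    if len(hardware) != len(emulator):
        raise ValueError("hardware and emulator outputs differ in length")
    mask = (1 << width) - 1
    mismatches = []
    for i, (hw, em) in enumerate(zip(hardware, emulator)):
        diff = (hw ^ em) & mask  # XOR leaves a 1 wherever the bits disagree
        if diff:
            bits = [b for b in range(width) if (diff >> b) & 1]
            mismatches.append(Mismatch(i, hw, em, bits))
    return mismatches


if __name__ == "__main__":
    hw_words = [0x0000A5A5, 0x000000FF]   # made-up example data
    emu_words = [0x0000A5A5, 0x000000FE]
    for m in compare_words(hw_words, emu_words):
        print(f"word {m.index}: hw={m.hardware:#010x} "
              f"emu={m.emulator:#010x} differing bits={m.diff_bits}")
```

Reporting the differing bit positions, and not only a pass/fail flag, is a design choice that helps when a mismatch traces back to a single configuration bit.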

L1 Emulator Development

CMSSW 2_0_0:
- New HF-based minbias trigger quantities (HCAL, GCT)
  - Feature bits (tower over threshold), ring sums
- CSC beam halo trigger (CSC TF)
- New L1 trigger menus for 10^30, 10^31 lumi (GT)
- HLT seeding possible from ‘technical triggers’ (GT)
  - E.g. RPC cosmic trigger, beam scintillator counters
  - No technical triggers are emulated yet
- Lightweight interface to trigger results for AOD (GT)
- Firmware-related updates to CSC TPG, CSC TF, DTTF
- First version of DB interface

Level 1 Trigger DQM

GRUMM:
- L1T sources for almost all trigger systems run online during GRUMM (from two SM event servers)
- Created two sets of configurations to run in/out of P5
- Emulator-based data validation ongoing

Plans:
- Attempt semi-automated procedure to run at CAF (replica of the online DQM but on all events)
- Run L1T DQM at Tier 0 and FNAL Tier 1
- Integrate with offline DQM workflow
- Run Emulator DQM in HLT

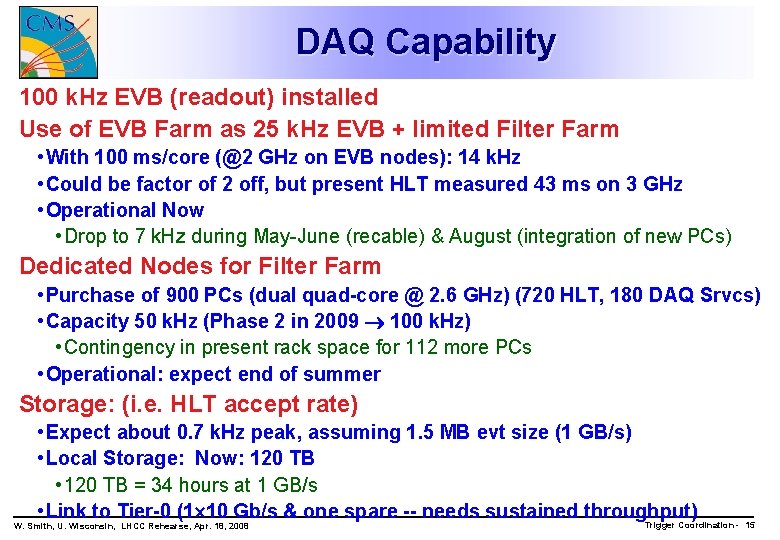

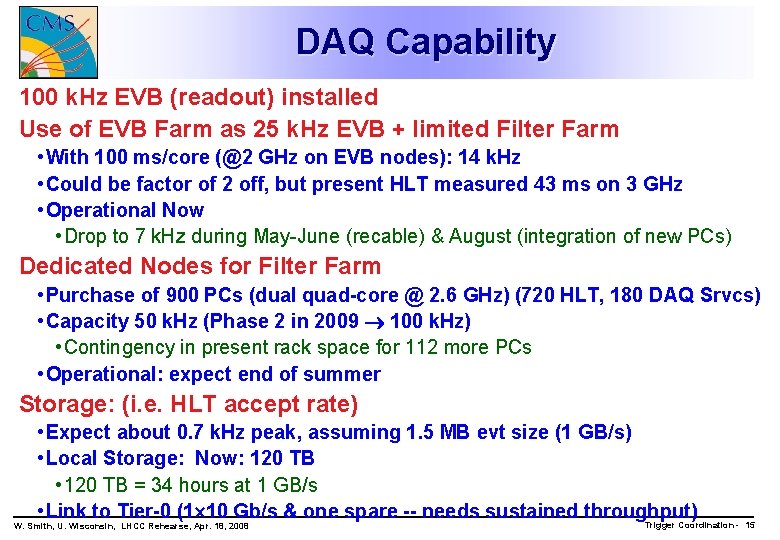

DAQ Capability

100 kHz EVB (readout) installed

Use of EVB Farm as 25 kHz EVB + limited Filter Farm
- With 100 ms/core (@ 2 GHz on EVB nodes): 14 kHz
  - Could be a factor of 2 off, but present HLT measured 43 ms on 3 GHz
- Operational now
- Drop to 7 kHz during May-June (recable) & August (integration of new PCs)

Dedicated Nodes for Filter Farm
- Purchase of 900 PCs (dual quad-core @ 2.6 GHz) (720 HLT, 180 DAQ services)
- Capacity 50 kHz (Phase 2 in 2009: 100 kHz)
- Contingency in present rack space for 112 more PCs
- Operational: expect end of summer

Storage (i.e. HLT accept rate):
- Expect about 0.7 kHz peak, assuming 1.5 MB event size (1 GB/s)
- Local storage now: 120 TB
  - 120 TB = 34 hours at 1 GB/s
- Link to Tier-0 (1 x 10 Gb/s & one spare; needs sustained throughput)
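
The rate and storage figures quoted above can be cross-checked with a few lines of arithmetic. The sketch below is only a back-of-the-envelope check: the function names are invented for the example, decimal units (1 TB = 1000 GB) are assumed, and the slide does not say that its numbers were derived this way.

```python
def storage_bandwidth_gb_per_s(accept_rate_hz: float, event_size_mb: float) -> float:
    """Bandwidth to storage for a given HLT accept rate and event size."""
    return accept_rate_hz * event_size_mb / 1000.0


def buffer_hours(capacity_tb: float, bandwidth_gb_per_s: float) -> float:
    """How long a local buffer lasts at a constant write rate."""
    return capacity_tb * 1000.0 / bandwidth_gb_per_s / 3600.0


def cores_needed(rate_khz: float, ms_per_event: float) -> float:
    """Cores kept busy when each event occupies one core for ms_per_event."""
    return rate_khz * ms_per_event  # (events/ms) * (ms/event)


def time_budget_ms(n_cores: int, rate_khz: float) -> float:
    """Average per-event processing time a farm can afford at a given rate."""
    return n_cores / rate_khz


if __name__ == "__main__":
    # 0.7 kHz of 1.5 MB events is about 1 GB/s
    print(storage_bandwidth_gb_per_s(700, 1.5))
    # 120 TB at 1 GB/s lasts roughly 33 hours, close to the quoted 34 hours
    print(buffer_hours(120, 1.0))
    # 14 kHz at 100 ms/event keeps about 1400 cores busy
    print(cores_needed(14, 100))
    # 720 dual quad-core HLT nodes (8 cores each) at 50 kHz
    print(time_budget_ms(720 * 8, 50))
```

Under these assumptions the 720 HLT nodes leave a budget of roughly 115 ms per event at 50 kHz, the same order as the 100 ms/core figure used for the EVB nodes.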

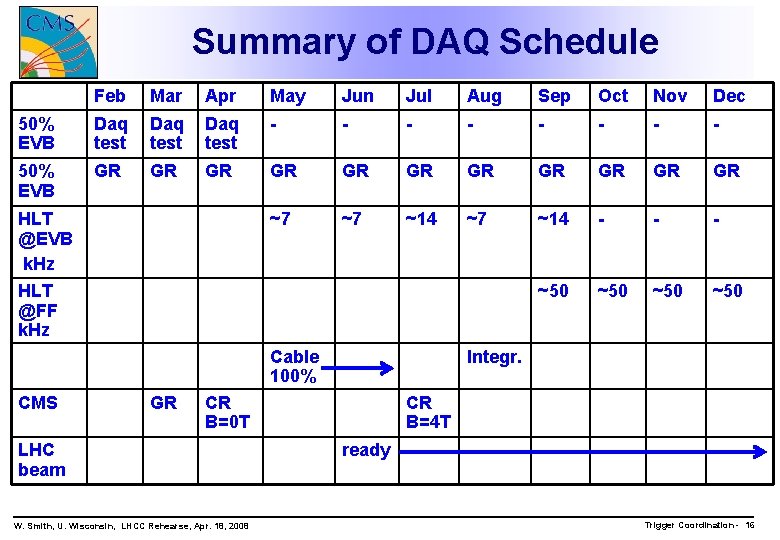

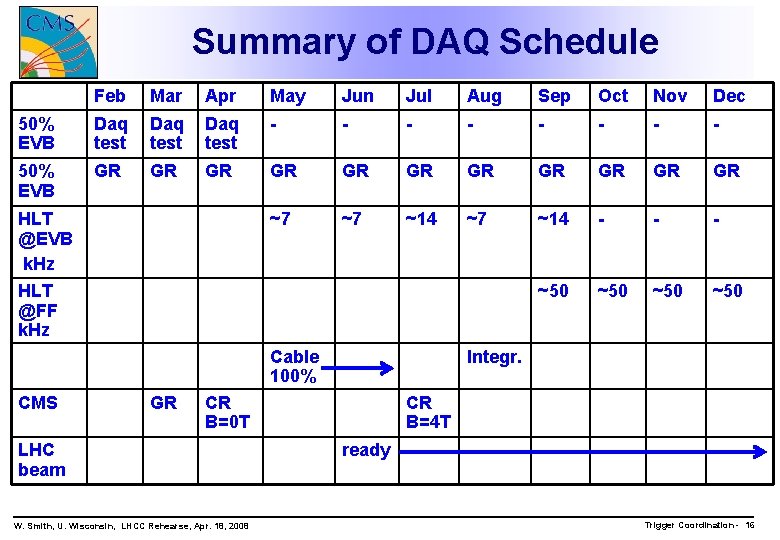

Summary of DAQ Schedule

[Schedule chart, Feb to Dec. Rows: HLT @EVB (kHz), HLT @FF (kHz). Entries as extracted: 50% EVB, DAQ test, Cable 100%, CMS GR, Integr., CR B=0 T, CR B=4 T, LHC beam ready; rate entries ~7, ~7, ~14 and ~50, ~50.]

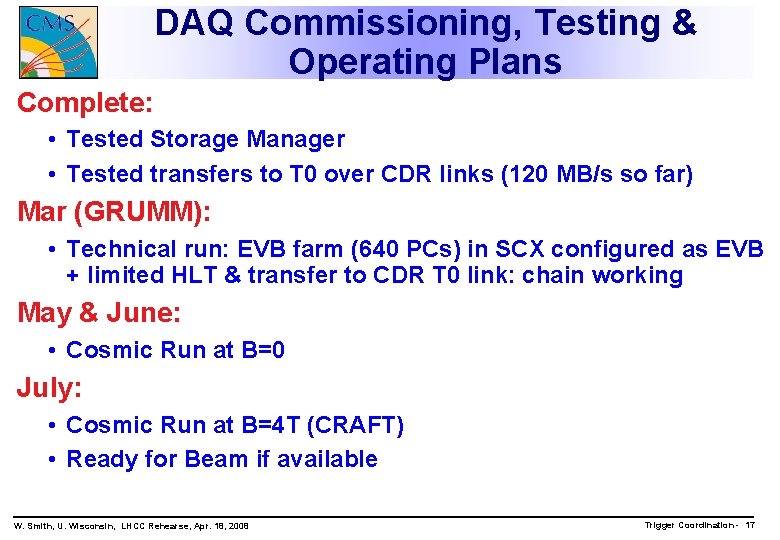

DAQ Commissioning, Testing & Operating Plans

Complete:
- Tested Storage Manager
- Tested transfers to T0 over CDR links (120 MB/s so far)

Mar (GRUMM):
- Technical run: EVB farm (640 PCs) in SCX configured as EVB + limited HLT & transfer to CDR T0 link: chain working

May & June:
- Cosmic Run at B=0

July:
- Cosmic Run at B=4 T (CRAFT)
- Ready for beam if available

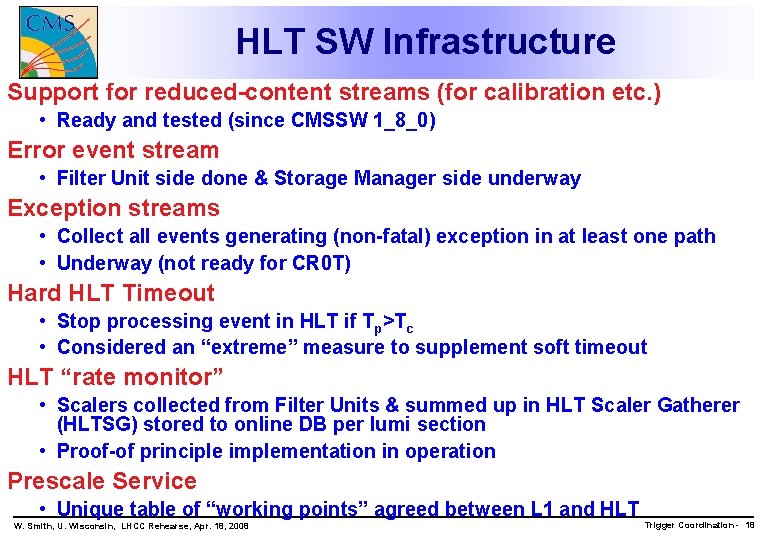

HLT SW Infrastructure

Support for reduced-content streams (for calibration etc.)
- Ready and tested (since CMSSW 1_8_0)

Error event stream
- Filter Unit side done & Storage Manager side underway

Exception streams
- Collect all events generating (non-fatal) exception in at least one path
- Underway (not ready for CR0T)

Hard HLT timeout
- Stop processing event in HLT if Tp > Tc
- Considered an “extreme” measure to supplement soft timeout

HLT “rate monitor”
- Scalers collected from Filter Units & summed up in HLT Scaler Gatherer (HLTSG), stored to online DB per lumi section
- Proof-of-principle implementation in operation

Prescale Service
- Unique table of “working points” agreed between L1 and HLT
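
The "rate monitor" idea, per-path scalers reported by each Filter Unit and summed per lumi section, could be sketched as below. This is not the HLTSG implementation: the function `gather_scalers`, the report tuple layout, and the path names are hypothetical and chosen only to illustrate the summation.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple

# (lumi section, filter unit id, {HLT path name: accepted events}); layout is assumed
Report = Tuple[int, str, Dict[str, int]]


def gather_scalers(reports: Iterable[Report]) -> Dict[int, Dict[str, int]]:
    """Sum per-path accept counts over all filter units, per lumi section."""
    totals = defaultdict(Counter)
    for lumi_section, _filter_unit, counts in reports:
        totals[lumi_section].update(counts)  # Counter.update adds counts
    return {ls: dict(c) for ls, c in totals.items()}


if __name__ == "__main__":
    reports = [
        (1, "fu-01", {"HLT_PathA": 12, "HLT_PathB": 3}),  # made-up path names
        (1, "fu-02", {"HLT_PathA": 9}),
        (2, "fu-01", {"HLT_PathB": 4}),
    ]
    for ls, counts in sorted(gather_scalers(reports).items()):
        print(ls, counts)
```

Each per-lumi-section dictionary is what would then be written to the online DB, one record per lumi section.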

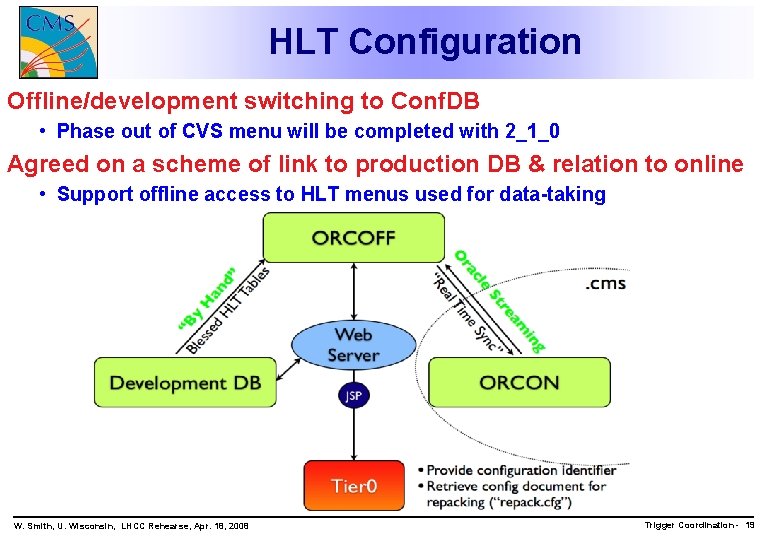

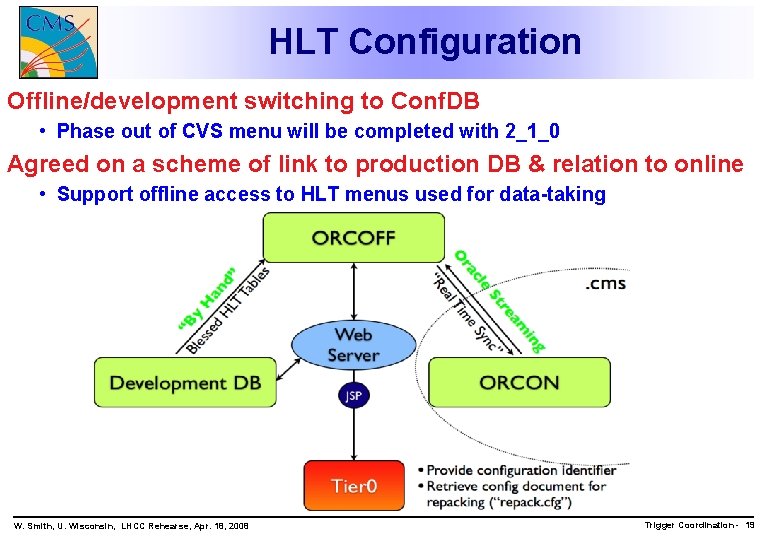

HLT Configuration

Offline/development switching to ConfDB
- Phase-out of CVS menu will be completed with 2_1_0

Agreed on a scheme of link to production DB & relation to online
- Support offline access to HLT menus used for data-taking

Validation Farm: status and plans

Currently: 20 DAQ PCs (2 x 2 GHz dual-core, 4 GB)
- Install 2 TB disk on hilton01 to serve input data
- Playback MC from CASTOR
- Playback cosmics from SM

HiLTon overhaul phase 1 (June)
- Replace current nodes with 25-30 1U PCs (same as filter farm phase 1); move current PCs back to DAQ spare pool
- 0.5-1 TB SATA disk each (playback input, test samples)

HiLTon overhaul phase 2 (December)
- Duplicate system with second rack
- Introduce CDR relay via SM loggers for fast transfer from CASTOR

HLT Online Operations

Gear up for 24/7 operation of HLT in P5

EvF core team in charge of operations
- Adding to experts team

HLT Online Operations Forum to coordinate HLT online decisions with other stakeholders
- Checkpoint validation of HLT menus for data-taking
  - CMSSW version and related issues
- Coordinate with offline reconstruction
  - Calibrations, configurations etc.
- Coordinate with Tier 0/DM/WM
  - E.g. number/content of online streams etc.
- Coordinate with sub-detector communities
  - Via DPG and GDA contacts

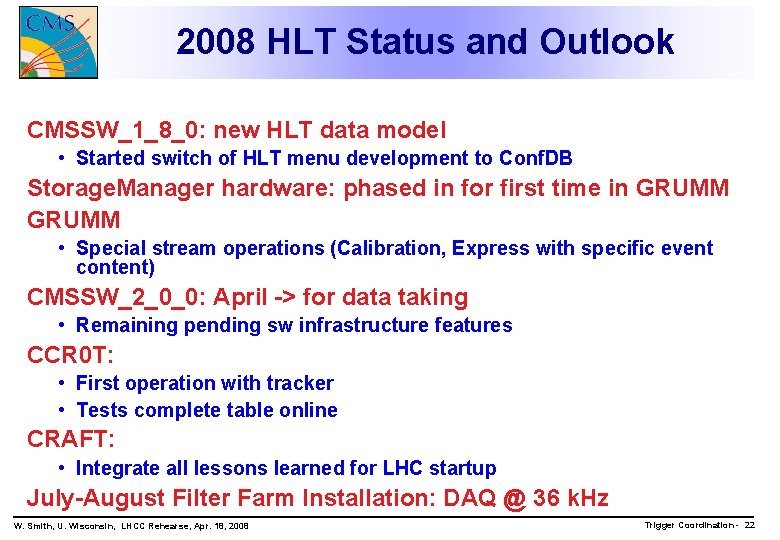

2008 HLT Status and Outlook

CMSSW_1_8_0: new HLT data model
- Started switch of HLT menu development to ConfDB

StorageManager hardware: phased in for first time in GRUMM
- Special stream operations (Calibration, Express with specific event content)

CMSSW_2_0_0: April -> for data taking
- Remaining pending sw infrastructure features

CR0T:
- First operation with tracker
- Tests complete table online

CRAFT:
- Integrate all lessons learned for LHC startup

July-August Filter Farm installation: DAQ @ 36 kHz

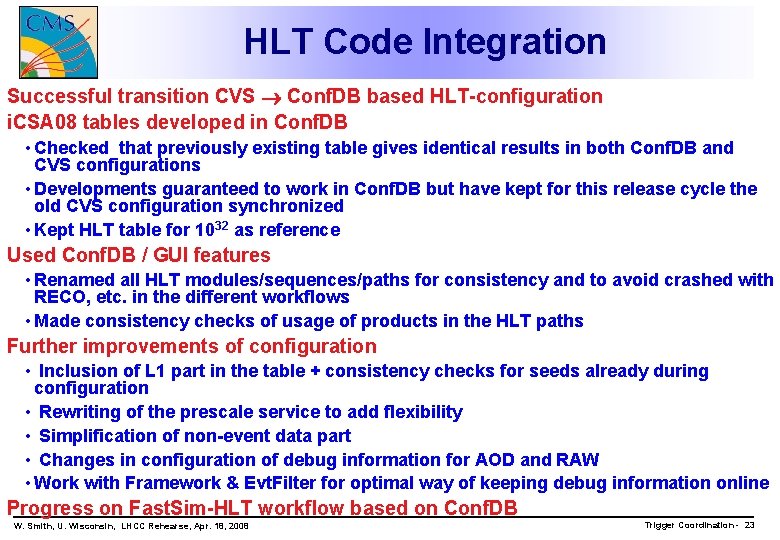

HLT Code Integration

Successful transition CVS -> ConfDB-based HLT configuration

iCSA08 tables developed in ConfDB
- Checked that previously existing table gives identical results in both ConfDB and CVS configurations
- Developments guaranteed to work in ConfDB, but the old CVS configuration has been kept synchronized for this release cycle
- Kept HLT table for 10^32 as reference

Used ConfDB / GUI features
- Renamed all HLT modules/sequences/paths for consistency and to avoid clashes with RECO, etc. in the different workflows
- Made consistency checks of usage of products in the HLT paths

Further improvements of configuration
- Inclusion of L1 part in the table + consistency checks for seeds already during configuration
- Rewriting of the prescale service to add flexibility
- Simplification of non-event data part
- Changes in configuration of debug information for AOD and RAW
- Work with Framework & EvtFilter for optimal way of keeping debug information online

Progress on FastSim-HLT workflow based on ConfDB

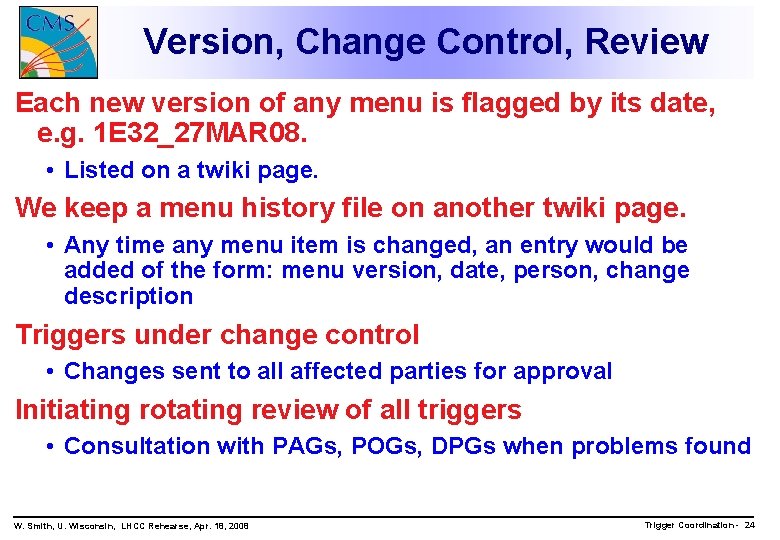

Version, Change Control, Review

Each new version of any menu is flagged by its date, e.g. 1E32_27MAR08
- Listed on a twiki page

We keep a menu history file on another twiki page
- Any time any menu item is changed, an entry is added of the form: menu version, date, person, change description

Triggers under change control
- Changes sent to all affected parties for approval

Initiating rotating review of all triggers
- Consultation with PAGs, POGs, DPGs when problems found
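
The version flag and history entry described above lend themselves to a small helper. The sketch below assumes the tag is a luminosity label joined to a DDMMMYY date, as in the example 1E32_27MAR08, and that two-digit years mean 20YY. The function names, the ISO date in the history line, and the sample person and description are illustrative assumptions, not the twiki's actual format.

```python
from datetime import date
from typing import Tuple

MONTHS = ("JAN", "FEB", "MAR", "APR", "MAY", "JUN",
          "JUL", "AUG", "SEP", "OCT", "NOV", "DEC")


def menu_version_tag(lumi_label: str, day: date) -> str:
    """Build a tag such as 1E32_27MAR08 from a label and a date."""
    return f"{lumi_label}_{day.day:02d}{MONTHS[day.month - 1]}{day.year % 100:02d}"


def parse_menu_version_tag(tag: str) -> Tuple[str, date]:
    """Split a tag back into its label and date (assumes years 2000-2099)."""
    lumi_label, stamp = tag.rsplit("_", 1)
    day = int(stamp[:2])
    month = MONTHS.index(stamp[2:5]) + 1
    year = 2000 + int(stamp[5:7])
    return lumi_label, date(year, month, day)


def history_entry(version: str, day: date, person: str, description: str) -> str:
    """One history line: menu version, date, person, change description."""
    return f"{version}, {day.isoformat()}, {person}, {description}"


if __name__ == "__main__":
    tag = menu_version_tag("1E32", date(2008, 3, 27))
    print(tag)
    print(parse_menu_version_tag(tag))
    # person and description below are placeholders
    print(history_entry(tag, date(2008, 3, 27), "A. Person", "example change"))
```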

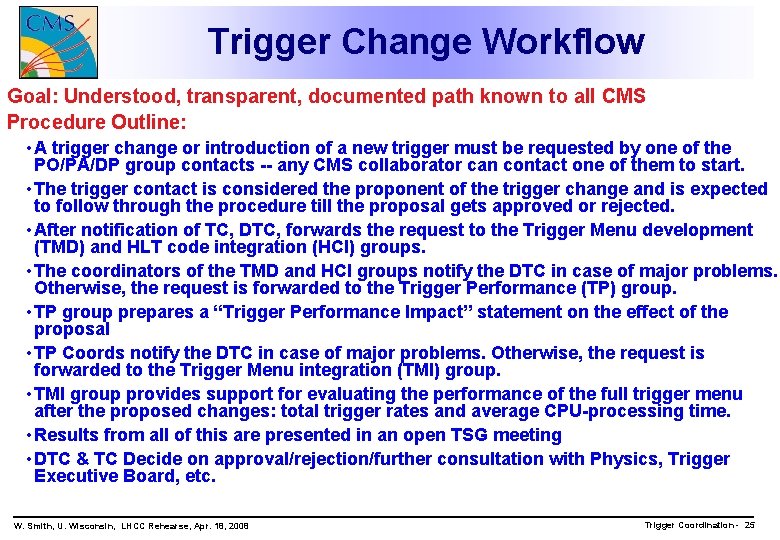

Trigger Change Workflow

Goal: understood, transparent, documented path known to all CMS

Procedure outline:
- A trigger change or introduction of a new trigger must be requested by one of the PO/PA/DP group contacts; any CMS collaborator can contact one of them to start.
- The trigger contact is considered the proponent of the trigger change and is expected to follow the procedure through until the proposal is approved or rejected.
- After notification of the TC, the DTC forwards the request to the Trigger Menu Development (TMD) and HLT Code Integration (HCI) groups.
- The coordinators of the TMD and HCI groups notify the DTC in case of major problems. Otherwise, the request is forwarded to the Trigger Performance (TP) group.
- The TP group prepares a “Trigger Performance Impact” statement on the effect of the proposal.
- The TP coordinators notify the DTC in case of major problems. Otherwise, the request is forwarded to the Trigger Menu Integration (TMI) group.
- The TMI group provides support for evaluating the performance of the full trigger menu after the proposed changes: total trigger rates and average CPU-processing time.
- Results from all of this are presented in an open TSG meeting.
- DTC & TC decide on approval/rejection/further consultation with Physics, Trigger Executive Board, etc.
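
The procedure above is essentially a linear sequence of review stages with an escalation branch, which can be written down as a small state machine. The sketch is one possible reading of the slide: the stage names, the `advance` function, and the separate `DTC_NOTIFIED` state are assumptions, and what follows a DTC notification is left undefined because the slide does not specify it.

```python
from enum import Enum, auto


class Stage(Enum):
    REQUESTED = auto()       # request made by a PO/PA/DP group contact
    TMD_HCI_CHECK = auto()   # Trigger Menu Development + HLT Code Integration
    TP_IMPACT = auto()       # Trigger Performance impact statement
    TMI_EVALUATION = auto()  # full-menu rates and CPU time
    TSG_MEETING = auto()     # results presented in open TSG meeting
    DECISION = auto()        # DTC & TC decide
    DTC_NOTIFIED = auto()    # major problem reported to the DTC (assumed state)


NEXT_STAGE = {
    Stage.REQUESTED: Stage.TMD_HCI_CHECK,
    Stage.TMD_HCI_CHECK: Stage.TP_IMPACT,
    Stage.TP_IMPACT: Stage.TMI_EVALUATION,
    Stage.TMI_EVALUATION: Stage.TSG_MEETING,
    Stage.TSG_MEETING: Stage.DECISION,
}

# Only these stages are described as notifying the DTC on major problems.
ESCALATING = {Stage.TMD_HCI_CHECK, Stage.TP_IMPACT}


def advance(stage: Stage, major_problem: bool = False) -> Stage:
    """Return the next stage; escalate to the DTC where the slide says so."""
    if major_problem and stage in ESCALATING:
        return Stage.DTC_NOTIFIED
    if stage not in NEXT_STAGE:
        raise ValueError(f"no transition defined from {stage.name}")
    return NEXT_STAGE[stage]


if __name__ == "__main__":
    stage = Stage.REQUESTED
    while stage is not Stage.DECISION:
        print(stage.name)
        stage = advance(stage)
    print(stage.name)
```

Keeping the transitions in a plain dictionary makes the documented path easy to compare against the slide line by line.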

Trigger Coordination Summary

- All trigger systems are operating & have been used to trigger CMS
- Global Calorimeter Trigger is on fast track for full operation
- L1 Trigger software is functional and maturing
- L1 Emulators are operational
- DQM available for almost all trigger systems
- 7 kHz DAQ operational now; 50 kHz DAQ operational by Sep. 1
- HLT infrastructure is mature and robust
- HLT validation farm is working
- Plan for HLT operations
- HLT code is well integrated within CMSSW and CondDB, ConfDB
- Versioning, Change Control & Change Workflow being developed

Concerns: ECAL Endcap TPG & RPC Trigger

Next: Trigger Menus (talk by C. Leonidopoulos)